Disaster recovery

IBM Virtualization Engine TS7700 failover scenarios, and disaster recovery (DR) planning and considerations, with or without Geographically Dispersed Parallel Sysplex (GDPS), are covered.

The following topics are covered:

•Copy Export Implementation and Usage

•GDPS Implementation and Considerations

11.1 TS7700 Virtualization Engine grid failover principles

To better understand and plan for the actions to be performed with the TS7700 Virtualization Engine grid configuration in failures, key concepts for grid operation and the many failure scenarios that the grid has been designed to handle are described. A TS7700 Virtualization Engine grid configuration provides the following data access and availability characteristics:

•Accessing the data on a particular cluster requires that a host mount request be issued on a virtual device address that is defined for that cluster. The virtual device addresses for each cluster are independent. In a prior generation, the Peer-to-Peer (PTP) Virtual Tape Server (VTS) mount request was issued on a virtual device address defined for a virtual tape controller and the virtual tape controller, then decided which VTS to use for data access.

•All logical volumes are accessible through any of the virtual device addresses on the TS7700 Virtualization Engine clusters in the grid configuration. The preference is to access a copy of the volume in the Tape Volume Cache (TVC) that is associated with the TS7700 Virtualization Engine cluster on which the mount request is received. If a recall is required to place the logical volume in the TVC on that TS7700 Virtualization Engine cluster, it will be done as part of the mount operation.

If a copy of the logical volume is not available at that TS7700 Virtualization Engine cluster (either because it does not have a copy or the copy it does have is inaccessible because of an error), and a copy is available at another TS7700 Virtualization Engine cluster in the grid, the volume is accessed through the TVC at the TS7700 Virtualization Engine cluster that has the available copy. If a recall is required to place the logical volume in the TVC on the other TS7700 Virtualization Engine cluster, it will be done as part of the mount operation.

•Whether a copy is available at another TS7700 Virtualization Engine cluster in a multicluster grid depends on the Copy Consistency Point that had been assigned to the logical volume when it was written. The Copy Consistency Point is set through the Management Class storage construct. It specifies if and when a copy of the data is made between the TS7700 Virtualization Engine clusters in the grid configuration. The following Copy Consistency Policies can be assigned:

– Rewind Unload (RUN) Copy Consistency Point: If a data consistency point of RUN is specified, the data created on one TS7700 Virtualization Engine cluster is copied to the other TS7700 Virtualization Engine cluster as part of successful rewind unload command processing, meaning that for completed jobs, a copy of the volume will exist on both TS7700 Virtualization Engine clusters. Access to data written by completed jobs (successful Rewind Unload) before the failure is maintained through the other TS7700 Virtualization Engine cluster. Access to data of incomplete jobs that were in process at the time of the failure is not provided.

– Deferred Copy Consistency Point: If a data consistency point of Deferred is specified, the data created on one TS7700 Virtualization Engine cluster is copied to the specified TS7700 Virtualization Engine clusters after successful rewind unload command processing. Access to the data through the other TS7700 Virtualization Engine cluster is dependent on when the copy completes. Because there will be a delay in performing the copy, access might or might not be available when a failure occurs.

– No Copy Consistency Point: If a data consistency point of No Copy is specified, the data created on one TS7700 Virtualization Engine cluster is not copied to the other TS7700 Virtualization Engine cluster. If the TS7700 Virtualization Engine cluster to which data was written fails, the data for that logical volume is inaccessible until that TS7700 Virtualization Engine cluster’s operation is restored.

– Synchronous Copy Consistency Point: When Synchronous Mode is specified, the data that is written to TS7700 is compressed and simultaneously written or duplexed to two TS7700 locations. When Sync is used, two clusters must be defined as sync points. All other clusters can be any of the remaining consistency point options allowing additional copies to be made.

– Copy Consistency Override: With the introduction of the multicluster grid, the logical volume Copy Consistency Override feature has been enabled. By using Cluster Settings → Copy Policy Override, on each library, you can control existing RUN consistency points. Be careful in using this option because it might mean there are fewer copies of the data available than your copy policies have specified.

•The Volume Removal policy for hybrid grid configurations is available in any grid configuration that contains at least one TS7720 cluster. Because the TS7720 “Disk-Only” solution has a maximum storage capacity that is the size of its TVC, after the cache fills, this policy allows logical volumes to be automatically removed from cache while a copy is retained within one or more peer clusters in the grid. When the auto-removal starts, all volumes in the scratch (Fast Ready) category are removed first because these volumes are intended to hold temporary data. This mechanism can remove old volumes in a private category from the cache to meet a predefined cache usage threshold if a copy of the volume is retained on one of the remaining clusters. A TS7740 cluster failure can affect the availability of old volumes (no logical volumes are removed from a TS7740 cluster).

•If a logical volume is written on one of the TS7700 Virtualization Engine clusters in the grid configuration and copied to the other TS7700 Virtualization Engine cluster, the copy can be accessed through the other TS7700 Virtualization Engine cluster. This is subject to the so-called volume ownership.

At any time, a logical volume is “owned” by a cluster. The owning cluster has control over access to the volume and changes to the attributes associated with the volume (such as category or storage constructs). The cluster that has ownership of a logical volume can surrender it dynamically to another cluster in the grid configuration that is requesting a mount of the volume.

When a mount request is received on a virtual device address, the TS7700 Virtualization Engine cluster for that virtual device must have ownership of the volume to be mounted or must obtain the ownership from the cluster that currently owns it. If the TS7700 Virtualization Engine clusters in a grid configuration and the communication paths between them are operational (grid network), the change of ownership and the processing of logical volume-related commands are transparent to the operation of the TS7700 Virtualization Engine cluster.

However, if a TS7700 Virtualization Engine cluster that owns a volume is unable to respond to requests from other clusters, the operation against that volume will fail, unless additional direction is given. Clusters will not automatically assume or take over ownership of a logical volume without being directed. This is done to prevent the failure of the grid network communication paths between the TS7700 Virtualization Engine clusters resulting in both clusters thinking they have ownership of the volume. If more than one cluster has ownership of a volume, that might result in the volume’s data or attributes being changed differently on each cluster, resulting in a data integrity issue with the volume.

If a TS7700 Virtualization Engine cluster has failed or is known to be unavailable (for example, a power fault in the IT center) or needs to be serviced, its ownership of logical volumes is transferred to the other TS7700 Virtualization Engine cluster through one of the following modes.

These modes are set through the management interface (MI):

– Read Ownership Takeover: When Read Ownership Takeover (ROT) is enabled for a failed cluster, ownership of a volume is allowed to be taken from a TS7700 Virtualization Engine cluster that has failed. Only read access to the volume is allowed through the other TS7700 Virtualization Engine cluster in the grid. After ownership for a volume has been taken in this mode, any operation attempting to modify data on that volume or change its attributes is failed. The mode for the failed cluster remains in place until a different mode is selected or the failed cluster has been restored.

– Write Ownership Takeover: When Write Ownership Takeover (WOT) is enabled for a failed cluster, ownership of a volume is allowed to be taken from a cluster that has been marked as failed. Full access is allowed through the other TS7700 Virtualization Engine cluster in the grid. The mode for the failed cluster remains in place until a different mode is selected or the failed cluster has been restored.

– Service prep/service mode: When a TS7700 Virtualization Engine cluster is placed in service preparation mode or is in service mode, ownership of its volumes is allowed to be taken by the other TS7700 Virtualization Engine cluster. Full access is allowed. The mode for the cluster in service remains in place until it has been taken out of service mode.

•In addition to the manual setting of one of the ownership takeover modes, an optional automatic method named Autonomic Ownership Takeover Manager (AOTM) is available when each of the TS7700 Virtualization Engine clusters is attached to a TS3000 System Console (TSSC) and there is a communication path provided between the TSSCs. AOTM is enabled and defined by the IBM service support representative (SSR). If the clusters are in close proximity of each other, multiple clusters in the same grid can be attached to the same TSSC and the communication path is not required.

|

Guidance: The links between the TSSCs must not be the same physical links that are also used by cluster grid gigabit links. AOTM must have a different network to be able to detect that a missing cluster is actually down, and that the problem is not caused by a failure in the grid gigabit wide area network (WAN) links.

|

If enabled by the IBM SSR, if a TS7700 Virtualization Engine cluster cannot obtain ownership from the other TS7700 Virtualization Engine cluster because it does not get a response to an ownership request, a check is made through the TSSCs to determine whether the owning TS7700 Virtualization Engine cluster is inoperable or just that the communication paths to it are not functioning. If the TSSCs have determined that the owning TS7700 Virtualization Engine cluster is inoperable, they will enable either read or write ownership takeover, depending on what was set by the IBM SSR.

•AOTM enables an ownership takeover mode after a grace period, and can only be configured by an IBM SSR. Therefore, jobs can intermediately fail with an option to retry until the AOTM enables the configured takeover mode. The grace period is set to 20 minutes, by default. The grace period starts when a TS7700 detects that a remote TS7700 has failed. It can take several minutes.

The following OAM messages can be displayed up until the point when AOTM enables the configured ownership takeover mode:

– CBR3758E Library Operations Degraded

– CBR3785E Copy operations disabled in library

– CBR3786E VTS operations degraded in library

– CBR3750I Message from library libname: G0013 Library libname has experienced an unexpected outage with its peer library libname. Library libname might be unavailable or a communication issue might be present.

– CBR3750I Message from library libname: G0009 Autonomic ownership takeover manager within library libname has determined that library libname is unavailable. The Read/Write ownership takeover mode has been enabled.

– CBR3750I Message from library libname: G0010 Autonomic ownership takeover manager within library libname determined that library libname is unavailable. The Read-Only ownership takeover mode has been enabled.

•A failure of a TS7700 Virtualization Engine cluster will cause the jobs using its virtual device addresses to abend. To rerun the jobs, host connectivity to the virtual device addresses in the other TS7700 Virtualization Engine cluster must be enabled (if not already) and an appropriate ownership takeover mode selected. As long as the other TS7700 Virtualization Engine cluster has a valid copy of a logical volume, the jobs can be retried.

If a logical volume is being accessed in a remote cache through the Ethernet link and that link fails, the job accessing that volume also fails. If the failed job is attempted again, the TS7700 Virtualization Engine will use another Ethernet link. You can have four 1-Gbps Ethernet links or two 10-Gbps Ethernet links. If all links fail, access to any data in a remote cache is not possible.

11.2 Failover scenarios

As part of a total systems design, you must develop business continuity procedures to instruct IT personnel in the actions that they need to take in a failure. Test those procedures either during the initial installation of the system or at another time.

The scenarios that are described are from the scenarios described in the IBM Virtualization Engine TS7700 Series Grid Failover Scenarios white paper, which was written in an effort to assist IBM specialists and clients in developing such testing plans. The white paper is available at the following address:

The white paper documents a series of TS7700 Virtualization Engine Grid failover test scenarios for z/OS that were run in an IBM laboratory environment. Single failures of all major components and communication links and some multiple failures are simulated.

11.2.1 Test configuration

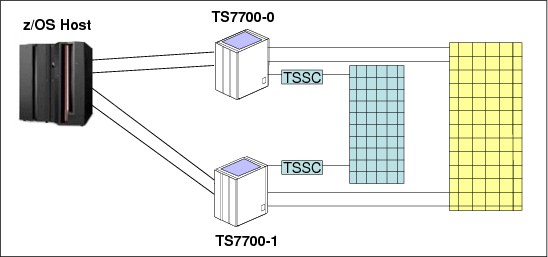

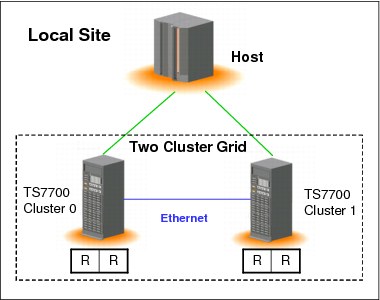

The hardware configuration used for the laboratory test scenarios is shown in Figure 11-1.

Figure 11-1 Grid test configuration for a two-cluster grid

For the Automatic Takeover scenarios, a TSSC attached to each of the TS7700 Virtualization Engine clusters is required and an Ethernet connection between the TSSCs is required. Although all the components tested were local, the results of the tests will be similar, if not the same, for remote configurations. All Fibre Channel connections (FICON) were direct, but again, the results will be valid for configurations that use FICON directors. Any supported level of z/OS software, and current levels of TS7700 Virtualization Engine and TS3500 Tape Library microcode, will all provide similar results. The test environment was MVS/JES2. Failover capabilities are the same for all supported host platforms, although host messages differ and host recovery capabilities might not be supported in all environments.

For the tests, all host jobs are routed to the virtual device addresses associated with TS7700 Virtualization Engine Cluster 0. The host connections to the virtual device addresses in TS7700 Virtualization Engine Cluster 1 are used in testing recovery for a failure of TS7700 Virtualization Engine Cluster 0.

An IBM Support team must be involved in the planning and execution of any failover tests. In certain scenarios, intervention by an IBM SSR might be needed to initiate failures or restore “failed” components to operational status.

Test job mix

The test jobs running during each of the failover scenarios consist of 10 jobs that mount single specific logical volumes for input (read), and five jobs that mount single scratch logical volumes for output (write). The mix of work used in the tests is purely arbitrary, and any mix is suitable. However, in order for recovery to be successful, logical drives must be available for a swap. For that reason, fewer than the maximum number of virtual drives must be active during testing. Also, a large number of messages are generated during some scenarios, and fewer jobs will reduce the number of host console messages.

|

Clarification: The following scenarios were tested using TS7740 Virtualization Engine clusters with attached TS3500 Tape Libraries. The scenarios also apply to the TS7720 Virtualization Engines as long as they are limited to virtual volume management and grid communication.

|

11.2.2 Failover scenario 1

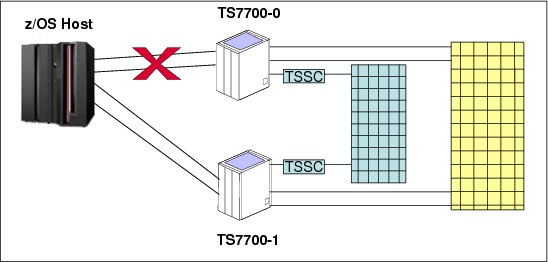

The scenario shown in Figure 11-2 assumes one host link to TS7700-0 fails. The failure might be the intermediate FICON directors, FICON channel extenders, or remote channel extenders.

Figure 11-2 Failure of a host link to a TS7700 Virtualization Engine

Effects of the failure

You see the following effects of the failure:

•All grid components continue to operate.

•All channel activity on the failing host link is stopped.

•Host channel errors are reported or error information becomes available from the intermediate equipment.

•If alternate paths exist from the host to either TS7700, the host I/O operations can continue. Ownership takeover modes are not needed.

•All data remains available.

Recovery from failure

Use the following information to help you recover from the failures:

•Normal error recovery procedures apply for the host channel and the intermediate equipment.

•You must contact your IBM SSR to repair the failed connection.

11.2.3 Failover scenario 2

The scenario shown in Figure 11-3 assumes a failure of both links between the TS7700 Virtualization Engine clusters.

Figure 11-3 Failure of both links between the TS7700 Virtualization Engine clusters

Effects of the failure

You will see the following effects of the failure:

•Jobs on virtual device addresses on TS7700 Cluster 0 continue to run because the logical volumes are using the TVC in Cluster 0.

•All scratch mounts to TS7700 Cluster 0 will succeed if it owns one or more volumes in the scratch category at the time of the mount operation. After the scratch volumes owned by TS7700 Cluster 0 are exhausted, scratch mounts will begin to fail.

•The grid enters the Grid Links Degraded state and the VTS Operations Degraded state.

•All copy operations are stopped.

•The grid enters the Copy Operation Disabled state.

•If the RUN Copy Consistency Point is being used, the grid also enters the Immediate Mode Copy Completion’s Deferred state.

•Call Home support is invoked.

Recovery from failure

Contact your IBM SSR for repair of the failed connection.

11.2.4 Failover scenario 3

The scenario shown in Figure 11-4 assumes a failure of a link between TS7700 Virtualization Engine clusters with remote mounts.

Figure 11-4 Failure of a link between TS7700 Virtualization Engine clusters with remote mounts

Effects of the failure

You will see the following effects of the failure:

•Any job in progress that is using the remote link between TS7700 Cluster 0 and TS7700 Cluster 1 that was disconnected will fail.

•If the job is resubmitted, it will succeed by using the other link.

•The grid enters the Grid Links Degraded state and the VTS Operations Degraded state.

•Call Home support is invoked.

Recovery from failure

Contact your IBM SSR to repair the failed connections.

11.2.5 Failover scenario 4

The scenario shown in Figure 11-5 assumes a failure of both links between TS7700 Virtualization Engine clusters with remote mounts.

Figure 11-5 Failure of both links between TS7700 Virtualization Engine clusters with remote mounts

Effects of the failure

You will see the following effects of the failure:

•Jobs on virtual device addresses on TS7700 Cluster 0 that are using TS7700 Cluster 1 as the TVC cluster will fail.

•Subsequent specific mount jobs that attempt to access the data through TS7700 Cluster 0 that exist only on TS7700 Cluster 1 will fail.

•All scratch mounts to TS7700 Cluster 0 will succeed if Cluster 0 owns one or more volumes in the scratch category at the time of the mount operation. After the scratch volumes owned by TS7700 Cluster 0 are exhausted, scratch mounts will begin to fail.

•All copy operations are stopped.

•The grid enters the Grid Links Degraded state, the VTS Operations Degraded state, and the grid enters the Copy Operation Disabled state.

•Call Home support is invoked.

|

Tip: Although the data resides on TS7700-1, if it was mounted on TS7700-0 when the failure occurred, it is not accessible through the virtual device addresses on TS7700-1 because ownership transfer cannot occur.

|

Recovery from failure

To recover from the failures, you must contact your IBM SSR to repair the failed connections.

11.2.6 Failover scenario 5

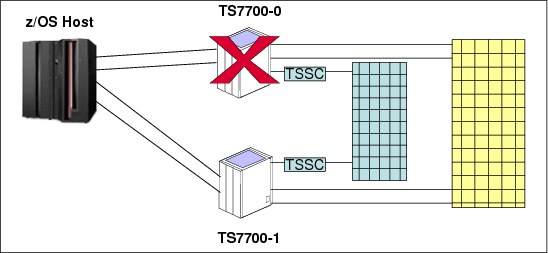

The scenario shown in Figure 11-6 assumes a failure of the local TS7700 Virtualization Engine Cluster 0.

Figure 11-6 Failure of the local TS7700 Virtualization Engine Cluster 0

Effects of the failure

You will see the following effects of the failure:

•Virtual tape device addresses for TS7700 Cluster 0 will become unavailable.

•All channel activity on the failing host links are stopped.

•Host channel errors are reported or error information becomes available from the intermediate equipment.

•Jobs that were using the virtual device addresses of TS7700 Cluster 0 will fail.

•Scratch mounts that target volumes that are owned by the failed cluster will also fail until write ownership takeover mode is enabled. Scratch mounts that target pre-owned volumes will succeed. The grid enters the Copy Operation Disabled and VTS Operations Degraded states.

•If the RUN Copy Consistency Point is being used, the grid also enters the Immediate Mode Copy Completion’s Deferred state.

•All copied data can be made accessible through TS7700 Cluster 1 through one of the takeover modes. If a takeover mode for TS7700 Cluster 0 is not enabled, data will not be accessible through TS7700 Cluster 1 even if it has a valid copy of the data if the volume is owned by TS7700 Cluster 0.

Recovery from failure

To recover from the failures, you must perform the following steps:

1. Enable write or read-only ownership takeover through the MI.

2. Rerun the failed jobs using the virtual device addresses associated with TS7700 Virtualization Engine Cluster 1.

3. Normal error recovery procedures and repair apply for the host channels and the intermediate equipment.

4. Contact your IBM SSR to repair the failed TS7700 cluster.

11.2.7 Failover scenario 6

The scenario shown in Figure 11-7 considers a failure of both links between TS7700 Virtualization Engine clusters with Automatic Takeover.

Figure 11-7 Failure of both links between TS7700 Virtualization Engine clusters with Automatic Takeover

Effects of the failure

You will see the following effects of the failure:

•Specific mount jobs subsequent to the failure using virtual device addresses on Cluster 0 that need to access volumes that are owned by Cluster 1 will fail (even if the data is local to Cluster 0). Jobs using virtual device addresses on Cluster 1 that need to access volumes that are owned by Cluster 0 will also fail.

•All scratch mounts to Cluster 0 will succeed as long as it owns one or more volumes in the scratch category at the time of the mount operation. After the scratch volumes owned by Cluster 0 are exhausted, scratch mounts will begin to fail.

•All copy operations are stopped.

•The grid enters the Grid Links Degraded state, the VTS Operations Degraded state, and the Copy Operation Disabled state.

•If the RUN Copy Consistency Point is being used, the grid also enters the Immediate Mode Copy Completion’s Deferred state.

•Call Home support is invoked.

Recovery from failure

Contact your IBM SSR for repair of the failed connections.

11.2.8 Failover scenario 7

The scenario shown in Figure 11-8 assumes a production site with two TS7700 clusters (Cluster 0 and Cluster 1) active in production. The third TS7700 cluster (Cluster 2) is located at a remote location without attachment to the production hosts. Cluster 2 is attached to a backup host, its devices are varied offline, and there is no active host.

Figure 11-8 Three-cluster grid with failure on two links to Cluster 2

Failures related to Cluster 0 and Cluster 1 are already described in the previous scenarios. This scenario considers what to do when both links to Cluster 2 have failed and the only shared component from Cluster 0 and Cluster 1 to Cluster 2 is the network.

Effects of the failure

You will see the following effects of the failure:

•All copy operations between Cluster 2 and rest of the clusters are stopped.

•All copy operations between Cluster 0 and Cluster 1 continue.

•The grid enters the Grid Links Degraded state, the VTS Operations Degraded state, and the Copy Operations Disabled state.

•If the RUN Copy Consistency Point is being used for Cluster 2, the grid also enters the Immediate Mode Copy Completion’s Deferred state.

•Call Home support is invoked.

Recovery from failure

Contact your IBM SSR for repair of the failed connections.

11.2.9 Failover scenario 8

This scenario assumes a four-cluster hybrid grid configuration with a partitioned workload. At the production site, two TS7720 clusters are installed. At the remote site, two TS7740 clusters, which are attached to TS3500 tape libraries, are installed.

Virtual volumes are written on one cluster at the local site and copied to one cluster at the remote site, so that a copy of a volume will exist both in Cluster 0 and Cluster 2, and in Cluster 1 and Cluster 3.

In the scenario, shown in Figure 11-9, the remote site fails. The grid WAN is operational.

Figure 11-9 Four-cluster hybrid grid multiple failures

Effect of the failures

You will see the following effects of the failures:

•Jobs on virtual device addresses on Cluster 0 will continue to run because the logical volumes are in the TVC on Cluster 0 or Cluster 1.

Jobs that access old volumes, which the automatic removal mechanisms have already removed from the production clusters, will fail. Because TS7720s cannot copy to TS7740, they might eventually become full, and all scratch mounts and specific mounts with modifications will fail.

•The grid enters the Copy Operation Disabled and VTS Operations Degraded states.

•If the RUN Copy Consistency Point is being used, the grid also enters the Immediate Mode Copy Completion’s Deferred state.

•All copy operations for Cluster 2 and Cluster 3 are stopped.

•Call Home support is invoked.

Recovery from failure

Normal error recovery procedures and repair apply for the host channels and the intermediate equipment. To recover from the failures, you must contact your IBM SSR to repair the failed connections.

11.3 Planning for disaster recovery

Although you can hope that a disaster does not happen, planning for such an event is important. Information is provided that can be used in developing a disaster recovery plan as it relates to a TS7700 Virtualization Engine.

Many aspects of disaster recovery planning must be considered:

•How critical is the data in the TS7700 Virtualization Engine?

•Can the loss of some of the data be tolerated?

•How much time can be tolerated before resuming operations after a disaster?

•What are the procedures for recovery and who will execute them?

•How will you test your procedures?

11.3.1 Grid configuration

With the TS7700 Virtualization Engine, two types of configurations can be installed:

•Stand-alone cluster

•Multicluster grid

With a stand-alone system, a single TS7700 Virtualization Engine cluster is installed. If the site at which that system is installed is destroyed, the data that is associated with the TS7700 Virtualization Engine might also have been destroyed. If a TS7700 Virtualization Engine is not usable because of an interruption of utility or communication services to the site, or significant physical damage to the site or the TS7700 Virtualization Engine itself, access to the data that is managed by the TS7700 Virtualization Engine is restored through automated processes designed into the product.

The recovery process assumes that the only elements available for recovery are the stacked volumes. It further assumes that only a subset of the volumes is undamaged after the event. If the physical cartridges have been destroyed or irreparably damaged, recovery is not possible, as with any other cartridge types. It is important that you integrate the TS7700 Virtualization Engine recovery procedure into your current disaster recovery procedures.

|

Remember: The disaster recovery process is a joint exercise that requires your involvement and your IBM SSR to make it as comprehensive as possible.

|

For many clients, the potential data loss or the recovery time required with a stand-alone TS7700 Virtualization Engine is not acceptable. For those clients, the TS7700 Virtualization Engine grid provides a near-zero data loss and expedited recovery-time solution. With a TS7700 Virtualization Engine multicluster grid configuration, two, three, or four TS7700 Virtualization Engine clusters are installed, typically at two or three sites, and interconnected so that data is replicated among them. The way that the two or three sites are used then differs, depending on your requirements.

In a two-cluster grid, the typical use is that one of the sites is the local production center and the other site is a backup or disaster recovery center, separated by a distance dictated by your company’s requirements for disaster recovery.

In a three-cluster grid, the typical use is that two sites are connected to a host and the workload is spread evenly between them. The third site is strictly for disaster recovery and there probably are no connections from the production host to the third site. Another use for a three-cluster grid might consist of three production sites, which are all interconnected and holding the backups of each other.

In a four-cluster grid, disaster recovery and high availability can be achieved, ensuring that two local clusters keep RUN or SYNC volume copies and that both clusters are attached to the host. The third and fourth remote clusters hold deferred volume copies for disaster recovery. This design can be configured in a crossed way, which means that you can run two production data centers, with each production data center serving as a backup for the other.

The only connection between the production sites and the disaster recovery site is the grid interconnection. There is normally no host connectivity between the production hosts and the disaster recovery site’s TS7700 Virtualization Engine. When client data is created at the production sites, it is replicated to the disaster recovery site as defined through Outboard policy management definitions and storage management subsystem (SMS) settings.

11.3.2 Planning guidelines

As part of planning a TS7700 Virtualization Engine grid configuration to address this solution, you need to consider the following items:

•Plan for the necessary WAN infrastructure and bandwidth to meet the copy requirements that you need. You generally will need more bandwidth if you are primarily using a Copy Consistency Point of RUN because any delays in copy time caused by bandwidth limitations will result in an elongation of job run times. If you have limited bandwidth available between sites, use the Deferred Copy Consistency Point or only copy the data that is critical to the recovery of your key operations. The amount of data sent through the WAN can possibly justify the establishment of a separate, redundant, and dedicated network only for the multicluster grid.

•If you use a consistency point of deferred copy, and the bandwidth is the limiting factor, it is possible that some data has not been replicated between the sites, and the jobs that created that data must be rerun. This is also a factor to consider in the implementation of Copy Export for disaster recovery because the export will not capture any volumes in the export pool that are not currently in the TVC of the export cluster.

•Plan for host connectivity at your disaster recovery site with sufficient resources to perform your critical workloads. If the local TS7700 Virtualization Engine cluster becomes unavailable, there is no local host access to the data in the disaster recovery site’s TS7700 Virtualization Engine cluster through the local cluster.

•Design and code the Data Facility System Management Subsystem (DFSMS) automatic class selection (ACS) routines to control the data that gets copied and by which Copy Consistency Point. You might need to consider management policies for testing your procedures at the disaster recovery site that are different from the production policies.

•Prepare procedures that your operators will execute if the local site becomes unusable. The procedures will include tasks, such as bringing up the disaster recovery host, varying the virtual drives online, and placing the disaster recovery TS7700 Virtualization Engine cluster in one of the ownership takeover modes.

•Perform a periodic capacity planning of your tape setup to evaluate whether the disaster setup is still capable of handling the production in a disaster.

•If encryption is used in production, ensure that the disaster site supports encryption, also. The Key Encrypting Keys (KEKs) for production must be available at the disaster recovery site to enable the data key to be decrypted. Default keys are supported and enable key management without modifications required on the TS7740. On the tape setup, the TS1120/TS1130/TS1140, the TS7700 Virtualization Engine, and the MI itself must support encryption. Validate that the TS7700 Virtualization Engine can communicate with the Encryption Key Manager (EKM), Tivoli Key Lifecycle Manager, or IBM Security Key Lifecycle Manager for z/OS (ISKLM), and that the keystore itself is available.

•Consider how you will test your disaster recovery procedures. Many scenarios can be set up:

– Will it be based on all data from an existing TS7700 Virtualization Engine?

– Will it be based on using the Copy Export function and an empty TS7700 Virtualization Engine?

– Will it be based on stopping production of one TS7700 Virtualization Engine and running production to the other during a period when one cluster is down for service?

11.4 High availability and disaster recovery configurations

A few examples of grid configurations are addressed. Remember that these examples are a small subset of possible configurations and are only provided to show how the grid technology can be used. With five-cluser or six-cluster grids, there are many more ways to configure a grid.

Two-cluster grid

With a two-cluster grid, you can configure the grid for disaster recovery, high availability, or both. Configuration considerations for two-cluster grids are described. The scenarios presented are typical configurations. Other configurations are possible and might be better suited for your environment.

Disaster recovery configuration

Information that is needed to plan for a TS7700 Virtualization Engine two-cluster grid configuration to be used specifically for disaster recovery purposes is provided.

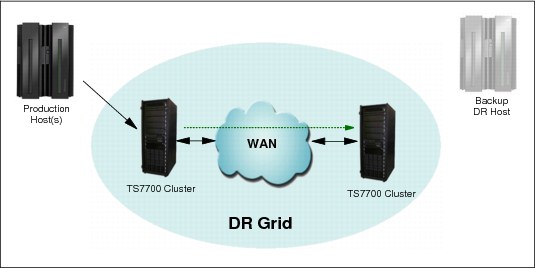

A natural or human-caused event has made the local site’s TS7700 Virtualization Engine cluster unavailable. The two TS7700 Virtualization Engine clusters reside in separate locations, separated by a distance dictated by your company’s requirements for disaster recovery. The only connection between the local site and the disaster recovery site are the grid interconnections. There is no host connectivity between the local hosts and the disaster recovery site’s TS7700 Virtualization Engine.

Figure 11-10 summarizes this configuration.

Figure 11-10 Disaster recovery configuration

Consider the following information as part of planning a TS7700 Virtualization Engine grid configuration to implement this solution:

•Plan for the necessary WAN infrastructure and bandwidth to meet the copy requirements that you need. You generally need more bandwidth if you are primarily using a Copy Consistency Point of RUN, because any delays in copy time caused by bandwidth limitations can result in an elongation of job run times. If you have limited bandwidth available between sites, have data that is critical copied with a consistency point of RUN, with the rest of the data using the Deferred Copy Consistency Point.

•Plan for host connectivity at your disaster recovery site with sufficient resources to perform your critical workloads.

•Design and code the DFSMS ACS routines to control the data that gets copied and by which Copy Consistency Point.

•Prepare procedures that your operators execute if the local site becomes unusable. The procedures include tasks, such as bringing up the disaster recovery host, varying the virtual drives online, and placing the disaster recovery TS7700 Virtualization Engine cluster in one of the ownership takeover modes unless AOTM is configured.

Configuring for high availability

The information needed to plan for a TS7700 Virtualization Engine two-cluster grid configuration to be used specifically for high availability is provided. The assumption is that continued access to data is critical, and no single point of failure, repair, or upgrade can affect the availability of data.

In a high-availability configuration, both TS7700 Virtualization Engine clusters are located within metro distance of each other. These clusters are connected through a LAN. If one of them becomes unavailable because it has failed, or is undergoing service or being updated, data can be accessed through the other TS7700 Virtualization Engine cluster until the unavailable cluster is made available.

As part of planning a TS7700 Virtualization Engine grid configuration to implement this solution, consider the following information:

•Plan for the virtual device addresses in both clusters to be configured to the local hosts. In this way, a total of 512 virtual tape devices are available for use (256 from each TS7700 Virtualization Engine cluster).

•Set up a Copy Consistency Point of RUN for both clusters for all data to be made highly available. With this Copy Consistency Point, as each logical volume is closed, it is copied to the other TS7700 Virtualization Engine cluster.

•Design and code the DFSMS ACS routines to set the necessary Copy Consistency Point.

•Ensure that AOTM is configured for an automated logical volume ownership takeover method in case a cluster becomes unexpectedly unavailable within the grid configuration. Alternatively, prepare written instructions for the operators that describe how to perform the ownership takeover manually, if necessary. See “I/O TVC selection” on page 54 for more details about AOTM.

Figure 11-11 on page 783 summarizes this configuration.

Figure 11-11 Availability configuration

Configuring for disaster recovery and high availability

You can configure a TS7700 Virtualization Engine two-cluster grid configuration to provide both disaster recovery and high availability solutions.

The assumption is that the two TS7700 Virtualization Engine clusters will reside in separate locations, separated by a distance dictated by your company’s requirements for disaster recovery. In addition to the configuration considerations for disaster recovery, you need to plan for the following items:

•Access to the FICON channels on the TS7700 Virtualization Engine cluster located at the disaster recovery site from your local site’s hosts. This can involve connections using dense wavelength division multiplexing (DWDM) or channel extender, depending on the distance separating the two sites. If the local TS7700 Virtualization Engine cluster becomes unavailable, you use this remote access to continue your operations using the remote TS7700 Virtualization Engine cluster.

•Because the virtual devices on the remote TS7700 Virtualization Engine cluster are connected to the host through a DWDM or channel extension, there can be a difference in read or write performance when compared to the virtual devices on the local TS7700 Virtualization Engine cluster. If performance differences are a concern, consider only using the virtual device addresses in the remote TS7700 Virtualization Engine cluster when the local TS7700 Virtualization Engine is unavailable. If that is important, you need to provide operator procedures to vary online and offline the virtual devices to the remote TS7700 Virtualization Engine.

•You might want to have separate Copy Consistency Policies for your disaster recovery data versus your data that requires high availability.

Figure 11-12 on page 784 summarizes this configuration.

Figure 11-12 Availability and disaster recovery configuration

Three-cluster grid

With a three-cluster grid, you can configure the grid for disaster recovery and high availability or use dual production sites that share a common disaster recovery site. Configuration considerations for three-cluster grids are described. The scenarios presented are typical configurations. Other configurations are possible and might be better suited for your environment.

The planning considerations for a two-cluster grid also apply to a three-cluster grid.

High availability and disaster recovery

Figure 11-13 on page 785 illustrates a combined high availability and disaster recovery solution for a three-cluster grid. In this example, Cluster 0 and Cluster 1 are the high-availability clusters and are local to each other (less than 50 kilometers (31 miles) apart). Cluster 2 is at a remote site that is away from the production site or sites. The virtual devices in Cluster 0 and Cluster 1 are online to the host and the virtual devices in Cluster 2 are offline to the host. The host accesses the 512 virtual devices provided by Cluster 0 and Cluster 1. Host data written to Cluster 0 is copied to Cluster 1 at Rewind Unload time. Host data written to Cluster 1 is written to Cluster 0 at Rewind Unload time. Host data written to Cluster 0 or Cluster 1 is copied to Cluster 2 on a Deferred basis.

The Copy Consistency Points at the disaster recovery site (NNR) are set to only create a copy of host data at Cluster 2. Copies of data are not made to Cluster 0 and Cluster 1. This allows for disaster recovery testing at Cluster 2 without replicating to the production site clusters.

Figure 11-13 shows an optional host connection that can be established to remote Cluster 2 using DWDM or channel extenders. With this configuration, you need to define an additional 256 virtual devices at the host for a total of 768 devices.

Figure 11-13 High availability and disaster recovery configuration

Dual production site and disaster recovery

Figure 11-14 on page 786 illustrates dual production sites that are sharing a disaster recovery site in a three-cluster grid (similar to a hub-and-spoke model). In this example, Cluster 0 and Cluster 1 are separate production systems that can be local to each other or distant from each other. The disaster recovery cluster, Cluster 2, is at a remote site at a distance away from the production sites. The virtual devices in Cluster 0 are online to Host A and the virtual devices in Cluster 1 are online to Host B. The virtual devices in Cluster 2 are offline to both hosts. Host A and Host B access their own set of 256 virtual devices provided by their respective clusters. Host data written to Cluster 0 is not copied to Cluster 1. Host data written to Cluster 1 is not written to Cluster 0. Host data written to Cluster 0 or Cluster 1 is copied to Cluster 2 on a Deferred basis.

The Copy Consistency Points at the disaster recovery site (NNR) are set to only create a copy of host data at Cluster 2. Copies of data are not made to Cluster 0 and Cluster 1. This allows for disaster recovery testing at Cluster 2 without replicating to the production site clusters.

Figure 11-14 shows an optional host connection that can be established to remote Cluster 2 using DWDM or channel extenders.

Figure 11-14 Dual production site with disaster recovery

Three-cluster high availability production site and disaster recovery

This model has been adopted by many clients. In this configuration, two clusters are located in the production site (same building or separate location within metro area) and the third cluster is remote at the disaster recovery site. Host connections are available at the production site (or sites). In this configuration, each TS7720 replicates to both its local TS7720 peer and to the remote TS7740. Optional copies in both TS7720 clusters provide high availability plus cache access time for the host accesses. At the same time, the remote TS7740 provides DR capabilities and the remote copy can be remotely accessed, if needed. This configuration is depicted in Figure 11-15 on page 787.

This particular configuration provides 442 TB of high performance production cache if you choose to run balanced mode with three copies (R-R-D for both Cluster 0 and Cluster 1). Alternatively, you can choose to have one copy only at the production site, doubling the cache capacity available for production. In this case, copy mode will be R-N-D for Cluster 0 and N-R-D for cluster one.

Figure 11-15 Three-cluster high availability and disaster recovery with two TS7720s and one TS7740

Another variation of this model uses a TS7720 and a TS7740 for the production site as shown in Figure 11-16, both replicating to a remote TS7740.

Figure 11-16 Three-cluster high availability and disaster recovery with two TS7740s and one TS7720

In both models, if a TS7720 reaches the upper threshold of utilization, the oldest data, which has already been replicated to the TS7740, will be removed from the TS7720 cache.

In the example shown in Figure 11-16, you can have particular workloads favoring the TS7740, and others favoring the TS7720, suiting a specific workload to the cluster best equipped to perform it.

Copy Export (shown as optional in both figures) can be used to have a second copy of the migrated data, if required.

Four-cluster grid

A four-cluster grid that can have both sites for dual purposes is described. Both sites are equal players within the grid, and any site can play the role of production or disaster recovery, as required.

Dual production and disaster recovery

In this model, you have dual production and disaster recovery sites. Although a site can be labeled as a high availability pair or disaster recovery site, they are equivalent from a technology standpoint and functional design. In this example, you have two production sites within metro distances and two remote disaster recovery sites within metro distances between them. This configuration delivers the same capacity as a two-cluster grid configuration, with the high availability of a four-cluster grid. See Figure 11-17.

Figure 11-17 Four-cluster high availability and disaster recovery

You can have host workload balanced across both clusters (Cluster 0 and Cluster 1 in Figure 11-17). The logical volumes written to a particular cluster are only replicated to one remote cluster. In Figure 11-17, Cluster 0 replicates to Cluster 2 and Cluster 1 replicates to Cluster 3. This “partitioning” is accomplished by using copy policies. For the described behavior, copy mode for Cluster 0 is RNDN and for Cluster 1 is NRND.

This configuration delivers high availability at both sites, production and disaster recovery, without four copies of the same tape logical volume throughout the grid.

Figure 11-18 shows the four-cluster grid reaction to a cluster outage. In this example, Cluster 0 goes down due to a electrical power outage. You lose all logical drives emulated by

Cluster 0. The host uses the remaining addresses emulated by Cluster 1 for the entire production workload.

Cluster 0. The host uses the remaining addresses emulated by Cluster 1 for the entire production workload.

Figure 11-18 Four-cluster grid high availability and disaster recovery - Cluster 0 outage

During the outage of Cluster 0 in the example, new jobs for write only use one half of the configuration (the unaffected “partition” in the lower part of the picture). Jobs for read can access content in all available clusters. When power is normalized at the site, Cluster 0 will power up and rejoin the grid, reestablishing the original balanced configuration.

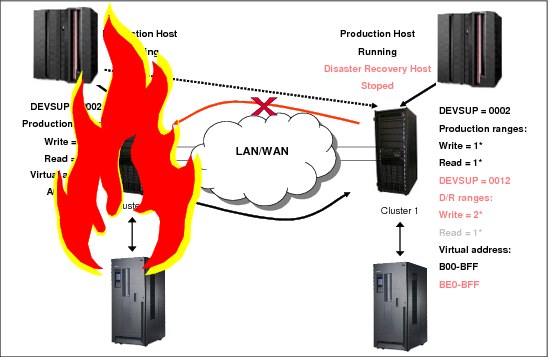

In a disaster recovery situation, the backup host in the disaster recovery site will operate from the second high availability pair, which is the pair of Cluster 2 and Cluster 3 in Figure 11-19 on page 801. In this case, copy policies can be DNRN for Cluster 2 and NDNR for Cluster 3, reversing the direction of the replication so that it would be the opposite of the the green arrows in Figure 11-18 on page 788.

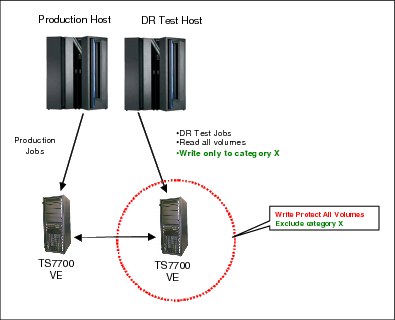

11.4.1 Selective write protect for disaster recovery testing

This function allows clients to emulate disaster recovery events by running test jobs at a disaster recovery (DR) location within a TS7700 grid configuration, only allowing volumes within specific categories to be manipulated by the test application. This prevents any changes to production-written data. This is accomplished by excluding up to 16 categories from the cluster’s write-protect enablement. When a cluster is write-protect-enabled, all volumes that are protected cannot be modified or have their category or storage construct names modified. As in the TS7700 write-protect setting, the option is grid partition scope (a cluster) and configured through the MI. Settings are persistent and saved in a special repository.

Also, the new function allows for any volume assigned to one of the categories contained within the configured list to be excluded from the general cluster’s write-protect state. The volumes assigned to the excluded categories can be written to or have their attributes modified. In addition, those scratch categories that are not excluded can optionally have their Fast Ready characteristics ignored, including Delete Expire and hold processing, allowing the disaster recovery test to mount volumes as private that the production environment has since returned to scratch (they will be accessed as read-only).

One exception to the write protect is those volumes in the insert category. To allow a volume to be moved from the insert category to a write-protect-excluded category, the source category of insert cannot be write-protected. Thus, the insert category is always a member of the excluded categories.

Be sure that you have enough scratch space when Expire Hold processing is enabled to prevent the reuse of production scratched volumes when planning for a DR test. Suspending the volumes’ return-to-scratch processing for the duration of the disaster recovery test is also advisable.

Because selective write protect is a cluster-wide function, separated DR drills can be conducted simultaneously within one multicluster grid, with each cluster having its own independent client-configured settings.

See 11.1, “TS7700 Virtualization Engine grid failover principles” on page 766 for more details.

11.5 Copy Export overview and Considerations

Copy Export provides a function to allow a copy of selected logical volumes written to the TS7700 Virtualization Engine to be removed and taken offsite for disaster recovery purposes. In addition, since the data is a copy of the logical volumes, the volumes will remain intact and are still accessible by the production system.

Control of Copy Export

Storage Group and Management Class constructs are defined to use separate pools for the primary and secondary copies of the logical volume. The existing Management Class construct, which is part of Advanced Policy Management (APM), is used to create a second copy of the data to be Copy Exported. The Management Class actions are configured through the TS7700 Virtualization Engine MI. An option on the MI window allows designation of a secondary pool as a Copy Export pool. As logical volumes are written, the secondary copy of the data is pre-migrated to stacked volumes in the Copy Export pool.

Workflow of a Copy Export process

Typically, you execute the Copy Export operation on a periodic basis. Because the purpose is to get a copy of the data offsite for disaster recovery purposes, performing it soon after the data is created minimizes the time for the recovery point objective (RPO).

When the time comes to initiate a Copy Export, a Copy Export job is run from the production host. The TS7740 Virtualization Engine will pre-migrate any logical volumes in the Copy Export pool that have not been pre-migrated. Any new logical volumes written after the Copy Export operation is initiated will not be included in the Copy Export set of physical volumes. The TS7740 Virtualization Engine then writes a complete TS7740 Virtualization Engine database to each of the physical volumes in the Copy Export set.

During a Copy Export operation, all of the physical volumes with active data on them in a specified secondary pool are removed from the library associated with the TS7740 Virtualization Engine. Only the logical volumes that are valid on that TS7740 Virtualization Engine are considered during the execution of the operation. Logical volumes currently mounted during a Copy Export operation are excluded from the export set as are any volumes that are not currently in the TVC of the export cluster.

The host that initiates the Copy Export operation first creates a dedicated export list volume on the TS7740 Virtualization Engine that will perform the operation. The export list volume contains instructions regarding the execution of the operation and a reserved file that the TS7740 Virtualization Engine will use to provide completion status and export operation information. As part of the Copy Export operation, the TS7740 Virtualization Engine creates response records in the reserved file that list the logical volumes exported and the physical volumes on which they reside. This information can be used as a record for the data that is offsite. The TS7740 Virtualization Engine also writes records in the reserved file on the export list volume that provide the current status for all physical volumes with a state of Copy Exported.

The Copy Export job can specify whether the stacked volumes in the Copy Export set must be ejected immediately or placed into the export-hold category. When Copy Export is used with the export-hold category, you will need to manually request that the export-hold volumes be ejected. The choice to eject as part of the Copy Export job or to eject them later from the export-hold category will be based on your operational procedures. The ejected Copy Export set will then be transported to a disaster recovery site or vault. Your RPO will determine the frequency of the Copy Export operation.

11.5.1 General considerations for Copy Export

Consider the following information when you are planning to use the Copy Export function for disaster recovery:

•Specific logical volumes are not specified as part of a Copy Export operation. Instead, all valid logical volumes on the physical volumes in the specified secondary pool are considered for export. After the first time that Copy Export is performed for a pool, the logical volumes that will be exported are the ones for that pool that have been newly written or modified since the last export began. Previously exported volumes that have not been changed will not be exported. For recovery, all exported physical volumes that still contain active data from a source TS7700 need to be included because not all of the logical volumes that are created are going to be on the last set exported.

•The primary copy of the logical volumes exported remains in the inventory of the TS7700 grid. Exported volumes are always copies of volumes still in the TS7700.

•Only those logical volumes assigned to the secondary pool specified in the export list file volume that are resident on a physical volume of the pool or in the cache of the TS7700 performing the export operation will be considered for export. For a grid configuration, if a logical volume is to be copied to the TS7700 that will be performing the Copy Export operation, but that copy had not yet completed when the export is initiated, it will not be included in the current export operation.

•Logical volumes to be exported that are resident only in the cache and not mounted when the Copy Export operation is initiated will be copied to a stacked volumes in the secondary pool as part of the Copy Export operation.

•Any logical volume assigned to the specified secondary pool in the TS7700 after the Copy Export operation is initiated is not part of the export and will be written to a physical volume in the pool but will not be exported. This includes host-sourced and copy-sourced data.

•Volumes that are currently mounted cannot be Copy Exported.

•Only one Copy Export operation can be performed at a time.

•If the TS7700 cannot access the primary version of a logical volume designated for Copy Export and the secondary version is in a pool also defined for Copy Export, that secondary version is made inaccessible and the mount will fail, regardless of whether that secondary pool is involved in the current Copy Export operation. When a Copy Export operation is not being performed, if the primary version of a logical volume cannot be accessed and a secondary version exists, the secondary becomes the primary.

•The library associated with the TS7700 executing the Copy Export operation must have an I/O station feature for the operation to be accepted. Empty the I/O station before executing Copy Export and prevent it from going to the full state.

•A minimum of four physical tape drives must be available to the TS7700 for the Copy Export operation to be performed. The operation will be terminated by the TS7700 when fewer than four physical tape drives are available. Processing for the physical stacked volume in progress when the condition occurred will be completed and the status file records reflect what was completed before the operation was terminated.

•Copy Export and the insertion of logical volumes are mutually exclusive functions in a TS7700 or grid.

•Only one secondary physical volume pool can be specified per export operation, and it must have been previously defined as a Copy Export pool.

•The export list file volume cannot be assigned to the secondary copy pool that is specified for the operation. If it is, the Copy Export operation will fail.

•If a scratch physical volume is needed during a Copy Export operation, the secondary physical volume pool must have an available scratch volume or access to borrow one for the operation to continue. If a scratch volume is not available, the TS7700 indicates this through a console message and waits for up to 60 minutes. If a scratch volume is not made available to the secondary physical volume pool within 60 minutes, the Copy Export operation is terminated.

•During execution, if the TS7700 determines that a physical volume assigned to the specified secondary pool contains one or more primary logical volumes, that physical volume and any secondary logical volumes on it are excluded from the Copy Export operation.

•To minimize the number of physical tapes used for Copy Export, use the highest capacity media and physical drive format that is compatible with the recovery TS7700. You might also want to reduce the number of concurrent tape devices that the TS7700 will use when copying data from cache to the secondary copy pool used for Copy Export.

•All copy-exported volumes that are exported from a source TS7700 must be placed in a library for recovery. The source TS7700 limits the number of physical volumes that can be Copy Exported. The default limit is 2,000 per TS7700 to ensure that they will all fit into the receiving library. This value can be adjusted to a maximum of 10,000 volumes.

•The recovery TS7700 must have physical tape drives that are capable of reading the physical volumes from a source TS7700. If a source TS7700 writes the volumes using the native E05 format, the recovery TS7700 must also have 3592-E05 drives running in native format mode. If the exporting pool on the source TS7700 is set up to encrypt the data, the recovery TS7700 must also be set up to handle encrypted volumes and have access to the encryption key manager with replicated keys from the production site. If the source TS7700 writes the volumes in J1A or emulated J1A mode, any 3592 model drive in the recovery TS7700 can read the data.

•The recovery TS7700 cannot contain any previous data, and a client-initiated recovery process cannot merge data from more than one source TS7700 together. As a part of the Copy Export Recovery, an option is provided to erase any previous data on the TS7700. This allows a TS7700 that is used for disaster recovery testing to be reused for testing of a different source TS7700’s data.

•For the secondary pool used for Copy Export, the designated reclaim pool must be the same value as the secondary volume pool. If the reclaim pool for the Copy Export secondary pool is the same as either the Copy Export primary pool or its reclaim pool, the primary and backup copies of a logical volume can exist on the same physical tape.

11.5.2 Copy Export grid considerations

Copy Export is supported in both grid and stand-alone environments. You need to remember several considerations that are unique to the grid environment.

Performing Copy Export

The first consideration relates to performing Copy Export. In a grid configuration, a Copy Export operation is performed against an individual TS7700, not across all TS7700 Virtualization Engines. Set up Copy Export in a grid plan based on the following guidelines:

•When using the Copy Export acceleration (LMTDBPVL) option, the database backup will only be appended to the first two and the last two volumes that are exported. These corresponding tapes containing database backup will be selected and listed in the alphabetical order of the physical tape VOLSER. If the export acceleration (LMTDBPVL) option was set and there is a failure appending the DB backup, a different physical volume will be selected to contain the database backup so that four physical volumes have the DB backup.

•Decide which TS7700 in a grid configuration is going to be used to export a specific set of data. Although you can set up more than one TS7700 to export data, only the data from a single source TS7700 can be used in the recovery process. You cannot merge copy-exported volumes from more than one source TS7700 in the recovery TS7700.

•For each specific set of data to export, define a Management Class name. On the TS7700 that will be used to export that data, define a secondary physical volume pool for that Management Class name and also ensure that you indicate that it is an export pool. Although you will need to define the Management Class name on all TS7700s in the grid configuration, specify only the secondary physical volume pool on the TS7700 that is to perform the export operation. Specifying it on the other TS7700s in the grid configuration does not interfere with the Copy Export operation, but it is a waste of physical volumes. The exception to this approach is if you want one of the TS7700s in the grid configuration to have a second physical copy of the data if the primary copies on other TS7700s are inaccessible.

•While you are defining the Management Class name for the data, also ensure that the TS7700 to perform the export operation has a copy policy specifying that it is to have a copy.

•When the Copy Export operation is executed, the export list file volume must only be valid on the TS7700 performing the operation. You will need to define a unique Management Class name to be used for the export list file volume. For that Management Class name, you will need to define its copy policy so that a copy is only on the TS7700 that is to perform the export operation. If the VOLSER specified for the export list file volume when the export operation is initiated is resident on more than one TS7700, the Copy Export operation will fail.

|

Tip: If the Management Class specified for the Copy Export operation is defined to more than one cluster, the Copy Export will fail and the following CBR message will be presented:

CBR3726I FUNCTION INCOMPATIBLE ERROR CODE 32 FROM LIBRARY XXX FOR VOLUME xxxxxx.

X'32' There is more than one valid copy of the specified export list volume in the TS7700 grid configuration.

|

Consider this Copy Export example:

a. A Copy Export with the export list volume EXP000 is initiated from a host connected to the C0, and the Copy Export runs on the C2.

b. The copy mode of EXP000 must be [N,N,D] or [N,N,R], indicating that the only copy of EXP000 exists on C2.

c. If Copy Policy Override is activated on the C0 and the Copy Export is initiated from the host attached to C0, a copy of EXP000 is created both on the C0 and C1.

d. The grid detects that a copy of EXP000 exists on two clusters (C0 and C2) and does not start the Copy Export.

e. Copy Export fails.

For example, assume that the TS7700 that is to perform the Copy Export operation is Cluster 1. The pool on that cluster to export is pool 8. You need to set up a Management Class for the data that is to be exported so that it will have a copy on Cluster 1 and a secondary copy in pool 8. To ensure that the data is on that cluster and is consistent with the close of the logical volume, you want to have a copy policy of Rewind Unload (RUN). You define the following information:

•Define a Management Class, for example, MCCEDATA, on Cluster 1:

Secondary Pool 8

Cluster 0 Copy Policy RUN

Cluster 1 Copy Policy RUN

•Define this same Management Class on Cluster 0 without specifying a secondary pool.

•To ensure that the export list file volume gets written to Cluster 1 and only exists there, define a Management Class, for example, MCELFVOL, on Cluster 1:

Cluster 0 Copy Policy No Copy

Cluster 1 Copy Policy RUN

•Define this Management Class on Cluster 0:

Cluster 0 Copy Policy No Copy

Cluster 1 Copy Policy RUN

A Copy Export operation can be initiated through any virtual tape drive in the TS7700 grid configuration. It does not have to be initiated on a virtual drive address in the TS7700 that is to perform the Copy Export operation. The operation will be internally routed to the TS7700 that has the valid copy of the specified export list file volume. Operational and completion status will be broadcast to all hosts attached to all of the TS7700s in the grid configuration.

It is assumed that Copy Export is performed on a regular basis and logical volumes, whose copies were not complete when a Copy Export was initiated, will be exported the next time that Copy Export is initiated. You can check the copy status of the logical volumes on the TS7700 that is to perform the Copy Export operation before initiating the operation by using the Volume Status function of the BVIR facility. You can then be sure that all critical volumes will be exported during the operation.

Performing Copy Export Recovery

The next consideration relates to how Copy Export Recovery is performed. Copy Export Recovery is always to a stand-alone TS7700. As part of a client-initiated recovery process, the recovery TS7700 processes all grid-related information in the database, converting it to look like a single TS7700. This conversion means that the recovery TS7700 will have volume ownership of all volumes. It is possible that one or more logical volumes will become inaccessible because they were modified on a TS7700 other than the one that performed the Copy Export operation, and the copy did not complete before the start of the operation. Remember that each copy-exported physical volume remains under the management of the TS7700 from which it was exported.

Normally, you will return the empty physical volumes to the library I/O station that associated with the source TS7700 and reinsert them. They will then be reused by that TS7700. If you want to move them to another TS7700, whether in the same grid configuration or another, consider two important points:

•Ensure that the VOLSER ranges you had defined for that TS7700 match the VOLSERs of the physical volumes that you want to move.

•To have the original TS7700 stop managing the copy-exported volumes, you will issue the following command from the host: LIBRARY REQUEST,libname,COPYEXP,volser,DELETE

11.5.3 Reclaim process for Copy Export physical volumes

The physical volumes exported during a Copy Export operation continue to be managed by the source TS7740 Virtualization Engine with regard to space management. As logical volumes that are resident on the exported physical volumes expire, are rewritten, or otherwise invalidated, the amount of valid data on a physical volume will decrease until the physical volume becomes eligible for reclamation based on your provided criteria for its pool. Exported physical volumes that are to be reclaimed are not brought back to the source TS7740 Virtualization Engine for processing. Instead, a new secondary copy of the remaining valid logical volumes is made using the primary logical volume copy as a source.

The next time that the Copy Export operation is performed, the physical volumes with the new copies are also exported. The physical volumes that were reclaimed (which are offsite) no longer are considered to have valid data and can be returned to the source TS7740 Virtualization Engine to be used as new scratch volumes.

|

Tip: If a Copy Export hold volume is reclaimed while it is still present in the tape library, it is automatically moved back to the common scratch pool (or the defined reclamation pool) after the next Copy Export operation completes.

|

Monitoring for Copy Export data

The Bulk Volume Information Retrieval (BVIR) function can also be used to obtain a current list of exported physical volumes for a secondary pool. For each exported physical volume, information is available on the amount of active data that each cartridge contains.

11.5.4 Copy Export process messages

During the execution of the Copy Export operation, the TS7700 sends informational messages to its attached hosts. These messages are in the syslog and shown in Table 11-1 on page 796.

|

Note: Not all message identifiers are used.

|

Table 11-1 SYS log messages

|

Message description

|

Action needed

|

|

E0000 EXPORT OPERATION STARTED FOR EXPORT LIST VOLUME XXXXXX

This message is generated when the TS7700 begins the Copy Export operation.

|

None.

|

|

E0005 ALL EXPORT PROCESSING COMPLETED FOR EXPORT LIST VOLUME XXXXXX

This message is generated when the TS7700 completes an export operation.

|

None.

|

|

E0006 STACKED VOLUME YYYYYY FROM LLLLLLLL IN EXPORT-HOLD

This message is generated during Copy Export operations when an exported stacked volume ‘YYYYYY’ has been assigned to the export-hold category. The ‘LLLLLLLL’ field is replaced with the distributed library name of the TS7700 performing the export operation.

|

None.

|

|

E0006 STACKED VOLUME YYYYYY FROM LLLLLLLL IN EJECT

This message is generated during Copy Export operations when an exported stacked volume ‘YYYYYY’ has been assigned to the eject category. The physical volume will be placed in the convenience I/O station. The ‘LLLLLLLL’ field is replaced with the distributed library name of the TS7700 performing the export operation.

|

Remove ejected volumes from the convenience I/O station.

|

|

E0013 EXPORT PROCESSING SUSPENDED, WAITING FOR SCRATCH VOLUME

This message is generated every five minutes when the TS7700 needs a scratch stacked volume to continue export processing and there are none available.

|

Make one or more physical scratch volumes available to the TS7700 performing the export operation. If the TS7700 does not get access to a scratch stacked volume in 60 minutes, the operation is terminated.

|

|

E0014 EXPORT PROCESSING RESUMED, SCRATCH VOLUME MADE AVAILABLE

This message is generated when, after the export operation was suspended because no scratch stacked volumes were available, scratch stacked volumes are again available and the export operation can continue.

|

None.

|

|

E0015 EXPORT PROCESSING TERMINATED, WAITING FOR SCRATCH VOLUME

This message is generated when the TS7700 has terminated the export operation because scratch stacked volumes were not made available to the TS7700 within 60 minutes of the first E0013 message.

|

Operator must make more TS7700 stacked volumes available, perform analysis of the Status file on the export list file volume, and reissue the export operation.

|

|

E0016 COPYING LOGICAL EXPORT VOLUMES FROM CACHE TO STACKED VOLUMES

This message is generated when the TS7700 begins, and every 10 minutes during, the process of copying logical volumes that are only resident in the Tape Volume Cache to physical volumes in the specified secondary physical volume pool.

|

None.

|

|

E0017 COMPLETED COPY OF LOGICAL EXPORT VOLUMES TO STACKED VOLUMES

This message is generated when the TS7700 has completed the copy of all needed logical volumes from cache to physical volumes in the specified secondary physical volume pool.

|

None.

|

|

E0018 EXPORT TERMINATED, EXCESSIVE TIME FOR COPY TO STACKED VOLUMES

The export process has been terminated because one or more cache resident-only logical volumes needed for the export were unable to be copied to physical volumes in the specified secondary physical volume pool within a 10-hour period from the beginning of the export operation.

|

Call for IBM support.

|

|

E0019 EXPORT PROCESSING STARTED FOR POOL XX

This message is generated when the TS7700 export processing for the specified secondary physical volume pool XX.

|

None.

|

|

E0020 EXPORT PROCESSING COMPLETED FOR POOL XX

This message is generated when the TS7700 has completed processing for the specified secondary physical volume pool XX.

|

None.

|

|

E0021 DB BACKUP WRITTEN TO STACKED VOLUMES, PVOL01, PVOL02, PVOL03, PVOL04

(where PVOL01, PVOL02, PVOL03, and PVOL04 are the physical volumes to which the database backup was appended).

This message is generated if the Copy Export acceleration (LMTDBPVL) option was selected on the export.

|

None.

|

|

E0022 EXPORT RECOVERY STARTED

The export operation has been interrupted by a TS7700 error or a power off condition. When the TS7700 is restarted, it will attempt recovery of the operation.

|

None.

|

|

E0023 EXPORT RECOVERY COMPLETED

The recovery attempt for interruption of an export operation has been completed.

|

Perform analysis of the Status file on the export list file volume and reissue the export operation, if necessary.

|

|

E0024 XXXXXX LOGICAL VOLUME WITH INVALID COPY ON LLLLLLLL

This message is generated when the TS7700 performing the export operation has determined that one or more (XXXXXX) logical volumes that are associated with the secondary storage pool specified in the export list file do not have a valid copy resident on the TS7700. The ‘LLLLLLLL’ field is replaced by the distributed library name of the TS7700 performing the export operation. The export operation continues with the valid copies.

|

When the export operation completes, perform analysis of the Status file on the Export List File volume to determine the logical volumes that were not exported. Ensure that they have completed their copy operations and then perform another export operation.

|

|

E0025 PHYSICAL VOLUME XXXXXX NOT EXPORTED, PRIMARY COPY FOR YYYYYY UNAVAILABLE

This message is generated when the TS7700 detected a migrated-state logical volume ‘YYYYYY’ with an unavailable primary copy. The physical volume ‘XXXXXX’ on which the secondary copy of the logical volume ‘YYYYYY’ is stored was not exported.

This message is added at code level R1.7.

|

The logical volume and the physical volume will be eligible for the next Copy Export operation once the logical volume is mounted and demounted from the host. An operator intervention is also posted.

|

|

E0026 DB BACKUP WRITTEN TO ALL OF STACKED VOLUMES

This message is generated when Copy Export acceleration (LMTDBPVL) option is not selected.

|

None.

|

When a stacked volume associated with a Copy Export operation is ejected from a library (placed in export-hold or is physically ejected from the library), you see status message E0006, which is issued by the library (see Table 11-1 on page 796). Removable Media Management (RMM) intercepts this message and performs one of these actions:

•If the stacked volume is predefined to RMM, RMM will mark the volume as “ejected” or “in-transit” and set the movement/store date associated with the stacked volume.

•If the stacked volume is not predefined to RMM and the STACKEDVOLUME(YES) option in RMM is specified, RMM will automatically add the stacked volume to its control data set (CDS).

To have DFSMSrmm policy management manage the retention and movement for volumes created by Copy Export processing, you must define one or more volume Vital Record Specifications (VRS). For example, assume that all Copy Exports are targeted to a range of volumes STE000 - STE999. You can define a VRS as shown in Example 11-1.

Example 11-1 VRS definition

RMM AS VOLUME(STE*) COUNT(99999) LOCATION(location)

As a result, all matching stacked volumes that are set in AUTOMOVE will have their destination set to the required location and your existing movement procedures can be used to move and track them.