Architecture, components, and functional characteristics

This chapter provides a description of the architecture of the IBM Virtualization Engine TS7700. The description includes general virtualization concepts, new concepts, and functions introduced with TS7700 Virtualization Engine R3.0. In addition, hardware components and configuration scenarios for high-availability solutions are addressed.

Characteristics that apply to both stand-alone clusters and multicluster grids are explained first. Functions that apply only to grids, and all deviations from a stand-alone cluster environment, are then explained separately.

The following topics are described:

•Terms and expressions used to describe the TS7700 Virtualization Engine

•Architecture of the TS7700 Virtualization Engine

•Underlying concepts of tape virtualization within the TS7700 Virtualization Engine

•Hardware components for TS7700 Virtualization Engine Release 3.0, including new server and adapter capabilities

•Attachment of the IBM Virtualization Engine TS7740 to an IBM System Storage® TS3500 Tape Library and tape drive support

•Multicluster grid providing support for hybrid-cluster, 5-cluster, and 6-cluster TS7700 grid configurations

•Cluster families

•User security and user access enhancements

•Grid network support for two or four copper or shortwave (SW) fibre 1-Gbps links, or two long-wave (LW) 10-Gbps links

•Immediate copy failure reporting on Rewind Unload response

•Functional characteristics of the TS7700 Virtualization Engine

•Synchronous mode copy as an additional copy policy

•Logical Write Once Read Many (WORM) support

•Enhanced cache removal policies for grids containing one or more TS7720 clusters

•Selective write protect for disaster recovery (DR) testing

•Device allocation assistance (DAA)

•Scratch allocation assistance (SAA)

•Selective device access control (SDAC)

•On-demand support of up to 4,000,000 logical volumes

This chapter includes the following topics:

•Stand-alone cluster: Components, functionality, and features

•Multicluster grid: Components, functionality, and features

•Hardware components

2.1 TS7700 Virtualization Engine architecture

The architectural design of the TS7700 Virtualization Engine and its potential capabilities are addressed. A short description of the Virtual Tape Server (VTS) architecture is included to help you understand the differences.

Every time that we use the term “TS7700,” both TS7720 and TS7740 are referenced. There might be small differences, such as the amount of storage or the performance characteristics, but the underlying architecture, capability, and functionality are the same. If a function or capability is only available for either the TS7720 or the TS7740, the specific product term is used. If a function or feature works slightly differently between the two models, this also is described.

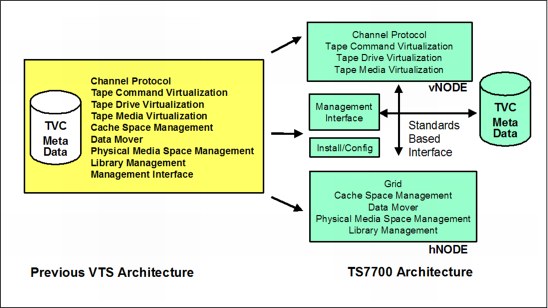

2.1.1 Monolithic design of a Virtual Tape Server

The 3494 Virtual Tape Server (VTS) performed all functions within a single IBM System p® server, which also served as the RAID disk controller. The RAID system was tightly integrated into the system. To expand capabilities and functionality, any expansion had to fit within the capabilities of the System p server. Because code components were tightly integrated with one another, implementing new functions affected large amounts of code. In addition, the system had to be upgraded or extended as a whole because of the tight integration of its components.

All these concerns have been addressed in the architectural design of the TS7700 Virtualization Engine.

2.1.2 Modular design of the TS7700 Virtualization Engine

The modular design of the TS7700 Virtualization Engine separates the functionality of the system into smaller components. These components have well-defined functions connected by open interfaces. The platform allows components to be scaled up from a small configuration to a large one. This provides the capability to grow the solution to meet business objectives.

The TS7700 Virtualization Engine is built on a distributed node architecture. This architecture consists of nodes, clusters, and grid configurations. The elements communicate with each other through standards-based interfaces. In the current implementation, a virtualization node (vNode) and a hierarchical data storage management node (hNode) are combined into a general node (gNode) running on a single System p server. The Tape Volume Cache (TVC) module consists of one or more high-performance RAID disk controllers. The TVC has redundant components for high availability (HA) and attaches to the Virtualization Engine through Fibre Channel (FC).

A TS7700 Virtualization Engine and the previous VTS design are shown in Figure 2-1 on page 18.

Figure 2-1 TS7700 virtualization design compared to a VTS design

Nodes

Nodes are the most basic components in the TS7700 Virtualization Engine architecture. Each node is named according to the role associated with it. There are three types of nodes:

•Virtualization nodes

•Hierarchical data storage management nodes

•General nodes

Virtualization node (vNode)

A vNode is a code stack that presents the virtual image of a library and drives to a host system. When the TS7700 Virtualization Engine is attached as a virtual tape library, the vNode receives the tape drive and library requests from the host and processes them as real devices would. It translates the tape requests through a virtual drive and uses a file in the cache subsystem to represent the virtual tape image. After the logical volume is created or altered by the host system through a vNode, it resides in disk cache.

Hierarchical data storage management node (hNode)

An hNode is a code stack that manages all logical volumes residing in disk cache or on physical tape. This management occurs after the logical volumes are created or altered by the host system through a vNode. The hNode is the only node that is aware of physical tape resources and the relationships between the logical volumes and physical volumes. It is also responsible for any replication of logical volumes and their attributes between clusters. An hNode uses standardized interfaces (TCP/IP) to communicate with external components.

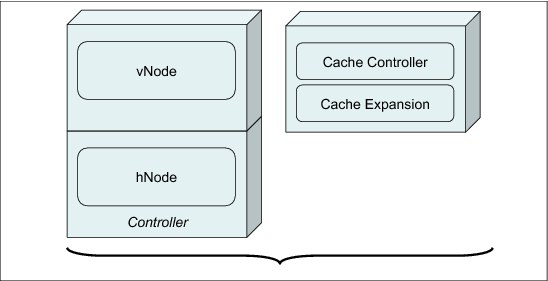

General node (gNode)

A gNode can be considered a vNode and an hNode sharing the same physical controller. The current implementation of the TS7700 Virtualization Engine runs on a gNode. The engine has both a vNode and hNode combined within an IBM System POWER7 processor-based server. Figure 2-2 on page 19 shows a relationship between nodes.

Figure 2-2 Node architecture

Cluster

The TS7700 Virtualization Engine cluster combines the TS7700 Virtualization Engine server with one or more external (from the server’s perspective) disk subsystems. This subsystem is the TS7700 Virtualization Engine cache controller. This architecture permits expansion of disk cache capacity. It also allows the addition of vNodes or hNodes in future offerings to enhance the capabilities of the Tape Virtualization System.

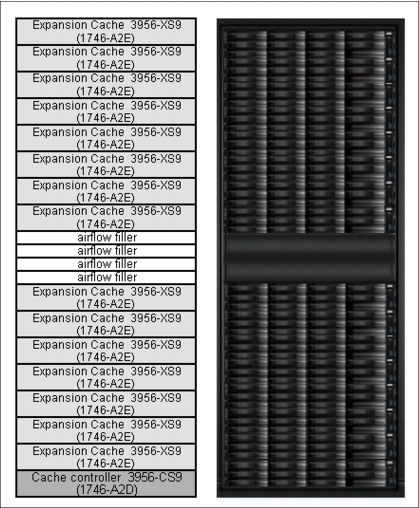

Figure 2-3 shows the TS7700 Virtualization Engine configured as a cluster.

Figure 2-3 TS7700 Virtualization Engine cluster

A TS7700 Virtualization Engine cluster provides Fibre Connection (FICON) host attachment and 256 virtual tape devices. The TS7740 Virtualization Engine cluster also includes the assigned TS3500 Tape Library partition, Fibre Channel switches, and tape drives. The TS7720 Virtualization Engine can include one or more optional cache expansion frames.

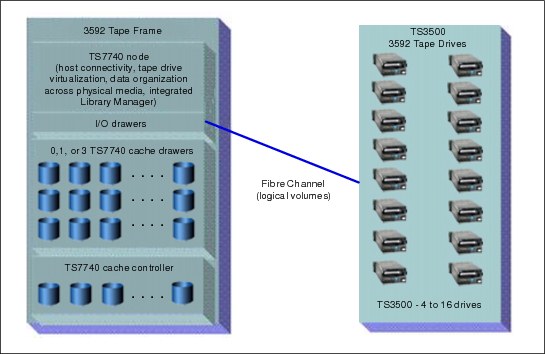

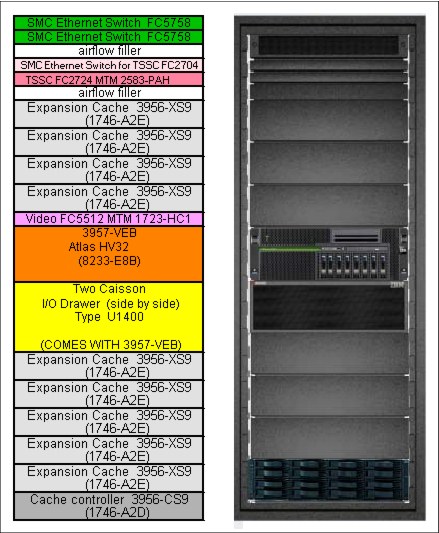

Figure 2-4 on page 20 shows the components of a TS7740 Virtualization Engine cluster.

Figure 2-4 TS7740 Virtualization Engine cluster components

The TS7700 Cache Controller consists of a redundant array of independent disks (RAID) controller and associated disk storage media, which act as cache storage for data. The R3.0 CS9/XS9 contain 12 Serial Advanced Technology Attachment (SATA) 3.5-inch disks. The R3.0 CC9/CX9 contain 24 or 22 2.5-inch serial-attached SCSI (SAS) disks. The capacity of each disk drive module (DDM) depends on your configuration. The TS7700 Cache Drawer acts as an expansion unit for the TS7700 Cache Controller. The drawer and controller collectively are called the TS7700 Cache. The amount of cache available per TS7700 Cache Drawer depends on your configuration.

The TS7740 Virtualization Engine Cache provides RAID-protected virtual volume storage (RAID 5 up to CC8, RAID 6 since CC9) that temporarily holds data before it is written to physical tape. The data then remains cached to allow fast retrieval from disk.

The TS7720 uses RAID 6 protection.

2.1.3 Peer-to-peer VTS design

In a 3494 peer-to-peer (PTP) VTS, external Virtual Tape Controller (VTC) hardware was needed to present the components to the host as a single library. The VTCs were connected to the host through IBM ESCON® or FICON, and each VTC was connected to both VTSs. Only two VTSs could be connected in a peer-to-peer environment. The grid architecture allows more clusters to act as one entity and also enables many new features and functions.

2.1.4 Principles of grid design

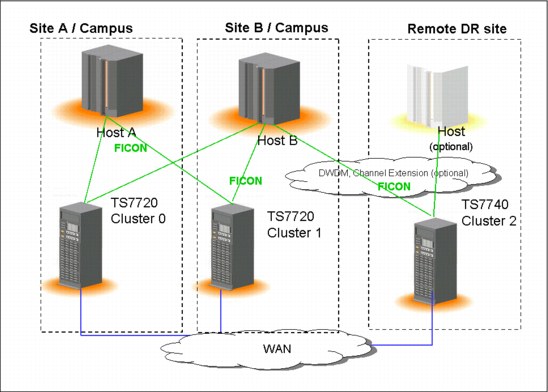

The TS7700 Virtualization Engine R3.0 grid configuration is a series of two, three, four, five, or six clusters. These clusters are connected to each other through grid links over a grid network to form resilient DR and highly available solutions.

Note: Five-cluster grid and six-cluster grid configurations are available with a request for price quotation (RPQ).

To the attached hosts, a grid configuration looks like a single tape library, including its tape drives. Whether it is a stand-alone cluster or a multicluster configuration, the entire subsystem appears as a single tape library to the attached hosts.

Logical volumes (data and tokens) are replicated across the grid links, depending on your TS7700 grid definitions. Whether and when data is replicated is controlled through several parameters, such as Copy Consistency Points and override policies. Access is independent of where the copies of a logical volume exist: a logical volume can be mounted through any virtual device in any cluster in the grid.
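Because replication is driven by per-cluster copy settings, it can help to see the decision as data. The following Python sketch is illustrative only: it assumes a Management Class copy policy written as one Copy Consistency Point code per cluster (R for RUN, S for Synchronous mode copy, D for Deferred, N for No Copy). The encoding, `CopyPlan`, and `plan_copies` are hypothetical and are not a TS7700 interface; override policies are not modeled.

```python
from dataclasses import dataclass, field

# Hypothetical one-letter Copy Consistency Point codes used in this sketch.
CODES = {
    "R": "RUN",       # copy is consistent before Rewind Unload completes
    "S": "Sync",      # synchronous mode copy
    "D": "Deferred",  # copy is made some time after Rewind Unload
    "N": "No Copy",   # this cluster never receives a copy
}


@dataclass
class CopyPlan:
    consistent_at_unload: list = field(default_factory=list)
    deferred: list = field(default_factory=list)
    no_copy: list = field(default_factory=list)


def plan_copies(policy: str) -> CopyPlan:
    """Group cluster indexes by the code assigned to each cluster.

    `policy` holds one code per cluster, for example "RDN" for a
    three-cluster grid (an encoding chosen for illustration only).
    """
    plan = CopyPlan()
    for cluster, code in enumerate(policy.upper()):
        if code not in CODES:
            raise ValueError(f"unknown copy consistency code {code!r}")
        if code in ("R", "S"):
            plan.consistent_at_unload.append(cluster)
        elif code == "D":
            plan.deferred.append(cluster)
        else:
            plan.no_copy.append(cluster)
    return plan


print(plan_copies("RDN"))
# CopyPlan(consistent_at_unload=[0], deferred=[1], no_copy=[2])
```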

In general, any data initially created or replicated between clusters is accessible through any available cluster in a grid configuration. This concept ensures that data can still be accessed even if a cluster becomes unavailable.

A grid can contain all TS7720 clusters, all TS7740 clusters, or a mix of the two, which is referred to as a hybrid grid.

The term multicluster grid is used for a grid with two or more clusters.

For a detailed description, see 2.3, “Multicluster grid configurations: Components, functionality, and features” on page 49.

2.1.5 Management of the TS7700

The management of the TS7700 is based on eight key components:

•TS7700 Management Interface

•TS3500 web interface

•Advanced (outboard) policy management

•Data Facility Storage Management Subsystem (DFSMS) integration with the TS7700 to provide the storage management subsystem (SMS) constructs’ names for policy management

•Host commands to control the TS7700

•Messages for automated alerting and operations

•Tools

•Call home support

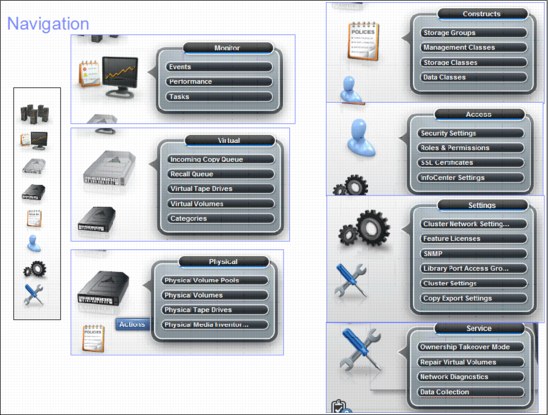

TS7700 Management Interface (MI)

The TS7700 MI is used to configure the TS7700, set up outboard policy management behavior, monitor the systems, and perform many other client-facing management functions.

TS3500 web interface

The TS3500 web interface is used to configure and operate the TS3500 tape library, particularly for the management of physical drives and media.

Advanced (outboard) policy management

Policy management enables you to better manage your logical and stacked volumes by using the SMS construct names. With z/OS and DFSMS, the SMS construct names that are associated with a volume (Storage Class, Storage Group, Management Class, and Data Class) are sent to the library. When a volume is written from load point, the eight-character SMS construct names (as assigned through your automatic class selection (ACS) routines) are passed to the library. At the library’s MI, you can then define policy actions for each construct name, enabling you and the TS7700 to better manage your volumes. For other System z platforms, constructs can be associated with the volumes when the volume ranges are defined through the library’s MI.

DFSMS constructs in System z and their equivalents in TS7700

In System z, four DFSMS constructs exist:

•Storage Class

•Storage Group

•Management Class

•Data Class

Each of these constructs is used to determine specific information about the data that has to be stored. All construct names are also presented to the TS7700. They need to have an equivalent definition at the library. You can define these constructs in advance on the TS7700 MI. See 5.3.8, “Defining TS7700 constructs” on page 235.

If construct names that have not been predefined on the TS7700 are sent to it, the TS7700 creates those constructs with default parameters.

Note: Predefine your SMS constructs on the TS7700. The constructs created automatically might not be suitable for your requirements.

Storage class in SMS

Storage classes perform three functions: they determine whether data is SMS-managed, they determine the level of performance of a data set, and they determine whether you can override SMS and place data on specific volumes.

Storage class in TS7700

The storage class in TS7700 is used to set the cache preferences for the logical volume. This definition is cluster-based.

Storage Group in SMS

Storage Groups are the fundamental concept of DFSMS. DFSMS groups disks together into storage pools, so you allocate by storage pool. Storage pools can also consist of tape volumes. This allows SMS to direct tape allocations to a VTS or automated library. For tape Storage Groups, one or more tape libraries can be associated with them. Connectivity is defined at both the library level and the Storage Group level. If a Storage Group is connected to certain systems, then any libraries associated with that Storage Group must be connected to the same systems. You can direct allocations to a local or remote library or to a specific library by assigning the appropriate Storage Group in the Storage Group ACS routine.

Storage Group in TS7700

The Storage Group in the TS7700 is used to map the logical volume to a physical volume pool, identified by its primary pool number. This definition is cluster-based.

Management Class in SMS

Management Classes are used to determine backup and migration requirements. When assigned to data sets, Management Classes replace and expand attributes that otherwise are specified on JCL data definition (DD) statements, IDCAMS DEFINE commands, and DFSMShsm commands. A Management Class is a list of data set migration, backup, and retention attribute values. A Management Class also includes object expiration criteria, object backup requirements, and class transition criteria for the management of objects.

Management Class in TS7700

From the TS7700 side, the Management Class is used for functions such as Copy Policy, Selective Dual Copy Pool (depending on the physical pool, this function might be used for Copy Export), Retain Copy Mode, and Scratch Mount Candidate for Scratch Allocation Assistance. This definition is cluster-based.

DATACLASS in SMS

The DATACLASS construct defines what a file looks like. The DATACLASS ACS routine is always invoked, even if a file is not SMS-managed. A DATACLASS is only ever assigned when a file is created and cannot be changed. A file is described by its data set organization, record format, record length, space allocation, how many volumes it can span, data compaction, media type, and recording information.

DATACLASS in TS7700

DATACLASS in the TS7700 is used to define the virtual volume size and whether the volume is treated as a logical WORM volume. This definition is shared across the grid: if you define it on one cluster, it is propagated to all other clusters in the grid.

Storage Group, Storage Class, and Management Class only affect the local cluster. The DATACLASS is shared grid-wide.
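The construct rules described in this section (automatic creation of undefined construct names with default parameters, cluster-based Storage Class, Storage Group, and Management Class, and grid-wide Data Class) can be sketched as follows. This Python model is hypothetical: the `Grid` class, the `{"defaults": True}` placeholder, and the construct names `DCWORM` and `MCDUAL` are invented for illustration and do not reflect how the TS7700 stores its definitions.

```python
from dataclasses import dataclass, field

GRID_WIDE = {"DataClass"}
CLUSTER_LOCAL = {"StorageClass", "StorageGroup", "ManagementClass"}


@dataclass
class Grid:
    clusters: int
    local: list = field(init=False)             # one dict per cluster
    shared: dict = field(default_factory=dict)  # grid-wide Data Class table

    def __post_init__(self):
        self.local = [{} for _ in range(self.clusters)]

    def _table(self, cluster, kind):
        if kind in GRID_WIDE:
            return self.shared
        if kind in CLUSTER_LOCAL:
            return self.local[cluster]
        raise ValueError(f"unknown construct type {kind!r}")

    def define(self, cluster, kind, name, params):
        """Predefine a construct, as you would on one cluster's MI."""
        self._table(cluster, kind)[(kind, name)] = params

    def resolve(self, cluster, kind, name):
        """Look up a construct name sent at mount time, creating it if missing."""
        table = self._table(cluster, kind)
        # Placeholder for the TS7700 default parameters (hypothetical).
        return table.setdefault((kind, name), {"defaults": True})


grid = Grid(clusters=2)
grid.define(0, "DataClass", "DCWORM", {"worm": True})
grid.define(0, "ManagementClass", "MCDUAL", {"copy": "RR"})
print(grid.resolve(1, "DataClass", "DCWORM"))        # {'worm': True}
print(grid.resolve(1, "ManagementClass", "MCDUAL"))  # {'defaults': True}
```

In this model, cluster 1 sees the Data Class defined on cluster 0, but the Management Class is auto-created there with defaults, which illustrates why predefining the cluster-based constructs matters.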

Host commands

Several commands to control and monitor your environment are available. They are described in detail in Chapter 5, “Hardware implementation” on page 189, Chapter 8, “Migration” on page 371, Chapter 9, “Operation” on page 413, and Appendix H, “Library Manager volume categories” on page 953.

These major commands are available:

•D SMS,LIB: Display library information for composite and distributed libraries.

•D SMS,VOLUME: Display volume information for logical volumes.

•LI REQ: The LIBRARY REQUEST command, also known as the Host Console Request function, is issued from a z/OS host system to a TS7700 composite library or to a specific distributed TS7700 library within a grid. Use the LIBRARY REQUEST command to request information about the current operational state of the TS7700 Virtualization Engine, its logical and physical volumes, and its physical resources. The command can also be used to perform outboard operations at the library, especially setting alerting thresholds. Because all keyword combinations are passed unchanged to the TS7700 and all responses are text-based, the LIBRARY REQUEST command is a primary means of adding management features in each TS7700 release without requiring host software changes. Settings changed through the LI REQ command can change the TS7700 behavior for all hosts that use the TS7700, so consider the impact before you change them. See the published white paper (http://www-03.ibm.com/support/techdocs/atsmastr.nsf/WebIndex/WP101091).

•DS QLIB: Use the DEVICE SERVICES QUERY LIBRARY command to display library and device-related information for the composite and distributed libraries.

The difference between these commands is subtle but important. A DS QL command can return different data depending on the host from which it is issued, whereas an LI command returns the same data regardless of the issuing host, as long as both hosts have full accessibility.
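Because LI REQ keywords are passed to the TS7700 unchanged, automation often builds the command text programmatically. The following Python helper is a hypothetical sketch that assembles the text of a LIBRARY REQUEST command in the form `LIBRARY REQUEST,library-name,keyword1[,keyword2]...`. The limits it enforces (a 1- to 8-character library name and one to four keywords of 1 to 8 characters each) and the allowed character set are assumptions to check against the LI REQ white paper for your release; `COMPLIB` is a hypothetical library name.

```python
import re

# Assumed limits; verify against the LI REQ documentation for your release.
MAX_NAME_LEN = 8
MAX_KEYWORDS = 4
MAX_KEYWORD_LEN = 8
_TOKEN = re.compile(r"[A-Z0-9@#$]+")  # assumed allowed characters


def build_li_req(library: str, *keywords: str, short: bool = True) -> str:
    """Build the text of a z/OS LIBRARY REQUEST command (sketch only)."""
    library = library.upper()
    if not (1 <= len(library) <= MAX_NAME_LEN and _TOKEN.fullmatch(library)):
        raise ValueError(f"invalid library name {library!r}")
    if not 1 <= len(keywords) <= MAX_KEYWORDS:
        raise ValueError("between 1 and 4 keywords are required")
    words = []
    for kw in keywords:
        kw = kw.upper()
        if not (1 <= len(kw) <= MAX_KEYWORD_LEN and _TOKEN.fullmatch(kw)):
            raise ValueError(f"invalid keyword {kw!r}")
        words.append(kw)
    verb = "LI REQ" if short else "LIBRARY REQUEST"
    return ",".join([verb, library, *words])


print(build_li_req("complib", "status", "grid"))  # LI REQ,COMPLIB,STATUS,GRID
```

Validating on the host side only catches malformed text early; whether a keyword combination is meaningful is still decided by the TS7700 itself.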

Automation handling and messages

For automation, mainly consider the CBRxxxx messages. For more information, see the following document:

Tools

There are many helpful tools provided for the TS7700. See Chapter 10, “Performance and monitoring” on page 653.

Call Home support

The Call Home function generates a service alert automatically when a problem is detected within the subsystem, such as a problem in the following components:

•Inside the TS7700 components themselves

•In the associated TS3500 library and tape drives

•In the cache disk subsystem

Status information is transmitted to the IBM Support Center for problem evaluation. An IBM service support representative (SSR) can be dispatched to the installation site if maintenance is required. Call Home is part of the service strategy adopted in the TS7700 family. It is also used in a broad range of tape products, including VTS models and tape controllers, such as the 3592-C07.

The Call Home information for the problem is transmitted with the appropriate information to the IBM product support group. This data includes the following information:

•Overall system information, such as system serial number, microcode level, and so on

•Details of the error

•Error logs that can help to resolve the problem

After the Call Home is received by the assigned IBM support group, the associated information is examined and interpreted. After analysis, an appropriate course of action is defined to resolve the problem. For instance, an IBM SSR might be sent to the site location to take the corrective actions. Or, the problem might be repaired or resolved remotely by the IBM support personnel through a broadband (if available) or telephone connection.

The TS3000 System Console (TSSC) is the subsystem component responsible for placing the service call or Call Home whenever necessary. The call itself can go through a telephone line or can be placed over a broadband connection, if available. The TS3000 System Console is equipped with an internal or external modem, depending on the model.

2.2 Stand-alone cluster: Components, functionality, and features

In general, a stand-alone cluster can be either a TS7720 or a TS7740 with its attached TS3500.

The TS7700 has several internal characteristics for HA (RAID 6 protection, dual power supplies, and so forth). However, a grid configuration is designed for an HA solution and provides the most redundant setup. See Chapter 3, “TS7700 usage considerations” on page 109.

The following sections provide general information about the components, functionality, and features used in a TS7700 environment. The general concepts and information in 2.2, “Stand-alone cluster: Components, functionality, and features” on page 25, also apply to multicluster grids. Only deviations and additional information for multicluster grids are described in 2.3, “Multicluster grid configurations: Components, functionality, and features” on page 49.

2.2.1 Views from the Host: Library IDs

All host interaction with tape data in a TS7700 Virtualization Engine is through virtual volumes and virtual tape drives.

You must be able to identify both the logical entity that represents the virtual drives and volumes and each physical cluster as a single entity. Therefore, two types of libraries exist: a composite library and a distributed library. Each type is associated with a library name and a Library ID.

Composite library

The composite library is the logical image of the stand-alone cluster or grid that is presented to the host. All logical volumes and virtual drives are associated with the composite library. In a stand-alone TS7700 Virtualization Engine, the host sees a logical tape library with sixteen 3490E tape control units. These control units each have sixteen IBM 3490E tape drives, and are attached through two or four FICON channel attachments. The composite library is defined through the Interactive Storage Management Facility (ISMF).
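The layout above gives 16 control units × 16 drives = 256 virtual devices per cluster, which matches the 256 virtual tape devices per cluster stated earlier. The Python sketch below enumerates them; the base device number 0x0A00 and the consecutive numbering scheme are hypothetical, because real device numbers come from your host I/O configuration.

```python
CONTROL_UNITS = 16   # emulated 3490E control units per cluster
DRIVES_PER_CU = 16   # emulated 3490E drives per control unit


def virtual_devices(base: int = 0x0A00):
    """Yield (control_unit, drive, device_number) for every virtual drive.

    `base` is a hypothetical starting device number; real numbers are
    assigned in the host I/O configuration.
    """
    for cu in range(CONTROL_UNITS):
        for drive in range(DRIVES_PER_CU):
            yield cu, drive, base + cu * DRIVES_PER_CU + drive


devices = list(virtual_devices())
print(len(devices))             # 256
print(f"{devices[-1][2]:04X}")  # 0AFF
```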

Figure 2-5 illustrates the host view of a stand-alone cluster configuration.

Figure 2-5 TS7700 Virtualization Engine stand-alone cluster configuration

Distributed library

Each cluster in a grid is a distributed library, which consists of a TS7700 Virtualization Engine. In a TS7740 Virtualization Engine, it is also attached to a physical TS3500 tape library. At the host, the distributed library is also defined to SMS. It is defined using the existing ISMF windows and has no tape devices defined. The virtual tape devices are defined to the composite library only.

A distributed library consists of the following cluster hardware components:

•A virtualization engine

•A TS7700 TVC

•A 3952-F05 frame

•Attachment to a physical library (TS7740 Virtualization Engine only)

•A number of physical tape drives (TS7740 Virtualization Engine only)

Important: A composite library ID must be defined both for a multicluster grid and for a stand-alone cluster. For a stand-alone cluster, the composite library ID must not be the same as the distributed library ID. For a multicluster grid configuration, the composite library ID must differ from all of the unique distributed library IDs. Both the composite library ID and the distributed library ID are five-digit hexadecimal strings.

The Library ID is used to tie the host’s definition of the library to the actual hardware.
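The library ID rules in the preceding note can be checked before the IDs are entered in ISMF and on the MI. The following is a minimal Python sketch, assuming the IDs are handled as strings; the function name and the sample IDs are hypothetical.

```python
import re

_LIB_ID = re.compile(r"[0-9A-F]{5}")  # five hexadecimal digits


def check_library_ids(composite: str, distributed: list) -> list:
    """Return a list of problems; an empty list means the IDs follow the rules."""
    problems = []
    comp = composite.upper()
    dists = [d.upper() for d in distributed]
    for lib_id in [comp] + dists:
        if not _LIB_ID.fullmatch(lib_id):
            problems.append(f"{lib_id!r} is not a five-digit hexadecimal ID")
    if comp in dists:
        problems.append("composite ID must differ from every distributed ID")
    if len(set(dists)) != len(dists):
        problems.append("distributed IDs must be unique")
    return problems


print(check_library_ids("C0001", ["D0001", "D0002"]))  # []
print(check_library_ids("D0001", ["D0001"]))
# ['composite ID must differ from every distributed ID']
```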

2.2.2 Tape volume cache

The TS7700 Virtualization Engine Tape Volume Cache (TVC) is a disk buffer to which virtual volumes are written and from which they are read.

The host operating system sees the TVC as virtual IBM 3490E Tape Drives, and the 3490 tape volumes are represented by storage space in a fault-tolerant disk subsystem. The host never writes directly to the physical tape drives attached to a TS7740 Virtualization Engine.

The following fault-tolerant TVC options are available. For TS7740 configurations utilizing CC6, CC7, or CC8 technology, the TVC is protected with RAID 5. For all TS7720 and TS7740 configurations using CC9 technology, RAID 6 is used.

These RAID configurations provide continuous data availability to users. If one disk (RAID 5) or up to two disks (RAID 6) in a RAID group become unavailable, the user data can be re-created dynamically from the remaining disks by using the parity data provided by the RAID implementation. The RAID groups contain global hot spare disks to take the place of a failed disk. Using parity, the RAID controller rebuilds the data from the failed disk onto the hot spare as a background task. This allows the TS7700 Virtualization Engine to continue working while the IBM SSR replaces the failed disk in the TS7700 Virtualization Engine Cache Controller or Cache Drawer.
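The parity-based rebuild can be illustrated with the single-parity (RAID 5) case: the parity block is the bytewise XOR of the data blocks in a stripe, so any one missing block equals the XOR of all surviving blocks. This Python sketch is a teaching model, not the cache controller's implementation. RAID 6 adds a second, independently computed parity so that two lost blocks can be recovered; that second parity is not shown here.

```python
from functools import reduce
from typing import List, Optional


def xor_blocks(blocks: List[bytes]) -> bytes:
    """Bytewise XOR of equal-length blocks."""
    if len({len(b) for b in blocks}) != 1:
        raise ValueError("blocks must have equal length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def rebuild(stripe: List[Optional[bytes]]) -> List[bytes]:
    """Recover the single missing block (marked None) in a RAID 5 stripe.

    `stripe` holds the data blocks plus the parity block; one None entry
    models one failed disk.
    """
    missing = [i for i, blk in enumerate(stripe) if blk is None]
    if len(missing) > 1:
        raise ValueError("single parity can rebuild only one lost block")
    restored = list(stripe)
    if missing:
        survivors = [blk for blk in stripe if blk is not None]
        restored[missing[0]] = xor_blocks(survivors)
    return restored


data = [b"TAPE", b"DATA", b"TVC!"]
stripe = data + [xor_blocks(data)]   # three data blocks plus parity
stripe[1] = None                     # simulate a failed disk
print(rebuild(stripe)[1])            # b'DATA'
```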

TVC management was introduced to keep the cache from filling up.

The following rules apply:

•The TVC is under exclusive control of the TS7700 Virtualization Engine.

•In a TS7740, if volumes are not in cache during a tape volume mount request, they are scheduled to be brought back into the disk cache from a physical tape device (recall).

•In a TS7740 Virtualization Engine configuration, if a modified virtual volume is closed and dismounted from the host, it is scheduled to be copied to a stacked volume (premigration).

•In a TS7740, if the TVC runs out of space, the cache management removes volumes that have already been copied to physical tape (premigrated).

•In a TS7740, by default, candidates for removal from cache are selected using a least recently used (LRU) algorithm.

•In a TS7740, user-defined policies manage the volumes that preferably are kept in cache.

•The TS7700 Virtualization Engine emulates a 3490E tape of a specific size. However, the space used in the TVC is the number of bytes of data written to the virtual volume after compression. When the TS7740 Virtualization Engine virtual volume is written to the physical tape, it uses only the space occupied by the compressed data.

•In a TS7720, if the TVC runs out of space, the client needs to delete the expired logical volumes.
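For a TS7740, the removal rules above can be sketched as a selection over volumes that already have a copy on physical tape, ordered least recently used first. In this Python sketch, the `keep_preferred` flag is a hypothetical stand-in for the user-defined cache preference policies; how the TS7700 actually weighs those policies is not modeled.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CachedVolume:
    volser: str
    size_mib: int        # space used in cache (after compression)
    last_access: float   # larger value = more recent access
    premigrated: bool    # True if a copy already exists on physical tape
    keep_preferred: bool = False  # hypothetical "prefer to keep in cache" policy


def select_for_removal(volumes: List[CachedVolume], needed_mib: int) -> List[str]:
    """Pick VOLSERs to remove from cache until `needed_mib` is freed.

    Only premigrated volumes are eligible, so no data is lost. Volumes
    without the keep preference go first; within each group, the least
    recently used volume goes first.
    """
    eligible = [v for v in volumes if v.premigrated]
    eligible.sort(key=lambda v: (v.keep_preferred, v.last_access))
    chosen, freed = [], 0
    for vol in eligible:
        if freed >= needed_mib:
            break
        chosen.append(vol.volser)
        freed += vol.size_mib
    return chosen


cache = [
    CachedVolume("A00001", 800, last_access=10, premigrated=True),
    CachedVolume("A00002", 800, last_access=5, premigrated=False),  # not on tape yet
    CachedVolume("A00003", 400, last_access=3, premigrated=True, keep_preferred=True),
    CachedVolume("A00004", 400, last_access=7, premigrated=True),
]
print(select_for_removal(cache, 1000))  # ['A00004', 'A00001']
```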

In a stand-alone TS7720 Virtualization Engine configuration, virtual volumes always remain in the TVC. They remain in the TVC because no physical tape drives are attached to the TS7720 Virtualization Engine.

As a TS7720 approaches full capacity, it issues “almost becoming full” and “becoming full” warning messages. If the TS7720 fills up, it enters a read-only state.

Important: Monitor your cache in a TS7720 stand-alone environment to avoid an “Out of Cache Resources” situation.

For more information about TVC management, see 2.2.12, “General TVC management in a stand-alone cluster” on page 34 and 2.3.20, “General TVC management in multicluster grids” on page 64.

2.2.3 Virtual volumes and logical volumes

A virtual volume is created in the TVC when the host writes data to the TS7700 Virtualization Engine subsystem. As long as the volume resides in cache, it is called a “virtual volume”.

When a TS7740 Virtualization Engine virtual volume is copied from the TVC to a physical tape cartridge, it becomes a logical volume. This process is called premigration. When the volume is removed from the TVC, the process is called migration. When a logical volume is moved from a physical cartridge to the TVC, the process is called recall. The volume becomes a virtual volume again.

We use logical volume as the overall term (whether it is in cache or not). We use the terms virtual volume and logical volume on stacked volume when necessary for the context.
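The terms above can be summarized as a small state machine for a TS7740 logical volume. This Python sketch is illustrative only: the premigrate, migrate, and recall events come from the text, while the state names and the "modify" transition (a host rewrite that makes the tape copy stale until the volume is premigrated again) are assumptions of this model.

```python
from enum import Enum


class State(Enum):
    RESIDENT = "virtual volume, in cache only"
    PREMIGRATED = "in cache and on a stacked volume"
    MIGRATED = "logical volume on a stacked volume only"


# (current state, event) -> next state
TRANSITIONS = {
    (State.RESIDENT, "premigrate"): State.PREMIGRATED,  # copied to physical tape
    (State.PREMIGRATED, "migrate"): State.MIGRATED,     # removed from the TVC
    (State.MIGRATED, "recall"): State.PREMIGRATED,      # read back into the TVC
    (State.PREMIGRATED, "modify"): State.RESIDENT,      # assumption: host rewrite
}


def apply(state: State, event: str) -> State:
    """Return the next state, or raise if the event is not valid here."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"{event!r} is not valid in state {state.name}") from None


s = State.RESIDENT
for event in ("premigrate", "migrate", "recall"):
    s = apply(s, event)
    print(event, "->", s.name)
# premigrate -> PREMIGRATED
# migrate -> MIGRATED
# recall -> PREMIGRATED
```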

Each logical volume, like a real volume, has the following characteristics:

•Has a unique volume serial number (VOLSER) known to the host.

•Is loaded and unloaded on a virtual device.

•Supports all tape write modes, including Tape Write Immediate mode.

•Contains all standard tape marks and data blocks.

•Supports an IBM or ISO/ANSI standard label.

•Can be appended to after it is initially written from the beginning of tape (BOT).

•The application is notified that the write operation is complete when the data is written to a buffer in the vNode. The buffer is implicitly or explicitly synchronized with the TVC during operation. Tape Write Immediate mode suppresses write data buffering.

•Each host-written record has a logical block ID.

•The end of volume is signaled when the total number of bytes written into the TVC after compression reaches one of the following limits:

– 400 MiB for an emulated cartridge system tape (CST).

– 800 MiB for an emulated enhanced capacity cartridge system tape (ECCST) volume.

– 1000, 2000, 4000, or 6000 MiB using the larger volume size options that are assigned via Data Class.

– An RPQ allows 25,000 MiB on TS7720-only configurations.

The default logical volume sizes of 400 MiB or 800 MiB are defined at insert time. These volume sizes can be overridden at every individual scratch mount by using a Data Class construct option.
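The end-of-volume rule can be expressed as a lookup of the size limit followed by a comparison with the compressed bytes written to the TVC. In this Python sketch, the media names and sizes come from the list above; passing `None` for "no Data Class size override" is an assumption of the sketch, and the 25,000 MiB RPQ size is omitted.

```python
from typing import Optional

MIB = 1024 * 1024

# Default logical volume sizes by emulated media type (MiB).
MEDIA_DEFAULT_MIB = {"MEDIA1": 400, "MEDIA2": 800}  # CST, ECCST
# Larger sizes that can be assigned through a Data Class (MiB).
DATA_CLASS_SIZES_MIB = {1000, 2000, 4000, 6000}


def volume_limit_mib(media: str, data_class_mib: Optional[int] = None) -> int:
    """Return the size limit in MiB that applies to a scratch mount."""
    if data_class_mib is None:
        return MEDIA_DEFAULT_MIB[media]
    if data_class_mib not in DATA_CLASS_SIZES_MIB:
        raise ValueError(f"unsupported Data Class size {data_class_mib} MiB")
    return data_class_mib


def end_of_volume(compressed_bytes: int, media: str,
                  data_class_mib: Optional[int] = None) -> bool:
    """True when the compressed data written to the TVC reaches the limit."""
    return compressed_bytes >= volume_limit_mib(media, data_class_mib) * MIB


print(volume_limit_mib("MEDIA2"))                # 800
print(end_of_volume(500 * MIB, "MEDIA1"))        # True
print(end_of_volume(500 * MIB, "MEDIA1", 4000))  # False
```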

Virtual volumes can exist only in a TS7700 Virtualization Engine. You can direct data to a virtual tape library by assigning a system-managed tape Storage Group through the ACS routines. SMS passes Data Class, Management Class, Storage Class, and Storage Group names to the TS7700 as part of the mount operation. The TS7700 Virtualization Engine uses these constructs outboard to further manage the volume. This process uses the same policy management constructs defined through the ACS routines.

Beginning with TS7700 Virtualization Engine R2.0, a maximum of 2,000,000 virtual volumes per stand-alone cluster or multicluster grid was introduced. With a V07 or VEB server at R3.0, a maximum of 4,000,000 virtual volumes per stand-alone cluster or multicluster grid is now supported. The default maximum number of supported logical volumes is still 1,000,000 per grid. Support for additional logical volumes can be added in increments of 200,000 volumes by using FC5270.
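The number of FC5270 increments needed for a target volume count follows from the figures above: a default of 1,000,000 volumes, increments of 200,000, and a maximum of 4,000,000 with a V07 or VEB server at R3.0. For example, reaching the maximum requires (4,000,000 - 1,000,000) / 200,000 = 15 increments. A small Python sketch of that arithmetic (the function name is hypothetical):

```python
DEFAULT_VOLUMES = 1_000_000
INCREMENT = 200_000   # volumes added per FC5270 increment
MAXIMUM = 4_000_000   # R3.0 with a V07 or VEB server


def fc5270_increments(target_volumes: int) -> int:
    """Number of FC5270 increments needed to support `target_volumes`."""
    if target_volumes > MAXIMUM:
        raise ValueError("target exceeds the supported maximum")
    extra = max(0, target_volumes - DEFAULT_VOLUMES)
    return -(-extra // INCREMENT)  # integer ceiling division


print(fc5270_increments(4_000_000))  # 15
print(fc5270_increments(1_250_000))  # 2
```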

Larger capacity volumes (beyond 400 MiB and 800 MiB) can be defined through Data Class and associated with CST (MEDIA1) or ECCST (MEDIA2) emulated media.

The VOLSERs for the logical volumes are defined through the management interface. Virtual volumes go through the same cartridge entry processing as native cartridges inserted into a tape library attached directly to a System z host.

The management interface uses categories to group volumes. After virtual volumes are inserted through the management interface, they are placed in the insert category and handled exactly like native cartridges. When the TS7700 Virtualization Engine is varied online to a host or after an insert event occurs, the host operating system interacts with the library through the object access method (OAM). Depending on the definitions in the DEVSUPxx and EDGRMMxx parmlib members, the host operating system assigns newly inserted volumes to a particular scratch category. The host system requests a particular category when it needs scratch tapes, and the Library Manager knows which group of volumes to use to satisfy the scratch request.

Data compression is based on the IBMLZ1 algorithm and is performed by the FICON channel card in a TS7700 Virtualization Engine node. The actual host data stored on a virtual CST or ECCST volume can vary from 1,200 MiB to 18,000 MiB (assuming a 3:1 compression ratio). For the TS7720, a 75,000 MiB volume (assuming a 3:1 compression ratio) is also available through RPQ.
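These host-data figures follow from multiplying the logical volume size limit by the assumed 3:1 compression ratio:

```latex
\begin{aligned}
400~\text{MiB} \times 3 &= 1{,}200~\text{MiB} \quad (\text{smallest, CST}) \\
6{,}000~\text{MiB} \times 3 &= 18{,}000~\text{MiB} \quad (\text{largest Data Class size}) \\
25{,}000~\text{MiB} \times 3 &= 75{,}000~\text{MiB} \quad (\text{TS7720, RPQ})
\end{aligned}
```

Actual results depend on how compressible the data is; 3:1 is only the planning assumption used in the text.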

2.2.4 Mounting a scratch virtual volume

When a request for a scratch mount is issued to the TS7700 Virtualization Engine, the request specifies a mount category. The TS7700 Virtualization Engine selects a virtual VOLSER from the candidate list of scratch volumes in the category.

Scratch volumes at the mounting cluster are chosen using the following priority order:

1. All volumes in the source or alternate source category that are owned by the local cluster, not currently mounted, and do not have pending reconciliation changes against a peer cluster.

2. All volumes in the source or alternate source category that are owned by any available cluster, not currently mounted, and do not have pending reconciliation changes against a peer cluster.

3. All volumes in the source or alternate source category that are owned by any available cluster and not currently mounted.

4. All volumes in the source or alternate source category that can be taken over from an unavailable cluster that has an explicit or implied takeover mode enabled.

Within each step, the volumes that have been in the source category the longest are chosen first. Volume serials are also toggled between odd and even serials for each volume selection.
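The four-step priority order can be sketched as a sequence of filters, where the first step that yields any candidate wins. This is an illustrative model only, not the TS7700 microcode: the `ScratchVolume` fields, the `select_scratch` function, and the way odd/even toggling is applied (prefer a serial whose last digit has the requested parity, otherwise take the oldest candidate) are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass
class ScratchVolume:
    volser: str
    owner: str                # cluster that currently owns the volume
    entered_category: float   # time the volume entered the scratch category
    mounted: bool = False
    pending_reconcile: bool = False  # reconciliation changes pending against a peer


def select_scratch(volumes: Iterable[ScratchVolume], local: str, available: Set[str],
                   takeover_ok: Set[str], prefer_odd: bool) -> Optional[ScratchVolume]:
    """Pick a scratch volume using the four-step priority order (hypothetical model).

    volumes     -- candidates already limited to the source/alternate source category
    available   -- names of available clusters (including the local cluster)
    takeover_ok -- unavailable clusters with explicit or implied takeover enabled
    prefer_odd  -- serial parity to favour on this selection; the caller toggles it
    """
    volumes = list(volumes)
    steps = [
        lambda v: v.owner == local and not v.mounted and not v.pending_reconcile,
        lambda v: v.owner in available and not v.mounted and not v.pending_reconcile,
        lambda v: v.owner in available and not v.mounted,
        lambda v: v.owner in takeover_ok,
    ]
    for accept in steps:
        # Oldest entries in the category come first within a step.
        candidates = sorted((v for v in volumes if accept(v)), key=lambda v: v.entered_category)
        if not candidates:
            continue
        for v in candidates:
            last = v.volser[-1]
            if last.isdigit() and (int(last) % 2 == 1) == prefer_odd:
                return v
        return candidates[0]  # no serial with the requested parity in this step
    return None
```

Because a later step is consulted only when every earlier step is empty, a locally owned, reconciled volume is always preferred over a volume that would need ownership transfer or takeover.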

For all scratch mounts, the volume is temporarily initialized as though the volume had been initialized using the EDGINERS or IEHINITT program, and will have an IBM standard label consisting of a VOL1 record, an HDR1 record, and a tape mark. If the volume is modified, the temporary header information is applied to a file in the TVC. If the volume is not modified, the temporary header information is discarded and any previously written content (if it exists) is not modified. Besides choosing a volume, TVC selection processing is used to choose which TVC acts as the I/O TVC, as described in 2.3.4, “I/O TVC selection” on page 54.

Important: In Release 3.0 of the TS7700 Virtualization Engine, all categories that are defined as scratch inherit the Fast Ready attribute. There is no longer a need to use the MI to set the Fast Ready attribute to scratch categories; however, the MI is still needed to indicate which categories are scratch.

When the Fast Ready attribute is set or implied, no recall of content from physical tape is required in a TS7740. No mechanical operation is required to mount a logical scratch volume.

The TS7700 Virtualization Engine with scratch allocation assistance (SAA) function activated uses policy management in conjunction with z/OS host software to direct scratch allocations to specific clusters within a multicluster grid.

2.2.5 Mounting a specific virtual volume

In a stand-alone environment, the mount is directed to the virtual drives of this cluster. In a grid environment, specific mounts are more complex. See 2.3.12, “Mounting a specific virtual volume” on page 60.

In the stand-alone environment, the following scenarios are possible:

1. There is a valid copy in the TVC. In this case, the mount is signaled as complete and the host can access the data immediately.

2. There is no valid copy in the TVC. In this case, there are further options:

a. If it is a TS7720, the mount fails.

b. If it is a TS7740, the virtual volume is recalled from a stacked volume. Mount completion is signaled to the host system only after the entire volume is available in the TVC.
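The stand-alone decision above reduces to two questions: is there a valid copy in the TVC, and, if not, does the cluster have a back end to recall from? The sketch below encodes just that decision; the `Outcome` enum and `specific_mount_outcome` function are hypothetical names used for illustration.

```python
from enum import Enum


class Outcome(Enum):
    COMPLETE = "mount complete, data available immediately"
    RECALL_THEN_COMPLETE = "recall from stacked volume, then signal mount complete"
    FAILED = "mount fails"


def specific_mount_outcome(model: str, valid_copy_in_tvc: bool) -> Outcome:
    """Stand-alone specific-mount decision as described above (illustrative only)."""
    if valid_copy_in_tvc:
        return Outcome.COMPLETE
    if model == "TS7720":
        return Outcome.FAILED  # disk-only cluster: no stacked volume to recall from
    if model == "TS7740":
        # Completion is signalled only after the entire volume is in the TVC.
        return Outcome.RECALL_THEN_COMPLETE
    raise ValueError(f"unknown model: {model}")
```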

The recalled virtual volume remains in the TVC until it becomes the LRU volume, unless the volume was assigned a Preference Group of 0 or the Recalls Preferred to be Removed from Cache override is enabled by using the TS7700 Library Request command.

If the mounted virtual volume was modified, the volume is again pre-migrated.

If modification of the virtual volume did not occur when it was mounted, the TS7740 Virtualization Engine does not schedule another copy operation and the current copy of the logical volume on the original stacked volume remains active. Furthermore, copies to remote TS7700 Virtualization Engine clusters in a grid configuration are not required if modifications were not made.

In a z/OS environment, to mount a specific volume in the TS7700 Virtualization Engine, that volume must reside in a private category within the library. The tape management system prevents a scratch volume from being mounted in response to a specific mount request. Also, the TS7700 Virtualization Engine treats any specific mount that targets a volume currently assigned to a scratch (Fast Ready) category as a host scratch mount; in Release 3.0 of the TS7700, all scratch categories are Fast Ready. If this occurs, the temporary tape header is created and no recalls take place.

In this case, DFSMSrmm or another tape management system fails the mount operation because the expected last written data set for the private volume was not found. Because no write operation occurs, the original volume’s contents are left intact, which protects against categories being incorrectly configured as scratch (Fast Ready) within the management interface.

2.2.6 Logical WORM (LWORM) support and characteristics

TS7700 Virtualization Engine supports the logical Write Once Read Many (LWORM) function through TS7700 Virtualization Engine software emulation. The host views the TS7700 Virtualization Engine as an LWORM-compliant library that contains WORM-compliant 3490E logical drives and media.

The LWORM implementation of the TS7700 Virtualization Engine emulates physical WORM tape drives and media. TS7700 Virtualization Engine provides the following functions:

•Provides an advanced function Data Class construct property that allows volumes to be assigned as LWORM-compliant during the volume’s first mount, where a write operation from BOT is required, or during a volume’s reuse from scratch, where a write from BOT is required.

•Generates, during the assignment of LWORM to a volume’s characteristics, a temporary worldwide identifier that is surfaced to host software during host software open and close processing, and then bound to the volume during the first write from BOT.

•Generates and maintains a persistent Write-Mount Count for each LWORM volume and keeps the value synchronized with host software.

•Allows only appends to LWORM volumes using physical WORM append guidelines.

•Provides a mechanism through which host software commands can discover LWORM attributes for a given mounted volume.
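To make the binding and append-only rules concrete, here is a toy model of an LWORM volume. It is a simplification under stated assumptions: the class `LwormVolume` and its methods are hypothetical, `uuid4` merely stands in for the real worldwide identifier format, and "append" is reduced to "write exactly at the current end of data" rather than the full physical WORM append guidelines.

```python
import uuid
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class LwormVolume:
    """Toy model of an LWORM logical volume (hypothetical, for illustration)."""
    volser: str
    temp_wwid: Optional[str] = None  # generated when LWORM is assigned
    wwid: Optional[str] = None       # bound to the volume on the first write from BOT
    write_mount_count: int = 0
    records: List[bytes] = field(default_factory=list)

    def assign_lworm(self) -> str:
        """Called on first mount or reuse from scratch; produces a temporary ID."""
        self.temp_wwid = uuid.uuid4().hex  # stand-in for the real identifier format
        self.wwid = None
        return self.temp_wwid

    def mount_for_write(self) -> int:
        """Persistent Write-Mount Count, kept in step with host software."""
        self.write_mount_count += 1
        return self.write_mount_count

    def write(self, position: int, record: bytes) -> None:
        """Accept a write from BOT (which binds the ID) or an append at end of data."""
        if self.wwid is None:
            if self.temp_wwid is None:
                raise PermissionError("LWORM has not been assigned to this volume")
            if position != 0:
                raise PermissionError("the first write must start at BOT")
            self.wwid = self.temp_wwid  # bind the identifier to the volume
            self.records = []           # a write from BOT replaces any prior content
        elif position != len(self.records):
            raise PermissionError("LWORM volumes accept appends only")
        self.records.append(record)
```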

No method is available to convert previously written volumes to LWORM volumes without having to read the contents and rewrite them to a new logical volume that has been bound as an LWORM volume.

Clarification: Cohasset Associates, Inc., has assessed the logical WORM capability of the TS7700 Virtualization Engine. The conclusion is that the TS7700 Virtualization Engine meets all SEC requirements in Rule 17a-4(f), which expressly allows records to be retained on electronic storage media.

2.2.7 Virtual drives

From a host perspective, each TS7700 Virtualization Engine appears as sixteen logical IBM 3490E tape control units. Each control unit has sixteen unique drives attached through FICON channels. Virtual tape drives and control units are defined just like physical IBM 3490s through the hardware configuration definition (HCD). Defining a preferred path for the virtual drives provides no benefit, because the IBM 3490 control unit functions inside the TS7700 Virtualization Engine are emulated to the host.
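Sixteen control units with sixteen drives each give 256 virtual drives per cluster. The sketch below maps a zero-based virtual device index to its logical control unit and drive position. The zero-based index and the function name `locate_virtual_drive` are assumptions for illustration; actual device addresses come from your HCD definitions.

```python
from typing import Tuple

DRIVES_PER_LCU = 16
LCUS_PER_CLUSTER = 16


def locate_virtual_drive(index: int) -> Tuple[int, int]:
    """Map a zero-based virtual device index to (logical control unit, drive)."""
    if not 0 <= index < DRIVES_PER_LCU * LCUS_PER_CLUSTER:
        raise ValueError("index out of range for one cluster")
    return divmod(index, DRIVES_PER_LCU)
```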

Each virtual drive has the following characteristics of physical tape drives:

•Uses host device addressing

•Is included in the I/O generation for the system

•Is varied online or offline to the host

•Signals when a virtual volume is loaded

•Responds and processes all IBM 3490E I/O commands

•Becomes not ready when a virtual volume is rewound and unloaded

For software transparency reasons, the functionality of the 3490E integrated cartridge loader (ICL) is also included in the virtual drive’s capability. All virtual drives indicate that they have an ICL. For scratch mounts, using the emulated ICL in the TS7700 Virtualization Engine to preload virtual cartridges is of no benefit.

2.2.8 Selective device access control

Due to the expanding capacity of a multicluster grid configuration, there is an increasing need to share the tape hardware investments between multiple host systems. Selective device access control (SDAC) meets this need by providing a secure method of hard partitioning. The primary intent of this function is to prevent one host logical partition (LPAR)/sysplex with an independent tape management system from inadvertently modifying or removing data owned by another host. This is valuable in a setup where a production system and a test system have different security settings on the hosts and you want to separate their access to the grid more securely. It can also be used by a multitenant service provider to prevent tenants from accessing each other’s data.

Hard partitioning is a way to assign a fixed number of logical control units (LCUs) to a defined host group and connect the units to a range of logical volumes dedicated to a particular host or hosts. SDAC is a useful function when multiple partitions have these characteristics:

•Separate volume ranges

•Separate tape management system

•Separate tape configuration database

SDAC allows you to define a subset of all the logical devices per host (control units in ranges of 16 devices based on the LIBPORT definitions in HCD) and enables exclusive control on host-initiated mounts, ejects, and attribute or category changes. The implementation of SDAC is described in more detail in 6.4, “Implementing Selective Device Access Control” on page 311. Implementing SDAC requires planning and orchestration with other system areas to map the desired access for the device ranges from individual servers or logical partitions and consolidate this information in a coherent input/output definition file (IODF) (HCD). From the TS7700 subsystem standpoint, SDAC definitions are set up using the TS7700 Virtualization Management Interface.
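The partitioning rule can be sketched as two checks: device ranges must fall on 16-device (one LCU) boundaries, and a host-initiated mount is honored only when both the device and the volume belong to the same host group. This is a hypothetical model, not the SDAC implementation: devices are zero-based indexes, VOLSER ranges are compared as equal-length strings, and all function names are illustrative.

```python
from typing import Iterable, Set, Tuple

DEVICES_PER_RANGE = 16  # one logical control unit


def ranges_are_aligned(first_device: int, count: int) -> bool:
    """A device partition must start and end on a 16-device boundary."""
    return count > 0 and first_device % DEVICES_PER_RANGE == 0 and count % DEVICES_PER_RANGE == 0


def lcus_for(first_device: int, count: int) -> Set[int]:
    """LCUs covered by an aligned device range."""
    return set(range(first_device // DEVICES_PER_RANGE, (first_device + count) // DEVICES_PER_RANGE))


def mount_allowed(device: int, volser: str, group_lcus: Set[int],
                  group_volume_ranges: Iterable[Tuple[str, str]]) -> bool:
    """Allow a host-initiated mount only if device and volume both belong to the group."""
    lcu = device // DEVICES_PER_RANGE
    in_range = any(lo <= volser <= hi for lo, hi in group_volume_ranges)
    return lcu in group_lcus and in_range
```

The same membership test would apply to the other protected operations (ejects and attribute or category changes); only mounts are shown to keep the sketch short.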

Note: SDAC is based on the availability of LIBPORT definitions or another equivalent way to define device ranges and administratively protect those assignments. Device partitions must be defined on 16 device boundaries to be compatible with SDAC.

2.2.9 Physical drives

The physical tape drives used by a TS7740 Virtualization Engine are installed in a TS3500 Tape Library. The physical tape drives are not addressable by any attached host system, and are controlled by the TS7740 Virtualization Engine. The TS7740 Virtualization Engine supports IBM 3592-J1A, TS1120, TS1130, and TS1140 physical tape drives. For more information, see 2.5.3, “TS7740 Virtualization Engine components” on page 93.

Remember: Do not change the assignment of physical tape drives attached to a TS7740 in the Drive Assignment window of the TS3500 IBM Tape Library - Advanced Library Management System (ALMS) web interface. Consult your IBM SSR for configuration changes.

2.2.10 Stacked volume

Physical cartridges used by the TS7740 Virtualization Engine to store logical volumes are under the control of the TS7740 Virtualization Engine node. The physical cartridges are not known to the hosts. Physical volumes are called stacked volumes. Stacked volumes must have unique, system-readable VOLSERs and external labels like any other cartridges in a tape library.

Remember: Stacked volumes do not need to be initialized before inserting them into the TS3500. However, the internal labels must match the external labels if they were previously initialized.

After the host closes and unloads a virtual volume, the storage management software inside the TS7740 Virtualization Engine schedules the virtual volume to be copied (also known as premigration) onto a physical tape cartridge. The TS7740 Virtualization Engine attempts to maintain a mounted stacked volume to which virtual volumes are copied. Therefore, mount activity is reduced because only one physical cartridge is mounted to service multiple virtual volume premigration requests that target the same physical volume pool.

Remember that virtual volumes are already compressed and are written in that compressed format to the stacked volume. This procedure maximizes the use of a cartridge’s storage capacity.

A logical volume that cannot fit in the currently filling stacked volume does not span across two or more physical cartridges. Instead, the stacked volume is marked full and the logical volume is written on another stacked volume from the assigned pool.
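The no-spanning rule behaves like a simple "next fit" packing: keep filling the mounted stacked volume, and when the next logical volume does not fit, mark the cartridge full and take a new scratch cartridge from the same pool. The sketch below assumes sizes are the already-compressed sizes in MiB; the `StackedVolume` class, the `premigrate` function, and the capacities used in any example are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class StackedVolume:
    volser: str
    capacity_mib: int
    used_mib: int = 0
    full: bool = False
    contents: List[str] = field(default_factory=list)


def premigrate(logical_volumes: List[Tuple[str, int]],
               pool_scratch: List[StackedVolume]) -> List[StackedVolume]:
    """Write (name, compressed size in MiB) logical volumes to stacked volumes of one pool.

    A logical volume never spans cartridges: if it does not fit on the currently
    filling stacked volume, that volume is marked full and the next scratch
    stacked volume from the pool is used.
    """
    used: List[StackedVolume] = []
    current = None
    for name, size in logical_volumes:
        if current is not None and current.used_mib + size > current.capacity_mib:
            current.full = True
            current = None
        if current is None:
            if not pool_scratch:
                raise RuntimeError("pool has no scratch stacked volumes")
            if size > pool_scratch[0].capacity_mib:
                raise ValueError(f"{name} is larger than a whole cartridge")
            current = pool_scratch.pop(0)
            used.append(current)
        current.contents.append(name)
        current.used_mib += size
    return used
```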

For business reasons, it might be necessary to separate logical volumes from each other (selective dual write, multi-client environment, or encryption requirements). Therefore, you can influence the location of the data by using volume pooling. For more information, see “Physical volume pooling” on page 41.

Through the TS3500 Tape Library Specialist, define which physical cartridges are to be used by the TS7740 Virtualization Engine. When you use pooling, your stacked volumes can be assigned to individual pools. Logical volumes stored on those cartridges are mapped by the TS7740 Virtualization Engine internal storage management software. Logical volumes can then be assigned to specific stacked volume pools.

2.2.11 Selective Dual Copy function

In a stand-alone cluster, a logical volume and its internal data usually exist as a single entity that is copied to a single stacked volume. If the stacked volume is damaged, you can lose access to the data within the logical volume. Without the Selective Dual Copy function, the only way to ensure data availability is to use host software to duplex the logical volume, or to set up a grid environment.

With the Selective Dual Copy function, storage administrators have the option to selectively create two copies of logical volumes within two pools of a TS7740 Virtualization Engine.

The Selective Dual Copy function can be used along with the Copy Export function to provide a secondary offsite physical copy for DR purposes. For more details concerning Copy Export, see 2.2.23, “Copy Export function” on page 45.

The second copy of the logical volume is created in a separate physical pool, which ensures physical cartridge separation. Control of Dual Copy is through the Management Class construct (see “Management Classes” on page 237). The second copy is created when the original volume is pre-migrated.

Important: Ensure that reclamation in the secondary physical volume pool is self-contained (the secondary volume pool reclaims onto itself) to keep secondary pool cartridges isolated from the others. Otherwise, Copy Export DR capabilities can be compromised.

The second copy created through the Selective Dual Copy function provides a backup for when the primary volume is damaged or inaccessible, and it is used only in that case. It cannot be accessed separately, and it cannot be used if the primary volume is being used by another operation.

Selective Dual Copy is defined to the TS7740 Virtualization Engine and has the following characteristics:

•The copy feature is enabled by the Management Class setting through the management interface where you define the secondary pool.

•Secondary and primary pools can be intermixed:

– A primary pool for one logical volume can be the secondary pool for another logical volume unless the secondary pool is used as a Copy Export pool.

– Multiple primary pools can use the same secondary pool.

•At Rewind Unload time, the secondary pool assignment is determined and the copy of the logical volume is scheduled. The scheduling of the backup is determined by the premigration activity occurring in the TS7740 Virtualization Engine.

•The copy is created before the primary volume being migrated is moved out of cache.
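The pool pairing rules listed above can be checked mechanically before you commit Management Class definitions. The following sketch is a hypothetical validation helper, not a TS7740 function: it treats each Management Class as a (primary, secondary) pool pair and reports a secondary pool equal to its primary, or a Copy Export secondary pool that is also used as some other class's primary pool. Multiple primaries sharing one secondary pool are allowed, as stated in the text.

```python
from typing import Dict, List, Optional, Set, Tuple


def validate_dual_copy(policies: Dict[str, Tuple[int, Optional[int]]],
                       copy_export_pools: Set[int]) -> List[str]:
    """Check Management Class pool pairings against the Selective Dual Copy rules.

    policies          -- Management Class name -> (primary pool, secondary pool or None)
    copy_export_pools -- pools used for Copy Export
    Returns a list of problems; an empty list means the pairings are consistent.
    """
    problems = []
    primaries = {primary for primary, _ in policies.values()}
    for mc, (primary, secondary) in policies.items():
        if secondary is None:
            continue  # no second copy for this class
        if secondary == primary:
            problems.append(f"{mc}: secondary pool must differ from the primary pool")
        if secondary in copy_export_pools and secondary in primaries:
            problems.append(f"{mc}: Copy Export pool {secondary} is also used as a primary pool")
    return problems
```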

2.2.12 General TVC management in a stand-alone cluster

In a TS7700 environment, each cluster actively monitors and evaluates key resources and workflow status to improve average mount response times and to avoid an “Out of cache resources” situation.

The following resources are monitored:

•The amount of data in cache that needs to be copied to a peer

•The amount of data resident in the cache

•Number of physical scratch volumes (TS7740)

•Number of available physical tape drives (TS7740)

You can use the Host Console Request function to modify many of the workflow management controls of the TS7700 and to set alert thresholds for many of the resources that the TS7700 manages. Before going into detail, remember these basics:

1. The TS7740 has back-end drives and tapes. Virtual volumes are migrated from the TVC to stacked volumes and recalled from stacked volumes into the TVC.

2. If you do not have enough stacked volumes to migrate the content of the TVC, the TS7740 stops working.

3. The TS7720 has no back-end tape drives to offload data to. Therefore, when its disk cache becomes full, it enters a read-only state. In a grid configuration, additional considerations for TVC management are necessary; in the stand-alone environment, TVC management is limited.

The TS7700 cluster manages the TVC cache. You cannot influence the way that the cluster performs these actions. However, you can define which data to keep longer in the TVC and which data is preferably removed from cache.

Next, the following topics are described:

•Short introduction of how you control the contents of cache

•Description of how the TVC cache management mechanism works

•Description of which TVC cache management processes exist

How you control the content of the TVC (TS7720 and TS7740)

You control the content through the Storage Class construct. Through the management interface, you can define one or more Storage Class names and assign Preference Level 0 or 1 to them.

In a z/OS environment, the Storage Class name assigned to a volume in the ACS routine is directly passed to the TS7700 Virtualization Engine and mapped to the pre-defined constructs. Figure 2-6 shows this process.

If the host passes a previously undefined Storage Class name to the TS7700 Virtualization Engine during a scratch mount request, the TS7700 Virtualization Engine adds the name using the definitions for the default Storage Class.

Define Storage Classes: Ensure that you predefine the Storage Classes. The default Storage Class might not support your needs.

In environments that are not z/OS (SMS) environments, a Storage Class can be assigned to a range of logical volumes during insert processing by using the management interface. The Storage Class can also be updated for a range of volumes through the management interface after they have been inserted.

Figure 2-6 TS7740 TVC management through Storage Class

Remember: Monitor your TS7720 TVC in a stand-alone cluster on a regular basis to avoid “Out of Cache Resource” conditions.

To be compatible with the Initial Access Response Time Seconds (IART) method of setting the preference level, the Storage Class definition also allows a Use IART selection to be assigned.

Even before Outboard Policy Management was made available for the previous generation VTS, you had the ability to assign a preference level to virtual volumes by using the IART attribute of the Storage Class. The IART is a Storage Class attribute that was originally added to specify the desired response time (in seconds) for an object using the OAM. If you want a virtual volume to remain in cache, you assign the volume a Storage Class whose IART value is 99 seconds or less. Conversely, if you want to give a virtual volume preference to be out of cache, you assign the volume a Storage Class whose IART value is 100 seconds or more.

If the Use IART selection is not specified, the TS7700 Virtualization Engine sets the preference level for the volume based on the Preference Level 0 or 1 of the Storage Class assigned to the volume.
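The two ways of deriving a preference level can be summarized in a few lines. In this sketch the `StorageClass` fields and the `effective_preference` function are hypothetical; the IART boundary (99 seconds or less keeps the volume in cache as PG1, 100 seconds or more makes it PG0) comes from the text above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class StorageClass:
    name: str
    preference_level: int               # 0 or 1, as defined in the management interface
    use_iart: bool = False
    iart_seconds: Optional[int] = None  # IART value supplied by the host (hypothetical field)


def effective_preference(sc: StorageClass) -> int:
    """Return the preference level (0 or 1) that applies to a volume with this class."""
    if sc.use_iart:
        if sc.iart_seconds is None:
            raise ValueError("Use IART is selected but no IART value was supplied")
        return 1 if sc.iart_seconds <= 99 else 0
    return sc.preference_level
```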

2.2.13 Expired virtual volumes and Delete Expired function

To be compatible with traditional tape data processing, expired logical volumes were only “deleted” when the logical volume was reused and its content overwritten. In a virtual tape environment, that processing might result in the following situations:

•TVCs might fill up with large amounts of expired data.

•Stacked volumes might retain an excessive amount of expired data.

To avoid these situations, the Delete Expired function was introduced.

With expired volume management, you can set a “grace period” for expired volumes ranging from one hour to approximately 144 weeks (default is 24 hours). After that period has elapsed, expired volumes become candidates for deletion. The expired volume can be deleted from the TVC or back-end physical tape and it is marked as non-valid content on the stacked volumes.

The elapsed time starts when the volume is moved to a designated scratch category or a category with the Fast Ready attribute set. If the logical volume is reused during a scratch mount before the expiration delete time expires, the existing content is immediately deleted at the time of first write. The Delete Expired volume attribute is honored regardless of where the logical volume actually resides. By default, up to 1,000 delete-expire candidates are deleted per hour. This value can be modified through the LI REQ command. Starting with Release 2.1 of the TS7700, volumes being held can be moved to a private (non-Fast Ready) category in case they were accidentally returned to scratch.

For details about expired volume management, see 5.3.6, “Defining the logical volume expiration time” on page 233.

The explicit movement of a volume out of the Delete Expired configured category can occur before the expiration of this volume.
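The Delete Expired rules above (a grace period between one hour and roughly 144 weeks, a 24-hour default, a per-hour cap of 1,000 candidates by default, and no deletion once a volume has been moved out of the configured category) can be sketched as a candidate filter. The function name, the tuple layout, and the oldest-first ordering are assumptions for illustration; the exact upper bound of the grace period is also approximated as 144 weeks.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple

MIN_GRACE = timedelta(hours=1)
MAX_GRACE = timedelta(weeks=144)  # "approximately 144 weeks"; exact limit assumed
DEFAULT_GRACE = timedelta(hours=24)
DEFAULT_HOURLY_LIMIT = 1000       # adjustable through LI REQ according to the text


def delete_expired_candidates(volumes: Iterable[Tuple[str, datetime, bool]], now: datetime,
                              grace: timedelta = DEFAULT_GRACE,
                              limit: int = DEFAULT_HOURLY_LIMIT) -> List[str]:
    """Return up to `limit` VOLSERs whose grace period has elapsed.

    volumes -- (volser, time moved to the Delete Expired category, still in that category)
    """
    if not MIN_GRACE <= grace <= MAX_GRACE:
        raise ValueError("grace period outside the supported range")
    due = [
        (scratched_at, volser)
        for volser, scratched_at, in_category in volumes
        if in_category and now - scratched_at >= grace  # moved-out volumes are skipped
    ]
    due.sort()  # oldest first (ordering is an assumption of this sketch)
    return [volser for _, volser in due[:limit]]
```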

Important: Disregarding the Delete Expired Volumes setting can lead to an out-of-cache state in a TS7720 Virtualization Engine. With a TS7740 Virtualization Engine, it can cause excessive tape usage, or in an extreme condition, an out-of-physical scratch state.

The disadvantage of not having this option enabled is that scratched volumes needlessly consume TVC and physical stacked volume resources, therefore demanding more TVC active space while also requiring more physical stacked volumes in a TS7740 Virtualization Engine. The time that it takes a physical volume to fall below the reclamation threshold is also increased because the data is still considered active. This delay in data deletion also causes scratched stale volumes to be moved from one stacked volume to another during reclamation.

Expire Hold settings

An additional option, Expire Hold, can be enabled when the Delete Expired function is enabled. With Expire Hold enabled, the volume cannot be accessed by any host-initiated command until the grace period has elapsed.

This additional option is made available to prevent any malicious or unintended overwriting of scratched data before the duration elapses. After the grace period expires, the volume is simultaneously removed from a held state and made a deletion candidate.

Restriction: Volumes in the Expire Hold state are excluded from DFSMS OAM scratch counts and are not candidates for TS7700 scratch mounts.

Expired data on a physical volume remains readable through salvage processing until the volume has been completely overwritten with new data.
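The Expire Hold behavior described above comes down to one predicate: a volume is held while Expire Hold is enabled and its grace period has not yet elapsed. Held volumes refuse host-initiated commands and are left out of scratch candidates. The function names and the (volser, time scratched) tuple layout in this sketch are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Tuple


def is_held(expire_hold: bool, scratched_at: datetime, now: datetime, grace: timedelta) -> bool:
    """A volume is held while Expire Hold is on and the grace period is still running."""
    return expire_hold and now - scratched_at < grace


def host_command_allowed(expire_hold: bool, scratched_at: datetime, now: datetime,
                         grace: timedelta) -> bool:
    return not is_held(expire_hold, scratched_at, now, grace)


def scratch_candidates(volumes: Iterable[Tuple[str, datetime]], expire_hold: bool,
                       now: datetime, grace: timedelta) -> List[str]:
    """Held volumes are excluded from scratch counts and scratch mounts."""
    return [volser for volser, t in volumes if not is_held(expire_hold, t, now, grace)]
```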

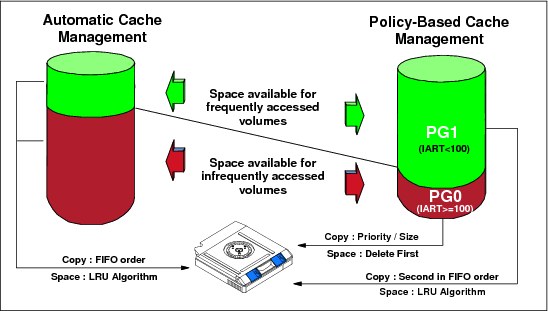

2.2.14 TVC Cache management in a TS7740 stand-alone cluster

The TVC of a TS7740 can be managed by using settings of preference levels.

Preference Level 0

Preference Level 0 (Preference Group 0 or PG0) is assigned to volumes that are unlikely to be accessed after being created, for example, volumes holding DASD image copies. There is no need to keep them in cache any longer than is necessary to copy them to physical tape. Informal studies suggest that the proportion of data that is unlikely to be accessed can be as high as 80%.

When a volume is assigned Preference Level 0, the TS7740 Virtualization Engine gives it preference to be copied to physical tape. When space is needed in the TVC, the TS7740 Virtualization Engine first selects a Preference Level 0 volume that has been copied to a physical volume and deletes it from cache. Preference Level 0 volumes are selected by largest size first, independent of how long they have been resident in cache. If there are no more Preference Level 0 volumes that have been copied to physical volumes to remove, the TS7740 Virtualization Engine selects Preference Level 1 (PG1) volumes.

In addition to removing Preference Level 0 volumes from cache when space is needed, the TS7740 Virtualization Engine also removes them if the subsystem is relatively idle. Removing a volume from cache has a small internal processing cost, so it is beneficial to remove them when extra processing capacity is available. If the TS7740 Virtualization Engine removes PG0 volumes during idle times, it selects them by smallest size first.

Preference Level 1

Preference Level 1 (Preference Group 1 or PG1) is assigned to volumes that are likely to be accessed after being created, for example, volumes that contain master files created as part of the nightly batch run. Because the master files are likely to be used as input for the next night’s batch run, it is beneficial for these volumes to stay in the TVC for as long as possible.

When a volume is assigned Preference Level 1, the TS7740 Virtualization Engine adds it to the queue of volumes to be copied to physical tape after a four-minute time delay and after any Preference Level 0 volumes. The four-minute time delay is to prevent unnecessary copies from being performed when a volume is created, then quickly remounted, and appended to again.

When space is needed in cache, the TS7740 Virtualization Engine first determines whether there are any Preference Level 0 volumes that can be removed. If not, the TS7740 Virtualization Engine selects Preference Level 1 volumes to remove based on a “least recently used” (LRU) algorithm. As a result, the volumes that have been copied to physical tape and have gone the longest without access are removed first.
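The removal policy for PG0 and PG1 can be expressed as an ordering over volumes that have already been copied to physical tape: PG0 largest first when space is needed, then PG1 in LRU order; during idle periods only PG0 volumes are removed, smallest first. This is a simplified model with hypothetical names (`CachedVolume`, `removal_order`, `free_space`); it ignores the four-minute premigration delay and the grid-specific retention described later.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CachedVolume:
    volser: str
    pg: int              # preference group, 0 or 1
    size_mib: int
    last_access: float   # larger value = more recently accessed
    premigrated: bool    # already copied to physical tape


def removal_order(volumes: List[CachedVolume], idle: bool = False) -> List[CachedVolume]:
    """Order in which copied volumes are removed from the TVC (simplified model)."""
    copied = [v for v in volumes if v.premigrated]  # only copied volumes can be removed
    pg0 = [v for v in copied if v.pg == 0]
    if idle:
        return sorted(pg0, key=lambda v: v.size_mib)  # idle: PG0 only, smallest first
    pg0.sort(key=lambda v: v.size_mib, reverse=True)   # space needed: PG0 largest first
    pg1 = sorted((v for v in copied if v.pg == 1), key=lambda v: v.last_access)  # LRU
    return pg0 + pg1


def free_space(volumes: List[CachedVolume], needed_mib: int) -> List[str]:
    """VOLSERs removed until at least `needed_mib` has been freed."""
    freed, removed = 0, []
    for v in removal_order(volumes):
        if freed >= needed_mib:
            break
        removed.append(v.volser)
        freed += v.size_mib
    return removed
```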

Figure 2-7 shows cache utilization with policy-based cache management.

Figure 2-7 TS7740 cache utilization with policy-based cache management

When a preference level has been assigned to a volume, that assignment is persistent until the volume is reused for scratch and a new preference level is assigned. If the policy is changed and a mount/dismount occurs, the new policy also takes effect.

Important: As of R2.1, all scratch volumes, independent of their preference group assignment, are favored for migration.

Copy files preferred to reside in cache

This function is only available in a multicluster grid. Logical volumes that need to be replicated to one or more peer clusters are retained in disk cache regardless of their preference group assignments. This allows peer clusters to complete the replication process without requiring a recall. After the copy completes, the assigned preference group then takes effect. For example, those assigned as preference group 0 are then immediately migrated.

If replication is not completing and the retention backlog becomes too large, the original preference groups begin to be honored, allowing data not yet replicated to be migrated to tape. These volumes likely need to be recalled into disk cache at a later time in order for replication to complete. The migration of not yet replicated data might be expected when replication is not completing due to an extended outage within the grid.

Recalls preferred for cache removal

Normally, a volume recalled into cache is managed as though it were newly created or modified because it resides in the TVC selected for I/O operations on the volume. A recalled volume displaces other volumes in cache. If the remote cluster of a grid is used for recovery, the recovery time is minimized by having most of the needed volumes in cache.

However, it is unlikely that all of the volumes used for the restore are resident in the cache, so recalls are required. Unless you can explicitly control the sequence of volumes used during restore, recalled volumes likely displace cached volumes that have not yet been restored from, resulting in further recalls at a later time in the recovery process. After a restore process is completed from a recalled volume, that volume is no longer needed. A method is needed with which to remove the recalled volumes from the cache after they have been accessed so that there is minimal displacement of other volumes in the cache.

Based on your current requirements, you can set or modify this control dynamically through the z/OS Host Console Request function on the remote cluster:

•When OFF, which is the default, logical volumes that are recalled into cache are managed by using the actions defined for the Storage Class construct associated with the volume as defined at the TS7700 Virtualization Engine.

•When ON, logical volumes that are recalled into cache are managed as PG0 (preferable to be removed from cache). This control overrides the actions that are defined for the Storage Class associated with the recalled volume.
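The two cache overrides in this section can be combined into one decision: volumes still waiting for grid replication are retained regardless of preference group until the backlog grows too large, and the Recalls Preferred for Cache Removal control turns recalled volumes into PG0. The sketch below models that decision. The function name and parameters are hypothetical, and the precedence (replication retention checked before the recall override) is an assumption, not documented behavior.

```python
from typing import Optional


def effective_cache_preference(storage_class_pg: int, *, recalled: bool = False,
                               recall_override: bool = False, copies_pending: bool = False,
                               backlog_too_large: bool = False) -> Optional[int]:
    """Preference group in effect (0 or 1), or None while retained for replication.

    Precedence between the two overrides is an assumption of this sketch.
    """
    if copies_pending and not backlog_too_large:
        return None  # grid only: kept in cache until peers finish copying
    if recalled and recall_override:
        return 0     # Recalls Preferred for Cache Removal is ON
    return storage_class_pg  # Storage Class construct decides (override OFF, the default)
```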

2.2.15 TVC management processes for TS7740

Two processes manage the TVC of the TS7740 Virtualization Engine in a stand-alone environment:

•Premigration Management (TS7740 only)

This process becomes effective when the amount of TS7740 Virtualization Engine TVC data that is not copied to tape reaches a predefined threshold. It is intended to ensure that the TVC does not become completely full of data that has not been backed up to physical tape.

Be aware that if your TS7740 is already busy, this mechanism might take your Virtualization Engine from peak mode to sustained mode. Threshold values can be tuned to your needs using LIB REQ commands. For details, see 6.5, “TS7700 SETTING function” on page 315.

•Free-space Management (TS7740 only)

This process becomes effective when the amount of unused (free) TVC space reaches another higher predetermined threshold. It is intended to ensure that the TVC does not become completely full of data, copied to physical tape or not. It is the mechanism that keeps the input to the TVC from overrunning the available free space.

If space cannot be freed up quickly enough, that process might lead to host I/O throttling.
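The two processes can be pictured as independent threshold checks: one on the amount of data not yet copied to tape, and one on the remaining free space. This is a hypothetical model for intuition only. The `TvcState` fields, the `tvc_actions` function, and any threshold values are assumptions; the real thresholds and their semantics are set through LI REQ as described in 6.5.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TvcState:
    capacity_gib: float
    resident_gib: float         # all data currently in cache
    not_premigrated_gib: float  # data not yet copied to physical tape


def tvc_actions(state: TvcState, premig_threshold_gib: float,
                free_space_threshold_gib: float) -> List[str]:
    """Which management processes would be active under hypothetical thresholds."""
    actions = []
    if state.not_premigrated_gib >= premig_threshold_gib:
        actions.append("premigration management")  # may move from peak to sustained mode
    if state.capacity_gib - state.resident_gib <= free_space_threshold_gib:
        actions.append("free-space management")    # may lead to host I/O throttling
    return actions
```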

2.2.16 TS7720 TVC cache management

In a TS7720 stand-alone cluster, you can only influence the TVC content with the Delete Expired setting. No further cache management is available.

2.2.17 Copy Consistency Point: Copy policy modes in a stand-alone cluster

In a stand-alone cluster, you cannot define any Copy Consistency Point.

2.2.18 TVC selection in a stand-alone cluster

Because only one TVC is available in a stand-alone cluster, no TVC selection occurs.

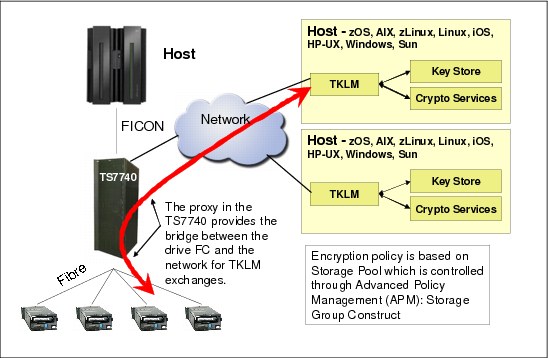

2.2.19 TVC encryption

With R3.0, a TVC encryption feature was introduced. This feature allows you to encrypt either a TS7720 or a TS7740 TVC. Because the encryption is done at the disk drive level, encryption is transparent to the TS7700 while still providing great performance.

TVC encryption is turned on for the whole disk cache; you cannot encrypt a disk cache partially. Therefore, all DDMs in all strings must be full disk encryption (FDE)-capable in order to enable the encryption. The disk cache encryption is supported for a TS7720 with 3956-CS9, and for TS7740 with 3956-CC9. Encryption can be enabled in the field at any time.

Starting with R3.0, only local key management is supported. Local key management is completely automated. There are no keys for the user to manage.

2.2.20 Logical and stacked volume pooling

The TS7740 allows you to manage your logical volumes and stacked volumes in pools.

The following concerns are addressed by volume pooling:

•Data from separate customers on the same physical volume can compromise certain outsourcing contracts.

•Customers want to be able to “see, feel, and touch” their data by having only their data on dedicated media.

•Customers need separate pools for different environments (test, User Acceptance Test (UAT), or production).

•Charging for tape is complicated. Traditionally, users are charged by the number of volumes they have in the tape library. With physical volume pooling, users can create and consolidate multiple logical volumes on a smaller number of stacked volumes and therefore reduce their media charges.

•Recall times depend on the media length. Small logical volumes on full-length tape cartridges (JA, JB, and JC) take longer to recall than volumes on economy cartridges (JJ or JK). Therefore, pooling by media type is also beneficial.

•Some workloads have a high expiration rate, which causes excessive reclamation. These workloads are better suited to their own pool of physical volumes.

•Encryption can be set on a per-pool basis, which enables you to encrypt all or some of your data when it is written to the back-end tapes.

•Pools can be used to migrate from older tape media technology.

•A second, dedicated pool can hold key workloads that are to be Copy Exported.

Physical volume pooling has clear benefits, but plan the number of physical pools carefully. See also “Relationship between reclamation and number of physical pools” on page 44.

Physical volume pooling

Volume pooling allows the administrator to define pools of stacked volumes within the TS7740 Virtualization Engine. You can direct virtual volumes to these pools through the use of SMS constructs. There can be up to 32 general-purpose pools (01 - 32) and one common scratch pool (00). The common scratch pool (Pool 00) is a reserved pool that contains only scratch stacked volumes for the other pools.

Each TS7740 Virtualization Engine that is attached to a TS3500 Tape Library has its own set of pools.

Common scratch pool (Pool 00)

The common scratch pool only contains scratch stacked volumes and serves as a reserve pool. You can define a primary pool to borrow scratch stacked cartridges from the common scratch pool (Pool 00) if a scratch shortage occurs, either on a temporary or on a permanent basis. Each pool can be defined to borrow a single media type (JA, JB, JC, JJ, or JK) or mixed media, or it can have a “first choice” and a “second choice” media type. The borrowing options can be set at the management interface when defining stacked volume pool properties.

|

Remember: The common scratch pool must have at least three cartridges available.

|

General-purpose pools (Pools 01 - 32)

There are 32 general-purpose pools available for each TS7740 Virtualization Engine cluster. These pools can contain both empty and full/filling stacked volumes. All physical volumes in a TS7740 Virtualization Engine cluster are distributed among available pools according to the physical volume range definitions in place. The administrator can tailor the properties of each pool individually for various purposes. When initially creating these pools, it is important to ensure that the correct borrowing properties are defined for each one. For more information, see “Stacked volume pool properties” on page 42.

By default, there is one pool, Pool 01, and the TS7740 Virtualization Engine stores virtual volumes on any stacked volume available to it. This creates an intermix of logical volumes from differing sources, for example, from different LPARs and applications, on the same physical cartridge. The user cannot influence the physical location of a logical volume within a pool. Having all logical volumes in a single group of stacked volumes is not always optimal.

Using this facility, you can also perform the following tasks:

•Separate the data of different clients or LPARs from each other

•Intermix or segregate media types

•Map separate Storage Groups to the same primary pool

•Set up specific pools for Copy Export

•Set up pool or pools for encryption

•Set a reclamation threshold at the pool level

•Set reclamation parameters for stacked volumes

•Assign stacked volumes to, or eject them from, specific pools

Physical pooling of stacked volumes is identified by defining a pool number, as shown in Figure 2-8.

Figure 2-8 TS7740 Logical volume allocation to specific physical volume pool flow

Through the management interface, you can add a Storage Group construct and assign a primary storage pool to it. Stacked volumes are assigned directly to the defined storage pools. The pool assignments are stored in the TS7740 Virtualization Engine database. During a scratch mount, a logical volume is assigned to a selected Storage Group, which is connected to a storage pool with assigned physical volumes. When the logical volume is copied to tape, it is written to a stacked volume that belongs to this storage pool.
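The routing from Storage Group to physical pool can be sketched as a small in-memory model. This is a simplified illustration under stated assumptions: the classes `StackedVolume` and `PoolDirectory`, their methods, and the names `SGPROD`, `L00001`, and `JC0002` are hypothetical, and the real TS7740 database and volume selection logic (for example, preferring a filling volume) are not modeled.

```python
# Hypothetical model of Storage Group to physical pool routing in a TS7740.
from dataclasses import dataclass, field


@dataclass
class StackedVolume:
    volser: str
    pool: int  # pool that currently owns this cartridge
    logical_volumes: list[str] = field(default_factory=list)


class PoolDirectory:
    """Stand-in for the pool assignments that the TS7740 keeps in its database."""

    def __init__(self) -> None:
        self.storage_group_pool: dict[str, int] = {}    # Storage Group -> primary pool
        self.logical_volume_group: dict[str, str] = {}  # logical VOLSER -> Storage Group
        self.stacked: list[StackedVolume] = []

    def define_storage_group(self, name: str, primary_pool: int) -> None:
        # Primary pools are general-purpose pools; Pool 00 only holds scratch.
        if not 1 <= primary_pool <= 32:
            raise ValueError("primary pool must be 01 - 32")
        self.storage_group_pool[name] = primary_pool

    def scratch_mount(self, logical_volser: str, storage_group: str) -> None:
        # At scratch mount time, the logical volume is bound to the selected Storage Group.
        self.logical_volume_group[logical_volser] = storage_group

    def copy_to_tape(self, logical_volser: str) -> StackedVolume:
        # The copy goes only to a stacked volume that belongs to the group's pool.
        pool = self.storage_group_pool[self.logical_volume_group[logical_volser]]
        for volume in self.stacked:
            if volume.pool == pool:
                volume.logical_volumes.append(logical_volser)
                return volume
        raise LookupError(f"no stacked volume available in pool {pool:02d}")


directory = PoolDirectory()
directory.stacked = [StackedVolume("JA0001", 1), StackedVolume("JC0002", 5)]
directory.define_storage_group("SGPROD", 5)
directory.scratch_mount("L00001", "SGPROD")
print(directory.copy_to_tape("L00001").volser)  # JC0002
```

The logical volume never lands on JA0001, even though that cartridge is listed first, because it belongs to Pool 01 rather than the Storage Group's primary pool.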

Physical VOLSER ranges can be defined with a home pool at insert time. Changing the home pool of a range has no effect on existing volumes in the library. Combined with disabling borrow/return, this provides a method to dedicate a specific range of volumes exclusively to a specific pool.

|

Tip: Primary Pool 01 is the default private pool for TS7740 Virtualization Engine stacked volumes.

|

Borrowing and returning

Using the concept of borrowing and returning, out-of-scratch scenarios can be addressed automatically. Ensure that non-borrowing active pools have at least two scratch volumes.

With borrowing, stacked volumes can move from pool to pool and back again to the original pool. In this way, the TS7740 Virtualization Engine can manage the out-of-scratch and low-scratch scenarios that can occur within any TS7740 Virtualization Engine from time to time.

|

Remember: Pools that have borrow/return enabled and that contain no active data eventually return all scratch volumes to the common scratch pool after 48 - 72 hours of inactivity.

|
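Borrowing and returning can be sketched as two operations on Pool 00. This is a minimal illustration, assuming that a pool tries its first-choice media type before its second-choice one and that an idle pool returns every scratch cartridge at once. The names `Pool`, `borrow`, `return_idle_scratch`, and the `return_after_hours` parameter are hypothetical. The text only states a 48 - 72 hour window, so the 48-hour default is an assumption.

```python
# Hypothetical sketch of borrowing from, and returning to, the common scratch pool (Pool 00).
from dataclasses import dataclass, field
from typing import Optional

Cartridge = tuple[str, str]  # (VOLSER, media type such as "JC" or "JJ")


@dataclass
class Pool:
    number: int
    borrow_enabled: bool = True
    first_choice: Optional[str] = None   # no first or second choice means any media
    second_choice: Optional[str] = None
    scratch: list[Cartridge] = field(default_factory=list)


def borrow(pool: Pool, common_scratch: list[Cartridge]) -> Optional[str]:
    """Move one scratch cartridge from Pool 00 into `pool`, trying the
    first-choice media type before the second-choice one. Returns the VOLSER or None."""
    if not pool.borrow_enabled:
        return None
    choices = [m for m in (pool.first_choice, pool.second_choice) if m] or [None]
    for media in choices:
        for index, (volser, cart_media) in enumerate(common_scratch):
            if media is None or cart_media == media:
                pool.scratch.append(common_scratch.pop(index))
                return volser
    return None


def return_idle_scratch(pool: Pool, common_scratch: list[Cartridge], active_gb: float,
                        hours_inactive: float, return_after_hours: float = 48) -> int:
    """Give all scratch cartridges back to Pool 00 once a borrowing pool holds no
    active data and has been idle long enough. Returns how many went back."""
    if not pool.borrow_enabled or active_gb > 0 or hours_inactive < return_after_hours:
        return 0
    returned = len(pool.scratch)
    common_scratch.extend(pool.scratch)
    pool.scratch.clear()
    return returned
```

With Pool 00 holding one JJ and one JC cartridge, a pool with first choice JC and second choice JJ receives the JC cartridge first, then the JJ cartridge, and gets nothing on a third request.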

Stacked volume pool properties

Stacked volume pooling supports cartridge type selection. This can be used to create separate pools of 3592 tape cartridges with a variety of capacities from 128 GB up to 4 TB, depending upon the type of media and tape drive technology used.

Lower-capacity JJ or JK cartridges can be designated to a pool to provide fast access to applications, such as hierarchical storage management (HSM) or Content Manager. Higher-capacity JA, JB, or JC cartridges assigned to a pool can address archival requirements, such as full volume dumps.

2.2.21 Logical and stacked volume management

Every time that a logical volume is changed (either by modification or by reuse of a scratch volume), the data from the previous use of this logical volume, which is on a stacked volume, becomes obsolete. The new virtual volume is placed in the cache and written to a stacked volume afterward (TS7740 only). The previous copy on the stacked volume is invalidated, but it still exists in its current state on the physical volume.

Virtual volume reconciliation

The reconciliation process checks for invalidated volumes. A reconciliation is the period of activity in which the TS7740 Virtualization Engine determines the most recent instance of a logical volume to be the active one and deletes all other instances of that volume from the active volume list. This process automatically adjusts the active data amount for any stacked volumes that hold invalidated logical volumes.

The data that is associated with a logical volume is considered invalidated if any of the following statements are true:

•A host has assigned the logical volume to a scratch category. The volume is subsequently selected for a scratch mount and data is written to the volume. The older version of the volume is now invalid.

•A host has assigned the logical volume to a scratch category. The category has a non-zero delete-expired data parameter value. The parameter value has been exceeded, and the TS7740 Virtualization Engine has deleted the logical volume.

•A host has modified the contents of the volume. This can be a complete rewrite of the volume or an append to it. The new version of the logical volume is pre-migrated to a separate physical location and the older version is invalidated.
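The effect of reconciliation on the active data of each stacked volume can be sketched as follows. This is an illustration, not the TS7740 algorithm. It assumes that every written instance carries an ever-increasing sequence number that identifies the newest copy, and that volumes deleted through a delete-expired setting are passed in as a set. `Instance`, `reconcile`, and the VOLSERs are hypothetical.

```python
# Hypothetical sketch of reconciliation: keep the newest instance of each logical
# volume and recompute the active data held on each stacked volume.
from dataclasses import dataclass


@dataclass(frozen=True)
class Instance:
    logical_volser: str
    stacked_volser: str
    write_seq: int  # assumed ever-increasing sequence number; higher means newer
    size_mb: int


def reconcile(instances: list[Instance], deleted: frozenset[str] = frozenset()):
    """Return (active instance per logical volume, active MB per stacked volume).

    Logical volumes in `deleted` (for example, removed by a delete-expired
    setting) have no active instance at all.
    """
    newest: dict[str, Instance] = {}
    for inst in instances:
        if inst.logical_volser in deleted:
            continue
        current = newest.get(inst.logical_volser)
        if current is None or inst.write_seq > current.write_seq:
            newest[inst.logical_volser] = inst

    # Every stacked volume starts at zero; only active instances add to its total.
    active_mb = {inst.stacked_volser: 0 for inst in instances}
    for inst in newest.values():
        active_mb[inst.stacked_volser] += inst.size_mb
    return newest, active_mb


history = [
    Instance("L00001", "JC0001", 1, 800),
    Instance("L00001", "JC0002", 2, 900),  # rewritten: older copy on JC0001 is invalid
    Instance("L00002", "JC0001", 1, 500),
    Instance("L00003", "JC0002", 1, 300),  # expired and deleted
]
_, active = reconcile(history, deleted=frozenset({"L00003"}))
print(active)  # {'JC0001': 500, 'JC0002': 900}
```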

The TS7740 Virtualization Engine keeps track of the amount of active data on a physical volume. Tracking starts at 100% when a volume becomes full. The TS7740 tracks this percentage with a granularity of 0.1% and rounds down, so even one byte of inactive data drops the percentage to 99.9%. The TS7740 Virtualization Engine also keeps track of the time at which the physical volume went from 100% full to less than 100% full, and it uses this time as follows:

•Checking on an hourly basis for volumes in a pool with a non-zero setting

•Comparing this time against the current time to determine whether the volume is eligible for reclamation
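The 0.1% granularity with rounding down, and the hourly comparison against the current time, can be sketched as below. Integer floor division is used so that floating-point arithmetic can never round 99.95% up to 100%. The function names and the `days_setting` parameter (standing in for the pool's non-zero setting) are hypothetical, and the 4 TB figure is illustrative only.

```python
# Hypothetical sketch of active-data tracking and the hourly eligibility check.
from datetime import datetime, timedelta
from typing import Optional


def percent_active(active_bytes: int, written_bytes: int) -> float:
    """Active data as a percentage, in 0.1% steps, always rounded down."""
    tenths = active_bytes * 1000 // written_bytes  # integer floor: no float round-up
    return tenths / 10


def eligible_on_hourly_check(dropped_below_100_at: Optional[datetime], now: datetime,
                             days_setting: int) -> bool:
    """Compare the time the volume dropped below 100% with the current time.

    Pools with a zero setting are skipped, and a volume that is still 100%
    active has no drop time yet.
    """
    if days_setting == 0 or dropped_below_100_at is None:
        return False
    return now - dropped_below_100_at >= timedelta(days=days_setting)


full = 4 * 10**12                      # illustrative cartridge with 4 TB written
print(percent_active(full - 1, full))  # 99.9: one inactive byte is enough
```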

Physical volume reclamation

Physical volume reclamation consolidates active data and frees stacked volumes for return-to-scratch use. Reclamation is part of the internal management functions of a TS7740 Virtualization Engine.

The reclamation process is basically a tape-to-tape copy. The physical volume to be reclaimed is mounted on a physical drive, and the active logical volumes that reside there are copied to another filling cartridge under control of the TS7740 Virtualization Engine. One reclamation task needs two physical tape drives to run. At the end of the reclaim, the source volume is empty and is returned to the specified reclamation pool as an empty (scratch) volume. The data being copied from the reclaimed physical volume does not go to the TVC; instead, it is transferred directly from the source to the target tape cartridge.

Physical tape volumes become eligible for space reclamation when their percentage of active data falls below the reclaim threshold that the administrator specifies in the definition of the home pool to which they belong. This reclaim threshold is set for each pool individually according to the specific needs of that client and is expressed as a percentage (%) of tape utilization.
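Threshold-based eligibility can be sketched as a per-pool filter. This is a minimal illustration, assuming a threshold of 0 means the pool is never reclaimed and that only full (not filling) volumes are evaluated. Ordering the candidates emptiest first is a choice of this sketch, not documented TS7740 behavior. `PhysicalVolume` and `reclaim_candidates` are hypothetical names.

```python
# Hypothetical sketch: which physical volumes fall below their home pool's reclaim threshold?
from dataclasses import dataclass


@dataclass
class PhysicalVolume:
    volser: str
    home_pool: int
    percent_active: float
    full: bool = True  # assumption: only full volumes are evaluated


def reclaim_candidates(volumes: list[PhysicalVolume],
                       thresholds: dict[int, float]) -> list[PhysicalVolume]:
    """Volumes whose active data is below their pool's threshold (0 = never reclaim).

    Emptiest first: each such candidate needs the least tape-to-tape copying.
    """
    eligible = [
        v for v in volumes
        if v.full and v.percent_active < thresholds.get(v.home_pool, 0)
    ]
    return sorted(eligible, key=lambda v: v.percent_active)


volumes = [
    PhysicalVolume("JC0001", 1, 40.0),
    PhysicalVolume("JC0002", 1, 10.0),
    PhysicalVolume("JC0003", 2, 10.0),            # pool 2 has no threshold set
    PhysicalVolume("JC0004", 1, 20.0, full=False),
]
print([v.volser for v in reclaim_candidates(volumes, {1: 45.0})])  # ['JC0002', 'JC0001']
```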

Volume reclamation can be combined with a Secure Data Erase for that volume, if required. This causes the volume to be erased after the reclamation finishes. For more details, see 2.2.22, “Secure Data Erase function” on page 44. Do not run reclamation during peak workload hours of the TS7740, so that recalls and migrations are not delayed by physical drive shortages. Choose the best period for reclamation by considering the workload profile of that TS7740 cluster, and inhibit reclamation during the busiest period for the machine.
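Inhibiting reclamation during the busiest period amounts to a time-window check. This is a hypothetical sketch assuming a single daily window that may wrap past midnight; the schedule options offered by the management interface (such as per-day settings) are not modeled, and `reclaim_allowed` and the 20:00 - 04:00 window are illustrative.

```python
# Hypothetical sketch of an "inhibit reclamation" time window check.
from datetime import time


def reclaim_allowed(now: time, inhibit_start: time, inhibit_end: time) -> bool:
    """False inside the inhibit window [start, end); windows may wrap past midnight."""
    if inhibit_start <= inhibit_end:
        inside = inhibit_start <= now < inhibit_end
    else:  # for example, 22:00 - 06:00, which spans midnight
        inside = now >= inhibit_start or now < inhibit_end
    return not inside


# Inhibit reclamation during an assumed peak batch window of 20:00 - 04:00.
print(reclaim_allowed(time(23, 30), time(20, 0), time(4, 0)))  # False
print(reclaim_allowed(time(10, 0), time(20, 0), time(4, 0)))   # True
```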

A physical volume that is being ejected from the library is also reclaimed in a similar way before being allowed to be ejected. The active logical volumes contained in the cartridge are moved to another physical volume, according to the policies defined in the volume’s home pool, before the physical volume is ejected from the library.

Reclamation can also be used to migrate older data from one pool to another while it is being reclaimed, but only by targeting a separate, specific pool for reclamation.

Relationship between reclamation and number of physical pools

The reclaim process is done on a pool basis, and each reclamation process needs two drives. If you define too many pools, the TS7740 might be unable to process reclamation for all pools in a timely manner. Eventually, pools can run out of space (depending on the “borrow” definitions), or you need more stacked volumes than planned.

The number of physical pools, physical drives, stacked volumes in the pools, and the available time tables for reclaim schedules must be considered and balanced.
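The balance between pools, drives, and reclaim windows can be checked with simple arithmetic. The sketch below is a hypothetical planning model: it uses only the two-drives-per-task rule from the text and assumes a fixed duration per reclaim, whereas real durations depend on the amount of active data, the media, and the drive type. The inputs are illustrative, not measurements.

```python
# Hypothetical planning sketch: can reclamation keep up with all pools?


def max_concurrent_reclaims(drives_for_reclaim: int) -> int:
    """Each reclamation task needs two physical drives (source and target)."""
    return drives_for_reclaim // 2


def reclaims_per_window(drives_for_reclaim: int, window_hours: float,
                        hours_per_reclaim: float) -> int:
    """Upper bound on volumes reclaimed in one reclaim window."""
    if hours_per_reclaim <= 0:
        raise ValueError("hours_per_reclaim must be positive")
    slots = int(window_hours // hours_per_reclaim)  # back-to-back tasks per drive pair
    return max_concurrent_reclaims(drives_for_reclaim) * slots


def reclaim_keeps_up(volumes_to_reclaim_per_pool: list[int], drives_for_reclaim: int,
                     window_hours: float, hours_per_reclaim: float) -> bool:
    """True if the window can absorb the reclaim demand of every pool."""
    capacity = reclaims_per_window(drives_for_reclaim, window_hours, hours_per_reclaim)
    return sum(volumes_to_reclaim_per_pool) <= capacity


# Illustrative inputs only: 4 drives, an 8-hour window, 2 hours per reclaim.
print(reclaims_per_window(4, 8, 2))              # 8
print(reclaim_keeps_up([3, 3, 3, 3], 4, 8, 2))  # False: 12 volumes > 8
```

Adding pools raises the total demand while the drive-pair capacity stays fixed, which is why the number of pools must be weighed against the drives and time available.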

2.2.22 Secure Data Erase function

Another concern is the security of old data. The TS7740 Virtualization Engine provides physical volume erasure on a physical volume pool basis that is controlled by an additional reclamation policy. When Secure Data Erase is enabled, a physical cartridge is not available as a scratch cartridge until its data is erased.

The Secure Data Erase function supports the erasure of a physical volume as part of the reclamation process. The erasure is performed by running a long erase procedure against the media.

A Long Erase operation on a TS11xx drive is completed by writing a repeating pattern from the beginning to the end of the physical tape, making all data previously present inaccessible through traditional read operations.

The pattern is not fully random from beginning to end, and only one pass is made. That is why this procedure is not officially a secure data erase as described in US Department of Defense (DoD) documentation.

After the erasure, the logical volumes written on this stacked volume are no longer readable. As part of this data erase function, an additional reclaim policy is added. The policy specifies the number of days that a physical volume can contain invalid logical volume data before the physical volume becomes eligible to be reclaimed.

When a physical volume contains only encrypted data, the TS7740 Virtualization Engine is able to perform a fast erase of the data by erasing the encryption keys on the cartridge. Basically, it erases only the portion of the tape where the key information is stored. This form of erasure is referred to as a cryptographic erase. Without the key information, the rest of the tape cannot be read. This method significantly reduces the erasure time. Any physical volume that has a status of read-only is not subject to this function and is not designated for erasure as part of a read-only recovery.
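The choice between the two erase methods can be summarized as a small decision function. This is a sketch under one reading of the text: read-only volumes are treated as not designated for erasure at all. `EraseMethod` and `choose_erase` are hypothetical names and do not correspond to a TS7740 interface.

```python
# Hypothetical sketch: which kind of erase a reclaimed physical volume receives.
from enum import Enum


class EraseMethod(Enum):
    NONE = "no erase"
    CRYPTOGRAPHIC = "cryptographic erase: erase only the key area"
    LONG_ERASE = "long erase: single-pass pattern from beginning to end"


def choose_erase(secure_erase_enabled: bool, all_data_encrypted: bool,
                 read_only: bool) -> EraseMethod:
    if not secure_erase_enabled:
        return EraseMethod.NONE
    if read_only:
        # Read-only volumes are not designated for erasure (assumed reading).
        return EraseMethod.NONE
    if all_data_encrypted:
        # Without the key information, the rest of the tape cannot be read.
        return EraseMethod.CRYPTOGRAPHIC
    return EraseMethod.LONG_ERASE


print(choose_erase(True, all_data_encrypted=True, read_only=False).name)  # CRYPTOGRAPHIC
```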

If you use the eject stacked volume function, the data on the volume is not erased before ejecting. The control of expired data on an ejected volume is your responsibility.

Volumes tagged for erasure cannot be moved to another pool until erased, but they can be ejected from the library because such a volume is usually removed for recovery actions.

Using the Move function also causes a physical volume to be erased, even if the specified number of days has not yet elapsed. This includes returning borrowed volumes.
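The eject and move rules for volumes in a pool with Secure Data Erase enabled can be sketched as follows. This is a hypothetical model: `Cartridge`, `eject`, `move_to_pool`, and the `source_pool_erases` flag are illustrative names, and the erase itself is represented only by setting a flag.

```python
# Hypothetical sketch of the eject and move rules for volumes in a Secure Data Erase pool.
from dataclasses import dataclass


@dataclass
class Cartridge:
    volser: str
    pool: int
    tagged_for_erase: bool = False
    erased: bool = False


def eject(cart: Cartridge) -> bool:
    """Eject is allowed even for volumes tagged for erasure, but never erases.

    Returns True when unerased data may leave the library; controlling that
    data is then the user's responsibility.
    """
    return not cart.erased


def move_to_pool(cart: Cartridge, target_pool: int, source_pool_erases: bool) -> None:
    """Move (including the return of a borrowed volume) erases first, without
    waiting for the days-based policy; a tagged volume never changes pools unerased."""
    if source_pool_erases or cart.tagged_for_erase:
        cart.erased = True  # stand-in for the actual erase operation
        cart.tagged_for_erase = False
    cart.pool = target_pool


cart = Cartridge("JC0100", pool=4, tagged_for_erase=True)
print(eject(cart))  # True: ejecting now would take unerased data out of the library
move_to_pool(cart, 0, source_pool_erases=True)
print(cart.pool, cart.erased)  # 0 True
```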