Preinstallation planning and sizing

This chapter provides information to help you plan the installation and implementation of the IBM Virtualization Engine TS7700. It covers the following topics:

•Hardware configurations

•Hardware installation and infrastructure planning

•Remote installations and switch support

•High availability (HA) grid considerations

•Tape library attachment

•Planning for software implementation

•Planning for logical and physical volumes

•Planning for encryption in the TS7740 Virtualization Engine

•Tape analysis and sizing the TS7740 Virtualization Engine

•Education and training

•Planning considerations

Use the preinstallation checklists in Appendix C, “TS3500 and TS7700 checklists” on page 867 to help plan your installation.

|

Remember: For this chapter, the term “tape library” refers to the IBM System Storage TS3500 Tape Library.

|

4.1 Hardware configurations

The minimum configurations and the optional enhancements for the TS7700 Virtualization Engine are described. Both the IBM TS7740 Virtualization Engine and the IBM TS7720 Virtualization Engine are covered.

Tape library attachment

The current TS7740 Virtualization Engine no longer implements a physical Library Manager for its TS3500 attached tape library. All Enterprise Library Controller (ELC) functions and associated components are integrated into the TS7700 Virtualization Engine. The TS7720 Virtualization Engine is a disk-only solution and therefore requires no physical tape library.

For more information about the TS3500 Tape Library, see 2.5.4, “TS3500 Tape Library” on page 99.

|

Tip: The IBM 3494 Tape Library attachment is no longer supported from Release 2.0 forward.

|

TS7700 Virtualization Engine configuration summary

Release 3.0 hardware components and configuration requirements for a stand-alone cluster grid and for a multicluster grid configuration are described.

TS7740 (tape-attached) Virtualization Engine configuration summary

A TS7740 Virtualization Engine consists of the following components:

•Frame (3952-F05).

•TS7740 Virtualization Engine Server:

– An IBM System p server (3957 Model V07) with one 3.0 GHz eight-core processor card, 16 GB of 1066 MHz double data rate 3 (DDR3) memory, and eight small form factor (SFF) hard disk drives (HDDs) with a redundant serial-attached SCSI (SAS) adapter.

– Two I/O expansion drawers.

•Disk cache.

|

The TS7740 has featured different cache models and sizes since it was first launched. In this summary, we list Model CC9/CX9, which is introduced with TS7740 Release 3.0.

|

•One 3956 Model CC9 with up to two 3956 Model CX9s (total of three drawers) providing up to 28.62 TB of data capacity. Considering a compression rate of 3:1, this represents 85.86 TB of uncompressed data.

•Two redundant network switches.

•Two redundant 8 Gb Fibre Channel (FC) switches located in the TS3500 Tape Library that provide connectivity to the 3592 Model J1A, TS1120 Model E05, TS1130 Model E06/EU6, or TS1140 Model E07 tape drives.

•TS3000 System Console (TSSC), LAN hub, and keyboard/display: Can be considered optional if you already have an external TSSC.

TS7720 (disk only) Virtualization Engine configuration summary

A TS7720 Virtualization Engine consists of the following components:

•Frame (3952-F05).

•TS7720 Virtualization Engine Disk Only Server:

– An IBM System p server as the node (3957 Model VEB) with one 3.0 GHz eight-core processor card, 16 GB of 1066 MHz DDR3 memory, and eight SFF HDDs with an external SAS card.

•Two I/O expansion drawers.

•Two redundant network switches.

•TSSC, LAN hub, and keyboard/display: Can be considered optional if you already have an external TSSC.

•Disk cache.

|

The TS7720 has featured different cache models and sizes since it was first launched. In this summary, we list Model CS9/XS9, which is introduced with TS7720 Release 3.0.

|

•One 3956 Model CS9 with up to nine 3956 Model XS9s (total of ten drawers) for a maximum cache size of 239.86 TB of data capacity. Considering a compression rate of 3:1, this represents 719.58 TB of uncompressed data.

•Optional Storage Expansion Frame (3952-F05): One 3956 Model CS9 with up to fifteen 3956 Model XS9 drawers for a maximum cache size of 623.86 TB. Considering a compression rate of 3:1, this represents 1872 TB of uncompressed data.

|

TS7720 Release 2.1 introduced a second expansion frame via a request for price quotation (RPQ) 8B3604, which increases the maximum native capacity to 580 TB (pre-CS9/XS9 models). 3957-VEB is required for the second expansion frame.

|
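The uncompressed-equivalent figures quoted for the TS7720 follow from multiplying the native cache capacity by the assumed compression ratio. The following Python sketch reproduces that arithmetic. The function and constant names are illustrative only, and the 3:1 ratio is the planning assumption used in this chapter, not a guaranteed result for any workload.

```python
def uncompressed_equivalent_tb(native_tb, compression_ratio=3.0):
    """Return the host-data equivalent (TB) of a native cache capacity.

    compression_ratio is an assumed planning value (3:1 in this chapter);
    real ratios depend on the data being written.
    """
    if native_tb < 0 or compression_ratio <= 0:
        raise ValueError("capacity must be non-negative and ratio positive")
    return native_tb * compression_ratio


# Native maximum cache sizes quoted in the TS7720 configuration summary (TB).
TS7720_BASE_FRAME_TB = 239.86       # one CS9 plus nine XS9 drawers
TS7720_EXPANSION_FRAME_TB = 623.86  # maximum quoted with the Storage Expansion Frame

for label, native_tb in [("base frame", TS7720_BASE_FRAME_TB),
                         ("with expansion frame", TS7720_EXPANSION_FRAME_TB)]:
    equivalent = uncompressed_equivalent_tb(native_tb)
    print(f"{label}: {native_tb} TB native, about {equivalent:.2f} TB at 3:1")
```

Substituting a measured compression ratio from your own workload gives a more realistic planning figure than the 3:1 default.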

In summary, all components are installed in an IBM 3952-F05 Tape Frame. The Virtualization Engine is connected to the host through Fibre Channel connection (FICON) channels, with the tape library and disk cache connected via Fibre Channel adapters.

In a multicluster grid configuration, which can include up to six TS7700 Virtualization Engines, the clusters are interconnected by two or four independent 1 Gb copper or optical grid Ethernet links (single-ported or dual-ported) or, alternatively, by two 10 Gb longwave (LW) optical grid Ethernet links per TS7700 Virtualization Engine.

A copper-based Ethernet network is the communications mechanism between the network switches, client network, TSSC, and virtualization engine.

4.1.1 TS7740 Virtualization Engine configuration details

The specific feature codes (FCs) of the TS7740 Virtualization Engine in a stand-alone and grid configuration are described.

TS7740 Virtualization Engine minimum configuration with R3.0

The minimum configuration of a TS7740 Virtualization Engine built with R3.0 machine code requires the following components. The feature code (FC) number is in parentheses.

•One 3952 Tape Frame Model F05 with the following required features:

– TS7740 Encryption Capable Base Frame (FC7330)

– Install 3957 V07 (FC5629)

– Plant Install 3956 CC9 (FC5652)

– Integrated Control Path (FC5758)

– Dual AC Power (FC1903)

– Two Ethernet switches

– Optionally, one TSSC

– Ship with R3.0 Machine Code (FC9113)

– A power cord appropriate for the country of installation must be selected from features FC9954 through FC9959, or FC9966.

•One TS7740 Virtualization Engine Server (3957 Model V07) with the following required features:

– One of 1 TB Cache Enablement (FC5267)

– One of 100 MBps Increment (FC5268)

– Dual Port FC host bus adapter (HBA) (FC5240)

– Mainframe Attach (FC9000)

– Ship with R3.0 Machine Code (FC9113)

– Attach to TS3500 (FC9219)

– Plant Install in F05 (FC9350)

– Two of either 10 Gb Grid Optical LW Connection (FC1035), 1 Gb Grid Dual Port Copper Connection (FC1036), or 1 Gb Grid Dual Optical shortwave (SW) Connection (FC1037)

– Either two of host to Virtualization Engine FICON cables (FC0201 or FC0203) or one No Factory Cables (FC9700)

– Two of either FICON Shortwave Attachment (FC3441), FICON Longwave Attachment (FC3442), or FICON 10 km (6.2 miles) Longwave Attachment (FC3443)

– Console Attachment (FC2715)

|

TS7740 Release 3.0 introduces the Encryption-Capable Cache, which utilizes the new 3956 Cache Controller CC9 along with Expansion Drawer CX9. Both come with 600 GB full disk encryption (FDE)-capable SAS drives.

|

•One TS7740 Cache Controller (3956 Model CC9) is required with the following required features:

– Plant Install in 3952-F05 Encryption Capable Controller (FC9352)

– Plant install 3956-CC9 (FC5652)

– 13.2 TB SAS Storage (FC7124)

|

Disk cache encryption is supported in an existing TS7740 Virtualization Engine with 3956-CC8 via an RPQ.

|

•Two 8 Gb Fibre Channel switches are required:

– TS7700 back-end SW Mounting Hardware (FC4871)

– Power Distribution Units (FC1950)

– One power cord FC9954 through FC9959 or FC9966

– 8 Gb Fibre Channel switch (FC4875)

– Attached to LM/TS7700/3592-C07 (FC9217)

– Adjacent frame support for TS7700 back-end Fibre Channel switches (FC4874)

•One or more 3584 Model L23 or D23 frames with the following components:

– From four to sixteen 3592 tape drives: The TS7740 can be attached to 3592 Model J1A, TS1120 Model E05, TS1130 Model E06/EU6, or TS1140 Model E07 tape drives. All attached drives must operate in the same mode. Intermixing is supported only for TS1120 Model E05 drives working in 3592-J1A emulation mode together with 3592-J1A tape drives. The TS1140 Model E07 tape drive cannot be intermixed with any other drive type.

|

Tip: JA and JJ media are only supported for read-only operations by TS1140 Model E07 Tape Drives. Existing JA or JJ media will be marked read-only and moved to a sunset category after reclamation, which allows them to be later ejected by the user.

|

– Up to 16 FC1515, 3592 Fibre Channel Tape Drive mounting kit.
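The required features for the 3957 Model V07 server listed above can be expressed as a simple order check. The following Python sketch is illustrative only: the function name, the dictionary-based order format, and the reading of "two of either" as two instances of one feature from the group are assumptions made for this example, not part of any IBM ordering tool.

```python
# Features that must each appear at least once on the 3957-V07 server order.
REQUIRED = ["FC5267", "FC5268", "FC5240", "FC9000", "FC9113",
            "FC9219", "FC9350", "FC2715"]

# Groups where two instances of one listed feature are required
# (assumed interpretation of "two of either" in the text).
TWO_OF_ONE = {
    "grid connection": ["FC1035", "FC1036", "FC1037"],
    "FICON attachment": ["FC3441", "FC3442", "FC3443"],
}


def check_v07_minimum(order):
    """Return a list of problems; an empty list means the rules are met.

    order maps feature code strings to ordered quantities.
    """
    problems = []
    for fc in REQUIRED:
        if order.get(fc, 0) < 1:
            problems.append(f"missing {fc}")
    for name, choices in TWO_OF_ONE.items():
        if not any(order.get(fc, 0) >= 2 for fc in choices):
            problems.append(f"need two {name} features from {choices}")
    # Either two FICON cables of one type, or the No Factory Cables feature.
    cables = max(order.get("FC0201", 0), order.get("FC0203", 0))
    if cables < 2 and order.get("FC9700", 0) < 1:
        problems.append("need two of FC0201 or FC0203, or one FC9700")
    return problems


example_order = {fc: 1 for fc in REQUIRED}
example_order.update({"FC1036": 2, "FC3441": 2, "FC9700": 1})
print(check_v07_minimum(example_order))  # prints []
```

The same pattern can be extended to the frame, cache controller, and Fibre Channel switch feature lists, which are omitted here to keep the sketch short.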

TS7740 Virtualization Engine configuration upgrades

See Chapter 7, “Upgrade considerations” on page 329 for available upgrades.

4.1.2 TS7720 Virtualization Engine configuration details

The specific features of the TS7720 Virtualization Engine in the stand-alone and grid configurations are described.

TS7720 Virtualization Engine minimum configuration with R3.0

The minimum configuration of a TS7720 Virtualization Engine built with R3.0 machine code requires the components that are described here:

•One 3952 Tape Frame Model F05 with the following required features:

– TS7720 Virtualization Engine Encryption-Capable Base Frame (FC7331)

– Plant Install 3957 VEB (FC5627)

– Plant Install 3956 CS9 (FC5651)

– Integrated Control Path (FC5758)

– Dual AC Power (FC1903)

– Two Ethernet switches

– Optionally, one TSSC

– Ship with R3.0 Machine Code (FC9113)

– A power cord appropriate for the country of installation must be selected from FC9954 through FC9959, or FC9966.

•One TS7720 Virtualization Engine Server (3957 Model VEB) with the following required features:

– 100 MBps Throughput - Plant (FC9268)

– Mainframe Attach (FC9000)

– Ship with R3.0 Machine Code (FC9113)

– Plant Install in 3952-F05 (FC9350)

– Two of either 10 Gb Grid Optical LW Connection (FC1035), 1 Gb Grid Dual Port Copper Connection (FC1036), or 1 Gb Grid Dual Optical SW Connection (FC1037)

– Either two of host to Virtualization Engine FICON cables (FC0201 or FC0203) or one No Factory Cables (FC9700)

– Two of either FICON Shortwave Attachment (FC3441), FICON Longwave Attachment (FC3442), or FICON 10 km (6.2 miles) Longwave Attachment (FC3443)

– Console Attachment (FC2715)

•One TS7720 Cache Controller (3956 Model CS9) with the following required features:

– 36 TB SAS Storage (FC7115)

– Plant install 3956-CS9 (FC5651)

– Plant install in 3952-F05 Encryption Capable Controller (FC9352)

TS7720 Virtualization Engine configuration upgrades

For available upgrades, see Chapter 7, “Upgrade considerations” on page 329.

TS7720 Storage Expansion frame

To attach a TS7720 Storage Expansion frame to a TS7720 base frame, configure the following components:

•One 3952 Tape Frame Model F05 with the following required features:

– TS7720 Encryption-capable Expansion Frame (FC7332)

– One Plant Install 3956-CS9 (FC5651)

– Cache Controller Disk Encryption Enable (FC5272) for cache controller

– Cache Expansion Drawer Disk Encryption Enable (FC7404) for expansion drawer

– Plant install TS7700 Cache in a 3952-F05 (FC9352)

– Zero to 15 of Plant Install 3956-XS9 (FC5655)

– Zero to 15 of Field Install 3956-XS9 (FC5656)

|

Tip: Valid quantities of FC5655 plus FC5656 are zero to 15.

|

– Dual AC Power (FC1903)

– A power cord appropriate for the country of installation must be selected from FC9954 through FC9959, or FC9966

•On an existing TS7720 Non-Encryption-Capable Virtualization Engine base frame (3952 Tape Frame Model F05 with FC7322), the following features are required, in addition to the minimum configuration and optional requirements defined above:

– Expansion Frame Attach (FC9323)

– An existing TS7720 base frame can have zero to six combined instances of FC5646 and FC5647.

•An existing TS7720 Virtualization Engine Server (3957 Model VEA) installed in the TS7720 base frame (3952 Tape Frame Model F05 with features FC7322 and FC9323) needs to have the following features in addition to the minimum configuration and optional requirements defined above:

– Dual Port FC HBA (FC5240)

– 8 GB memory upgrade (FC3461 or FC9461)

•On a TS7720 Virtualization Engine Server (3957 Model VEB) base frame (FC7322 and FC9323) or 3957-VEB Encryption-capable base frame (FC7331 and FC9323), the following feature is required in addition to the minimum configuration above:

– Dual Port FC HBA (FC5241)

4.1.3 Cables

The cable feature codes for attachment to the TS7700 Virtualization Engine and additional cables, fabric components, and cabling solutions are described.

Required cable feature codes

The following cable feature codes are needed for attachment to the TS7700 Virtualization Engine.

A TS7700 Virtualization Engine Server with the FICON Attachment features (FC3441, FC3442, or FC3443) can attach to FICON channels of IBM System z mainframe, IBM zSeries server, or IBM S/390® server using FICON cable features ordered on the TS7700 Virtualization Engine Server. A maximum of four FICON cables, each 31 meters in length, can be ordered.

One cable must be ordered for each host system attachment by using the following cable features:

•4-Gbps FICON Long-Wavelength Attachment feature (FC3442 and FC3443): The FICON long-wavelength adapter shipped with FC3442 (4-Gbps FICON Long-Wavelength Attachment) or FC3443 (4-Gbps FICON 10 km Long-Wavelength Attachment) has an LC Duplex connector, and can connect to FICON long-wavelength channels of IBM zEnterprise®, IBM System z9®, IBM System z10®, or S/390 servers utilizing a 9-micron single-mode fibre cable. The maximum fibre cable length is 4 km (2.48 miles) for FC3442 and 10 km (6.2 miles) for FC3443. If standard host attachment cables (31 m) are desired, they can be specified with FC0201 - 9 Micron LC/LC 31 meter Fibre Cable.

•4-Gbps FICON Short-Wavelength Attachment feature (FC3441): The FICON short-wavelength adapter shipped with FC3441 has an LC Duplex connector, and can connect to FICON short-wavelength channels of zEnterprise, System z9, System z10, or S/390 servers utilizing a 50-micron or 62.5-micron multimode fibre cable. At 4 Gbps, the maximum fibre cable length allowed by 50-micron cable is 150 m, or 55 m if using 62.5-micron cable. If standard host attachment cables (31 m) are desired, they can be specified with FC0203 - 50 Micron LC/LC 31 meter Fibre Cable.
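The cable length limits for the three FICON attachment features can be captured in a small lookup table for use during cable planning. The following Python sketch is a planning aid under stated assumptions: the table keys, cable type labels, and function name are invented for this example, and the limits are the 4-Gbps values given in the text.

```python
# Maximum fibre cable length in meters, keyed by (feature code, cable type).
# Values are the 4-Gbps limits stated in this section.
MAX_CABLE_LENGTH_M = {
    ("FC3441", "50-micron multimode"): 150,
    ("FC3441", "62.5-micron multimode"): 55,
    ("FC3442", "9-micron single-mode"): 4_000,
    ("FC3443", "9-micron single-mode"): 10_000,
}


def cable_run_ok(feature, cable_type, length_m):
    """Return True if a cable run is within the limit for the adapter feature."""
    key = (feature, cable_type)
    if key not in MAX_CABLE_LENGTH_M:
        raise ValueError(f"unsupported combination: {feature} with {cable_type}")
    return length_m <= MAX_CABLE_LENGTH_M[key]


# The standard 31 m host attachment cables fit every supported combination.
for feature, cable_type in MAX_CABLE_LENGTH_M:
    print(feature, cable_type, cable_run_ok(feature, cable_type, 31))
```

Runs that exceed the limit for FC3442 but are within 10 km are candidates for FC3443.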

Additional cables, fabric components, and cabling solutions

Conversion cables from SC Duplex to LC Duplex are available as features on the System z servers if you are currently using cables with SC Duplex connectors that now require attachment to fibre components with LC Duplex connections. Additional cable options, along with product support services, such as installation, are offered by IBM Global Services Networking Services. See the IBM Virtualization Engine TS7700 Introduction and Planning Guide, GA32-0568, for Fibre Channel cable planning information. If Grid Enablement (FC4015) is ordered, Ethernet cables are required for the copper/optical 1 Gbps and optical longwave adapters to attach to the communication grid.

4.1.4 Limitations

Consider the following limitations when performing the TS7700 Virtualization Engine preinstallation and planning:

•Each Tape Subsystem component (TS3500 or TS7700 Virtualization Engine node) must be located within 100 feet or a reasonable distance for an IBM service support representative (SSR) to walk to and from during the servicing of a TS7700 or TS3500.

•Intermixing different models of 3592 Tape Drives is not supported in the same TS7740 Virtualization Engine. The only exception is 3592-J1A and TS1120 operating in J1A emulation mode.

•The 3592 back-end tape drives for a TS7740 cluster must be installed in a TS3500 Tape Library. Connections to 3494 Tape Libraries are no longer supported as of R2.0 machine code.

•Clusters running R3.0 machine code can only be joined in a grid with clusters running either R2.0 or R2.1 machine code. No more than two different code levels are allowed within the same grid.
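The grid code-level limitation lends itself to a quick planning check. The following Python sketch is a simplified illustration: the function name and the string labels for code levels are assumptions made for this example, and the six-cluster limit is taken from the configuration summary earlier in this chapter.

```python
MAX_CLUSTERS_PER_GRID = 6
# Code levels that an R3.0 cluster can share a grid with, per the text.
LEVELS_JOINABLE_WITH_R30 = {"R2.0", "R2.1", "R3.0"}


def grid_code_level_problems(levels):
    """Return a list of rule violations for the clusters' code levels.

    levels holds one code-level label per cluster, for example "R3.0".
    """
    problems = []
    if len(levels) > MAX_CLUSTERS_PER_GRID:
        problems.append(f"{len(levels)} clusters; at most {MAX_CLUSTERS_PER_GRID} are allowed")
    distinct = set(levels)
    if len(distinct) > 2:
        problems.append(f"{len(distinct)} code levels in one grid; at most two are allowed")
    if "R3.0" in distinct:
        unsupported = sorted(distinct - LEVELS_JOINABLE_WITH_R30)
        if unsupported:
            problems.append("R3.0 cannot join a grid with: " + ", ".join(unsupported))
    return problems


print(grid_code_level_problems(["R3.0", "R2.1", "R2.1"]))  # prints []
print(grid_code_level_problems(["R3.0", "R2.1", "R2.0"]))
```

The second call reports a violation because three different code levels would be present in the same grid.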

Tape drives and media support (TS7740 Virtualization Engine only)

The TS7740 supports all four generations of the 3592 Tape Drives: 3592 Model J1A, TS1120 Model E05, TS1130 Model E06, and the TS1140 Model E07 tape drives. Support for the fourth generation of the 3592 tape drive family (TS1140 Model E07) was included after General Availability of Release 2.0 of Licensed Internal Code. At this machine code level, the TS1140-E07 tape drive cannot read 3592 Tape Cartridge JA or 3592 Economy Tape Cartridge JJ.

|

Starting with Release 2.1 of Licensed Internal Code, the reading of JA and JJ media by the TS1140-E07 Tape Drive is supported. This capability enables users to upgrade the tape library tape drives to the TS1140 Model E07 while still having active data on JA or JJ media.

|

The TS1140 supports the 3592 Tape Cartridge JA (read-only), 3592 Expanded Capacity Cartridge JB, 3592 Advanced Tape Cartridge JC, 3592 Economy Tape Cartridge JJ (read-only), and 3592 Economy Advanced Tape Cartridge JK.

|

Note: Not all cartridge media types and media formats are supported by all 3592 Tape Drive models. See Table 4-1 for the media, format, and drive model compatibility to determine which tape drive model is required for a specific capability.

|

Table 4-1 Supported 3592 read/write formats

| 3592 Tape Drive | EFMT1 (512 tracks, eight R/W channels) | EFMT2 (896 tracks, 16 R/W channels) | EFMT3 (1152 tracks, 16 R/W channels) | EFMT4 (2176 tracks, 32 R/W channels) |
|---|---|---|---|---|
| Model J1A | Read/write | Not supported | Not supported | Not supported |
| Model E05 | Read/write (1) | Read/write | Not supported | Not supported |
| Model E06 | Read | Read/write | Read/write | Not supported |
| Model E07 | Read (2) | Read (2) | Read/write (3) | Read/write |

(1) Model E05 can read and write EFMT1 operating in native or J1A emulation mode.

(2) Model E07 can read JA and JJ cartridge types only with tape drive firmware level of D3I3_5CD or higher.

(3) Cartridge type JB only.

|

Tip: Remember that no intermix of tape drive models is supported by the TS7740, except for 3592-E05 Tape Drives working in J1A emulation mode together with 3592-J1A Tape Drives (the second and first generations of the 3592 tape drives, respectively).

TS1130 (3592 Model E06) cannot be intermixed with any other model of 3592 Tape Drive within the same TS7740.

TS1140 (3592 Model E07) cannot be intermixed with any other model of 3592 Tape Drive within the same TS7740 configuration.

|
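The format compatibility in Table 4-1 and the intermix rule in the preceding tip can be combined into a small lookup for planning drive upgrades. The following Python sketch is illustrative only: the data structure and function names are assumptions for this example, and the table footnotes (firmware level for JA and JJ reads, JB-only EFMT3 writes on the E07) are not modeled.

```python
# Read/write support per drive model and format, transcribed from Table 4-1.
# "rw" means read/write, "r" means read only; a missing key means not supported.
FORMAT_SUPPORT = {
    "J1A": {"EFMT1": "rw"},
    "E05": {"EFMT1": "rw", "EFMT2": "rw"},
    "E06": {"EFMT1": "r", "EFMT2": "rw", "EFMT3": "rw"},
    "E07": {"EFMT1": "r", "EFMT2": "r", "EFMT3": "rw", "EFMT4": "rw"},
}


def can_read(model, fmt):
    """Return True if the drive model can read the recording format."""
    return fmt in FORMAT_SUPPORT[model]


def can_write(model, fmt):
    """Return True if the drive model can write the recording format."""
    return FORMAT_SUPPORT[model].get(fmt) == "rw"


def intermix_allowed(models):
    """Return True if the drive models may share one TS7740.

    The only mix permitted is J1A with E05, and the E05 drives must then
    run in J1A emulation mode (not checked here).
    """
    distinct = set(models)
    return len(distinct) <= 1 or distinct == {"J1A", "E05"}


print(can_write("E07", "EFMT2"))         # prints False
print(intermix_allowed(["J1A", "E05"]))  # prints True
```

A planner could use such a lookup to confirm that every format present on existing cartridges remains readable after a drive upgrade.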

Support for the third generation of the 3592 drive family, TS1130 Model E06 and EU6, was included in TS7700 Virtualization Engine Release 1.5. The 3592 Model EU6 Tape Drive was only available as a field upgrade from the TS1120 Tape Drive Model E05, being functionally equivalent to the regular TS1130 3592-E06 Tape Drive. Table 4-2 summarizes the tape drive models, capabilities, and supported media by drive model.

Table 4-2 Summary of the 3592 Tape Drive models and characteristics versus supported media and capacity

| 3592 drive type | Supported media type | Encryption support | Capacity | Data rate |
|---|---|---|---|---|
| TS1140 Tape Drive (3592-E07 Tape Drive) | JB, JC, JK; read only: JA, JJ | Yes (IBM Tivoli Key Lifecycle Manager or IBM Security Key Lifecycle Manager only) | 1.6 TB (JB native); 4.0 TB (JC native); 500 GB (JK native); 4.0 TB (maximum all) | 250 MB/s |
| TS1130 Tape Drive (3592-EU6 or 3592-E06 Tape Drive) | JA, JB, JJ | Yes | 640 GB (JA native); 1.0 TB (JB native); 128 GB (JJ native); 1.0 TB (maximum all) | 160 MB/s |
| TS1120 Tape Drive (3592-E05 Tape Drive) | JA, JB, JJ | Yes | 500 GB (JA native); 700 GB (JB native); 100 GB (JJ native); 700 GB (maximum all) | 100 MB/s |
| 3592-J1A | JA, JJ | No | 300 GB (JA native); 60 GB (JJ native); 300 GB (maximum all) | 40 MB/s |

The media type is the format of the data cartridge. The media type of a cartridge is shown by the last two characters on standard bar code labels. The following media types are supported:

•JA - An Enterprise Tape Cartridge (ETC)

A JA cartridge can be used in native mode in a 3592-J1A drive or a 3592-E05 Tape Drive operating in either native mode or J1A emulation mode. The native capacity of a JA tape cartridge used in a 3592-J1A drive or a 3592-E05 Tape Drive in J1A emulation mode is 300 GB (279.39 GiB). The native capacity of a JA tape cartridge used in a 3592-E05 Tape Drive in native mode is 500 GB (465.66 GiB). The native capacity of a JA tape cartridge used in a 3592-E06 drive in native mode is 640 GB (596.04 GiB).

|

Important: The JA media type is only supported for read-only use with TS1140 Tape Drives.

|

•JB - An Enterprise Extended-Length Tape Cartridge (ETCL)

Use of JB tape cartridges is supported only with TS1140 Tape Drives, TS1130 Tape Drives, and TS1120 Tape Drives operating in native capacity mode.

When used with TS1140 Tape Drives, JB media that contains data written in native E05 mode is only supported for read-only operations. After this data is reclaimed or expired, the cartridge can be written from the beginning of the tape in the new E07 format. If previously written in the E06 format, appends are supported with the TS1140 drive.

The native capacity of a JB tape cartridge used in a 3592-E05 drive is 700 GB (651.93 GiB). When used in a 3592-E06 drive, the JB tape cartridge native capacity is 1000 GB (931.32 GiB). When used within a Copy Export pool, a JB tape cartridge can be written in the E06 format with a TS1140 drive, allowing Copy Export restores to occur with TS1130 hardware. The native capacity of JB media used in a 3592-E07 Tape Drive in native mode is 1600 GB (1490.12 GiB).

•JC - An Enterprise Advanced Data Cartridge (EADC)

This media type is only supported for use with TS1140 Tape Drives. The native capacity of JC media used in a 3592-E07 drive is 4 TB (3.64 TiB).

•JJ - An Enterprise Economy Tape Cartridge (EETC)

A JJ cartridge can be used in native mode in a 3592-J1A drive or a 3592-E05 Tape Drive operating in either native mode or J1A emulation mode. The native capacity of a JJ tape cartridge used in a 3592-J1A drive or 3592-E05 Tape Drive in J1A emulation mode is 60 GB (55.88 GiB). The native capacity of a JJ tape cartridge used in a 3592-E05 Tape Drive in native mode is 100 GB (93.13 GiB).

|

Important: The JJ media type is only supported for read-only use with TS1140 Tape Drives.

|

•JK - An Enterprise Advanced Economy Tape Cartridge (EAETC)

This media type is only supported for use with TS1140 Tape Drives. The native capacity of JK media used in a 3592-E07 drive is 500 GB (465.66 GiB).
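The GiB and TiB values given in parentheses for each media type are binary-unit conversions of the decimal capacities. The following Python sketch shows the conversion, which can be useful when comparing cartridge capacities with values reported in binary units. The function names and the capacity table layout are assumptions for this example; the capacities themselves are the ones listed above.

```python
GIB = 2 ** 30  # bytes in one gibibyte
TIB = 2 ** 40  # bytes in one tebibyte


def gb_to_gib(gigabytes):
    """Convert decimal gigabytes (10^9 bytes) to gibibytes (2^30 bytes)."""
    return gigabytes * 10 ** 9 / GIB


def tb_to_tib(terabytes):
    """Convert decimal terabytes (10^12 bytes) to tebibytes (2^40 bytes)."""
    return terabytes * 10 ** 12 / TIB


# Native capacities in GB by (media type, drive or mode), from the list above.
NATIVE_CAPACITY_GB = {
    ("JA", "J1A or E05 in J1A emulation"): 300,
    ("JA", "E05 native"): 500,
    ("JA", "E06"): 640,
    ("JB", "E05"): 700,
    ("JB", "E06"): 1000,
    ("JB", "E07"): 1600,
    ("JJ", "J1A or E05 in J1A emulation"): 60,
    ("JJ", "E05 native"): 100,
    ("JK", "E07"): 500,
}

for (media, drive), gb in NATIVE_CAPACITY_GB.items():
    print(f"{media} on {drive}: {gb} GB = {gb_to_gib(gb):.2f} GiB")

print(f"JC on E07: 4 TB = {tb_to_tib(4):.2f} TiB")
```

Values printed by this sketch are rounded to two decimal places, so they can differ from a quoted figure by 0.01 where that figure was truncated instead.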

The following cartridges are diagnostic and cleaning cartridges:

•CE - Customer Engineer (CE) diagnostic cartridge for use only by IBM SSRs. The VOLSER for this cartridge is CE xxxJA, where a space occurs immediately after CE and xxx equals three numerals.

•CLN - Cleaning cartridge. The VOLSER for this cartridge is CLN xxxJA, where a space occurs immediately after CLN and xxx equals three numerals.

|

Important: Write Once Read Many (WORM) cartridges, JW, JR, JX, and JY, are not supported. Capacity scaling of 3592 tape media is also not supported by the Virtualization Engine TS7740.

|

4.1.5 TS3500 Tape Library High Density frame for a TS7740 Virtualization Engine configuration

A TS3500 Tape Library High Density frame is supported by a TS7740 Virtualization Engine and holds the stacked volumes.

When you size the physical volume cartridge capacity for a TS7740 Virtualization Engine, the high-density (HD) frame can reduce the floor space that is required.

The TS3500 Tape Library offers high-density, storage-only frame models (HD frames) designed to greatly increase storage capacity without increasing frame size or required floor space.

The new HD frame (Model S24 for 3592 tape cartridges) contains high-density storage slots as shown in Figure 4-1.

Figure 4-1 TS3500 Tape Library HD frame (left) and top-down view

HD slots contain tape cartridges in a tiered architecture. The cartridge immediately accessible in the HD slot is a Tier 1 cartridge. Behind that is Tier 2 and so on. The maximum tier in a 3592 (Model S24) HD slot is Tier 4.

The HD frame Model S24 provides storage for up to 1,000 3592 tape cartridges.

The base capacity of Model S24 is 600 cartridges, which are stored in Tiers 0, 1, and 2. To increase capacity to the maximum for each frame, it is necessary to purchase the High Density Capacity on Demand (HD CoD) feature. This feature provides a license key that enables you to use the storage space available in the remaining tiers.

4.1.6 TS7700 Virtualization Engine upgrades and replacements

The TS7700 Virtualization Engine models V07 and VEB were introduced in Release 2 of the Licensed Internal Code. The feature codes that are available for upgrades and replacements of the existing V06 and VEA models are reviewed. The new feature codes for the V07 and VEB models are described.

See Chapter 7, “Upgrade considerations” on page 329 for existing TS7700 Virtualization Engine component upgrades for functions you might want to enable if you do not have the maximum TS7700 Virtualization Engine configuration.

Licensed Internal Code upgrades from levels earlier than Release 3.0 might also require a hardware reconfiguration scenario. If you currently have a TS7700 Virtualization Engine with a microcode release before R3.0, see 7.3, “TS7700 Virtualization Engine upgrade to Release 3.0” on page 352.

TS7700 Virtualization Engine common feature codes

The following feature codes are available for TS7700 Virtualization Engine models V06 and VEA, and for models V07 and VEB:

•FC1034 Enable dual port grid connection

This feature code enables the second port of each dual port 1-Gb grid connection adapter that is provided by one of these feature codes:

– FC1032 or FC1033 (model V06 or VEA)

– FC1036 or FC1037 (model V07 or VEB)

•FC5270 Increased logical volumes

This feature code increases the number of logical volumes supported on a cluster by 200,000. You can use multiple increments of this feature to increase the maximum number of supported logical volumes from 1,000,000 (default) to 4,000,000. Fifteen instances of FC5270 Increased logical volumes are required to support a new maximum of 4,000,000 logical volumes in a 3957 model V07 or VEB.

|

Restriction: The 3957 models V06 and VEA are limited to a maximum of 2,000,000 volumes, allowing for up to five increment instances of FC5270.

In a multicluster grid configuration, the maximum number of supported logical volumes is determined by the cluster having the fewest installed instances of FC5270. To increase the number of supported logical volumes across a grid, the required number of FC5270 must be installed on each single cluster in the grid.

Grids with one or more 3957 model V06/VEA clusters are limited to a maximum of 2,000,000 volumes.

|

•FC5271 Selective device access control

This feature code authorizes the use of a set of Management Class policies that allow only certain logical control units or LIBPORT-IDs within a composite library to have exclusive access to one or more volumes. FC5271 (Selective device access control) must be installed on each cluster in the grid before any selective device access control policies can be defined in that grid. Each instance of this feature enables the definition of eight selective device access groups. The default group provides a single access group, resulting in nine total possible access groups.

•FC5272 Enable disk encryption

This is a new feature that was introduced in Release 3.0 of Licensed Internal Code. This feature code delivers a product license key to enable disk-based encryption.

|

Disk encryption is only supported in 3957 model V07 and VEB with the new encryption-capable base frame (FC7330 for model V07 and FC7331 for model VEB).

|
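The FC5270 rules described in this list reduce to simple arithmetic: each instance adds 200,000 logical volumes to the 1,000,000 default, and a grid is limited by its least-equipped cluster. The following Python sketch illustrates the calculation. The function names and the tuple-based cluster description are assumptions for this example.

```python
BASE_LOGICAL_VOLUMES = 1_000_000
VOLUMES_PER_FC5270 = 200_000
# Maximum FC5270 instances per server model, from the text above.
MAX_FC5270 = {"V06": 5, "VEA": 5, "V07": 15, "VEB": 15}


def cluster_volume_limit(model, fc5270_instances):
    """Return the logical volume limit for one cluster."""
    if model not in MAX_FC5270:
        raise ValueError(f"unknown model: {model}")
    if not 0 <= fc5270_instances <= MAX_FC5270[model]:
        raise ValueError(f"{model} supports 0 to {MAX_FC5270[model]} instances of FC5270")
    return BASE_LOGICAL_VOLUMES + fc5270_instances * VOLUMES_PER_FC5270


def grid_volume_limit(clusters):
    """Return the grid-wide limit, set by the cluster with the lowest limit.

    clusters is a list of (model, fc5270_instances) tuples.
    """
    return min(cluster_volume_limit(model, count) for model, count in clusters)


print(cluster_volume_limit("V07", 15))                 # prints 4000000
print(grid_volume_limit([("V07", 15), ("VEA", 5)]))    # prints 2000000
```

The second call shows why a grid that contains a V06 or VEA cluster cannot exceed 2,000,000 logical volumes, regardless of how many instances are installed on the newer clusters.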

TS7700 Virtualization Engine non-hardware feature codes

The following non-hardware-related feature codes are available for TS7700 Virtualization Engine models V06 and VEA, and for models V07 and VEB:

•FC4015 Grid enablement

This feature code provides a key to enable the communication function that allows a TS7700 Virtualization Engine to communicate with other TS7700 Virtualization Engines in a grid. Each TS7700 Virtualization Engine must have this feature to be able to participate in a grid configuration.

|

Note: Contact your IBM SSR to properly set up, connect, and configure the grid environment.

|

•FC4016 Remove cluster from grid

This feature code delivers instructions for a one-time process to remove a TS7700 cluster from a TS7700 grid. You must order this feature before you can perform an FC4017 Cluster cleanup on any TS7700 cluster configured to participate in a TS7700 grid. If a TS7700 cluster is removed from a TS7700 grid, cleaned up using FC4017 Cluster cleanup, and then joined to a new TS7700 grid, another instance of FC4016 Remove cluster from grid is required to remove the cluster from the new grid.

•FC4017 Cluster cleanup

This feature code facilitates a one-time cluster cleanup to clean the database, delete logical volumes from the Tape Volume Cache (TVC), and remove configuration data for host connections from a TS7700 cluster. If the cluster is a member of a TS7700 grid, the cluster must first be removed from the grid using FC4016 Remove cluster from grid. If another cleanup is required on this TS7700 cluster, another instance of FC4017 Cluster cleanup is required.

•FC5267 1 TB Cache enablement

This feature code delivers a key to enable a 1 TB increment of disk cache to store logical volumes. Only the number of increments that is less than or equal to the amount of physical capacity installed can be enabled.

|

Restriction: FC5267 is available only with the TS7740 Virtualization Engine.

|

•FC5268 100 MiB/sec increment

This feature code delivers a key to enable an additional 100 MiB per second increment of potential peak data throughput. Enabling a data throughput increment does not guarantee that the overall TS7700 Virtualization Engine performs at that data throughput level. However, the installation of the maximum allowed feature codes results in unrestricted peak data throughput capabilities:

– Model V06 or VEA: Maximum of six instances of FC5268 can be ordered.

– Model VEB: Maximum of nine instances of FC5268 can be ordered, plus one Plant Installed FC9268, for a total of ten 100 MiB/sec instances.

– Model V07: Maximum of ten instances of FC5268 can be ordered for a total of ten 100 MiB/sec instances.

•FC9900 Tape encryption configuration (TS7740 only)

This feature code includes publication updates with information about how to enable and configure the TS7740 Virtualization Engine and TS3500 Tape Library to support encryption. This feature also provides an Encryption Key Server publication. You must enable and configure the TS7740 Virtualization Engine to support encryption with the TS1120, TS1130, or TS1140 encryption-capable tape drives.

|

Restrictions: This feature is available only with the TS7740 Virtualization Engine.

FC9900 Tape Encryption configuration is only supported with encryption-capable TS1120, TS1130, or TS1140 tape drives. FC9900 Encryption configuration is not supported by 3592-J1A tape drives.

|

TS7700 Virtualization Engine hardware feature codes

The following hardware-related feature codes are available for TS7700 Virtualization Engine models V06 and VEA, and for models V07 and VEB:

•FC3441 FICON short-wavelength attachment

This feature code provides one short-wavelength 4-Gbps FICON adapter with an LC duplex connector for attachment to a FICON host system short-wavelength channel using a 50-micron or 62.5-micron multi-mode fibre cable. At 4 Gbps, the maximum fibre cable length allowed by 50-micron cable is 150 m (492 ft.), or 55 m (180 ft.) if using 62.5-micron cable. Each 4-Gbps FICON attachment can support up to 256 logical channels.

•FC3442 FICON long-wavelength attachment

This feature code provides one long-wavelength 4-Gbps FICON adapter with an LC duplex connector for attachment of a TS7700 Virtualization Engine to a FICON host system long-wavelength channel using a 9-micron single-mode fibre cable. The maximum fibre cable length is 4 km (2.48 mi.). Each 4-Gbps FICON attachment can support up to 256 logical channels.

•FC3443 FICON 10-km long-wavelength attachment

This feature code provides one long-wavelength 4-Gbps FICON adapter with an LC duplex connector for attachment to a FICON host system long-wavelength channel using a 9-micron single-mode fibre cable. The maximum fibre cable length is 10 km (6.21 mi.). Each 4-Gbps FICON attachment can support up to 256 logical channels.

•FC3461 8 GB Memory upgrade in field

This feature code delivers a field-installed TS7700 server memory upgrade to 16 GB of Random Access Memory (RAM).

|

Tip: FC3461 is only supported on the 3957 V06 or VEA, and requires Licensed Internal Code Release 2.0 or higher.

|

TS7700 Virtualization Engine models V07 and VEB only feature codes

Starting with Release 2.0, the following feature codes are available for TS7700 Virtualization Engine models V07 and VEB only:

•FC1035, 10 Gb grid optical LW connection

This feature code provides a single port, 10-Gbps Ethernet longwave adapter for grid communication between TS7700 Virtualization Engines. This adapter has an SC Duplex connector for attaching 9-micron, single-mode fibre cable. This is a standard longwave (1,310 nm) adapter that conforms to the IEEE 802.3ae standards. It supports distances up to 10 km (6.2 miles).

|

Note: These adapters cannot negotiate down to run at 1 Gb. They must be connected to a 10-Gb network device or light point.

|

•FC1036 1-Gb grid dual port copper connection

This feature code provides a dual port 1-Gbps 10/100/1000 Base-TX PCIe Ethernet adapter for grid communication between TS7700 Virtualization Engines with a single port enabled. This adapter has an RJ-45 connector for attaching Cat6 cables. It conforms to the IEEE 802.3ab 1000 Base-T standard. It supports distances of up to 100 meters using four pairs of Cat6 balanced copper cabling.

•FC1037 1-Gb dual port optical SW connection

This feature code provides a dual port 1-Gbps shortwave PCIe Ethernet adapter for grid communication between TS7700 Virtualization Engines with a single port enabled. This adapter has an LC Duplex connector for attaching 50-micron or 62.5-micron multimode fibre cable. It is a standard shortwave (850 nm) adapter conforming to the IEEE 802.3z standards. It supports distances of up to 260 meters when used with a 62.5-micron cable, and up to 550 meters when used with a 50.0-micron cable.

•FC5241 Dual port FC HBA

This feature code installs two Fibre Channel interface cards in the TS7700 server (3957-V07 or 3957-VEB) and provides two 31-meter, 50µ Fibre Channel cables to connect the TS7700 server to the Fibre Channel switch. When ordered against the TS7740 Server (3957-V07), this feature connects a fibre switch and the back-end tape drives to the 3957-V07. When ordered against the TS7720 Server (3957-VEB), this feature connects the disk arrays in the 3952 Storage Expansion Frame with the 3957-VEB. When Fibre Channel cables connect the TS7700 Virtualization Engine to the Fibre Channel switches, and an 8 Gbps rate is expected, the following maximum length restrictions apply:

– 50µ, 2000 MHz multimode Fibre Channel aqua blue cables cannot exceed 150 meters.

– 50µ, 500 MHz multimode Fibre Channel orange cables cannot exceed 50 meters.

– 62.5µ, 200 MHz Fibre Channel cables cannot exceed 21 meters.
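The 8 Gbps length restrictions above can be captured in a small lookup when you plan cable runs. The following is a minimal sketch only: the dictionary keys and the function name are illustrative labels chosen for this example, not IBM identifiers.

```python
# Maximum cable lengths (in meters) at 8 Gbps, taken from the FC5241 text above.
# The keys are hypothetical labels for this sketch, not IBM part names.
MAX_LENGTH_M_AT_8_GBPS = {
    "50um_2000MHz": 150,   # aqua blue multimode cable
    "50um_500MHz": 50,     # orange multimode cable
    "62.5um_200MHz": 21,
}


def cable_length_ok(cable_type: str, length_m: float) -> bool:
    """Return True if a planned cable run is within the 8 Gbps limit."""
    try:
        limit = MAX_LENGTH_M_AT_8_GBPS[cable_type]
    except KeyError:
        raise ValueError(f"unknown cable type: {cable_type!r}") from None
    return 0 < length_m <= limit
```

For example, a 31-meter run passes the check for the 50µ, 2000 MHz cable type but fails for the 62.5µ, 200 MHz cable type.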

TS7700 Virtualization Engine 3952-F05 Frame feature codes

Starting with Release 2.0 the following feature codes are available for the 3952-F05 Frame of the TS7700 Virtualization Engine:

•FC4743 Remove 3957-V06/VEA

This feature code directs the removal of the 3957-V06 or 3957-VEA from the 3952-F05 Tape Frame. A new 3957-V07 (FC5629 Install 3957-V07) must be ordered to replace a removed 3957-V06. A new 3957-VEB (FC 5627 Install 3957-VEB) must be ordered to replace a removed 3957-VEA. The instructions for the field installation of a new model 3957-V07 or 3957-VEB are delivered with this feature.

|

Restriction: On a Model V06, FC4743 is mutually exclusive with FC5638 (Plant install 3956-CC6). This feature is not supported for the TS7700 F05 Frame with a 3956-CC6 tape cache.

|

•FC5627 Install 3957-VEB

This feature code allows the installation of a TS7720 Server in a 3952-F05 Tape Frame. This feature occurs on the 3952-F05 Tape Frame order:

– For a plant install of a 3957-VEB Server in the 3952-F05 Frame, you must also order FC9350 when you order this feature.

– For a field merge of the 3957-VEB Server in the 3952-F05 Frame, you must also order FC9351 when you order this feature. FC4743 Remove 3957-V06/VEA must also be ordered.

•FC5629 Install 3957-V07

This feature code allows the installation of a TS7740 Server in a 3952-F05 Tape Frame. This feature occurs on the 3952-F05 Tape Frame order:

– For a plant install of the 3957-V07 Server in the 3952-F05 Frame, you must also order FC9350 when you order this feature.

– For a field merge of the 3957-V07 Server in the 3952-F05 Frame, you must also order FC9351 when you order this feature. FC4743 Remove 3957-V06/VEA must also be ordered.

|

Restriction: A 3957-V07 Server cannot be field-merged in a 3952-F05 Frame as a replacement for a 3957-V06 Server with 3956-CC6 cache installed.

|

More details about the FC4743 Remove 3957-V06/VEA, FC5627 Install 3957-VEB, and FC5629 Install 3957-V07 options are provided in Chapter 8, “Migration” on page 371.

There are also frame replacement procedures available to replace the entire 3952-F05 Frame that contains a 3957-V06 with a new frame that contains the new 3957-V07, and to replace the entire 3957-VEA Frame with the 3957-VEB Frame. For details about those migration options, see Chapter 8, “Migration” on page 371.

Expanded memory (3957 V06/VEA at R1.7 or a lower level of code)

Existing TS7700 V06 or VEA systems can continue at 8 GB of physical memory. However, an upgrade from 8 GB to 16 GB of physical memory is offered for existing systems. Feature code FC3461 (Memory Upgrade) provides the field upgrade of a 3957-V06 or 3957-VEA Server. This update is targeted for systems that meet one of the following conditions:

•More than 500,000 logical volumes are defined and the system experiences heavy host I/O throughput. TS7700 R2.1 or later code will be performance-tuned to take advantage of the additional memory.

•Existing 3957-V06 or 3957-VEA Servers (stand-alone or grid) that are planned to be upgraded to Release 2.1 or higher.

•Existing 3957-V06 or 3957-VEA Servers that are configured in a five-cluster or six-cluster grid.

|

Tip: 16 GB of memory is required for R2.0 or a later level of code.

|
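The three targeting conditions for FC3461 can be expressed as a simple planning check. This is a sketch under stated assumptions: the function name, its parameters, and the representation of a code release as a (major, minor) tuple are hypothetical and exist only for illustration.

```python
from typing import Optional, Tuple


def memory_upgrade_suggested(
    logical_volumes: int,
    heavy_host_io: bool,
    planned_release: Optional[Tuple[int, int]],
    grid_clusters: int,
) -> bool:
    """Return True if a 3957-V06/VEA meets any condition listed for FC3461."""
    # Condition 1: more than 500,000 logical volumes and heavy host I/O.
    many_volumes = logical_volumes > 500_000 and heavy_host_io
    # Condition 2: an upgrade to Release 2.1 or higher is planned.
    upgrade_planned = planned_release is not None and planned_release >= (2, 1)
    # Condition 3: the server is part of a five-cluster or six-cluster grid.
    large_grid = grid_clusters in (5, 6)
    return many_volumes or upgrade_planned or large_grid
```

The check covers only the conditions listed above. The code-level requirement stated in the preceding tip must be considered separately.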

4.2 Hardware installation and infrastructure planning

Planning information related to your TS7700 Virtualization Engine is described. The topics that are covered include system requirements and infrastructure requirements. Figure 4-2 on page 139 shows you an example of the connections and infrastructure resources that might be used for a TS7700 grid with two separate data centers.

Figure 4-2 TS7700 grid configuration example

The letters in Figure 4-2 identify the following components:

•A: TS7740 3584-L23 library control frame

•B: TS7740 3584-D23 Frames with 3592-J1A, TS1120 (3592-E05), TS1130 (3592-E06), or TS1140 (3592-E07) drives

•C: TS7740 3584-HA frame and 3584-D23/HA frame (optional)

•D: TS7740 3592 data cartridges for the data repository

•E: Fibre connections between TS7740 and the Fibre Switches mounted within 3584-D23 Frame

•F: TSSC for IBM SSRs and Autonomic Ownership Takeover Manager (AOTM)

•G: TS7700 Virtualization Engine

•H: Four 1-Gbit Ethernet (copper or SW fibre) or two 10-Gbit Ethernet links for grid communication

•I: Ethernet connections for management interfaces

•J: FICON connections for host workload

•K: FICON fabric infrastructure with extension technology when appropriate

4.2.1 System requirements

Ensure that your facility meets the system requirements for the TS7700 Virtualization Engine when planning for installation. System requirements for installation include requirements for power, cooling, floor leveling, loading, distribution, clearance, environmental conditions, and acoustics.

For a detailed listing of system requirements, see the IBM Virtualization Engine TS7700 Series Introduction and Planning Guide, GA32-0567-14.

IBM 3952 Tape Frame specifications

The 3952 Tape Frame houses the components of the TS7700 Virtualization Engine. Table 4-3 lists the dimensions of the frame enclosing the TS7700 Virtualization Engine.

Table 4-3 Physical characteristics of a maximally configured 3952 Tape Frame

| Characteristic | Value |
| --- | --- |
| Height | 1804 mm (71.0 in.) |
| Width | 644 mm (25.4 in.) |
| Depth | 1098 mm (43.23 in.) |
| Weight | 270 kg (595.25 lbs.) empty; 669.1 kg (1475 lbs.) maximally configured (note 1) |
| Power | 240 Vac, 20 amp (single phase) |
| Unit height | 36 U |

1 See the IBM TS7700 Customer Information Center 3.0.0 under Planning → System requirements. For more detailed information, see TS7720/TS7740 Virtualization Engine specifications and requirements.

Environmental operating requirements

Your facility must meet specified temperature and humidity requirements before installing the TS7700 Virtualization Engine. Table 4-4 shows recommended environmental conditions for the TS7700 Virtualization Engine.

Table 4-4 Environmental specifications

| Condition | Air temperature | Altitude | Relative humidity (note 1) | Wet bulb temperature |
| --- | --- | --- | --- | --- |
| Operating (low altitude) | 10°C - 32°C (50°F - 89.6°F) | Up to 5000 ft. amsl | 20% - 80% | 23°C (73°F) |
| Operating (high altitude) | 10°C - 28°C (50°F - 82.4°F) | 5001 ft. amsl - 7000 ft. amsl | 20% - 80% | 23°C (73°F) |
| Recommended operating range (note 2) | 20°C - 25°C (68°F - 77°F) | Up to 7000 ft. amsl | 40% - 55% | N/A |
| Power off | 10°C - 43°C (50°F - 109°F) | N/A | 8% - 80% | 27°C (80°F) |
| Storage | 1°C - 60°C (33.8°F - 140°F) | N/A | 5% - 80% | 29°C (84°F) |
| Shipping | -40°C - 60°C (-40°F - 140°F) | N/A | 5% - 100% | 29°C (84°F) |

1 Non-condensing

2 Although the TS7700 Virtualization Engine will operate outside this range, it is strongly advised that you adhere to the recommended operating range.

For a complete list of system requirements, see TS7700 Customer Information Center 3.0.0, under Planning → System Requirements → Environmental requirements.
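The operating rows of Table 4-4 can be turned into a quick site-readiness check. The following is a minimal sketch: the function names are hypothetical, the wet bulb limit is not evaluated, and altitudes above 7000 ft. are treated as out of range because the table does not list them.

```python
def within_operating_limits(temp_c: float, humidity_pct: float, altitude_ft: float) -> bool:
    """Check a reading against the two 'Operating' rows of Table 4-4."""
    if altitude_ft <= 5000:
        max_temp_c = 32.0   # low-altitude row
    elif altitude_ft <= 7000:
        max_temp_c = 28.0   # high-altitude row
    else:
        return False        # not covered by the table (assumption: reject)
    return 10.0 <= temp_c <= max_temp_c and 20.0 <= humidity_pct <= 80.0


def within_recommended_range(temp_c: float, humidity_pct: float, altitude_ft: float) -> bool:
    """Check a reading against the 'Recommended operating range' row."""
    return (
        altitude_ft <= 7000
        and 20.0 <= temp_c <= 25.0
        and 40.0 <= humidity_pct <= 55.0
    )
```

A room at 30°C and 50% relative humidity is within the operating limits at 1000 ft., but not at 6000 ft., where the upper temperature limit drops to 28°C.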

Power considerations

Your facility must have an available power supply to meet the input voltage requirements for the TS7700 Virtualization Engine.

The 3952 Tape Frame houses the components of the TS7700 Virtualization Engine. The standard 3952 Tape Frame ships with one internal power distribution unit. However, FC1903, Dual AC power, is required to provide two power distribution units to support the high availability characteristics of the TS7700 Virtualization Engine. The 3952 Tape Expansion Frame has two power distribution units and requires two power feeds.

TS7720 Virtualization Engine power requirements

Your facility must have an available power supply to meet the input voltage requirements for the TS7720 Virtualization Engine. Table 4-5 displays the maximum input power for a fully configured TS7720 Virtualization Engine.

Table 4-5 TS7720 Virtualization Engine maximum input power requirements

| Power requirement | Value |
| --- | --- |
| Voltage | 200 - 240 V AC (single phase) |
| Frequency | 50 - 60 Hz (+/- 3 Hz) |
| Current | 20 A |
| Inrush current | 250 A |
| Power (W) | 4,000 W |
| Input power required | 4.0 kVA (single phase) |
| Thermal units | 13.7 kBtu/hr |

TS7740 Virtualization Engine power requirements

Your facility must have an available power supply to meet the input voltage requirements for the TS7740 Virtualization Engine. Table 4-6 displays the maximum input power for a fully configured TS7740 Virtualization Engine.

Table 4-6 TS7740 Virtualization Engine maximum input power requirements

| Power requirement | Value |
| --- | --- |
| Voltage | 200 - 240 V AC (single phase) |
| Frequency | 50 - 60 Hz (+/- 3 Hz) |
| Current | 20 A |
| Inrush current | 250 A |
| Power (W) | 4,000 W |
| Input power required | 4.0 kVA (single phase) |
| Thermal units | 13.7 kBtu/hr |
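The power figures in Table 4-5 and Table 4-6 are related by simple arithmetic, which can be useful when you size circuits and cooling. The sketch below uses the standard conversion of about 3.412 Btu/hr per watt. The function names are hypothetical, and the results are approximations for planning, not IBM specifications.

```python
WATTS_TO_BTU_PER_HR = 3.412142  # 1 W is approximately 3.412 Btu/hr


def heat_output_kbtu_per_hr(power_w: float) -> float:
    """Convert electrical power in watts to heat output in kBtu/hr."""
    return power_w * WATTS_TO_BTU_PER_HR / 1000.0


def line_current_a(apparent_power_kva: float, voltage_v: float) -> float:
    """Single-phase line current in amperes for a given kVA and voltage."""
    return apparent_power_kva * 1000.0 / voltage_v


if __name__ == "__main__":
    # 4,000 W gives roughly 13.6 to 13.7 kBtu/hr, in line with the tables.
    print(round(heat_output_kbtu_per_hr(4000), 2))
    # 4.0 kVA at the low end of the voltage range (200 V) draws 20 A.
    print(line_current_a(4.0, 200))
```

At 240 V, the same 4.0 kVA load draws less current, so the 20 A figure corresponds to the lowest voltage in the supported range.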

4.2.2 TCP/IP configuration considerations

The configuration considerations and LAN/WAN requirements for the TS7700 Virtualization Engine are described. Single and multicluster grid configurations are covered. Figure 4-3 on page 142 shows you the different networks and connections used by the TS7700 Virtualization Engine and associated components. We pictured a two-cluster TS7740 grid to show the TS3500 Tape Library Connections (not present in a TS7720 configuration).

Figure 4-3 TCP/IP connections and networks

TS7700 grid interconnect LAN/WAN requirements

The LAN/WAN requirements for the TS7700 Virtualization Engine cross-site grid TCP/IP network infrastructure are described.

The TS7700 grid TCP/IP network infrastructure must be in place before the grid is activated so that the clusters can communicate with one another as soon as they are online. Two or four 1-Gb Ethernet, or two 10-Gb Ethernet connections must be in place before grid installation and activation, including the following equipment:

•Internal Ethernet routers

Ethernet routers are used to connect the network to management interface services operating on existing 3957-VEA or 3957-V06. These routers are still present if the TS7700 Virtualization Engine Server is field-upgraded to a 3957-VEB model or 3957-V07 model, but they are configured as switches by upgrade procedures.

•Internal Ethernet switches

Ethernet switches are used primarily for private communication between components within a cluster in the manufactured 3957-V07 model or VEB model (not upgraded in field).

•External asynchronous transfer mode (ATM) switches or Ethernet extenders

An Ethernet extender or other extending equipment can be used to complete extended distance Ethernet connections. Extended grid Ethernet connections can be any of the following connections:

– 1 Gb copper 10/100/1000 Base-TX

This adapter conforms to the IEEE 802.3ab 1000Base-T standard, which defines gigabit Ethernet operation over distances up to 100 meters using four pairs of CAT6 copper cabling.

– 1 Gb optical shortwave

This SX adapter has an LC Duplex connector that attaches to 50-micron or 62.5-micron multimode fibre cable. It is a standard SW (850 nm) adapter that conforms to the IEEE 802.3z standards. This adapter supports distances of 2 - 260 meters for 62.5-micron Multimode Fiber (MMF) and 2 - 550 meters for 50.0-micron MMF.

– Optical longwave

This adapter supports a maximum of 10 km (6.2 miles) of 1310 nm, 9-micron, single-mode fiber optic cable. It conforms to the IEEE 802.3ae standard. This adapter requires 9-micron single-mode fiber optic cables and uses an SC connector to connect to network infrastructure components.

The default configuration for a TS7700 server from manufacturing (3957-VEB or 3957-V07) is two dual-ported PCIe 1-Gb Ethernet adapters. You can use FC 1035, 10 Gb grid optical LW connection, to add support for two 10-Gb optical longwave Ethernet adapters. If the TS7700 server is a 3957-V07 or 3957-VEB, two instances of either FC 1036 (1 Gb grid dual port copper connection) or FC 1037 (1 Gb dual port optical SW connection) must be installed. This feature improves data copy replication while providing minimum bandwidth redundancy. Clusters configured with two 10-Gb, four 1-Gb, or two 1-Gb links can be interconnected within the same TS7700 grid, although the explicit same port-to-port communications still apply.

|

Important: Identify, order, and install any new equipment to fulfill grid installation and activation requirements. The connectivity and performance of the Ethernet connections must be tested prior to grid activation. You must ensure that the installation and testing of this network infrastructure is complete before grid activation.

|

The network between the TS7700 Virtualization Engines must have sufficient bandwidth to account for the total replication traffic. If you are sharing network switches among multiple TS7700 Virtualization Engine paths or with other devices, the sum total of bandwidth on that network must be sufficient to account for all of the network traffic.

The TS7700 Virtualization Engine uses TCP/IP for moving data between each cluster. Bandwidth is a key factor that affects throughput for the TS7700 Virtualization Engine. The following key factors also can affect throughput:

•Latency between the TS7700 Virtualization Engines

•Network efficiency (packet loss, packet sequencing, and bit error rates)

•Network switch capabilities

•Flow control to pace the data from the TS7700 Virtualization Engines

•Inter-switch link capabilities (flow control, buffering, and performance)

The TS7700 Virtualization Engines attempt to drive the network links at the full 1 Gb rate, which might exceed the network infrastructure capabilities. The TS7700 Virtualization Engine supports the IP flow control frames so that the network paces the level at which the TS7700 Virtualization Engine attempts to drive the network. The best performance is achieved when the TS7700 Virtualization Engine is able to match the capabilities of the underlying network, resulting in fewer dropped packets.

|

Remember: When the system exceeds the network capabilities, packets are lost. This causes TCP to stop, resync, and resend data, resulting in a less efficient use of the network.

|

To maximize network throughput, ensure that the underlying network meets these requirements:

•Has sufficient bandwidth to account for all network traffic expected to be driven through the system to eliminate network contention.

•Can support flow control between the TS7700 Virtualization Engines and the switches. This allows the switch to pace the TS7700 Virtualization Engines to the WAN capability. Flow control between the switches is also a potential factor to ensure that the switches can pace their rates to one another. The switches must also be capable of handling the data rates expected from all of the network traffic.

In short, latency between the sites is the primary factor. However, packet loss due to bit error rates or insufficient network capabilities can cause TCP to resend data, therefore multiplying the effect of the latency.
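One way to see why latency dominates is the bandwidth-delay product, which is a general property of TCP and not specific to the TS7700. The sketch below is illustrative only: the function names are hypothetical, and the window size used in the example is an arbitrary value, not a documented TS7700 setting.

```python
def bandwidth_delay_product_bytes(link_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep a link of link_bps full."""
    return link_bps * rtt_s / 8.0


def window_limited_throughput_bps(window_bytes: float, rtt_s: float) -> float:
    """Upper bound on one TCP stream's throughput for a given window."""
    return window_bytes * 8.0 / rtt_s


if __name__ == "__main__":
    # A 1 Gb link with a 10 ms round-trip time needs about 1.25 MB in flight.
    print(bandwidth_delay_product_bytes(1e9, 0.010))
    # A single stream with a 64 KiB window (example value) over the same
    # path is limited to roughly 52 Mbps, far below the link rate.
    print(window_limited_throughput_bps(64 * 1024, 0.010))
```

Doubling the round-trip time doubles the amount of in-flight data needed to fill the link, and every retransmission caused by packet loss costs at least one more round trip.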

The TS7700 Virtualization Engine uses your LAN/WAN to replicate logical volumes, access logical volumes remotely, and perform cross-site messaging. The LAN/WAN must have adequate bandwidth to deliver the throughput necessary for your data storage requirements. The cross-site grid network is 1 Gb Ethernet with either copper (RJ-45) or shortwave fibre (single-ported or dual-ported) links. For copper networks, Cat 5E or Cat6 Ethernet cabling can be used, but Cat6 cabling is preferable to achieve the highest throughput. Alternatively, two 10 Gb longwave fiber Ethernet links can be provided. For additional information, see “FC1036 1-Gb grid dual port copper connection” on page 137, “FC1037 1-Gb dual port optical SW connection” on page 137, and “FC1035, 10 Gb grid optical LW connection” on page 136. Internet Protocol Security (IPSec) is now supported on grid links to support encryption.

|

Important: To avoid any network conflicts, the following subnets must not be used for LAN/WAN IP addresses or management interface primary, secondary, or virtual IP addresses:

•192.168.251.xxx

•192.168.250.xxx

•172.31.1.xxx

|
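A planned address can be checked against these reserved subnets with the Python standard library. This is a minimal sketch that assumes the "xxx" notation above denotes a full /24 range. The function name is hypothetical.

```python
import ipaddress

# Reserved subnets from the Important box above, assuming /24 ranges.
RESERVED_SUBNETS = [
    ipaddress.ip_network("192.168.251.0/24"),
    ipaddress.ip_network("192.168.250.0/24"),
    ipaddress.ip_network("172.31.1.0/24"),
]


def conflicts_with_reserved(address: str) -> bool:
    """Return True if the address falls inside a reserved subnet."""
    ip = ipaddress.ip_address(address)
    return any(ip in subnet for subnet in RESERVED_SUBNETS)
```

For example, `conflicts_with_reserved("192.168.251.10")` returns `True`, so that address must not be used for LAN/WAN or management interface assignments.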

Network redundancy

The TS7700 Virtualization Engine provides up to four independent 1 Gb copper (RJ-45) or shortwave fibre Ethernet links for grid network connectivity. We suggest that you connect each link through an independent WAN interconnection to be protected from a single point of failure that can disrupt all WAN operating paths to or from a node.

Local IP addresses for management interface access

|

Beginning with Release 3.0, the 3957-V07 and 3957-VEB configurations will support IPv6 and IPSec.

|

You must provide three TCP/IP addresses on the same subnet. Two of these are assigned to physical links, and the third is a virtual IP address used to connect to the TS7700 Virtualization Engine management interface.

Use the third IP address to access a TS7700 Virtualization Engine. It automatically routes between the two addresses assigned to physical links. The virtual IP address enables access to the TS7700 Virtualization Engine management interface by using redundant paths, without the need to manually specify IP addresses for each of the paths. If one path is unavailable, the virtual IP address automatically connects through the remaining path.

|

Tip: If FC9900, Encryption configuration, is installed, this same connection is used for communications between the TS7740 Virtualization Engine and the Encryption Key Server or Tivoli Key Lifecycle Manager. Because encryption occurs on attached physical tape drives, the TS7720 Virtualization Engine does not support encryption and the virtual connection is used exclusively to create redundant paths.

|

You must provide one gateway IP address.

You must provide one subnet mask address.

|

Important: All three provided IP addresses will be assigned to one TS7700 Virtualization Engine cluster for the management interface access.

|

Each cluster in the grid must be configured in the same manner as explained before, with three TCP/IP addresses providing redundant paths between the local intranet and cluster. Two of these addresses are assigned to physical links, and the third address provides a virtual IP address to connect to the management interface in this specific TS7700 Virtualization Engine.
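The same-subnet requirement for the three management interface addresses can be verified before installation. The sketch below uses the Python `ipaddress` module. The function name and parameters are hypothetical, and the check that the three addresses are distinct is an assumption of this example.

```python
import ipaddress
from typing import List


def check_mi_addresses(physical_a: str, physical_b: str,
                       virtual_ip: str, subnet_mask: str) -> List[str]:
    """Return a list of problems; an empty list means the plan is consistent."""
    # Derive the subnet from the first physical address and the mask.
    network = ipaddress.ip_network(f"{physical_a}/{subnet_mask}", strict=False)
    addresses = [ipaddress.ip_address(a) for a in (physical_a, physical_b, virtual_ip)]
    problems = []
    if len(set(addresses)) != 3:
        problems.append("the three addresses must be distinct")
    for address in addresses:
        if address not in network:
            problems.append(f"{address} is not in subnet {network}")
    return problems
```

For example, `check_mi_addresses("10.0.0.11", "10.0.0.12", "10.0.0.13", "255.255.255.0")` returns an empty list, whereas replacing the virtual IP address with `10.0.1.13` reports that the address is outside the subnet.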

IPv6 support

Starting with TS7700 Virtualization Engine Licensed Internal Code 3.0, the 3957-V07 and 3957-VEB will support IPv6.

|

Tip: The TS7700 Virtualization Engine grid link interface does not support IPv6.

|

All network interfaces that support monitoring and management functions are now able to support IPv4 or IPv6:

•Management interface

•Key manager server:

– Encryption Key Manager (EKM)

– Tivoli Key Lifecycle Manager

– IBM Security Key Lifecycle Manager

•Simple Network Management Protocol (SNMP) servers

•Lightweight Directory Access Protocol (LDAP) server

•Network Time Protocol (NTP) server

When planning for the NTP server, remember that the NTP server requires IPv6 support in all clusters in the grid.

|

Important: All of these client interfaces must be either IPv6 or IPv4. Mixing IPv4 and IPv6 is not supported currently.

|
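Because mixing IPv4 and IPv6 is not supported, it can help to confirm that every planned client interface address belongs to one address family. The following is a minimal sketch with a hypothetical function name. The sample addresses are documentation examples, not real assignments.

```python
import ipaddress
from typing import Iterable


def single_ip_family(addresses: Iterable[str]) -> bool:
    """Return True if all addresses are IPv4 or all are IPv6."""
    versions = {ipaddress.ip_address(a).version for a in addresses}
    return len(versions) <= 1
```

For example, `single_ip_family(["10.0.0.13", "2001:db8::20"])` returns `False` because the list mixes both families.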

For implementation details, see Chapter 5, “Hardware implementation” on page 189.

IPSec support for the grid links

When running TS7700 R3.0 level of Licensed Internal Code, the 3957-V07 and 3957-VEB models support Internet Protocol Security (IPSec) on the grid links. Only use IPSec capabilities if they are absolutely required by the nature of your business. Grid encryption might cause a considerable slowdown in all grid link traffic, for example:

•Synchronous, immediate or deferred copies

•Remote read or write

For implementation details, see Chapter 5, “Hardware implementation” on page 189.

Connecting to the management interface

We describe how to connect to the IBM Virtualization Engine TS7700 Management Interface. Table 4-7 lists the supported browsers.

Table 4-7 Supported browsers

| Browser | Version supported | Version tested |
| --- | --- | --- |
| Internet Explorer | 8.x or 9.x | 9.x |
| Mozilla Firefox | 6.x, 7.x, 10.x, 10.0.x Extended Support Release (ESR), or 13.x | 13.x |

Perform the following steps to connect to the interface:

1. In the address bar of a supported web browser, enter http:// followed by the virtual IP entered during installation, followed by /Console. The virtual IP is one of three IP addresses given during installation. The complete URL takes this form:

http://virtual IP address/Console

2. Press Enter on your keyboard or Go on your web browser.

The web browser redirects to http://virtual IP address/cluster ID, which is associated with the virtual IP address. If you bookmark this link and the cluster ID changes, you must update your bookmark before the bookmark resolves correctly. Alternatively, you can bookmark the more general URL, http://virtual IP address/Console, which does not require an update following a cluster ID change.

3. The login page for the management interface loads. The default login name is admin and the default password is admin.
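The URL form in step 1 can be built programmatically, for example when you generate bookmarks for several clusters. This is a sketch only: the function name is hypothetical, and the square brackets around IPv6 literals follow general URL syntax rather than anything stated in this chapter.

```python
import ipaddress


def management_interface_url(virtual_ip: str) -> str:
    """Build the general management interface URL for a virtual IP address."""
    ip = ipaddress.ip_address(virtual_ip)
    # IPv6 literals must be enclosed in square brackets inside a URL.
    host = f"[{ip}]" if ip.version == 6 else str(ip)
    return f"http://{host}/Console"
```

For example, `management_interface_url("10.0.0.13")` returns `http://10.0.0.13/Console`, which is the general form that remains valid after a cluster ID change.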

For the list of required TCP/IP port assignments, see Table 4-8 on page 148.

The management interface in each cluster can access all other clusters in the grid through the Gigabit grid links. When you select a remote cluster from the local cluster menu, the management interface navigates automatically to the selected cluster through the Gigabit grid link. Alternatively, you can point the browser to the IP address of the target cluster that you want.

This function is handled automatically by each cluster’s management interface in the background. Figure 4-4 on page 147 shows a sample setup for a two-cluster grid.

Figure 4-4 TS7700 Virtualization Engine management interface access from a remote cluster

WAN IP addresses for cross-site replication within a multicluster grid

For TS7700 Virtualization Engines configured in a grid, the following additional assignments need to be made for the grid WAN adapters. For each adapter, you must supply the following information:

•A TCP/IP address

•A gateway IP address

•A subnet mask

|

Tip: In a TS7700 Virtualization Engine multicluster grid environment, you need to supply two or four IP addresses per cluster for the physical links required by the TS7700 Virtualization Engine grid for cross-site replication.

|

TSSC Network IP addresses

The TS3000 System Console (TSSC) uses an internal and isolated network, which is known as the TSSC network. All separate elements in the tape subsystem connect to this network and are configured in the TSSC by the IBM SSR.

Each component of your tape subsystem connected to the TSSC uses at least one Ethernet port in the TSSC Ethernet hub. For example, a TS7700 cluster needs two connections (one from the primary switch and the other from the alternate switch). If your cluster is a TS7740, you need a third port for the TS3500, the 3584 Tape Library. Depending on the size of your environment, you might need to order a console expansion for your TSSC. See FC2704 in Appendix A, “Feature codes” on page 843 for details.

We suggest that at least one TSSC is available per location in proximity of the tape devices, such as TS7700 clusters and TS3500 Tape Libraries.

Apart from the internal TSSC network, the TSSC can also have two additional physical Ethernet connections:

•External Network Interface

•Grid Network Interface

Those two Ethernet adapters are used by advanced functions, such as AOTM, LDAP, Assist On-site, and Call Home (not using a modem). If you plan to use them, provide one or two Ethernet connections and the corresponding IP addresses for the TSSC. Table 4-8 shows the network port requirements for the TSSC.

Table 4-8 TSSC TCP/IP port requirement

| TSSC interface link | TCP/IP port | Role |
| --- | --- | --- |
| TSSC External | 80 | Call Home |
| | 443 | |
| | 53 | |
| | ICMP | |
| TSSC Grid | 80 | |
| | 22 | |
| | 443 | |
| | 9666 | |
| | ICMP | |

Network switches and TCP/IP port requirements

The network switch and TCP/IP port requirements for the WAN of a TS7700 Virtualization Engine in the grid configuration are described.

|

Clarification: These requirements apply only to LAN/WAN infrastructure; the TS7700 Virtualization Engine internal network is managed and controlled by internal code.

|

Table 4-9 on page 149 displays TCP/IP port assignments for the grid WAN.

Table 4-9 Infrastructure grid WAN TCP/IP port assignments

| Link | TCP/IP port | Role |
| --- | --- | --- |
| TS7700 VE Management Interface | ICMP | Dead gateway detection |
| | 123 | Network Time Protocol (NTP) time server (NTP uses the User Datagram Protocol (UDP)) |
| | 443 | Access the TS7700 VE Management Interface (HTTPS) |
| | 80 | Access the remote management interface when clusters are operating at different code levels (HTTP) |
| | 5988 | Access the TS7700 VE Management Interface |
| | 1443 | Encryption key server, secure socket layer (SSL) |
| | 3801 | Encryption Key Server (TCP/IP) |
| TS7700 VE Grid | ICMP | Check cluster health |
| | 9 | Discard port for speed measurement between grid clusters |
| | 80 | Access the remote management interface when clusters are operating at different code levels |
| | 123 | Network Time Protocol (NTP) time server |
| | 1415/1416 | IBM WebSphere® message queues (grid-to-grid) |
| | 443 | Access the TS7700 VE Management Interface |
| | 350 | TS7700 VE file replication, Remote Mount, and Sync Mode Copy (distributed library file transfer) |
| | 20 | Recommended to remain open for FTP data |
| | 21 | Recommended to remain open for FTP control |
| | 23 | Recommended to remain open for Telnet |
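Before grid activation, a simple probe can help confirm that firewalls between sites pass the grid ports listed in Table 4-9. The following is a minimal sketch, not an IBM tool. The function and dictionary names are hypothetical, ICMP and NTP (which uses UDP) are omitted because a TCP connect cannot test them, and treating the remaining entries as TCP services is an assumption based on the table heading.

```python
import socket

# Grid link ports from Table 4-9 that this sketch assumes are TCP services.
GRID_TCP_PORTS = {
    9: "Discard port for speed measurement",
    80: "Remote management interface (different code levels)",
    443: "Management interface",
    350: "File replication, Remote Mount, and Sync Mode Copy",
    1415: "WebSphere message queues",
    1416: "WebSphere message queues",
    20: "FTP data",
    21: "FTP control",
    23: "Telnet",
}


def tcp_port_open(host: str, port: int, timeout_s: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

A result of `False` shows only that the connection attempt failed from the probing host. It does not distinguish a blocked port from a service that is not listening.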

4.3 Remote installations and FICON switch support

The TS7700 Virtualization Engine attaches to the System z host through FICON channel attachments. There are three basic types of switch connections that can be used between the host and TS7700 Virtualization Engine:

•Direct connect

•Single switch

•Cascaded switches

You can also use Dense Wave Division Multiplexers (DWDMs) or FICON channel extenders between the System z host and the TS7700 Virtualization Engine. For more details about the distances supported, see “TS7700 Virtualization Engine extended distance support” on page 151.

4.3.1 Factors that affect performance at a distance

Fibre Channel distances depend on many factors:

• Type of laser used: Long wavelength or short wavelength

• Type of fiber optic cable: Multi-mode or single-mode

• Quality of the cabling infrastructure in terms of decibel (dB) signal loss:

– Connectors

– Cables

– Bends and loops in the cable

Native shortwave Fibre Channel transmitters have a maximum distance of 500 m with 50-micron diameter, multi-mode, optical fiber. Although 62.5-micron, multi-mode fiber can be used, the larger core diameter has a greater dB loss and maximum distances are shortened to 300 m. Native longwave Fibre Channel transmitters have a maximum distance of 10 km (6.2 miles) when used with 9-micron diameter single-mode optical fiber.

Link extenders provide a signal boost that can potentially extend distances up to about 100 km (62 miles). These link extenders simply act as a big, fast pipe. Data transfer speeds over link extenders depend on the number of buffer credits and efficiency of buffer credit management in the Fibre Channel nodes at either end. Buffer credits are designed into the hardware for each Fibre Channel port. Fibre Channel provides flow control that protects against collisions.

This configuration is extremely important for storage devices, which do not handle dropped or out-of-sequence records. When two Fibre Channel ports begin a conversation, they exchange information about their number of supported buffer credits. A Fibre Channel port will send only the number of buffer frames for which the receiving port has given credit. This approach both avoids overruns and provides a way to maintain performance over distance by filling the “pipe” with in-flight frames or buffers. The maximum distance that can be achieved at full performance depends on the capabilities of the Fibre Channel node that is attached at either end of the link extenders.

This relationship is vendor-specific. There must be a match between the buffer credit capability of the nodes at either end of the extenders. A host bus adapter (HBA) with a buffer credit of 64 communicating with a switch port with only eight buffer credits is able to read at full performance over a greater distance than it is able to write, because, on the writes, the HBA can send a maximum of only eight buffers to the switch port. On the reads, the switch can send up to 64 buffers to the HBA. Until recently, a rule has been to allocate one buffer credit for every 2 km (1.24 miles) to maintain full performance.

Buffer credits within the switches and directors have a large part to play in the distance equation. The buffer credits in the sending and receiving nodes heavily influence the throughput that is attained in the Fibre Channel. Fibre Channel architecture is based on a flow control that ensures a constant stream of data to fill the available pipe. Generally, to maintain acceptable performance, one buffer credit is required for every 2 km (1.24 miles) distance covered. See Introduction to SAN Distance Solutions, SG24-6408, for more information.
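The rule of thumb of one buffer credit for every 2 km can be turned into a quick planning calculation. The following is a minimal sketch under that single assumption; the function names are illustrative, and actual credit requirements are vendor-specific and depend on link speed and frame size.

```python
import math

KM_PER_CREDIT = 2.0  # rule of thumb from the text: one buffer credit per 2 km


def credits_needed(distance_km: float) -> int:
    """Buffer credits needed to keep the link full over distance_km."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    # A port always needs at least one credit to send anything at all.
    return max(1, math.ceil(distance_km / KM_PER_CREDIT))


def full_speed_distance_km(receiver_credits: int) -> float:
    """Longest distance a sender can cover at full performance,
    given the number of credits granted by the receiving port."""
    return receiver_credits * KM_PER_CREDIT


def directional_limits_km(hba_credits: int, switch_credits: int) -> dict:
    """Writes flow HBA -> switch, so the switch's credits bound them.
    Reads flow switch -> HBA, so the HBA's credits bound them."""
    return {
        "write": full_speed_distance_km(switch_credits),
        "read": full_speed_distance_km(hba_credits),
    }
```

Under this rule, `credits_needed(100)` is 50, and `directional_limits_km(64, 8)` gives 16 km for writes and 128 km for reads, which matches the asymmetry described for an HBA with 64 buffer credits attached to a switch port with eight.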

4.3.2 Host attachments

The TS7700 Virtualization Engine attaches to System z hosts through the 8 Gb FICON adapters in the host, using either FICON longwave or shortwave channels, at speeds of 4 Gbps:

•ESCON channel attachment is not supported.

•FICON channel extension and DWDM connection are supported.

•FICON directors and director cascading are supported.

Supported distances

When directly attached to the host, the TS7700 Virtualization Engine can be installed at a distance of up to 10 km (6.2 miles) from the host. With FICON switches (also called FICON Directors) or Dense Wave Division Multiplexers (DWDMs), the TS7700 Virtualization Engine can be installed at extended distances from the host.

TS7700 Virtualization Engine extended distance support

In a multicluster grid configuration, the TS7700 Virtualization Engines are also connected through TCP/IP connections. These network connections are not as sensitive as FICON to long distances when sufficient bandwidth is available.

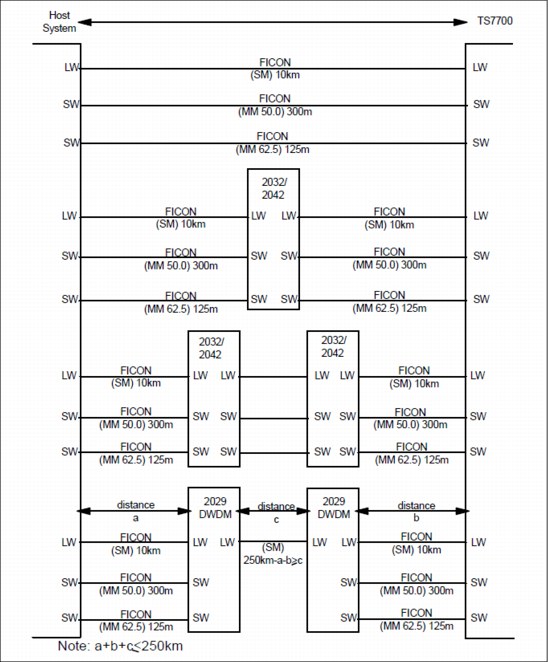

Figure 4-5 on page 152 shows a complete diagram that includes the type and model of common DWDM and FICON Director products, in addition to the shortwave and longwave specifications. Although not shown in this diagram, FICON Directors and director cascading are supported (see 4.3.3, “FICON Director support” on page 152).

Figure 4-5 System z host attachment distances

4.3.3 FICON Director support

All FICON Directors are supported for single and multicluster grid configurations with 1 Gbps, 2 Gbps, 4 Gbps, or 8 Gbps links. The components will auto-negotiate to the highest speed allowed.
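One simplified way to picture auto-negotiation is that a link settles on the highest speed that every component on it supports. The following sketch models only that idea; it is an illustrative assumption rather than the actual negotiation protocol, and the function name and the speed sets in the example are hypothetical.

```python
def negotiated_speed_gbps(*supported_speeds):
    """Return the highest speed (Gbps) common to all components on a link.

    Each argument is an iterable of speeds that one component supports.
    """
    if not supported_speeds:
        raise ValueError("at least one component is required")
    common = set.intersection(*(set(speeds) for speeds in supported_speeds))
    if not common:
        raise ValueError("components share no common link speed")
    return max(common)
```

For example, `negotiated_speed_gbps({2, 4, 8}, {1, 2, 4})` returns 4, because 4 Gbps is the fastest speed that both hypothetical ports support.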

You cannot mix different vendors, such as Brocade (formerly McDATA, CNT, and Inrange) and Cisco, but you can mix models of one vendor.

See the System Storage Interoperation Center (SSIC) for specific intermix combinations supported.

You can find the SSIC at the following URL:

The FICON switch support matrix is at the following address:

4.3.4 FICON channel extenders

FICON channel extenders can operate in one of the following modes:

•Frame shuttle or tunnel mode

•Emulation mode

In frame shuttle or tunnel mode, the extender receives and forwards FICON frames without performing any special channel or control unit emulation processing. The performance is limited by the distance between the sites and the normal round-trip delays in FICON channel programs.

Emulation mode can operate over unlimited distances, and it monitors the I/O activity to devices. The channel extender interfaces emulate a control unit by presenting command responses and Channel End (CE)/Device End (DE) status ahead of the controller, and they emulate the channel when running the pre-acknowledged write operations to the real remote tape device. Therefore, data is accepted early and forwarded to the remote device to maintain a full pipe throughout the write channel program.
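The effect of round-trip delays on the two modes can be estimated with simple arithmetic. The following sketch assumes a propagation delay of about 5 microseconds per kilometer in optical fiber (a common approximation that is not stated in this chapter), and the number of round trips per channel program is a hypothetical input. It ignores switch, extender, and device latency.

```python
US_PER_KM_ONE_WAY = 5.0  # assumption: roughly 5 microseconds per km in fiber


def round_trip_ms(distance_km: float) -> float:
    """Propagation delay for one round trip over distance_km, in milliseconds."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km * US_PER_KM_ONE_WAY / 1000.0


def added_delay_ms(distance_km: float, round_trips: int) -> float:
    """Propagation delay added to one channel program that waits on
    round_trips end-to-end exchanges (a hypothetical count)."""
    return round_trips * round_trip_ms(distance_km)
```

Under these assumptions, one round trip over 100 km costs about 1 ms, so a channel program that waits on four end-to-end exchanges in tunnel mode gains about 4 ms. Emulation mode reduces the number of exchanges the host waits on by presenting status locally, which is why it is less sensitive to distance.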

The supported channel extenders between the System z host and the TS7700 Virtualization Engine are in the same matrix as the FICON switch support under the following URL (see the FICON Channel Extenders section):

Figure 4-6 on page 154 shows an example of host connectivity using FICON channel extenders and cascaded switches.

Figure 4-6 Host connectivity with FICON channel extenders and cascaded switches

4.3.5 Cascaded switches

The following list summarizes the general configuration rules for configurations with cascaded switches:

•Director Switch ID

This is defined in the setup menu.

The inboard Director Switch ID is used on the SWITCH= parameter in the CHPID definition. The Director Switch ID does not have to be the same as the Director Address. Although the example uses a different ID and address for clarity, keep them the same to reduce configuration confusion and simplify problem determination work.

Allowable Director Switch ID ranges have been established by the manufacturer:

– McDATA range: x'61' to x'7F'

– CNT/Inrange range: x'01' to x'EF'

– Brocade range: x'01' to x'EF'

•Director Address

This is defined in the Director GUI setup.

The Director Domain ID is the same as the Director Address that is used on the LINK parameter in the CNTLUNIT definition. The Director Address does not have to be the same as the Director Switch ID, but again, keep them the same to reduce configuration confusion and simplify problem determination work.

The following allowable Director Address ranges are established by the manufacturer:

– McDATA range: x'61' to x'7F'

– CNT/Inrange range: x'01' to x'EF'

– Brocade range: x'01' to x'EF'

•Director Ports

The Port Address might not be the same as the Port Number. The Port Number identifies the physical location of the port, and the Port Address is used to route packets.

The Inboard Director Port is the port to which the CPU is connected. The Outboard Director Port is the port to which the control unit is connected. It is combined with the Director Address on the LINK parameter of the CNTLUNIT definition:

– Director Address (hex) combined with Port Address (hex): two bytes

– Example: LINK=6106 indicates a Director Address of x'61' and a Port Address of x'06'

•External Director connections:

– Inter-Switch Links (ISLs) connect to E Ports.

– FICON channels connect to F Ports.

•Internal Director connections

Port type and port-to-port connections are defined using the available setup menu in the equipment. Figure 4-7 shows an example of host connection using DWDM and cascaded switches.

Figure 4-7 Host connectivity using DWDM and cascaded switches
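The address ranges and the LINK value format described in the rules above can be checked with a short script before the values go into the I/O definition. The following is a minimal sketch: the function names are illustrative assumptions, and the ranges are only those listed in this section.

```python
# Allowable Director Switch ID / Director Address ranges listed in this section.
ADDRESS_RANGES = {
    "McDATA": (0x61, 0x7F),
    "CNT/Inrange": (0x01, 0xEF),
    "Brocade": (0x01, 0xEF),
}


def address_in_range(vendor: str, address: int) -> bool:
    """True when the address falls inside the range listed for the vendor."""
    low, high = ADDRESS_RANGES[vendor]
    return low <= address <= high


def build_link(director_address: int, port_address: int) -> str:
    """Combine a one-byte Director Address and a one-byte Port Address
    into the two-byte hex value used on the LINK parameter."""
    for value in (director_address, port_address):
        if not 0x00 <= value <= 0xFF:
            raise ValueError("addresses must fit in one byte")
    return f"{director_address:02X}{port_address:02X}"


def parse_link(link: str) -> tuple:
    """Split a two-byte LINK value into (Director Address, Port Address)."""
    if len(link) != 4:
        raise ValueError("LINK value must be four hex digits")
    return int(link[:2], 16), int(link[2:], 16)
```

With these helpers, `build_link(0x61, 0x06)` returns `"6106"`, matching the LINK=6106 example, and `parse_link("6106")` returns the Director Address x'61' and Port Address x'06' as integers.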

4.4 High availability grid considerations

The TS7700 Virtualization Engine grid provides configuration flexibility to meet a variety of needs. Those needs depend on both your requirements and the application. This section specifically addresses planning a two-cluster grid configuration to meet high availability needs. However, the discussion easily translates to a three-cluster grid configuration with two production clusters that provide high availability and disaster recovery. The third cluster is strictly a disaster recovery site.

High availability means being able to provide continuous access to logical volumes through planned and unplanned outages with as little user impact or intervention as possible. It does not mean that all potential for user impact or action is eliminated. The following guidelines relate to establishing a grid configuration for high availability:

•The production systems (sysplexes and LPARs) have FICON channel connectivity to both clusters in the grid. The Data Facility Storage Management Subsystem (DFSMS) library definitions and input/output definition file (IODF) have been established and the appropriate FICON Directors, DWDM attachments, and fiber are in place. Virtual tape devices in both clusters in the grid configuration are varied online to the production systems. If virtual tape device addresses are not normally varied on to both clusters, the virtual tape devices to the standby cluster need to be varied on in a planned or unplanned outage to allow production to continue.

•For the workload placed on the grid configuration, when using only one of the clusters, performance throughput needs to be sufficient to meet service level agreements (SLAs). If both clusters are normally used by the production systems (the virtual devices in both clusters are varied online to production), in the case where one of the clusters is unavailable, the available performance capacity of the grid configuration can be reduced by up to one half.