Hardware implementation

This chapter describes the hardware-related implementation steps for the IBM Virtualization Engine TS7700.

It covers all implementation steps that relate to the setup of the following products:

•IBM System Storage TS3500 Tape Library

•TS7700 Virtualization Engine

|

Important: IBM 3494 Tape Library attachment is not supported at Release 2.0 and later.

|

For information about host software implementation, including the hardware configuration definition (HCD) steps on the host and the operating system-related definitions, see Chapter 6, “Software implementation” on page 285.

This chapter includes the following sections:

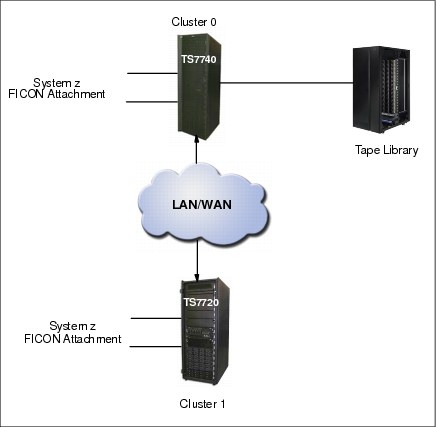

5.1 TS7700 Virtualization Engine implementation and installation considerations

The following sections discuss the implementation and installation tasks to set up the TS7700 Virtualization Engine. The TS7700 Virtualization Engine includes both the IBM Virtualization Engine TS7720 and the IBM Virtualization Engine TS7740. Because there are slight differences between the two, this chapter uses the specific model name when a task applies to only one of them.

The TS7720 Virtualization Engine does not have a tape library attached, so the implementation steps related to a physical tape library (the TS3500 Tape Library) do not apply to the TS7720 Virtualization Engine.

You can install the TS7740 Virtualization Engine together with your existing TS3500 Tape Library. Because the Library Manager functions reside in the TS7700 Virtualization Engine microcode, the TS7740 manages all necessary operations itself, and the IBM 3953 Tape System is no longer required to attach a TS7740 Virtualization Engine.

|

Important: System z attachment of native 3592 tape drives through a tape controller might still require the IBM 3953 Tape System. For more information, see IBM TS3500 Tape Library with System z Attachment: A Practical Guide to Enterprise Tape Drives and TS3500 Tape Automation, SG24-6789.

|

You can also install a new TS3500 Tape Library and a new TS7740 Virtualization Engine at the same time.

5.1.1 Implementation tasks

The TS7700 Virtualization Engine implementation can be logically separated into three major sections:

•TS7740 Virtualization Engine and tape library setup

Use the TS7740 Virtualization Engine and the TS3500 Tape Library interfaces for these setup steps:

– Defining the logical library definitions of the TS7740 Virtualization Engine, such as physical tape drives and cartridges, using the TS3500 Tape Library Specialist, which is the web browser interface to the TS3500 Tape Library.

– Defining specific settings, such as encryption, and inserting logical volumes into the TS7740 Virtualization Engine. You can also use the management interface (MI) to define logical volumes, management policies, and volume categories.

This chapter provides details of these implementation steps.

•TS7700 Hardware I/O configuration definition

This section relates to the system generation. It consists of processes, such as FICON channel attachment to the host, HCD/input/output configuration program (IOCP) definitions, and Missing Interrupt Handler (MIH) settings. This activity can be done before the physical hardware installation and it can be part of the preinstallation planning.

These installation steps are described in Chapter 6, “Software implementation” on page 285.

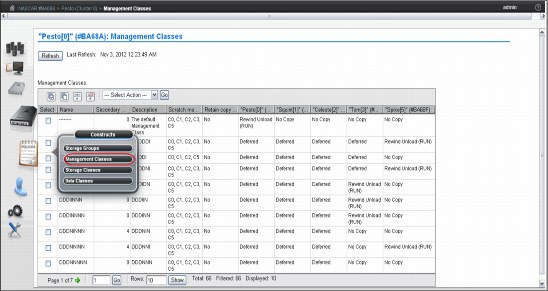

•TS7700 Virtualization Engine software definition

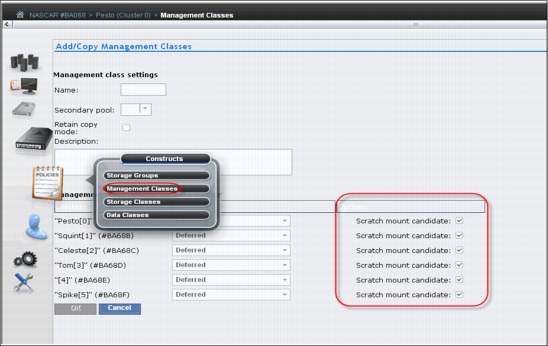

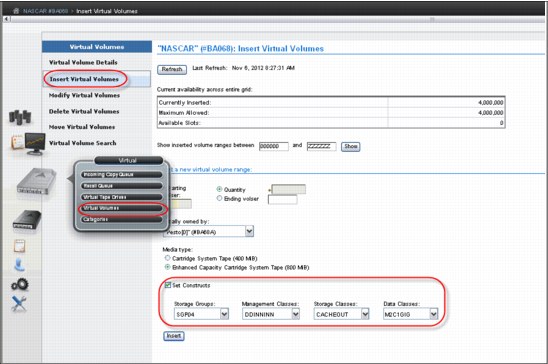

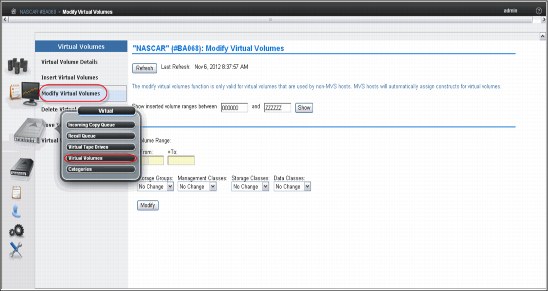

This is where you define the new virtual tape library to the individual host operating system. In a System z environment with Data Facility Storage Management Subsystem (DFSMS)/IBM MVS, this includes updating DFSMS automatic class selection (ACS) routines, object access method (OAM), and your tape management system during this phase. You also define Data Class (DC), Management Class (MC), Storage Class (SC), and Storage Group (SG) constructs and selection policies, which are passed to the TS7700 Virtualization Engine.

These installation steps are described in Chapter 6, “Software implementation” on page 285.

These three groups of implementation tasks can be done in parallel or sequentially. HCD and host definitions can be completed before or after the actual hardware installation.

5.1.2 Installation tasks

The tasks outlined in this section are specific to the simultaneous installation of a TS3500 Tape Library and a TS7740 Virtualization Engine.

|

Important: IBM 3494 Tape Library attachment is not supported at Release 2.0 and later.

|

If you are installing a TS7740 Virtualization Engine in an existing TS3500 Tape Library environment, some of these tasks might not apply to you:

•Hardware-related activities (completed by your IBM System Services Representative (SSR)):

– Install the IBM TS3500 Tape Library.

– Install any native drives that will not be controlled by the TS7740 Virtualization Engine.

– Install the TS7740 Virtualization Engine Frame and additional D2x frame or frames in the TS3500 Tape Library.

•Define drives to hosts:

– z/OS

– z/VM

– z/VSE

– TPF and z/TPF

•Software-related activities:

– Apply maintenance for the TS3500 Tape Library.

– Apply maintenance for the TS7700 Virtualization Engine.

– Verify or update exits for the tape management system (if applicable) and define logical volumes to it.

– See z/VM V5R4.0 DFSMS/VM Removable Media Services, SC24-6090, and 6.6, “Software implementation in z/VM and z/VSE” on page 316.

•Specific TS7700 Virtualization Engine activities:

– Define policies and constructs using the TS7700 Virtualization Engine MI.

– Define the logical VOLSER ranges of the logical volumes through the TS7700 Virtualization Engine MI.

•Specific TS7740 Virtualization Engine installation activities:

– Define the TS7740 Virtualization Engine environment using the TS3500 Tape Library Specialist.

– Define pool properties.

– Define the physical VOLSER ranges for TS7740 Virtualization Engine-owned physical volumes to the MI of the TS7740 Virtualization Engine and TS3500 Tape Library (if applicable).

– Insert TS7740 Virtualization Engine-owned physical volumes in the tape library.

•If you plan to use encryption, specify the system-managed encryption method in the TS3500 web MI, and set all drives for the logical library as Encryption Capable.

|

Important: Encryption does not work with tape drives in emulation mode. Tape drives must be set to Native mode.

|

These tasks are further described, including the suggested order of events, later in this chapter.

After your TS7740 Virtualization Engine is installed on the TS3500 Tape Library, perform the following post-installation tasks:

•Schedule and complete operator training.

•Schedule and complete storage administrator training.

5.2 TS3500 Tape Library definitions (TS7740 Virtualization Engine)

Use this section if you are implementing the TS7740 Virtualization Engine in a TS3500 Tape Library in a System z environment. If your TS7700 Virtualization Engine does not have an associated tape library (TS7720), see 5.3, “Setting up the TS7700 Virtualization Engine” on page 212.

Your IBM SSR performs the hardware installation of the TS7740 Virtualization Engine, its associated tape library, and the frames. This installation does not require your involvement other than the appropriate planning. See Chapter 4, “Preinstallation planning and sizing” on page 123 for details.

The following topics are covered in this section:

•Defining a logical library

•Cartridge assignment policies

•Eight-character VOLSER support

•Assigning drives and creating control paths

•Defining an encryption method and encryption-capable drives

After the SSR has physically installed the library hardware, you can use the TS3500 Tape Library Specialist to set up the logical library, which is attached to the System z host.

|

Clarification: The steps described in the following section relate to the installation of a new IBM TS3500 Tape Library with all the required features, such as ALMS, already installed. If you are adding System z host attachment to an existing IBM TS3500 Tape Library that is already attached to Open Systems hosts, see IBM TS3500 Tape Library with System z Attachment: A Practical Guide to Enterprise Tape Drives and TS3500 Tape Automation, SG24-6789, for additional actions that might be required.

|

5.2.1 Defining a logical library

The TS3500 Tape Library Specialist is required to define a logical library and to perform the tasks that follow, so ensure that it is set up properly and working. Access through a standards-based web browser requires a configured IP address; the SSR initially sets this address at the TS3500 Tape Library operator window during the hardware installation.

|

Important:

•Each TS7740 Virtualization Engine requires its own logical library in a TS3500 Tape Library.

•The ALMS feature must be installed and enabled to define a logical library partition in the TS3500 Tape Library.

|

Ensure that ALMS is enabled

Because ALMS is a chargeable feature, the ALMS license key must be entered through the TS3500 Tape Library operator window before ALMS can be enabled.

You can check the status of ALMS with the TS3500 Tape Library Specialist by selecting Library → ALMS, as shown in Figure 5-1.

Figure 5-1 TS3500 Tape Library Specialist System Summary and Advanced Library Management System windows

The ALMS window (at the bottom of the figure) shows that ALMS is enabled for this TS3500 Tape Library.

When ALMS is enabled for the first time in a partitioned TS3500 Tape Library, the contents of each partition will be migrated to ALMS logical libraries. When enabling ALMS in a non-partitioned TS3500 Tape Library, cartridges that already reside in the library are migrated to the new ALMS single logical library.

Creating a new logical library with ALMS

This function is valid and available only if ALMS is enabled.

|

Tip: You can create or remove a logical library from the TS3500 Tape Library by using the Tape Library Specialist web interface.

|

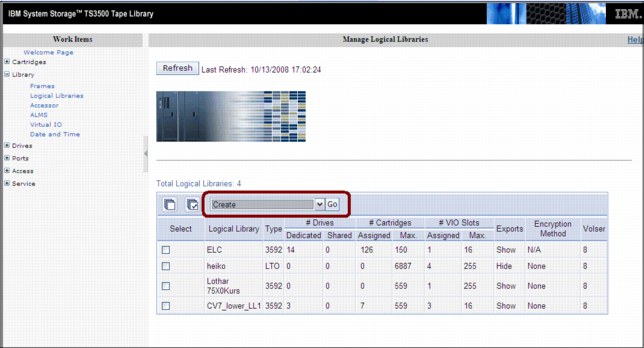

1. From the main section of the TS3500 Tape Library Specialist Welcome window, go to the work items on the left side of the window and select Library → Logical Libraries, as shown in Figure 5-2.

2. From the Select Action drop-down menu, select Create and click Go.

Figure 5-2 Create logical library starting window

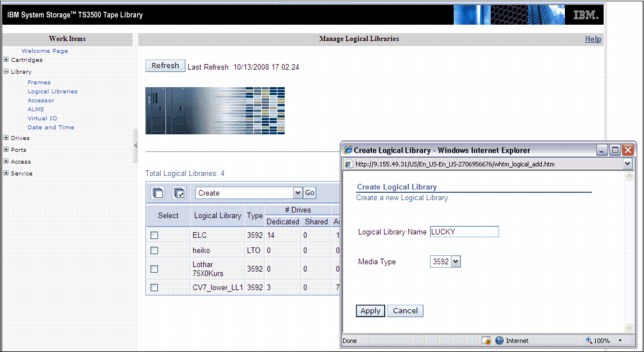

An additional window, named Create Logical Library, opens. Both windows are shown in Figure 5-3.

Figure 5-3 Create Logical Library windows

3. Type the logical library name (up to 15 characters), select the media type (3592 for TS7740), and then click Apply. The new logical library is created and is displayed in the logical library list when the window is refreshed.

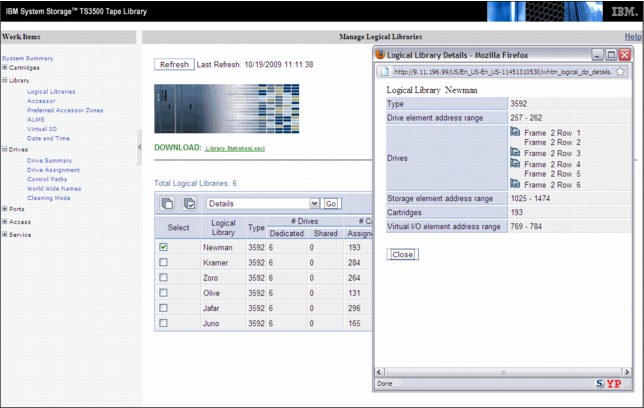

After the logical library is created, you can display its characteristics by selecting Library → Logical Libraries under work items on the left side of the window, as shown in Figure 5-4 on page 197. From the Select Action drop-down menu, select Details and then click Go.

In the Logical Library Details window, you see the element address range. Each newly created logical library has a starting SCSI element address one higher than that of the previously created library, as in the following examples:

•Logical Library 1: Starting SCSI element address is 1025.

•Logical Library 2: Starting SCSI element address is 1026.

•Logical Library 3: Starting SCSI element address is 1027.

Figure 5-4 Recording of the starting SCSI element address of a logical library
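The addressing pattern in the examples above can be sketched as a small calculation. This is a minimal Python sketch that assumes the one-higher pattern continues for every additional logical library; the function name is hypothetical, and the Logical Library Details window remains the authoritative source for the actual address.

```python
# Hypothetical helper illustrating the starting-address pattern shown above.
# Assumption: each new logical library starts one element higher than the last.
FIRST_STARTING_ADDRESS = 1025  # Logical Library 1 in the examples


def starting_element_address(library_number: int) -> int:
    """Return the starting SCSI element address of the Nth logical library created."""
    if library_number < 1:
        raise ValueError("logical library numbers start at 1")
    return FIRST_STARTING_ADDRESS + (library_number - 1)


if __name__ == "__main__":
    for n in (1, 2, 3):
        address = starting_element_address(n)
        # Show decimal and hexadecimal forms, for example 1025 = X'401'
        print(f"Logical Library {n}: {address} (X'{address:X}')")
```

Recording the hexadecimal form as well can help when comparing against tools that report element addresses in hex.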

Setting the maximum cartridges for the logical library

Define the maximum number of cartridge slots for the new logical library. If multiple logical libraries are defined, you can set the maximum number of tape library cartridge slots for each one. This setting allows a logical library to grow without reconfiguration each time you add empty slots. To define the quantity of cartridge slots, select the new logical library from the list, select Maximum Cartridges from the drop-down menu, and then click Go.

Figure 5-5 shows the web interface windows.

Figure 5-5 Defining the maximum number of cartridges

Setting eight-character Volser option in the new logical library

Check the new logical library for the eight-character Volser reporting option. Go to the Manage Logical Libraries window under Library in the Tape Library Web Specialist, as shown in Figure 5-6.

Figure 5-6 Check Volser reporting option

If the new logical library does not show 8 in the Volser column, correct the information:

1. Select a logical library.

2. Click the Select Action drop-down menu and select Modify Volser Reporting.

3. Click Go.

Figure 5-7 illustrates the sequence.

Figure 5-7 Modifying Volser Reporting length

4. In the pop-up window, select the correct Volser character length, which is 8 (default), and apply it as shown in Figure 5-8.

Figure 5-8 Setting 8-character Volser

5.2.2 Adding drives to the logical library

From the Logical Libraries window shown in Figure 5-4 on page 197, use the work items on the left side of the window to navigate to the requested web page by selecting Drives → Drive Assignment.

|

Restriction: The TS7740 does not support intermixing tape drive models. The only exception is 3592-J1A Tape Drives intermixed with 3592-E05 Tape Drives working in J1A emulation mode (the J1A and E05 are the first and second generations of the 3592 Tape Drive).

TS1130 (3592 Model E06) and TS1140 (3592 Model E07) cannot be intermixed with any other model of 3592 Tape Drive within the same TS7740.

|
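The intermix restriction can be read as a simple rule over the set of back-end drives. The following Python sketch is an illustration only: the (model, mode) tuples and mode strings are hypothetical labels, and the sketch conservatively treats any two distinct (model, mode) pairs as an intermix unless they form the J1A and E05-in-J1A-emulation pair named in the restriction.

```python
from typing import Iterable, Tuple

# A drive is described by a hypothetical (model, mode) pair.
DriveKind = Tuple[str, str]

# The only mixed combination that the restriction allows.
ALLOWED_MIX = {("3592-J1A", "native"), ("3592-E05", "J1A-emulation")}


def intermix_allowed(drives: Iterable[DriveKind]) -> bool:
    """Return True if the TS7740 back-end drives respect the intermix restriction."""
    kinds = set(drives)
    if len(kinds) <= 1:
        return True  # a single model and mode is not an intermix
    # Subset test: every distinct kind must belong to the allowed pair.
    return kinds <= ALLOWED_MIX
```

For example, four TS1140 (3592-E07) drives pass, while a mix of 3592-E06 and 3592-E07 drives does not.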

This link takes you to a filtering window where you can choose to display the drives by drive element or by logical library. After you make your selection, a window opens where you can add a drive to or remove a drive from a library configuration. In this window, you can also share a drive between logical libraries and define a drive as a control path.

|

Restriction: Do not share drives belonging to a TS7740 (or any other control unit). They must be exclusive.

|

Figure 5-9 on page 201 shows the drive assignment window of a logical library that has all drives assigned.

Unassigned drives appear with their box checked in the Unassigned column. To assign a drive, check its box under the logical library name and click Apply.

Click the Help link at the upper-right corner of the window, shown in Figure 5-9, to see extended help information, such as detailed explanations of all the fields and functions of the window. The other TS3500 Tape Library Specialist windows provide similar help support.

Figure 5-9 Drive Assignment window

In a multi-platform environment, you see logical libraries as shown in Figure 5-9, and you can reassign physical tape drives from one logical library to another. You can easily do this for the Open Systems environment, where the tape drives attach directly to the host systems without a tape controller or VTS/TS7700 Virtualization Engine.

|

Restriction: Do not change drive assignments if they belong to an operating TS7740 or tape controller. Work with your IBM SSR, if necessary.

|

In a System z environment, a tape drive always attaches to one tape control unit or TS7740 Virtualization Engine only. If you reassign a tape drive from a TS7740 Virtualization Engine or an IBM 3953 Library Manager partition to an Open Systems partition temporarily, you must also physically detach the tape drive from the TS7740 Virtualization Engine or tape controller first, and then attach the tape drive to the Open Systems host. Ensure that only IBM SSRs perform these tasks to protect your tape operation from unplanned outages.

|

Important: In a System z environment, use the Drive Assignment window only for these functions:

•Initially assign the tape drives from TS3500 Tape Library Web Specialist to a logical partition.

•Assign additional tape drives after they have been attached to the TS7740 Virtualization Engine or a tape controller.

•Remove physical tape drives from the configuration after they are physically detached from the TS7740 Virtualization Engine or tape controller.

In addition, never disable ALMS at the TS3500 Tape Library after it has been enabled for System z host support and System z tape drive attachment.

|

5.2.3 Defining control path drives

Each TS7740 Virtualization Engine requires four control path drives to be defined. If possible, distribute the control path drives over more than one TS3500 Tape Library frame to avoid single points of failure.

In a logical library, you can designate any dedicated drive to become a control path drive. A drive that is loaded with a cartridge cannot become a control path until you remove the cartridge. Similarly, any drive that is a control path cannot be disabled until you remove the cartridge that it contains.

The control path drive definition is specified in the Drive Assignment window, shown in Figure 5-10. Drives that are defined as control paths are identified by the control path symbol on the left side of the drive box. You can change the control path definition by selecting or deselecting this symbol.

Figure 5-10 Control Path symbol
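The control path rules in this section can be expressed as a quick consistency check before you apply a drive assignment. This Python sketch is hypothetical: the LibraryDrive record and function names are illustrative, and it treats exactly four control paths as the target, as stated above, and flags layouts in which all control paths sit in one frame.

```python
from dataclasses import dataclass
from typing import List

REQUIRED_CONTROL_PATHS = 4  # per TS7740, as stated in this section


@dataclass
class LibraryDrive:
    frame: int            # TS3500 frame that houses the drive
    control_path: bool    # True if the drive is defined as a control path
    loaded: bool = False  # True if a cartridge is currently in the drive


def can_become_control_path(drive: LibraryDrive) -> bool:
    """A loaded drive cannot become a control path until its cartridge is removed."""
    return not drive.loaded


def control_path_findings(drives: List[LibraryDrive]) -> List[str]:
    """Return findings about the control path layout; an empty list means none."""
    paths = [d for d in drives if d.control_path]
    findings = []
    if len(paths) != REQUIRED_CONTROL_PATHS:
        findings.append(
            f"{len(paths)} control path drives defined, expected {REQUIRED_CONTROL_PATHS}"
        )
    if len({d.frame for d in paths}) < 2:
        findings.append("control paths are not spread over more than one frame")
    return findings
```

The frame finding is advisory, matching the "if possible" wording of the recommendation.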

5.2.4 Defining the Encryption Method for the new logical library

After adding tape drives to the new logical library, you must specify the Encryption Method for the new logical library (if applicable).

|

Reminders:

•When using encryption, tape drives must be set to Native mode.

•To activate encryption, FC9900 must have been ordered for the TS7740 and its license key must be factory-installed. Also, the associated tape drives must be Encryption Capable 3592-E05, 3592-E06, or 3592-E07 drives (although supported, the 3592-J1A cannot encrypt data).

|
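The reminders above amount to a short prerequisite checklist. The following Python sketch collects them in one place; the BackEndDrive record, the mode strings, and the function name are hypothetical, and the sketch does not query the TS3500 or the TS7740.

```python
from dataclasses import dataclass
from typing import List

# Models named in the reminders as able to encrypt; 3592-J1A is supported but cannot.
ENCRYPTING_MODELS = {"3592-E05", "3592-E06", "3592-E07"}


@dataclass
class BackEndDrive:
    model: str
    encryption_capable: bool
    mode: str  # "native" or an emulation mode such as "J1A-emulation"


def encryption_blockers(fc9900_installed: bool, drives: List[BackEndDrive]) -> List[str]:
    """List the reasons encryption cannot be activated; empty means none were found."""
    blockers = []
    if not fc9900_installed:
        blockers.append("FC9900 license key is not installed")
    for i, d in enumerate(drives):
        if d.model not in ENCRYPTING_MODELS:
            blockers.append(f"drive {i}: {d.model} cannot encrypt")
        elif not d.encryption_capable:
            blockers.append(f"drive {i}: not set as Encryption Capable")
        if d.mode != "native":
            blockers.append(f"drive {i}: must be in Native mode, not {d.mode}")
    return blockers
```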

Perform the following steps:

1. Check the drive mode by opening the Drives summary window in the TS3500 MI, as shown in Figure 5-11 on page 203, and look in the Mode column. This column is displayed only if drives in the tape library are emulation-capable.

Figure 5-11 Drive mode

2. If necessary, change the drive mode to Native mode. In the Drives summary window, select a drive and select Change Emulation Mode, as shown in Figure 5-12.

Figure 5-12 Changing drive emulation

3. In the next window that opens, select the native mode for the drive. After the drives are at the desired mode, proceed with the Encryption Method definition.

4. In the TS3500 MI, select Library → Logical Libraries, select the logical library with which you are working, select Modify Encryption Method, and then click Go. See Figure 5-13.

Figure 5-13 Selecting the Encryption Method

5. In the window that opens, select System-Managed for the chosen method, and select all drives for this partition. See Figure 5-14.

Figure 5-14 Setting the Encryption Method

To make encryption fully operational in the TS7740 configuration, additional steps are necessary. Work with your IBM SSR to configure the Encryption parameters in the TS7740 during the installation process.

|

Important: Keep the Advanced Encryption Settings as NO ADVANCED SETTING, unless specifically set otherwise by IBM Engineering.

|

5.2.5 Defining Cartridge Assignment Policies

The Cartridge Assignment Policy (CAP) of the TS3500 Tape Library is where you can assign ranges of physical cartridge volume serial numbers to specific logical libraries. If you have previously established a CAP and place a cartridge with a VOLSER that matches that range into the I/O station, the library automatically assigns that cartridge to the appropriate logical library.

Select Cartridge Assignment Policy from the Cartridges work items to add, change, and remove policies. The maximum quantity of Cartridge Assignment Policies for the entire TS3500 Tape Library must not exceed 300.

Figure 5-15 shows the VOLSER ranges defined for logical libraries.

Figure 5-15 TS3500 Tape Library Cartridge Assignment Policy

The TS3500 Tape Library allows duplicate VOLSER ranges for different media types only. For example, Logical Library 1 and Logical Library 2 contain Linear Tape-Open (LTO) media, and Logical Library 3 contains IBM 3592 media. Logical Library 1 has a Cartridge Assignment Policy of ABC100-ABC200. The library rejects an attempt to add a Cartridge Assignment Policy of ABC000-ABC300 to Logical Library 2 because the media type is the same (both LTO). However, the library accepts a Cartridge Assignment Policy of ABC000-ABC300 for Logical Library 3 because the media type (3592) is different.
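The acceptance rule in this example, together with the 300-policy limit, can be sketched as an overlap test. This is a minimal Python illustration, not the library's own logic; it assumes VOLSERs of equal length so that plain string comparison orders them, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

MAX_POLICIES = 300  # library-wide Cartridge Assignment Policy limit


@dataclass(frozen=True)
class CartridgePolicy:
    logical_library: str
    media_type: str  # for example "LTO" or "3592"
    first: str       # first VOLSER in the range
    last: str        # last VOLSER in the range


def ranges_overlap(a: CartridgePolicy, b: CartridgePolicy) -> bool:
    # Assumption: equal-length VOLSERs, so string comparison orders them correctly.
    return a.first <= b.last and b.first <= a.last


def can_add_policy(existing: List[CartridgePolicy], new: CartridgePolicy) -> bool:
    """Return True if the new policy respects the limit and the overlap rule."""
    if len(existing) >= MAX_POLICIES:
        return False
    # Overlapping ranges are rejected only when the media type is the same.
    return not any(
        p.media_type == new.media_type and ranges_overlap(p, new) for p in existing
    )
```

Applied to the example, ABC000-ABC300 is rejected for a second LTO logical library but accepted for the 3592 logical library.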

In a storage management subsystem (SMS)-managed z/OS environment, all VOLSER identifiers across all storage hierarchies are required to be unique. Follow the same rules across host platforms also, whether or not you are sharing a TS3500 Tape Library between System z and Open Systems hosts.

|

Tip: The Cartridge Assignment Policy does not reassign an already assigned tape cartridge. If needed, you must first make it unassigned, and then manually reassign it.

|

5.2.6 Inserting TS7740 Virtualization Engine physical volumes

The TS7740 Virtualization Engine subsystem manages both logical and physical volumes. The Cartridge Assignment Policy (CAP) of the TS3500 Tape Library only affects the physical volumes associated with this TS7740 Virtualization Engine logical library. Logical volumes are managed from the TS7700 Virtualization Engine MI only.

Perform the following steps to add physical cartridges:

1. Define Cartridge Assignment Policies at the TS3500 Tape Library level by using ALMS through the Web Specialist. This ensures that all TS7740 Virtualization Engine ranges are recognized and assigned to the correct TS3500 Tape Library logical library partition (the logical library created for this specific TS7740 Virtualization Engine) before you begin any TS7700 Virtualization Engine MI definitions.

2. Physically insert volumes into the library by using the I/O station or by opening the library and placing cartridges in empty storage cells. Cartridges are assigned to the TS7740 logical library partitions according to the definitions.

|

Important: Before inserting TS7740 Virtualization Engine physical volumes into the tape library, ensure that the VOLSER ranges are defined correctly at the TS7740 MI. See 5.3.3, “Defining VOLSER ranges for physical volumes” on page 215.

|

These procedures ensure that TS7700 Virtualization Engine back-end cartridges will never be assigned to a host by accident. Figure 5-16 shows the flow of physical cartridge insertion and assignment to logical libraries for TS7740 Virtualization Engine.

Figure 5-16 Volume assignment

Inserting physical volumes into the TS3500 Tape Library

Two methods are available for inserting physical volumes into the TS3500 Tape Library:

•Opening the library doors and inserting the volumes directly into empty storage cells of the tape library (bulk loading)

•Using the TS3500 Tape Library I/O station

Insertion directly into storage cells

Pause the TS3500 from its operator window. Open the door and insert the cartridges into any empty slot except the slot reserved for diagnostic cartridges, which in a single media-type library is Frame 1, Column 1, Row 1 (F01, C01, R01).

|

Important: With ALMS enabled, cartridges that are not in a CAP will not be added to any logical library.

|

After completing the new media insertion, close the doors. After approximately 15 seconds, the TS3500 automatically inventories the frame or frames of the door you opened. During the inventory, the message INITIALIZING is displayed on the Activity window on the operator window. When the inventory completes, the TS3500 operator window displays a Ready state. The TS7740 Virtualization Engine uploads its logical library inventory and updates its Integrated Library Manager inventory accordingly. After completing this operation, the TS7740 Virtualization Engine Library reaches the Auto state.

Place cartridges only in a frame that has an open front door. Do not add or remove cartridges from an adjacent frame.

Insertion by using the I/O station

With ALMS, your TS3500 can operate with or without virtual I/O enabled. The procedure varies depending on which mode is active in the library.

With virtual I/O (VIO) enabled, the TS3500 moves the cartridges from the physical I/O station into the library by itself. First, the cartridge leaves the physical I/O station and goes into a slot mapped as a virtual I/O SCSI element between 769 (X’301’) and 1023 (X’3FF’) for the logical library selected by the CAP.

Each logical library has its own set of up to 255 VIO slots. This number is defined when the logical library is created and can be changed later, if needed.
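A short worked example helps relate the hexadecimal and decimal forms of the VIO element range quoted above and shows how many element addresses it spans. This Python sketch only does the arithmetic; the is_vio_element helper is hypothetical.

```python
# VIO element range quoted above, written in the hexadecimal form used by the library.
VIO_FIRST = 0x301  # 769
VIO_LAST = 0x3FF   # 1023

# range() excludes its upper bound, so add 1 to include element 1023.
vio_elements = range(VIO_FIRST, VIO_LAST + 1)


def is_vio_element(address: int) -> bool:
    """Return True if a SCSI element address falls in the VIO range."""
    return address in vio_elements


print(VIO_FIRST, VIO_LAST, len(vio_elements))  # 769 1023 255
```

The inclusive range 769 through 1023 contains 255 element addresses, and it lies below the starting element addresses of the logical libraries (1025 and up in the earlier examples).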

With VIO disabled, the TS3500 does not move cartridges from the physical I/O station unless it receives a command from the TS7740 Virtualization Engine or any other controlling host.

In both cases, the TS3500 detects the volumes inserted when the I/O station door is closed and scans all I/O cells using the bar code reader. The CAP decides to which logical library those cartridges belong and then performs one of the following tasks:

•Moves them to that logical library’s virtual I/O slots, if VIO is enabled.

•Waits for a host command in this logical partition, if VIO is disabled. The cartridges stay in the I/O station after the bar code scan.

Because the inserted cartridges belong to a defined range in the CAP of this logical library, and those ranges were defined in the TS7740 Virtualization Engine Physical Volume Range as explained in 5.3.3, “Defining VOLSER ranges for physical volumes” on page 215, those cartridges will be assigned to this logical library. If any VOLSER is not in the range defined by the CAP, the operator must identify the correct logical library as the destination by using the Insert Notification window at the operator window. If Insert Notification is not answered, the volume remains unassigned.

|

Restriction: Insert Notification is not supported on a high-density library. If a cartridge outside the CAP-defined ranges is inserted, it will remain unassigned without any notification.

|
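The I/O station flow described above can be summarized as a small decision function. This Python sketch is a simplification under stated assumptions: VOLSERs are compared as equal-length strings, the Insert Notification answer is modeled as an optional operator_choice argument, and all names and return strings are hypothetical rather than library messages.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class CapRange:
    logical_library: str
    first: str  # first VOLSER in the policy range
    last: str   # last VOLSER in the policy range

    def covers(self, volser: str) -> bool:
        # Assumption: equal-length VOLSERs compared character by character.
        return self.first <= volser <= self.last


def route_inserted_cartridge(
    volser: str,
    caps: List[CapRange],
    vio_enabled: bool,
    high_density: bool,
    operator_choice: Optional[str] = None,
) -> str:
    """Describe where a cartridge inserted through the I/O station ends up."""
    owner = next((c.logical_library for c in caps if c.covers(volser)), None)
    if owner is None:
        # Outside every CAP range: only Insert Notification can assign it,
        # and Insert Notification is not available on a high-density library.
        if high_density or operator_choice is None:
            return "unassigned"
        owner = operator_choice
    if vio_enabled:
        return f"moved to the VIO slots of {owner}"
    return f"kept in the I/O station until a host command from {owner}"
```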

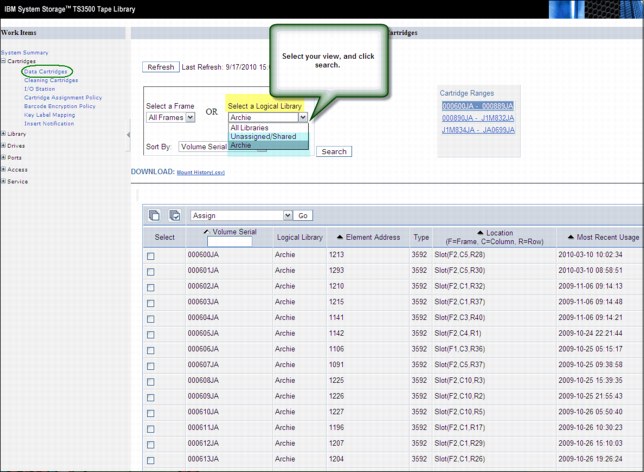

Verify that the cartridges were correctly assigned by using the TS3500 MI. Click Cartridges → Data Cartridges and select the appropriate logical library. If everything is correct, the inserted cartridges are listed. Alternatively, display the Unassigned/Shared volumes, which should show none. See Figure 5-17.

Figure 5-17 Checking volume assignment

Unassigned volumes in the TS3500 Tape Library

If a volume does not match the definitions in the CAP and if during the Insert Notification process, no owner was specified, the cartridge remains unassigned in the TS3500 Tape Library. You can check for unassigned cartridges by using the TS3500 MI and selecting Cartridges → Data Cartridges. In the drop-down menu, select Unassigned/Shared (Figure 5-17).

You can then assign the cartridges to the TS7740 Virtualization Engine logical library partition by following the procedure in 5.2.7, “Assigning cartridges in the TS3500 Tape Library to the logical library partition” on page 210.

|

Important: Unassigned cartridges can exist both in the TS3500 Tape Library and in the TS7700 Virtualization Engine MI. However, “unassigned” has a different meaning in each system and requires different actions from the operator.

|

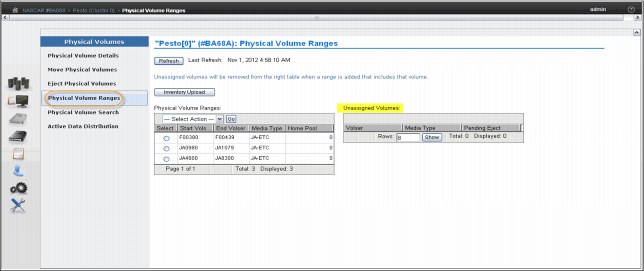

Unassigned volumes in TS7740 Virtualization Engine

A physical volume will be put in the Unassigned category by the TS7740 Virtualization Engine if it does not fit in any defined range of physical volumes for this TS7740 Virtualization Engine. Defined Ranges and Unassigned Volumes can be checked in the Physical Volume Ranges window shown in Figure 5-18. If an unassigned volume needs to belong to this TS7740 Virtualization Engine, a new range that includes this volume must be created, as described in 5.3.3, “Defining VOLSER ranges for physical volumes” on page 215. If this volume was incorrectly assigned to the TS7740 Virtualization Engine, you must eject and reassign it to the proper logical library in the TS3500 Tape Library. Also, double-check the CAP definitions in the TS3500 Tape Library.

Figure 5-18 TS7740 unassigned volumes

5.2.7 Assigning cartridges in the TS3500 Tape Library to the logical library partition

This procedure is necessary only if a cartridge was inserted but no matching CAP was defined in advance. In that case, you must assign the cartridge manually to a logical library in the TS3500 Tape Library.

|

Clarifications:

•Insert Notification is not supported in a high-density library. The CAP must be correctly configured to provide automated assignment of all the inserted cartridges.

•A cartridge that has been manually assigned to the TS7740 Logical Library will not show up automatically in the TS7740 inventory. An Inventory Upload is needed to refresh the TS7740 inventory. The Inventory Upload function is available on the Physical Volume Ranges menu as shown in Figure 5-18.

•Cartridge assignment to a logical library is available only through the TS3500 Tape Library Specialist web interface. The operator window does not provide this function.

|

Assigning a data cartridge

To assign a data cartridge to a logical library in the TS3500 Tape Library, perform the following steps:

1. Open the Tape Library Specialist web interface (navigate to the library’s Ethernet IP address or the library URL using a standard browser). The Welcome window opens.

2. Click Cartridges → Data Cartridges. The Data Cartridges window opens.

3. Select the logical library to which the cartridge is currently assigned and select how you want the cartridge range to be sorted. The library can sort the cartridges by volume serial number, SCSI element address, or frame, column, and row location. Click Search. The Cartridges window opens and shows all the ranges for the logical library that you specified.

4. Select the range that contains the data cartridge that you want to assign.

5. Select the data cartridge and then click Assign.

6. Select the logical library partition to which you want to assign the data cartridge.

7. Click Next to complete the function.

For a TS7740 Virtualization Engine cluster, click Physical → Physical Volumes → Physical Volume Ranges and click Inventory Upload, as shown in Figure 5-18 on page 210.

Inserting a cleaning cartridge

Each drive in the TS3500 Tape Library requires cleaning from time to time. Tape drives used by the TS7740 subsystem can request a cleaning action when necessary. This cleaning is carried out by the TS3500 Tape Library automatically. However, you must provide the necessary cleaning cartridges.

|

Remember:

•ALMS must be enabled in a library that is connected to a TS7740 Virtualization Engine. As a result, the cleaning mode is set to automatic and the library will manage drive cleaning.

•A cleaning cartridge is good for 50 cleaning actions.

|

The process to insert cleaning cartridges varies depending on the setup of the TS3500 Tape Library. A cleaning cartridge can be inserted by using the web interface or from the operator window. As many as 100 cleaning cartridges can be inserted in a TS3500 Tape Library.
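The two figures given here (50 cleaning actions per cartridge and up to 100 cleaning cartridges per library) support a simple planning calculation. This Python sketch assumes you already have an estimate of how many drive cleanings to expect over a planning period; that estimate and the function name are hypothetical inputs, not values from the library.

```python
CLEANINGS_PER_CARTRIDGE = 50   # cleaning actions per cartridge, from the reminder
MAX_CLEANING_CARTRIDGES = 100  # cleaning cartridges one TS3500 can hold


def cleaning_cartridges_needed(expected_cleanings: int) -> int:
    """Return how many cleaning cartridges cover a given number of cleanings."""
    if expected_cleanings < 0:
        raise ValueError("expected_cleanings must not be negative")
    # Integer ceiling division: a partly used cartridge still counts as one.
    needed = -(-expected_cleanings // CLEANINGS_PER_CARTRIDGE)
    if needed > MAX_CLEANING_CARTRIDGES:
        raise ValueError("more cleaning cartridges than a TS3500 can hold")
    return needed
```

For example, an estimate of 160 cleanings (a hypothetical figure) yields 4 cartridges, because 3 cartridges cover only 150 cleanings.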

To insert a cleaning cartridge using the TS3500 Tape Library Specialist, perform the following steps:

1. Open the door of the I/O station and insert the cleaning cartridge.

2. Close the door of the I/O station.

3. Type the Ethernet IP address on the URL line of the browser and press Enter. The Welcome window opens.

4. Click Cartridges → I/O Station. The I/O Station window opens.

5. Follow the instructions in the window.

To insert a cleaning cartridge by using the operator window, perform the following steps:

1. From the Library’s Activity touchscreen, press MENU → Manual Operations → Insert Cleaning Cartridges → Enter. The library displays the message Insert Cleaning Cartridge into I/O station before you continue. Do you want to continue?

2. Open the I/O station and insert the cleaning cartridge. If you insert it incorrectly, the I/O station will not close properly. Do not force it.

3. Close the I/O station and press YES. The tape library scans the I/O station for the cartridges, moves them to an appropriate slot, and then displays the message “Insertion of Cleaning Cartridges has completed.”

4. Press Enter to return to the Manual Operations menu, and then press Back until you return to the Activity touchscreen.

|

Tip: Cleaning cartridges are not assigned to specific logical libraries.

|

5.3 Setting up the TS7700 Virtualization Engine

This section uses the TS7700 MI to continue with the TS7700 Virtualization Engine setup. If you have a TS7720, you can skip 5.2, “TS3500 Tape Library definitions (TS7740 Virtualization Engine)” on page 192 and start here.

This section describes the definitions and settings that apply to the TS7700 Virtualization Engine. The setup requires the following major tasks:

•Definitions that are made by the IBM SSR during the installation of the TS7700 Virtualization Engine at your request

•Definitions that are made through the TS7700 Virtualization Engine MI

•Insertion of logical volumes through the TS7700 Virtualization Engine MI

5.3.1 TS7700 Virtualization Engine definition with the MI

The TS7700 Virtualization Engine MI is a web-based user interface to the TS7700 for information and management. It is accessed through any standard web browser, using the TS7700 Virtualization Engine IP address. During the installation process, the IBM SSR set up TCP/IP on the TS7700 to use the assigned TCP/IP host name and TCP/IP address.

Using the MI, you can perform the following functions:

•Monitor the status of the TS7700 functions and hardware components

•Monitor the performance of the TS7700 and grid

•Manage the TS7700 logical volumes

•Configure the TS7700 and grid

•Manage the operation of the TS7700 and grid

•Manage user access to the TS7700

•Access the TS3500 Tape Library Specialist web interface

•Access the TS7700 Information Center

Connecting to the MI

Perform the following steps to connect to the MI:

1. In the address bar of a supported web browser, enter http:// followed by the virtual IP address that was entered during installation, followed by /Console. The virtual IP is one of three IP addresses specified during installation. The complete URL will take this form:

http://<virtual IP address>/Console

2. Press the Enter key on your keyboard or click Go on your web browser.

3. The login page for the MI will load. The default login name is admin and the default password is admin.
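As a small illustration of step 1, the following Python sketch composes the MI URL from the virtual IP address. The function name `mi_console_url` and the address 192.0.2.10 (a documentation-only range) are hypothetical. The bracket handling for IPv6 literals is a general URL rule, not a statement about which address families the MI supports.

```python
import ipaddress


def mi_console_url(virtual_ip: str) -> str:
    """Build the MI login URL from the cluster's virtual IP address.

    ipaddress.ip_address() raises ValueError for a malformed address,
    which catches typos before the URL is handed to a browser.
    """
    addr = ipaddress.ip_address(virtual_ip)
    # IPv6 literals must be enclosed in brackets inside a URL (RFC 3986).
    host = f"[{addr}]" if addr.version == 6 else str(addr)
    return f"http://{host}/Console"


print(mi_console_url("192.0.2.10"))  # http://192.0.2.10/Console
```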

Figure 5-19 shows a summary window for a six-cluster grid.

Figure 5-19 Six-cluster grid summary

Hover the mouse pointer over a cluster to display a pop-up window that shows its distributed library ID. The composite library sequence number, distributed library sequence number, and cluster number are shown in Figure 5-19.

Name your grid and cluster (or clusters) by using the MI. Be sure to use meaningful names to make resource identification as easy as possible to anyone who might be managing or monitoring this grid through the MI.

|

Tip: A preferred practice is to make the grid name the same as the composite library name that was defined through DFSMS.

|

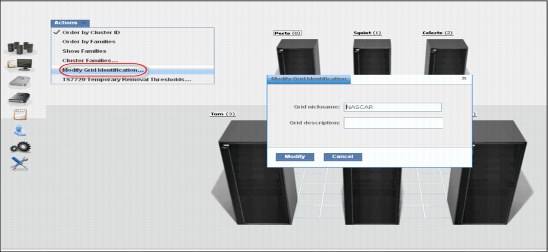

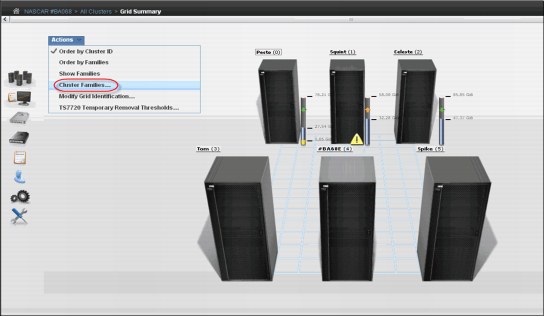

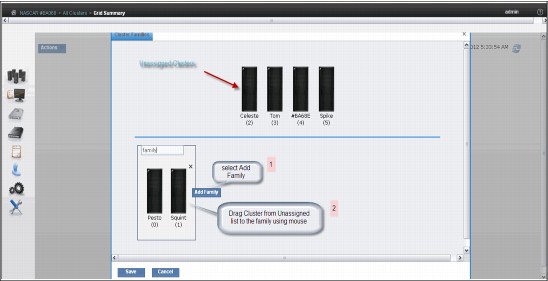

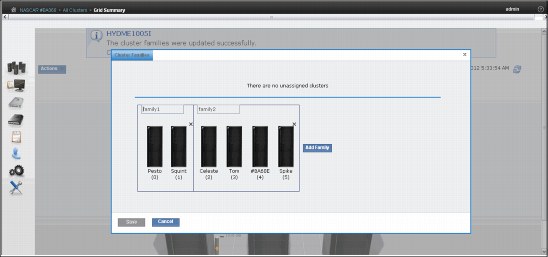

To set the grid name, access the TS7700 MI. Click Action → Modify Grid Identification. The Modify Grid Identification window opens as shown in Figure 5-20. Enter the appropriate information as required.

Figure 5-20 Modify Grid Identification window

Click a cluster image in the Grid Summary window to load the Cluster Summary window. To set the cluster names, click Action → Modify Cluster Identification. See Figure 5-21 for the Modify Cluster Identification window.

|

Tip: Use the same name for the cluster nickname as the DFSMS distributed library name for that cluster.

|

Figure 5-21 Modify Cluster Identification window

Both the cluster and grid nicknames must be 1 - 8 characters long and composed of alphanumeric characters. Blank spaces and the following special characters are also allowed:

@ . - +

Blank spaces cannot be used in the first or last character position.

In the Grid description and Cluster description fields, a brief description of up to 63 characters is enough.
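The nickname and description rules above can be expressed as a simple pre-check, for example when preparing names for several clusters in advance. This minimal Python sketch assumes that "alphanumeric" means the ASCII letters A-Z, a-z and the digits 0-9; the function names are hypothetical, and the MI itself remains the authority on what it accepts.

```python
import string

# Characters allowed in a grid or cluster nickname (ASCII alphanumerics assumed).
NICKNAME_CHARS = frozenset(string.ascii_letters + string.digits + " @.-+")
MAX_NICKNAME_LEN = 8
MAX_DESCRIPTION_LEN = 63


def is_valid_nickname(name: str) -> bool:
    """Check a grid or cluster nickname against the rules stated above."""
    if not 1 <= len(name) <= MAX_NICKNAME_LEN:
        return False
    if any(ch not in NICKNAME_CHARS for ch in name):
        return False
    # Blank spaces are allowed, but not in the first or last position.
    return not (name.startswith(" ") or name.endswith(" "))


def is_valid_description(text: str) -> bool:
    """Grid and cluster descriptions are limited to 63 characters."""
    return len(text) <= MAX_DESCRIPTION_LEN


for candidate in ("GRID01", "PROD A", " PRODA", "CLUSTER_1"):
    print(f"{candidate!r}: {is_valid_nickname(candidate)}")
```

In this sketch "CLUSTER_1" fails twice over: it is nine characters long, and the underscore is not in the allowed set.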

5.3.2 Verifying the composite and distributed library sequence numbers

You must ensure that distributed library and composite library sequence numbers are unique within the grid.

Both composite and distributed library sequence numbers are set into each TS7700 Virtualization Engine cluster by the IBM SSR during installation. The composite library ID defined in the TS7700 hardware must match the hardware configuration definition (HCD) as well as the Library-ID defined in ISMF. The distributed library ID defined in the TS7700 hardware must match the Library-ID defined in ISMF.

Even a stand-alone TS7700 Virtualization Engine (think of it as a grid that consists of a single cluster) requires the definition of both a composite and a distributed library ID. The composite library ID is used for the host definition of the TS7700 Virtualization Engine logical library. The distributed library ID is used to link to the hardware aspects of the TS7700 Virtualization Engine, such as displaying scratch stacked volumes.

Check the distributed and composite library sequence numbers in the Grid Summary window of the MI, as shown in Figure 5-19 on page 213.

|

Restriction: The library ID must be five characters using a hexadecimal value (0-9, A-F). Do not use distributed library names starting with the letter V because on the z/OS host, a library name cannot start with the letter V.

|
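Before the IBM SSR sets the sequence numbers, you might check a planned set of values against the rules above: five hexadecimal characters, unique within the grid, and no library name that starts with V. The following Python sketch is illustrative only; the function names, the assumption that IDs are written in uppercase, and the sample values are hypothetical.

```python
import re
from collections import Counter

LIBRARY_ID = re.compile(r"[0-9A-F]{5}")  # five hex characters, uppercase assumed


def library_id_problems(library_id: str, library_name: str) -> list[str]:
    """Return rule violations for one library definition (empty list if none)."""
    problems = []
    if not LIBRARY_ID.fullmatch(library_id):
        problems.append(f"library ID {library_id!r} is not five hex characters")
    if library_name.upper().startswith("V"):
        problems.append(f"library name {library_name!r} starts with V")
    return problems


def duplicate_ids(library_ids: list[str]) -> list[str]:
    """Sequence numbers must be unique within the grid; report any repeats."""
    counts = Counter(library_ids)
    return sorted(i for i, n in counts.items() if n > 1)


# Hypothetical grid: one composite library ID and three distributed library IDs.
grid_ids = ["CA010", "BA010", "BA011", "BA010"]
print(duplicate_ids(grid_ids))  # ['BA010']
print(library_id_problems("BA01G", "VTSDIST1"))
```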

5.3.3 Defining VOLSER ranges for physical volumes

After a cartridge is assigned by the CAPs to a logical library that is associated with a TS7740 Virtualization Engine, it is presented to the TS7740 Virtualization Engine Integrated Library Manager. The Integrated Library Manager uses the VOLSER ranges that are defined in its VOLSER Ranges table to assign the cartridge to the proper Library Manager category. Define the proper policies in the VOLSER Ranges table before inserting the cartridges into the tape library.

|

Important:

•When using a TS3500 Tape Library, you must assign the CAP at the library hardware level before using the library with System z hosts.

•When using a TS3500 Tape Library and the TS7740 Virtualization Engine, physical volumes must fall within ranges that are assigned by the CAP to this TS7740 Virtualization Engine Logical Library in the TS3500 Tape Library.

|

Use the window shown in Figure 5-22 on page 216 to add, modify, or delete physical volume ranges. Unassigned physical volumes are listed in this window. If an unassigned volume belongs to this TS7740 Virtualization Engine, add a range that includes that volume. If an unassigned volume does not belong to this TS7740 Virtualization Engine, you must eject it and reassign it to the proper logical library in the TS3500 Tape Library.

Figure 5-22 Physical Volume Ranges window

Click Inventory Upload to upload the inventory from the TS3500 and update any range or ranges of physical volumes that were recently assigned to that logical library. The VOLSER Ranges table displays the list of defined VOLSER ranges for a specific component. You can use the VOLSER Ranges table to create a new VOLSER range, or to modify or delete a predefined VOLSER range.

|

Important: Operator intervention is required to resolve unassigned volumes.

|

Figure 5-22 shows the status information that is displayed in the VOLSER Ranges table:

•Start Volser: The first VOLSER in a defined range

•End Volser: The last VOLSER in a defined range

•Media Type: The media type for all volumes in a certain VOLSER range. The following values are valid:

– JA-ETC: Enterprise Tape Cartridge

– JB-ETCL: Enterprise Extended-Length Tape Cartridge

– JC-EADC: Enterprise Advanced Data Cartridge

– JJ-EETC: Enterprise Economy Tape Cartridge

– JK-EAETC: Enterprise Advanced Economy Tape Cartridge

•Home Pool: The home pool to which the VOLSER range is assigned

Use the drop-down menu in the VOLSER Ranges table to add a new VOLSER range, or to modify or delete a predefined range:

•To add a new VOLSER range, select Add from the drop-down menu. Complete the fields for information that will be displayed in the VOLSER Ranges table, as defined previously.

•To modify a predefined VOLSER range, click the radio button from the Select column that appears in the same row as the name of the VOLSER range you want to modify. Select Modify from the drop-down menu and make your changes to the information that will be displayed in the VOLSER Ranges table.

|

Important: Modifying a predefined VOLSER range has no effect on physical volumes that are already inserted and assigned to the TS7740 Virtualization Engine. Only physical volumes that are inserted after the VOLSER range modification are affected.

|

The VOLSER entry fields must contain six characters. The characters can be letters, numerals, or a space. The two VOLSERs must be entered in the same format: corresponding characters in each VOLSER must both be either alphabetic or numeric. For example, AAA998 and AAB004 are of the same form, but AA9998 and AAB004 are not. The VOLSERs that fall within a range are determined as follows: alphabetic characters are incremented alphabetically, and numeric characters are incremented numerically. For example, the VOLSER range ABC000 - ABD999 results in a range of 2,000 VOLSERs (ABC000 - ABC999 and ABD000 - ABD999).
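One way to read the VOLSER range rule is as a mixed-radix count: each numeric position counts 0 - 9, each alphabetic position counts A - Z, and a position that rolls over carries into the position to its left. The Python sketch below implements that interpretation under stated assumptions (uppercase letters and digits only; the blank character that the rule also allows is not handled). It reproduces the 2,000-volume count for ABC000 - ABD999, but verify the behavior against your own library before relying on it. All function names are hypothetical.

```python
import string
from typing import Iterator


def _alphabet(ch: str) -> str:
    """Return the ordered character set that a VOLSER position cycles through."""
    if ch in string.digits:
        return string.digits
    if ch in string.ascii_uppercase:
        return string.ascii_uppercase
    raise ValueError(f"unsupported VOLSER character {ch!r}")


def _to_int(volser: str, alphabets: list[str]) -> int:
    """Read a VOLSER as a mixed-radix number (base 10 or 26 per position)."""
    value = 0
    for ch, alpha in zip(volser, alphabets):
        value = value * len(alpha) + alpha.index(ch)
    return value


def _from_int(value: int, alphabets: list[str]) -> str:
    """Inverse of _to_int: rebuild the VOLSER, rightmost position first."""
    chars = []
    for alpha in reversed(alphabets):
        value, digit = divmod(value, len(alpha))
        chars.append(alpha[digit])
    return "".join(reversed(chars))


def volser_range(start: str, end: str) -> Iterator[str]:
    """Yield every VOLSER from start to end, inclusive."""
    if len(start) != 6 or len(end) != 6:
        raise ValueError("VOLSERs must be six characters")
    alphabets = [_alphabet(ch) for ch in start]
    if alphabets != [_alphabet(ch) for ch in end]:
        raise ValueError("start and end VOLSERs are not of the same form")
    lo, hi = _to_int(start, alphabets), _to_int(end, alphabets)
    if lo > hi:
        raise ValueError("start VOLSER sorts after end VOLSER")
    for value in range(lo, hi + 1):
        yield _from_int(value, alphabets)


print(sum(1 for _ in volser_range("ABC000", "ABD999")))  # 2000
print(list(volser_range("AAA998", "AAB004")))
```

Under this reading, AAA998 - AAB004 is a seven-volume range (AAA998, AAA999, then AAB000 - AAB004), while AA9998 - AAB004 is rejected because the third position is numeric in one VOLSER and alphabetic in the other.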

|

Restriction: The VOLSER ranges you define on the IBM TS3500 Tape Library apply to physical cartridges only. You can define logical volumes only through the TS7700 Virtualization Engine MI. See 5.3.15, “Inserting logical virtual volumes” on page 259 for more information.

|

For the TS7700 Virtualization Engine, no additional definitions are required at the hardware level other than setting up the correct VOLSER ranges at the TS3500 library.

Although you can now enter cartridges into the TS3500 library, complete the required definitions at the host before you insert any physical cartridges into the tape library.

The process of inserting logical volumes into the TS7700 Virtualization Engine is described in 5.3.15, “Inserting logical virtual volumes” on page 259.

5.3.4 Defining physical volume pools (TS7740 Virtualization Engine)

Physical volume pooling was first introduced as part of the advanced policy management functions in the IBM TotalStorage Virtual Tape Server (VTS).

Physical volume pooling allows you to separate your data onto distinct sets of physical media and to treat each media group in a specific way. For instance, you might want to segregate production data from test data, or encrypt part of your data. All of this can be accomplished by defining physical volume pools appropriately. You can also define the reclaim parameters for each pool to best suit your needs. The TS7700 Virtualization Engine MI is used for pool property definitions.

Items under Physical Volumes in the MI only apply to clusters with an associated tape library (TS7740 Virtualization Engine). Trying to access those windows from a TS7720 results in the following HYDME0995E message:

This cluster is not attached to a physical tape library.

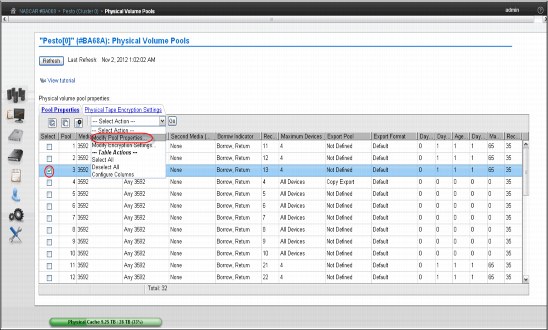

Use the window shown in Figure 5-23 on page 218 to view or modify settings for physical volume pools, which manage the physical volumes used by the TS7700 Virtualization Engine.

Figure 5-23 Physical Volume Pools

The Physical Volume Pool Properties table displays the encryption setting and media properties for every physical volume pool defined for a given cluster in the grid.

You can use the Physical Volume Pool Properties table to view encryption and media settings for all installed physical volume pools. To view and modify additional details of pool properties, select a pool or pools from this table and then select either Modify Pool Properties or Modify Encryption Settings from the drop-down menu.

|

Tip: Pools 1 - 32 are preinstalled. Pool 1 functions as the default pool and is used if no other pool is selected. All other pools must be defined before they can be selected.

|

The Physical Volume Pool Properties table contains two tabs: Pool Properties and Physical Tape Encryption Settings.

•The following information is under the Pool Properties tab:

– Pool: Lists the pool number, which is a whole number in the range of 1 - 32, inclusive.

– Media Class: The supported media class of the storage pool, which is 3592.

– First Media (Primary): The primary media type that the pool can borrow or return to the common scratch pool (Pool 0). The following values are valid:

• Any 3592: Any 3592 media

• JA: Enterprise Tape Cartridge (ETC)

• JB: Enterprise Extended-Length Tape Cartridge (ETCL)

• JC: Enterprise Advanced Data Cartridge (EADC)

• JJ: Enterprise Economy Tape Cartridge (EETC)

• JK: Enterprise Advanced Economy Tape Cartridge (EAETC)

To modify pool properties, select the check box next to one or more pools listed in the Physical Volume Pool Properties table and select Modify Pool Properties from the drop-down menu. The Modify Pool Properties page is displayed.

You can modify the fields Media Class and First Media, defined previously, and the following fields:

– Second Media (Secondary): Lists the second choice of media type from which the pool can borrow. The options listed exclude the media type selected for the First Media. The following values are valid:

• Any 3592: Any 3592 media

• JA: Enterprise Tape Cartridge (ETC)

• JB: Enterprise Extended-Length Tape Cartridge (ETCL)

• JC: Enterprise Advanced Data Cartridge (EADC)

• JJ: Enterprise Economy Tape Cartridge (EETC)

• JK: Enterprise Advanced Economy Tape Cartridge (EAETC)

• None: The only option available if the Primary Media type is Any 3592.

– Borrow Indicator: Defines how the pool is populated with scratch cartridges. The following values are valid:

• Borrow, Return: A cartridge is borrowed from the common scratch pool and returned when emptied.

• Borrow, Keep: A cartridge is borrowed from the common scratch pool and retained, even after being emptied.

• No Borrow, Return: A cartridge cannot be borrowed from the common scratch pool, but an emptied cartridge is placed in the common scratch pool. This setting is used for an empty pool.

• No Borrow, Keep: A cartridge cannot be borrowed from the common scratch pool, and an emptied cartridge is retained.

– Reclaim Pool: Lists the pool to which logical volumes are assigned when reclamation occurs for the stacked volume on the selected pool.

– Maximum Devices: Lists the maximum number of physical tape drives that the pool can use for premigration.

– Export Pool: Lists the type of export that is supported if the pool is defined as an Export Pool, which is the pool from which physical volumes are exported. To set this field, click the Pool Properties tab on the Physical Volume Pools page, select the check box next to each pool to be modified, select Modify Pool Properties from the Physical volume pools drop-down menu, and click Go to open the Modify Pool Properties page. The following values are valid:

• Not Defined: The pool is not defined as an Export pool.

• Copy Export: The pool is defined as a Copy Export pool.

– Export Format: The media format used when writing volumes for export. This function can be used when the physical library recovering the volumes supports a different media format than the physical library exporting the volumes. This field is only enabled if the value in the Export Pool field is Copy Export. The following values are valid:

• Default: The highest common format supported across all drives in the library. This is also the default value for the Export Format field.

• E06: Format of a 3592-E06 Tape Drive.

• E07: Format of a 3592-E07 Tape Drive.

– Days Before Secure Data Erase: Lists the number of days a physical volume that is a candidate for Secure Data Erase can remain in the pool without access to a physical stacked volume. Each stacked physical volume possesses a timer for this purpose, which is reset when a logical volume on the stacked physical volume is accessed. Secure Data Erase occurs at a later time, based on an internal schedule. Secure Data Erase renders all data on a physical stacked volume inaccessible. The valid range of possible values is 1 - 365. Clearing the check box deactivates this function.

– Days Without Access: Lists the number of days the pool can persist without access to set a physical stacked volume as a candidate for reclamation. Each physical stacked volume has a timer for this purpose, which is reset when a logical volume is accessed. The reclamation occurs at a later time, based on an internal schedule. The valid range of possible values is 1 - 365. Clearing the check box deactivates this function.

– Age of Last Data Written: Lists the number of days the pool has persisted without write access to set a physical stacked volume as a candidate for reclamation. Each physical stacked volume has a timer for this purpose, which is reset when a logical volume is accessed. The reclamation occurs at a later time, based on an internal schedule. The valid range of possible values is 1 - 365. Clearing the check box deactivates this function.

– Days Without Data Inactivation: Lists the number of sequential days the pool’s data ratio has been higher than the Maximum Active Data to set a physical stacked volume as a candidate for reclamation. Each physical stacked volume has a timer for this purpose, which is reset when data is inactivated. The reclamation occurs at a later time, based on an internal schedule. The valid range of possible values is 1 - 365. Clearing the check box deactivates this function. If deactivated, this field is not used as a criterion for reclamation.

– Maximum Active Data: Lists the ratio of the amount of active data to the entire physical stacked volume capacity. This field is used in conjunction with Days Without Data Inactivation. The valid range of possible values is 5 - 95%. This function is disabled if Days Without Data Inactivation is not selected.

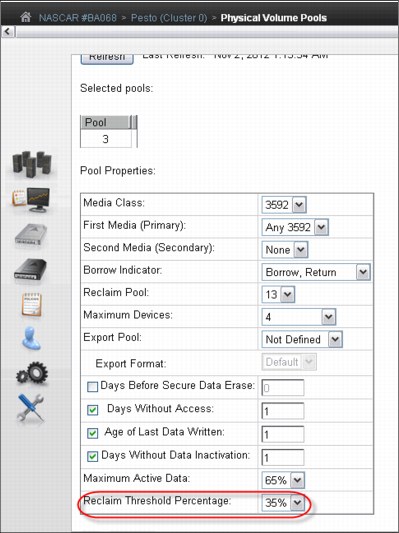

– Reclaim Threshold: Lists the percentage that is used to determine when to perform reclamation of free storage on a stacked volume. When the amount of active data on a physical stacked volume drops below this percentage, the stacked volume becomes eligible for reclamation. The valid range of possible values is 5 - 95%. The default value is 10%. Clearing the check box deactivates this function.

•The following information is under the Physical Tape Encryption Settings tab:

– Pool: Lists the pool number. This number is a whole number 1 - 32, inclusive.

– Encryption: Lists the encryption state of the pool. The possible values are Enabled or Disabled.

– Key Mode 1: Lists the encryption mode used with Key Label 1. The following values are valid for this field:

• Clear Label: The data key is specified by the key label in clear text.

• Hash Label: The data key is referenced by a computed value corresponding to its associated public key.

• None: Key Label 1 is disabled.

• Dash (-): The default key is in use.

– Key Label 1: Lists the current encryption key Label 1 for the pool. The label must consist of ASCII characters and cannot exceed 64 characters. Leading and trailing blanks are removed, but an internal space is allowed. Lowercase characters are internally converted to uppercase upon storage, and therefore key labels are reported using uppercase characters.

If the encryption state indicates Disabled, this field is blank. If the default key is used, the value in this field is default key.

– Key Mode 2: Lists the encryption mode used with Key Label 2. The following values are valid for this field:

• Clear Label: The data key is specified by the key label in clear text.

• Hash Label: The data key is referenced by a computed value corresponding to its associated public key.

• None: Key Label 2 is disabled.

• Dash (-): The default key is in use.

– Key Label 2: The current encryption key Label 2 for the pool. The label must consist of ASCII characters and cannot exceed 64 characters. Leading and trailing blanks are removed, but an internal space is allowed. Lowercase characters are internally converted to uppercase upon storage, and therefore key labels are reported using uppercase characters.

If the encryption state is Disabled, this field is blank. If the default key is used, the value in this field is default key.
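The key label rules above (ASCII only, at most 64 characters, leading and trailing blanks removed, stored in uppercase) let you predict how a label you enter will be reported back. The following Python sketch normalizes a label the same way; the function name is hypothetical, and whether the 64-character limit is checked before or after blanks are removed is an assumption that the text does not settle.

```python
MAX_KEY_LABEL_LEN = 64


def normalize_key_label(label: str) -> str:
    """Return a key label in the form in which it is reported (sketch).

    Leading and trailing blanks are removed, interior blanks are kept,
    and lowercase letters are converted to uppercase.
    """
    stripped = label.strip(" ")
    if not stripped.isascii():
        raise ValueError("key label must consist of ASCII characters")
    # Assumption: the length limit applies after outer blanks are removed.
    if len(stripped) > MAX_KEY_LABEL_LEN:
        raise ValueError(f"key label exceeds {MAX_KEY_LABEL_LEN} characters")
    return stripped.upper()


print(normalize_key_label("  tape pool3 key "))  # TAPE POOL3 KEY
```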

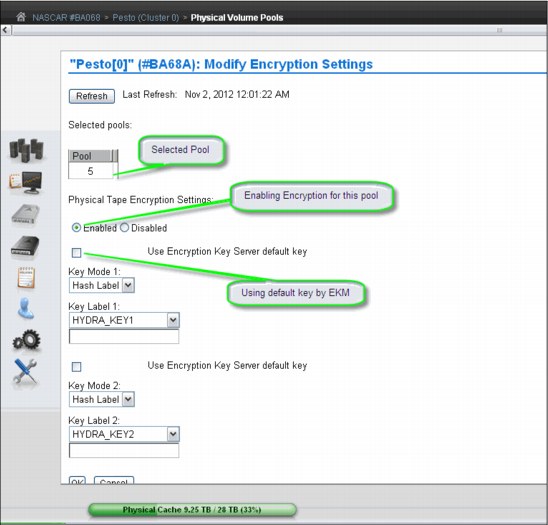

To modify encryption settings for one or more physical volume pools, use the following steps (see Figure 5-24 and Figure 5-25 on page 222 for reference):

1. Open the Physical Volume Pools page (Figure 5-24).

Figure 5-24 Modifying encryption parameters for a pool

|

Tip: A tutorial is available at the Physical Volume Pools page to show how to modify encryption properties.

|

2. Click the Physical Tape Encryption Settings tab.

3. Select the check box next to each pool to be modified.

4. Click Select Action → Modify Encryption Settings.

Figure 5-25 Modify encryption settings parameters

In this window, you can modify values for any of the following controls:

•Encryption:

This field is the encryption state of the pool and can have the following values:

– Enabled: Encryption is enabled on the pool.

– Disabled: Encryption is not enabled on the pool.

When this value is selected, key modes, key labels, and check boxes are disabled.

•Use Encryption Key Manager default key

Select this check box to populate the Key Label field by using a default key provided by the encryption key manager.

|

Restriction: Your encryption key manager software must support default keys to use this option.

|

This check box appears before both the Key Label 1 and Key Label 2 fields; select it for each label that is to be defined by using the default key.

If this check box is selected, the following fields are disabled:

– Key Mode 1

– Key Label 1

– Key Mode 2

– Key Label 2

•Key Mode 1

This field is the encryption mode that is used with Key Label 1. The following values are valid:

– Clear Label: The data key is specified by the key label in clear text.

– Hash Label: The data key is referenced by a computed value corresponding to its associated public key.

– None: Key Label 1 is disabled. The default key is in use.

•Key Label 1

This field is the current encryption key Label 1 for the pool. The label must consist of ASCII characters and cannot exceed 64 characters. Leading and trailing blanks are removed, but an internal space is allowed. Lowercase characters are internally converted to uppercase upon storage, and therefore, key labels are reported using uppercase characters.

•Key Mode 2

This field is the encryption mode used with Key Label 2. The following values are valid:

– Clear Label: The data key is specified by the key label in clear text.

– Hash Label: The data key is referenced by a computed value that corresponds to its associated public key.

– None: Key Label 2 is disabled. The default key is in use.

•Key Label 2

This field is the current encryption key Label 2 for the pool. The label must consist of ASCII characters and cannot exceed 64 characters. Leading and trailing blanks are removed, but an internal space is allowed. Lowercase characters are internally converted to uppercase upon storage, and therefore key labels are reported using uppercase characters.

To complete the operation, click OK. To abandon the operation and return to the Physical Volume Pools page, click Cancel.

Reclaim thresholds

To optimize the use of subsystem resources, such as CPU cycles and tape drives, you can use the TS7740 MI to inhibit space reclamation during predictable busy periods and to adjust reclamation thresholds to the optimum point. The reclaim threshold is the percentage used to determine when to perform the reclamation of free space in a stacked volume. When the amount of active data on a physical stacked volume drops below this percentage, the volume becomes eligible for reclamation. Reclamation values can be in the range of 5 - 95%, and the default value is 10%. Clearing the Reclaim Threshold check box deactivates this function.

Throughout the data lifecycle, new logical volumes are created and old logical volumes become obsolete. Logical volumes are migrated to physical volumes, where they occupy real space. When a logical volume becomes obsolete, the space it occupies on the physical tape becomes wasted capacity. In other words, the active data level of that physical volume decreases over time. The TS7740 actively monitors the active data on its physical volumes. Whenever the active data level of a volume drops below the reclaim threshold that is defined for the physical volume pool to which the volume belongs, the TS7740 places that volume in a candidate list for reclamation.

Reclamation will copy active data from that volume to another stacked volume in the same pool. When the copy finishes and the volume becomes empty, the volume is returned to available SCRATCH status. This cartridge is now available for use and will be returned to the common scratch pool or directed to the specified reclaim pool, according to the Physical Volume Pool definition.

|

Clarification: Each reclamation task uses two tape drives (source and target) in a tape-to-tape copy function. The TS7740 Tape Volume Cache is not used for reclamation.

|

Multiple reclamation processes can run in parallel. The maximum number of reclaim tasks is limited by the TS7740, based on the number of available back-end drives as shown in Table 5-1.

Table 5-1 Installed drives versus maximum reclaim tasks

| Number of available drives | Maximum number of reclaims |
|---|---|
| 3 | 1 |
| 4 | 1 |
| 5 | 1 |
| 6 | 2 |
| 7 | 2 |
| 8 | 3 |
| 9 | 3 |
| 10 | 4 |
| 11 | 4 |
| 12 | 5 |
| 13 | 5 |
| 14 | 6 |
| 15 | 6 |
| 16 | 7 |
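The values in Table 5-1 follow a simple pattern: half the available drives, rounded down, minus one, but never fewer than one task. The Python sketch below checks that closed form against every row of the table. The formula is a fit to the published values for 3 - 16 drives, not a documented TS7740 rule, so the hypothetical `max_reclaim_tasks` function refuses drive counts outside that range.

```python
# Table 5-1: number of available back-end drives -> maximum reclaim tasks.
TABLE_5_1 = {3: 1, 4: 1, 5: 1, 6: 2, 7: 2, 8: 3, 9: 3, 10: 4,
             11: 4, 12: 5, 13: 5, 14: 6, 15: 6, 16: 7}


def max_reclaim_tasks(available_drives: int) -> int:
    """Closed form fitted to Table 5-1 (valid for 3 - 16 drives only)."""
    if not 3 <= available_drives <= 16:
        raise ValueError("Table 5-1 covers 3 - 16 drives only")
    # floor(drives / 2) - 1, with a floor of one reclaim task.
    return max(1, available_drives // 2 - 1)


# The closed form agrees with every row of the table.
assert all(max_reclaim_tasks(n) == m for n, m in TABLE_5_1.items())
print(max_reclaim_tasks(8))  # 3
```

Because each reclaim task ties up two drives, the table never allows more than half of the drives to be used by reclamation.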

The reclamation level for the physical volumes must be set by using the Physical Volume Pools window in the TS7740 MI. See Figure 5-26.

Figure 5-26 Physical Volume Pools

Select a pool and click Modify Pool Properties in the drop-down menu to set the reclamation level and other policies for that pool. See Figure 5-27 on page 226.

In this example, the reclamation level is set to 35%, the Borrow-Return policy is in effect for this pool, and reclaimed physical cartridges stay in the same Pool 3, except borrowed volumes, which will be returned to the original pool.

Figure 5-27 Pool properties

Reclamation enablement

To minimize any impact on TS7700 Virtualization Engine activity, the storage management software monitors resource utilization in the TS7700 Virtualization Engine, and enables or disables reclamation as appropriate. You can optionally prevent reclamation activity at specific times of day by specifying an Inhibit Reclaim Schedule in the TS7740 Virtualization Engine MI (Figure 5-28 on page 229 shows an example). However, once an hour the TS7740 Virtualization Engine determines whether reclamation is to be enabled or disabled, depending on the number of available scratch cartridges, and it ignores the Inhibit Reclaim schedule if the TS7740 Virtualization Engine is almost out of scratch cartridges.

|

Tip: The maximum number of inhibit reclaim schedules is 14.

|

Using the Bulk Volume Information Retrieval (BVIR) process, you can run the query for PHYSICAL MEDIA POOLS to monitor the amount of active data on stacked volumes to help you plan for a reasonable and effective reclaim threshold percentage. You can also use the Host Console Request function to obtain the physical volume counts.

Even when reclamation is enabled, stacked volumes are not necessarily being reclaimed at all times. Other conditions must also be met, such as the existence of stacked volumes that meet one of the reclaim policies and of drives that are available to mount them.

Reclamation for a volume is stopped by the TS7700 Virtualization Engine internal management functions if a tape drive is needed for a recall or copy (because these are of a higher priority) or a logical volume is needed for recall off a source or target tape being used in the reclaim process. If this happens, reclamation is stopped for this physical tape after the current logical volume move is complete.

Pooling is enabled as a standard feature of the TS7700 Virtualization Engine, even if you are only using one pool. Reclamation can occur on multiple volume pools at the same time, and multiple reclamation tasks can process the same pool. One of the reclamation methods selects the volumes for processing based on the percentage of active data. For example, if the reclaim threshold was set to 30% generically across all volume pools, the TS7700 Virtualization Engine selects all the stacked volumes with 0% - 29% remaining active data. The reclaim tasks then process the volumes from least full (0%) to most full (29%) up to the defined reclaim threshold of 30%.
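The threshold-based selection described above can be sketched as follows: volumes whose active-data percentage is below their pool's reclaim threshold are eligible, and they are taken least full first. Ordering by pool number first reflects the statement that follows about pools being evaluated starting with Pool 1; that combined ordering, the `StackedVolume` type, and the sample VOLSERs are assumptions of this illustration, not the TS7740's internal algorithm. The other reclaim policies (Days Without Access and so on) are not modeled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StackedVolume:
    volser: str
    pool: int
    active_pct: float  # active data as a percentage of the volume's capacity


def reclaim_candidates(volumes: list[StackedVolume],
                       thresholds: dict[int, float]) -> list[StackedVolume]:
    """Return volumes below their pool's reclaim threshold.

    Pools missing from `thresholds` are treated as having the threshold
    disabled. Results are ordered by pool number (Pool 1 first) and then
    from least full to most full within each pool.
    """
    eligible = [
        v for v in volumes
        if v.pool in thresholds and v.active_pct < thresholds[v.pool]
    ]
    return sorted(eligible, key=lambda v: (v.pool, v.active_pct))


# Hypothetical inventory and per-pool thresholds.
volumes = [
    StackedVolume("JA0001", 1, 29.0),
    StackedVolume("JA0002", 1, 30.0),
    StackedVolume("JA0003", 1, 0.0),
    StackedVolume("JB0001", 2, 12.0),
    StackedVolume("JB0002", 2, 8.0),
]
print([v.volser for v in reclaim_candidates(volumes, {1: 30.0, 2: 10.0})])
# ['JA0003', 'JA0001', 'JB0002']
```

JA0002 sits exactly at the 30% threshold and is therefore not selected, matching the 0% - 29% range in the example above.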

Individual pools can have separate reclaim policies set. The number of pools can also influence the reclamation process because the TS7740 Virtualization Engine always evaluates the stacked media starting with Pool 1.

The scratch count for physical cartridges also affects reclamation. The scratch state of pools is assessed in the following manner:

1. A pool enters a Low scratch state when it has access to fewer than 50 but two or more empty stacked volumes.

2. A pool enters a Panic scratch state when it has access to fewer than two empty stacked volumes.

“Access to” includes any borrowing capability, which means that if the pool is configured for borrowing, and if there are more than 50 cartridges in the common scratch pool, the pool will not enter the Low scratch state.

Whether borrowing is configured or not, as long as each pool has at least two scratch cartridges, the Panic Reclamation mode is not entered. Panic Reclamation mode is entered when a pool has fewer than two scratch cartridges and no more scratch cartridges can be borrowed from any other pool defined for borrowing. Borrowing is described in “Physical volume pooling” on page 41.
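The Low and Panic rules above, including the effect of borrowing, can be summarized in a few lines. This Python sketch is a simplified model, not the TS7740 logic: it treats a pool's accessible scratch count as its own empty volumes plus, when its Borrow Indicator allows borrowing, the cartridges in the common scratch pool (Pool 0). Adding the two counts together, the function name, and the indicator mapping table are assumptions; the Borrow Indicator values themselves are the ones listed under Pool Properties.

```python
from enum import Enum

LOW_SCRATCH_LIMIT = 50    # fewer than 50 accessible empty volumes: Low
PANIC_SCRATCH_LIMIT = 2   # fewer than 2 accessible empty volumes: Panic

# Borrow Indicator -> (may borrow from Pool 0, returns emptied cartridges to Pool 0)
BORROW_INDICATORS = {
    "Borrow, Return": (True, True),
    "Borrow, Keep": (True, False),
    "No Borrow, Return": (False, True),
    "No Borrow, Keep": (False, False),
}


class ScratchState(Enum):
    NORMAL = "Normal"
    LOW = "Low"
    PANIC = "Panic"


def scratch_state(own_empty: int, common_scratch: int,
                  borrow_indicator: str) -> ScratchState:
    """Classify a pool's scratch state under the assumptions stated above."""
    can_borrow, _returns_empties = BORROW_INDICATORS[borrow_indicator]
    # "Access to" includes what the pool could borrow from the common pool.
    accessible = own_empty + (common_scratch if can_borrow else 0)
    if accessible < PANIC_SCRATCH_LIMIT:
        return ScratchState.PANIC
    if accessible < LOW_SCRATCH_LIMIT:
        return ScratchState.LOW
    return ScratchState.NORMAL


print(scratch_state(1, 120, "Borrow, Return"))   # ScratchState.NORMAL
print(scratch_state(1, 120, "No Borrow, Keep"))  # ScratchState.PANIC
```

The two calls show why borrowing matters: the same pool with one empty volume is healthy when it can borrow from a well-stocked common scratch pool, and in Panic state when it cannot.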

|

Important: A physical volume pool running out of scratch cartridges might stop mounts in the TS7740, impacting your operation. Mistakes in pool configuration (media type, borrow and return, home pool, and so on) or operating with an empty common scratch pool might lead to this situation.

|

Remember that each reclaim task consumes two drives for the data move, as well as CPU cycles. When a reclamation starts, these drives are busy until the volume being reclaimed is empty. If you raise the reclamation threshold level too high, larger amounts of data must be moved, with a resulting penalty in the resources that are needed for recalls and premigration. The default setting for the reclamation threshold level is 10%. Generally, operate with a reclamation threshold level in the range of 10 - 30%. To fine-tune this function for your peak load and by using new host functions, see Chapter 9, “Operation” on page 413. Pools in either scratch state (Low or Panic) get priority for reclamation.

Table 5-2 summarizes the thresholds.

Table 5-2 Reclamation priority table

| Priority | Condition | Reclaim schedule honored | Active data threshold % honored | Number of concurrent reclaims | Comments |
|---|---|---|---|---|---|
| 1 | Pool in Panic scratch state | No | No | 1, regardless of idle drives | |
| 2 | Priority move | Yes or No | No | 1, regardless of idle drives | If a volume is within 10 days of a Secure Data Erasure (SDE) and still has active data on it, it is reclaimed at this priority. An SDE priority move honors the inhibit reclaim schedule. For a TS7740 MI-initiated priority move, the option to honor the inhibit reclaim schedule is given to the operator. |
| 3 | Pool in Low scratch state | Yes | Yes | 1, regardless of idle drives | Volumes that are subject to reclaim because of Maximum Active Data, Days Without Access, Age of Last Data Written, and Days Without Data Inactivation use priority 3 or 4 reclamation. |
| 4 | Normal reclaim | Yes | Yes, pick from all eligible pools | (Number of idle drives divided by 2) minus 1 (8 drives: 3 max; 16 drives: 7 max) | Volumes that are subject to reclaim because of Maximum Active Data, Days Without Access, Age of Last Data Written, and Days Without Data Inactivation use priority 3 or 4 reclamation. |

|

Tips:

•A physical drive is considered idle when no activity has occurred for the previous ten minutes.

•The Inhibit Reclaim schedule is not honored by the Secure Data Erase function for a volume that has no active data.

|
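The concurrency column of Table 5-2 and the idle-drive definition in the Tips can be expressed as two small functions. This is a hedged sketch: `count_idle_drives` and `max_concurrent_reclaims` are hypothetical names, and rounding an odd idle-drive count down before halving is an assumption.

```python
from datetime import datetime, timedelta

IDLE_AFTER = timedelta(minutes=10)  # from the Tips: no activity for ten minutes


def count_idle_drives(last_activity: list[datetime], now: datetime) -> int:
    """Count drives whose most recent activity is at least ten minutes old."""
    return sum(1 for t in last_activity if now - t >= IDLE_AFTER)


def max_concurrent_reclaims(priority: int, idle_drives: int) -> int:
    """Concurrent reclaim tasks allowed for a reclaim priority (Table 5-2)."""
    if priority in (1, 2, 3):
        return 1  # one reclaim, regardless of idle drives
    if priority == 4:
        # (idle drives / 2) - 1; odd counts rounded down (assumption), never negative
        return max(idle_drives // 2 - 1, 0)
    raise ValueError(f"unknown reclaim priority: {priority}")
```

With 8 idle drives, normal reclaim allows 3 concurrent tasks, and with 16 idle drives it allows 7, matching the maximums in the table.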

Inhibit Reclaim schedule

The Inhibit Reclaim schedule defines when the TS7700 Virtualization Engine must refrain from reclaim operations. During times of heavy mount activity, it might be desirable to make all of the physical drives available for recall and premigration operations. If these periods of heavy mount activity are predictable, you can use the Inhibit Reclaim schedule to inhibit reclaim operations for the heavy mount activity periods.

To define the Inhibit Reclaim schedule, click Management Interface → Settings → Cluster Settings, which opens the window shown in Figure 5-28.

Figure 5-28 Inhibit Reclaim schedules

The Schedules table (Figure 5-28) displays the day, time, and duration of any scheduled reclamation interruption. All inhibit reclaim dates and times are displayed first in Coordinated Universal Time (UTC) and then in local time. Use the drop-down menu on the Schedules table to add a new Reclaim Inhibit Schedule, or to modify or delete an existing schedule, as shown in Figure 5-29.

Figure 5-29 Add Inhibit Reclaim schedule
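Each Inhibit Reclaim schedule entry is described by a day, a start time, and a duration, so checking whether reclaim is currently inhibited is a small time-window test. The following sketch assumes that entries are stored in UTC with weekdays numbered Monday = 0, as in Python's `datetime.weekday()`. `InhibitWindow` and `reclaim_inhibited` are hypothetical names, not part of the MI.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta, timezone


@dataclass(frozen=True)
class InhibitWindow:
    weekday: int            # 0 = Monday ... 6 = Sunday (assumed encoding)
    start: time             # start time in UTC
    duration: timedelta     # how long reclaim stays inhibited


def reclaim_inhibited(now_utc: datetime, windows: list[InhibitWindow]) -> bool:
    """Return True if now_utc (timezone-aware, UTC) falls inside any window."""
    for w in windows:
        # Check the most recent start on this weekday and the one a week
        # earlier, so windows that run past midnight or span days are caught.
        for weeks_back in (0, 1):
            days_back = (now_utc.weekday() - w.weekday) % 7 + 7 * weeks_back
            start_date = (now_utc - timedelta(days=days_back)).date()
            start = datetime.combine(start_date, w.start, tzinfo=timezone.utc)
            if start <= now_utc < start + w.duration:
                return True
    return False
```

For example, a window that starts on Sunday at 22:00 UTC and lasts four hours still inhibits reclaim at 01:00 UTC on Monday, but not at 03:00 UTC.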

5.3.5 Defining scratch (Fast Ready) categories

In Release 3.0 of the TS7700 Virtualization Engine, all categories that are defined as scratch inherit the Fast Ready attribute. There is no longer a need to use the MI to set the Fast Ready attribute to scratch categories; however, the MI is still needed to indicate which categories are scratch.

The MOUNT FROM CATEGORY command is not exclusively used for scratch mounts. Therefore, the TS7700 Virtualization Engine cannot assume that any MOUNT FROM CATEGORY is for a scratch volume.

The Fast Ready attribute defines a category that supplies scratch mounts. For z/OS, which categories are used for scratch mounts depends on the host definitions. The TS7700 MI provides a way to define one or more scratch (Fast Ready) categories. Figure 5-30 shows the Categories window. You can add a scratch (Fast Ready) category by using the Add Scratch Category pull-down (Management Interface with Release 3.0).

When defining a scratch (Fast Ready) category, you can also set an expire time and, optionally, define that expire time as an Expire Hold time.

The actual category hexadecimal number depends on the software environment and on the definitions in the SYS1.PARMLIB member DEVSUPxx for library partitioning. Also, the DEVSUPxx member must be referenced in the IEASYSxx member to be activated.

Figure 5-30 Categories

Use the Categories page (Figure 5-30) to add, modify, or delete a scratch (Fast Ready) category of virtual volumes. You can also use this page to view the total number of volumes defined in custom, inserted, and damaged categories. The Categories table uses the following values and descriptions:

•Categories:

– Scratch

Categories within the user-defined private range 0x0001 through 0xEFFF that are defined as scratch (Fast Ready).

– Private

Custom categories established by a user, within the range of 0x0001 through 0xEFFF.

– Damaged

A system category identified by the number 0xFF20. Virtual volumes in this category are considered damaged.

– Insert

A system category identified by the number 0xFF00. Inserted virtual volumes are held in this category until moved by the host into a scratch category.

•Owning Cluster

Names of all clusters in the grid.

•Counts

The total number of virtual volumes according to category type, category, or owning cluster.

•Scratch Expired

The total number of scratch volumes per owning cluster that are expired. The total of all scratch expired volumes is the number of ready scratch volumes.

|

Number of virtual volumes: You cannot arrive at the total number of virtual volumes by adding all volumes shown in the Counts column because some rare, internal categories are not displayed on the Categories table. Additionally, movement of virtual volumes between scratch and private categories can occur multiple times per second and any snapshot of volumes on all clusters in a grid is obsolete by the time a total count completes.

|

You can use the Categories table to add, modify, or delete a scratch category, or to change the way that information is displayed.

Figure 5-31 shows the Add Category window, which opens by selecting Add Scratch Categories as shown in Figure 5-30 on page 230.

Figure 5-31 Scratch (Fast Ready) Categories: Add Category

The Add Category window shows these fields:

•Category

A four-digit hexadecimal number that identifies the category. The following characters are valid characters for this field:

A-F, 0-9

|

Note: Do not use category name 0000 or "FFxx", where xx equals 0 - 9 or A - F. 0000 represents a null value, and "FFxx" is reserved for hardware.

|
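The Category field rules above can be checked before a value is entered in the Add Category window. The following is a minimal sketch that applies only the rules stated here: exactly four characters from A-F and 0-9, no 0000, and no FFxx. The function name `validate_scratch_category` is hypothetical.

```python
import re

_HEX4 = re.compile(r"[0-9A-F]{4}")  # valid characters: A-F, 0-9


def validate_scratch_category(category: str) -> int:
    """Validate a category as entered in the Add Category window; return its value."""
    if not _HEX4.fullmatch(category):
        raise ValueError(f"{category!r}: must be four characters from A-F, 0-9")
    if category == "0000":
        raise ValueError("0000 represents a null value")
    if category.startswith("FF"):
        raise ValueError(f"{category}: FFxx categories are reserved for hardware")
    return int(category, 16)
```

For example, `validate_scratch_category("0002")` returns 2, while `"FF00"` (the Insert category) and `"0000"` are rejected.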

•Expire

The amount of time after a virtual volume is returned to the scratch (Fast Ready) category before its data content is automatically delete-expired.

A volume becomes a candidate for delete-expire once all the following conditions are met:

– The amount of time since the volume entered the scratch (Fast Ready) category is equal to or greater than the Expire Time.

– The amount of time since the volume’s record data was created or last modified is greater than 12 hours.

– At least 12 hours has passed since the volume was migrated out of or recalled back into disk cache.

|

Note: If you select No Expiration, volume data never automatically delete-expires.

|
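The three delete-expire conditions and the No Expiration choice combine as in the following sketch. It is a hypothetical model, not the TS7700 implementation: `expire_time=None` stands for No Expiration, and treating `last_cache_move=None` as "never migrated or recalled" is an assumption.

```python
from datetime import datetime, timedelta
from typing import Optional

TWELVE_HOURS = timedelta(hours=12)


def is_delete_expire_candidate(
    now: datetime,
    entered_scratch: datetime,
    last_modified: datetime,
    last_cache_move: Optional[datetime],
    expire_time: Optional[timedelta],
) -> bool:
    """Apply the delete-expire conditions listed above to one volume."""
    if expire_time is None:
        return False  # No Expiration: data never automatically delete-expires
    if now - entered_scratch < expire_time:
        return False  # still inside the Expire Time
    if now - last_modified <= TWELVE_HOURS:
        return False  # record data created or modified too recently
    if last_cache_move is not None and now - last_cache_move < TWELVE_HOURS:
        return False  # migrated out of or recalled into cache too recently
    return True
```

Note that the record-data condition uses "greater than 12 hours" while the cache condition uses "at least 12 hours", so the two comparisons differ at exactly 12 hours.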

•Set Expire Hold

Check this box to prevent the virtual volume from being mounted or having its category and attributes changed before the expire time has elapsed.

Checking this field activates the hold state for any volumes currently in the given scratch (Fast Ready) category and for which the expire time has not yet elapsed. Clearing this field removes the access restrictions on all volumes currently in the hold state within this scratch (Fast Ready) category.

|

Restriction: Trying to mount a non-expired volume that belongs to a scratch (Fast Ready) category with Expire Hold on will result in an error.

|

Beginning in Release 2.1, a category change to a held volume is allowed as long as the target category is not scratch (Fast Ready). With this option enabled, an expire-held volume can be moved to a private (non-Fast Ready) category, but it cannot be moved to another scratch (Fast Ready) category.
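The Expire Hold behavior described above can be expressed as two access checks. This is a hedged sketch of the Release 2.1 and later rules. `ScratchVolumeState`, `mount_allowed`, and `category_change_allowed` are hypothetical names, and the sketch covers only mounts and category changes, not other attribute changes.

```python
from dataclasses import dataclass


@dataclass
class ScratchVolumeState:
    expire_hold: bool     # Set Expire Hold is checked for the volume's category
    expire_elapsed: bool  # the Expire Time has passed since entering the category

    @property
    def held(self) -> bool:
        return self.expire_hold and not self.expire_elapsed


def mount_allowed(vol: ScratchVolumeState) -> bool:
    """Mounting a held, non-expired volume results in an error."""
    return not vol.held


def category_change_allowed(vol: ScratchVolumeState, target_is_scratch: bool) -> bool:
    """Release 2.1 and later: a held volume can move only to a private category."""
    return not (vol.held and target_is_scratch)
```

Once the expire time elapses, the hold no longer applies and both checks pass.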

|

Tip: Add a comment to DEVSUPxx to ensure that the scratch (Fast Ready) categories are updated whenever the category values in DEVSUPxx are changed. The two sets of definitions must be kept in sync at all times.

|

See Appendix H, “Library Manager volume categories” on page 953 for the scratch mount category for each software platform. In addition to the z/OS DFSMS default value for the scratch mount category, you can define your own scratch category to the TS7700 Virtualization Engine. In this case, you must also add your own scratch mount category to the Fast Ready category list.

5.3.6 Defining the logical volume expiration time

You define the expiration time from the MI window shown in Figure 5-31 on page 231. If the Delete Expired Volume Data setting is not used, logical volumes that have been returned to scratch are still considered active data and continue to occupy physical space on tape cartridges in the TS7740 Virtualization Engine. In that case, only rewriting a logical volume expires its old data, which allows the physical space occupied by that old data to be reclaimed later. With the Delete Expired Volume Data setting, the data that is associated with volumes that have been returned to scratch is expired after a specified time period, and its physical space on tape can be reclaimed.

For example, assume that you have 20,000 logical volumes in SCRATCH status at any point in time, that the average amount of data on a logical volume is 400 MB, and that the data compresses at a 2:1 ratio. The space occupied by the data on those scratch volumes is 4,000,000 MB (20,000 × 400 MB ÷ 2), or the equivalent of fourteen 3592-JA cartridges. By using the Delete Expired Volume Data setting, you can reduce the number of cartridges required in this example by 14.

The Expire Time parameter specifies, in hours, days, or weeks, how long the TS7700 Virtualization Engine continues to manage the data after a logical volume is returned to scratch, before the data associated with the logical volume is deleted. A minimum of 1 hour and a maximum of 32,767 hours (approximately 195 weeks) can be specified.
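The arithmetic in this example can be reproduced as follows. The only figure not stated in the text is the cartridge capacity. This sketch assumes 300 GB (300,000 MB) native for a 3592-JA cartridge written in 3592 J1A format, which is consistent with the fourteen cartridges quoted; other drive formats give different counts. The function names are hypothetical.

```python
import math

# Assumption: 3592-JA cartridge at 300 GB native (3592 J1A format).
JA_CAPACITY_MB = 300_000


def scratch_data_on_tape(volumes: int, avg_mb: float, compression_ratio: float) -> float:
    """MB of tape occupied by data on scratch volumes after compression."""
    return volumes * avg_mb / compression_ratio


def cartridges_equivalent(data_mb: float, cartridge_mb: float = JA_CAPACITY_MB) -> int:
    """Whole cartridges needed to hold data_mb."""
    return math.ceil(data_mb / cartridge_mb)


data_mb = scratch_data_on_tape(20_000, 400, 2)
print(data_mb, cartridges_equivalent(data_mb))  # 4000000.0 14
```

The result rounds up because 4,000,000 MB fills slightly more than 13 cartridges.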

|

Remember:

•Scratch (Fast Ready) categories are global settings within a multicluster grid. Therefore, each defined scratch (Fast Ready) category and the associated Delete Expire settings are valid on each cluster of the grid.

•The Delete Expired Volume Data setting also applies to TS7720 clusters. If it is not used, logical volumes that have been returned to scratch are still considered active data and occupy physical space in the Tape Volume Cache (TVC). Therefore, setting an expiration time on the TS7720 is important for effective cache usage, because expired data is then deleted.

|

In older levels, specifying a value of zero worked as the No Expiration option. Now, zero in this field causes the error message shown in Figure 5-32 on page 234. To have the data associated with the volume managed as it was before this option was added, meaning that it is never deleted, select No Expiration instead. In essence, specifying a non-zero value provides a “grace period” from when the logical volume is returned to scratch until its associated data is eligible for deletion. A separate Expire Time can be set for each category defined as Fast Ready.

Figure 5-32 Invalid expire time value

Expire Time

The Expire Time field (Figure 5-31 on page 231) specifies the number of hours, days, or weeks after which logical volume data categorized as scratch (Fast Ready) expires. An Expire Time of zero, which corresponds to the No Expiration option, means that the categorized data never expires. The minimum Expire Time is 1 hour and the maximum Expire Time is 195 weeks, 1,365 days, or 32,767 hours. The Expire Time default value is 24 hours.
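The three maximums quoted above are consistent with a single limit of 32,767 hours, because 195 weeks and 1,365 days both equal 32,760 hours. The following sketch validates an entered value on that basis. It is a hypothetical helper, not the MI's own validation, and the unit names are assumptions.

```python
HOURS_PER_UNIT = {"hours": 1, "days": 24, "weeks": 168}
MIN_EXPIRE_HOURS = 1
MAX_EXPIRE_HOURS = 32_767


def expire_time_hours(value: int, unit: str) -> int:
    """Convert an Expire Time entry to hours and check the 1 - 32,767 hour range."""
    if unit not in HOURS_PER_UNIT:
        raise ValueError(f"unknown unit: {unit!r}")
    hours = value * HOURS_PER_UNIT[unit]
    if hours < MIN_EXPIRE_HOURS:
        # zero is rejected; No Expiration is a separate choice
        raise ValueError("Expire Time must be at least 1 hour")
    if hours > MAX_EXPIRE_HOURS:
        raise ValueError(f"{value} {unit} exceeds {MAX_EXPIRE_HOURS} hours")
    return hours
```

For example, 195 weeks converts to 32,760 hours and is accepted, while 196 weeks (32,928 hours) or 1,366 days (32,784 hours) is rejected.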

The Expire Time of a volume is established as a result of specific events or actions. The following list shows the possible events or actions and their effect on the Expire Time of a volume:

•A volume is mounted.

The data that is associated with a logical volume will not be deleted, even if it is eligible, if the volume is mounted. Its Expire Time is set to zero, meaning it will not be deleted. It will be re-evaluated for deletion when its category is subsequently assigned.

•A volume’s category is changed.

Whenever a volume is assigned to a category, including assignment to the same category in which it currently exists, it is re-evaluated for deletion, with one of the following outcomes:

– Expiration

If the category has a non-zero Expire Time, the volume’s data is eligible for deletion after the specified time period, even if its previous category had a different non-zero Expire Time.

– No action

If the category to which the volume is assigned has an Expire Time of zero, the volume’s data is no longer eligible for deletion, even if its previous category had a non-zero Expire Time or the volume was already eligible for deletion but had not yet been selected to be deleted. Its Expire Time is set to zero.

•A category’s Expire Time is changed.

If a user changes the Expire Time value through the scratch (Fast Ready) categories menu on the TS7700 Virtualization Engine MI, the volumes assigned to the category are re-evaluated for deletion.

•Expire Time is changed from non-zero to zero.