Critical Mass

The microprocessor has brought electronics into a new era. It is altering the structure of our society.

–Robert Noyce and Marcian Hoff, Jr. “History of Microprocessor Development at Intel,” IEEE Micro, 1981

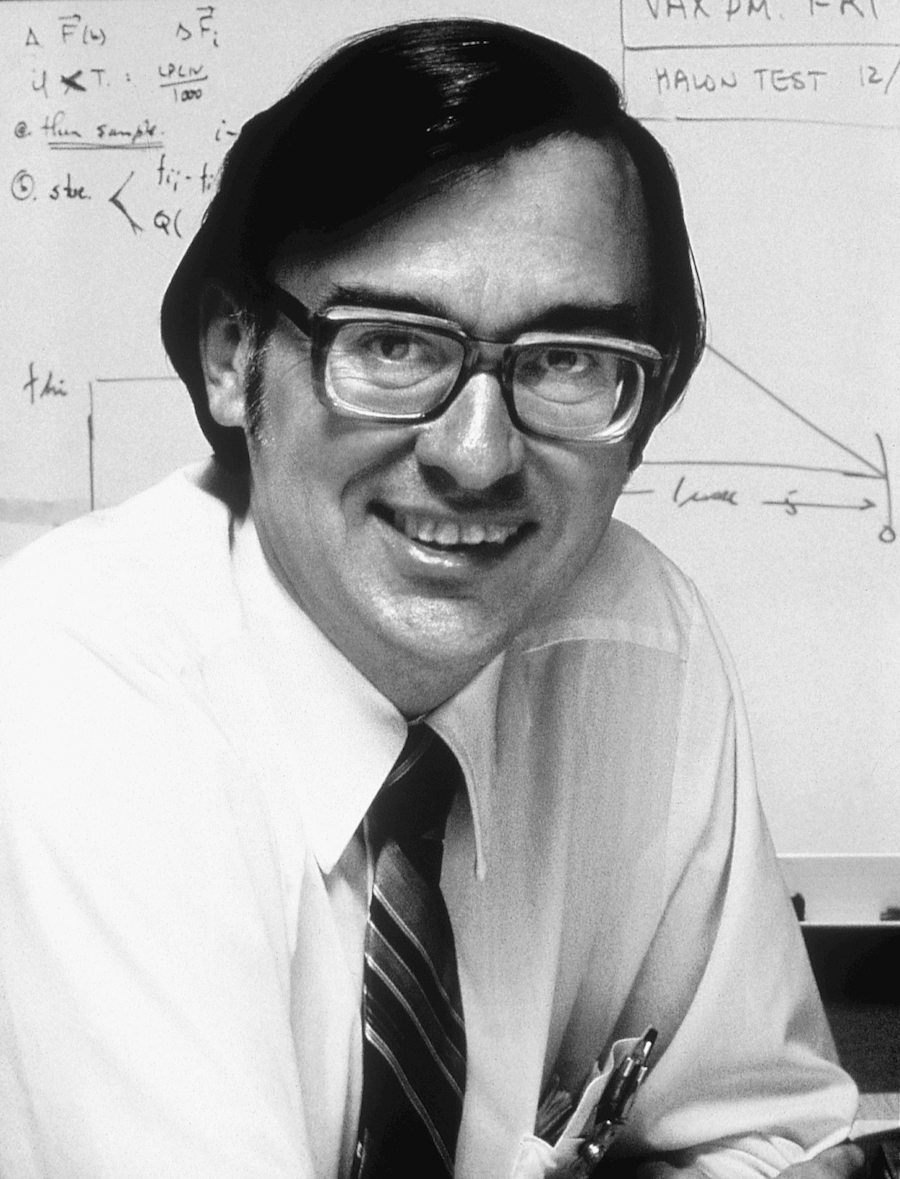

Figure 11. Ted Hoff. Marcian “Ted” Hoff led the design effort for Intel’s first microprocessor.

(Courtesy of Intel Corp.)

In early 1969, Intel Development Corporation, a Silicon Valley semiconductor manufacturer, received a commission from a Japanese calculator company called Busicom to produce chips for a line of its calculators. Intel had the credentials: it was a Fairchild spinoff, and its president, Robert Noyce, had helped invent the integrated circuit. Although Intel had opened its doors for business only a few months earlier, the company was growing as fast as the semiconductor industry.

An engineer named Marcian “Ted” Hoff had joined Intel a few months earlier as its twelfth employee, and when he began working on the Busicom job, the company already employed 200 people. Hoff was fresh from academia. After earning a PhD, he continued as a researcher at Stanford University’s Electrical Engineering Department, where his research on the design of semiconductor memory chips led to several patents and to the job at Intel. Noyce felt that Intel should produce semiconductor memory chips and nothing else, and he had hired Hoff to dream up applications for these memory chips.

But when Busicom proposed the idea for calculator chips, Noyce allowed that taking a custom job while the company was building up its memory business wouldn’t hurt.

The 4004

Hoff was sent to meet with the Japanese engineers who came to discuss what Busicom had envisioned. Because Hoff had a flight to Tahiti scheduled for that evening, the first meeting with the engineers was brief. Tahiti evidently gave him time for contemplation, because he returned from paradise with some firm ideas about the job.

In particular, he was annoyed that the Busicom calculator would cost almost as much as a minicomputer. Minicomputers had become relatively inexpensive, and research laboratories all over the country were buying them. It was not uncommon to find two or three minicomputers in a university’s psychology or physics department.

Hoff had worked with DEC’s new PDP-8 computer, one of the smallest and cheapest of the lot, and found that it had a very simple internal setup. Hoff knew that the PDP-8, a computer, could do everything the proposed Busicom calculator could do and more, for almost the same price. To Ted Hoff, this was an affront to common sense.

Hoff asked the Intel bosses why people should pay the price of a computer for something that had a fraction of the capacity. The question revealed his academic bias and his naiveté about marketing: he would rather have a computer than a calculator, so he figured surely everyone else would, too.

The marketing people patiently explained that it was a matter of packaging. If someone wanted to do only calculations, they didn’t want to have to fire up a computer to run a calculator program. Besides, most people, even scientists, were intimidated by computers. A calculator was just a calculator from the moment you turned it on. A computer was an instrument from the Twilight Zone.

Hoff followed the reasoning, but still bristled at the idea of building a special-purpose device when a general-purpose one was just as easy, and no more expensive, to build. Besides, he thought, a general-purpose design would make the project more interesting (to him). He proposed to the Japanese engineers a revised design loosely based on the PDP-8.

The design’s comparison to the PDP-8 computer was only partly applicable. Hoff was proposing a set of chips, not an entire computer. But one of those chips would be critically important in several ways. First, it would be dense. Chips at the time contained no more than 1,000 features—the equivalent of 1,000 transistors—but this chip would at least double that number. In addition, this chip would, like any IC, accept input signals and produce output signals. But whereas these signals would represent numbers in a simple arithmetic chip and logical values (true or false) in a logic chip, the signals entering and leaving Hoff’s chip would form a set of instructions for the IC.

In short, the chip could run programs. The customers were asking for a calculator chip, but Hoff was designing an IC EDVAC, a true general-purpose computing device on a sliver of silicon. A computer on a chip. Although Hoff’s design resembled a very simple computer, it left out some computer essentials, such as memory and peripherals for human input and output. The term that evolved to describe such a device was microprocessor, and microprocessors were general-purpose devices specifically because of their programmability.

Because the Intel microprocessor used the stored-program concept, the calculator manufacturers could make the microprocessor act like any kind of calculator they wanted. At any rate, that was what Hoff had in mind. He was sure it was possible, and just as sure that it was the right approach. But the Japanese engineers weren’t impressed. Frustrated, Hoff sought out Noyce, who encouraged him to proceed anyway, and when chip designer Stan Mazor left Fairchild to come to Intel, Hoff and Mazor set to work on the design for the chip.
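The stored-program idea is easier to see in a small sketch. The toy machine below is hypothetical: its opcodes (`LOAD`, `ADD`, `SUB`, `MUL`) and the `run` function are invented for illustration and are not the 4004's instruction set. It only shows how one fixed piece of "hardware" can behave like different calculators depending on the program it is given.

```python
# Hypothetical toy machine: the opcodes below are invented for illustration
# and are NOT the 4004's actual instruction set.

def run(program, inputs):
    """Execute a list of (opcode, operand) pairs on a tiny accumulator machine."""
    acc = 0               # single accumulator register
    data = list(inputs)   # numbers "keyed in" by the user
    for op, arg in program:
        if op == "LOAD":      # copy input number `arg` into the accumulator
            acc = data[arg]
        elif op == "ADD":     # add input number `arg` to the accumulator
            acc += data[arg]
        elif op == "SUB":     # subtract input number `arg` from the accumulator
            acc -= data[arg]
        elif op == "MUL":     # multiply the accumulator by input number `arg`
            acc *= data[arg]
        else:
            raise ValueError(f"unknown opcode: {op}")
    return acc

# Two different "calculators" running on the same machine model:
adding_machine = [("LOAD", 0), ("ADD", 1)]
area_calculator = [("LOAD", 0), ("MUL", 1)]

print(run(adding_machine, [6, 7]))   # 13
print(run(area_calculator, [6, 7]))  # 42
```

Nothing in `run` changes between the two calls; only the stored program does. That is the sense in which a programmable chip is general-purpose.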

At that point, Hoff and Mazor had not actually produced an IC. A specialist would still have to transform the design into a two-dimensional blueprint, and this pattern would have to be etched into a slice of silicon crystal. These later stages in the chip’s development cost money, so Intel did not intend to move beyond the logic-design stage without talking further with its skeptical customer.

In October 1969, Busicom representatives flew in from Japan to discuss the Intel project. The Japanese engineers presented their requirements, and in turn Hoff presented his and Mazor’s design. Despite the fact that the requirements and design did not quite match, after some discussion Busicom decided to accept the Intel design for the chip. The deal gave Busicom an exclusive contract for the chips. This was not the best deal for Intel, but at least they were going ahead on the project.

Hoff was relieved to have the go-ahead. They called the chip the 4004, which was the approximate number of transistors the single device replaced and an indication of its complexity. Hoff wasn’t the only person ever to have thought of building a computer on a chip, but he was the first to launch a project that actually got carried out. Along the way, he and Mazor solved a number of design problems and fleshed out the idea of the microprocessor more fully. But there was a big distance between planning and execution.

Leslie Vadasz, the head of a chip-design group at Intel, knew who he wanted to implement the design: Federico Faggin. Faggin was a talented chip designer who had worked with Vadasz at Fairchild and had earlier built a computer for Olivetti in Italy. The problem was, Faggin didn’t work at Intel. Worse, he couldn’t work at Intel, at least not right away: in the United States on a work visa, he was constrained in his ability to change jobs and still retain his visa. The earliest he would be available was the following spring. The clock ticked and the customer grew frustrated.

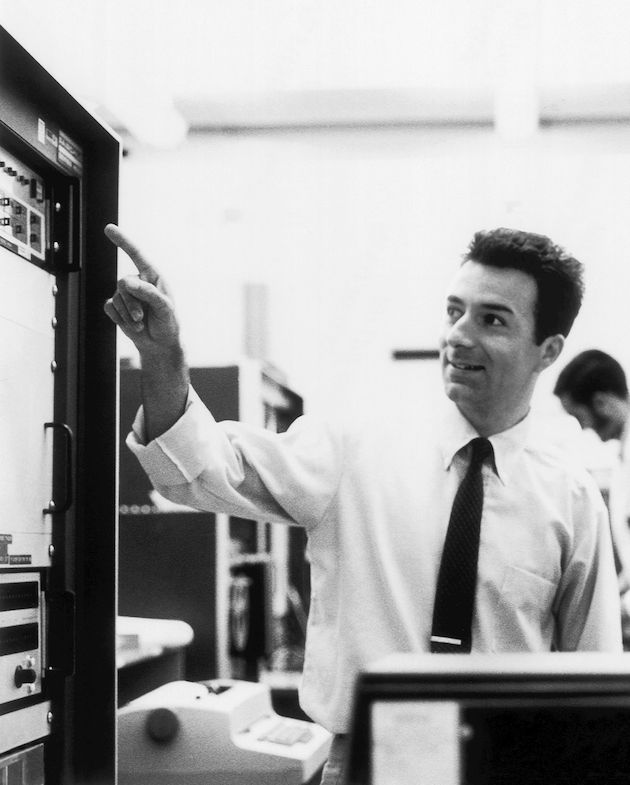

Figure 12. Federico Faggin. Faggin was one of the inventors of the microprocessor at Intel, and founded Zilog. (Courtesy of Federico Faggin)

When Faggin finally arrived at Intel in April 1970, he was immediately assigned to implement the 4004 design. Masatoshi Shima, an engineer for Busicom, was due to arrive to examine and approve the final design, and Faggin would set to work turning it into silicon.

Unfortunately, the design was far from complete. Hoff and Mazor had completed the instruction set for the device and an overall design, but the necessary detailed design was nonexistent. Shima understood immediately that the “design” was little more than a collection of ideas. “This is just idea!” he shouted at Faggin. “This is nothing! I came here to check, but there is nothing to check!”

Faggin confessed that he had only arrived recently, and that he was going to have to complete the design before starting the implementation. With help from Mazor and Shima, who extended his stay to six months, he did the job in a remarkably short time, working 12-to-16-hour days. Since he was doing something no one had ever done before, he found himself having to invent techniques to get the job done. In February 1971, Faggin delivered working kits to Busicom, including the 4004 microprocessor and eight other chips necessary to make the calculator work. It was a success.

It was also a breakthrough, but its value was more in what it signified than in what it actually delivered. On one hand, this new thing, the microprocessor, was nothing more than an extension of the IC chips for arithmetic and logic that semiconductor manufacturers had been making for years. The microprocessor merely crammed more functional capability onto one chip. Then again, there were so many functions that the microprocessor could perform, and they were integrated with each other so closely that using the device required learning a new language, albeit a simple one. The instruction set of the 4004, for all intents and purposes, constituted a programming language.

Today’s microprocessors are more complex and powerful than the roomful of circuitry that constituted a computer in 1950. The 4004 chip that Hoff conceived in 1969 was a crude first step toward something that Hoff, Noyce, and Intel management could scarcely anticipate. The 8008 chip that Intel produced two years later was the second crucial step.

The 8008

The 8008 microprocessor was developed for a company then called CTC—Computer Terminal Corporation—and later called Datapoint. CTC had a technically sophisticated computer terminal and wanted some chips designed to give it additional functions.

Once again, Hoff presented a grander vision of how an existing product could be used. He proposed a single-chip implementation of the terminal’s control circuitry, replacing all of its internal electronics with a single integrated circuit. Hoff and Faggin were interested in the 8008 project partly because the exclusive deal for the 4004 kept that chip tied up. Faggin, who was doing lab work with electronic test equipment, saw the 4004 as an ideal tool for controlling test equipment, but the Busicom deal prevented that.

Because Busicom had exclusive rights to the 4004, Hoff felt that perhaps this new 8008 terminal chip could be marketed and used with testers. The 4004 had drawbacks. It operated on only four binary digits at a time. This significantly limited its computing power because it couldn’t even handle a piece of data the size of a single character in one operation. The new 8008 could. Although another engineer was initially assigned to it, Faggin was soon put in charge of making the 8008 a reality, and by March 1972 Intel was producing working 8008 chips.
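The four-bit limitation can be illustrated with a little arithmetic. The sketch below assumes a character stored as an 8-bit code (the helper names `split_nibbles`, `add4`, and `add8_on_4bit` are invented for this example). It models how a four-bit device must handle such a value in two halves, passing a carry between them, where an eight-bit device needs only one step.

```python
def split_nibbles(byte):
    """Split an 8-bit value into its (high, low) 4-bit halves."""
    return (byte >> 4) & 0xF, byte & 0xF

def add4(a, b, carry_in=0):
    """Model one 4-bit addition: returns (4-bit sum, carry out)."""
    total = a + b + carry_in
    return total & 0xF, total >> 4

def add8_on_4bit(x, y):
    """Add two 8-bit values using two 4-bit additions, low half first."""
    x_hi, x_lo = split_nibbles(x)
    y_hi, y_lo = split_nibbles(y)
    lo, carry = add4(x_lo, y_lo)          # first operation: low halves
    hi, carry = add4(x_hi, y_hi, carry)   # second operation: high halves plus carry
    return (hi << 4) | lo, carry

print(split_nibbles(ord("A")))   # (4, 1): the character code 0x41 in two pieces
print(add8_on_4bit(0x2F, 0x13))  # (66, 0): 0x2F + 0x13 = 0x42, no carry out
```

Every character-sized operation costs the four-bit design at least two steps plus the bookkeeping for the carry, which is the computing-power penalty described above.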

Before this happened, though, CTC executives lost interest in the project. Intel now found it had invested a great deal of time and effort in two highly complex and expensive products, the 4004 and 8008, with no mass market for either of them. As competition intensified in the calculator business, Busicom asked Intel to drop the price on the 4004 in order to keep its contract. “For God’s sake,” Hoff urged Noyce, “get us the right to sell these chips to other people.” Noyce did. But possession of that right, it turned out, was no guarantee that Intel would ever exercise it.

Intel’s marketing department was cool to the idea of releasing the chips to the general engineering public. Intel had been formed to produce memory chips, which were easy to use and were sold in volume—like razor blades. Microprocessors, because the customer had to learn how to use them, presented enormous customer-support problems for the young company. Memory chips didn’t.

Hoff countered with ideas for new microprocessor applications that no one had thought of yet. An elevator controller could be built around a chip. Besides, the processor would save money: it could replace a number of simpler chips, as Hoff had shown in his design for the 8008. Engineers would make the effort to design the microprocessor into their products. Hoff knew he himself would.

Hoff’s persistence finally paid off when Intel hired advertising man Regis McKenna to promote the product in a fall 1971 issue of Electronic News. “Announcing a new era in integrated electronics: a microprogrammable computer on a chip,” the ad read. A computer on a chip? Technically the claim was puffery, but when visitors to an electronics show that fall read the product specifications for the 4004, they were duly impressed by the chip’s programmability. And in one sense McKenna’s ad was correct: the 4004 (and even more the 8008) incorporated the essential decision-making power of a computer.

Programming the Chips

Meanwhile, Texas Instruments (TI) had picked up the CTC contract and delivered a microprocessor. TI was pursuing the microprocessor market as aggressively as Intel; Gary Boone of TI had, in fact, just filed a patent application for something called a single-chip computer. Three different microprocessors now existed. But Intel’s marketing department had been right about the amount of customer support the microprocessors demanded. For instance, users needed documentation on the operations the chips performed, the language they recognized, the voltage they used, the amount of heat they dissipated, and a host of other things. Someone had to write these information manuals. At Intel the job was given to an engineer named Adam Osborne, who would later play a very different part in making computers personal.

The microprocessor software formed another kind of essential customer support. A disadvantage with a general-purpose computer or processor is that it does nothing without programs. The chips, as general-purpose processors, needed programs, the instructions that would tell them what to do. To create these programs, Intel first assembled an entire computer around each of its two microprocessor chips. These computers were not commercial products but instead were development systems—tools to help write programs for the processor. They were also, although no one used this term at the time, microcomputers.

One of the first people to begin developing these programs was a professor at the Naval Postgraduate School located down the coast from Silicon Valley, in Monterey, California. Like Osborne, Gary Kildall would be an important figure in the development of the personal computer.

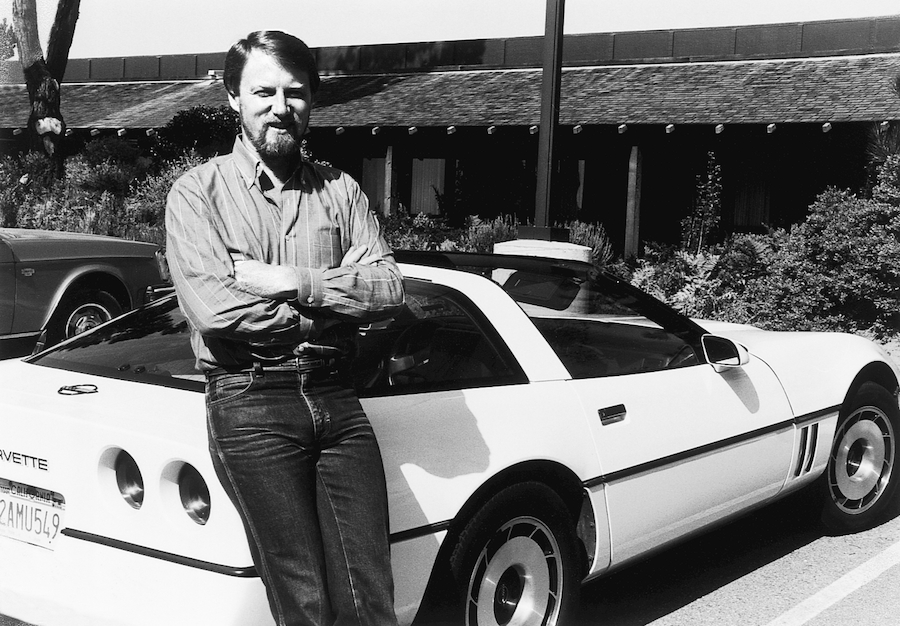

Figure 13. Gary Kildall. Kildall wrote the first programming language for Intel’s 4004 microprocessor, as well as a control program that he would later turn into the personal-computer industry’s most popular operating system. (Courtesy of Tom G. O’Neal)

In late 1972, Kildall had already written a simple language for the 4004: a program that translated cryptic commands into the even more cryptic ones and zeroes that formed the internal instruction set of the microprocessor. Although written for the 4004, the program actually ran on a large IBM 360 computer. With this program, one could type commands on an IBM keyboard and generate a file of 4004 instructions that could then be sent to a 4004 if a 4004 were somehow connected to the IBM machine.

Connecting the 4004 to anything at all was hardly a trivial task. The microprocessor had to be plugged into a specially designed circuit board that was equipped with connections to other chips and to devices such as a Teletype machine. The Intel development systems had been created for just this type of problem solving. Naturally, Kildall was drawn to the microcomputer lab at Intel, where the development systems were housed.

Eventually, Kildall contracted with Intel to implement a language for the chip manufacturer. PL/M (Programming Language for Microcomputers) would be a so-called high-level language, in contrast to the low-level machine language that was made up of the instruction set of the microprocessor. With PL/M, one could write a program once and have it run on a 4004 processor, an 8008, or on future processors Intel might produce. This would speed up the programming process.

But writing the language was no simple task. To understand why, you have to think about how computer languages operate.

A computer language is a set of commands a computer can recognize. The computer responds only to the fixed set of commands incorporated into its circuitry or etched into its chips. Implementing a language requires creating a program that will translate the sorts of commands a user can understand into commands the machine can use.

The microprocessors not only were physically tiny, but also had a limited logic to work with. They got by with a minimum amount of smarts, and therefore were beastly hard to program. It was difficult to design any language for them, let alone a high-level language like PL/M. A friend and coworker of Kildall’s later explained the choice, saying that Gary Kildall wrote PL/M largely because it was a difficult task. Like many important programmers and designers before him and since, Kildall was in it primarily for the intellectual challenge.

But the most significant piece of software Kildall developed at that time was much simpler in its design.

CP/M

Intel’s early microcomputers used paper tape to store information. Therefore, programs had to enable a computer to control the paper-tape reader or paper punch automatically, accept the data electronically as the information streamed in from the tape, store and locate the data in memory, and feed the data out to the paper-tape punch. The computer also had to be able to manipulate data in memory and keep track of which spots were available for data storage and which were in use at any given moment. A lot of bookkeeping. Programmers don’t want to have to think about such picayune details every time they write a program. Large computers automatically take care of these tasks through the use of a program called an operating system. For programmers writing in a mainframe language, the operating system is a given; it’s a part of the way the machine works and an integral feature of the computing environment.

But Kildall was working with a primordial setup. No operating system. Like a carpenter building his own scaffolding, Kildall wrote the elements of an operating system for the Intel machines. This rudimentary operating system had to be very efficient and compact in order to operate on a microprocessor, and it happened that Kildall had the skills and the motivation to make it so. Eventually, that microprocessor operating system evolved into something Kildall called CP/M (Control Program for Microcomputers). When Kildall asked the Intel executives if they had any objections to his marketing CP/M on his own, they simply shrugged and said to go ahead. They had no plans to sell it themselves.

CP/M made Kildall a fortune and helped to launch an industry.

Intel was in uncharted waters. By building microprocessors, the company had already ventured beyond its charter of building memory chips. Although the company was not about to retreat from that enterprise, there was solid resistance to moving even farther afield. It was true that there’d been talk about designing machines around microprocessors, and even about using a microprocessor as the main component in a small computer. But microprocessor-controlled computers seemed to have marginal sales potential at best.

Wristwatches.

That was where microprocessors would find their chief market, Noyce thought. The Intel executives discussed other possible applications. Microprocessor-controlled ovens. Stereos. Automobiles. But it would be up to the customers to build the ovens, stereos, and cars; Intel would only sell the chips. There was a virtual mandate at Intel against making products that could be seen as competing against its own customers.

It made perfect sense. Intel was an exciting place to work in 1972. To Intel’s executives it felt like Intel was at the center of all things innovative, and that the microprocessor industry was going to change the world. It seemed obvious to Kildall, to Mike Markkula (the marketing manager for memory chips), and to others that the innovative designers of microprocessors should be working at the semiconductor companies. They decided to stick to putting logic on slivers of silicon and to leave the building (and programming) of computers and such devices to the mainframe and minicomputer companies.

But when the minicomputer companies didn’t take up the challenge, Markkula, Kildall, and Osborne each thought better of their decision to stick to the chip business. Within the following decade, each of them would create a multimillion-dollar personal computer or personal-computer-software company of his own.