Steam

I wish to God these calculations had been executed by steam.

–Charles Babbage, 19th-century inventor

Intrigued by the changes being wrought by science, the poets Lord Byron and Percy Bysshe Shelley idled away one rainy summer day in Switzerland discussing artificial life and artificial thought, wondering whether “the component parts of a creature might be manufactured, brought together, and endued with vital warmth.” On hand to take mental notes of their conversation was Mary Wollstonecraft Shelley, Percy’s wife and author of the novel Frankenstein. She expanded on the theme of artificial life in her famous novel.

Mary Shelley’s monster presented a genuinely disturbing allegory to readers of the Steam Age. The early part of the 19th century introduced the age of mechanization, and the main symbol of mechanical power was the steam engine. It was then that the steam engine was first mounted on wheels, and by 1825 the first public railway was in operation. Steam power held the same sort of mystique that electricity and atomic power would have in later generations.

The Steampunk Computer

In 1833, when British mathematician, astronomer, and inventor Charles Babbage spoke of executing calculations by steam and then actually designed machines that he claimed could mechanize calculation, even mechanize thought, many saw him as a real-life Dr. Frankenstein. Although he never implemented his designs, Babbage was no idle dreamer; he worked on what he called his Analytical Engine, drawing on the most advanced thinking in logic and mathematics, until his death in 1871. Babbage intended that the machine would free people from repetitive and boring mental tasks, just as the new machines of that era were freeing people from physical drudgery.

Figure 1. Charles Babbage The 19th-century mathematician and inventor designed a machine to “mechanize thought” 100 years before the first computers were successfully built. (Courtesy of The Computer Museum History Center, San Jose)

Babbage’s colleague, patroness, and scientific chronicler was Augusta Ada Byron, daughter of Lord Byron, pupil of algebraist Augustus De Morgan, and the future Lady Lovelace. A writer herself and an amateur mathematician, Ada was able, through her articles and papers, to explain Babbage’s ideas to the more educated members of the public and to potential patrons among the British nobility. She also wrote sets of instructions that told Babbage’s Analytical Engine how to solve advanced mathematical problems. Because of this work, many regard Ada as the first computer programmer. The US Department of Defense recognized her role in anticipating the discipline of computer programming by naming its Ada programming language after her in the early 1980s.

No doubt thinking of the public’s fear of technology that Mary Shelley had tapped into with Frankenstein, Ada figured she’d better reassure her readers that Babbage’s Analytical Engine did not actually think for itself. She assured them that the machine could only do what people instructed it to do. Nevertheless, the Analytical Engine was very close to being a true computer in the modern sense of the word, and “what people instructed it to do” really amounted to what we today call computer programming.

The Analytical Engine that Babbage designed would have been a huge, loud, outrageously expensive, beautiful, gleaming, steel-and-brass monster. Numbers were to be stored in registers composed of toothed wheels, and the adding and carrying over of numbers was to be done through cams and ratchets. It was supposed to be capable of storing up to 1,000 numbers with a limit of 50 digits each. This internal storage capacity would be described today in terms of the machine’s memory size. By modern standards, the Analytical Engine would have been absurdly slow, capable of less than one addition operation per second, but it actually had more memory than the first useful computers of the 1940s and 1950s and the early microcomputers of the 1970s.

Figure 2. Ada Byron, Lady Lovelace Ada Byron (1815–1852) promoted and programmed Babbage’s Analytical Engine, predicting that machines like it would one day do remarkable things such as compose music. (Courtesy of John Murray Publishers Ltd.)

Although he came up with three separate, highly detailed plans for his Analytical Engine, Babbage never constructed that machine, nor his simpler but also enormously ambitious Difference Engine. For more than a century, it was thought that the machining technology of his time was simply inadequate to produce the thousands of precision parts that the machines required. Then in 1991, Doron Swade, senior curator of computing for the Science Museum of London, succeeded in constructing Babbage’s Difference Engine using only the technology, techniques, and materials available to Babbage in his time. Swade’s achievement revealed the great irony of Babbage’s life. A century before anyone would attempt the task again, he had succeeded in designing a computer. His machines would, in fact, have worked, and could have been built. The reasons for Babbage’s failure to carry out his dream all have to do with his inability to get sufficient funding, due largely to his propensity for alienating those in a position to provide it.

If Babbage had been less confrontational or if Lord Byron’s daughter had been a wealthier woman, there might have been an enormous steam-engine computer belching clouds of logic over Dickens’s London, balancing the books of some real-life Scrooge, or playing chess with Charles Darwin or another of Babbage’s celebrated intellectual friends. But, as Mary Shelley had predicted, electricity turned out to be the force that would bring the thinking machine to life.

The computer would marry electricity with logic.

Calculating Machines

Across the Atlantic, the American logician Charles Sanders Peirce was lecturing on the work of George Boole, the fellow who gave his name to Boolean algebra. In doing so, Peirce brought symbolic logic to the United States and radically redefined and expanded Boole’s algebra in the process. Boole had brought logic and mathematics together in a particularly cogent way, and Peirce probably knew more about Boolean algebra than anyone else in the mid-19th century.

But Peirce saw more. He saw a connection between logic and electricity.

By the 1880s, Peirce figured out that Boolean algebra could be used as the model for electrical switching circuits. The true/false distinction of Boolean logic mapped exactly to the way current flowed through the on/off switches of complex electrical circuits. Logic, in other words, could be represented by electrical circuitry. Therefore, electrical calculating machines and logic machines could, in principle, be built. They were not merely the fantasy of a novelist. They could—and would—happen.

One of Peirce’s students, Allan Marquand, actually designed (but did not build) an electric machine to perform simple logic operations in 1885. The switching circuit that Peirce explained how to use to implement Boolean algebra in electric circuitry is one of the fundamental elements of a computer. The unique feature of such a device is that it manipulates information, as opposed to electrical currents or locomotives.
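The correspondence between logic and switches can be made concrete with a short sketch. It uses the textbook reading of a switching circuit, in which switches wired in series behave like AND and switches wired in parallel behave like OR; this is an illustration of the idea, not a reconstruction of Peirce’s or Marquand’s own diagrams, and the function names are invented for the example.

```python
from itertools import product


def series(a: bool, b: bool) -> bool:
    """Two switches in series: current flows only if both are closed (AND)."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two switches in parallel: current flows if either is closed (OR)."""
    return a or b


def normally_closed(a: bool) -> bool:
    """A switch that conducts until it is pressed (NOT)."""
    return not a


def lamp_lit(a: bool, b: bool, c: bool) -> bool:
    """A hypothetical circuit computing (a AND b) OR (NOT c)."""
    return parallel(series(a, b), normally_closed(c))


# Enumerate every switch setting, as one would test a physical circuit.
truth_table = [(a, b, c, lamp_lit(a, b, c))
               for a, b, c in product((False, True), repeat=3)]

for row in truth_table:
    print(row)
```

Each row of the printed table is one setting of the three switches followed by whether the lamp lights, which is exactly the truth table of the logical expression.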

The substitution of electrical circuitry for mechanical switches made possible smaller computing devices. In fact, the first electric logic machine ever made was a portable device built by Benjamin Burack, which he designed to be carried in a briefcase. Burack’s logic machine, built in 1936, could process statements made in the form of syllogisms. For example, given “All men are mortal; Socrates is a man,” it would then accept “Socrates is mortal” and reject “Socrates is a woman.” Such erroneous deductions closed circuits and lit up the machine’s warning lights, indicating the logical error committed.

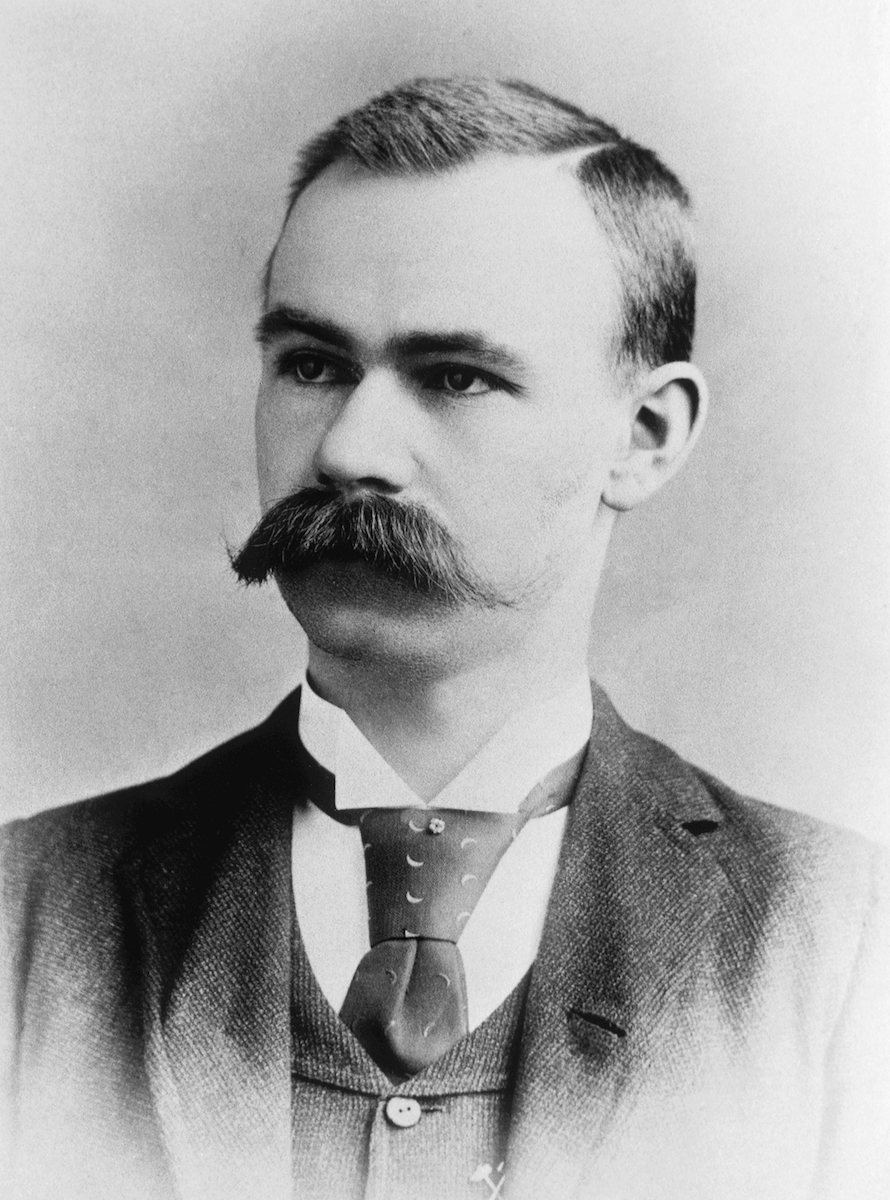

Burack’s device was a special-purpose machine with limited capabilities. Nevertheless, most of the special-purpose computing devices built around that time dealt with only numbers, and not logic. Back when Peirce was working out the connection between logic and electricity, Herman Hollerith was designing a tabulating machine to compute the US census of 1890.

Figure 3. Herman Hollerith Hollerith invented the first large-scale data-processing device, which was successfully used to compute the 1890 census. His work created the data-processing industry. (Courtesy of IBM Archives)

Figure 4. Hollerith Census Counting Machine Hollerith’s Census Counting Machine cut the time for computing the 1890 census by an order of magnitude. (Courtesy of IBM Archives)

Hollerith’s company was eventually absorbed by an enterprise that came to be called the International Business Machines Corporation. By the late 1920s, IBM was making money selling special-purpose calculating machines to businesses, enabling those businesses to automate routine numerical tasks. But the IBM machines weren’t computers, nor were they logic machines like Burack’s. They were just big, glorified calculators.

The Birth of the Computer

Spurred by Claude Shannon’s master’s thesis at MIT, which explained how electrical switching circuits could be used to model Boolean logic (as Peirce had foreshadowed 50 years earlier), IBM executives agreed in the 1930s to finance a large computing machine based on electromechanical relays. Although they later regretted it, IBM executives gave Howard Aiken, a Harvard professor, the then-huge sum of $500,000 to develop the Mark I, a calculating device largely inspired by Babbage’s Analytical Engine. Babbage, though, had designed a purely mechanical machine. The Mark I, by comparison, was an electromechanical machine with electrical relays serving as the switching units and banks of relays serving as space for number storage. Calculation was a noisy affair; the electrical relays clacked open and shut incessantly. When the Mark I was completed in 1944, it was widely hailed as the electronic brain of science-fiction fame made real. But IBM executives were less than pleased when, as they saw it, Aiken failed to acknowledge IBM’s contribution at the unveiling of the Mark I. And IBM had other reasons to regret its investment. Even before work began on the Mark I device, technological developments elsewhere had made it obsolete.

Figure 5. Thomas J. Watson, Sr. Watson went to work for Hollerith’s pioneering data-processing firm in 1914 and later turned it into IBM. (Courtesy of IBM Archives)

Electricity was making way for the emergence of electronics. Just as others had earlier replaced Babbage’s steam-driven wheels and cogs with electrical relays, John Atanasoff, a professor of mathematics and physics at Iowa State College, saw how electronics could replace the relays. Shortly before the American entry into World War II, Atanasoff, with the help of Clifford Berry, designed the ABC, the Atanasoff-Berry Computer, a device whose switching units were to be vacuum tubes rather than relays.

This substitution was a major technological advance. Vacuum-tube machines could, in principle, do calculations considerably faster and more efficiently than relay machines. The ABC, like Babbage’s Analytical Engine, was never completed, probably because Atanasoff got less than $7,000 in grant money to build it. Atanasoff and Berry did assemble a simple prototype, a mass of wires and tubes that resembled a primitive desk calculator. But by using tubes as switching elements, Atanasoff greatly advanced the development of the computer. The added efficiency of vacuum tubes over relay switches would make the computer a reality.

Figure 6. Vacuum tubes In the 1950s computers were filled with vacuum tubes, such as these from the IBM 701. (Courtesy of IBM Archives)

The vacuum tube is a glass tube with the air removed. Thomas Edison discovered that electricity travels through the vacuum under certain conditions, and Lee de Forest turned vacuum tubes into electrical switches using this “Edison effect.” In the 1950s, vacuum tubes were used extensively in electronic devices from televisions to computers. Today you can still see the occasional tube-based computer display or television.

By the 1930s, the advent of computing machines was apparent. It also seemed that computers were destined to be huge and expensive special-purpose devices. It took decades before they became much smaller and cheaper, but they were already on their way to becoming more than special-purpose machines.

It was British mathematician Alan Turing who envisioned a machine designed for no other purpose than to read coded instructions for any describable task and to follow the instructions to complete the task. This was truly something new under the sun. Because it could perform any task described in the instructions, such a machine would be a true general-purpose device. Perhaps no one before Turing had ever entertained an idea this large. But within a decade, Turing’s visionary idea became reality. The instructions became programs, and his concept, in the hands of another mathematician, John von Neumann, became the general-purpose computer.

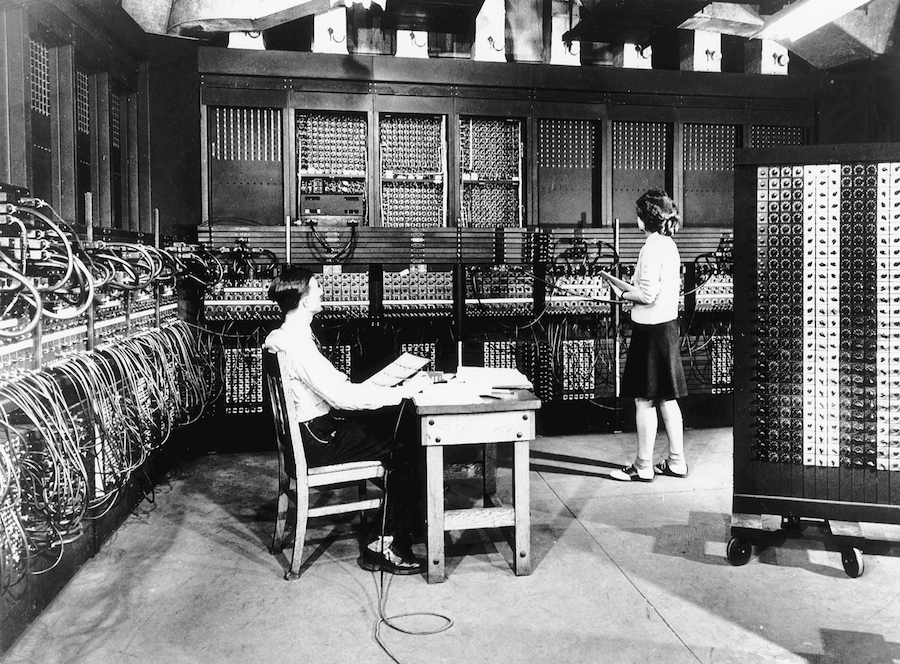

Most of the work that brought the computer into existence happened in secret laboratories during World War II. That’s where Turing was working. In the US in 1943, at the Moore School of Electrical Engineering in Philadelphia, John Mauchly and J. Presper Eckert proposed the idea for a computer. Shortly thereafter they were working with the US military on ENIAC (Electronic Numerical Integrator and Computer), which would be the first all-electronic digital computer. With the exception of the peripheral machinery it needed for information input and output, ENIAC was purely a vacuum-tube machine.

Figure 7. John Mauchly Mauchly, cocreator of ENIAC, is seen here speaking to early personal-computer enthusiasts at the 1976 Atlantic City Computer Festival. (Courtesy of David H. Ahl)

Figure 8. ENIAC The first all-electronic digital computer was completed in December 1945. (Courtesy of IBM Archives)

Credit for inventing the electronic digital computer is disputed, and perhaps ENIAC was based in part on ideas Mauchly hatched during a visit to John Atanasoff. But ENIAC was real. Mauchly and Eckert attracted a number of bright mathematicians to the ENIAC project, including the brilliant John von Neumann. Von Neumann became involved with the project and made various, and variously reported, contributions to building ENIAC, and in addition offered an outline for a more sophisticated machine called EDVAC (Electronic Discrete Variable Automatic Computer).

Because of von Neumann, the emphasis at the Moore School swung from technology to logic. He saw EDVAC as more than a calculating device. He felt that it should be able to perform logical as well as arithmetic operations and be able to operate on coded symbols. Its instructions for operating on—and interpreting—the symbols should themselves be symbols coded into the machine and operated on. This was the last fundamental insight in the conception of the modern computer. By specifying that EDVAC should be programmable by instructions that were themselves fed to the machine as data, von Neumann created the model for the stored-program computer.
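The stored-program idea can be sketched in a few lines. The toy machine below keeps its instructions and its numbers in one and the same memory, so the program is simply more data fed to the machine. The four-instruction vocabulary (LOAD, ADD, STORE, HALT), the single accumulator, and the memory layout are all invented for this illustration and are not EDVAC’s actual design.

```python
def run(memory):
    """Execute a program held in the same list as the data it works on."""
    acc = 0  # single accumulator register
    pc = 0   # program counter: index of the next instruction to fetch
    while True:
        op, arg = memory[pc]  # fetch an instruction from memory
        pc += 1
        if op == "LOAD":
            acc = memory[arg]       # copy a memory cell into the accumulator
        elif op == "ADD":
            acc += memory[arg]      # add a memory cell to the accumulator
        elif op == "STORE":
            memory[arg] = acc       # write the accumulator back to memory
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction: {op!r}")


# Cells 0-3 hold the program; cells 4-6 hold the data it operates on.
memory = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2,  # cell 4: first operand
    3,  # cell 5: second operand
    0,  # cell 6: the result is written here
]

result = run(list(memory))
print(result[6])  # 5
```

Because the instructions sit in ordinary memory cells, changing what the machine does means loading different values, not rewiring it.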

After World War II, von Neumann proposed a method for turning ENIAC into a programmable computer like EDVAC, and Adele Goldstine wrote the 55-operation language that made the machine easier to operate. After that, no one ever again used ENIAC in its original mode of operation.

When development on ENIAC was finished in early 1946, it ran 1,000 times faster than its electromechanical counterparts. But electronic or not, it still made noise. ENIAC was a room full of clanking Teletype machines and whirring tape drives, in addition to the walls of relatively silent electronic circuitry. It had 20,000 switching units, weighed 30 tons, and drew 150,000 watts of power. Despite all that electrical power, at any given time ENIAC could handle only 20 numbers of 10 decimal digits each. But even before construction was completed on ENIAC, it was put to significant use. In 1945, it performed calculations used in the atomic-bomb testing at Los Alamos, New Mexico.

Figure 9. John von Neumann Von Neumann was a brilliant polymath who made foundational contributions to programming and the ENIAC and EDVAC computers. (Courtesy of The Computer Museum History Center, San Jose)

A new industry emerged after World War II when the secret labs began to disclose their discoveries and creations. Building computers immediately became a business, and by the very nature of the equipment, it became a big business. With the help of engineers John Mauchly and J. Presper Eckert, who were fresh from their ENIAC triumph, the Remington Typewriter Company became Sperry Univac. For a few years, the name Univac was synonymous with computers, just as the name Kleenex came to be synonymous with facial tissues. Sperry Univac had some formidable competition. IBM executives recovered from the disappointment of the Mark I and began building general-purpose computers. The two companies developed distinctive operating styles: IBM was the land of blue pinstripe suits, whereas the halls of Sperry Univac were filled with young academics in sneakers. Whether because of its image or its business savvy, before long IBM took the industry-leader position away from Sperry Univac.

Soon most computers were IBM machines, and the company’s share of the market grew with the market itself. Other companies emerged, typically under the guidance of engineers who had been trained at IBM or Sperry Univac. Control Data Corporation (CDC) in Minneapolis spun off from Sperry Univac, and soon computers were made by Honeywell, Burroughs, General Electric, RCA, and NCR. Within a decade, eight companies came to dominate the growing computer market, but with IBM so far ahead of the others in revenues, they were often referred to as Snow White (IBM) and the Seven Dwarfs.

But IBM and the other seven were about to be taught a lesson by some brash upstarts. A new kind of computer emerged in the 1960s: smaller, cheaper, and referred to, in imitation of the then-popular miniskirt, as the minicomputer. Among the most significant companies producing smaller computers were Digital Equipment Corporation (DEC) in the Boston area and Hewlett-Packard (HP) in Palo Alto, California. The computers these companies were building were general-purpose machines in the Turing–von Neumann sense, and they were getting more compact, more efficient, and more powerful. Soon, advances in core computer technology would allow even more impressive advances in computer power, efficiency, and miniaturization.