Choosing an adequate structure for a neural network is also a very important step. However, this is often done empirically, since there is no rule for how many hidden units a neural network should have. The only measure of whether the number of units is adequate is the network's performance: one assumes that if the general error is low enough, the structure is suitable. Nevertheless, a smaller structure might yield the same result.

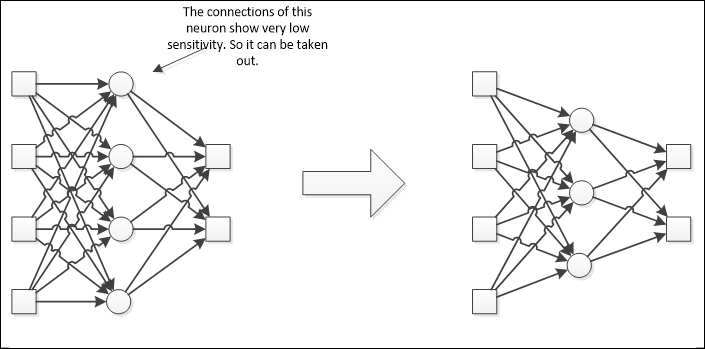

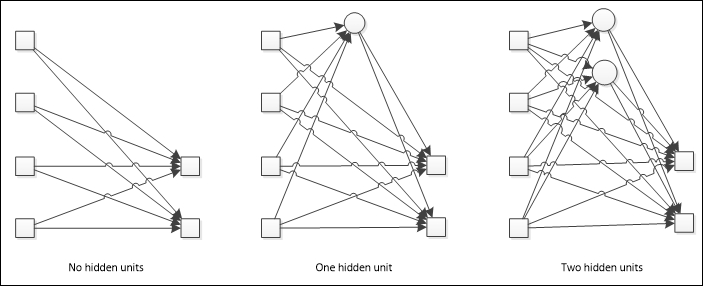

In this context, there are basically two methodologies: constructive and pruning. The constructive approach starts with only the input and output layers and then adds new neurons to a hidden layer until a good result is obtained. The destructive approach, also known as pruning, starts from a larger structure and removes the neurons that contribute little to the output.
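Both methodologies can be sketched in a few lines of plain Python. The following is only an illustrative sketch under several assumptions, not a reference implementation: the network is a toy with a single hidden layer (tanh units, linear output) trained by simple online gradient descent; the function names `train`, `grow`, and `prune` and all of their parameters are hypothetical; the constructive loop starts at one hidden neuron and retrains from scratch for each size; and "sensitivity" is taken here to mean the increase in mean squared error when one neuron is silenced, measured one neuron at a time.

```python
import math
import random

def forward(net, x):
    """Return (output, hidden activations) for a one-hidden-layer network."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(ws, x)) + b)
              for ws, b in zip(net["w_in"], net["b_in"])]
    out = sum(v * h for v, h in zip(net["w_out"], hidden)) + net["b_out"]
    return out, hidden

def mse(net, data):
    """Mean squared error over (inputs, target) pairs."""
    return sum((forward(net, x)[0] - y) ** 2 for x, y in data) / len(data)

def train(n_hidden, data, epochs=2000, lr=0.1, seed=0):
    """Train a fresh network with n_hidden neurons by online gradient descent."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    net = {
        "w_in": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b_in": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "w_out": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b_out": 0.0,
    }
    for _ in range(epochs):
        for x, y in data:
            out, hidden = forward(net, x)
            err = out - y
            for j, h in enumerate(hidden):
                # Gradient reaching neuron j, propagated back through tanh.
                grad_h = err * net["w_out"][j] * (1.0 - h * h)
                net["w_out"][j] -= lr * err * h
                net["b_in"][j] -= lr * grad_h
                for i, xi in enumerate(x):
                    net["w_in"][j][i] -= lr * grad_h * xi
            net["b_out"] -= lr * err
    return net

def grow(data, target_error, max_hidden=8, epochs=2000):
    """Constructive search: add hidden neurons until the error is low enough."""
    for n_hidden in range(1, max_hidden + 1):
        net = train(n_hidden, data, epochs=epochs)
        error = mse(net, data)
        if error <= target_error:
            break
    return net, error

def prune(net, data, tolerance):
    """Drop neurons whose individual removal raises the error by at most tolerance."""
    base = mse(net, data)
    keep = []
    for j in range(len(net["w_out"])):
        # Silence neuron j by zeroing its output weight, then re-measure the error.
        silenced = [0.0 if k == j else v for k, v in enumerate(net["w_out"])]
        sensitivity = mse(dict(net, w_out=silenced), data) - base
        if sensitivity > tolerance:
            keep.append(j)
    return {
        "w_in": [net["w_in"][j] for j in keep],
        "b_in": [net["b_in"][j] for j in keep],
        "w_out": [net["w_out"][j] for j in keep],
        "b_out": net["b_out"],
    }

if __name__ == "__main__":
    xor = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    net, error = grow(xor, target_error=0.01)
    print("hidden neurons after growing:", len(net["w_out"]), "error:", error)
    smaller = prune(net, xor, tolerance=1e-4)
    print("hidden neurons after pruning:", len(smaller["w_out"]))
```

Because each sensitivity is measured with all other neurons still in place, removing several neurons at once may change the error by more than the individual values suggest, so in practice one would typically re-evaluate (or retrain) the network after pruning.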

The constructive approach is depicted in the following figure:

Pruning works the other way around: starting from a large number of neurons, one "prunes" those whose sensitivity is very low, that is, those whose contribution to the error is minimal, as shown in the following figure: