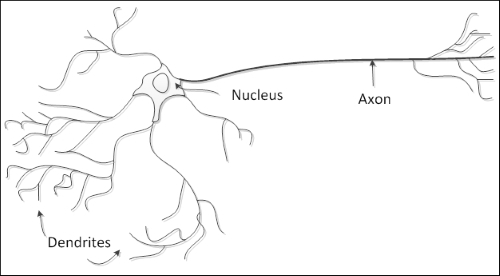

The ANN is a nature-inspired structure, so it has similarities with the human brain. As shown in the following figure, a natural neuron is composed of a nucleus, dendrites, and an axon. The axon extends into several branches that form synapses with other neurons' dendrites.

The artificial neuron has a similar structure. It contains a nucleus (the processing unit), several dendrites (analogous to inputs), and one axon (analogous to the output), as shown in the following figure:

The links between neurons, analogous to the synapses in the natural structure, form the so-called neural network.

Natural neurons act as signal processors: they receive micro signals in the dendrites that, depending on their strength or magnitude, can trigger a signal in the axon. We can therefore think of a neuron as having a signal collector at the inputs and an activation unit at the output, which can trigger a signal that is forwarded to other neurons. So, we can define the artificial neuron structure as shown in the following figure:

The neuron's output is given by an activation function. This component adds nonlinearity to neural network processing, which is needed because the natural neuron has nonlinear behaviors. An activation function is usually bounded between two output values, which makes it nonlinear (a non-constant linear function is unbounded); in some special cases, however, it can be a linear function.

The four most used activation functions are as follows:

- Sigmoid

- Hyperbolic tangent

- Hard limiting threshold

- Purely linear

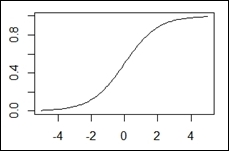

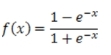

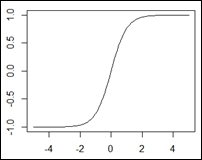

The equations and charts associated with these functions are shown in the following table:

| Function | Equation | Chart |
|---|---|---|
| Sigmoid | | |
| Hyperbolic tangent | | |
| Hard limiting threshold | | |
| Linear | | |
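The four functions can be sketched in a few lines of Python. The equations from the table are not reproduced in this text, so the forms below are the common textbook definitions and should be read as assumptions: a logistic sigmoid without a slope coefficient, and a hard limiting threshold that outputs 0 or 1 around a threshold of 0 (some texts use -1 and 1 instead). The function names are illustrative only.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid 1 / (1 + e^(-x)); output lies between 0 and 1."""
    # Note: math.exp overflows for very large negative x (below about -709).
    return 1.0 / (1.0 + math.exp(-x))

def hyperbolic_tangent(x: float) -> float:
    """tanh(x); output lies between -1 and 1."""
    return math.tanh(x)

def hard_limit(x: float, threshold: float = 0.0) -> float:
    """Step function: 1 at or above the threshold, 0 below (assumed convention)."""
    return 1.0 if x >= threshold else 0.0

def linear(x: float) -> float:
    """Purely linear: the output equals the input, so it is unbounded."""
    return x

# Evaluate every function at a few sample points.
for x in (-2.0, 0.0, 2.0):
    print(x, sigmoid(x), hyperbolic_tangent(x), hard_limit(x), linear(x))
```

The first three functions are bounded, while the linear one is not, which matches the distinction drawn earlier between the usual bounded activation functions and the special linear case.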

In neural networks, weights represent the connections between neurons and can amplify or attenuate neuron signals: each signal is multiplied by its weight and thereby modified. By modifying the signals, weights influence a neuron's output, so a neuron's activation depends on both its inputs and its weights. Since the inputs come from other neurons or from the external world, the weights are considered to be the connections a neural network has established between its neurons. And since the weights are internal to the neural network and influence its outputs, we can consider them the network's knowledge: changing the weights changes the network's capabilities and therefore its actions.
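As a minimal sketch of this idea, assume the neuron collects its inputs as a weighted sum, which is the usual formulation but is not spelled out in the text. The helper name `weighted_sum` and the sample values are hypothetical.

```python
def weighted_sum(inputs, weights):
    """Collect the input signals, each scaled by its connection weight."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    return sum(x * w for x, w in zip(inputs, weights))

signals = [1.0, 1.0]

# Weights above 1 amplify the signals; weights between 0 and 1 attenuate them.
amplified = weighted_sum(signals, [2.0, 2.0])
attenuated = weighted_sum(signals, [0.5, 0.5])
print(amplified, attenuated)
```

The same input signals produce different collected values depending only on the weights, which is why the weights can be regarded as what the network knows.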

The artificial neuron can have an independent component that adds an extra signal to the activation function. This component is called the bias.

Just like the inputs, the bias has an associated weight. This extra term shifts the point at which the neuron activates, independently of the inputs, which helps the neural network represent knowledge as a nonlinear system.
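The effect of the bias can be illustrated with a small sketch. It assumes a sigmoid activation and a bias signal fixed at 1.0 that is scaled by its own weight; both choices are common conventions rather than something stated in the text, and the names `neuron_output` and `bias_weight` are hypothetical.

```python
import math

def neuron_output(inputs, weights, bias_weight, bias=1.0):
    """Sigmoid neuron: weighted inputs plus a weighted bias signal."""
    net = sum(x * w for x, w in zip(inputs, weights)) + bias * bias_weight
    return 1.0 / (1.0 + math.exp(-net))

silent_inputs = [0.0, 0.0]
weights = [1.0, 1.0]

# With all inputs at zero, only the bias term decides the output.
no_bias = neuron_output(silent_inputs, weights, bias_weight=0.0)
positive_bias = neuron_output(silent_inputs, weights, bias_weight=2.0)
negative_bias = neuron_output(silent_inputs, weights, bias_weight=-2.0)
print(no_bias, positive_bias, negative_bias)
```

Even with no input signal at all, the bias weight moves the output above or below the sigmoid's midpoint.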

Natural neurons are organized in layers, each providing a specific level of processing; for example, the input layer receives direct stimuli from the outside world, and the output layer fires actions that have a direct influence on the outside world. Between these layers there are a number of hidden layers, so called because they do not interact directly with the outside world. In artificial neural networks, all neurons in a layer share the same inputs and activation function, as shown in the following figure:
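A layer in which every neuron sees the same inputs and uses the same activation function might be sketched as follows. The representation is an assumption made for illustration: one row of weights per neuron, one bias weight per neuron, and a bias signal fixed at 1 so that the bias contribution equals its weight. The name `layer_output` is hypothetical.

```python
import math

def layer_output(inputs, weight_rows, bias_weights, activation):
    """Compute one output per neuron; all neurons share inputs and activation."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weight_rows, bias_weights)
    ]

inputs = [1.0, 2.0]
weight_rows = [[1.0, 0.0], [0.5, 0.5]]  # one row of weights per neuron
bias_weights = [0.0, 1.0]               # one bias weight per neuron

outputs = layer_output(inputs, weight_rows, bias_weights, math.tanh)
print(outputs)
```

Only the weights differ from neuron to neuron, so two neurons in the same layer can respond differently to identical stimuli.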

Neural networks can be composed of several linked layers, forming so-called multilayer networks. Neural layers fall into three basic classes:

- Input layer

- Hidden layer

- Output layer

In practice, an additional neural layer adds another level of abstraction of the outside stimuli, thereby enhancing the neural network's capacity to represent more complex knowledge.
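Linking layers can be sketched as feeding each layer's outputs into the next one. In this illustrative sketch the input layer simply passes the outside stimuli on, the hidden layer uses tanh, and the output layer is linear; the layer sizes, weights, and the names `layer_output` and `forward` are all hypothetical choices, not taken from the text.

```python
import math

def layer_output(inputs, weight_rows, bias_weights, activation):
    """Compute one output per neuron; all neurons share inputs and activation."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weight_rows, bias_weights)
    ]

def forward(inputs, layers):
    """Propagate the signal through the layers, one after another."""
    signal = inputs
    for weight_rows, bias_weights, activation in layers:
        signal = layer_output(signal, weight_rows, bias_weights, activation)
    return signal

# Each layer is (weight rows, bias weights, activation function).
hidden = ([[1.0, 1.0], [1.0, -1.0]], [0.0, 0.0], math.tanh)
output = ([[0.5, 0.5]], [1.0], lambda x: x)

result = forward([1.0, 2.0], [hidden, output])
print(result)
```

Adding another tuple to the list adds another layer, and with it another transformation applied to the stimuli before the output is produced.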