A quick search of the internet will turn up numerous articles showing that unconscious bias is one of the key reasons that women leave roles in technology. It is very likely that the biases outlined in this chapter have a large part to play in denying women and girls (and other under-represented groups) a role in our professions, and yet we are desperately short of skills in an exponentially growing technology industry.

Stereotype

A stereotype is an over-generalised belief about a class or group of people. Stereotypes might lead us to believe that all members of that group have the same characteristics, skills and abilities.

This chapter provides an explanation of the concept of unconscious or implicit bias and why it can make a difference to women’s careers within the technical (and many other) professions. What we cover in this chapter is the creation of the biases and stereotypes that we take on during our lives, their impact on our decisions about others, and how we can either leave these untouched and unmanaged or address them by recognising and managing them. Addressing them enables us to achieve the benefits of real diversity in the technology workforce and more broadly.

We have included insights into why bias has such an impact on women (and people with other protected characteristics) in the technology teams we work in, and how biases can be mitigated (see Chapter 1 for more on protected characteristics). Particularly in the fields of artificial intelligence (AI) and machine learning, we are starting to see that unmanaged biases may cause significant harm. Finally, we offer some examples of cognitive bias and thoughts on where to go to learn more.

UNCONSCIOUS BIAS DEFINED

Research around gender schemas, or unconscious or implicit bias, has been undertaken since the mid-1990s. It is a concept that seems to have a home in the disciplines of social cognition and organisational psychology. Some of the first people to talk about the concept were Anthony Greenwald and Mahzarin Banaji (1995) and Virginia Valian (1999) in the USA, and more recently Binna Kandola in the UK (Kandola, 2009, 2018; Kandola and Kandola, 2013).

It is clear that we all use stereotypes to help us quickly deal with the world around us. We would arguably be in a sorry state if, every time we encountered a new person, we needed to spend five minutes getting to grips with who and what they were – whether they might be good for us or whether they posed a threat. The human brain receives 11 million pieces of information per second but is thought to only be able to consciously process 40 or 50 of those (Britannica, n.d.). We are not able to stop to analyse the essence of everyone we meet and we function with the use of patterns to process information more speedily.

Therefore, rightly or wrongly, we make fleeting decisions about people and move on. When we walk into a room of people we have never encountered before, sometimes we have to swiftly judge them, the organisations they represent, their objectives and their needs. We do this every time we enter a room of clients or customers, as we are about to present, or when we go to a new club or meeting group.

By exploring the subject of unconscious or implicit bias, we learn that these swift decisions about others will be taken based on stereotypes and impressions about people that have been gathered during our upbringing, gleaned from our surroundings, collected from our typical media menu, and compiled through interaction with our friends and acquaintances. They are the way in which our brain deals with the extensive input of information about the world. Our brain continually looks for patterns, sorting and grouping observations to simplify and understand, and the patterns are based on our previous experiences with people or the ways in which people have been represented to us.

ORIGINS OF UNCONSCIOUS BIAS: THE CYCLE OF SOCIALISATION

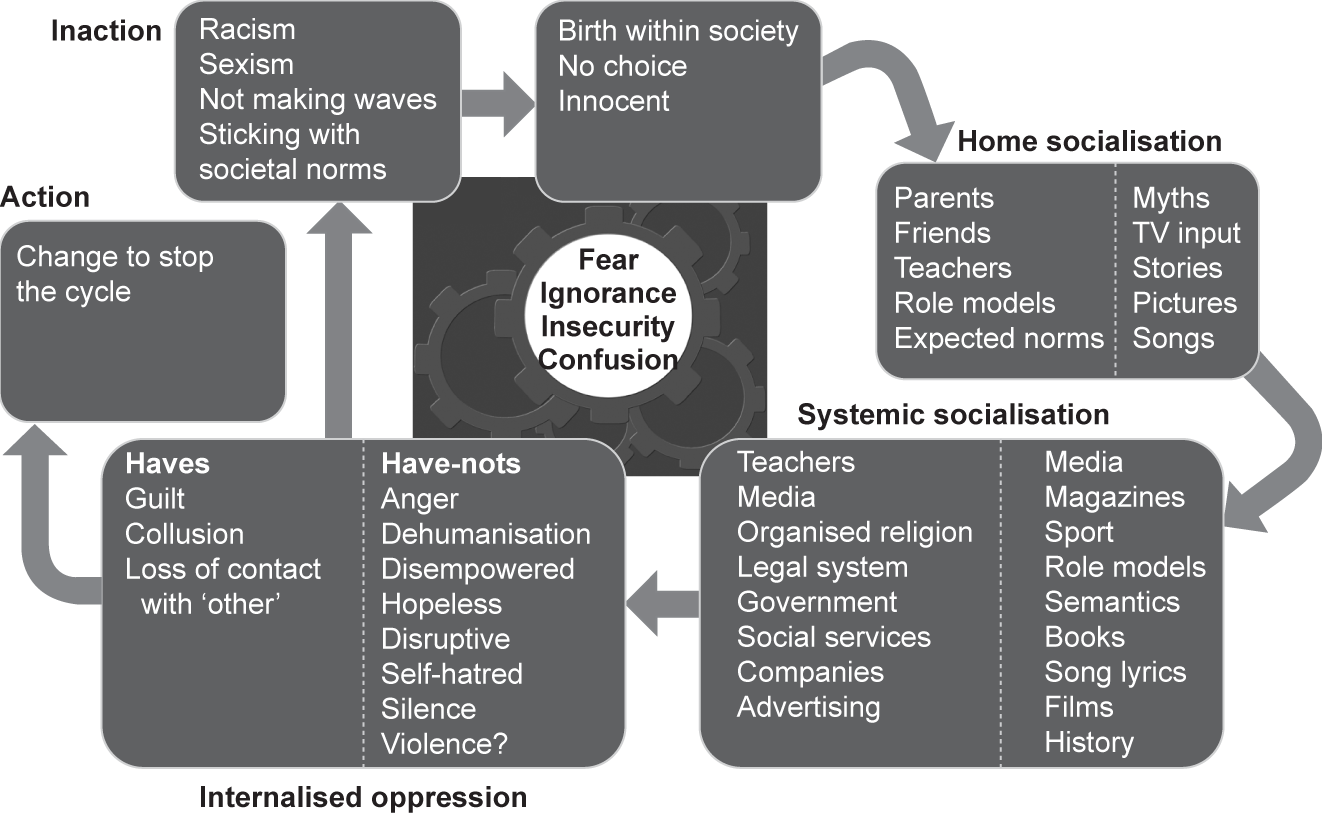

It is important to understand the basis of the beliefs we have collected about people and the stereotypes that underpin them. There is a human process for collecting stereotypes and impressions about others, and there must be a method for updating them. An important paper that outlines the uptake of stereotypes, biases and assumptions about others is the Cycle of Socialisation, produced in the 1980s by Bobbie Harro (see Harro, 2000).

If we start in the middle of the top of Figure 4.1 with our own birth into society, we are born with no previous assumptions or biases about people. We are a blank slate, waiting to receive information about the world and the people in it. And the worlds we arrive into can be hugely different. A child born into a family with two working parents, in a nice district of town, in a country that manages to make health, peaceful stability and education the norm, has a very different life experience to one born with limited recourse to education, poor economic stability, little or no parental support, restricted health outcomes and massive safety concerns.

As the parents or carers around us start to educate us about the norms in the society we have arrived into, they begin our socialisation. In this way, we are instructed in many different societal beliefs about ourselves and others. Our carers might try hard not to burden us with stereotypes, but they come in many forms. We carry beliefs about skin colours, genders, districts in our cities, modes of dress, personal decorations (such as tattoos, hairstyles and jewellery), those of military bearing, different professions and many other aspects of human life. Many hate the fact that girls are urged to wear pink or boys urged to wear blue. Others will be concerned that boys are not encouraged to show the full range of human emotions or that their girl children learn to ‘speak nicely’ or ‘sit decorously’.

Figure 4.1 The Cycle of Socialisation (Adapted from Harro, 2000)

In terms of how this relates to the technology professions, some parents may want both boys and girls to encounter technology and its role models, but other children may learn about technology from films and blockbusters, taking up the stereotypes about technical people that are presented to them. Socialisation is also very dependent on our peer groups. As children we learn a lot from our friends. Parents might have put every effort into avoiding gender stereotypes with their children but be mystified that their girls only want to wear pink and their boys only want to play with trucks. Much of this influence will have come from school or nursery friends. As we get older, our friends or peer groups may maintain the stereotypes and biases we have acquired. We tend to take up similar patterns of behaviour or thinking to our work colleagues, and we often choose our friends because we think the same way as they do. Consequently, our biases can remain unchallenged.

As young children, it quickly becomes clear to us which gender we have been assigned in society. We understand at a very young age how our gender is related to colours, skills, games and social norms. As girls, when we toddle into the toy shop, we take in the fact that everything in the aisle that we are standing in has a pinkish hue, and we start to associate the colours and the contents of the aisle with who we are intended to be. As boys, we get told that we are great at spatial tasks and are repeatedly handed toys that develop these skills, and the encouragement and practice make the fact a self-fulfilling prophecy, even if it didn’t start out as true. Equally, we are socialised to understand the implicit requirements of our gender or race in terms of our behaviours. We are told to ‘sit nicely’ and not show off or are told that ‘boys will be boys’, and these tiny but repeated norms sink into our understanding about what we should be in the world.

As we move into the world of education and eventually into work, this ‘socialisation’ becomes a very regular occurrence. On a daily and even hourly basis, our stereotypes about the world are updated or reinforced. As we look around us, not seeing Black leaders of the judiciary or women wielding spanners and plastering floats, our socialisation makes it very plain that there are conditions under which people expect to see a woman or a man, or a White person or a person of colour. The imbalance in the numbers of carers or HR staff (predominantly female), or of those in the trades (predominantly male), reinforces these beliefs for us. While some will buck the trend, many will not give a second thought to the imposition of perceived norms on their own particular version of the world.

The media plays a huge role in reinforcing the stereotypes we hold about people. Adverts and films reinforce the jobs and lives that we think are ‘suitable’ or appropriate for ourselves and others. For many years, the typical representation of an IT person in films and TV programmes and on social media has been male, nerdy, perversely obsessed with technology and often careless about their appearance. This stereotype has an effect. For girls and women – whose socialisation as girls and teens usually includes, even today, significant attention to body image, dress and cleanliness – it is an undesirable prospect.

Finally, in the Cycle of Socialisation in Figure 4.1, we arrive at a position where we see ourselves as the haves or have-nots, the privileged or the marginalised, or the ‘agents’ or ‘targets’ (Harro, 2000). If we are in a marginalised group (for any facet of our life), we may find that we are doubted, not expected to perform or not expected to achieve. In the privileged group, we will be given the benefit of the doubt and will achieve regularly and without inhibitors. If we are marginalised, this leads us to a sense of disempowerment, or a sense of not belonging, causing huge frustration, possibly to the point of extreme anger when we are continually disappointed. Stop-and-search is a case in point. In the UK in 2019–2020, a Black person was nine times more likely to be stopped and searched than a White person (Home Office, 2020). Particularly as a Black woman or man, you will perceive that you are, yet again, being doubted or suspected. You will be rightly frustrated that the colour of your skin is the trigger for this doubt and suspicion, and when you see that this behaviour is targeted predominantly at people of your skin colour you might reasonably be angry.

At this point in the cycle diagram, we should also see that we are all multifaceted people. For example, you might be a Black man (and therefore not seen to be in the ‘privileged’ White majority) but a famous Premier League football player (and therefore from a highly privileged and visible group) and of working-class heritage. The Manchester United player Marcus Rashford MBE is an example of how the privilege attached to one facet of a life can be used to make a difference to those who are not so privileged. As a professional footballer, Rashford has made a huge difference to communities that are unable to regularly provide food for their families by becoming an ally and using his privilege to challenge government and the public (Siddique, 2020). In doing so, he has become a role model to young people of colour. This is significant because, in terms of Figure 4.1, he has made a societal change, and public perception has shifted a small but significant amount. As the next generation is socialised, he and what he has stood for will be represented in the next cycle of socialisation.

It must be remembered that socialisation is systemic. While we cannot be held accountable for the images, notions and perspectives we continually encounter and take up, we could be held accountable if, once we understood the issues around bias and stereotypes in the workplace, we made no effort to implement personal and professional change. Many organisations are implementing training to ensure that teams are awakened to the impact of bias in the workplace.

It is really important to note, though, that we all have implicit or unconscious biases. As outlined above, they are formed through our experiences of life. They are not necessarily formed to be intentionally harmful and they are there to help us deal quickly with the world. However, we should not disregard the impact that unmanaged biases can have. To do so would make us guilty of allowing potentially harmful and erroneous beliefs about others to have free rein.

The following sections of this chapter look more closely at the impacts of bias in recruiting and retaining women and girls in education and in the workplace, particularly in the computer science and technical fields.

IMPACT OF BIAS ON RECRUITMENT OF WOMEN INTO TECHNICAL EDUCATION AND JOBS

As noted above, there is a whole set of stereotypes that are prevalent about technical roles. As outlined in Figure 4.1, these beliefs are held regardless of the gender you identify as or whether you work inside or outside the technical professions. For example, some women might have come to believe, through their socialisation, that ‘technical’ roles wouldn’t suit them but perhaps that creative roles would. Therefore, if they enter the technical professions at all, they may end up in careers such as web design, because they are led to believe that jobs like these are more creative than others.

Equally, in colleges, because large numbers of women have historically not seen technology as a suitable or desirable career, those who do choose computer science (CS) as a topic for their undergraduate studies might find themselves in a minority. And we shouldn’t forget that the young men around them have been subject to the same socialisation – the same norms and cultural influences – through films, books, parents and social media, so they too may feel that somehow (though they can’t quite grasp the reason) the women on the course don’t ‘fit’ as well. This won’t stop them respecting the women they study with, but it may leave a certain dissonance. These same young people will take the perceptions about roles and ‘fit’ into their future working lives.

With regard to the willingness of women to take up training in CS or roles in the industry, our biases and stereotypes have a significant impact at an early stage, falling into several specific areas:

- Girls may not believe that technology and CS are for them in secondary school, as apprentices, or in university and further education colleges.

- Parents may not instinctively believe that roles in technology would be suitable for their girls (but, conversely, they might believe the same roles would work well for their boy children).

- Women at any level may not seek roles in technology (which seem out of step with the media representations of women’s roles).

- Managers may not believe that women make great technologists since the stereotypes that are repeatedly reinforced are male, leading to recruitment and retention issues (which are discussed in much greater detail in later chapters).

It is therefore unsurprising that few women enter the technical professions in comparison to men. There are organisations that are working against this trend. For example, TechUPWomen and Coding Black Females are both succeeding in changing perceptions and have good take-up. However, it is important to recognise that the numbers for women in tech are only just starting to change in 2021 after being stuck at 16–17% for two decades (BCS, 2020).

Bias and the take-up of IT or computer science studies

Parents are often the key influencers for young people’s decisions about GCSE, A-level, BTEC or equivalent qualifications when it comes to the choice of subjects. Where a parent feels ambivalent about a subject because they have consumed negative stereotypes, it is less likely that they will be the ones encouraging their offspring to study the topic. The motivations that may sway parents are around the viability or suitability of careers for their teenagers, and whether those teenagers will be happy in their future careers. It is important that parents become more aware of the roles in technology so that they can feel confident that their young people would thrive in a technology career. That confidence would dispel any fear reasonably aroused by the stereotypical image of a geeky technical male with limited social ability and a propensity for ‘hacking’.

It is important to note the difference here between the word ‘hack’ (meaning ‘to fix’) and the word ‘hacker’ (meaning something much more illicit or illegal in computing). The hacker representation in the media might understandably make a parent protective of their child.

The project People Like Me, which evolved into My Skills My Life, is run by the WISE (Women Into Science and Engineering) Campaign. It is a useful tool for parents of girls at any age and will support informed choices on the selection of science, technology, engineering and maths (STEM) subjects at school (Herman et al., 2018; WISE Campaign, 2021). There is more on this in Chapter 3.

Bias and the CV (or résumé)

Every year, many organisations across the world undertake surveys or business-based research on biases held about names on CVs. We have included outlines of some of these studies below. They often show that if the name on a CV is female, then the individual is less likely to be invited to interview or to be hired. Equally, if the name suggests anything other than White British, White European or White American heritage, then the individual stands less of a chance of receiving an invitation to talk with the employer.

This is a bias that prevents women and minorities from getting equal access to the jobs on offer and is most likely to have been created as a result of our home socialisation or later systemic socialisation inputs. As discussed in later chapters, companies are addressing this type of bias by removing names from CVs. However, there are many other ways to determine gender from a CV, and these might bypass our awareness but affect management hiring decisions nevertheless.

Examples of studies on bias and CVs

Moss-Racusin et al. (2012)

A famous study was carried out in US higher education institutions in which science academics were asked to review applications for a laboratory manager position. The applications they were given were identical apart from being randomly allocated a male or female name on the applicant’s CV. The participants in the experiment rated applicants with male names as being significantly more competent and hireable than female applicants with identical qualifications and experience. They also selected a higher starting salary and offered more opportunities for career mentoring to male applicants. Interestingly, the gender of the science academics did not affect how they responded, with men and women equally likely to show bias against the female applicant.

Hays (2014)

A Hays study in Australia also took the same CV and modified the gender. The researchers established a set of interview and hiring criteria, which managers used in the study to decide whether the individual on the CV in front of them was suitable. Interestingly, the male hiring managers thought that the male candidate matched 14 out of 20 attributes whereas the female candidate matched only six, and the situation was completely reversed for the female hiring managers. This study shows affinity bias (see ‘Types of cognitive bias’ later in this chapter) very clearly.

Wood et al. (2009)

In another example, a study carried out for the Department for Work and Pensions in the UK developed three identical applications for 155 real job adverts. For each job, one application was given a name usually associated with White people while the other two used names that would usually be associated with specific Black, Asian or minority ethnic groups. The results showed that only 39% of the ‘ethnic minority’ applicants received a positive response from employers compared to 68% of the ‘White’ applicants.

The language and images we use on hiring websites, on promotional material about our CS courses, and on adverts about apprenticeships and jobs will affect whether potential candidates decide to pursue the opportunity being offered. If they don’t ‘hear’ language that they can identify with, and they see images of people unlike them, then they won’t apply. In order to attract a diversity of applications, higher education institutions and employers need to ensure that every pictorial and written description of roles and courses available reflects the diversity of society. If women make up 47% of the workforce in a country, then they should be reflected in 47% of the images. Equally, if people of colour are 40% of the local population, then their presence should be proportionately reflected in advertising. There is more on this in Chapter 3.
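The proportionality check described above is simple arithmetic, and it can be sketched in a few lines of Python. This is only an illustration: the function name `representation_gap` and the image counts in the usage example are hypothetical, not taken from any real audit.

```python
def representation_gap(population_share_pct: float,
                       images_showing_group: int,
                       total_images: int) -> float:
    """Return the group's share of images minus its share of the
    population, in percentage points (negative = under-represented)."""
    if total_images <= 0:
        raise ValueError("total_images must be positive")
    image_share_pct = 100 * images_showing_group / total_images
    return image_share_pct - population_share_pct


# Hypothetical audit: women are 47% of the workforce, but only
# 12 of the 40 images on a careers page show women (30%).
gap = representation_gap(47.0, 12, 40)
print(f"Gap: {gap:+.1f} percentage points")
```

A negative result suggests that the group appears less often in the material than in the population it is meant to reflect. How to count images that show several people is a judgement call that this sketch leaves open.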

Positive action (or affirmative action)

Many countries have now developed legislation that allows for positive or affirmative action on recruitment and support for diverse groups. For example, when a company has an unrepresentative proportion of women or people of colour within the workforce, it becomes acceptable to seek new candidates in these groups to redress the balance. To be very clear, this doesn’t mean that a company could employ an inferior candidate with a certain protected characteristic in order to redress the balance. That would be discriminatory. However, the company could use a fully structured recruitment process to find two candidates who (after a full interview process) have equal merit and then, if the opportunity arises, select the candidate for employment who meets the criteria of its positive action campaign. This is not a tool for favouring the minority unfairly but one for establishing balance and parity.

EFFECT OF BIAS IN RETAINING WOMEN IN THE TECHNOLOGY WORKFORCE

There are distinct effects of bias in the retention of women within technology courses and the workforce. These fall into the following categories:

- Women may not make progress within the workforce, get stuck underneath the glass ceiling or inexplicably not make it to senior positions because senior managers hold pervasive stereotypes about women’s capabilities and levels of commitment.

- Women may not progress to the higher levels of academia and get stuck behind an ‘invisible line of social justice’ (McIntosh, 1989; more on this below) within the educational establishment because, as a result of bias and stereotypes, they are not seen as the ‘knowers’ in technical fields. While it is clear that there are several factors at play in promotion, there is research to suggest that women may be more likely to be given responsibility in non-research areas, even though research is a requirement for career progression (Weisshaar, 2017).

- Female technologists may not believe in their own capabilities and potential because they have not seen themselves as leaders or technologists in their home life or through socialisation. Stereotype threat and impostor syndrome may be significant players in this. Stereotype threat is covered later in this chapter.

- Workplace cultures may be driven by people with the same norms and belief systems as each other, and outsiders or minorities may feel unwelcome. This is often manifested through micro-inequities or micro-aggressions.

- Management teams may have been made aware of bias and its impacts but have made little real effort to manage their biases or the company culture.

Bias in regular assessment or appraisal

When any of us is assessed for our performance, whether that be in our academic achievement or as employees, we stand at the gateway to many openings. The judgement of our managers or leaders on our performance may offer us opportunities for our next big assignment. Their perception of our capabilities might allow us to work on the most prestigious projects or with the best customers. Hopefully, through our assessments, we will be rewarded with larger salaries, bigger bonuses or higher-ranking jobs. Assessment might bring us opportunities to patent our work or give us access to better visibility or grants. For this reason, we want to ensure that we are judged entirely equitably.

Where a manager has unmanaged unconscious bias, they may not realise that their stereotypes lead them to interpret a staff member’s performance much more negatively than they otherwise would. Identifying their bias means they can seek to manage it. Research that supports this theory has been reported by Virginia Valian (1999); Richard Martell, David Lane and Cynthia Emrich (1996); Joan Williams and Rachel Dempsey (2014); and many others. They outline how women are asked to ‘Prove it Again’ when they have shown that they can execute well against an objective, or how a tiny difference in the annual assessment of individuals (one that could reasonably be put down to systemic socialisation) can see the women languish at the bottom of the seniority banding and make it to the top in far fewer numbers. It is essential that equity be achieved in assessments and reviews, whether in academic work or in business life, and that managers and leaders at all levels understand how they hold the key to creating or enforcing the glass ceiling.

Stereotype threat related to women in technology

Stereotype threat is a concept that is often covered when organisations discover implicit or unconscious bias. It suggests that we are at risk of being socialised to expect that we will fail at something, and that this belief ultimately affects our performance. In practice, when virtually every example of someone working with technology is male, we may come to believe that women technologists are not on a par with male technologists, and some women might doubt that they are as capable as their male colleagues. Consequently, their performance may suffer because of internalised beliefs that they may be substandard operators, coders, architects, design engineers and process engineers. Studies have demonstrated that when women’s (Spencer et al., 1999; Walsh et al., 1999) and young girls’ (Ambady et al., 2001) genders are highlighted before they complete a maths activity, they experience this threat and its influence. The subtle effect of socialisation on self-esteem has been shown to be very powerful.

This becomes a pervasive threat to academia and industry as it can result in underperformance and increased use of self-defeating or self-destructive behaviours, and it may create generally unhappy people. Sadly, stigmatised groups can underachieve and individuals may disengage, and consequently professional aspirations may be adjusted downwards.

Bias in academic settings

The two difficulties mentioned above, related to bias in assessments and stereotype threat, are brought out in an acclaimed paper by Peggy McIntosh (1989). McIntosh talks about the invisible line of social justice (very similar to a glass ceiling) that existed at the time she wrote the paper as a worker in academia. Above the line were the privileged and below the line were the rest, and when she started work she found that the ‘privileged’ were White, were male and worked as the senior members of the university and the teaching staff. In an attempt to counter this in the UK, the Athena SWAN Charter offers awards to universities and their departments that have worked to counter bias in all its forms, in their policies, in their teaching methods and in their content. Such work is even more necessary where the perception of STEM subjects is closely linked to negative stereotypes. The Charter is covered in greater detail in Chapter 3.

Micro-aggressions and micro-inequities in the workplace

A concept that is repeatedly linked with unconscious bias is micro-behaviours. These comprise micro-aggressions, micro-inequities and micro-messages. Micro-messages are the verbalised form of these. Micro-aggressions and micro-inequities overlap to such an extent that the concepts are virtually the same: both refer to slights or hurts by means of actions or words, typically directed at a minority.

The Cambridge Dictionary (n.d.) defines a micro-aggression as:

A small act or remark that makes someone feel insulted or treated badly because of their race, sex, etc., even though the insult, etc. may not have been intended, and that can combine with other similar acts or remarks over time to cause emotional harm.

Mary Rowe, who coined the term, defines micro-inequities as:

Small events that may be ephemeral and hard to prove; that may be covert, often unintentional, and frequently unrecognized by the perpetrator; that occur wherever people are perceived to be different; and that can cause serious harm, especially in the aggregate. (Rowe and Giraldo-Kerr, 2017)

Both behaviours can result from unmanaged implicit or unconscious bias. The more often they happen to an individual, the greater the impact, and they are significant in the culture of a workplace. There are plenty of examples of micro-aggressions that women experience in STEM. The only woman in the room is often assumed to be the project manager or the secretary but rarely the senior architect or senior technical specialist, largely because there are so few women in roles considered to be more technical. It may also be because it is assumed women are better at organisation or because assistant roles are often filled by women. Consequently, the woman may be asked to take the minutes of the meeting and her contribution may be overlooked. In another example, women’s ideas are sometimes unheard in meetings but then repeated by a male colleague, and the idea is attributed to him by the other men in the room. Women are also interrupted in meetings and their opinions are doubted more regularly, and they may be told they don’t look like engineers or that they are ‘one of the guys’. Mary Rowe (c.1975) gives many more examples in her paper ‘The Saturn’s Rings Phenomenon’.

Women may also be asked to prove themselves more than men would be, as laid out in Joan Williams and Rachel Dempsey’s What Works for Women at Work (2014). They are socialised and expected to be likeable and are told they should smile more, and if women assert themselves they can be considered aggressive or worse (Toegel and Barsoux, 2012). In their paper on communal and agentic behaviours and traits, Ginka Toegel and Jean-Louis Barsoux argue that women’s ambition clashes with perceived norms and stereotypes, and that the resulting dissonance sees women judged very harshly for their strengths. More generally, they show how this dissonance leads us to judge people who don’t conform to expected behavioural norms far more harshly.

What Works for Women at Work (Williams and Dempsey, 2014) and a 2019 report by the University of Cambridge (Armstrong and Ghaboos, 2019) provide more details on some of the micro-aggressions and micro-inequities listed above. While the examples we have given here might seem like a perpetuation of the very stereotypes we are trying to avoid, there is much research that underpins the concepts and issues outlined above, and these behaviours need to be called out so that attitudes towards women in tech can change.

It is key to note that individual micro-aggressions are small in impact (straws) but plentiful (breaking the camel’s back). These may appear to be minor slights, but the power of the repeated personal impacts can accumulate over time to have significant effects (Nadal and Haynes, 2012). The effects can include symptoms such as depression and anxiety, even affecting sleep (Ong et al., 2017).

Even women who are not on the receiving end of such micro-aggressions in STEM, but who simply witness the negative treatment of other women, can experience diminished STEM outcomes (LaCosse et al., 2016) and perceive the culture as unwelcoming. The impact of micro-aggressions and micro-inequities is exacerbated because women (and people of colour) are in a minority in technical professions (Petrie, 2013). This creates a significant reason for women to feel unwelcome in the technical workforce, and so the drift away from the profession is not surprising.

The potential pervasive impact of bias in the technical professions

Bias in technology has become a more prominent issue over the past decade with the growth in AI and machine learning. There has been growing concern about the automated systems that we use to sift through CVs, and in the box below Hannah Dee gives examples of the bias that we, the technologists, might build into the algorithms that manage our daily lives.

Currently, the most pervasive of the algorithms that involve and perpetuate bias are those that offer search and look-up support. If I search for ‘bias and framing’ or ‘walking boots’ through my browser or on YouTube, I hope to find useful information. However, for days and weeks afterwards, I will be offered adverts and suggestions for ‘walking boots’ or ‘bias and framing’. The outcome of this feedback loop is that my world outlook can become more and more narrowed and subjective, and I lose the objectivity that I would retain by choosing not to have ‘customised experiences’ from my web browser. When the browser tells you that your searches will be quicker with these settings turned on, it doesn’t make it clear that this feature also reinforces the things you already know or have searched for, along with the stereotypes and perceptions that make up your own systemic socialisation. While it might be a valuable offering from the browser organisations to show us opportunities to purchase or read the things we have already demonstrated a desire for, this might be confirmation bias in the making and certainly makes for a very closed view of the world (Rabbit Hole, 2020).
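The narrowing effect of such a feedback loop can be illustrated with a toy simulation. The sketch below is purely hypothetical: the topic names, the starting counts and the rule ‘always show the most-viewed topic’ are assumptions made for illustration, and it does not describe how any real browser or video platform works.

```python
def simulate_feedback_loop(view_counts, rounds):
    """Return the share of all views held by the top topic after each round.

    Assumed rule (hypothetical): the system always recommends the topic
    with the most views so far, and the user views whatever is recommended.
    """
    counts = dict(view_counts)  # copy so the caller's data is untouched
    shares = []
    for _ in range(rounds):
        top_topic = max(counts, key=counts.get)
        counts[top_topic] += 1  # the recommendation is viewed, reinforcing it
        shares.append(counts[top_topic] / sum(counts.values()))
    return shares


# Hypothetical starting history: just one extra search for walking boots.
history = {"walking boots": 3, "cookery": 2, "local news": 2, "music": 2}
shares = simulate_feedback_loop(history, rounds=20)
print(f"share of views after 1 round:   {shares[0]:.2f}")
print(f"share of views after 20 rounds: {shares[-1]:.2f}")
```

Even a small initial preference grows round after round under this rule, because each recommendation produces the very evidence that justifies the next one.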

Algorithmic bias: An introduction

If we want to look at algorithmic bias, there are two main types of system: those entirely crafted by programmers and those that contain machine learning (ML). I like to think of the first kind as being a little like ‘ordinary’ bias and the second as more like ‘unconscious’ bias (although I am not claiming that any of the algorithms are conscious – yet).

Considering the first type of algorithmic bias, we have a situation in which an algorithm will reflect its author. This is clearly going to be an issue for systems that deal with human data. For example, filtering software for CVs will assume that everyone has a first name and a last name, whereas some minorities have many names or just one. Systems that require a ‘Miss’ or a ‘Mrs’ are now fairly rare – and seem to be almost charmingly old-fashioned when I come across them. Almost every system offers ‘Mr’, ‘Mrs’, ‘Miss’ and ‘Ms’ now but what about those of us who prefer to be called ‘Dr’ or ‘Mx’? This kind of bias comes from software teams not considering the situations of people unlike themselves, and accidentally baking into code their personal assumptions and stereotypes.
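As a minimal sketch of how such an assumption ends up in code, compare a parser that hard-codes ‘one first name, one last name’ with a record that accepts whatever name and title a person gives. All function names, field names and example names here are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


def split_name_naive(full_name):
    """Hard-codes the assumption 'exactly one first name and one last name'."""
    first, last = full_name.split(" ")  # raises ValueError for any other shape
    return first, last


@dataclass
class Applicant:
    """A more inclusive record: one free-text name and an optional free-text title."""
    full_name: str
    title: Optional[str] = None  # e.g. 'Dr', 'Mx', or nothing at all


def make_applicant(full_name, title=None):
    cleaned = full_name.strip()
    if not cleaned:
        raise ValueError("a name is required")
    return Applicant(full_name=cleaned, title=title)


def naive_accepts(full_name):
    """Report whether the naive parser can cope with this name."""
    try:
        split_name_naive(full_name)
        return True
    except ValueError:
        return False


# Made-up example names: two parts, one part, four parts.
for name in ["Alex Taylor", "Arjun", "Ana Maria Silva Santos"]:
    print(name, "| naive parser accepts:", naive_accepts(name),
          "| inclusive record:", make_applicant(name).full_name)
```

The naive parser rejects two of the three names, not because they are invalid but because its author only pictured names shaped like their own.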

Systems that are built using ML¹ or that contain an element of ML don’t necessarily pick up the biases of their authors, but they pick up the biases of their training sets. These systems take in some form of training data and then learn patterns, which they can apply to new, unseen data. If the data is biased, the system can learn the patterns of bias alongside any other patterns it finds.

In supervised ML, systems are provided with positive examples and (optionally) negative examples of the things they are learning, and they have to work out what makes the positive examples good and the negative examples bad. So, if I were to train a system to detect faces, I’d present it with lots of positive examples (pictures of faces) and lots of negative examples (pictures of roads, chairs, trees, cheesecake, elephants…). The ML system works out what visual patterns are associated with faces and what are associated with not-faces. When trained and presented with a picture that it hasn’t seen before, it can say, ‘This bit of the image contains a face.’

In unsupervised ML, systems are presented with lots and lots of data, and they try to work out the patterns within that data. For example, they might be presented with hundreds of thousands of paragraphs of English language text and be asked to work out which words are related to which other words. A system might find sentences such as ‘I got the cat from the vets’ and ‘I picked up the dog from the vets’ and from many, many examples realise that ‘cat’ and ‘dog’ are words that occupy particular roles within the English language.

So far, so good. But both of the types of system I have just described have been shown to contain bias. Face recognition systems that are trained on standard image sets are not as good at recognising Black faces as they are at recognising White faces, and this effect interacts with gender (Buolamwini and Gebru, 2018). And the language-learning system realises that ‘cat’ is similar to ‘dog’, but it also realises that ‘man’ is similar to ‘engineer’ and ‘woman’ is similar to ‘secretary’ (Garg et al., 2018).
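A toy example can show how a system picks up both kinds of pattern from text alone. The sketch below is an illustration under stated assumptions: the six-sentence corpus is invented, and a crude co-occurrence count stands in for the word-embedding methods used in the research cited above.

```python
import math
from collections import Counter, defaultdict

# Hypothetical toy corpus; real systems learn from millions of sentences.
corpus = [
    "i got the cat from the vets",
    "i picked up the dog from the vets",
    "he is an engineer",
    "she is a secretary",
    "he works as an engineer",
    "she works as a secretary",
]


def context_vectors(sentences):
    """For each word, count the other words that share a sentence with it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for i, word in enumerate(words):
            for j, other in enumerate(words):
                if i != j:
                    vectors[word][other] += 1
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


vectors = context_vectors(corpus)

# 'cat' and 'dog' appear in similar sentences, so their vectors are similar.
print("cat ~ dog:     ", round(cosine(vectors["cat"], vectors["dog"]), 2))
print("cat ~ engineer:", round(cosine(vectors["cat"], vectors["engineer"]), 2))

# The same counting also records who the corpus says does which job.
print("engineer with he/she: ", vectors["engineer"]["he"], vectors["engineer"]["she"])
print("secretary with he/she:", vectors["secretary"]["he"], vectors["secretary"]["she"])
```

Nothing in the code mentions gender. The association between ‘he’ and ‘engineer’ comes entirely from the sentences the system was given.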

This kind of bias hits gender issues and employment head-on when we come to recruitment systems. If we have an ML system designed to help select candidates from within a set of CVs, we have to think very hard about our training sets. Given the gender balance in technology at the moment, if we give our ML system a training set made up of normal employees, it’ll learn what a techy team currently looks like and try to find people who fit – so it’ll try to find guys, perpetuating gender stereotypes. Famously, this happened to Amazon, which had to stop using its AI-based recruitment system as it exhibited clear bias, overlooking candidates who had attended women’s colleges or who had certain phrases in their applications (Vincent, 2018).
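The mechanism can be sketched with a deliberately simple scoring model. Everything below is hypothetical: the historical records, the keywords and the scoring rule are invented for illustration, and the sketch makes no claim about how Amazon’s system, or any real recruitment product, actually works.

```python
from collections import defaultdict

# Hypothetical past decisions: (keywords found on the CV, was the person hired?)
history = [
    ({"python", "rugby club"}, True),
    ({"java", "rugby club"}, True),
    ({"python", "chess club"}, True),
    ({"python", "women's chess club"}, False),
    ({"java", "women's coding society"}, False),
    ({"java", "chess club"}, True),
]


def train(records):
    """Learn, for each keyword, the fraction of past CVs containing it that were hired."""
    seen = defaultdict(int)
    hired = defaultdict(int)
    for keywords, was_hired in records:
        for keyword in keywords:
            seen[keyword] += 1
            hired[keyword] += int(was_hired)
    return {keyword: hired[keyword] / seen[keyword] for keyword in seen}


def score(model, keywords, default=0.5):
    """Average the learned hire rates of a CV's keywords (unknown words get `default`)."""
    rates = [model.get(keyword, default) for keyword in keywords]
    return sum(rates) / len(rates)


model = train(history)

# Two CVs with the same technical skill, differing in a single phrase.
cv_a = {"python", "chess club"}
cv_b = {"python", "women's chess club"}
print("CV A score:", round(score(model, cv_a), 2))
print("CV B score:", round(score(model, cv_b), 2))
```

The model has no field for gender, yet it marks down the second CV because a phrase on it was associated with rejection in the past decisions it learned from. Removing an explicit gender column does not remove the bias if proxies for it remain in the training set.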

Hannah Dee

TYPES OF COGNITIVE BIAS

There are many types of unconscious cognitive bias. This section explores some of those that relate to attracting and retaining women in technical roles and professions.

Affinity bias

Affinity bias is our tendency to gravitate towards and get along with others who are like us. It takes more effort to socialise, spend time with and even work with others who are different from us. It’s also known as ‘like-likes-like’ and means we are more likely to recruit people to our teams, and promote them, when they have similar characteristics to our own. Similarities that can trigger affinity bias include race, gender, age and social background. The suggestion that a person is ‘a good cultural fit’ may well betray an affinity bias. Given that the majority of the technology workforce is male, this means that a man might be chosen over a woman for a technical or senior technical role.

So-called old boys’ networks are evidence of the ways that women can be left out of social networking, at work and outside it – and networking outside the office can be so crucial to careers. One of the reasons that women’s grassroots networks, or employee resource groups, are active in bringing women together is because there is a recognised need for a network of individuals who are willing to support one another – to ‘help each other up the steps’. This plays the same role as the old boys’ network would. Affinity bias, or in-group bias, could be at work when women are not invited to watch the football in the pub with colleagues or customers, for instance, or when we exclude those who do not drink alcohol.

Confirmation bias

Confirmation bias is our tendency to seek out and remember information that confirms our beliefs and perceptions. Confirmation bias also shapes and reconfirms stereotypes we have about people and has an effect on how we behave. For example, if we believe that women lack confidence in comparison to men, then we may behave towards them as if they were not confident. Worse, women themselves are often socialised, as children, not to display confidence, since it is more likely to be construed as bragging or as being bossy than would be the case if a boy displayed the same behaviour.

In one experiment, female job applicants were divided into two groups. One group was told their male interviewer had traditional views about women and the other group was told the interviewer’s views about women were non-traditional. Women in the former group downplayed their ambitions and behaved much more femininely during the interview than those interviewed in the non-traditional cohort (Rudman and Glick, 2008). This also means that women who are simply behaving ‘normally’ (whatever that might be) may be reinforcing any biases that the observer holds. In interviews, at work and during assessments, this can have implications for how good a ‘fit’ women are for the role they are in or the role they are interviewing for. Of course, you could choose to assess this example as confirmation bias!

Anchoring bias

Anchoring bias is also known as ‘focalism’ and is closely related to the ‘availability heuristic’. It is a bias that sees us rely on the first examples that come to mind in order to make judgements. For example, we may be aware that since the 1980s there have been few women in tech, particularly in the western world, and, taking this as our ‘initial’ example, we might assume that women are not well suited to the industry. However, prior to the 1980s, all of the ‘computers’ (a term for a person working with maths, software and computing machines) working at the Harvard College Observatory were women (Grier, 2005), and during the Second World War and through to the mid-1960s the largest trained technical workforce of the computing industry was composed of women (Hicks, 2017). Women do have a history within the industry, but this fact has now been largely forgotten. Consequently, the immediate example today is that there are few women working in technology, and this ‘anchors’ the perception of managers when hiring.

Attribution bias

Attribution is the way in which we determine the cause of our own or others’ behaviour. There are many types of attribution bias (Wikipedia, n.d.). In fundamental attribution bias, we are often too quick to attribute the behaviour of other people to something personal about them rather than to something situational. For example, we might think, ‘She isn’t ambitious because she is a woman with caring responsibilities’, when in reality she may be unhappy with the workplace and doesn’t see her future there.

One study examined how undergraduate men and women viewed a woman who faced emotional struggles in a physics class and had her contributions ignored in an environmental science class (Freedman et al., 2018). Men were more likely to attribute the anxiety to the student’s lack of preparation. Women were more likely to attribute the student’s anxiety to factors related to bias and to see the sequence of events as reflecting real-life experiences of women in STEM. What this suggests is that where women are under-represented in an industry, there are few people who are on the same wavelength as them and who could speak up for them where there are concerns or issues. In this way, the under-representation persists because the predominant workforce, having never experienced or recognised all of the issues, is less likely to become allies to the few.

Gender bias

Gender bias is likely to need less explanation than the other biases mentioned here, but it is no less important and can be both conscious and unconscious. This bias prefers one gender over another or assumes that one gender is better at a job. According to the Office for National Statistics, in the UK in 2020, women made up only 15% of construction workers but nearly 60% of those working in education (Office for National Statistics, 2020). As children move through school seeing predominantly female teachers, they may acquire the impression (if they are not challenged) that teaching is a job for females and less so for males. They then may carry this impression through to their career choices and working lives.

Ageism

Ageism is discrimination against someone simply on the basis of their age. It tends to affect men less than women, and starts at younger ages for women too. For example, a study by David Neumark, Ian Burn and Patrick Button states that ‘based on evidence from over 40,000 job applications, we find robust evidence of age discrimination in hiring against older women, especially those near retirement age’ (Neumark et al., 2015).

Blind spot bias

Blind spot bias occurs when we don’t think we have bias. We are able to observe it in others more than in ourselves. ‘I treat everyone the same’ may be a warning sign here, and treating everyone the same is absolutely not the same as treating everyone equally. Consider the popular example that shows two individuals attempting to look over a wall. One person is shorter than the wall and therefore cannot see. Treating them the same has both people standing on the ground: one can see, one cannot. Treating them equally gives the shorter person something to stand on. As everyone is an individual, with their own values, characteristics, behaviours and styles, we must treat everyone differently but with equality. That is, everyone in a college, university, company or organisation must have the same opportunities for progression, recognition and reward.

There are many more biases that have some bearing on the position of minorities, and there are plenty of books, videos and training courses for those who are looking for more information (see e.g. Kandola, 2009; Kandola and Kandola, 2013).

What can organisations do?

- Consider educating all levels of management and leadership on how to manage their own bias. When doing so, maximise the available funds by ensuring that those who have the largest people responsibilities get more training than those with lesser people responsibilities. Meanwhile, make training on bias part of the leadership training at the earliest stage possible. Bias should also be part of training on interviewing, assessment and hiring.

- When establishing training on unconscious bias, make sure that the board and executive team are trained before everyone else, since you will want to ensure that you have executive sponsorship (that is visible).

- Ensure that bias training is conducted in small groups, to get interaction and debate started. This has been found to make the effect of training more pronounced. Training on bias is a sensitive subject, and skilled trainers and facilitators will ensure that everyone has the opportunity to voice opinions and that sensitivity is ensured for those in a minority.

- Unconscious bias training should be part of the new-manager training package.

- Establish debate about bias across the business.

- Ensure that monitoring of staff will show up biases. For example: (1) monitor across departments for those managers who have assessments that are out of line with others. Where one department scores everyone highly or another scores only the women in the bottom quartiles, checking in this way will uncover disparities. (2) Conduct departmental audits on the culture to uncover any issues with pervasive discomfort and lack of inclusion. (3) Monitor progression rates in relation to people’s protected characteristics through the tiers of the business. (4) Hold staff meetings or roundtables to uncover examples of micro-inequities or micro-aggressions.

- Run confidence-building sessions for those who are likely to be suffering from stereotype threat, while recognising that they should not be pathologised.

- Ensure that messaging, communication and imagery, both internally and externally, do not feature stereotypes. Ensure that both genders, many races and ethnicities, and all ages and abilities are shown in a range of roles and seniorities. It is important that women see role models of women in technical roles, at all levels of seniority.

- Remove names (and therefore perceived gender and ethnicity) from CVs. Some companies have gone so far as to remove the right of the hiring manager to see the CV. However, there are downsides of this policy: the manager is no longer empowered to manage their own biases (and we might reasonably expect them to do so), there is significant additional workload handed to the HR department, and the hiring manager might be said to be the best judge of the skills required for the role.

- Establish interview panels and strict guidance on job descriptions, selection of candidates and interviewing skills to ensure that any bias in one interviewer is countered by difference and diversity in the panel. Where each applicant takes the same test, there is more evidence of skills and less dependence on personal judgement. Ensure that testing and/or case studies are used during the interview process to help ensure that any bias is limited and facts about skills are brought to the fore. Ensure that all those required to work on interview panels have had training on managing their own biases.

- Consider building a positive action campaign to bring more women into your IT workforce. Tell your recruitment team that you are doing this and that you want at least a 50:50 (male and female) split of CVs so that you have a choice of whom to interview. Get your recruitment team trained in unconscious bias.

- Look at your internal culture. Is it welcoming? Does it encourage and empower, or is it likely to have people voting with their feet and leaving the company? Cultural audits, roundtables and employee surveys can all help with this. Organisations must then decide what needs to change about the culture and what they are willing to do to fix the situation. Chapter 7 says more on this topic.
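The monitoring suggestion in the list above, comparing assessment scores across departments, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the records, the department names, the 0.5-point threshold and the function names are all hypothetical, and a real review would need larger samples and proper statistical care.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical assessment records: (department, gender, score out of 5)
records = [
    ("platform", "F", 3.9), ("platform", "M", 4.0),
    ("platform", "F", 4.1), ("platform", "M", 3.8),
    ("data", "F", 2.6), ("data", "M", 4.2),
    ("data", "F", 2.9), ("data", "M", 4.0),
]


def mean_scores(rows):
    """Return {department: {gender: mean score}}."""
    grouped = defaultdict(lambda: defaultdict(list))
    for department, gender, score in rows:
        grouped[department][gender].append(score)
    return {
        department: {gender: mean(scores) for gender, scores in by_gender.items()}
        for department, by_gender in grouped.items()
    }


def flag_gaps(rows, threshold=0.5):
    """List departments where the gap between group means exceeds `threshold`."""
    flagged = []
    for department, means in mean_scores(rows).items():
        gap = max(means.values()) - min(means.values())
        if gap > threshold:
            flagged.append((department, round(gap, 2)))
    return flagged


print(flag_gaps(records))
```

A flagged department is a prompt for a conversation, not proof of bias: the gap shows where to look, and the cultural audits and roundtables described above help to explain what is found.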

What can individuals do?

- Understand the impact of bias on your own perception of self since socialisation and stereotype threat might be working on you personally too. Work to counter this with training, confidence-building and assertiveness skills, if you feel you need to.

- Consider the systemic socialisation you are subject to on a daily basis and work to counter the crooked perceptions and stereotypes that you have already taken on board, or those that you may take on in the future. Take the Implicit Association Test (Project Implicit, n.d.) to understand some of the biases that you may have. Know that you can shift these.

- When you are called for annual assessment, be sure to help your manager by bringing notes on all of the objectives you have been given and all of the outcomes you have achieved. Then take along a second list of all the additional things that you have done for the organisation during the same time. Be sure that your manager fully understands your contribution. This is a two-way street; both the manager and the employee need to be sharing notes on progress.

- Parents can look at WISE’s My Skills My Life programme to ensure that they are comfortable with what roles STEM professions can offer their children (WISE Campaign, 2021).

SUMMARY

Overall, biases are not things that we take on in a malicious way. However, left unmanaged, they result in significant impacts for ourselves and those around us. This chapter has outlined how they are built from our early socialisation and then from the systemic values and beliefs repeatedly reflected from our peers and friends. We bring those beliefs into the workplace and, where we remain in closed subjective teams, with groupthink, nothing ever challenges them. If we do nothing, the world stays the same.

In the technical professions, unmanaged bias could guarantee that we remain forever at a figure of 17% women in technology roles across the UK and Europe. We could see the numbers of female chief information officers stuck at 11%. Minorities would continue to feel undervalued, incapable, stigmatised and frustrated. As outlined in Chapter 1, companies and institutions wouldn’t achieve their full potential because they would hire and reward monochrome teams and their internal cultures might (eventually) become toxic because of monochrome thinking.

Since the turn of the millennium, organisations have begun to understand that there is a price to pay for unmanaged bias. As a team, the writers of this book have experience of both establishing and running projects to good effect, and we also have personal experience of the impacts of bias in the workplace. We have plenty of expertise to share. In the following chapters we will outline the steps that companies and individuals can take to ensure that personal biases are recognised, analysed and managed in order to mitigate the effects on women in the workforce.

REFERENCES

Ambady, N., Shih, M., Kim, A., & Pittinsky, T. L. (2001). Stereotype susceptibility in children: Effects of identity activation on quantitative performance. Psychological Science, 12(5), 385–390.

Armstrong, J., & Ghaboos, J. (2019). Women collaborating with men: Everyday workplace inclusion. Murray Edwards College, University of Cambridge. Available from https://www.murrayedwards.cam.ac.uk/sites/default/files/files/Everyday%20Workplace%20Inclusion_FINAL.pdf.

BCS. (2020). BCS diversity report 2020: Part 2. Available from https://www.bcs.org/media/5766/diversity-report-2020-part2.pdf.

Britannica. (n.d.). Physiology. Available from https://www.britannica.com/science/information-theory/Physiology.

Buolamwini, J., & Gebru, T. (2018). Gender shades: Intersectional accuracy disparities in commercial gender classification. Proceedings of the 1st Conference on Fairness, Accountability and Transparency, 81, 77–91.

Cambridge Dictionary. (n.d.). Microaggression. Available from https://dictionary.cambridge.org/dictionary/english/microaggression.

Freedman, G., Green, M. C., Flanagan, M., Fitzgerald, K., & Kaufman, G. (2018). The effect of gender on attributions for women’s anxiety and doubt in a science narrative. Psychology of Women Quarterly, 42(2), 178–191.

Garg, N., Schiebinger, L., Jurafsky, D., & Zou, J. (2018). Word embeddings quantify 100 years of gender and ethnic stereotypes. Proceedings of the National Academy of Sciences, 115(16), E3635–E3644. doi: 10.1073/pnas.1720347115.

Greenwald, A. G., & Banaji, M. R. (1995). Implicit social cognition: Attitudes, self-esteem, and stereotypes. Psychological Review, 102(1), 4–27.

Grier, D. A. (2005). When computers were human. Princeton: Princeton University Press.

Harro, B. (2000). The Cycle of Socialization. In M. Adams, W. Blumenfeld, R. Castaneda, H. Hackman, M. Peters & X. Zuniga (Eds.), Readings for diversity and social justice (pp. 16–21). New York: Routledge.

Hays. (2014). Same CV, different gender, same results. Available from https://social.hays.com/2014/10/07/cv-different-gender-results.

Herman, C., Kendall-Nicholas, J., & Sadler, W. (2018). People Like Me: Evaluation report. WISE Campaign. Available from https://www.wisecampaign.org.uk/wp-content/uploads/2018/06/People-LIke-Me-Evaluation-Report_June18-1.pdf.

Hicks, M. (2017). Programmed inequality: How Britain discarded women technologists and lost its edge in computing. Cambridge, MA: MIT Press.

Home Office. (2020). Police powers and procedures, England and Wales, year ending 31 March 2020 second edition. Gov.uk. Available from https://www.gov.uk/government/statistics/police-powers-and-procedures-england-and-wales-year-ending-31-march-2020.

Kandola, B. (2009). The value of difference: Eliminating bias in organisations. Oxford: Pearn Kandola.

Kandola, B. (2018). Racism at work: The danger of indifference. Oxford: Pearn Kandola.

Kandola, B., & Kandola, J. (2013). The invention of difference: The story of gender bias at work. Oxford: Pearn Kandola.

LaCosse, J. L., Sekaquaptewa, D., & Bennett, J. (2016). STEM stereotypic attribution bias among women in an unwelcoming science setting. Psychology of Women Quarterly, 40(3), 378–397.

Martell, R., Lane, D., & Emerich, C. (1996). Male-female differences: A computer simulation. American Psychologist, 51(2), 157–158.

McIntosh, P. (1989). White privilege: Unpacking the invisible knapsack. Peace and Freedom. Available from https://psychology.umbc.edu/files/2016/10/White-Privilege_McIntosh-1989.pdf.

Moss-Racusin, C. A., Dovidio, J. F., Brescoll, V. L., Graham, M. J., & Handelsman, J. (2012). Science faculty’s subtle gender biases favor male students. Proceedings of the National Academy of Sciences, 109(41), 16474–16479.

Nadal, K. L., & Haynes, K. (2012). The effects of sexism, gender microaggressions, and other forms of discrimination on women’s mental health and development. In P. K. Lundberg-Love, K. L. Nadal & M. A. Paludi (Eds.), Women and mental disorders (pp. 87–101). Santa Barbara: Praeger/ABC-CLIO.

Neumark, D., Burn, I., & Button, P. (2015). Is it harder for older workers to find jobs? NBER Working Paper 21669.

Office for National Statistics. (2020). UK labour market: June 2020. Available from https://www.ons.gov.uk/releases/uklabourmarketjune2020.

Ong, A. D., Cerrada, C., Lee, R. A., & Williams, D. R. (2017). Stigma consciousness, racial microaggressions, and sleep disturbance among Asian Americans. Asian American Journal of Psychology, 8(1), 72–81.

Petrie, K. (2013). Attack on sexism not an attack on men. The Scotsman. Available from https://www.scotsman.com/news/opinion/columnists/attack-sexism-not-attack-men-1552036.

Project Implicit. (n.d.). Implicit Association Test. Available from https://implicit.harvard.edu/implicit/takeatest.html.

Rabbit Hole. (2020). Rabbit Hole podcast [8 episodes]. New York Times. Available from https://www.nytimes.com/column/rabbit-hole.

Rowe, M. (c.1975). The Saturn’s Rings Phenomenon: Micro-inequities and unequal opportunity in the American economy. Available from https://mitsloan.mit.edu/shared/ods/documents/?DocumentID=3983.

Rowe, M., & Giraldo-Kerr, A. (2017). Gender microinequities. In K. L. Nadal (Ed.), The SAGE encyclopedia of psychology and gender. Thousand Oaks: SAGE.

Rudman, L. A., & Glick, P. (2008). The social psychology of gender: How power and intimacy shape gender relations. New York: Guilford Press.

Siddique, H. (2020). Marcus Rashford forces Boris Johnson into second U-turn on child food poverty. The Guardian. Available from https://www.theguardian.com/education/2020/nov/08/marcus-rashford-forces-boris-johnson-into-second-u-turn-on-child-food-poverty.

Spencer, S. J., Steele, C. M., & Quinn, D. M. (1999). Stereotype threat and women’s math performance. Journal of Experimental Social Psychology, 35(1), 4–28.

Toegel, G., & Barsoux, J.-L. (2012). Women leaders: The gender trap. European Business Review. Available from https://www.europeanbusinessreview.com/women-leaders-the-gender-trap.

Valian, V. (1999). Why so slow? The advancement of women. Cambridge, MA: MIT Press.

Vincent, J. (2018). Amazon reportedly scraps internal AI recruiting tool that was biased against women. The Verge. Available from https://www.theverge.com/2018/10/10/17958784/ai-recruiting-tool-bias-amazon-report.

Walsh, M., Hickey, C., & Duffy, J. (1999). Influence of item content and stereotype situation on gender differences in mathematical problem solving. Sex Roles: A Journal of Research, 41(3–4), 219–240.

Weisshaar, K. (2017). Publish and perish? An assessment of gender gaps in promotion to tenure in academia. Social Forces, 96(2), 529–560.

Wikipedia. (n.d.). Attribution bias. Available from https://en.wikipedia.org/wiki/Attribution_bias.

Williams, J., & Dempsey, R. (2014). What works for women at work: Four patterns working women need to know. New York: New York University Press.

WISE Campaign. (2021). Welcome to My Skills My Life. Available from https://www.wisecampaign.org.uk/what-we-do/expertise/welcome-to-my-skills-my-life.

Wood, M., Hales, J., Purdon, S., Sejersen, T., & Hayllar, O. (2009). A test for racial discrimination in recruitment practice in British cities. National Centre for Social Research. Available from https://natcen.ac.uk/our-research/research/a-test-for-racial-discrimination-in-recruitment-practice-in-british-cities.

NOTES

1 These are often called AI in the press, but properly speaking there are lots of types of AI, and ML systems represent only a subset of these.