CONTENTS OF THIS CHAPTER

This chapter covers the following topics:

- approaches to development introduced;

- build or buy – bespoke development versus commercial, off-the-shelf (COTS);

- component-based development;

- development methodologies;

- software engineering paradigms;

- the influence of technological advances;

- references and further reading.

APPROACHES TO DEVELOPMENT

Using the term ‘systems development’ today seems somewhat limiting because, in practice, very few systems are actually developed from the ground up. Perhaps a better term would be ‘systems assembly’ (akin to car assembly, as distinct from car manufacture), or maybe even ‘solution development’. The issue here is that the term ‘development’ suggests creating something from scratch whereas, in practice, development teams are often merely assembling a series of ready-made components to form a solution to meet a particular set of requirements.

Even this definition is not entirely clear-cut, because assembly may also involve developing bespoke elements that act as the glue binding the ready-made components together. Figure 6.1 summarises some of the key elements that make up a particular information system solution. We shall explore each of these as we progress through this chapter.

To provide a reasonably thorough coverage of development approaches in the twenty-first century, it is necessary to consider aspects of methodology, technology, software engineering, procurement and management.

Figure 6.1 Development approaches: schematic overview

Methodology

Methodologies define repeatable processes. A systems development methodology also defines a set of deliverables to be produced at various stages of the process, the roles and responsibilities of people who participate in the process and, often, the techniques that can be used at various stages of the process.

Technology

The approach taken to developing a computer system cannot be completely divorced from the technology used within the solution. Some approaches are only possible as a result of specific enabling technologies; for instance, cloud-based solutions.

Software engineering

A useful definition of software engineering is:

‘the application of a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software.’

(IEEE Standard Glossary of Software Engineering Terminology, IEEE std 610.12 – 1990).

Since the inception of the concept of software engineering, a number of software engineering paradigms¹ have emerged, most notably the object-oriented paradigm, covered in more detail in Chapter 8.

Procurement

In the context of systems development, procurement refers to the process of evaluation, selection, negotiation and purchase of a software solution or set of services that make up the solution, or a part of it.

Management

Management incorporates the planning, monitoring and controlling of an activity or process. Governance is also a key element of management; in the context of IT, it has been defined as:

‘the theory that enables an organisation’s principal decision makers to make better decisions around IT and, at the same time, provides guidance for IT managers who are tasked with IT operations and the design, development and implementation of IT solutions.’

(Governance of IT: An executive guide to ISO/IEC 38500).

BUILD OR BUY?

For many systems development projects, perhaps the most fundamental decision to make early on in the project is whether to procure a ready-made solution or to build a bespoke (tailor-made) solution. Some organisations have policies that state that they will always procure an off-the-shelf solution unless one cannot be found. So it seems that the old saying ‘don’t re-invent the wheel’ is firmly in the minds of the decision makers when choosing whether to build or buy.

Realistically, however, the decision to build or buy depends on the nature of the project being undertaken and, therefore, the type of system being sought. Hence, the decision on which approach to take will often not be made until the system requirements have been defined and agreed. Furthermore, a particular solution to a business need may be delivered through a combination of bespoke-developed and ready-made components, which we shall consider later in this chapter.

Bespoke

At one time, in the not-too-distant past, bespoke systems development was the only option available to organisations wishing to adopt computer technology. If an organisation wanted to automate part (or all) of a business process, to make the process more efficient and reduce costs, then they would need to define a set of requirements and commission a developer to build the system. Many organisations had dedicated teams of systems developers who existed to build bespoke solutions to meet the precise computing needs of their organisations.

In more recent times, it seems that only the largest and most wealthy of organisations have the luxury of dedicated in-house development teams, and even those organisations often outsource the development work to a third-party organisation.

With the bespoke development approach, a ‘tailor-made’ solution is designed and developed to precisely meet a set of agreed requirements. Acceptance of the final solution is dependent on the solution exactly meeting these agreed requirements.

A number of methodologies have been established to formalise the approach to building bespoke systems. We shall consider these later in this chapter.

Ready-made

Partly due to economies of scale, and partly due to a desire to minimise costs and time to benefits realisation, more and more organisations seek ready-made solutions to meet their computing needs. There are some very compelling arguments in favour of procuring a ready-made solution, but there are also potential risks and downsides.

There are two broad approaches to procuring a ready-made solution: purchase a commercial off-the-shelf (COTS) solution or obtain an open source solution. We shall consider each of these approaches in turn.

COTS

In the context of computer systems, COTS software products are ready-made software packages that are purchased, leased or licensed, off-the-shelf, by an acquirer who has had no influence on their features and other qualities.

According to ISO/IEC 25051, COTS products...

‘include but are not limited to text processors, spreadsheets, database control software, graphics packages, software for technical, scientific or real-time embedded functions such as real-time operating systems or local area networks for aviation/communication, automated teller machines, money conversion, human resource management software, sales management, and web software such as generators of web sites/pages.’

The ISO definition embraces generalised software (such as spreadsheets and text processors) as well as software for specific application areas (human resource management, finance, supply chain management, and so on). However, for the purpose of this chapter, we shall focus on the latter group.

The procurement and implementation of a COTS solution has many advantages, such as speed of system delivery and cost. However, there are disadvantages, such as the lack of ownership of the software. Before pursuing this approach, it is important to consider both the pros and cons. Recognising the disadvantages of this approach helps to identify risks that need to be addressed throughout the solution development process. The key advantages and disadvantages of the COTS approach are identified in Figure 6.2.

Figure 6.2 Advantages and disadvantages of the COTS approach

Advantages

Cost

The procurement of a COTS solution is usually cheaper than developing a bespoke alternative. The reason for this is that significant development costs (detailed design, coding, unit testing and preparation of documentation) have effectively been spread across a large customer base by the vendor and are recouped through ongoing licence fees. Cost is usually an important factor in deciding to pursue the COTS approach. However, some purchasers do not take into account the ongoing costs associated with this approach, such as year-on-year licence renewal fees.

Time

By time, we mean the elapsed time between signing contracts and ‘live’ operational use of the new solution, although, from a business perspective, this could also be viewed as time to benefits realisation. A COTS solution can usually be delivered much more quickly than a bespoke one because, again, a significant part of the development activities identified above is avoided. These parts of the development lifecycle are very time-consuming, and requirements may change during this period, complicating the process even further. However, additional activities need to be factored into the project plan, including configuration of the new solution and integration with existing systems. Furthermore, because a COTS solution will never meet one hundred per cent of the solution requirements (see disadvantages below), adopting one also necessitates the development of manual process workarounds or even bespoke ‘add-ons’ to plug the gap.

Quality

Generally speaking, the quality of a COTS solution is very high. COTS vendors cannot afford to release a poor quality product, as this may lead to significant support and maintenance costs that cannot be recouped. Even worse, a poor quality product may have a damaging effect on the vendor’s reputation, leading to loss of future sales. Another factor leading to the high quality of a product is that it is likely to have been tried and tested by a large number of users within a range of different customer organisations.

Documentation and training

All products are supplied with some level of support and documentation, and this is no different with software products. With COTS software products, the documents (such as user manuals and online help systems) are usually of high quality because they represent an important part of the selling process; prospective customers may ask to review the quality of the supporting documentation as part of the evaluation of competing products. In contrast, the documentation supporting bespoke systems is not available until very late in the lifecycle, and even then there may be insufficient resources available to do the task of documentation justice.

Similarly with training, economies of scale allow the software vendors to produce and provide high quality training courses, supported by professional trainers. Furthermore, prospective purchasers can attend a training course prior to buying the product and evaluate the suitability of the training available.

Maintenance and enhancement

Software products are usually supported by a formal maintenance agreement that provides:

- access to telephone and/or email support, where experts can provide guidance and resolve common user problems;

- periodic upgrades to the software that correct known faults and also include new functionality defined and agreed with the user community.

The cost of support and enhancements is again spread across a number of users and so can be offered relatively economically to each individual customer. The availability of upgrades as part of the ‘package’ is particularly significant to customers of accounts and payroll packages, as these upgrades are essential to maintain compliance with legislative changes made by government.

Try before you buy

The ability to evaluate the software product before purchasing it (using a trial version or a more formal evaluation using the target hardware and software environment) is a major advantage over the bespoke approach, where it is not possible to evaluate the complete product until almost the end of the project. Such product evaluations can also be supplemented by visits to actual users (called reference sites), where the operation of the package can be observed and user comments and experiences documented.

It must be recognised, however, that the ability to ‘try before you buy’ places the onus on the user to ensure that the product will support their requirements, and not the supplier to warrant this.

Disadvantages

Not 100% fit to requirements

Because COTS solutions are ready-made, the vendor cannot predict the requirements of every potential customer. Consequently, a COTS solution will never support all of the specific requirements of a given customer. Hence, when pursuing the COTS approach, the customer’s definition of quality must be ‘fitness for purpose’ rather than ‘one hundred per cent fit to requirements’, and, in practice, this means that the customer must identify the gap between their requirements and the COTS product and determine how best to address it. This may be achieved in a number of ways:

- put in place manual workarounds for the areas of functionality not addressed by the product;

- build bespoke ‘add-on’ components/programs that interface with the product to provide the missing functionality;

- tailor the product to incorporate the missing functionality;

- change the way that the customer’s business process works to reflect the functionality provided by the product.

Each of these involves compromise, which is a key element of the COTS approach; by contrast, a bespoke development can fit one hundred per cent of the requirements.

Although common, tailoring the product is perhaps the most risky approach, because this often means that the resultant product no longer provides the benefits of the COTS approach, nor those of the bespoke approach. This is especially the case when the vendor no longer provides support and maintenance for the modified product in the same way as it does for its standard (‘vanilla’) product, and hence, ongoing support and maintenance costs increase significantly. Furthermore, tailoring of the ‘vanilla’ product can lead to disagreements over the ownership of the modifications made.
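The gap between requirements and product, and the decision on how to close each gap, can be recorded systematically. The following Python sketch is a hypothetical illustration, not a prescribed technique: requirement identifiers are assumed to be plain strings, the four options are those listed above, and tailoring is flagged for attention because, as noted, it is perhaps the riskiest option.

```python
from enum import Enum


class GapOption(Enum):
    """The four ways of addressing a gap between requirements and a COTS product."""
    MANUAL_WORKAROUND = "manual workaround"
    BESPOKE_ADD_ON = "bespoke add-on"
    TAILOR_PRODUCT = "tailor the product"
    CHANGE_PROCESS = "change the business process"


def fit_ratio(requirements: set[str], supported: set[str]) -> float:
    """Proportion of requirements the product supports out of the box.

    Assumes at least one requirement has been defined.
    """
    return len(requirements & supported) / len(requirements)


def review_gaps(requirements: set[str], supported: set[str],
                decisions: dict[str, GapOption]) -> list[str]:
    """List each gap that is still undecided or is to be closed by tailoring."""
    issues = []
    for gap in sorted(requirements - supported):  # gaps = requirements not supported
        option = decisions.get(gap)
        if option is None:
            issues.append(f"undecided: {gap}")
        elif option is GapOption.TAILOR_PRODUCT:
            issues.append(f"high risk (tailoring): {gap}")
    return issues
```

For example, with four requirements of which the product supports two, `fit_ratio` returns 0.5, and `review_gaps` lists every unsupported requirement that is either undecided or earmarked for tailoring, so that the compromise being made is explicit before purchase.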

Ownership issues

With a bespoke system, if the system is developed by an in-house development team, then the ownership of the software clearly resides with the customer organisation. Even when the customer commissions a third party to build the software on their behalf, the ownership generally resides with the customer and not the third-party developer.

With a COTS product, the ownership of the software usually remains with the vendor, and customers are licensed to use the product under the terms of a licence agreement, which generally does not grant them any right of access to the product source code. This ownership issue has a number of implications:

- The vendor, not the customer, decides the future of the package, including any future development work (and hence new product features).

- The vendor may decide to withdraw support from earlier versions of the product, which may force customers into unnecessary (for them) and potentially expensive upgrades.

- The vendor may completely change the basis of the licence, leading to significant, unforeseen cost increases for customers.

- The vendor may decide to sell their product to a third party, which may unnerve or inconvenience customers, possibly causing them to make plans to move their systems to a rival product.

These ownership issues represent risks to the customer, who must consider the impacts of these risks and look at possible avoidance and mitigation (impact reduction) actions before procuring the COTS solution.

Financial stability of the vendor

The financial stability of the vendor is another key risk of the COTS approach, and is closely related to the issue of ownership. If the vendor ceases to trade, typically as a result of financial instability, then the customer is left without the ongoing support and maintenance that the vendor provides. This is further complicated by the fact that the customer does not have access to the product source code, which would enable them to support and maintain the product in-house (if the customer has an in-house development team) or find an alternative vendor willing to take on the support and maintenance. A common approach to mitigating this risk is to enact an escrow² agreement during the procurement of the COTS product.

Lack of competitive advantage

A COTS product can never truly be used to provide the customer organisation with a competitive advantage over other organisations in the same marketplace, because these competitors can also buy the same product. Consequently, COTS solutions are generally used for automating the non-niche business processes, such as HR, payroll, finance, supply-chain management, and so on.

Limited legal redress

With a COTS product, the ability to resolve issues relating to the failure of the product to fulfil the customer’s requirements is limited, because the product is essentially ‘bought as seen’, and the onus is on the customer to confirm that the product meets their requirements before purchase. Furthermore, the licence agreement is drafted in favour of the vendor, and there is usually a clause stating that the package may not support the functional requirements of the customer – and that it is the customer’s responsibility to ensure that it does. The vendor’s liability is also limited, with the limit explicitly defined within the licence agreement.

Changing nature of requirements

The selection of a COTS product is obviously based on the customer’s requirements at that point in time, which will have been defined by the relevant stakeholders at that time. However, stakeholders change and so do system requirements, and there is no guarantee that the COTS product will change in accordance with the customer’s changing requirements.

Open source

An alternative to the COTS approach, which promises to provide a ‘best of both worlds’ option, is open source software. With this option, the customer obtains a ready-made solution but also gains access to the source code, enabling in-house or third-party developers to tailor the solution to the precise needs of the customer. Furthermore, open source software is available without licence fees. It is, however, still subject to the terms of a licence agreement, but this agreement is much more flexible than its commercial (COTS) equivalents.

The principles behind the open source movement are:

- The end product (and its source materials) is available to the public at no cost.

- Independent developers can gain access to the product’s source code to maintain and support the application within their own organisation.

- The source code is made available under a software licence that meets the Open Source Definition or similar criteria.³

- Anyone with the necessary skills and tools can change, improve and redistribute the software free of licence fees.

- Open source software is typically developed and maintained collaboratively and openly by a community of contributors, rather than being a product that is developed by a single software house.

The open source option clearly offers a number of benefits: zero licence costs (although this does not mean a zero-cost solution) and the provision of a ready-made solution that can also be tailored and maintained as necessary to suit the customer organisation.

However, open source solutions still carry risks for the customer. For example, the customer takes full responsibility for ensuring that the solution meets their needs, and for having the available resources for the ongoing support and maintenance of the solution. Some open source products are maintained and supported through public collaboration and, thus, bug fixes and enhancements are also made available to the customer free of charge. However, because public collaboration is a voluntary activity, there can be a question mark over the quality of these products and the availability of support and ongoing maintenance, unless the customer has their own in-house resources who can undertake this work.

Furthermore, if the customer modifies the software, they are in a similar position to a customer who has tailored a COTS product: when the ‘vanilla’ open source product is subsequently enhanced, a customer who wants to take advantage of those enhancements may be forced to make their own modifications again!

As the open source software marketplace has matured, a number of commercial organisations have been established to provide tailoring, support and maintenance services based around open source products. This can mitigate the risks associated with public collaborative development, whilst retaining the benefits of a ready- (or near ready-) made solution with zero licence costs.

The advent of open source software has also encouraged large software corporations, such as Microsoft™, to offer what they call ‘shared source’ software. Microsoft’s Shared Source Initiative was launched in May 2001 and includes a spectrum of technologies and licences. Most of its source code offerings are available for download once eligibility criteria are met. The associated licences range from reference-only licences, which allow the code to be viewed but not modified, to licences that allow it to be modified and redistributed for both commercial and non-commercial purposes.

COMPONENT-BASED DEVELOPMENT

Earlier, we explored bespoke and ready-made as two separate approaches to developing and delivering an information system solution to meet a particular business need. However, in practice, modern information systems comprise a series of separate, but integrated, building blocks referred to as components.

A useful working definition of a software component is as follows:

A component is something that can be deployed as a black box. It has an external specification, which is independent of its internal mechanisms.

With component-based development, software applications are assembled from components from a variety of sources; the components themselves may be written in several different programming languages and run on several different platforms.

What is common to most definitions of a software component is the notion that a component has an inside and an outside, and there is a relationship between the two. The inside of a software component is a discrete piece of software satisfying certain properties. It is a device, artefact or asset that can be managed to achieve reuse. The outside of a software component is an interface that exposes a set of services (that the component provides) to other components.
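To make the inside/outside distinction concrete, the following Python sketch separates a component's outside (an abstract interface) from two different insides. The interface `IPriceList`, its `price_of` operation and both implementations are hypothetical, invented purely for illustration. The consuming function `quote` depends only on the published interface, so either inside can be deployed without changing the consumer.

```python
from abc import ABC, abstractmethod
from decimal import Decimal


class IPriceList(ABC):
    """The component's outside: a published interface (hypothetical example)."""

    @abstractmethod
    def price_of(self, product_code: str) -> Decimal:
        """Return the unit price for a product code."""


class TablePriceList(IPriceList):
    """One possible inside: prices held in a lookup table."""

    def __init__(self, prices: dict[str, Decimal]) -> None:
        self._prices = dict(prices)  # hidden state, invisible to consumers

    def price_of(self, product_code: str) -> Decimal:
        return self._prices[product_code]


class MarkupPriceList(IPriceList):
    """A different inside behind the same outside: cost price plus a fixed markup."""

    def __init__(self, costs: dict[str, Decimal], markup: Decimal) -> None:
        self._costs = dict(costs)
        self._markup = markup

    def price_of(self, product_code: str) -> Decimal:
        return self._costs[product_code] * (1 + self._markup)


def quote(prices: IPriceList, lines: list[tuple[str, int]]) -> Decimal:
    """A consuming component: it relies only on IPriceList, never on a concrete class."""
    return sum((prices.price_of(code) * qty for code, qty in lines), Decimal("0"))
```

Because `quote` never refers to a concrete class, replacing `TablePriceList` with `MarkupPriceList` (or with a purchased component exposing the same interface) requires no change to the consumer, which is the practical benefit of treating a component as a black box.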

Components can exist independently of each other but can also be assembled together to build new solutions; they provide services to other components and use services provided by other components, as shown in the UML component diagram in Figure 6.3.

Figure 6.3 Integrated components forming a Sales order processing solution

Figure 6.3 shows three independent components: Logistics, Accounts and OrderManagement. The Logistics component exposes an interface called iLogistics, which other components can use to consume the services that it provides. Similarly, the Accounts component exposes an interface called iAccounts and the OrderManagement component exposes an interface called iOrders. Furthermore, Figure 6.3 shows that the Logistics and Accounts components both use services provided by the OrderManagement component, by virtue of their dependencies on the iOrders interface (shown as dashed arrows).

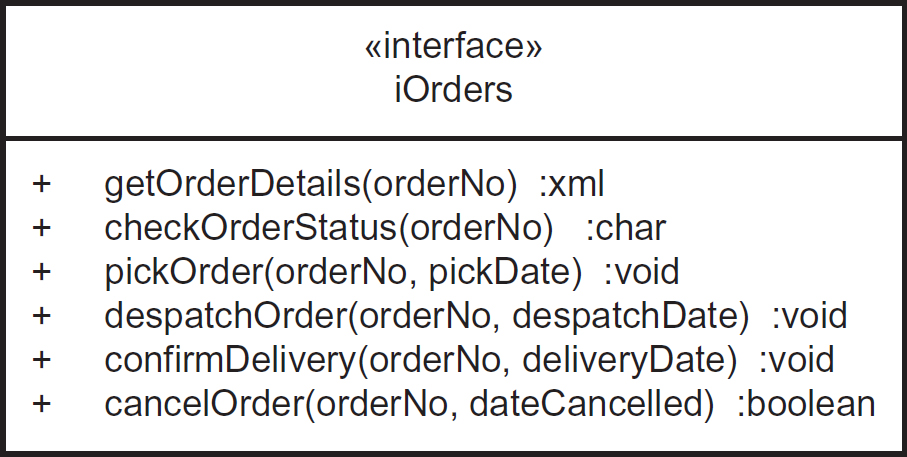

Figure 6.4 shows the definition of the iOrders interface using UML class diagram notation.

Figure 6.4 Definition of the Orders interface

The interface in Figure 6.4 shows the discrete services (such as getOrderDetails) that can be invoked by other components, along with a detailed definition of the message format that must be used to invoke each service and any return values provided by the OrderManagement component. If we consider the example of getOrderDetails, we can see that any component invoking that service must provide the order number to uniquely identify the order for which details are required and, in turn, the OrderManagement component will return an XML data structure containing the required details.
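The iOrders interface and its getOrderDetails service can be sketched in Python as follows. This is a minimal illustration under stated assumptions: only getOrderDetails is modelled (rendered as `get_order_details`), the XML element names and the sample order are hypothetical, and the in-memory dictionary stands in for whatever storage the real OrderManagement component uses. The Logistics consumer is given the interface rather than the concrete provider, mirroring the dependency arrows in Figure 6.3.

```python
import xml.etree.ElementTree as ET
from abc import ABC, abstractmethod


class IOrders(ABC):
    """Python rendering of the iOrders interface; only getOrderDetails is modelled."""

    @abstractmethod
    def get_order_details(self, order_number: str) -> str:
        """Given an order number, return the order's details as an XML document."""


class OrderManagement(IOrders):
    """Provider component: its internal storage is hidden behind IOrders."""

    def __init__(self) -> None:
        # Hypothetical sample data; a real component would use its own database.
        self._orders = {
            "SO-1001": {"customer": "C042", "product": "P17", "quantity": 3},
        }

    def get_order_details(self, order_number: str) -> str:
        order = self._orders.get(order_number)
        if order is None:
            raise KeyError(f"unknown order {order_number}")
        # Build <order number="..."><customer>...</customer>...</order>
        root = ET.Element("order", number=order_number)
        for name, value in order.items():
            ET.SubElement(root, name).text = str(value)
        return ET.tostring(root, encoding="unicode")


class Logistics:
    """Consumer component: depends on the IOrders interface, not on OrderManagement."""

    def __init__(self, orders: IOrders) -> None:
        self._orders = orders

    def quantity_to_ship(self, order_number: str) -> int:
        xml_text = self._orders.get_order_details(order_number)
        return int(ET.fromstring(xml_text).findtext("quantity"))
```

Accounts could consume the same interface in the same way. Neither consumer needs to know how OrderManagement stores its orders; each relies only on the message format that the interface defines.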

Components exist at different levels of granularity. Some are defined at a very low level and serve as a basis for extensive reuse across a wide range of systems development projects (for example, software houses establish an extensive internal inventory of documented and tested components from which developers assemble their applications), whilst others are complete, encapsulated applications in their own right that can be integrated with other application-level components to form a solution of much broader scope.

As granularity increases, it is more likely that components will be purchased externally and integrated into the application software. External purchase of components requires a clear understanding of the service that the component provides and the interface it requires. Small, low-level components may also be purchased and these find their way into many applications. Furthermore, some components are ‘open source’ and may be modified to suit local circumstances, whilst others are closed and the program code inside the component is not accessible or visible to the systems developer. In fact, entire COTS products could be treated as components within the context of a broader, multi-application solution.

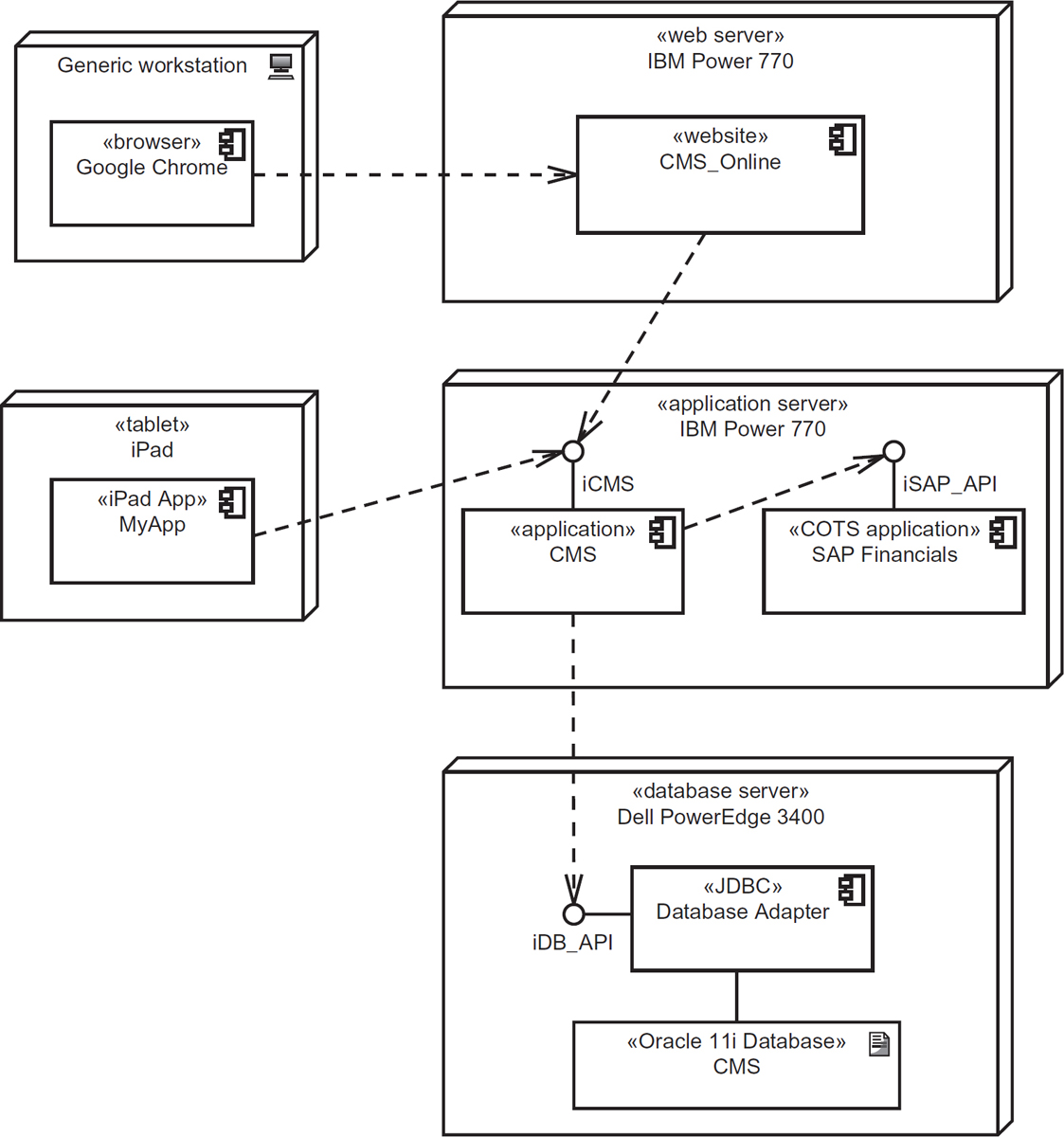

Figure 6.5 shows a UML deployment diagram that highlights how a series of discrete components can be assembled to form a solution.

Figure 6.5 UML deployment diagram showing a component-based solution

In Figure 6.5 the three-dimensional cubes represent Nodes in UML, which equate to devices within the solution infrastructure. UML stereotypes are used to further clarify the roles of each device (<<tablet>>, <<web server>>, <<application server>>, <<database server>>) and also the different types of component or technology used (<<browser>>, <<iPad App>>, <<website>>, <<application>>, <<COTS application>>, <<Oracle 11i Database>>, <<JDBC>>).

A more detailed explanation of component-based system design is provided in Chapter 8.

DEVELOPMENT METHODOLOGIES

A software (or system) development methodology (SDM) is a framework that is used to structure, plan, and control the process of developing an information system. The idea of software development methodology frameworks first emerged in the 1960s.

While some methodologies prescribe specific software development tools, such as Integrated Development Environments (IDEs) or Computer Aided Software Engineering (CASE) tools, the main idea behind the SDM is to pursue the development of information systems in a structured and methodical way from inception of the idea to delivery of the final system, within the context of the framework being applied.

The framework within which systems development takes place is determined by the systems development lifecycle (SDLC) model used. In Chapter 2, we introduced four basic models:

- Waterfall

- ‘V’ model

- Incremental

- Iterative

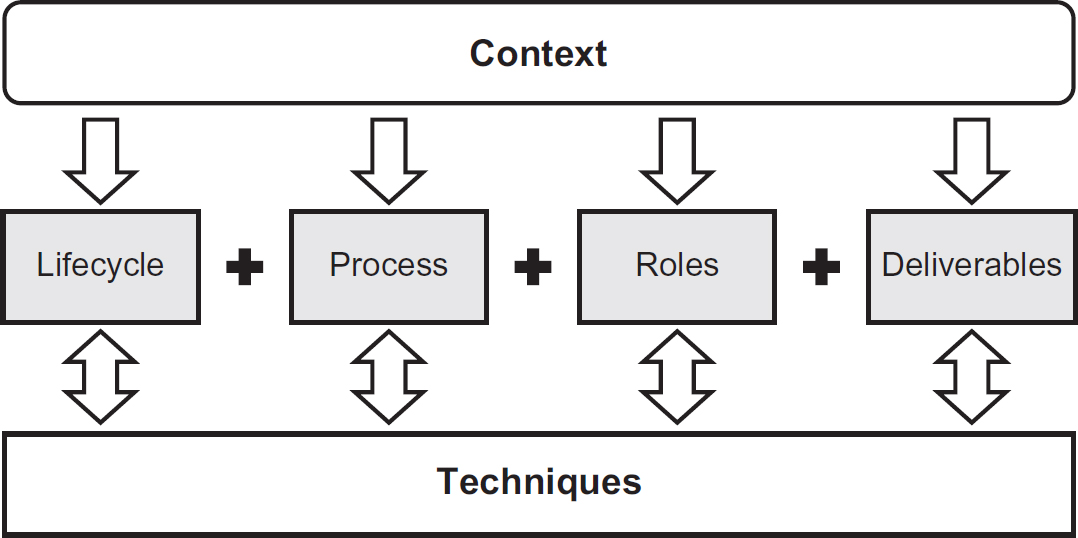

However, systems development projects also comprise a number of other elements, as shown in Figure 6.6.

Figure 6.6 Elements of a systems development methodology

Context

The approach taken to systems development has to be context sensitive, and the context in this case is the business and project environment within which the development takes place, which includes (amongst others):

- the business strategy and drivers for undertaking the development;

- the key stakeholders involved;

- the organisational culture;

- existing hardware and software constraints;

- architectural standards and principles;

- the legislative and regulatory framework that the organisation must operate within;

- resource and skills availability;

- time and budget constraints.

The context influences the other key elements: lifecycle, process, roles and deliverables.

Lifecycle

The lifecycle model provides a framework (in terms of a series of either linear or iterative stages) within which the activities are conducted in order to deliver the required outcome.

Process

The process provides a definition/description of the flow of work that must take place in terms of a series of activities, and who undertakes each activity. In this sense, processes provide a more detailed breakdown of what should happen during each stage of the lifecycle. For example, during the analysis stage of the Waterfall and ‘V’ model SDLCs, requirements engineering defines a process for eliciting, analysing and validating a set of good quality requirements.

Note: The term ‘system development process’ is sometimes used more loosely to refer to a methodology that encompasses all of the elements in Figure 6.6.

Roles

The roles define a set of responsibilities that need to be assigned to individuals or groups of individuals in order for systems development to be successfully completed. Generic roles related to systems development are discussed in Chapters 1 and 5 but some systems development methods define their own specific roles.

Deliverables

The deliverables from a systems development project comprise a series of artefacts that are produced during various activities that take place within systems development processes. Some artefacts are merely enablers of the process – such as a meeting report following a fact-finding interview – whilst others form the basis of project governance, whereby their approval and sign-off are required before progress can move on to the next stage – such as a requirements document signed-off by the project sponsor.

Techniques

A technique is essentially a way of completing a task; for instance, the MoSCoW prioritisation technique discussed in Chapter 5 provides a way of prioritising requirements. A technique can also be a systematic method of obtaining information; for instance, prototyping provides a way of obtaining feedback on the functionality and usability of a system design. The techniques deployed within systems development are therefore diverse.

Lifecycles, roles, deliverables and techniques are all covered elsewhere in this book, so we shall focus here on the process aspect of a systems development approach.

Systems development processes broadly fall into one of two categories: ‘defined’ or ‘empirical’. We shall now consider each of these in turn.

Defined processes

A defined process is one that is based upon the defined process control and management model. This model is extensively used in manufacturing industries; it has been adopted for use with software development, which is thus treated as a manufacturing discipline, where the product is software.

The premise behind a defined process is that each piece of work can be completely understood and that, given a well-defined set of inputs, the same outputs are generated every time. Management and control arise from the predictability of defined processes, and, since the processes are defined, they can be grouped together and expected to continue to operate predictably and repeatably. Hence, when applied to systems development, approaches based on defined processes work on the premise that it is possible and desirable to define a set of simple, repeatable development processes, with associated techniques, in order to ensure a predictable outcome from systems development projects. The approach (or methodology) revolves around a knowledge base of processes and techniques, and the dependencies between elements in the knowledge base form defined project templates, each tailored to meet the needs of specific types of project (such as online transaction processing, batch processing, web development, and so on). In theory, processes defined with sufficient accuracy, granularity and precision can ensure predictability of outcome.
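The idea of a knowledge base whose dependencies form project templates can be sketched in Python using the standard library's `graphlib.TopologicalSorter` (Python 3.9 or later). The process names, their dependencies and the two templates below are hypothetical. The sketch expands a template with every prerequisite process and then orders the work so that each process follows the processes it depends on. Ties are broken alphabetically, so the same template always yields the same plan, echoing the predictability that defined processes aim for.

```python
from graphlib import TopologicalSorter

# Hypothetical knowledge base: each process maps to the processes it depends on.
KNOWLEDGE_BASE: dict[str, set[str]] = {
    "elicit requirements": set(),
    "analyse requirements": {"elicit requirements"},
    "design database": {"analyse requirements"},
    "design batch schedule": {"analyse requirements"},
    "design web pages": {"analyse requirements"},
    "build": {"design database"},
    "test": {"build"},
}

# Hypothetical project templates, one per type of project.
TEMPLATES: dict[str, set[str]] = {
    "batch processing": {"design database", "design batch schedule", "build", "test"},
    "web development": {"design database", "design web pages", "build", "test"},
}


def plan(project_type: str) -> list[str]:
    """Expand a template with all prerequisite processes, then order by dependency."""
    selected: set[str] = set()
    pending = list(TEMPLATES[project_type])
    while pending:  # collect the transitive closure of dependencies
        process = pending.pop()
        if process not in selected:
            selected.add(process)
            pending.extend(KNOWLEDGE_BASE[process])

    sorter = TopologicalSorter({p: KNOWLEDGE_BASE[p] for p in selected})
    sorter.prepare()
    order: list[str] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sort ties so every run gives the same plan
        order.extend(ready)
        sorter.done(*ready)
    return order
```

Here `plan("batch processing")` pulls in the requirements processes automatically even though the template does not list them, which reflects the premise that a well-defined set of inputs should always produce the same output.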

A typical defined approach to systems development is explained succinctly by Ken Schwaber:

‘A project manager starts a project by selecting and customising the appropriate project template. The template consists of those processes appropriate for the work that is planned. He or she then estimates the work and assigns the work to team members. The resultant project plan shows all the work to be done, who is to do each piece of work, what the dependencies are, and the process instructions for converting the inputs to the outputs... Having a project plan like this gives the project manager a reassuring sense of being in control. He or she feels that they understand the work that is required to successfully complete the project. Everyone will do what the methodology and project plan says, and the project will be successfully completed.’

(Schwaber and Beedle 2001)
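The template-driven planning Schwaber describes can be sketched in a few lines. This is a minimal illustration, assuming that a project template is simply a set of named processes together with the processes each one depends on; the template contents, the team member names and the round-robin assignment rule are hypothetical. Python's standard `graphlib.TopologicalSorter` (Python 3.9 or later) orders the processes so that none is scheduled before its dependencies.

```python
from graphlib import TopologicalSorter
from itertools import cycle

# Hypothetical project template: each process maps to the set of processes it depends on.
OLTP_TEMPLATE = {
    "feasibility study": set(),
    "requirements analysis": {"feasibility study"},
    "logical design": {"requirements analysis"},
    "physical design": {"logical design"},
    "build": {"physical design"},
    "system test": {"build"},
}


def build_plan(template, team):
    """Order the template's processes by dependency and assign them to team members in turn.

    Returns a list of (process, assignee, dependencies) tuples.
    """
    order = list(TopologicalSorter(template).static_order())
    assignees = cycle(team)  # hypothetical round-robin assignment rule
    return [(process, next(assignees), sorted(template[process])) for process in order]


if __name__ == "__main__":
    for process, person, depends_on in build_plan(OLTP_TEMPLATE, ["Asha", "Ben"]):
        print(f"{process:<22} {person:<5} after: {', '.join(depends_on) or '-'}")
```

Given the same template and team, `build_plan` always returns the same plan, which is precisely the predictability that a defined process depends upon.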

Systems development approaches based upon defined processes are often criticised because they lead to increased levels of documentation and bureaucracy. In inexperienced or ill-informed hands, the use of such methods can lead to the adoption of a ‘cookbook’ approach, whereby the various steps and activities of the method are followed blindly without considering the reasons why they should be carried out, often with disastrous consequences.

A number of so-called defined (also referred to as procedural) systems development methodologies and frameworks have sprung up over the years and the more common of these are listed in Table 6.1, along with some of their defining features.

Table 6.1 Common defined (procedural) methodologies and frameworks

Empirical processes

The problem with applying defined processes to systems development is that defined processes assume that the product being built is always the same and, hence, that the process is predictable and repeatable. Systems development does not meet these criteria. In fact, it more closely resembles the research and development of new products, which is better suited to an empirical process. This explains the recent trend towards ‘Agile’ development, which is based upon an empirical process model.

One of the first major approaches to systems development to use a more empirical process was Rapid Application Development (RAD). The term was first used in the mid-1970s to describe a software development process devised by the New York Telephone Company’s Systems Development Center. The approach, which involved iterative development and the construction of prototypes, became more widely adopted following the publication of James Martin’s book of the same name in 1991.

More recently, the term ‘RAD’ has been used less and the alternative ‘Agile’ has gained currency. Agile development was established when 17 independent thinkers about software development met at The Lodge at Snowbird ski resort in the Wasatch mountains of Utah on 11–13 February 2001. The result of this gathering was the Manifesto for Agile Software Development, discussed in Chapter 2 but repeated here:

We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:

Individuals and interactions over processes and tools

Working software over comprehensive documentation

Customer collaboration over contract negotiation

Responding to change over following a plan

That is, while there is value in the items on the right, we value the items on the left more.

The group named themselves the ‘Agile Alliance’ and included representatives from Extreme Programming (Kent Beck), Scrum (Ken Schwaber), DSDM (Arie van Bennekum), Adaptive Software Development (Jim Highsmith), Crystal Clear (Alistair Cockburn), Feature-Driven Development, Pragmatic Programming (Andrew Hunt), and others sympathetic to the need for an alternative to documentation-driven, heavyweight software development processes.

In addition to the manifesto, the group formulated a set of 12 underlying principles, as follows:

- Our highest priority is to satisfy the customer through early and continuous delivery of valuable software.

- Welcome changing requirements, even late in development. Agile processes harness change for the customer’s competitive advantage.

- Deliver working software frequently, from a couple of weeks to a couple of months, with a preference to the shorter timescale.

- Business people and developers must work together daily throughout the project.

- Build projects around motivated individuals. Give them the environment and support they need, and trust them to get the job done.

- The most efficient and effective method of conveying information to and within a development team is face-to-face conversation.

- Working software is the primary measure of progress.

- Agile processes promote sustainable development. The sponsors, developers and users should be able to maintain a constant pace indefinitely.

- Continuous attention to technical excellence and good design enhances agility.

- Simplicity – the art of maximising the amount of work not done – is essential.

- The best architectures, requirements, and designs emerge from self-organising teams.

- At regular intervals, the team reflects on how to become more effective, then tunes and adjusts its behaviour accordingly.

Agile systems development is an evolutionary approach based around an iterative SDLC with rapid, incremental delivery (whereby each release of production-ready software builds upon the functionality delivered in the previous release).
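Iterative, incremental delivery can be illustrated with a small simulation. The sketch below assumes a prioritised backlog of features with size estimates and a fixed capacity per iteration; the feature names, sizes and capacity are hypothetical, and a real Agile team would also reprioritise the backlog between iterations as requirements evolve. Each release is a snapshot containing everything in the previous release plus the newly completed features.

```python
def plan_releases(backlog, capacity_per_iteration):
    """Deliver a prioritised backlog in fixed-capacity iterations.

    backlog: list of (feature, size) pairs, highest priority first.
    Each release contains everything from the previous release plus the
    features completed in the latest iteration.
    """
    releases = []
    delivered = []
    remaining = list(backlog)
    while remaining:
        used = 0
        # Take features strictly in priority order until the next one will not fit.
        while remaining and used + remaining[0][1] <= capacity_per_iteration:
            feature, size = remaining.pop(0)
            delivered.append(feature)
            used += size
        if used == 0:
            raise ValueError(f"feature {remaining[0][0]!r} is larger than one iteration")
        releases.append(list(delivered))  # snapshot that builds on the previous release
    return releases


backlog = [("log in", 3), ("browse catalogue", 5), ("place order", 5), ("track delivery", 2)]
for number, release in enumerate(plan_releases(backlog, capacity_per_iteration=8), start=1):
    print(f"Release {number}: {release}")
```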

Agile approaches also demonstrate the following characteristics:

Exploratory

Requirements evolve in parallel with the solution.

Collaborative

System users, analysts, designers, developers and testers work together to define, build and review evolutionary prototypes, which evolve into production-ready software.

Focus on working software

The approach is based on an understanding that documentation is important when it adds value to the quality of the software, but more emphasis should be given to the development and refinement of working software, which is more accessible to the end user.

Flexible

The project team focuses on the business need at all times, which may change as the project progresses.

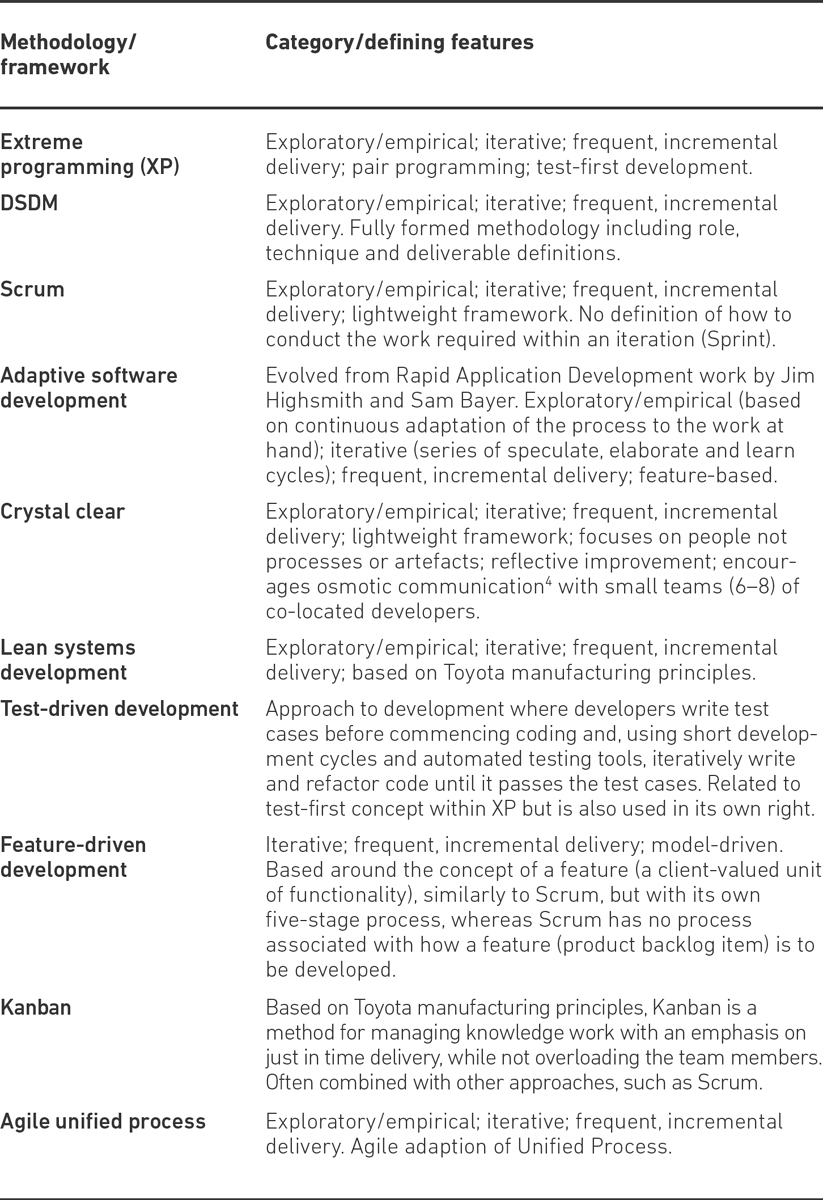

Several discrete Agile development methodologies and frameworks have evolved in recent years (although some were in existence before the Agile Manifesto was devised, and have since been absorbed into the general category of Agile approaches). The most common are listed and summarised in Table 6.2.

Table 6.2 Common Agile methodologies and frameworks

SOFTWARE ENGINEERING PARADIGMS

A number of different paradigms (world views underlying the theories and methodology of software engineering and programming) have evolved since the early 1960s, each proposing a radically different approach to building software. We shall focus on the three most prevalent paradigms that underpin modern software development.

Structured programming

Structured programming (SP) emerged in the 1960s from the work of Boehm and Jacopini and from Edsger Dijkstra’s famous 1968 letter, ‘Go to statement considered harmful’. Boehm and Jacopini’s ‘structured program theorem’, which shows that any computable function can be expressed using only sequence, selection and iteration, provided the theoretical foundation, and languages such as ALGOL supplied the control structures needed to implement the concept in practice.

SP aims to improve the clarity and quality of a computer program, whilst simultaneously reducing the time needed to develop it. This is achieved by using three basic programming constructs: sequence (block structures and subroutines), selection (conditional execution of statements using keywords such as if..then..else..endif, switch or case) and iteration (repetition of a group of statements using keywords such as while, repeat, for or do..until). These replace simple tests and jumps such as the goto statement, which can lead to ‘spaghetti code’ that is difficult both to follow and to maintain. Additionally, recommended best practice is to ensure that each construct has only one entry point and one exit point.
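The three constructs can be seen in the short function below. It is a minimal sketch in Python (which has no goto statement, so only the structured form can be shown), and the order-classification task is a hypothetical example. The function has a single entry point and a single exit point.

```python
def classify_orders(order_values, threshold):
    """Count orders above, and at or below, a threshold.

    Uses only sequence, selection and iteration, with one entry and one exit (a single return).
    """
    # Sequence: statements executed one after another.
    large = 0
    small = 0
    # Iteration: repeat the loop body for each order value.
    for value in order_values:
        # Selection: exactly one of two branches is executed.
        if value > threshold:
            large += 1
        else:
            small += 1
    return large, small


print(classify_orders([50, 150, 200, 99], threshold=100))  # (2, 2)
```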

Michael A. Jackson devised a variation of the original SP, called Jackson Structured Programming (JSP), which became widely adopted by mainframe development teams for the design of COBOL programs, although it is by no means limited to that environment. JSP uses structure charts with specific notation for sequence, selection and iteration constructs, and one of its key rules is that different types of construct cannot be mixed at the same level.

The basic principles behind SP are still applied during systems design in the form of modular design and its associated principles of coupling and cohesion, which are covered in more detail in Chapter 8.

Object-oriented development

Object-oriented development (OOD) is a programming paradigm based on the concept of objects, which collaborate directly with each other in order to realise the required system functionality.

In software terms, an object is a discrete package of functions and procedures (operations), all relating to a particular real-world concept such as an order, or a physical entity such as a car. In addition to a set of operations that they can perform (behaviour), objects also have a set of properties (data items or attributes) associated with them.

Every object is an instance of a class, which defines the attributes and operations that a particular type of object will possess. Classes are often modelled using a class diagram, which shows the class definitions and the relationships between them.
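As a minimal sketch of a class and its objects, the hypothetical `Order` class below (written in Python using the standard `dataclasses` module) declares attributes such as `order_number` and operations such as `total`. The class is the definition; each call to `Order(...)` creates a separate object with its own attribute values.

```python
from dataclasses import dataclass, field


@dataclass
class OrderLine:
    product_code: str
    quantity: int
    unit_price: float


@dataclass
class Order:
    # Attributes (properties) that every Order object possesses.
    order_number: str
    customer_name: str
    lines: list[OrderLine] = field(default_factory=list)

    # Operations (behaviour) that every Order object can perform.
    def add_line(self, product_code, quantity, unit_price):
        self.lines.append(OrderLine(product_code, quantity, unit_price))

    def total(self):
        return sum(line.quantity * line.unit_price for line in self.lines)


order = Order("A001", "J. Smith")   # one object (instance) of the Order class
order.add_line("P1", 2, 5.0)
order.add_line("P2", 1, 3.5)
print(order.total())                # 13.5
```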

In OOD, objects represent business entities (such as customer, order and product) as well as artefacts that make up the built system (such as windows, forms, controls and reports). When designing and writing program code, there is less focus on discrete programs performing functions defined in a functional specification; instead, classes are built individually, and the operations of some classes invoke the operations of other classes that provide services to them. Objects can only access other objects by sending messages to them, which in turn invoke one of their operations. This principle, known as encapsulation, is explained further in Chapter 8. Isolating objects in this way makes software easier to manage, more robust and more reusable.
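The following sketch illustrates objects collaborating through messages. The `StockItem` and `Order` classes are hypothetical: the order never alters a stock level directly, but sends the stock item a `reserve` message and lets it decide. Note that Python marks internal attributes such as `_quantity_in_stock` by convention (a leading underscore) rather than enforcing privacy, whereas languages such as Java use access modifiers for this.

```python
class StockItem:
    """Holds its stock level internally; other objects must send it messages."""

    def __init__(self, product_code, quantity_in_stock):
        self.product_code = product_code
        self._quantity_in_stock = quantity_in_stock  # internal state by convention

    def reserve(self, quantity):
        """Public operation: reserve stock only if enough is available."""
        if quantity <= self._quantity_in_stock:
            self._quantity_in_stock -= quantity
            return True
        return False

    def available(self):
        return self._quantity_in_stock


class Order:
    def __init__(self, order_number):
        self.order_number = order_number
        self._lines = []

    def add_item(self, stock_item, quantity):
        # Send the 'reserve' message; the StockItem decides whether it can comply.
        if stock_item.reserve(quantity):
            self._lines.append((stock_item.product_code, quantity))
            return True
        return False

    def line_count(self):
        return len(self._lines)


widget = StockItem("W1", 5)
order = Order("A001")
print(order.add_item(widget, 3), order.add_item(widget, 3), widget.available())  # True False 2
```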

There is a correlation between OOD and component-based development (discussed earlier). Components interact with each other via interfaces, which expose services to other components and, similarly, objects interact with each other by sending messages that invoke their publicly visible operations.

Although it is possible to build systems in an object-oriented way using non-OO programming languages (such as COBOL, C, ALGOL, Pascal, RPG and PL/I), OO programming languages (such as C++, Java, Perl, PHP, Visual Basic.NET, Visual C#.NET and Smalltalk) provide built-in support for the OO principles of encapsulation, abstraction, inheritance and polymorphism, which would otherwise have to be coded explicitly by the developer.
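Inheritance, abstraction and polymorphism can be sketched as follows, using Python's standard `abc` module. The vehicle classes and hire rates are hypothetical: `Vehicle` defines the abstract operation `daily_hire_rate`, each subclass supplies its own version, and the inherited `hire_cost` operation works for any of them.

```python
from abc import ABC, abstractmethod


class Vehicle(ABC):
    """Abstraction: defines what every vehicle can do, not how it does it."""

    def __init__(self, registration):
        self.registration = registration

    @abstractmethod
    def daily_hire_rate(self):
        ...

    def hire_cost(self, days):
        # Inherited by every subclass; calls whichever daily_hire_rate the object's class defines.
        return self.daily_hire_rate() * days


class Car(Vehicle):
    def daily_hire_rate(self):
        return 40


class Van(Vehicle):
    def daily_hire_rate(self):
        return 65


fleet = [Car("AB12 CDE"), Van("XY34 ZZZ")]
print([vehicle.hire_cost(3) for vehicle in fleet])  # [120, 195]
```

The same `hire_cost(3)` message produces different results depending on the class of the receiving object, which is polymorphism in action.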

Object-oriented systems design is covered in more detail in Chapter 8.

Service-oriented development

The principle behind service-oriented approaches to systems development is similar to that of OOD, in that services collaborate with each other to realise the required functionality. However, service-oriented development (SOD) does not rely on the use of objects in the same way that OOD does. In fact, a service is a more abstract concept than an object, in that it can be implemented using any technology. Consequently, SOD is said to be ‘technology agnostic’. In practice, however, the physical implementation of SOD relies on certain technologies, including XML web services, HTTP (Hypertext Transfer Protocol) and SOAP (Simple Object Access Protocol), amongst others. Detailed coverage of these technologies is outside the scope of this book.

The concept behind SOD is very simple: required services are matched to available services. Required services are identified through the decomposition of high-level business processes and use cases; available services are discovered in some form of services catalogue and invoked across a network, via a services directory, by passing messages to them.
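A minimal sketch of matching required services to available ones and invoking them by message follows. For illustration it assumes an in-process dictionary standing in for the services catalogue and JSON strings standing in for messages; a real SOD implementation would discover services through a directory and invoke them across a network. The service names and handlers are hypothetical.

```python
import json


# Hypothetical service handlers: each accepts a JSON request message and returns a JSON response.
def check_credit(message):
    request = json.loads(message)
    return json.dumps({"customer_id": request["customer_id"], "approved": request["amount"] <= 500})


def quote_delivery(message):
    request = json.loads(message)
    return json.dumps({"postcode": request["postcode"], "charge": 4.99})


# Hypothetical services catalogue: service name -> handler.
SERVICES_CATALOGUE = {
    "credit-check": check_credit,
    "delivery-quote": quote_delivery,
}


def match_services(required, catalogue):
    """Split the required services into those the catalogue provides and those it does not."""
    available = [name for name in required if name in catalogue]
    missing = [name for name in required if name not in catalogue]
    return available, missing


def invoke(service_name, payload, catalogue):
    """Look up a service and invoke it by passing a message (here, a JSON string)."""
    handler = catalogue[service_name]
    return json.loads(handler(json.dumps(payload)))


required = ["credit-check", "stock-check", "delivery-quote"]
print(match_services(required, SERVICES_CATALOGUE))
print(invoke("credit-check", {"customer_id": "C1", "amount": 200}, SERVICES_CATALOGUE))
```

Here the required service `stock-check` is reported as missing, signalling a gap that would have to be filled by a new or bought-in service.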

Take the example of an ecommerce website, where a required service may be to take payment from the customer in order to confirm an order. The invoker of the service (the website) does not need to know how the service provider (in this example, a payment processing service such as PayPal) provides the service; it needs to know only the nature of the service required and the details of the message formats. Consequently, the consumer of the service needs no knowledge of the technologies used to implement the service, or even of where the service provider resides, whether geographically or within the IT infrastructure.
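The payment example can be sketched as follows. The two payment providers and the message fields are hypothetical (they do not reflect PayPal's actual interface), and JSON is used in place of SOAP/XML for brevity. The website's `confirm_order` function depends only on the agreed message format, expressed here as a `typing.Protocol`, so either provider can be substituted without changing the consumer.

```python
import json
from typing import Protocol


class PaymentService(Protocol):
    """All the website knows: send a request message, receive a response message."""

    def handle(self, request_message: str) -> str: ...


class CardGatewayProvider:
    # Hypothetical provider; its internals are invisible to the consumer.
    def handle(self, request_message: str) -> str:
        request = json.loads(request_message)
        status = "paid" if request["currency"] == "GBP" else "declined"
        return json.dumps({"order_id": request["order_id"], "status": status})


class WalletProvider:
    # A second hypothetical provider, implemented differently but using the same message format.
    def handle(self, request_message: str) -> str:
        request = json.loads(request_message)
        status = "paid" if request["amount_pence"] > 0 else "rejected"
        return json.dumps({"order_id": request["order_id"], "status": status})


def confirm_order(order_id: str, amount_pence: int, payment_service: PaymentService) -> bool:
    """Website side: build the request message, send it and read the status from the reply."""
    request = json.dumps({"order_id": order_id, "amount_pence": amount_pence, "currency": "GBP"})
    response = json.loads(payment_service.handle(request))
    return response["status"] == "paid"


for provider in (CardGatewayProvider(), WalletProvider()):
    print(type(provider).__name__, confirm_order("A001", 2500, provider))
```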

THE INFLUENCE OF TECHNOLOGICAL ADVANCES

Advances in technology provide opportunities for software developers to deliver significant business benefits. Some of these benefits take the form of cheaper and quicker solution development, whilst others are more far-reaching and can revolutionise the provision of IT solutions across the entire IT industry. Two such technological advances are considered here.

Cloud-based

A useful definition of cloud computing is provided courtesy of the National Institute of Standards and Technology:

‘a model for enabling convenient, on-demand network access to a shared pool of configurable computing resources [...] that can be rapidly provisioned and released with minimal management effort or service provider interaction.’

Cloud computing consists of three separate types of service:

- Software as a service (SaaS) – the provision of applications in the cloud.

- Platform as a service (PaaS) – the provision of services that enable the customer to deploy applications in the cloud using tools provided by the supplier.

- Infrastructure as a service (IaaS) – the provision of computing power, storage and network capacity that enables the customer to run software (including operating systems and applications) in the cloud.

These three services together are referred to as the cloud computing stack. In each case, the services are hosted remotely and accessed over a network (usually the internet), often through a web browser, rather than being installed locally on the customer’s own computers.

On-demand software

The cloud-based approach to application delivery gives the customer flexibility: applications can be accessed from any computer connected to the internet (whether a desktop PC or a mobile device), and the customer has access to a multitude of IT services rather than being limited to locally installed software and dependent on the storage capacity of their own computer network. For organisations large and small, this offers a scalable IT resource without the complexity of managing the IT infrastructure and the application upgrades typical of more traditional applications.

On-demand software is effectively a subset of SOD. The premise is that software is licensed as a service, on demand: the service consumer pays for the service as and when it is used, rather than through a more traditional licensing arrangement.

SaaS vendors (also known as Application Service Providers (ASPs)) typically host and manage the application themselves, and their customers access the application remotely via the web. This approach to the provision of software applications provides a number of benefits:

- The solution is usually scalable as the number of users can grow with the demand for the service, without the need for the consumer to invest in additional hardware or software infrastructure.

- Typically lower licensing costs, as the user pays only for the service they use, rather than buying separate licences for all the devices that may need to access the application.

- Low maintenance costs, as activities such as feature updates are managed centrally rather than at each customer’s site. This means that all customers gain access to the latest software version without the need to upgrade individual client workstations by downloading patches and installing upgrades.

- Customers access applications remotely via the web, independent of the hardware location and, hence, are not restricted to using the application from a particular workstation or geographical location.
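Whether on-demand licensing is cheaper depends on usage, which is why the cost benefit above is described as typical. The sketch below compares the two models under deliberately simplified assumptions: a one-off price per device versus a monthly price per active user, ignoring maintenance, hardware and support costs. All figures used with it are hypothetical.

```python
def per_device_licence_cost(devices, price_per_device):
    """Traditional model: buy a licence for every device that may access the application."""
    return devices * price_per_device


def on_demand_cost(active_users, months_used, price_per_user_month):
    """On-demand model: pay only for the users and months in which the service is used."""
    return active_users * months_used * price_per_user_month


def break_even_months(devices, price_per_device, active_users, price_per_user_month):
    """Months of use after which on-demand costs more than per-device licensing."""
    return per_device_licence_cost(devices, price_per_device) / (active_users * price_per_user_month)


# Hypothetical figures: 200 devices at 150 each versus 60 active users at 10 per user per month.
print(per_device_licence_cost(200, 150))    # 30000
print(on_demand_cost(60, 12, 10))           # 7200
print(break_even_months(200, 150, 60, 10))  # 50.0
```

With these illustrative figures the on-demand option remains cheaper for the first 50 months of use; a larger user base or a longer period of use would shift the balance.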

Other benefits are similar to those associated with COTS solutions, covered earlier in this chapter.

Perhaps one of the most widespread implementations of on-demand software via SaaS is Microsoft’s Office 365 offering, which has revolutionised the way organisations deploy and use generic office and team collaboration applications.

Model Driven Architecture (MDA)

Model Driven Architecture refers to an approach to the production of executable software directly from a set of design models, without the intervention of a human programmer.

Producing program code directly from a set of design models has long been an objective for systems development tools vendors but, until recently, the supporting technology was either not up to the job or was prohibitively expensive. Furthermore, a lack of industry-wide standards led to proprietary tools that could not be interchanged and, hence, represented a huge investment and major risk for development teams.

With the adoption of UML as an open modelling standard by the Object Management Group (OMG) in 1997, tools vendors could focus on building MDA tools around UML. In fact, since the release of version 2.0 of UML, the OMG has focused its efforts on enhancements aimed at the use of UML with MDA tools, to enable much more precise model definitions to be produced.

Apart from the obvious benefits of reduced cost and increased speed of development, a major advantage of this approach, from the perspective of the development team, is that the model and the physical system it represents are kept synchronised. This is particularly significant when it comes to system maintenance, as a common problem encountered at that stage is out-of-date documentation. An additional benefit is that the MDA tools can potentially be used by systems analysts, who may already be producing the required models using a similar tool.

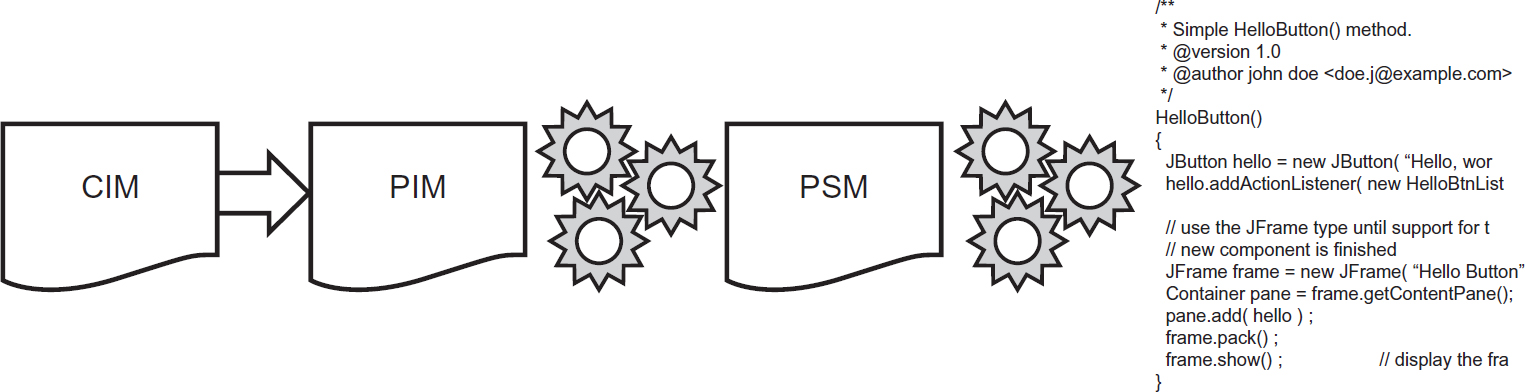

The way MDA works is summarised in Figure 6.7 below.

Figure 6.7 The MDA process

With MDA, software is produced by a specialist software tool through a series of automated transformations of UML models. Hence, in practice, MDA tools are also UML modelling tools.

The starting point is an abstract computation-independent model (CIM). This is essentially a conceptual model of a business domain that captures the key requirements of a new computer system. The CIM is then used as a basis for developing a platform-independent model (PIM). The PIM is based on the main analysis models expressed using UML notation and represents the business functionality and behaviour without the constraints of technology. The PIM is then converted into a platform-specific model (PSM), which takes into account the software and hardware environment in which the solution is to be implemented. Finally, the PSM is used to generate the actual executable program code.
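The PIM-to-PSM-to-code chain can be illustrated with two small transformations. This is a sketch only: real MDA tools work on UML models rather than Python dictionaries, and the model, type names and mapping rules below are hypothetical. The example starts from a PIM, maps its platform-independent types to a relational database platform to form a PSM, and then generates SQL table definitions as the ‘code’.

```python
# Hypothetical PIM: one class described with platform-independent attribute types.
PIM = {
    "Customer": {"customer_id": "Integer", "name": "String", "credit_limit": "Decimal"},
}

# Hypothetical mapping rules for one target platform (a relational database).
SQL_TYPE_MAP = {"Integer": "INTEGER", "String": "VARCHAR(100)", "Decimal": "DECIMAL(10,2)"}


def pim_to_psm(pim, type_map):
    """Transformation 1: replace platform-independent types with platform-specific ones."""
    return {cls: {attr: type_map[t] for attr, t in attrs.items()} for cls, attrs in pim.items()}


def psm_to_code(psm):
    """Transformation 2: generate code (here, SQL table definitions) from the PSM."""
    statements = []
    for cls, attrs in psm.items():
        columns = ",\n".join(f"    {attr} {sql_type}" for attr, sql_type in attrs.items())
        statements.append(f"CREATE TABLE {cls} (\n{columns}\n);")
    return "\n\n".join(statements)


print(psm_to_code(pim_to_psm(PIM, SQL_TYPE_MAP)))
```

Targeting a different platform would mean supplying a different type map and code generator, while the PIM itself stays unchanged.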

REFERENCES

Boehm, C. and Jacopini, G. (1966) ‘Flow diagrams, Turing machines and languages with only two formation rules’. Comm. ACM, 9, 5, 366–371.

Holt, A. L. (2013) Governance of IT: an executive guide to ISO/IEC 38500. BCS, Swindon.

IEEE (1990–2002) Standard glossary of software engineering terminology, IEEE std 610.12-1990. IEEE Computer Society, Washington, USA.

ISO/IEC 25051:2014 (2014) Software engineering – Systems and software Quality Requirements and Evaluation (SQuaRE) – Requirements for quality of Ready to Use Software Product (RUSP) and instructions for testing. ISO/IEC, Geneva.

Schwaber, K. and Beedle, M. (2001) Agile software development with Scrum. Pearson Education, London.

The Agile Alliance (2001) ‘Agile Manifesto’. The Agile Alliance. www.Agilemanifesto.org (accessed on 4 June 2014).

FURTHER READING

Anderson, D. J. (2010) Kanban. Blue Hole Press, Seattle, WA.

Arlow, J. and Neustadt, I. (2004) Enterprise patterns and MDA: building better software with archetype patterns and UML. Pearson Education, Boston, MA.

Arlow, J. and Neustadt, I. (2005) UML 2 and the unified process: practical object-oriented analysis and design. Addison-Wesley, Boston, MA.

Avison, D. and Fitzgerald, G. (2006) Information systems development: methodologies, techniques and tools (4th edition). McGraw-Hill, Maidenhead.

BCS (2012) Cloud computing: moving IT out of the office. BCS, Swindon.

Beck, K. (2002) Test driven development. Addison-Wesley, Boston, MA.

Beck, K. and Andres, C. (2004) Extreme programming explained: embrace change (2nd edition). Addison-Wesley, Boston, MA.

Burgess, R. S. (1990) Structured program design using Jackson structured programming. Nelson Thornes Ltd, Cheltenham.

Cadle, J. and Yeates, D. (2007) Project management for information systems (5th edition). Prentice Hall, Harlow.

Checkland, P. and Scholes, J. (1999) Soft systems methodology: a 30-year retrospection. John Wiley and Sons, New York.

Cockburn, A. (2004) Crystal clear: a human-powered methodology for small teams. Addison-Wesley, Boston, MA.

DSDM (2012) The DSDM Agile project framework. DSDM Consortium, Ashford, Kent.

DeMarco, T. (1979) Structured analysis and system specification. Prentice-Hall, Harlow.

Erl, T. (2005) Service-oriented architecture: concepts, technology, and design. Pearson, Boston, MA.

Goodland, M. and Slater, C. (1995) SSADM Version 4. McGraw-Hill, Maidenhead.

Highsmith, J. A. III (2000) Adaptive software development: a collaborative approach to managing complex systems. Dorset House Publishing, New York.

Hollander, N. (2000) A guide to software package evaluation and selection: the R2ISC method. Amacom, USA.

Hughes, B. and Cotterell, M. (2009) Software project management (5th edition). McGraw-Hill, Maidenhead.

Jansen, W. and Grance, T. (2011) ‘Guidelines on security and privacy in public cloud computing’. http://www.nist.gov/manuscript-publication-search.cfm?pub_id=909494 (accessed 15 June 2014).

Jordan, J. M. (2012) Information, technology, and innovation: resources for growth in a connected world. John Wiley and Sons, New York.

Linger, R. C. et al. (1979) Structured programming: theory and practice. Addison Wesley Longman Publishing Co., Boston, MA.

Martin, J. (1991) Rapid application development. MacMillan, New York.

Ogunnaike, B. A. and Ray, W. H. (1995) Process dynamics, modeling, and control (topics in chemical engineering). Oxford University Press, Oxford.

Palmer, S. R. and Felsing, J. M. (2002) A practical guide to feature-driven development. Prentice-Hall, Harlow.

Poppendieck, M. and Poppendieck, T. (2003) Lean software development: an Agile toolkit. Addison-Wesley, Boston, MA.

Skidmore, S. and Eva, M. (2003) Introducing systems development. Palgrave Macmillan, Basingstoke.

Yourdon, E. and Constantine, L. L. (1979) Structured design: fundamentals of a discipline of computer program and systems design. Prentice-Hall, Harlow.