4. Lab

Can You Really Study Design in the Laboratory?

Can you study design by bringing it into a laboratory in which it can be subjected to controlled experiments? If the goal is to learn about causality and even produce formulas for understanding design, this is viable, as long as researchers keep in mind methodological issues involved in generalizing from laboratory studies to the real world. In experimental methodology, concepts and prototypes become physical hypotheses that are used as stimuli in experiments. Our example of a successful constructive design research program with an experimental approach comes from Eindhoven, the Netherlands. This research program has created many frameworks for understanding and designing interactive products and systems. This work has built on J.J. Gibson’s ecological psychology for more than ten years, but has recently turned to phenomenology.

The sociologist Morris Zelditch, Jr., once published a paper called “Can You Really Study the Army in the Laboratory?”1 Under this provocative title, he wrote about the limitations of studying large institutions in a laboratory. Zelditch’s answer was yes, if the study is done with care. This chapter shows that this is true in design as well. 2 It is impossible to study a phenomenon like design in the laboratory in its entirety; design has many faces, only some of which are appropriate for laboratory studies. The trick, however, is to see which ones are. 3

2. For example, see Stanley Milgram’s (1974) famous studies of obedience, which illustrated how easy it is to make people act against their will and ethics simply by giving them orders. Milgram’s studies have been criticized for many problems, but his experiments provide a clear illustration of how one can take a key element of a place like the army (obedience to authority) and study it in isolation in a laboratory.

3. Thanks to Pieter Jan Stappers for some of the formulations in this paragraph, as well as for pointing out the relevance of Milgram’s work in this context.

The historical foundations of this methodology are in the natural sciences, but it usually comes to design through psychology. The aim is to identify relationships designers might find interesting; for example, how the limits of human cognitive processing capabilities affect error rates in using tablet computers. The design justification for this methodology is straightforward: if such relationships were found, they could be turned into mathematical formulas that would provide a solid ground for design. 4

4. See Overbeeke et al. (2006, pp. 63–64).

This chapter is about the logic of laboratory studies. 5 Actual research tends to be impure in terms of logic; in particular, early stage user studies aiming at inspiration tend to be done with probes and contextual inquiries. They are qualitative and inspiration-oriented and are typically combined with laboratory-style studies. For example, Stephan Wensveen used cultural probes for inspiration in the early stages of his research. 6 Experimental work typically happens in concept testing and selection and in the evaluation phase of the prototypes. Although the ethos of this tradition comes from experimental psychology, researchers borrow from other ways of doing to complement it.

5. Lee (2001). There are many extensions of this methodology, like quasi-experimental studies and living labs, and many other methodologies emulate this model, including surveys based on questionnaires. For quasi-experiments, see Cook and Campbell (1979) and for living labs, see De Ruyter and Aarts (2010). Neither methodology has caught on. Quasi-experiments try to vary things like political programs, but as they take place in the open, not in a lab, control is barely more than a metaphor, and this needs to be taken into account. The problem with living labs like smart homes is that despite much of the rhetoric, they seldom combine the best of experimental laboratory work and working in real context, but tend to remain technological showcases or technical proofs of concept (for a thorough study of smart homes, see Harper, 2003). An excellent introduction to survey methodology is De Vaus (2002).

4.1. Rich Interaction: Building a Tangible Camera

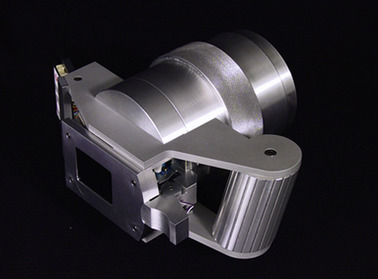

Our example is from Technische Universiteit Eindhoven, where Joep Frens designed a camera with a rich interaction user interface and compared it to conventional cameras. The standard interaction approach in industry is based on a menu on a screen, which can be navigated with buttons. Frens aimed at creating an alternative to this standard approach.

While conventional digital cameras typically have controls based on buttons and menus on screen, rich interaction cameras had tangible controls. For example, with a rich interaction camera, the photographer could take a picture by pushing a trigger and save it by pushing the screen toward the memory card. To delete it, he had to push the screen back to the lens. Frens designed these unconventional forms, interactions, and functions so that the photographer could read the possibilities for action and function from the form (Figure 4.1).

Building on ecological psychology and literature on tangible interaction, Frens created a series of hypotheses for each camera variation to be able to compare user experience. Frens’ main hypothesis was that a rich interaction camera is more intuitive to use than a conventional camera. Another hypothesis was that people think it is more beautiful. The cameras were stimuli in his study; measures for things like use and beauty came from Marc Hassenzahl, a German design psychologist. 7

Frens’ design process was driven by a wish to find alternatives for the prevalent industrial interaction paradigm for cameras. His inspiration came from his knowledge of trends in interaction design and from his background research, not from user studies. Research questions, hypotheses, and the rich interaction framework came after the first designs, and they were based on the insights gained during the design process (Figure 4.2 and Figure 4.3).

Figure 4.2 Joep Frens’s approach to constructive design research. Building a rich interaction camera through mock-ups and prototypes. Top two left: details from a service scenario with a simple mock-up. Top three right: cardboard mock-ups from one camera variation. Below, clockwise from bottom left: fitting electronics into the cask, hacking existing technology; building a case, and final prototype of one camera. 8 (Pictures by Joep Frens.)

8. Frens (2006a) and Frens (2006b).

Frens built several camera prototypes out of cardboard and tested these with students. Having a good idea of how to build a rich interaction camera, he built the body from aluminum. He also built several control modules that could be fitted to the body. The first module was with conventional controls; the second with light controls; the third with mixed controls; and the fourth with rich controls, all within the same form language. These designs formed a scale. At one end was an interface using conventional menus and buttons. At the other end was a radically reworked camera with tangible controls only.

He went on to test these cameras with 24 students of architecture in a laboratory setting. Each student received instructions, viewed the camera, and took photographs with it. 9 This study was repeated four times, once for each camera variation. 10 User experience was evaluated with a questionnaire. Afterward, the participants compared the cameras and completed a closing questionnaire.

9. Technically speaking, these were not cameras but a body containing different interaction modules.

10. The order in which the cameras were given to the participants was varied to make sure that the order in which they were shown did not cause error.
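Varying presentation order in this way is usually called counterbalancing. The following is a minimal sketch of one way to do it, not a reconstruction of Frens’ actual procedure, which the text describes only as “varied.” The condition labels are taken from the four control modules described above; the function name `counterbalanced_orders` and the choice of full counterbalancing (every possible order used once) are assumptions made for illustration. With four conditions there are 4! = 24 possible orders, which happens to match the 24 participants.

```python
from collections import Counter
from itertools import permutations

# Labels follow the four control modules described in the text.
CAMERAS = ["conventional", "light", "mixed", "rich"]


def counterbalanced_orders(conditions, n_participants):
    """Give each participant one presentation order, cycling through all permutations."""
    all_orders = list(permutations(conditions))  # 24 orders for four conditions
    return [all_orders[i % len(all_orders)] for i in range(n_participants)]


orders = counterbalanced_orders(CAMERAS, 24)

# Balance check: count how often each camera appears in each serial position.
position_counts = [
    Counter(order[pos] for order in orders) for pos in range(len(CAMERAS))
]
for pos, counts in enumerate(position_counts, start=1):
    print(f"position {pos}: {dict(counts)}")
```

When the number of participants is a multiple of the number of possible orders, every camera appears equally often in every position, so learning or fatigue effects tied to position are spread evenly over the conditions.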

From a cognitive psychology perspective one would expect that the rich interaction camera would do worse than a traditional one, as it breaks the user interface conventions of cameras. However, it did not, and it was appreciated by many of the participants. The rich interaction camera did not fare better than other cameras in aesthetic and practical terms. Still, Frens was able to say that his design was successful. At a more fine-grained level, the results were also positive. For example, saving images was found to be pleasing with the rich interaction camera, which participants also found more beautiful than other cameras.

4.2. Laboratory as a Site of Knowledge

Studying things in a laboratory means that something is taken out from its natural environment and brought into a controlled area where it can be subjected to experimentation. Almost anything can be studied in the laboratory: armies, design, chemical reactions, rich interaction, and so forth.

The trouble with studying a phenomenon in the real world is that usually many things shape it. This makes it difficult to find what causes something one sees; there are typically several possible explanations, and it is impossible to rule any of them out with a high degree of certainty. Research becomes an exercise in “what about if …”

Studying a phenomenon in a laboratory helps with this problem. The laboratory gives the researchers an opportunity to focus on one thing at a time. Most typically, this “thing” is a relationship, such as the relationship of rich interaction and user experience in Joep Frens’ study. The laboratory also helps researchers study alternative explanations and competing hypotheses; doing this is far more difficult in natural settings. After researchers have eliminated alternative explanations, they are able to confidently say things about how rich interaction improves user experience in camera design. It is possible that the results are wrong, but this is highly unlikely.

Causes, Effects, and Variables

Scientists do not talk about “things” but use more specialized terminology. Things that exist before the phenomenon to be studied takes place are called “independent variables,” which explain the behavior of “dependent variables.” In addition, there are intervening, background, and consequent variables.

Ideally, a researcher should be able to state his hypothesis as a function y=f(x), where y represents the dependent variable and x the independent variable, although this function is usually far more complex.

A hypothesis is an explanation based on theory: it is researchers’ best guess about how the function works before they do a study. The hypothesis is not true before empirical proof, but there are theoretical reasons to think it will receive such proof.

Notice that the aim of experimental research is not to capture everything in a causal system; the aim is to focus on the key relationships.

When specifying causal systems, there are a few useful rules of thumb: supposed causes ought always to precede their effects, and variables should be ordered in the system as they occur in time. Perhaps most important, as the word “variable” tells, things in the system have to be able to vary. Other than that, specification depends on theory: theory should tell how x impacts y and how to work with other variables.

An alternative way is to talk about causes and effects, but social scientists usually avoid the language of causality. Talking about variables avoids confusing theoretical language with things this language describes.

In the social sciences, it is also not conventional to talk about the “causes” of what people or their social organizations do. Most social scientists prefer to think that people make sense of situations and act accordingly; whether these products of sense-making can be thought of as causes is a philosophical question.

For example, when Joep Frens built his rich interaction camera to enhance user experience, his independent variables were his cameras (x), while his dependent variable (y) was user experience measured with Marc Hassenzahl’s scales. Frens did not study possible background variables like gender, which other researchers might have found interesting. He also left out consequent variables like the effect of his camera on users’ satisfaction with life.

Such exclusions belong to any laboratory research: instead of studying everything, researchers have to decide which variables are relevant enough to be included in the research design. Specifying causal systems is a matter of judgment (Figure 4.4).

Working in a laboratory has many other benefits. For example, a laboratory can be equipped with instruments that help make detailed and accurate observations and measurements.

What is of the utmost importance, we think, when testing the variations, is that the user’s actions on the prototype are recorded. We need a trace of the actual interaction, as it is done as soon as one stops to interact. The recordings of setting the alarm clock enabled us to reconstruct step by step how a, e.g., symmetrical pattern was constructed.11

11. Overbeeke et al. (2006, pp. 64–65). The reference to an alarm clock is from Wensveen (2004). Note that this is not always true. Ethnomethodologists have proven that one can use extremely fine-tuned audio- and videotapes for research (see Szymanski and Whalen, 2011). Importantly, this accuracy has a cost: compared to studies in natural environments, laboratory research is far less rich in terms of context. Accurate measurements may mean inaccurate rendering of the context.

As the laboratory environment and experiments are typically documented in detail, it is also possible to replicate the study in other laboratories. This rules out errors coming from the setting and its research culture. This also applies to issues like “observer effects” — the researcher giving people cues about his intentions. If people understand a researcher’s intentions, they may change their behavior to please or to confuse the researcher. There has to be a proper strategy to deal with these effects. 12 Most threats to validity are beyond this book. For example, to see how things like user experience develop over time, researchers often study the same people several times in so-called time series analysis. However, over time, people in the study learn about the study and change their behavior. There are ways to minimize such threats, but this complicates research design. 13

12. There is practically a science on observer effects and how they can be controlled, starting from Robert Rosenthal’s (1966) classic treatment of the topic. His work is still a standard reference and highly recommended reading.

13. The classic treatment of validity threats in psychological research (and, by implication, any work that involves experimenting and people) is Campbell and Stanley (1973). The main problem with this book is that it degraded non-experimental research unjustifiably; in particular, Donald Campbell revised his argumentation considerably in the early 1970s. In design research, the most comprehensive effort to minimize such threats is again done by Joep Frens (2006a, pp. 152–153), who showed statistically that the order in which he presented his cameras to participants did not affect the results.

Analysis in a Nutshell

There are many statistical techniques to study how independent and dependent variables “covary.”

To study covariation, researchers typically use some kind of linear model. These range from cross-tabulations to correlations, analysis of variance (ANOVA), and regression analysis. Non-linear techniques like logit and probit regressions are rare in design. Multivariate techniques like factor and discriminant analysis are normally used for pattern-finding rather than for testing hypotheses; they are also rare in design.

For example, in studying whether there is a link between his cameras and user experience, Frens used the camera variations he built as his independent variable. Since he built four user interface variations, his independent variable got four values.

He hypothesized that as these cameras were different, people would experience (which was his dependent variable) them differently in ways that the theory of rich interaction can foresee. His null hypothesis predicted no change or a change so small that it could have been produced by chance.

Frens used several statistical techniques in his study but mostly relied on ANOVA to decide whether his camera variations led to predicted changes in user experience.
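To make the logic of ANOVA concrete, the sketch below computes the F statistic for a one-way, between-groups design using only the Python standard library. It is a simplified illustration under stated assumptions: the ratings are invented for the example and are not Frens’ data, and the function name `one_way_anova_f` is hypothetical. Because each participant in Frens’ study used all four cameras, a repeated-measures variant would be closer to his design; the between-groups version is shown here only because it is the simplest form of the technique.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical user-experience ratings (1-7 scale), one list per camera variation.
ratings = {
    "conventional": [4, 5, 4, 3, 5, 4],
    "light": [4, 4, 5, 5, 4, 5],
    "mixed": [5, 5, 4, 6, 5, 5],
    "rich": [6, 5, 6, 7, 5, 6],
}

f_stat, df_b, df_w = one_way_anova_f(list(ratings.values()))
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F means the camera variations differ from each other by more than the scores vary within each variation. Whether it is large enough to reject the null hypothesis is decided by comparing it with the F distribution for the given degrees of freedom, a step that statistical packages handle.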

Statistical methods do not have to be complex and sophisticated. More attention should be placed on theory and identifying the underlying causal model. If there is no variation in data, it is impossible to find it even with the best statistical tools. As Ernest Rutherford — a physicist with several groundbreaking findings on his list of conquests — reputedly noted, “if your experiment needs statistics, you ought to have done a better experiment.”

It is also good to keep in mind that even experienced researchers struggle to find the right model to describe the data. They routinely do dozens of analyses before they are happy with the results; patience is a virtue in statistical analysis.

Similarly, it is good to know that there are differences between methods preferred in different disciplines. For example, psychologists usually prefer some form of ANOVA.

4.3. Experimental Control

The crux of any laboratory study is experimentation. 14 The researcher manipulates the thing of interest in the lab to learn how people react to it while holding other things constant. Typically, he assumes a new design will improve things like user experience when compared to older designs. In research language, he expects to reject the null hypothesis, which predicts no change. Having established the basic relationship, researchers study other explanations to see whether they somehow modify results. Ideally, researchers vary one additional variable at a time to see whether it alters the basic relationship. 15

14. As Pieter Jan Stappers noted in private, this is also why the lab is useful for validation studies of already fixed hypotheses but less suited for exploratory/generative studies where research questions evolve.

15. These additional studies help to elaborate analysis and rule out alternative explanations and competing hypotheses. These studies make the initial result more robust and defensible. They may also show that the basic relationship exists only for one group, or even remove the basic relationship altogether, in which case the original relationship is said to be spurious.

For example, Frens studied user experience first by varying his camera designs and learned that a rich interaction interface improved user experience. He could have gone further; for example, he could have studied men and women separately to see whether gender somehow was relevant in explaining the link between rich interaction and user experience. However, he chose not to do these additional analyses, keeping his focus on the basic relationship only (Figure 4.5).
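The kind of additional analysis described here, checking whether a third variable alters the basic relationship, can be sketched in a few lines. The records below are invented for illustration (Frens did not run this analysis), and the function name `rich_minus_conventional` is hypothetical. The idea is simply to compute the basic difference once for everyone and then again within each subgroup.

```python
from statistics import mean

# Hypothetical records: (camera, gender, user-experience score on a 1-7 scale).
records = [
    ("rich", "f", 6), ("rich", "f", 5), ("rich", "m", 6), ("rich", "m", 5),
    ("conventional", "f", 4), ("conventional", "f", 5),
    ("conventional", "m", 4), ("conventional", "m", 3),
]


def rich_minus_conventional(rows):
    """Difference in mean score between the rich and the conventional camera."""
    rich = [score for camera, _, score in rows if camera == "rich"]
    conventional = [score for camera, _, score in rows if camera == "conventional"]
    return mean(rich) - mean(conventional)


# The basic relationship, ignoring gender.
overall = rich_minus_conventional(records)

# The same relationship computed separately within each gender.
by_gender = {
    gender: rich_minus_conventional([r for r in records if r[1] == gender])
    for gender in sorted({r[1] for r in records})
}

print("overall difference:", overall)
print("difference by gender:", by_gender)
```

If the difference keeps the same sign in every subgroup, the basic relationship holds up. If it appears in only one subgroup, the relationship is conditional on that variable, and if it vanishes in all of them, the original relationship was spurious.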

Research is successful when the basic relationship exists and the most important competing explanations are ruled out. Thus, if a rich interaction camera functions as expected and there are no serious alternative explanations, the theory about rich interaction ought to be accepted until a better theory comes along. If the first rich interaction camera of its sort is already about as good as conventional cameras, even though cognitive theory would predict otherwise, something must have gone right in the design process.

Selecting what to study and what to leave out is ideally a matter of theory, but equally often, it is also a matter of judgment. Many things influence human behavior, and it is impossible to study everything carefully. What is included is a theoretical question; for example, when Philip Ross selected people for studying his lamp designs, he selected university students with a similar value system in mind (see Chapter 8). 16 This limited his ability to generalize but also made his analyses easier. He would have gained little from knowing how people with different medical conditions would have reacted to his lamps. This might be an interesting question for another study but not for his.

16. As Ross (2008, p. 196) wrote, “All participants were students at TU/e, from several departments or Fontys College in Eindhoven. None of these people had experience in interaction design. The advantage of having participants from the same social group (students) was that the factors other than values were more constant than they would be in a heterogeneous participant group.”

There are also methodic ways to make research designs simpler. The most popular technique is the randomized trial, in which researchers study one group of typically randomly selected people and then repeat the study with another randomly selected group. One of the groups is given a “treatment”; for example, they have to use a rich interaction camera. The other group gets a placebo, for example, a conventional camera. Researchers measure things like user experience before and after the treatment in both groups, with the expectation that the measure has improved more in the treatment group than in the control group. Randomization does not eliminate variables like gender or horoscope sign, but in large enough groups the impact of such variables evens out.

Randomized Trials

The most typical research design, especially in medicine, is a randomized trial. In this research design, two groups of people are drawn randomly and allocated into a study group and a control group.

The study group gets a treatment; for example, in design research, they use the prototype. The control group does not receive this treatment.

A study begins with a measurement in which both groups fill out a questionnaire or do some other test. After the treatment, both groups are measured again. The thing to be measured can be almost anything, but typically it is user experience.

The hypothesis is that the treatment improves the study group’s user experience, while the control group does not experience similar improvement. This set of expectations can be written more formally, for example:

1.

2.

3.

All of these conditions should be compared with appropriate statistics, most typically with a t-test or ANOVA.

The smart thing about this design is that it eliminates the need to conduct a new study for each possible alternative explanation, like gender or cognitive style. The number of observations, however, needs to be large enough (Figure 4.6).
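The design described in this box can be simulated in a short script. The sketch below is illustrative only: the scores are generated from assumed distributions, the effect size of 0.8 is invented, and the helper names `randomize`, `simulate_scores`, and `welch_t` are hypothetical. One plausible reading of the expectations is that the two groups do not differ before the treatment, and that the study group’s gain from before to after is larger than the control group’s gain.

```python
import random
from math import sqrt
from statistics import mean, stdev


def randomize(participants, rng):
    """Shuffle participants and split them into a study group and a control group."""
    shuffled = participants[:]
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def simulate_scores(group, effect, rng):
    """Simulated before/after scores; `effect` is the assumed treatment effect."""
    before = {p: rng.gauss(4.0, 1.0) for p in group}
    after = {p: before[p] + effect + rng.gauss(0.0, 0.5) for p in group}
    return before, after


def welch_t(a, b):
    """Welch's t statistic for two independent samples."""
    return (mean(a) - mean(b)) / sqrt(stdev(a) ** 2 / len(a) + stdev(b) ** 2 / len(b))


rng = random.Random(42)  # fixed seed so the simulation is repeatable
study, control = randomize(list(range(200)), rng)

# Assumed for illustration: the prototype raises scores by 0.8, the placebo by 0.
study_before, study_after = simulate_scores(study, effect=0.8, rng=rng)
control_before, control_after = simulate_scores(control, effect=0.0, rng=rng)

study_gain = [study_after[p] - study_before[p] for p in study]
control_gain = [control_after[p] - control_before[p] for p in control]

baseline_t = welch_t(list(study_before.values()), list(control_before.values()))
gain_t = welch_t(study_gain, control_gain)
print(f"baseline difference t = {baseline_t:.2f}")
print(f"difference in gains t = {gain_t:.2f}")
```

Because allocation is random, background variables are spread over both groups by chance alone, which is why the baseline comparison is expected to show little difference while the comparison of gains reflects the treatment.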

4.4. Physical Hypotheses and Design

In constructive design research, the epitome of analysis is an expression such as a prototype. It crystallizes theoretical work, and becomes a hypothesis to be tested in the laboratory. For Stephan Wensveen, it was the alarm clock he built. For Ianus Keller, it was a cabinet for interacting with visual material. For Andres Lucero, it was a space he called the “funky-design-space” meant to support moodboard construction and browsing. 17 For Joep Frens, his camera variations are considered physical hypotheses.

However, adding the design phase to research also adds an important new ingredient to the soup — the designer’s skill and intuition.

Stappers recently described some of the complexities involved in treating prototypes as hypotheses. In the design process, a prototype integrates many types of information. Theory is one component in the prototype, but not the only one. For example, ecological psychology tells us to build tangible controls that use human sensory motor skills to accomplish tasks like taking photos or setting the time for the alarm. However, it says little about many of the sensual issues so important for designers, including shape, colors, sound, feel, surfacing, and so on. Ideas for these come from the designer and the design process. As Stappers said:

Prototypes and other types of expressions such as sketches, diagrams and scenarios, are the core means by which the designer builds the connection between fields of knowledge and progresses toward a product. Prototypes serve to instantiate hypotheses from contributing disciplines, and to communicate principles, facts and considerations between disciplines. They speak the language of experience, which unites us in the world. Moreover, by training (and selection), designers can develop ideas and concepts by realizing prototypes and evaluating them….

The designing act of creating prototypes is in itself a potential generator of knowledge (if only its insights do not disappear into the prototype, but are fed back into the disciplinary and cross-disciplinary platforms that can fit these insights into the growth of theory).18

18. Stappers (2007, p. 87). It is important to make a distinction between the prototype and the theoretical work that led into it. Occasionally, design researchers build marvelous prototypes even though their research shows that the reasoning behind the prototype is probably wrong. This was the case in Philip Ross’ work, which produced marvelous lamps, but fairly inconsistent theoretical results. That his results were inconclusive and designs attractive suggests that the problem was not in the design process, but in the theoretical framework he used. More typically, researchers do solid theoretical work that leads to fairly awkward designs, at least when judged by professional standards. The reasons for this have been dealt with earlier in this chapter. Most constructive design researchers would probably side with Ross, and sacrifice some theoretical elegance to guarantee enough resources for design. Again, this is common in research: empirical researchers typically sacrifice theoretical sophistication if data so requires. Why not designers?

Elsewhere, Stappers listed some uses of prototypes. They can be used to test a theory, in which case they become embodiments of theory or “physical hypothesis.”19 However, they also confront theories: researchers, whose prototypes cannot hide in abstractions, have to face many types of complexities that working designers face. Similarly, they confront the world. When building a prototype, the researcher has to face the opinions of other people. Furthermore, they serve as demonstrations, provocations, and criticisms, especially to outsiders who have not seen their development from within (Figure 4.7). 20

19. “… we use methods we borrow, mostly, from social sciences. The prototypes are physical hypotheses …” (Overbeeke et al., 2006, pp. 65–66, italics in original).

20. Stappers, workshop Jump Start in Research in Delft, June 29, 2010. An updated list will reappear in 2011 in the PROTO:type 2010 Symposium held in Dundee, Scotland (Stappers, 2011).

Figure 4.7 How a physical hypothesis emerges from various types of knowledge expressed as sheets on the floor. As understanding grows, more knowledge from other disciplines is drawn into the spiral. 21 (Drawing by Pieter Jan Stappers.)

21. Stappers (2007, p. 12). See also Horvath (2007).

4.5. Design, Theory, and Real-World Relevance

As Stappers points out, prototyping is more than theory testing; it is also a design act. A design process may be inspired by theory, but it goes beyond it. A prototype is an embodiment of design practice, but it also goes beyond theory. For this reason, design prototypes are also tests of design, not just theory. Indeed, one of the most attractive things in research in Eindhoven has been the quality of craftsmanship. These designs can be evaluated as design statements. They are good enough to please a professional designer aesthetically, structurally, and conceptually.

Research sets some requirements for prototypes at odds with doing good design. Researchers almost invariably aim at simplification; for example, people bring in many types of aesthetic opinions to the laboratory and are barely aware of most of them. The way to control this is to eliminate clutter by keeping design simple. When the subjects’ mind does not wander, changes in their behavior can be attributed to the designs. As Overbeeke wrote with his colleagues:

Design research resembles research in, e.g., psychology in that it has a minimum of controls built in when exploring the solution when testing variations of solutions. Therefore … “we have kept the devices simple, pure and with resembling aesthetic appearance.” This makes it possible, to a certain degree, to isolate and even manipulate systematically critical variables.22

22. Overbeeke et al. (2006, pp. 64–65, italics ours).

This is where there is tension. As Stappers noted, research prototypes are not pure expressions of theory; they also embody design values. The more they do, the more difficult it becomes to say with confidence that the theory that inspired design actually works. The secret of success, quite simply, may be design.

This is a catch-22. On the one hand, the more seriously researchers take design, the more difficult it becomes to draw unambiguous theoretical conclusions. On the other hand, when the theoretical frame and the aims of the study guide prototyping, 23 a good amount of design relevance is sacrificed. Ultimately, the way in which prototyping is done is a matter of the researcher’s personal criteria for quality and taste. Most design researchers think design quality is more important than theoretical purity, but opinions differ.

23. Overbeeke et al. (2006, pp. 64–65).

Most design researchers, however, find it easy to agree that research prototypes differ from industrial prototypes. As Joep Frens noted, his cameras are finished enough for research but not production ready.

Moreover, the prototypes that are presented in this thesis are not products ready for production. The prototypes are elaborated to a highly experiential level so that they can be used in real life experiments to answer the research question … The prototypes can be seen as “physical hypotheses” that have sufficient product qualities to draw valid and relevant conclusions from.24

24. Frens (2006a, pp. 29, 185).

As Frens related, prototypes are done to see where theoretically informed design leads. Issues like durability, electric safety, and the quality of computer code are in the background. In the foreground are things that serve knowledge creation; it is better to leave concern for production to industry.

4.6. From Lab to Society: The Price of Decontextualization

When things are taken from society to a laboratory, many things are decontextualized, and this comes with a price. A laboratory is a very special place, and things that happen in the laboratory may not happen in society or may happen in a different way, as conditions are different. Do results of laboratory studies tell us anything about the real world?

There are several ways to answer this question, and sometimes this question is not relevant. Researchers may want to show that a certain outcome is possible by building upon it, and there is no need to produce definitive proof beyond the construct. This is called “existence proof.”25 This proof is well known in mathematics and is common in engineering but almost non-existent in empirical research. Sometimes, generalization happens to theory, and this is typical in many natural sciences — a piece of pure tin melts at the same temperature whether it is in Chicago or Patagonia.

25. Our thanks go to Pieter Jan Stappers for pointing this out.

Usually the jump from the laboratory to the real world builds on statistics. Many things in ergonomics may be universal enough for theoretical analysis, but it is more difficult to argue this in, say, aesthetics or design. 26 Researchers can calculate statistics like averages and deviations for those people they studied. They can, however, use these figures only as estimates of what happens in larger populations: it is always possible to err. The basic rule is that as sample size increases, confidence in estimates increases.
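The basic rule about sample size can be illustrated with the width of a confidence interval for a mean. The sketch below uses the normal approximation, which is an assumption made for simplicity; with small samples a t distribution would give somewhat wider intervals. The function name `ci_half_width`, the standard deviation of 1.0, and the sample sizes are all chosen for illustration.

```python
from math import sqrt
from statistics import NormalDist


def ci_half_width(sample_sd, n, confidence=0.95):
    """Half-width of a normal-approximation confidence interval for a mean."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    return z * sample_sd / sqrt(n)


# Assumed standard deviation of 1.0 scale point; only the sample size changes.
for n in (6, 24, 96, 384):
    print(f"n = {n:3d}: mean is estimated within +/- {ci_half_width(1.0, n):.3f}")
```

The half-width shrinks with the square root of the sample size, so quadrupling the number of participants only halves the margin of error. This is one reason why small laboratory samples call for caution when generalizing.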

26. A researcher cannot take a result and say that he proved the theory at work behind the study without first checking his work. The main elements to check include things missing in theory, reliance on only one set of measures, overly simple measurements, the fact that people learn to respond “the right way,” and the researchers’ own expectations.

Finally, proof goes beyond one study. As Lakatos argued, there is no instant rationality in research. 27 Generalizing from individual studies is risky. However, if a program repeatedly leads to interesting results, it should be taken seriously. It was only after hundreds of studies that the world came to believe that asbestos and tobacco caused life-threatening illnesses. This logic also applies in constructive design research (Figure 4.8).

27For Lakatos (1970), see Chapter 3.

In actual research practice, these proofs coexist. Again, Frens provided a good example. In terms of an existence proof, his camera shows that it is possible to build rich interaction cameras. In terms of generalizing to theory, his research framework can be applied in many different circumstances. In statistical terms, his empirical results apply to people with a high level of aesthetic abilities and a great deal of experience in photography. Finally, by now dozens of designs coming out of the Netherlands show that ecological psychology can be fruitfully applied to designing interaction. The burden of proof does not lie on Frens alone.

There is a margin of error in even the best of studies; however, after reading Frens, we know more about rich interaction in smart products than before. Researchers are skeptics and do not easily accept anything, but when they accept something, they stand behind it. Proving that a good study is wrong requires a careful study that shows in detail what was wrong.

4.7. Program at the Junction

Recent work in Eindhoven has taken a significant step away from its basis in ecological psychology. Earlier work built on J.J. Gibson’s ecological psychology and aimed at formulating conditional laws and constructing mathematical models of actual interaction. 28 Since Kees Overbeeke’s inauguration in 2007, however, research has turned to phenomenological and pragmatic philosophy. 29 Ecological psychology worked marvelously well when creating systems that can be used with one’s body instead of cognition, but it gave few tools to see how things like social interaction and culture shape conduct. It remained limited in its ability to conceptualize reflection, thinking, and discourse, and these are just the things at the center of the most recent work coming out of the program.

28Overbeeke et al. (2006, pp. 63–64). Caroline Hummels (2000, p. 1.27) talked about conditional laws in her thesis.

29See Overbeeke 2007.

For people trained in philosophy, this may sound risky. Phenomenology makes few claims about explaining human conduct. However, there is a tradition of experimental psychological phenomenology, and Maurice Merleau-Ponty, the key philosopher of psychological phenomenology, built many of his theories on reinterpretations of clinical and experimental studies. 30 Also, there are ways to combine careful measurement and phenomenologically informed theory. 31 William James, one of the founding fathers of pragmatism, had a background in medicine, making him no stranger to experimental research. Here the line between philosophical thinking and experimental research is fine, but as long as a researcher keeps in mind that experiments are aids to imagination, he is on safe ground. 32

30Merleau-Ponty’s seminal works that continue to inspire psychological research are The Phenomenology of Perception (2002) and The Structure of Behavior (1963). Other key figures in phenomenological psychology include Albert Michotte. Also, many late Gestalt theorists like Kurt Koffka were influenced by Husserl’s phenomenological philosophy.

31The best recent example is probably Oscar Tomico’s doctoral thesis in Barcelona, which built a method of measuring experience based on Kelly’s (1955) personal construct theory. Tomico’s thesis was a conscious attempt to combine elements from the empathic tradition of Helsinki with the ecological tradition in Eindhoven. Today, Tomico works in Eindhoven.

32As Overbeeke et al. (2006, pp. 65–66) wrote, their long-term hope is to discover conditional laws of human-machine interaction. However, they also note that “the level of abstraction in our work is low. We almost argue by case. This is done by necessity; otherwise we would lose the rich human experience.”

The push came from Paris, where philosopher Charles Lenay and his colleagues recently studied how we perceive other people’s perception with our bodies. It is one thing to be alone somewhere and to see things; it is another thing to be there when others see me seeing things. I have to take into account other viewpoints and how they change as time goes by. 33 With his colleagues, Lenay studied Tactile Vision Sensory Substitution, a system developed by Paul Bach-y-Rita. This system transforms images captured by a camera into a three-dimensional “tactile image,” which is applied to the skin or tongue. It is meant to give blind people a three-dimensional perception of their surroundings. For Lenay and his colleagues, this commercial and prosthetic failure provided an opportunity to study how technology mediates perception. They showed that people are able to tell when they interact with another person through the system. We give a body image to others, and others respond to this image. We can recognize this response. We know we do not deal with a machine.

This may sound like hairsplitting, but it has important implications for understanding many technologies that are specifically built on social assumptions. People do think about the image they give others because they know that others act on this image and that this, in turn, affects them. Many “presence” technologies build on similar assumptions, and such considerations certainly shape behavior on social networks on the Web. This question is relevant in traditional design as well. For example, we dress differently for Midtown than we do for the neighborhood bar in Brooklyn because we know people will look at us differently in those places. It is much like looking into a mirror: you become more self-conscious.

This work has recently been picked up in Eindhoven. For example, in her master’s thesis, Eva Deckers wove a carpet that responds to movement of the hand, giving it the ability to perceive and react to people’s perception. In her doctoral work, she focused on situations with multiple users. 34 Work like this is bringing the Eindhoven program to a junction. These studies promise a better understanding of many kinds of interactive technologies but also make research more difficult. Importantly, this new direction creates links to twentieth century Continental thinking in the humanities and the social sciences. Recent work is also bringing work in Eindhoven closer to interpretive sociology, ethnomethodology, and discourse analysis. This shift has certainly paved the way for more sophisticated research programs that may fulfill the promise of providing knowledge of “la condition humaine”: what makes humans tick. 35

34Deckers et al. (2009), Deckers et al. (2010) and Deckers et al. (2011). See w3.id.tue.nl/en/research/designing_quality_in_interaction/projects/perceptive_qualities/.

35These are words from Overbeeke et al.’s (2006, pp. 65–66) programmatic paper.
