Chapter 16: Orchestrating Your Application with Google Kubernetes Engine

The remaining three chapters of this book cover the three major options for deploying our application as cloud-native microservices, which are Google Kubernetes Engine (GKE), Google App Engine, and Cloud Run. This chapter focuses on GKE, which serves as a platform for deploying microservices, though not serverless ones. We will cover provisioning a cluster, configuring the environment and microservices, deploying our application, and configuring public access to the application.

In this chapter, we will cover the following topics:

- Introducing GKE

- Configuring the environment

- Deploying and configuring the microservices

- Configuring public access to the application

- When to use GKE

Now, let's look at what exactly GKE is and how to provision and use it.

Technical requirements

The code files for this chapter are available here: https://github.com/PacktPublishing/Modernizing-Applications-with-Google-Cloud-Platform/tree/master/Chapter%2016.

Introducing GKE

GKE is a container management and orchestration service based on Kubernetes, which is an open source container management and orchestration system.

So, what do we mean by management and orchestration? Kubernetes handles deploying, configuring, and scaling our container-based microservices.

There are two major parts to Kubernetes.

The first part is the masters, also known as the control plane. These host the following services:

- The API server: Handles all communications between all components.

- The cluster store (etcd): Holds the configuration and state of the cluster.

- The controller manager: Monitors all components and ensures they match the desired state.

- The scheduler: Watches the cluster store for new tasks and assigns them to a cluster node.

- The cloud controller manager: Handles the specific integrations needed for a cloud service provider. An example in our case is to provision an HTTP(s) load balancer for our application.

The second major part is Nodes (part of Node pools). This is where our application runs. Nodes do three basic things:

- Watch the API server for new work

- Execute the new work

- Report the status of the work to the control plane

As we are using GKE rather than hosting Kubernetes on our virtual machines, we do not need to concern ourselves with the masters. These are hosted and managed by Google Cloud. This means tasks such as upgrading the version of Kubernetes are managed for us by Google Cloud rather than us having to manually handle them. GKE also provides a facility called cluster autoscaling, which means that if the Nodes in our cluster become fully utilized and we need to deploy more workloads, the cluster will be horizontally scaled out to add the needed capacity, and once the capacity is no longer needed, the cluster will be horizontally scaled in to control costs.

The following diagram shows the architecture of GKE:

Figure 16.1 – GKE architecture

Now that we have gained an understanding of what GKE is, we will move on and learn how to create a GKE cluster.

Modes of operation

GKE offers two modes of operation, each providing a different level of control and customizability over your GKE clusters:

- Autopilot: As the name suggests, Autopilot mode takes control of the entire cluster and Node infrastructure and manages it for you. Clusters running in this mode are preconfigured and optimized for production workloads, and you pay only for the resources used by your workloads.

- Standard: On the other side of the spectrum, Standard mode gives developers complete control and customizability over the clusters and node infrastructure, allowing them to configure standard clusters whichever way suits their production workloads.

We will use Standard mode to create GKE clusters for our deployment.

Creating a GKE cluster

Now, let's create a GKE cluster by performing the following steps:

- From the cloud shell, enter the following command to set up the project:

gcloud config set project bankingapplication

- Enter the following command to create the cluster:

gcloud container clusters create banking-cluster --zone europe-west2-c --enable-ip-alias --machine-type=n1-standard-2 --num-nodes 1 --enable-autoscaling --min-nodes 1 --max-nodes 4

The preceding command creates a new GKE cluster called banking-cluster in the europe-west2-c zone. The --enable-ip-alias switch makes this a VPC-native cluster, which allows our Pods to reach the private IP address of our Cloud SQL database. We have specified the n1-standard-2 machine type for the Nodes and a Node pool that initially has one Node. Finally, we enabled cluster autoscaling with a minimum of one Node and a maximum of four Nodes. This is a long-running task, so it will take a few minutes to complete.

- In the cloud console, from the Navigation menu, select Kubernetes Engine:

Figure 16.2 – Navigation menu – Kubernetes Engine

After a few minutes, you will see the status change to a green tick, as follows:

Figure 16.3 – Kubernetes cluster (status)

With that, we have learned about GKE and created our cluster for use in the rest of this chapter. Now, let's turn our attention to providing configuration to our application.

Configuring the environment

A well-designed microservice externalizes its configuration so that it can take that configuration from the environment it is deployed to. This means we can deploy the same container to development, test, and production environments rather than building a specific version for each. Another particularly good reason to externalize configuration such as usernames, passwords, and other credentials is to keep it out of the source code repository, because these values must be kept secret. We will be making use of manifests extensively in this chapter. Manifests declare what the state should be, and Kubernetes does what it needs to do to bring the cluster into that state. Applying a manifest is idempotent: if the same manifest is applied multiple times, only the first application changes anything. If the contents of a manifest are changed and it is applied again, Kubernetes updates the cluster to address the difference between the two versions of the manifest.
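To make the idempotency point concrete, here is a toy Python model of declarative apply. It is a simplified sketch, not kubectl's actual merge logic; the plan and apply helpers and the flat field maps are hypothetical, and the object name in the usage is borrowed from this chapter. Like kubectl apply without pruning, it never deletes objects that are missing from the manifest.

```python
from typing import Dict, List, Tuple

# A toy cluster state: object name -> flat map of fields (hypothetical model).
State = Dict[str, Dict[str, str]]


def plan(current: State, desired: State) -> List[Tuple[str, str]]:
    """Work out which objects must be created or updated to reach the desired state."""
    actions: List[Tuple[str, str]] = []
    for name, fields in sorted(desired.items()):
        if name not in current:
            actions.append(("create", name))
        elif current[name] != fields:
            actions.append(("update", name))
    return actions


def apply(current: State, desired: State) -> List[Tuple[str, str]]:
    """Bring `current` in line with `desired` and report what changed.

    Objects absent from `desired` are left alone, mirroring kubectl apply without pruning.
    """
    actions = plan(current, desired)
    for _, name in actions:
        current[name] = dict(desired[name])  # store a copy, not a reference
    return actions


if __name__ == "__main__":
    cluster: State = {}
    manifest: State = {"account-env-configmap": {"BANKING_LOGGING_LEVEL": "WARN"}}
    print(apply(cluster, manifest))  # first apply creates the object
    print(apply(cluster, manifest))  # re-applying the same manifest changes nothing
    manifest["account-env-configmap"]["BANKING_LOGGING_LEVEL"] = "INFO"
    print(apply(cluster, manifest))  # an edited manifest produces an update
```

The second call returning an empty list is the idempotency property: the cluster already matches the manifest, so there is nothing to do.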

Kubernetes ConfigMaps and Secrets

In Kubernetes, this externalized configuration is provided by ConfigMaps and Secrets.

A ConfigMap is an API object that provides name-value pairs. These pairs can either be simple, such as HOSTNAME: localhost, or the value can be the contents of a file and later used as a file by our container.

Secrets differ from ConfigMaps in that their values are encoded using Base64. It is important to remember that this is encoding, not encryption: anyone who can read the Secret can decode it, and the encoding exists purely so that the values are not easily read at a glance. Because Secrets are only encoded rather than encrypted, we will use Google Secret Manager to handle our truly secret configuration.
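A short Python sketch shows why Base64 offers no protection: the same standard-library module that encodes a value reverses it, with no key involved. The DB_PASSWORD key and its value are hypothetical, and the helper functions are illustrative rather than part of any Kubernetes client library.

```python
import base64
from typing import Dict


def encode_secret_data(values: Dict[str, str]) -> Dict[str, str]:
    """Base64-encode each value, as Kubernetes stores them in a Secret's data field."""
    return {key: base64.b64encode(value.encode("utf-8")).decode("ascii")
            for key, value in values.items()}


def decode_secret_data(data: Dict[str, str]) -> Dict[str, str]:
    """Reverse the encoding. Note that no key or password is needed to do this."""
    return {key: base64.b64decode(value).decode("utf-8")
            for key, value in data.items()}


if __name__ == "__main__":
    encoded = encode_secret_data({"DB_PASSWORD": "s3cr3t"})  # hypothetical key and value
    print(encoded)
    print(decode_secret_data(encoded))  # anyone who can read the Secret can do this
```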

Google Secret Manager

Google Secret Manager is a secure central service for storing and managing sensitive information such as passwords for use by applications in Google Cloud. These secrets can be accessed by service accounts that have been granted the Secret Manager Secret Accessor and Secret Manager Viewer roles. Add these roles to the Compute Engine default service account before moving on to create our secrets.

We will add the secret keys used by our application by following these steps:

- From the cloud console, from the Navigation menu, select Security | Secret Manager:

Figure 16.4 – Navigation menu – Security | Secret Manager

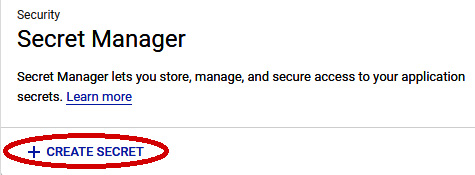

- Click CREATE SECRET:

Figure 16.5 – Secret Manager – CREATE SECRET

- Enter the name and value of the secret, select the region (we used europe-west4), and click CREATE SECRET:

Figure 16.6 – The Create secret screen

- You will see the success page, as follows. Click the left arrow at the top:

Figure 16.7 – Successfully created secret

- At this point, we will see a list of all the secrets we have configured and can click + CREATE SECRET to create another secret:

Figure 16.8 – Secret Manager

- Create a secret for each item in the following list:

  - ACCOUNT_DATASOURCE_CLOUD_SQL_INSTANCE: bankingapplication:europe-west2:mysql-instance
  - ACCOUNT_DATASOURCE_PASSWORD: <your_db_password>
  - ACCOUNT_DATASOURCE_URL: jdbc:mysql:///account
  - ACCOUNT_DATASOURCE_USERNAME: <your_db_user>
  - BANKING_DATASOURCE_DDL_AUTO: update
  - BANKING_DATASOURCE_DIALECT: org.hibernate.dialect.MySQL5InnoDBDialect
  - BANKING_INITIAL_ADMIN_EMAIL: <your_admin_email>
  - BANKING_PROFILES_ACTIVE: DEV
  - GKE_ACCOUNT_DATASOURCE_URL: jdbc:mysql://localhost:3306/account?useSSL=false&allowPublicKeyRetrieval=true&useUnicode=yes&characterEncoding=UTF-8
  - GKE_USER_DATASOURCE_URL: jdbc:mysql://localhost:3306/user?useSSL=false&allowPublicKeyRetrieval=true&useUnicode=yes&characterEncoding=UTF-8
  - USER_DATASOURCE_CLOUD_SQL_INSTANCE: bankingapplication:europe-west2:mysql-instance
  - USER_DATASOURCE_PASSWORD: <your_db_password>
  - USER_DATASOURCE_URL: jdbc:mysql:///user
  - USER_DATASOURCE_USERNAME: <your_db_username>

Looking at the preceding list, you will notice that ACCOUNT_DATASOURCE_URL and USER_DATASOURCE_URL differ from the values we used previously, and that GKE_ACCOUNT_DATASOURCE_URL and GKE_USER_DATASOURCE_URL hold the old values. This is in preparation for the following chapters; in this chapter, we will use the GKE_ versions.
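The difference between the two styles of URL is easiest to see by pulling them apart. The following Python sketch (the describe_jdbc_url helper is hypothetical, written only for this illustration) strips the jdbc: prefix and parses the remainder with the standard library. The GKE_ variant names localhost:3306, which is where the Cloud SQL proxy sidecar configured later in this chapter listens inside the Pod, whereas the other variant names no host or port at all.

```python
from urllib.parse import parse_qs, urlsplit


def describe_jdbc_url(jdbc_url: str) -> dict:
    """Break a jdbc:mysql URL into its host, port, database, and query parameters."""
    if not jdbc_url.startswith("jdbc:"):
        raise ValueError("expected a jdbc: URL")
    parts = urlsplit(jdbc_url[len("jdbc:"):])  # e.g. mysql://localhost:3306/account?...
    return {
        "scheme": parts.scheme,
        "host": parts.hostname,  # None when the URL names no host
        "port": parts.port,      # None when the URL names no port
        "database": parts.path.lstrip("/"),
        "params": {key: values[0] for key, values in parse_qs(parts.query).items()},
    }


if __name__ == "__main__":
    print(describe_jdbc_url("jdbc:mysql://localhost:3306/account?useSSL=false"))
    print(describe_jdbc_url("jdbc:mysql:///account"))
```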

We now have secrets for every environment variable that could be moved to be a secret. But how do we use these secrets in our application? Following our strategy of minimizing code changes, we used the Google Secret Manager support provided by Spring Cloud GCP, updating our build.gradle dependencies to add the following:

implementation 'org.springframework.cloud:spring-cloud-gcp-starter-secretmanager:1.2.2.RELEASE'

Next, we created a new configuration file in our resources folder called bootstrap.properties with the following content:

spring.cloud.gcp.secretmanager.enabled = true
spring.cloud.gcp.secretmanager.bootstrap.enabled = true
spring.cloud.gcp.secretmanager.secret-name-prefix = GCP_

The preceding configuration enables Spring Cloud GCP's Secret Manager support and makes it work at bootstrap time, which is before our application.properties file is processed. This allows us to inject the secrets into application.properties in the same way we previously injected the environment variables. The secret-name-prefix setting makes each secret available as a property whose name is the secret's name prefixed with GCP_, so the BANKING_PROFILES_ACTIVE secret is referenced as GCP_BANKING_PROFILES_ACTIVE.

Finally, we must update our application.properties file:

spring.profiles.active = ${GCP_BANKING_PROFILES_ACTIVE}
spring.jpa.hibernate.ddl-auto = ${GCP_BANKING_DATASOURCE_DDL_AUTO}
spring.jpa.properties.hibernate.dialect = ${GCP_BANKING_DATASOURCE_DIALECT}
spring.datasource.jdbcUrl = ${GCP_GKE_USER_DATASOURCE_URL}
spring.datasource.username = ${GCP_USER_DATASOURCE_USERNAME}
spring.datasource.password = ${GCP_USER_DATASOURCE_PASSWORD}
spring.account-datasource.jdbcUrl = ${GCP_GKE_ACCOUNT_DATASOURCE_URL}
spring.account-datasource.username = ${GCP_ACCOUNT_DATASOURCE_USERNAME}
spring.account-datasource.password = ${GCP_ACCOUNT_DATASOURCE_PASSWORD}
logging.level.org.springframework: ${BANKING_LOGGING_LEVEL}
logging.level.org.hibernate: ${BANKING_LOGGING_LEVEL}
logging.level.com.zaxxer: ${BANKING_LOGGING_LEVEL}
logging.level.io.lettuce: ${BANKING_LOGGING_LEVEL}
logging.level.uk.me.jasonmarston: ${BANKING_LOGGING_LEVEL}
banking.initial.admin.email = ${GCP_BANKING_INITIAL_ADMIN_EMAIL}

In the preceding source code, we have not included the entire application.properties file, just the items that obtain their values from Google Secret Manager or environment variables.
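As a mental model of how these placeholders get filled in, here is a simplified Python sketch. It assumes that secrets are exposed under GCP_-prefixed property names and that anything not found there falls back to an environment variable (such as BANKING_LOGGING_LEVEL, supplied later by a ConfigMap). It is not Spring's implementation, which consults a whole chain of property sources, and the helper names are hypothetical.

```python
import re
from typing import Dict, Mapping

# Matches ${NAME} placeholders such as ${GCP_BANKING_PROFILES_ACTIVE}.
PLACEHOLDER = re.compile(r"\$\{([A-Za-z0-9_.]+)\}")


def secrets_as_properties(secrets: Mapping[str, str], prefix: str = "GCP_") -> Dict[str, str]:
    """Expose each secret as a property whose name is the prefix plus the secret name."""
    return {prefix + name: value for name, value in secrets.items()}


def resolve(line: str, properties: Mapping[str, str], env: Mapping[str, str]) -> str:
    """Substitute placeholders from the properties first, then from environment variables."""
    def lookup(match) -> str:
        key = match.group(1)
        if key in properties:
            return properties[key]
        if key in env:
            return env[key]
        raise KeyError(f"unresolved placeholder: {key}")

    return PLACEHOLDER.sub(lookup, line)


if __name__ == "__main__":
    props = secrets_as_properties({"BANKING_PROFILES_ACTIVE": "DEV"})
    env = {"BANKING_LOGGING_LEVEL": "WARN"}  # would come from the ConfigMap
    print(resolve("spring.profiles.active = ${GCP_BANKING_PROFILES_ACTIVE}", props, env))
    print(resolve("logging.level.org.hibernate: ${BANKING_LOGGING_LEVEL}", props, env))
```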

These configuration changes (adding the dependency, adding the bootstrap.properties file, and updating the application.properties file) mean that we do not have to make any source code changes to use Google Secret Manager.

Now that we can access our application secrets from Google Secret Manager, we will learn how Kubernetes provides environment variables and other configurations to our application.

Kubernetes ConfigMaps

Our application has externalized configuration it obtains from environment variables. As this is not sensitive information, we can make use of a ConfigMap. The following account-env.yaml manifest file shows how we can accomplish this:

apiVersion: v1
kind: ConfigMap
metadata:
  name: account-env-configmap
data:
  BANKING_LOGGING_LEVEL: WARN
  GOOGLE_APPLICATION_CREDENTIALS: /secrets/credentials.json
  GOOGLE_CLOUD_PROJECT: bankingapplication

In the preceding ConfigMap manifest, we provided the details for our logging level, the location of our credentials, and the Google Cloud project the credentials are for. Once we have updated those details, we can apply the ConfigMap to our cluster. To do this, upload the file to our cloud shell and execute the following command:

kubectl apply -f account-env.yaml

We can delete the ConfigMap by executing the following command:

kubectl delete -f account-env.yaml

The preceding apply command creates the ConfigMap that supplies the account microservice's environment variables. We must repeat the same procedure with user-env.yaml to provide the environment variables needed by the user microservice.

Next, we will create a ConfigMap that holds the configuration for our Nginx service. This will be our initial approach to creating a Strangler Facade. The following nginx-config.yaml manifest file shows how we can accomplish this:

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-configmap
data:
  default.conf: |
    server {
      listen 80;
      server_name www.banking.jasonmarston.me.uk;
      location / {
        root /usr/share/nginx/html;
        index index.html;
        location /account {
          proxy_pass http://account-svc:8080;
        }
        location /user {
          proxy_pass http://user-svc:8080;
        }
      }
    }

We will upload this file to our cloud shell and execute the following command to create the ConfigMap:

kubectl apply -f nginx-config.yaml

We can delete the ConfigMap by executing the following command:

kubectl delete -f nginx-config.yaml

The preceding apply command creates the ConfigMap holding the Nginx reverse proxy configuration, which routes requests to our account and user microservices. When the ConfigMap is mounted as a volume, the default.conf key appears as a file to the container that mounts it, rather than as the simple name-value pairs we used previously.
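The routing decision Nginx makes with this configuration can be modelled in a few lines of Python. This is a sketch, not Nginx itself: it assumes only plain prefix locations (regular-expression locations, which Nginx evaluates differently, are not modelled), and the LOCATIONS table and route helper are hypothetical.

```python
from typing import Dict, Optional

# Hypothetical table mirroring the location blocks in nginx-configmap:
# prefix -> upstream (None means "serve a static file from /usr/share/nginx/html").
LOCATIONS: Dict[str, Optional[str]] = {
    "/": None,
    "/account": "http://account-svc:8080",
    "/user": "http://user-svc:8080",
}


def route(uri: str) -> Optional[str]:
    """Return the proxied URL for a request URI, or None if it is served statically.

    As with Nginx prefix locations, the longest matching prefix wins. Because
    proxy_pass has no URI part, the original request URI is passed through unchanged.
    """
    matches = [prefix for prefix in LOCATIONS if uri.startswith(prefix)]
    if not matches:
        raise ValueError(f"no location matches {uri!r}")
    upstream = LOCATIONS[max(matches, key=len)]
    return None if upstream is None else upstream + uri


if __name__ == "__main__":
    for uri in ("/account/123", "/user/login", "/index.html"):
        print(uri, "->", route(uri))
```

Because account-svc and user-svc are Kubernetes Service names, Nginx can reach them by name from inside the cluster.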

We will now move on and examine Kubernetes Secrets in the next section.

Kubernetes Secrets

We are not finished with the externalized configuration yet. When interacting with Google Cloud Pub/Sub, Google Cloud Identity Platform, and Google Cloud SQL, our application makes use of a credentials file for our service account. Specifically, we used the Compute Engine default service account. We need to upload a copy of that credentials file to our cloud shell and name it credentials.json. Once that is done, we can create the Secret by executing the following command:

kubectl create secret generic credentials-secret --from-file=credentials.json=./credentials.json

We can delete the Secret by executing the following command:

kubectl delete secret credentials-secret

The preceding create command creates the Secret holding the credentials our application uses for each of the Google Cloud services. The key within the Secret is set to look like a filename (credentials.json), and its value is populated from the contents of the file passed to the command. As a result, when the Secret is mounted as a volume, it appears to our containers as a file rather than as the simple name-value pairs we used previously.

The externalized configuration of our microservices is now in place and ready to be used when we deploy our application. We will now move on and examine how containers are deployed and managed in Kubernetes.

Important Note

We will need credentials-secret later in this chapter, so if you have deleted it, please recreate it.

Deploying and configuring the microservices

Kubernetes has several abstractions that represent the state of our system. The key abstractions when deploying our microservices are as follows:

- Pod

- ReplicaSet

- Deployment

- Horizontal Pod Autoscaler

- Service

We will examine each of these abstractions and how we can use them in the following sections.

Kubernetes Pods

A Pod is the smallest unit of deployment in Kubernetes. A Pod is a wrapper around one or more containers that share a network namespace (and therefore an IP address) and resources such as volumes. Most of the time, a Pod consists of a single container, but sometimes it is useful to have multiple tightly coupled containers working together. In our application, a microservice's Pod will contain the microservice container and a container for the Cloud SQL proxy. This is called the sidecar pattern, and it allows us to keep our microservice containers simple while still making use of capabilities such as the Cloud SQL proxy.

We will examine the layout of a Pod manifest by taking it a section at a time:

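apiVersion: v1
kind: Pod
metadata:
  name: account-pod
  labels:
    name: account-pod
spec:
  containers:

The start of our preceding manifest declares the version of the API we are using, specifies that we are declaring a Pod, names the Pod account-pod, gives it the label name: account-pod (which the selectors in the ReplicaSet, Deployment, and Service manifests in this chapter match on), and starts the specification for the containers we will be using.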

The next section deals with the declarations for our account container:

  - name: account
    image: gcr.io/bankingapplication/account-rest:latest
    ports:
    - containerPort: 8080
    volumeMounts:
    - name: secrets-volume
      mountPath: /secrets
      readOnly: true
    envFrom:
    - configMapRef:
        name: account-env-configmap

This part of the manifest declares that the container will be called account and that its image will be pulled from gcr.io/bankingapplication/account-rest:latest. The container's service is exposed on port 8080, and the container mounts the secrets-volume volume at /secrets in read-only mode. Finally, the container populates its environment variables from account-env-configmap.

Next, we have the declaration of the container for the Cloud SQL proxy:

  - name: cloudsql-proxy
    image: gcr.io/cloudsql-docker/gce-proxy:1.21
    command: ["/cloud_sql_proxy",
              "-instances=bankingapplication:europe-west2:mysql-instance=tcp:3306",
              "-ip_address_types=PRIVATE",
              "-credential_file=/secrets/credentials.json"]
    securityContext:
      runAsUser: 2  # non-root user
      allowPrivilegeEscalation: false
    volumeMounts:
    - name: secrets-volume
      mountPath: /secrets
      readOnly: true

This part of the manifest declares that the container will be called cloudsql-proxy and that its image will be pulled from gcr.io/cloudsql-docker/gce-proxy; the available versions are listed on the Cloud SQL Auth proxy GitHub releases page. At the time of writing, the latest release was 1.23, but our manifest pins version 1.21 (gcr.io/cloudsql-docker/gce-proxy:1.21). When the container starts, the /cloud_sql_proxy command is invoked with the parameters needed to connect to our Cloud SQL database on its private IP address using the credentials from our service account, and to accept connections on port 3306 inside the Pod. We have also secured the container so that it does not run as root and its privileges cannot be escalated to root. Finally, the container mounts the secrets-volume volume at /secrets in read-only mode.

That last part of our configuration is the volume we used previously:

  volumes:
  - name: secrets-volume
    secret:
      secretName: credentials-secret

In the preceding code, we exposed our Secret as a volume that can be mounted by containers in our Pod. Specifically, we have exposed the credentials file, which is used to externalize the security configuration that's used to connect to Google Cloud services.

Assuming we have created the preceding file in our cloud shell, we can deploy the Pod by executing the following command:

kubectl apply -f account-pod.yaml

We can delete the Pod by executing the following command:

kubectl delete -f account-pod.yaml

The problem with Pods is that, on their own, they are limited. We have declared our account-pod, but that just gives us a single instance of our Pod. What do we do if we need to scale and have multiple Pod instances? That is where ReplicaSets come in.

Kubernetes ReplicaSets

The purpose of a ReplicaSet is to maintain a set of stateless Pods that are replicas. The ReplicaSet keeps the desired number of Pods active. It acts as a wrapper around our Pod definition. The following manifest shows the declaration of a ReplicaSet:

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: account-rs
spec:
  replicas: 2
  selector:
    matchLabels:
      name: account-pod
  template:
    ...

The preceding code declares a ReplicaSet called account-rs that maintains two Pod replicas. The ReplicaSet identifies its Pods using a selector, which declares a match on the name label with the value account-pod; the labels in the Pod template must match this selector, or Kubernetes rejects the manifest. The omitted part of the file after template is the content of our Pod manifest file, minus its first two lines (apiVersion and kind).
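At its core, a ReplicaSet compares the number of Pods matching its selector with the desired replica count. The Python sketch below models only that comparison; the helper names are hypothetical, and the real controller also tracks Pod ownership and other details that are ignored here.

```python
from typing import Dict, List


def selector_matches(match_labels: Dict[str, str], labels: Dict[str, str]) -> bool:
    """matchLabels semantics: every key/value pair must be present on the Pod."""
    return all(labels.get(key) == value for key, value in match_labels.items())


def pods_to_create(replicas: int, match_labels: Dict[str, str],
                   pod_labels: List[Dict[str, str]]) -> int:
    """Positive: Pods to create from the template. Negative: surplus Pods to delete."""
    matching = sum(1 for labels in pod_labels if selector_matches(match_labels, labels))
    return replicas - matching


if __name__ == "__main__":
    selector = {"name": "account-pod"}
    running = [{"name": "account-pod"}, {"name": "user-pod"}]  # hypothetical cluster
    print(pods_to_create(2, selector, running))  # one more account Pod is needed
```

Extra labels on a Pod do not prevent a match; only the selector's own key/value pairs are checked.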

Assuming we have created the preceding file in our cloud shell, we can deploy the ReplicaSet by executing the following command:

kubectl apply -f account-rs.yaml

We can delete the ReplicaSet by executing the following command:

kubectl delete -f account-rs.yaml

So, now, we can have a stable set of stateless Pods active, but how do we manage different deployment strategies? How do we keep some Pods running while we roll out updates to the ReplicaSet? This is handled by Deployments, and just like how ReplicaSets wrap around Pods, Deployments wrap around ReplicaSets, as we will see in the next section.

Kubernetes Deployments

The purpose of a Deployment is to change Pods and ReplicaSets at a controlled rate. In practice, we do not declare ReplicaSets directly but wrap them with Deployments. Deployments allow us to apply strategies to updates such as rolling updates and provide us with the ability to roll back updates. The following manifest shows the declaration of a Deployment:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: account-deployment
spec:
  replicas: 2
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  selector:
    matchLabels:
      name: account-pod
  template:
    ...

The preceding manifest declares a RollingUpdate strategy, stating that at most one Pod below the desired replica count may be unavailable during an update (maxUnavailable) and that at most one Pod above the desired count may be created while an update is underway (maxSurge). As with the ReplicaSet manifest, it uses a selector to link to the Pod template.
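The arithmetic behind maxSurge and maxUnavailable can be sketched in Python. The rollout_bounds helper is hypothetical; it follows the rounding rule the Kubernetes Deployment documentation gives for percentage values (maxSurge rounds up, maxUnavailable rounds down) and ignores other controller details.

```python
import math
from typing import Tuple, Union

Value = Union[int, str]  # an absolute number such as 1, or a percentage such as "25%"


def rollout_bounds(replicas: int, max_surge: Value, max_unavailable: Value) -> Tuple[int, int]:
    """Return (maximum total Pods, minimum available Pods) during a rolling update."""
    def absolute(value: Value, round_up: bool) -> int:
        if isinstance(value, str) and value.endswith("%"):
            raw = replicas * int(value[:-1]) / 100
            # Percentages: maxSurge rounds up, maxUnavailable rounds down.
            return math.ceil(raw) if round_up else math.floor(raw)
        return int(value)

    surge = absolute(max_surge, round_up=True)
    unavailable = absolute(max_unavailable, round_up=False)
    return replicas + surge, replicas - unavailable


if __name__ == "__main__":
    # The values from account-deployment: 2 replicas, maxSurge 1, maxUnavailable 1.
    print(rollout_bounds(2, 1, 1))
```

For account-deployment, this gives at most three Pods in existence and at least one available at any point during an update.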

Assuming we have created the preceding file in our cloud shell, we can deploy the Deployment by executing the following command:

kubectl apply -f account-deployment.yaml --record

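Kubernetes keeps a revision for each change to a Deployment's Pod template, and these revisions are the rollback points we can return to if there is a problem with an update. The --record switch in the preceding command stores the command that made the change in the revision's change-cause annotation, so that each revision is easier to identify; note that this switch has since been deprecated in newer kubectl releases. We can see the available revisions by executing the following command: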

kubectl rollout history deployment account-deployment

We can roll back to a revision by executing the following command:

kubectl rollout undo deployment account-deployment --to-revision=1

We can delete the Deployment by executing the following command:

kubectl delete -f account-deployment.yaml

With that, we have organized how we can deploy our containers in a way that protects the availability of existing services and allows us to roll back updates to previous update points. Now, let's look at how we can automatically horizontally scale our Pods in and out. This is covered in the next section.

Kubernetes Horizontal Pod Autoscalers

The purpose of a Horizontal Pod Autoscaler (HPA) is to add and remove Pods in a ReplicaSet based on the resource metrics we define to best match the resource consumption to our workload needs.

The following manifest declares an HPA for our Deployment:

apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: account-hpa
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: account-deployment
  minReplicas: 1
  maxReplicas: 10
  targetCPUUtilizationPercentage: 75

The preceding manifest declares an HPA called account-hpa for the Deployment called account-deployment. It specifies a minimum of 1 replica and a maximum of 10. Finally, it sets a target average CPU utilization of 75%: the HPA adds Pods when utilization rises above this target and removes them when it falls well below it. Utilization is measured as a percentage of the CPU requested by the Pod's containers.
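The core of the HPA's decision is documented as desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). A simplified Python version follows; it assumes the default tolerance of 0.1 and ignores scale-down stabilization and not-yet-ready Pods, and the function name is hypothetical.

```python
import math


def desired_replicas(current: int, current_util: float, target_util: float,
                     min_replicas: int, max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA calculation for a single CPU utilization metric."""
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        desired = current  # close enough to the target: leave the replica count alone
    else:
        desired = math.ceil(current * ratio)
    # Clamp to the minReplicas/maxReplicas bounds declared in the HPA manifest.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # account-hpa: minReplicas 1, maxReplicas 10, target 75% CPU.
    print(desired_replicas(2, 150, 75, 1, 10))  # two busy Pods double to four
    print(desired_replicas(4, 30, 75, 1, 10))   # a quiet workload scales in
```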

Assuming we have created the preceding file in our cloud shell, we can deploy the HPA by executing the following command:

kubectl apply -f account-hpa.yaml

We can delete the HPA by executing the following command:

kubectl delete -f account-hpa.yaml

This concludes what we need to do for our microservices to run, scale, and be managed effectively, with minimal downtime while the application is being updated. We will now move on to how we can access the Pods in a ReplicaSet as a unit, without having to address a specific Pod. We will do this using Services.

Kubernetes Services

The purpose of Services is to present a frontend for our ReplicaSets or Pods and provide a single point of entry that will load balance across the Pods that have been identified.

The following manifest declares a Service for our Pods, identified by the name account-pod:

apiVersion: v1
kind: Service
metadata:
  name: account-svc
spec:
  type: NodePort
  selector:
    name: account-pod
  ports:
  - protocol: TCP
    port: 8080
    targetPort: 8080

The preceding manifest declares a Service called account-svc that routes requests to the account-pod instances in our ReplicaSet. It maps the port our service exposes, which is 8080, to the port our Pods expose, which is also 8080.
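Conceptually, the Service keeps a list of endpoints: the addresses of the ready Pods whose labels match its selector, each paired with targetPort. The Python sketch below models that selection under simplifying assumptions (the Pod IPs and the user-pod label are hypothetical, and only readiness and labels are considered); it is not how Kubernetes itself tracks endpoints.

```python
from typing import Dict, List


def endpoints(selector: Dict[str, str], pods: List[dict], target_port: int) -> List[str]:
    """Return "ip:targetPort" for each ready Pod whose labels match the selector."""
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if pod["ready"] and all(pod["labels"].get(k) == v for k, v in selector.items())
    ]


if __name__ == "__main__":
    pods = [  # hypothetical Pods
        {"ip": "10.8.0.5", "ready": True, "labels": {"name": "account-pod"}},
        {"ip": "10.8.1.7", "ready": False, "labels": {"name": "account-pod"}},
        {"ip": "10.8.0.9", "ready": True, "labels": {"name": "user-pod"}},
    ]
    print(endpoints({"name": "account-pod"}, pods, 8080))
```

Requests sent to account-svc on port 8080 are spread across whatever addresses this selection yields.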

Of note here is the type of Service. The type is important because of the behavior it describes. The following are the most important types:

- ClusterIP: Exposes the Service on a cluster-internal IP address. The Service can be accessed by other Pods from within the cluster.

- NodePort: Builds on top of ClusterIP and additionally exposes the Service on a generated port on every Node's IP address. Traffic arriving at that port on any Node is forwarded to a matching Pod, which may be running on a different Node. This type is often used in conjunction with Ingress, as we will see later in this chapter.

- LoadBalancer: Builds on top of NodePort and provisions an external Google Cloud network (TCP/UDP) load balancer that routes traffic from outside our cluster to the Service. An HTTP(S) load balancer, by contrast, is created by an Ingress, as we will see later in this chapter.

Assuming we have created the preceding file in our cloud shell, we can deploy the Service by executing the following command:

kubectl apply -f account-service.yaml

We can delete the Service by executing the following command:

kubectl delete -f account-service.yaml

We now have the manifests needed to create a Deployment that manages a ReplicaSet that manages instances of our Pod. We also have the manifests for an HPA and a Service. We will now look at how we can automate applying these manifests and the manifests for our ConfigMap instances using Google Cloud Build.

Automating the deployment of our components

In this section, we will learn how to automate the deployment of our application components. The source code for this deployment can be found in the Chapter 16 folder of this book's GitHub repository: https://github.com/PacktPublishing/Modernizing-Applications-with-Google-Cloud-Platform/tree/master/Chapter%2016. In this folder, there is a project called gke-deploy. Copy that into the local repository that is linked to our Google Cloud source repository.

The structure of our project is as follows:

gke-deploy
  certificate
    certificate.yaml
    cloudBuild.yaml
  ingress
    cloudBuild.yaml
    cloudBuild2.yaml
    ingress.yaml
    ingress2.yaml
  microservices
    account
      account-deployment.yaml
      account-env.yaml
      account-hpa.yaml
      account-service.yaml
    front-end
      front-end-deployment.yaml
      front-end-hpa.yaml
      front-end-service.yaml
      nginx-config.yaml
    user
      user-deployment.yaml
      user-env.yaml
      user-hpa.yaml
      user-service.yaml
    cloudBuild.yaml

For now, ignore the certificate and ingress folders; we will cover those in the next section. In this section, we are focusing on deploying the three microservices (account, user, and front-end) and all the components and configurations they need to run. In Google Cloud Build, create a new build trigger using the gke-deploy/microservices/cloudBuild.yaml file. The content of this file is shown in the following fragments.

The first fragment deploys all the component parts needed for our account microservice:

steps:
- name: 'gcr.io/cloud-builders/kubectl'
  args: ['apply', '-f', 'account-env.yaml', '-f', 'account-deployment.yaml', '-f', 'account-hpa.yaml', '-f', 'account-service.yaml']
  dir: 'gke-deploy/microservices/account'
  env:
  - 'CLOUDSDK_COMPUTE_ZONE=europe-west2-c'
  - 'CLOUDSDK_CONTAINER_CLUSTER=banking-cluster'

The following fragment deploys all the components needed for our user microservice:

- name: 'gcr.io/cloud-builders/kubectl'
  args: ['apply', '-f', 'user-env.yaml', '-f', 'user-deployment.yaml', '-f', 'user-hpa.yaml', '-f', 'user-service.yaml']
  dir: 'gke-deploy/microservices/user'
  env:
  - 'CLOUDSDK_COMPUTE_ZONE=europe-west2-c'
  - 'CLOUDSDK_CONTAINER_CLUSTER=banking-cluster'

The final fragment deploys all the components needed for our front-end microservice:

- name: 'gcr.io/cloud-builders/kubectl'
  args: ['apply', '-f', 'nginx-config.yaml', '-f', 'front-end-deployment.yaml', '-f', 'front-end-hpa.yaml', '-f', 'front-end-service.yaml']
  dir: 'gke-deploy/microservices/front-end'
  env:
  - 'CLOUDSDK_COMPUTE_ZONE=europe-west2-c'
  - 'CLOUDSDK_CONTAINER_CLUSTER=banking-cluster'

The preceding build provisions all the items we discussed previously, except for the credentials Secret, which we deploy manually before the other items are deployed automatically by Google Cloud Build. Once the build trigger is in place, we can add, commit, and push to our Google Cloud source repository and watch the deployment happen.

Once the build has been completed successfully, our microservices will be provisioned in GKE and ready to use. But how do we test and use our application? The services are ready but have not been exposed on public endpoints with hostnames provided by DNS. We will cover how to do that in the following section.

Configuring public access to the application

We will now look at how we can expose our services to the outside world and make them available on public endpoints. The services we have created thus far are of the NodePort type, which means they are exposed on a port generated by the Service on the IP address of every Node in the cluster. The IP address of each Node is a private IP address on our VPC. To expose our services securely to the outside world, we need three things in place. First, we need a public static IP address so that we can map our hostname in our DNS to that address. Next, we need an SSL certificate so that we can use HTTPS. Finally, we need a way to route traffic from the public IP address and hostname to our services. This is provided by Ingress.

We create the public static IP address called banking-ip as we did when setting up the Google HTTP(S) load balancer for our virtual machine infrastructure. Ensure that your DNS provider has an A (address) record that resolves the hostname we will use to this static IP address.

Now, we will learn how to use a Google-managed certificate to enable HTTPS for our application.

Kubernetes-managed certificates

Kubernetes-managed certificates are wrappers around Google-managed certificates, which are SSL certificates that Google creates and manages for us. These certificates are limited compared to self-managed certificates in that they do not demonstrate the identity of the organization and do not support wildcards.

Support for Kubernetes-managed certificates is in beta, so we may run into problems when asking Google Cloud to provision a certificate for us. In particular, if a managed certificate for the hostname we want to use already exists in our Google Cloud project, provisioning will fail. To check the existing certificates, enter the following commands in Cloud Shell:

gcloud config set project bankingapplication

gcloud beta compute ssl-certificates list

If you see a conflicting certificate, you can delete it with the following command:

gcloud beta compute ssl-certificates delete <certificate_name>
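With many certificates in a project, spotting the conflicting one by eye can be tedious. The following sketch filters the JSON output of `gcloud beta compute ssl-certificates list --format=json` for managed certificates that already cover our hostname. It is an assumption on our part that the relevant fields are `type`, `managed.domains`, and `subjectAlternativeNames`, as in the Compute Engine SslCertificate resource; the function name is hypothetical.

```python
import json
from typing import List


def conflicting_certificates(list_json: str, hostname: str) -> List[str]:
    """Names of Google-managed certificates that already cover `hostname`.

    `list_json` is assumed to be the output of
    `gcloud beta compute ssl-certificates list --format=json`.
    """
    wanted = hostname.lower()
    conflicts = []
    for cert in json.loads(list_json):
        if cert.get("type") != "MANAGED":
            continue  # only existing managed certificates block provisioning
        # Collect every domain the certificate claims, from either field.
        domains = set(cert.get("managed", {}).get("domains", []))
        domains.update(cert.get("subjectAlternativeNames", []))
        if wanted in {d.lower() for d in domains}:
            conflicts.append(cert["name"])
    return conflicts
```

Each name returned can then be passed to the `gcloud beta compute ssl-certificates delete` command shown above.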

We are now ready to learn how Kubernetes-managed certificates are provisioned. In the certificate folder of our gke-deploy project, there are two files: certificate.yaml and cloudBuild.yaml. The certificate.yaml file is the manifest for our managed certificate and looks like this:

apiVersion: networking.gke.io/v1beta1
kind: ManagedCertificate
metadata:
  name: banking-certificate
spec:
  domains:
    - www.banking.jasonmarston.me.uk

In the preceding manifest, we have declared that our certificate is named banking-certificate and that it is for the www.banking.jasonmarston.me.uk domain.
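If several environments need their own certificate, the manifest can be generated instead of copied. The following is a minimal sketch with a hypothetical helper that builds the same ManagedCertificate object as certificate.yaml and rejects wildcard domains, which Google-managed certificates do not support. It emits JSON rather than YAML only because Python's standard library has no YAML writer; `kubectl apply` accepts either.

```python
import json
from typing import List


def managed_certificate(name: str, domains: List[str]) -> dict:
    """Build a ManagedCertificate manifest equivalent to certificate.yaml."""
    if not domains:
        raise ValueError("at least one domain is required")
    for domain in domains:
        # Google-managed certificates cannot cover wildcard domains.
        if "*" in domain:
            raise ValueError(f"wildcard domains are not supported: {domain}")
    return {
        "apiVersion": "networking.gke.io/v1beta1",
        "kind": "ManagedCertificate",
        "metadata": {"name": name},
        "spec": {"domains": list(domains)},
    }


if __name__ == "__main__":
    manifest = managed_certificate("banking-certificate",
                                   ["www.banking.jasonmarston.me.uk"])
    print(json.dumps(manifest, indent=2))
```

The printed output could be piped straight into `kubectl apply -f -`.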

The cloudBuild.yaml file defines the build for our certificate:

steps:
- name: 'gcr.io/cloud-builders/kubectl'
  args: ['apply', '-f', 'certificate.yaml']
  dir: 'gke-deploy/certificate'
  env:
  - 'CLOUDSDK_COMPUTE_ZONE=europe-west2-c'
  - 'CLOUDSDK_CONTAINER_CLUSTER=banking-cluster'

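The preceding listing uses the kubectl cloud builder to apply our certificate.yaml file from the gke-deploy/certificate directory. The CLOUDSDK_COMPUTE_ZONE and CLOUDSDK_CONTAINER_CLUSTER environment variables tell the builder which cluster (banking-cluster in europe-west2-c) to fetch credentials for before it runs kubectl.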
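The certificate build and the Ingress build share the same shape: one kubectl step, a working directory, and the two cluster variables. The following sketch, using a hypothetical helper, produces that shape as a Cloud Build config, which Cloud Build accepts in JSON as well as YAML. We have not seen the contents of cloudBuild2.yaml, so the Ingress arguments in the usage line (`ingress2.yaml`, `gke-deploy/ingress`) are assumptions for illustration only.

```python
import json


def kubectl_apply_build(manifest: str, directory: str,
                        zone: str = "europe-west2-c",
                        cluster: str = "banking-cluster") -> dict:
    """Cloud Build config with a single step that runs `kubectl apply -f manifest`."""
    return {
        "steps": [{
            "name": "gcr.io/cloud-builders/kubectl",
            "args": ["apply", "-f", manifest],
            # Cloud Build runs the step from this directory of the repository.
            "dir": directory,
            # The kubectl builder reads these to fetch credentials for the cluster.
            "env": [
                f"CLOUDSDK_COMPUTE_ZONE={zone}",
                f"CLOUDSDK_CONTAINER_CLUSTER={cluster}",
            ],
        }]
    }


if __name__ == "__main__":
    # Equivalent to the certificate build shown above.
    print(json.dumps(kubectl_apply_build("certificate.yaml", "gke-deploy/certificate"), indent=2))
    # Hypothetical Ingress build; adjust to match your repository layout.
    print(json.dumps(kubectl_apply_build("ingress2.yaml", "gke-deploy/ingress"), indent=2))
```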

We will not execute the cloud build for our certificate yet as it goes hand in hand with our Ingress. Instead, we will apply both the certificate and Ingress in the next section.

Kubernetes Ingress

Ingress is a Kubernetes object that exposes one or more Services to clients outside of our cluster. It defines a set of rules for routing traffic from specific URLs to specific services. The initial version of the Ingress manifest file (ingress.yaml) we will examine looks as follows:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: banking-ingress
  annotations:
    kubernetes.io/ingress.allow-http: "false"
    kubernetes.io/ingress.global-static-ip-name: banking-ip
    networking.gke.io/managed-certificates: banking-certificate
spec:
  rules:
  - host: www.banking.jasonmarston.me.uk
    http:
      paths:
      - path:
        backend:
          serviceName: front-end-svc
          servicePort: 80

The annotations in the preceding metadata section specify that we will accept only HTTPS traffic, that we will use the public static IP address called banking-ip, and that we will use the certificate called banking-certificate to enable SSL. The spec section defines a single rule: all incoming traffic for the host www.banking.jasonmarston.me.uk, on any path, is routed to our front-end-svc service on port 80. While this works, it means that all traffic to user-svc and account-svc goes through front-end-svc, which can become a bottleneck. Instead, in ingress2.yaml, we have specified additional rules, as shown in the following fragment:

spec:
  rules:
  - host: www.banking.jasonmarston.me.uk
    http:
      paths:
      - path: /account
        backend:
          serviceName: account-svc
          servicePort: 8080
      - path: /account/*
        backend:
          serviceName: account-svc
          servicePort: 8080
      - path: /user/*
        backend:
          serviceName: user-svc
          servicePort: 8080
      - path:
        backend:
          serviceName: front-end-svc
          servicePort: 80

In the preceding fragment, we have specified that traffic to /account or /account/* is routed to account-svc on port 8080, traffic to /user/* is routed to user-svc on port 8080, and all other traffic is routed to front-end-svc on port 80. Both /account and /account/* are needed because a path ending in /* matches everything beneath that prefix but not the bare prefix itself. As a result, front-end-svc is no longer a bottleneck, and traffic is routed directly to the intended service.
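To reason about which service will receive a given request, it helps to model the rules. The following sketch is a simplified model of how we understand the GKE Ingress (Google HTTP(S) load balancer) matching to work: a path without a wildcard matches exactly, a path ending in /* matches that prefix and anything beneath it, the most specific (longest) pattern wins, and an empty path is a catch-all. It is not Google's implementation, and the names are hypothetical.

```python
from typing import List, Optional, Tuple

# (path pattern, service name, service port); None stands for the empty catch-all path.
Rule = Tuple[Optional[str], str, int]

INGRESS2_RULES: List[Rule] = [
    ("/account", "account-svc", 8080),
    ("/account/*", "account-svc", 8080),
    ("/user/*", "user-svc", 8080),
    (None, "front-end-svc", 80),
]


def matches(pattern: Optional[str], path: str) -> bool:
    if pattern is None:
        return True  # catch-all rule
    if pattern.endswith("/*"):
        # "/user/*" matches "/user/" and anything under it, but not "/user".
        return path.startswith(pattern[:-1])
    return path == pattern  # no wildcard: exact match only


def route(host: str, path: str, rules: List[Rule] = INGRESS2_RULES,
          rule_host: str = "www.banking.jasonmarston.me.uk") -> Optional[Tuple[str, int]]:
    """Return (service, port) for a request, or None if no rule applies."""
    if host.lower() != rule_host:
        return None  # left to the Ingress default backend, not our services
    candidates = [rule for rule in rules if matches(rule[0], path)]
    if not candidates:
        return None
    # The longest pattern is the most specific; the catch-all counts as length 0.
    best = max(candidates, key=lambda rule: len(rule[0] or ""))
    return best[1], best[2]
```

Under this model, a request for /user on its own falls through to front-end-svc, because, unlike /account, the fragment has no exact /user rule.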

Now, we can set up Cloud Build triggers for our managed certificate and Ingress manifests. Use cloudBuild2.yaml for the Ingress build. Once these have been set up, run them manually from Cloud Console.

Provisioning certificates and setting up Ingress can be a lengthy process, because Kubernetes needs to create a Google HTTP(S) load balancer and provision the certificate. In our experience, it typically takes around 30 minutes from the completion of the cloud builds until the application is available.

If the Ingress is not ready yet, you may see the following errors in the browser:

- This site can't be reached: Generally, the Google HTTP(S) load balancer has not been provisioned yet.

- Certificate or cipher errors: The certificate has either not been provisioned yet, or it has been provisioned but not yet applied and propagated to where it is needed.
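Rather than refreshing the browser for half an hour, we can poll the endpoint from a script. The following is a minimal sketch using the Python standard library; the function names, the 45-minute timeout, and the one-minute interval are our own assumptions. `probe` maps the two browser symptoms listed above onto a connection failure and a TLS failure, and `wait_until_ready` retries until the endpoint is ready or the deadline passes.

```python
import socket
import ssl
import time
from typing import Callable


def probe(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Return 'ready', 'tls-error' or 'unreachable' for an HTTPS endpoint."""
    context = ssl.create_default_context()  # verifies the chain and the hostname
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host):
                return "ready"
    except ssl.SSLError:
        # Certificate not yet provisioned or propagated (browser certificate/cipher errors).
        # Checked first because ssl.SSLError is a subclass of OSError.
        return "tls-error"
    except OSError:
        # Nothing answering yet (the browser's "This site can't be reached").
        return "unreachable"


def wait_until_ready(check: Callable[[], str], timeout_s: float = 45 * 60,
                     interval_s: float = 60,
                     sleep: Callable[[float], None] = time.sleep,
                     clock: Callable[[], float] = time.monotonic) -> bool:
    """Call `check` until it reports 'ready'; give up after `timeout_s` seconds."""
    deadline = clock() + timeout_s
    while True:
        status = check()
        print(f"status: {status}")
        if status == "ready":
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

A typical call would be `wait_until_ready(lambda: probe("www.banking.jasonmarston.me.uk"))`. The `sleep` and `clock` parameters are there only so that the loop can be tested without waiting.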

We now have a fully working GKE-based environment for our banking application.

When to use GKE

GKE should be used as the deployment environment when we are deploying containers and need complex orchestration.

The following list explains some reasons why we would want to use GKE to host our containers:

- We need stateful containers.

- We need to use ConfigMaps or Secrets.

- We need to have complex Ingress routing.

- We need the services to be hosted on a VPC.

- We need at least one instance of our container to be active at all times.

- We have special host needs such as GPUs or Windows operating systems on our Nodes.

Now that we have learned about when to use GKE, we can move on to the next section and review what we have learned in this chapter.

Summary

In this chapter, we learned about GKE and how it orchestrates and manages the deployment, configuration, and scaling of our container-based microservices. We learned how to provide configuration for our microservices using Kubernetes ConfigMaps and Secrets, and how to manage true secrets using Google Secret Manager. We then learned how to configure and deploy our microservices and how to expose them securely to a public endpoint so that they can be accessed from outside the cluster. Finally, we learned when to use GKE.

With the information provided in this chapter, we can decide whether GKE is appropriate for hosting our application microservices and, if it is, how to provision a GKE cluster, deploy and configure our microservices, and provide a public endpoint that users can access from a web browser.

In the next chapter, we will learn how to deploy and run our microservices in the most mature serverless platform on Google Cloud, Google App Engine (Flexible).