Reaction to Robot Design: Cute, Creepy, and Everything in Between

Regarding Robot Appearance: Disagreement Among Robot Makers

| Don’t make them humanlike | Make them humanlike |
| --- | --- |
| “It’s interesting. When you start trying to make robots look more human, you end up making them look more grotesque.” Colin Angle, Founder of iRobot (Wired.com interview with Colin Angle, October 2010) | “In designing human-inspired robotics, we hold our machines to the highest standards we know, humanlike robots being the apex of bio-inspired engineering.” David Hanson, Founder of Hanson Robotics (Hanson 2011) |

Chapter Overview

A robot’s appearance is critical to people’s interest in interacting with it. In this chapter, we discuss the four general appearances robots can assume: (1) mechanical, (2) android, (3) humanoid, and (4) zoological. We then explore two fundamental issues associated with robot design. The first is how human to make a robot look. On this topic, we discuss the concept of the “Uncanny Valley,” which, in short, suggests that a robot that is almost, but not perfectly, humanlike will backfire by engendering feelings of fear and discomfort in people. Yet the public is also resistant to robots that look exactly like humans, which suggests that an android direction is likely not the optimal choice for widespread robot design, at least not in the near term (a key exception is discussed in this chapter). The second design issue we explore is how cute to make robots, drawing on the theory of kinderschema (the set of youthful features that triggers an innate human desire to care for and bond with the entities displaying them). Even though robots are advanced and sophisticated machines, elements of cuteness go a long way in stimulating human trust and desire to interact with them. We also look at robot face design, and the pros and cons of fixed-face versus screened-face robots. We share the results of our studies with American consumers, which tested their reactions to a variety of robot appearances. We also discuss several examples of excellent design for human-interactive robots, drawing from robots currently available or in late-stage development.

Four General Robot Appearances

What should robots look like? This is a critical question for the creators of any robot that will interact with humans. Humans are visual creatures; we react to people and objects in our lives based significantly on their appearance, at least at first. Psychological research suggests people’s initial reactions to a robot they encounter will be immediate (first impressions matter!) and generally driven by subconscious cues triggered by its appearance (Aarts and Dijksterhuis 2000; Shibata 2004). How robots look will greatly influence our desire to interact with them: how much we trust them, enjoy their company, or fear them.

There are four general appearances robots can assume:

- Mechanical. These are robots that do not attempt to look human in any way. They lack discernible faces or eyes. As a result, if you wished to communicate with one of them, you would not be sure on which part of its body you should focus. They generally do not have two arms or legs. Their wires or gears may be visible. Mechanical robots are mainly designed for function, and there is no attempt to hide that they are machines. From the entertainment media, R2D2 from the Star Wars franchise is an example. Regarding real-world robots discussed so far in this book, the TUG delivery robots and the K3 and K5 security robots are prime examples.

- Android. These robots are the exact opposite of their mechanical counterparts. They are meant to look as human as possible. The perfect android would be one that you cannot tell from a human, by look or by touch. From the entertainment media, the replicants in Blade Runner are androids, as are the residents of Westworld (all played by actual humans, of course). In the real world, Sophia from Hanson Robotics and the robotic Chinese news reporter (discussed in Chapters 1 and 4, respectively) are androids.

- Humanoid. These robots are somewhere between mechanical and android. They have the basic features of a human, in particular a discernible face and eyes. As a result, you have a comfortable place to look when you interact with them, making exchanges more natural than they would be with a mechanical robot. They also generally have two arms, two legs, and a torso, though some move on wheels. However, they do not try to pass for human. Although they are made in the general shape of a human, they are clearly robotlike. From the fictional world, C3PO of the Star Wars franchise is an excellent example of a humanoid robot. From the real world, Connie the Hilton concierge robot is a humanoid robot example, as is SoftBank’s Pepper.

- Within the humanoid robot segment, we have a cuteness spectrum. Humanoid robots can range from cute (with features such as small stature, large eyes, infant-like round heads) to less cute (harder edges, smaller eyes, larger in size). We will dig further into the impact of cuteness later in the chapter.

- Zoological. These are robots made to look like animals. They can be more realistic in style (like Paro the robot seal, discussed in Chapter 5) or more mechanical (like Chip the robot dog, discussed in Chapter 1). There are also zoological robots, such as Boston Dynamics’ SpotMini, which generally resemble animals due to their four legs and overall posture, though they are far rougher and more mechanical in appearance (they can be considered borderline between zoological and mechanical).

See Figure 6.1 for examples of each.

Figure 6.1 Four types of robot appearance

Experts from the world of architecture and design tell us “form follows function.” Hence, a robot meant to serve as a pet would benefit from being zoological in style. A robot meant to interact with humans in a social or companion-like manner would be better designed as a humanoid or android. A robot meant to carry out a more “workman” role (such as making deliveries) might be better designed in a mechanical style meant to fit its purpose. Yet, it is not quite that simple. There are still two major consumer-oriented questions that robot designers struggle with, namely: (a) how realistically human should robots look and (b) should they be designed to appear more cute or more professional/sophisticated looking? Specifically:

- Human likeness. Should robots look as human as possible? After all, we humans are used to interacting with other humans, such as looking into people’s eyes and faces when we communicate. Hence, why not make robots in our own image to facilitate human-robot interactions (as David Hanson argues in his quote at the start of the chapter)? Or, on the other hand, should robots maintain a distinctly mechanical look, so we are constantly reminded they are made of wires and circuits (closer to the position of Colin Angle, as reflected in his quote at the start of the chapter)? Perhaps something in between?

- Cuteness. Should robots be made to look cute and endearing? Would this put people more at ease when they interact with them? Or, should robots look more formal and sophisticated, to reflect their intelligence and advanced computing capabilities?

In this chapter, we will review the main findings from the significant amount of research that has been conducted in these areas over the past several years by academics and industry researchers, as well as from original research we conducted for this book.

How Human Should a Robot Look?

There is a fairly strong case to be made that social and companion robots, or any robot humans will regularly converse with, should be at least somewhat humanoid in appearance—meaning at least having something that can be considered a face, and having the general shape of a human. Researchers argue that some degree of humanoid likeness helps facilitate human interaction (Riek et al. 2009; Goetz et al. 2003). For instance, humans, by nature, look at faces during conversations. If we are to be waited on by a robotic waitress, for example, we would naturally want to know where its face and eyes are when we ask about the daily specials, rather than talk to a moving pile of wires and circuits. Also, our homes and buildings are made for human movement. For example, two legs work great on stairs, while wheels do not. Hence, there is an argument to be made that robots we will interact with regularly in our home and community environments should be humanoid to some degree. But to what extent should we make robots in our own image? Should we follow this logic to the extreme, and make fully humanlike androids?

Robots that look as human as possible have long been the vision of many science fiction books, films, and TV shows (the replicants in Blade Runner, Ash and Bishop in the Alien franchise, Lieutenant Commander Data in Star Trek: The Next Generation, and a large cast of characters in Westworld). It is also the goal of numerous robotic developers today. What reaction can we expect from consumers if they are asked to interact with increasingly humanlike robots?

Imagine tomorrow you walk into your insurance company’s customer service office to settle some outstanding claims, and you stroll up to the reception desk. Instead of the human receptionist you might have been expecting, you are greeted by Nadine, a humanlike android developed at the Nanyang Technological University in Singapore. Nadine is intelligent enough to hold her own in a conversation with you, and also has the ability to move independently. Take a close look at Nadine (Figure 6.2):

Figure 6.2 Nadine

Are you feeling at all uncomfortable? Suppose she was moving and talking to you (go to this site to watch her in a video: www.youtube.com/watch?v=GUnQpwSceEk). You notice Nadine looks fairly human, but something is not quite right. She has many human features, but she is clearly not a real human. Would you be at all uncomfortable interacting with Nadine the first time? The first several times? If you believe interacting with Nadine would put you somewhat ill at ease, you are experiencing a psychological phenomenon known as the Uncanny Valley.

The “Uncanny Valley” phenomenon was originally proposed by a Japanese professor and roboticist named Masahiro Mori in 1970. However, it was not until 35 years later, in 2005, that his article was translated into English and began to receive significant attention across the globe (Grabianowski 2017). In his article, Mori explained that humans tend to have a higher affinity for robots as they become generally more humanoid, until they reach a point where they are almost humanlike except for a few flaws. At this point, we become wary of the robot and our affinity for it drops sharply. Thus, if we plot our affinity for robots as they become more and more humanlike, we see a steep drop-off that occurs when robots are very close to, but not quite, humanlike; this drop-off is what Mori named the Uncanny Valley. Furthermore, Mori believed that the size of this drop-off would be magnified considerably if the robots had any degree of movement or animation (Mori et al. 2012). Many research studies since Mori’s first publication have supported this phenomenon (Gray and Wegner 2012; Mathur and Reichling 2016; Ho and MacDorman 2017).
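The shape Mori described can be made concrete with a toy curve. The following Python sketch is purely illustrative: the functional form (a rising line minus a narrow Gaussian dip) and every parameter value (`valley_center`, `valley_depth`, `valley_width`, `movement_factor`) are assumptions chosen to reproduce the qualitative shape, not values taken from Mori’s article or fitted to any data.

```python
import math


def toy_affinity(likeness, moving=False,
                 valley_center=0.85, valley_depth=1.2,
                 valley_width=0.05, movement_factor=1.5):
    """Toy affinity score for a robot with human likeness in [0, 1].

    Hypothetical model: affinity rises linearly with likeness, minus a
    narrow Gaussian dip placed just short of full human likeness.
    All parameter values are illustrative assumptions.
    """
    if not 0.0 <= likeness <= 1.0:
        raise ValueError("likeness must be between 0 and 1")
    # Movement deepens the valley, as Mori suggested.
    depth = valley_depth * (movement_factor if moving else 1.0)
    dip = depth * math.exp(-((likeness - valley_center) ** 2)
                           / (2 * valley_width ** 2))
    return likeness - dip


if __name__ == "__main__":
    for likeness in (0.0, 0.3, 0.6, 0.85, 1.0):
        still = toy_affinity(likeness)
        moving = toy_affinity(likeness, moving=True)
        print(f"likeness={likeness:.2f}  still={still:+.2f}  moving={moving:+.2f}")
```

In this toy model, affinity climbs as likeness increases, collapses near the assumed valley center, and recovers at full human likeness; setting `moving=True` deepens the dip.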

Why does this phenomenon occur? Why does human comfort regarding a robot drop dramatically when its appearance becomes very close to but not quite human? A number of theories have been proposed, and we will review the most prevalent.

Perhaps the most straightforward theory is that the “Uncanny Valley” takes place when we see something right at the boundary of one category to another, where we are not quite sure how to categorize what we are seeing. In this case it is the boundary between clearly not human (doll or mechanical robot) and an actual human. When something exists right at this boundary, our brain does not know how to categorize it—we cannot say if it is an inanimate object or a living human—and hence we get an uneasy feeling (Looser and Wheatley 2010).

A related theory posits that as a robot becomes almost identical in appearance to humans, observers may believe the robot can have some sort of conscious mind. The observer wonders, even if only slightly, if the robot might have the ability to sense and experience things, to feel, to be “alive” in some manner. This possibility, even if slight, causes the observers to become ill at ease (Gray and Wegner 2012).

Another theory suggests that when a robot closely resembles a human, the human brain subconsciously begins to consider the robot as a possible human, albeit a human with some sort of “problem.” This classification comes with a number of imposed expectations about how a human should look, and if any of these expectations are not met, the brain perceives a problem. This is referred to by cognitive processing researchers as expectancy violation (Hsu 2012). For example, the brain sees enough cues to think a robot is a human, but then perceives slight variations that make it clear it is not fully human. This can raise red flags in the observer’s subconscious brain, and even trigger a fight or flight response. On some level, our brain may perceive this slightly off human as possibly diseased, deranged, or in some other manner a danger to us (MacDorman and Ishiguro 2006).

The “Uncanny Valley” phenomenon becomes even more pronounced regarding robots that move, which will be the vast majority of them in the near future. Neuroscience supplies some insights in this regard. Researchers have pinpointed many of the exact areas of the human brain that are involved in different mental processes. For example, our brain’s visual cortex is heavily involved in helping us process sensory information from our eyes, while our motor cortex helps us direct the movements of our muscles. Between these two regions lies the parietal cortex, which works as a link between the visual and motor cortices, and helps us learn how to do something by watching and mimicking someone else. This watching and mimicking process is hardwired into humans, as we have relied upon it since we were infants; this is how we learned to walk, eat with utensils, and throw a baseball (McElroy 2013).

Interestingly, it is theorized that this process also explains the “Uncanny Valley” phenomenon for mobile robots. When we see an almost humanlike android perform an action in a not-quite-humanlike manner, our parietal cortex becomes confused. On the one hand, the visual stimuli it receives indicate that the observer is looking at a human being, but on the other hand, the motor stimuli it receives suggest that the motion the observer is viewing is more robotic than human (and, thus, impossible to truly mimic). When the parietal cortex is unable to immediately reconcile the inconsistency between the humanlike appearance of the android and the machinelike nature of its movements, it signals the rest of the brain that the being is something that it does not understand (Brown 2011). As a result, the brain identifies the robot as something of which it might need to be wary (SciShow 2016).

This theory is supported by a 2012 article in the journal Social Cognitive and Affective Neuroscience. In the article, there is a review of a study in which the participants were monitored by an fMRI machine while being shown three video clips of different entities performing familiar actions (sipping from a glass of water, waving to the camera, etc.). One clip showed these actions being performed by a clearly mechanical robot (with visible wiring and metal), another clip showed them being performed by a humanlike android, and the last clip showed them being performed by an actual human. Participants watching the clips of the actual human and the mechanical-looking robot showed no unusual activity on their fMRI scan. However, when participants viewed the clip of the almost humanlike android performing the actions, their parietal cortices lit up with intense activity. The researchers concluded that this was the result of the effort undertaken by the parietal cortex to try and comprehend what it was seeing, thus providing evidence of the occurrence of the “Uncanny Valley” phenomenon (Saygin et al. 2012).

The notion of the “Uncanny Valley” has been applied to a range of other contexts as well as robotics. From animated film characters to virtual reality avatars to puppets, a wide range of almost-but-not-quite-human entities can cause individuals to experience the “Uncanny Valley” phenomenon. For example, one of the most common criticisms of the 2004 film The Polar Express was that the unusual style of animation was eerie and off-putting. The filmmakers used a system of CGI animation that involved live-capture technology in order to make the motions of the film’s characters far more life-like than a traditional cartoon. However, while the film got the characters close to human, the animators struggled to depict fully realistic emotions and facial expressions (many critiques of the film directly refer to the characters’ “dead eyes”). In the end, the film’s characters were humanlike in most aspects except for a few small but impossible to ignore flaws, causing them to fall right into the “Uncanny Valley” category (Seymour, Riemer, and Kay 2017).

Research has shown that the “Uncanny Valley” is not a uniquely human phenomenon. A group of researchers conducted a study in which they showed monkeys images of other monkeys ranging from unrealistic to realistic and examined their responses. Interestingly, the researchers found that the monkeys exhibited visual preferences that followed the “Uncanny Valley” structure. The monkeys showed the greatest affinity for the images of real monkeys and unrealistic monkeys, and they had the least positive reactions to the images that were close to realistic monkeys but slightly off. The results of this study reinforce the idea that the “Uncanny Valley” phenomenon has its roots in evolution—our distant ancestors may have relied upon it as a tool to identify abnormalities and threats in their natural environment (Steckenfinger and Ghazanfar 2009).

So what are we to do with these insights? For Mori and most other researchers who have studied the “Uncanny Valley,” the takeaway is clear: do not try to create robots that look as human as possible until we reach a point where we can do so flawlessly (Mori et al. 2012). It is much better to offer robots that are generally humanoid in shape, but remain mechanical enough that they are clearly robots. But what if we reach the point where we can make robots so humanlike that they are truly indistinguishable from humans, in essence crossing over to the other side of the “Uncanny Valley”? Should we do this? Consumers respond with an emphatic no. In one of our national surveys (sample size = 370), the vast majority of respondents told us we must always be able to distinguish robots from humans. See Table 6.1.

Table 6.1 Should robots be 100% humanlike?

| Response | % |
| --- | --- |
| If possible, robots should be made to look exactly like humans so that we cannot easily tell robots from humans | 14 |
| Even if possible, robots should never be made to look exactly like humans; we should always be able to easily tell a robot apart from a human | 75 |
| Not sure | 11 |

In follow-up interviews, consumers say they would be extremely frightened in a world where they could not distinguish robots from real people. The underlying cause of this fear seems to harken back to the issue of control: people feel that if they cannot quickly distinguish robots from humans, they have lost a great deal of control. As one respondent stated, “It would be way too scary if at some point in the future there are robots that look exactly like humans and we could not tell the difference. That’s just crazy! I would always want to know if I was talking to a person or a robot. Otherwise, like, robots could take advantage and trick us all the time.”

Humans are used to interacting with other humans, and our common human features make us comfortable with each other. However, as the “Uncanny Valley” theory tells us, robot makers should not make robots that look almost, but not quite, human, because that triggers a hardwired sense of fear. Further, our survey respondents told us they do not want robots that look exactly human, because they always want to know if they are interacting with a real human or a robot. This may change over the long term as people grow more accustomed to robots, but certainly not in the near future. As a result of all this, it seems robot makers should make social robots that have a general humanoid shape and size, but these robots should retain a distinctly mechanical or robot-like appearance and not try to completely replicate human likeness.

We now have one more important factor to consider regarding robot appearance—cuteness.

The Power of Cuteness

Robots that exist today are highly advanced machines, the culmination of generations of science and research. And the robots coming in the next decade or two will be even more advanced. Their capabilities will be nothing short of astonishing. Should robot appearance reflect their high level of capability, perhaps expressing a degree of sophistication? The answer, from consumers, is no. We want our robots to be cute.

Another commonly cited theory that is applied to robot design is the Baby Schema (kinderschema in the original German) by Konrad Lorenz (Lorenz 1971). Lorenz’s theory posits that there are certain features that make a human or animal appear cute, such as small overall size, large eyes, relatively large head, small nose, and chubby cheeks. These are characteristics associated with infants and youth. Further, cuteness elicits a positive affective response, particularly a desire to care for and bond with the cute creature. This is tied to evolution; it is an instinctive survival response for a species to care for its young. This is particularly important in species such as humans and other advanced mammals. In such species, infants are born helpless and remain so for quite some time. Hence, there must be an inherent desire among adults to bond with and care for the young.

Recent research has supported and even expanded beyond the basic propositions of Lorenz’s Baby Schema (Tarlach 2019). Research conducted over the past couple of decades, in which people’s brains were scanned while they viewed pictures of cute infants, has demonstrated the intrinsic power of cuteness. These studies have shown the response of adults to cute infants is lightning fast, and infant-related cuteness triggers intense activity in the regions of the brain associated with attention and reward processing. Recent research also suggests cuteness is not simply about creating a desire to care for youth, but also about creating a desire to interact and socialize with infants. Consider the tendency of adults to make funny faces at infants to engender a response. This is a natural desire among social animals (in this case, humans) to encourage interaction with, and socialization among, infants. Cuteness is the trigger for this. As University of Oxford neuroscientist Morten Kringelbach, a leader in cuteness research, has stated, “Like a Trojan horse, cuteness opens doors that might remain shut” (Tarlach 2019). Cuteness innately triggers in most humans (yes, studies show this occurs among both men and women) a desire for more interest, interaction, and care. And the power of cuteness vis-à-vis adult humans goes beyond human infants. It can also be applied to animals and mechanical devices (Tarlach 2019).

For robot designers, cuteness is a strategy for getting humans to feel positively about robots and establish relationships with them (Breazeal and Foerst 1999; Turkle 2011). Because many consumers are apprehensive about robots, making them look cute helps mitigate some of that concern. Clearly, there are times to dial up the cuteness (robot playmates for children; robots working at amusement parks) and times to dial down the cuteness (robots performing serious roles at a funeral; robot soldiers). The essential idea is that robots meant to interact and socialize with humans should have “cute” appearance cues: cues that suggest friendliness to foster engagement, and adorability to ultimately promote relationship building and bonding. Further, being cute also makes a robot seem less threatening and less dangerous, making humans feel more in control of it. Recall the discussion in Chapter 3: a sense of losing control to more powerful and more capable robots is an underlying theme in many people’s concerns about robots. A cute appearance appears to reduce this fear.

SoftBank’s Pepper robot is an example of a robot designed to be cute (see Figure 6.3). Pepper has large round eyes, a round head, a small nose, and an overall small stature. There is no need to worry about a robot that is this cute, is there? A robot this cute would never enslave the human race!

Figure 6.3 Pepper

Our Study—Consumer Reaction to Robot Appearances

To explore these issues further, we conducted a national study involving 310 adult Americans. In the study, we showed 40 pictures of robots¹ and captured respondents’ reactions to each robot image via the following scaled questions. A “1” meant they felt the image was completely described by the phrase on the left and a “7” meant the image was completely described by the phrase on the right. A “4” was the midpoint, and respondents were allowed to select any of the seven numbers on the scale. Respondents knew nothing else about the robots; they were given no information about their roles or their capabilities. All they had to react to was their appearance.

| Phrase on the left (1) | Scale | Phrase on the right (7) |
| --- | --- | --- |
| Looks machinelike | 1 2 3 4 5 6 7 | Looks humanlike |
| Looks formal | 1 2 3 4 5 6 7 | Looks friendly |
| Looks untrustworthy | 1 2 3 4 5 6 7 | Looks trustworthy |
| Does not look cute | 1 2 3 4 5 6 7 | Looks cute |
| Does not look eerie | 1 2 3 4 5 6 7 | Looks eerie |
| Makes me feel comfortable | 1 2 3 4 5 6 7 | Makes me feel uncomfortable |
| Is not something I want to interact with | 1 2 3 4 5 6 7 | Is something I want to interact with |
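To make the scoring concrete, the sketch below shows one way responses on these seven bipolar scales could be recorded and averaged for a single robot image. It is a minimal illustration, not the authors’ analysis code: the scale keys, the `mean_ratings` helper, and the sample responses are all hypothetical.

```python
from statistics import mean

# Hypothetical short keys for the seven bipolar scales; on each scale,
# 1 means the left-hand phrase fully applies and 7 the right-hand phrase.
SCALES = ("humanlike", "friendly", "trustworthy", "cute",
          "eerie", "uncomfortable", "want_to_interact")


def mean_ratings(responses):
    """Average each scale over all respondents for one robot image.

    `responses` is a list of dicts mapping every scale key to an
    integer rating from 1 to 7.
    """
    for response in responses:
        for scale in SCALES:
            rating = response[scale]
            if rating not in range(1, 8):
                raise ValueError(f"{scale} rating {rating!r} is not in 1-7")
    return {scale: mean(r[scale] for r in responses) for scale in SCALES}


# Made-up responses from two respondents to a single robot image.
sample = [
    {"humanlike": 3, "friendly": 6, "trustworthy": 5, "cute": 7,
     "eerie": 2, "uncomfortable": 2, "want_to_interact": 6},
    {"humanlike": 2, "friendly": 7, "trustworthy": 5, "cute": 6,
     "eerie": 1, "uncomfortable": 3, "want_to_interact": 5},
]

if __name__ == "__main__":
    for scale, value in mean_ratings(sample).items():
        print(f"{scale}: {value:.1f}")
```

Averaging per robot in this way yields one mean score per scale for each image, which is the kind of figure reported as an “average rating” on the 1–7 scale.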

We tested pictures of a wide variety of robots that are currently available for sale or in development. They ranged from purely mechanical looking (service robots with gears and wires visible, and no attempt at a humanoid shape) to generally humanoid in shape, but still clearly robotic (such as the Lynx and Buddy robots mentioned already in this book) to a highly humanlike female android (such as Sophia and Nadine, both previously discussed).

The findings of our study strongly support the power of cuteness in robot appearance. Cuteness was significantly correlated with perceived friendliness (r = 0.948, p < 0.001), trustworthiness (r = 0.882, p < 0.001), feeling comfortable with the robot (r = 0.885, p < 0.001), and a willingness to interact with it (r = 0.930, p < 0.001). What this means in nonstatistical language is that the higher the rating of cuteness that our respondents gave each robot, the more likely that robot was to be viewed as friendly and trustworthy, to make the respondent feel comfortable, and to make the respondent want to interact with it. In essence, the cuter the robot, the more positive the response from consumers and the more likely a relationship could be established. The robots that rated highest on the cute scale had several characteristics in common: overall youthful/juvenile-looking appearance, large round eyes, short stature, and friendly/endearing faces. The robot rated the cutest was Buddy from Blue Frog Robotics, which was first discussed (and image shown) in Chapter 5. The cuteness factor does indeed appear to break down a barrier and make humans more likely to want to be around robots.
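For readers who want to see what such a correlation measures, the following sketch computes a Pearson correlation coefficient between per-robot average ratings on two scales. The `pearson_r` helper and the five pairs of averages are made up for illustration; they are not the study data, and the actual analysis may have been carried out differently.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squares of deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant data")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical per-robot averages on the 1-7 scales (one entry per robot).
cute = [5.8, 4.9, 4.1, 3.0, 2.2]
want_to_interact = [5.4, 4.6, 4.2, 3.1, 2.4]

if __name__ == "__main__":
    print(f"r = {pearson_r(cute, want_to_interact):.3f}")
```

A value near +1 means that robots with higher average cuteness ratings also tended to receive higher average ratings on the second scale; a value near 0 would mean no linear relationship.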

Let us now turn to the android that we tested—the robot that looked very much like a human female in her twenties. As expected, she topped the list on humanlike (looking humanlike versus machinelike). However, she fell solidly into the middle of the pack on all other scales. This means she was far from the top robots in terms of respondents wanting to interact with her or feeling comfortable around her. If we compare this android robot to any of the humanoid (non-android) robots rated as cute, we see the clear preference for a cute robot over an android robot. On key ratings of “feeling comfortable,” not looking “eerie,” and the all-important “desire to interact with,” the cute robots significantly outperform the android robot.

Our research supports earlier insights. The robots in our set of 40 that respondents most wanted to interact with are generally humanoid, but not at all close to looking truly human. They have faces to look at, as opposed to being purely mechanical. However, none is meant to pass as human. Also, they are heavy on cute, with youthful, innocent, and submissive appearances. On the other hand, the robots that respondents told us they least wanted to interact with are heavily mechanical in appearance, lack discernible faces, and make no effort at cuteness. The android female fell exactly in the middle of the pack, number 20 out of 40 robots on the scale of “want to interact with” (average rating of 4.2 on the 7-point scale). See Table 6.2.

Table 6.2 Large range of “want to interact with,” based purely on appearance

| Robot | Average rating, “Want to interact with” (1–7 scale) |
| --- | --- |
| Buddy robot (cute, generally humanoid shape, has screen with youthful face image) | 5.4 |
| Female android (realistically resembles an attractive young adult female) | 4.2 |
| Atlas robot (mechanical, no face) | 2.4 |

What Does All This Mean for Robot Appearance?

Human-interactive robots must be made in a manner that makes humans actually want to interact with them. In sum, companies planning to utilize robots in consumer-facing roles where significant robot-human interaction will take place should consider the following regarding robot appearance:

- Make robots generally humanoid in overall shape. There should be a discernible face and eyes for people to look at when interacting. It will ease interactions.

- This might only apply partially to some robots, depending on the tasks they perform. For instance, a hotel delivery robot needs to be somewhat boxlike in shape, rather than have a torso with arms and legs, to carry food and supplies. Yet, having a screen on the top with a face on it would aid in its interactions with hotel guests, for example if a guest had a question about the food or supplies delivered.

- Do not attempt to make fully humanlike androids. Currently, technology only gets robots to look close to human, but that makes them even more eerie. Even if a truly humanlike robot can be made, consumers are nowhere near ready for that. We want to be able to tell humans and androids apart. The one exception to this rule may be sex robots.

- Aim for a degree of cuteness in the robot’s appearance. This does not mean all robots must be childish; the role the robot is performing should be considered. However, for almost all social robots, factoring in at least some visual cues of friendliness, openness, and endearment will make robots appear approachable, happy, and subservient.

Several social robots currently available adhere to the positive considerations listed, including:

- Pepper from SoftBank Robotics (image shown earlier in this chapter)

- NAO from Aldebaran Robotics, now owned by SoftBank (see image at www.softbankrobotics.com/emea/en/index)

- Elf from Sanbot Robotics (see image at http://en.sanbot.com/)

- Buddy from Blue Frog Robotics (image shown in Chapter 5)

All these robots are humanoid; however, they make no attempt to look fully human. Yet, there are enough humanoid features to make interactions more natural, such as a face and eyes to look at when talking. And, they are all clearly designed with cues to express friendliness, openness, and subservience.

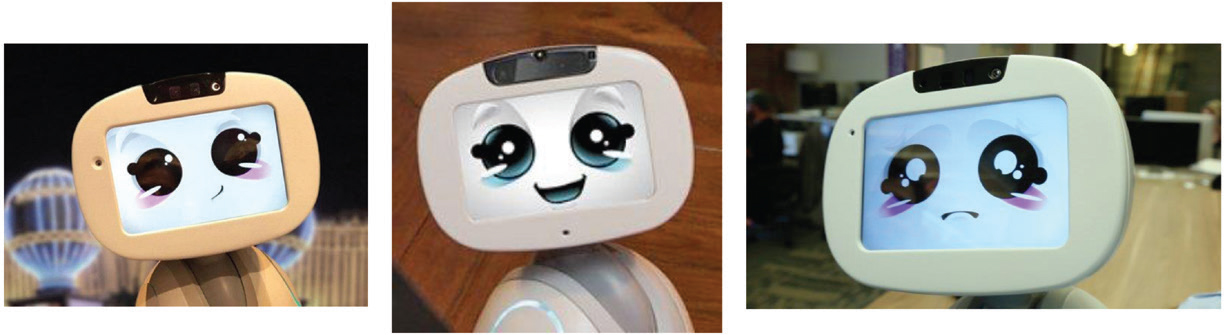

Robot Faces: Fixed versus Screens

With that said, these four robots manifest two markedly different approaches in their face displays. The NAO and Pepper robots have a fixed face. On the other hand, the Elf and Buddy robots have computer screens for a face. Either approach works; however, there are clear advantages in using a screen for a face. A real human’s face can make an endless number of facial expressions. A robot with a fixed face cannot make multiple expressions, though a robot with a screen face can. Buddy and Elf can both feature expressions that clearly show happiness, sadness, excitement, confusion, and many other emotions (see Figure 6.4).

Figure 6.4 Buddy’s emotional expressions

Implications for Consumer Behavior and Marketing Strategy

A robot’s appearance is critical to consumers’ willingness to trust it and their desire to interact with it. Hence, companies developing and utilizing robots must keep the end user in mind for all design considerations. Potential robot designs should be researched with consumers to ensure an optimal reaction when ultimately rolled out. Designing for function, of course, is critical, but consumer receptivity is equally important for robots that will interact with the public.

For now, avoid the desire to go android for most robots. Any robot that is almost, but not quite, human looking causes anxiety in humans and will not have the desired positive effect on customer interactions. Further, the public is not yet ready for robots that are indistinguishable from humans, as that represents too great a loss of control. A key exception would be robots made for sexual encounters. We encourage further development of android robots, as there will likely be a time in the more distant future when there will be greater public acceptance of highly humanlike robots. However, for the vast majority of public-facing robots for the near term (at least the next two decades), the smarter option would be humanoid robots. Our ongoing theme of a cautious, stepwise approach to the robot revolution means humanoid now and (maybe) android later.

For humanoid robots, factor in appearance cues of cuteness, which help communicate friendliness, subservience, and approachability. Certainly the cuteness quotient will vary by role, with some roles requiring greater sophistication in appearance. But no matter what the role, if the robot is customer-facing, a degree of “cute” is helpful in robot design.

When designing robots, keep in mind communications with the end user, and remember that humans are hardwired to want to communicate by looking at eyes and faces. Of course, many robots must be more mechanical in nature to fit their tasks, such as delivery bots, which must hold supplies. Yet, these types of robots should still have a “face” for consumer interactions, such as a screen with eyes. Speaking of screens, consider the benefit of a screened face for the portrayal of a greater range of emotions, which will aid in relationship building. That topic leads us to our next chapter.

¹ The authors will share the 40 robot images tested, as well as the detailed study findings. We are limited regarding the images that we can show in this book. Contact the lead author at [email protected]