Handling input is a critical aspect of any application, but it is a particular challenge on a mobile device. The first consideration is that the user is most likely not sitting in a comfortable chair sipping coffee, casually browsing the Internet. A mobile application user is most likely on the go, looking to just get a task done or find the needed information and be on their way.

The second consideration is that handling user input on a mobile device is quite different from handling input on the PC or even on the Xbox 360 for XNA Framework game development. A Windows Phone 7 mobile device may or may not have a physical keyboard, so you cannot author your application to depend on it. Even at 800 x 480 pixel screen resolution, screen real estate is still at a premium.

Mobile devices have unique hardware input capabilities for innovative user experiences, such as capacitive touch, accelerometer, and location. In this chapter, I cover handling user input in both Silverlight and XNA Windows Phone 7 applications.

Note

The gesture samples use the Silverlight for Windows Phone Toolkit, available at http://silverlight.codeplex.com. Please download the toolkit to compile the sample code.

Note

The code in this chapter is broken up into two separate solutions in the sample source code: Ch03_HandlingInput and Ch03_HandlingInput_Part2. Sample code from the location sample onward is in the Part 2 solution.

The last thing a mobile user wants to do is type on a mobile keyboard, but it is inevitable for many applications to require some form of text input. In this section I discuss keyboard capabilities and API enhancements to ease typing on the keyboard on Windows Phone 7 devices.

Windows Phone 7 devices may also have a hardware slide-out keyboard, but your application will not pass certification in App Hub if it is dependent upon the hardware keyboard. Otherwise, from a software development perspective, programming for hardware keyboard input "just works." Of the six devices available at Windows Phone 7 launch, only one had a full slide-out keyboard, and another had a vertical QWERTY keyboard. The other four devices were pure touch devices without a physical keyboard.

All Windows Phone 7 devices have a software input panel (SIP) keyboard used for entering text. Typing on the SIP keyboard built into Windows Phone 7 is a pretty comfortable means of entering text; however, it is still a small keyboard, so anything that a developer can do to ease typing can really help improve the overall user experience.

Typing text on a mobile phone should be minimized as much as possible, but if text input is required, a developer should take advantage of capabilities to make typing as simple as possible. In the next section I cover InputScope, which is a must-have feature to take advantage of when typing is required in your Windows Phone 7 applications.

When testing keyboard input in the emulator, you will be tempted to type on your PC keyboard; however, by default it does not work. You must use the mouse to click the SIP keyboard in the emulator for input.

Tip

Click the Pause/Break button on your PC keyboard to enable typing in the emulator with your PC keyboard instead of having to use the mouse to "touch" the SIP.

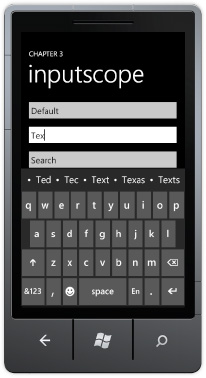

The InputScope property is available on the TextBox control, which is the primary control for text input. InputScope lets the developer customize the keyboard for the expected type of input. For example, the default behavior is that when you click into a TextBox, the SIP keyboard pops up, as shown in Figure 3-1.

The second TextBox has an InputScope of Text, which enables word selection just above the keyboard, as shown in Figure 3-2.

With just one simple attribute, text input becomes much easier for the end user. Figure 3-3 shows three additional text input options, which I explain just after the figure.

Configuring an InputScope of Search turns the Enter key into a GO key, with the idea that the user enters a search keyword and then clicks Enter to kick off a search. The Password field in the figure does not actually use an InputScope. It is a separate control named PasswordBox that automatically hides data entry as the user types. An InputScope of TelephoneNumber brings up a phone keypad. As you can see, all of these could come in handy as you develop your application UI and optimize input for the end user. Table 3-1 lists the available InputScope options and their descriptions, reprinted here for your convenience from the Windows Phone 7 documentation.

Table 3.1. Available InputScope Options

| Input Scope | Description |
|---|---|
| AddressCity | The text input pattern for a city address. |
| AddressCountryName | The text input pattern for the name of a country/region. |
| AddressCountryShortName | The text input pattern for the abbreviated name of a country/region. |
| AddressStateOrProvince | The text input pattern for a state or province. |
| AddressStreet | The text input pattern for a street address. |
| AlphanumericFullWidth | The text input pattern for alphanumeric full-width characters (East-Asian languages only). |
| AlphanumericHalfWidth | The text input pattern for alphanumeric half-width characters (East-Asian languages only). |
| Chat | The SIP layout for text messaging, which recognizes pre-defined abbreviations. Supported only in Silverlight for Windows Phone. |
| CurrencyAmount | The text input pattern for amount of currency. |
| CurrencyAmountAndSymbol | The text input pattern for amount and symbol of currency. |
| CurrencyChinese | The text input pattern for Chinese currency. |
| Date | The text input pattern for a calendar date. |
| DateDay | The text input pattern for the numeric day in a calendar date. |
| DateDayName | The text input pattern for the name of the day in a calendar date. |
| DateMonth | The text input pattern for the numeric month in a calendar date. |
| DateMonthName | The text input pattern for the name of the month in a calendar date. |
| DateYear | The text input pattern for the year in a calendar date. |
| Default | The default handling of input commands. |
| Digits | The text input pattern for digits. |
| EmailNameOrAddress | The SIP layout for an e-mail name or address. Supported only in Silverlight for Windows Phone. |
| EmailSmtpAddress | The text input pattern for a Simple Mail Transfer Protocol (SMTP) e-mail address. |
| EmailUserName | The text input pattern for an e-mail user name. |
| FileName | The text input pattern for a file name. |
| FullFilePath | The text input pattern for the full path of a file. |
| Hanja | The text input pattern for Hanja characters (Korean characters). |
| Hiragana | The text input pattern for the Hiragana writing system (a Japanese syllabary). |
| KatakanaFullWidth | The text input pattern for full-width Katakana characters (a Japanese syllabary). |
| KatakanaHalfWidth | The text input pattern for half-width Katakana characters (a Japanese syllabary). |
| LogOnName | The text input pattern for a log on name. |
| Maps | The SIP layout for entering a map location. Supported only in Silverlight for Windows Phone. |
| NameOrPhoneNumber | The SIP layout for SMS To field. Supported only in Silverlight for Windows Phone. |
| Number | The text input pattern for a number. |
| NumberFullWidth | The text input pattern for a full-width number. |
| OneChar | The text input pattern for one character. |
| Password | The text input pattern for a password. |
| PersonalFullName | The text input pattern for a person's full name. |
| PersonalGivenName | The text input pattern for a person's given name. |
| PersonalMiddleName | The text input pattern for a person's middle name. |
| PersonalNamePrefix | The text input pattern for the prefix of a person's name. |
| PersonalNameSuffix | The text input pattern for the suffix of a person's name. |
| PersonalSurname | The text input pattern for a person's surname. |
| PhraseList | The text input pattern for a phrase list. |
| PostalAddress | The text input pattern for a postal address. |
| PostalCode | The text input pattern for a postal code. |
| RegularExpression | The text input pattern for a regular expression. |
| Search | The SIP layout for a search query. Supported only in Silverlight for Windows Phone. |
| Srgs | The text input pattern for the Speech Recognition Grammar Specification (SRGS). |
| TelephoneAreaCode | The text input pattern for a telephone area code. |
| TelephoneCountryCode | The text input pattern for a telephone country/region code. |
| TelephoneLocalNumber | The text input pattern for a telephone local number. |
| TelephoneNumber | The text input pattern for a telephone number. |
| Text | The software input panel (SIP) layout for standard text input. Supported only in Silverlight for Windows Phone. |
| Time | The text input pattern for the time. |
| TimeHour | The text input pattern for the hour of the time. |
| TimeMinorSec | The text input pattern for the minutes or seconds of time. |
| Url | The text input pattern for a Uniform Resource Locator (URL). |
| Xml | The text input pattern for XML. |
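As a rough illustration of how the values in Table 3-1 are applied, the following sketch sets an InputScope from code rather than through the XAML attribute. The InputScopeHelper class and its SetInputScope method are hypothetical names introduced here and are not part of the sample projects; InputScope, InputScopeName, and InputScopeNameValue come from the System.Windows.Input namespace.

```csharp
using System.Windows.Controls;
using System.Windows.Input;

// Hypothetical helper, not part of the chapter's sample projects.
public static class InputScopeHelper
{
    // Applies a single InputScopeNameValue (for example, Search or
    // TelephoneNumber) to the given TextBox.
    public static void SetInputScope(TextBox textBox, InputScopeNameValue value)
    {
        InputScope scope = new InputScope();

        // An InputScope holds a list of names; one entry is enough here.
        scope.Names.Add(new InputScopeName { NameValue = value });

        textBox.InputScope = scope;
    }
}
```

Assuming a TextBox named SearchTextBox (a hypothetical name), calling InputScopeHelper.SetInputScope(SearchTextBox, InputScopeNameValue.Search) should have the same effect as setting the InputScope attribute to Search in XAML.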

Let's now shift gears and explore the available keyboard events.

There are two keyboard events available on the TextBox, as well as on pretty much any other object that inherits from UIElement: the KeyDown and KeyUp events. Both events pass a KeyEventArgs object to their handlers that provides access to the Key and PlatformKeyCode values native to the platform. It also provides access to the OriginalSource property, which represents the control that raised the keyboard event, as well as a Handled member to indicate that the key has been processed.
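The following is a minimal sketch of handlers for the two keyboard events, assuming they are attached to a TextBox from code. The KeyboardEventSketch class and its method names are hypothetical and do not appear in the sample projects; the KeyEventArgs members used are the ones described above.

```csharp
using System.Diagnostics;
using System.Windows.Controls;
using System.Windows.Input;

// Hypothetical helper, not part of the chapter's sample projects.
public static class KeyboardEventSketch
{
    // Subscribes to both keyboard events on the given TextBox.
    public static void Attach(TextBox textBox)
    {
        textBox.KeyDown += OnKeyDown;
        textBox.KeyUp += OnKeyUp;
    }

    private static void OnKeyDown(object sender, KeyEventArgs e)
    {
        // Key is the portable key value; PlatformKeyCode is the native code.
        Debug.WriteLine("KeyDown: " + e.Key +
            " (platform code " + e.PlatformKeyCode + ")");

        if (e.Key == Key.Enter)
        {
            // Mark the key as processed so that handlers further up
            // the visual tree are not invoked for it.
            e.Handled = true;
        }
    }

    private static void OnKeyUp(object sender, KeyEventArgs e)
    {
        // OriginalSource identifies the control that raised the event.
        Debug.WriteLine("KeyUp: " + e.Key + " from " + e.OriginalSource);
    }
}
```

Attaching the handlers in code keeps the sketch self-contained; in a page, the same handlers could instead be assigned through KeyDown and KeyUp attributes in XAML.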

This completes our discussion of keyboard events. In general, typing should be minimized in a mobile application for the reasons listed previously, i.e., small screen, small keyboard, and so on. Mobile devices are optimized for touch input, especially modern devices with highly responsive capacitive touch screens that do not require a stylus. Let's now focus on touch input.

Most modern mobile devices that have touch screens do not require a stylus, which was necessary for resistive touch-based screens. Modern mobile devices are capacitive touch and respond very well to touch with a finger.

Windows Phone 7 supports up to four multi-touch contact points for XNA Framework development. Silverlight for Windows Phone supports two multi-touch contact points. As part of the platform, there is a touch driver and gesture engine under the covers that provides a consistent detection capability across hardware device OEMs and across applications.

As mentioned previously, Silverlight for Windows Phone 7 is based on Silverlight 3. The Windows Phone product team took the Silverlight 3 controls and APIs and optimized the controls for performance, for look and feel via control templates and styles and for input. The next section covers single-point touch as it relates to the controls optimized for Windows Phone 7.

When a user clicks a Button control, TextBox control, ListBox control, and the like on Windows Phone 7, it is single-point touch. For consistency, single-point touch events are translated to the Mouse events that you are familiar with when programming desktop Silverlight, Windows Forms, or other application frameworks. For example, touching a button appears as a Click event. Tapping to type text in a TextBox or touching a TextBlock control fires a MouseEnter, a MouseLeftButtonDown, a MouseLeftButtonUp, and a MouseLeave event.

The Chapter 3 SinglePointTouch project TextControlsMouseEventsPage.xaml page shows these events firing when you interact with the TextBox and TextBlock controls. You will notice when testing on a device that sometimes multiple MouseEnter/MouseLeave pairs can fire. You can also see multiple MouseMove events fire as a result of small movements of your finger when interacting with the controls. It's something to consider when using these events with touch, as opposed to mouse clicks on the desktop, and it is why listening for higher-level events such as Click or gestures is recommended, except when individual touch points are required. Figure 3-4 shows the UI with the mouse events trace.

Listing 3-1 shows the TextControlsMouseEventsPage.xaml code file with the XAML markup.

Example 3.1. The TextControlsMouseEventsPage.xaml Code File

<phone:PhoneApplicationPage

x:Class="SinglePointTouch.Pages.TextBoxMouseEventPage"

xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"

xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"

xmlns:phone="clr-namespace:Microsoft.Phone.Controls;assembly=Microsoft.Phone"

xmlns:shell="clr-namespace:Microsoft.Phone.Shell;assembly=Microsoft.Phone"

xmlns:d="http://schemas.microsoft.com/expression/blend/2008"

xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"

FontFamily="{StaticResource PhoneFontFamilyNormal}"

FontSize="{StaticResource PhoneFontSizeNormal}"

Foreground="{StaticResource PhoneForegroundBrush}"

SupportedOrientations="Portrait" Orientation="Portrait"

mc:Ignorable="d" d:DesignHeight="768" d:DesignWidth="480"

shell:SystemTray.IsVisible="True">

<!--LayoutRoot is the root grid where all page content is placed-->

<Grid x:Name="LayoutRoot" Background="Transparent">

<Grid.RowDefinitions>

<RowDefinition Height="Auto"/>

<RowDefinition Height="*"/>

</Grid.RowDefinitions>

<!--TitlePanel contains the name of the application and page title-->

<StackPanel x:Name="TitlePanel" Grid.Row="0" Margin="12,17,0,28">

<TextBlock x:Name="ApplicationTitle" Text="Chapter 3 - SINGLE POINT TOUCH"

Style="{StaticResource PhoneTextNormalStyle}"/>

<TextBlock x:Name="PageTitle" Text="textbox mouse events" Margin="9,-7,0,0"

Style="{StaticResource PhoneTextTitle1Style}"/>

</StackPanel>

<!--ContentPanel - place additional content here-->

<Grid x:Name="ContentPanel" Grid.Row="1" Margin="12,0,12,0">

<StackPanel Orientation="Vertical">

<TextBox HorizontalAlignment="Left" x:Name="MouseEventsTextBox"

Text="TextBox Mouse Events Demo" Width="460" Height="72"

MouseEnter="MouseEventsTextBox_MouseEnter"

MouseLeave="MouseEventsTextBox_MouseLeave"

MouseLeftButtonDown="MouseEventsTextBox_MouseLeftButtonDown"

MouseLeftButtonUp="MouseEventsTextBox_MouseLeftButtonUp"

MouseMove="MouseEventsTextBox_MouseMove"

MouseWheel="MouseEventsTextBox_MouseWheel" />

<TextBlock Height="30" HorizontalAlignment="Left" Margin="12,0,0,0"

x:Name="MouseEventStatusText" Text="Mouse Events Log"

Width="438" />

<ListBox Height="217" x:Name="MouseEventLogListBox" />

<Rectangle Fill="#FFF4F4F5" Height="10" Stroke="Black" Margin="0,0,6,0"/>

<TextBlock TextWrapping="Wrap" Text="TextBlock Mouse Events Demo"

Margin="0" Name="TextBlockMouseEventsDemo"

MouseEnter="TextBlockMouseEventsDemo_MouseEnter"

MouseLeave="TextBlockMouseEventsDemo_MouseLeave"

MouseLeftButtonDown="TextBlockMouseEventsDemo_MouseLeftButtonDown"

MouseLeftButtonUp="TextBlockMouseEventsDemo_MouseLeftButtonUp"

MouseMove="TextBlockMouseEventsDemo_MouseMove"

MouseWheel="TextBlockMouseEventsDemo_MouseWheel" />

<TextBlock Height="30" HorizontalAlignment="Left" Margin="12,0,0,0"

x:Name="MouseEventStatusTextBlock" Text="Mouse Events Log"

Width="438" />

<ListBox Height="220" x:Name="MouseEventLogListBox2" />

</StackPanel>

</Grid>

</Grid>

</phone:PhoneApplicationPage>

In Listing 3-1, you can see the event handler assignments, like this one assigning an event handler to the MouseEnter event for the MouseEventsTextBox object:

MouseEnter="MouseEventsTextBox_MouseEnter"

The code-behind file has the related event handlers that simply write a text message to the MouseEventLogListBox like this one

private void MouseEventsTextBox_MouseEnter(object sender, MouseEventArgs e)

{

MouseEventLogListBox.Items.Add("MouseEnter event fired.");

}

Now that we have covered the mouse events, we will next look at how to use the mouse events for raw touch.

In addition to indicating a "click" or touch event, mouse events can be used for raw touch. An example of raw touch is drawing with your finger, where you need individual touch locations. What enables raw touch with mouse events is the MouseEventArgs class passed into the mouse events. The following are the key members of the MouseEventArgs class:

GetPosition(UIElement relativeTo): Gets the position of the mouse event in relation to the passed-in object. Returns a Point object.

OriginalSource: Provides a reference to the object that raised the event.

StylusDevice: Returns a StylusDevice object that includes the set of stylus points associated with the input.

The StylusDevice object contains a GetStylusPoints method that returns a StylusPointCollection. For each StylusPoint in the collection, we can draw an object onscreen to represent the user's touch. The StylusPoint class is enhanced over the Point class with the StylusPoint.PressureFactor property. Because PressureFactor is a float, we can assign it to the Opacity property of the object we draw onscreen to represent touches, such that the Opacity indicates whether it is a light or heavy press on the screen. So a light pressure press will have a lower opacity when drawn onscreen.

In the next couple of sections we will build a mini finger drawing application that includes multi-color selection, ListBox customizations, animations, the application bar, and basic drawing functionality.

Add a Windows Phone Portrait Page new item to the SinglePointTouch project. Uncomment the sample ApplicationBar code at the bottom of the page. We will use the ApplicationBar to implement commands to clear the drawing canvas, set the touch object size, and so on.

At the top we set the title and subtitle for the page. In the default ContentPanel Grid object, we add a Canvas object. On top of the Canvas object is a Rectangle that receives the mouse events. We take advantage of absolute positioning in the Canvas object to place the objects that represent user touches using X and Y coordinates provided by StylusPoint objects. The following is a XAML snippet of the TitlePanel and ContentPanel:

<!--TitlePanel contains the name of the application and page title-->

<StackPanel x:Name="TitlePanel" Grid.Row="0" Margin="12,17,0,28">

<TextBlock x:Name="ApplicationTitle" Text="Chapter 3 - SinglePointTouch"

Style="{StaticResource PhoneTextNormalStyle}"/>

<TextBlock x:Name="PageTitle" Text="finger painting" Margin="9,-7,0,0"

Style="{StaticResource PhoneTextTitle1Style}"/>

</StackPanel>

<!--ContentPanel - place additional content here-->

<Grid x:Name="ContentPanel" Grid.Row="1" Margin="24,0,0,0">

<Canvas x:Name="DrawCanvas" >

<Rectangle Fill="White" Stroke="Black"

MouseMove="Rectangle_MouseMove" Width="456" Height="535" />

</Canvas>

</Grid>

The following is the Rectangle_MouseMove event handler on the Rectangle object:

private void Rectangle_MouseMove(object sender, MouseEventArgs e)

{

foreach (StylusPoint p in e.StylusDevice.GetStylusPoints(DrawCanvas))

{

Ellipse ellipse = new Ellipse();

ellipse.SetValue(Canvas.LeftProperty, p.X);

ellipse.SetValue(Canvas.TopProperty, p.Y);

ellipse.Opacity = p.PressureFactor;

ellipse.Width = 20d;

ellipse.Height = 20d;

ellipse.IsHitTestVisible = false;

ellipse.Stroke = new SolidColorBrush(Colors.Black);

ellipse.Fill = new SolidColorBrush(Colors.Black);

DrawCanvas.Children.Add(ellipse);

}

}

The application uses the MouseMove event and the StylusPointCollection to draw small Ellipse objects to the screen as you drag the mouse on the emulator or a finger on a device across the screen. Figure 3-5 shows the UI in action.

Finger painting without multiple colors is boring. Let's add a ListBox and populate it with the built-in System.Windows.Media.Colors collection so that the user can select an item and change the "finger paint" color. We first create a couple of classes to encapsulate the System.Windows.Media.Colors collection since we cannot data bind directly to it. See Listing 3-2.

Example 3.2. The ColorClass Code File

public class ColorClass

{

public Brush ColorBrush { get; set; }

public String ColorName { get; set; }

}

The class contains a Brush to represent the RGB values for the color and a text name for the color. We need a collection of ColorClass objects to bind to. Listing 3-3 has the simple class that generates a collection of ColorClass objects.

Example 3.3. The ColorsClass Code File

public class ColorsClass

{

List<ColorClass> _colors;

public ColorsClass()

{

_colors = new List<ColorClass>();

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Blue), ColorName = "Blue" });

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Brown), ColorName = "Brown"});

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Cyan), ColorName = "Cyan"});

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.DarkGray),

ColorName = "DarkGray"});

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Gray), ColorName = "Gray"});

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Green), ColorName = "Green"});

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.LightGray),

ColorName = "LightGray" });

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Magenta),

ColorName = "Magenta" });

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Orange), ColorName="Orange"});

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Purple), ColorName="Purple"});

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Red), ColorName = "Red"});

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.White), ColorName = "White"});

_colors.Add(new ColorClass() {

ColorBrush = new SolidColorBrush(Colors.Yellow), ColorName = "Yellow"});

_colors.Add(new ColorClass() {ColorBrush = new SolidColorBrush(Colors.Black), ColorName = "Black"});

}

public List<ColorClass> ColorsCollection

{

get { return _colors; }

}

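}

All of the work is done in the constructor, using object initializer syntax to create the collection. Data bind the ColorListBox.ItemsSource to the ColorsClass.ColorsCollection either manually in Visual Studio or with Expression Blend.

By default the ColorListBox scrolls vertically. To have the ColorListBox scroll horizontally, right-click on the ColorListBox in Expression Blend and select Edit Additional Templates to edit the items panel so that it uses a StackPanel with its Orientation set to Horizontal, as in the FingerPaintingColorsListBoxItemsPanel resource in Listing 3-4. The following is the DataTemplate for the ColorListBox items: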

<DataTemplate x:Key="FingerPaintingColorTemplate">

<StackPanel Orientation="Vertical">

<Rectangle Fill="{Binding ColorBrush}" HorizontalAlignment="Left"

Height="95" Stroke="Black" VerticalAlignment="Top" Width="95" Margin="4,4,4,0"/>

<TextBlock HorizontalAlignment="Center" TextWrapping="Wrap"

Text="{Binding ColorName}" VerticalAlignment="Center" Margin="0"/>

</StackPanel>

</DataTemplate>

The ColorListBox DataTemplate consists of a Rectangle that displays the color based on the ColorClass.ColorBrush property and a TextBlock that displays the name of the color based on the ColorClass.ColorName property. Figure 3-6 shows the resulting work.

In PhoneApplicationPage_Loaded, set the SelectedIndex on ColorListBox so that a color is always selected. The drawing code is updated to obtain the ColorListBox.SelectedItem object in order to set the brush color for the Ellipse.

private void Rectangle_MouseMove(object sender, MouseEventArgs e)

{

foreach (StylusPoint p in e.StylusDevice.GetStylusPoints(DrawCanvas))

{

Ellipse ellipse = new Ellipse();

ellipse.SetValue(Canvas.LeftProperty, p.X);

ellipse.SetValue(Canvas.TopProperty, p.Y);

ellipse.Opacity = p.PressureFactor;

ellipse.Width = 20d;

ellipse.Height = 20d;

ellipse.IsHitTestVisible = false;

ellipse.Stroke = ((ColorClass)ColorListBox.SelectedItem).ColorBrush;

ellipse.Fill = ((ColorClass)ColorListBox.SelectedItem).ColorBrush;

DrawCanvas.Children.Add(ellipse);

}

}

The application now allows finger painting using the selected color in the ColorListBox. In the next section we will expand the painting functionality in the application.

Let's now add additional painting functionality to make the application more usable: clearing the drawing surface, increasing the touch pencil size, decreasing the touch pencil size, showing or hiding the color palette to change the drawing color, and setting the background color for the image. Here is how the UI is set up:

Clear: Erases the drawing surface (trashcan icon).

Touch color: Shows the color palette to set the drawing color (edit pencil icon).

Pencil size: Increases pencil size (plus sign icon).

Pencil size: Decreases pencil size (minus sign icon).

Set background color menu item: Shows the color palette to set the background color.

In Expression Blend, edit the Application Bar to provide four application bar icons and one menu item. Expression Blend provides access to the built-in icons, as shown in Figure 3-7.

Once the application bar icons and menu item are configured visually in Blend, set the ColorListBox control's Visibility to Visibility.Collapsed so that it is only visible when needed. We switch over to Visual Studio to add the event handlers in XAML for the Application Bar button icons and menu item. Listings 3-4 and 3-5 have the full source code of the mini-application.

Example 3.4. The FingerPaintingPageMouseEvents.xaml Code File

<phone:PhoneApplicationPage

xmlns="http://schemas.microsoft.com/winfx/2006/xaml/presentation"

xmlns:x="http://schemas.microsoft.com/winfx/2006/xaml"

xmlns:phone="clr-namespace:Microsoft.Phone.Controls;assembly=Microsoft.Phone"

xmlns:shell="clr-namespace:Microsoft.Phone.Shell;assembly=Microsoft.Phone"

xmlns:d="http://schemas.microsoft.com/expression/blend/2008"

xmlns:mc="http://schemas.openxmlformats.org/markup-compatibility/2006"

xmlns:SinglePointTouch="clr-namespace:SinglePointTouch"

x:Class="SinglePointTouch.Pages.FingerPaintingPageMouseEvents"

SupportedOrientations="Portrait" Orientation="Portrait"

mc:Ignorable="d" d:DesignHeight="696" d:DesignWidth="480"

shell:SystemTray.IsVisible="True" Loaded="PhoneApplicationPage_Loaded">

<phone:PhoneApplicationPage.Resources>

<SinglePointTouch:ColorsClass x:Key="ColorsClassDataSource"

d:IsDataSource="True"/>

<DataTemplate x:Key="FingerPaintingColorTemplate">

<StackPanel Orientation="Vertical">

<Rectangle Fill="{Binding ColorBrush}" HorizontalAlignment="Left"

Height="95" Stroke="Black" VerticalAlignment="Top" Width="95"

Margin="4,4,4,0"/>

<TextBlock HorizontalAlignment="Center" TextWrapping="Wrap"

Text="{Binding ColorName}" VerticalAlignment="Center" Margin="0"/>

</StackPanel>

</DataTemplate>

<ItemsPanelTemplate x:Key="FingerPaintingColorsListBoxItemsPanel">

<StackPanel Orientation="Horizontal"/>

</ItemsPanelTemplate>

</phone:PhoneApplicationPage.Resources>

<phone:PhoneApplicationPage.ApplicationBar>

<shell:ApplicationBar IsVisible="True" IsMenuEnabled="True">

<shell:ApplicationBarIconButton x:Name="AppBarClearButton"

IconUri="/icons/appbar.delete.rest.jpg" Text="clear"

Click="AppBarClearButton_Click" />

<shell:ApplicationBarIconButton x:Name="AppBarChangeTouchColorButton"

IconUri="/icons/appbar.edit.rest.jpg" Text="touch color"

Click="AppBarChangeTouchColor_Click"/>

<shell:ApplicationBarIconButton x:Name="AppBarIncreaseButton"

IconUri="/icons/appbar.add.rest.jpg" Text="pencil size"

Click="AppBarIncreaseButton_Click"/>

<shell:ApplicationBarIconButton x:Name="AppBarDecreaseButton"

IconUri="/icons/appbar.minus.rest.jpg" Text="pencil size"

Click="AppBarDecreaseButton_Click"/>

<shell:ApplicationBar.MenuItems>

<shell:ApplicationBarMenuItem Text="Set Background Color"

x:Name="SetBackgroundColorMenuItem"

Click="SetBackgroundColorMenuItem_Click" />

</shell:ApplicationBar.MenuItems>

</shell:ApplicationBar>

</phone:PhoneApplicationPage.ApplicationBar>

<phone:PhoneApplicationPage.FontFamily>

<StaticResource ResourceKey="PhoneFontFamilyNormal"/>

</phone:PhoneApplicationPage.FontFamily>

<phone:PhoneApplicationPage.FontSize>

<StaticResource ResourceKey="PhoneFontSizeNormal"/>

</phone:PhoneApplicationPage.FontSize>

<phone:PhoneApplicationPage.Foreground>

<StaticResource ResourceKey="PhoneForegroundBrush"/>

</phone:PhoneApplicationPage.Foreground>

<Grid x:Name="LayoutRoot" Background="Transparent" DataContext=

"{Binding Source={StaticResource ColorsClassDataSource}}" >

<Grid.RowDefinitions>

<RowDefinition Height="Auto"/>

<RowDefinition Height="*"/>

</Grid.RowDefinitions>

<!--TitlePanel contains the name of the application and page title-->

<StackPanel x:Name="TitlePanel" Grid.Row="0" Margin="12,17,0,28">

<TextBlock x:Name="ApplicationTitle" Text="Chapter 3 - SinglePointTouch"

Style="{StaticResource PhoneTextNormalStyle}"/>

<TextBlock x:Name="PageTitle" Text="finger painting" Margin="9,-7,0,0"

Style="{StaticResource PhoneTextTitle1Style}"/>

</StackPanel>

<!--ContentPanel - place additional content here-->

<Grid x:Name="ContentPanel" Grid.Row="1" Margin="24,0,0,0">

<Canvas x:Name="DrawCanvas" >

<Rectangle Fill="White" Stroke="Black" Name="BlankRectangle"

MouseMove="Rectangle_MouseMove" Width="456" Height="535" />

</Canvas>

<ListBox x:Name="ColorListBox" Margin="0"

ScrollViewer.HorizontalScrollBarVisibility="Auto"

ScrollViewer.VerticalScrollBarVisibility="Disabled"

ItemsPanel="{StaticResource FingerPaintingColorsListBoxItemsPanel}"

VerticalAlignment="Top" ItemsSource="{Binding ColorsCollection}"

ItemTemplate="{StaticResource FingerPaintingColorTemplate}"

Background="Black" SelectedIndex="-1" HorizontalAlignment="Right"

Width="456" RenderTransformOrigin="0.5,0.5"

SelectionChanged="ColorListBox_SelectionChanged" Visibility="Collapsed">

</ListBox>

</Grid>

</Grid>

</phone:PhoneApplicationPage>

Example 3.5. The FingerPaintingPageMouseEvents.xaml.cs Code File

using System;

using System.Windows;

using System.Windows.Controls;

using System.Windows.Input;

using System.Windows.Media;

using System.Windows.Shapes;

using System.Windows.Threading;

using Microsoft.Phone.Controls;

namespace SinglePointTouch.Pages

{

public partial class FingerPaintingPageMouseEvents : PhoneApplicationPage

{

private Rectangle _backgroundRectangle;

private double _touchRadius = 20d;

private bool ColorBackgroundMode = false;

private int TouchPaintingSelectedColorIndex;

public FingerPaintingPageMouseEvents()

{

InitializeComponent();

_backgroundRectangle = BlankRectangle;

}

private void Rectangle_MouseMove(object sender, MouseEventArgs e)

{

foreach (StylusPoint p in e.StylusDevice.GetStylusPoints(DrawCanvas))

{

Ellipse ellipse = new Ellipse();

ellipse.SetValue(Canvas.LeftProperty, p.X);

ellipse.SetValue(Canvas.TopProperty, p.Y);

ellipse.Opacity = p.PressureFactor;

ellipse.Width = _touchRadius;

ellipse.Height = _touchRadius;

ellipse.IsHitTestVisible = false;

ellipse.Stroke = ((ColorClass)ColorListBox.SelectedItem).ColorBrush;

ellipse.Fill = ((ColorClass)ColorListBox.SelectedItem).ColorBrush;

DrawCanvas.Children.Add(ellipse);

}

}

private void PhoneApplicationPage_Loaded(object sender, RoutedEventArgs e)

{

ColorListBox.SelectedIndex = 0;

//Setup memory tracking timer

DispatcherTimer DebugMemoryTimer = new DispatcherTimer();

DebugMemoryTimer.Interval = new TimeSpan(0, 0, 0, 0, 5000);

DebugMemoryTimer.Tick += DebugMemoryInfo_Tick;

DebugMemoryTimer.Start();

}

// Track memory Info

void DebugMemoryInfo_Tick(object sender, EventArgs e)

{

//GC.GetTotalMemory(true);

long deviceTotalMemory =

(long)Microsoft.Phone.Info.DeviceExtendedProperties.GetValue(

"DeviceTotalMemory");

long applicationCurrentMemoryUsage =

(long)Microsoft.Phone.Info.DeviceExtendedProperties.GetValue(

"ApplicationCurrentMemoryUsage");

long applicationPeakMemoryUsage =

(long)Microsoft.Phone.Info.DeviceExtendedProperties.GetValue(

"ApplicationPeakMemoryUsage");

System.Diagnostics.Debug.WriteLine("--> " +

DateTime.Now.ToLongTimeString());

System.Diagnostics.Debug.WriteLine("--> Device Total : " +

deviceTotalMemory.ToString());

System.Diagnostics.Debug.WriteLine("--> App Current : " +

applicationCurrentMemoryUsage.ToString());

System.Diagnostics.Debug.WriteLine("--> App Peak : " +

applicationPeakMemoryUsage.ToString());

}

private void AppBarClearButton_Click(object sender, EventArgs e)

{

DrawCanvas.Children.Clear();

DrawCanvas.Children.Add(BlankRectangle);

BlankRectangle.Fill = new SolidColorBrush(Colors.White);

}

private void AppBarIncreaseButton_Click(object sender, EventArgs e)

{

if (_touchRadius <= 30d)

{

_touchRadius += 5;

}

}

private void AppBarDecreaseButton_Click(object sender, EventArgs e)

{

if (_touchRadius > 20d)

{

_touchRadius -= 5;

}

}

private void SetBackgroundColorMenuItem_Click(object sender, EventArgs e)

{

ColorListBox.Visibility = Visibility.Visible;

ColorBackgroundMode = true;

TouchPaintingSelectedColorIndex = ColorListBox.SelectedIndex;

}

private void ColorListBox_SelectionChanged(object sender,

SelectionChangedEventArgs e)

{

ColorListBox.Visibility = Visibility.Collapsed;

if (ColorBackgroundMode == true)

{

_backgroundRectangle.Fill =

((ColorClass)ColorListBox.SelectedItem).ColorBrush;

ColorBackgroundMode = false;

ColorListBox.SelectedIndex = TouchPaintingSelectedColorIndex;

}

}

private void AppBarChangeTouchColor_Click(object sender, EventArgs e)

{

ColorListBox.Visibility = Visibility.Visible;

}

}

}

Listing 3-5 includes memory-tracking code to help analyze memory consumption, which I cover in the next section.

The listing contains an event handler named DebugMemoryInfo_Tick, as well as code in the PhoneApplicationPage_Loaded method that starts a DispatcherTimer object named DebugMemoryTimer, which fires the Tick event every five seconds. The DebugMemoryInfo_Tick event handler writes the following text to the Output window in Visual Studio when the finger painting page is launched in the SinglePointTouch project:

--> 7:14:50 PM

--> Device Total : 497618944

--> App Current : 11014144

--> App Peak : 12492800

Next, draw a sample image, such as that shown in Figure 3-8.

What follows is the resulting memory consumption:

--> 7:14:36 AM

--> Device Total : 390012928

--> App Current : 24748032

--> App Peak : 24748032

The emulator has essentially unlimited memory, so consuming almost 25MB of RAM runs fine. You could finger paint a similar image on a physical device with 512MB, and it would be fine as well. However, for certification in App Hub, applications need to stay under 90MB to pass. This is because on a device with 256MB of RAM, consuming more than that could impact performance.

Tracking memory using this code or something similar is a very important aspect of performance tuning WP7 applications, especially when testing on the emulator, which essentially has unlimited resources.

Tip

Applications can momentarily go over 90MB and not crash, so don't panic if your application peaks over 90MB, but settles in below 90MB.
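The raw byte counts written to the Output window are easier to compare against the certification limit once they are converted to megabytes. The following is a minimal sketch of such a conversion. The MemoryBudget class and its members are hypothetical names that are not part of the sample code, and the sketch assumes the 90MB limit is counted in units of 1,024 x 1,024 bytes.

```csharp
using System;

// Hypothetical helper; not part of the chapter's sample code.
public static class MemoryBudget
{
    // Assumption: the 90MB limit is measured in units of 1024 * 1024 bytes.
    public const long LimitBytes = 90L * 1024L * 1024L;

    // Converts a raw byte count, such as ApplicationCurrentMemoryUsage, to megabytes.
    public static double ToMegabytes(long bytes)
    {
        return bytes / (1024.0 * 1024.0);
    }

    // Returns true when the reported usage exceeds the limit.
    public static bool IsOverLimit(long bytes)
    {
        return bytes > LimitBytes;
    }
}
```

For example, passing the App Current value of 24748032 from the output above to MemoryBudget.ToMegabytes yields roughly 23.6, and MemoryBudget.IsOverLimit returns false for the same value.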

The reason the finger painting application consumes so much memory is that it is a purely vector-based drawing consisting of Ellipse objects. The Ellipse objects can yield an impressionistic effect with careful drawing, but they result in high memory consumption. As the user moves a finger, new Ellipse objects are drawn to the screen. When drawing over an area that is already colored, the old color is still present underneath in Ellipse objects. One option to investigate is to use Silverlight geometry primitives instead of Ellipse objects. Another option to reduce memory consumption is to use the WriteableBitmap class to "burn" the objects into the background as a way to collapse the vector objects into simple raster bitmaps.

The Mouse and Touch events are familiar to developers and easy to work with; however, they should only be used when absolutely necessary, such as when you need individual touch points. The MSDN documentation has a section titled "Performance Considerations in Applications for Windows Phone" available here

http://msdn.microsoft.com/en-us/library/ff967560(v=VS.92).aspx

This white paper has a section titled "User Input" that recommends using manipulation events instead of mouse and touch events, for performance and compatibility reasons, in all scenarios other than when you need individual touch points. This chapter covers gestures and manipulation events next as part of multi-point touch.

As mentioned previously, Silverlight applications are generally based on the control framework and single touch when interacting with controls. There are parts of applications that may require multi-touch. Windows Phone 7 supports up to four touch points, which are available to both Silverlight- and XNA Framework-based applications. Examples of multi-touch in Silverlight would be image manipulation, zooming in or out on a news article to adjust the font, and so on.

In the XNA Framework, multi-touch is essential, since game-based user experiences are generally highly customized. One example of multi-touch in the XNA Framework is having one thumb manipulating a virtual accelerator and the other thumb manipulating a virtual brake in a driving game. Another example is one thumb manipulating a virtual joystick and the other thumb touching buttons to jump or shoot.

A couple of controls that are part of the Windows Phone 7 development platform include support for multi-touch. The WebBrowser control supports pinch/zoom and pan gestures. Another control that has built-in support for multi-touch is the Bing Maps control, which also supports pinch/zoom and pan gestures.

The other control that is more generic than the WebBrowser and Bing Maps controls is the ScrollViewer panel control, which supports flick and pan gestures for contained content. The ScrollViewer project in the Chapter 3 solution demonstrates the ScrollViewer Control. Once the solution is created, drag a ScrollViewer control onto the ContentPanel Grid control in Expression Blend. Reset the Height and Width on the Image control to Auto. Also reset layout on the ScrollViewer so that it fills the ContentPanel.

Drag an Image control onto the ScrollViewer control. Set the Source property of the Image control to point to the France.jpg image in the images folder of the ScrollViewer solution. Set the Stretch property on the Image control to None so that it expands beyond the screen bounds to full size. On the containing ScrollViewer control, change the HorizontalScrollBarVisibility property from Disabled to Auto. We want to be able to pan and flick the image in all directions.

Once layout is configured properly for the controls as detailed in the previous paragraphs, we are ready to test. When you run the application, you can see that you get pan and flick gestures "for free," provided by the ScrollViewer control. In the next couple of sections I cover multi-touch programming, gestures, and manipulation events.

The mouse events covered in the previous section may work fine for many cases, but may feel a bit clunky. In this section we will implement the finger-painting application using Touch.FrameReported for more fine-grained raw touch development.

We start with a copy of the previous finger painting application but change the Page class from FingerPaintingPageMouseEvents to FingerPaintingPageTouchEvents to prevent compilation errors with duplicate names. We keep both pages in the SinglePointTouch project, though System.Windows.Input.Touch supports multi-touch, which is an advantage over the mouse events. The next step is to remove the MouseMove event handler from the Rectangle and comment out the Rectangle_MouseMove event handler in the code behind.

In the PhoneApplicationPage_Loaded event, wire-up the FrameReported event like this

System.Windows.Input.Touch.FrameReported += new TouchFrameEventHandler(Touch_FrameReported);

To prevent exceptions when navigating back and forth to the page, the event is disconnected in the unload event here

private void PhoneApplicationPage_Unloaded(object sender, RoutedEventArgs e)

{

System.Windows.Input.Touch.FrameReported -= Touch_FrameReported;

}

The Touch_FrameReported event handler is where the touch action happens; it directly replaces the Rectangle_MouseMove event handler from the previous example. The TouchFrameEventArgs class passed to the FrameReported event provides a rich set of members for fine-grained control over touch development. Table 3-2 provides a summary of its properties and events.

Table 3.2. Available InputScope Options

Unlike with the mouse events StylusPoint class, the TouchPoint class does not support PressureFactor values, so Opacity is not varied by pressure. The TouchPoint class does support a Size value for the touch action but the size resolves to a very small value regardless of whether drawing with a small finger or larger finger, making the Size value less useful. The following is the final Touch_FrameReported event handler:

void Touch_FrameReported(object sender, TouchFrameEventArgs e)

{

foreach (TouchPoint p in e.GetTouchPoints(DrawCanvas))

{

if ((InDrawingMode) && (p.Action == TouchAction.Move))

{

Ellipse ellipse = new Ellipse();

ellipse.SetValue(Canvas.LeftProperty, p.Position.X);

ellipse.SetValue(Canvas.TopProperty, p.Position.Y);

ellipse.Width = _touchRadius;

ellipse.Height = _touchRadius;

ellipse.IsHitTestVisible = false;

ellipse.Stroke = ((ColorClass)ColorListBox.SelectedItem).ColorBrush;

ellipse.Fill = ((ColorClass)ColorListBox.SelectedItem).ColorBrush;

DrawCanvas.Children.Add(ellipse);

}

}

}

Notice that this code has an additional check on the Boolean variable InDrawingMode. The value of InDrawingMode is set to false when showing the color selector ColorListBox. This is because the Touch.FrameReported event fires no matter what control has focus, so without the additional check, selecting or scrolling colors would generate additional touch events on the DrawCanvas Canvas object. Raw touch with Touch.FrameReported is truly raw touch processing.

The mouse events have one nice benefit over Touch.FrameReported. The mouse events generate StylusPoint objects, which include a PressureFactor value, whereas Touch.FrameReported generates TouchPoint objects, which do not. This allows varying the Opacity for a better drawing experience. However, for other touch-related programming where gestures or manipulations cannot provide the needed functionality, raw touch with Touch.FrameReported is recommended over mouse events.

One capability that Touch.FrameReported provides over mouse events is multi-touch capabilities via the TouchPoint class. The TouchPoint class has the following two members that allow tracking of state and history:

With these two properties it is possible to track the state of the touch as well as associated history as the user moves their finger around the screen. The MultiTouchwithRawTouch project is a simple program that tracks up to four touch actions by a user. Essentially you can place four fingers on the screen and watch the Rectangle objects follow your fingers on the screen. The XAML for the project is a generic page that has Rectangle objects dynamically added to a Canvas panel added to the default ContentPanel Grid. Listing 3-6 contains the source code for the code-behind file.

Example 3.6. MultiTouchwithRawTouch MainPage.xaml.cs Code File

using System.Collections.Generic;

using System.Linq;

using System.Windows;

using System.Windows.Controls;

using System.Windows.Input;

using System.Windows.Media;

using System.Windows.Shapes;

using Microsoft.Phone.Controls;

namespace MultiTouchwithRawTouch

{

public partial class MainPage : PhoneApplicationPage

{

List<TrackedTouchPoint> trackedTouchPoints = new List<TrackedTouchPoint>();

// Constructor

public MainPage()

{

InitializeComponent();

Touch.FrameReported += new TouchFrameEventHandler(Touch_FrameReported);

}

void Touch_FrameReported(object sender, TouchFrameEventArgs e)

{

foreach (TouchPoint tp in e.GetTouchPoints(DrawCanvas))

{

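TrackedTouchPoint ttp = null;

var query = from point in trackedTouchPoints

where point.ID == tp.TouchDevice.Id

select point;

if (query.Count() != 0)

ttp = query.First();

// Skip Move and Up actions for touch points that were never tracked

if (ttp == null && tp.Action != TouchAction.Down)

continue;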

switch (tp.Action)

{

case TouchAction.Down:

ttp = new TrackedTouchPoint();

ttp.ID = tp.TouchDevice.Id;

if (trackedTouchPoints.Count == 0)

{

ttp.IsPrimary = true;

DrawCanvas.Children.Clear();

}

trackedTouchPoints.Add(ttp);

ttp.Position = tp.Position;

ttp.Draw(DrawCanvas);

break;

case TouchAction.Up:

ttp.UnDraw(DrawCanvas);

trackedTouchPoints.Remove(ttp);

break;

default:

ttp.Position = tp.Position;

ttp.Draw(DrawCanvas);

break;

}

}

CleanUp(e.GetTouchPoints(DrawCanvas));

}

private void CleanUp(TouchPointCollection tpc)

{

List<int> ToDelete = new List<int>();

foreach (TrackedTouchPoint ttp in trackedTouchPoints)

{

var query = from point in tpc

where point.TouchDevice.Id == ttp.ID

select point;

if (query.Count() == 0)

ToDelete.Add(ttp.ID);

}

foreach (int i in ToDelete)

{

var query = from point in trackedTouchPoints

where point.ID == i

select point;

if (query.Count() != 0)

trackedTouchPoints.Remove(query.First());

}

if (trackedTouchPoints.Count == 0)

{

DrawCanvas.Children.Clear();

}

}

}

class TrackedTouchPoint

{

public TrackedTouchPoint()

{

Rect = new Rectangle() { Height = 50, Width = 50 };

Position = new Point(0, 0);

IsPrimary = false;

BrushColor = new SolidColorBrush(Colors.Yellow);

}

private Rectangle Rect { get; set; }

public int ID { get; set; }

public Brush BrushColor

{

set

{

Rect.Fill = value;

}

}

public Point Position { get; set; }

public bool IsPrimary { get; set; }

public void Draw(Canvas canvas)

{

if (IsPrimary)

BrushColor = new SolidColorBrush(Colors.Blue);

Rect.SetValue(Canvas.LeftProperty, Position.X);

Rect.SetValue(Canvas.TopProperty, Position.Y);

if (Rect.Parent == null)

canvas.Children.Add(Rect);

}

public void UnDraw(Canvas canvas)

{

canvas.Children.Remove(Rect);

}

}

}

Raw touch with Touch.FrameReported gives full access to every touch event; however, it is cumbersome to work with when you just need to detect a gesture or a set of gestures. For multi-touch programming, Touch.FrameReported is not recommended. The next couple of sections cover gesture detection in both the XNA Framework and Silverlight as well as manipulations, which are recommended for multi-touch.
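Part of what makes the raw touch approach in Listing 3-6 cumbersome is the bookkeeping needed to match each touch action to a tracked point. The following is a minimal, UI-free sketch of that bookkeeping that keys the tracked points on the touch ID with a dictionary instead of querying a list. The TouchTracker class and the TouchPhase enumeration are hypothetical names used for illustration only; in the listing, the ID would come from TouchDevice.Id and the phase from TouchPoint.Action.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for the Down, Move, and Up values of TouchAction.
public enum TouchPhase { Down, Move, Up }

// Hypothetical, UI-free version of the tracking logic in Listing 3-6.
public class TouchTracker
{
    // Maps a touch ID to its last known position, stored as { x, y }.
    private readonly Dictionary<int, double[]> _points =
        new Dictionary<int, double[]>();

    // Number of touch points currently being tracked.
    public int Count
    {
        get { return _points.Count; }
    }

    // Records one touch action for the given touch ID.
    public void Report(int id, TouchPhase phase, double x, double y)
    {
        if (phase == TouchPhase.Up)
        {
            // Removing an ID that was never tracked is a harmless no-op.
            _points.Remove(id);
            return;
        }

        // Adds the point on Down and updates it on Move.
        _points[id] = new[] { x, y };
    }

    // Looks up the last known position for a touch ID.
    public bool TryGetPosition(int id, out double x, out double y)
    {
        double[] p;
        if (_points.TryGetValue(id, out p))
        {
            x = p[0];
            y = p[1];
            return true;
        }

        x = 0;
        y = 0;
        return false;
    }
}
```

Because the dictionary lookup tolerates unknown IDs, an Up action for a point that was never tracked does not need a separate null check.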

A gesture is a one- or two-finger action that is a predefined touch interaction. Gestures on Windows Phone 7 are similar to gestures that are defined on Windows 7, iPhone, Android, or pretty much any other touch device. What makes gestures useful is their consistency, which means that they should not be altered or "enhanced" in a way that will confuse users.

I cover single-touch and raw touch in the previous section titled "Single-Point Touch," but I did not speak to it in terms of gestures. Single-touch gestures consist of the following interactions:

Tap: Select an object in a ListBox, touch to click a button, or tap text to navigate to another screen.

Double Tap: Two successive taps that happen within a short time duration, such as one second, and are therefore recognized as a double-tap, not two single-tap gestures.

Pan: Use a single finger to move an object across the screen.

Flick: Similar to a pan gesture except that the finger moves quickly across the screen, acceleration is detected, and the object moves with inertia relative to the amount of acceleration applied.

Touch and Hold: Touch on an area of screen for a period of time, say a second, and a touch and hold gesture is detected. Used to open context menus.

The two-finger gestures are Pinch and Stretch. The Pinch gesture consists of placing two fingers on the screen and moving them closer together. Pinch is used to zoom out as well as to make an object smaller. The Stretch gesture consists of placing two fingers on the screen and moving them farther apart. Stretch is used to zoom in as well as to make an object larger. In the next two subsections I cover how to support gestures in Windows Phone 7 applications.

The XNA Framework on Windows Phone 7 includes the Microsoft.Xna.Framework.Input.Touch namespace. This is a non-graphical, non-rendering namespace, so it can be leveraged in both Silverlight and XNA Game Studio. The primary class for the namespace is the TouchPanel static class, which receives touch input that is automatically interpreted into a gesture for developers.

To process gestures, developers check TouchPanel.IsGestureAvailable to determine if a gesture is pending. If one is, developers then call TouchPanel.ReadGesture. The Microsoft.Xna.Framework.Input.Touch namespace includes an enumeration named GestureType that identifies the supported gestures: DoubleTap, Flick, FreeDrag, HorizontalDrag, VerticalDrag, Hold, Pinch, and Tap.

The Chapter 3 project GesturesTouchPanelXNA demonstrates how simple it is to use the TouchPanel class to determine gestures. In the Initialize() method of Game1.cs, the code enables all possible gestures.

TouchPanel.EnabledGestures = GestureType.DoubleTap | GestureType.Flick | GestureType.FreeDrag | GestureType.Hold | GestureType.HorizontalDrag | GestureType.None | GestureType.Pinch | GestureType.PinchComplete | GestureType.Tap | GestureType.VerticalDrag | GestureType.DragComplete;

We want to draw text to the screen in the XNA Framework project so we right-click on the GesturesTouchPanelXNAContentContent project and select Add

In the LoadContent() method of Game1.cs, this code loads the font and defines a position in the middle of the screen to draw the font.

spriteFontSegoeUIMono = Content.Load<SpriteFont>("Segoe UI Mono");

spriteFontDrawLocation = new Vector2(graphics.GraphicsDevice.Viewport.Width / 2,

graphics.GraphicsDevice.Viewport.Height / 2);

In the Update() method, here is the code to check for a gesture:

if (TouchPanel.IsGestureAvailable)

{

gestureSample = TouchPanel.ReadGesture();

gestureInfo = gestureSample.GestureType.ToString();

}

The gestureInfo variable is printed to the screen using the imported font with these lines of code in the Draw() method.

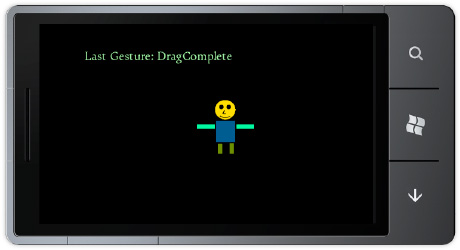

spriteBatch.Begin();

// Draw gesture info

string output = "Last Gesture: " + gestureInfo;

// Find the center of the string to center the text when outputted

Vector2 FontOrigin = spriteFontSegoeUIMono.MeasureString(output) / 2;

// Draw the string

spriteBatch.DrawString(spriteFontSegoeUIMono, output, spriteFontDrawLocation,

Color.LightGreen, 0, FontOrigin, 1.0f, SpriteEffects.None, 0.5f);

spriteBatch.End();

Run the application on a device and gesture on the screen to see the gesture recognized and the name of the gesture action drawn onscreen. Now that we have an easy way to detect a gesture, let's use it to do something useful.

The GestureSample class provides six properties with useful information about the gesture: GestureType, Timestamp, Position, Position2, Delta, and Delta2. You know what GestureType does from the discussion in the preceding paragraphs. Timestamp indicates the time of the gesture sample reading. The Timestamp values are continuous across readings, so they can be subtracted to determine how much time passed between readings. The other four values are Vector2 values related to the position of the finger on the screen. Position represents the first finger. Position2 represents the second finger in a two-finger gesture. The Delta and Delta2 values are like Timestamp in that they are relative: they indicate the change in each finger's position since the previous sample, not the distance between fingers in a multi-touch gesture. Table 3-3 relates gestures to the applicable fields with relevant notes.

Table 3.3. Gestures and Applicable GestureSample Members

| Gesture | Applicable Members | Notes |
|---|---|---|
| Tap | Position | |
| DoubleTap | Position | |
| Hold | Position | |
| FreeDrag | Position, Delta | |
| VerticalDrag | Position, Delta | Delta values are constrained to the direction of movement, either vertical (X=0) or horizontal (Y=0). |
| HorizontalDrag | Position, Delta | Delta values are constrained to the direction of movement, either vertical (X=0) or horizontal (Y=0). |
| DragComplete | N/A | All Vector2 values set to zero. |
| Flick | Delta | The Delta member represents flick speed (and direction) as pixels per second. |
| Pinch | Position, Position2, Delta, Delta2 | Position/Delta represent the first finger. Position2/Delta2 represent the second finger. Negative values indicate the Pinch gesture is moving fingers closer together. |
| PinchComplete | N/A | All Vector2 values set to zero. |

Debug info is added to write out the data from the GestureSample instance named gestureSample to help with development. The following is an example from the beginning of a Pinch gesture:

gesture Type: Pinch

gesture Timestamp: 03:27:37.3210000

gesture Position: {X:425.2747 Y:287.3394}

gesture Position2: {X:523.077 Y:366.6055}

gesture Delta: {X:0 Y:0}

gesture Delta2: {X:0 Y:0}

A short expanding Pinch gesture results in about 30 gesture samples over just less than half a second, providing a rich set of data to apply to objects as a result of user touches. Run the sample and perform gestures on blank portions of the screen to see how position and delta values change.
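Because GestureSample.Timestamp is a TimeSpan, the time between readings can be worked out with ordinary TimeSpan subtraction. The following is a minimal sketch of that arithmetic. The GestureTiming class and its method names are hypothetical and are not part of the sample project.

```csharp
using System;

// Hypothetical helper for reasoning about gesture sample timing.
public static class GestureTiming
{
    // Time that passed between two GestureSample.Timestamp readings.
    public static TimeSpan Elapsed(TimeSpan earlier, TimeSpan later)
    {
        return later - earlier;
    }

    // Average interval in milliseconds between consecutive samples,
    // given the timestamps of the first and last sample in a gesture.
    public static double AverageIntervalMs(TimeSpan first, TimeSpan last, int sampleCount)
    {
        if (sampleCount < 2)
        {
            throw new ArgumentOutOfRangeException("sampleCount");
        }

        return (last - first).TotalMilliseconds / (sampleCount - 1);
    }
}
```

As an illustration with made-up numbers, 30 samples whose first and last timestamps are 450 milliseconds apart give an average interval of about 15.5 milliseconds between samples.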

To make the sample more interesting, stickman figure manipulation is added to the GesturesTouchPanelXNA project. The stickman figure responds to Hold, Flick, Drag, and Pinch gestures. Figure 3-9 shows the simple UI, but you will want to run this on a device to try out the supported gestures.

If you tap and hold (Hold GestureType) on the stickman, the figure rotates 90 degrees. If the stickman is flicked (Flick GestureType), the stickman will bounce around the screen and will eventually slow down. Tap on the stickman to stop movement. Finally, drag (FreeDrag GestureType) to slide the stickman around the screen.

There is a little bit of XNA Framework development in the sample to create a basic GameObject class to represent the stickman sprite. This keeps the game code clean without using a bunch of member variables to track state in the Game1.cs file. Listing 3-7 shows the GameObject class.

Example 3.7. GameObject.cs Code File

using Microsoft.Xna.Framework;

using Microsoft.Xna.Framework.Graphics;

namespace GesturesTouchPanelXNA

{

class GameObject

{

private const float _minScale = .4f;

private const float _maxScale = 6f;

private const float _friction = .7f;

private const float _bounceVelocity = .9f;

private float _scale = 1f;

private Vector2 _velocity;

private Vector2 _position;

public GameObject(Texture2D gameObjectTexture)

{

Rotation = 0f;

Position = Vector2.Zero;

SpriteTexture = gameObjectTexture;

Center = new Vector2(SpriteTexture.Width / 2, SpriteTexture.Height / 2);

Velocity = Vector2.Zero;

TintColor = Color.White;

Selected = false;

}

public Texture2D SpriteTexture { get; set; }

public Vector2 Center { get; set; }

public float Rotation { get; set; }

public Rectangle TouchArea { get; set; }

public Color TintColor { get; set; }

public bool Selected { get; set; }

public float Scale

{

get { return _scale; }

set

{

_scale = MathHelper.Clamp(value, _minScale, _maxScale);

}

}

public Vector2 Position

{

get { return _position; }

set { _position = value; } //Move position to Center.

}

public Vector2 Velocity

{

get {return _velocity;}

set { _velocity = value; }

}

public Rectangle BoundingBox

{

get

{

Rectangle rect =

new Rectangle((int)(Position.X - SpriteTexture.Width / 2 * Scale),

(int)(Position.Y - SpriteTexture.Height / 2 * Scale),

(int)(SpriteTexture.Width * Scale),

(int)(SpriteTexture.Height * Scale));

//Increase the touch target a bit

rect.Inflate(10, 10);

return rect;

}

}

public void Update(GameTime gameTime, Rectangle displayBounds)

{

//apply scale for pinch / zoom gesture

float halfWidth = (SpriteTexture.Width * Scale) / 2f;

float halfHeight = (SpriteTexture.Height * Scale) / 2f;

// apply friction to slow down movement for simple physics when flicked

Velocity *= 1f - (_friction * (float)gameTime.ElapsedGameTime.TotalSeconds);

// Calculate position

//position = velocity * time

//TotalSeconds is the amount of time since last update in seconds

Position += Velocity * (float)gameTime.ElapsedGameTime.TotalSeconds;

// Apply "bounce" if sprite approaches screen bounds

if (Position.Y < displayBounds.Top + halfHeight)

{

_position.Y = displayBounds.Top + halfHeight;

_velocity.Y *= -_bounceVelocity;

}

if (Position.Y > displayBounds.Bottom - halfHeight)

{

_position.Y = displayBounds.Bottom - halfHeight;

_velocity.Y *= -_bounceVelocity;

}

if (Position.X < displayBounds.Left + halfWidth)

{

_position.X = displayBounds.Left + halfWidth;

_velocity.X *= -_bounceVelocity;

}

if (Position.X > displayBounds.Right - halfWidth)

{

_position.X = displayBounds.Right - halfWidth;

_velocity.X *= -_bounceVelocity;

}

}

public void Draw(SpriteBatch spriteBatch)

{

spriteBatch.Draw(SpriteTexture, Position, null, TintColor, Rotation,

Center,Scale,

SpriteEffects.None,0);

}

}

}

The vast majority of the GameObject class is basic math calculations for checking screen boundaries, velocity, position, and so on. The one item to point out is the handy MathHelper static class that includes numerous helpful methods. The Clamp method is used to limit the zooming via the Pinch GestureType to be between a min and max scale value.
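To see the friction, bounce, and clamp math in isolation, the following is a minimal sketch that applies the same steps as the Update method in Listing 3-7 along a single axis using plain floats. The SimplePhysics class is a hypothetical name, and its Clamp method is a stand-in written for this sketch rather than the XNA MathHelper.Clamp method itself.

```csharp
using System;

// Hypothetical one-axis version of the GameObject.Update logic.
public static class SimplePhysics
{
    public const float Friction = 0.7f;        // same value as _friction in Listing 3-7
    public const float BounceVelocity = 0.9f;  // same value as _bounceVelocity

    // Stand-in for MathHelper.Clamp: limits value to the range [min, max].
    public static float Clamp(float value, float min, float max)
    {
        return Math.Max(min, Math.Min(max, value));
    }

    // Advances position and velocity by one frame along a single axis.
    public static void Step(ref float position, ref float velocity,
                            float min, float max, float elapsedSeconds)
    {
        // Apply friction to slow the movement a little each frame.
        velocity *= 1f - (Friction * elapsedSeconds);

        // Move by velocity multiplied by elapsed time.
        position += velocity * elapsedSeconds;

        // Reverse and dampen the velocity when a bound is crossed.
        if (position < min)
        {
            position = min;
            velocity *= -BounceVelocity;
        }

        if (position > max)
        {
            position = max;
            velocity *= -BounceVelocity;
        }
    }
}
```

With the scale limits from the listing, SimplePhysics.Clamp(10f, .4f, 6f) returns 6 and SimplePhysics.Clamp(.1f, .4f, 6f) returns .4, which is how the Scale property keeps a Pinch gesture from shrinking or growing the sprite without limit.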

The other interesting code is the ProcessTouchInput() method in Game1.cs that is called in the Update() method. The method checks for touches first in order to determine if the stickman was touched on screen. To perform the check, each touch is converted to a Point object mapped into the screen coordinates. Next, we create a Rectangle object that encapsulates the stickman. The Rectangle.Contains method is passed in the Point object that represents the touch to determine if the touch was within the bounding box of the stickman. If the Point object is within the bounds of the Rectangle object, Selected is set to true on the StickMan sprite and gestures are applied. Otherwise, if a gesture is performed outside of the stickman, the gesture info is displayed to the screen as before but the StickMan sprite is not affected. The following is the code to determine selection:

TouchCollection touches = TouchPanel.GetState();

if ((touches.Count > 0) && (touches[0].State == TouchLocationState.Pressed))

{

// map touch to a Point object to hit test

Point touchPoint = new Point((int)touches[0].Position.X,

(int)touches[0].Position.Y);

if (StickManGameObject.BoundingBox.Contains(touchPoint))

{

StickManGameObject.Selected = true;

StickManGameObject.Velocity = Vector2.Zero;

}

}

A switch statement is added to the while (TouchPanel.IsGestureAvailable) loop. As a GestureType is identified, it is applied to the StickMan sprite. The switch statement is shown in Listing 3-8.

Example 3.8. ProcessInput Method GestureType Switch Statement

if (StickManGameObject.Selected)

{

switch (gestureSample.GestureType)

{

case GestureType.Hold:

StickManGameObject.Rotation += MathHelper.PiOver2;

break;

case GestureType.FreeDrag:

StickManGameObject.Position += gestureSample.Delta;

break;

case GestureType.Flick:

StickManGameObject.Velocity = gestureSample.Delta;

break;

case GestureType.Pinch:

Vector2 FirstFingerCurrentPosition = gestureSample.Position;

Vector2 SecondFingerCurrentPosition = gestureSample.Position2;

Vector2 FirstFingerPreviousPosition = FirstFingerCurrentPosition -

gestureSample.Delta;

Vector2 SecondFingerPreviousPosition = SecondFingerCurrentPosition -

gestureSample.Delta2;

//Calculate distance between fingers for the current and

//previous finger positions. Use it as a ratio to

//scale object. Can have positive and negative scale.

float CurentPositionFingerDistance = Vector2.Distance(

FirstFingerCurrentPosition, SecondFingerCurrentPosition);

float PreviousPositionFingerDistance = Vector2.Distance(

FirstFingerPreviousPosition, SecondFingerPreviousPosition);

float zoomDelta = (CurentPositionFingerDistance -

PreviousPositionFingerDistance) * .03f;

StickManGameObject.Scale += zoomDelta;

break;

}

}

For the GestureType.Hold gesture, the StickMan's Rotation property is increased by MathHelper.PiOver2 radians, which is equal to 90 degrees. For the GestureType.FreeDrag gesture, the StickMan's Position property is updated by the Delta value, which is a Vector2 in the direction and magnitude of movement since the last time a gesture sample was provided. For GestureType.Flick, the StickMan's Velocity is set to the Delta value, which in this case represents the flick speed and direction in pixels per second.

The GestureType.Pinch gesture requires a bit more calculation, but it is fairly straightforward. Essentially, the distance between fingers in screen coordinates is calculated for the current finger positions and the previous finger positions. The difference between the two distances is used to calculate the change in scale. Increasing finger distance yields a positive change in scale; decreasing finger distance yields a negative one. The larger the change in distance, the larger the magnitude of the change in scale.
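The Pinch calculation can be checked with concrete numbers outside of the game loop. The following is a minimal sketch that repeats the arithmetic from Listing 3-8 with plain floats instead of Vector2 values. The PinchMath class and its method names are hypothetical, and the sensitivity parameter corresponds to the .03f factor used in the listing.

```csharp
using System;

// Hypothetical stand-alone version of the Pinch calculation in Listing 3-8.
public static class PinchMath
{
    // Straight-line distance between two points given as coordinates.
    public static float Distance(float x1, float y1, float x2, float y2)
    {
        float dx = x2 - x1;
        float dy = y2 - y1;
        return (float)Math.Sqrt(dx * dx + dy * dy);
    }

    // Change in finger spread since the previous sample, multiplied by a
    // sensitivity factor. Positive when the fingers move apart, negative
    // when they move together.
    public static float ZoomDelta(
        float p1x, float p1y, float d1x, float d1y,   // first finger position and delta
        float p2x, float p2y, float d2x, float d2y,   // second finger position and delta
        float sensitivity)
    {
        float current = Distance(p1x, p1y, p2x, p2y);

        // Previous position = current position - delta, as in the listing.
        float previous = Distance(p1x - d1x, p1y - d1y, p2x - d2x, p2y - d2y);

        return (current - previous) * sensitivity;
    }
}
```

For example, with fingers at (100, 100) and (200, 100) that each moved 10 pixels outward since the last sample, the spread grew from 80 to 100 pixels, so a sensitivity of .03f produces a zoom delta of about 0.6.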

Touch input and Gestures are a key component to game development for Windows Phone 7. This section covered a lot of ground from gesture recognition to applying gestures to a game object, taking advantage of the gesture capabilities available in the XNA Framework libraries. We will now cover how to work with gestures in Silverlight.

We can take the information above regarding XNA Framework multi-touch and apply it to Silverlight. Because Silverlight and the XNA Framework share the same application model, you can share non-drawing libraries across programming models. This is demonstrated in the GesturesTouchPanelSilverlight project. To get started, add references to the Microsoft.Xna.Framework and Microsoft.Xna.Framework.Input.Touch assemblies.

In the MainPage() constructor in MainPage.xaml.cs, add the following code to enable Gestures, just as before:

TouchPanel.EnabledGestures = GestureType.DoubleTap | GestureType.Flick |

GestureType.FreeDrag | GestureType.Hold | GestureType.HorizontalDrag |

GestureType.None | GestureType.Pinch | GestureType.PinchComplete |

GestureType.Tap | GestureType.VerticalDrag | GestureType.DragComplete;

In XNA Game Studio, the game loop Update method is called 30 times a second, so it is a single convenient place to capture touch input. In Silverlight there isn't a game loop. A polling loop could be simulated with a DispatcherTimer that fires every 1000/30 milliseconds. This is the cleanest approach, because it closely simulates how the XNA Framework works.
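The DispatcherTimer polling approach might look something like the following. This is a minimal sketch rather than code from the GesturesTouchPanelSilverlight project: the GesturePoller class and its callback are hypothetical names, and the sketch assumes TouchPanel.EnabledGestures has already been set as shown above. Keep in mind that a DispatcherTimer is not guaranteed to fire at exactly the requested interval.

```csharp
using System;
using System.Windows.Threading;
using Microsoft.Xna.Framework.Input.Touch;

// Hypothetical helper; not part of the GesturesTouchPanelSilverlight sample.
public class GesturePoller
{
    private readonly DispatcherTimer _timer = new DispatcherTimer();
    private readonly Action<GestureSample> _onGesture;

    public GesturePoller(Action<GestureSample> onGesture)
    {
        _onGesture = onGesture;

        // Roughly 30 ticks per second, mirroring the update rate cited in the text.
        _timer.Interval = TimeSpan.FromMilliseconds(1000.0 / 30.0);
        _timer.Tick += OnTick;
    }

    public void Start()
    {
        _timer.Start();
    }

    public void Stop()
    {
        _timer.Stop();
    }

    private void OnTick(object sender, EventArgs e)
    {
        // Drain every gesture that was queued since the last tick.
        while (TouchPanel.IsGestureAvailable)
        {
            _onGesture(TouchPanel.ReadGesture());
        }
    }
}
```

A page could create the poller in its constructor, call Start when the page loads, and call Stop when it unloads so that the timer does not keep running after navigation.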

Another method is to hook into the mouse or manipulation events. I cover the manipulation events in the next section, so we use the mouse events here. This works fine most of the time, but some gestures are reported in MouseLeftButtonDown and MouseLeftButtonUp as well as in MouseMove, so reading gestures only in MouseMove can miss some of them and cause bugs. The following is the code to capture gesture events in Silverlight mouse events:

private void PhoneApplicationPage_MouseLeftButtonDown(object sender, MouseButtonEventArgs e)

{

while (TouchPanel.IsGestureAvailable)

{

GestureActionsListBox.Items.Add("LeftBtnDown "+TouchPanel.ReadGesture().GestureType.ToString());

}

}

private void PhoneApplicationPage_MouseLeftButtonUp(object sender, MouseButtonEventArgs e)

{

while (TouchPanel.IsGestureAvailable)

{

GestureActionsListBox.Items.Add("LeftBtnUp " + TouchPanel.ReadGesture().GestureType.ToString());

}

}

private void PhoneApplicationPage_MouseMove(object sender, MouseEventArgs e)

{

while (TouchPanel.IsGestureAvailable)

{

GestureActionsListBox.Items.Add("MouseMove " + TouchPanel.ReadGesture().GestureType.ToString());

}

}

Once the gestures are detected in the mouse events, you can react to them using a Canvas panel in much the same way as in the XNA Framework sample. One additional item to consider when comparing the XNA Framework and Silverlight is the coordinate system. In the XNA Framework, all objects are absolutely positioned relative to the upper-left corner of the screen, so the math to calculate position is straightforward. In Silverlight, objects can be placed within containers. For example, a Rectangle can have a top and left margin of 10,10 but be contained within a Grid that has a margin of 100,100 relative to the screen, so coordinate mapping is necessary to translate the touch location to an actual control position.
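One way to perform this kind of coordinate mapping in Silverlight is the TransformToVisual method, which returns a transform between the coordinate spaces of two elements. The following is a minimal sketch. The CoordinateMapper class and its method names are hypothetical, and the sketch assumes that the application's root visual is an acceptable stand-in for screen coordinates, which may not hold exactly if the system tray or page orientation offsets the layout.

```csharp
using System.Windows;
using System.Windows.Media;

// Hypothetical helper for translating points between an element and the root visual.
public static class CoordinateMapper
{
    // Maps a point given in root-visual coordinates into the
    // coordinate space of the specified element.
    public static Point FromRoot(UIElement element, Point pointInRoot)
    {
        GeneralTransform fromRoot =
            Application.Current.RootVisual.TransformToVisual(element);
        return fromRoot.Transform(pointInRoot);
    }

    // Maps a point given relative to the element into root-visual coordinates.
    public static Point ToRoot(UIElement element, Point pointInElement)
    {
        GeneralTransform toRoot =
            element.TransformToVisual(Application.Current.RootVisual);
        return toRoot.Transform(pointInElement);
    }
}
```

For the Rectangle described above, calling CoordinateMapper.ToRoot with the point (0, 0) would be expected to return a point near (110, 110), provided nothing else in the visual tree offsets the layout.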

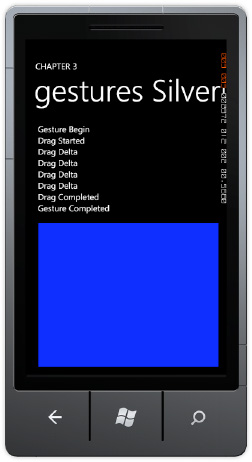

Another method to detect gestures in Silverlight is available within the Silverlight for Windows Phone Toolkit at Silverlight.codeplex.com. The toolkit includes the GestureService/GestureListener components to detect gestures, so you will want to download the toolkit to test out the sample.

Once the Silverlight for Windows Phone Toolkit is installed, browse to the toolkit library and add a reference. On my system it is located here: C:\Program Files (x86)\Microsoft SDKs\Windows Phone\v7.0\Toolkit\Nov10\Bin. The GesturesSilverlightToolkit project demonstrates how to use the GestureListener control. The toolkit library is added as a reference and made available in MainPage.xaml via an XML namespace import:

xmlns:toolkit="clr-namespace:Microsoft.Phone.Controls;assembly=Microsoft.Phone.Controls.Toolkit"

A Rectangle object containing a GestureListener control is added to the ContentPanel Grid:

<toolkit:GestureService.GestureListener> <toolkit:GestureListener /> </toolkit:GestureService.GestureListener>

Figure 3-10 shows the events available on the GestureListener.

Event handlers for all of the supported gestures (Tap, DoubleTap, Drag, Flick, TapAndHold, and Pinch) are added to the GesturesSilverlightToolkit project to allow you to explore the events. Figure 3-11 shows the test UI.

An important item to note is that each event has unique EventArgs to provide the information developers need to apply the gesture to objects. As an example, the FlickGestureEventArgs class includes Angle, Direction, GetPosition, Handled, HorizontalVelocity, and VerticalVelocity members. The properties are more tailored toward Silverlight development, which may simplify gesture processing over using the XNA Framework libraries.
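To make the FlickGestureEventArgs members concrete, here is a minimal sketch of a Flick handler. The handler name GestureListener_Flick is an assumption (it would be wired to the GestureListener's Flick event in XAML), and the output goes to the debugger rather than to the sample's UI.

```csharp
using System.Diagnostics;
using System.Windows.Controls;
using Microsoft.Phone.Controls;

public partial class MainPage : PhoneApplicationPage
{
    // Hypothetical handler for the toolkit GestureListener Flick event.
    private void GestureListener_Flick(object sender, FlickGestureEventArgs e)
    {
        // Direction reports the dominant axis of the flick.
        bool horizontal = e.Direction == Orientation.Horizontal;
        double velocity = horizontal ? e.HorizontalVelocity : e.VerticalVelocity;

        Debug.WriteLine("Flick " + (horizontal ? "horizontal" : "vertical") +
            ", velocity " + velocity + ", angle " + e.Angle);

        // Mark the gesture as handled so parent elements do not react to it again.
        e.Handled = true;
    }
}
```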

This concludes the discussion of gesture processing. The next section covers manipulation events.

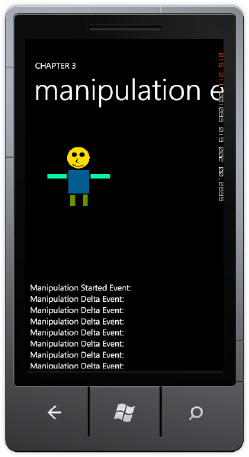

Manipulations permit more complex interactions. They have two primary characteristics. First, manipulations consist of multiple gestures that appear to happen simultaneously. Second, manipulations consist of a set of transforms resulting from the user's touch actions. The manipulation events are very helpful because they interpret the user's touch interaction as a set of transforms, such as translate and scale, that you as the developer can apply to objects onscreen.

Windows Presentation Foundation 4.0 introduced Manipulation events to provide a high-level touch programming model that simplifies touch programming when compared to using low-level raw touch input. A subset of the manipulation events is available in Silverlight for Windows Phone 7 with some differences. WPF manipulation events support translation, scaling, and rotation. Silverlight for Windows Phone does not include rotation.

Manipulation events do not distinguish between fingers. The events interpret finger movement into translation and scaling as well as an indication of velocity to implement physics.

Windows Phone 7 includes three manipulation events: ManipulationStarted, ManipulationDelta, and ManipulationCompleted, defined on the UIElement base class. Each manipulation event includes a custom EventArgs class with the following members in common:

e.OriginalSource: The original object that raised the event.

e.ManipulationContainer: The container object or panel that defines the coordinate system for the manipulation. This property will stay consistent through all three events.

e.ManipulationOrigin: The point from which the manipulation originated. Indicates the location of the finger relative to the ManipulationContainer object. For two-finger manipulations, the ManipulationOrigin represents roughly the center point between the two fingers.

The events include unique EventArgs members as well, listed in the following:

ManipulationStarted: The ManipulationStartedEventArgs class includes a Complete method that completes the manipulation without inertia, and a Handled property to indicate that the routed event is handled so that other controls don't attempt to handle the event again.

ManipulationDelta: The ManipulationDeltaEventArgs class includes a Complete method. The IsInertial property indicates whether the delta event is occurring during inertia. Other properties are DeltaManipulation and CumulativeManipulation, which represent the discrete (delta) and cumulative changes since ManipulationStarted resulting from the manipulation. The other EventArgs property is Velocities, which indicates the most recent rate of change for the manipulation.

ManipulationCompleted: The ManipulationCompletedEventArgs class includes FinalVelocities and TotalManipulation properties. It also includes Handled and IsInertial properties.
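The members listed above can be explored with a pair of simple handlers. This is only a sketch: the handler names Element_ManipulationStarted and Element_ManipulationCompleted are hypothetical, and the null check on FinalVelocities is a defensive assumption rather than documented behavior.

```csharp
using System.Diagnostics;
using System.Windows.Input;
using Microsoft.Phone.Controls;

public partial class MainPage : PhoneApplicationPage
{
    // Hypothetical handler names; wire them up in XAML or code-behind.
    private void Element_ManipulationStarted(object sender,
        ManipulationStartedEventArgs e)
    {
        // ManipulationOrigin is expressed relative to ManipulationContainer.
        Debug.WriteLine("Started at " + e.ManipulationOrigin +
            " within " + e.ManipulationContainer);
        e.Handled = true;
    }

    private void Element_ManipulationCompleted(object sender,
        ManipulationCompletedEventArgs e)
    {
        // TotalManipulation accumulates every delta since ManipulationStarted.
        Debug.WriteLine("Total translation: " + e.TotalManipulation.Translation);
        Debug.WriteLine("Total scale: " + e.TotalManipulation.Scale);

        // Defensive check (an assumption) before reading the velocities.
        if (e.FinalVelocities != null)
        {
            Debug.WriteLine("Final linear velocity: " +
                e.FinalVelocities.LinearVelocity);
        }

        Debug.WriteLine("Ended during inertia: " + e.IsInertial);
        e.Handled = true;
    }
}
```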

As we saw before with gesture development, there is one ManipulationStarted "started" event followed by zero or more ManipulationDelta events, and then a ManipulationCompleted "completed" event. To test manipulations, we created the ManipulationEvents project using the StickMan sprite from the GesturesTouchPanelXNA project. Figure 3-12 shows the UI.

The project implements drag and scale via the ManipulationDelta event. Here is the code for the ManipulationDelta event.

private void StickManImage_ManipulationDelta(object sender,

ManipulationDeltaEventArgs e)

{

ReportEvent("Manipulation Delta Event: ");

Image image = sender as Image;

CompositeTransform compositeTransform =

image.RenderTransform as CompositeTransform;

if ((e.DeltaManipulation.Scale.X > 0) || (e.DeltaManipulation.Scale.Y > 0))

{

double ScaleValue = Math.Max(e.DeltaManipulation.Scale.X,

e.DeltaManipulation.Scale.Y);

System.Diagnostics.Debug.WriteLine("Scale Value: " +

ScaleValue.ToString());

//Limit how large

if ((compositeTransform.ScaleX <= 4d) || (ScaleValue < 1d))

{

compositeTransform.ScaleX *= ScaleValue;

compositeTransform.ScaleY *= ScaleValue;

}

}

System.Diagnostics.Debug.WriteLine("compositeTransform.ScaleX: " +

compositeTransform.ScaleX);

System.Diagnostics.Debug.WriteLine("compositeTransform.ScaleY: " +

compositeTransform.ScaleY);

compositeTransform.TranslateX += e.DeltaManipulation.Translation.X;

compositeTransform.TranslateY += e.DeltaManipulation.Translation.Y;

e.Handled = true;

}

The code modifies a CompositeTransform based on the DeltaManipulation values: Scale for Pinch gestures and Translation for movement. The CompositeTransform is declared in the XAML for the StickMan Image tag, as shown in the following:

<Image x:Name="StickManImage" Source="/images/StickMan.jpg"

ManipulationCompleted="StickManImage_ManipulationCompleted"

ManipulationDelta="StickManImage_ManipulationDelta"

ManipulationStarted="StickManImage_ManipulationStarted">

<Image.RenderTransform>

<CompositeTransform />

</Image.RenderTransform>

</Image>

The Silverlight for Windows Phone Toolkit GestureListener control is the preferred method for detecting gestures in Silverlight for Windows Phone. Manipulation events should be a second or third choice if for some reason the GestureListener or XNA Framework libraries do not suit your needs. For multi-touch development that does not involve gesture detection, the manipulation events are recommended. Let's now shift gears to discuss other forms of application input on Windows Phone 7.

As far as fun goes, the accelerometer can be an entertaining and engaging method of input, especially for game development with XNA Game Studio or Silverlight. We have all seen the car racing games on mobile phones or mobile gaming devices where the user tilts the device like a steering wheel. The next section covers how to work with the Accelerometer.

The Accelerometer sensor detects acceleration along all three axes (X, Y, and Z) to form a 3D vector. You may wonder in what direction the vector points and what its magnitude is. Collect a few accelerometer readings using this line of code:

System.Diagnostics.Debug.WriteLine(AccelerometerHelper.Current2DAcceleration.ToString());

The following are a few samples from the Output window when debugging:

{X:0.351 Y:-0.002 Z:0.949} (Magnitude is approximately 1.01)

{X:0.401 Y:0.044 Z:0.984} (Magnitude is approximately 1.06)

{X:0.378 Y:0.04 Z:1.023} (Magnitude is approximately 1.09)

{X:0.386 Y:0.022 Z:0.992} (Magnitude is approximately 1.06)

{X:0.409 Y:0.03 Z:0.992} (Magnitude is approximately 1.07)

You can calculate the magnitude of the vector using the Pythagorean Theorem: magnitude = Sqrt(X² + Y² + Z²). The value should be about one, but as the samples above show, it can vary, whether because of location or sensor error. Either way, this is why applications like a virtual level suggest that you calibrate against a known flat surface before use.
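The magnitude calculation can be written as a small helper. AccelerationMath and Magnitude are hypothetical names used only for this sketch; the arithmetic is the standard Euclidean length of a 3D vector.

```csharp
using System;

public static class AccelerationMath
{
    // Returns the length of the acceleration vector (in units of g),
    // computed as the square root of the sum of the squared components.
    public static double Magnitude(double x, double y, double z)
    {
        return Math.Sqrt(x * x + y * y + z * z);
    }
}
```

For the sample reading {X:0.401 Y:0.044 Z:0.984}, Magnitude(0.401, 0.044, 0.984) evaluates to roughly 1.06, and the emulator reading {X:0 Y:0 Z:-1} gives exactly 1.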

If you run the application in the emulator, this reading is returned every time: {X:0 Y:0 Z:-1}. Holding the phone flat in my unsteady hand yields similar values, with Z near negative one and X and Y near zero.

{X:0.039 Y:0.072 Z:-1.019}

{X:0.069 Y:0.099 Z:-1.047}

{X:0.012 Y:0.056 Z:-1.008}

{X:0.016 Y:0.068 Z:-1.019}

This suggests that the vector points toward the center of the earth, which for the above readings means out through the back of the phone, or a negative Z, when the phone is lying flat on its back. Flipping the phone in my hand yields the following values:

{X:-0.043 Y:0.08 Z:1.019}

{X:-0.069 Y:0.111 Z:1.093}

{X:-0.069 Y:0.099 Z:1.093}

{X:-0.039 Y:0.107 Z:1.031}

This time the vector is pointing out from the glass toward the ground, because the phone is lying face down. This information is useful if you need to determine how the phone is oriented in the user's hand when, say, taking a photograph.
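The face-up and face-down readings suggest a simple classification based on the sign of Z. The FaceOrientation enum, the OrientationHelper class, and the threshold parameter are all hypothetical and chosen for illustration; a suitable threshold would need tuning on real hardware.

```csharp
using System;

public enum FaceOrientation
{
    FaceUp,
    FaceDown,
    Indeterminate
}

public static class OrientationHelper
{
    // Classifies the phone as face up or face down from the Z component.
    // A Z value near -1 means gravity points out the back (screen facing up);
    // a Z value near +1 means gravity points out the glass (screen facing down).
    public static FaceOrientation Classify(double z, double threshold)
    {
        if (z <= -threshold) return FaceOrientation.FaceUp;
        if (z >= threshold) return FaceOrientation.FaceDown;
        return FaceOrientation.Indeterminate;
    }
}
```

With a threshold of 0.8, a Z reading of -1.019 is classified as FaceUp and a Z reading of 1.093 as FaceDown, while a reading that is mostly along X or Y is left Indeterminate.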

Figure 3-13 shows the accelerometer coordinate system. This is important because developers must translate readings into the coordinate system for the application.

As an example, in the XNA Framework, the default 2D coordinate system has positive Y going down, not up, so you cannot just take the Y component of acceleration and apply it to the Y value for a game object in 2D XNA.
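One way to sketch the translation for a 2D game is to negate the Y component before applying it to a screen position. The TiltMapping class, the ToScreenDelta method, and the speed factor are hypothetical, and the sketch assumes the game runs in portrait orientation, where the accelerometer's X axis lines up with the screen's X axis.

```csharp
using System;

public static class TiltMapping
{
    // Maps accelerometer X/Y readings to a 2D screen-space movement delta.
    // Screen Y grows downward while accelerometer Y grows toward the top of
    // the phone, so the Y component is negated.
    // Returns a two-element array: { deltaX, deltaY }.
    public static double[] ToScreenDelta(double accelX, double accelY, double speed)
    {
        return new[] { accelX * speed, -accelY * speed };
    }
}
```

For a phone held upright, Y reads about -1, so ToScreenDelta(0.0, -1.0, 10.0) yields a positive Y delta of 10 and the object moves toward the bottom of the screen.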

Note

The default coordinate system for 3D in the XNA Framework has positive Y going up. Chapter 8 covers 3D XNA Game Studio development.

With this background in hand, the next section covers development with the accelerometer sensor.

Accessing the Accelerometer sensor is pretty straightforward. We start with an XNA project, add a reference to the Microsoft.Devices.Sensors assembly, and declare an instance of the Accelerometer class. In the Game1.Initialize() method, create an instance of the Accelerometer and call the Start() method to generate readings.

Note

Turn off the Accelerometer if not needed to save battery power.

Create an event handler for ReadingChanged as well. The following is the code to create the event handler:

accelerometer = new Accelerometer();

accelerometer.Start();

accelerometer.ReadingChanged += new EventHandler<AccelerometerReadingEventArgs>(accelerometer_ReadingChanged);

The AccelerometerReadingEventArgs class passed to the accelerometer_ReadingChanged event handler exposes acceleration in three dimensions via the X, Y, and Z properties of type double. There is also a Timestamp property to allow measurement of acceleration changes over time.
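The pieces described in this section can be put together roughly as follows. This is a sketch under assumptions, not the chapter's sample code: the readingLock and latestReading fields are hypothetical, the lock reflects the assumption that ReadingChanged is raised on a different thread than the game loop, and the graphics setup that a real Game1 class contains is omitted.

```csharp
using System;
using Microsoft.Devices.Sensors;
using Microsoft.Xna.Framework;

public class Game1 : Game
{
    private Accelerometer accelerometer;

    // Hypothetical fields used to hand readings to the game loop safely.
    private readonly object readingLock = new object();
    private Vector3 latestReading;

    protected override void Initialize()
    {
        accelerometer = new Accelerometer();
        accelerometer.ReadingChanged +=
            new EventHandler<AccelerometerReadingEventArgs>(accelerometer_ReadingChanged);

        try
        {
            accelerometer.Start();
        }
        catch (AccelerometerFailedException)
        {
            // The sensor could not be started; the game continues without tilt input.
        }

        base.Initialize();
    }

    private void accelerometer_ReadingChanged(object sender,
        AccelerometerReadingEventArgs e)
    {
        // Store the latest reading; e.Timestamp is available if timing matters.
        lock (readingLock)
        {
            latestReading = new Vector3((float)e.X, (float)e.Y, (float)e.Z);
        }
    }

    protected override void Update(GameTime gameTime)
    {
        Vector3 reading;
        lock (readingLock)
        {
            reading = latestReading;
        }

        // Apply 'reading' to game objects here.

        base.Update(gameTime);
    }

    protected override void OnExiting(object sender, EventArgs args)
    {
        // Stop the sensor when it is no longer needed to save battery power.
        accelerometer.Stop();
        base.OnExiting(sender, args);
    }
}
```

Copying the reading into a field and consuming it in Update keeps all game-state changes inside the game loop, which tends to be easier to reason about than updating objects directly from the event handler.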