Chapter 2: Getting Started with Predictive Modeling

2.2 Opening SAS Enterprise Miner 12.1

2.3 Creating a New Project in SAS Enterprise Miner 12.1

2.4 The SAS Enterpriser Miner Window

2.5 Creating a SAS Data Source

2.6 Creating a Process Flow Diagram

2.8 Tools for Initial Data Exploration

2.8.4 Variable Clustering Node

2.9 Tools for Data Modification

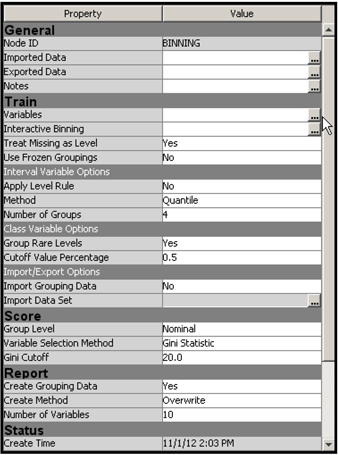

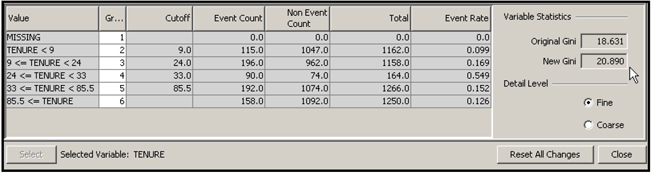

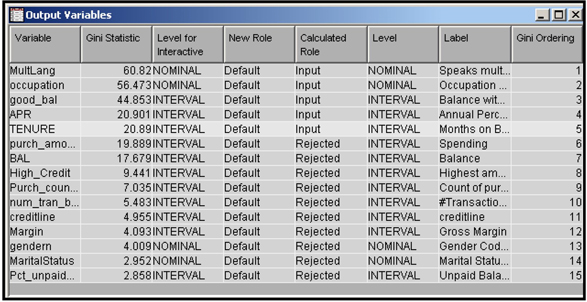

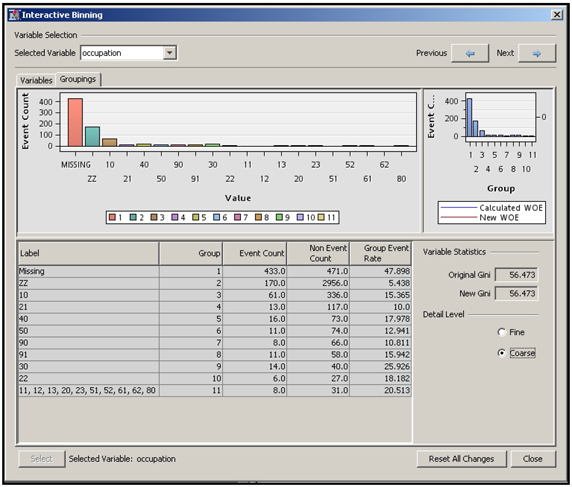

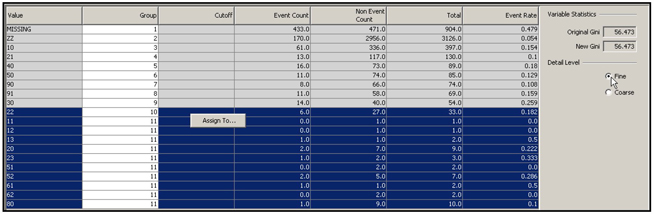

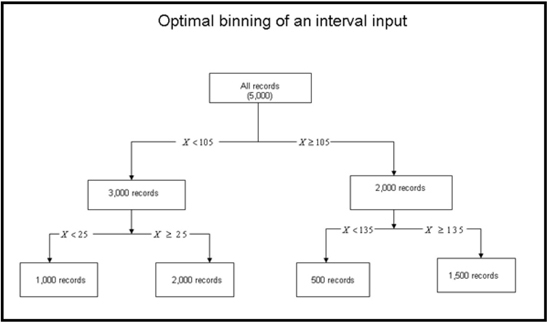

2.9.4 Interactive Binning Node

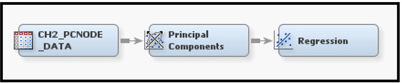

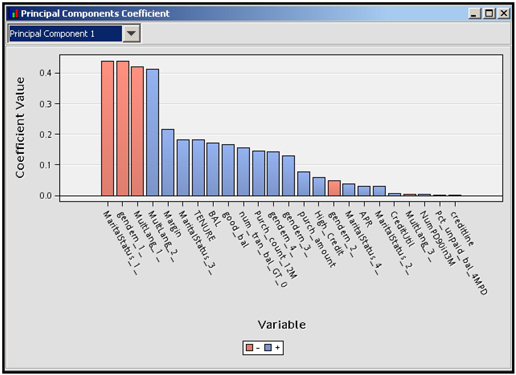

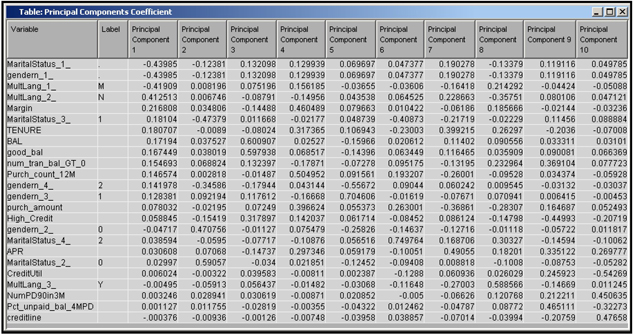

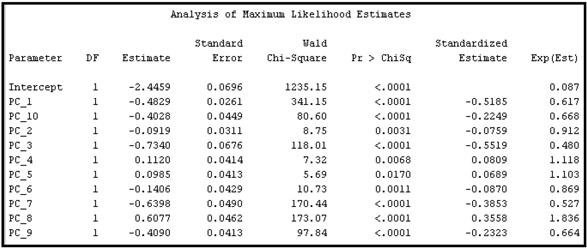

2.9.5 Principal Components Node

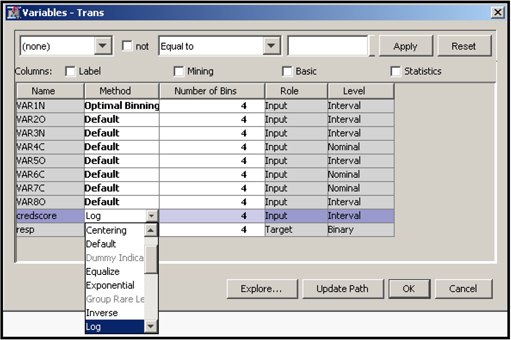

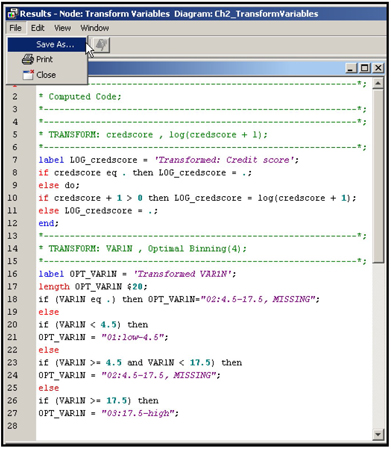

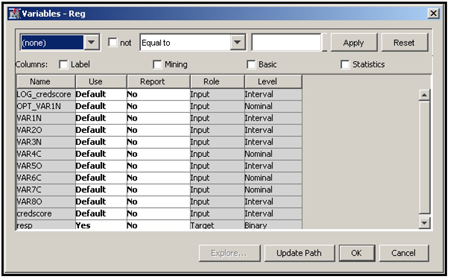

2.9.6 Transform Variables Node

2.11.1 The Type, the Measurement Scale, and the Number of Levels of a Variable

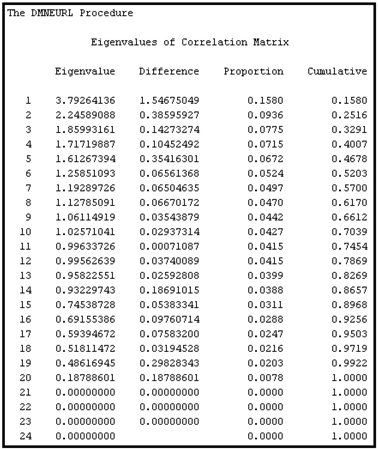

2.11.2 Eigenvalues, Eigenvectors, and Principal Components

2.11.4 Calculation of Chi-Square Statistic and Cramer’s V for a Continuous Input

2.1 Introduction

This chapter introduces you to SAS Enterprise Miner 12.1 and some of the preprocessing and data cleaning tools (nodes) needed for data mining and predictive modeling projects. SAS Enterprise Miner’s modeling tools are not included in this chapter as they are covered extensively in Chapters 4, 5, and 6.

2.2 Opening SAS Enterprise Miner 12.1

To start SAS Enterprise Miner 12.1, click the SAS Enterprise Miner icon on your desktop.1 If you have a Workstation configuration, the Welcome to Enterprise Miner window opens, as shown in Display 2.1.

Display 2.1

2.3 Creating a New Project in SAS Enterprise Miner 12.1

When you select New Project in the Enterprise Miner window, the Create New Project window opens.

In this window, enter the name of the project and the directory where you want to save the project. This example uses Chapter2 and C:TheBookEM12.1EMProjects (the directory where the project will be stored). Click Next. A new window opens, which shows the New Project Information.

Display 2.2

Click Finish. The new project is created, and the SAS Enterprise Miner 12.1 interface window opens, showing the new project.

2.4 The SAS Enterprise Miner Window

This is the window where you create the process flow diagram for your data mining project. The numbers in Display 2.3 correspond to the descriptions below the display.

Display 2.3

① Menu bar

② Tools bar: This contains the Enterprise Miner node (tool) icons. The icons displayed on the toolbar change according to the tab you select in the area indicated by ③.

③ Node (Tool) group tabs: These tabs are for selecting different groups of nodes. The toolbar ② changes according to the node group selected. If you select the Sample tab on this line, you will see the icons for Append, Data Partition, File Import, Filter, Input Data, Merge, Sample, and Time Series in ②. If you select the Explore tab, you will see the icons for Association, Cluster, DMDB, Graph Explore, Market Basket, Multiplot, Path Analysis, SOM/Kohonen, StatExplore, Variable Clustering, and Variable Selection in ②.

④ Project Panel: This is for viewing, creating, deleting, and modifying the Data Sources, Diagrams, and Model Packages. For example, if you want to create a data source (tell SAS Enterprise Miner where your data is and give information about the variables, etc.), you click Data Sources and proceed. For creating a new diagram, you right-click Diagrams and proceed. To open an existing diagram, double-click on the diagram you want.

⑤ Properties Panel: In this panel, you would see properties of Project, Data Sources, Diagrams, Nodes, and Model Packages by selecting them. In this example, the nodes are not yet created; hence, you do not see them in Display 2.3. You can view and edit the properties of any object selected. If you want to specify or change any options in a node such as Decision Tree or Neural Network, you must use the Properties panel.

⑥ Help Panel: This displays a description of the property that you select in the Properties panel.

⑦ Status Bar: This indicates the execution status of the SAS Enterprise Miner task.

⑧ Toolbar Shortcut Buttons: These are shortcut buttons for Create Data Source, Create Diagram, Run, etc. To display the text name of these buttons, position the mouse pointer over the button.

⑨ Diagram Workspace: This is used for building and running the process flow diagram for the project with various nodes (tools) of SAS Enterprise Miner.

Project Start Code

For any SAS Enterprise Miner Project, you must specify the directory where the data sets required for the project are located. Open the Enterprise Miner window and click ![]() located in the value column of Project Start Code row in the Properties panel (see Display 2.3). A window opens where you can type the path of the library where your data sets for the project are located. The Project Start Code window is shown in Display 2.4.

located in the value column of Project Start Code row in the Properties panel (see Display 2.3). A window opens where you can type the path of the library where your data sets for the project are located. The Project Start Code window is shown in Display 2.4.

Display 2.4

The data for this project is located in the folder C:TheBookEM12.1DataChapter2. This is indicated by the libref TheBook. When you click Run Now, the library reference to the path is created. You can check whether the library is successfully created by opening the log window by clicking the Log tab.

2.5 Creating a SAS Data Source

You must create a data source before you start working on your SAS Enterprise Miner Project. After the data source is created, it contains all the information associated with your data—the directory path to the file that contains the data, the name of the data file, the names and measurement scales of the variables in the data set, and the cost and decision matrices and target profiles you specify. Profit matrix is also known as decision weights in SAS Enterprise Miner 12.1, which is used in decisions such as assigning a target class to an observation and assessing the models. This section shows how a data source is created, covering the essential steps. For additional capabilities and features of data source creation, refer to the Help menu in SAS Enterprise Miner. SAS Enterprise Miner saves all of this information, or metadata, as different data sets in a folder called Data Sources in the project directory.

To create a data source, click on the toolbar shortcut button or right-click Data Sources in the Project panel, as shown in Display 2.5.

Display 2.5

When you click Create Data Source, the Data Source Wizard window opens, and SAS Enterprise Miner prompts you to enter the data source.

If you are using a SAS data set in your project, use the default value SAS Table in the Source box and click Next. Then another window opens, prompting you to give the location of the SAS data set.

When you click Browse, a window opens that shows the list of library references. This window is shown in Display 2.6.

Display 2.6

Since the data for this project is in the library Thebook, double-click on Thebook. The window opens with a list of all the data sets in that library. This window is shown in Display 2.7.

Display 2.7

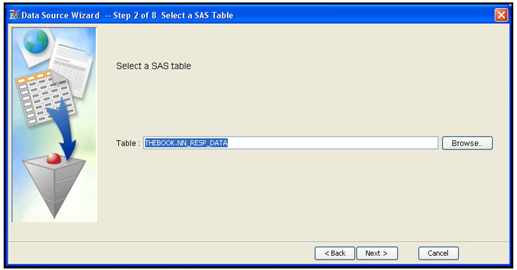

Select the data set named NN_RESP_DATA, and click OK. The Data Source Wizard window opens, as shown in Display 2.8.

Display 2.8

This display shows the libref and the data set name. Click Next. Another window opens displaying the Table Properties. This is shown in Display 2.9.

Display 2.9

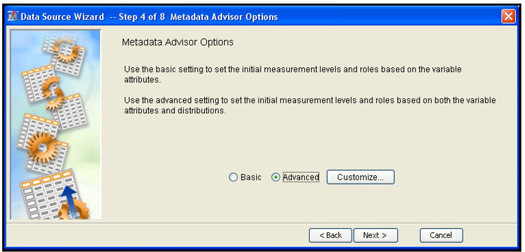

Click Next to show the Metadata Advisor Options. This is shown in Display 2.10.

Display 2.10

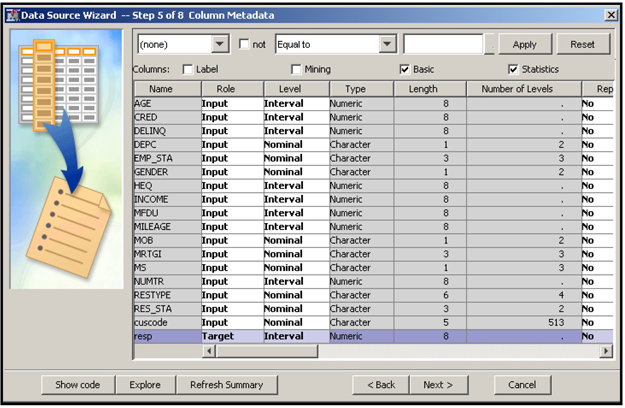

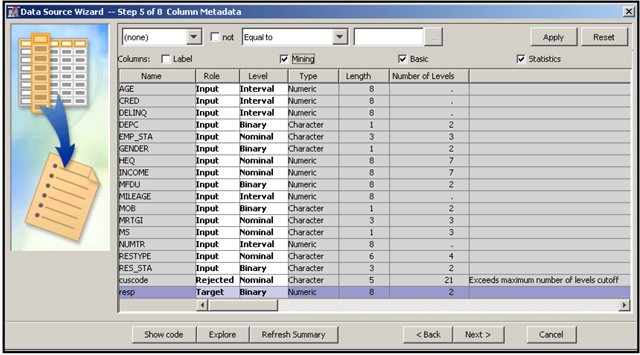

Use the Metadata Advisor Options window to define the metadata. Metadata is data about data sets. It specifies how each variable is used in the modeling process. The metadata contains information about the role of each variable, its measurement scale,2 etc.

If you select the Basic option, the initial measurement scales and roles are based on the variable attributes. It means that if a variable is numeric, its measurement scale is designated to be interval, irrespective of how many distinct values the variable may have. For example, a numeric binary variable will also be initially given the interval scale. If your target variable is binary in numeric form, it will be treated as an interval-scaled variable, and it will be treated as such in the subsequent nodes. If the subsequent node is a Regression node, SAS Enterprise Miner automatically uses ordinary least squares regression, instead of the logistic regression, which is usually appropriate with a binary target variable.

With the Basic option, all character variables are assigned the measurement scale of nominal, and all numeric variables are assigned the measurement scale of interval.

If you select Advanced, SAS Enterprise Miner applies a bit more logic as it automatically sets the variable roles and measurement scales. If a variable is numeric and has more than 20 distinct values, SAS Enterprise Miner sets its measurement scale (level) to interval. In addition, if you select the Advanced option, you can customize the measurement scales. For example, by default the Advanced option sets the measurement scale of any numeric variable to nominal if it takes 20 or fewer unique values, but you can change this number by clicking Customize and setting the Class Levels Count Threshold property (See Display 2.11) to a number other than the default value of 20.

For example, consider a numeric variable such as X, where X may be the number of times a credit card holder was more than 60 days past due in payment in the last 24 months. In the modeling data set, X takes the values 0, 1, 2, 3, 4, and 5 only. With the Advanced Advisor option, SAS Enterprise Miner will assign the measurement scale of X to Nominal by default. But, if you change the Class Levels Count Threshold property from 20 to 3, 4, or 5, SAS Enterprise Miner will set the measurement scale of X to interval. A detailed discussion of the measurement scale assigned when you select the Basic Advanced Advisor Options with default values of the properties, and Advanced Advisor Options with customized properties is given later in this chapter.

Display 2.11 shows the default settings for the Advanced Advisor Options.

Display 2.11

One advantage of selecting the Advanced option is that SAS Enterprise Miner automatically sets the role of each unary variable to Rejected. If any of the settings are not appropriate, you can change them later in the window shown in Display 2.12.

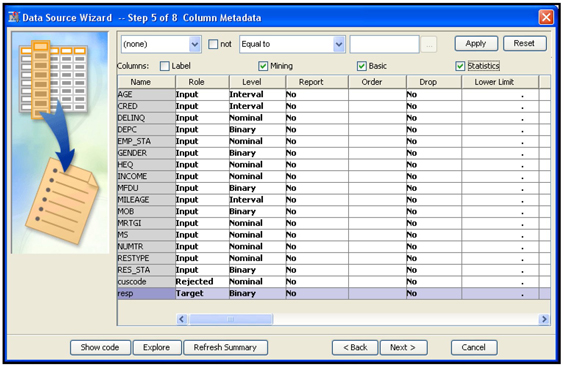

In this example, the Class Levels Count Threshold property is changed to 10. I closed the Advanced Advisor Options window by clicking OK, and then I clicked Next. This opens the window shown in Display 2.12.

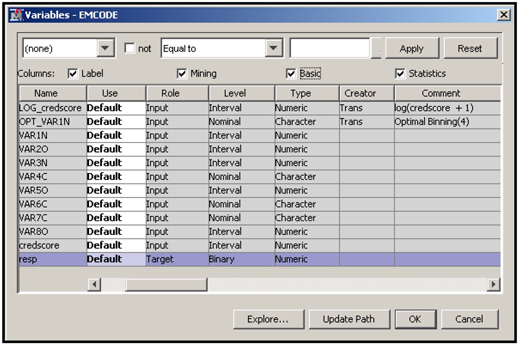

Display 2.12

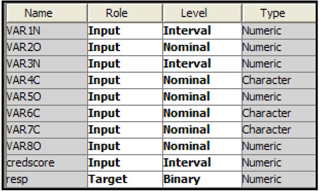

This window shows the Variable List table with the variable names, model roles, and measurement levels of the variables in the data set. This example specifies the model role of the variable resp as the target.

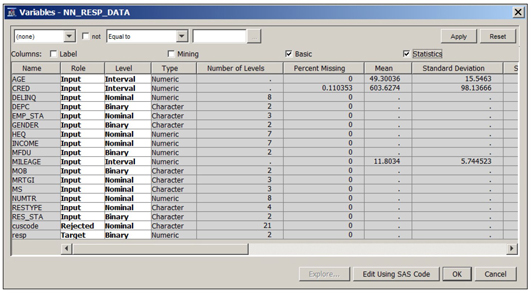

If you check the box Statistics at the top of the Variable List table, the Advanced Option function of the Data Source Wizard calculates important statistics such as the number of levels, percent missing, minimum, maximum, mean, standard deviation, skewness, and kurtosis for each variable. If you check the Basic box, the Variable List table also shows what type (character or numeric) each variable belongs. Display 2.13 shows a partial view of these additional statistics and variable types.

Display 2.13

If the target is categorical, when you click Next, another window opens with the question “Do you want to build models based on the values of the decisions?”

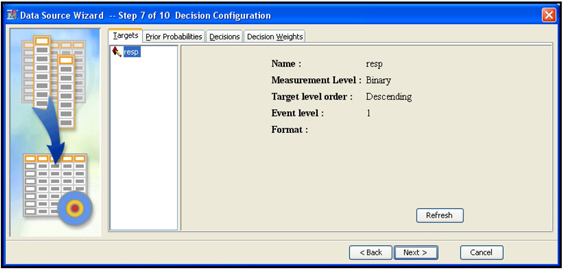

If you are using a profit matrix (decision weights), cost variables, and posterior probabilities, select Yes, and click Next to enter these values (you can also enter or modify these matrices later). The window shown in Display 2.14 opens.

Display 2.14

The Targets tab displays the name of the target variable and its measurement level. It also gives the target levels of interest. In this example, the variable resp is the target and is binary, which means that it has two levels: response, indicated by 1, and non-response, indicated by 0. The event of interest is response. That is, the model is set up to estimate the probability of response. If the target has more than two levels, this window will show all of its levels. (In later chapters, I model an ordinal target that has more than two levels, each level indicating frequency of losses or accidents, with 0 indicating no accidents, 1 indicating one accident, and 2 indicating four accidents, and so on.)

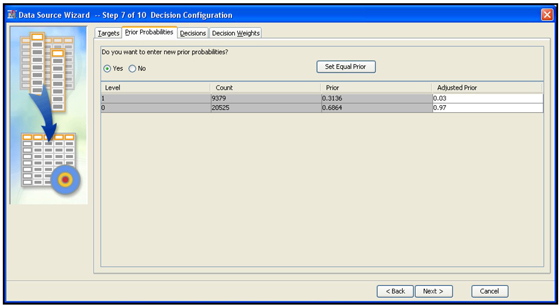

Display 2.15 shows the Prior Probabilities tab.

Display 2.15

This tab shows (in the column labeled Prior) the probabilities of response and non-response calculated by SAS Enterprise Miner for the sample used for model development. In the modeling sample I used in this example, the responders are over-represented. In the sample, there are 31.36% responders and 68.64% non-responders, shown under the column “Prior.” So the models developed from the modeling sample at hand will be biased unless a correction is made for the bias caused by over-representation of the responders. In the entire population, there are 3% responders and 97% non responders. These are the true prior probabilities. If you enter these true prior probabilities in the Adjusted Prior column as I have done, SAS Enterprise Miner will correct the models for the bias and produce unbiased predictions. To enter these adjusted prior probabilities, select Yes in response to the question Do you want to enter new prior probabilities? Then enter the probabilities that are calculated for the entire population (0.03 and 0.97 in my example).

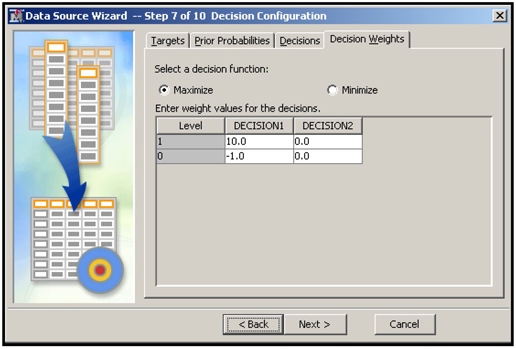

To enter a profit matrix, click the Decision Weights tab, shown in Display 2.16.

Display 2.16

The columns of this matrix refer to the different decisions that need to be made based on the model’s predictions. In this example, DECISION1 means classifying or labeling a customer as a responder, while DECISION2 means classifying a customer as a non-responder. The entries in the matrix indicate the profit or loss associated with a correct or incorrect assignment (decision), so the matrix in this example implies that if a customer is classified as a responder, and he is in fact a responder, then the profit is $10. If a customer is classified as a responder, but she is in fact a non-responder, then there will be a loss of $1. Other cells of the matrix can be interpreted similarly.

In developing predictive models, SAS Enterprise Miner assigns target levels to the records in a data set. In the case of a response model, assigning target levels to the records means classifying each customer as a responder or non- responder. In a latter step of any modeling project, SAS Enterprise Miner also compares different models, on the basis of a user-supplied criterion, to select the best model. In order to have SAS Enterprise Miner use the criterion of profit maximization when assigning target levels to the records in a data set and when choosing among competing models, select Maximize for the option Select a decision function. The values in the matrix shown here are arbitrary and given only for illustration.

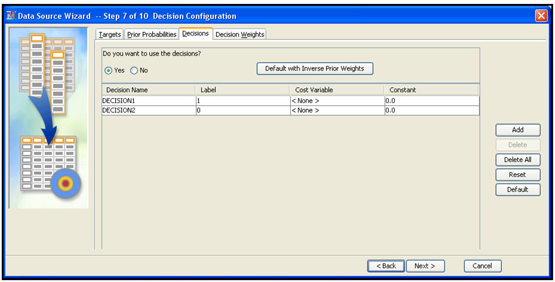

Display 2.17 shows the window for cost variables, which you can open by clicking the Decisions tab.

Display 2.17

If you want to maximize profit instead of minimizing cost, then there is no need to enter cost variables. Costs are already taken into account in profits. Therefore, in this example, cost variables are not entered.

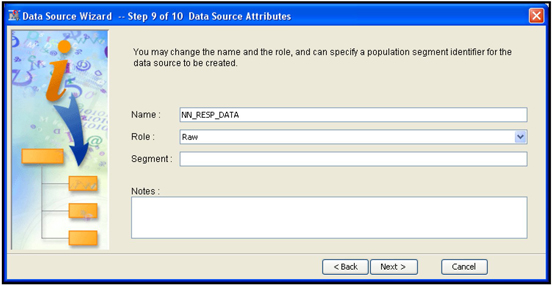

When you click Next, another window opens with the question “Do you wish to create a sample data set?” Since I want to use the entire data for this project, I chose No, and click Next. A window opens that shows the data set and its role, shown in Display 2.18. In this example, the data set NN_RESP_DATA is assigned the role Raw.

Diagram 2.18

Other options for Role are Train, Validate, Test, Score, Document, and Transaction. Since I plan to create the Train, Validate, and Test data sets from the sample data set, I leave its role as Raw. When I click Next, the metadata summary window opens. Click Finish. The Data Source Wizard closes, and the Enterprise Miner Project window opens, as shown in Display 2.19.

Display 2.19

In order to see the properties of the data set, expand Data Sources in the project panel. Select the data set NN_RESP_DATA; the Properties panel shows the properties of the data source as shown in Display 2.19.

You can view and edit the properties. Open the variables table (shown in Display 2.20) by clicking ![]() located on the right of the Variables property in the Value column.

located on the right of the Variables property in the Value column.

Display 2.20

This variable table shows the name, role, measurement scale, etc., for each variable in the data set. You can change the role of any variable, change its measurement scale, or drop a variable from the data set. If you drop a variable from the data set, it will not be available in the next node.

By checking the “Basic” box located above the columns, you can see the variable type and length. By checking the “Statistics” box you can see statistics such as mean standard deviation etc. for interval scaled variables as shown in Display 2.20.

Note that the variable Income is numeric (see under the column Type) but its level (measurement scale) is set to Nominal because income has only 7 levels (unique values) in the sample. When you select the advanced advisor options in creating the data source, by default the measurement scale of a numeric variable is set to nominal if it has less than 20 unique values. See display 2.11, where you see that the class level count threshold is 20 by default. Although we changed this threshold to 10, the measurement scale of Income is still nominal since it has only 7 levels (less than 10).

2.6 Creating a Process Flow Diagram

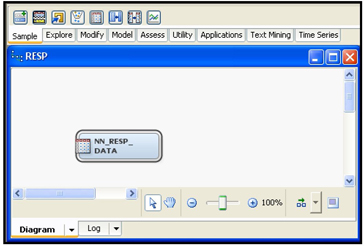

To create a process flow diagram, right-click Diagrams in the project panel (shown in Display 2.19), and click Create Diagram. You are prompted to enter the name of the diagram in a text box labeled Diagram Name. After entering a name for your diagram, click OK. A blank workspace opens, as shown in Display 2.21, where you create your process flow diagram.

Display 2.21

To create a process flow diagram drag and connect the nodes (tools) you need for your task. The following sections show some examples of how to use the nodes available in SAS Enterprise Miner.

2.7 Sample Nodes

If you open the Sample tab, the tool bar is populated with the icons for the following nodes: Append, Data Partition, File Import, Filter, Input Data, Merge, Sample, and Time Series. In this section, I provide an overview of some of these nodes, starting with the Input Data node.

2.7.1 Input Data Node

This is the first node in any diagram (unless you start with the SAS Code node). In this node, you specify the data set that you want to use in the diagram. You might have already created several data sources for this project, as discussed in Section 2.5. From these sources, you need to select one for this diagram. A data set can be assigned to an input in one of two ways:

• When you expand Data Sources in the project panel by clicking the + on the left of Data Sources, all the data sources appear. Then click on the icon to the left of the data set you want, and drag it to the Diagram Workspace. This creates the Input Data node with the desired data set assigned to it.

• Alternatively, first drag the Input Data node from the toolbar into the Diagram Workspace. Then set the Data Source property of the Input Data node to the name of the data set. To do this, select the Input Data node, then click ![]() located to the right of the Data Source property as shown in Display 2.22. The Select Data Source window opens. Click on the data set you want to use in the diagram. Then click OK.

located to the right of the Data Source property as shown in Display 2.22. The Select Data Source window opens. Click on the data set you want to use in the diagram. Then click OK.

When you follow either procedure, the Input Data node is created as shown in Display 2.23.

Display 2.22

Display 2.23

2.7.2 Data Partition Node

In developing predictive models, you must partition the sample into Training, Validation, and Test. The Training data is used for developing the model using tools such as Regression, Decision Tree, and Neural Network. During the training process, these tools generate a number of models. The Validation data set is used to evaluate these models, and then to select the best one. The process of selecting the best model is often referred to as fine tuning. The Test data set is used for an independent assessment of the selected model.

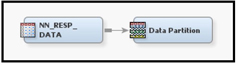

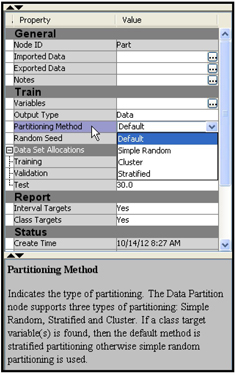

From the Tools bar, drag the Data Partition node into the Diagram Workspace, connect it to the Input Data node and select it, so that the Property panel shows the properties of the Data Partition node, as shown in Display 2.25.

The Data Partition node is shown in Display 2.24, and its Property panel is shown in Display 2.25.

Display 2.24

Display 2.25

In the Data Partition node, you can specify the method of partitioning by setting the Partitioning Method property to one of four values: Default, Simple random, Cluster, or Stratified. In the case of a binary target such as response, the stratified sampling method results in uniform proportions of responders in each of the partitioned data sets. Hence, I set the Partitioning Method property to Stratified, which is the default for binary targets. The default proportion of records allocated to these three data sets are 40%, 30%, and 30%, respectively. You can change these proportions by resetting the Training, Validation, and Test properties under the Data Set Allocations property.

2.7.3 Filter Node

The Filter node can be used for eliminating observations with extreme values (outliers) in the variables.

You should not use this node routinely to eliminate outliers. While it may be reasonable to eliminate some outliers for very large data sets for predictive models, the outliers often have interesting information that leads to insights about the data and customer behavior.

Before using this node, you should first find out the source of extreme value. If the extreme value is due to an error, the error should be corrected. If there is no error, you can truncate the value so that the extreme value does not have an undue influence on the model.

Display 2.26 shows the flow diagram with the Filter node. The Filter node follows the Data Partition node. Alternatively, you can use the Filter node before the Data Partition node.

Display 2.26

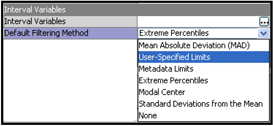

To use the Filter node, select it, and set the Default Filtering Method property for Interval Variables to one of the values, as shown in Display 2.27. (Different values are available for Class variables.)

Display 2.27

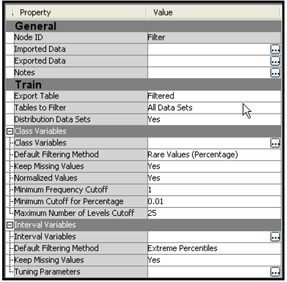

Display 2.28 shows the Properties panel of the Filter node.

Display 2.28

I set the Tables to Filter property to All Data Sets so that outliers are filtered in all three data sets—Training, Validation, and Test—and then ran the Filter node.

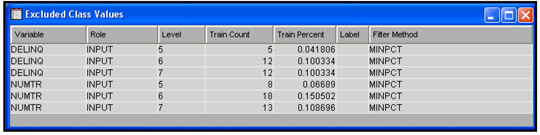

The Results window shows the number of observations eliminated due to outliers of the variables. Output 2.1 (from the output of the Results window) shows the number of records excluded from the Train, Validate, and Test data sets due to outliers.

Output 2.1

The number of records exported to the next node is 11557 from the Train data set, 8658 records from the Validate data set and 8673 records from the Test data set. Displays 2.29 and 2.30 show the criteria used for filtering the observations.

Display 2.29

Display 2.30

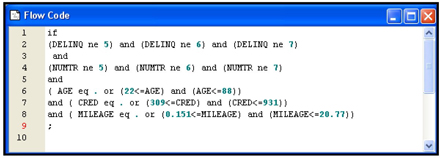

To see the SAS code that is used to perform the filters, click View→SAS Results→Flow Code. The SAS code is shown in Display 2.31.

Display 2.31

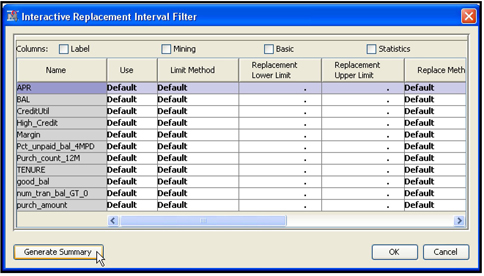

Instead of using the default filtering method for all variables, you can specify different filtering methods to individual variables. Do this by opening the Variables window. To open the Variable window for interval variables, click ![]() located to the right of the Interval Variables property. The Interactive Interval Filter window opens, as shown in Display 2.32.

located to the right of the Interval Variables property. The Interactive Interval Filter window opens, as shown in Display 2.32.

Display 2.32

For example, if you want to change the filtering method for the variable CRED(stands for Credit Score) , select the row for the variable CRED as shown in Display 2.32 and click in the Filtering Method column corresponding to CRED. A drop-down menu of all the filtering methods available appears. You can then select the method you want.

You can also interactively set the limits for filtering out extreme values by sliding the handles that appear above the chart in Display 2.32. Let me illustrate this by manually setting the filtering limits for the variable CREDIT.

Display 2.32 shows that some customers have a credit rating of 1000 or above. In general the maximum credit rating is around 950, so a rating above this value is almost certainly erroneous. So I set the lower and upper limits for the CREDIT variable at around 301 and 950 respectively. I set these limits by sliding the handles located at the top of the graph to the desired limits and clicking Apply Filter, as shown in Display 2.33. Click OK to close the window.

Display 2.33

After running the Filter node and opening the Results window, you can see that the limits I set for the credit variable have been applied in filtering out the extreme values, as shown in Display 2.34.

Display 2.34

2.7.4 File Import Node

The File Import node enables you to create a data source directly from an external file such as a Microsoft Excel file. Display 2.35 shows the types of files that can be converted directly into data sources format in SAS Enterprise Miner.

Display 2.35

I will illustrate the File Import node by importing an Excel file. You can pass the imported data to any other node. I demonstrate this by connecting the File Import node to the StatExplore node.

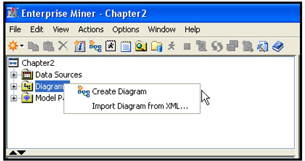

To use the File Import node, first create a diagram in the current project by right-clicking Diagrams in the project panel, as shown in Display 2.36

Display 2.36

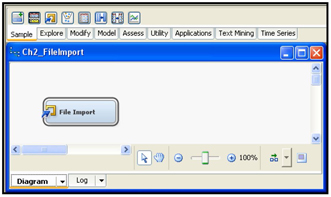

Type a diagram name in the text box in the Create Diagram dialog box, and click OK. A blank workspace is created. Drag the File Import tool from the Sample tab, as shown in Display 2.37.

Display 2.37

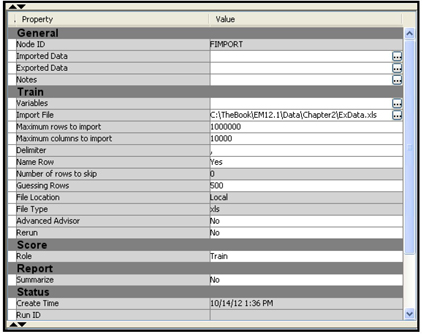

The Properties panel of the File Import node is shown in Display 2.38.

Display 2.38

In order to configure the metadata, click ![]() to the right of Import File property in the Properties panel (see Display 2.38). The File Import dialog box appears.

to the right of Import File property in the Properties panel (see Display 2.38). The File Import dialog box appears.

Since the Excel file I want to import is on my C drive, I select the My Computer radio button, and type the directory path, including the file name, in the File Import dialog box. Now I can preview my data in the Excel sheet by clicking the Preview button, or I can complete the file import task by clicking OK. I chose to click OK.

The imported Excel file is now shown in the value of the Import File property in the Properties panel, as shown in Display 2.39.

Display 2.39

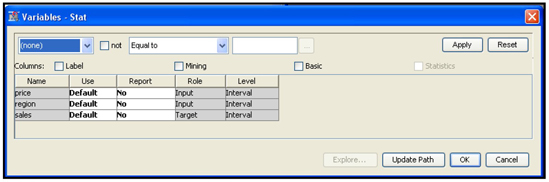

Next we have to assign the Metadata Information such as variable roles and measurement scales. To do this click ![]() located to the right of Variables property. The Variables window opens. In the Variables window, you can change the role of a variable by clicking on the column Role. I have changed the role of the variable Sales to Target, as shown in Display 2.40.

located to the right of Variables property. The Variables window opens. In the Variables window, you can change the role of a variable by clicking on the column Role. I have changed the role of the variable Sales to Target, as shown in Display 2.40.

Display 2.40

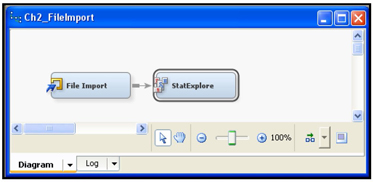

Click OK, and the data set is ready for use in the project. It can be passed to the next node. I have connected the StatExplore node to the File Import node in order to verify that the data can be used, as shown in Display 2.41.

Display 2.41

Display 2.42 shows the table that is passed from the File Import node to the StatExplore node.

Display 2.42

You can now run the StatExplore node. I successfully ran StatExplore node shown in Display 2.41. I discuss the results later in this chapter.

2.7.5 Time Series Node

Converting transactions data to time series

The Time Series node in SAS Enterprise Miner 12.1 can be used to condense transactional data to time series form, which is suitable for analyzing trends and seasonal variations in customer transactions. Both the transactional data and the time series data are time stamped. But the observations in transactional data may not occur at any particular frequency, whereas the observations in time series data pertain to consecutive time periods of a specific frequency, such as annual, quarterly, monthly, weekly or daily.

In order to introduce the reader to the Time Series node, I present an example of a transactional data set that shows the sales of two products (termed A and B) over a 60-month period by a hypothetical company. The company sells these products in 3 states—Connecticut, New Jersey, and New York. The sales occur in different weeks within each month. Display 2.43 shows a partial view of this transactions data.

Display 2.43

Note that, in January 2005, customers purchased product A during the weeks 1, 2, and 4, and they purchased Product B during the weeks 2, 3 and 4. In February 2005, customers purchased product A during the weeks 1, 2 and 4, and they purchased B in weeks 3 and 4. If you view the entire data set, you will find that there are sales of both products in all 60 months (Jan 2005 through Dec 2009), but there may not be sales during every week of every month. No data was entered for weeks when there were no sales, hence there is no observation in the data set for the weeks during which no purchases were made. In general, in a transaction data set, an observation is recorded only when a transaction takes place.

In order to analyze the data for trends, or seasonal or cyclical factors, you have to convert the transactional data into weekly or monthly time series. Converting to weekly data entails entering zeroes for the weeks that had missing observations to represent no sales. In this example, we convert the transaction data set to a monthly time series.

Display 2.44 shows monthly sales of product A, and Display 2.45 shows monthly sales of product B in Connecticut. Time series of this type are used by the Time Series node for analyzing trends and seasonal factors.

Display 2.44

Display 2.45

In order to perform an analysis on monthly time series derived from the transactions data shown in Display 2.43, you need to specify the time ID (month_yr in this example), and the cross ID variables (State and Product) and the target variable (Sales) in the Time Series node. The steps described in the following sections illustrate how to identify seasonal factors in the sales of products A and B (from the transactions data discussed earlier) using the Time Series node. You can use the Time Series node to find trend and cyclical factors in the sales also, but here I show only the seasonal decomposition of the sales.

First open SAS Enterprise Miner, create a project, and then create a data source for the transaction data. The first two steps are the same as described earlier, but creating a data source from transaction data is slightly different.

Creating a Data Source for the Transaction data

To create the data source, open an existing project. Right-click Data Sources in the Project panel and select Create Data Source (as shown earlier in Display 2.5 when we created a new SAS data source). The Data Source Wizard opens. For this example, use the default value of SAS Table for the Source field. Click Next.

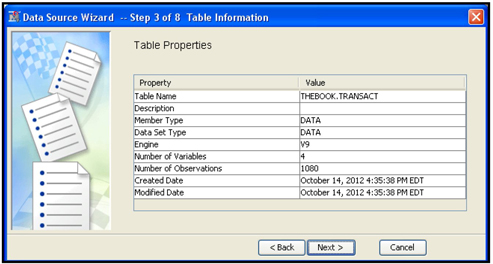

For step 3, enter the name of the transactions data set (called TRANSACT in this example), and click Next. The Table Properties table opens, as shown in Display 2.46A.

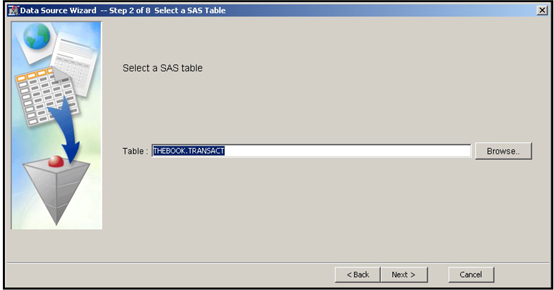

In the “Source” text box type in the data type. Since the transactions data is a SAS data set in this example, I entered “SAS Table”. By clicking on the “Next” button, the Wizard takes you to Step 2. In Step2 enter the name of the transaction data set (THEBOOK.TRANSACT) as shown in Display 2.46.

Display 2.46

By clicking “Next” the Wizard takes you to Step 3.

Display 2.46A

Click Next to move to step 4 of the Wizard. Select the Advanced option and click Next.

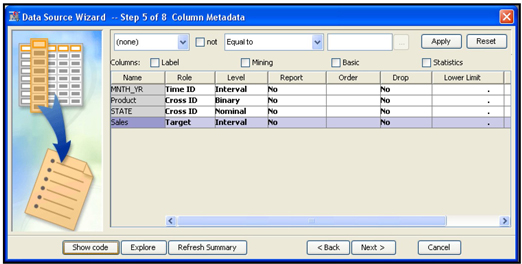

In step 5, names, roles, measurement levels, etc. of the variables in your data set are displayed.

Display 2.47

I changed the role of the variable Month_Yr to Time ID and its measurement level to Interval, the roles of the variables Product and State to Cross ID, and the role of Sales to Target. These changes result in the use of monthly time series of sales for analysis. Since there are three states and two products, six time series will be analyzed when I run the Time Series node.

Click Next. In step 6, the Wizard asks if you want to create a sample data set. I selected No, as shown in Display 2.48.

Display 2.48

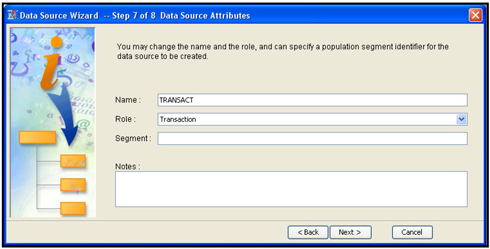

Click Next. In step 7, assign Transaction as the Role for the data source (shown in Display 2.49), and click Next.

Display 2.49

Step 8 shows a summary of the metadata created. When you click Finish, the Project window opens.

Display 2.50

Creating a Process Flow Diagram for Time Series Analysis

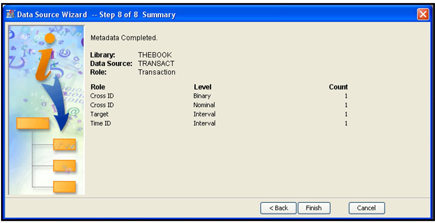

To create a process flow diagram, right-click Diagram in the Project panel and click Create Diagram. The Create New Diagram window opens. Enter the diagram name (Ch2_TS), and click OK.

From the Data Sources folder, click on the icon to the left of the Transact data source and drag it into the Diagram Workspace. In the same way, drag the Time Series node from the Sample tab into the Diagram Workspace and connect it to the Input Data node, as shown in Display 2.51.

Display 2.51

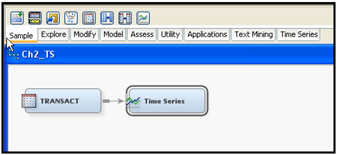

Analyzing Time Series: Seasonal Decomposition

By clicking the Time Series node in the Diagram Workspace (Display 2.51), the Properties panel of the Time Series node appears, as shown in Display 2.52.

Display 2.52

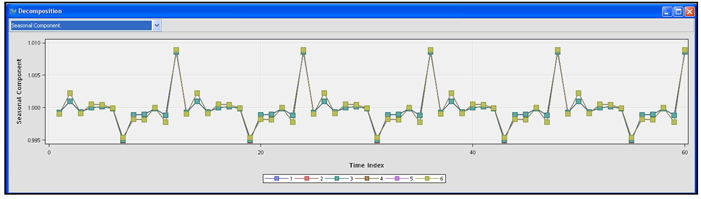

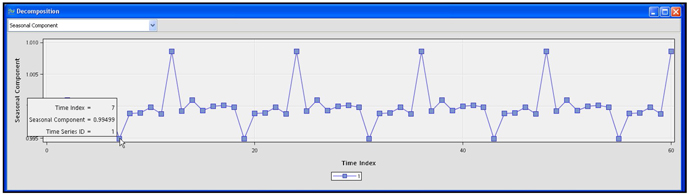

I have set the Select an Analysis property to Seasonal Decomposition since I am interested in getting the monthly seasonal factors for sales of the two products for the three states included in the data set. Then I ran the Time Series node and opened the Results window. In the Decomposition window, I selected the Seasonal Component graph. This graph in Display 2.53 shows the seasonal effects for the six monthly time series created by the Time Series node.

Display 2.53

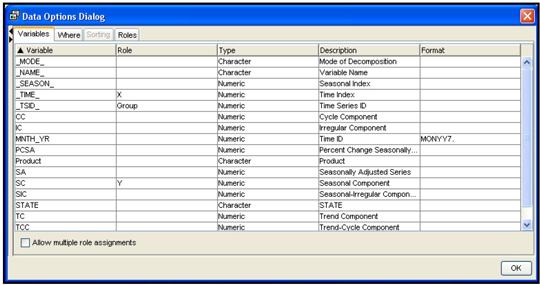

Display 2.53 shows that there are seasonal factors for the months of July and December. You can view the seasonal factors of any individual time series by right-clicking on the graph area to open the Data Options dialog box, shown in Display 2.54.

Display 2.54

To see the seasonal factors in the sales for given product in a given state, click the Where tab. The window for making the selection of a time series opens, as shown in Display 2.55.

Display 2.55

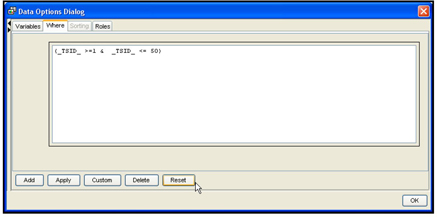

Click Reset. A Data Options dialog box opens as shown in Display 2.56.

Display 2.56

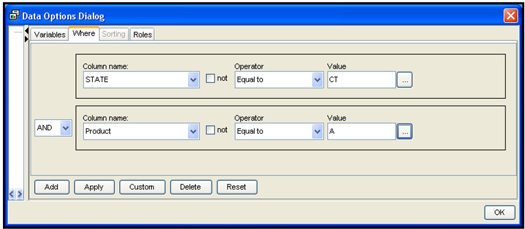

In the Column name box, select the variable name State. Enter CT in the Value field, and click Add. A new area appears, as shown in Display 2.57.

Display 2.57

Select Product in the Column name box, and select the value A. Click Apply and OK. The seasonal component graph for Sales of Product A in the state of Connecticut appears, as shown in Display 2.58.

Display 2.58

By hovering over any point on the graph, you can see the seasonal components for that month. As Display 2.58 shows, the seasonal component (factor) is 0.9949 for the month of July 2005. Since the component is below 1, it means that the sales were slightly 1% below normal. Similarly, you can see that the seasonal factors account for a slightly higher sales during the month of December.

To learn how to estimate the seasonal components of a time series, refer to:

• Dagum, E. B. (1980), The X-11-ARIMA Seasonal Adjustment Method, Statistics Canada.

• Dagum, E. B. (1983), The X-11-ARIMA Seasonal Adjustment Method, Technical Report 12-564E, Statistics Canada.

• Ladiray, D. and Quenneville, B. (2001), Seasonal Adjustment with the X-11 Method, New York: Springer-Verlag.

The example presented here is very simple. The full benefit of the Time Series node becomes clear when you use more complex data than that presented here.

Output Data Sets

To see a list of output data sets that are created by the Time Series node, click ![]() located in the Value column of the Exported Data property of the Properties panel, as shown in Display 2.59. Display 2.60 shows a list of the output data sets.

located in the Value column of the Exported Data property of the Properties panel, as shown in Display 2.59. Display 2.60 shows a list of the output data sets.

Display 2.59

Display 2.60

The seasonal decomposition data is saved as a data set with the name time_decomp. On my computer, this table is saved as C:TheBookEM12.1EMProjectsChapter2WorkspacesEMWS4 ime_decomp.sas7bdat. Chapter2 is the name of the project, and it is a sub-directory in C:TheBookEM12.1EMProjects.

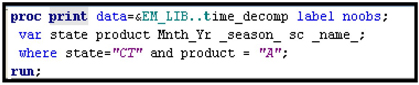

You can also print selected columns of the data set time_decomp.sas7bdat from the SAS code node using the code shown in Display 2.61.

Display 2.61

Partial output generated by this code is shown in Output 2.2.

Output 2.2

2.7.6 Merge Node

The Merge node can be used to combine different data sets within a SAS Enterprise Miner project. Occasionally you may need to combine the outputs generated by two or more nodes in the process flow. For example, you may want to test two different types of transformations of interval inputs together, where each type of transformation is generated by different instances of the Transform Variables node. To do this you can attach two Transform Variables nodes, as shown in Display 2.62. You can set the properties of the first Transform Variables node such that it applies a particular type of transformation to all interval inputs. You can set the properties of the second Transform Variables node to perform a different type of transformation on the same interval variables. Then, using the Merge node, you can combine the output data sets created by these two Transform Variables nodes. The resulting merged data set can be used in a Regression node to test which variables and transformations are the best for your purposes.

Display 2.62

To make this example more concrete, I have generated a small data set for a hypothetical bank with two interval inputs and an interval target. The two inputs are: (1) interest rate differential (SPREAD) between the interest rate offered by the bank and the rate offered by its competitors, and (2) amount spent by the bank on advertisements (AdExp) to attract new customers and/or induce current customers to increase their savings balances. The target variable is the month-to-month change in the savings balance (DBAL) of each customer for each of a series of months, which is a continuous variable.

The details of different transformations and how to set the properties of the Transform Variables node to generate the desired transformations are discussed later in this chapter and also in Chapter 4. Here it is sufficient to know that the two Transform Variables nodes shown in Display 2.62 will each produce an output data set, and the Merge node merges these two output data sets into a single combined data set.

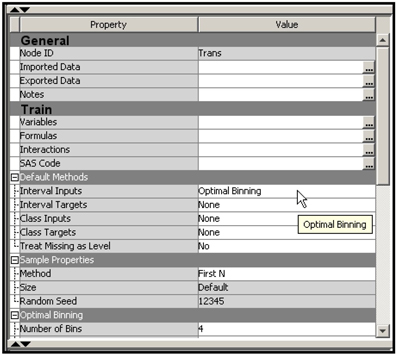

In the upper Transform Variables node, all interval inputs are transformed using the “optimal binning” method. (See Chapter 4 for more detail.) The optimal binning method creates a categorical variable from each continuous variable; the categories are the input ranges (or class intervals or bins). In order for all continuous and interval scaled inputs to be transformed by the Optimal Binning method, I set the Interval Inputs property in the Default Methods group to Optimal Binning, as shown in Display 2.63.

Display 2.63

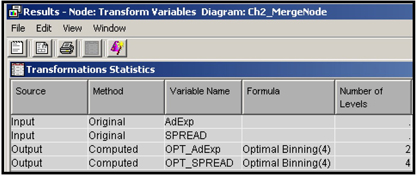

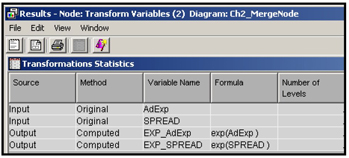

After running the Transform Variables node, you can open the Results window to see the transformed variables created, as shown in Display 2.64. The transformed variables are: OPT_AdExp and OPT_SPREAD.

Display 2.64

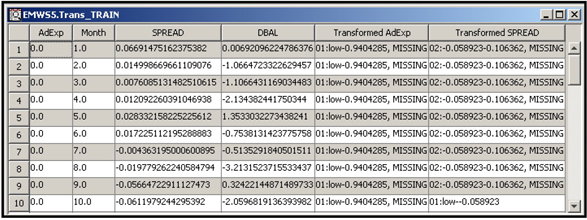

You can view the output data set by clicking ![]() located to the right of the Exported Data row in the Property table in Display 2.63. A partial view of the output data set created by the upper Transform Variables node is shown in Display 2.65.

located to the right of the Exported Data row in the Property table in Display 2.63. A partial view of the output data set created by the upper Transform Variables node is shown in Display 2.65.

Display 2.65

To generate a second set of transformations, click on the second (lower) Transform Variables node and set the Interval Inputs property in the Default Methods group to Exponential so that all the interval inputs are transformed using the exponential funtion. Display 2.66 shows the new variables created by the second Transform Variables node.

Display 2.66

The two Transform Variables nodes are then connected to the Merge node, as shown in Display 2.62. I have used the default properties of the Merge node.

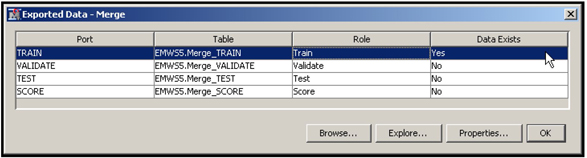

After running the Merge node, click on it and click ![]() located to the right of Exported Data in the Properties panel. Then, select the exported data set, as shown in Display 2.67.

located to the right of Exported Data in the Properties panel. Then, select the exported data set, as shown in Display 2.67.

Display 2.67

Click Explore to see the Sample Properties, Sample Statistics, and a view of the merged data set.

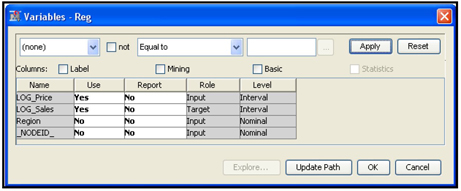

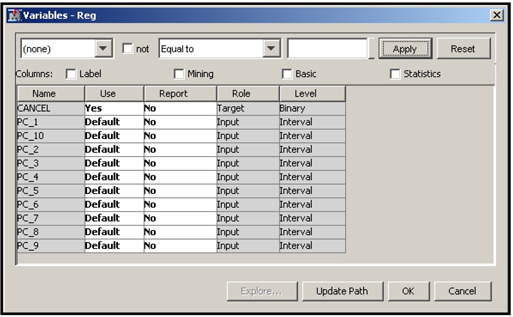

The next step is to connect a Regression node to the Merge node, then click Update Path. The variables exported to the Regression node are shown in Display 2.68.

Display 2.68

Display 2.68 shows that the transformed variables created by the Optimal Binning method are nominal and those created by the second Transform Variables node are interval scaled. You can now run the Regression node and test all the transformations together and make a selection. Since we have not covered the Regression node, I have not run it here. But you can try.

2.7.7 Append Node

The Append node can be used to combine data sets created by different paths of a process flow in a SAS Enterprise Miner project. The way the Append node combines the data sets is similar to the way a SET statement in a SAS program stacks the data sets. This is different from the side-by-side combination that is done by the Merge node.

Display 2.69 shows an example in which the Append node is used.

Display 2.69

In Display 2.69 two data sources are used. The first data source is created by a data set called Data1, which contains data on Sales and Price in Region A at different points of time (months). The second data source is created from the data set Data2, which contains data on Sales and Price for Region B.

To illustrate the Append node, I have used two instances of the Transform Variables node. In both instances, the Transform Variables node makes a logarithmic transformation of the variables Sales and Price, creates data sets with transformed variables, and exports them to the Append node.

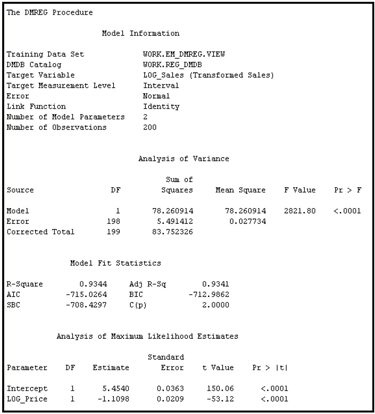

The output data sets produced by the two instances of the Transform Variables node are then combined by the Append node3 and passed to the Regression node, where you can estimate the price elasticity of sales using the combined data set and the following specification of the demand equation:

log_sales = α + βlog_ price or

Sales = A Priceβ

where A = eα

In an equation of this form, β measures the price elasticity of demand;

in this example it is -1.1098

The first data set (Data1) has 100 observations and four columns (variables)—Price, Sales, Month, and Region. The second data set (Data2) also has 100 observations with the four columns Price, Sales, Month, and Region. The combined data set contains 200 observations.

The first instance of Transform Variables node creates new variables log_sales and log_Price from Data1, stores the transformed variables in a new data set, and exports it to the Append node. The data set exported by the first instance of the Transform Variables node has 100 observations.

The second instance of the Transform Variables node performs the same transformations done by the first instance, creates a new data set with the transformed variables, and exports it to the Append node. The data set exported by the second instance also has 100 observations.

The Append node creates a new data set by stacking the two data sets generated by the two instances of the Transform Variables node. Because of stacking (as opposed to side-by-side merging) the new data set has 200 observations. The data set exported to the Regression node has four variables, as shown in Display 2.70, and 200 observations shown in Output 2.3.

Display 2.70

Output 2.3

Display 2.71 shows the property settings of the Append node for the example presented here.

Display 2.71

This type of appending is useful in pooling the price and sales data for two regions and estimating a common equation. Here my intention is only to demonstrate how the Append node can be used for pooling the data sets for estimating a pooled regression and not to suggest or recommend pooling. Whether you pool depends on statistical and business considerations.

2.8 Tools for Initial Data Exploration

In this section I introduce the StatExplore, MultiPlot, GraphExplore, Variable Clustering, Cluster and Variable Selection nodes, which are useful in predictive modeling projects.

I will use two example data sets in demonstrating the use of StatExplore, MultiPlot, and GraphExplore. The first data set shows the response of a sample of customers to solicitation by a hypothetical auto insurance company. The data set consists of an indicator of response to the solicitation and several input variables that measure various characteristics of the customers who were approached by the insurance company. Based on the results from this sample, the insurance company wants to predict the probability of response based on customer’s characteristics. Hence the target variable is the response indicator variable. It is a binary variable taking only two values, namely 0 and 1, where 1 represents response and 0 represents non-response. The actual development of predictive models is illustrated in detail in subsequent chapters, but here I provide an initial look into various tools of SAS Enterprise Miner that can be used for data exploration and discovery of important predictor variables in the data set.

The second data set used for illustration consists of month-to-month change in the savings balances of all customers (DBAL) of a bank, interest rate differential (Spread) between the interest rate offered by the bank and its competitors, and amount spent by the bank on advertisements to attract new customers and/or induce current customers to increase their savings balances.

The bank wants to predict the change in customer balances in response to change in the interest differential and the amount of advertising dollars spent. In this example, the target variable is change in the savings balances, which is a continuous variable.

Click the Explore tab located above the Diagram Workspace so that the data exploration tools appear on the tool bar. Drag the Stat Explore, MultiPlot, and Graph Explore nodes and connect them to the Input Data Source node, as shown in Display 2.72

Display 2.72

2.8.1 Stat Explore Node

Stat Explore Node: Binary Target (Response)

Select the Stat Explore node in the Diagram Workspace to see the properties of the StatExplore node in the Properties panel, shown in Display 2.73.

Display 2.73

If you set the Chi-Square property to Yes, a Chi-Square statistic is calculated and displayed for each variable. The Chi-Square statistic shows the strength of the relationship between the target variable (Response, in this example) and each categorical input variable. The appendix to this chapter shows how the Chi-Square statistic is computed.

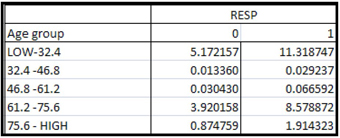

In order to calculate Chi-Square statistics for continuous variables such as age and income, you have to first create categorical variables from them. Derivation of categorical variables from continuous variables is done by partitioning the ranges of continuous scaled variables into intervals. These intervals constitute different categories or levels of the newly derived categorical variables. A Chi-Square statistic can then be calculated to measure the strength of association between the categorical variables derived from the continuous variables and the target variable. The process of deriving categorical variables from continuous variables is called binning. If you want the StatExplore node to calculate the Chi-Square statistic for interval scaled variables, you must set the Interval Variables property to Yes, and you must also specify the number of bins into which you want the interval variables to be partitioned. To do this, set the Number of Bins property to the desired number of bins. The default value of the Number of Bins property is 5. For example, the interval scaled variable AGE is grouped to five bins, which are 18–32.4, 32.4-46.8, 46.8-61.2, 61.2-75.6, and 75.6-90.

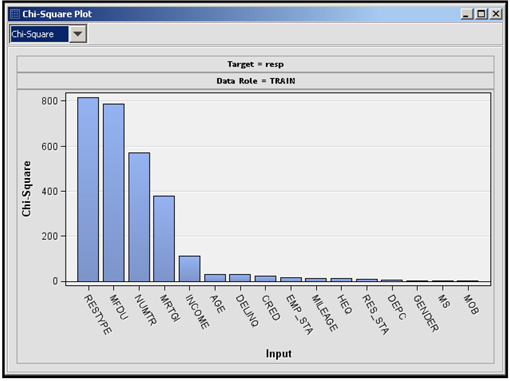

When you run the StatExplore node and open the Results window, you see a Chi-Square plot, Variable Worth plot and an Output window. The Chi-Square plot shows the Chi-Square value of each categorical variable and binned variable paired with the target variable, as shown in Display 2.74. The plot shows the strength of relationship of each categorical or binned variable with the target variable.

Display 2.74

The results window also displays, in a separate panel, the worth of each input. The worth is calculated from the p-value corresponding to the calculated Chi-Square test statistic. The p-value corresponding to the calculated chi-square statistic is calculated as

P(χ2 ≥ calculated chi - Square statistic) = p

Worth of the input is - 2log(p).

The Variable Worth plot is shown in Display 2.75.

Display 2.75

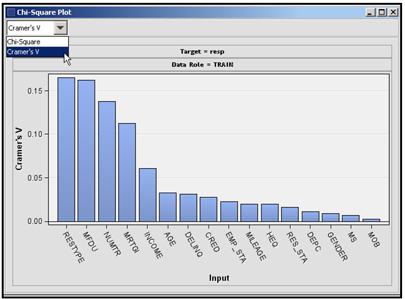

Both the Chi-Square plot and the Variable Worth plot show that the variable RESTYPE (the type of residence) is the most important variable since it has the highest Chi-Square value (Display 2.74) and also the highest worth (Display 2.75). Next in importance is MFDU (an indicator of multifamily dwelling unit). From the StatExplore node you can make a preliminary assessment of the importance of the variables.

An alternative measure of calculating the worth of an input, called impurity reduction, is discussed in the context of decision trees in Chapter 4. In that chapter, I discuss how impurity measures can be applied to calculate the worth of categorical inputs one at a time.

In addition to the Chi-Square statistic, you can display the Cramer’s V for categorical and binned interval inputs in the Chi-Square Plot window. Select Cramer’s V from the drop-down list, as shown in Display 2.76, in order to open the plot.

Display 2.76

You can get the formulae for Chi-Square statistic and Cramer’s V from the Help tab of SAS Enterprise Miner. The calculation of the Chi-Square statistic and Cramer’s V are illustrated step-by-step in the appendix to this chapter.

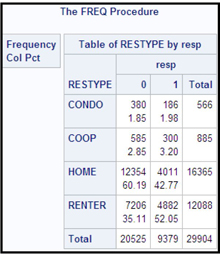

The Output window shows the mode of the input variable for each target class. For the input RESTYPE, the modal values are shown Output 2.4.

Output 2.4

This output arranges the modal values of the inputs by the target levels. In this example, the target has two levels: 0 and 1. The columns labeled Mode Percentage and Mode2 Percentage exhibit the first modal value and the second modal value, respectively. The first row of the output is labeled _OVERALL_. The _OVERALL_ row values for Mode and Mode Percent indicate that the most predominant category in the sample is homeowners, indicated by HOME. The second row indicates the modal values for non-responders. Similarly, you can read the modal values for the responders from the third row. The first modal value for the responders is RENTER, suggesting that the renters in general are more likely to respond than home owners in this marketing campaign. These numbers can be verified by running PROC FREQ from the Program Editor, as shown in Display 2.77.

Display 2.77

The results of PROC FREQ are shown in Output 2.5.

Output 2.5

StatExplore Node: Continuous/Interval scaled Target (DBAL: Change in Balances)

In order to demonstrate how you can use the StatExplore node with a continuous target, I have constructed a small data set for a hypothetical bank. As mentioned earlier, this data set consists of only three variables: (1) month-to-month change in the savings balances of all customers (DBAL), (2) interest rate differential (Spread) between the interest rate offered by the bank and its competitors, and (3) amount spent by the bank on advertisements (AdExp) to attract new customers and/or induce current customers to increase their savings balances. This small data set is used for illustration, although in practice you can use the StatExplore node to explore much larger data sets consisting of hundreds of variables.

Display 2.78 shows the process flow diagram for this example. The process flow diagram is identical to the one shown for a binary target, except that the input data source is different.

Display 2.78

The property settings for the StatExplore node for this example are the same as those shown in Display 2.73, with the following exceptions: the Interval Variables property (in the Chi-Square Statistics group) is set to No and the Correlations, Pearson Correlations and Spearman Correlations properties are all set to Yes.

After we run the Stat Explore node, we get the correlation plot, which shows the Pearson correlation between the target variable and the interval scaled inputs. This plot is shown in Display 2.79.

Display 2.79

Both the SPREAD and Advertisement Expenditure (AdExp) are positively correlated with Changes in Balances (DBAL), although the correlation between AdExp and DBAL is lower than the correlation between the spread and DBAL.

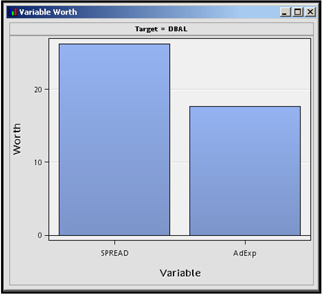

Display 2.80 shows a comparison of the worth of the two inputs advertisement expenditure and interest rate differential (spread).

Display 2.80

2.8.2 MultiPlot Node

MultiPlot Node: Binary Target (Response)

After you run the MultiPlot node, the results window shows plots of all inputs against the target variable. If an input is a categorical variable, then the plot shows the input categories (levels) on the horizontal axis and the percentage distribution of the target classes on the vertical axis, as shown in Display 2.81.

Display 2.81

The variable RESTYPE is a categorical variable, along with categories CONDO, COOP, HOME, and RENTER. The categories refer to the type of residence the customer lives. The plot above shows that the percentage of responders (indicated by 1) among the renters is higher than among the home owners.

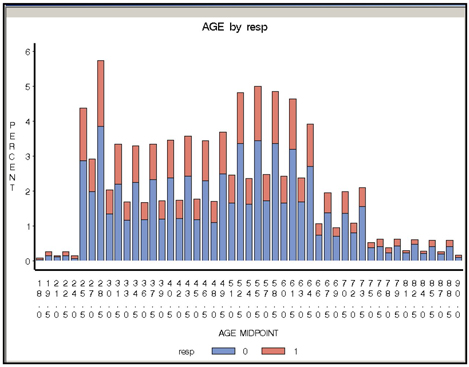

When the input is continuous, the distribution of responders and non-responders is given for different intervals of the input, as shown in Display 2.82. The midpoint of each interval is shown on the horizontal axis, and the distribution of target class (response and non-response) is shown on the vertical axis.

Display 2.82

MultiPlot Node: Continuous/Interval scaled Target (DBAL: Change in Balances)

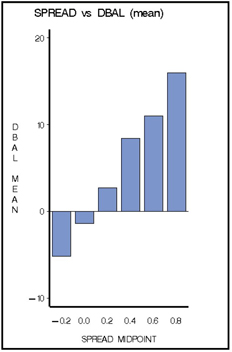

If you run the MultiPlot node in the Process Flow shown in Display 2.78 and open the Results window, you see a number of charts that show the relation between each input and the target variable. One such chart shows how the change in balances is related to interest rate differential (SPREAD), as shown in Display 2.83. The MultiPlot node shows the mean of target variable in different intervals of continuous inputs.

Display 2.83

In Display 2.83, the continuous variable SPREAD is divided into six intervals and the midpoint of each interval is shown on the horizontal axis. The mean of the target variable in each interval is shown along the vertical axis. As expected, the chart shows that the larger the spread, the higher the increase in balances is.

2.8.3 Graph Explore Node

Graph Explore Node: Binary Target (Response)

If you run the Graph Explore node and open the results window, you see a plot of the distribution of the target variable, as shown in Display 2.84. Right-click in the plot area, and select Data Options.

Display 2.84

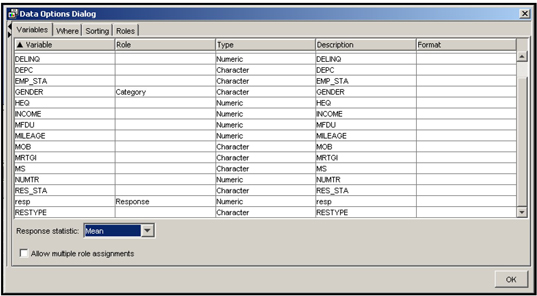

In the Data Options dialog box, shown in the Display 2.85, select the variables you want to plot and their roles.

Display 2.85

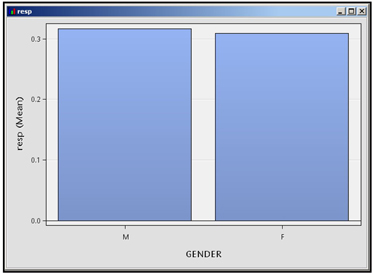

I assigned the role category to GENDER and the role Response to the target variable resp. I selected the response statistic to be the Mean and clicked OK. The result is shown in Display 2.86, which shows the Gender category on the horizontal axis and the mean of the response variable for each gender category on the vertical axis. The response rate for males is slightly higher than that for females.

Display 2.86

If you explore the Graph Explore node further, you will find there are many types of charts available.

Graph Explore Node: Continuous/Interval Scaled Target (DBAL: Change in Balances)

Run the Graph Explore node and open the results window. Click View on the menu bar, and select Plot. The Select a Chart Type dialog box opens, as shown in Display 2.87.

Display 2.87

I selected the Scatter chart and clicked Next. Then I selected the roles of the variables SPREAD and DBAL, designating SPREAD to be the X variable and DBAL to be the Y variable, as shown in Display 2.88.

Display 2.88

I clicked Next twice. In the Chart Titles dialog box, I filled in the text boxes with Title, and X and Y axis labels as shown in Display 2.89 and clicked Next.

Display 2.89

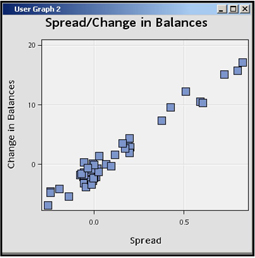

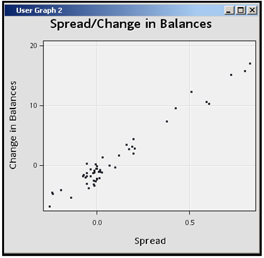

In the last dialog box, I clicked Finish, which resulted in the plot shown in Display 2.90.

Display 2.90

To change the marker size, right-click in the chart area and select Graph Properties. The properties window opens, shown in Display 2.91.

Display 2.91

Clear the Autosize Markers check box, and slide the scale to the left until you see that the Size is set to 3. Click Apply and OK. These settings result in a scatter chart with smaller markers, as shown in Display 2.92.

Display 2.92

The scatter chart shows that there is direct relation between SPREAD and Change in Balances.

2.8.4 Variable Clustering Node

The Variable Cluster node divides the inputs (variables) in a predictive modeling data set into disjoint clusters or groups. Disjoint means that if an input is included in one cluster, it does not appear in any other cluster. The inputs included in a cluster are strongly inter-correlated, and the inputs included in any one cluster are not strongly correlated with the inputs in any other cluster.

If you estimate a predictive model by including only one variable from each cluster or a linear combination of all the variables in that cluster, you not only reduce the severity of collinearity to a great extent, you also have fewer variables to deal with in developing the predictive model.

In order to learn how to use the Variable Clustering node and interpret the results correctly, you must understand how the Variable Clustering node clusters (groups) variables.

The Variable Clustering algorithm is both divisive and iterative. The algorithm starts with all variables in one single cluster and successively divides it into smaller and smaller clusters. The splitting is binary in the sense that at each point in the process, a cluster is split into two sub-clusters or child clusters, provided certain criteria are met. The process of splitting can be described as follows:4

1. Initially there is a single cluster with all variables in the data set included in it.

2. Eigenvalues are extracted from the correlation (or covariance) matrix of the variables included in the cluster. The largest eigenvalue and the next largest eigenvalue are used for calculating the eigenvectors and creating cluster components. If you arrange the eigenvalues in descending order of magnitude, then the first eigenvalue is the largest and the second eigenvalue is the next largest.

3. Eigenvectors corresponding to the first two (the largest and the next largest) eigenvalues are calculated. We can call them first and second eigenvectors.

4. Perform an oblique rotation on the eigenvectors and calculate “rotated” components.

5. Cluster components (principal components), which are linear combinations of all the variables included in the cluster, are calculated from the first and second eigenvectors. A linear combination can be thought of as a weighted sum of the variables with the elements of a rotated eigenvector as weights. Two cluster components are created – one corresponding to the first eigenvalue and the other corresponding to the second eigenvalue. We can call these first and second cluster components or first and second principal components since they correspond to the first and second eigenvalues as described in 2 above.

6. If the criterion for splitting is met (as described in 8 below), then the cluster is split into two child clusters by assigning each variable to the cluster component with which it has the highest squared multiple correlation.

7. Reassign variables iteratively until the explained variance is maximized

8. Steps 6 and 7 are performed only if one of the following conditions occur: the second eigenvalue is larger than the threshold value specified by the Maximum Eigenvalue property (the default threshold value is 1), the variance explained by the first principal component (cluster component) is below a specified threshold value specified by the Variation Proportion property, or the number of clusters is smaller than the value to which the Maximum Clusters property is set.

After the initial cluster is split into two child-clusters as described above, the algorithm selects one of the child-clusters for further splitting. The selected cluster has either the smallest percentage of variation explained by its first cluster component or the largest eigenvalue associated with the second cluster component.

9. The selected cluster is split into two child-clusters in the same way as described in 2-7.

10. At any point in the splitting process, there may be more than two candidate clusters that can be considered for splitting. The cluster that is selected from this set has either the smallest percentage of variation explained by its first cluster component or the largest eigenvalue associated with the second cluster component.

11. Steps 2 – 7 are repeated until no cluster is eligible for further splitting.

When all the clusters meet the stopping criterion, the splitting stops.

The stopping criterion is met when at least one of the following is true: (a) The second eigenvalue in all the clusters is smaller than the threshold value specified by the Maximum Eigenvalue property; (b) The proportion of variance explained by the first principal component in all the clusters is above the threshold value specified by the Variation Proportion property; (c) the number of clusters is equal to the value set for the Maximum Clusters property.

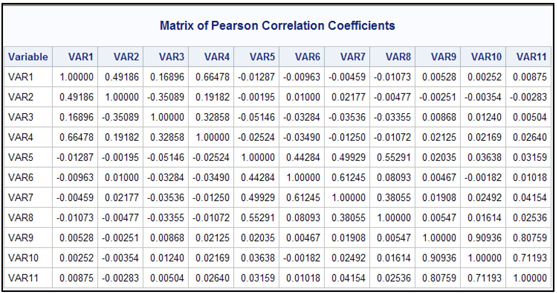

To demonstrate the Variable Cluster node, I have created a small data set with 11 variables. Display 2.93 shows the correlation matrix of variables.

Display 2.93

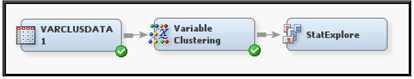

Display 2.94 shows the process flow diagram for clustering the variables in the data set.

Display 2.94

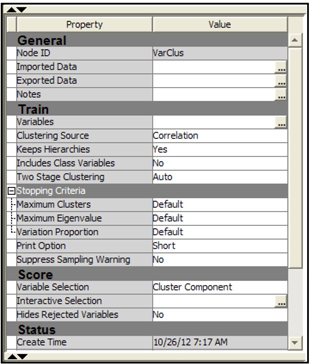

The properties of the Variable Clustering node are shown in Display 2.95.

Display 2.95

In this example, I set the Clustering Source property to Correlation. I have chosen to use the correlation matrix as the source for clustering, so the eigenvalues are calculated from the correlation matrix. From each eigenvalue, the algorithm calculates an eigenvector. The method of calculating eigenvalues and corresponding eigenvectors can be found in any linear algebra textbook or any multivariate statistics book such as “Applied Multivariate Statistical Analysis” by Richard A. Johnson and Dean W. Wichern.

In principle, if the number of variables in a cluster is k, you can extract k eigenvalues for that cluster of variables. But if the variables in the cluster are closely related to each other, you may find only a few eigenvalues larger than 1, and it may be more useful to group the closely related variables together in sub-clusters.

In our example, at the very beginning of the splitting process k = 11 since the very first cluster includes all the variables in the data set. But as the splitting process continues, the number of variables (k) in each resulting cluster will be less than 11.

bLet the largest eigenvalue of the correlation matrix be represented by λ1 and the next largest eigenvalue by λ2. A cluster is not split if

Calculation of Cluster Components

The eigenvalues of the correlation (or covariance) matrix and the corresponding eigenvectors are needed to calculate the cluster components. From the first eigenvalue

The algorithm then calculates two new variables called cluster components from the original variables using the elements of

the second cluster component is:

The cluster membership of a variable is unique if its weight in one cluster component is zero while its weight in the other cluster component is nonzero. Although the rotation takes us in this direction, it does not always result in a clear-cut assignment of variables to the clusters. In other words, it does not result in a variable having zero weight in one component and a nonzero weight in another component.

Assignment of variables to clusters

In order to achieve this uniqueness of membership (or non-overlapping of clusters), the algorithm compares the squared multiple correlations of each variable in the cluster with the two components

The algorithm goes further by iteratively re-assigning the variables to different clusters in order to maximize the variance accounted for by the cluster components. You can request that the hierarchical structure developed earlier in the process not be destroyed during this iterative re-assignment process by setting the KeepHierarchies property to Yes (see Diagram 2.96).

After the iteration process is completed, the cluster components are recomputed for each cluster, and they are exported to the next node.

Proportion of variance explained by a cluster component

One of the criterion used in determining whether to split a cluster is based on the proportion of variance explained by the first cluster component (or first principal component) of the cluster. This proportion of variance explained by the first cluster component is the ratio:

To get a general idea of how to calculate the proportion of variance explained by a principal component in terms of the eigenvalues, refer to “Applied Multivariate Statistical Analysis” by Richard A. Johnson and Dean W. Wichern. The exact calculation of the proportion of variance explained by the first cluster component by the Variable Clustering node in SAS Enterprise Miner may be different from the calculations shown in the book cited above.

Variable clustering using the example data set

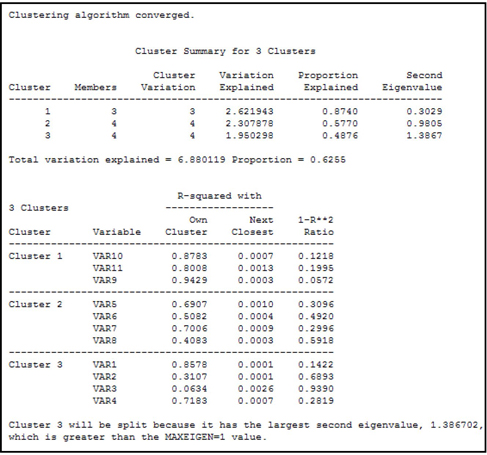

I ran the Variable Clustering node shown in Display 2.94. The output in the Results window is reproduced in output 2.6.

Output 2.6

Output 2.6 (cont’d)

Output 2.6 (cont’d)

Output 2.6 (cont’d)

As a way of reducing the number of inputs (dimension reduction), you can select one representative variable from each cluster. A representative variable of a cluster can be defined as the one with the lowest 1-R**2 Ratio. This ratio is calculated as:

An alternative way of achieving dimension reduction is to replace the original variables with cluster components.

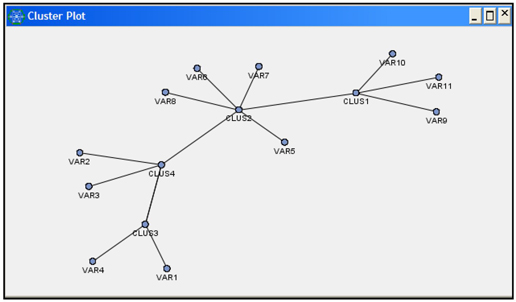

Display 2.96 shows the cluster plot.

Display 2.96

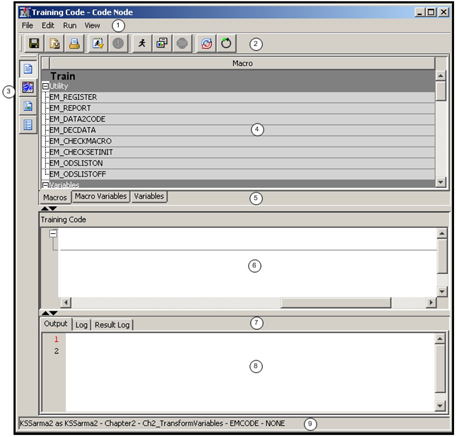

The variables exported to the next node are shown in Display 2.97.

Display 2.97

The 11 original variables and the 4 new variables (Clus1, Clus2, Clus3, and Clus4) are exported to the next node. You could develop a predictive model using only the four newly constructed variables Clus1, Clus2, Clus3, and Clus4 instead of the original 11 variables. Thus, the Variable Clustering node helps in reducing the inputs and reducing the danger of collinearity.

An alternative to using the constructed variables Clus1, Clus2, Clus3, and Clus4 is to select one input variable from each of the four clusters presented in Output 2.6. The best input variable to select from each cluster can be determined on the basis of the correlation of the input to the cluster component or its correlation with the target variable.

Note that I have not included a target variable in the example data set I used to demonstrate the Variable Clustering node. If you include another variable with the role of TARGET, then the Variable Clustering node excludes it from creating clusters. This becomes clear in Chapter 3 where I demonstrate how the Variable Clustering node is used for variable selection.

2.8.5 Cluster Node

The Cluster node can be used to create clusters of customers (observations) with similar characteristics. An examination of these clusters enables you to discover patterns in your data and also helps identify the inputs and input combinations that are potentially good predictors of the target variable. Cluster analysis is an unsupervised learning technique in the sense that it creates the clusters from the input variables alone, without reference to any target variable. Cluster analysis by itself does not tell you how the inputs are related to the target. Additional analysis is needed to find out the relationship of the clusters to the target variable. This sort of clustering can be used, for example, to segment your customers, without reference to any particular target variable, just to see if your customers (observations) naturally fall into different groups or clusters. Customers who end up in the same cluster are similar in some way, while customers in different clusters are relatively dissimilar.

In order to demonstrate the properties and the results of the Cluster node, I have used a data set of 5,000 credit card customers of a hypothetical bank. These customers were observed during an interval of 6 months, which is referred to as the performance window. During this performance window, some of the customers cancelled their credit cards and some retained them. I created an indicator variable, called Cancel, which takes the value 1 if the customer cancelled his credit card during the performance window and 0 if he did not. In addition, data was appended to each customer’s record showing his demographic characteristics and other information relating to the customer’s behavior prior to the time interval specified by the performance window. Some examples of customer behavior are: the number of credit card delinquencies, the number of purchases, and the amount of his/her credit card balance – all for the period prior to the performance window.

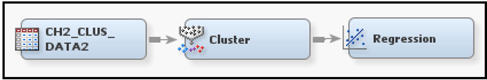

We use only the input variables to create the clusters, and we pass the cluster labels and cluster numbers created by the Cluster node to other nodes such as the Regression node for further analysis. The cluster labels or numbers can be used as nominal inputs in the Regression node. Display 2.98 shows the process flow for demonstrating the Cluster node.

Display 2.98

A Regression node is attached to the Cluster node to show how the variables created by the Cluster node are passed to the next node. Instead of the Regression node, you could use the Stat Explore or Graph Explore node to compare the profiles of the clusters.

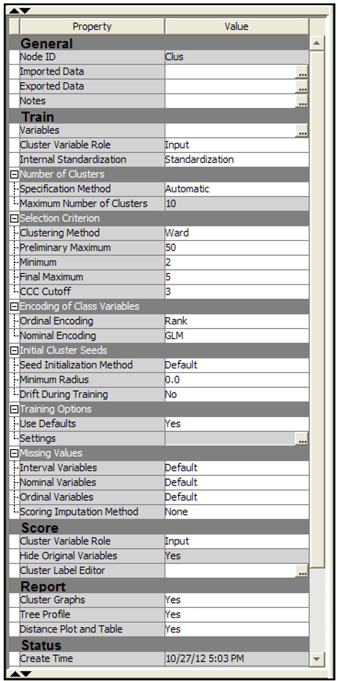

Display 2.99 shows the Properties window for the Cluster node.

Display 2.99

In the Train group I set the Cluster Variable Role to Input. After the cluster node creates the clusters, it creates a nominal variable called _Segment_, which takes the value 1, 2, 3, etc., showing the cluster to which an observation belongs. When you set the value of the Cluster Variable Role property to Input, the variable _segment_ is assigned the role of Input and passed to the next node. Another nominal variable called _Segment _Label_ takes the values Cluster1, Cluster2, Cluster3, etc., showing the label of the cluster. The variable _Segment_ can be used as a nominal input in the next node. Alternatively, you can set the value of the Cluster Variable Role property to Segment resulting in a segment variable with the model role of Segment. This can be used for by-group processing in the next node.

The settings in the Number of Clusters group and in the Selection Criterion cause the Cluster node to perform clustering in two steps. In the first step, it creates a number of clusters not exceeding 50 (as specified by the Preliminary Maximum property), and in the second step it reduces the number of clusters by combining clusters, subject to two conditions specified in the properties: (1) The number of clusters must be greater than 2 (as specified by the value of the Minimum property), and (2) the value of the Cubic Clustering Criterion (CCC) statistic is greater than or equal to the Cutoff, which is 3. The Ward method is used to determine the final maximum number of clusters.

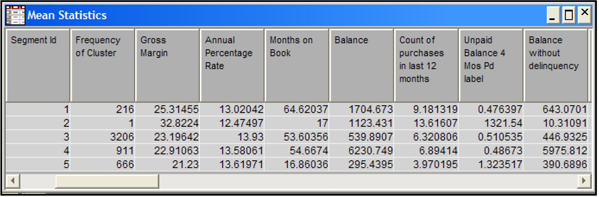

I then ran the Cluster node and opened the results window. A partial list of the Mean Statistics by cluster is shown in Display 2.100

Display 2.100

Display 2.100 shows how the mean of the inputs differs from cluster to cluster. Cluster 2 (segment id =2) has only one observation. SAS Enterprise Miner created a separate cluster for this observation because it has some outliers in the inputs. The customer in cluster 2 generates the highest average gross margin (32.8) for the company. (Gross margin is revenue less cost of service.) The customer in cluster 2 differs from customers in other clusters in other ways: although she makes 13.6 purchases per year with an average daily balance of $1123.43, this customer has an unpaid balance of $1321.54 with four months past due, and an average balance of 10.31 without delinquency. While cluster 1 ranks highest in average gross margin (a measure of customer profitability), Clusters 3 ranks highest in total profits (gross margins) earned by the company since it contains the largest number of customers (3206). Cluster analysis can often give important insights into customer behavior as it did in this example.

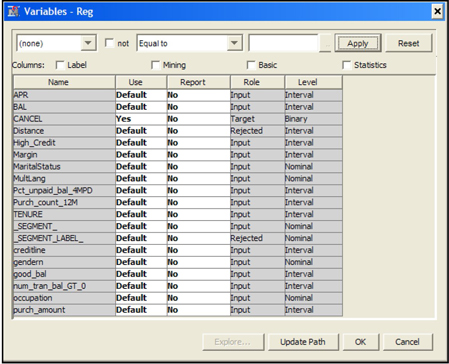

To illustrate how the output of the Cluster node is exported to the subsequent nodes in the process flow, I attached a Regression node to the Cluster node. The variables passed to the Regression node are shown in Display 2.101.

Display 2.101

Since I set the value of the Cluster Variable Role property to Input, the variable _SEGMENT_ is assigned the role of Input by the Cluster node and is passed on to the Regression node as a nominal variable.

You can use this nominal variable as an input in the regression. Alternatively, if I had selected Segment as the value for the Cluster Variable Role property, then the Cluster node would have assigned the role of Segment to the variable _SEGMENT_, in which case it could be used for by-group processing.

2.8.6 Variable Selection Node

The Variable Selection node can be used for variable selection for predictive modeling. There are number of alternative methods with various options for selecting variables. The methods of variable selection depend on the measurement scales of inputs and the targets.

These options are discussed in detail in Chapter 3. In this chapter, I present a brief review of the techniques used by the Variable Selection node for different types of targets, and I show how to set the properties of the Variable Selection node for choosing an appropriate technique.

There are two basic techniques used by the Variable Selection node. They are the R-Square selection method and the Chi-Square selection method. Both these techniques select variables based on the strength of their relationship with the target variable. For interval targets, only the R-Square selection method is available. For binary targets both the R-Square and Chi-Square selection methods are available.

2.8.6.1 R-Square Selection Method

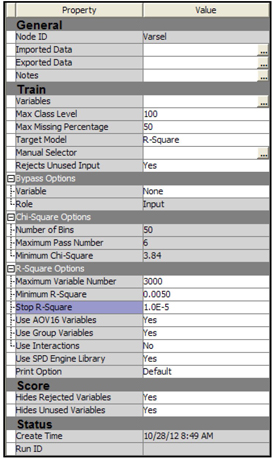

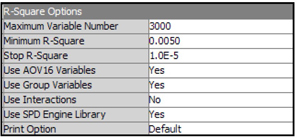

To use the R-Square selection method, you must set the Target Model property of the Variable Selection node to R-Square, as shown in the Display 2.102.

Display 2.102

When the R-Square selection method is used, the variable selection is done in two steps. In Step 1, the Variable Selection node computes an R-Square value (the squared correlation coefficient) with the target for each variable, and then assigns the Rejected role to those variables that have a value less than the minimum R-square. The default minimum R-square cut-off is set to 0.005. You can change the value of the minimum R-square by setting the Minimum R-Square property to a value other than the default.

The R-square value (or the squared correlation coefficient) is the proportion of variation in the target variable explained by a single input variable, ignoring the effect of other input variables.

In Step 2, the Variable Selection node performs a forward stepwise regression to evaluate the variables chosen in the first step. Those variables that have a stepwise R-square improvement less than the cut-off criterion have the role of rejected. The default cut-off for R-square improvement is set to 0.0005. You can change this value by setting the Stop R-Square property to a different value.

For interval variables, R-square is calculated directly by means of a linear regression of the target variable on the interval variable, assessing only the linear relation between the interval variable and the target.

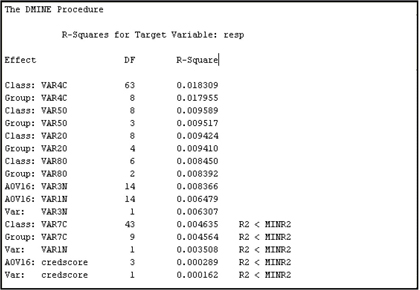

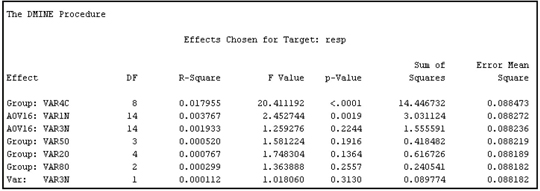

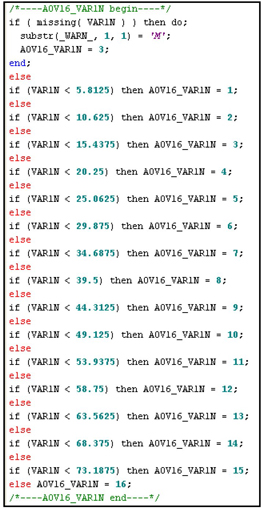

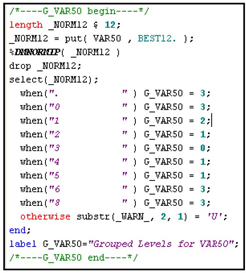

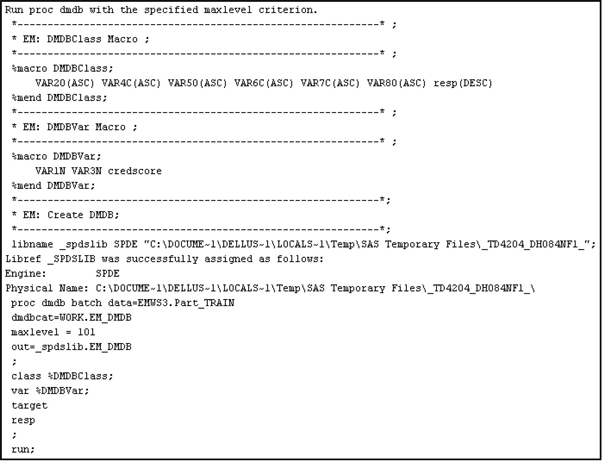

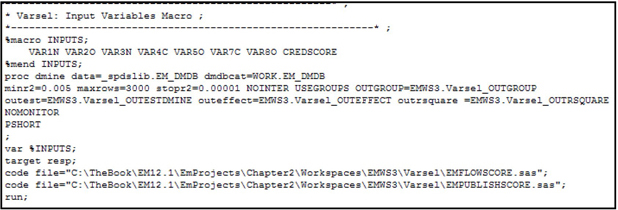

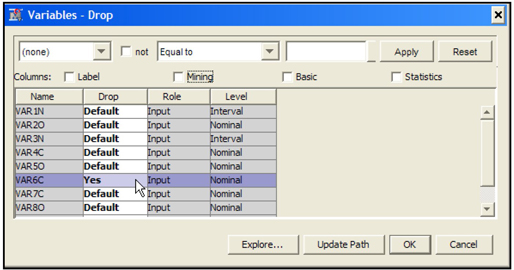

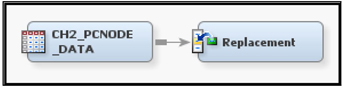

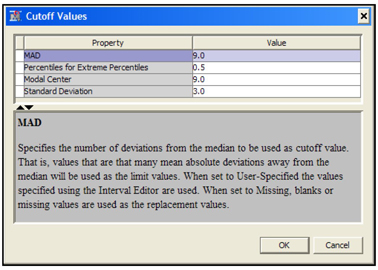

To detect non-linear relations, the Variable Selection node creates binned variables from each interval variable. The binned variables are called AOV16 variables. Each AOV16 variable has a maximum of 16 intervals of equal width. The AOV16 variable is treated as a class variable. A one-way analysis of variance is performed to calculate the R-Square between an AOV16 variable and the target. These AOV16 variables are included in Step 1 and Step 2 of the selection process.