Chapter 9

Vulnerability Management

Information in this chapter:

• PCI DSS Requirements Covered

• Vulnerability Management in PCI

• Internal Vulnerability Scanning

Before we discuss Payment Card Industry (PCI) requirements related to vulnerability management in depth, and find out what technical and nontechnical safeguards are prescribed there and how to address them, we need to deal with one underlying and confusing issue: defining some of the terms that the PCI Data Security Standard (DSS) documentation relies upon.

These are as follows:

Defining vulnerability assessment is a little tricky, since the term has evolved over the years. The authors prefer to define it as a process of finding and assessing vulnerabilities on a set of systems or a network, which is a very broad definition. The term vulnerability, in turn, is typically used to mean a software flaw or a weakness that makes the software susceptible to an attack or abuse. In the realm of information security, a vulnerability assessment is usually understood to be a vulnerability scan of the network with a scanner, implemented as installable software, a dedicated hardware appliance, or a scanning software-as-a-service (SaaS). Sometimes using the term network vulnerability assessment adds more clarity to this. The terms network vulnerability scanning and network vulnerability testing are usually understood to mean the same. In addition, the separate term application vulnerability assessment is typically understood to mean an assessment of application-level vulnerabilities in a particular application, most frequently a Web-based application deployed publicly on the Internet or internally on the intranet. A separate tool called an application vulnerability scanner (as opposed to the network vulnerability scanner mentioned above) is used to perform an application security assessment. Concepts such as “port scan,” “protocol scan,” and “network service identification” belong to the domain of network vulnerability scanning, whereas concepts such as “site crawl,” “Hypertext Transfer Protocol (HTTP) requests,” “cross-site scripting,” and “ActiveX” belong to the domain of Web application scanning. We will cover both types in this chapter, as they are mandated by the PCI DSS, albeit in different requirements (6 and 11).

Penetration testing is usually understood to mean an attempt to break into the network by a dedicated team, which can use the network and application scanning tools mentioned above as well as nontechnical means such as dumpster diving (i.e., looking for confidential information in the trash) or social engineering (i.e., attempting to subvert authorized information technology [IT] users into giving out their access credentials and other confidential information). Sometimes, penetration testers might rely on other techniques and methods such as custom-written attack tools.

Testing of controls, mentioned in Requirement 11.1, does not have a simple definition. Sometimes referred to as a “site assessment,” such testing implies either an in-depth assessment of security practices and controls by a team of outside experts or a self-assessment by a company’s own staff. Such controls assessment will likely not include attempts to break into the network.

Preventing vulnerabilities, covered in Requirement 6, addresses vulnerability management by assuring that newly created software does not contain known flaws and problems. Requirements 5, 6, and 11 also mandate various protection technologies such as antivirus, Web firewalls, intrusion detection or prevention, and others.

The core of Requirement 11 discussed in this chapter covers all the above and some of the practices that help mitigate the impact of problems, such as the use of intrusion prevention tools. Such practices fall into broad domains of vulnerability management and threat management. Although there are common definitions of vulnerability management (covered below), threat management is typically defined ad hoc as “dealing with threats to information assets.”

PCI DSS Requirements Covered

Vulnerability management controls are present in PCI DSS Requirements 5, 6, and 11.

• PCI Requirement 5 “Use and regularly update antivirus software or programs” covers anti-malware measures (albeit largely signature-based, and therefore weaker, ones); these are only tangentially related to what is commonly seen as vulnerability management, but they help deal with the impact of vulnerabilities.

• PCI Requirement 6 “Develop and maintain secure systems and applications” covers a broad range of application security subjects, including application vulnerability scanning and secure software development.

• PCI Requirement 11 “Regularly test security systems and processes” covers a broad range of security testing, including network vulnerability scanning by approved scanning vendors (ASVs), internal scanning, and other requirements. We will focus on Requirements 11.2 and 11.3 in this chapter.

Vulnerability Management in PCI

Before we start our discussion of the role of vulnerability management for PCI compliance, we need to briefly discuss what is commonly covered under vulnerability management in the domain of information security. It appears that some industry pundits have proclaimed that vulnerability management is simple: just patch all those pesky software problems and you are done. Others struggle with it because the scope of platforms and applications to patch and other weaknesses to rectify is out of control in most large, diverse organizations. The problems move from intractable to downright scary when you consider all the Web applications being developed in the world of HTML 5 and cloud computing, including all the in-house development projects, outsourced development efforts, partner development, etc. Such applications may never get that much needed patch from the vendor because you are simultaneously a user and a vendor; a code change by your own engineers might be the only way to solve the issue.

Thus, vulnerability management is not the same as just keeping your systems patched; it expands into software security and application security, secure development practices, and other adjacent domains. If you are busy every second Tuesday of the month, when Microsoft releases its batch of patches, but are not doing anything to eliminate a broad range of application vulnerabilities during the other 29 days in a month, you are not managing your vulnerabilities efficiently, if at all. Vulnerability management was a mix of technical and nontechnical processes even in the time when patching was most of what organizations needed to do to stay secure. Nowadays, it touches an even bigger part of your organization: not only the network group, system group, and desktop group, but also your developers and development partners, and possibly even individual businesses deploying their own, possibly “in the cloud,” applications (it is not unheard of for such applications to handle or contain payment card data).

Clearly, vulnerability management is not only about technology and “patching the holes.” As everybody in the security industry knows, technology for discovering vulnerabilities is getting better every day. Moreover, the same technology is also used to detect configuration errors and non-vulnerability-related security issues. For instance, a fully patched system is still highly vulnerable if it has a blank administrator or root password, even though no patch is missing. The other benefit derived from vulnerability management is the detection of “rogue” hosts, which are sometimes deployed by business units and sit outside the control of your IT department, and thus might not be deployed in adherence with PCI DSS requirements. One of the basic tenets of adhering to the PCI standard is to limit the scope of PCI by strictly segmenting and controlling the cardholder data environment (CDE). Proper implementation of internal and external vulnerability scanning can assist in maintaining a pristine CDE.
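The rogue-host detection mentioned above boils down to comparing what a scan discovers against what the IT department believes it owns. The following is a minimal sketch of that comparison; the function name, the addresses, and the idea that the inventory is a simple collection of IP addresses are all assumptions made for illustration, not features of any particular scanner.

```python
def find_rogue_hosts(discovered, inventory):
    """Return hosts seen on the network that are absent from the asset inventory."""
    return sorted(set(discovered) - set(inventory))


# Hypothetical data: the approved CDE inventory and the hosts a scan found.
inventory = {"10.0.1.10", "10.0.1.11", "10.0.1.12"}
discovered = ["10.0.1.10", "10.0.1.11", "10.0.1.12", "10.0.1.77"]

for host in find_rogue_hosts(discovered, inventory):
    print("Not in inventory, investigate:", host)
```

Any host reported this way is a candidate for investigation rather than proof of a violation; it may simply be missing from an out-of-date inventory.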

As a result, it’s useful to define vulnerability management as managing the lifecycle of processes and technologies needed to discover and then reduce (or, ideally, remove) the vulnerabilities in software required for the business to operate, and thus, bring the risk to business information to the acceptable threshold.

Network vulnerability scanners can detect vulnerabilities from the network side with good accuracy and from the host side with better accuracy. Host-side detection is typically accomplished via internal scans by using credentials to log into systems (the so-called “authenticated” or “trusted” scanning), so configuration files, registry entries, file versions, etc. can be read, thus increasing the accuracy of results. Such scanning is performed only from inside the network, not from the Internet.

Note

Sometimes vulnerability scanning tools are capable of running trusted or authenticated scanning, where the tools will actually log into a system, just like a regular user would, and then perform the search for vulnerabilities. If you successfully run a trusted or authenticated scan from the Internet and discover configuration issues and vulnerabilities, you have a serious issue because no one should be able to log into hosts, whether servers or network devices, directly from the Internet side. Believe it or not, this has happened in real production environments subject to PCI DSS! Also, PCI ASV scanning procedures prohibit authenticated scanning when performing ASV scan validation. Authenticated or trusted scans are extremely useful for PCI DSS compliance and security, but they should always be performed from inside the network perimeter.

However, many organizations that implement periodic vulnerability scanning have discovered that the volumes of data generated far exceed their expectations and abilities. A quick scan-then-fix approach turns into an endless wheel of pain, which is obviously more dramatic for PCI DSS external scanning because you have no choice about fixing a vulnerability whose presence leads to validation failure (we will review the exact criteria for failure below). Many free and low-cost commercial vulnerability scanners suffer from this more than their more advanced brethren, thus exacerbating the problem for price-sensitive organizations such as smaller merchants. Using vulnerability scanners presents other challenges, including having network visibility of the critical systems, perceived or real impact on the network bandwidth, as well as system stability. Overall, vulnerability management involves more process than technology, and your follow-up actions should be based on the overall risk and not simply on the volume of incoming scanner data.

Stages of Vulnerability Management Process

Let’s outline some critical stages of the vulnerability management process. Those include defining the policy, collecting the data, deciding what to remediate or shield and then taking action.

Policy Definition

Indeed, the vulnerability management process starts from the policy definition that covers your organization’s assets, such as systems and applications and their users, as well as partners, customers, and whoever touches those resources. Such documents and the accompanying detailed security procedures define the scope of the vulnerability management effort and create a “known good” state of those IT resources. Policy creation should involve business and technology teams, as well as senior management who would be responsible for overall compliance. PCI DSS requirements directly affect such policy documents and mandate their creation (see Requirement 12, which states that one needs to “maintain a policy that addresses information security”). For example, marking the assets that are in scope for PCI compliance is also part of this step.

Data Acquisition

The data acquisition process comes next. A network vulnerability scanner or an agent-based host scanner is a common choice. Excellent freeware and commercial solutions are both available. In addition, several standards can help provide a consistent way to encode vulnerabilities, weaknesses, and organization-specific policy violations across the popular computing platforms: emerging standards for vulnerability information collection, such as OVAL (http://oval.mitre.org), and established standards for vulnerability naming, such as CVE (http://cve.mitre.org), and for vulnerability scoring, such as the Common Vulnerability Scoring System (CVSS, www.first.org/cvss). Moreover, an effort by the US National Institute of Standards and Technology called the Security Content Automation Protocol (SCAP) has combined the above standards into a joint standard bundle to enable more automation of vulnerability management. See http://scap.nist.gov for more details on SCAP.

Scanning for compliance purposes is somewhat different from scanning purely for remediation. Namely, the reports that a Qualified Security Assessor (QSA) will ask for to validate your PCI DSS compliance should show the list of systems that were scanned for all PCI-relevant vulnerabilities, as well as an indication that all systems test clean. Scanning tools also provide support for Requirements 1 and 2 (secure system configurations) and many other requirements described below. In other words, while “scanning for remediation” only requires a list of vulnerable systems with their vulnerabilities, “scanning for compliance” also calls for a list of systems found not to be vulnerable.
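To illustrate the difference between the two kinds of report, here is a small sketch that turns raw scan output into a compliance-style view: every in-scope system is accounted for as clean, vulnerable, or not scanned at all. The data layout (a mapping from host name to a list of finding identifiers) and all names are assumptions made for this example, not the output format of any real scanner.

```python
def compliance_coverage(in_scope, scan_results):
    """Classify every in-scope host as clean, vulnerable, or not scanned.

    scan_results maps host -> list of finding IDs; an empty list means
    the host was scanned and nothing was found (hypothetical layout).
    """
    report = {"clean": [], "vulnerable": [], "not_scanned": []}
    for host in sorted(in_scope):
        if host not in scan_results:
            report["not_scanned"].append(host)
        elif scan_results[host]:
            report["vulnerable"].append(host)
        else:
            report["clean"].append(host)
    return report


# Hypothetical in-scope asset list and scan output.
in_scope = {"pos-01", "pos-02", "db-01"}
scan_results = {"pos-01": [], "db-01": ["finding-17"]}

print(compliance_coverage(in_scope, scan_results))
```

A remediation-only report would contain just the "vulnerable" list; the "clean" and "not_scanned" lists are what make the result usable as compliance evidence.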

Prioritization

The next phase, prioritization, is a key phase in the entire process. It is highly likely that even with a well-defined specific scan policy (which is derived from PCI requirements, of course) and a quality vulnerability scanner, the amount of data on various vulnerabilities from a large organization will be enormous. Even looking only at the in-scope systems might lead to such a data deluge; it is not uncommon for an organization with a flat network to have thousands of systems in scope for PCI DSS. No organization is likely to “fix” all the problems, especially if their remediation is not mandated by explicit rules. Some kind of prioritization will occur. Various estimates indicate that even applying a periodic batch of Windows patches (“black” Tuesday) often takes longer than the period between patch releases (longer than 1 month). Accordingly, there is a chance that the organization will not finish the previous patching round before the next one rushes in. Ideally, prioritization should be based not only on PCI DSS but also on the organization’s view of and approach to information risk (per the note in Requirement 6.1). Also, even when working within PCI DSS scope, it makes sense to fix vulnerabilities with higher risk to card data first, even if this is not mandated by PCI DSS standards. To intelligently prioritize vulnerabilities for remediation, you need to take into account various factors about your own IT environment as well as the outside world.

These factors include the following:

• Specific regulatory requirement: fix all medium- and high-severity vulnerabilities as indicated by the scanning vendor; fix all vulnerabilities that can lead to Structured Query Language (SQL) injection, cross-site scripting attacks, etc.

• Vulnerability severity for the environment: fix all other vulnerabilities on publicly exposed and then on other in-scope systems.

• Related threat information and threat relevance: fix all vulnerabilities on the frequently attacked systems.

• Business value and role information about the target system: address vulnerabilities on high-value critical servers.
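One simple way to apply the four factors above is to sort findings by them in order. The sketch below does exactly that; treating the factors as a strict lexicographic ordering, the field names, and the 1-to-5 business value scale are all assumptions made for illustration, and a real program would tune the weighting to its own risk model.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    host: str
    title: str
    mandated: bool             # remediation explicitly required by regulation
    public_facing: bool        # system is publicly exposed
    frequently_attacked: bool  # threat data shows this system is targeted
    business_value: int        # hypothetical scale: 1 (low) to 5 (critical)


def priority_key(f):
    # False sorts before True, so each flag is negated to put "yes" first;
    # business value is negated so that higher values come first.
    return (not f.mandated, not f.public_facing,
            not f.frequently_attacked, -f.business_value)


def prioritize(findings):
    """Return findings ordered from most to least urgent."""
    return sorted(findings, key=priority_key)


# Hypothetical findings.
findings = [
    Finding("db01", "outdated library", False, False, False, 5),
    Finding("web01", "SQL injection", True, True, True, 3),
    Finding("web02", "verbose banner", False, True, False, 2),
]
for f in prioritize(findings):
    print(f.host, "-", f.title)
```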

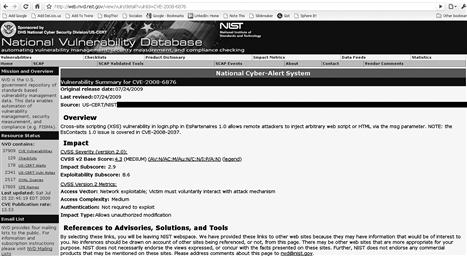

To formalize such prioritization, one can use the CVSS (www.first.org/CVSS), which takes into account various vulnerability properties such as priority, exploitability, and impact, as well as multiple local, site-specific properties. The CVSS scheme provides a uniform way of scoring vulnerabilities on a scale from 0 to 10. PCI DSS mandates the use of CVSS by ASVs; moreover, PCI validation scanning prescribes that, for external scans, all vulnerabilities with a score equal to or higher than 4.0 must be fixed to pass the scan. The National Vulnerability Database (NVD), located at http://nvd.nist.gov, provides CVSS scores for many publicly disclosed vulnerabilities (see Figure 9.1).

Figure 9.1 National Vulnerability Database

PCI DSS 2.0 also introduced the concept of a threshold for internal vulnerability scanning. Validation procedure 11.2.1.b states that a QSA must “review the scan reports and verify that the scan process includes rescans until passing results are obtained, or all ‘High’ vulnerabilities as defined in PCI DSS Requirement 6.2 are resolved” for internal scanning.
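The two thresholds described in this section can be expressed as two small checks. This is only a sketch: the 4.0 cutoff for external scans comes from the text above, while the representation of internal findings as organization-assigned ranking labels ("High", "Medium", "Low") is an assumption about how a Requirement 6.2 risk ranking might be recorded. The function names are hypothetical.

```python
ASV_FAIL_THRESHOLD = 4.0  # external scans: any CVSS score >= 4.0 fails


def external_scan_passes(cvss_scores):
    """True if no finding reaches the external-scan failure threshold."""
    return all(score < ASV_FAIL_THRESHOLD for score in cvss_scores)


def internal_scan_passes(rankings):
    """True if no finding carries the organization's 'High' ranking.

    rankings is an iterable of labels assigned under the organization's
    own risk-ranking process (assumed representation).
    """
    return all(label.lower() != "high" for label in rankings)


# Hypothetical scan results.
print(external_scan_passes([2.1, 3.9]))        # no score reaches 4.0
print(external_scan_passes([2.1, 4.0]))        # 4.0 meets the threshold
print(internal_scan_passes(["Low", "Medium"]))
```

In practice, the rescan loop ends only when the relevant check returns a passing result, so these functions would be evaluated after each remediation round.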

Mitigation

The next phase of mitigation is important in many environments where immediate patching or reconfiguration is impossible, such as a critical server running unusual or custom-built applications. Despite the above, in some cases, when a worm is released or a novel attack is seen in similar environments, protecting such a system becomes unavoidable. In this case, you immediately need to do something to mitigate the vulnerability temporarily. This step might be performed by a host or network intrusion prevention system (IPS); sometimes even a firewall blocking a network port will do. The important question here is choosing the best mitigation strategy that won’t create additional risk by blocking legitimate business transactions. In case of a web application vulnerability, a separate dedicated device such as a web application firewall needs to be deployed in addition to the traditional network security safeguards such as firewalls, filtering routers, IPSs, etc.

In this context, using antivirus and intrusion prevention technologies might be seen as part of vulnerability mitigation because these technologies help protect companies from vulnerability exploitation (either by malware or human attackers).

Ideally, all vulnerabilities that impact card data need to be fixed, that is, patched or remediated in some other way prescribed by the above prioritization procedure, taking into account the steps we took to temporarily mitigate the vulnerability. In a large environment, it is not simply the question of “let’s go patch the server.” Often, a complicated workflow with multiple approval points and regression testing on different systems is required.

To make sure that vulnerability management becomes a process, an organization should monitor vulnerability management on an ongoing basis. This involves looking at the implemented technical and process controls aimed at decreasing risk. Such monitoring goes beyond vulnerability management into other security management areas. It is also important to be able to report to senior management about your progress.

Vulnerability management is not a panacea even after all the “known” vulnerabilities are remediated. “Zero-day” attacks, which use vulnerabilities with no resolution publicly available, will still be able to cause damage. Such cases need to be addressed by using the principle of “defense in depth” during the security infrastructure design.

Now, we will walk through all the requirements in PCI DSS guidance that are related to vulnerability management. We should again note that vulnerability management guidance is spread across Requirements 5, 6, and 11.

Requirement 5 Walk-Through

While antivirus solutions have little to do with finding and fixing vulnerabilities, in PCI DSS they are covered under the broad umbrella definition of vulnerability management. One might be able to argue that antivirus solutions help when a vulnerability is present and is being exploited by malicious software such as a computer virus, worm, Trojan horse, or spyware. Thus, antivirus tools help mitigate the consequences of exploited vulnerabilities in some scenarios.

Requirement 5.1 mandates the organization to “use and regularly update antivirus software or programs.” Indeed, many antivirus vendors moved to daily (and some to hourly) updates of their virus definitions. Needless to say, virus protection software is next to useless without an up-to-date malware definition set.

PCI creators wisely chose to avoid the trap of saying “antivirus must be on all systems,” and instead chose to state that you need to “Deploy antivirus software on all systems commonly affected by malicious software (particularly personal computers and servers).” This ends up causing a ton of confusion, and in many cases, companies fight deploying antivirus, even when the operating system manufacturer recommends it (e.g., Apple’s OS X). A good rule of thumb is to deploy it on all Microsoft Windows machines and on any desktop machine whose users regularly access untrusted networks (like the Internet) and for which an anti-malware solution is available.

Subsection 5.1.1 states that one needs to “Ensure that all antivirus programs are capable of detecting, removing, and protecting against all known types of malicious software.” The standard’s authors spell out detection, protection, and removal for the various types of malicious software, knowing full well that such protection is highly desirable but not really achievable, given the current state of malware research. In fact, recent evidence suggests that an antivirus product’s ability to protect you from all the malware is less certain every day as more backdoors, Trojans, rootkits, and other forms of malware enter the scene. Security toolkits like Metasploit take further whacks at A/V effectiveness with tools like msfpayload and msfencode. Check your nearest search engine for details, as those go beyond the scope of this discussion.

Finally, Section 5.2 drives the point home: “ensure that all antivirus mechanisms are current, actively running, and generating audit logs.” This combines three different requirements, which are sometimes overlooked by organizations that deployed antivirus products. First, they need to be current—updated as frequently as their vendor is able to push updates. Daily, not weekly or monthly, is a standard now. Second, the running status of security tools needs to be monitored. Third, as mentioned in Chapter 10, “Logging Events and Monitoring the Cardholder Data Environment,” logs are critical for PCI compliance. This section reminds PCI implementers that antivirus tools also need to generate logs, and such logs need to be reviewed in accordance with Requirement 10. Expect your Assessor to ask for logs from your anti-malware solution to substantiate this requirement, including logs from the A/V console.
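The three conditions in Section 5.2 (current, actively running, generating audit logs) lend themselves to an automated check. The sketch below assumes that endpoint status has already been collected into a dictionary; the field names and the one-day freshness limit are assumptions chosen to match the daily-update guidance above, not the reporting format of any specific antivirus console.

```python
from datetime import datetime, timedelta


def av_compliance_gaps(status, now, max_signature_age=timedelta(days=1)):
    """Return a list of Section 5.2 gaps for one endpoint (empty if none).

    status is a hypothetical record with keys 'signatures_updated'
    (datetime), 'running' (bool), and 'logging_enabled' (bool).
    """
    gaps = []
    if now - status["signatures_updated"] > max_signature_age:
        gaps.append("signatures not current")
    if not status["running"]:
        gaps.append("agent not running")
    if not status["logging_enabled"]:
        gaps.append("audit logging disabled")
    return gaps


# Hypothetical endpoint record.
status = {
    "signatures_updated": datetime(2011, 5, 30, 12, 0),
    "running": True,
    "logging_enabled": False,
}
print(av_compliance_gaps(status, now=datetime(2011, 6, 2, 12, 0)))
```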

What to Do to Be Secure and Compliant?

Requirement 5 offers simple and obvious action items:

1. Deploy antivirus software on in-scope systems, wherever such software is available and wherever the system can suffer from malware. Free antivirus products can be downloaded from several vendors such as AVG (go to http://free.avg.com to get the software) or Avast (go to www.avast.com to get it).

2. Configure the obtained anti-malware software to update at least daily. Please forget the security advice from the 1990s when weekly updates were seen as sufficient. Daily is the minimum acceptable update frequency today—but be prepared that in many cases it will be way too late as malware will already be on your systems. This will deal with Requirement 5.1.1—but might not provide enough security for your organization.

3. Verify that your antivirus software is generating audit logs. This will take care of Requirement 5.2. Please refer to Chapter 10, “Logging Events and Monitoring the Cardholder Data Environment,” to learn how to deal with all the logs, including antivirus logs.

Note

For example, Symantec AntiVirus will log all detections by default; there is no need to “enable logging.” To preserve the logs, please make sure that the setting shown in Figure 9.2 allows your centralized log collection system to get the logs before they are deleted.

Figure 9.2 Antivirus Log Setting

Requirement 6 Walk-Through

Another requirement of PCI covered under the vulnerability management umbrella is Requirement 6, which covers the need to “develop and maintain secure systems and applications.” Thus, it touches vulnerability management from another side: making sure that those pesky flaws and holes never appear in software in the first place. At the same time, this requirement covers the need to plan and execute a patch-management program to assure that, once discovered, the flaws are corrected via software vendor patches or other means. In addition, it deals with a requirement to scan applications, especially Web applications, for vulnerabilities.

Thus, one finds three types of requirements in Requirement 6: those that help you patch the holes in commercial applications, those that help you prevent holes in the in-house developed applications, and those that deal with verifying the security of Web applications (Requirement 6.6). Requirement 6.1 states that an organization must “Ensure that all system components and software are protected from known vulnerabilities by having the latest vendor-supplied security patches installed. Install critical security patches within 1 month of release” [2]. Then, it covers the prescribed way of dealing with vulnerabilities in custom, homegrown applications: careful application of secure coding techniques, and incorporating them into a standard software-development lifecycle. Specifically, the document says “for in-house developed applications, numerous vulnerabilities can be avoided by using standard system-development processes and secure coding techniques.” Finally, it addresses the need to test the security of publicly exposed Web applications by mandating that “for public-facing Web applications, address new threats, and vulnerabilities on an ongoing basis.”

Apart from requiring that organizations “ensure that all system components and software have the latest vendor-supplied security patches installed,” Requirement 6.1 attempts to settle a long-running debate in the security industry between the need for prompt patching in case of an imminent threat and the need for careful patch testing. The standard takes the simplistic approach of saying that one must install patches within 1 month. Such an approach, while obviously “PCI-compliant,” might sometimes be problematic: one month is way too long in case of a worm outbreak (all vulnerable systems will be firmly in the hands of the attackers) and, on the other hand, too short in case of complicated mission-critical systems and overworked IT staff (such as for database patches). Therefore, after PCI DSS was released, a later clarification was added that explicitly mentions a risk-based approach. Specifically, “An organization may consider applying a risk-based approach to prioritize their patch installations”; this can allow an organization to get an extension to the above one-month deadline “to ensure high-priority systems and devices are addressed within 1 month, and less-critical devices and systems are addressed within 3 months.”
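The one-month and three-month windows quoted above are easy to track once each patch has a release date and each system a priority. The following sketch computes the due date; interpreting "month" as a calendar month (clamped to the last day of shorter months) is an assumption, as are the function names.

```python
import calendar
from datetime import date


def add_months(d, months):
    """Add calendar months to a date, clamping to the month's last day."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def patch_deadline(released, high_priority):
    """Due date under the risk-based approach: 1 month for high-priority
    systems, 3 months for less-critical ones."""
    return add_months(released, 1 if high_priority else 3)


# Hypothetical patch released on 15 November 2011.
print(patch_deadline(date(2011, 11, 15), high_priority=True))
print(patch_deadline(date(2011, 11, 15), high_priority=False))
```

Tracking deadlines this way does not replace judgment: as noted above, a worm outbreak may justify patching far sooner than the computed date.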

Further, Requirement 6.2 prescribes “establishing a process to identify newly discovered security vulnerabilities.” Note that this doesn’t mean “scanning for vulnerabilities” in your environment, but looking for newly discovered vulnerabilities via vulnerability alert services. Some of these are free, such as the one from Secunia (see www.secunia.com), whereas others are targeted at enterprises. Some services can be highly customized to send only the alerts applicable to your environment, and some also cover fixes for vulnerabilities that are not yet public. They are not free, but may well be worth the money paid for them. You can also monitor public mailing lists for vulnerability information (BugTraq is a primary example: www.securityfocus.com/archive/1), which usually requires a significant time commitment. Even Twitter can be useful here, as certain streams contain excellent real-time vulnerability data.

Note

If you decide to use public mailing lists, you need to have a list of all operating systems and commercial software that is in scope. You may want to set up a specific mailbox that multiple team members have access to, so new vulnerabilities are not “missed” when someone is out of the office. Checking these lists as part of your normal Security Operation Center (SOC) analyst duties can help ensure this activity regularly takes place. In fact, this is even explicitly mandated in PCI DSS, Requirement 12.2: “Develop daily operational security procedures that are consistent with requirements in this specification.” Even if your organization is small and does not have a real SOC with rows of security analysts dedicated to security monitoring, or a virtual SOC, checking the lists and services frequently will help satisfy this requirement.
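Once the in-scope software list exists, incoming advisories can be filtered against it so that analysts see only what applies. This is a minimal sketch under strong assumptions: advisories have already been parsed into dictionaries with an "id" and a list of affected "products", and product names match exactly apart from letter case. Real feeds need far more careful name and version matching.

```python
def relevant_advisories(advisories, in_scope_products):
    """Keep advisories that mention at least one in-scope product."""
    wanted = {p.lower() for p in in_scope_products}
    return [a for a in advisories
            if wanted & {p.lower() for p in a["products"]}]


# Hypothetical inventory and parsed advisories.
in_scope = ["Apache HTTP Server", "Microsoft SQL Server"]
advisories = [
    {"id": "ADV-1", "products": ["apache http server"]},
    {"id": "ADV-2", "products": ["Some Media Player"]},
]
for adv in relevant_advisories(advisories, in_scope):
    print("Review:", adv["id"])
```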

Other aspects of your vulnerability management program apply to securing the software developed in-house. Section 6.3 states that one needs to “develop software applications based on industry best practices and incorporate information security throughout the software-development life cycle.” The unfortunate truth, however, is that there is no single authoritative source for such security “best practices” and, at the same time, current software “industry best practices” rarely include “information security throughout the software-development life cycle.” Here are some recent examples of projects that aim at standardizing security programming best practices, which are freely available for download and contain detailed technical guidance:

• BSIMM “The Building Security In Maturity Model”; see www.bsi-mm.com/;

• OWASP “Secure Coding Principles”; see www.owasp.org/index.php/Secure_Coding_Principles;

• SANS and MITRE “CWE/SANS TOP 25 Most Dangerous Programming Errors”; see www.sans.org/top25errors/ or http://cwe.mitre.org/top25/;

• SAFECode “Fundamental Practices for Secure Software Development”; see www.safecode.org/.

Detailed coverage of secure programming topic goes far beyond the scope of this book.

Sections 6.3 and 6.4 go over software development and maintenance practices. Requirement 6.3 mandates that for PCI compliance, an organization must “develop software applications in accordance with PCI DSS (e.g. secure authentication and logging) and based on industry best practices, and incorporate information security throughout the software development lifecycle.” This guidance is obviously quite unclear, and the burden of making the judgment call is on the QSAs, who are rarely experts in the secure application development lifecycle or in the more esoteric aspects of software security.

Let’s review some of the subrequirements of 6.4, which are clear and specific, and leave the overall theme of “following industry best practices” to your particular QSA.

The first one simply presents security common sense (Requirement 6.4.1): “Separate development/test and production environments.” This is very important because some recent attacks penetrated publicly available development and testing or staging sites.

A key requirement, 6.4.3, which states “production data (live primary account numbers [PANs]) are not used for testing or development,” is among the most critical and also the most commonly violated, with the most disastrous consequences. Many companies found their data stolen because developers moved data from the more secure production environment to a much less protected test environment, as well as to mobile devices (laptops), remote offices, and so on.
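One practical way to catch live PANs that have leaked into test data is to search for digit runs of card-number length and keep only those that pass the Luhn checksum used by payment card numbers. The sketch below is an illustration, not a complete data discovery tool: the regular expression, the 13-to-19 digit range, and the function names are assumptions, and Luhn-valid test numbers will also be flagged.

```python
import re

# Candidate: 13 to 19 digits, optionally separated by spaces or hyphens.
PAN_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number):
    """Return True if a string of digits passes the Luhn checksum."""
    checksum = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:      # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        checksum += d
    return checksum % 10 == 0


def find_probable_pans(text):
    """Return digit strings in text that look like card numbers."""
    hits = []
    for match in PAN_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if 13 <= len(digits) <= 19 and luhn_valid(digits):
            hits.append(digits)
    return hits


# 4111 1111 1111 1111 is a widely used Luhn-valid test number.
sample = "order=12345 card=4111 1111 1111 1111 ref=4111111111111112"
print(find_probable_pans(sample))
```

Running such a check against test databases and fixtures before each release gives early warning that production data has crossed into a less protected environment.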

On the contrary, contaminating the production environment with test code, utilities, and accounts is also critical (and was known to lead to just as disastrous compromises of production data) and is covered in Section 6.4.4, which regulate the use of “test data and accounts” and prerelease “custom application accounts” “custom code.” Similarly, recent attackers have focused on looking for left over admin logins, test code, hard-coded passwords, etc.

The next requirement is absolutely key to application security (6.3.2): “Review of custom code prior to release to production or customers to identify any potential coding vulnerability.” Please also pay attention to the clarification to this requirement: “This requirement for code reviews applies to all custom code (both internal and public-facing), as part of the system development life cycle.” This mandates application security code review for in-scope and public applications, including custom scripts. This scoping statement is of supreme importance: BOTH public (all public) and internal in-scope applications must be secured.

Further, Section 6.4 covers a critical area of IT governance: change control. Change control can be considered a vulnerability management measure because unpredicted, unauthorized changes often lead to opening vulnerabilities in both custom and off-the-shelf software and systems. It states that one must “follow change control procedures for all system and software configuration changes,” and even helps the organization define what the proper procedures must include (Requirement 6.4.5).

The simple way to remember this is: if you change something somewhere in your IT environment, document it. Whether it is a bound notebook (small company) or a change control system (large company) is secondary; leaving a record is primary.
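For a small shop, the "bound notebook" can be as simple as an append-only log file. The sketch below is one hypothetical way to do it; the `ChangeRecord` fields and the JSON-lines file format are our own assumptions, not something PCI DSS prescribes, and the only rule it enforces is that a change without a sign-off is not recorded as approved.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass
class ChangeRecord:
    """One documented change (field names are illustrative assumptions)."""
    system: str
    description: str
    requested_by: str
    approved_by: str
    rollback_plan: str
    timestamp: str = ""

    def __post_init__(self):
        # A change without sign-off or a way to undo it is not a documented change.
        if not self.approved_by or not self.rollback_plan:
            raise ValueError("approved_by and rollback_plan are required")
        if not self.timestamp:
            self.timestamp = datetime.now(timezone.utc).isoformat()


def append_change(path: str, record: ChangeRecord) -> None:
    """Append the record as one JSON line; existing entries are never rewritten."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(asdict(record)) + "\n")
```

A larger organization would use a real change control system, but the record it keeps answers the same questions: what changed, who asked, who approved, and how to back it out.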

To put this into context, most other IT governance frameworks, such as COBIT (www.isaca.org/cobit) or ITIL (www.itil.co.uk/), cover change control as one of the most significant areas that directly affect system security. Indeed, having documentation and sign-off for changes and an ability to “undo” things will help achieve both security and operational goals by reducing risk and striving toward operational excellence. Please refer to Chapter 14, “PCI and Other Laws, Mandates, and Frameworks,” to learn how to combine multiple compliance efforts.

Another critical area of PCI DSS covers Web application security; it is contained in Sections 6.5 and 6.6 that go together when implementing compliance controls.

Web-Application Security and Web Vulnerabilities

Section 6.5 covers Web applications, because they are the type of application most likely to be developed in-house and, at the same time, most likely to be exposed to the hostile Internet (a killer combo: likely less-skilled programmers with a larger number of malicious attackers!). Fewer organizations choose to write their own Windows or Unix software from scratch compared to those creating or customizing Web application frameworks.

In addition, it also calls to “review custom-application code to identify coding vulnerabilities.” It adds that companies must follow “industry best practices for vulnerability management … (e.g. the OWASP Guide, SANS CWE Top 25, CERT Secure Coding, etc.), the current best practices must be used for these requirements.”

Although a detailed review of secure coding goes well beyond the scope of this book, many other books are devoted to the subject, including detailed coverage of secure web application programming, web application security, and methods for discovering web site vulnerabilities. See Hacking Exposed Web Applications, Second Edition, and HackNotes™ Web Security Portable Reference for more details. OWASP has also launched a project to provide additional guidance on satisfying Web application security requirements for PCI (see “OWASP PCI” online).

PCI DSS goes into a great level of detail here, covering common types of coding-related weaknesses in Web applications. Those are as follows:

• “6.5.1 Injection flaws, particularly SQL injection. Also consider OS Command Injection, LDAP and XPath injection flaws as well as other injection flaws.”

• “6.5.4 Insecure communications.”

• “6.5.6 All “High” vulnerabilities identified in the vulnerability identification process (as defined in PCI DSS Requirement 6.2).”

and others (see PCI DSS Requirement 6.5).
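To make the first item on this list concrete, the sketch below contrasts a query built by string concatenation with a parameterized one, using Python's standard `sqlite3` module and an in-memory database. The table, column, and function names are made up for illustration; the point is only that the parameterized form keeps user input out of the SQL text.

```python
import sqlite3


def demo_connection():
    """Create a throwaway in-memory database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, username):
    # VULNERABLE: user input is concatenated straight into the SQL text.
    query = "SELECT id, name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Parameterized: the value is passed separately and never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()
```

Passing the input `x' OR '1'='1` to `find_user_unsafe` returns every row in the table, while `find_user_safe` treats the same input as an ordinary (nonexistent) user name and returns nothing.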

In addition to secure coding to prevent vulnerabilities, organizations might need to take care of the existing deployed applications by looking into web application firewalls and web application scanning (WAS). An interesting part of Requirement 6.6 is that PCI DSS recommends either a vulnerability scan followed by a remediation (“Reviewing public-facing web applications via manual or automated application vulnerability security assessment tools”) or a web application firewall (“Installing a Web application firewall in front of public-facing web applications”), completely ignoring the principle of layered defense or defense-in-depth. In reality, deploying both is highly recommended for effective protection of web applications.

WAS

Before progressing with the discussion of WAS, we need to remind our readers that cross-site scripting and SQL injection web site vulnerabilities account for a massive percentage of card data loss. These same types of web vulnerabilities are also very frequently discovered during PCI DSS scans. For example, Qualys vulnerability research indicates that cross-site scripting is one of the most commonly discovered vulnerabilities seen during PCI scanning; it was also specifically called out by name in one PCI Council document [2] as a vulnerability that leads to PCI validation failure.

You need to ensure that whichever solution you use covers the current OWASP Top Ten list. This list may change over time and you need to ensure the WAS you are using keeps up with the changes. Some WAS products will need to add or modify detections to continue to meet this requirement. If you are using a more full-featured WAS, you may need to modify the scan options from time to time as the Top Ten list changes.

There are many commercial and even some free WAS solutions available. Common examples of free or open-source tools are as follows:

• Nessus, a free vulnerability scanner, now has some detection of Web application security issues (see www.nessus.org).

• WebScarab, direct from the OWASP Project (see www.owasp.org/index.php/Category:OWASP_WebScarab_Project), is also a must for your assessment efforts. The new one, WebScarab NG, is being created as well (see www.owasp.org/index.php/OWASP_WebScarab_NG_Project).

• w3af is a Web Application Attack and Audit Framework (see http://w3af.sourceforge.net/), which can be used as well.

• Wikto, even if a bit dated, is still useful (see www.sensepost.com/research/wikto/).

• Ratproxy is not a scanner, but a passive discovery and assessment tool (see http://code.google.com/p/ratproxy/).

• Another classic passive assessment tool is Paros proxy (http://sourceforge.net/projects/paros).

• A whole CD of Web testing tools, the Samurai Web Testing Framework from InGuardians, can be found at http://samurai.inguardians.com/.

Commercial tool vendors include Qualys, IBM, HP, Whitehat Security and others. Apart from procuring the above tools and starting to use them on your public Web applications, it is worthwhile to learn a few things about their effective use. We will present those below and highlight their use for card data security.

First, if you are familiar with ASV network scanning (covered below in the section on Requirement 11), you need to know that Web application security scanning is often more intrusive than network-level vulnerability scanning performed by an ASV for external scan validation. For example, testing for SQL injection or cross-site scripting often requires actual attempts to perform an injection of SQL code into a database or a script into a Web site. Doing so may cause some databases or applications to hang, or cause spurious entries to appear in your Web application.

Just as with network scanning, application scanners may need to perform authentication to get access to more of your application. Many flaws that allow a regular user to become an application administrator can only be discovered via a trusted scan. If the main page on your Web application has a login form, you will need to perform a trusted scan, i.e., one that logs in. In addition to finding flaws where a user can become an admin, you also need to ensure that customers cannot intentionally, or inadvertently, traverse within the Web application to see other customers’ data. This has happened many times in Web applications. See Figure 9.3, which shows an example of such a vulnerability.

Figure 9.3 User Privilege Violation Vulnerability in NVD

Also, depending on how your Web site handles authentication, you may need to log in manually first with the account you will use for scanning, grab the cookie by using a tool like Paros or WebScarab, and then load it into the scanner. Depending on time-outs, you may need to perform this activity just before the scan. In this case, do not plan on being able to schedule scans and have them run automatically.
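The cookie hand-off described above can be sketched in a few lines of standard-library Python. This is only an illustration of the idea: the function name is hypothetical, the cookie string stands in for whatever you captured with your proxy tool, and a real scanner will have its own way of importing a session.

```python
from http.cookies import SimpleCookie
from urllib.request import Request


def build_authenticated_request(url: str, captured_cookie_header: str) -> Request:
    """Build a request that reuses a session cookie captured after a manual login.

    captured_cookie_header is the raw value of the Cookie header copied from
    the proxy, e.g. "SESSIONID=abc123; theme=dark" (made-up example values).
    """
    cookie = SimpleCookie()
    cookie.load(captured_cookie_header)
    if not cookie:
        raise ValueError("no cookies could be parsed from the captured header")
    header_value = "; ".join(
        f"{name}={morsel.value}" for name, morsel in cookie.items()
    )
    # Nothing is sent yet; the caller decides when to open the request.
    return Request(url, headers={"Cookie": header_value})
```

Because the session behind the cookie will eventually time out, a request built this way is only useful for a scan that starts soon after the manual login, which is exactly why unattended scheduling does not work in this scenario.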

In addition, WAS requires a more detailed knowledge of software vulnerabilities and attack methodologies to allow for the correct interpretation of results than network-based or “traditional” vulnerability scanning does. Remember to always do research in a laboratory environment, not connected to your corporate environment!

Warning

It is perfectly reasonable to use an advanced web application security scanner to scan applications deployed in a production environment, but only after you have tried it more than a few times in the laboratory.

When web farms and web portals are in the mix, scoping can become somewhat cloudy. Sometimes, you may have a web portal that will send all transactions involving the transmission or processing of credit-card data to different systems. It is likely that the entire cluster will be in-scope for PCI in this case.

Finally, WAS (as an option in Requirement 6.6) is not a substitute for a web application penetration test (mandated in Requirement 11.3). Modern web application scanners can do a lot of poking and probing, but they cannot completely perform tasks performed by a human who is attacking a web application. For example, full discovery of cross-site request forgery flaws is not possible using automated scanners today.

Note

Network vulnerability scanning (mandated in Requirement 11.2) and Web application security testing (as an option in Requirement 6.6) have nothing to do with each other. Please don’t confuse them! Network vulnerability scanning is mostly about looking for security issues in operating systems and off-the-shelf applications, such as Microsoft Office or Apache Web server, while Web application security testing typically looks for security issues in custom and customized Web applications. Simply scanning your Web site with a network vulnerability scanner does not satisfy Requirement 6.6 at all.

Again, just a reminder: whether network or application, scanning alone is not sufficient because it will only tell you about the issues; it will not make you secure. You need to either fix the issue in code or deploy a web application firewall to block possible exploitation of the issues.

That is what we are going to discuss next.

Warning

While talking about network or application scanning, you rarely if ever scan to “just know what is out there.” The work does not end when the scan completes; it only begins. The risk reduction from vulnerability scanning comes when you actually remediate the vulnerability or at least mitigate the possible loss. Even if scanning for PCI DSS compliance, your goal is ultimately risk reduction, which only comes when the scan results come back clean.

Web Application Firewalls

Let’s briefly address the web application firewalls. Before the discussion of this technology, remember that a network firewall deployed in front of a web site does not a web firewall make. Web application firewalls got their unfortunate name (that of a firewall) from the hands of marketers. These fine folks didn’t consider the fact that a network firewall serves to block or allow network traffic based on network protocol and port as well as source and destination, whereas a web application firewall has to analyze the application behavior before blocking or allowing the interaction of a browser with the web application framework.

A web application firewall, like a network intrusion detection system (IDS) or IPS, needs to be tuned to be effective. To tune a web application firewall (WAF), run reports that give total counts per violation type and use them to decide which rules to adjust. Often, tuning out a few messages that you determine to be acceptable traffic for your environment and applications will clean up 80% of the clutter in the alert window. Do this tuning in monitoring mode before you switch to blocking. This sounds like an obvious statement, but you would be amazed how many people try to tune WAF technologies in blocking mode, causing application availability issues in their environment.
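The "total counts per violation type" report is easy to produce even if your WAF does not offer it out of the box. The sketch below assumes the alerts have already been exported into a list of dictionaries with a `violation` key; that structure and the function name are assumptions for illustration, not the export format of any particular product.

```python
from collections import Counter


def violation_report(alerts):
    """Return (violation_type, count, share_of_total) tuples, largest first."""
    counts = Counter(alert["violation"] for alert in alerts)
    total = sum(counts.values())
    # most_common() is empty when there are no alerts, so no division by zero.
    return [(name, n, n / total) for name, n in counts.most_common()]
```

The top one or two entries in such a report are usually the candidates to examine first: if they turn out to be legitimate application behavior, tuning them out removes most of the noise in one step.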

If you have a development or quality assurance (QA) environment, placing a WAF (the same type you use in production) in front of one or more of these environments (even in just read/passive mode) can assist you, to some extent, in discovering flaws in Web applications. This then allows for a more planned code fix. Sometimes, you may need to deploy to production with blocking rules until the code can be remediated. In addition, place the WAF so that it covers every direction from which application attacks might come (yes, including the dreaded insider attacks).

Finally, unlike the early versions, WAFs are now actually usable and need to be used to protect the web site from exploitation of the vulnerabilities you discover while scanning.

What to Do to Be Secure and Compliant?

Requirement 6 asks for more than a few simple things; you might need to invest time to learn about application security before you can bring your organization into compliance.

• Read up on software security (pointers to OWASP, SANS, NIST, MITRE, BSIMM are given above).

• In particular, read up on Web application security.

• If you develop software internally or use other custom code, start building your software security program. Such a program must focus on both secure programming to secure the code written within your organization and on code review to secure custom code written by other people for you. No, it is not easy, and likely will take some time.

• Invest in a Web application security scanner; both free open-source and quality commercial offerings that cover most of OWASP Top 10 are available.

• Also, possibly invest in a Web application firewall to block the attacks against the issues discovered while scanning.

Requirement 11 Walk-Through

Let’s walk through Requirement 11 to see what is being asked. First, the requirement name itself asks users to “Regularly test security systems and processes,” which indicates that the focus of this requirement goes beyond just buffer overflows and format string vulnerabilities from the technical realm and also includes process weaknesses. A simple example of a process weakness is using default passwords or easily guessable passwords (such as the infamous “1234” password). Such process weaknesses can be checked from the technical side, for example during the network scan by a scanner that can do authenticated policy and configuration audits, such as password strength checks. However, another policy weakness, requiring overly complicated passwords and frequent changes, which in almost all cases leads to users writing the password on the infamous yellow sticky notes, cannot be “scanned for” and will only be revealed during an annual penetration test. Thus, technical controls can be automated, whereas most policy and awareness controls cannot be.

The requirement text goes into a brief description of vulnerabilities in a somewhat illogical manner: “Vulnerabilities are being discovered continually by malicious individuals and researchers, and being introduced by new software.” In reality, vulnerabilities are introduced first and then discovered by researchers (who are sometimes called “white hats”) and attackers (“black hats”).

The requirement then calls for frequent testing of software for vulnerabilities: “Systems, processes, and custom software should be tested frequently to ensure security is maintained over time and with any changes in software.” An interesting thing to notice in this section is that they explicitly call for testing of systems (such as operating systems software or embedded operating systems), processes (such as the password-management process examples referenced above), and custom software, but don’t mention the commercial off-the-shelf (COTS) software applications. The reason for this is that COTS software is included as part of the definition of “a system”: not only the operating system code but also vendor application code contains vulnerabilities. Today, most of the currently exploited vulnerabilities are found in applications, even in desktop applications such as MS Office, Adobe, and Java, while weaknesses in core Windows system services have become relatively less common.

Wireless network testing (Requirement 11.1) states: “use a wireless analyzer at least quarterly to identify all wireless devices in use.” Indeed, retail environments today make heavy use of wireless networks, and there have been a few well-known cases where POS wireless network traffic was compromised by attackers. Please refer to Chapter 7, “Using Wireless Networking,” for wireless guidance.

Further, Section 11.2 requires you to “run internal and external network vulnerability scans at least quarterly and after any significant change in the network (such as new system component installations, changes in network topology, firewall rule modifications, product upgrades).”

This requirement has an interesting twist, however. Quarterly external vulnerability scans must be performed by a scan vendor qualified by the payment card industry. Thus, just using any scanner won’t do; you need to pick it from the list of ASVs, which we mentioned in Chapter 3, “Why Is PCI Here?” At the same time, the requirements for scans performed after changes are more relaxed: “Scans conducted after network changes may be performed by the company’s internal staff.” This is not surprising given that such changes occur much more frequently in most networks.
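The "at least quarterly" cadence in Requirement 11.2 is simple to track programmatically. The sketch below is a minimal example that assumes "quarterly" means at least one scan in each calendar quarter; that interpretation and the function name are our assumptions, so confirm with your QSA or acquirer how they count quarters.

```python
from datetime import date


def quarters_without_scan(scan_dates, year):
    """Return the calendar quarters (1 to 4) of `year` that have no scan on record."""
    covered = {(d.month - 1) // 3 + 1 for d in scan_dates if d.year == year}
    return sorted({1, 2, 3, 4} - covered)
```

For example, scans dated January 15, April 2, and November 30 of the same year leave the third quarter uncovered, which is the kind of gap that is much easier to notice in a report than in a folder of scan results.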

The next section covers the specifics of ASV scanning and the section after covers the internal scanning.

External Vulnerability Scanning with ASV

We will look into the operational issues of using an ASV, cover some tips about picking one, and then discuss what to expect from an ASV.

What is an ASV?

As we mentioned in Chapter 3, “Why Is PCI Here?,” PCI DSS validation also includes network vulnerability scanning by an ASV. To become an ASV, companies must undergo a process similar to QSA qualification. The difference is that in the case of QSAs, the individual assessors attend classroom training on an annual basis, whereas ASVs submit a scan conducted against a test network perimeter. An organization can choose to become both QSA and ASV, which allows the merchants and service providers to select a single vendor for PCI compliance validation.

ASVs are security companies that help you satisfy one of the two third-party validation requirements in PCI. ASVs go through a rigorous laboratory test process to confirm that their scanning technology is sufficient for PCI validation.

Also, it is worthwhile to mention that validation via an external ASV scan only applies to those merchants that are required to validate Requirement 11. In particular, those who don’t have to validate Requirement 11 are those that outsource payment processing, those who don’t process or store any data on their premises, and those with dial-up (non-Internet) terminals. This is important, so it bears repeating: if you have no system to scan because you don’t process or store data in-house, you don’t have to scan. If this is you, you probably don’t have to do too much around PCI DSS. Of course, it goes without saying that deploying a vulnerability management system to reduce your information risk is appropriate even if PCI DSS didn’t exist at all.

Considerations When Picking an ASV

First, your acquiring bank might have picked an ASV [3] for you. In this case, you might or might not have to use its choice. Note, however, that such a prepicked ASV might be neither the best nor the cheapest.

While looking at the whole list of ASVs and then picking the one that “sounds nice” is one way to pick, it is likely not the one that will ensure trouble-free PCI validation and increased card data security as well as reduced risk of data theft. At the time of this writing, the ASV list has grown tremendously, from small one- or two-person consulting outfits to the IBMs and Verizons of the world, located on all the continents (save Antarctica). How do you pick?

First, one strategy, that needs to be unearthed and explained right away, is as simple as it is harmful for your card data security and PCI DSS compliance status. Namely, organizations that blindly assume that “all ASVs are the same” based on the fact that all are certified by the PCI Council to satisfy PCI DSS scan validation requirements would sometimes just pick on price. This same assumption sometimes applies to QSAs, and as many security industry insiders have pointed out (including both authors), they all are not created equal!

As a result, passing the scan validation requirement and submitting the report that indicates “Pass” will definitely confirm your PCI validation (as long as your ASV remains in good standing with the Council). Sadly, it will not do nearly enough for your cardholder data security. Even among certified ASVs, coverage of vulnerabilities varies greatly; all of them do the mandatory minimum, but more than a few cut corners and stay at that minimum (which, by the way, they are perfectly allowed to do), while others help you uncover other holes and flaws that allow malicious hackers to get to that juicy card data.

Thus, your strategy might follow these steps.

First, realize that all ASVs are not created equal; at the very least, prices for their services will be different, which should give you a hint that the value they provide will also be different.

Second, realize that all ASVs roughly fall into two groups: those that do the minimum necessary according to the above guidance documents (focus on compliance) and those that intelligently interpret the standard and help you with your data security and not just with PCI DSS compliance (focus on security). Typically, the way to tell the two groups apart is to look at the price. In addition, nearly 60% of all currently registered ASVs use the scanning technology from Qualys (www.qualys.com), while many of the rest use Nessus (www.nessus.org) to perform PCI validation.

In addition, though the pricing models for ASV services vary, they roughly fall into two groups: in one model, you can scan your systems many times (unlimited scanning), while the other focuses on providing you the mandatory quarterly scan (i.e. four a year). In the latter case, if your initial scan shows vulnerabilities and you need to fix and rescan to arrive at a passing scan, you will be paying extra. Overall, it is extremely unlikely that you can get away with only scanning your network from the outside four times a year.

Third, even though an ASV does not have to be used for internal scanning, it is more logical to pick the same scanning provider for external (must be done by an ASV) and internal (must be done by somebody skilled in using vulnerability management tools). Using the same technology provider will allow you to have the same familiar report format and the same presentation of vulnerability findings. Similarly, and perhaps more importantly, even though ASV scanning does not require authenticated or trusted scanning, picking an ASV that can run authenticated scans on your internal network is useful since such scanning can be used to automate the checking for the presence of other DSS controls, such as password length, account security settings, use of encryption, availability of anti-malware defenses, etc.

Table 9.1 shows a sample list of PCI DSS controls that may be performed using automated scanning tools that perform authenticated or trusted scanning.

Table 9.1 Automatic Validation of PCI DSS Controls

| Requirement | PCI DSS 1.2 [1] Requirement | Technical Validation of PCI Requirements |

| 1.4 | Install personal firewall software on any mobile and/or employee-owned computers with direct connectivity to the Internet (for example, laptops used by employees), which are used to access the organization’s network. | Automated tools are able to check for the presence of personal firewalls deployed on servers, desktops, and laptops remotely. |

| 2.1 | Always change vendor-supplied defaults before installing a system on the network, including but not limited to passwords, simple network management protocol (SNMP) community strings, and elimination of unnecessary accounts. | Automated tools can be used to verify that vendor defaults are not used by checking for default and system accounts on servers, desktops, and network devices. |

| 2.1.1 | For wireless environments connected to the cardholder data environment or transmitting cardholder data, change wireless vendor defaults, including but not limited to default wireless encryption keys, passwords, and SNMP community strings. | Automated tools can be used to verify that default settings and default passwords are not used across wireless devices connected to the wired network. |

| 2.2 | Develop configuration standards for all system components. Assure that these standards address all known security vulnerabilities and are consistent with industry-accepted system hardening standards. | Automated tools can validate the compliance of deployed systems to configuration standards, mandated by the PCI DSS. |

| 2.2.2 | Enable only necessary and secure services, protocols, daemons, etc., as required for the function of the system. | Automated tools can help discover systems on the network as well as detect the network-exposed services that are running on systems, and thus significantly reduce the effort needed to bring the environment in compliance. |

| 2.2.4 | Remove all unnecessary functionality, such as scripts, drivers, features, subsystems, file systems, and unnecessary web servers. | Automated tools can help discover some of the insecure and unnecessary functionality exposed to the network, and thus significantly reduce the effort needed to bring the environment in compliance. |

| 2.3 | Encrypt all non-console administrative access using strong cryptography. Use technologies such as SSH, VPN, or SSL/TLS for web-based management and other non-console administrative access. | Automated tools can help validate that encrypted protocols are in use across the systems and that unencrypted communication is not enabled on servers and workstations (SSH, not Telnet; SSL, not unencrypted HTTP, etc.). |

| 3.4 | Render PAN unreadable anywhere it is stored (including on portable digital media, backup media, and in logs). | Automated tools can confirm that encryption is in use across the PCI in-scope systems by checking system configuration settings relevant to encryption. |

| 3.5 | Protect any keys used to secure cardholder data against disclosure and misuse. | Automated tools can be used to validate security settings relevant to protection of system encryption keys. |

| 4.1 | Use strong cryptography and security protocols (e.g. SSL/TLS, IPSEC, SSH, etc.) to safeguard sensitive cardholder data during transmission over open, public networks. | Automated tools can be used to validate the use of strong cryptographic protocols by checking relevant system configuration settings and detect instances of insecure cipher use across the in-scope systems. |

| 4.1.1 | Ensure wireless networks transmitting cardholder data or connected to the cardholder data environment, use industry best practices (e.g. IEEE 802.11i) to implement strong encryption for authentication and transmission. | Automated tools can attempt to detect wireless access points from the network side and to validate the use of proper encryption across those access points. |

| 5.1 | Deploy antivirus software on all systems commonly affected by malicious software (particularly, personal computers and servers). | Automated tools can validate whether antivirus software is installed on in-scope systems. |

| 5.2 | Ensure that all antivirus mechanisms are current, actively running, and capable of generating audit logs. | Automated tools can be used to check for running status of antivirus tools. |

| 6.1 | Ensure that all system components and software are protected from known vulnerabilities by having the latest vendor-supplied security patches installed. Install critical security patches within 1 month of release. | Automated tools can be used to detect missing OS, application patches, and security updates. |

| 6.2 | Establish a process to identify and assign a risk ranking to newly discovered security vulnerabilities. | Automated tools are constantly updated with new vulnerability information and can be used in tracking newly discovered vulnerabilities. |

| 6.6 | For public-facing Web applications, address new threats and vulnerabilities on an ongoing basis and ensure these applications are protected against known attacks by either of the following methods: reviewing public-facing Web applications via manual or automated application vulnerability security assessment tools or methods, at least annually and after any changes. | Automated tools can be used to assess Web application security in support of PCI Requirement 6.6. |

| 7.1 | Limit access to system components and cardholder data to only those individuals whose job requires such access. | Automated tools can analyze database user rights and permissions, looking for broad and insecure permissions. |

| 8.1 | Assign all users a unique ID before allowing them to access system components or cardholder data. | In partial support of this requirement, automated tools are used to look for active default, generic accounts (root, system, etc.), which indicate that account sharing takes place. |

| 8.2 | In addition to assigning a unique ID, use at least one of the following methods to authenticate all users: Password or passphrase. | Automated tools can be used to look for user accounts with improper authentication settings, such as accounts with no passwords or with blank passwords. |

| 8.4 | Render all passwords unreadable during transmission and storage on all system components using strong cryptography. | Automated tools can be used to detect system configuration settings, permitting unencrypted and inadequately encrypted passwords across systems. |

| 8.5 | Ensure proper user authentication and password management for non-consumer users and administrators on all system components. | Automated tools can be used to validate an extensive set of user account security settings and password security parameters across systems in support of this PCI requirement. |

| 11.1 | Test for the presence of wireless access points and detect unauthorized wireless access points on a quarterly basis. | Automated tools can attempt to detect wireless access points from the network side, thus to help the detection of rogue access points. |

| 11.2 | Run internal and external network vulnerability scans at least quarterly and after any significant change in the network (such as new system component installations, changes in network topology, firewall rule modifications, and product upgrades). | Automated tools can be used to scan for vulnerabilities both from inside and from outside the network. |

Fourth, look at how the ASV’s workflow matches your experience and expectations. Are there many manual tasks required to perform a vulnerability scan and create a report, or is everything automated? Fully automated ASV services, where launching a scan and presenting a compliance report to your acquirer can be done from the same interface, are available. Still, if you need help with fixing the issues before you can rescan and validate your compliance, hiring an ASV that offers help with remediation is advisable. It goes without saying that picking an ASV that requires you to purchase any hardware or software is not advisable; all external scan requirements can be satisfied by scanning from the Internet.

Finally, even though this strategy focuses on picking an ASV, you and your organization have a role to play as well, namely, in fixing the vulnerabilities that the scan discovered to arrive at a compliant status—clean scan with no failures. We discuss the criteria that ASVs use for pass/fail below.

How ASV Scanning Works

ASVs use standard vulnerability scanning technology to detect vulnerabilities that are deemed by the PCI Council to be relevant for PCI DSS compliance. This information will help you understand what exactly you are dealing with when you retain the scanning services of an ASV. It will also help you learn how to pass or fail the PCI scan criteria and how to prepare your environment for an ASV scan.

Specifically, the ASV procedures mandate that an ASV cover the following in its scan (the list below is heavily abridged; please refer to “Technical and Operational Requirements for Approved Scanning Vendors (ASVs)” [2] for more details):

• Identify issues in “operating systems, Web servers, Web application servers, common Web scripts, database servers, mail servers, firewalls, routers, wireless access points, common (such as DNS, FTP, SNMP, etc.) services, custom Web applications.”

• “ASV must use the CVSS base score for the severity level” (please see the main site for CVSS at www.first.org/cvss for more information).

• After the above resources are scanned, the following criteria are used to pass/fail the PCI validation (also [2]):

• “Generally, to be considered compliant, a component must not contain any vulnerability that has been assigned a CVSS base score equal to or higher than 4.0.” Any curious reader can look up a CVSS score for many publicly disclosed vulnerabilities by going to NVD at http://nvd.nist.gov.

• “If a CVSS base score is not available for a given vulnerability identified in the component, then the compliance criteria to be used by the ASV depend on the identified vulnerability leading to a data compromise.” This criterion makes sure that ASV security personnel can use their own internal scoring methodology when CVSS scores cannot be produced.

• There are additional exceptions to the above rules. Some vulnerability types are included in pass/fail criteria, no matter what their scores are, while others are excluded. Here are the inclusions:

• “A component must be considered noncompliant if the installed SSL version is limited to Version 2.0, or older.”

• “The presence of application vulnerabilities on a component that may lead to SQL injection attacks and cross-site scripting flaws must result in a noncompliant status” [2].

• The exclusions are as follows:

• “Vulnerabilities or misconfigurations that may lead to DoS should not be taken into consideration” [2].
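The pass/fail rules quoted above can be sketched in a few lines of Python. This is only an illustration, not an ASV's actual logic: the `Finding` fields, the category labels, and the choice to apply the DoS exclusion before the inclusions are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional

CVSS_FAIL_THRESHOLD = 4.0  # a base score "equal to or higher than 4.0" fails


@dataclass
class Finding:
    """One scanner finding. All field names here are hypothetical."""
    name: str
    cvss_base: Optional[float] = None      # None when no CVSS score exists
    category: str = "other"                # e.g. "sqli", "xss", "dos", "ssl_v2_only"
    may_lead_to_compromise: bool = False   # analyst judgment for unscored issues


def fails_pci(finding: Finding) -> bool:
    """Return True if this finding alone makes the component noncompliant."""
    # Exclusion: DoS issues are not taken into consideration (assumed to win).
    if finding.category == "dos":
        return False
    # Inclusions: these fail no matter what their score is.
    if finding.category in {"sqli", "xss", "ssl_v2_only"}:
        return True
    # No CVSS score: decide on whether the issue could lead to data compromise.
    if finding.cvss_base is None:
        return finding.may_lead_to_compromise
    # General rule: compare the CVSS base score with the threshold.
    return finding.cvss_base >= CVSS_FAIL_THRESHOLD


def component_is_compliant(findings: List[Finding]) -> bool:
    """A component passes only if none of its findings fails."""
    return not any(fails_pci(f) for f in findings)
```

For example, `fails_pci(Finding("weak cipher", cvss_base=4.0))` is true because the threshold is inclusive, while a DoS-only finding with a score of 7.8 does not count against the component under these assumptions.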

The above criteria highlight the fact that PCI DSS external scanning is not everything you need to do for security. After all, PCI DSS focuses on card data loss, not on the availability of your key IT resources for your organization and not on their resistance to malicious hackers.

Each ASV will interpret the requirements a little differently. However, a quality ASV identifies many types of vulnerabilities beyond those relevant to PCI DSS.

When the scan completes, the report is generated, which can then be used to substantiate your PCI validation via vulnerability scanning.

To summarize, a quality ASV scan will detect a broad range of external vulnerabilities and highlight those that are reasons for PCI DSS validation failure. The same process needs to be repeated for quarterly scans, usually toward the end of the quarter but not on the last day, because remediation activities need to happen before a final rescan takes place. In fact, let’s talk about operationalizing the ASV scanning.

Operationalizing ASV Scanning

To recap, PCI DSS Requirement 11.2 calls for quarterly scanning. In addition, every scan may lead to remediation activities, and those aren’t limited to patching. Moreover, validation procedures mention that a QSA will ask for four passing reports during an assessment.

The above calls for an operational process for dealing with this requirement. Let’s build this process together now.

First, it is a very good idea to scan monthly or even weekly if possible. Why would you do that to satisfy a quarterly scanning requirement? Well, consider the following scenario: on the last day of the quarter, you perform an external vulnerability scan and you discover a critical vulnerability. The discovered vulnerability is present on 20% of your systems, which amounts to 200 systems. Now, you have exactly 1 day to fix the vulnerability on all systems and perform a passing vulnerability scan, which will be retained for your records. Is this realistic? The scenario can happen and, in fact, has happened in many companies that postponed their quarterly vulnerability scan until the very last day and did not perform any ongoing vulnerability scanning. Considering the fact that many acquiring institutions are becoming more stringent with PCI validation requirements, many will not grant you an exception. Beyond that last day and over the following month, the scenario will certainly inflict unnecessary pain and suffering on your company and your IT staff. What is the way to avoid it? Performing external scans every month or even every week. It is also a good idea to perform an external scan after you apply a patch to external systems.
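The arithmetic behind this scenario is easy to check. The sketch below assumes calendar quarters and a hypothetical remediation rate (`fixes_per_day`); neither comes from the PCI documentation, and your own compliance calendar and patching capacity will differ.

```python
import math
from datetime import date, timedelta


def quarter_end(day: date) -> date:
    """Last calendar day of the quarter containing `day` (calendar quarters assumed)."""
    last_month = 3 * ((day.month - 1) // 3 + 1)   # 3, 6, 9, or 12
    if last_month == 12:
        return date(day.year, 12, 31)
    # First day of the following month, minus one day.
    return date(day.year, last_month + 1, 1) - timedelta(days=1)


def days_needed(affected_systems: int, fixes_per_day: int) -> int:
    """Whole days required to remediate every affected system, rounded up."""
    return math.ceil(affected_systems / fixes_per_day)


def can_finish_in_time(scan_day: date, affected_systems: int, fixes_per_day: int) -> bool:
    """True if remediation fits between the scan day and the end of the quarter."""
    days_left = (quarter_end(scan_day) - scan_day).days + 1  # includes the scan day
    return days_needed(affected_systems, fixes_per_day) <= days_left
```

With the 200 affected systems from the scenario and an assumed rate of 25 fixes per day, remediation takes 8 days. A scan on the last day of the quarter leaves 1 day, so `can_finish_in_time` returns false; the same finding discovered at the start of the final month leaves ample room.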

Note

Most companies run their external scans monthly, even though those are called “quarterly scans.” That way, issues can be resolved in time to have a clean quarterly report since there are no surprises. There are known cases where organizations have been burned by waiting until the last month of a quarter to run an external scan. This can cause a serious amount of last-minute, emergency code and system configuration changes, and an overall sense of panic, which is not conducive to good security management.

After you run the scan, carefully review the results of the reports. Are those passing or failing reports? If the report indicates that you do not pass the PCI validation requirement, please note which systems and vulnerabilities do not pass the criteria. Next, distribute the report to those in your IT organization who are responsible for the systems that fail the test. Offer them some guidance on how to fix the vulnerabilities and bring those systems back to PCI compliance. These intermediate reports will absolutely not be shared with your acquiring institutions.
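Distributing a failing report is simpler when the findings are already grouped by the team that owns each system. The following is a minimal sketch; the dictionary keys (`host`, `title`, `pci_fail`) and the host-to-owner mapping are hypothetical and would need to be adapted to whatever export format your scanner or ASV provides.

```python
from collections import defaultdict


def failing_by_owner(findings, owners):
    """Group PCI-failing findings by the team that owns each host.

    findings: iterable of dicts with "host", "title", and "pci_fail" keys.
    owners:   dict mapping host -> owning team; unknown hosts go to "unassigned".
    """
    grouped = defaultdict(list)
    for finding in findings:
        if finding["pci_fail"]:
            owner = owners.get(finding["host"], "unassigned")
            grouped[owner].append((finding["host"], finding["title"]))
    return dict(grouped)
```

Each team then receives only the failing items it can act on, and anything that lands under "unassigned" points to a gap in the asset inventory that is worth closing before the next scan.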

When you receive the indication that those vulnerabilities have been successfully fixed, please rescan to obtain a clean report. Repeat the process every month or week.

Finally, run a final round of scans before the end of the quarter and preserve the reports for the assessor. Thus, your shields will be up at all times. If you are only checking them four times a year, you’re suffering from two problems. First, you are most likely not PCI compliant throughout most of the year. Second, you burden yourself with a massive emergency effort right at the end of the quarter when other people at your organization expect IT systems to operate at their peak. Don’t be the one telling finance that they cannot run that quarterly report!

What Do You Expect from an ASV?

Discussing the expectations while dealing with an ASV and working toward PCI DSS scan validation is a valuable exercise. The critical considerations are described below.

First, an ASV can scan you and present the data (report) to you. It is your job to then bring the environment into compliance. After that, the ASV can again be used to validate your compliant status and produce a clean report. Remember, ASV scanning does not make you compliant; you do, by making sure that no PCI-fail vulnerabilities are present in your network.

Second, you don’t have to hire expensive consultants just to run an ASV scan for you every quarter. Some ASVs will automatically perform the scan with the correct settings and parameters, without you learning the esoteric nature of a particular vulnerability scanner. In fact, you can sometimes even pay for it online and get the scan right away—yes, you guessed right—using a credit card.

Third, you should expect that a quality ASV will discover more vulnerabilities than are required for PCI DSS compliance. You’d need to make your own judgment call on whether to fix them. One common case where you might want to address the issue is vulnerabilities that allow hackers to crash your systems (denial of service [DoS] vulnerabilities). Such flaws are out of scope for PCI because they cannot directly cause the theft of card data (although this depends on the cause of the DoS); however, by not fixing them, you are allowing the attackers to disrupt your online business operation.

Finally, let us offer some common tips on ASV scanning.

First comes the question: which systems must you scan for PCI DSS compliance? The answer to this splits into two parts, for external and internal scanning.

Specifically, for external systems or those visible from the Internet, the guidance from the PCI Council is clear: “all Internet-facing Internet Protocol (IP) addresses and/or ranges, including all network components and devices that are involved in e-commerce transactions or retail transactions that use IP to transmit data over the Internet” (source: “Technical and Operational Requirements for Approved Scanning Vendors (ASVs)” [2] by PCI Council). The obvious answer can be “none” if your business has no connection to the Internet.

For internal systems, the answer covers all systems that are considered in-scope for PCI, which are either those involved with card processing or those directly connected to them. The answer can also be “none” if you have no systems inside your perimeter that are in-scope for PCI DSS.

Second, the question about pass/fail criteria for internal scans often arises as well. While the external ASV scans have clear criteria discussed above, internal scans from within your network have no set pass/fail criteria. The decision is thus based on your idea of risk; there is nothing wrong in using the same criteria as above, of course, if you think that it matches your view of risks to card data in your environment.

Another common question is how to pass the PCI DSS scan validation. Just as above, the answer is very clear for external scans: you satisfy the above criteria. If you don’t, you need to fix the vulnerabilities that are causing you to fail and then rescan. Then, you pass and get a “passing report” that you can submit to your acquiring bank.

For internal scans, the pass/fail criteria are not expressly written in the PCI Council documentation. Expect your assessor to ask for clean internal and external scans as part of Requirement 11.2. Typically, QSAs will define “clean” internal scans as those not having high-severity vulnerabilities across the in-scope systems. For a very common scale of vulnerability ranking from 1 to 5 (with 5 being the most severe), vulnerabilities of severity 3–5 are usually not acceptable. If CVSS scoring is used, 4.0 becomes the cutoff point; vulnerabilities with scores of 4.0 or higher are not accepted unless compensating controls are present and are accepted by the QSA. It was reported that in some situations, a QSA would accept a workable plan that aims to remove these vulnerabilities from the internal environment.
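The typical QSA practice described above can be expressed as a small check. This is a sketch of one possible interpretation, not an official rule: PCI sets no fixed internal criteria, and the dictionary keys (`id`, `cvss`, `severity`) and the `compensated_ids` parameter are hypothetical.

```python
CVSS_CUTOFF = 4.0      # CVSS scores at or above this are usually rejected
SEVERITY_CUTOFF = 3    # on a 1-5 scale, severities 3-5 are usually rejected


def blocking_findings(findings, compensated_ids=frozenset()):
    """Return the IDs of findings that keep an internal scan from being "clean".

    findings:        iterable of dicts with an "id" key and either a "cvss"
                     score or a 1-5 "severity" rank.
    compensated_ids: IDs covered by compensating controls the QSA has accepted.
    """
    blocking = []
    for finding in findings:
        if finding["id"] in compensated_ids:
            continue  # accepted compensating control, so not blocking
        if "cvss" in finding:
            is_blocking = finding["cvss"] >= CVSS_CUTOFF
        else:
            is_blocking = finding["severity"] >= SEVERITY_CUTOFF
        if is_blocking:
            blocking.append(finding["id"])
    return blocking
```

An empty result means the scan would count as clean under these assumed thresholds. Agree on the actual thresholds with your QSA before relying on a check like this.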

Also, people often ask whether they become “PCI compliant” if they get a passing scan for their external systems. The answer is certainly a “no.” You only satisfied one of the PCI DSS requirements; namely, PCI DSS validation via an external ASV scan. This is not the end. Likely, this is the beginning.

Internal Vulnerability Scanning

As we mentioned in the section, “Vulnerability Management in PCI,” internal vulnerability scanning must be performed every quarter and after every material system change, and no high-severity vulnerability may be present in the final scan preserved for presentation to your QSA. Internal scanning is governed by the PCI Council document called Security Scanning Procedures [5]. Requirement 11.2.1 (since PCI DSS 2.0) states that a merchant must “Perform quarterly internal vulnerability scans.” A QSA must then validate and “review the scan reports and verify that the scan process includes rescans until passing results are obtained, or all ‘High’ vulnerabilities as defined in PCI DSS Requirement 6.2 are resolved.”

First, using the same template your ASV uses for external scanning is a really good idea, but you can also use trusted (authenticated) scanning, which is more reliable and will reveal key application security issues on your in-scope systems that regular, unauthenticated scanning may sometimes miss.

Remediation may take the form of hardening, patching, architecture changes, technology/tool implementations, or a combination thereof. Remediation efforts after internal scanning are prioritized based on risk and can be managed better than external ASV scans. Something to keep in mind for PCI environments is that the remediation of critical vulnerabilities on all in-scope systems is mandatory. This makes all critical and high-severity vulnerabilities found on in-scope PCI systems a high priority. Follow the same process we covered in the “operationalizing ASV scanning” section and work toward removing the high-severity vulnerabilities from the environment before presenting the clean report to the QSA.

Reports that show the finding and remediation of vulnerabilities for in-scope systems over time become artifacts that are needed to satisfy assessment requirements. It is a good idea to have a place to keep archives of all internal and external scan reports (summary, detailed, and remediation) for a 12-month period. Your ASV may also offer to keep them for you as an added service. However, it is ultimately your responsibility.
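Since an assessor will ask for four passing quarterly reports, it helps to check the archive for gaps well before the assessment. The sketch below assumes calendar quarters, counts the current quarter as one of the four, and represents each archived report as a hypothetical `(report_date, passed)` pair.

```python
from datetime import date


def quarter_key(day: date):
    """Return (year, quarter number 1-4) for a date, using calendar quarters."""
    return (day.year, (day.month - 1) // 3 + 1)


def last_four_quarters(today: date):
    """The current quarter and the three before it, newest first."""
    year, quarter = quarter_key(today)
    keys = []
    for _ in range(4):
        keys.append((year, quarter))
        quarter -= 1
        if quarter == 0:              # wrap around to Q4 of the previous year
            year, quarter = year - 1, 4
    return keys


def missing_passing_quarters(reports, today: date):
    """Quarters in the last four that have no passing report in the archive.

    reports: iterable of (report_date, passed) pairs.
    """
    passed = {quarter_key(day) for day, ok in reports if ok}
    return [key for key in last_four_quarters(today) if key not in passed]
```

An empty list means every one of the last four quarters has at least one passing report on file; any quarter returned is one for which you would have nothing to show the assessor.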

This is a continuous process. As with other PCI compliance efforts, it is important to realize that PCI compliance is an effort that takes place 24/7, 365 days a year.