Chapter 10

Logging Events and Monitoring the Cardholder Data Environment

Information in this chapter:

• Why Logging and Monitoring in PCI DSS?

• Logging and Monitoring in Depth

• Logging in PCI Requirement 10

• Monitoring Data and Log Security Issues

• Logging and Monitoring in PCI—All Other Requirements

• PCI DSS Logging Policies and Procedures

When most people think about information security, the idea of blocking, deflecting, denying, or otherwise stopping a malicious hacker attack comes to mind. Secure network architecture, secure server operating systems, data encryption, and other security technologies are deployed to shield your assets from that evil influence that can steal your information, commit fraud, or disrupt the operation of systems and networks.

Indeed, the visions of tall castle walls, deep moats, or more modern armor and battleships pervade most people’s view of warfare as well as information security. However, there is more to warfare (and more to security!) than armor and shields. We are talking about the other keystone of ancient as well as modern (and, likely, future!) warfare: intelligence. Those archers who glance from the top of the castle walls and modern spy satellites that glance down to Earth are no less mandatory to winning (or “not losing,” as we have it in the field of information security) the war than fortifications and armored divisions.

In fact, security professionals often organize what they do in security into the following:

“Prevention” is what covers all the blocking, deflecting, denying, or stopping attacks. Notice that it includes the actual blocking of a live attack (such as running a network intrusion prevention system [IPS]) as well as making sure that such an attack cannot take place (such as deploying a patch management system). However, what happens if such prevention measures actually fail to prevent or block an attack? Wouldn’t it be nice to know that when it happens?

This is exactly where “detection” comes in. All the logging and monitoring technologies, whether relevant for PCI DSS or not, are things that allow you to know. Specifically, know that you are attacked, know that a prevention measure gave way, and know that an attacker has penetrated the network and is about to make it out with the loot. They also allow you to simply know what is going on!

Like any self-respecting security guidance, PCI DSS mandates not just prevention but also detection in the form of logging, alerting, and monitoring. Let’s discuss all these in detail.

PCI Requirements Covered

Contrary to popular belief, logging and monitoring are not constrained to Requirement 10, but in fact permeate all 12 of the PCI DSS requirements. Still, the key areas where logging and monitoring are mandated in PCI DSS are Requirement 10 and sections of Requirement 11.

Why Logging and Monitoring in PCI DSS?

As we mentioned previously, for those who are used to thinking of security as prevention or blocking, the benefits of monitoring might need to be spelled out explicitly. Before we describe the security monitoring measures prescribed by PCI, we will do exactly that.

So, why monitor?

First comes situational awareness. It simply means knowing what is going on in your network and on your systems and applications. Examples here are “Who is doing what on that server?”, “Who is accessing my network?”, or “What is that application doing with card data?” In addition, system logging helps you know not only what is going on but also what was going on, a vital component of investigations and later incident response.

Next comes new threat discovery, which is simply knowing the bad stuff that is happening. This is one of the major reasons to collect and review logs as well as to deploy intrusion detection systems (IDSs).

Third, logging helps you get more value out of the network and security infrastructure deployed for blocking and prevention. Using firewall logs for intrusion detection (often justified during assessments but not as commonly done) is one example.

What is even more interesting is that logging and monitoring controls (notice that these are considered security “controls” even though they don’t really “control” anything) allow one to measure security and compliance by building metrics and trends. This means that one can use such data for a range of applications, from a simple “Top users by bandwidth” report obtained from firewall logs, all the way to sophisticated tracking of card data movements and use.

Last, but not least, if the worst does happen, then you would need to have as much data as possible during your incident response process. You might not use it all, but having reliable logs, audit trails, and network capture data from all affected systems is indispensable in a hectic post-incident environment.

Requirements 10 and 11 are easily capable of inflating PCI compliance costs to the point of consuming the small margins of card transactions. No one wants to lose money to be PCI compliant. Therefore, the ability to meet the requirements above all must make business sense. Nowhere else in PCI compliance does the middle ground of design philosophy come into play more than in the discipline of monitoring, but this is also where minimizing the risk can hurt most.

Finally, assuming that you’ve designed your PCI environment to have appropriate physical and logical boundaries through the use of segregated networks and dedicated application space as we described in Chapter 4, “Building and Maintaining a Secure Network,” you should be able to identify the boundaries of your monitoring scope. If you haven’t done this part, go back to Requirement 1 and start over!

Logging and Monitoring in Depth

Even today, many organizations, whether under PCI DSS or not, still think of security as blocking and denying and leave monitoring and logging aside, despite their importance.

On the other hand, as computer and Internet technology continues to spread and computers play an ever more important role in our lives, the records that they produce, such as logs and other traces, play a bigger role as well. From firewalls and routers to databases and enterprise applications, to wireless access points and Voice over Internet Protocol (VoIP) gateways, logs are being spewed forth at an ever-increasing pace. Both security and other IT components not only increase in numbers but also often come with more logging enabled out of the box. Examples of this trend include the Linux operating system as well as Web servers, both commercial and open-source, that now ship with increased levels of logging enabled by default. In addition, monitoring methods such as full network packet capture, database activity monitoring (DAM), and special-purpose application monitoring are becoming common as well. And all this data begs for constant attention!

Warning

A log management problem is to a large extent a data management problem. Thus, if you deal with logs (and deal with them you must—and not only due to PCI DSS mandates), you’ll deal with plenty of data. On top of this, much of this data is often unstructured and requires normalization to get common messages into a common format and sent to a centralized place for analysis.

Still, with logs, it is much better to err on the side of keeping more, in case you need to look at them later. This is not the same as saying that you have to keep every log message, but retaining more log data can save you in some situations (e.g., in a legal matter).

But this is easier said than done. Immense volumes of monitoring data are being generated on payment card processing networks and customer-facing web resources. In turn, it results in a need to manage, store, and search all this data. Moreover, such review of data needs to happen both reactively—after a suspected incident—and proactively—in search of potential risks and future problems. For example, a typical large retailer generates hundreds of thousands of log messages per day amounting to many terabytes or even petabytes per year. An online merchant can generate millions of various log messages every day. One of America’s largest retailers has more than 80 TB of log data on their systems at any given time. Unlike other companies, retailers do not have the option of not managing their logs due to PCI DSS.

Note

Even though we often refer to “retailers” as a company subject to PCI DSS, PCI is not only about retailers. Remember, anyone who “stores, processes, or transmits” member-branded credit- or debit-card numbers must comply with PCI DSS.

To start our discussion of PCI logging and monitoring requirements, Table 10.1 shows a sample list of technologies that produce logs of relevance to PCI. Though this list is not comprehensive, readers will likely find at least one system that they have in their cardholder data environment for which logs are not being collected, much less looked at, and which is not being monitored at all.

Table 10.1 Log-Producing Technologies, Monitored Using Their Logs

| Type | Example Logs |

| Operating Systems | Linux, Solaris syslog, Windows Event Log |

| Databases | Oracle, Microsoft SQL Server audit trails |

| Network infrastructure | Cisco routers and switches syslog |

| Remote access | Virtual private network logs |

| Network security | Cisco PIX firewalls syslog |

| Intrusion detection and prevention | Snort network intrusion detection system syslog and packet capture |

| Enterprise applications | SAP, PeopleSoft logs |

| Web servers | Apache logs, Internet Information Server logs |

| Proxy servers | BlueCoat, Squid logs |

| E-mail servers | Sendmail syslog, various Exchange logs |

| Domain Name System (DNS) servers | Bind DNS logs, MS DNS |

| Antivirus and antispyware | Symantec AV event logs, TrendMicro AV logs |

| Physical access control | IDenticard, CoreStreet |

| Wireless networking | Cisco Aironet AP logs |

Despite the multitude of log sources and types, people typically start from network and firewall logs and then progress upward on the protocol stack as well as sideways toward other non-network applications. For example, just about any firewall or network administrator will look at a simple summary of connections that his or her Cisco ASA or Check Point firewall is logging. Many firewalls log in standard syslog format, and such logs are easy to collect and review.

For example, here is a Juniper firewall log message in syslog format:

NOC-FWa: NetScreen device_id=NOC-FWa system-notification-00257(traffic): start_time=“2007-05-01 19:17:37” duration=60 policy_id=9 service=snmp proto=17 src zone=noc-services dst zone=-access-ethernet action=Permit sent=547 rcvd=432 src=10.0.12.10 dst=10.2.16.10 src_port=1184 dst_port=161 src-xlated ip=10.0.12.10 port=1184

And here is one from a Cisco ASA firewall device:

%ASA-6-106100: access-list outside_access_in denied icmp -outside/10.88.81.77(0) -> inside/192.10.10.246(11) hit-cnt 1 (first hit)

Finally, one from a Linux IPTables firewall:

Nov 12 08:49:50 fw kernel: [3005768.228266] IPT-global R 25 -- ACCEPT IN=eth3 OUT=eth0 SRC=10.19.10.251 DST=207.232.83.70 LEN=76 TOS=0x00 PREC=0x00 TTL=63 ID=32906 DF PROTO=UDP SPT=34530 DPT=123 LEN=56
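Messages like the IPTables one above are mostly runs of KEY=VALUE tokens, which is part of why firewall logs are a comfortable starting point. The following is a minimal sketch, not a production parser, of pulling those tokens into a dictionary in Python. The function name `parse_kv` is hypothetical, and the choice to keep the first value when a key repeats (LEN appears twice in the sample) is an assumption made for illustration.

```python
import re

# Sample line copied from the IPTables example above.
LINE = (
    "Nov 12 08:49:50 fw kernel: [3005768.228266] IPT-global R 25 -- ACCEPT "
    "IN=eth3 OUT=eth0 SRC=10.19.10.251 DST=207.232.83.70 LEN=76 TOS=0x00 "
    "PREC=0x00 TTL=63 ID=32906 DF PROTO=UDP SPT=34530 DPT=123 LEN=56"
)

# An upper-case key, an equals sign, then a run of non-space characters.
PAIR = re.compile(r"\b([A-Z]+)=(\S*)")


def parse_kv(line):
    """Return the KEY=VALUE tokens of a log line as a dict.

    Assumption: when a key repeats, the first occurrence is kept.
    """
    fields = {}
    for key, value in PAIR.findall(line):
        fields.setdefault(key, value)
    return fields


fields = parse_kv(LINE)
print(fields["SRC"], "->", fields["DST"], fields["PROTO"], fields["DPT"])
```

Tokens without an equals sign, such as DF or ACCEPT, are ignored by this sketch; a real collector would also need to capture the timestamp, host, and action.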

Reviewing network intrusion detection system (NIDS) or IPS logs, although “interesting” in case of an incident, is often a very frustrating task since NIDS would sometimes produce “false alarms” and dutifully log volumes of them. Still, NIDS log analysis, at least the postmortem kind for investigative purposes, often happens right after firewall logs are looked at. The NIDS logs themselves might be next, checking for signature updates, logins, and changes to the appliance.

Even though system administrators always knew to look at logs in case of problems, large-scale server operating system log analysis (for both Windows and Unix/Linux variants) didn’t materialize until more recently. Collecting logs from Windows servers, for example, was hindered by the lack of agentless log collection tools and of native Windows support for log centralization (included in the Microsoft server OS only since Windows Server 2008). On the other hand, Unix server log analysis was severely undercut by the total lack of a unified format for log content in syslog records.

Web server logs were long analyzed by marketing departments to check on their online campaign successes. Most Web server administrators would also not ignore those logs. However, because Web servers don’t have native log forwarding capabilities (most log to files stored on the server itself), consistent centralized Web log analysis for both security and other IT purposes is still ramping up.

For example, the open-source Apache Web server has several types of logs. The most typical among them are access_log, which contains all page requests made to the server (with their response codes), and error_log, which contains various errors and problems. Other Apache logs relate to Secure Sockets Layer (SSL) (ssl_error_log) as well as optional granular audit logs that can be configured using tools such as ModSecurity (which produces an additional highly detailed audit_log).

E-mail tracking through e-mail server logs languishes in a similar manner: people only turn to e-mail logs when something goes wrong (e-mail failures) or horribly wrong (an external party subpoenas your logs). Lack of native centralization and, to some extent, complicated log formats slowed down e-mail log analysis initiatives.

Even more than e-mail, database logging wasn’t on the radar of most IT folks until recently. In fact, IT folks were perfectly happy with the fact that even though Relational Database Management Systems (RDBMSs) had extensive logging and data access audit capabilities, most of them were never turned on, with performance issues often cited as the reason. Oracle, Microsoft SQL Server, IBM DB2, and MySQL all provide excellent logging, if you know how to enable it, configure it for your specific needs, and analyze and leverage the resulting onslaught of data. In the context of PCI DSS, Database Activity Monitoring (DAM) is often performed not using logs but instead using separate software tools.

What’s next? Web applications and large enterprise application frameworks largely lived in a world of their own, but now people are starting to realize that their log data provides unique insight into insider attacks, insider data theft, and other trusted access abuse. Many retailers are ramping up their application log management efforts. Additionally, desktop operating system log analysis from large numbers of deployed desktops will also follow.

PCI Relevance of Logs

Before we cover additional details on logging and monitoring in PCI, one question needs to be addressed. PCI Qualified Security Assessors (QSAs) and security consultants are often asked by merchants: what exactly must we log and monitor for PCI DSS compliance? The honest answer is that there is no list of what exactly you must be logging due to PCI or, pretty much, any other recent compliance mandate. That is true despite the fact that PCI rules are more specific than most other recent regulations affecting information security. However, this does not mean that you can log nothing.

The only thing that can be explained is what you should be logging. There is no easy “MUST-log-this” list; it is pretty much up to the individual assessor, consultant, vendor, engineer, and so forth to interpret (not simply “read,” but interpret!) PCI DSS guidance in your own environment. In addition, when planning what to log and monitor, it makes sense to start from the compliance requirements as opposed to ending with what PCI DSS suggests. After all, an organization can derive value from logging and monitoring even without regulatory or industry compliance.

So, which logs are relevant to your PCI project? In some circumstances, the answer is “all of them,” but it is more likely that logs from systems that handle credit-card information, as well as systems they connect to, will be in-scope. Please refer to the data flow diagram that was described in Chapter 4, “Building and Maintaining a Secure Network,” to determine which systems those actually are. PCI DSS requirements apply to all members, merchants, and service providers that store, process, or transmit cardholder data. Additionally, these requirements apply to all “system components,” which are defined as “any network component, server, or application included in, or connected to, the cardholder data environment.” Network components include, but are not limited to, firewalls, switches, routers, wireless access points, network appliances, and other security appliances. Servers include, but are not limited to, Web, database, authentication, Domain Name System (DNS), e-mail, proxy, and Network Time Protocol (NTP). Applications include all off-the-shelf and custom-built applications, including internally facing and externally facing Web applications.

By the way, it is important to remind you that approaching logging and monitoring with the sole purpose of becoming PCI compliant is not only wasteful but can actually undermine the intent of PCI DSS compliance. Starting from its intent—cardholder data security—is the way to go, which we advocate.

If you’ve been at this for a while, you may remember an interpretation clarification issued many years ago on where the capture of actions must happen. If the database is logging access, do you also need to log that access in the application? You will need to choose the best option for your particular system, but you only need to capture the action of accessing the data once. Access could be captured in the database (good for batch), application (good for interactive), or system (good for service accounts).

Logging in PCI Requirement 10

Let’s quickly go through Requirement 10, which directly addresses logging. We will go through it line by line and then go into details, examples, and implementation guidance.

The requirement itself is called “Track and monitor all access to network resources and cardholder data” and is organized under the “Regularly monitor and test networks” heading. The theme thus deals with both periodic (test) and ongoing (monitor) aspects of maintaining your security; we are focusing on logging and monitoring and have addressed periodic testing in Chapter 8, “Vulnerability Management.” More specifically, it requires a network operator to track and monitor all access to network resources and cardholder data. Thus, both network resources that handle the data and the data itself are subject to those protections.

Further, the requirement states that logging is critical, primarily when “something does go wrong” and one needs to “determine the cause of a compromise” or other problem. Indeed, logs are of immense importance for incident response. However, the value of logs for routine user tracking and system analysis should not be underestimated. Next, the requirement is organized in several sections on process, events that need to be logged, suggested level of details, time synchronization, audit log security, required log review, and log retention policy.

Specifically, Requirement 10.1 covers “establish[ing] a process for linking all access to system components (especially access done with administrative privileges such as root) to each individual user.” This is a very interesting requirement indeed; it doesn’t just mandate that logs be there or that a logging process be set, but instead it says that logs must be tied to individual persons (not computers or “devices” where they are produced). It is this requirement that often creates problems for PCI implementers because many think of logs as “records of people’s actions,” while in reality they will only have the “records of computer actions.” Mapping the latter to actual flesh-and-blood users often presents an additional challenge. By the way, PCI DSS Requirement 8.1 mandates that an organization “assigns all users a unique ID before allowing them to access system components or cardholder data,” which helps to make the logs more useful here.

Note

Question: Do I have to manually read every single log record daily to satisfy PCI Requirement 10?

Answer: No, automated log analysis and review is acceptable and, in fact, recommended in PCI DSS.

Next, Section 10.2 defines a minimum list of system events to be logged (or, to allow “the events to be reconstructed”). Such requirements are motivated by the need to audit and monitor user actions as well as other events that can affect credit-card data (such as system failures).

The following events must be logged under PCI DSS:

• 10.2.1: All individual user accesses to cardholder data,

• 10.2.2: All actions taken by any individual with root or administrative privileges,

• 10.2.3: Access to all audit trails,

• 10.2.4: Invalid logical access attempts,

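• 10.2.5: Use of identification and authentication mechanisms,

• 10.2.6: Initialization of the audit logs,

• 10.2.7: Creation and deletion of system-level objects.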

These requirements cover data access, privileged user actions, log access and initialization, failed and invalid access attempts, authentication and authorization decisions, and system object changes. These lists have their roots in IT governance “best practices,” which prescribe monitoring access, authentication, authorization, change management, system availability, and suspicious activity. Thus, other regulations, such as the Sarbanes–Oxley Act, and IT governance frameworks such as COBIT contain or imply similar lists of events that need to be logged.

Note

We hope that in the future, such a list of system events will be determined by the overall log standards that go beyond PCI. There are ongoing log standard projects such as Common Event Expression (CEE) from MITRE [1] that have a chance to produce such a universally accepted (or at least, industry-accepted) list of events in the next 2–3 years.

Table 10.2 gives practical examples for the list above.

Table 10.2 Logging Requirements and How to Address Them

| Requirement Number | Requirement | Example Type of a Log Message |

| 10.2.1 | All individual user accesses to cardholder data | Successful logins to processing server (Unix, Windows) |

| 10.2.2 | All actions taken by any individual with root or administrative privileges | Sudo root actions on a processing server |

| 10.2.3 | Access to all audit trails | Execution of Windows event viewer |

| 10.2.4 | Invalid logical access attempts | Failed logins (Unix, Windows) |

| 10.2.5 | Use of identification and authentication mechanisms | All successful, failed, and invalid login attempts |

| 10.2.6 | Initialization of the audit logs | Windows audit log cleared alert |

| 10.2.7 | Creation and deletion of system-level objects | Unix user added, Windows security policy updated, database created |
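To make the mapping in Table 10.2 concrete, here is a rough Python sketch that tags Unix syslog messages with the 10.2.x item they might help satisfy. The rule set is an assumption for illustration only: the patterns follow common OpenSSH, sudo, and useradd message wording, the host name pay01 and the sample lines are invented, and any real deployment would need rules tuned to its own platforms and to its assessor’s interpretation.

```python
import re

# Hypothetical pattern-to-requirement rules; the first matching rule wins.
RULES = [
    (re.compile(r"sshd\[\d+\]: Failed password"), "10.2.4"),
    (re.compile(r"sshd\[\d+\]: Accepted \w+ for"), "10.2.5"),
    (re.compile(r"sudo: .*USER=root"), "10.2.2"),
    (re.compile(r"useradd\[\d+\]: new user"), "10.2.7"),
]


def classify(message):
    """Return the 10.2.x item a message maps to, or None if no rule matches."""
    for pattern, requirement in RULES:
        if pattern.search(message):
            return requirement
    return None


# Invented sample messages, used only to exercise the rules above.
SAMPLES = [
    "Nov 12 09:01:02 pay01 sshd[2211]: Failed password for invalid user admin "
    "from 10.19.10.77 port 50122 ssh2",
    "Nov 12 09:03:10 pay01 sshd[2215]: Accepted password for alice "
    "from 10.19.10.12 port 50140 ssh2",
    "Nov 12 09:05:44 pay01 sudo:    alice : TTY=pts/0 ; PWD=/home/alice ; "
    "USER=root ; COMMAND=/usr/bin/vi /etc/hosts",
    "Nov 12 09:07:00 pay01 useradd[2301]: new user: name=bob, UID=1002, "
    "GID=1002, home=/home/bob, shell=/bin/bash",
    "Nov 12 09:08:00 pay01 cron[99]: job started",
]

results = [classify(message) for message in SAMPLES]
print(results)
```

Messages that match no rule come back as None, which is itself useful: a large unclassified share suggests that the rule set, not the environment, needs attention.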

Moreover, PCI DSS Requirement 10 goes into an even deeper level of detail and covers specific data fields or values that need to be logged for each event. They provide a healthy minimum requirement, which is commonly exceeded by logging mechanisms in various IT platforms.

Such fields are as follows:

• 10.3.1: User identification,

• 10.3.2: Type of event,

• 10.3.3: Date and time,

• 10.3.4: Success or failure indication,

• 10.3.5: Origination of event,

• 10.3.6: Identity or name of affected data, system component, or resource.

As shown, this minimum list contains all the basic attributes needed for incident analysis and for answering the questions: when, who, where, what, and where from. For example, if you are trying to discover who modified a credit-card database to copy all the transactions with all the details into a hidden file (a typical insider privilege abuse), you would need to know all of the above. Table 10.3 summarizes the above fields in this case.

Table 10.3 PCI Event Details

| PCI Requirement | Purpose |

| 10.3.1: User identification | Which user account is associated with the event being logged? This might not necessarily mean “which person,” only which username |

| 10.3.2: Type of event | Was it a system configuration change? File addition? Database configuration change? Explains what exactly happened |

| 10.3.3: Date and time | When did it happen? This information helps in tying the event to an actual person |

| 10.3.4: Success or failure indication | Did he or she try to do something else that failed before his or her success in changing the configuration? |

| 10.3.5: Origination of event | Where did he or she connect from? Was it a local access or network access? This also helps in tying the log event to a person. Note that this can also refer to the process or application that originated the event |

| 10.3.6: Identity or name of affected data, system component, or resource | What is the name of the database, system object, and so forth which was affected? Which server did it happen on? This provides important additional information about the event |
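One way to put Table 10.3 to work is to check normalized log records for the six details before they are stored. The sketch below assumes records have already been parsed into Python dictionaries; the field names (user, event_type, and so on) are hypothetical and would need to match whatever your log management tool actually emits.

```python
# Hypothetical field names mapped to the PCI DSS items from Table 10.3.
REQUIRED_FIELDS = {
    "user": "10.3.1",
    "event_type": "10.3.2",
    "timestamp": "10.3.3",
    "outcome": "10.3.4",
    "origin": "10.3.5",
    "resource": "10.3.6",
}


def missing_details(record):
    """Return the 10.3.x items that a record leaves absent or empty."""
    return sorted(
        requirement
        for name, requirement in REQUIRED_FIELDS.items()
        if not record.get(name)
    )


# Invented example records.
complete = {
    "user": "alice",
    "event_type": "db_config_change",
    "timestamp": "2007-05-01T19:17:37Z",
    "outcome": "success",
    "origin": "10.0.12.10",
    "resource": "cards_db",
}
partial = {"user": "alice", "event_type": "db_config_change", "origin": ""}

print(missing_details(complete))
print(missing_details(partial))
```

A check like this says nothing about whether the values are accurate; it only flags log sources whose records cannot answer the when, who, where, what, and where-from questions at all.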

Requirement 10.4 addresses a commonly overlooked but critical requirement: a need to have accurate and consistent time in all of the logs. It seems fairly straightforward that time and security event monitoring would go hand in hand as well.

System time is frequently found to be arbitrary in a home or small office network. It’s whatever time your server was set at or, if you designed your network for some level of reliability, your systems are configured to obtain time synchronization from a reliable source, such as NTP servers.

A need to “synchronize all critical system clocks and times” can make or break your incident response or lead to countless hours spent figuring out the actual times of events by correlating multiple sources of information together. In some cases, uncertainty about the log timestamps might even lead a court case to be dismissed because uncertainty about timestamps might lead to uncertainty in other claims as well. For example, from “so you are saying you are not sure when exactly it happened?” an expert attorney might jump to “so maybe you are not even sure what happened?” Fortunately, this requirement is relatively straightforward to address by configuring an NTP environment and then configuring all servers to synchronize time with it. The primary NTP servers can synchronize time with time.nist.gov or other official time sources, also called “Stratum 1” sources. For the most part, you can use any number of official time sources, including going down to Stratum 2 or 3 type devices. The goal is to have one time for your organization that everyone can synchronize with for services. Otherwise, you will end up with outages in directory systems and some authentication systems, and event discovery or analytics will become a nightmare.
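Configuring NTP is the fix, but it also helps to confirm that it is working. As a minimal sketch, assume you can collect the current time reported by each in-scope system alongside a trusted reference time; the host names and the one-second tolerance below are assumptions for illustration, not values taken from PCI DSS.

```python
from datetime import datetime, timedelta, timezone


def drifted_hosts(reference, reported, tolerance=timedelta(seconds=1)):
    """Return the hosts whose reported time differs from the reference
    by more than the tolerance, in alphabetical order."""
    return sorted(
        host
        for host, host_time in reported.items()
        if abs(host_time - reference) > tolerance
    )


# Invented readings for three hypothetical hosts.
reference = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
reported = {
    "db01": reference + timedelta(milliseconds=200),
    "web01": reference - timedelta(seconds=5),
    "app01": reference + timedelta(seconds=2),
}

out_of_sync = drifted_hosts(reference, reported)
print(out_of_sync)
```

Any host flagged here would produce log timestamps that cannot be lined up with the rest of the environment, which is exactly the situation the requirement is meant to prevent.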

Security of the logs themselves is of paramount importance for reasons similar to the above concerns about the log time synchronization. Requirement 10.5 states that one needs to “secure audit trails so they cannot be altered” and then clarifies various risks that need to be addressed.

Because it is a key issue, we will look at the security of monitoring data and logs in the next section.

Monitoring Data and Log for Security Issues

Although PCI is more about being compliant than about being hacked (well, not directly!), logs certainly help to answer the question of whether you have been hacked. However, if logs themselves are compromised by the attackers and the log server is broken into, they lose all value for either security or compliance purposes. Thus, assuring log confidentiality, integrity, and availability (CIA) is a requirement for PCI as well as a best practice for other log uses.

First, one needs to address all the CIA of logs. Section 10.5.1 of PCI DSS covers the confidentiality: “Limit viewing of audit trails to those with a job-related need.” This means that only those who need to see the logs to accomplish their jobs should be able to. What is so sensitive about logs? One of the obvious answers is that authentication-related logs will always contain usernames. Although not truly secret, username information provides 50% of the information needed for password guessing (password being the other 50%). Why give possible attackers (whether internal or external) this information? Moreover, because of users mistyping their credentials, it is not uncommon for passwords themselves to show up in logs. Poorly written Web applications might result in a password being logged together with the Web Uniform Resource Locator (URL) in Web server logs. Similarly, a Unix server log might contain a user password if the user accidentally presses “Enter” one extra time while logging in.

Next comes “integrity.” As per Section 10.5.2 of PCI DSS, one needs to “protect audit trail files from unauthorized modifications.” This one is blatantly obvious: if logs can be modified by unauthorized parties (or by anybody, in fact), they stop being an objective audit trail of system and user activities.

However, one needs to protect the logs not only from malicious users but also from system failures and consequences of system configuration errors. This touches upon both the “availability” and “integrity” of log data. Specifically, Section 10.5.3 of PCI DSS states that one needs to “promptly back-up audit trail files to a centralized log server or media that is difficult to alter.” Indeed, centralizing logs to a server or a set of servers that can be used for log analysis is essential for both log protection and increasing log usefulness. Backing up logs to DVDs (or tapes, for that matter) is another action you might have to perform as a result of this requirement. You should always keep in mind that logs on tape are not easily accessible and not searchable in case of an incident.

Many pieces of network infrastructure such as routers and switches are designed to log to an external server and only preserve a minimum (or none) of logs on the device itself. Thus, for those systems, centralizing logs is most critical.

To further decrease the risk of log alteration as well as to enable proof that such alteration didn’t take place, Requirement 10.5.5 calls on organizations to “use file integrity monitoring and change detection software on logs to ensure that existing log data cannot be changed without generating alerts.” At the same time, adding new log data to a log file should not generate an alert because log files tend to grow and not shrink on their own (unless logs are rotated or archived to external storage). File integrity monitoring systems use cryptographic hashing algorithms to compare files to a known good copy. The issue with logs is that log files tend to grow due to new record addition, thus undermining the utility of integrity checking. In practice, this means that conventional integrity monitoring can only assure the integrity of logs that are not being actively written to by the logging components. However, there are solutions that can verify the integrity of growing logs.
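One simple idea for checking a growing log, sketched below in Python, is to remember the file’s size and a SHA-256 hash at a point in time, and later re-hash only that many leading bytes: appended records leave the hash unchanged, while edits or truncation of existing data do not. This is an illustration of the general approach under stated assumptions, not a description of how any particular file integrity monitoring product works; the function names are hypothetical, and log rotation is deliberately not handled.

```python
import hashlib
import os
import tempfile


def snapshot(path):
    """Return (size, SHA-256 hex digest) of the file as it is right now."""
    with open(path, "rb") as handle:
        data = handle.read()
    return len(data), hashlib.sha256(data).hexdigest()


def prefix_unchanged(path, size, digest):
    """True if the first `size` bytes still hash to `digest`.

    Appends pass the check; edits to old data or truncation fail it.
    """
    with open(path, "rb") as handle:
        prefix = handle.read(size)
    return len(prefix) == size and hashlib.sha256(prefix).hexdigest() == digest


# Demonstration on a throwaway file.
with tempfile.TemporaryDirectory() as tmp:
    log_path = os.path.join(tmp, "app.log")
    with open(log_path, "wb") as handle:
        handle.write(b"line 1\nline 2\n")
    size, digest = snapshot(log_path)

    with open(log_path, "ab") as handle:      # normal growth
        handle.write(b"line 3\n")
    after_append = prefix_unchanged(log_path, size, digest)

    with open(log_path, "r+b") as handle:     # tampering with old data
        handle.write(b"LINE")
    after_tamper = prefix_unchanged(log_path, size, digest)

print(after_append, after_tamper)
```

The snapshot values themselves must be stored somewhere the log writer cannot reach, such as the centralized log server, or an attacker could simply recompute them after altering the file.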

The next requirement is one of the most important as well as one of the most overlooked. Many PCI implementers simply forget that PCI Requirement 10 does not just call for “having logs” but for “having the logs and looking at them.” Specifically, Section 10.6 states that the PCI organization must, as per PCI DSS, “review logs for all system components at least daily. Log reviews must include those servers that perform security functions.”

Thus, the requirement covers the scope of log sources that need to be “reviewed daily” and not just configured to log and have logs preserved or centralized. Given that a Fortune 1000 IT environment might produce gigabytes of logs per day, it is humanly impossible to read all of the logs. That is why a note is added to this PCI DSS requirement that states “Log harvesting, parsing, and alerting tools may be used to achieve compliance with Requirement 10.6.” Indeed, log management tools are the only practical way to satisfy this requirement.

The final Requirement 10.7 deals with another hugely important logging question – log retention. It says to “retain audit trail history for at least one year, with a minimum of three months online availability.” Unlike countless other requirements, this deals with the complicated log retention question directly. Thus, if you are not able to go back one year and look at the logs, you are in violation.
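The retention rule reduces to two date comparisons. The sketch below assumes that “three months” can be approximated as 90 days and “one year” as 365 days, and that you already know the date of the oldest log available online and the oldest log retained anywhere; the function name is hypothetical.

```python
from datetime import date

ONLINE_DAYS = 90   # assumption: "three months" approximated as 90 days
TOTAL_DAYS = 365   # assumption: "one year" approximated as 365 days


def retention_problems(oldest_online, oldest_any, today):
    """Return a list of retention shortfalls (empty if none were found)."""
    problems = []
    if (today - oldest_online).days < ONLINE_DAYS:
        problems.append("less than three months of logs online")
    if (today - oldest_any).days < TOTAL_DAYS:
        problems.append("less than one year of logs retained")
    return problems
```

A system that has been in service for less than a year will naturally be flagged by this check and needs to be handled as a documented exception.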

So, let us summarize what we have learned so far on logging in PCI:

• PCI Requirement 10 calls for logging specific events with a predefined level of details from all in-scope systems.

• PCI calls for tying the actual users to all logged actions.

• All clocks and time on the in-scope systems should be synchronized.

• The CIA of all collected logs should be protected.

• Logs should be regularly reviewed; specific logs should be reviewed at least daily.

• All in-scope logs should be retained for at least one year.

Now, we are ready to dig deeper to discover that logs and monitoring “live” not only within Requirement 10 but in all other PCI requirements.

Logging and Monitoring in PCI—All Other Requirements

Although many people think that logs in PCI are represented only by Requirement 10, the reality is more complicated: logs are in fact present, undercover, in all other sections. We will now reveal where they hide. Table 10.4 highlights some of the places where logging requirements are implied or mentioned. The overall theme here is that logging and log management assists with validation and verification of many other requirements.

Table 10.4 Logging and Monitoring across PCI DSS Requirements

Now, let’s dive deeper into the role of logs to further explain that logs are not only about Requirement 10. Just about every claim that is made to satisfy the requirements, such as data encryption or antivirus updates, can make effective use of log files to actually substantiate it.

For example, Requirement 1, “Install and maintain a firewall configuration to protect cardholder data,” mentions that organizations must have “a formal process for approving and testing all external network connections and changes to the firewall configuration.” However, after such a process is established, you need to validate that firewall configuration changes do happen with authorization and in accordance with documented change management procedures. That is where logging becomes extremely handy, because it shows you what actually happened and not just what was supposed to happen.

Specifically, seeing a message such as this Cisco ASA appliance record should indicate that someone is likely trying to modify the appliance configuration.

Other log-related areas within Requirement 1 include Section 1.1.6.

“Justification and documentation for any available protocols besides Hypertext Transfer Protocol (HTTP), SSL, Secure Shell (SSH), and virtual private network (VPN) where logs should be used to watch for all events triggered due to such communication.”

Section 1.1.7: Justification and documentation for any risky protocols allowed (e.g. File Transfer Protocol [FTP]), which includes the reason for use of protocol and security features implemented, where logs help to catalog the use of “risky” protocols and then monitor such use.

The entire Requirement 1.3 contains guidance to firewall configuration, with specific statements about inbound and outbound connectivity. One must use firewall logs to verify this; even a review of configuration would not be sufficient, because only logs show “how it really happened” and not just “how it was configured.”

Similarly, Requirement 2 talks about password management “best practices” as well as general security hardening, such as not running unneeded services. Logs can show when such previously disabled services are being started, either by misinformed system administrators or by attackers.

For example, if Apache Web server is disabled on an e-mail server system, a message such as the following should trigger an alert because the service should not be starting or restarting.

[Sun Jul 18 04:02:09 2004] [notice] Apache/1.3.19 (Unix) (Red-Hat/Linux) mod_ssl/2.8.1 OpenSSL/0.9.6 DAV/1.0.2 PHP/4.0.4pl1 mod_perl/1.24_01 configured— resuming normal operations
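A check of this kind can be sketched as a pattern match against a per-host list of services that should never start. The host name `mail01`, the inventory, and the pattern below are illustrative assumptions, not part of any standard tool.

```python
import re

# Hypothetical inventory: services that must not run on each host.
DISABLED_SERVICES = {"mail01": {"apache"}}

# Patterns that suggest a service has started; illustrative, not exhaustive.
START_PATTERNS = {
    "apache": re.compile(r"Apache/\S+ .*resuming normal operations"),
}


def unexpected_starts(host, lines):
    """Yield (service, line) for start messages from services disabled on host."""
    for line in lines:
        for service in DISABLED_SERVICES.get(host, ()):
            pattern = START_PATTERNS.get(service)
            if pattern and pattern.search(line):
                yield service, line
```

Each yielded pair would then be passed to whatever alerting mechanism is in use.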

Further, Requirement 3, which deals with data encryption, has unambiguous links to logging. Specifically, key generation, distribution, and revocation are logged by most encryption systems, and such logs are critical for satisfying this requirement. Requirement 4, which also deals with encryption, has logging implications for similar reasons.

Requirement 5 refers to antivirus defenses. Of course, to satisfy Section 5.2, which requires that you “Ensure that all antivirus mechanisms are current, actively running, and capable of generating audit logs,” one needs to see such mentioned logs.

For example, Symantec AntiVirus might produce the following log record that occurs when the antivirus software experiences problems and cannot continue scanning, thus putting you in violation of PCI DSS rules.

So, even the requirement to “use and regularly update antivirus software” will likely generate requests for log data during the assessment, because the information is present in antivirus assessment logs. It is also well-known that failed antivirus updates, also reflected in logs, expose the company to malware risks because antivirus without the latest signature updates only creates a false sense of security and undermines the compliance effort.

Requirement 6 is in the same league: it calls for the organizations to “Develop and maintain secure systems and applications,” which is unthinkable without a strong assessment logging function and application security monitoring.

Requirement 7, which states that one needs to “Restrict access to cardholder data by business need-to-know,” requires logs to validate who actually had access to said data. If the users who should be prevented from seeing the data appear in the log files as accessing the data successfully, remediation is needed.

Assigning a unique ID to each user accessing the system fits with other security good practices. In PCI, it is not just good practice; it is a requirement (Requirement 8 “Assign a unique ID to each person with computer access”).

Obviously, one needs to “Control addition, deletion, and modification of user IDs, credentials, and other identifier objects” (Section 8.5.1 of PCI DSS). Most systems log such activities.

For example, the message below indicates a new user being added to a PIX/ASA firewall.

In addition, Section 8.5.9, “Change user passwords at least every 90 days,” can also be verified by reviewing the log files from the server to assure that all the accounts have had their passwords changed at least every 90 days.
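Once the date of the most recent password change for each account has been extracted from the logs, the 90-day check is simple date arithmetic. The sketch below assumes that extraction has already been done and produced a mapping of user name to date; the function name is hypothetical.

```python
from datetime import date

MAX_PASSWORD_AGE_DAYS = 90  # from Section 8.5.9 as quoted above


def stale_passwords(last_changed, today):
    """Return sorted user names whose last logged password change is too old."""
    return sorted(
        user
        for user, changed in last_changed.items()
        if (today - changed).days > MAX_PASSWORD_AGE_DAYS
    )
```

Accounts with no password change event in the retained logs at all would need to be found separately, by comparing against the full account list.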

Requirement 9 presents a new realm of security—physical access control. Even Section 9.4 that covers maintaining a visitor log (likely in the form of a physical log book) is connected to log management if such a visitor log is electronic. There are separate data retention requirements for such logs: “Use a visitor log to maintain a physical assessment trail of visitor activity. Retain this log for a minimum of three months, unless otherwise restricted by law.”

Requirement 11 addresses the need to scan the in-scope systems for vulnerabilities. However, it also calls for the use of IDS or IPS in Section 11.4: “Use network intrusion detection systems, host-based intrusion detection systems, and intrusion prevention systems to monitor all network traffic and alert personnel to suspected compromises. Keep all intrusion detection and prevention engines up-to-date.” Intrusion detection is only useful if monitored.

Requirement 12 covers the issues on a higher level: security policy as well as security standards and daily operational procedures (e.g. a procedure for daily log review mandated by Requirement 10 should be reflected here). However, it also has logging implications because assessment logging should be a part of every security policy. In addition, incident response requirements are also tied to logging: “Establish, document, and distribute security incident response and escalation procedures to ensure timely and effective handling of all situations” is unthinkable to satisfy without effective collection and timely review of log data.

Thus, event logging and security monitoring in a PCI DSS program go well beyond Requirement 10.

PCI DSS Logging Policies and Procedures

At this stage, we have gone through all of the PCI guidelines and uncovered where logging and monitoring are referenced. We now have a mammoth task ahead: how to address all those requirements?

In light of the above discussion, a PCI-derived logging policy must at least contain the following:

• Adequate logging, that covers both logged event types and details;

Let’s start by focusing in depth on log review, the most complex of the requirements. PCI testing and validation procedures for log review mandate that a QSA should “obtain and examine security policies and procedures to verify that they include procedures to review security logs at least daily and that follow-up to exceptions is required.” The QSA must also, “Through observation and interviews, verify that regular log reviews are performed for all system components.”

Thus the organization should at least address the following:

The procedures can be implemented using automated log management tools as well as manually when tools are not available.

The overall connection between the three types of PCI-mandated procedures is as follows (see Figure 10.1).

Figure 10.1 PCI Log Flow

In other words, “Periodic Log Review Practices” are performed every day (or less frequently, if daily review is impossible) and any discovered exceptions are escalated to “Exception Investigation and Analysis.”

The basic principle of PCI DSS periodic log review (further referred to as “daily log review” even if it might not be performed daily for all the applications) is to accomplish the following:

Assure that cardholder data has not been compromised by the attackers;

Detect possible risks to cardholder data, as early as possible;

Satisfy the explicit PCI DSS requirement for log review;

Even given the fact that PCI DSS is the motivation for daily log review, other goals are accomplished by performing daily log review:

Assure that systems that process cardholder data are operating securely and efficiently;

Reconcile all possible anomalies observed in logs with other systems activities (such as application code changes or patch deployments).

In light of the above goals, the daily log review is built around the concept of “baselining,” or learning and documenting the normal set of messages appearing in logs. Baselining is then followed by the process of finding “exceptions” from the normal routine and investigating them to assure that no breach of cardholder data has occurred or is imminent.

The process can be visualized as follows (see Figure 10.2).

Figure 10.2 Finding Exceptions

Before PCI daily log review is put into practice, it is critical to become familiar with normal activities logged on each of the applications.

Explicit event types might not always be available for some log types. For example, some Java application logs and some Unix logs don’t have explicit log or event types recorded in logs. What is needed is to create an implicit event type. The procedure for this case is as follows:

2. Identify which part of the log message identifies what it is about,

Even though log management tools perform the process automatically, it makes sense to go through an example of doing it manually in case manual log review procedure is utilized.

For example:

The log message is:

[Mon Jan 26 22:55:37 2011] [notice] Digest: generating secret for digest authentication.

It is very likely that the key part of the message is “generating secret for digest authentication” or even “generating secret.”

A review of other messages in the log indicates that no other messages contain the same phrase and thus this phrase can be used to classify a message as a particular type.

We can create a message ID or message type as “generating_secret.” Now we can update our baseline that this type of message was observed today.
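The manual steps in this example can be approximated in a few lines of code. The sketch below assumes an Apache-style message with bracketed leading fields and an optional “Component:” prefix; the function name and the choice of two words for the type are illustrative only, and real logs will usually need source-specific rules.

```python
import re


def implicit_event_type(message, words=2):
    """Derive a coarse event type from the free-text part of a log message."""
    # Drop leading bracketed fields such as "[timestamp] [notice]".
    text = re.sub(r"^(\[[^\]]*\]\s*)+", "", message)
    # Drop a leading "Component:" prefix if present.
    text = re.sub(r"^\w+:\s*", "", text)
    # Keep only alphabetic words so counters and addresses do not leak in.
    tokens = re.findall(r"[A-Za-z]+", text.lower())
    return "_".join(tokens[:words])


msg = ("[Mon Jan 26 22:55:37 2011] [notice] Digest: "
       "generating secret for digest authentication.")
print(implicit_event_type(msg))  # generating_secret
```

Before relying on a derived type, confirm, as in the manual procedure, that no unrelated message in the same log collapses to the same identifier.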

Building an Initial Baseline Manually

A baseline has to be built without a log management tool when the logs are not compatible with an available tool or the available tool has a poor understanding of the log data. To do it, perform the following:

1. Make sure that relevant logs from a PCI application are saved in one location,

2. Select a time period for an initial baseline: “90 days” or “all time” if logs have been collected for less than 90 days; check the timestamp on the earliest logs to determine that,

3. Review log entries starting from the oldest to the newest, attempting to identify their types,

4. Manually create a summary of all observed types; if realistic, count the number of times each message was seen (not likely in case of high log data volume),

5. Assuming that no breaches of card data have been discovered in that time period, we can accept the above report as a baseline for “routine operation”

6. An additional step should be performed while creating a baseline: even though we assume that no compromise of card data has taken place, there is a chance that some of the log messages recorded over the 90 day period triggered some kind of action or remediation. Such messages are referred to as “known bad” and should be marked as such.

The logs could be very large text and .csv files. If you are doing your analysis by hand, Notepad++ and CSVed are two tools that can help. They also have good search and counting features.
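When the volume is too high for hand counting, the summary in steps 2 through 6 can be scripted. The sketch below assumes the messages have already been classified into (timestamp, event type) pairs and that the “known bad” types were identified by a human reviewer; the function name and the data layout are hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta


def build_baseline(events, end, days=90, known_bad=()):
    """Summarize event types seen in the baseline window.

    events: iterable of (timestamp, event_type) pairs, already classified.
    Returns {event_type: {"count": n, "known_bad": bool}}.
    """
    start = end - timedelta(days=days)
    counts = Counter(etype for ts, etype in events if start <= ts <= end)
    return {
        etype: {"count": n, "known_bad": etype in known_bad}
        for etype, n in counts.items()
    }
```

The result can be saved as the “routine operation” report and updated as new message types are accepted into the baseline.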

Guidance for Identifying “Known Bad” Messages

The following are some rough guidelines for marking some messages as “known bad” during the process of creating the baseline. If generated, these messages will be looked at first during the daily review process.

1. Login and other “access granted” log messages occurring at unusual hours,

2. Credential and access modifications log messages occurring outside of a change window,

3. Any log messages produced by expired user accounts,

4. Reboot/restart messages outside of maintenance window (if defined),

5. Backup/export of data outside of backup windows (if defined),

7. Logging termination on system or application,

8. Any change to logging configuration on the system or application,

9. Any log message that has triggered any action in the past: system configuration, investigation, etc.

10. Other logs clearly associated with security policy violations.

As we can see, this list is also very useful for creating a “what to monitor in near-real-time?” policy and not just for logging. Over time, this list should be expanded based on the knowledge of local application logs and past investigations.

After we have built the initial baselines, we can start the daily log review.
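A few of these guidelines translate directly into rules. The sketch below covers only unusual-hour logins, access changes outside a change window, and logging termination or reconfiguration. The business hours, the change window, and the category names are assumptions for illustration, and windows that cross midnight are not handled.

```python
from datetime import datetime, time

BUSINESS_HOURS = (time(7, 0), time(19, 0))       # assumption
CHANGE_WINDOW = (time(22, 0), time(23, 59, 59))  # assumption


def in_window(ts, window):
    """True if the time of day of ts falls inside the (start, end) window."""
    start, end = window
    return start <= ts.time() <= end


def is_known_bad(ts, category):
    """Apply a few of the guidelines above to an already-categorized event."""
    if category == "login" and not in_window(ts, BUSINESS_HOURS):
        return True
    if category == "access_change" and not in_window(ts, CHANGE_WINDOW):
        return True
    if category in {"logging_stopped", "logging_config_change"}:
        return True
    return False
```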

Main Workflow: Daily Log Review

This is the very central piece of the log review—comparing the logs produced over the last day (in case of a daily review) with an accumulated baseline.

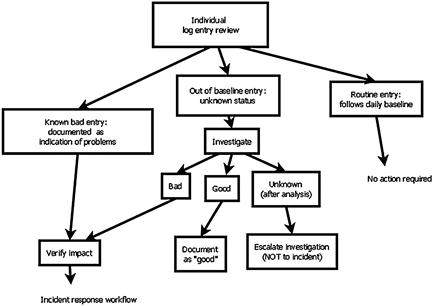

Daily workflow follows this model (see Figure 10.3).

Figure 10.3 PCI DSS Daily Workflows

This diagram summarizes the actions of the log analyst who performs the daily log review.
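The core comparison in the daily review can be sketched as sorting today's event types into three groups against the baseline. The baseline layout assumed here (a mapping of event type to a dictionary with a `known_bad` flag) and the function name are hypothetical.

```python
def daily_review(todays_types, baseline):
    """Split today's event types into known-bad, new, and routine groups.

    baseline: {event_type: {"known_bad": bool, ...}}
    """
    result = {"known_bad": [], "new": [], "routine": []}
    for etype in sorted(set(todays_types)):
        entry = baseline.get(etype)
        if entry is None:
            result["new"].append(etype)        # never seen before
        elif entry.get("known_bad"):
            result["known_bad"].append(etype)  # look at these first
        else:
            result["routine"].append(etype)
    return result
```

Everything in the “known_bad” and “new” groups is a candidate exception for the analyst to investigate.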

Exception Investigation and Analysis

A message not fitting the profile of normal operation is flagged as “an exception.” It is important to note that an exception is not the same as a security incident, but it might be an early indication that one is taking place.

At this stage we have an individual log message that is outside of routine/normal operation. How do we figure out whether it is significant and determine its impact on security and PCI compliance status?

The following high-level investigative process (“Initial Investigation”) is used on each “exception” entry (more details are added further in the document) (see Figure 10.4).

Figure 10.4 High Level Investigative Process

Specifically, the above process makes use of a log investigative checklist, which is explained below in more detail.

1. Look at log entries at the same time: this technique involves looking at an increasing range of time periods around the log message that is being investigated. Most log management products can allow you to review logs or to search for all logs within a specific time frame. For example:

a. First, look at other log messages triggered 1 min before and 1 min after the “suspicious” log

b. Second, look at other log messages triggered 10 min before and 10 min after the “suspicious” log

c. Third, look at other log messages triggered 1 h before and 1 h after the “suspicious” log

2. Look at other entries from same user: this technique includes looking for other log entries produced by the activities of the same user. It often happens that a particular logged event of a user activity can only be interpreted in the context of other activities of the same user. Most log management products can allow you to “drill down into” or search for a specific user within a specific time frame.

3. Look at the same type of entry on other systems: this method covers looking for other log messages of the same type, but on different systems in order to determine its impact. Learning when the same message was produced on other systems may hold clues to understanding the impact of this log message.

4. Look at entries from same source (if applicable): this method involves reviewing all other log messages from the network source address (where relevant).

5. Look at entries from same app module (if applicable): this method involves reviewing all other log messages from the same application module or components. While other messages in the same time frame (see item 1. above) may be significant, reviewing all recent logs from the same components typically helps to reveal what is going on.
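The first two checklist items can be sketched as simple filters over parsed log events. The event layout (dictionaries with `ts` and `user` keys) and the function names are assumptions; a log management product would normally provide the equivalent searches.

```python
from datetime import datetime, timedelta

# Widening windows from checklist item 1: 1 min, 10 min, 1 h.
WINDOWS = (timedelta(minutes=1), timedelta(minutes=10), timedelta(hours=1))


def context_by_window(events, suspicious_ts):
    """Return, per window, the events within +/- that window of suspicious_ts.

    events: list of dicts with at least "ts" (datetime) and "user" keys.
    The suspicious event itself is included if it is in the list.
    """
    return {
        w: [e for e in events if abs(e["ts"] - suspicious_ts) <= w]
        for w in WINDOWS
    }


def same_user(events, user):
    """Return all events produced by the given user (checklist item 2)."""
    return [e for e in events if e.get("user") == user]
```

The same filtering pattern extends to items 3 through 5 by matching on event type, source address, or application module instead of user.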

Validation of Log Review

The final and critical part of compliance-motivated log review is making sure that there is sufficient evidence of the process, its real-world implementation, and diligence in following the process. The good news here is that the same data can be used for management reporting about the logging and log review processes.

Let’s determine what documentation should be produced as proof of log review.

First, the common misconception is that having the logs actually provides that. That is not really true: “having logs” and “having logs reviewed” are completely different, and sometimes years of maturing the security and compliance program separate one from the other.

Just to remind you, we have several major pieces that we need to prove for PCI DSS compliance validation. Here is the master-list of all compliance proof we will assemble. Unlike other sections, here we will cover proof of logging and not just proof of log review since the latter is so dependent on the former:

PCI Compliance Evidence Package

Overall, it is useful to create a “PCI Compliance Evidence Package” to show to the QSA; it will establish our three keys of PCI DSS logging requirements:

While it is possible to prepare the evidence package before the assessment, it is much easier to maintain it on an ongoing basis and build scripts or other automated processes to refresh evidentiary data used during your assessment. For example, keep printed or electronic copies of the following:

1. Logging policy that covers all of the PCI DSS in-scope systems,

2. Logging and log review procedures (this document),

3. List of log sources—all systems and their components (applications) from the in-scope environment,

4. Sampling of configuration files that indicate that logging is configured according to the policy (e.g. /etc/syslog.conf for Unix, screenshots of audit policy for Windows, etc.),

5. Sampling of logs from in-scope systems that indicate that logs are being generated according to the policy and satisfy PCI DSS logging requirements,

6. Exported or printed report from a log management tool that shows that log reviews are taking place,

This will allow you to establish a compliant status and prove ongoing compliance.

Finally, let’s summarize all the operational tasks the organization should be executing in connection with log review.

Periodic Operational Task Summary

The following contains a summary of operational tasks related to logging and log review. Some of the tasks are described in detail in the document above; others are auxiliary tasks needed for successful implementation of PCI DSS log review program.

Daily Tasks

Table 10.5 contains the daily tasks, the responsible role that performs them, and the record or evidence created by their execution:

Table 10.5 PCI DSS Daily Tasks

| Task | Responsible Role | Evidence |
| --- | --- | --- |
| Review all the types of logs produced over the last day as described in the daily log review procedures | Security administrator, security analyst, (if authorized) application administrator | Record of reports being run on a log management tool |
| (As needed) investigate the anomalous log entries as described in the investigative procedures | Security administrator, security analyst, (if authorized) application administrator | Recorded logbook entries for investigated events |
| (As needed) take actions as needed to mitigate, remediate or reconcile the results of the investigations | Security administrator, security analyst, (if authorized) application administrator, other parties | Recorded logbook entries for investigated events and taken actions |
| Verify that logging is taking place across all in-scope applications | Application administrator | Create a spreadsheet to record such activities for future assessment |
| (As needed) enable logging if disabled or stopped | Application administrator | Create a spreadsheet to record such activities for future assessment |
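The daily check that logging is still taking place can be partly automated by comparing the time of the last message received from each in-scope source against a silence threshold. The 24-hour threshold, the data layout, and the function name below are assumptions for illustration.

```python
from datetime import datetime, timedelta


def silent_sources(last_seen, expected, now, max_silence=timedelta(hours=24)):
    """Return in-scope sources with no log message within max_silence.

    last_seen: {source_name: datetime of most recent message}
    expected:  iterable of all in-scope source names
    """
    silent = []
    for source in sorted(expected):
        seen = last_seen.get(source)
        if seen is None or now - seen > max_silence:
            silent.append(source)
    return silent
```

Sources that log rarely by design would need a longer threshold than busy ones, so a per-source setting may be more practical than a single value.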

Now we are ready to discuss the tools you might use for logging while meeting PCI DSS compliance.

Tools For Logging in PCI

First, if we are talking about a single server and a single piece of network gear such as a router there might be no need for automation and tools. One can easily configure logging and then look at the logs (measuring a few pages of text a day or more), as well as save a copy of said logs to make sure that one can go back a year and review the old logs if needed. However, this approach fails miserably when the number of systems grows from 1 to, say, 10. In reality, a large e-commerce site or a whole chain of stores might easily have thousands of in-scope systems, starting from mainframes with customer databases down to servers to complex network architectures (including classic LANs, WANs, wireless networks, and remote access systems with hundreds of remote users) to point of sale (POS) systems and all the way down to wireless card scanners. A handheld wireless card scanner is “a credit-card processing system” and thus is in-scope for PCI compliance. In fact, credit-card information has indeed been stolen this way.

Once it has been accepted that manual review of logs is not feasible or effective, the next attempt to satisfy the requirements usually comes in the form of scripts written by system administrators, to filter, review, and centralize the logs as well as makeshift remote logging collectors (usually limited to those log sources that support syslog, which is easy to forward to another system).

For example, one might configure all in-scope Unix servers to log to a single “log server” and then write a Perl script that will scan the logs for specific strings (such as “fail*,” “attack,” “denied,” “modify,” and so forth) and then send an e-mail to the administrator upon finding one of those strings. Another script might be used to summarize the log records into a simple “report” that highlights, for example, “Top Users” or “Top IP Addresses.” A few creative tools were written to implement various advanced methods of log analysis, such as rule-based or stateful correlation.
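The text describes a Perl script; the sketch below shows the same two ideas (string matching and a “Top Users” summary) in Python instead. The user name pattern is an assumption about the log format and will differ between sources, and the e-mail step is omitted.

```python
import re
from collections import Counter

# Strings from the example above; "fail\w*" stands in for "fail*".
ALERT_PATTERN = re.compile(r"fail\w*|attack|denied|modify", re.IGNORECASE)

# Assumption: user names appear as "user NAME" or "user=NAME".
USER_PATTERN = re.compile(r"\buser[ =](\w+)", re.IGNORECASE)


def scan(lines):
    """Return the lines that contain any of the alert strings."""
    return [line for line in lines if ALERT_PATTERN.search(line)]


def top_users(lines, n=5):
    """Count user names found in the lines and return the n most common."""
    counts = Counter(
        m.group(1) for line in lines for m in USER_PATTERN.finditer(line)
    )
    return counts.most_common(n)
```

Plain string matching of this kind produces both false alarms and misses, which is one reason such scripts tend to be outgrown as the number of log sources increases.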

Such “solutions” work well and do not require any initial investment. Other advantages of such “homegrown” approaches are as follows:

• You are likely to get exactly what you want because you design and build the tool for your environment.

• You can develop tools that have capabilities not offered by any commercial tool vendor.

• The choice of platform, development tools, analysis methods, and everything else is yours alone.

• You can later customize the solution to suit your future needs.

• There is no up-front cost for buying software and even hardware (if you are reusing some old unused servers, which is frequently the case for such log analysis projects); however, you may run into cost allocations around storage.

• Many system administrators say that “it is a fun thing to do.”

What makes it even easier is the availability of open source and freeware tools to address some of the pieces of log management for PCI. For example, Table 10.6 summarizes a few popular tools that can be (and in fact, have been) used in PCI log management projects.

Table 10.6 Logging Tools Useful for PCI DSS

On the other hand, many organizations turn to commercial vendors when looking for solutions to PCI logging and monitoring challenges. On the logging side, commercial log management solutions can aggregate all data from the in-scope entities, whether applications, servers, or network gear. Such solutions help satisfy the log data collection, monitoring, analysis, data protection, and data retention requirements.

Note

Why do we keep saying “retention” where some people would have used the term “storage”? “Retention” usually implies making sure that data is stored, but on a determined schedule and lifecycle.

Vendors also help with system configuration guidance to enable optimum logging (sometimes for a fee as “professional services”). Advantages of such an approach are obvious: on day 1, you get a supported solution as well as a degree of certainty that the vendor will maintain and improve the technology as well as have a roadmap for addressing other log-related organization needs beyond PCI compliance.

Fortunately, there are simple things you can do to avoid the pitfall of unmet requirements when acquiring a log management solution.

• Review PCI logging guidance such as this book (as well as the standard itself) to clarify the standard’s requirements.

• Consider how a log management solution would work in your environment.

• Define the need by talking to all the stakeholders in your PCI project and have the above information in mind.

Look through various commercial log management and SIEM tools such as LogLogic (www.loglogic.com), HP ArcSight (www.arcsight.com), Splunk (www.splunk.com), Q1Labs, now part of IBM (www.q1labs.com), Nitro (www.nitrosecurity.com), RSA’s enVision (www.rsa.com), or others to “case the joint” and to see what is out there. Here are some useful ideas on how to best talk to those vendors:

• Can your tool collect and aggregate 100% of all log data from all in-scope log sources on the network?

• Are your logs transported and stored securely to satisfy the CIA of log data?

• Are there packaged reports that suit the needs of your PCI project’s stakeholders such as IT, assessors, maybe even Finance or Human Resources? Can you create the additional needed reports to organize collected log data quickly?

• Can you set alerts on anything in the logs to satisfy the monitoring requirements?

• Top three capacity concerns,

• How do you size the environment?

• How does your investigation tool perform under 90, 180, and 360+ days’ worth of data?

Note

Alerts are only useful if there is a process and personnel in place to intake, analyze, and respond to alerts on a timely basis. In other words, if nobody is watching for e-mail alerts and prepared to respond, they are next to useless.

• Does the tool make it easy to look at log data on a daily basis?

• Can the tool help you prove that you are doing so by maintaining an assessment trail of log review activities? (Indeed, it is common for the assessors to ask for a log that shows that you review other logs and not for the original logs from information systems! Yes, log analyst activities need to be logged as well; if this is news to you, then welcome to the world of compliance!)

• Can you perform fast, targeted searches across all in-scope system components for specific data when asked? Remember, PCI is not about dumping logs on tape or disk, but about using them for cardholder data security.

• Can you readily prove, based on logs, that security (such as antivirus and intrusion prevention), change management (such as user account management), and access control policies mandated by the PCI requirements are in use and up-to-date?

When such tools are deployed, a few useful reports and alerts are typically created. On a more detailed level, here are some sample PCI-related reports and alerts for log review and monitoring.

Alerts used for real-time monitoring of in-scope servers are as follows:

• New privileges added to a user account,

• Antivirus protection failed to load,

• Log collection failed from an in-scope system,

• Additions to administrative groups,

• Group policy changes (if in an Active Directory environment).

Some of the recommended reports used for daily review of stored data are as follows:

• Traffic other than that allowed by PCI,

• User account changes on servers (e.g. additions, deletions, and modifications),

• Login activity on in-scope servers,

• User group membership changes,

• Password changes on in-scope servers and network devices,

Note

A good list of reports can be found in “SANS Top 7 log reports,” currently in draft form

http://chuvakin.blogspot.com/2010/08/updated-with-community-feedback-sans_06.html, and another great resource is Randy Franklin Smith’s web site at

http://www.ultimatewindowssecurity.com/securitylog/encyclopedia/Default.aspx.

Other Monitoring Tools

It is critical to remember that the scope of security monitoring in PCI DSS is not limited to logs because system logs alone do not cover all the monitoring needs. Additional monitoring requirements are covered by the following technologies (as well as process requirements that accompany them):

• Intrusion detection or intrusion prevention; mandated by PCI Requirement 11.4 “Use intrusion detection systems and/or intrusion-prevention systems to monitor all traffic in the cardholder data environment,”

• File integrity monitoring; mandated by PCI Requirement 11.5 “Deploy file integrity monitoring software to alert personnel to unauthorized modification of critical system files, configuration files, or content files.”

We will cover the critical issues related to these technologies in the section below.

Intrusion Detection and Prevention

NIDS and IPSs are becoming a standard information security safeguard. Together with firewalls and vulnerability scanners, intrusion detection is one of the pillars of modern computer security. When referring to IDSs and IPSs today, security professionals typically refer to NIDS and network IPS. As we covered in Chapter 4, “Building and Maintaining a Secure Network,” the former sniffs the network, looking for traces of attacks, whereas the latter sits “inline” and passes (or blocks) network traffic.

NIDS monitors the entire subnet for network attacks against machines connected to it, using a database of attack signatures or a set of algorithms to detect anomalies in network traffic. See Figure 10.5 for a typical NIDS deployment scenario.

Figure 10.5 Network Intrusion Detection Deployment

On the other hand, network IPS sits at a network choke point and protects such a network of systems from inbound attacks or outbound exfiltration. To simplify the difference, IDS alerts whereas IPS blocks. See Figure 10.6 for a typical network IPS deployment.

Figure 10.6 Network Intrusion Prevention Deployment

The core technology of picking “badness” from the network traffic with a subsequent alert (IDS) or blocking (IPS) is essentially similar. Even when intrusion prevention functionality is integrated with other functions to be deployed as so-called Unified Threat Management (UTM), the idea remains the same: network traffic passes through the device with malicious traffic stopped, suspicious traffic logged, and benign passed through.

Also important is the fact that most of today’s IDS and IPS rely upon the knowledge of attacks and thus require ongoing updates of signatures, rules, attack traces to look for, and so forth. This is exactly why PCI DSS mandates that IDS and IPS are not only deployed but also frequently updated and managed in accordance with manufacturer’s recommendations.

In PCI DSS, IDS and IPS technologies are mentioned in the context of monitoring. Even though IDS can only alert and log attacks while IPS adds blocking functionality, both can and must be used to notify the security personnel about malicious and suspicious activities on the cardholder data networks. IPS is not required, but can be used in place of an IDS. Below we present a few useful tips for deploying IDS and IPS for PCI DSS compliance and card data security.

Despite the domination of commercial vendors, the free open-source IDS/IPS Snort, now developed and maintained by its corporate parent, Sourcefire, is likely the most popular IDS/IPS by the number of deployments worldwide. Given its price (free) and reliable rule updates from Sourcefire (www.sourcefire.com), it makes a logical first choice for smaller organizations. It also shouldn’t be taken off the shortlist even for larger organizations seeking to implement intrusion detection or prevention.

Although a detailed review of IDS and IPS technologies and practices goes well beyond the scope of this book, we would like to present a few key practices for making your PCI-driven deployment successful.

First, four key facts about IDS and IPS, which are also highlighted in the PCI standard:

1. IDS or IPS technology must be deployed as per PCI DSS. If another device includes IDS or IPS functionality (such as UTM mentioned above), it will likely qualify as well.

2. IDS and IPS need to “see” the network traffic in the cardholder data environment; an IDS needs to be able to sniff it, and an IPS needs to pass it through. An IDS box sitting in the closet is not PCI compliance (and definitely not security!).

3. IDS and IPS must be actively monitored by actual people, devoted (full-time, part-time, or outsourced) to doing just that. PCI DSS states that systems must be set to “alert personnel to suspected compromises.”

4. IDS and IPS rely on updates from the vendor; such updates must be deployed, or the devices will lose most of their value. PCI DSS highlights this by stating to “Keep all intrusion detection and prevention engines up-to-date.”

The above four facts define how IDS and IPS are used to meet the PCI DSS requirement. Even with this knowledge, IDS technologies are not the easiest to deploy, especially in light of fact number 3 above. PCI DSS-driven IDS deployments suffer from a few common mistakes, covered below.

First, using an IDS or an IPS to protect the cardholder environment and to satisfy PCI DSS requirements is impossible without giving it the ability to see all the network traffic. In other words, deploying an NIDS without sufficient network environment planning is a big mistake that reduces, if not destroys, the value of such tools. Network IPS, for example, should be deployed at a network choke point, such as right inside the firewall leading to the cardholder network, on the appropriate internal network segment, or in the De-Militarized Zone (DMZ). On shared Ethernet-based networks, IDS will see all the network traffic within the Ethernet collision domain or subnet, as well as traffic destined to and from the subnet, but no more. On switched networks, there are several IDS deployment scenarios that use special switch capabilities such as port mirroring or spanning. When one or more IDS devices are deployed, it is your responsibility to confirm that they can “cover” the entire “in-scope” network.

Port mirroring and spanning should be avoided whenever possible. Switch vendors' documentation will tell you that these features do not scale for this type of monitoring and that you may not see all data when the network is busy.

Network taps are the preferred method of deployment for IDS. Even if you decide to put IPS in-line, you should consider using taps to get the appliances in line. Even though all commercial IPS systems can be configured to fail open or “turn into a wire,” if you do not use taps, you may have to schedule a network outage whenever you have to swap out an appliance for maintenance or upgrades. In some environments, this can lead to failing to meet SLAs.
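The responsibility to confirm that sensors “cover” the entire in-scope network can be partly checked with a small script. The following is a minimal sketch, not a complete audit: the function name `uncovered_subnets` and all address ranges are hypothetical, and it assumes you already keep one list of in-scope subnets and one list of ranges each sensor actually sees.

```python
import ipaddress


def uncovered_subnets(in_scope, monitored):
    """Return in-scope subnets that no single monitored range fully contains."""
    scope = [ipaddress.ip_network(s) for s in in_scope]
    seen = [ipaddress.ip_network(m) for m in monitored]
    gaps = []
    for net in scope:
        # subnet_of() raises TypeError when IPv4 and IPv6 are mixed,
        # so compare only networks of the same IP version.
        covered = any(net.version == m.version and net.subnet_of(m) for m in seen)
        if not covered:
            gaps.append(str(net))
    return gaps


# Hypothetical inventory: cardholder segments and what the sensors can see.
IN_SCOPE = ["10.10.1.0/24", "10.10.2.0/24", "192.168.50.0/28"]
MONITORED = ["10.10.0.0/22"]

print(uncovered_subnets(IN_SCOPE, MONITORED))  # ['192.168.50.0/28']
```

Note the limitation of this sketch: a subnet that is covered only by the combination of several smaller monitored ranges would be reported as a gap. It also says nothing about whether a sensor drops packets under load, which is the concern raised about port mirroring above.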

Second, even if an IDS is deployed appropriately, but nobody is looking at the alerts it generates, the deployment will end in failure and will not lead to PCI compliance. It’s well known that IDS is a “detection” technology, and it never promised to be a “shoot-and-forget” means of thwarting attacks. Although in some cases, the organization might get away with dropping the firewall in place and configuring the policy, such a deployment scenario never works for intrusion detection. If IDS alerts are reviewed only after a successful compromise, the system turns into an overpriced incident response helper tool, clearly not what the technology designers had in mind. Even with IPS, a lot of suspicious indicators are not reliable enough to be blocked automatically, thus monitoring is just as critical as with IDS.

PCI DSS Requirement 12.5.2 does state that an organization needs to “Monitor and analyze security alerts and information, and distribute to appropriate personnel.” Still, many organizations make a third mistake: they deploy IDS but develop no response policy. As a result, their network IPS is deployed, it “sees” all the traffic, and there is somebody reviewing the alert stream. But what is the response for each potential alert? Panic, maybe? Does the person viewing the alerts know the best course of action for each event? Which alerts are typically “false positives” (alerts triggered on benign activity) and which are “false alarms” (alerts triggered on attacks that cannot harm the target systems) in the protected environment? Unless these questions are answered, it is likely that no intelligent action is being taken based on IDS alerts, a big mistake by itself, even without PCI DSS.
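One way to make a response policy concrete is to write it down as a lookup table that the person watching the alert stream can follow. The sketch below is illustrative only: the alert categories, severities, and actions are hypothetical placeholders, not wording from PCI DSS or from any IDS product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    severity: str
    action: str


# Hypothetical playbook; real categories and actions must come from your own policy.
PLAYBOOK = {
    "false_positive": Response("info", "log only; tune the rule at the next review"),
    "false_alarm": Response("low", "record; confirm the target is not vulnerable"),
    "attack_on_vulnerable_target": Response(
        "high", "notify on-call analyst; start incident response"
    ),
}

# Anything not yet classified goes to a human for triage rather than being ignored.
DEFAULT = Response("medium", "escalate to a security analyst for triage")


def respond(category):
    """Look up the documented response for an alert category."""
    return PLAYBOOK.get(category, DEFAULT)


print(respond("false_alarm").action)
print(respond("never_seen_before").action)
```

The design choice worth noting is the default entry: an alert type nobody has classified still gets a defined action, so the answer to “what do we do now?” is never “nothing.”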

The fourth and final mistake is simply not accepting the inherent limitations of network intrusion protection technology. Although anomaly-based IDSs might detect an unknown attack, most signature-based IDSs will miss a new exploit if there is no rule written for it. IDS and IPS must frequently receive vendor signature updates, as mandated by the PCI DSS. Even if updates are applied on a schedule, exploits that are unknown to the IDS vendor will probably not be caught by the signature-based system. Attackers may also try to blind or evade the NIDS by using many tools available for download. There is a constant battle between the technology developers and those who want to escape detection. IPS/IDS are becoming more sophisticated and able to see through the old evasion methods, but attackers constantly use new approaches, such as trusted server to trusted server or island hopping. Those deploying the NIDS technology should be aware of its limitations and practice “defense-in-depth” by deploying multiple and diverse security solutions.
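The limitation of signature-based detection is easy to see in a toy model. The sketch below is deliberately simplistic and is not how Snort or any real NIDS engine works: the signature identifiers and byte patterns are invented for illustration, and matching is a plain substring search.

```python
# Hypothetical signature set: identifier -> byte pattern to look for.
SIGNATURES = {
    "sig-0001": b"/etc/passwd",
    "sig-0002": b"' OR '1'='1",
}


def match_signatures(payload, signatures=SIGNATURES):
    """Return the sorted identifiers of all signatures found in the payload."""
    return sorted(sid for sid, pattern in signatures.items() if pattern in payload)


known = b"GET /../../etc/passwd HTTP/1.1"
unknown = b"GET /brand-new-exploit HTTP/1.1"

print(match_signatures(known))    # ['sig-0001']
print(match_signatures(unknown))  # []
```

The second request produces no alert simply because nobody has written a rule for it yet, which is why both timely signature updates and additional, different controls are needed.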

Thus, IDS/IPS is a key monitoring technology for PCI DSS and data protection; however, when deploying it, many pitfalls need to be considered if it is to be useful for PCI compliance and security.

Integrity Monitoring

In the prehistoric days of the security industry, before all the current compliance frenzy and way before PCI DSS, the idea of monitoring key system files for changes was invented. As early system administrators engaged in an unequal battle with hackers who compromised almost every server connected to the Internet, a quick and easy way of verifying that system files were not modified, and thus not subverted by hackers, seemed like a godsend.

Indeed, just run a quick “scan” of a filesystem, compute cryptographic checksums, save them in a safe place (a floppy comes to mind—we are talking 1990s here, after all), and then have a “known good” record of the system files. In case of problems, run another checksum computation and compare the results; if differences are found, then something or someone has subverted the files.

That is exactly the idea of integrity checking and monitoring. Tools such as the pioneering Tripwire (now developed by its corporate parent, Tripwire, Inc.) have matured significantly and offer near real-time checks, an ability to revert to the previous version of the changed files, as well as a detailed analysis of observed changes across a wide range of platforms for servers, desktops, and even network devices.
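The baseline-and-compare idea described above can be sketched in a few lines. This is a minimal illustration, not a replacement for a real integrity monitoring product: the function names are our own, SHA-256 is our choice of checksum, and the sketch assumes the saved baseline itself is stored somewhere an attacker cannot modify.

```python
import hashlib
import os


def sha256_of(path, chunk_size=65536):
    """Compute the SHA-256 checksum of one file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_baseline(root):
    """Walk a directory tree and map each regular file path to its checksum."""
    baseline = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                baseline[path] = sha256_of(path)
    return baseline


def compare(baseline, current):
    """Report files added, removed, or modified since the baseline was taken."""
    old, new = set(baseline), set(current)
    return {
        "added": sorted(new - old),
        "removed": sorted(old - new),
        "modified": sorted(p for p in old & new if baseline[p] != current[p]),
    }
```

A typical use would be to call `build_baseline()` once on a known-good system, store the result offline, and later pass a fresh `build_baseline()` result to `compare()`; any non-empty list in the report is a change that needs an explanation.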

Note

Question: What is the difference between host intrusion detection and integrity monitoring?

Answer: Many different types of applications are labeled as host-based intrusion detection. In general, the distinction is that with IDS, the end goal is the detection of malicious activity in a host environment, whereas an integrity monitoring system aims to provide visibility into all kinds of change. Detecting malicious change or activity is a big part of an integrity monitoring system but that is not the entire motivation behind its deployment.

As we mentioned, PCI DSS mandates the use of such tools via Requirement 11.5. Namely, “Deploy file integrity monitoring software to alert personnel to unauthorized modification of critical system files, configuration files, or content files; and configure the software to perform critical file comparisons at least weekly.”

This means that the tools must be deployed on in-scope systems, key files need to be monitored, and comparisons are to be run at least weekly. Indeed, knowing that the server has been compromised by attackers a week after the incident is a lot better than what some recent credit-card losses indicate. For example, the TJX card theft was discovered years after the actual intrusion took place, causing the company some massive embarrassment.

Some of the challenges with such tools include creating a list of key files to checksum. Typically, relying on the integrity checking tool vendor default (or even “PCI DSS-focused”) policy is a good idea. Here is an example list of such files for a typical Linux server:

• Configuration files in the /etc directory.

• All system executables (/bin, /usr/bin, /usr/sbin, and other possible binary directories), especially those setuid to root.

• Key payment application executable, configuration, and data files.

• Log files in /var/log (these require a special append-only mode in your integrity monitoring).
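Log files differ from the other entries in the list above because they are expected to grow, so a plain checksum comparison would flag every new log line. A rough sketch of what an append-only check could look like follows; the function names are hypothetical, and this is an assumption about the general technique rather than a description of how any particular product implements its append-only mode.

```python
import hashlib


def snapshot(data):
    """Record the size and SHA-256 checksum of the log contents seen so far."""
    return {"size": len(data), "sha256": hashlib.sha256(data).hexdigest()}


def appended_only(data, previous):
    """Return True if the log only grew and its earlier contents are unchanged."""
    if len(data) < previous["size"]:
        return False  # the file shrank: truncated or replaced
    prefix = data[: previous["size"]]
    return hashlib.sha256(prefix).hexdigest() == previous["sha256"]


def read_log(path):
    """Read a log file as bytes (fine for a sketch; large logs need chunking)."""
    with open(path, "rb") as handle:
        return handle.read()


earlier = snapshot(b"Jan 1 login ok\n")
print(appended_only(b"Jan 1 login ok\nJan 2 login ok\n", earlier))    # True
print(appended_only(b"Jan 1 login FAILED\nJan 2 login ok\n", earlier))  # False
```

Legitimate log rotation also replaces or truncates files, so a real deployment would need to account for the rotation schedule rather than alerting on every shrinking file.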

Also, just as with IDS and IPS, a response policy in case of breach of integrity is essential.

Common Mistakes and Pitfalls

By now the reader should be convinced that it is impossible to comply with PCI requirements without log data management processes and technologies in place. Complete log data is needed to prove that security, change management, access control, and other required processes and policies are in use, up-to-date, and being adhered to. In addition, when managed well, log data can protect companies when compliance-related legal issues arise (e.g., when processes and procedures are in question or when an e-discovery process is initiated as part of an ongoing investigation). Log data not only enables compliance but also allows companies to prove that they are implementing and continuously monitoring the processes outlined by the requirements.

• Logging in the PCI DSS is not confined to Requirement 10. As we discussed above, all the requirements imply having a solid log management policy, program, and tools.

• Logging in PCI is not only about log collection and retention; Requirement 10.6 directly states that you need to review, not just accumulate, logs.

• Log review in PCI does not mean that you have to read the logs yourself; using automated log management tools is not only allowed but suggested.

• A careful review of what is in-scope must be performed. Otherwise, one creates a potentially huge issue for the organization: having what is thought of as a solid PCI program, but then suffering a data breach and getting fined due to missing a wireless POS system or some other commonly overlooked but clearly in-scope system. (Well, at least it becomes clear after your organization is fined.)

• Your logging tools purchased and deployed for PCI compliance are almost certainly useful for other things, ranging from other compliance mandates (see the above example of PCI and Sarbanes–Oxley) to operational, security, investigative, incident response, and other uses.
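To illustrate the point in the list above that log review can be automated rather than done by reading every line, here is a small sketch. It assumes log lines containing the OpenSSH-style message “Failed password for ... from ...”; the threshold, the function name, and the sample lines are our own choices for illustration, and a real log management tool does far more than this.

```python
import re
from collections import Counter

# Assumed message format: "Failed password for [invalid user] NAME from ADDRESS ..."
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+)")


def flag_sources(lines, threshold=3):
    """Return source addresses with at least `threshold` failed logins."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return {source: n for source, n in counts.items() if n >= threshold}


# Hypothetical sample lines using documentation-only IP addresses.
SAMPLE = [
    "sshd[101]: Failed password for root from 203.0.113.7 port 4242 ssh2",
    "sshd[102]: Failed password for invalid user admin from 203.0.113.7 port 4243 ssh2",
    "sshd[103]: Accepted password for alice from 198.51.100.4 port 5000 ssh2",
    "sshd[104]: Failed password for root from 203.0.113.7 port 4244 ssh2",
    "sshd[105]: Failed password for bob from 198.51.100.9 port 5001 ssh2",
]

print(flag_sources(SAMPLE))  # {'203.0.113.7': 3}
```

A person still has to decide what to do about the flagged address, but the tool, not the person, does the reading.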

Case Study

Next, we present two case studies that illustrate what is covered in this chapter.

The Case of the Risky Risk-Based Approach

This case study covers deployment of a log management solution to satisfy PCI requirements at a large retail chain in the Midwest. Bert’s Bazaar, an off-price retailer servicing the Midwest and southern US states, decided to deploy a commercial log management solution when their PCI assessor strongly suggested that they needed to look into it. Given that Bert’s Bazaar has a unique combination of a large set of in-scope systems (some running esoteric operating systems and custom applications) and an extreme shortage of skilled IT personnel, they chose to buy a commercial solution without seriously considering in-house development. So, they progressed from not doing anything with their logs directly to running an advanced log management system.