Chapter 3: Atomicity, Consistency, Isolation, and Durability (ACID)

In the Chapter 2, How Does CockroachDB Work Internally, we learned about the different layers of CockroachDB. In this chapter, we will learn about what ACID is, its importance, and what the ACID guarantees that CockroachDB provides are.

ACID guarantees the following things:

- Atomicity: This is achieved through the notion of a transaction, in which all the statements within a transaction are executed as a single unit. So, either all of them succeed or fail together.

- Consistency: The database state should be consistent before and after a transaction is executed and should ensure that the database constraints are never violated.

- Isolation: Multiple transactions can get executed independently at the same time, without running into each other.

- Durability: Changes, once committed, always remain intact, irrespective of any system or network failures.

The following topics will be covered in this chapter:

- An overview of ACID properties

- ACID from CockroachDB's perspective

An overview of ACID properties

In this section, we will discuss each of the ACID properties and understand their importance in avoiding data loss and corruption. First, we will take a look at atomicity.

Atomicity

Atomicity refers to the integrity of a given transaction, which means if a transaction comprises multiple statements, atomicity ensures that either all of them succeed or none of them succeed. Atomicity is important to make sure that there is no data inconsistency because of a transaction getting partially executed.

Let's try to understand this with an example:

BEGIN TRANSACTION

Read Foo's Account

Debit $100 from foo's Account

Read Bar's Account

Credit $100 to bar's Account

COMMIT

Here, you have a transaction in which you are debiting the money from Foo's Account and crediting it to bar's Account. Here, it's important that these two activities happen as a single unit of work. Otherwise, it can result in data inconsistency. Consider a case in which you are able to debit the money from Foo's Account, but not able to credit it to bar's Account – you will lose track of the money that was deducted from Foo's Account. So, either all of them should succeed, or none of them should.

Next, we will learn about consistency.

Consistency

Consistency in ACID is an overloaded term and can mean several things, including the following:

- Ensuring that the transactions in the future see the effects of transactions that are already committed

- Ensuring database constraints are not violated once a given transaction is committed

- Ensuring that all the operations in a transaction are executed correctly

Basically, consistency is responsible for ensuring the database always moves from one valid state to another, which doesn't result in any data inconsistency or corruption.

In the context of a Consistency, Availability, and Partition Tolerance (CAP) theorem, consistency indicates that in a distributed system, all the reads receive the most recent write, or it will error out. As per the CAP theorem, you can only have two of consistency, partition tolerance, and availability. Since consistency will be important to most of the applications, you have to choose between availability and partition tolerance.

Next, we will go through various isolation levels and try to understand the implication of each on a transaction.

Isolation

Isolation deals with the guarantees a database provides when multiple clients are interacting with the same set of data.

Some of the popular isolation levels are as follows:

- Serializable: This is the highest isolation level which requires acquiring a lock on the data you are operating. Transactions in CockroachDB implement the highest isolation level which is serializable. This means that transactions will never result in inconsistent or corrupt data. In the case of CockroachDB, this is provided by using range-level locks called write intents.

- Snapshot: This is a non-lock-based concurrency control, so no locks are not used, however, if a conflict is detected between concurrent transactions, then only one of them is allowed to commit.

- Read uncommitted: This is the lowest isolation level which allows dirty reads, which means changes made by live transactions that are not yet committed.

- Repeatable read: Repeatable read guarantees that you only read a committed value and also already read data cannot be changed by some other transaction. However, it does allow a phantom read. A phantom read happens when, between two reads of the same data, some other parallel transaction adds new data and they show up in subsequent reads, once they are committed.

- Read committed: This isolation level guarantees that any read you do is already committed.

With an example, let's try to understand the difference between read committed, repeatable read, and serializable:

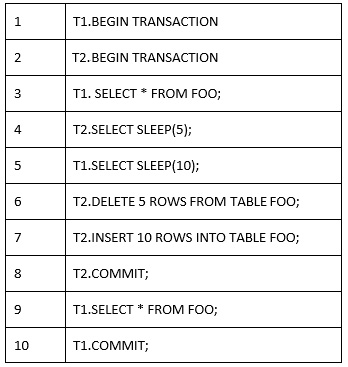

Figure 3.1 – Two transactions, T1 and T2, happening in parallel

In the previous example, there are two parallel transactions, T1 and T2.

For the discussion, let's assume that the following figure is the sequence of events:

Figure 3.2 – Ordering of operations for transactions T1 and T2

Here, serializable guarantees that the T1 transaction sees the exact same value in Steps 3 and 8, although there are new rows added and some rows got deleted by the T2 transaction.

With read committed, Step 9 will see new rows added by the T2 transaction and it will not see the rows deleted by the T2 transaction, since all the changes by T2 are already committed.

In the case of a repeatable read, Step 9 will only see the new data committed by T1.

Durability

Durability guarantees that any changes that are committed are permanent, irrespective of failures related to nodes, memory, storage, or the network. Databases achieve durability by flushing out the transactional log to non-volatile storage like solid-state drives and magnetic storage devices.

Many of the database management systems (DBMS) use the concept of a transaction log to ensure they can recover from system crashes. The transaction log is also called a binary log, database log, or audit trail. The transaction log is usually stored on an external storage device.

Let's say a database node crashes while executing a bunch of transactions. Now, whenever it comes back, it goes through the transaction log to determine which transactions were committed and which were uncommitted at the time of the crash. If a transaction is committed, all the changes made during that transaction are replayed. If a transaction was uncommitted, all the changes made by the transaction are rolled back.

Next, we will learn about how ACID properties are supported in CockroachDB.

ACID from CockroachDB's perspective

In this section, we will go over how each of the ACID properties is implemented in CockroachDB and what guarantees they provides. Like in the previous section, we will start with atomicity.

Atomicity

As we learned in the first section, atomicity ensures that all the statements in a given transaction are executed as a single unit – that is, either all of them succeed or all of them fail. This condition should be guaranteed irrespective of machine, network, and memory failures. This is essential to make sure multiple queries don't run into each other.

CockroachDB allows you to have ACID transactions that can span the entire cluster, touching multiple nodes and geographical locations. CockroachDB supports this using an atomic protocol called parallel commits.

In the previous chapter, we learned about transaction records and write intent. A transaction record keeps track of the current state of the transaction and is maintained in the range where the first write occurs. Whenever we are changing a value, they are not directly written to the storage layer. Instead, a value is written to an intermediate state known as write intent. Write intent acts on a multiversion concurrency control (MVCC) record, with a link to the transaction record. Write intent acts as a replicated lock, which houses a replicated provisional value.

Write intent has been shown in the following figure with a sample transaction with two writes:

Figure 3.3 – Transaction timeline without parallel commit

Any transaction that comes across a write intent should also go through corresponding transaction records and, based on its state, decide how to treat the write intent. A commit flips the transaction record state to committed. Once the transaction is committed, write intents are cleaned up asynchronously.

Now, let's take a look at how traditional atomic transactions worked in CockroachDB without parallel commits and later with parallel commits.

Atomic transactions without parallel commits

Prior to version 19.2, there was no parallel commit concept and transactions worked similar to a two-phase commit protocol. Let's explore this with an example.

Let's say there is a transaction with three writes, as shown in the following example:

BEGIN

write Tesla

write GameStop

write Amazon

COMMIT

This entire flow has been depicted in Figure 3.4:

Figure 3.4 – Transaction timeline without parallel commit

Consider the following:

- The transaction record is created in the first range where the write happens. In this case, that happens to be the Tesla value. So, along with the provisional Tesla value, a write intent is created with the transactional record in a PENDING state.

- As and when the rest of the writes are received, they create their own write intents. All these write intents are said to be pipelined because CockroachDB doesn't wait until they succeed before receiving the next statement within the same transaction from the SQL client.

- Once the COMMIT is issued in the transaction, CockroachDB waits for all the write intents to succeed with replication.

- Once all of them succeed, the transaction record state is changed to COMMITTED. Again, this is replicated for durability and the transaction is considered committed once the replication is completed.

- At this time, CockroachDB sends back an acknowledgment that the transaction is committed.

- Later, the rest of the write intents are resolved and are eventually cleaned up.

This mechanism is similar to a two-phase commit protocol, in which write intents can be compared to the prepare phase and marking the transaction record as committed is similar to the commit phase. The main problem here is that it is blocking, since CockroachDB waits for all the write intents to succeed before marking the transaction record as committed. If the coordinator node crashes, then it's impossible to recover from that.

CockroachDB overcomes this problem by implementing a two-phase commit on top of a consensus protocol, RAFT, which we discussed in the previous chapter. This ensures that the transaction records themselves are replicated and highly available, and can recover from a coordinator crash.

Having a two-phase commit protocol on top of RAFT introduces more latency. This is because CockroachDB first waits for all the write intents to succeed before changing the transaction status to committed. Later, it has to wait until changing the transaction status itself has succeeded, as that involves one more round of consensus.

Now, let's see how an atomic transaction with parallel commit avoids this added transactional latency.

Atomic transactions with parallel commits

Parallel commit was introduced to reduce the transaction latency observed in the previously discussed two-phase commit like protocol

In the previous protocol, the transaction record has to wait until all the write intents have succeeded to change the status to committed. In parallel commit, there is a new status called STAGING. The transaction record also includes the list of all the keys for which there are write operations in the current transaction. A transaction can be implicitly assumed to be committed if all the writes that are listed in the transaction record have succeeded and reached consensus.

Let's go over the same transaction with three writes:

BEGIN

write Tesla

write GameStop

write Amazon

COMMIT

This entire flow has been depicted in Figure 3.5:

Figure 3.5 – Transaction timeline with parallel commit

Here, the key difference is that the logic of a transaction being committed depends on the status of all the writes involved in that transaction. Also, if we know the status of all the writes, we don't have to wait until the transaction record status is explicitly updated to committed after reaching consensus. Because of this, the transaction's coordinator node can acknowledge that a given transaction has been committed successfully to the SQL client once the coordinator observes that all the writes in that transaction have succeeded. The other important change in this protocol is that the initiation of pipelining of the write to the transaction record with the STAGING status is done after a COMMIT for a given transaction is received from the SQL client. Pipelining the write to the transaction record is done in parallel with pipelining the write intents, in order to speed up the entire process.

Now, let's take a look at how a transaction status is recovered whenever a transaction coordinator crashes in the middle of a transaction.

Transaction status recovery

Now, let's see what happens if the coordinator crashes before it can update the transaction record to either COMMITTED or ABORTED. In this case, whenever there is a transaction with a conflicting write intent, it looks up that write intent's transaction record. Once it sees that the status is STAGING, it cannot decide whether that transaction was COMMITTED or ABORTED. So, now it starts the status recovery process.

During transaction status recovery, each write intent involved in that transaction is consulted to see if it succeeded. If all the write intents have succeeded, the transaction is assumed to be COMMITTED, and if not, to be ABORTED. After this, the appropriate status is updated so that any other conflicting transaction in the future doesn't have to go through the status recovery process again.

Status recovery can be very expensive, especially if it involves multiple writes with ranges that do not share leaseholders. If multiple leaseholders are involved in status recovery, there will be multiple roundtrips to several nodes, before we can recover the status. To avoid this, CockroachDB does two things:

- The transaction coordinator node marks the transaction record as COMMITTED or ABORTED as soon as it can.

- Transaction coordinators periodically send heartbeats to their transaction records. This helps the conflicting transactions to determine if a transaction is still alive or not.

In the next section, we will learn about how consistency is ensured within CockroachDB.

Consistency

As discussed earlier, consistency deals with two things:

- Ensuring no database rules are violated

- Making sure that transactions that are executed in parallel on the same set of data do not conflict with each other, which is necessary to avoid data consistency issues

For the first one, it boils down to making sure that the database doesn't have any bugs and does whatever it claims. Jepsen is an effort to improve the safety of distributed databases, queues, and consensus systems. During Jepsen testing, a given system is verified for whether it lives up to its documentation's claims. CockroachDB passed Jepsen testing in 2017.

In CAP theorem, which we discussed in the first chapter, consistency means every read sees the latest write or errors out. CockroachDB is a consistent and partition tolerant (CP) system, which means its highly consistent and, whenever there are partitions, the system becomes unavailable rather than ending up with inconsistent data.

Let's now learn about isolation and what kind of isolation CockroachDB provides.

Isolation

CockroachDB uses something called a serializable snapshot, which is an optimistic, multi-version, timestamp-ordered concurrency control system.

It's a distributed, lockless, recoverable, and serializable protocol. Distributed, as multiple nodes can be involved. Lockless, as operations are performed without locks and correctness is ensured by aborting transactions that violate serializability. Recoverable, since aborted transactions don't have any effect on the state of the database, which is ensured by the atomic commit protocol. Serializable, since CockroachDB guarantees a consistent database state by ensuring serial execution of composite transactions is correct.

Next, we will learn about durability in CockroachDB.

Durability

Durability guarantees that any changes that are committed are permanent. CockroachDB uses the RAFT consensus algorithm to ensure that all writes for a transaction record and write intents are durable. We have already discussed RAFT at length in Chapter 2, How Does CockroachDB Work Internally?

CockroachDB replicates each range three times by default and ensures that each replica is stored on different nodes. If a minority of the nodes fail, CockroachDB continues to operate and does not result in inconsistency or loss of data.

Let's take a look at how durability works in a three-node cluster:

Figure 3.6 – Transaction timeline with parallel commit

As you can see, node 1 is the lease holder for data1 and node 2 has the replica. Similarly, node 3 is the lease holder for data3, and node 1 has the replica. Next, node 2 is the lease holder for data2, and node 3 has the replica. Here, node 1 is also acting as a gateway node, where the initial request from the SQL client lands, and it also coordinates other nodes in the cluster to serve the request:

Figure 3.7 – Transaction timeline with parallel commit

Now, let's say node 2 is not available due to a system failure. Since node 2 was the lease holder of data2, now the coordinator is unable to get the data for data2 from node 2, since it's not available. Now, the RAFT group for data2 will hold an election and the lease holder will be reassigned. In this case, it has to be node 3, as that's the only other node that has the replica of data2:

Figure 3.8 – Transaction timeline with parallel commit

As you can see in Figure 3.8, node 3 acts as the lease holder for both data2 and data3 and the cluster is still fully functional, in spite of a node failure.

Now, if node 3 also goes down for some reason, since we don't have any lease holder for data2, if a request involves serving the data for data2, it cannot be completed, affecting the availability of data2 on a given CockroachDB cluster.

As you can see, CockroachDB can tolerate certain node failures, as long as there are lease holders for all the data. Otherwise, availability takes a hit. In either case, there won't be any data loss.

Users can also configure replication zones for databases, tables, rows, indexes, and system data. We will discuss these configurations in Chapter 5, Fault Tolerance and Auto-Rebalancing, where we will discuss fault tolerance and auto-recovery.

Summary

In this chapter, we learned about the four basic pillars of any database: Atomicity, Consistency, Isolation, and Durability. As a recap, atomicity ensures that a transaction is executed as a single unit of work. Consistency involves making sure that any database operation doesn't violate any of the database constraints. In the context of a CAP theorem, consistency refers to the fact you will never read stale or uncommitted data. CockroachDB provides both serializable and snapshot isolation levels. CockroachDB uses the RAFT protocol for transaction records and write intents to guarantee that all committed data is durable and permanent, irrespective of node failures.

In the next chapter, we will go over fault tolerance and auto-recovery, and what some of the configurations are in CockroachDB.