Chapter 18: Implementing Game AI for Building Enemies

What is a game if not a great challenge to the player, who needs to use their character's abilities to tackle different scenarios? Each game imposes different kinds of obstacles on the Player, and the main one in our game is the enemies. Creating challenging and believable enemies can be complex: they need to behave like real characters and be smart enough not to be easy to kill, but not so smart that they are impossible to kill. We are going to use basic but good-enough AI techniques to accomplish exactly that.

In this chapter, we will examine the following AI concepts:

- Gathering information with sensors

- Making decisions with FSMs

- Executing FSM actions

Gathering information with sensors

An AI works in three steps. First, it takes in information about its surroundings. Then, it analyzes that data to choose an action. Finally, it executes the chosen action. As you can see, we cannot do anything without information, so let's start with that part. Our AI can use several sources of information, such as data about itself (life and bullets) or some game state (winning condition or remaining enemies). Those can be easily found with the code we have seen so far, but another important source of information is the AI's senses. Depending on the needs of our game, we might need different senses, such as sight and hearing, but in our case, sight will be enough, so let's learn how to code it.

In this section, we will examine the following sensor concepts:

- Creating Three-Filters sensors

- Debugging with Gizmos

Let's start by seeing how to create a sensor with the Three-Filters approach.

Creating Three-Filters sensors

A common way to code senses is through a Three-Filters approach that discards enemies that are out of sight. The first filter is a distance filter, which discards enemies too far away to be seen. Then comes the angle check, which discards enemies outside our viewing cone. Finally, a raycast check discards enemies that are occluded by obstacles such as walls. Before starting, a word of advice: we will be using vector mathematics here, and covering those topics in depth is outside the scope of this book. If you don't understand something, feel free to just copy the code in the screenshots and look up those concepts online. Let's code sensors the following way:

- Create an empty GameObject called AI as a child of the Enemy Prefab. You need to first open the Prefab to modify its children (double-click the Prefab). Remember to set the transform of this Object to position (0,0,0), rotation (0,0,0), and scale (1,1,1) so it will be aligned to the Enemy. While we can certainly just put all AI scripts directly in the Enemy, we did this just for separation and organization:

Figure 18.1 – AI scripts container

- Create a script called Sight and add it to the AI child Object.

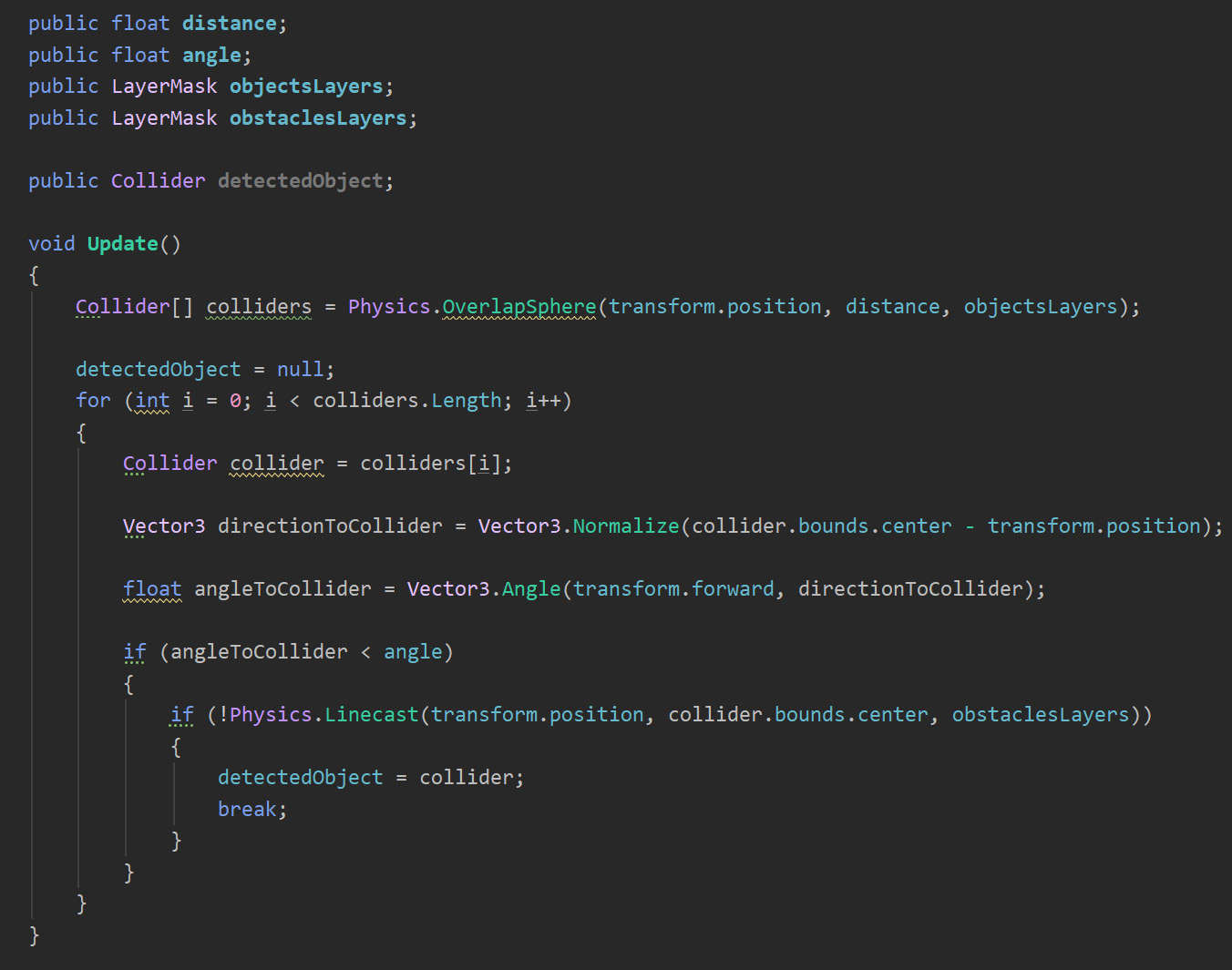

- Create two fields of the float type called distance and angle, and another two of the LayerMask type called obstaclesLayers and objectsLayers. distance will be used as the vision distance, and angle will determine the amplitude of the view cone. obstaclesLayers will be used by our obstacle check to determine which Objects are considered obstacles, and objectsLayers will be used to determine what types of Objects we want the sight to detect. We just want the sight to see enemies; we are not interested in Objects such as walls or power-ups. LayerMask is a property type that allows us to select one or more layers to use inside code, so we will be filtering Objects by layer. In a moment, you will see how we use it:

Figure 18.2 – Fields to parametrize our sight check

- In Update, call Physics.OverlapSphere as in the next screenshot. This function creates an imaginary sphere at the place specified by the first parameter (in our case, our position). The sphere has the radius specified in the second parameter (the distance property) and detects Objects with the layers specified in the third parameter (objectsLayers). It returns an array with all the Colliders found inside the sphere. These functions use Physics to do the check, so the Objects must have at least one collider. This is how we will get all enemies inside our view distance, and we will filter them further in the next steps.

Important Note

Another way of accomplishing the first check is to just check the distance to the Player. If you are looking for other kinds of Objects, you can instead check the distance to each Object in a Manager that keeps a list of them. The way we chose is more versatile, though, and can be used with any kind of Object.

Also, you might want to check the Physics.OverlapSphereNonAlloc version of this function. It does the same thing but performs better because, instead of allocating a new array for the results on every call, it fills an array you provide.

- Iterate over the array of Objects returned by the function:

Figure 18.3 – Getting all Objects at a certain distance

- To detect whether the Object falls inside the vision cone, we need to calculate the angle between our viewing direction and the direction to the Object itself. If the angle between those two directions is less than our cone angle, we consider that the Object falls inside our vision. We can start by calculating the direction toward the Object, which is obtained by normalizing the difference between the Object's position and ours, as in the following screenshot. You might notice we used bounds.center instead of transform.position; this way, we check the direction to the center of the Object instead of its pivot. Remember that the Player's pivot is on the ground, so a ray aimed at it might hit the ground before reaching the Player:

Figure 18.4 – Calculating direction from our position toward the collider

- We can use the Vector3.Angle function to calculate the angle between two directions. In our case, we calculate the angle between the direction toward the detected Object and our forward vector:

Figure 18.5 – Calculating the angle between two directions

IMPORTANT INFO

If you want, you can use Vector3.Dot instead, which calculates a dot product. Vector3.Angle actually uses the dot product internally, but converting its result into an angle requires trigonometry, which can be expensive to calculate. Anyway, our approach is simpler and fast enough as long as you don't have a large number of sensors (50+, depending on the target device), which won't happen in our case.

- Now check whether the calculated angle is less than the one specified in the angle field. Consider that if we set an angle of 90 degrees, the cone will actually span 180 degrees. Vector3.Angle returns an unsigned value, so a result of, for example, 30 can mean 30 degrees to the left or to the right. An angle of 90 therefore accepts Objects up to 90 degrees to either side, detecting Objects in a 180-degree arc.

- Use the Physics.Linecast function to create an imaginary line between the first and the second parameters (our position and the collider position). It detects Objects with the layers specified in the third parameter (the obstacles layers) and returns a boolean indicating whether that line hit something or not. The idea is to use the line to detect whether there are any obstacles between ourselves and the detected collider; if there is no obstacle, we have a direct line of sight toward the Object. Again, remember that this function depends on the obstacle Objects having colliders, which in our case they have (walls, floor, and so on):

Figure 18.6 – Using a Line Cast to check obstacles between the sensor and the target Object

- If the Object passes the three checks, it is the Object we are currently seeing. We can save it inside a field of the Collider type called detectedObject so the rest of the AI scripts can use that information later. Consider using break to stop the for loop that iterates over the colliders, so we don't waste resources checking the other Objects. Also, set detectedObject to null before the for loop to clear the result from the previous frame. That way, if we don't detect anything in this frame, it will keep the null value and we will know there is nothing in the sensor:

Figure 18.7 – Full sensor script

IMPORTANT INFO

In our case, we are using the sensor just to look for the Player, the only Object the sensor is in charge of looking for, but if you want to make the sensor more advanced, you can just keep a list of detected Objects, placing inside it every Object that passes the three tests instead of just the first one.

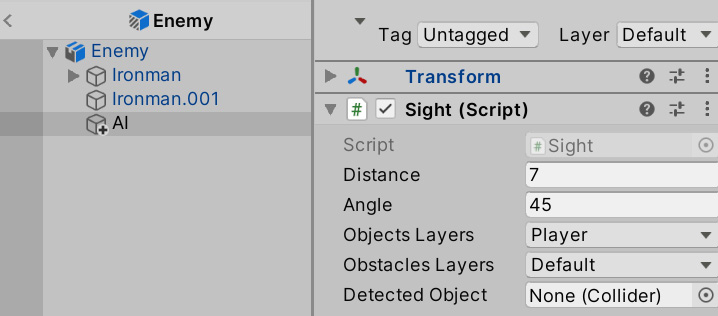

- In the Editor, configure the sensor as you wish. In this case, we will set objectsLayers to Player so our sensor will focus its search on Objects with that layer, and obstaclesLayers to Default, the layer we used for walls and floors:

Figure 18.8 – Sensor settings

- To test this, just place an Enemy with a movement speed of 0 in front of the Player, select its AI child Object, and then play the game to see how the detectedObject property is set in the Inspector. Also, try putting an obstacle between the two and check that the property says "None" (null). If you don't get the expected result, double-check your script and its configuration, and check whether the Player has the Player layer and the obstacles have the Default layer. Also, you might need to raise the AI Object a little bit to prevent the ray from starting below the ground and hitting it:

Figure 18.9 – The sensor capturing the Player
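The full script appears in Figure 18.7. As a text reference, here is a minimal sketch of a Sight component that follows the steps above, using the field names from the text. The static IsInsideCone helper is a hypothetical addition that wraps the Vector3.Angle check so it can be read and tested on its own. Its comment shows the cheaper Vector3.Dot form mentioned in the earlier note.

```csharp
using UnityEngine;

public class Sight : MonoBehaviour
{
    public float distance;            // How far the enemy can see
    public float angle;               // Half-angle of the view cone, in degrees
    public LayerMask obstaclesLayers; // Layers that block vision (walls, floors)
    public LayerMask objectsLayers;   // Layers we want to detect (the Player)

    public Collider detectedObject;   // Stays null when nothing is visible

    void Update()
    {
        // Clear the previous frame's result before searching again
        detectedObject = null;

        // Filter 1 (distance): colliders on objectsLayers inside the sphere
        Collider[] colliders = Physics.OverlapSphere(transform.position, distance, objectsLayers);

        for (int i = 0; i < colliders.Length; i++)
        {
            Collider candidate = colliders[i];

            // Aim at the bounds center instead of the pivot, which sits on the ground
            Vector3 targetCenter = candidate.bounds.center;
            Vector3 directionToTarget = Vector3.Normalize(targetCenter - transform.position);

            // Filter 2 (angle): skip targets outside the view cone
            if (!IsInsideCone(transform.forward, directionToTarget, angle))
                continue;

            // Filter 3 (occlusion): Linecast returns true if an obstacle is in between
            if (!Physics.Linecast(transform.position, targetCenter, obstaclesLayers))
            {
                detectedObject = candidate;
                break; // The first visible Object is enough for this game
            }
        }
    }

    // Hypothetical helper: true if direction is within halfAngle degrees of forward.
    // Vector3.Angle is unsigned (0 to 180), so the cone extends halfAngle to each side.
    // A cheaper equivalent for normalized vectors:
    //   Vector3.Dot(forward, direction) > Mathf.Cos(halfAngle * Mathf.Deg2Rad)
    public static bool IsInsideCone(Vector3 forward, Vector3 direction, float halfAngle)
    {
        return Vector3.Angle(forward, direction) < halfAngle;
    }
}
```

Physics.OverlapSphere allocates a new array on every call. If that shows up in the Profiler, OverlapSphereNonAlloc is the alternative that fills a preallocated buffer instead.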

Even though our sensor is working, checking whether it works or is configured properly sometimes requires visual aids, which we can create using Gizmos.

Debugging with Gizmos

As we create our AI, we will start to detect certain errors in edge cases, usually related to misconfigurations. You may think that the Player falls inside the sight of the Enemy without noticing that an Object occludes the line of sight, especially as the enemies move constantly. A good way to debug those scenarios is through Editor-only visual aids known as Gizmos. They allow you to visualize invisible data such as the sight distance or the line casts executed to detect obstacles.

Let's start by seeing how to create Gizmos, drawing a sphere that represents the sight distance, by doing the following:

- In the Sight script, create an event function called OnDrawGizmos. This event is only executed in the Editor (not in builds) and is the place Unity asks us to draw Gizmos.

- Use the Gizmos.DrawWireSphere function passing our position as the first parameter and the distance as the second parameter to draw a sphere in our position with the radius of our distance. You can check how the size of the Gizmo changes as you change the distance field:

Figure 18.10 – Sphere Gizmo

- Optionally, you can change the color of the Gizmo, setting Gizmos.color prior to calling the drawing functions:

Figure 18.11 – Gizmos drawing code

IMPORTANT INFO

Now you are drawing Gizmos constantly, and if you have lots of enemies, they can pollute the scene view with too many Gizmos. In that case, try the OnDrawGizmosSelected event function instead, which draws Gizmos only if the Object is selected.

- We can draw the lines representing the cone using Gizmos.DrawRay, which receives the origin of the line to draw and the direction of the line, which can be multiplied by a certain value to specify the length of the line, as in the following screenshot:

Figure 18.12 – Drawing rotated lines

- In the screenshot, we used Quaternion.Euler to generate a quaternion based on the angles we want to rotate. If you multiply this quaternion by a direction, you will get the rotated direction. We are taking our forward vector and rotating it according to the angle field to generate our cone vision lines. Also, we multiply this direction by the sight distance to draw the line as far as our sight can see; this way, you will see how the line ends at the edge of the sphere:

Figure 18.13 – Vision Angle lines
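The Gizmos steps above could look like the following sketch. It repeats only the two fields it needs so that it compiles on its own; in practice, the method goes inside your existing Sight script. ConeEdge is a hypothetical helper that isolates the Quaternion.Euler rotation. Replacing OnDrawGizmos with OnDrawGizmosSelected, as the note suggests, draws the same shapes only when the Object is selected.

```csharp
using UnityEngine;

public class Sight : MonoBehaviour
{
    public float distance;
    public float angle;

    // Editor-only event: Unity calls it so we can draw Gizmos in the Scene view
    void OnDrawGizmos()
    {
        Gizmos.color = Color.red;

        // Sphere matching the vision distance
        Gizmos.DrawWireSphere(transform.position, distance);

        // Cone edges: forward rotated by +angle (to the right) and -angle (to the left)
        // around the world Y axis, scaled by distance so each line ends on the sphere
        Gizmos.DrawRay(transform.position, ConeEdge(transform.forward, angle) * distance);
        Gizmos.DrawRay(transform.position, ConeEdge(transform.forward, -angle) * distance);
    }

    // Hypothetical helper: rotates a direction around the world Y axis by the given degrees
    public static Vector3 ConeEdge(Vector3 forward, float degrees)
    {
        return Quaternion.Euler(0, degrees, 0) * forward;
    }
}
```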

We can also draw the line casts that check for obstacles. However, those depend on the current situation of the game, such as which Objects pass the first two checks and their positions, so we can use Debug.DrawLine instead, which can be executed in the Update method. This version of DrawLine is meant to be called at runtime, whereas the Gizmos we saw are also drawn in the Editor outside Play mode. Let's try it the following way:

- First, let's debug the scenario where Linecast didn't detect any obstacles, so we need to draw a line between our sensor and the Object. We can call Debug.DrawLine inside the if statement that calls Linecast, as in the following screenshot:

Figure 18.14 – Drawing a line in Update

- In the next screenshot, you can see DrawLine in action:

Figure 18.15 – Line toward the detected Object

- We also want to draw a line in red when the sight is occluded by an Object. In this case, we need to know where the Linecast hit, so we can use an overload of the function that provides an out parameter. That parameter gives us more information about what the line collided with, such as the hit position, the surface normal, and the collided Object, as in the following screenshot:

Figure 18.16 – Getting information about Linecast

IMPORTANT INFO

Consider that Linecast doesn't always collide with the nearest obstacle but with the first Object it detects in the line, which can vary in order. If you need to detect the nearest obstacle, look for the Physics.Raycast version of the function.

- We can use that information to draw the line from our position to the hit point in the else clause of the if statement, which runs when the line collides with something:

Figure 18.17 – Drawing a line in case we have an obstacle

- In the next screenshot, you can see the results:

Figure 18.18 – Line when an obstacle occludes vision
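Here is a sketch of the occlusion check with both debug lines, using the Physics.Linecast overload that takes an out RaycastHit. It is factored into a hypothetical HasLineOfSight helper for readability, which is not necessarily how the screenshots structure it. Update would call it for each collider that passed the distance and angle filters.

```csharp
using UnityEngine;

public class Sight : MonoBehaviour
{
    public LayerMask obstaclesLayers;

    // Hypothetical helper for the third filter; returns true if nothing blocks the view
    public bool HasLineOfSight(Collider target)
    {
        Vector3 targetCenter = target.bounds.center;

        // This overload fills hit with details about the obstacle (point, normal, collider)
        if (!Physics.Linecast(transform.position, targetCenter, out RaycastHit hit, obstaclesLayers))
        {
            // Clear view: green line to the target, visible in the Scene view while playing
            Debug.DrawLine(transform.position, targetCenter, Color.green);
            return true;
        }

        // Blocked view: red line from us to the point where the obstacle was hit
        Debug.DrawLine(transform.position, hit.point, Color.red);
        return false;
    }
}
```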

Now that we have our sensors completed, let's use the information provided by them to make decisions with Finite State Machines (FSMs).

Making decisions with FSMs

We explored the concept of FSMs in the past when we used them in the Animator. We learned that an FSM is a collection of states, each one representing an action that an Object can be executing at a time, and a set of transitions that dictates how the states are switched. This concept is not only used in Animation but in a myriad of programming scenarios, and one of the common ones is in AI. We can just replace the animations with AI code in the states and we have an AI FSM.

In this section, we will examine the following AI FSM concepts:

- Creating the FSM

- Creating transitions

Let's start by creating our FSM skeleton.

Creating the FSM

To create our own FSM, we need to recap some basic concepts. Remember that an FSM can have a state for each possible action it can execute and that only one can be executed at a time. In terms of AI, we can be Patrolling, Attacking, Fleeing, and so on. Also, remember that transitions between states determine the conditions that must be met to change from one state to another. In terms of AI, this can be the Player being near the Enemy to start attacking, or life being low to start fleeing. In the next screenshot, you can find a simple reminder example of the two possible states of a door:

Figure 18.19 – FSM example

There are several ways to implement FSMs for AI; you can even use the Animator if you want to, or download an FSM system from the Asset Store. In our case, we are going to take the simplest approach possible: a single script with a set of if statements. This can be basic but is still a good start to understand the concept. Let's implement it by doing the following:

- Create a script called EnemyFSM in the AI child Object of the Enemy.

- Create an enum called EnemyState with the GoToBase, AttackBase, ChasePlayer, and AttackPlayer values. These are the states our AI will have.

- Create a field of the EnemyState type called currentState, which will hold, well, the current state of our Enemy:

Figure 18.20 – EnemyFSM states definition

- Create four functions named after the states we defined.

- Call those functions in Update depending on the current state:

Figure 18.21 – If-based FSM

IMPORTANT INFO

Yes, you can totally use a switch here, but I just prefer the regular if syntax.

- Test in the Editor how changing the currentState field changes which state is active, watching the message each state function prints in the console:

Figure 18.22 – States testing
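A minimal sketch of this if-based FSM skeleton follows. It assumes the enum is nested inside EnemyFSM, although the screenshot may declare it elsewhere. The print calls are placeholders until each state gets real behavior.

```csharp
using UnityEngine;

public class EnemyFSM : MonoBehaviour
{
    // The possible states of our AI
    public enum EnemyState { GoToBase, AttackBase, ChasePlayer, AttackPlayer }

    // Which state is running now; editable in the Inspector for testing
    public EnemyState currentState;

    void Update()
    {
        // Exactly one state function runs per frame
        if (currentState == EnemyState.GoToBase) { GoToBase(); }
        else if (currentState == EnemyState.AttackBase) { AttackBase(); }
        else if (currentState == EnemyState.ChasePlayer) { ChasePlayer(); }
        else if (currentState == EnemyState.AttackPlayer) { AttackPlayer(); }
    }

    // Placeholder bodies: print so the Console shows which state is active
    void GoToBase() { print("GoToBase"); }
    void AttackBase() { print("AttackBase"); }
    void ChasePlayer() { print("ChasePlayer"); }
    void AttackPlayer() { print("AttackPlayer"); }
}
```

GoToBase is the first enum value, so it is also the default value of currentState. Newly spawned enemies therefore start by heading to the base unless the Inspector says otherwise.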

As you can see, it is a pretty simple but totally functional approach, so let's continue with this FSM, creating its transitions.

Creating transitions

If you remember the transitions created in the Animator Controller, those were basically a collection of conditions that are checked while the state the transition belongs to is active. In our FSM approach, this simply translates to if statements that detect conditions inside the states. Let's create the transitions between our proposed states as follows:

- Add a field of the Sight type called sightSensor in our FSM script, and drag the AI GameObject to that field to connect it to the Sight component there. As the FSM component is in the same Object as Sight, we can also use GetComponent instead, but in advanced AIs, you might have different sensors that detect different Objects, so I prefer to prepare my script for that scenario, but pick the approach you like the most.

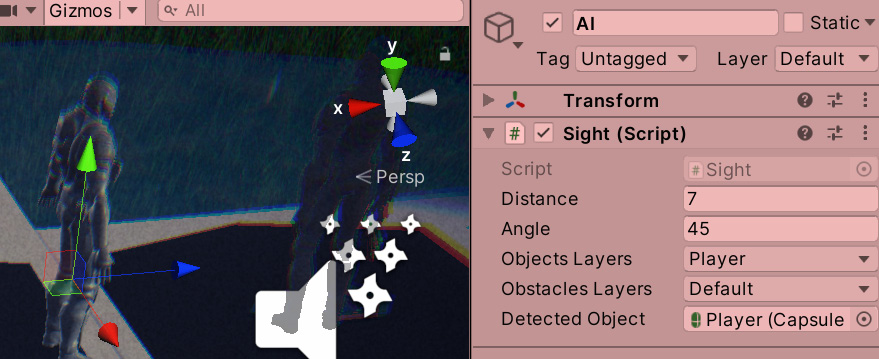

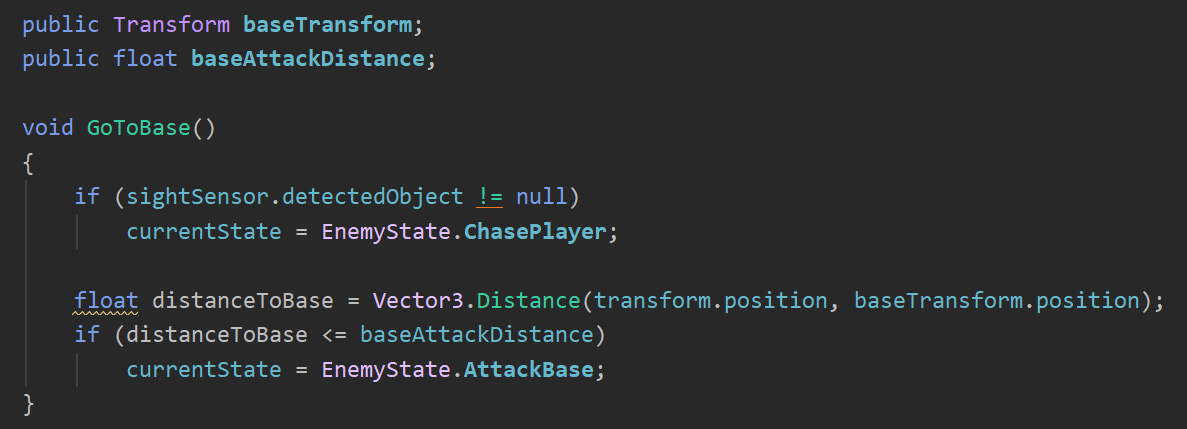

- In the GoToBase function, check whether the detected Object of the Sight component is not null, meaning that something is inside our line of vision. If our AI is going toward the base but detects an Object on the way, we must switch to the ChasePlayer state to pursue the Player, so we change the state, as in the following screenshot:

Figure 18.23 – Creating transitions

- Also, we must change to AttackBase if we are near enough to the Object that must be damaged to decrease the base life. We can create a field of the Transform type called baseTransform and drag the Base Life Object there so we can check the distance. Remember to add a float field called baseAttackDistance to make that distance configurable:

Figure 18.24 – Go to Base Transitions

- In the case of ChasePlayer, we need to check whether the Player is out of sight, to switch back to the GoToBase state, or whether we are near enough to the Player to start attacking it. We will need another distance field to determine the distance at which to attack the Player, since we might want different attack distances for those two targets. Consider an early return in the transition to prevent null reference exceptions when we try to access the position of the sensor-detected Object and there is none:

Figure 18.25 – Chase Player Transitions

- For AttackPlayer, we need to check whether the Player is out of sight, to get back to GoToBase, or whether it is far enough away to go back to chasing it. Notice how we multiplied playerAttackDistance by 1.1 to make the stop-attacking distance a little bit larger than the start-attacking distance. This will prevent switching back and forth rapidly between attack and chase when the Player is near that distance. You can make that multiplier configurable instead of hardcoding 1.1:

Figure 18.26 – Attack Player Transitions

- In our case, AttackBase won't have any transition. Once the Enemy is near enough to the base to attack it, it will stay like that, even if the Player starts shooting at it. Its only objective once there is to destroy the base.

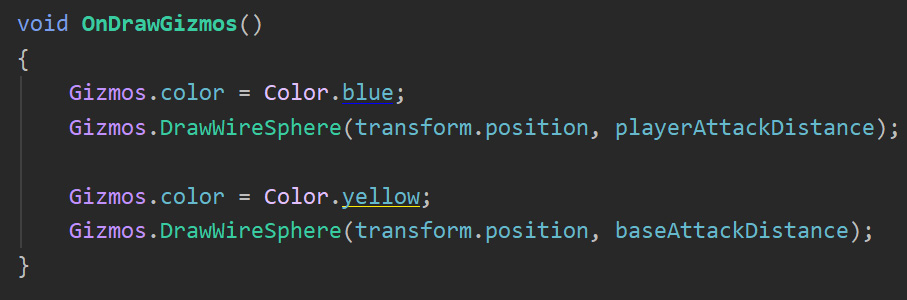

- Remember you can use Gizmos to draw the distances:

Figure 18.27 – FSM Gizmos

- Test the script by selecting the AI Object before hitting Play, and then move the Player around, checking how the states change in the Inspector. You can also keep the original print messages in each state to see them changing in the console. Remember to set the attack distances and the references to the Objects. In the screenshot, you can see the settings we use:

Figure 18.28 – Enemy FSM settings
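Putting those transitions together, EnemyFSM could look roughly like the following sketch. It assumes the Sight component built earlier in this chapter exposes a public Collider field called detectedObject, and it uses Vector3.Distance for the distance checks. The stopAttackMultiplier field and the IsBeyondStopDistance helper are hypothetical names for the configurable version of the hardcoded 1.1.

```csharp
using UnityEngine;

public class EnemyFSM : MonoBehaviour
{
    public enum EnemyState { GoToBase, AttackBase, ChasePlayer, AttackPlayer }

    public EnemyState currentState;
    public Sight sightSensor;
    public Transform baseTransform;
    public float baseAttackDistance;
    public float playerAttackDistance;
    public float stopAttackMultiplier = 1.1f; // Hysteresis to avoid attack/chase flicker

    void Update()
    {
        if (currentState == EnemyState.GoToBase) { GoToBase(); }
        else if (currentState == EnemyState.AttackBase) { AttackBase(); }
        else if (currentState == EnemyState.ChasePlayer) { ChasePlayer(); }
        else if (currentState == EnemyState.AttackPlayer) { AttackPlayer(); }
    }

    void GoToBase()
    {
        // Something entered our line of sight: pursue it
        if (sightSensor.detectedObject != null)
            currentState = EnemyState.ChasePlayer;

        // Close enough to the base: attack it (wins if both checks pass this frame)
        float distanceToBase = Vector3.Distance(transform.position, baseTransform.position);
        if (distanceToBase < baseAttackDistance)
            currentState = EnemyState.AttackBase;
    }

    void AttackBase()
    {
        // No transitions: once here, the Enemy only attacks the base
    }

    void ChasePlayer()
    {
        // Early return: without a detected Object there is no position to measure
        if (sightSensor.detectedObject == null)
        {
            currentState = EnemyState.GoToBase;
            return;
        }

        float distanceToPlayer = Vector3.Distance(transform.position, sightSensor.detectedObject.transform.position);
        if (distanceToPlayer < playerAttackDistance)
            currentState = EnemyState.AttackPlayer;
    }

    void AttackPlayer()
    {
        if (sightSensor.detectedObject == null)
        {
            currentState = EnemyState.GoToBase;
            return;
        }

        float distanceToPlayer = Vector3.Distance(transform.position, sightSensor.detectedObject.transform.position);
        if (IsBeyondStopDistance(distanceToPlayer, playerAttackDistance, stopAttackMultiplier))
            currentState = EnemyState.ChasePlayer;
    }

    // Hypothetical helper: stop attacking only when a bit beyond the start-attack distance
    public static bool IsBeyondStopDistance(float currentDistance, float attackDistance, float multiplier)
    {
        return currentDistance > attackDistance * multiplier;
    }
}
```

With playerAttackDistance set to 2 and a multiplier of 1.1, the Enemy starts attacking below 2 units but keeps attacking until the Player is more than 2.2 units away. That gap is what prevents the rapid state flipping.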

A little problem we have now is that the spawned enemies won't have the references needed to calculate the distance toward the Base Transform. If you try to apply the changes made to the scene's Enemy to the Prefab (Overrides | Apply All), you will notice that the Base Transform field says None. Remember that Prefabs cannot contain references to Objects in the scene, which complicates our work here. One alternative would be to create a BaseManager, a Singleton that holds the reference to the damage position, so our EnemyFSM can access it. Another could be to use functions such as GameObject.Find to find our Object.

In this case, we will try the latter. Even if it can be less performant than the Manager version, I want to show you how to use it to expand your Unity toolset. Just set the baseTransform field in Awake to the transform of the GameObject returned by GameObject.Find, passing "BaseDamagePoint" as its parameter, as in the following screenshot. This looks for an Object with that name. Also, feel free to make the baseTransform field private; now that it is set via code, it makes little sense to display it in the Editor other than to debug it. You will see that now our wave-spawned enemies will change states:

Figure 18.29 – Searching for an Object in the scene by name
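A sketch of that Awake code follows. GameObject.Find returns a GameObject, or null if no active Object has that name, so we take its transform. The null check and the error message are optional additions that make a misnamed Object easier to spot.

```csharp
using UnityEngine;

public class EnemyFSM : MonoBehaviour
{
    // Set from code, so it no longer needs to be shown in the Inspector
    Transform baseTransform;

    void Awake()
    {
        // Searches active GameObjects in the loaded scenes by name; null if none match
        GameObject baseDamagePoint = GameObject.Find("BaseDamagePoint");

        if (baseDamagePoint != null)
            baseTransform = baseDamagePoint.transform;
        else
            Debug.LogError("No active GameObject named BaseDamagePoint was found");
    }
}
```

Because the search runs once per Enemy in Awake rather than every frame, its cost stays small even with many spawned enemies.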

Now that our FSM states are coded and transition properly, let's make them do something.

Executing FSM actions

Now we need to do the last step: make the FSM do something interesting. Here, we can do a lot of things, such as shooting the base or the Player and moving the Enemy toward its target (the Player or the base). We will handle movement with the Unity Pathfinding system called NavMesh. This tool allows our AI to calculate and traverse paths between two points while avoiding obstacles, and it needs some preparation to work properly.

In this section, we will examine the following FSM action concepts:

- Calculating our scene Pathfinding

- Using Pathfinding

- Adding final details

Let's start by preparing our scene for movement with Pathfinding.

Calculating our scene Pathfinding

Pathfinding algorithms rely on simplified versions of the scene. Analyzing the full geometry of a complex scene is almost impossible to do in real time. There are several ways to represent Pathfinding information extracted from a scene, such as Graphs and NavMesh geometries. Unity uses the latter—a simplified mesh similar to a 3D model that spans over all areas that Unity determines are walkable. In the next screenshot, you can find an example of a NavMesh generated in a scene, that is, the light blue geometry:

Figure 18.30 – NavMesh of walkable areas in the scene

Generating a NavMesh can take from seconds to minutes depending on the size of the scene. That's why Unity's Pathfinding system calculates it once in the Editor, so when we distribute our game, the user will use the pre-generated NavMesh. Just like Lightmapping, a NavMesh is baked into a file for later usage. Also like Lightmapping, the main caveat is that the Objects used to generate the NavMesh cannot change during runtime. If you destroy or move a floor tile, the AI will still walk over that area, because the NavMesh baked on top of it doesn't notice that the floor isn't there anymore. For that reason, you should not move or modify those Objects in any way. Luckily, we won't modify the scene during runtime, but remember that components such as NavMeshObstacle can help in those scenarios.

To generate a NavMesh for our scene, do the following:

- Select any walkable Object and the obstacles on top of it, such as floors, walls, and other obstacles, and mark them as Static. You might remember that the Static checkbox also affects Lightmapping, so if you want an Object not to be part of Lightmapping but contribute to the NavMesh generation, you can click the arrow at the left of the static check and select Navigation Static only. Try to limit Navigation Static Objects to only the ones that the enemies will actually traverse to increase NavMesh generation speed. Making the Terrain navigable, in our case, will increase generation time a lot and we will never play in that area.

- Open the NavMesh panel in Window | AI | Navigation.

- Select the Bake tab, click the Bake button at the bottom of the window, and check the generated NavMesh:

Figure 18.31 – Generating a NavMesh

And that's pretty much everything you need to do. Of course, there are lots of settings you can fiddle around with, such as Max Slope, which indicates the maximum angle of slopes the AI will be able to climb, or Step Height, which will determine whether the AI can climb stairs, connecting the floors between the steps in the NavMesh, but as we have a plain and simple scene, the default settings will suffice.

Now, let's make our AI move around the NavMesh.

Using Pathfinding

To make an AI Object move with the NavMesh, Unity provides the NavMeshAgent component. It makes our AI stick to the NavMesh, preventing the Object from going outside it. It will not only calculate the path to a specified destination automatically but will also move the Object along the path. To do this, it uses Steering behavior algorithms that mimic the way a human would move through the path, slowing down at corners and turning with interpolations instead of instantaneously. Also, this component is capable of avoiding other NavMeshAgents running in the scene, preventing all the enemies from piling up in the same position.

Let's use this powerful component by doing the following:

- Select the Enemy Prefab and add the NavMeshAgent component to it. Add it to the root Object, the one called Enemy, not the AI child, because we want the whole Object to move. You will see a cylinder around the Object representing the area the Object will occupy in the NavMesh. Remember that this isn't a collider, so it won't be used for physical collisions:

Figure 18.32 – The NavMeshAgent component

- Remove the ForwardMovement component; from now on, we will drive the movement of our Enemy with NavMeshAgent.

- In the Awake event function of the EnemyFSM script, use the GetComponentInParent function to cache the reference to NavMeshAgent. This works similarly to GetComponent: it looks for a component in our GameObject, but if the component is not there, this version also looks for it in all the parents. Remember to add the using UnityEngine.AI line to use the NavMeshAgent class in this script:

Figure 18.33 – Caching a parent component reference

IMPORTANT INFO

As you can imagine, there is GetComponentInChildren, which searches components in GameObject first and then in all its children if necessary.

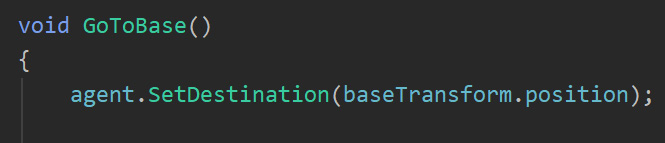

- In the GoToBase state function, call the SetDestination function of the NavMeshAgent reference, passing the position of the base Object as the target:

Figure 18.34 – Setting a destination of our AI

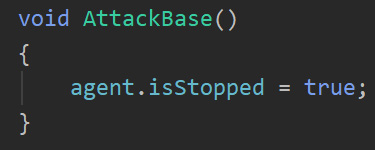

- Save the script and test this with a few enemies in the scene or with the enemies spawned by the waves. You will notice a problem: the enemies never stop moving toward the target position, even entering inside the Object if necessary. This happens even though the current state of their FSMs changes when they are near enough. That's because we never tell NavMeshAgent to stop, which we can do by setting the isStopped field of the agent to true. You might want to tweak the Base Attack Distance to make the Enemy stop a little bit nearer or further:

Figure 18.35 – Stopping agent movement

- We can do the same for ChasePlayer and AttackPlayer. In ChasePlayer, we can set the destination of the agent to the Player position, and in AttackPlayer, we can stop the movement. In this scenario, AttackPlayer can go back to GoToBase or ChasePlayer, so you need to set the isStopped agent field to false either in those states or before doing the transition. We will pick the former, as that version also covers other states that stop the agent, without extra code. We will start with the GoToBase state:

Figure 18.36 – Reactivating the agent

- Then, continue with ChasePlayer:

Figure 18.37 – Reactivating the agent and chasing the Player

- And finally, continue with AttackPlayer:

Figure 18.38 – Stopping the movement

- You can tweak the Acceleration, Speed, and Angular Speed properties of NavMeshAgent to control how fast the Enemy will move. Also, remember to apply the changes to the Prefab for the spawned enemies to be affected.
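Here is a sketch of how the NavMeshAgent calls could fit into the FSM states. Most of the transition code from the previous section is left as comments to keep the focus on the agent. The ChasePlayer null check stays because SetDestination needs a valid target. The sketch assumes the same Sight component and baseTransform field as before.

```csharp
using UnityEngine;
using UnityEngine.AI; // Needed for NavMeshAgent

public class EnemyFSM : MonoBehaviour
{
    public enum EnemyState { GoToBase, AttackBase, ChasePlayer, AttackPlayer }

    public EnemyState currentState;
    public Sight sightSensor;
    public Transform baseTransform;

    NavMeshAgent agent;

    void Awake()
    {
        // The agent lives on the Enemy root, while this script is on the AI child
        agent = GetComponentInParent<NavMeshAgent>();
    }

    void Update()
    {
        if (currentState == EnemyState.GoToBase) { GoToBase(); }
        else if (currentState == EnemyState.AttackBase) { AttackBase(); }
        else if (currentState == EnemyState.ChasePlayer) { ChasePlayer(); }
        else if (currentState == EnemyState.AttackPlayer) { AttackPlayer(); }
    }

    void GoToBase()
    {
        agent.isStopped = false; // Resume, in case an attack state stopped the agent
        agent.SetDestination(baseTransform.position);
        // ...GoToBase transitions from the previous section go here
    }

    void AttackBase()
    {
        agent.isStopped = true; // Stand still in front of the base
    }

    void ChasePlayer()
    {
        if (sightSensor.detectedObject == null)
        {
            currentState = EnemyState.GoToBase;
            return;
        }

        agent.isStopped = false;
        agent.SetDestination(sightSensor.detectedObject.transform.position);
        // ...remaining ChasePlayer transitions go here
    }

    void AttackPlayer()
    {
        agent.isStopped = true; // Stop moving while shooting at the Player
        // ...AttackPlayer transitions go here
    }
}
```

Resetting isStopped at the start of the moving states, instead of before each transition, means any future state that stops the agent is covered automatically.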

Now that we have movement in our Enemy, let's finish the final details of our AI.

Adding final details

We have two things missing here: the Enemy is not shooting any bullets, and it doesn't have animations. Let's start fixing the shooting by doing the following:

- Add a bulletPrefab field of the GameObject type to our EnemyFSM script and a float field called fireRate.

- Create a function called Shoot and call it inside AttackBase and AttackPlayer:

Figure 18.39 – Shooting function calls

- In the Shoot function, put code similar to the one used in the PlayerShooting script to shoot bullets at a specific fire rate, as in the following screenshot. Remember to set the Enemy layer in your Enemy Prefab, in case you didn't before, to prevent the bullet from damaging the Enemy itself. You might also want to raise the AI Object a little bit to shoot bullets from another position. Better still, add a shootPoint transform field and create an empty Object in the Enemy to use as a spawn position. If you do that, consider leaving the empty Object unrotated so the Enemy rotation affects the direction of the bullet properly:

Figure 18.40 – Shooting function code

IMPORTANT INFO

Notice that there is some duplicated shooting behavior between PlayerShooting and EnemyFSM. You can fix that by creating a Weapon behavior with a function called Shoot that instantiates bullets and takes the fire rate into account, and calling it inside both components to reuse it.

- When the agent is stopped, not only does the movement stop but also the rotation. If the Player moves while the Enemy is attacking, we still need the Enemy to face it to shoot bullets in its direction. We can create a LookTo function that receives the target position to look at, and call it in AttackPlayer and AttackBase, passing the target to shoot at:

Figure 18.41 – LookTo function calls

- Complete the LookTo function by getting the direction from our parent to the target position. We access our parent with transform.parent because, remember, we are the child AI Object, and the Object that moves is our parent. Then, we set the Y component of the direction to 0 to prevent the direction from pointing upward or downward, as we don't want our Enemy to rotate vertically. Finally, we set the forward vector of our parent to that direction so it will face the target position immediately. You can replace that with interpolation through quaternions to have a smoother rotation if you want to, but let's keep things as simple as possible for now:

Figure 18.42 – Looking toward a target
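The PlayerShooting script is not reproduced in this chapter. This sketch therefore assumes that fireRate is the minimum number of seconds between shots, compared against Time.time. shootPoint is the optional spawn point mentioned above, and CanShoot is a hypothetical helper. AttackPlayer would call LookTo and Shoot the same way as AttackBase does here, passing the detected Object's position.

```csharp
using UnityEngine;

public class EnemyFSM : MonoBehaviour
{
    public GameObject bulletPrefab;
    public float fireRate;        // Assumption: minimum seconds between shots
    public Transform shootPoint;  // Optional unrotated empty child used as spawn point
    public Transform baseTransform;

    float lastShootTime;

    void AttackBase()
    {
        LookTo(baseTransform.position);
        Shoot();
    }

    void Shoot()
    {
        // Respect the fire rate: skip until enough time has passed
        if (!CanShoot(Time.time, lastShootTime, fireRate))
            return;

        lastShootTime = Time.time;

        // Fall back to our own transform if no shootPoint was assigned
        Transform spawn = shootPoint != null ? shootPoint : transform;
        Instantiate(bulletPrefab, spawn.position, spawn.rotation);
    }

    void LookTo(Vector3 targetPosition)
    {
        // The AI child doesn't move by itself; rotate the Enemy root (our parent)
        Transform body = transform.parent;
        Vector3 directionToTarget = targetPosition - body.position;
        directionToTarget.y = 0; // Ignore height so the Enemy never tilts

        // Avoid a zero-length direction (target exactly above or below us)
        if (directionToTarget.sqrMagnitude > 0.0001f)
            body.forward = directionToTarget; // Setter normalizes the vector
    }

    // Hypothetical helper: true if at least fireRate seconds passed since the last shot
    public static bool CanShoot(float now, float lastShootTime, float fireRate)
    {
        return now - lastShootTime >= fireRate;
    }
}
```

Moving Shoot and CanShoot into a separate Weapon component, as the note above suggests, would let PlayerShooting reuse the same fire-rate logic.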

Finally, we can add animations to the Enemy using the same Animator Controller used in the Player and setting the parameters with other scripts in the following steps:

- Add an Animator component to the Enemy, if it's not already there, and set the same Controller used in the Player; in our case, this is also called Player.

- Create a script called NavMeshAnimator and add it to the Enemy root Object. It will take the current velocity of NavMeshAgent and set it in the Animator Controller. This works similarly to the VelocityAnimator script, which is in charge of updating the Animator Controller velocity parameter to the velocity of our Object. We didn't use that one here because NavMeshAgent doesn't use Rigidbody to move; it has its own velocity system. Because of this, we can even set the Rigidbody to kinematic if we want, since the Object moves but not with Physics:

Figure 18.43 – Connecting the NavMeshAgent to our Animator Controller

- Cache a reference to the parent Animator in the EnemyFSM script. Just do the same thing we did to access NavMeshAgent:

Figure 18.44 – Accessing the parent's Animator reference

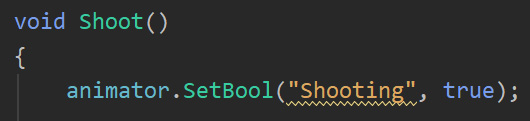

- Turn on the Shooting animator parameter inside the Shoot function to make sure every time we shoot, that parameter is set to true (checked):

Figure 18.45 – Turning on the shooting animation

- Turn off the Shooting parameter in all non-shooting states, such as GoToBase and ChasePlayer:

Figure 18.46 – Turning off the shooting animation
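The animation steps could be sketched as follows. Unity expects each MonoBehaviour in a file named after the class, so these two classes would live in separate files. The "Shooting" bool comes from the text. The "Velocity" float parameter name is an assumption; use whatever name your VelocityAnimator script sets. In EnemyFSM, only the animator-related lines are shown.

```csharp
using UnityEngine;
using UnityEngine.AI;

// On the Enemy root, next to the NavMeshAgent and Animator components
public class NavMeshAnimator : MonoBehaviour
{
    NavMeshAgent agent;
    Animator animator;

    void Awake()
    {
        agent = GetComponent<NavMeshAgent>();
        animator = GetComponent<Animator>();
    }

    void Update()
    {
        // The agent moves the Object without a Rigidbody, so read its own velocity.
        // Assumption: the Animator Controller has a float parameter named "Velocity"
        animator.SetFloat("Velocity", agent.velocity.magnitude);
    }
}

// Animator-related parts of EnemyFSM (on the AI child)
public class EnemyFSM : MonoBehaviour
{
    Animator animator;

    void Awake()
    {
        // Same lookup as for NavMeshAgent: the Animator is on our parent
        animator = GetComponentInParent<Animator>();
    }

    void Shoot()
    {
        animator.SetBool("Shooting", true);
        // ...fire-rate check and Instantiate from the previous steps
    }

    void GoToBase()
    {
        animator.SetBool("Shooting", false);
        // ...movement and transitions from the previous steps
    }

    void ChasePlayer()
    {
        animator.SetBool("Shooting", false);
        // ...movement and transitions from the previous steps
    }
}
```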

With that, we have finished all AI behaviors. Of course, this script is big enough to deserve some rework and splitting in the future, and some actions such as stopping and resuming the animations and NavMeshAgent can be done in a better way. But with this, we have prototyped our AI, and we can test it until we are happy with it, and then we can improve this code.

Summary

I'm pretty sure AI is not what you imagined; you are not creating any Skynet here. Still, we have accomplished a simple but interesting AI for challenging our Player, which we can iterate on and tweak to tailor it to our game's expected behavior. We saw how to gather information about our surroundings through sensors and how to use FSMs to decide what action to execute. We also saw how to use different Unity systems, such as Pathfinding and Animator, to make the AI execute those actions.

With this, we end Part 2 of this book, about C# scripting. In the next short part, we are going to finish our game's final details, starting with optimization.