14

Advanced Concepts for Machine Learning Projects

In the previous chapter, we introduced a possible workflow for solving a real-life problem using machine learning. We went over the entire project, starting with cleaning the data, through training and tuning a model, and then lastly evaluating its performance. However, this is rarely the end of the project. In that project, we used a simple decision tree classifier, which most of the time can be used as a benchmark or minimum viable product (MVP). In this chapter, we cover a few more advanced concepts that can help with improving the value of the project and make it easier to adopt by the business stakeholders.

After creating the MVP, which serves as a baseline, we would like to improve the model’s performance. While attempting to improve the model, we should also try to balance underfitting and overfitting. There are a few ways to do so, some of which include:

- Gathering more data (observations)

- Adding more features—either by gathering additional data (for example, by using external data sources) or through feature engineering using currently available information

- Using more complex models

- Selecting only the relevant features

- Tuning the hyperparameters

There is a common stereotype that data scientists spend 80% of their time on a project gathering and cleaning data while only 20% remains for the actual modeling. In line with the stereotype, adding more data might greatly improve a model’s performance, especially when dealing with imbalanced classes in a classification problem. But finding additional data (be it observations or features) is not always possible, or might simply be too complicated. Then, the other solution may be to use more complex models or to tune the hyperparameters to squeeze out some extra performance.

We start the chapter by presenting how to use more advanced classifiers, which are also based on decision trees. Some of them (XGBoost and LightGBM) are frequently used for winning machine learning competitions (such as those found on Kaggle). Additionally, we introduce the concept of stacking multiple machine learning models to further improve prediction performance.

Another common real-life problem concerns dealing with imbalanced data, that is, when one class (such as default or fraud) is rarely observed in practice. This makes it especially difficult to train a model to accurately capture the minority class observations. We introduce a few common approaches to handling class imbalance and compare their performance on a credit card fraud dataset, in which the minority class corresponds to 0.17% of all the observations.

Then, we also expand on hyperparameter tuning, which was explained in the previous chapter. Previously, we used either an exhaustive grid search or a randomized search, both of which are carried out in an uninformed manner. This means that there is no underlying logic in selecting the next set of hyperparameters to investigate. This time, we introduce Bayesian optimization, in which past attempts are used to select the next set of values to explore. This approach can significantly speed up the tuning phase of our projects.

In many industries (and finance especially) it is crucial to understand the logic behind a model’s prediction. For example, a bank might be legally obliged to provide actual reasons for declining a credit request, or it can try to limit its losses by predicting which customers are likely to default on a loan. To get a better understanding of the models, we explore various approaches to determining feature importance and model explainability. The latter is especially relevant when dealing with complex models, which are often considered to be black boxes, that is, unexplainable. We can additionally use those insights to select only the most relevant features, which can further improve the model’s performance.

In this chapter, we present the following recipes:

- Exploring ensemble classifiers

- Exploring alternative approaches to encoding categorical features

- Investigating different approaches to handling imbalanced data

- Leveraging the wisdom of the crowds with stacked ensembles

- Bayesian hyperparameter optimization

- Investigating feature importance

- Exploring feature selection techniques

- Exploring explainable AI techniques

Exploring ensemble classifiers

In Chapter 13, Applied Machine Learning: Identifying Credit Default, we learned how to build an entire machine learning pipeline, which contained both preprocessing steps (imputing missing values, encoding categorical features, and so on) and a machine learning model. Our task was to predict customer default, that is, their inability to repay their debts. We used a decision tree model as the classifier.

Decision trees are considered simple models and one of their drawbacks is overfitting to the training data. They belong to the group of high-variance models, which means that a small change to the training data can greatly impact the tree’s structure and its predictions. To overcome those issues, they can be used as building blocks for more complex models. Ensemble models combine predictions of multiple base models (for example, decision trees) in order to improve the final model’s generalizability and robustness. This way, they transform the initial high-variance estimators into a low-variance aggregate estimator.

On a high level, we could divide the ensemble models into two groups:

- Averaging methods—several models are estimated independently and then their predictions are averaged. The underlying principle is that the combined model is better than a single one as its variance is reduced. Examples: Random Forest and Extremely Randomized Trees.

- Boosting methods—in this approach, multiple base estimators are built sequentially and each one tries to reduce the bias of the combined estimator. Again, the underlying assumption is that a combination of multiple weak models produces a powerful ensemble. Examples: Gradient Boosted Trees, XGBoost, LightGBM, and CatBoost.

In this recipe, we use a selection of ensemble models to try to improve the performance of the decision tree approach. As those models are based on decision trees, the same principles about feature scaling (no explicit need for it) apply and we can reuse most of the previously created pipeline.

Getting ready

In this recipe, we build on top of what we already established in the Organizing the project with pipelines recipe from the previous chapter, in which we created the default prediction pipeline, from loading the data to training the classifier.

In this recipe, we use the variant without the outlier removal procedure. We will be replacing the last step (the classifier) with more complex ensemble models. Additionally, we first fit the decision tree pipeline to the data to obtain the baseline model for performance comparison. For your convenience, we reiterate all the required steps in the notebook accompanying this chapter.

How to do it...

Execute the following steps to train the ensemble classifiers:

- Import the libraries:

from sklearn.ensemble import (RandomForestClassifier, GradientBoostingClassifier) from xgboost.sklearn import XGBClassifier from lightgbm import LGBMClassifier from chapter_14_utils import performance_evaluation_reportIn this chapter, we also use the already familiar

performance_evaluation_reporthelper function.

- Define and fit the Random Forest pipeline:

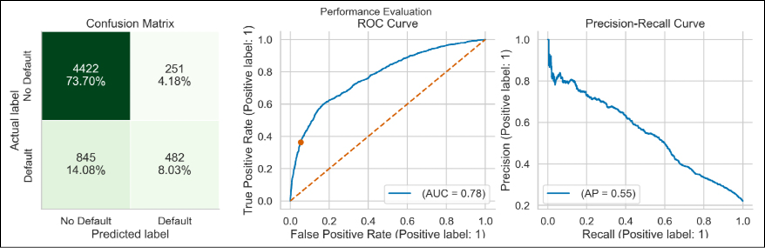

rf = RandomForestClassifier(random_state=42) rf_pipeline = Pipeline( steps=[("preprocessor", preprocessor), ("classifier", rf)] ) rf_pipeline.fit(X_train, y_train) rf_perf = performance_evaluation_report(rf_pipeline, X_test, y_test, labels=LABELS, show_plot=True, show_pr_curve=True)The performance of the Random Forest can be summarized by the following plot:

Figure 14.1: Performance evaluation of the Random Forest model

- Define and fit the Gradient Boosted Trees pipeline:

gbt = GradientBoostingClassifier(random_state=42) gbt_pipeline = Pipeline( steps=[("preprocessor", preprocessor), ("classifier", gbt)] ) gbt_pipeline.fit(X_train, y_train) gbt_perf = performance_evaluation_report(gbt_pipeline, X_test, y_test, labels=LABELS, show_plot=True, show_pr_curve=True)The performance of the Gradient Boosted Trees can be summarized by the following plot:

Figure 14.2: Performance evaluation of the Gradient Boosted Trees model

- Define and fit an XGBoost pipeline:

xgb = XGBClassifier(random_state=42) xgb_pipeline = Pipeline( steps=[("preprocessor", preprocessor), ("classifier", xgb)] ) xgb_pipeline.fit(X_train, y_train) xgb_perf = performance_evaluation_report(xgb_pipeline, X_test, y_test, labels=LABELS, show_plot=True, show_pr_curve=True)The performance of the XGBoost can be summarized by the following plot:

Figure 14.3: Performance evaluation of the XGBoost model

- Define and fit the LightGBM pipeline:

lgbm = LGBMClassifier(random_state=42) lgbm_pipeline = Pipeline( steps=[("preprocessor", preprocessor), ("classifier", lgbm)] ) lgbm_pipeline.fit(X_train, y_train) lgbm_perf = performance_evaluation_report(lgbm_pipeline, X_test, y_test, labels=LABELS, show_plot=True, show_pr_curve=True)The performance of the LightGBM can be summarized by the following plot:

Figure 14.4: Performance evaluation of the LightGBM model

From the reports, it looks like the shapes of the ROC curve and the Precision-Recall curve were very similar for all the considered models. We will look at the scores of the models in the There’s more… section.

How it works...

This recipe shows how easy it is to use different classifiers, as long as we want to use their default settings. In the first step, we imported the classifiers from their respective libraries.

In this recipe, we have used the scikit-learn API of libraries such as XGBoost or LightGBM. However, we could also use their native approaches to training models, which might require some additional effort, such as converting a pandas DataFrame to formats acceptable by those libraries. Using the native approaches can yield some extra benefits, for example, in terms of accessing certain hyperparameters or configuration settings.

In Steps 2 to 5, we created a separate pipeline for each classifier. We combined the already established ColumnTransformer preprocessor with the corresponding classifier. Then, we fitted each pipeline to the training data and presented the performance evaluation report.

Some of the considered ensemble models offer additional functionalities in the fit method (as opposed to setting hyperparameters when instantiating the class). For example, when using the fit method of LightGBM we can pass in the names/indices of categorical features. By doing so, the algorithm knows how to treat those features using its own approach, without the need for explicit one-hot encoding. Similarly, we could use a wide variety of available callbacks.

Thanks to modern Python libraries, fitting all the considered classifiers was extremely easy. We only had to replace the model’s class in the pipeline with another one. Keeping in mind how simple it is to experiment with different models, it is good to have at least a basic understanding of what those models do and what their strengths and weaknesses are. That is why below we provide a brief introduction to the considered algorithms.

Random Forest

Random Forest is an example of an ensemble of models, that is, it trains multiple models (decision trees) and uses them to create predictions. In the case of a regression problem, it takes the average value of all the underlying trees. For classification it uses a majority vote. Random Forest offers more than just training many trees and aggregating their results.

First, it uses bagging (bootstrap aggregation)—each tree is trained on a subset of all available observations. Those are drawn randomly with replacement, so—unless specified otherwise—the total number of observations used for each tree is the same as the total in the training set. Even though a single tree might have high variance with respect to a particular dataset (due to bagging), the forest will have lower variance overall, without increasing the bias. Additionally, this approach can also reduce the effect of any outliers in the data as they will not be used in all of the trees. To add even more randomness, each tree only considers a subset of all features to create each split. We can control that number using a dedicated hyperparameter.

Thanks to those two mechanisms, the trees in the forest are not correlated with each other and are built independently. The latter allows for the parallelization of the tree-building step.

Random Forest provides a good trade-off between complexity and performance. Often—without any tuning—we can get much better performance than when using simpler algorithms, such as decision trees or linear/logistic regression. That is because Random Forest has a lower bias (due to its flexibility) and reduced variance (due to aggregating predictions of multiple models).

Gradient Boosted Trees

Gradient Boosted Trees is another type of ensemble model. The idea is to train many weak learners (shallow decision trees/stumps with high bias) and combine them to obtain a strong learner. In contrast to Random Forest, Gradient Boosted Trees is a sequential/iterative algorithm. In boosting, we start with the first weak learner, and each of the subsequent learners tries to learn from the mistakes of the previous ones. They do this by being fitted to the residuals (error terms) of the previous models.

The reason why we create an ensemble of weak learners instead of strong learners is that in the case of the strong learners, the errors/mislabeled data points would most likely be the noise in the data, so the overall model would end up overfitting to the training data.

The term gradient comes from the fact that the trees are built using gradient descent, which is an optimization algorithm. Without going into too much detail, it uses the gradient (slope) of the loss function to minimize the overall loss and achieve the best performance. The loss function represents the difference between the actual and predicted values. In practice, to perform the gradient descent procedure in Gradient Boosted Trees, we add such a tree to the model that follows the gradient. In other words, such a tree reduces the value of the loss function.

We can describe the boosting procedure using the following steps:

- The process starts with a simple estimate (mean, median, and so on).

- A tree is fitted to the error of that prediction.

- The prediction is adjusted using the tree’s prediction. However, it is not fully adjusted, but only to a certain degree (based on a learning rate hyperparameter).

- Another tree is fitted to the error of the updated prediction and the prediction is further adjusted as in the previous step.

- The algorithm continues to iteratively reduce the error until a specified number of rounds (or another stopping criterion) is reached.

- The final prediction is the sum of the initial prediction and all the adjustments (predictions of the error weighted with the learning rate).

In contrast to Random Forest, Gradient Boosted Trees use all available data to train the models. However, we can use random sampling without replacement for each tree by using the subsample hyperparameter. Then, we are dealing with Stochastic Gradient Boosted Trees. Additionally, similarly to Random Forest, we can make the trees consider only a subset of features when making a split.

XGBoost

Extreme Gradient Boosting (XGBoost) is an implementation of Gradient Boosted Trees that incorporates a series of improvements resulting in superior performance (both in terms of evaluation metrics and estimation time). Since being published, the algorithm has been successfully used to win many data science competitions.

In this recipe, we only present a high-level overview of its distinguishable features. For a more detailed overview, please refer to the original paper (Chen et al. (2016)) or documentation. The key concepts of XGBoost are the following:

- XGBoost combines a pre-sorted algorithm with a histogram-based algorithm to calculate the best splits. This tackles a significant inefficiency of Gradient Boosted Trees, namely that the algorithm considers the potential loss for all possible splits when creating a new branch (especially important when considering hundreds or thousands of features).

- The algorithm uses the Newton-Raphson method to approximate the loss function, which allows us to use a wider variety of loss functions.

- XGBoost has an extra randomization parameter to reduce the correlation between the trees.

- XGBoost combines Lasso (L1) and Ridge (L2) regularization to prevent overfitting.

- It offers a more efficient approach to tree pruning.

- XGBoost has a feature called monotonic constraints—the algorithm sacrifices some accuracy and increases the training time to improve model interpretability.

- XGBoost does not take categorical features as input—we must use some kind of encoding for them.

- The algorithm can handle missing values in the data.

LightGBM

LightGBM, released by Microsoft, is another competition-winning implementation of Gradient Boosted Trees. Thanks to some improvements, LightGBM results in a similar performance to XGBoost, but with faster training time. Key features include the following:

- The difference in speed is caused by the approach to growing trees. In general, algorithms (such as XGBoost) use a level-wise (horizontal) approach. LightBGM, on the other hand, grows trees leaf-wise (vertically). The leaf-wise algorithm chooses the leaf with the maximum reduction in the loss function. Such algorithms tend to converge faster than the level-wise ones; however, they tend to be more prone to overfitting (especially with small datasets).

- LightGBM employs a technique called Gradient-based One-Side Sampling (GOSS) to filter out the data instances used for finding the best split value. Intuitively, observations with small gradients are already well trained, while those with large gradients have more room for improvement. GOSS retains instances with large gradients and additionally samples randomly from observations with small gradients.

- LightGBM uses Exclusive Feature Bundling (EFB) to take advantage of sparse datasets and bundles together features that are mutually exclusive (they never have values of zero at the same time). This leads to a reduction in the complexity (dimensionality) of the feature space.

- The algorithm uses histogram-based methods to bucket continuous feature values into discrete bins in order to speed up training and reduce memory usage.

The leaf-wise algorithm was later added to XGBoost as well. To make use of it, we need to set grow_policy to "lossguide".

There’s more...

In this recipe, we showed how to use selected ensemble classifiers to try to improve our ability to predict customers’ likelihood of defaulting their loan. To make things even more interesting, these models have dozens of hyperparameters to tune, which can significantly increase (or decrease) their performance.

For brevity, we will not discuss the hyperparameter tuning of these models here. We refer you to the accompanying Jupyter notebook for a short introduction to tuning these models using a randomized grid search approach. Here, we only present a table containing the results. We can compare the performance of the models with default settings versus their tuned counterparts.

Figure 14.5: Table comparing the performance of various classifiers

For the models calibrated using the randomized search (including the _rs suffix in the name), we used 100 random sets of hyperparameters. As the considered problem deals with imbalanced data (the minority class is ~20%), we look at recall for performance evaluation.

It seems that the basic decision tree achieved the best recall score on the test set. This came at the cost of much lower precision than the more advanced models. That is why the F1 score (a harmonic mean of precision and recall) is the lowest for the decision tree. We can see that the default LightGBM model achieved the best F1 score on the test set.

The results by no means indicate that the more complex models are inferior—they might simply require more tuning or a different set of hyperparameters. For example, the ensemble models enforced the maximum depth of the tree (determined by the corresponding hyperparameter), while the decision tree had no such limit and it reached the depth of 37. The more advanced the model, the more effort it requires to “get it right.”

There are many different ensemble classifiers available to experiment with. Some of the possibilities include:

- AdaBoost—the first boosting algorithm.

- Extremely Randomized Trees—this algorithm offers improved randomness as compared to Random Forests. Similar to Random Forest, a random subset of features is considered when making a split. However, instead of looking for the most discriminative thresholds, the thresholds are drawn at random for each feature. Then, the best of these random thresholds is picked as the splitting rule. Such an approach usually allows us to reduce the variance of the model, while slightly increasing its bias.

- CatBoost—another boosting algorithm (developed by Yandex) that puts a high emphasis on handling categorical features and achieving high performance with little hyperparameter tuning.

- NGBoost—at a very high level, this model introduces uncertainty estimation into the gradient boosting by using the natural gradient.

- Histogram-based gradient boosting—a variant of gradient boosted trees available in

scikit-learnand inspired by LightGBM. They accelerate the training procedure by discretizing (binning) the continuous features into a predetermined number of unique values.

While some algorithms have introduced certain features first, the other popular implementations of gradient boosted trees often receive those as well. An example might be the histogram-based approach to discretizing continuous features. While it was introduced in LightGBM, it was later added to XGBoost as well. The same goes for the leaf-wise approach to growing trees.

See also

We present additional resources on the algorithms mentioned in this recipe:

- Breiman, L. 2001. “Random Forests.” Machine Learning 45(1): 5–32.

- Chen, T., & Guestrin, C. 2016, August. Xgboost: A scalable tree boosting system. In Proceedings of the 22nd international conference on knowledge discovery and data mining, 785–794. ACM.

- Duan, T., Anand, A., Ding, D. Y., Thai, K. K., Basu, S., Ng, A., & Schuler, A. 2020, November. Ngboost: Natural gradient boosting for probabilistic prediction. In International Conference on Machine Learning, 2690–2700. PMLR.

- Freund, Y., & Schapire, R. E. 1996, July. Experiments with a new boosting algorithm. In International Conference on Machine Learning, 96: 148–156.

- Freund, Y., & Schapire, R. E. 1997. “A decision-theoretic generalization of on-line learning and an application to boosting.” Journal of Computer and System Sciences, 55(1), 119–139.

- Friedman, J. H. 2001. “Greedy function approximation: a gradient boosting machine.” Annals of Statistics, 29(5): 1189–1232.

- Friedman, J. H. 2002. “Stochastic gradient boosting.” Computational Statistics & Data Analysis, 38(4): 367–378.

- Ke, G., Meng, Q., Finley, T., Wang, T., Chen, W., Ma, W., ... & Liu, T. Y. 2017. “Lightgbm: A highly efficient gradient boosting decision tree.” In Neural Information Processing Systems.

- Prokhorenkova, L., Gusev, G., Vorobev, A., Dorogush, A. V., & Gulin, A. 2018. CatBoost: unbiased boosting with categorical features. In Neural information Processing Systems.

Exploring alternative approaches to encoding categorical features

In the previous chapter, we introduced one-hot encoding as the standard solution for encoding categorical features so that they can be understood by ML algorithms. To recap, one-hot encoding converts categorical variables into several binary columns, where a value of 1 indicates that the row belongs to a certain category, and a value of 0 indicates otherwise.

The biggest drawback of that approach is the quickly expanding dimensionality of our dataset. For example, if we had a feature indicating from which of the US states the observation originates, one-hot encoding of this feature would result in the creation of 50 (or 49 if we dropped the reference value) new columns.

Some other issues with one-hot encoding include:

- Creating that many Boolean features introduces sparsity to the dataset, which decision trees don’t handle well.

- Decision trees’ splitting algorithm treats all the one-hot-encoded dummies as independent features. It means that when a tree makes a split using one of the dummy variables, the gain in purity per split is small. Thus, the tree is not likely to select one of the dummy variables closer to its root.

- Connected to the previous point, continuous features will have higher feature importance than one-hot encoding dummy variables, as a single dummy can only bring a fraction of its respective categorical feature’s total information into the model.

- Gradient boosted trees don’t handle high-cardinality features well, as the base learners have limited depth.

When dealing with a continuous variable, the splitting algorithm induces an ordering of the samples and can split that ordered list anywhere. A binary feature can only be split in one place, while a categorical feature with k unique categories can be split in ![]() ways.

ways.

We illustrate the advantage of the continuous features with an example. Assume that the splitting algorithm splits a continuous feature at a value of 10 into two groups: “below 10” and “10 and above.” In the next split, it can further split any of the two groups, for example, “below 6” and “6 and above.” That is not possible for a binary feature, as we can at most use it to split the groups once into “yes” or “no” groups. Figure 14.6 illustrates potential differences between decision trees created with or without one-hot encoding.

Figure 14.6: Example of a dense decision tree without one-hot encoding (on the left) and a sparse decision tree with one-hot encoding (on the right)

Those drawbacks, among others, led to the development of a few alternative approaches to encoding categorical features. In this recipe, we introduce three of them.

The first one is called target encoding (also known as mean encoding). In this approach, the following transformation is applied to a categorical feature, depending on the type of the target variable:

- Categorical target—a feature is replaced with a blend of the posterior probability of the target given a certain category and the prior probability of the target over all the training data.

- Continuous target—a feature is replaced with a blend of the expected value of the target given a certain category and the expected value of the target over all the training data.

In practice, the simplest scenario assumes that each category in the feature is replaced with the mean of the target value for that category. Figure 14.7 illustrates this.

Figure 14.7: Example of target encoding

Target encoding results in a more direct representation of the relationship between the categorical feature and the target, while not adding any new columns. That is why it is a very popular technique in data science competitions.

Unfortunately, it is not a silver bullet to encoding categorical features and comes with its disadvantages:

- The approach is very prone to overfitting. That is why it assumes blending/smoothing of the category mean with the global mean. We should be especially cautious when some categories are very infrequent.

- Connected to the risk of overfitting, we are effectively leaking target information into the features.

In practice, target encoding works quite well when we have high-cardinality features and are using some form of gradient boosted trees as our machine learning model.

The second approach we cover is called Leave One Out Encoding (LOOE) and it is very similar to target encoding. It attempts to reduce overfitting by excluding the current row’s target value when calculating the average of the category. This way, the algorithm avoids row-wise leakage. Another consequence of this approach is that the same category in multiple observations can have a different value in the encoded column. Figure 14.8 illustrates this.

Figure 14.8: Example of Leave One Out Encoding

With LOOE, the ML model is exposed not only to the same value for each encoded category (as in target encoding) but to a range of values. That is why it should learn to generalize better.

The last of the considered encodings is called Weight of Evidence (WoE) encoding. This one is especially interesting, as it originates from the credit scoring world, where it was employed to improve the probability of default estimates. It was used to separate customers who defaulted on the loan from those who paid it back successfully.

Weight of Evidence evolved from logistic regression. Another useful metric with the same origin as WoE is called Information Value (IV). It measures how much information a feature provides for the prediction. To put it a bit differently, it helps rank variables based on their importance in the model.

The weight of evidence indicates the predictive power of an independent variable in relation to the target. In other words, it measures how much the evidence supports or undermines a hypothesis. It is defined as the natural logarithm of the odds ratio:

Figure 14.9 illustrates the calculations.

Figure 14.9: Example of the WoE encoding

The fact that the encoding originates from credit scoring does not mean that it is only usable in such cases. We can generalize the good customers as the non-event or negative class, and the bad customers as the event or positive class. One of the restrictions of the approach is that, in contrast to the previous two, it can only be used with a binary categorical target.

WoE was also historically used to encode categorical features as well. For example, in a credit scoring dataset, we could bin a continuous feature like age into discrete bins: 20–29, 30–39, 40–49, and so on, and only then calculate the WoE for those categories. The number of bins chosen for the encoding depends on the use case and the feature’s distribution.

In this recipe, we show how to use those three encoders in practice using the default dataset we have already used before.

Getting ready

In this recipe, we use the pipeline we have used in the previous recipes. As the estimator, we use the Random Forest classifier. For your convenience, we reiterate all the required steps in the Jupyter notebook accompanying this chapter.

The Random Forest pipeline with one-hot encoded categorical features resulted in the test set’s recall of 0.3542. We will try to improve upon this score with alternative approaches to encoding categorical features.

How to do it…

Execute the following steps to fit the ML pipelines with various categorical encoders:

- Import the libraries:

import category_encoders as ce from sklearn.base import clone - Fit the pipeline using target encoding:

pipeline_target_enc = clone(rf_pipeline) pipeline_target_enc.set_params( preprocessor__categorical__cat_encoding=ce.TargetEncoder() ) pipeline_target_enc.fit(X_train, y_train) target_enc_perf = performance_evaluation_report( pipeline_target_enc, X_test, y_test, labels=LABELS, show_plot=True, show_pr_curve=True ) print(f"Recall: {target_enc_perf['recall']:.4f}")Executing the snippet generates the following plot:

Figure 14.10: Performance evaluation of the pipeline with target encoding

The recall obtained using this pipeline is equal to

0.3677. This improves the score by slightly over 1 p.p.

- Fit the pipeline using Leave One Out Encoding:

pipeline_loo_enc = clone(rf_pipeline) pipeline_loo_enc.set_params( preprocessor__categorical__cat_encoding=ce.LeaveOneOutEncoder() ) pipeline_loo_enc.fit(X_train, y_train) loo_enc_perf = performance_evaluation_report( pipeline_loo_enc, X_test, y_test, labels=LABELS, show_plot=True, show_pr_curve=True ) print(f"Recall: {loo_enc_perf['recall']:.4f}")Executing the snippet generates the following plot:

Figure 14.11: Performance evaluation of the pipeline with Leave One Out Encoding

The recall obtained using this pipeline is equal to

0.1462, which is significantly worse than the target encoding approach.

- Fit the pipeline using Weight of Evidence encoding:

pipeline_woe_enc = clone(rf_pipeline) pipeline_woe_enc.set_params( preprocessor__categorical__cat_encoding=ce.WOEEncoder() ) pipeline_woe_enc.fit(X_train, y_train) woe_enc_perf = performance_evaluation_report( pipeline_woe_enc, X_test, y_test, labels=LABELS, show_plot=True, show_pr_curve=True ) print(f"Recall: {woe_enc_perf['recall']:.4f}")Executing the snippet generates the following plot:

Figure 14.12: Performance evaluation of the pipeline with Weight of Evidence encoding

The recall obtained using this pipeline is equal to 0.3708, which is a small improvement over target encoding.

How it works…

First, we executed the code from the Getting ready section, that is, instantiated the pipeline with one-hot encoding and Random Forest as the classifier.

After importing the libraries, we cloned the entire pipeline using the clone function. Then, we used the set_params method to replace the OneHotEncoder with TargetEncoder. Just as when tuning the hyperparameters of a pipeline, we had to use the same double underscore notation to access the particular element of the pipeline. The encoder was located under preprocessor__categorical__cat_encoding. Then, we fitted the pipeline using the fit method and printed the evaluation scores using the performance_evaluation_report helper function.

As we have mentioned in the introduction, target encoding is prone to overfitting. That is why instead of simply replacing the categories with the corresponding averages, the algorithm is capable of blending the posterior probabilities with the prior probability (global average). We can control the blending with two hyperparameters: min_samples_leaf and smoothing.

In Steps 3 and 4, we followed the very same steps as with target encoding, but we replaced the encoder with LeaveOneOutEncoder and WOEEncoder respectively.

Just as with target encoding, the other encoders use the target to build the encoding and are thus prone to overfitting. Fortunately, they also offer certain measures to prevent that from happening.

In the case of LOOE, we can add normally distributed noise to the encodings in order to reduce overfitting. We can control the standard deviation of the Normal distribution used for generating the noise with the sigma argument. It is worth mentioning that the random noise is added to the training data only, and the transformation of the test set is not impacted. Just by adding the random noise to our pipeline (sigma = 0.05), we can improve the measured recall score from 0.1462 to around 0.35 (depending on random number generation).

Similarly, we can add random noise for the WoE encoder. We control the noise with the randomized (Boolean flag) and sigma (standard deviation of the Normal distribution) arguments. Additionally, there is the regularization argument, which prevents errors caused by division by zero.

There’s more…

Encoding categorical variables is a very broad area of active research, and every now and then new approaches to it are being published. Before changing the topic, we would also like to discuss a couple of related concepts.

Handling data leakage with k-fold target encoding

We have already mentioned a few approaches to reducing the overfitting problem of the target encoder. A very popular solution among Kaggle practitioners is to use k-fold target encoding. The idea is similar to k-fold cross-validation and it allows us to use all the training data we have. We start by dividing the data into k folds—they can be stratified or purely random, depending on the use case. Then, we replace the observations present in the l-th fold with the target’s mean calculated using all the folds except the l-th one. This way, we are not leaking the target from the observations within the same fold.

An inquisitive reader might have noticed that the LOOE is a special case of k-fold target encoding, in which k is equal to the number of observations in the training dataset.

Even more encoders

The category_encoders library offers almost 20 different encoding transformers for categorical features. Aside from the ones we have already mentioned, you might want to explore the following:

- Ordinal encoding—very similar to label encoding; however, it ensures that the encoding retains the ordinal nature of the feature. For example, the hierarchy of bad < neutral < good is preserved.

- Count encoder (frequency encoder)—each category of a feature is mapped to the number of observations belonging to that category.

- Sum encoder—compares the mean of the target for a given category to the overall average of the target.

- Helmert encoder—compares the mean of a certain category to the mean of the subsequent levels. If we had categories [A, B, C], the algorithm would first compare A to B and C and then B to C alone. This kind of encoding is useful in situations in which the levels of the categorical feature are ordered, for example, from lowest to highest.

- Backward difference encoder—similar to the Helmert encoder, with the difference that it compares the mean of the current category to the mean of the previous one.

- M-estimate encoder—a simplified version of the target encoder, which has only one tunable parameter (responsible for the strength of regularization).

- James-Stein encoder—a variant of target encoding that aims to improve the estimation of the category’s mean by shrinking it toward the central/global mean. Its single hyperparameter is responsible for the strength of shrinkage (this means the same as regularization in this context)—the bigger the value of the hyperparameter, the bigger the weight of the global mean (which might lead to underfitting). On the other hand, reducing the hyperparameter’s value might lead to overfitting. The best value is usually determined by cross-validation. The approach’s biggest disadvantage is that the James-Stein estimator is defined only for Normal distribution, which is not the case for any binary classification problem.

- Binary encoder—converts a category into binary digits and each one is provided a separate column. Thanks to this encoding, we generate far fewer columns than with OHE. To illustrate, for a categorical feature with 100 unique categories, binary encoding just needs to create 7 features, instead of 100 in the case of OHE.

- Hashing encoder—uses a hashing function (often used in data encryption) to transform the categorical features. The outcome is similar to OHE, but with fewer features (we can control that with the encoder’s hyperparameters). It has two significant disadvantages. First, the encoding results in information loss, as the algorithm transforms the full set of available categories into fewer features. The second issue is called collision and it occurs as we are transforming a potentially high number of categories into a smaller set of features. Then, different categories could be represented by the same hash values.

- Catboost encoder—an improved variant of Leave One Out Encoding, which aims to overcome the issues of target leakage.

See also

- Micci-Barreca, D. 2001. “A preprocessing scheme for high-cardinality categorical attributes in classification and prediction problems.” ACM SIGKDD Explorations Newsletter 3(1): 27–32.

Investigating different approaches to handling imbalanced data

A very common issue when working with classification tasks is that of class imbalance, that is, when one class is highly outnumbered in comparison to the second one (this can also be extended to multi-class cases). In general, we are dealing with imbalance when the ratio of the two classes is not 1:1. In some cases, a delicate imbalance is not that big of a problem, but there are industries/problems in which we can encounter ratios of 100:1, 1000:1, or even more extreme.

Dealing with highly imbalanced classes can result in the poor performance of ML models. That is because most of the algorithms implicitly assume balanced distribution of classes. They do so by aiming to minimize the overall prediction error, to which the minority class by definition contributes very little. As a result, classifiers trained on imbalanced data are biased toward the majority class.

One of the potential solutions to dealing with class imbalance is to resample the data. On a high level, we can either undersample the majority class, oversample the minority class, or combine the two approaches. However, that is just the general idea. There are many ways to approach resampling and we describe a few selected methods below.

When working with resampling techniques, we only resample the training data! The test data stays intact.

Figure 14.13: Undersampling of the majority class and oversampling of the minority class

The simplest approach to undersampling is called random undersampling. In this approach, we undersample the majority class, that is, draw random samples (by default, without replacement) from the majority class until the classes are balanced (with a ratio of 1:1 or any other desired ratio). The biggest issue of this method is the information loss caused by discarding vast amounts of data, often the majority of the entire training dataset. As a result, a model trained on undersampled data can achieve lower performance. Another possible implication is a biased classifier with an increased number of false positives, as the distribution of the training and test sets is not the same after resampling.

Analogically, the simplest approach to oversampling is called random oversampling. In this approach, we sample multiple times with replacement from the minority class, until the desired ratio is achieved. This method often outperforms random undersampling, as there is no information loss caused by discarding training data. However, random oversampling comes with the danger of overfitting, caused by replicating observations from the minority class.

Synthetic Minority Oversampling Technique (SMOTE) is a more advanced oversampling algorithm that creates new, synthetic observations from the minority class. This way, it overcomes the previously mentioned problem of overfitting.

To create the synthetic samples, the algorithm picks an observation from the minority class, identifies its k-nearest neighbors (using the k-NN algorithm), and then creates new observations on the lines connecting (interpolating) the observation to the nearest neighbors. Then, the process is repeated for other minority observations until the classes are balanced.

Aside from reducing the problem of overfitting, SMOTE causes no loss of information, as it does not discard observations belonging to the majority class. However, SMOTE can accidentally introduce more noise to the data and cause overlapping of classes. This is because while creating the synthetic observations, it does not take into account the observations from the majority class. Additionally, the algorithm is not very effective for high-dimensional data (due to the curse of dimensionality). Lastly, the basic variant of SMOTE is only suitable for numerical features. However, SMOTE’s extensions (mentioned in the There’s more… section) can handle categorical features as well.

The last of the considered oversampling techniques is called Adaptive Synthetic Sampling (ADASYN) and it is a modification of the SMOTE algorithm. In ADASYN, the number of observations to be created for a certain minority point is determined by a density distribution (instead of a uniform weight for all points, as in SMOTE). This is how ADASYN’s adaptive nature enables it to generate more synthetic samples for observations that come from hard-to-learn neighborhoods. For example, a minority observation is hard to learn if there are many majority class observations with very similar feature values. It is easier to imagine that scenario in the case of only two features. Then, in a scatterplot, such a minority class observation might simply be surrounded by many of the majority class observations.

There are two additional elements worth mentioning:

- In contrast to SMOTE, the synthetic points are not limited to linear interpolation between two points. They can also lie on a plane created by three or more observations.

- After creating the synthetic observations, the algorithm adds a small random noise to increase the variance, thus making the samples more realistic.

Potential drawbacks of ADASYN include:

- A possible decrease in precision (more false positives) of the algorithm caused by its adaptability. This means that the algorithm might generate more observations in the areas with high numbers of observations from the majority class. Such synthetic data might be very similar to those majority class observations, potentially resulting in more false positives.

- Struggling with sparsely distributed minority observations. Then, a neighborhood can contain only one or very few points.

Resampling is not the only potential solution to the problem of imbalanced classes. Another one is based on adjusting the class weights, thus putting more weight on the minority class. In the background, the class weights are incorporated into calculating the loss function. In practice, this means that misclassifying observations from the minority class increases the value of the loss function significantly more than in the case of misclassifying the observations from the majority class.

In this recipe, we show an example of a credit card fraud problem, where the fraudulent class is observed in only 0.17% of the entire sample. In such cases, gathering more data (especially of the fraudulent class) might simply not be feasible, and we need to resort to other techniques that can help us in improving the models’ performance.

Getting ready

Before proceeding to the coding part, we provide a brief description of the dataset selected for this exercise. You can download the dataset from Kaggle (link in the See also section).

The dataset contains information about credit card transactions made over a period of two days in September 2013 by European cardholders. Due to confidentiality, almost all features (28 out of 30) were anonymized by using Principal Components Analysis (PCA). The only two features with clear interpretation are Time (seconds elapsed between each transaction and the first one in the dataset) and Amount (the transaction’s amount).

Lastly, the dataset is highly imbalanced and the positive class is observed in 0.173% of all transactions. To be precise, out of 284,807 transactions, 492 were identified as fraudulent.

How to do it...

Execute the following steps to investigate different approaches to handling class imbalance:

- Import the libraries:

import pandas as pd from sklearn.model_selection import train_test_split from sklearn.ensemble import RandomForestClassifier from sklearn.preprocessing import RobustScaler from imblearn.over_sampling import RandomOverSampler, SMOTE, ADASYN from imblearn.under_sampling import RandomUnderSampler from imblearn.ensemble import BalancedRandomForestClassifier from chapter_14_utils import performance_evaluation_report - Load and prepare data:

RANDOM_STATE = 42 df = pd.read_csv("../Datasets/credit_card_fraud.csv") X = df.copy().drop(columns=["Time"]) y = X.pop("Class") X_train, X_test, y_train, y_test = train_test_split( X, y, test_size=0.2, stratify=y, random_state=RANDOM_STATE )Using

y.value_counts(normalize=True)we can confirm that the positive class is observed in 0.173% of the observations.

- Scale the features using

RobustScaler:robust_scaler = RobustScaler() X_train = robust_scaler.fit_transform(X_train) X_test = robust_scaler.transform(X_test) - Train the baseline model:

rf = RandomForestClassifier( random_state=RANDOM_STATE, n_jobs=-1 ) rf.fit(X_train, y_train) - Undersample the training data and train a Random Forest classifier:

rus = RandomUnderSampler(random_state=RANDOM_STATE) X_rus, y_rus = rus.fit_resample(X_train, y_train) rf.fit(X_rus, y_rus) rf_rus_perf = performance_evaluation_report(rf, X_test, y_test)After random undersampling, the ratio of the classes is as follows:

{0: 394, 1: 394}.

- Oversample the training data and train a Random Forest classifier:

ros = RandomOverSampler(random_state=RANDOM_STATE) X_ros, y_ros = ros.fit_resample(X_train, y_train) rf.fit(X_ros, y_ros) rf_ros_perf = performance_evaluation_report(rf, X_test, y_test)After random oversampling, the ratio of the classes is as follows:

{0: 227451, 1: 227451}.

- Oversample the training data using SMOTE:

smote = SMOTE(random_state=RANDOM_STATE) X_smote, y_smote = smote.fit_resample(X_train, y_train) rf.fit(X_smote, y_smote) rf_smote_perf = peformance_evaluation_report( rf, X_test, y_test, )After oversampling with SMOTE, the ratio of the classes is as follows:

{0: 227451, 1: 227451}.

- Oversample the training data using ADASYN:

adasyn = ADASYN(random_state=RANDOM_STATE) X_adasyn, y_adasyn = adasyn.fit_resample(X_train, y_train) rf.fit(X_adasyn, y_adasyn) rf_adasyn_perf = performance_evaluation_report( rf, X_test, y_test, )After oversampling with ADASYN, the ratio of the classes is as follows:

{0: 227451, 1: 227449}.

- Use sample weights in the Random Forest classifier:

rf_cw = RandomForestClassifier(random_state=RANDOM_STATE, class_weight="balanced", n_jobs=-1) rf_cw.fit(X_train, y_train) rf_cw_perf = performance_evaluation_report( rf_cw, X_test, y_test, ) - Train the

BalancedRandomForestClassifier:balanced_rf = BalancedRandomForestClassifier( random_state=RANDOM_STATE ) balanced_rf.fit(X_train, y_train) balanced_rf_perf = performance_evaluation_report( balanced_rf, X_test, y_test, ) - Train the

BalancedRandomForestClassifierwith balanced classes:balanced_rf_cw = BalancedRandomForestClassifier( random_state=RANDOM_STATE, class_weight="balanced", n_jobs=-1 ) balanced_rf_cw.fit(X_train, y_train) balanced_rf_cw_perf = performance_evaluation_report( balanced_rf_cw, X_test, y_test, ) - Combine the results in a DataFrame:

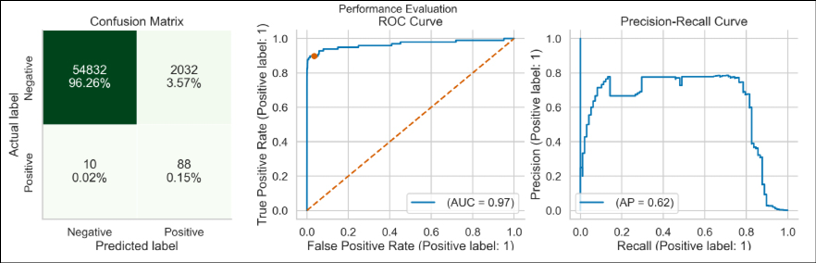

performance_results = { "random_forest": rf_perf, "undersampled rf": rf_rus_perf, "oversampled_rf": rf_ros_perf, "smote": rf_smote_perf, "adasyn": rf_adasyn_perf, "random_forest_cw": rf_cw_perf, "balanced_random_forest": balanced_rf_perf, "balanced_random_forest_cw": balanced_rf_cw_perf, } pd.DataFrame(performance_results).round(4).TExecuting the snippet prints the following table:

Figure 14.14: Performance evaluation metrics of the various approaches to dealing with imbalanced data

In Figure 14.14 we can see the performance evaluation of various approaches we have tried in this recipe. As we are dealing with a highly imbalanced problem (the positive class accounts for 0.17% of all the observations), we can clearly observe the case of the accuracy paradox. Many models have an accuracy of ≈99.9%, but they still fail to detect fraudulent cases, which are the most important ones.

The accuracy paradox refers to a case in which inspecting accuracy as the evaluation metric creates the impression of having a very good classifier (a score of 90%, or even 99.9%), while in reality it simply reflects the distribution of the classes.

Taking that into consideration, we compare the performance of the models using metrics that account for that. While looking at precision, the best performing approach is Random Forest with class weights. When considering recall as the most important metric, the best performing approach is either undersampling followed by a Random Forest model or a Balanced Random Forest model. In terms of the F1 score, the best approach seems to be the vanilla Random Forest model.

It is also important to mention that no hyperparameter tuning was performed, which could potentially improve the performance of all of the approaches.

How it works...

After importing the libraries, we loaded the credit card fraud dataset from a CSV file. In the same step, we additionally dropped the Time feature, separated the target from the features using the pop method, and created an 80–20 stratified train-test split. It is crucial to remember to use stratification when dealing with imbalanced classes.

In this recipe, we only focused on working with imbalanced data. That is why we did not cover any EDA, feature engineering, and so on. As all the features were numerical, we did not have to carry out any special encoding.

The only preprocessing step we did was to scale all the features using RobustScaler. While Random Forest does not require explicit feature scaling, some of the rebalancing approaches use k-NN under the hood. And for such distance-based algorithms, the scale does matter. We fitted the scaler using only the training data and then transformed both the training and test sets.

In Step 4, we fitted a vanilla Random Forest model, which we used as a benchmark for the more complex approaches.

In Step 5, we used the RandomUnderSampler class from the imblearn library to randomly undersample the majority class in order to match the size of the minority sample. Conveniently, classes from imblearn follow scikit-learn's API style. That is why we had to first define the class with the arguments (we only set the random_state). Then, we applied the fit_resample method to obtain the undersampled data. We reused the Random Forest object to train the model on the undersampled data and stored the results for later comparison.

Step 6 is analogical to Step 5, with the only difference being the use of the RandomOverSampler to randomly oversample the minority class in order to match the size of the majority class.

In Step 7 and Step 8, we applied the SMOTE and ADASYN variants of oversampling. As the imblearn library makes it very easy to apply different sampling methods, we will not go deeper into the description of the process.

In all the mentioned resampling methods, we can actually specify the desired ratio between classes by passing a float to the sampling_strategy argument. The number represents the desired ratio of the number of observations in the minority class over the number of observations in the majority class.

In Step 9, instead of resampling the training data, we used the class_weight hyperparameter of the RandomForestClassifier to account for the class imbalance. By passing “balanced" , the algorithm automatically assigns weights inversely proportional to class frequencies in the training data.

There are different possible approaches to using the class_weight hyperparameter. Passing "balanced_subsample" results in a similar weights assignment as in "balanced"; however, the weights are computed based on the bootstrap sample for every tree. Alternatively, we can pass a dictionary containing the desired weights. One way of determining the weights can be by using the compute_class_weight function from sklearn.utils.class_weight.

The imblearn library also features some modified versions of popular classifiers. In Steps 10 and 11, we used a modified Random Forest classifier, that is, Balanced Random Forest. The difference is that in Balanced Random Forest the algorithm randomly undersamples each bootstrapped sample to balance the classes. In practical terms, its API is virtually the same as in the vanilla scikit-learn implementation (including the tunable hyperparameters).

In the last step, we combined all the results into a single DataFrame and displayed the results.

There’s more...

In this recipe, we presented only some of the available resampling methods. Below, we list a few more possibilities.

Undersampling:

- NearMiss—the name refers to a collection of undersampling approaches that are essentially heuristic rules based on the Nearest Neighbors algorithm. They base the selection of the observations from the majority class to keep on the distance between the observations from the majority and minority classes. The rest is removed in order to balance the classes. For example, the NearMiss-1 method selects observations from the majority class that have the smallest average distance to the three closest observations from the minority class.

- Edited Nearest Neighbors—this approach removes any majority class observation whose class is different from the class of at least two of its three nearest neighbors. The underlying idea is to remove the instances from the majority class that are near the boundary of classes.

- Tomek links—in this undersampling heuristic we first identify all the pairs of observations that are nearest to each other (they are the nearest neighbors) but belong to different classes. Such pairs are called Tomek links. Then, from those pairs, we remove the observations that belong to the majority class. The underlying idea is that by removing those observations from the Tomek link we increase the class separation.

Oversampling:

- SMOTE-NC (Synthetic Minority Oversampling Technique for Nominal and Continuous)—a variant of SMOTE suitable for a dataset containing both numerical and categorical features. The vanilla SMOTE can create illogical values for one-hot-encoded features.

- Borderline SMOTE—this variant of the SMOTE algorithm will create new, synthetic observations along the decision boundary between the two classes, as those are more prone to being misclassified.

- SVM SMOTE—a variant of SMOTE in which an SVM algorithm is used to indicate which observations to use for generating new synthetic observations.

- K-means SMOTE—in this approach, we first apply k-means clustering to identify clusters with a high proportion of minority class observations. Then, the vanilla SMOTE is applied to the selected clusters and each of those clusters will have new synthetic observations.

Alternatively, we could combine the undersampling and oversampling approaches. The underlying idea is to first use an oversampling method to create duplicate or artificial observations and then use an undersampling method to reduce the noise or remove unnecessary observations.

For example, we could first oversample the data with SMOTE and then undersample it using random undersampling. imbalanced-learn offers two combined resamplers—SMOTE followed by Tomek links or Edited Nearest Neighbours.

In this recipe, we have only covered a small selection of the available approaches. Before changing topics, we wanted to mention some general notes on tackling problems with imbalanced classes:

- Do not apply under/oversampling on the test set.

- For evaluating problems with imbalanced data, use metrics that account for class imbalance, such as precision, recall, F1 score, Cohen’s kappa, or the PR-AUC.

- Use stratification when creating folds for cross-validation.

- Introduce under-/oversampling during cross-validation, not before. Doing so before leads to overestimating the model’s performance!

- When creating pipelines with resampling using the

imbalanced-learnlibrary, we also need to use theimbalanced-learnvariants of the pipeline. This is because the resamplers use thefit_resamplemethod instead of thefit_transformrequired byscikit-learn's pipelines. - Consider framing the problem differently. For example, instead of a classification task, we could treat it as an anomaly detection problem. Then, we could use different techniques, for example, isolation forest.

- Experiment with selecting a different probability threshold than the default 50% to potentially tune the performance. Instead of rebalancing the dataset, we can use the model trained using the imbalanced dataset to plot the false positive and false negative rates as a function of the decision threshold. Then, we can choose the threshold that results in the performance that best suits our needs.

We use the decision threshold to determine over which probability or score (a classifier’s output) we consider that the given observation belongs to the positive class. By default, that is 0.5.

See also

The dataset we have used in this recipe is available on Kaggle:

Additional resources are available here:

- Chawla, N. V., Bowyer, K. W., Hall, L. O., & Kegelmeyer, W. P. 2002. “SMOTE: synthetic minority oversampling technique.” Journal of artificial intelligence research 16: 321–357.

- Chawla, N. V. 2009. “Data mining for imbalanced datasets: An overview.” Data mining and knowledge discovery handbook: 875–886.

- Chen, C., Liaw, A., & Breiman, L. 2004. “Using random forest to learn imbalanced data.” University of California, Berkeley 110: 1–12.

- Elor, Y., & Averbuch-Elor, H. 2022. “To SMOTE, or not to SMOTE?.” arXiv preprint arXiv:2201.08528.

- Han, H., Wang, W. Y., & Mao, B. H. 2005, August. Borderline-SMOTE: a new over-sampling method in imbalanced data sets learning. In International conference on intelligent computing, 878–887. Springer, Berlin, Heidelberg.

- He, H., Bai, Y., Garcia, E. A., & Li, S. 2008, June. ADASYN: Adaptive synthetic sampling approach for imbalanced learning. In 2008 IEEE international joint conference on neural networks (IEEE world congress on computational intelligence), 1322–1328. IEEE.

- Le Borgne, Y.-A., Siblini, W., Lebichot, B., & Bontempi, G. 2022. Reproducible Machine Learning for Credit Card Fraud Detection – Practical Handbook.

- Liu, F. T., Ting, K. M., & Zhou, Z. H. 2008, December. Isolation forest. In 2008 Eighth Ieee International Conference On Data Mining, 413–422. IEEE.

- Mani, I., & Zhang, I. 2003, August. kNN approach to unbalanced data distributions: a case study involving information extraction. In Proceedings of workshop on learning from imbalanced datasets, 126: 1–7. ICML.

- Nguyen, H. M., Cooper, E. W., & Kamei, K. 2009, November. Borderline over-sampling for imbalanced data classification. In Proceedings: Fifth International Workshop on Computational Intelligence & Applications, 2009(1): 24–29. IEEE SMC Hiroshima Chapter.

- Pozzolo, A.D.et al. 2015. Calibrating Probability with Undersampling for Unbalanced Classification, 2015 IEEE Symposium Series on Computational Intelligence.

- Tomek, I. (1976). Two modifications of CNN, IEEE Transactions on Systems Man and Communications, 6: 769-772.

- Wilson, D. L. (1972). “Asymptotic properties of nearest neighbor rules using edited data.” IEEE Transactions on Systems, Man, and Cybernetics 3: 408–421.

Leveraging the wisdom of the crowds with stacked ensembles

Stacking (stacked generalization) refers to a technique of creating ensembles of potentially heterogeneous machine learning models. The architecture of a stacking ensemble comprises at least two base models (known as level 0 models) and a meta-model (the level 1 model) that combines the predictions of the base models. The following figure illustrates an example with two base models.

Figure 14.15: High-level schema of a stacking ensemble with two base learners

The goal of stacking is to combine the capabilities of a range of well-performing models and obtain predictions that result in a potentially better performance than any single model in the ensemble. That is possible as the stacked ensemble tries to leverage the different strengths of the base models. Because of that, the base models should often be complex and diverse. For example, we could use linear models, decision trees, various kinds of ensembles, k-nearest neighbors, support vector machines, neural networks, and so on.

Stacking can be a bit more difficult to understand than the previously covered ensemble methods (bagging, boosting, and so on) as there are at least a few variants of stacking when it comes to splitting data, handling potential overfitting, and data leakage. In this recipe, we follow the approach used in the scikit-learn library.

The procedure used for creating a stacked ensemble can be described in three steps. We assume that we already have representative training and test datasets.

Step 1: Train level 0 models

The essence of this step is that each of the level 0 models is trained on the full training dataset and then those models are used to generate predictions.

Then, we have a few things to consider for our ensemble. First, we have to pick what kind of predictions we want to use. For a regression problem, this is straightforward as we do not have any choice. However, when working with a classification problem we can use the predicted class or the predicted probability/score.

Second, we can either use only the predictions (whichever variant we picked before) as the features for the level 1 model or combine the original feature set with the predictions from the level 0 models. In practice, combining the features tends to work a bit better. Naturally, this heavily depends on the use case and the considered dataset.

Step 2: Train the level 1 model

The level 1 model (or the meta-model) is often quite simple and ideally can provide a smooth interpretation of the predictions made by the level 0 models. That is why linear models are often selected for this task.

The term blending often refers to using a simple linear model as the level 1 model. This is because the predictions of the level 1 model are then a weighted average (or blending) of the predictions made by the level 0 models.

In this step, the level 1 model is trained using the features from the previous step (either only the predictions or combined with the initial set of features) and some cross-validation scheme. The latter is used to select the meta-model’s hyperparameters and/or the set of base models to consider for the ensemble.

Figure 14.16: Low-level schema of a stacking ensemble with two base learners

In scikit-learn's approach to stacking, we assume that any of the base models could have a tendency to overfit, either due to the algorithm itself or due to some combination of its hyperparameters. But if that is the case, it should be offset by the other base models not suffering from the same problem. That is why cross-validation is applied to tune the meta-model and not the base models as well.

After the best hyperparameters/base learners are selected, the final estimator is trained on the full training dataset.

Step 3: Make predictions on unseen data

This step is the easiest one, as we are essentially fitting all the base models to the new observations to obtain the predictions, which are then used by the meta-model to create the stacked ensemble’s final predictions.

In this recipe, we create a stacked ensemble of models applied to the credit card fraud dataset.

How to do it...

Execute the following steps to create a stacked ensemble:

- Import the libraries:

import pandas as pd from sklearn.model_selection import (train_test_split, StratifiedKFold) from sklearn.metrics import recall_score from sklearn.preprocessing import RobustScaler from sklearn.svm import SVC from sklearn.naive_bayes import GaussianNB from sklearn.tree import DecisionTreeClassifier from sklearn.linear_model import LogisticRegression from sklearn.ensemble import RandomForestClassifier from sklearn.ensemble import StackingClassifier - Load and preprocess data:

RANDOM_STATE = 42 df = pd.read_csv("../Datasets/credit_card_fraud.csv") X = df.copy().drop(columns=["Time"]) y = X.pop("Class") X_train, X_test, y_train, y_test = train_test_split( X, y, test_size=0.2, stratify=y, random_state=RANDOM_STATE ) robust_scaler = RobustScaler() X_train = robust_scaler.fit_transform(X_train) X_test = robust_scaler.transform(X_test) - Define a list of base models:

base_models = [ ("dec_tree", DecisionTreeClassifier()), ("log_reg", LogisticRegression()), ("svc", SVC()), ("naive_bayes", GaussianNB()) ]In the accompanying Jupyter notebook, we specified the random state of all the models to which it is applicable. Here, we omitted that part for brevity.

- Train the selected models and calculate the recall using the test set:

for model_tuple in base_models: clf = model_tuple[1] if "n_jobs" in clf.get_params().keys(): clf.set_params(n_jobs=-1) clf.fit(X_train, y_train) recall = recall_score(y_test, clf.predict(X_test)) print(f"{model_tuple[0]}'s recall score: {recall:.4f}")Executing the snippet generates the following output:

dec_tree's recall score: 0.7551 log_reg's recall score: 0.6531 svc's recall score: 0.7041 naive_bayes's recall score: 0.8469Out of the considered models, the Naive Bayes classifier achieved the best recall on the test set.

- Define, fit, and evaluate the stacked ensemble:

cv_scheme = StratifiedKFold(n_splits=5, shuffle=True, random_state=RANDOM_STATE) meta_model = LogisticRegression(random_state=RANDOM_STATE) stack_clf = StackingClassifier( base_models, final_estimator=meta_model, cv=cv_scheme, n_jobs=-1 ) stack_clf.fit(X_train, y_train) recall = recall_score(y_test, stack_clf.predict(X_test)) print(f"The stacked ensemble's recall score: {recall:.4f}")Executing the snippet generates the following output:

The stacked ensemble's recall score: 0.7449Our stacked ensemble resulted in a worse score than the best of the individual models. However, we can try to further improve the ensemble. For example, we can allow the ensemble to use the initial features for the meta-model and replace the logistic regression meta-model with a Random Forest classifier.

- Improve the stacking ensemble with additional features and a more complex meta-model:

meta_model = RandomForestClassifier(random_state=RANDOM_STATE) stack_clf = StackingClassifier( base_models, final_estimator=meta_model, cv=cv_scheme, passthrough=True, n_jobs=-1 ) stack_clf.fit(X_train, y_train)

The second stacked ensemble achieved a recall score of 0.8571, which is better than the best of the individual models.

How it works...

In Step 1, we imported the required libraries. Then, we loaded the credit card fraud dataset, separated the target from the features, dropped the Time feature, split the data into training and test sets (using a stratified split), and finally, scaled the data with RobustScaler. The transformation is not necessary for tree-based models, however; we use various classifiers (each with its own set of assumptions about the input data) as base models. For simplicity, we did not investigate different properties of the features, such as normality. Please refer to the previous recipe for more details on those processing steps.

In Step 3, we defined a list of base learners for the stacked ensemble. We decided to use a few simple classifiers, such as a decision tree, a Naive Bayes classifier, a support vector classifier, and logistic regression. For brevity, we will not describe the properties of the selected classifiers here.

When preparing a list of base learners, we can also provide the entire pipelines instead of just the estimators. This can come in handy when only some of the ML models require dedicated preprocessing of the features, such as scaling or encoding categorical variables.

In Step 4, we iterated over the list of classifiers, fitted each model (with its default settings) to the training data, and calculated the recall score using the test set. Additionally, if the estimator had an n_jobs parameter, we set it to -1 to use all the available cores for computations. This way, we could speed up the model’s training, provided our machine has multiple cores/threads available. The goal of this step was to investigate the performance of the individual base models so that we could compare them to the stacked ensemble.

In Step 5, we first defined the meta-model (logistic regression) and the 5-fold stratified cross-validation scheme. Then, we instantiated the StackingClassifier by providing the list of the base classifiers, together with the cross-validation scheme and the meta-model. In the scikit-learn implementation of stacking, the base learners are fitted using the entire training set. Then, in order to avoid overfitting and improve the model’s generalization, the meta-estimator uses the selected cross-validation scheme to train the model on the out-samples. To be precise, it uses cross_val_predict for this task.

A possible shortcoming of this approach is that applying cross-validation only to the meta-learner can result in overfitting of the base learners. Different libraries (mentioned in the There’s more… section) employ different approaches to cross-validation with stacked ensembles.

In the last step, we tried to improve the performance of the stacked ensemble by modifying its two characteristics. First, we changed the level 1 model from logistic regression to a Random Forest classifier. Second, we allowed the level 1 model to use the features used by the level 0 base models. To do so, we set the passthrough argument to True while instantiating the StackingClassifier.

There’s more...

In order to get a better understanding of stacking, we can take a peek at the output of Step 1, which is the data being used to train the level 1 model. To get that data, we can use the transform method of a fitted StackedClassifier. Alternatively, we can use the familiar fit_transform method when the classifier was not fitted. In our case, we look into the stacked ensemble using both the predictions and original data as features:

level_0_names = [f"{model[0]}_pred" for model in base_models]

level_0_df = pd.DataFrame(

stack_clf.transform(X_train),

columns=level_0_names + list(X.columns)

)

level_0_df.head()

Executing the snippet generates the following table (abbreviated):

Figure 14.17: Preview of the input for the level 1 model in the stacking ensemble

We can see that the first four columns correspond to the predictions made by the base learners. Next to those, we can see the rest of the features, that is, those used by the base learners to generate their predictions.

It is also worth mentioning that when using the StackingClassifier we can use various outputs of the base models as inputs for the level 1 model. For example, we can either use the predicted probabilities/scores or the predicted labels. Using the default settings of the stack_method argument, the classifier will try to use the following types of outputs (in that specific order): predict_proba, decision_function, and predict.

If we had used stack_method="predict", we would have seen four columns of zeros and ones corresponding to the models’ class predictions (using the default decision threshold of 0.5).

In this recipe, we presented a simple example of a stacked ensemble. There are multiple ways in which we could try to further improve it. Some of the possible extensions include:

- Adding more layers to the stacked ensemble

- Using more diverse models, such as k-NN, boosted trees, neural networks, and so on

- Tuning the hyperparameters of the base classifiers and/or the meta-model

The ensemble module of scikit-learn also contains a VotingClassifier, which can aggregate the predictions of multiple classifiers. VotingClassifier uses one of the two available voting schemes. The first one is hard, and it is simply the majority vote. The soft voting scheme uses the argmax of the sums of the predicted probabilities to predict the class label.

There are also other libraries providing stacking functionalities:

vecstackmlxtendh2o

These libraries also differ in the way they approach stacking, for example, how they split the data or how they handle potential overfitting and data leakage. Please refer to the respective documentation for more details.

See also

Additional resources are available here:

- Raschka, S. 2018. “MLxtend: Providing machine learning and data science utilities and extensions to Python’s scientific computing stack.” The Journal of Open Source Software 3(24): 638.

- Wolpert, D. H. 1992. “Stacked generalization”. Neural networks 5(2): 241–259.

Bayesian hyperparameter optimization

In the Tuning hyperparameters using grid search and cross-validation recipe in the previous chapter, we described how to use various flavors of grid search to find the best possible set of hyperparameters for our model. In this recipe, we introduce an alternative approach to finding the optimal set of hyperparameters, this time based on the Bayesian methodology.

The main motivation for the Bayesian approach is that both grid search and randomized search make uninformed choices, either through an exhaustive search over all combinations or through a random sample. This way, they spend a lot of time evaluating combinations that result in far from optimal performance, thus basically wasting time. That is why the Bayesian approach makes informed choices of the next set of hyperparameters to evaluate, this way reducing the time spent on finding the optimal set. One could say that the Bayesian methods try to limit the time spent evaluating the objective function by spending more time on selecting the hyperparameters to investigate, which in the end is computationally cheaper.

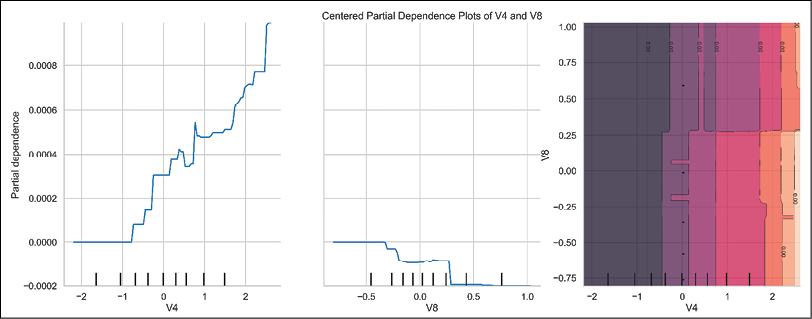

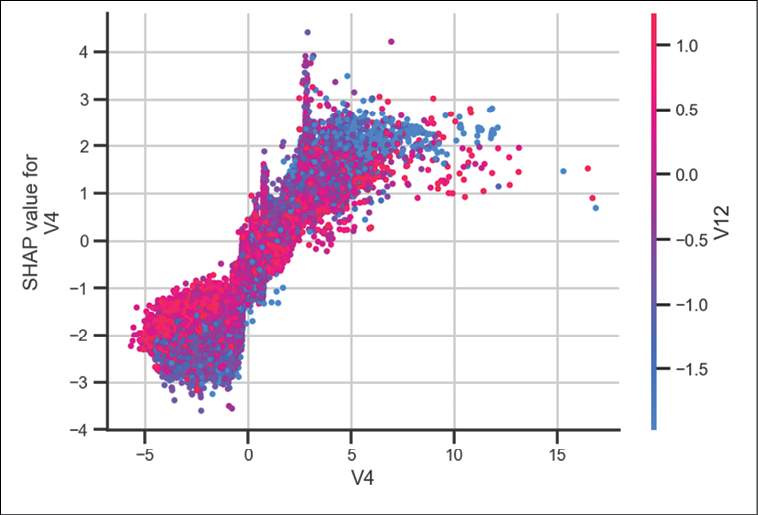

A formalization of the Bayesian approach is Sequential Model-Based Optimization (SMBO). On a very high level, SMBO uses a surrogate model together with an acquisition function to iteratively (hence “sequential”) select the most promising hyperparameters in the search space in order to approximate the actual objective function.