CONTENTS OF THIS CHAPTER

This chapter covers the following topics:

- the place of maintenance in the lifecycle;

- corrective, adaptive and perfective maintenance;

- evaluation and types of review;

- the role and selection of metrics for evaluation;

- key performance indicators (KPIs) and service level agreements (SLAs);

- references and further reading.

INTRODUCTION

Most of this book is devoted to the approaches and techniques used to develop a system. However, when the system is handed over for live use, it moves into a new stage – maintenance – and the project itself moves into an evaluation stage. A definition of the evaluation and maintenance stages is:

to recognise the need to evaluate a delivered system and to enhance it through subsequent maintenance.

Maintenance and evaluation of systems are subjects in their own right and many books have been written on these topics, some of which are noted at the end of the chapter. This chapter provides an overview of some of the main aspects of maintenance and evaluation. It should also be borne in mind that work in the maintenance stage is generally (although not always) carried out by a different team from the team involved in developing the system. The maintenance team often operate within a different management structure and, of course, due to the different nature of the work, different techniques are required.

MAINTENANCE IN THE SYSTEMS DEVELOPMENT LIFECYCLE

The term ‘maintenance’ can sometimes be misleading as it implies that the work concerns only actions carried out to correct faults or to keep the system in good running order. However, evidence suggests that, of all the work carried out in the maintenance stage, only around twenty per cent is actually corrective, with the remaining eighty per cent being related to system enhancements. This may reflect the current focus on delivering software in releases or increments. If a system undergoes significant enhancement over time, it may end up bearing little resemblance to the original system. Furthermore, when considered over the full life of the system, the costs incurred during the maintenance stage are typically high in relation to the cost of the original development; maintenance costs may amount to several times the development cost. This varies considerably from system to system, depending upon factors such as the lifetime of operation. For example, a pensions management system is likely to be in operation for many years, thus incurring high overall maintenance costs, whereas a system developed to help launch a new product may have a very short lifespan and require little maintenance before being abandoned.

Chapter 2 explained the different system development lifecycles and it is useful to look at how the maintenance stage is added to some of them.

Linear approach

The first set of lifecycles is linear in nature and includes the Waterfall and ‘V’ models. Figure 13.1 shows a conventional Waterfall lifecycle, with maintenance added as an extra stage at the end. However, this model doesn’t provide any insight into what is actually done in the maintenance stage; it simply treats maintenance as another stage that starts when the implementation stage is finished.

Figure 13.1 Maintenance in the Waterfall lifecycle

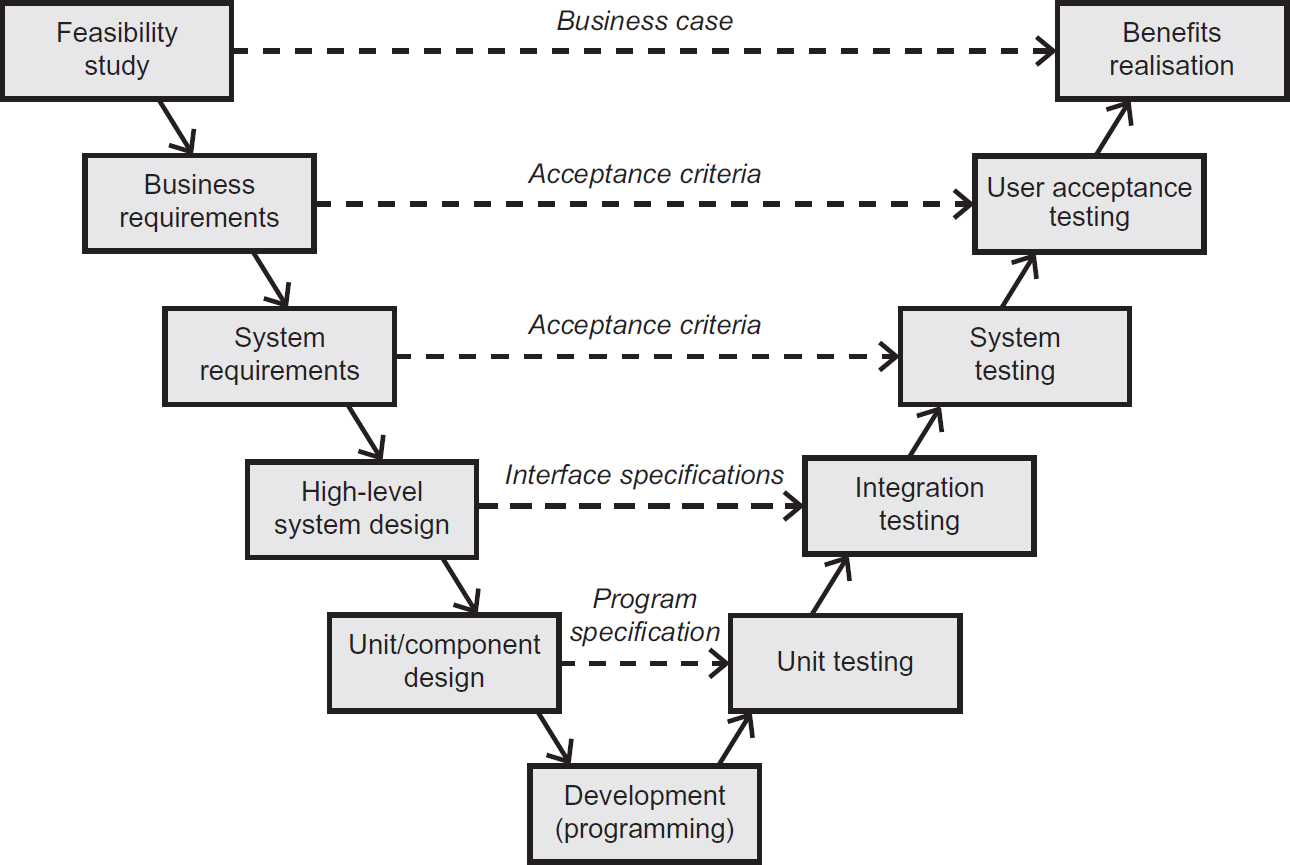

The ‘V’ model of systems development (Figure 13.2) provides a better representation, because maintenance in essence involves repeating the entire lifecycle. The maintenance stage of the system’s life will consist of a series of iterations of the ‘V’ model stages. Each iteration will, of course, be on a smaller scale than the original development, encompassing a selected set of additional requirements. So, when user acceptance testing is finished, we return to the first stage (business requirements) and continue down and then up the ‘V’ as before. This sequence will probably be repeated many times in order to maintain the system during its lifespan.

Figure 13.2 ‘V’ model lifecycle

There is another variant of the Waterfall model that explicitly shows the maintenance stage and this – by reason of its shape – is known as the ‘b’ model. It is illustrated in Figure 13.3.

The ‘b’ model shows that, once the system is in live operation, there is a separate set of stages where the users and others carry out evaluation and maintenance:

- they evaluate how well the system works;

- they record things that are wrong with it and, with the benefit of their experience in using the system, think of additional things they would like it to do;

- these ideas may then be examined in a feasibility study and, if approved, give rise to a small development project to enhance and improve the system.

This sequence continues throughout the lifetime of the system.

Figure 13.3 The ‘b’ model of systems development

Evolutionary approach

The second main approach concerns evolutionary systems development. This approach is based upon the Spiral lifecycle, which is used to some extent by many Agile approaches, such as RAD, DSDM, Scrum, XP, RUP, Kanban, ScrumBan and AgileUP.

Figure 13.4 shows the original Spiral lifecycle proposed by Barry Boehm.

This model suggests that a separate ‘maintenance’ stage is not needed, since the principles of continuous improvement and addition are inherent to, and built into, the approach. The essence of the model is that it is iterative, using an evolutionary prototyping approach to develop each system release. Any further release of the system following the initial deployment begins at the heart of the spiral and is developed using the same approach.

In practice, however, a system may be developed using an evolutionary approach but, once it moves into maintenance, be passed to a maintenance pool where other, standardised maintenance approaches are used.

Figure 13.4 Spiral lifecycle (Boehm)

MAINTENANCE CATEGORIES

The maintenance work undertaken after the system is implemented can fall into three broad categories:

Corrective maintenance

Corrective maintenance fixes faults and failures discovered within the system. The system may have been exhaustively tested before being handed over to the users for live use, but faults may still be found once it is used in the real world and subjected to real situations. If problems are discovered with the way the requirements were originally defined, or with the way the developers implemented some requirements, putting the resulting problems right also falls into this category.

Adaptive maintenance

Adaptive maintenance (or enhancement) involves making changes to the system to meet new or changed requirements. The system is being adapted to cater for these changes. These usually result from changes to the way the organisation operates, sometimes caused by external forces such as new legislation. For example, if the rules for calculating National Insurance contributions are changed in the budget, adjustments would be needed to a payroll system to encompass them.

Perfective maintenance

Perfective maintenance (optimisation) involves ‘tuning’ the system to make it work better. Perfective maintenance work is often directed at improving how well the system meets its non-functional requirements, for example to make response times faster or the system more intuitive to use.

Other types

Other categories include preventative maintenance – action taken on foreseeable or latent errors, but where the error has not yet caused any problems. A good historical example was the Year 2000 date format issue (‘Y2K’), when a lot of software was modified to avoid possible problems where dates were held in dd/mm/yy format.

Sometimes part of adaptive maintenance is classed as evolutive – where the users decide to change the requirements as a result of their experience in using the system, as opposed to system modifications made necessary by changes in the external environment, such as new legislation or the need to interface with another system.

TESTING IN THE MAINTENANCE STAGE

Whatever type of maintenance is being undertaken, there is a need to test the changes before they enter live running. The stages of testing involved here are basically the same as for the original development – unit (module) testing, integration testing, system testing and user acceptance testing – but maintenance also requires what is called regression testing.

Regression testing means, in effect, checking that the changes being introduced do not adversely affect something that previously worked correctly. For example, suppose one module of the system passes a field to another module, and a maintenance change increases the size of that field. Would the receiving module be able to handle the larger field, or would it fail in some way? The receiving module might, for instance, truncate the field, leading to an undesirable outcome; regression testing is needed to ensure that errors of this type do not occur.
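The field-size example can be made concrete with a small Python sketch that models the two modules as simple functions. For illustration only, it assumes that a customer-name field has been widened from 20 to 30 characters in the sending module while the receiving module still keeps only 20. The function names and field lengths are hypothetical. The check passes a set of test inputs through the hand-over and reports any value that does not arrive intact.

```python
# Hypothetical field lengths: the maintenance change widened the sending
# module's customer-name field, but the receiving module was not updated.
SENDER_FIELD_LENGTH = 30     # new length after the change
RECEIVER_FIELD_LENGTH = 20   # old length still assumed by the receiver


def send_customer_name(name: str) -> str:
    """Sending module: formats the name as a fixed-width field."""
    return name[:SENDER_FIELD_LENGTH].ljust(SENDER_FIELD_LENGTH)


def receive_customer_name(field: str) -> str:
    """Receiving module: keeps only its old width, silently truncating."""
    return field[:RECEIVER_FIELD_LENGTH].rstrip()


def regression_check(test_names):
    """Pass each name through both modules; return (sent, received) mismatches."""
    failures = []
    for name in test_names:
        received = receive_customer_name(send_customer_name(name))
        if received != name:
            failures.append((name, received))
    return failures


# Names that worked before the change should still pass; a name that uses
# the new, wider field exposes the truncation in the receiving module.
print(regression_check(["Smith", "Jones"]))               # prints []
print(regression_check(["Bartholomew Featherstonehaugh"]))
# prints [('Bartholomew Featherstonehaugh', 'Bartholomew Feathers')]
```

In practice, a regression suite would typically keep the previously passing cases as permanent tests. Whenever a field size changes, it would also add boundary cases at the old and new limits, here 20 and 21 characters.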

EVALUATION

It is important that the ‘evaluation’ aspects of a system are carefully considered. Evaluation should always be carried out and has a number of different objectives.

Evaluation of a system may vary depending on the type of system and on how it is used. It is useful to bear in mind the ‘CCP’ model when considering evaluation reviews:

Context:

Why is evaluation being carried out and who is to be involved? Which stakeholders are interested in the review and why?

Content:

What is being evaluated? It may be the conduct of the project, or the performance of the software, or an aspect of the expected benefits.

Process:

When is the review to be conducted, how is it to be carried out, and what activities does it involve?

Evaluation can take many forms and the terminology used for these can be misleading, as different organisations may use the same term to mean different things (and different terms to mean the same thing). The three major evaluation activities are the post-project review (how well the project was conducted), the post-implementation review (how well the delivered software meets the requirements) and the benefits review (how well the benefits have been realised). These reviews are described below, using the terms most commonly applied to them.

Post-project review

The post-project review is a one-off exercise conducted at the completion of the development project. This review is likely to take place almost immediately after the start of live running and examines how well the development project was conducted, in terms of what was done well and what could be improved. The output from this review is a Post-Project Review Report, sometimes called a Lessons Learned Report. The review is not an opportunity to apportion blame for things that went badly, but to agree on ‘what worked well’ and ‘what did not work well’ during the project, so that the findings can benefit future projects.

The review will look at a number of areas, including the following:

- Project management: How well was the project planned, monitored and controlled? How did the project perform against its constraints of time, cost and quality?

- Estimating: How good were the estimates and what useful software metrics were gained from the project?

- Risks and issues: How well were risks identified, analysed and dealt with?

- User involvement: Was there sufficient user involvement and how well were the users’ expectations managed?

Members of the project team – user representatives, analysts, designers, developers and testers – are involved in this review as well as the project sponsor. Input documents may include project progress reports, cost reports, outstanding error lists, issues logs and so on.

Post-implementation review

The post-implementation review looks at how successfully the software meets the requirements defined earlier in the project. It typically takes place once the system has been in live use for a while – perhaps three to six months after go-live (although this is not a fixed timescale). The post-implementation review (PIR) is concerned with reviewing the product produced by systems development, primarily the software, although other deliverables (user guides, training and so on) could also be included. It focuses on whether the product meets the objectives and requirements defined at the start of development in the project initiation document. It also addresses any unresolved issues still remaining with the product.

The participants in this review should include user representatives, business analysts and developers. The aim is to develop a ‘snagging list’ of items that require attention. This list should be prioritised in some way, for example according to whether an issue needs immediate attention, whether a fix can be incorporated in the next scheduled upgrade of the system, or even whether the issue can be lived with indefinitely.

In addition to the snagging list, another outcome of a post-implementation review may be changes to the software development and testing processes. This is designed to avoid the same software problems occurring in future projects, although this is more correctly dealt with in the post-project review. Some organisations combine the two types of review, although this is generally not a good idea as the objectives of the reviews are different.
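A prioritised snagging list can be held as a small data structure. The Python sketch below assumes three priority levels, taken from the examples given for the post-implementation review: immediate attention, next scheduled upgrade, and live with indefinitely. The `Snag` fields, descriptions and reference numbers are hypothetical. In practice, an organisation would more likely keep such a list in an issue-tracking tool.

```python
from dataclasses import dataclass
from enum import IntEnum


class Priority(IntEnum):
    """Example priorities; a lower value means more urgent."""
    IMMEDIATE = 1     # needs immediate attention
    NEXT_UPGRADE = 2  # fix in the next scheduled upgrade
    LIVE_WITH = 3     # can be lived with indefinitely


@dataclass
class Snag:
    reference: str    # hypothetical identifier, e.g. "PIR-001"
    description: str
    priority: Priority


def prioritise(snags):
    """Order the list from most to least urgent.

    sorted() is stable, so snags of equal priority keep the order in which
    they were raised at the review.
    """
    return sorted(snags, key=lambda snag: snag.priority)


def group_by_priority(snags):
    """Map each priority to the references of the snags assigned to it."""
    groups = {priority: [] for priority in Priority}
    for snag in snags:
        groups[snag.priority].append(snag.reference)
    return groups


# Hypothetical snags raised at a post-implementation review.
snags = [
    Snag("PIR-001", "Report footer misaligned", Priority.LIVE_WITH),
    Snag("PIR-002", "Invoice totals wrong for credit notes", Priority.IMMEDIATE),
    Snag("PIR-003", "Customer search slow at month end", Priority.NEXT_UPGRADE),
    Snag("PIR-004", "Typo in help text", Priority.LIVE_WITH),
]
for snag in prioritise(snags):
    print(snag.priority.name, snag.reference, snag.description)
```

Grouping by priority gives a direct view of the items to be agreed for the next scheduled upgrade.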

Benefits review

Benefits management is an ongoing process, which should have started much earlier in the lifecycle, at the point when the business case was agreed and the predicted business benefits established. A benefits review may take place at any appropriate point after the system has been delivered, once enough time has elapsed for the benefits to have appeared (or at least for the likelihood of their appearance to be assessed). The main objective of investing in new IT systems is to deliver business benefits. Benefits reviews are carried out to check whether, once the system is in live operation, these benefits have actually been realised.

It is important that the benefits are defined clearly at the outset of the project; otherwise it is impossible to determine whether the new system has been a success and whether it has delivered the intended benefits. The initial definition of the benefits should be contained in the business case, and this business case should be revisited throughout the development project to ensure that the benefits are still on track to be delivered. The business case may need to be changed as a result. Points at which the business case is reviewed may be predetermined, for example at the end of each stage. Alternatively, a review may be triggered by a major external factor, such as a significant change in legislation that affects the system under development.

The benefits review is concerned with benefits realisation, where the benefits are checked to see if those originally proposed in the business case have actually been delivered. Although the business benefits are often financial in nature, they may also be related to other non-financial aspects such as meeting legal requirements, increased quality of customer service, speedier responses to enquiries and so on. As a result, there may be many aspects to check in order to confirm (or otherwise) the realisation of a benefit. The place of benefits realisation as part of an extended ‘V’ model is shown in Figure 13.5.
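At the core of a benefits review is a comparison of each benefit's business-case target with what has actually been measured. The following Python sketch is illustrative only. The `Benefit` record, the example benefits and their figures are hypothetical. The `higher_is_better` flag is an assumption that covers benefits such as response times, where a lower value is the improvement. A benefit with no measurement yet is reported separately, because some benefits take time to appear.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Benefit:
    name: str
    target: float                  # value promised in the business case
    actual: Optional[float]        # value measured at the review (None = not yet measurable)
    higher_is_better: bool = True  # False for measures such as response time


def realisation_status(benefit: Benefit) -> str:
    """Classify one benefit as realised, not realised or not yet assessable."""
    if benefit.actual is None:
        return "not yet assessable"
    if benefit.higher_is_better:
        met = benefit.actual >= benefit.target
    else:
        met = benefit.actual <= benefit.target
    return "realised" if met else "not realised"


# Hypothetical benefits from a business case, with review-time measurements.
benefits = [
    Benefit("Annual processing cost saving (GBP)", 200_000, 215_000),
    Benefit("Average enquiry response time (hours)", 24, 30, higher_is_better=False),
    Benefit("Customer satisfaction score (0-10)", 8.0, None),
]
for b in benefits:
    print(f"{b.name}: {realisation_status(b)}")
```

Both financial and non-financial benefits fit the same record, provided each has been given a measurable target in the business case.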

THE ROLE AND SELECTION OF METRICS FOR EVALUATION

In general, a metric is a quantifiable measurement used to track and assess the performance of a product, plan or process. For an IT system, metrics may be used to assess and monitor its performance during live running, or to help establish whether the business benefits are being met.

Figure 13.5 Extended ‘V’ model showing benefits realisation stage

To be useful, metrics should be defined in line with the following characteristics:

Quantifiable

It should be possible to assign a value to the metric, for example that ninety-five per cent of the requirements were agreed to have been met during user acceptance testing, or that transactions are processed twenty-five per cent more quickly than previously.

Relevant

The metrics must be relevant to the business objectives for the project, for example by showing whether the system has contributed towards increased sales or a better image for the organisation.

Easy to collect

The effort of collecting the data should not be disproportionate to its importance. Some very useful measures, such as customers’ perceptions of a new product, may only be obtainable through time-consuming methods such as one-to-one interviews. In some contexts this effort may be considered worthwhile, but in others it may not.
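The two example metrics under ‘Quantifiable’ are simple percentages, but it helps to define exactly how each one is calculated. The Python functions below are hypothetical. They interpret ‘twenty-five per cent quicker’ as a 25 per cent reduction in processing time per transaction. That interpretation is an assumption, and such definitions are worth agreeing when the metric is set: a 25 per cent increase in throughput would be a different figure.

```python
def percentage_met(met: int, total: int) -> float:
    """Percentage of requirements agreed to have been met at user acceptance testing."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * met / total


def percentage_quicker(old_seconds: float, new_seconds: float) -> float:
    """Reduction in processing time per transaction, as a percentage of the old time."""
    if old_seconds <= 0:
        raise ValueError("old_seconds must be positive")
    return 100.0 * (old_seconds - new_seconds) / old_seconds


# Hypothetical figures: 190 of 200 requirements met; 40 s per transaction before, 30 s after.
print(percentage_met(190, 200))      # 95.0
print(percentage_quicker(40, 30))    # 25.0
```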

The metrics should be defined such that they focus on key aspects of the new system development. Examples include:

Business objectives

If the aim of the project was to improve profitability by replacing salespeople with a new website, data must be collected that enables the organisation to assess whether this has worked or not. This could include number and value of sales processed via the website, reduced cost of the salesforce and so on.

Functional fit

It is important that the software does what it was initially required to do. User acceptance testing provides some insight into functional fit by identifying how well the requirements have been met. A further measure is the number of corrective maintenance changes requested after the initial implementation.

Reliability

Reliability is another important aspect to be considered when evaluating the success of a system. Measures such as the percentage availability of the system during working hours, or the amount of ‘downtime’ experienced, fit into this category.

Usability

Usability is a key factor in ensuring the successful deployment of a system into the work environment. It can be difficult to measure, but factors such as how long it takes to train someone to use the system effectively, and how well-defined the help facilities are, should be considered.
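To measure reliability as availability ‘during working hours’, only the downtime that falls inside working time is counted. The Python sketch below assumes working hours of 09:00 to 17:00, Monday to Friday. It also assumes that outages are recorded as non-overlapping (start, end) pairs. These are illustrative assumptions rather than a standard definition. The sketch clips each outage to each working-hours window before adding up the downtime.

```python
from datetime import date, datetime, time, timedelta

# Assumed working hours for illustration: 09:00-17:00, Monday to Friday.
WORK_START = time(9, 0)
WORK_END = time(17, 0)


def working_windows(first_day: date, last_day: date):
    """Yield the (start, end) of each working-hours window, both dates inclusive."""
    day = first_day
    while day <= last_day:
        if day.weekday() < 5:  # Monday is 0, Friday is 4
            yield (datetime.combine(day, WORK_START), datetime.combine(day, WORK_END))
        day += timedelta(days=1)


def overlap(a_start, a_end, b_start, b_end) -> timedelta:
    """Length of time two intervals share (zero if they do not meet)."""
    return max(timedelta(0), min(a_end, b_end) - max(a_start, b_start))


def working_hours_availability(outages, first_day: date, last_day: date) -> float:
    """Percentage of working time in the period during which the system was up.

    Assumes the outages do not overlap one another, so nothing is counted twice.
    """
    windows = list(working_windows(first_day, last_day))
    total = sum((end - start for start, end in windows), timedelta(0))
    downtime = sum(
        (overlap(o_start, o_end, w_start, w_end)
         for o_start, o_end in outages
         for w_start, w_end in windows),
        timedelta(0),
    )
    return 100.0 * (1 - downtime / total)


# Hypothetical week: Monday 4 to Friday 8 March 2024 (40 working hours).
# One outage runs from 16:00 on Tuesday to 10:00 on Wednesday; only the
# last hour of Tuesday and the first hour of Wednesday count as downtime.
outages = [(datetime(2024, 3, 5, 16, 0), datetime(2024, 3, 6, 10, 0))]
print(working_hours_availability(outages, date(2024, 3, 4), date(2024, 3, 8)))
```

Here 2 of the 40 working hours are lost, giving 95 per cent availability during working hours. By contrast, the same 18-hour outage would count in full against a measure based on the total elapsed time.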

Key performance indicators (KPIs)

Key performance indicators are used to measure vital aspects of the system’s performance. Usually a number of KPIs are measured, which together provide an overall measure of how well the system is performing. KPIs should be SMART; that is, they should be Specific, Measurable, Attainable (or Achievable), Relevant (to the business) and Time-based.

Service level agreements (SLAs)

A service level agreement is generally an agreement between two (or more) parties, where one party is the customer and the other is the supplier of the service. An SLA forms a ‘contract’ between the parties in which the supplier agrees to provide the service to a predefined level or standard. This level of service is then monitored and, depending on the contract in place, penalties may be imposed on the supplier if the SLA is not met. In the case of a delivered system, there should be several SLAs in place. One SLA may state that the maintenance team will correct any high-priority faults and restore a full service within 24 hours. Another example SLA may be that the system will be available for use ninety-eight per cent of the total time within each calendar month.
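The two example SLAs can be expressed as simple checks. In the Python sketch below, the fault-record format, the use of an `as_of` time for faults that are still open, and the function names are all hypothetical. The 24-hour and ninety-eight per cent figures come from the examples above. Ninety-eight per cent of the total time in a calendar month allows 14.4 hours of downtime in a 30-day month but 14.88 hours in a 31-day month, so the check uses the actual length of the month.

```python
import calendar
from datetime import datetime, timedelta

RESTORE_WITHIN = timedelta(hours=24)  # SLA 1: high-priority faults fixed within 24 hours
AVAILABILITY_TARGET = 98.0            # SLA 2: available 98% of total time each calendar month


def restoration_breaches(faults, as_of: datetime):
    """Return references of high-priority faults that breach the 24-hour SLA.

    `faults` holds (reference, reported_at, restored_at) tuples; restored_at is
    None while a fault is still open, in which case it is measured up to as_of.
    """
    breaches = []
    for reference, reported_at, restored_at in faults:
        end = restored_at if restored_at is not None else as_of
        if end - reported_at > RESTORE_WITHIN:
            breaches.append(reference)
    return breaches


def monthly_availability(year: int, month: int, downtime: timedelta) -> float:
    """Percentage of the whole calendar month (24 hours a day) the system was available."""
    days_in_month = calendar.monthrange(year, month)[1]
    return 100.0 * (1 - downtime / timedelta(days=days_in_month))


def availability_sla_met(year: int, month: int, downtime: timedelta) -> bool:
    return monthly_availability(year, month, downtime) >= AVAILABILITY_TARGET


# Hypothetical April 2024 records.
faults = [
    ("F1", datetime(2024, 4, 1, 9, 0), datetime(2024, 4, 1, 20, 0)),   # 11 hours
    ("F2", datetime(2024, 4, 2, 9, 0), datetime(2024, 4, 3, 12, 0)),   # 27 hours
    ("F3", datetime(2024, 4, 5, 9, 0), None),                          # still open
]
print(restoration_breaches(faults, as_of=datetime(2024, 4, 5, 18, 0)))  # ['F2']
print(availability_sla_met(2024, 4, timedelta(hours=14)))               # True
print(availability_sla_met(2024, 4, timedelta(hours=15)))               # False
```

Any penalties for a breach depend on the contract in place, so they are deliberately not modelled here.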

REFERENCES

Boehm, B. (1986) A spiral model of software development and enhancement. ACM SIGSOFT Software Engineering Notes, August.

FURTHER READING

Grubb, P. and Takang, A. A. (2003) Software maintenance: concepts and practice. World Scientific Publishing, London.

Pigoski, T. M. (1996) Practical software maintenance: best practices for managing your software investment. Wiley, New York.