Chapter 4. Understanding the Technology

Introduction

In Chapter 3, we mentioned that, in addition to traditional investigative skills, a good cybercrime investigator needs a thorough understanding of the technology that is used to commit these crimes. Just as a homicide investigator must know something about basic human pathology to understand the significance of evidence provided by dead bodies—rigor mortis, lividity, blood-spatter patterns, and so forth—a cybercrime investigator needs to know how computers operate so as to recognize and preserve the evidence they offer.

A basic tenet of criminal investigation is that there is no “perfect crime.” No matter how careful, a criminal always leaves something of him- or herself at the crime scene and/or takes something away from the scene. These clues can be obvious, or they can be well hidden or very subtle. Even though a cybercriminal usually never physically visits the location where the crime occurs (the destination computer or network), the same rule of thumb as for physical crimes applies: Everyone who accesses a network, a system, or a file leaves a track behind. Technically sophisticated criminals might be able to cover those tracks, just as sophisticated and careful criminals are able to do in the physical world. But in many cases, they don't completely destroy the evidence; they only make the evidence more difficult to find.

For example, a burglar might take care to wipe all fingerprints off everything he's touched while inside a residence, removing the most obvious evidence (and often the evidence that's the most helpful to police) that proves he was there. But if as he does so, tiny bits of fabric from the rag that he uses adhere to some of the surfaces, and if he takes that rag with him and it is later found in his possession, police could still have a way to link him to the crime scene. Likewise, the cybercriminal may take care to delete incriminating files from his hard disk, even going so far as to reformat the disk. It will appear to those who aren't technically savvy that the data is gone, but an investigator who understands how information is stored on disk will realize that evidence could still be on the disk, even though it's not immediately visible (much like latent fingerprints), and will take the proper steps to recover and preserve that evidence.

Information technology (IT) professionals who are reading this book and who already have a good understanding of technology might wonder whether they can skip this chapter. We recommend that they read the chapter. It might be useful for those who anticipate working with law enforcement officers and crime scene technicians to see computer technology from a new perspective: how it can serve as evidence and which technological details are most important to understand from the investigative point of view. Most IT professionals are used to looking at computer and networking hardware, software, and protocols in terms of making things work. Investigators see these items in terms of what they can reveal that is competent, relevant, and material to the case. A network administrator familiar with the Windows operating system, for example, knows that it can be made to display file modification dates, but he or she might not have considered how crucial this information could be in an investigation. Similarly, a police investigator who is not trained in the technology might realize the importance of the information but not realize that such information is available, because it isn't obvious when the operating system is using default settings. Once again, each side has only half of the pieces to the puzzle. If the two sides work together, the puzzle falls into place that much more quickly.

In this chapter, we provide an overview of how computers process and store information. First we look at the hardware, then we discuss the software (particularly the operating system) on which personal computers run. We will also discuss some basic issues of networks, and introduce you to some of the other devices that may be a source of evidence in an investigation. By introducing you to these technologies, we will be better able to expand on them in later chapters, where we discuss acquiring evidence from them.

Understanding Computer Hardware

It's commonplace for people who operate in the business world or in any administrative or clerical capacity in the public sector to have exposure to computers. The fact that they use computers every day doesn't mean that they understand them, however. This makes sense. Most of us drive cars every day without necessarily knowing anything about mechanics. Even people with enough mechanical aptitude to change their own car's oil and spark plugs might not really understand how an internal combustion engine works. Similarly, we can turn on our televisions and change the channels without really knowing how programs are broadcast over the airwaves or via cable.

Most casual users take it for granted that if they put gas in a car, it takes them where they want to go, and if they pay the cable bill, the show goes on. Even though we don't understand these technologies, they've been around long enough that we're comfortable with them. To “first-generation” users, though, the old Model T Ford must have seemed like quite a mysterious and scary machine, and pictures that somehow invisibly flew through the air and landed inside a little box in people's living rooms seemed nothing short of magic to early TV owners.

We must remember that many of the people using computers today are members of the “first generation” of computer users—people who didn't grow up with computers in every office, much less in almost every home. To them, computers still retain the flavor of something magical, something unexplainable. Some skilled crime investigators fit into this category. Just as effective cybercrime fighting requires that we acquaint IT professionals with the legal process, it also requires that we acquaint law enforcement personnel with computer processing—how the machines work “under the hood.”

The first step toward this enlightenment is to open the case and look inside at all the computer's parts and pieces and what they do so that we can understand the role that each plays in creating and retaining electronic evidence.

Note

Police colleges and other training opportunities that provide courses on electronic search and seizure or computer forensics may have prerequisites that require a person to understand some of the specifics of how computers function and store information. Having a basic understanding of the topics covered in this chapter will be useful to officers or other individuals who plan to attend such courses.

Looking Inside the Machine

At its most basic level, all a computer really does is crunch numbers. As explained later in this chapter, all data—text, pictures, sounds, programs—must be reduced to numbers for the computer to “understand” it. According to the Merriam-Webster Online Dictionary (http://www.merriam-webster.com/dictionary/computer), a computer is “a programmable usually electronic device that can store, retrieve, and process data.” To a allow a person to enter this data so that it can be processed, saved, and retrieved according to preprogrammed instructions, a combination of different kinds of hardware is required. Although we commonly think of the computer as a box with a keyboard, monitor, and mouse attached to it, it is actually an assembly of different parts.

Regardless of whether it is a tiny handheld model or a big mainframe system, computers consist of the same basic components:

▪ A control unit

▪ A processing unit

▪ A memory unit

▪ Input/output units

Of course, there must be a way for all these components to communicate with one another. PC architecture is fairly standardized, which makes it easy to interchange parts between different computers. The foundation of the system is a main circuit board, fondly referred to as the motherboard.

The Role of the Motherboard

As shown in Figure 4.1, most of the computer's components plug into the main board, and they all communicate via the electronic paths (circuits) that are imprinted into the board. Additional circuit boards can be added via expansion slots. The electronic interface between the motherboard and these additional boards, cards, and connectors is called the bus. The bus is the pathway on the motherboard that connects the components and allows them to interact with the processor.

|

| Figure 4.1 Motherboard and Computer Components inside a Computer Case |

The motherboard is the PC's control unit. The motherboard is actually made up of many subcomponents:

▪ The printed circuit board (PCB) itself, which may be made of several thin layers or a single planar surface onto which the circuitry is affixed

▪ Voltage regulators, which reduce the 5V signal from the power supply to the voltage needed by the processor (typically 3.3V or less)

▪ Capacitors that filter the signals

▪ The integrated chipset that controls the interface between the processor and all the other components

▪ Controllers for the keyboard and I/O devices (integrated SCSI, onboard sound and video, and so on)

▪ An erasable programmable read-only memory (EPROM) chip that contains the core software that directly drives the system hardware

▪ A battery-operated CMOS chip that contains the Basic Input Output System (BIOS) settings and the real-time clock that maintains the time and date

▪ Sockets and slots for attaching other components (processor, main memory, cache memory, expansion cards, power supply)

The layout and organization of the components on the motherboard is called its form factor. The form factor determines the size and shape of the board and where its integrated ports are located, as well as the type of power supply it is designed to use. The computer case type must match the motherboard form factor or the openings in the back of the case won't line up correctly with the slots and ports on the motherboard. Typical motherboard form factors include:

▪ ATX/mini ATX/microATX Currently the most popular form factors; all current Intel motherboards are ATX or microATX. Port connectors and PS/2 mouse connectors are built in; access to components is generally more convenient, and the ATX power supply provides better air flow to reduce overheating problems. microATX provides a smaller motherboard size and smaller power supply, and supports AGP high-performance graphics. A subset of the microATX form factor is the FlexATX, which is a flexible form factor that allows various custom designs of the motherboard's shape.

▪ AT/Baby AT The most common PC motherboard form factor prior to 1997. The power supply connects to the board with two connectors labeled P8 and P9; reversing them can destroy the motherboard.

▪ LPX/Mini LPX Used by big brand-name computer manufacturers to save space in small cases. This form factor uses a “daughterboard” or riser card that plugs into the main board. Expansion cards then plug into the riser card.

▪ NLX A modernized and improved version of LPX. This form factor is also used by name-brand vendors.

Note

For additional information and specifications of various form factors, you can visit http://www.formfactors.org.

The Roles of the Processor and Memory

Two of the most important components in a computer are the processor and memory. Let's take a brief look at what these components do.

The Processor

The processor (short for microprocessor) is an integrated circuit on a single chip that performs the basic computations in a computer. The processor is sometimes called the CPU (for central processing unit), although many computer users use that term to refer to the PC “box”—the case and its contents—without monitor, keyboard, and other external peripherals.

The processor is the part of the computer that does all the work of processing data. Processors receive input in the form of strings of 1s and 0s (called binary communication, which we discuss later in this chapter) and use logic circuits, or formulas, to create output (also in the form of 1s and 0s). This system is implemented via digital switches. In early computers, vacuum tubes were used as switches; they were later replaced by transistors, which were much smaller and faster and had no moving parts (making them solid-state switches). Transistors were then grouped together to form integrated circuit chips, made of materials (particularly silicon) that conduct electricity only under specific conditions (in other words, a semiconductor). As more and more transistors were included on a single chip, the chips became increasingly smaller and less expensive to make. In 1971, Intel was the first to use this technology to incorporate several separate logic components into one chip and call it a microprocessor.

Processors are able to perform different tasks using programmed instructions. Modern operating systems allow multiple applications to share the processor using a method known as time slicing, in which the processor works on data from one application, then switches to the next (and the next and the next) so quickly that it appears to the user as though all the applications are being processed simultaneously. This method is called multitasking, and there are a couple of different ways it can be accomplished. Some computers have more than one processor. To take advantage of multiple processors, the computer must run an operating system that supports multiprocessing. We discuss multitasking and multiprocessing in more depth later in this chapter.

The processor chip itself is an ultra-thin piece of silicon crystal, less than a single millimeter in thickness, that has millions of tiny electronic switches (transistors) embedded in it. This embedding is done via photolithography, which involves photographing the circuit pattern and chemically etching away the background. The chip is part of a wafer, which is a round piece of silicon substrate, on which 16 to 256 individual chips are etched (depending on wafer size). The chips are then packaged, which is the process of matching up the tiny connection points on the chip with the pins that will connect the processor to the motherboard socket and encasing the fragile chip in an outer cover.

Before they're packaged, the chips are tested to ensure that they perform their tasks properly and to determine their rated speed. Processor speed is dependent on the production quality, the processor design, the process technology, and the size of the circuit and die. Smaller chips generally can run faster because they generate less heat and use less power. As processor chips have shrunk in size, they've gotten faster. The circuit size of the original 8088 processor chip was 3 microns; modern Pentium chips are 0.25 microns or less. Overheating decreases performance, and the more power is used, the hotter the chip gets. For this reason, new processors run at lower voltages than older ones. They also are designed as dual voltage chips, in which the core voltage (the internal voltage) is lower than the I/O voltage (the external voltage). The same motherboard can support processors that use different voltages, because they have voltage regulators that convert the power supply voltage to the voltage needed by the processor that is installed.

Even running at lower voltages, modern high-speed processors get very hot. Heat sinks and processor fans help keep the temperature down. A practice popular with hackers and hardware aficionados—called overclocking (setting the processor to run faster than its rating)—causes processors to overheat easily. Elaborate—and expensive—water-cooling systems and Peltier coolers that work like tiny solid-state air conditioners are available to address this problem.

The System Memory

The term memory refers to a chip on which data is stored. Some novice computer users might confuse the terms disk space and memory; thus, you hear the question, “How much memory do I have left on my hard drive?” In one sense, the disk does indeed “remember” data. However, the term memory is more accurately used to describe a chip that stores data temporarily and is most commonly used to refer to the system memory or random access memory (RAM) that stores the instructions with which the processor is currently working and the data is currently being processed. Memory chips of various types are used in other parts of the computer; there's cache memory, video memory, and so on. It is called random access memory because data can be read from any location in memory, in any order.

The amount of RAM installed in your computer affects how many programs can run simultaneously and the speed of the computer's performance. Memory is a common system bottleneck (that is, the slowest component in the system that causes other components to work at less than their potential performance speed). The data that is stored in RAM, unlike data stored on disks or in some other types of memory, is volatile. That means the data is lost when the system is shut down or the power is lost.

Each RAM chip has a large number of memory addresses or cells, organized in rows and columns. A single chip can have millions of cells. Dynamic random access memory (DRAM) pairs a transistor and capacitor together to create a memory cell, which represents a single bit of data. Each address holds a specified number of bits of data. Multiple chips are combined on a memory module, which is a small circuit board that you insert in a memory slot on the computer's motherboard. The memory controller, which is part of the motherboard chipset, is the “traffic cop” that controls which memory chip is written to or read at a given time. How does the data get from the memory to the processor? It takes the bus—the memory bus (or data bus), that is. As mentioned earlier, a bus is a channel that carries the electronic signals representing the data within the PC from one component to another.

RAM can be both read and written. Computers use another type of memory, read-only memory (ROM), for storing important programs that need to be permanently available. A special type of ROM mentioned earlier, erasable programmable ROM (EPROM), is used in situations in which you might need to occasionally, but not often, change the data. A common function of EPROM (or EEPROM, which is electrically erasable PROM) is to store “flashable” BIOS programs, which generally stay the same but might need to be updated occasionally. Technically, EPROM is not “read-only” 100 percent of the time, because it can be erased and rewritten, but most of the time it is only read, not written. The data stored in ROM (including EPROM) is not lost when the system is shut down.

Yet another type of memory used in PCs is cache memory. Cache memory is much faster than RAM but also much more expensive, so there is less of it. Cache memory holds recently accessed data. The cache is arranged in layers between the RAM and the processor. Primary, or Level 1 (L1), cache is fastest; when the processor needs a particular piece of data, the cache controller looks for it first in L1 cache. If it's not there, the controller moves on to the secondary, or L2, cache. If the controller still doesn't find the data, the controller looks to RAM for it. At this writing, L1 cache memory costs approximately 100 times as much as normal RAM or SDRAM, whereas L2 cache memory costs four to eight times the price of the most expensive available RAM. Cache speeds processing considerably because statistically, the data that is most recently used is likely to be needed again. Getting it from the faster cache memory instead of the slower RAM increases overall performance.

Note

There are other types of cache in addition to the processor's cache memory. For example, Web browsers create a cache on the hard disk where they store recently accessed Web pages, so if those same pages are requested again, the browser can access them from the local hard disk. This system is faster than going out over the Internet to download the same pages again. The word cache (pronounced “cash”) originally meant “a secret page where things are stored,” and appropriately, the Web cache can provide a treasure trove of information that might be useful to investigators, as we discuss in Chapter 15.

Cache memory uses static RAM (SRAM) instead of the dynamic RAM (DRAM) that is used for system memory. The difference is that SRAM doesn't require a periodic refresh to hold the data that is stored there, as DRAM does. This makes SRAM faster. Like DRAM, though, SRAM loses its data when the computer's power is turned off.

Storage Media

The term storage media is usually used to refer to a means of storing data permanently, and numerous media types can be used to store data more or less permanently, including those storing data magnetically or using optical disks. In this section, we'll look at a number of different digital media devices, as well as the most common method of storing data: hard disks.

Hard Disks

Hard disks are nonvolatile storage devices that are used to store and retrieve data quickly. Nonvolatile storage is physical media that retains data without electrical power. This means that no data is lost when the computer is powered off, making hard disks suitable for permanent storage of information. As we'll discuss in the sections that follow, hard disk drives write the digital data as magnetic patterns to rigid disks that are stored inside the hard disk drive (HDD). Because the HDD is installed in the computer, it is able to access and process the data faster than removable media such as floppy disks.

Although hard disks have been used for decades in computers, the use of them has expanded to other forms of technology. Today, you can find camcorders, game systems, and Digital Video Recorders that use hard disks to store data instead of magnetic tapes or other media. Regardless of their use, the hard disks and their related file systems all perform the same tasks of storing data so that it can be retrieved, processed, and viewed at a later time.

Overview of a Hard Disk

Although removable hard disks exist, most HDDs are designed for installation inside a computer, and for that reason were referred to as fixed disks. To avoid custom or proprietary hard disks needing to be purchased to fit inside different brands of computer, standards were developed early in the personal computer's history. These standards dictate the size and shape of the hard disk, as well as the interfaces used to attach them to the computer. As we saw when discussing motherboards, these standards are called form factors, and they refer to the physical external dimensions of the disk drive. The most common form factors that have been used over the past few decades are:

▪ 5.25-inch, which were the first hard drives that were used on PCs and were commonly installed in machines during the 1980s

▪ 3.5-inch, which is the common size form factor used in modern PCs

▪ 2.5-inch, which is the common size form factor used in laptop/notebook computers

Note

The two most common sizes of hard disk are the 2.5-inch form factor (used for laptops) and the 3.5-inch form factor (used for PCs). Although the numbers are generally associated with the width of the drive's platter (or sometimes the drive itself), this isn't necessarily the case. The 3.5-inch drives are generally 4 inches wide and use a platter that's 3.74 inches in width. They're called 3.5-inch form factor drives because they fit in the bay for a 3.5-inch floppy drive. Similarly, the obsolete 5.25-inch form factor was named as such because it fit in the 5.25-inch floppy drive bay.

The 5.25-inch disk drive is obsolete, although you may still find some in legacy machines. The first of these drives appeared on the market in 1980, but were succeeded in 1983 when the 3.5-inch drives appeared. When these became available, they were either mounted into the computer case's 3.5-inch floppy drive bays, or screwed into frames that allowed the 3.5-inch form factor to fit into the larger 5.25-inch bays.

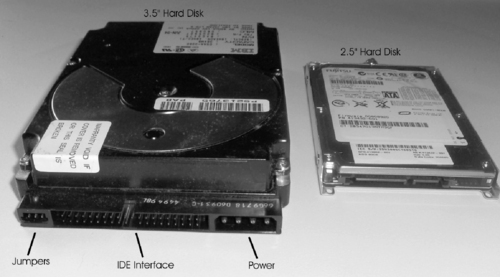

Even though the 3.5-inch form factor has been around for more than two decades, it continues to be the common size used in modern desktop computers. The other most popular form factor is the 2.5-inch disk, which is used in laptop computers. Figure 4.2 shows a 3.5-inch form factor hard disk on the left, and a 2.5-inch form factor drive on the right. When comparing the two, you can immediately see the difference in size between them, and see that both encase the internal mechanisms of the disk drive inside a sturdy metal case. This is to prevent dust and other foreign elements from coming into contact with the internal components, which (as we'll see later) would cause the disk to cease functioning.

|

| Figure 4.2 Hard Disks |

In looking at the 3.5-inch hard disk depicted on the left of Figure 4.2, you will see that the HDD has several connections that allow it to be installed in a computer. The 2.5-inch hard disk doesn't have these same components because it is installed differently in a laptop; a cover is removed from the laptop (generally on the back of the computer), where the 2.5-inch HDD is inserted into a slot. The 3.5-inch HDD needs to be installed in the computer using several different components outside the HDD:

▪ Jumpers

▪ Hard disk interface

▪ Power connector

Jumpers are a connector that works as an on/off switch for the hard disk. A small piece of plastic with metal connectors inside is placed over two of the metal pins to create an electrical circuit. The existence of the circuit lets the computer know whether the hard disk is configured in one of the following two roles:

▪ Master The primary hard disk that is installed on a computer. The master is the first hard disk that the computer will attempt to access when booting up (that is, starting), and it generally contains the operating system that will be loaded. If only one hard disk is installed on a machine, that hard disk is the master drive.

▪ Slave The secondary hard disk that is installed on a computer. Slave drives are generally used for additional data storage, and as a location where additional software is installed.

The power connector on a hard disk used to plug the hard disk into the computer's power supply. A power cable running from the power supply is attached to the hard disk, allowing it to receive power when the computer is started.

The hard disk interface is used to attach the hard disk to the computer so that data can be accessed from the HDD. Although we'll discuss hard disk interfaces in greater detail later in this chapter, the one shown in Figure 4.2 is an IDE hard disk interface, which is one of the most popular interfaces used on HDDs. A thin, flat cable containing parallel wires called a ribbon cable is inserted into the interface on the HDD, while the other end of the ribbon cable is plugged into the disk drive controller. As a result of this configuration, the computer can communicate with the HDD and access its data.

The Evolution of Hard Disks

The hard disk is usually the primary permanent storage medium in a PC. However, the earliest PCs didn't have hard disks. In fact, early computers (prior to the PC) didn't have any sort of data storage medium. You had to type in every program that you wanted to run, each time you ran it. Later, punched cards or tape was used to store programs and data. The next advancement in technology brought us magnetic tape storage; large mainframes used big reels of tape, whereas early microcomputers used audiocassette tapes to store programs and data. By the time the IBM PC and its clones appeared, computers were using floppy disks (the 5.25-inch type that really was floppy). More expensive models had two floppy drives (which we'll discuss later in this chapter), one for loading programs and a second for saving data—but still no hard disk.

The first hard disks that came with PCs provided 5 megabytes (MB) of storage space—a huge amount, compared to floppies. The IBM PC XT came with a gigantic 10MB hard disk. Today's hard disks are generally measured in hundreds of gigabytes (GB), at prices far lower than those first comparatively tiny disks, with arrays of them measured in terabytes (TB). Despite the fact that they're much bigger, much faster, less fragile, and more reliable, the hard disks of today are designed basically the same way as those of years ago.

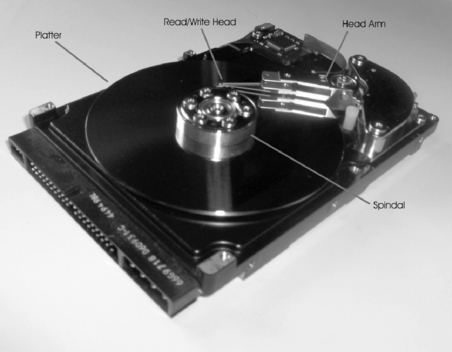

Disk Platter

Although there are a number of external elements to a hard disk, the major components are inside the HDD. As shown in Figure 4.3, hard disks comprise from one to several platters, which are flat, round disks that are mounted inside the disk. The platters are stacked one on top of another on a spindle that runs through a hole in the middle of each platter, like LPs on an old-time record player. A motor is attached to the spindle that rotates the platters, which are made of some rigid material (often aluminum alloy, glass, or a glass composite) and are coated with a magnetic substance. Electromagnetic heads write information onto the disks in the form of magnetic impulses and read the recorded information from them.

|

| Figure 4.3 Inside Components of a Hard Disk |

Data can be written to both sides of each platter. The information is recorded in tracks, which are concentric circles in which the data is written. The tracks are divided into sectors (smaller units). Thus, a particular bit of data resides in a specific sector of a specific track on a specific platter. Later in this chapter, when we discuss computer operating systems and file systems, you will see how the data is organized so that users can locate it on the disk.

The spindles of a hard disk spin the platters at high speeds, with the spindles on most IDE hard disks spinning platters at thousands of revolutions per minute (rpm). The read/write head moves over the platter and reads or writes data to the platter. When there is more than one platter in the hard disk, each platter usually has a read/write head on each of its sides. Smaller platter sizes do more than save space inside the computer; they also improve disk performance (seek time) because the heads don't have to move as far.

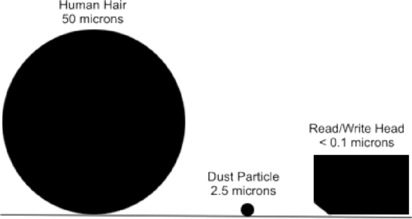

To read and write the magnetic information on the hard disk, the read/write head of the HDD is positioned incredibly close to the platter. It floats less than 0.1 micron over the surface of the platter. A micron (or micrometer) is one one-millionth of a meter, meaning that the read/write is less than one-tenth of one one-millionth of a meter from the platter's surface. To illustrate this, Figure 4.4 compares the sizes of a read/write head and the sizes of an average dust particle (which is 2.5 microns) and an average human hair (which is 50 microns). In looking at the differences in size, it is easy to see how a simple piece of dust or hair on a platter could cause the hard disk to crash, and why the internal components are sealed inside the hard disk assembly.

Hard Disk Sizes

IBM introduced its first hard disk in 1956, but the real “grandfather” of today's hard disks was the Winchester drive, which wasn't introduced until the 1970s. The standard physical size of disks at that time was 14 inches (the size of the platters that are stacked to make up the disk). In 1979, IBM made an 8-inch disk, and Seagate followed that in 1980 with the first 5.25-inch hard disk, which was used in early PCs. Three years later, disks got even smaller; the 3.5-inch disk was introduced. This became a standard for PCs. Much smaller disks (2.5 inches) were later developed for use in laptop and notebook computers. The IBM “microdrive” shrunk the diameter of the platter to 1 inch, connecting to a laptop computer via a PC Card (also called PCMCIA, for the Personal Computer Memory Card International Association that created the standard).

|

| Figure 4.4 Comparison of Objects to a Read/Write Head's Distance from the Platter |

Tracks

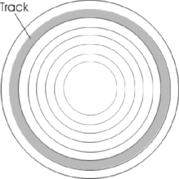

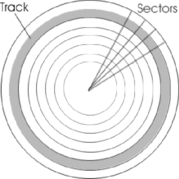

While the platters in a hard disk spin, the read/write head moves into a position on the platter where it can read data. The part of the platter passing under the read/write head is called a track. Tracks are concentric circles on the disk where data is saved on the magnetic surface of the platter. These thin concentric rings or bands are shown in Figure 4.5 and although a full number of tracks aren't shown in this figure, a single 3.5-inch hard disk can have thousands of tracks. The tracks hold data, and pass beneath the stationary read/write head as the platter rotates.

|

| Figure 4.5 Tracks on a Hard Disk |

Tracks and sectors (which we'll discuss in the next section) are physically defined through the process of low-level formatting (LLF), which designates where the tracks and sectors are located on each disk. Although in the 1980s people would perform an LLF on their hard disk themselves, typically this is done at the factory where the hard disk is made. It is necessary for the tracks and sectors to be present so that the areas where data is written to are visible to the disk controller, and the operating system can perform a high-level format and generate the file structure.

Because there are so many tracks on a platter, they are numbered so that the computer can reference the correct one when reading and writing data. The tracks are numbered from zero to the highest numbered track (which is typically 1,023), starting from the outermost edge of the disk to the track nearest the center of the platter. In other words, the first track on the disk is the track on the outer edge of the platter, and the highest numbered disk is close to the center.

Note

Although we discuss tracks and sectors on hard disks here, other media also use these methods of formatting the surface of a disk to store data. For example, a 1.44MB floppy disk has 160 tracks.

Sectors

Sectors are segments of a track, and are the smallest physical storage unit on a disk. As mentioned, the hard disk comprises predefined tracks that form concentric rings on the disk. As seen in Figure 4.6, the disk is further organized by dividing the platter into pie slices, which also divide the tracks into smaller segments. These segments are called sectors, and are typically 512 bytes (0.5KB) in size. By knowing the track number and particular sector in which a piece of data is stored, a computer is able to locate where data is physically stored on the disk.

|

| Figure 4.6 Sectors on a Hard Disk |

Just as tracks on a hard disk are numbered, an addressing scheme is also associated with sectors. When a low-level format is performed at the factory, a number is assigned to the sector by writing a number immediately before the sector's contents. This number in this header identifies the sector address, so it can be located on the disk.

Bad Sectors

At times, areas of a hard disk can be damaged, making them unusable. Bad sectors are sectors of a hard disk that cannot store data due to a manufacturing defect or accidental damage. When sectors become unusable, it only means that those areas of the disk cannot be used, not the entire disk itself. When you consider that an average 3.5-inch disk will have more than 1,000 tracks that are further segmented into millions of sectors, it isn't surprising that over the lifetime of a disk, a few sectors will eventually go bad.

Because bad sectors mean that damage has occurred at the surface of the disk, it cannot be repaired, and any data stored in that area of the hard disk is lost. It can, however, be marked as bad so that the operating system or other software doesn't write to this area again. To check and mark sectors as being unusable, special utilities are used. Programs such as Scandisk and Checkdisk (CHKDSK in DOS 8.3 notation) have been available under versions of Windows, and badblocks is a tool used on Linux systems. Each can detect a sector that has been damaged and mark it as bad.

Disk Partitions

Before a hard disk can be formatted to use a particular file system (which we'll discuss later in this chapter), the HDD needs to be partitioned. A partition is a logical division of the hard disk, allowing a single hard disk to function as though it were one or more hard disks on the computer. Even if different partitions aren't used, and the entire disk is set up as a single partition, a partition must be set so that the operating system knows the disk is going to be used in its entirety. Once a partition is set, it can be given a drive letter (such as C:, D:, and so on) and formatted to use a file system. When an area of the hard disk is formatted and issued a drive letter, it is referred to as a volume.

If more than one partition is set, multiple file systems supported by the operating system can be used on a single HDD. For example, on one volume of a Windows computer, you could have C: formatted as a FAT32 file system and D: formatted as a New Technology File System (NTFS). This allows you to use features unique to different file systems on the same computer.

On computers running Linux, DOS, or Windows operating systems, you can use different kinds of partitions. The two types of partitions are:

▪ Primary partition

▪ Extended partition

A primary partition is a partition on which you can install an operating system. A primary partition with an operating system installed on it is used when the computer starts to load the OS. Although a primary partition can exist without an operating system, on older Windows and DOS operating systems, the first partition installed had to be a primary partition. Modern versions of Windows allow up to four primary, or three primary and one extended partition (which we'll discuss next) on a single disk.

An extended partition is a partition that can be divided into additional logical drives. Unlike a primary partition, you don't need to assign it a drive letter and install a file system. Instead, you can use the operating system to create an additional number of logical drives within the extended partition. Each logical drive has its own drive letter and appears as a separate drive. Your only limitations to how many logical drives you create are the amount of free space available on the extended partition and the number of available drive letters you have left on your system.

System and Boot Partitions

When a partition is created, it can be designated as the boot partition, system partition, or both. A system partition stores files that are used to boot (start) the computer. These are used whenever a computer is powered on (cold boot) or restarted from within the operating system (warm boot). A boot partition is a volume of the computer that contains the system files used to start the operating system. Once the boot files on the system partition have been accessed and have started the computer, the system files on the boot partition are accessed to start the operating system. The system partition is where the operating system is installed. The system and boot partitions can exist as separate partitions on the same computer, or on separate volumes.

Note

Don't get too confused about the purposes of the boot and system partitions. The names are self-explanatory if you reverse their actual purposes. Remember that the system partition is used to store boot files, and the boot partition is used to store system files (that is, the operating system). On many machines, both of these are on the same volume of the computer.

Boot Sectors and the Master Boot Record

Although many sectors may exist on an HDD, the first sector (sector 0) on a hard disk is always the boot sector. This sector contains codes that the computer uses to start the machine. The boot sector is also referred to as the Master Boot Record (MBR). The MBR contains a partition table, which stores information on which primary partitions have be created on the hard disk so that it can then use this information to start the machine. By using the partition table in the MBR, the computer can understand how the hard disk is organized before actually starting the operating system that will interact with it. Once it determines how partitions are set up on the machine, it can then provide this information to the operating system.

Note

At times, you'll hear about boot viruses that infect your computer when it's started, which is why users have been warned never to leave a floppy disk or other media in a bootable drive when starting a machine. Because the MBR briefly has control of the computer when it starts, a boot virus will attempt to infect the boot sector to infect the machine immediately after it's started, and before any antivirus (AV) software is started.

NTFS Partition Boot Sector

One of the many file systems we'll discuss later in this chapter is NTFS, which is used on many computers running Windows. Because NTFS uses a Master File Table (MFT) that's used to store important information about the file system, information on the location of the MFT and MFT mirror file is stored in the boot sector. To prevent this information from being lost, a duplicate of the boot sector is stored at the disk's logical center, allowing it to be recovered if the original information in the boot sector was corrupted.

Clusters

Clusters are groups of two or more consecutive sectors on a hard disk, and they are the smallest amount of disk space that can be allocated to store a file. As we've mentioned, a sector is typically 512 bytes in size, but data saved to a hard disk is generally larger than this. As such, more than one sector is required to save the data to the disk. To access the data quickly, the computer will attempt to keep this data together by writing the data to a contiguous series of sectors on the disk. In doing so, the read/write head can access the data on a single track of the hard disk. As such, the head doesn't need to move from track to track, increasing the time it takes to read and write data.

Unlike tracks and sectors of the hard disk, clusters are logical units of file storage. Clusters are managed by the operating system, which assigns a unique number to each cluster so that it can keep track of files according to the clusters they use. Although the computer will try to store files in contiguous clusters on the disk, this isn't always possible, and so data belonging to a single file may be split across the disk in different clusters. This is invisible to the user of the computer, who will open the file without any knowledge of whether the data is stored in clusters that are scattered across various areas of the disk.

Cluster Size

Because clusters are controlled by the operating system, the size of the cluster is determined by a number of factors, including the file system being used. When a disk is formatted, the option may exist to specify the size of the cluster being used. For example, in Windows XP, right-clicking on a drive in Windows Explorer displays a context menu that provides a Format menu item. When you click on this menu item the screen shown in Figure 4.7 is displayed. As shown in this figure, the dialog box provides the ability to choose the file system in which the disk will be formatted, and it provides a drop-down list called Allocation unit size. This drop-down list is where you choose what size clusters will be created when the disk is formatted.

|

| Figure 4.7 Specifying Cluster Size When Formatting a Disk |

The dialog box in Figure 4.7 provides options to allocate clusters in sizes of 512 bytes, 1,024 bytes, 2,048 bytes, and 4,096 bytes. If a cluster size isn't specified, Windows will use the other option to use a default allocation size. On computers running Windows 2003 Server, the default cluster sizes are those shown in Table 4.1.

Slack Space

Because clusters are a fixed size, the data stored in a cluster will use the entire space, regardless of whether it needs the entire cluster. For example, if you allocated a cluster size of 4,096 bytes, and saved a 10-byte file to the disk, the entire 4KB cluster would be used even though 4,086 bytes of space are wasted. This wasted space is called slack space or file slack. Slack space is the area of space between the end of a file and the end of the last cluster used by that data.

Because an operating system will track where a file is located using the clusters used to store the data, clusters will always be used and potential storage space will always be wasted. Essentially, slack space is the same as pouring a bottle of cola into a series of glasses. As the bottle is emptied, the first glasses are filled to the top, but the final glass will be only partially filled. Because the glass has already been used, it can't be filled with something else as well. In the same way, once a cluster has been allocated to store a file, it can't be used to store data associated with other files.

Because extra space must be allocated to hold a file, it is better that the sizes of the clusters are lower. When clusters are smaller, the amount of space in the final cluster used to store a file will have less space that is unused. Smaller clusters will limit the amount of wasted space and will utilize disk space more effectively.

On any system, there will always be a certain amount of disk space that's wasted. You can calculate the amount of wasted space using the following formula:

Although this formula isn't exact, it does give an estimate of how much disk space is wasted on a particular hard disk. The number of files includes the number of directories, and this amount is multiplied by half of the allocated cluster size. Therefore, if you had a cluster size of 2,048 bytes, you would divide this in half to make it 1,024 bytes (or 1KB). If there were 10,000 files on the hard disk, this would be multiplied by 1KB, making the amount of wasted space 10MB; that is, (2,048/2) * 10,000 = 10,000KB or 10MB.

Any tool used to acquire and analyze data on a hard disk should also examine the slack space. When a file is deleted from the disk, data will continue to reside in the unallocated disk space. Even if parts of the file are overwritten on the disk, data may be acquired from the slack space that could be crucial to your investigation.

Lost Clusters

As we mentioned, each cluster is given a unique number, and the operating system uses these to keep track of which files are stored in particular clusters on the hard disk. When you consider the thousands of files stored on a hard disk, and that each may be assigned to one or more clusters, it isn't surprising that occasionally a cluster gets mismarked. From time to time, an operating system will mark a cluster as being used, even though it hasn't been assigned to a file. This is known as a lost cluster.

Lost clusters are also known as lost allocation units or lost file fragments. In UNIX or Linux machines that refer to clusters as blocks, they are referred to as lost blocks or orphans. According to the operating system, these clusters don't belong to any particular file. They generally result from improperly shutting down the computer, loss of power, shutting down the computer without closing applications first, files not being closed properly, or ejecting removable storage such as floppy disks while the drive is reading or writing to the media. When these things occur, data in the cache may have been assigned a cluster, but it was never written because the machine lost power or shut down unexpectedly. Even though the cluster isn't actually used by a file, it isn't listed as being free to use by the operating system.

Although lost clusters are generally empty, such as when a cluster is allocated to a program but never released, lost clusters may contain data. If the system was incorrectly shut down (or some other activity occurred), the cluster may have had data written to it before the event occurred. This data may be a fragment of the file or other corrupted data.

Just as bad sectors can be marked as unusable using programs such as Scandisk or Checkdisk, these same programs can be used to identify lost clusters. These tools will find lost clusters, and can recover data that may have been stored in the cluster. The data is stored as files named file####.chk, and although most of the time they are empty and can be deleted, viewing the contents using Notepad or other tools to view text may reveal missing data that is important. In UNIX, you can use another program called Filesystem Check (fsck) to identify and fix orphans. With this tool, the lost blocks of data are saved in a directory called lost+found. If the data contained in these files doesn't have any useful information, it can simply be deleted. In doing so, the lost cluster is reassigned and disk space is freed up.

Disk Capacity

Disk capacity is the amount of data that a hard disk is capable of holding. The capacity is measured in bytes, which is 7 or 8 bits (depending on whether error correction is used). Bit is short for binary digit, and it is the smallest unit of measurement for data. It can have a value of 1 or 0, which respectively indicates on or off. A bit is abbreviated as b, whereas a byte is abbreviated as B. Because bits and bytes are incredibly small measurements of data, and the capacity of an HDD or other media is considerably larger, capacity is measured in increments of these values.

A kilobyte is abbreviated as KB, and although you might expect this to equal 1,000 bytes, it actually equals 1,024 bytes. This is because a kilobyte is calculated using binary (base 2) math instead of decimal (base 10) math. Because computers use this function to calculate the number of bytes used, a kilobyte is calculated as 210 or 1,024. These values increase proportionally, but to make it easier for laypeople to understand, the terms associated with the number of bytes are incremented by thousands, millions, and larger amounts of bytes. The various units used to describe the capacity of disks are as follows:

▪ Kilobyte (KB), which is actually 1,024 bytes

▪ Megabyte (MB), which is 1,024KB or 1,048,576 bytes

▪ Gigabyte (GB), which is 1,024MB or 1,073,741,824 bytes

▪ Terabyte (TB), which is 1,024GB or 1,099,511,627,776 bytes

▪ Petabyte (PB), which is 1,024TB or 1,125,899,906,842,624 bytes

▪ Exabyte (EB), which is 1,024PB or 1,152,921,504,606,846,976 bytes

▪ Zettabyte (ZB), which is 1,024EB or 1,180,591,620,717,411,303,424 bytes

▪ Yottabyte (YB), which is 1,024 ZB or 1,208,925,819,614,629,174,706,176 bytes

To put these monumental sizes in terms that are fathomable, consider that a single terabyte can hold roughly the equivalent of 1,610 CDs of data, or approximately the same amount of data stored in all the books of a large library.

Hard Disk Interfaces

The hard disk interface is one of several standard technologies used to connect the hard disk to the computer so that the machine can then access data stored on the hard disk. The interface used by an HDD serves as a communication channel, allowing data to flow between the computer and the HDD. Over the years, a number of different interfaces have been developed, allowing the hard disk to be connected to a disk controller that's usually mounted directly on the computer's motherboard. The most common hard disk interfaces include:

▪ IDE/EIDE/ATA

▪ SATA

▪ SCSI

▪ USB

▪ Fibre Channel

IDE/EIDE/ATA

IDE is an acronym for Integrated Drive Electronics, and EIDE is an acronym for Enhanced IDE. Integrated Drive Electronics is so named because the disk controller is built into, or integrated with, the disk drive's logic board. It is also referred to as Advanced Technology Attachment (ATA), a standard of the American National Standards Institute (ANSI). Almost all modern PC motherboards include two EIDE connectors. Up to two ATA devices (hard disks or CD-ROM drives) can be connected to each connector, in a master/slave configuration. One drive functions as the “master,” which responds first to probes or signals on the interrupt (a signal from a device or program to the operating system that causes the OS to stop briefly to determine what task to do next) that is shared with the other, “slave” drive that shares the same cable. User-configurable settings on the drives determine which will act as master and which as slave. Most drives have three settings: master, slave, and cable-controlled. If the latter is selected for both drives, the first drive in the chain will be the master drive.

SATA

SATA is an acronym for Serial Advanced Technology Attachment, and is the next generation that will probably replace ATA. It provides high data transfer rates between the motherboard and storage device, and uses thinner cables that can be used to hot-swap devices (plug in or unplug the devices while they're still operating). The ability to hot-swap devices has made SATA a possible successor to USB connections used with such things as external hard disks, which can be plugged into the computer to provide large removable storage or data.

SCSI

SCSI (pronounced “skuzzy”) is an acronym for Small Computer System Interface. SCSI is another ANSI standard that provides faster data transfer than IDE/EIDE. Some motherboards have SCSI connectors and controllers built in; for those that don't, you can add SCSI disks by installing a SCSI controller card in one of the expansion slots. There are a number of different versions of SCSI; later forms provide faster transfer rates and other improvements. Devices can be “chained” on a SCSI bus, each with a different SCSI ID number. Depending on the SCSI version, either eight or 16 SCSI IDs can be attached to one controller (with the controller using one ID, thus allowing seven or 15 SCSI peripherals).

USB

USB is an acronym for Universal Serial Bus. As we'll discuss later in this chapter, USB is used for a variety of different peripherals, including keyboards, mice, and other devices that previously required serial and parallel ports, as well as newer technologies such as digital cameras and digital audio devices. Because USB uses a bus topology, the devices can be daisy-chained together or connected to a USB hub, allowing up to 127 devices to be connected to the computer at one time.

In addition to peripherals, USB provides an interface for external hard disks. Hard disks can be mounted in cases that provide a USB connection that plugs into a USB port on the computer. Once plugged into the port, the computer then detects the device and installs it, allowing you to access any data on the external hard disk. The current standard for USB is USB 2.0, which is backward compatible to earlier 1.0 and 1.1 standards, and supports bandwidths of 1.5Mbps (megabits per second), 12.5Mbps, and 480Mbps. Using an external USB hard disk that supports USB 2.0 provides a fast exchange of data between the computer and the HDD.

Fibre Channel

Fibre Channel is another ANSI standard that provides fast data transfer, and uses optical fiber to connect devices. Several different standards apply to fiber channels, but the one that primarily applies to storage is Fibre Channel Arbitrated Loop (FC-AL). FC-AL is designed for mass storage devices, and is used for storage area networks (SANs). A SAN is a network architecture in which computers attach to remote storage devices such as optical jukeboxes, disk arrays, tape libraries, and other mass storage devices. Because optical fiber is used to connect devices, FC-AL supports transfer rates of 100Mbps, and is expected to replace SCSI for network storage systems.

Hard Disk Sizes

There are ways to completely erase the data on a disk, but the average user (and the average cybercriminal) will not usually take these measures. Software programs that “zero out” the disk do so by overwriting all the 1s and 0s that make up the data on the disk, replacing them with 0s. These programs are often called “wiping” programs. Some of these programs make several passes, overwriting what was already overwritten in the previous pass, for added security. However, in some cases, the data tracks on the disk are wider than the data stream that is written on them. This means that some of the original data might still be visible and recoverable with sophisticated techniques.

A strong magnet can also erase or scramble the data on magnetic media. This process is called degaussing. It generally makes the disk unusable without restoring the factory-installed timing tracks. The platters might have to be disassembled to completely erase all the data on all of them, but equipment is available that will degauss all the platters while they remain intact.

In very high-security environments such as sensitive government operations, disks that have contained classified information are usually physically destroyed (pulverized, incinerated, or exposed to an abrasive or acid) to prevent recovery of the data.

Digital Media Devices

Although to this point we've focused on the most common method of storing data, it is important to realize there are other data storage methods besides hard disks. There are several popular types of removable media, so called because the disk itself is separate from the drive, the device that reads and writes to it. There are also devices that attach to a computer through a port, allowing data to be transferred between the machine and storage device. In the sections that follow, we'll look at a number of different digital media devices, including:

Magnetic Tape

In the early days of computing, magnetic tapes were one of the few methods used to store data. Magnetic tapes consist of a thin plastic strip that has a magnetic coating, on which data can be stored. Early systems throughout the 1950s to the 1970s used magnetic tape on 10.5-inch tape, whereas home computers in the early 1980s used audiocassette tapes for storing programs and data. Today, magnetic tape is still commonly used to back up data on network servers and individual computers.

Magnetic tape is a relatively inexpensive form of removable storage, especially for backing up data. It is less useful for data that needs to be accessed frequently, because it is a sequential access medium. You have to move back and forth through the tape to locate the particular data you want. In other words, to get from file 1 to file 20, you have to go through files 2 through 19. This is in contrast to direct access media such as disks, in which the heads can be moved directly to the location of the data you want to access without progressing in sequence through all the other files.

Floppy Disks

In the early days of personal computing, floppy disks were large (first 8 inches, then 5.25 inches in diameter), thin, and flexible. Today's “floppies,” often and more accurately called diskettes, are smaller (3.5 inches), rigid, and less fragile. The disk inside the diskette housing is plastic and is coated with magnetic material. The drive into which you insert the diskette contains a motor to rotate the diskette so that the drive heads, made of tiny electromagnets, can read and write to different locations on the diskette. Standard diskettes today hold 1.44MB of data; SuperDisk technology (developed by Imation Corporation) provides for storing either 120MB or 240MB on diskettes of the same size. Although diskettes are still used, larger file sizes have created the need for removable media that store greater amounts of data.

Compact Discs and DVDs

CDs and DVDs are rigid disks a little less than 5 inches in diameter, made of hard plastic with a thin layer of coating. CDs and DVDs are called optical media because CD and DVD drives use a laser beam, along with an optoelectronic sensor, to write to and read the data that is “burned” into the coating material (a compound that changes from reflective to nonreflective when heated by the laser). The data is encoded in the form of incredibly tiny pits or bumps on the surface of the disc. CDs and DVDs work similarly, but the latter can store more data because the pits and tracks are smaller, because DVDs use a more efficient error correction method (that uses less space), and because DVDs can have two layers of storage on each side instead of just one.

CDs

The term CD originates from “Compact Disc” under which audio discs were marketed. Philips and Sony still hold the trademark to this name. Several different types of CDs have been developed over the years, with the first being CD Audio or Compact Disc Digital Audio (CDDA).

CD Audio discs are the first CDs that were used to record audio discs. Little has changed in CD physics since the origin of CD Audio discs in 1980. This is due in part to the desire to maintain physical compatibility with an established base of installed units, and because the structure of CD media was both groundbreaking and nearly ideal for this function.

CD-ROM

Until 1985, CDs were used only for audio. Then Philips and Sony introduced the CD-ROM standard. CD-ROM is an acronym for Compact Disc – Read Only Memory, and it refers to any data CD. However, the term has grown to refer to the CD-ROM drive used to read this optical storage medium. For example, when you buy software, the disc used to install the program is called an installation CD. Such a disc is capable of holding up to 700MB of data, and remains a common method of storing data.

DVDs

Originally, DVD was an acronym for Digital Video Disc and then later Digital Versatile Disc. Today it is generally agreed that DVD is not an acronym for anything. However, although these discs were originally meant to store video, they have become a common method of storing data. In fact, DVD-ROM drives are not only able to copy (rip) or create (burn) data on DVD discs, but also they are backward compatible and can copy and create CDs as well.

DVDs represent an evolutionary growth of CDs, with slight changes. Considering that the development of DVD follows the CD by 14 years, you can see that the CD was truly a revolutionary creation in its time. It is important to understand that both CDs and DVDs are electro optical devices, as opposed to nearly all other computer peripherals which are electromagnetic. No magnetic fields are involved in the reading or recording of these discs; therefore, they are immune to magnetic fields of any strength, unlike hard drives.

Due to their immunity to magnetic fields, CD and DVD media are unaffected by Electromagnetic Pulse (EMP) effects, X-rays, and other sources of electromagnetic radiation. The primary consideration with recordable CD media (and to a lesser extent, manufactured media) is energy transfer. It takes a significant amount of energy to affect the media that the writing laser transfers to the disc. Rewritable discs (which we'll discuss later) require even more energy to erase or rewrite data.

This is in direct contrast to floppy discs and hard drives, which can be affected by electromagnetic devices such as Magnetic Resonance Imaging (MRI) machines, some airport X-ray scanners, and other devices that create a strong magnetic field. CDs and DVDs are also immune to EMPs from nuclear detonations.

It is important to understand that CD and DVD media are read with light and that recordable discs are written with heat. Using an infrared (IR) laser, data is transferred to a CD or DVD onto a small, focused area that places all of the laser energy onto the target for transfer. It should be noted that all CD and DVD media are sensitive to heat (that is, higher than 120°F/49°C), and recordable media are sensitive to IR, ultraviolet (UV), and other potential intense light sources. Some rewritable media are affected by EPROM erasers, which use an intense UV light source. Various forensic alternative light sources can provide sufficient energy to affect optical media, especially if it is focused on a small area. It is not necessarily a question of heat but one of total energy transfer, which can result in heating.

Both CD and DVD media are organized as a single line of data in a spiral pattern. This spiral is more than 3.7 miles (or 6 kilometers [km]) in length on a CD, and 7.8 miles (or 12.5 km) for a DVD. The starting point for the spiral is toward the center of the disc, with the spiral extending outward. This means that the disc is read and written from the inside out, which is the opposite of how hard drives organize data.

With this spiral organization, there are no cylinders or tracks like those on a hard drive. The term track refers to a grouping of data for optical media. The information along the spiral is spaced linearly, thus following a predictable timing. This means that the spiral contains more information at the outer edge of the disc than at the beginning. It also means that if this information is to be read at a constant speed, the rotation of the disc must change between different points along the spiral.

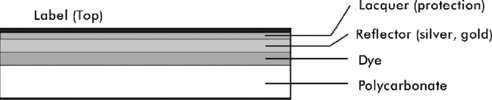

As shown in Figure 4.8, all optical media are constructed of layers of different materials. This is similar to how all optical media discs are constructed. The differences between different types of discs are as follows:

▪ CD-R The dye layer can be written to once.

▪ CD-ROM The reflector has the information manufactured into it and there is no dye layer.

▪ CD-RW The dye is replaced with multiple layers of different metallic alloys. The alloy is bi-stable and can be changed many times between different states.

▪ DVD DVDs are constructed of two half-thickness discs bonded together, even when only one surface contains information. Each half disc contains the information layer 0.6 millimeters (mm) from the surface of the disc.

|

| Figure 4.8 CD-R Construction |

DVD media consist of two half-thickness polycarbonate discs; each half contains information and is constructed similarly to CD media. DVD write-once recordable media use a dye layer with slightly different dyes than those used for CD-R media, but otherwise they are very similar physically. Manufactured DVD media have the information manufactured into the reflector and no dye layer is present. Rewritable DVD media use bi-stable alloy layers similar to those for CD rewritable media. The differences between manufactured, write-once, and rewritable media are physically similar between CD and DVD media.

The key to all recordable media types is the presence of a reflector with the ability to reflect laser energy. Data is represented by blocking the path to the reflector either by dye or by a bi-stable metallic alloy. The bottom of a CD is made of a relatively thick piece of polycarbonate plastic. Alternatively, the top is protected by a thin coat of lacquer. Scratches on the polycarbonate are out of focus when the disc is read, and minor scratches are ignored completely. It takes a deep scratch in the polycarbonate to affect the readability of a disc. However, even a small scratch in the lacquer can damage the reflector. Scratching the top of a disc can render it unreadable, which is something to consider the next time you place a disc on your desk top-down “to protect it.” A DVD has polycarbonate on both sides; therefore, it is difficult to scratch the reflector.

Types of DVDs

Just as several types of CDs are available for a variety of uses, a wide variety of DVDs are available as well. As mentioned previously, the storage capacity of a DVD is immense compared to that of a CD, and can range from 4.5GB on a single-layer, single-sided DVD to 17GB on a dual-layer, double-sided DVD. The various types of DVDs on the market include the following:

▪ DVD-R Stands for DVD minus Recordable. A DVD-R disc will hold up to 4.5GB of data, and is a write once-read many (WORM) medium. In other words, once it is written to, the data on the DVD cannot be modified.

▪ DVD+R Stands for DVD plus Recordable. A DVD+R disc will also hold up to 4.5GB of data, and is similar to the DVD-R. You should choose between DVD-R and DVD+R discs based on how you intend to use the disc. There is some evidence that DVD-R discs are more compatible with consumer DVD recorders than DVD+R discs; however, some consumer players that will only read DVD+R discs. DVD-R discs are often the best choice for compatibility if the disc being produced contains data files. Early DVD-ROM drives can generally read DVD-R discs but are incapable of reading DVD+R discs. DVD writers that only write DVD+R/RW discs will read DVD-R discs.

▪ DVD-RW Stands for DVD minus Read Write. This, like CD-RW discs, allows an average of 1,000 writes in each location on the disc before failing. A DVD-RW disc will hold up to 4.5GB of data and is recordable.

▪ DVD+R DL (dual-layer) Is an extension of the DVD standard to allow for dual-layer recording. Previously the only dual-layer discs were those manufactured that way. This allows up to 8.5GB of data to be written to a disc. Most current DVD drives support reading and writing DVD+R DL discs.

▪ DVD+RW Stands for DVD plus Read Write. This, like CD-RW discs, allows an average of 1,000 writes in each location on the disc before failing. A DVD+RW disc will hold up to 4.5GB of data and is recordable.

▪ DVD-RAM Is a relatively obsolete media format, which emphasized rewritable discs that could be written to more than 10,000 times. There were considerable interoperability issues with these discs and they never really caught on.

HD-DVD and Blu-ray

HD-DVD is an acronym for High Definition DVD, and is the high-density successor to DVD and a method of recording high-definition video to disc. Developed by Toshiba and NEC, a single-layer HD-DVD is capable of storing up to 15GB of data, whereas a dual-layer disc can store up to 30GB of data. Although developed for high-definition video, HD-DVD ROM drives for computers were released in 2006, allowing HD-DVD to be used as an optical storage medium for computers.

HD-DVDs require so much storage space because of the amount of data required to record high-definition television (HDTV) and video. A dual-layer HD-DVD can record eight hours of HDTV or 48 hours of standard video. The difference between the two is that that HDTV uses 1,125 lines of digital video, which requires considerably more storage space. HD-DVD ROM drives are used much the same way that VCRs were used to record video onto VHS tapes, and have a transfer rate of 36Mbps, which is 12Mbps more than the rate at which HDTV signals are transmitted. Similar to the format wars between Betamax and VHS, HD-DVD has been less popular than Blu-ray.

Like HD-DVD, Blu-ray is a high-density optical storage method that was designed for recording high-definition video. The name of this technology comes from the blue-violet laser that is used to read and write to the discs. A single-layer Blu-ray disc can store up to 25GB of data, whereas a dual-layer Blu-ray disc can store up to 50GB of data.

Although stand-alone Blu-ray and HD-DVD players and recorders are available, ones that will play either technology are also available. Also, certain Blu-ray drives allow users to record and play data on computers. In 2007, Pioneer announced the release of a Blu-ray drive that can record data to Blu-ray discs, as well as DVDs and CDs. In addition to this, Sony has also released its own rewritable drive for computers.

iPod and Zune

iPod is the brand name of portable media players developed by Apple in 2001. iPods were originally designed to play audio files, with capability to play media files added in 2005. Apple has introduced variations of the iPod, with different capabilities. For example, the full-size iPod stores data on an internal hard disk, whereas the iPod Nano and iPod Shuffle both use flash memory, which we'll discuss later in this chapter. Although iPod is a device created by Apple, the term has come to apply in popular culture to any portable media player.

iPods store music and video by transferring the files from a computer. Audio and video files can be purchased from iTunes, or can be acquired illegally by downloading them from the Internet using peer-to-peer (P2P) software or other Internet sites and applications, or sharing them between devices.

Unless you're investigating the illegal download of music or video files, where iPods become an issue during an investigation is through their ability to store other data. iPods can be used to store and transfer photos, video files, calendars, and other data. As such, they can be used as storage devices to store any file that may be pertinent to an investigation. Using the Enable Disk Use option in iTunes activates this function, and allows you to transfer files to the iPod. Because any media files are stored in a hidden folder on the iPod, you will need to enable your computer to view hidden files to browse any files stored on the iPod.

iPods use a file system that is based on the computer formatting the iPod. When you plug an iPod into a computer, it will use the file system corresponding to the type of machine to which it's connecting. If you were formatting it on Windows XP, it would use a FAT32 file system format, but if you were formatting it on a machine running Macintosh OS X, it would be formatted to use the HFS Plus file system. The exception to this is the iPod Shuffle, which uses only the FAT32 file system.

Entering late in the portable digital media market is Microsoft, which developed its own version of the iPod in 2006. Zune is a portable media player that allows you to play both audio and video, as well as store images and other data. Another feature of this device is that you can share files wirelessly with others who use Zune. In addition to connecting to a computer, it can also be connected to an Xbox using USB. Ironically, although it is compatible with only Xbox 360 and Windows, it was incompatible with Windows Vista until late 2006.

Flash Memory Cards

Flash memory cards and sticks are popular for storing and transferring varying amounts of data. Memory cards have typically ranged from 8MB to 512MB, but new cards are capable of storing upward of 8GB of data. They are commonly used for storing photos in digital cameras (and transferring them to PCs) and for storing and transferring programs and data between handheld computers (Pocket PCs and Palm OS devices). Although called “memory,” unlike RAM, flash media is nonvolatile storage; that means the data is retained until it is deliberately erased or overwritten. PC Card (PCMCIA) flash memory cards are also available. Flash memory readers/writers come in many handheld and some laptop/notebook computers, and external readers can be attached to PCs via USB or serial port. Flash memory cards include:

▪ Secure Digital (SD) Memory Card

▪ CompactFlash (CF) Memory Card

▪ Memory Stick (MS) Memory Card

▪ Multi Media Memory Card (MMC)

▪ xD-Picture Card (xD)

▪ SmartMedia (SM) Memory Card

USB Flash Drives

USB flash drives are small, portable storage devices that use a USB interface to connect to a computer. Like flash memory cards, they are removable and rewritable, and have become a common method of storing data. However, whereas flash memory cards require a reader to be installed, USB flash drives can be inserted into the USB ports found on most modern computers. The storage capacity of these drives ranges from 32MB to 64GB.

USB flash drives are constructed of a circuit board inside a plastic or metal casing, with a USB male connector protruding from one end. The connector is then covered with a cap that slips over it, allowing the device to be carried in a pocket or on a key fob without worry of damage. When you need it, you can insert the USB flash drive into the USB port on a computer, or into a USB hub that allows multiple devices to be connected to one machine.

USB flash drives often provide a switch that will set write protection on the device. In doing so, any data on the device cannot be modified, allowing it to be easily analyzed. This is similar to the write protection that could be used on floppy disks, making it impossible to modify or delete any existing data, or add additional files to the device.

Although USB flash drives offer limited options in terms of their hardware, a number of flash drives will come with software that you can use for additional features. Encryption may be used, protecting anyone from accessing data on the device without first entering a password. Compression may also be used, allowing more data to be stored on the device. Also, a number of programs are specifically designed to run from a USB flash drive rather than a hard disk. For example, Internet browsers may be used that will store any history and temporary files on the flash drive. This makes it more difficult to identify a person's browsing habits.

USB flash drives have been known by many other names over the years, including thumb drive and USB pen drive. Because these devices are so small, they can be packaged in almost any shape or item. Some USB flash drives are hidden in pens, making them unidentifiable as a flash drive, unless one pulled each end of the pen to pop it open and saw the USB connector. The pen itself is completely usable, but contains a small flash drive that is fully functional. This allows the device to be hidden, and can be useful for carrying the drive around with you…unless you tend to forget your pen in places, or work in a place where others walk off with your pens.

Understanding Why These Technical Details Matter to the Investigator

Why does the cybercrime investigator need to know the difference between RAM and disk space, what a microprocessor does, or the function of cache memory? Understanding what each part of a computer does will ensure that you also understand where in the machine the evidence (data) you need might be—and where not to waste your time looking for it.

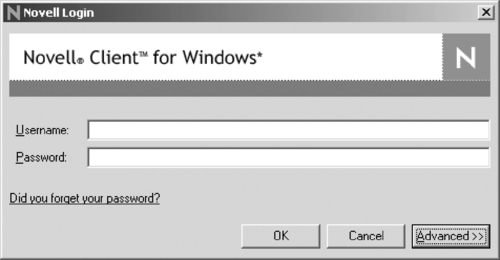

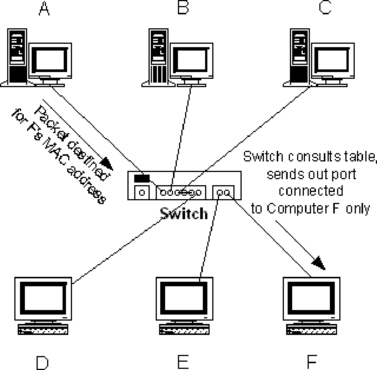

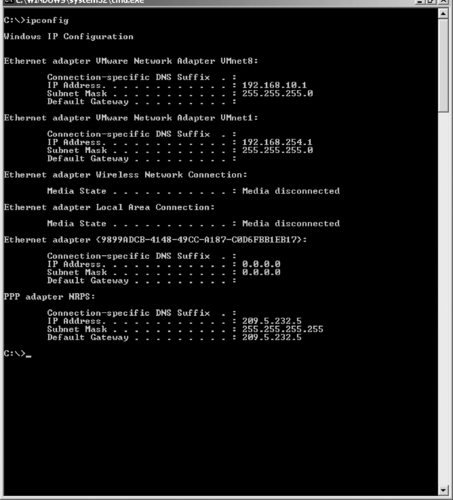

For example, if you know that information in RAM is lost when the machine is shut down, you'll be more careful about immediately turning off a computer being seized pursuant to warrant. You'll want to evaluate the situation; was the suspect “caught in the act” while at the computer? The information that the suspect is currently working on will not necessarily be saved if you shut down the system. The contents of open chat sessions, for example, could be lost forever if they're not automatically being logged. You will want to consider the best way to preserve this volatile data without compromising the integrity of the evidence. You might be able to save the current data, print current screens, or even have your crime scene photographer take photos of the screens to prevent information in RAM from being lost.