Chapter 13. Implementing System Security

Introduction

Cybercrime is possible because computers and networks are not properly secured. Law enforcement officers know that most criminals look for “easy” prey—that is, pickpockets look for victims who fail to secure their wallets or purses, and burglars hit the residences and businesses that take fewer steps to secure their property. It should come as no surprise that cybercriminals do the same. Most attacks against computer systems and networks exploit well-known vulnerabilities—vulnerabilities that, in many cases, can be fixed with a simple patch or configuration change. Often, applying these simple security measures costs nothing. Yet computer users and network administrators are as lax in protecting their valuable data as many citizens are in protecting their personal property. The fact that these known exploits still work most of the time shows that most individuals and companies are not performing due diligence in protecting their information technology (IT) assets before connecting them to the Internet.

There are many reasons for this behavior, including:

▪ The average computer user's lack of knowledge of security issues

▪ Busy network professionals’ lack of time (the “I really meant to get around to it” syndrome)

▪ Psychological denial that leads people who are aware of the risk to think that even though such things happen, “it can't happen to me”

Of course, none of these reasons is good enough to justify potential loss due to cybercrime, and that fact hits home with a vengeance after the network and its data have been compromised. It's important to realize that it's not just naïve individuals or small businesses on tight budgets that neglect their security needs. Unfortunately, many companies are like the police agencies that “can't afford” to buy body armor for their officers—until one of their own is killed in a shooting. Human nature is such that it often takes a tragedy to motivate people in charge to take action.

How Can Systems Be Secured?

System security is not a thing; it's a process—the process of building a barrier between the network and those who would do it harm. The key is to make your barrier more difficult to cross than someone else's. In other words, IT security involves creating a deterrent to convince a would-be intruder or attacker that your system is more difficult to breach than some other system. However, if an attacker specifically wants to breach your security perimeter, given enough time, he or she will be able to do so.

Crime prevention officers tell residents at neighborhood watch meetings that there is no way to make a home completely impervious to burglars—and if you could, it would be a windowless fortress that would be unpleasant to live in. No lock will keep out someone who's determined to break in, but what good locks will do is slow an intruder. If you make it difficult enough to get in, a typical burglar will go elsewhere, looking for quicker and easier pickings. Likewise, no computer or network can ever be 100 percent secure unless it is disconnected from every communication interface and completely powered off—and of course, such a fully secured system is also completely useless to the user. What system security methods can do is raise the break-in difficulty level to the point where most would-be intruders will take their attacks somewhere else—especially because they'll find no shortage of networks that they can break into with little effort.

The Security Mentality

Security is not something you can install right off the shelf, nor is it something you can ever achieve or complete. Security is an ongoing course of action that involves continually improving, tuning, and adjusting your systems to protect against new vulnerabilities and attacks. You cannot view security as a characteristic limited to computers, either; security must be an end-to-end solution. For your enterprise to be secure, you must address everything: computers, networking devices, connectivity media, boundary devices, communication devices, operating systems, applications, services, protocols, people, physical access, and the relationships among all of these components of your business.

A good network security specialist has at least one thing in common with a good law enforcement officer: Both are naturally suspicious—sometimes almost to the point of paranoia. Both subscribe to the philosophy that it's better to be safe than sorry. A security-conscious network professional sees a potential attack in every security hole. This can be annoying to other network users, just as a police officer's insistence on sitting with a clear view of the door can be annoying to civilian friends and family members. However, considering every possible threat is part of the job—both jobs. After all, just because you're paranoid doesn't mean they aren't out to get you—or your data!

Developing a Defensive Mindset

Early in their training, most law enforcement officers become familiar with the color codes that identify differing mental states of alertness. This color system is usually attributed to Colonel Jeff Cooper, a legendary firearms and personal defense expert, and is used to represent mental “conditions” as follows:

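▪ Condition White Describes the mindset of most people as they go about their daily business, oblivious to possible danger and wrapped up in their own thoughts and activities

▪ Condition Yellow Describes a relaxed but alert mindset in which a person stays generally aware of his or her surroundings, even though no specific threat has been identified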

▪ Condition Orange Describes the mindset a person should be in when known dangers exist (for example, when walking down a street at night in a high-crime area), constantly scanning for possible threats and ready to escalate to a higher state if necessary

▪ Condition Red Describes the mindset of a person who has encountered a threat (or, as police officers put it, when the proverbial waste byproducts have already hit the oscillating instrument); in this condition, the body experiences an adrenaline rush and the person reacts—usually—in one of two ways: fight or flight

We can borrow these “mindset” codes to describe the state of our network's security. Unfortunately, too many networks operate in Condition White, with administrators and users oblivious to the many threats that exist. For our purposes, Condition Yellow is probably not enough to adequately protect computer systems and networks; any network that is connected to the Internet must be considered to be in a known high-crime area. Thus, network security professionals should remain in Condition Orange, a heightened state of alertness, constantly on the lookout for threats and ready to respond when (not if) intrusions or attacks occur.

Elements of System Security

System security is about much more than just keeping out malicious users and preventing attacks. It is also about maintaining and providing access to resources for authorized users, and it is about maintaining the integrity of the data and the infrastructure. These related but separate elements of system security are described using four terms: authentication, confidentiality, integrity, and availability. If network administrators fail to properly manage any one of these elements, they will fail in the task of providing security for the IT infrastructure.

Successfully designing, deploying, and maintaining security requires mastery of an ever-expanding body of knowledge. This book couldn't possibly provide you with all the details of locking down even a single operating system, much less the entire IT infrastructure of a small company or enterprise corporation. However, we can highlight some of the big issues you face in your security efforts.

Implementing Broadband Security Measures

Broadband is one of the buzzwords in Internet connectivity today. Broadband technologies have made it possible for both home users and small office networks to obtain reasonably high data-throughput rates at relatively low cost. According to a May 2006 report by the Pew Internet & American Life Project (http://www.pewinternet.org/pdfs/PIP_Broadband_trends2006.pdf), the widespread availability and implementation of broadband connectivity have made high-speed connections more prevalent. At least 42 percent of adults in the United States (84 million people) had a high-speed connection; of these, 50 percent used DSL and 41 percent used cable modems. As more end-users (customers) gained access to greater bandwidth, Web sites began to offer more resources, more multimedia content, and more volume than was feasible for slower modem connections.

However, a great deal of confusion, even among IT professionals, has arisen about what broadband really is. The term is sometimes used to refer to any high-speed connection, but it has a specific technical meaning. Broadband refers to a connection technology that uses multiple frequencies over a common networking medium (such as the coaxial cable used for cable television, or CATV) to exploit all available bandwidth. This allows data to be multiplexed so that it can travel on different frequencies (or channels) simultaneously and more data can be transmitted in a specified period of time than with baseband (one-channel) technologies such as Ethernet. Broadband is also sometimes called wideband.

Note

In addition to broadband and baseband, you'll sometimes hear the term narrowband. This term is often used to refer to technologies that carry only voice communications. In radio communications, narrowband refers to the 50cps to 64Kbps frequency range allocated by the Federal Communications Commission (FCC) for paging and mobile radio services.

Some factors that affect the data transmission capacity of a communications medium include its frequency range and the quality (or signal-to-noise ratio) of the connection. A single channel has a fixed capacity within those parameters, but capacity can be increased by increasing the number of communications channels. This is how broadband works.
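As a rough illustration of this relationship, the standard Shannon-Hartley formula (not named in this chapter, and brought in here only as an aid) gives the theoretical upper limit of a single channel as its bandwidth multiplied by log2(1 + signal-to-noise ratio). The Python sketch below applies that formula to one channel and then to several identical channels. The bandwidth and signal-to-noise figures are hypothetical values chosen for illustration, not measurements of any real cable or DSL system.

```python
import math


def channel_capacity_bps(bandwidth_hz: float, snr_linear: float) -> float:
    """Theoretical upper bound for one channel (Shannon-Hartley formula)."""
    return bandwidth_hz * math.log2(1 + snr_linear)


def aggregate_capacity_bps(channels: int, bandwidth_hz: float, snr_linear: float) -> float:
    """Upper bound when several identical channels are used side by side."""
    return channels * channel_capacity_bps(bandwidth_hz, snr_linear)


# Hypothetical figures: a 4 kHz channel with a signal-to-noise ratio of 1000 (30 dB).
single = channel_capacity_bps(4_000, 1_000)
eight = aggregate_capacity_bps(8, 4_000, 1_000)

print(f"one channel:    {single:,.0f} bps")
print(f"eight channels: {eight:,.0f} bps")
```

The single-channel figure is fixed once the frequency range and signal quality are fixed; the only term left to increase is the number of channels, which is the point made above.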

Cable modems deliver a common form of broadband Internet connectivity, and cable has many advantages as an Internet technology. Cable companies have extensive network infrastructures in place for transmission of television programming. Because cable is a broadband technology, computer data signals can be sent over the cable on their own frequency, just as each TV channel's signal travels over its own frequency. Cable Internet typically offers speeds ranging from 500Kbps to 1.5Mbps (roughly equivalent to T1, at prices at least 10 times lower), and the technology is capable of much higher speeds, up to 10Mbps or more.

Despite its advantages, cable has some significant disadvantages, including the following:

▪ Some cable companies’ lines are capable of only one-way transmission (which, after all, is all that's needed for transmitting TV programs). In this case, users must send upstream messages via a regular analog phone line; only the downstream data comes over the cable. Fortunately, most cable companies have upgraded their infrastructures to support two-way transmission.

▪ Even with two-way cable, many cable companies throttle upstream bandwidth to 128Kbps. This is to prevent users from running Internet servers (which is also often prohibited by the subscribers’ terms-of-service agreements).

▪ Another disadvantage, in some areas, is lack of reliability. The cable network might be “down” a lot, leaving users without an Internet connection for periods of time. Unlike expensive business solutions such as leased lines, no guaranteed uptime (or guaranteed bandwidth) is included in a typical cable contract for Internet access.

▪ Perhaps the most serious disadvantage comes from the fact that cable is a “shared bandwidth” technology. This means that all subscribers in any immediate area share the same connection medium. In other words, everyone in a neighborhood is connected to the same subnet and therefore has the potential to become a security threat to any other system in that neighborhood. This is the primary weakness of cable Internet technology. Keep in mind that to launch an attack against a computer you must be able to communicate with that computer. Being connected to the same network medium facilitates—some might even argue that it enables—malicious communications among those computers.

Digital Subscriber Line (DSL) technology provides broadband Internet connectivity over telephone lines. Asymmetric DSL (ADSL), the most widely available form of DSL, uses a signal coding technique called discrete multitone that divides the usable frequency range of the pair of copper wires in an ordinary telephone line into 256 subchannels. Via this technique, data can be transmitted at more than 8Mbps, but only for a relatively short distance, due to attenuation. The frequencies used are above the voice band, so both voice and data signals can travel over the same phone line at the same time.

Note

For more information about how DSL works, see http://www.howstuffworks.com/dsl.htm.

Broadband Integrated Services Digital Network (B-ISDN) is another broadband technology that uses telephone lines. In this case, however, these are fiber optic phone lines rather than copper wiring. B-ISDN provides data transmission speeds of up to 1.5Mbps. The original ISDN technology was once intended to replace analog voice lines with digital lines, which are more reliable and less vulnerable to “noise” (interference). There are two types of ISDN services: Basic Rate Interface (BRI) and Primary Rate Interface (PRI). BRI is more often used by consumers and small businesses. It provides two channels (called B channels or bearer channels) on which data or voice can be transmitted at 64Kbps. These two channels can be used separately so that you can connect to the Internet at 64Kbps and use the other channel for voice calls at the same time, or they can be aggregated to give you a 128Kbps data transmission rate. A third channel, called the D channel or data channel, runs at 16Kbps and manages signaling. PRI is much more expensive than BRI but provides more bandwidth: twenty-three 64Kbps B channels plus one 64Kbps D channel, for a total capacity of 1.5Mbps (this applies to the United States; in Europe, PRI provides 1.98Mbps).
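The channel arithmetic behind these ISDN figures can be checked with a short Python sketch. The function and constant names are hypothetical. The European channel count of thirty B channels is not given in the text above; it is added here as an assumption that reproduces the 1.98Mbps figure.

```python
B_CHANNEL_KBPS = 64  # each bearer (B) channel carries 64Kbps


def isdn_capacity_kbps(b_channels: int, d_channel_kbps: int) -> int:
    """Total line capacity: all B channels plus the signaling (D) channel."""
    return b_channels * B_CHANNEL_KBPS + d_channel_kbps


bri_bonded_data = 2 * B_CHANNEL_KBPS    # two B channels aggregated for data
bri_total = isdn_capacity_kbps(2, 16)   # BRI: 2 B channels + 16Kbps D channel
pri_us = isdn_capacity_kbps(23, 64)     # PRI (United States): 23 B + 64Kbps D
pri_europe = isdn_capacity_kbps(30, 64) # PRI (Europe), assuming 30 B + 64Kbps D

print(f"BRI aggregated data rate: {bri_bonded_data} Kbps")
print(f"BRI total with D channel: {bri_total} Kbps")
print(f"PRI (US):     {pri_us} Kbps, about {pri_us / 1000:.1f} Mbps")
print(f"PRI (Europe): {pri_europe} Kbps, about {pri_europe / 1000:.2f} Mbps")
```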

DSL and ISDN connections are not shared connections. Instead, the connection medium is used only by the two endpoints of the connection. Because there are only two parties in these connections, they offer a more secure means of communication than cable modem. Those to whom security is important—and that should include everyone who uses the Internet—should opt for an unshared connectivity option if it is available and affordable. Satellite Internet technologies can also provide always-on Internet access at speeds of up to about 500Kbps in areas where cable and DSL aren't available. Satellite is available almost anywhere, as long as you have an unobstructed view of the sky where the satellite is located. (This is required because satellite is a line-of-sight technology.)

Broadband Security Issues

The benefits of high-speed broadband connectivity are complicated by problems and vulnerabilities unique to broadband. These issues arose directly out of the broadband implementation and were not palpable threats to the dial-up modem community. One threat is the always-connected aspect of broadband. No longer do users have to manually initiate a connection when they want to surf the Web or access e-mail, because broadband connections are always on. In the past, modem users typically disconnected from the Internet once they completed their online sessions. This removed their computers from the Internet and prevented crackers from accessing their systems or mounting attacks against them. With broadband connections that remain up 24/7 and automatically reconnect when interrupted, a computer is now available to be attacked on an ongoing basis.

A second aspect of this always-connected issue is the Internet Protocol (IP) address assigned to a system. With dial-up modem connectivity, a PC is usually configured to use Dynamic Host Configuration Protocol (DHCP) to obtain its address and is assigned a different IP address each time the connection is established. Thus, a modem-connected PC that has one address today will usually have a different address tomorrow, and the address it used yesterday will be assigned to a different system today. This makes tracking individual systems difficult. However, with broadband connectivity, systems using DHCP are connected for days or weeks at a time and are able to continually renew their assigned IP addresses so that their online identifiers remain consistent over a long period of time. They may also be assigned a static IP address, meaning that the IP address never changes. This makes tracking a specific system extremely easy.

Law enforcement officers can easily understand the impact on detection and apprehension; many traditional criminals—especially scam artists—are always on the move, living in motel rooms or with friends and changing addresses every few weeks or months. These offenders are much more difficult to track down than those who have established a permanent residence and a fixed address. The same is true of cybercriminals. The ones who use static (consistent) IP addresses are easier to find than those with ever-changing addresses. Although there are ways for technically savvy criminals to disguise their IP addresses, the advent of broadband, with a greater likelihood of static addressing, makes the investigator's job easier when dealing with cybercriminals who are less knowledgeable about the technology.

On the other hand, the longer a system remains online, especially when it retains the same IP address, the more vulnerable that system is to repeated brute-force and port-scanning attacks. Remember, security is a deterrent, not an impenetrable barrier. Given enough time and determination, an attacker can breach any system, even if security-conscious administrators have taken standard precautions such as deploying a firewall, installing patches, and assigning strong passwords.

Simply powering down the computer when it's not in use isn't enough to protect against these threats. Through the power of automation, crackers can continuously scan an IP address to determine when the computer is on or off. Yes, it is possible to prevent the attack from progressing while the PC is powered down, but the attack can resume right where it left off once the system boots back up. Instead of relying on “security through obscurity” (attempting to hide the existence of a system or data from an attacker), you can take numerous proactive steps to reduce your vulnerability to broadband-specific attacks. We discuss these steps in the next section.

It might sound strange, but another flaw in broadband security is the speed at which it allows data to flow. First, this speed allows a faster attack against your system. A malicious user can send a significantly greater amount of data to your system over a broadband connection than is possible over a dial-up modem connection. Second, once your system is compromised, it takes an attacker less time to download or upload files.

Obviously, broadband connectivity has its drawbacks. But for many Internet users, it still offers an irresistible promise of lightning-fast connectivity at very low cost. It's important to first recognize that there is a problem and then take specific risk reduction measures, so in this section, we not only point out the flaws but also offer guidance on reducing the risks associated with using broadband connectivity.

When implementing risk-reducing strategies such as improving security, you must take into account the specific hardware and software configuration of each computer. This includes the operating system in use, the applications installed, and the services used on the connected computer. Implementing security precautions on just one aspect of your system does not provide adequate security. You must deploy a multilayered security solution so that there will be numerous barriers to unauthorized access.

Note

We discussed the concept of multilayered security in Chapter 12.

When a large company contracts with an Internet service provider (ISP) for a high-speed bandwidth connection, security is probably the most important item in the service contract. However, individual customers (and many small businesses) who obtain low-cost broadband connections often overlook security. This oversight primarily stems from the fact that few individuals or small-company employees are trained security professionals, and they simply don't know any better. Even when they have a vague idea that there are security risks they should be addressing, they might be overwhelmed by the complexity of the topic and the sheer number of competing security “solutions” available, as well as the high cost of implementing many such recommended solutions. The promise of fast Internet downloads for little cost blinds many users to other important issues, such as security and privacy, which should be carefully considered before broadband deployment.

In the following sections, we discuss specific risk-reducing strategies you can employ to improve the security of your computer or network using broadband connectivity. Readers who are implementing broadband must also consider the issues that we raise later in this chapter, when we discuss how to secure specific operating systems.

Deploying Antivirus Software

Security has many facets, two of which are the need to prevent unauthorized access and the need to support authorized access. All too often, the prevention of attacks comes at the expense of ensuring access to data by valid users. It is important to keep a balanced perspective when deploying any security measure. Think of security in terms of the popular policing motto, “To protect and to serve.” If your security implementation fails to adequately support either of these elements, protecting your data and serving your authorized users, you have failed to implement a truly effective security plan.

This is a challenge because security and availability will always be at opposite ends of the continuum. The more you have of one, the less you have of the other. However, you must strike a balance, because a security policy that is too restrictive could have the same result as one that is too lax—that is, a negative (perhaps even catastrophic) impact on the company's bottom line.

Maintaining the integrity of your data so that it can be served to authorized users is just as important as preventing unwanted outsiders from stealing it. Many things—including human error, disgruntled employees, and even hardware failure—can threaten your data's integrity. But the most serious, prevalent, and imminent threat is corruption, destruction, or alteration of data by virus infection. A broadband connection is just as likely as a local area network (LAN) link or a dial-up connection to be a pathway for virus infection. No matter how your computer is connected to the Internet or other systems, you must protect it from viruses.

One Hundred Percent Virus-Free E-mail

E-mail is the number-one virus delivery mechanism today, so it is essential to keep as much malicious e-mail out of your network as possible. With the increasing reliance on e-mail for commercial and private communications, balancing security against availability can be especially difficult in regard to electronic mail.

Some companies provide solutions to this problem. For example, MessageLabs offers a service that guarantees 100 percent virus-free e-mail delivered to your e-mail servers. This guarantee is based on MessageLabs’ ability to adequately screen inbound e-mail for any possible virus infection or carrier agent. This task is accomplished by routing your inbound e-mail to one of the company's control tower systems. There each message is inspected by at least three antivirus (AV) solutions from reliable vendors as well as an artificial intelligence search tool that relies on heuristics, pattern matching, signature matching, and traffic flow analysis to detect unknown viral threats. After a delay of about 1.5 seconds, your e-mail is then delivered to your internal e-mail servers for distribution on your network. MessageLabs maintains an excellent track record for making good on promises. For more information, visit the company's Web site at http://www.messagelabs.com.

When striving to protect your data's integrity, you can obtain no greater bang for your buck than that gained by deploying reliable AV software. When selecting such a product, look for the following:

▪ The product should originate from a well-known, reputable company.

▪ The product should automatically update its virus definitions.

▪ The product should clean or quarantine any infected files it detects.

Whenever possible, deploy two or more antivirus solutions together on the network, to work as a layered system. However, do not install two AV tools on the same computer, because doing so can often cause the system to crash or behave erratically. Many organizations opt to place an AV product on each border system (firewall, gateway, proxy, and so on), on each server, and on each client. This multilayered approach provides more thorough protection and eliminates the problems that can come with reliance on a single vendor's solution.

Note

We discuss viruses, Trojans, and other malicious code and provide additional information on how to protect systems from these threats in Chapter 10.

Defining Strong User Passwords

Only two elements are necessary to gain access to most computer systems: a user identity (username) and its associated password. Most usernames are obvious or very easy to guess—a person's first name, first initial and last name, or the like—and are therefore not confidential. Thus, access authorization is likely to be based solely on the password. Passwords must be very strong and kept secured to maintain control over access. This is true whether your system is linked to a broadband connection, a LAN cable, or a dial-up link. Because broadband connections are always on, however, they offer a potential intruder much more time than a dial-up connection to carry out a brute force attack (which is essentially a trial-and-error method that attempts various character combinations until the attacker stumbles onto one that works).

In Chapter 11, we discussed in detail how to create strong passwords that are difficult to crack and how to set and enforce password policies to ensure that none of the passwords in use in your organization creates an “easy in” for intruders.
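To see why password strength matters against the trial-and-error approach described above, it helps to count how many combinations an attacker would have to try in the worst case. The Python sketch below does that arithmetic. The guess rate is a hypothetical figure chosen only for illustration; real rates vary enormously depending on the attack method and on any account lockout policy in force.

```python
SECONDS_PER_DAY = 86_400


def keyspace(alphabet_size: int, length: int) -> int:
    """Number of possible passwords of exactly this length."""
    return alphabet_size ** length


def worst_case_days(alphabet_size: int, length: int, guesses_per_second: float) -> float:
    """Days needed to try every combination at a constant guess rate."""
    return keyspace(alphabet_size, length) / guesses_per_second / SECONDS_PER_DAY


GUESSES_PER_SECOND = 1_000  # hypothetical rate, for illustration only

examples = [
    ("lowercase letters, 6 characters", 26, 6),
    ("mixed case and digits, 8 characters", 62, 8),
    ("printable ASCII, 10 characters", 95, 10),
]
for label, alphabet_size, length in examples:
    days = worst_case_days(alphabet_size, length, GUESSES_PER_SECOND)
    print(f"{label}: {keyspace(alphabet_size, length):,} combinations, "
          f"{days:,.1f} days worst case")
```

An always-on connection does not change the size of the keyspace; it changes how much uninterrupted time the attacker has to work through it.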

Setting Access Permissions

Controlling access is an important element in maintaining system security. The most secure environments follow the principle of “least privilege.” This principle states that users are granted the least amount of access possible that still enables them to complete their required work tasks. Expansions to that access are carefully considered before being implemented. Law enforcement officers are familiar with this principle in regard to noncomputerized information; this concept is usually termed need to know. Generally, following this principle means that the network administrators hear more complaints from users about being unable to access resources. However, hearing complaints from authorized users is better than hearing about access violations that damage an organization's profitability or its capability to conduct business. We discussed establishing and enforcing access policies in more detail in Chapter 12, and we will discuss access control for wireless networks later in this chapter.

Disabling File and Print Sharing

The ability to share files and printers with other members of your network can make many tasks simpler and, in fact, was the original purpose for networking computers in the first place. However, this ability also has a dark side—especially when users are unaware that they're sharing resources. If a trusted user can gain access, the possibility exists that a malicious user can obtain access as well. On systems linked by broadband connections, crackers have all the time they need to connect to your shared resources and exploit them.

File and Print Sharing allows others to access files and printers on your Windows operating system across the network. When enabled, the service provides access to files and printers attached to the machine. In Windows NT Server, Windows Server 200x, XP, and Vista, this is controlled by the Server service, and in nonserver Windows operating systems prior to XP, the File and Print Sharing service controls this ability.

Shared resources are advertised but may not offer security to restrict who is able to see and access those shares. Setting permissions on the shares controls such security. On older Windows operating systems, when a share is created, by default the permissions are set to give full control over the resource to the Everyone group—which includes literally everyone who accesses that system. Sharing a folder in Windows XP is somewhat different. Not only does it use the Server service, but it only provides Read permissions to the Everyone group, meaning that anyone can view and copy files from the shared directory, but not add, modify, or delete files in that folder. Windows Vista has gone a step further, providing a folder named Public that can be used to store files that are used by everyone in the organization. With Public folder sharing enabled, any files and folders in the Public folder are available to others on the network. However, unlike Windows XP, Vista does not allow simple file sharing. Any shared folders including the Public folder require a username and password.

Windows Vista provides a Network and Sharing Center tool that allows you to turn the following on and off:

▪ File sharing

▪ Public folder sharing

▪ Printer sharing

▪ Password-protected sharing

▪ Media sharing

If the user doesn't need to share resources with anyone on the internal (local) network, these options should be set to off. Similarly, File and Print Sharing should be disabled on computers running older operating systems. On networks where security is important, sharing of resources should be disabled on all clients. This action forces all shared resources to be stored on network servers, which typically have better security and access controls than end-user client systems.

Using NAT

Network address translation (NAT) is a feature of many firewalls, proxies, and routing-capable systems. NAT has several benefits, one of which is its ability to hide the IP address and network design of the internal network. The ability to hide your internal network from the Internet reduces the risk of intruders gleaning information about your network and exploiting that information to gain access. If an intruder doesn't know the structure of a network, the network layout, the names and IP addresses of systems, and so on, it is very difficult to gain access to that network.

NAT enables internal clients to use nonroutable IP addresses, such as the private IP addresses defined in Request for Comments (RFC) 1918, but still enables them to access Internet resources. NAT restricts traffic flow so that only traffic requested or initiated by an internal client can cross the NAT system from external networks.
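The private address blocks set aside by RFC 1918 are 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16. As a small illustration, the following Python sketch uses the standard library's ipaddress module to test whether an address falls inside one of those blocks. The function name is hypothetical, and the sketch is meant for IPv4 addresses only.

```python
import ipaddress

# The three private IPv4 blocks defined in RFC 1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """Return True if the IPv4 address lies in an RFC 1918 private block."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in RFC1918_NETWORKS)


for candidate in ("10.1.2.3", "172.31.255.255", "172.32.0.1", "192.168.1.10", "8.8.8.8"):
    kind = "private (RFC 1918)" if is_rfc1918(candidate) else "public"
    print(f"{candidate}: {kind}")
```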

If only a single system is linked to the Internet with a broadband connection, NAT is of little use. However, for local networks that share a broadband connection, NAT's benefits can be utilized for security purposes. When using NAT, the internal addresses are reassigned to private IP addresses and the internal network is identified on the NAT host system. Once NAT is configured, external malicious users are only able to access the IP address of the NAT host that is directly connected to the Internet, but they are not able to “see” any of the internal computers that go through the NAT host to access the Internet.
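The behavior described above can be sketched as a simple translation table. The Python example below is a deliberately simplified model, not the implementation used by any particular NAT product: the class and method names are hypothetical, the public address comes from a range reserved for documentation, and a real NAT device would also track the remote endpoint and expire idle entries.

```python
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class NatTable:
    """Simplified port-translating NAT: one public address, many internal hosts."""

    def __init__(self, public_ip: str, first_port: int = 40000) -> None:
        self.public_ip = public_ip
        self._next_port = first_port
        self._outbound: Dict[Endpoint, int] = {}  # internal endpoint -> public port
        self._inbound: Dict[int, Endpoint] = {}   # public port -> internal endpoint

    def outbound(self, internal: Endpoint) -> Endpoint:
        """Record an internally initiated connection and return its public identity."""
        if internal not in self._outbound:
            port = self._next_port
            self._next_port += 1
            self._outbound[internal] = port
            self._inbound[port] = internal
        return (self.public_ip, self._outbound[internal])

    def inbound(self, public_port: int) -> Optional[Endpoint]:
        """Return the internal endpoint for a reply, or None if nothing requested it."""
        return self._inbound.get(public_port)


nat = NatTable("203.0.113.5")                 # documentation-only public address
public = nat.outbound(("192.168.1.10", 51515))
print("outside world sees:", public)
print("reply is forwarded to:", nat.inbound(public[1]))
print("unsolicited traffic maps to:", nat.inbound(12345))
```

Outsiders only ever see the public address of the NAT host, and traffic arriving on a port that no internal client opened has no table entry to follow.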

Deploying a NAT Solution

NAT is relatively easy to implement, and there are several ways to do so. Many broadband hardware devices (cable and DSL modems) are called cable/DSL “routers” because they allow you to connect multiple computers. However, they are actually combination modem/NAT devices rather than routers because they require only one external (public) IP address. You can also buy NAT devices that attach your basic cable or DSL modem to the internal network. Alternatively, the computer that is directly connected to a broadband modem can use NAT software to act as the NAT device itself. This can be an add-on software program such as the Smart Firewall included in Symantec's Norton Internet Security, or the NAT software that is built into some operating systems.

When NAT is used to hide internal IP addresses, it is sometimes called a NAT firewall; however, don't let the word firewall give you a false sense of security. NAT by itself solves only one piece of the security perimeter puzzle. A true firewall does much more than link private IP addresses to public ones, and vice versa.

Deploying a Firewall

As we discussed in Chapter 12, a firewall is a device or a software product whose primary purpose is to filter traffic crossing the boundaries of a network. That boundary can be a broadband connection, a dial-up link, or some type of LAN or wide area network (WAN) connection. The network can be an enterprise LAN, a single system, or anything in between.

The most typical use for firewalls is to restrict what types of traffic can traverse the boundary connections of your network. Several types of firewalls or filtering mechanisms are available to handle this job: packet filters, stateful inspection systems, proxy systems, and circuit-level filtering.

You will recall that packet filters, also known as screening routers, decide what traffic is allowed or blocked based on information found in packet headers. The information used is typically the source or destination IP address from the IP header and the corresponding port from the Transmission Control Protocol (TCP) or User Datagram Protocol (UDP) header. Packet filters are static: a port that a rule allows is always open, so they cannot properly manage applications that use dynamic ports. Furthermore, packet filters are unable to monitor sessions or traffic content.
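
A static packet filter can be sketched as an ordered rule list evaluated against header fields only. The Packet and Rule types below are hypothetical and greatly simplified; the sketch assumes first-match-wins ordering and a default of deny, which is a common convention but not the only one.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Packet:
    src_ip: str
    dst_ip: str
    protocol: str  # "tcp" or "udp"
    dst_port: int


@dataclass(frozen=True)
class Rule:
    action: str                      # "allow" or "deny"
    protocol: Optional[str] = None   # None matches any protocol
    src_net: Optional[str] = None    # CIDR block; None matches any source
    dst_port: Optional[int] = None   # None matches any port

    def matches(self, pkt: Packet) -> bool:
        if self.protocol is not None and self.protocol != pkt.protocol:
            return False
        if self.dst_port is not None and self.dst_port != pkt.dst_port:
            return False
        if self.src_net is not None:
            net = ipaddress.ip_network(self.src_net)
            if ipaddress.ip_address(pkt.src_ip) not in net:
                return False
        return True


def filter_packet(rules: List[Rule], pkt: Packet) -> str:
    """Return the action of the first matching rule; deny by default."""
    for rule in rules:
        if rule.matches(pkt):
            return rule.action
    return "deny"
```

Because every decision depends only on the current packet's headers, a filter like this has no way to know whether a packet belongs to a session that an internal client started.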

Stateful inspection systems inspect the ongoing activities within active communication sessions to ensure that the type of traffic detected is valid. Stateful inspection was designed to address deficiencies in packet filters, specifically to ensure that traffic over dynamic ports is valid and authorized.
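
The core idea of tracking sessions can be sketched with a small state table. The class ToyStateTable is an assumption made for illustration; real stateful inspection products also examine TCP flags, sequence numbers, and timeouts, none of which is modeled here.

```python
from typing import Set, Tuple

# (local IP, local port, remote IP, remote port)
Session = Tuple[str, int, str, int]


class ToyStateTable:
    """Hypothetical session table for illustration only."""

    def __init__(self) -> None:
        self.sessions: Set[Session] = set()

    def record_outbound(self, local_ip: str, local_port: int,
                        remote_ip: str, remote_port: int) -> None:
        """Remember a session that an internal client has opened."""
        self.sessions.add((local_ip, local_port, remote_ip, remote_port))

    def allow_inbound(self, remote_ip: str, remote_port: int,
                      local_ip: str, local_port: int) -> bool:
        """Allow an inbound packet only if it belongs to a known session."""
        return (local_ip, local_port, remote_ip, remote_port) in self.sessions
```

An inbound packet is accepted only when it matches a session recorded earlier, which is how a dynamically chosen port can be opened for one conversation without being left open for everyone.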

Proxy systems, also known as application gateways or application firewalls, are able to filter traffic based on high-level protocols (such as Hypertext Transfer Protocol, or HTTP; File Transfer Protocol, or FTP; Simple Mail Transfer Protocol, or SMTP; and Telnet), applications, or even specific control commands. Proxy systems work well with dynamic port applications, although unfortunately, some services and applications don't lend themselves well to being proxied. Proxy systems also lower the network performance due to the amount of processing involved with fully inspecting each packet.

Circuit-level filtering makes traffic decisions based on the content of the session rather than individual packets. Circuit-level filters open ports only when internal clients make requests, thus supporting dynamic port applications such as FTP. This type of filter supports a wider range of protocols than a proxy system, but it does not provide the detailed controls that a proxy system does.

When selecting a firewall to protect broadband connections, the user should seek a product with all these filtering capabilities as well as extensive logging and auditing features and alarms and alerts. Another good idea is to seek out products with NAT and intrusion detection capabilities.

For individual, stand-alone, or home systems, several fairly inexpensive personal firewall products provide adequate security for nonprofessional computer use, such as ZoneAlarm from Zone Labs. Operating systems such as Windows XP and Vista also include a built-in personal firewall feature. However, to protect a business network, administrators cannot rely on “personal” firewalls. Companies should invest in a heavy-duty firewall that provides a higher level of security and greater configurability. Deployment might be simplified if you contract with a security outsourcing company to install, configure, maintain, and administer your firewall.

Disabling Unneeded Services

One of the primary tenets for maintaining physical security in a residence or business property is to reduce the number of pathways an intruder can take to gain access to it. This reduction typically involves locking doors and windows, sealing off access tunnels, and securing ventilation shafts. Administrators should apply the same perspective in regard to the electronic pathways into the network. Any means by which valid data can reach the network or computer is also a potential path for a malicious intruder or attack.

Systems linked to the Internet by broadband connections should have any unneeded protocols, applications, and services either disabled or uninstalled completely. With the proliferation of poor programming practices that often lead to security vulnerabilities, it is essential to limit exposure to potential threats simply by removing unessential software from the network's computer systems. For example, many versions of Windows automatically install a Web server during default OS installation. If a system is not specifically intended for use as a Web server, that component of the OS must be disabled.
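
One simple way to find services that are listening unexpectedly is to try connecting to a list of TCP ports on the machine itself. The sketch below uses only Python's standard socket module; the function name open_tcp_ports and the choice of ports to probe are assumptions for illustration, and such a check should be run only against systems you are authorized to test.

```python
import socket
from typing import Iterable, List


def open_tcp_ports(host: str, ports: Iterable[int],
                   timeout: float = 0.5) -> List[int]:
    """Return the ports on host that accept a TCP connection."""
    found = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                found.append(port)
    return found
```

Calling open_tcp_ports("127.0.0.1", [21, 23, 25, 80]) on a workstation, for example, would list which of the FTP, Telnet, SMTP, and HTTP ports have a service answering. Any port that appears without a business reason points to a service to disable or remove.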

Configuring System Auditing

When a system is compromised, one of two things occurs:

▪ Bad things happen and you are clearly aware of them (system crash, deleted files, or the like).

▪ Bad things happen and you are unaware of them (a hacker toolkit is downloaded to your system, a user account is compromised, or a similar security breach).

Waiting for clear indications of system violations to appear is a poor security practice, especially because unseen compromises usually result in more severe consequences. Law enforcement officers can relate this concept to the difference between reactive policing, in which a law enforcement agency waits until a crime is reported to go into action, and proactive policing, which involves activities designed to prevent crimes from occurring in the first place. We all know that the proactive method is more effective in fighting crime, but it has one drawback: It's a lot more work. Unfortunately, system administrators, police officers, and other human beings are often tempted to take the path that requires the least amount of effort, and that's why preventable crimes—including network security breaches—proliferate.

The only way to know when your system has been breached or when an unsuccessful attempt to penetrate your security has occurred is to monitor or audit for unusual or abnormal activity—just as patrol officers drive through the neighborhoods on their beats, looking for anything out of the ordinary. Most OSes include native auditing capabilities. For example, Windows servers and client operating systems provide for security auditing that is tracked through a security log available to administrators through the Event Viewer administrative tool. At a minimum, administrators should audit logons and logoffs, changes to user accounts and privileges, and use of administrative-level functions. These are activities that are often involved in a security breach and can serve as indicators when the computer or network has been compromised.

If the amount of data the auditing system gathers is too much to manage manually, as might be the case on enterprise networks, it could be beneficial to invest in an intrusion detection system (IDS). An IDS automates the tedious task of looking for abnormal or suspicious system activity. An IDS uses pattern recognition and heuristic learning to detect suspect activities by authorized user accounts as well as external malicious users.

Implementing Web Server Security

Most companies and organizations today have a Web presence on the Internet. An Internet presence offers numerous business advantages, such as the ability to reach a large audience with advertising, to interact with customers and partners, and to provide updated information to interested parties.

Web pages are stored on servers running Web services software such as Microsoft's Internet Information Server (IIS) or Apache (on Linux/UNIX servers). Web servers must be accessible via the Internet if the public is to be able to access their Web pages. However, this accessibility provides a point of entry to Internet “bad guys” who want to get into the network, so it is vitally important that Web servers be secured. Protecting a Web server is no small task. Systems attached to the Internet before they are fully “hardened” are usually detected and compromised within minutes. Malicious crackers are always actively searching for systems to infiltrate, making it essential that you properly lock down a Web server before bringing it online.

First and foremost, administrators should lock down the underlying operating system. This process includes applying updates and patches, removing unneeded protocols and services, and properly configuring all native security controls. We discuss some of the important issues related to specific OS lockdown procedures later in this chapter.

Second, it is wise to place the Web server behind a protective barrier, such as a firewall or a reverse proxy. Anything that will limit, restrict, filter, or control traffic into and out of the Web server reduces the means by which malicious users can attack the system.

Third, administrators must lock down the Web server itself. This process actually has numerous facets, each of which is important to maintaining a secure Web server. We discuss these topics in the following sections.

DMZ versus Stronghold

There are two general lines of thought when it comes to Web server security. One is to assume that the Web server will be compromised and to plan accordingly. The other is to try to prevent any and all possible attacks at all costs. The first philosophy utilizes a deployment design called a demilitarized zone (DMZ); the second relies on a design referred to as a stronghold.

A DMZ is a networking area where your Web server (and other servers that are accessible via the public Internet) is secured from most known common exploits, but it is known to be insecure at some level. Because the DMZ is a separate network, the internal network remains more secure. A DMZ assumes that the time and money required to protect against every possible attack are too great an expense to offset the value of the data hosted on the Web server.

Note

Microsoft uses the term screened subnet to refer to the DMZ in some of its documentation. You'll also hear the same concept called a perimeter network.

To compensate for the lack of front-line security, organizations that deploy a DMZ configuration typically have a duplicate Web server positioned on their internal LAN that maintains a mirror image of the publicly accessible Web server. In the event that the primary Web server is compromised, the mirror backup can be repositioned to act as the public Web server until the primary system is repaired. Other companies employ an even less expensive solution by maintaining only tape backups of the primary Web server. Such a company might assume that the time and money lost while its Web server is offline will not significantly impact the organization. It also might assume that the value of its Web presence does not justify a more fault-tolerant solution, such as the expense of creating and maintaining a backup system.

A stronghold is a networking area where the Web server is protected from all known exploits and significant effort is expended to protect against unknown new exploits. A stronghold assumes that the data hosted on the Web server is valuable enough to spare no expense in protecting it. This type of configuration is often deployed by organizations whose Web server integrity and availability are essential to doing business, such as e-commerce sites.

Each organization must choose the protection policy that is appropriate to its situation and that fits its needs best. A DMZ deployment is cheaper but more prone to attack than a stronghold. A stronghold deployment is more expensive than a DMZ but will repel most attacks.

Isolating the Web Server

For security purposes, the Web server should be separated and isolated from the internal LAN. Otherwise, if the Web server is compromised, the attacker could get a free ticket into the entire network. Separating the Web server from the internal production network prevents Web attacks from becoming organization killers.

Separating the Web server from the LAN can take many forms, such as:

▪ Deploying a separate domain just for the Web server and its supporting services

▪ Using a Web-in-a-box solution that has no capabilities other than Web page service

▪ Co-locating Web servers at an ISP

▪ Outsourcing Web services to an ISP or other third party

No matter what method an organization chooses, it is also wise to consider creating a sideband communication channel for all management, administration, and file transfer activities. A sideband communication channel can be as simple as using a unique protocol between your LAN and the Web server and preventing (blocking via unbinding) Transmission Control Protocol/Internet Protocol (TCP/IP) from crossing that link. A sideband channel could be a dial-up link, a dedicated ISDN line, or even a direct-connect serial port link. Using a sideband channel restricts the traffic that can flow between the Web server and the internal network. This system could dampen the speed or the capabilities of remote administration; however, it greatly reduces the possibility that a malicious user can cross over from a compromised Web server to the LAN.

If security is of utmost importance, organizations can deploy a firewall on the sideband channel or eliminate direct communication completely. In the latter case, the administrator must be physically present at the Web server and must transfer data to and from it using removable media. This completely eliminates the possibility of a malicious user employing the Web server as a bridge into the LAN.

Web Server Lockdown

Locking down the Web server itself follows a path that begins in a way that should already be familiar to you: applying the latest patches and updates from the vendor. Once this task is accomplished, the network administrator should follow the vendor's recommendations for securely configuring Web sites. The following sections discuss typical recommendations made by Web server vendors and security professionals.

Managing Access Control

Many Web servers, such as IIS on Windows servers, use a named user account to authenticate anonymous Web visitors. When a Web visitor accesses a Web site using this methodology, the Web server automatically logs that user on as the IIS user account. The visiting user remains anonymous, but the host server platform uses the IIS user account to control access. This account grants system administrators granular access control on a Web server.

These specialized Web user accounts should have their access restricted so that they cannot log on locally or access anything outside the Web root. Additionally, administrators should be very careful about granting these accounts the ability to write to files or execute programs; this should be done only when absolutely necessary. If other named user accounts are allowed to log on over the Web, it is essential that these accounts not be the same user accounts employed to log on to the internal network. In other words, if employees will log on via the Web using their own credentials instead of the anonymous Web user account, administrators should create special accounts for those employees to use just for Web logon. Authorizations over the Internet should be considered insecure unless strong encryption mechanisms are in place to protect them. Secure Sockets Layer (SSL) can be used to protect Web traffic; however, the protection it offers is not significant enough to protect internal accounts on the Internet.

Handling Directory and Data Structures

Planning the hierarchy or structure of the Web root is an important part of securing a Web server. The root is the highest-level web in the hierarchy that consists of webs nested within webs. Whenever possible, Web server administrators should place all Web content within the Web root. All the Web information (the Web pages written in Hypertext Markup Language (HTML), graphics files, sound files, and so on) is normally stored in folders and directories on the Web server. Administrators can create virtual directories, which are folders that are not contained within the Web server hierarchy (they can be on a completely different computer) but appear to the user to be part of that hierarchy. Another way of providing access to data that is on another computer is mapping drives or folders. These methods allow administrators to store files where they are most easily updated or take advantage of extra drive space on other computers. However, mapping drives, mapping folders, or creating virtual directories can result in easier access for intruders if the Web server's security is compromised. It is especially important not to map drives from other systems on the internal network.

If users accessing these webs must have access to materials on another system, such as a database, it is best to deploy a duplicate server within the Web server's DMZ or domain. That duplicate server should contain only a backup, not the primary working copy of the database. The duplicate server should also be configured so that no Web user or Web process can alter or write to its data store. Database updates should come only from the protected server within the internal network. If data from Web sessions must be recorded into the database, it is best to configure a sideband connection from the Web zone back to the primary server system for data transfers. Administrators should also spend considerable effort verifying the validity of input data before adding it to the database server.
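
Verifying input before it reaches the database can be sketched as an allowlist check, in which each field must fully match a pattern describing what is acceptable. The field names and patterns below are hypothetical; a real application would define its own rules and would still use parameterized queries when writing to the database.

```python
import re

# Hypothetical field rules: each value must fully match its allowlist pattern.
FIELD_PATTERNS = {
    "customer_id": re.compile(r"[0-9]{1,10}"),
    "email": re.compile(r"[A-Za-z0-9._%+-]{1,64}@[A-Za-z0-9.-]{1,255}"),
    "quantity": re.compile(r"[1-9][0-9]{0,3}"),
}


def validate_record(record: dict) -> list:
    """Return a list of problems; an empty list means the record passed."""
    problems = []
    for name in record:
        if name not in FIELD_PATTERNS:
            problems.append(f"unexpected field: {name}")
    for name, pattern in FIELD_PATTERNS.items():
        value = record.get(name)
        if not isinstance(value, str) or pattern.fullmatch(value) is None:
            problems.append(f"invalid or missing field: {name}")
    return problems
```

A record is passed along the sideband connection only when validate_record returns an empty list. Anything else is rejected and logged rather than repaired.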

Scripting Vulnerabilities

Maintaining a secure Web server means ensuring that all scripts and Web applications deployed on the Web server are free from Trojans, backdoors, or other malicious code. Many scripts are available on the Internet for the use of Web developers. However, scripts downloaded from external sources are more susceptible to coding problems than those developed in-house. If it is necessary to use external programming code sources, developers and administrators should employ quality assurance tests to search for out-of-place system calls, extra code, and unnecessary functions. These hidden segments of malevolent code are called logic bombs.

One logic bomb to watch out for occurs within Internet Server Application Programming Interface (ISAPI) scripts. The command RevertToSelf() allows the script to execute any following commands at a system-level security context. In a properly designed script, this command should never be used. If this command is present, the code has been altered, or was designed by a malicious or inexperienced coder. The presence of such a command enables attacks on a Web server through the submission of certain Uniform Resource Locator (URL) syntax constructions to launch a logic bomb.
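
Part of such a quality assurance review can be automated with a plain text search for calls that deserve a closer look. The sketch below is an assumption-laden starting point: apart from RevertToSelf, the names in SUSPICIOUS_CALLS are illustrative choices, and a match proves nothing by itself. It only tells a reviewer where to read carefully.

```python
import re
from typing import Dict, List

# Calls a reviewer might want flagged; this list is an illustrative assumption.
SUSPICIOUS_CALLS = ["RevertToSelf", "system", "exec", "eval"]


def find_suspicious_calls(source: str) -> Dict[str, List[int]]:
    """Map each flagged call name to the 1-based line numbers where it appears."""
    hits: Dict[str, List[int]] = {}
    for lineno, line in enumerate(source.splitlines(), start=1):
        for name in SUSPICIOUS_CALLS:
            # \b keeps "system(" from matching inside "filesystem(".
            if re.search(r"\b" + re.escape(name) + r"\s*\(", line):
                hits.setdefault(name, []).append(lineno)
    return hits
```

A simple search like this cannot detect obfuscated code, so it supplements a manual review of downloaded scripts rather than replacing it.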

Logging Activity

Logging, auditing, or monitoring the activity on your Web server becomes more important as the value of the data stored on the server increases. The monitoring process should focus on attempts to perform actions that are atypical for a Web user. These actions include, among others:

▪ Attempting to execute scripts

▪ Trying to write files

▪ Attempting to access files outside the Web root

The more traffic your Web server supports, the more difficult it becomes to review the audit trails. An automated solution is needed when the time required to review log files exceeds the time administrators have available for that task. IDSes are automated monitoring tools that look for abnormal or malicious activity on a system. An IDS can simply scan for problems and notify administrators or actively repel attacks once they are detected.
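
As a rough illustration of automated log review, the following sketch flags the three kinds of atypical request listed above. It assumes the server writes its access log in Common Log Format, which is not the default on every Web server. The method list, the file extensions, and the function name flag_line are illustrative assumptions, not a complete detection rule set.

```python
import re
from typing import List

# Matches the request part of a Common Log Format line: "METHOD /path HTTP/x.y"
REQUEST_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) HTTP/[0-9.]+"')

WRITE_METHODS = {"PUT", "DELETE"}
SCRIPT_EXTENSIONS = (".cgi", ".pl", ".exe", ".sh")  # illustrative, not exhaustive


def flag_line(line: str) -> List[str]:
    """Return the reasons a log line looks atypical; empty if it looks normal."""
    match = REQUEST_RE.search(line)
    if match is None:
        return ["unparseable request"]
    method = match.group("method")
    path = match.group("path").lower()
    reasons = []
    if method in WRITE_METHODS:
        reasons.append("write attempt")
    if "../" in path or "..%2f" in path or "..\\" in path:
        reasons.append("path outside Web root")
    # Ignore the query string when checking the file extension.
    if path.split("?", 1)[0].endswith(SCRIPT_EXTENSIONS):
        reasons.append("script execution attempt")
    return reasons
```

Running each log line through a function like this and reporting only the lines that return reasons reduces the volume an administrator must read, although a dedicated IDS applies far more signatures than this sketch does.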

Backups

Unfortunately, every administrator should assume that the Web server will be compromised at some point and that the data hosted on it will be destroyed, copied, or corrupted. This assumption will not become a reality in all cases, but planning for the worst is always the best security practice. A reliable backup mechanism must be in place to protect the Web server from failure. This mechanism can be a real-time mirror server to back up the primary Web server or just a daily backup to tape. Either way, a backup is the only insurance available that allows a return to normal operations within a reasonable amount of time. If security is as much maintaining availability as it is maintaining confidentiality, backups should be part of any organization's security policy.

Maintaining Integrity

Locking down the Web server is only one step in the security process. It is also necessary to maintain that security over time. Sustaining a secure environment requires monitoring the system for anomalies, applying new patches when they are available, and adjusting security configurations to match the ever-changing needs of the internal and external Web communities. If a security breach occurs, the organization should reevaluate previous security decisions and implementations. Administrators could have overlooked a security hole because of ignorance, or they might have simply misconfigured some security control.

Rogue Web Servers

There is one thing worse for a network administrator than having a Web server and knowing that it is not 100 percent secure, even after locking it down, and that is having a Web server on the network that you're not aware exists. These are sometimes called rogue Web servers, and they can come about in two ways. It is possible that a technically savvy user on the network has configured Web services on his or her machine. More often, however, rogue Web servers are deployed unintentionally. Many operating systems include Web server software and install it as part of the default OS installation, or the software can be installed accidentally if it is selected as an option. If administrators aren't careful when they install Windows (especially a member of the Server family) on a network computer, they can create a new Web server without even realizing it's there. When a Web server is present on a network without the knowledge of network administrators, no one will take the precautions necessary to secure that system. This makes the system (and through it, the entire network) vulnerable to every out-of-the-box exploit and attack for that Web server.

Hunting Down Rogue Web Servers

To check a system to see whether a local Web server is running without your knowledge, you can use a Web browser to access http://localhost/. If no Web server is running, you should see an error stating that you are unable to access the Web server. If you see any other message or a Web page (including a message advising that the page is under construction or coming soon), you are running a Web server locally. Once you discover the existence of such a server, you must either secure it or remove or disable it. Otherwise, the system will remain insecure.
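
The same browser check can be scripted so that it can be repeated on many machines. The sketch below uses Python's standard urllib module; the function name web_server_responds is hypothetical, and the check covers only the URL it is given, so a server listening on a nonstandard port would need to be probed separately.

```python
import http.client
import urllib.error
import urllib.request


def web_server_responds(url: str = "http://localhost/",
                        timeout: float = 3.0) -> bool:
    """Return True if anything answers HTTP at url, even with an error page."""
    # An empty ProxyHandler makes the request go directly to the host.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # A 403 or 404 still means a Web server produced the response.
        return True
    except (urllib.error.URLError, http.client.HTTPException, OSError):
        # Connection refused, timeout, or a reply that is not valid HTTP.
        return False
```

A result of True corresponds to seeing any page or server-generated message in the browser, and it means the system needs to be secured or have its Web service removed.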

Understanding Operating System Security

Regardless of the operating system being used, you should take certain steps to ensure that the system is up-to-date and secure. These include:

▪ Installing patches and service packs

▪ Verifying user account security

▪ Configuring logging to record significant events

▪ Backing up important data

Installing Patches and Service Packs

Even if you've just installed the latest version of an operating system, it's possible that the system is already out-of-date. This is especially true if the operating system is an older one, or one that was released a number of months or years earlier. Operating systems are often released with bugs and vulnerabilities that were either known or overlooked when the operating systems were made available on the market. The reasoning for this is that patches and service packs can be released later to fix the problems.

With many applications and operating systems, you need to visit the vendor's Web site to determine what patches and updates are available. Microsoft provides an easy solution to downloading and installing patches through the Windows Update site. In Internet Explorer, you can click on the Tools menu and then click the Windows Update menu item. In Windows Vista, you can also click on Start | All Programs | Windows Update. Doing so will take you to http://www.update.microsoft.com. Here, using a combination of scripts and ActiveX components, the site can analyze your system to determine what updates are needed for Windows and Internet Explorer, allowing you to then download and install them. If you install the Microsoft Update tool, the site can also identify whether updates are required for Microsoft Office, SQL Server, and other Microsoft applications installed on your computer.

Systems running Windows XP and Vista can also use the Automatic Updates feature. Turning on this feature will allow the computer to automatically connect to Microsoft's Web site and download any necessary updates. The feature can be turned on from the computer, or by clicking a link on the Windows Update site.

Network administrators may not wish to allow users to update their own machines, and often download the necessary updates themselves. These updates can then be applied remotely, or configured to install automatically when the user logs on or uses a particular tool (as in the case of Novell NetWare, where software can be automatically installed when the user accesses the ZENworks application launcher). An alternative is to use tools such as Offline Update, a freeware tool that downloads updates for the operating systems you specify, including Windows 2000, XP, Vista, and Server 2003, as well as updates for Microsoft Office 2003 and 2007. Once the updates are downloaded, Offline Update can write an International Organization for Standardization (ISO) file that can be burned to a CD or DVD, allowing you to install updates from the CD or DVD without connecting to the Internet.

Verifying User Account Security

A number of accounts are common to operating systems and to some applications that require usernames and passwords, and these accounts should be modified immediately after installation. A Guest account is often used for temporary users, and this should be disabled to prevent unauthorized users from gaining entry. If a temporary user account is needed, you should change the name from “Guest” (which is well known and easy for hackers to guess) to something less obvious. Another common account name is “Administrator” or “Admin,” which is used for administration of the computer and has the highest level of access. Because hackers can guess the name of this well-known account, it too should be changed to a less obvious username. A strong password should also be set for both of these accounts, if they are used and enabled.

Just as the Administrator account provides full access to the system, an Administrator group may also be used on the computer's operating system or the network operating system. The Administrator group provides the highest level of access to any users who are part of this group, but administrative privileges should be limited to only those who need them. Many computer users log on to the operating system using an account with this level of access, or the actual Administrator account. This means that the user could install programs that he or she doesn't need and that could contain viruses or Trojan horses. Also, because any malware installed on the machine would have the same privileges as the user who's logged on to the machine, this means the malware would be able to access anything on the system.

It is important that users have only the level of access they need to perform their work, or in the case of home users, perform the actions they'd do on a regular basis. Each user should have his or her own account, and should have a password set. This will prevent anyone with physical access to the machine from using the computer, and in the case of network accounts from accessing network resources.
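
The account checks described in this section can be expressed as a short review routine. The sketch below works on a hypothetical list of account records (dictionaries with name, enabled, and has_password keys) rather than querying a real operating system, so how that list is obtained is left as an assumption.

```python
from typing import Dict, List

# Account names the text identifies as well known and easy to guess.
WELL_KNOWN_NAMES = {"guest", "administrator", "admin"}


def account_findings(accounts: List[Dict]) -> List[str]:
    """Return one finding per problem found among the enabled accounts."""
    findings = []
    for acct in accounts:
        if not acct["enabled"]:
            continue  # disabled accounts cannot be used to log on
        name = acct["name"]
        if name.lower() in WELL_KNOWN_NAMES:
            findings.append(f"{name}: well-known account name is enabled")
        if not acct["has_password"]:
            findings.append(f"{name}: no password set")
    return findings
```

An empty result means that no enabled account uses a default name or lacks a password. It says nothing about password strength or group membership, which still need to be reviewed separately.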

Removing Applications That Aren't Required

As we mentioned earlier, operating systems often have services that run automatically, and any services that aren't required should be disabled. In addition to these services, applications installed on your computer can be used as a means for hackers to gain access to systems. Vulnerabilities in a program may be exploited, or the program may conflict with other aspects of the system. In addition, programmers may have developed the program with malicious code set to activate on a given date, or to use other services on the computer to provide a back door to systems. By installing only the applications a user needs to perform his or her work, you reduce the chance that a program will cause conflicts with the system. By setting Group Policies that prevent the user from installing additional software, you remove the possibility that unnecessary programs will cause unnecessary problems.

Logging

Auditing significant events on a computer can go a long way toward identifying problems, including intrusions to the system. Many operating systems and applications that are security-minded provide methods of logging certain actions that a user takes, such as logging on or accessing specific data. A review of these logs can provide vital information during an investigation, and can be a primary source of identifying whether someone has attempted to hack a system or access data he or she is not authorized to use.

Auditing Events on Windows

Windows operating systems such as Windows 200x, XP, and Vista provide the ability to generate logs that track events. Auditing is disabled by default on some systems, such as Windows 2000 and XP, but it should be enabled to track the success or failure of certain events through the following steps:

1. From the Start menu, select Settings, and then click on Control Panel.

2. Double-click on the Administrative Tools icon.

3. Double-click on the Local Security Policy icon.

4. When the Local Security Settings applet appears, expand Local Policies in the left pane, and then click on Audit Policy. By double-clicking on different policies in the right pane, you can then set the different events you want to log.

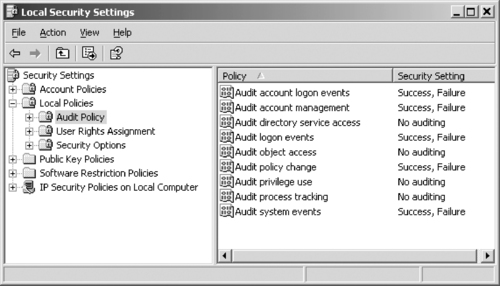

When logging is enabled, any events you've set to be logged are tracked. As shown in Figure 13.1, these events can include logging the success and/or failure of account logons, account management, logon events, policy changes, and system events. At a minimum, these events should be logged, as they can help determine whether someone has attempted to guess a user's password and log on as that person, or has performed other actions that would indicate an attempt to access the system using another person's account.

Figure 13.1 Local Security Settings

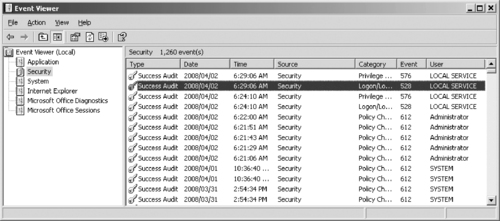

Once logging is enabled, you can view the log using the Event Viewer. In Windows 200x, XP, and Vista, you can access this tool in the Administrative Tools folder in the Control Panel. Double-clicking on the Event Viewer icon in this folder opens the tool shown in Figure 13.2. As you can see in this figure, by selecting different categories of events in the left pane, you can then view information on events related to those categories in the right pane. Double-clicking on a particular event in the right pane will provide more detailed information.

Figure 13.2 The Event Viewer

Monitoring user activity is an important part of the forensic process. Unusual user activity may be just an indicator of system problems, or it may be a real security issue. By analyzing the user activity reported in your system logs, you can determine authentication problems and hacking activity.

An important aspect of identifying relevant events in your system logs is determining which behavior is benign and which behavior indicates trouble. For example, if a user fails to authenticate one time in a one-week span, you can be fairly certain that the user simply mistyped his or her password and is not trying to hack into your system. Alternatively, if a user has two failed authentication attempts hourly for an extended period, you should look more deeply into the problem. We will be covering these types of events and going over how to make the critical determination as to whether a behavior is benign or a warning sign. Security is, and always should be, a top priority for anyone working on key infrastructure systems.
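The distinction between an isolated mistyped password and a sustained pattern of failures can be sketched in a few lines of Python. This is only an illustration: the function name, the `(user, timestamp)` record format, and the thresholds of two failures per hour over at least three separate hours are assumptions chosen to mirror the example above, not values taken from Windows or any particular logging tool.

```python
from collections import Counter
from datetime import datetime, timedelta


def suspicious_users(failures, per_hour=2, min_hours=3):
    """Return users with at least `per_hour` failed logons in each of
    at least `min_hours` distinct clock hours.

    `failures` is an iterable of (user, datetime) pairs; this record
    format is an assumption made for the sketch.
    """
    per_user_hour = Counter()
    for user, when in failures:
        # Truncate the timestamp to the start of its clock hour.
        hour = when.replace(minute=0, second=0, microsecond=0)
        per_user_hour[(user, hour)] += 1

    # Count, per user, the hours that reached the failure threshold.
    busy_hours = Counter(
        user for (user, _hour), count in per_user_hour.items()
        if count >= per_hour
    )
    return sorted(user for user, hours in busy_hours.items()
                  if hours >= min_hours)


# Hypothetical sample data: alice mistypes once, bob fails twice an hour.
start = datetime(2024, 1, 1, 9, 0)
sample = [("alice", start)]
for h in range(4):
    sample.append(("bob", start + timedelta(hours=h, minutes=5)))
    sample.append(("bob", start + timedelta(hours=h, minutes=40)))

flagged = suspicious_users(sample)
```

With this sample data, only the account that fails repeatedly hour after hour is flagged; the single mistyped password is ignored. Suitable thresholds depend on the environment and should be tuned rather than copied.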

Access to files can also be audited on a machine. As shown earlier in Figure 13.1, double-clicking on Audit object access in Local Security Settings allows you to set whether the success and/or failure of accessing objects such as files and folders is audited. By using Windows Explorer, you can right-click on the file or folder you want to audit, and then click Properties on the menu that appears. When the Properties dialog box appears, you would then click on the Security tab, and perform the following steps:

1. Click the Advanced button.

2. When the Advanced Security Settings screen appears, click the Auditing tab.

3. To add a new user or group to audit, click the Add button, type the name of the user or group to audit, and then click OK.

4. As shown in Figure 13.3, you can then set what will be audited. Checking a Successful checkbox will audit events that were successful, whereas checking a Failed checkbox will audit unsuccessful events.

Figure 13.3 The Auditing Entry for a Folder

Log Parser

Log Parser is a tool from Microsoft that can read text-based files, XML files, and comma-separated value (CSV) files, as well as data sources on Windows operating systems such as the Registry and Event log. It is available as a free download from Microsoft's Web site at http://www.microsoft.com/technet/scriptcenter/tools/logparser/default.mspx.

Tools such as Log Parser can help you identify events that could indicate a potential hack attempt or even just a user attempting to look at something he or she shouldn't be looking at. By enabling file access auditing and using Log Parser to examine the results, you can even track down potential security issues at the file level. Digging through these same log files to track down this data manually is both tedious and unnecessary with the advanced capabilities of Microsoft's Log Parser utility.
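To give a sense of the kind of file-level summary such a tool produces, the following Python sketch counts failed access attempts per user and file. It does not use Log Parser itself. It assumes the audit events have already been exported to CSV text with the hypothetical column names `User`, `Object`, and `Result`; a real export would use different headings.

```python
import csv
import io
from collections import Counter


def failed_access_by_user(csv_text):
    """Count failed access attempts per (user, object) pair.

    The column names User, Object, and Result are assumptions made
    for this sketch, not the layout of a real event log export.
    """
    counts = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["Result"].strip().lower() == "failure":
            counts[(row["User"], row["Object"])] += 1
    # Most frequent offenders first.
    return counts.most_common()


# Hypothetical exported audit data.
sample_csv = """User,Object,Result
jsmith,salaries.xls,Failure
jsmith,salaries.xls,Failure
jsmith,salaries.xls,Failure
mjones,salaries.xls,Success
mjones,budget.doc,Failure
"""

report = failed_access_by_user(sample_csv)
```

A user who repeatedly fails to open the same sensitive file rises to the top of the report, which is the kind of pattern worth following up during an investigation.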

Backing Up Data

As we've stressed throughout this book, important data should always be backed up. Some organizations use policies that require users to save data to locations on a server, so that only the server's data needs to be backed up and not each individual computer. If data is saved to the local hard disk of each computer in an organization, the folders where data is stored should be routinely backed up. This includes not only documents that the users have saved, but also data that may be stored in databases on the local machine.
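A routine folder backup can be as simple as writing a timestamped archive to another location. The following Python sketch shows one way to do this with the standard library. The function name and the archive naming scheme are assumptions for illustration, and the sketch assumes the destination folder lies outside the folder being backed up.

```python
import shutil
import time
from pathlib import Path


def back_up_folder(source, dest_dir):
    """Zip the contents of `source` into `dest_dir` and return the
    path of the new archive.

    Assumes `dest_dir` is not located inside `source`.
    """
    source = Path(source)
    dest_dir = Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)

    # A timestamp in the name keeps earlier backups from being overwritten.
    stamp = time.strftime("%Y%m%d-%H%M%S")
    base_name = dest_dir / f"{source.name}-{stamp}"

    # make_archive appends the ".zip" extension and returns the full name.
    archive = shutil.make_archive(str(base_name), "zip", root_dir=str(source))
    return Path(archive)
```

In practice, a call such as `back_up_folder("C:/Data", "E:/Backups")` (both paths are placeholders) would be run on a schedule, and the archives would be stored on separate media or on a server rather than on the same disk.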

Microsoft Operating Systems

Microsoft is one of the leading vendors of server and desktop operating systems, and each new version of Windows has included new features to improve (or attempt to improve) security. However, even with Microsoft's widespread use, the company's track record for security has been less than spotless (of course, the same is true for every other operating system). To make your Windows operating system more secure, you should perform certain steps.

Disabling Services

Services are programs that perform specific tasks or functions, and run in the background while the computer is running. Although certain core services need to be running for Windows to operate, your system may not require many others. Unfortunately, with the exception of Windows Server 2003, which requires the network administrator to enable the services that he or she deems necessary, services are installed and enabled by default regardless of whether you want them. These unneeded services use up memory and take up processing time from the CPU, and can make the computer run slower. Even worse, if there are any programming flaws in the services, these may be exploited by hackers and viruses. Although patches are released to fix these vulnerabilities, it may take months or years after the operating system has been released before the problem is identified and a patch is made available. Even when patches are available, it doesn't mean that users will download and install them immediately.

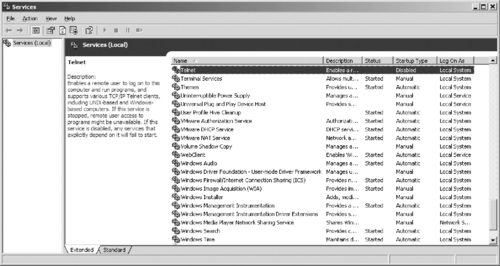

Because of the security risks and other issues that unneeded services can cause, it is wise to disable any unnecessary services on the computer. You can disable services through the Services applet (found in the Administrative Tools folder of the Control Panel), which you can alternatively open by clicking Start | Run, typing services.msc in the Open field, and clicking OK. As shown in Figure 13.4, the right pane of this tool provides a listing of all services installed on the computer. When you select a service, a description of the service appears to the left.

Figure 13.4 Services

Double-clicking on a service will display the Properties dialog box for it. By clicking on the General tab, you will see a drop-down list titled Startup Type. This list allows you to set what happens with the service when the computer starts. The different startup types in the list are:

▪ Automatic, which causes the service to automatically start when Windows starts

▪ Manual, in which the service starts only when the user or another service needs it

▪ Disabled, in which the service will not start under any circumstances

When you set the startup type of a service you don't need to Disabled, the service cannot be started. When a service is stopped, programs that rely on it will be unable to use its functions, and when a service is disabled, any services that depend on it will fail to start. Therefore, before disabling a service, make sure that it isn't needed by applications or services that you do require. Services that Windows XP doesn't require in order to run include:

▪ Alerter, which notifies specific users and computers of administrative alerts. This should be disabled if the computer is not on a corporate network.

▪ ClipBook, which allows the ClipBook Viewer to store information and share it with other computers on the network. This tool isn't commonly used anymore, and is generally safe to disable.

▪ Messenger, which (despite its name) isn't related to Windows Messenger. The service is used to transmit net send and alerter messages between clients and servers. It is often used to send spam, and is already disabled in some installations.

▪ SSDP Discovery Service, which is used for discovering Universal Plug and Play (UPnP) devices on a home network.

▪ Telnet, which is used by remote users to log on to the computer and run programs.

▪ NetMeeting Remote Desktop Sharing, which allows authorized users to access the computer remotely using NetMeeting. It may be used on corporate intranets, but should be disabled if NetMeeting isn't used.

▪ Remote Registry, which allows remote users to modify Registry settings on the computer. This isn't a needed service on home networks or stand-alone machines, and should be disabled if it isn't used on corporate networks.

▪ Smart Card, which manages access to smart cards read by the computer. If smart cards aren't used, it should be disabled.

▪ Routing and Remote Access, which is used for routing services to businesses in LANs and WANs. Again, this isn't needed on stand-alone machines and home networks, and should be disabled if not used on a corporate network.
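Because disabling a service also prevents anything that depends on it from starting, it helps to work out the full set of affected services before making a change. The following Python sketch walks a dependency map to do so. The map format and the service names are hypothetical; on a real system, the dependencies listed in each service's Properties dialog box would supply the data.

```python
def affected_by_disabling(service, depends_on):
    """Return every service that directly or indirectly depends on
    `service`, given a map of service name -> list of required services.
    """
    affected = set()
    frontier = [service]
    while frontier:
        current = frontier.pop()
        for name, requirements in depends_on.items():
            if current in requirements and name not in affected:
                affected.add(name)
                # Anything depending on this service is affected too.
                frontier.append(name)
    return sorted(affected)


# Hypothetical dependency map: ServiceC needs ServiceB, which needs ServiceA.
dependencies = {
    "ServiceA": [],
    "ServiceB": ["ServiceA"],
    "ServiceC": ["ServiceB"],
    "ServiceD": [],
}

would_fail = affected_by_disabling("ServiceA", dependencies)
```

Here, disabling ServiceA would stop both ServiceB and ServiceC from starting, while disabling ServiceD would affect nothing else.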

NIC Bindings

Microsoft Windows operating systems use a mechanism known as binding to associate specific services and protocols with particular network interfaces. A network interface can be any port into or out of the computer, including a network interface card (NIC), a modem, or even a serial port. By default, Windows enables every possible binding when the OS is first installed and when a new interface or new service or protocol is installed. This is done to ensure that everything “just works”—it's easier and more convenient for less knowledgeable users than having to troubleshoot communication problems and enable the correct bindings. However, it also opens up security holes, so each time a significant change is made to a system, you should inspect the bindings to make sure no unwanted bindings are enabled.

One of the most common problems arising from this situation occurs when a system is connected to a broadband communication device for the first time. Windows automatically binds TCP/IP to the new interface as well as the Microsoft Networking service and File and Print Services. This effectively transforms a “stand-alone” system into a member of a network comprising other systems on the local broadband segment. This situation poses a significant security risk. To return the system to a more secure configuration, you must disable the bindings for every service and protocol other than TCP/IP on the new Internet interface.
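The inspection described above amounts to comparing what is bound to each Internet-facing interface against the short list of what should be bound. The following Python sketch shows that comparison over data gathered by hand. The interface and component names are illustrative assumptions, and the sketch does not query Windows for its actual bindings.

```python
# Only TCP/IP should remain bound to an Internet-facing interface.
ALLOWED_ON_INTERNET = {"TCP/IP"}


def unwanted_bindings(bindings, internet_interfaces):
    """Return {interface: [components to unbind]} for each
    Internet-facing interface that has more than TCP/IP bound to it.
    """
    report = {}
    for interface in internet_interfaces:
        bound = set(bindings.get(interface, ()))
        extra = sorted(bound - ALLOWED_ON_INTERNET)
        if extra:
            report[interface] = extra
    return report


# Hypothetical inventory recorded from the network connection properties.
inventory = {
    "Local Area Connection": ["TCP/IP", "Microsoft Networking",
                              "File and Print Services"],
    "Broadband Connection": ["TCP/IP", "Microsoft Networking",
                             "File and Print Services"],
}

to_fix = unwanted_bindings(inventory, ["Broadband Connection"])
```

Only the broadband interface is reported, because bindings on the internal LAN interface may be legitimate. Repeating the check after every significant change helps catch bindings that Windows has quietly re-enabled.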

Understanding Security and UNIX/Linux Operating Systems

UNIX and Linux systems have a reputation for being more secure than Windows operating systems. To some extent, this reputation might be justified, but UNIX and Linux have a significant number of unique security vulnerabilities as well as sharing some common vulnerabilities with Windows OSes. Remember: No operating system is without security problems. Because UNIX has been around for decades, it has already been through numerous attack and repair cycles. For that reason, its current manifestations are generally more secure than many Windows OSes. Linux is based on UNIX architecture, and has been around since 1991. It is generally more secure than Windows for two reasons: It lacks several of the biggest pitfalls of Windows (such as support for NetBIOS), and it is less of a target for attackers, who prefer to concentrate on developing attacks for the most widely deployed operating systems, which means Windows. Nonetheless, both UNIX and Linux still have security problems.

For example, the passwd program used by some versions of UNIX appears to require somewhat secure passwords: That is, it requires passwords that contain at least five letters (or four characters if numerals or symbols are included). But this requirement is an illusion, because the program will accept a shorter password if the user enters it three times—thus overriding the “requirement.”

Many UNIX machines use accounts with names such as lpq or date that are used to execute simple commands without requiring users to log on. Often such accounts have blank passwords and a user ID of 0, which means they execute with superuser permissions. This is a big security hole because anyone can use these accounts, including hackers, who might replace the command that the account is supposed to run with one of their own.
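Accounts of this kind can be spotted by scanning the password file for blank password fields and for accounts other than root that have a user ID of 0. The following Python sketch does this over text in the traditional colon-separated passwd format. The function name and the sample entries are assumptions for illustration. On systems that use a shadow password file, the password field in /etc/passwd holds only a placeholder, so a blank-password check would need to examine the shadow file instead.

```python
def risky_accounts(passwd_text):
    """Return (account, problems) pairs for entries with a blank
    password field or a non-root account with UID 0.

    Expects lines in the form name:password:uid:gid:gecos:home:shell.
    """
    findings = []
    for line in passwd_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        fields = line.split(":")
        if len(fields) < 7:
            continue  # Skip malformed entries rather than guess.
        name, password, uid = fields[0], fields[1], fields[2]
        problems = []
        if password == "":
            problems.append("blank password")
        if uid == "0" and name != "root":
            problems.append("UID 0")
        if problems:
            findings.append((name, problems))
    return findings


# Hypothetical passwd entries for demonstration only.
sample_passwd = """root:x:0:0:root:/root:/bin/sh
date::0:0:date command:/:/bin/date
jsmith:x:1001:1001:J Smith:/home/jsmith:/bin/sh
"""

problems_found = risky_accounts(sample_passwd)
```

In this sample, only the date account is reported, because it has both a blank password and superuser privileges.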

When securing a deployment of UNIX or Linux, you must take the following measures. This list is not exhaustive and does not apply to every version of UNIX or Linux.

▪ Use strong passwords.

▪ Implement password aging.

▪ Implement a shadow password file.

▪ Eliminate shared accounts. This is a type of “group account” that differs from the security groups used on Windows, NetWare, and UNIX systems. Security groups contain user accounts, whereas the UNIX shared group account is a single account that many different people use, often members of a team working together on a particular project. Instead of using group accounts, you should place the user accounts in groups by editing the /etc/group file.

▪ Be careful about implementing the “trusted hosts” concept. It allows users to designate host computers that are to be considered trusted, so the users will not have to enter a password every time they use the network. This system can be exploited, so the best security practice is to disallow trusted hosts. If trusted hosts are allowed, only local hosts should be trusted; remote hosts should never be trusted. (Trusted hosts are listed in the /etc/hosts.equiv file.)

▪ Remove the “secure” designation from all terminals so that the root account can't log on from unsecured terminals, even with the password. Authorized users will still be able to use the su command to become a superuser.

▪ Ensure that network file system (NFS) security is enabled. Some UNIX implementations have no NFS security features enabled by default, which means that any Internet host (including untrusted hosts) can access files via NFS.

▪ Disallow anonymous FTP unless it is necessary.

▪ Disallow shell scripts that have the setuid or setgid permission bits set on them.

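▪ Set the sticky bit on directories to prevent users from deleting or renaming other users’ files. The sticky bit's older meaning on executable files is no longer honored by systems such as Linux and FreeBSD, but its behavior on directories remains widely supported, including on Linux, FreeBSD, and Solaris.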

▪ Set default file permissions so that group/world read/write access is not granted.

▪ Write-protect the root account startup files and home directory.

▪ Use only secured applications and service daemons. Secure NFS, Network Information Service (NIS), X Windows, and so on. Disable the r commands if they are not used.

▪ Remove unnecessary services and protocols. You can remove unneeded services by editing the /etc/inetd.conf and /etc/rc.conf files.

▪ Log all connections to network services, and use a TCP wrapper to track connections.

▪ Prevent domain name system (DNS) hostname spoofing.

▪ Set appropriate permissions on all files.

▪ Employ packet filtering.

▪ For remote logons, use a secured shell instead of Telnet, FTP, RLOGIN, RSH, and so on.

▪ For file encryption, use add-on programs that use strong algorithms (such as 3DES) rather than the standard UNIX crypt command, which is easily broken.

▪ Ensure that device files such as /dev/kmem, /dev/mem, and /dev/drum cannot be read by the world. Most device files should be owned by user “root.”
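Several of the measures in this list come down to inspecting file permission bits. The following Python sketch reports the bits that most often deserve a second look: setuid, setgid, world-readable, world-writable, and world-writable directories that lack the sticky bit. The function names are assumptions for illustration, and the sketch only reports findings. Deciding whether a given finding is a problem (for example, a setuid bit on a shell script or a world-readable /dev/kmem) is left to the administrator.

```python
import os
import stat


def describe_mode(mode):
    """Return a list of noteworthy permission findings for a file
    mode as returned in os.stat(...).st_mode.
    """
    findings = []
    if mode & stat.S_ISUID:
        findings.append("setuid")
    if mode & stat.S_ISGID:
        findings.append("setgid")
    if mode & stat.S_IROTH:
        findings.append("world-readable")
    if mode & stat.S_IWOTH:
        findings.append("world-writable")
    # A world-writable directory without the sticky bit lets any user
    # delete or rename other users' files in it.
    if stat.S_ISDIR(mode) and mode & stat.S_IWOTH and not mode & stat.S_ISVTX:
        findings.append("world-writable directory without sticky bit")
    return findings


def audit_path(path):
    """Describe the permission bits of an existing file or directory."""
    return describe_mode(os.stat(path).st_mode)
```

For example, `describe_mode(0o104755)` reports a setuid, world-readable file, and `describe_mode(0o040777)` additionally warns about the missing sticky bit. Running `audit_path` over device files or the root account's startup files gives a quick view of which entries need their permissions tightened.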