Chapter 2

Multicore-Based EWSNs—An Example of Parallel and Distributed Embedded Systems*

Advancements in silicon technology, embedded systems, sensors, micro-electro-mechanical systems, and wireless communications have led to the emergence of embedded wireless sensor networks (EWSNs). EWSNs consist of sensor nodes with embedded sensors to sense data about a phenomenon, and these sensor nodes communicate with neighboring sensor nodes over wireless links. Many emerging EWSN applications (e.g., surveillance, volcano monitoring) require a plethora of sensors (e.g., acoustic, seismic, temperature, and, more recently, image sensors and/or smart cameras) embedded in the sensor nodes. Although traditional EWSNs equipped with scalar sensors (e.g., temperature, humidity) transmit most of the sensed information to a sink node (base station node), this sense-transmit paradigm is becoming infeasible for information-hungry applications equipped with a plethora of sensors, including image sensors and/or smart cameras.

Processing and transmission of the large amount of sensed data in emerging applications exceed the capabilities of traditional EWSNs. For example, consider a military EWSN deployed in a battlefield, which requires various sensors, such as imaging, acoustic, and electromagnetic sensors. This application presents various challenges for existing EWSNs since transmission of high-resolution images and video streams over bandwidth-limited wireless links from sensor nodes to the sink node is infeasible. Furthermore, meaningful processing of multimedia data (acoustic, image, and video in this example) in real time exceeds the capabilities of traditional EWSNs consisting of single-core embedded sensor nodes [33, 34] and requires more powerful embedded sensor nodes to realize this application.

Since single-core EWSNs will soon be unable to meet the increasing requirements of information-rich applications (e.g., video sensor networks), next-generation sensor nodes must possess enhanced computation and communication capabilities. For example, the transmission rate for the first-generation MICA motes was 38.4 kbps, whereas the second-generation MICA motes (MICAz motes) can communicate at 250 kbps using IEEE 802.15.4 (ZigBee) [35]. Despite these advances in communication, limited wireless bandwidth from sensor nodes to the sink node makes timely transmission of multimedia data to the sink node infeasible. In traditional EWSNs, the communication energy dominates the computation energy. For example, an embedded sensor node produced by Rockwell Automation [36] expends 2000× more energy transmitting a bit than executing a single instruction [37]. Similarly, transmitting a 15 frames per second (FPS) digital video stream over a wireless Bluetooth link takes 400 mW [38].

Fortunately, there exists a trade-off between transmission and computation in an EWSN, which is well suited for in-network processing for information-rich applications and allows transmission of only event descriptions (e.g., detection of a target of interest) to the sink node to conserve energy. Technological advancements in multicore architectures have made multicore processors a viable and cost-effective choice for increasing the computational ability of embedded sensor nodes. Multicore embedded sensor nodes can extract the desired information from the sensed data and communicate only this processed information, which reduces the data transmission volume to the sink node. By replacing a large percentage of communication with in-network computation, multicore embedded sensor nodes could realize large energy savings that would increase the sensor network's overall lifetime.
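The trade-off between transmission and computation can be made concrete with a small back-of-the-envelope model. The sketch below is illustrative only: it measures energy in units of "one executed instruction" and uses the roughly 2000:1 transmit-to-instruction ratio cited above [37]. The frame size, the event-description size, and the `instr_per_bit` workload intensity are hypothetical values chosen for the example, not measurements from the chapter.

```python
# Energy is expressed in "instruction-equivalents", so no absolute joule
# figures are needed. The 2000:1 ratio is the figure cited in the text [37].
TX_COST_PER_BIT = 2000  # instruction-equivalents needed to transmit one bit


def raw_transmit_cost(raw_bits: int) -> int:
    """Energy to forward the sensed data to the sink unprocessed."""
    return raw_bits * TX_COST_PER_BIT


def in_network_cost(raw_bits: int, instr_per_bit: int, result_bits: int) -> int:
    """Energy to process the data locally and transmit only the result.

    instr_per_bit is a hypothetical workload intensity (instructions executed
    per sensed bit); result_bits is the size of the event description.
    """
    compute = raw_bits * instr_per_bit
    transmit = result_bits * TX_COST_PER_BIT
    return compute + transmit


def break_even_instr_per_bit(raw_bits: int, result_bits: int) -> float:
    """Largest instr_per_bit at which in-network processing still costs no more."""
    return TX_COST_PER_BIT * (raw_bits - result_bits) / raw_bits


if __name__ == "__main__":
    frame_bits = 320 * 240 * 8   # assumed: one 8-bit grayscale 320x240 frame
    event_bits = 64 * 8          # assumed: a 64-byte event description
    print("raw transmit:", raw_transmit_cost(frame_bits))
    print("in-network  :", in_network_cost(frame_bits, 100, event_bits))
    print("break-even  :", break_even_instr_per_bit(frame_bits, event_bits))
```

Under these assumptions, local processing remains cheaper than raw transmission until the workload approaches about 2000 instructions per sensed bit, which is why event-description reporting can conserve so much energy.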

Multicore embedded sensor nodes enable energy savings over traditional single-core embedded sensor nodes in two ways. First, a multicore embedded sensor node reduces the energy expended in communication by performing in situ computation of sensed data and transmitting only processed information. Second, a multicore embedded sensor node allows the computations to be split across multiple cores while running each core at a lower processor voltage and frequency, as compared to a single-core system, which results in energy savings. Utilizing a single-core embedded sensor node for information processing in information-rich applications requires the sensor node to run at a high processor voltage and frequency to meet the application's delay requirements, which increases the power dissipation of the processor. A multicore embedded sensor node reduces the number of memory accesses, clock speed, and instruction decoding, thereby enabling higher arithmetic performance at a lower power consumption as compared to a single-core processor [38].

Preliminary studies have demonstrated the energy efficiency of multicore embedded sensor nodes as compared to single-core embedded sensor nodes in an EWSN. For example, Dogan et al. [39] evaluated single-core and multicore architectures for biomedical signal processing in wireless body sensor networks (WBSNs) where both energy efficiency and real-time processing are crucial design objectives. Results revealed that the multicore architecture consumed 66% less power than the single-core architecture for high biosignal computation workloads (i.e., 50.1 mega operations per second (MOPS)), whereas the multicore architecture consumed 10.4% more power than the single-core architecture for relatively light computation workloads (i.e., 681 kilo operations per second (KOPS)).

This chapter's highlights are as follows:

- Proposal of a heterogeneous hierarchical multicore embedded wireless sensor network (MCEWSN) and the associated multicore embedded sensor node architecture.

- Elaboration on several computation-intensive tasks performed by sensor networks that would especially benefit from multicore embedded sensor nodes.

- Characterization and discussion of various application domains for MCEWSNs.

- Discussion of several state-of-the-art multicore embedded sensor node prototypes developed in academia and industry.

- Research challenges and future research directions for MCEWSNs.

The remainder of this chapter is organized as follows. Section 2.1 proposes an MCEWSN architecture. Section 2.2 proposes a multicore embedded sensor node architecture for MCEWSNs. Section 2.3 elaborates on several compute-intensive tasks that motivated the emergence of MCEWSNs. Potential application domains amenable to MCEWSNs are discussed in Section 2.4. Section 2.5 discusses several prototypes of multicore embedded sensor nodes. Section 2.6 discusses the research challenges and future research directions for MCEWSNs, and Section 2.7 concludes this chapter.

2.1 Multicore Embedded Wireless Sensor Network Architecture

Figure 2.1 depicts our proposed heterogeneous hierarchical MCEWSN architecture, which satisfies the increasing in-network computational requirements of emerging EWSN applications. The heterogeneity in the architecture subsumes the integration of numerous single-core embedded sensor nodes and several multicore embedded sensor nodes. We note that homogeneous hierarchical single-core EWSNs have been discussed in the literature for large EWSNs (EWSNs consisting of a large number of sensor nodes) [40, 41]. Our proposed architecture is hierarchical since the architecture comprises various clusters (a group of embedded sensor nodes in communication range with each other) and a sink node. A hierarchical network is well suited for large EWSNs since small EWSNs, which consist of only a few sensor nodes, can send the sensed data directly to the base station or sink node.

Figure 2.1 A heterogeneous multicore embedded wireless sensor network (MCEWSN) architecture

Each cluster consists of several leaf sensor nodes and a cluster head. Leaf sensor nodes contain a single-core processor and are responsible for sensing, preprocessing sensed data, and transmitting sensed data to the cluster head nodes. Since leaf sensor nodes are not intended to perform complex processing of sensed data in our proposed architecture, a single-core processor sufficiently meets the computational requirements of leaf sensor nodes. Cluster head nodes consist of a multicore processor and are responsible for coalescing/fusing the data received from leaf sensor nodes for transmission to the sink node in an energy- and bandwidth-efficient manner. Our choice of multicore cluster heads is a practical one: sending all of the collected data from the cluster heads to the sink node is not feasible in bandwidth-limited EWSNs, so complex processing and information fusion must be carried out at the cluster head nodes, and only the concise processed information is transmitted to the sink node.

The sink node contains a multicore processor and is responsible for transforming high-level user queries from the control and analysis center (CAC) to network-specific directives, querying the MCEWSN for the desired information, and returning the requested information to the user/CAC. The sink node's multicore processor facilitates postprocessing of the information received from multiple cluster heads. The postprocessing at the sink node includes information fusion and event detection based on the aggregated data from all of the sensor nodes in the network. The CAC further analyzes the information received from the sink node and issues control commands and queries to the sink node.

MCEWSNs can be coupled with a satellite backbone network that provides long-haul communication from the sink node to the CAC since MCEWSNs are often deployed in remote areas with no wireless infrastructure, such as a cellular network infrastructure. The satellites in the satellite backbone network communicate with each other via intersatellite links (ISLs). Since a satellite's uplink and downlink bandwidth is limited, a multicore processor in the sink node is required to process, compress, and/or encrypt the information sent to the satellite backbone network.

Although this chapter focuses on heterogeneous MCEWSNs, homogeneous MCEWSN architectures are an extension of our proposed architecture (Fig. 2.1) where leaf sensor nodes also contain a multicore processor. In a homogeneous MCEWSN equipped with multiple sensors, each processor core in a multicore embedded sensor node can be assigned to process one sensing task (e.g., one processor core handles sensed temperature data, another processor core handles sensed humidity data, and so on) as opposed to single-core embedded sensor nodes where the single processor core is responsible for processing all of the sensed data from all of the sensors. We focus on heterogeneous MCEWSNs as we believe that heterogeneous MCEWSNs would serve as a first step toward integration of multicore and sensor networking technology for the following reason. Owing to the dominance of single-core embedded sensor nodes in existing EWSNs, replacing all of the single-core embedded sensor nodes with multicore embedded sensor nodes may not be feasible or cost effective, given that only a few multicore embedded sensor nodes operating as cluster heads could meet an application's in-network computation requirements. Hence, our proposed heterogeneous MCEWSN would enable a smooth transition from single-core EWSNs to multicore EWSNs.

2.2 Multicore Embedded Sensor Node Architecture

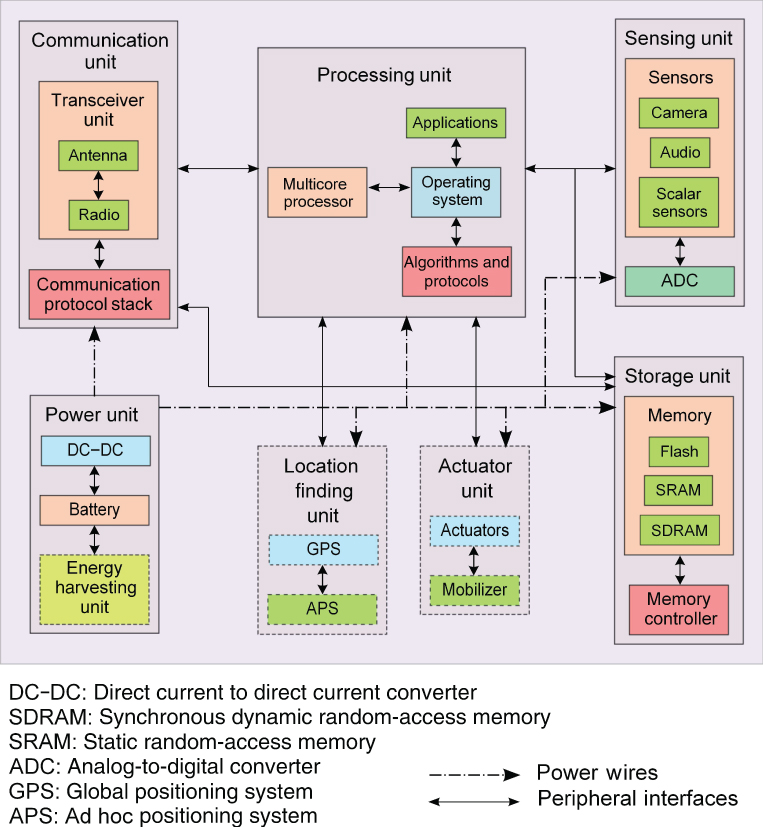

Figure 2.2 depicts the architecture of a multicore embedded sensor node in our MCEWSN. The multicore embedded sensor node consists of a sensing unit, a processing unit, a storage unit, a communication unit, a power unit, an optional actuator unit, and an optional location finding unit (optional units are represented by dotted lines in Fig. 2.2) [33].

Figure 2.2 Multicore embedded sensor node architecture

2.2.1 Sensing Unit

The sensing unit senses the phenomenon of interest and is composed of two subunits: sensors (e.g., camera/image, audio, and scalar sensors such as temperature and pressure) and analog-to-digital converters (ADCs). Image sensors can either leverage traditional charge-coupled device (CCD) technology or complementary metal-oxide-semiconductor (CMOS) imaging technology. The CCD sensor accumulates the incident light energy as the charge accumulated on a pixel, which is then converted into an analog voltage signal. In CMOS imaging technology, each pixel has its own charge-to-voltage conversion and other processing components, such as amplifiers, noise correction, and digitization circuits. The CMOS imaging technology enables integration of the lens, an image sensor, and image compression and processing technology on a single chip. ADCs convert the analog signals produced by sensors to digital signals, which serve as input to the processing unit.

2.2.2 Processing Unit

The processing unit consists of a multicore processor and is responsible for controlling sensors, gathering and processing sensed data, executing the system software that coordinates sensing and communication tasks, and interfacing with the storage unit. The processing unit for traditional sensor nodes consists of a single-core processor for general-purpose applications, such as periodic sensing of scalar data (e.g., temperature, humidity). High-performance single-core processors would be infeasible for meeting the computational requirements of emerging applications since these single-core processors would require operation at high processor voltage and frequency. A processor operating at a high voltage and frequency consumes an enormous amount of power since power increases proportionally to the operating processor frequency and the square of the operating processor voltage. Furthermore, even if these energy issues are ignored, a single high-performance processor core may not be able to meet the computational requirements of emerging applications, such as multimedia sensor networks, in real time.

Multicore processors distribute the computations across the available cores, which speeds up the computations as well as conserves energy by allowing each processor core to operate at a lower processor voltage and frequency. Multicore processors are suitable for streaming and complex, event-based monitoring applications, such as in smart camera sensor networks, that require data to be processed and compressed as well as require extraction of key information features. For example, the IC3D/Xetal single-instruction multiple-data (SIMD) processor, which consists of a linear processor array (LPA) with 320 reduced instruction set computers (RISC)/processors, is being used in smart camera sensor networks [42].
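The voltage and frequency argument above can be illustrated with the usual first-order dynamic power relation, in which power is proportional to the switched capacitance, the square of the supply voltage, and the clock frequency. The following is a minimal sketch under strong simplifying assumptions that are not taken from the chapter: the workload parallelizes perfectly, the supply voltage scales linearly with frequency down to a floor `v_min`, and static (leakage) power is ignored. All numeric values are hypothetical.

```python
def dynamic_power(c_eff: float, voltage: float, freq_hz: float) -> float:
    """First-order dynamic power: P = C_eff * V^2 * f (watts)."""
    return c_eff * voltage ** 2 * freq_hz


def multicore_power(c_eff: float, v_nominal: float, f_nominal: float,
                    n_cores: int, v_min: float) -> float:
    """Total dynamic power when the same throughput is spread over n_cores.

    Assumptions (hypothetical): perfect parallel speedup, so each core runs at
    f_nominal / n_cores; voltage scales linearly with frequency but cannot
    drop below v_min.
    """
    f_core = f_nominal / n_cores
    v_core = max(v_nominal / n_cores, v_min)
    return n_cores * dynamic_power(c_eff, v_core, f_core)


if __name__ == "__main__":
    c_eff, v_nom, f_nom = 1e-9, 1.2, 400e6   # illustrative values only
    single = dynamic_power(c_eff, v_nom, f_nom)
    quad = multicore_power(c_eff, v_nom, f_nom, n_cores=4, v_min=0.6)
    print(f"single core: {single:.3f} W")
    print(f"four cores : {quad:.3f} W ({quad / single:.0%} of single core)")
```

In this idealized setting the total frequency budget is unchanged, so the entire saving comes from the quadratic voltage term. Real designs see smaller gains because of leakage, imperfect parallelism, and inter-core communication.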

2.2.3 Storage Unit

The storage unit consists of the memory subsystem, which can be classified as user memory and program memory, and a memory controller, which coordinates memory accesses between different processor cores. The user memory stores sensed data when immediate data transmission is not possible due to hardware failures, environmental conditions, physical layer jamming, limited energy reserves, or when the data requires processing. The program memory is used for programming the embedded sensor node, and using Flash memory for the program memory provides persistent storage of application code and text segments. Static random-access memory (SRAM), which does not need periodic refreshing but is expensive in terms of area and power consumption, is used as dedicated processor memory. Synchronous dynamic random-access memory (SDRAM) is typically used as user memory. For example, the Imote2 embedded sensor node, which contains a Marvell PXA271 XScale processor operating between 13 and 416 MHz, has 256 kB SRAM, 32 MB Flash, and 32 MB SDRAM [43].

2.2.4 Communication Unit

The communication unit interfaces the embedded sensor node to the wireless network and consists of a transceiver unit (transceiver and antenna) and the communication unit software. The communication unit software mainly consists of the communication protocol stack and the physical layer software in the case of software-defined radio (SDR). The transceiver unit consists of either a wireless local area network (WLAN) card, such as an IEEE 802.11b compliant card, or an IEEE 802.15.4 compatible card, such as a Texas Instruments/Chipcon CC2420 chipset. The choice of a transceiver unit card depends on the application requirements such as desired range and allowable power. The maximum transmit power of IEEE 802.11b cards is higher in comparison to IEEE 802.15.4 cards, which results in a higher communication range but consumes more power. For example, the Intel PRO/Wireless 2011 card has a data rate of 11 Mbps and a typical transmit power of 18 dBm, but draws 300 and 170 mA for sending and receiving, respectively. The CC2420 802.15.4 radio has a maximum data rate of 250 kbps and a transmit power of 0 dBm, but draws only 17.4 and 19.7 mA for sending and receiving, respectively.
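The two radios quoted above can also be compared per transmitted bit rather than per second. The sketch below converts each current draw and nominal data rate into charge per bit. It is a rough illustration only: it assumes the radio sustains its nominal data rate with no protocol overhead, and it stops at charge rather than energy because the text does not give the supply voltages. The function name is hypothetical.

```python
def charge_per_bit_nc(current_ma: float, rate_bps: float) -> float:
    """Charge drawn per bit, in nanocoulombs, at the nominal data rate.

    current (A) / rate (bit/s) gives coulombs per bit; the factor 1e6 folds
    together the mA -> A (1e-3) and C -> nC (1e9) conversions.
    """
    return current_ma * 1e6 / rate_bps


# Figures quoted in the text: (send mA, receive mA, nominal bits per second).
RADIOS = {
    "Intel PRO/Wireless 2011 (802.11b)": (300.0, 170.0, 11e6),
    "CC2420 (802.15.4)": (17.4, 19.7, 250e3),
}

if __name__ == "__main__":
    for name, (tx_ma, rx_ma, rate) in RADIOS.items():
        print(f"{name}: send {charge_per_bit_nc(tx_ma, rate):.1f} nC/bit, "
              f"receive {charge_per_bit_nc(rx_ma, rate):.1f} nC/bit")
```

Under these idealized assumptions the 802.11b card draws far more current but, at its full nominal rate, less charge per bit. Since sensor nodes rarely have enough data to keep a high-rate radio busy, the instantaneous current draw usually remains the deciding factor, which is consistent with choosing the transceiver according to range and allowable power.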

2.2.5 Power Unit

The power unit supplies power to various components/units on the embedded sensor node and dictates the sensor node's lifetime. The power unit consists of a battery and a DC–DC converter. The DC–DC converter provides a constant supply voltage to the sensor node. The power unit may be augmented by an optional energy-harvesting unit that derives energy from external sources, such as solar cells. Although multicore embedded sensor nodes are more power efficient as compared to single-core embedded sensor nodes, energy-harvesting units in multicore cluster heads and the sink node would prolong the MCEWSN's lifetime. Energy-harvesting units are more suitable for cluster heads and the sink node as these nodes perform more computations as compared to the single-core leaf sensor nodes. Furthermore, incorporating energy-harvesting units in only a few embedded sensor nodes (i.e., cluster heads and sink nodes) would not substantially increase the cost of EWSN deployment. Without an energy-harvesting unit, MCEWSNs would only be suitable for applications with relatively small lifetime requirements.

2.2.6 Actuator Unit

The optional actuator unit consists of actuators (e.g., motors, servos, linear actuators, air muscles, muscle wire, camera pan tilt) and an optional mobilizer unit for sensor node mobility. Actuators enhance the sensing task by opening/closing a switch/relay to control functions, such as a camera or antenna orientation and repositioning sensors. Actuators, in contrast to sensors that only sense a phenomenon, typically affect the operating environment by opening a valve, emitting sound, or physically moving the sensor node.

2.2.7 Location Finding Unit

The optional location finding unit determines a sensor node's location. Depending on the application requirements and available resources, the location finding unit can be either a global positioning system (GPS)-based unit or an ad hoc positioning system (APS)-based unit. Although GPS is highly accurate, the GPS components are expensive and require direct line of sight between the sensor node and satellites. APS determines a sensor node's position with respect to defined landmarks, which may be other GPS-based sensor nodes [44]. A sensor node estimates the distance from itself to the landmark based on direct communication and the received communication signal strength. A sensor node that is two hops away from a landmark estimates its distance based on the distance estimate of a sensor node one hop away from a landmark via the message propagation. A sensor node with distance estimates to three or more landmarks can compute its own position via triangulation.
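The final step described above, computing a position from distance estimates to three landmarks, can be sketched as follows. The distance-based form of this computation is often called trilateration. This minimal example assumes a two-dimensional field, exactly three non-collinear landmarks, and noise-free distance estimates; a practical APS implementation would have to cope with noisy estimates, for instance by least-squares fitting over more than three landmarks. The function name and coordinates are illustrative.

```python
import math


def trilaterate(landmarks, distances):
    """Estimate (x, y) from three landmark positions and distances to them.

    Subtracting the first circle equation from the other two removes the
    quadratic terms and leaves a 2x2 linear system, solved by Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = landmarks
    d1, d2, d3 = distances

    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2

    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("landmarks are collinear; position is ambiguous")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


if __name__ == "__main__":
    landmarks = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]   # hypothetical layout
    true_pos = (3.0, 4.0)
    distances = [math.dist(true_pos, lm) for lm in landmarks]
    print(trilaterate(landmarks, distances))
```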

2.3 Compute-Intensive Tasks Motivating the Emergence of MCEWSNs

Many applications require embedded sensor nodes to perform various compute-intensive tasks that often exceed the computing capability of traditional single-core sensor nodes. These tasks include information fusion, encryption, network coding, and SDR, and they motivate the emergence of MCEWSNs. In this section, we discuss these compute-intensive tasks requiring multicore support in an embedded sensor node.

2.3.1 Information Fusion

A critical processing task in EWSNs is information fusion, which can benefit from a multicore processor in an embedded sensor node. EWSNs produce a large amount of data that must be processed, delivered, and assessed according to application objectives. Since the transmission bandwidth is limited, information fusion condenses the sensed data and transmits only the selected fused information to the sink node. Additionally, the data received from neighboring sensor nodes is often redundant and highly correlated, which warrants fusing the sensed data. Formally, information fusion encompasses theory, techniques, and tools created and applied to exploit the synergy in the information acquired from multiple sources (sensors, databases, etc.) such that the resulting fused data/information is considered qualitatively or quantitatively better in terms of accuracy or robustness than the acquired data from any single data source [45]. Data aggregation is an instance of information fusion in which the data from various sources is aggregated using summarization functions (e.g., minimum, maximum, and average) that reduce the volume of data being manipulated. Information fusion can reduce the amount of data traffic, filter noisy measurements, and make predictions and inferences about a monitored entity.
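Data aggregation with summarization functions can be sketched in a few lines. In the example below, each leaf node condenses its readings into a small summary (minimum, maximum, sum, and count), and a cluster head merges summaries without ever seeing the raw samples. The `Summary` type and function names are hypothetical, introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Summary:
    """Fixed-size digest of an arbitrary number of scalar readings."""
    minimum: float
    maximum: float
    total: float
    count: int

    @property
    def average(self) -> float:
        return self.total / self.count


def summarize(readings: Iterable[float]) -> Summary:
    """Condense raw readings at a leaf node into one Summary."""
    values = list(readings)
    if not values:
        raise ValueError("cannot summarize an empty set of readings")
    return Summary(min(values), max(values), sum(values), len(values))


def merge(a: Summary, b: Summary) -> Summary:
    """Combine two summaries at a cluster head; raw samples are not needed."""
    return Summary(min(a.minimum, b.minimum), max(a.maximum, b.maximum),
                   a.total + b.total, a.count + b.count)


if __name__ == "__main__":
    leaf_a = summarize([20.0, 22.0])           # e.g., temperature samples
    leaf_b = summarize([18.0, 24.0, 26.0])
    fused = merge(leaf_a, leaf_b)
    print(fused, fused.average)
```

Carrying the sum and count rather than the average itself keeps the merge exact, since averages of unequal-sized groups cannot be combined directly.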

Information fusion can be computationally expensive, especially for video sensing applications. Unlike scalar data, which can be combined using relatively simple mathematical manipulations such as average and summation, video data is vectorial and requires complex computations to fuse (e.g., edge detection, histogram formation, compression, filtering). Reducing transmission overhead via information fusion in video sensor networks requires a substantial increase in intermediate processing, which warrants the use of multicore cluster heads in MCEWSNs. Multicore cluster heads fuse data received from multiple sensor nodes to eliminate redundant transmission and provide fused information to the sink node with minimum data latency. Data latency is the sum of the delay involved in data transmission, routing, and information fusion/data aggregation [40]. Data latency is important in many applications, especially real-time applications, where freshness of data is an important factor. Multicore cluster heads can fuse data much faster than single-core sensor nodes, which justifies the use of multicore cluster heads in MCEWSNs with complex real-time computing requirements.

Omnibus Model for Information Fusion: The Omnibus model [46] guides information fusion for sensor-based devices. Figure 2.3 illustrates the Omnibus model with respect to our MCEWSN architecture, and we exemplify the model's usage by considering a surveillance application performing target tracking based on acoustic sensors [45]. The Observe stage, which can be carried out at single-core sensor nodes and/or multicore cluster heads, uses a filter (e.g., moving average filter) to reduce noise (Signal Processing) from acoustic sensor data provided by the embedded sensor nodes (Sensing). The Orientate stage, which is carried out at multicore cluster heads, uses the filtered acoustic data for range estimation (Feature Extraction) and estimates the target's location and trajectory (Pattern Processing). The Decide stage, which is carried out at multicore cluster heads and/or multicore sink nodes, classifies the sensed target (Context Processing) and determines whether the target represents a threat (Decision Making). If the target is a threat, the Act stage, which is carried out at the CAC, intercepts the target (Control) (e.g., with a missile) and activates available armaments (Resource Tasking).

Figure 2.3 Omnibus sensor information fusion model for an MCEWSN architecture
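As a small illustration of the Observe stage, the sketch below implements the moving average filter mentioned in the example. It keeps a running sum so that each new sample costs constant work, which suits a resource-constrained leaf node. The window length and sample values are arbitrary choices for the example.

```python
from collections import deque
from typing import Iterable, List


def moving_average(samples: Iterable[float], window: int) -> List[float]:
    """Smooth a sample stream with a sliding-window mean.

    Until the window fills, the mean is taken over the samples seen so far.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    buf = deque(maxlen=window)
    running = 0.0
    out = []
    for s in samples:
        if len(buf) == window:
            running -= buf[0]      # value about to be evicted by append()
        buf.append(s)
        running += s
        out.append(running / len(buf))
    return out


if __name__ == "__main__":
    noisy = [1, 2, 3, 4, 5]
    print(moving_average(noisy, window=3))
```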

2.3.2 Encryption

Security is an important issue in many sensor networking applications since sensors are deployed in open environments and are susceptible to malicious attacks. The sensed and/or aggregated data must be encrypted for secure transmission to the sink node. The two main practical issues involved in encryption are the size of the encrypted message and the encryption execution time. Privacy homomorphisms (PHs) are encryption functions suitable for MCEWSNs that allow a set of operations to be performed on encrypted data without knowing the decryption functions [40]. PHs use a positive integer $d$ for computing the secret key for encryption such that the size of the encrypted data increases by a factor of $d$ as compared to the original data. The security of the encrypted data increases with $d$, as does the execution time for encryption. For example, the execution time for encryption of one byte of data is 3481 clock cycles on a MICA2 mote when $d = 2$ and increases to 4277 clock cycles when $d = 4$. MICA2 motes cannot handle the computations for larger values of $d$ [40]; hence, applications requiring greater security require multicore sensor nodes and/or cluster heads to perform these computations.
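To make the idea of computing on encrypted data concrete, the following toy sketch implements an additive PH in the style of the Domingo-Ferrer construction. This is an assumption made for illustration: it is not necessarily the scheme benchmarked in [40]. The positive integer parameter is called `d` here, and the ciphertext has `d` components, which mirrors the factor-of-`d` size increase described above. The modulus `m`, its secret divisor `m_prime`, and the secret `r` are tiny hypothetical values that offer no real security.

```python
import math
import random

# Toy parameters (insecure, illustration only).
M_PRIME = 1009            # secret small divisor of M; plaintexts live in Z_M_PRIME
M = 1009 * 65537          # public modulus, chosen as a multiple of M_PRIME
R = 12345                 # secret value that must be invertible modulo M
assert math.gcd(R, M) == 1


def encrypt(a: int, d: int, rng: random.Random) -> list:
    """Split a into d random shares (mod M_PRIME), then mask share j with R^j."""
    shares = [rng.randrange(M_PRIME) for _ in range(d - 1)]
    shares.append((a - sum(shares)) % M_PRIME)
    return [(s * pow(R, j, M)) % M for j, s in enumerate(shares, start=1)]


def decrypt(cipher: list) -> int:
    """Unmask each component with R^-j, sum the shares, and reduce mod M_PRIME."""
    r_inv = pow(R, -1, M)   # modular inverse via pow() requires Python 3.8+
    total = sum(c * pow(r_inv, j, M) for j, c in enumerate(cipher, start=1)) % M
    return total % M_PRIME


def add_encrypted(c1: list, c2: list) -> list:
    """Component-wise addition; no key material is needed."""
    return [(x + y) % M for x, y in zip(c1, c2)]


if __name__ == "__main__":
    rng = random.Random(0)
    c1 = encrypt(20, d=4, rng=rng)
    c2 = encrypt(22, d=4, rng=rng)
    print(len(c1), decrypt(add_encrypted(c1, c2)))   # d components; sum of plaintexts
```

An aggregating node can therefore add encrypted readings without holding the key, while only the sink decrypts the total. The per-component modular exponentiations hint at why the cost grows with `d`.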

2.3.3 Network Coding

Network coding is a coding technique to enhance network throughput in multinodal environments, such as EWSNs. Despite the effectiveness of network coding for EWSNs, the excessive decoding cost associated with network coding hinders the technique's adoption in traditional EWSNs with constrained computing power [47]. Future MCEWSNs will enable adoption of sophisticated coding techniques, such as network coding, to increase network throughput.
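The simplest form of network coding is XOR coding at a relay, sketched below as an illustration. Two nodes exchange packets through a common relay; instead of forwarding each packet separately, the relay broadcasts their XOR, and each endpoint recovers the other's packet using the one it already holds. The scenario and names are hypothetical. Practical schemes such as random linear network coding combine many packets with random coefficients, and decoding then requires solving a linear system, which is where the decoding cost noted above arises.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bitwise XOR of two equal-length packets."""
    if len(a) != len(b):
        raise ValueError("packets must have equal length (pad if necessary)")
    return bytes(x ^ y for x, y in zip(a, b))


if __name__ == "__main__":
    pkt_a = b"temp=21C"      # packet node A wants to deliver to node B
    pkt_b = b"hum=40% "      # packet node B wants to deliver to node A

    # The relay receives both packets and broadcasts a single coded packet,
    # using one transmission where plain forwarding would need two.
    coded = xor_bytes(pkt_a, pkt_b)

    # Each endpoint decodes with the packet it already knows.
    print(xor_bytes(coded, pkt_a))   # node A recovers pkt_b
    print(xor_bytes(coded, pkt_b))   # node B recovers pkt_a
```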

2.3.4 Software-Defined Radio (SDR)

SDR is a radio in which some or all of the physical layer functions execute as software. The radio in existing EWSNs is hardware-based, which results in higher production costs and minimal flexibility in supporting multiple waveform standards [48]. MCEWSNs can realize SDR-based radio by enabling fast, parallel computation of signal processing operations needed in SDR (e.g., fast Fourier transform (FFT)). SDR-based MCEWSNs would enable multimode, multiband, and multifunctional radios that can be enhanced using software upgrades.
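The FFT is a good example of why such signal processing maps well onto multiple cores. In the radix-2 decimation-in-time formulation sketched below, the transforms of the even-indexed and odd-indexed samples are independent of each other, so they could in principle be assigned to different cores before the final combining step. The code is a plain single-threaded sketch for power-of-two lengths and is not tuned for an embedded target.

```python
import cmath
from typing import List, Sequence


def fft(x: Sequence[complex]) -> List[complex]:
    """Recursive radix-2 decimation-in-time FFT (length must be a power of two)."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a nonzero power of two")
    if n == 1:
        return [complex(x[0])]

    # These two half-size transforms share no data and could run in parallel.
    even = fft(x[0::2])
    odd = fft(x[1::2])

    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


if __name__ == "__main__":
    print(fft([1, 1, 1, 1]))   # all energy lands in the zero-frequency bin
```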

2.4 MCEWSN Application Domains

MCEWSNs are suitable for sensor networking application domains that require complex in-network information processing such as wireless video sensor networks (WVSNs), wireless multimedia sensor networks (WMSNs), satellite-based wireless sensor networks (SBWSNs), space shuttle sensor networks (3SNs), aerial–terrestrial hybrid sensor networks (ATHSNs), and fault-tolerant (FT) sensor networks. In this section, we discuss these application domains for MCEWSNs.

2.4.1 Wireless Video Sensor Networks (WVSNs)

WVSNs are WSNs in which smart cameras and/or image sensors are embedded in the sensor nodes. WVSNs emulate the compound eye found in certain arthropods. Although WVSNs are a subset of WMSNs, we discuss WVSNs separately to emphasize the WVSNs' stand-alone existence. WVSNs are suitable for applications in areas such as homeland security, battlefield monitoring, and mining. For example, video sensors deployed at airports, borders, and harbors provide a level of continuous and accurate monitoring and protection that is otherwise unattainable. We discuss the application of multicore embedded sensor nodes both for image- and video-centric WVSNs.

In image-centric WVSNs, multiple image/camera sensors observe a scene from multiple directions and are able to describe objects in their true 3D appearance by overcoming occlusion problems. Low-cost imaging sensors are readily available, such as CCD and CMOS imaging sensors from Kodak, and the Cyclops camera from the University of California at Los Angeles (UCLA) designed as an add-on for MICA sensor nodes [38]. Image preprocessing involves convolutions and data-dependent operations using a limited neighborhood of pixels. The signal processing algorithms for image processing in WVSNs typically exhibit a high degree of parallelism and are dominated by a few regular kernels (e.g., FFT) that are responsible for a large fraction of the execution time and energy consumption. Accelerating these kernels on multicore embedded sensor nodes would achieve significant speedup in execution time and reduction in energy consumption, and would help achieve real-time computational requirements for many applications in energy-constrained domains.

Video-centric WVSNs rely on multiple video streams from multiple embedded sensor nodes. Since sensor nodes can only serve low-resolution video streams given the sensor nodes' resource limitations, a single video stream alone does not contain enough information for vision analysis such as event detection and tracking; however, multiple sensor nodes can capture video streams from different angles and distances together providing enormous visual data [35]. Video encoders rely on intraframe compression techniques that reduce redundancy within one frame and interframe compression techniques (e.g., predictive coding) that exploit redundancy among subsequent frames [33]. Video coding techniques require complex algorithms that exceed the computing power of single-core embedded sensor nodes. The visual data from numerous sensor nodes can be combined to give high-resolution video streams; however, this processing requires multicore embedded sensor nodes and/or cluster heads.

2.4.2 Wireless Multimedia Sensor Networks (WMSNs)

A WMSN consists of wirelessly connected embedded sensor nodes that can retrieve multimedia content such as video and audio streams, still images, and scalar sensor data of the observed phenomenon. WMSNs target a large variety of distributed, wireless, streaming multimedia networking applications ranging from home surveillance to military and space applications. A multimedia sensor captures audio and image/video streams using an embedded microphone and a microcamera.

Various sensors in a WMSN coordinate closely to achieve application goals. For example, in a military application for target detection and tracking, acoustic and electromagnetic sensors can enable early detection of a target but may not provide adequate information about the target. Additional target details, such as type of vehicle, equipped armaments, and onboard personnel, are often required and gathering these details requires image sensors. Although the sensing ability in most sensors is isotropic and attenuates with distance, a distinct characteristic of video/image sensors is these sensors' directional sensing ranges. Recently, omnicameras have become available, which can provide complete coverage of the scene around a sensor node; however, applications are limited to close range scenarios to guarantee sufficient image resolution for moving objects [35]. To ensure full coverage of the sensor field, a set of directional cameras is required to capture enough information for activity detection. The image and video sensors' high sensing cost limits these sensors' continuous activation given constrained embedded sensor node resources. Hence, the image and video sensors in a WMSN require sophisticated control such that the image and video sensors are triggered only after a target is detected based on sensed data from other lower cost sensors, such as acoustic and electromagnetic.

Desirable WMSN characteristics include the ability to store, process in real time, correlate, and fuse multimedia data originating from heterogeneous sources [33]. Multimedia contents, especially video streams, require data rates that are orders of magnitude higher than those supported by traditional single-core embedded sensor nodes. To process multimedia data in real time and to reduce the wireless bandwidth demand, multicore embedded sensor nodes in the network are required. Multicore embedded sensor nodes facilitate in situ processing of voluminous information from various sensors, notifying the CAC only once an event is detected (e.g., target detection).

2.4.3 Satellite-Based Wireless Sensor Networks (SBWSN)

An SBWSN is a wireless communication sensing network composed of many satellites, each equipped with multifunctional sensors, long-range wireless communication modules, thrusters for attitude adjustment, and a computational unit (potentially multicore) to carry out processing of the sensed data. Traditional satellite missions are extremely expensive to design, build, launch, and operate, thereby motivating the aerospace industry to focus on distributed space missions, which would consist of multiple small, inexpensive, and distributed satellites coordinating to attain mission goals. SBWSNs would enable robust space missions by tolerating the failure of a single or a few satellites as compared to a large single satellite, where a single failure could compromise the success of a mission. SBWSNs can be used for a variety of missions, such as space weather monitoring, studying the impact of solar storms on Earth's magnetosphere and ionosphere, environmental monitoring (e.g., pollution, land, and ocean surface monitoring), and hazard prediction (e.g., flood and earthquake prediction).

Each SBWSN mission requires specific orbits and constellations to meet mission requirements, and GPS provides an essential tool for orbit determination and navigation. Typical constellations include string-of-pearls, flower constellation, and satellite cluster. In particular, the flower constellation provides stable orbit configurations, which are suitable for microsatellite (mass < 100 kg), nanosatellite (mass < 10 kg), and picosatellite (mass < 1 kg) missions. Important orbital factors to consider in SBWSN design are relative range (distance) and speed between satellites, the ISL access opportunity, and the ground-link access opportunity. The access time is the time for two satellites to communicate with each other and depends on the distance between the satellites (range). Satellites in an SBWSN can be used as an interferometer, which correlates different images acquired from slightly different angles/view points in order to get better resolution and more meaningful insights.

All of the satellites in an SBWSN collaborate to sense the desired phenomenon, communicate over long distances through beam-forming over an ISL, and maintain the network topology through self-organized mobility [49]. Studies indicate that IEEE 802.11b (Wi-Fi) and IEEE 802.16 (WiMax) can be used for intersatellite communications (communication between satellites) and IEEE 802.15.4 (ZigBee) can be used for intrasatellite (communication between sensor nodes within a satellite) communications [50]. We point out that the IEEE 802.11b protocol requires modifications for use in an ISL where the distance between satellites is more than 1 km since the IEEE 802.11b standard normally supports a communication range within 300 m. The feasibility of wireless protocols for intersatellite communication depends on the range, power requirements, medium access control (MAC) features, and support for mobility. The intrasatellite protocols are mainly selected based on power since the range is small within a satellite. A low duty cycle and the ability to put the radio to sleep are desirable features for intrasatellite communication protocols. For example, the MICA2DOT mote, which requires 24 mW of active power and 3 μW of standby power, supplied by a 3 V 750 mA h battery cell can last for 27,780 h ≈ 3 years and 2 months, while operating at a duty cycle of 0.1% (supported by ZigBee) [51].
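
The lifetime estimate for the duty-cycled mote can be reproduced with a short calculation. The sketch below is a minimal illustration rather than the calculation used in [51]; the function and variable names are hypothetical. It weights the active and standby figures by the 0.1% duty cycle and divides the result into the battery capacity. The quoted figure of about 27,780 h is obtained when the weighted average (about 0.027) is divided directly into the 750 mA h capacity, that is, when the two figures are treated as a draw in mA. Treating them strictly as power against the stored energy (3 V × 750 mA h = 2250 mW h) gives an estimate three times longer, so both readings are shown and labeled as assumptions.

```python
def average_draw(active, standby, duty_cycle):
    """Duty-cycle-weighted average of an active and a standby draw (same unit in and out)."""
    return duty_cycle * active + (1.0 - duty_cycle) * standby


def lifetime_hours(capacity, avg_draw):
    """Lifetime in hours: capacity (mA h or mW h) divided by average draw (mA or mW)."""
    return capacity / avg_draw


DUTY_CYCLE = 0.001     # 0.1% duty cycle, from the text
ACTIVE = 24.0          # active figure from the text
STANDBY = 0.003        # standby figure from the text (3 micro-units)
CAPACITY_MAH = 750.0   # battery capacity in mA h, from the text
VOLTAGE_V = 3.0        # cell voltage, from the text

avg = average_draw(ACTIVE, STANDBY, DUTY_CYCLE)

# Reading 1 (assumption): figures taken as current in mA, capacity in mA h.
hours_as_current = lifetime_hours(CAPACITY_MAH, avg)

# Reading 2 (assumption): figures taken as power in mW, energy = V * mA h in mW h.
hours_as_power = lifetime_hours(VOLTAGE_V * CAPACITY_MAH, avg)

print(f"average draw: {avg:.6f}")
print(f"lifetime, current reading: {hours_as_current:,.0f} h")
print(f"lifetime, power reading:   {hours_as_power:,.0f} h")
```

Either way, the standby term contributes only about a tenth of the average draw at this duty cycle, which is why the ability to put the radio to sleep matters so much for intrasatellite protocols.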

Since an individual satellite within an SBWSN may not have sufficient power to communicate with a ground station, a sink satellite in an SBWSN can communicate with a ground station, which is connected to the CAC. Ground communication in SBWSNs takes place in very-high-frequency (VHF) (30–300 MHz) and ultra-high-frequency (UHF) (300 MHz–3 GHz) bands. VHF frequencies pass through the ionosphere with effects, such as scintillation, fading, Faraday rotation, and multipath effects during intense solar cycles due to the reflection of the VHF signals. UHF bands, in which both S- and L-bands lie, can suffer severe disruptions during a solar storm. For a formation of several SNAP-1 nanosatellites, the typical downlink data rate is 38.4 or 76.8 kbps maximum [51], which necessitates multicore embedded sensor nodes in SBWSNs to perform in situ processing so that only event descriptions are sent to the CAC.
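
To make the downlink constraint concrete, the following sketch compares the ideal transfer time of a raw image with that of a short event description at the two quoted data rates. It is a rough illustration only: the payload sizes (a 1 MB image and a 64-byte event record) are hypothetical, and the calculation ignores protocol overhead, retransmissions, and limited ground-link access windows.

```python
def transmit_seconds(payload_bytes, rate_kbps):
    """Ideal transfer time in seconds for a payload at a given link rate in kbps."""
    return payload_bytes * 8 / (rate_kbps * 1000)


RATES_KBPS = (38.4, 76.8)      # downlink rates quoted in the text
RAW_IMAGE_BYTES = 1_000_000    # hypothetical 1 MB raw image
EVENT_BYTES = 64               # hypothetical event description

for rate in RATES_KBPS:
    raw_s = transmit_seconds(RAW_IMAGE_BYTES, rate)
    event_s = transmit_seconds(EVENT_BYTES, rate)
    print(f"{rate} kbps: raw image {raw_s:.1f} s, event description {event_s * 1000:.1f} ms")
```

Under these assumptions a single raw image occupies the link for minutes, whereas an event description takes milliseconds, which is the motivation for processing data on the satellite before transmission.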

2.4.4 Space Shuttle Sensor Networks (3SN)

A 3SN corresponds to a network of sensors aimed to monitor a space shuttle during preflight, ascent, on-orbit, and reentry phases. Battery-operated embedded wireless sensors can be easily bonded to the space shuttle's structure and enable real-time monitoring of temperature, triaxial vibration, strain, pressure, tilt, chemical, and ultrasound data. MCEWSNs would enable real-time monitoring of space vehicles not possible by ground-based sensing systems. For example, the Columbia space shuttle accident was caused by damage done when foam shielding dislodged from the external fuel tank during the shuttle's launch, which damaged the wing's leading edge panels [52]. The vehicle lacked onboard sensors that could have enabled ground personnel to determine the extent and location of the damage. Ground-based cameras captured images of the impact but were not able to reliably characterize the location and severity of the impact and resulting damage.

MCEWSNs for space shuttles, currently under development, would be used for space shuttle main engine (SSME) crack investigation, space shuttle environmental control life support system (ECLSS) oxygen and nitrogen flexhose analysis, and wing leading edge impact detection. Since the amount of data acquired during the 10-min ascent period is nearly 100 MB, the time to download all data, even for a single event, via the radio frequency (RF) link is prohibitively long. Hence, information fusion algorithms are required in 3SNs to minimize the quantity and increase the quality of the data being transmitted via the RF link. Furthermore, MCEWSNs would enable a 10× reduction in the installation costs for the shuttle as compared to the sensing systems based on traditional wired approaches [52].

2.4.5 Aerial–Terrestrial Hybrid Sensor Networks (ATHSNs)

ATHSNs, which consist of ground sensors and aerial sensors, integrate terrestrial sensor networks with aerial/space sensor networks. To connect remote terrestrial EWSNs to a CAC located far away in urban areas, ATHSNs can include a satellite backbone network. The satellite backbone network is widely available at remote locations and provides a reliable and broadband communication network [53, 54]. Various satellite communication choices are possible, such as WildBlue, HughesNet, and geostationary operational environmental satellite (GOES) system of National Aeronautics and Space Administration (NASA). However, a satellite's uplink and downlink bandwidth is limited and requires preprocessing and compression of sensed data, especially multimedia data such as image and video streams. Multicore embedded sensor nodes are suitable for ATHSNs, and are capable of carrying out the processing and compression of high-quality image and video streams for transmission to and from a satellite backbone network.

Aerial networks in ATHSNs may consist of unmanned aerial vehicles (UAVs) and satellites. For example, consider an ATHSN in which UAVs contain embedded image and video sensors such that only the image scenes that are of significant interest from a military strategy perspective are sensed in greater detail. The working of ATHSNs consisting of UAVs and satellites can be described concisely in the following seven steps [53]. (1) Ground sensors detect the presence of a hostile target in the monitored field and store events in memory. (2) The satellite periodically contacts multicore cluster heads in the terrestrial EWSN to download updates about the target's presence. (3) Satellites contact UAVs to acquire image data about the scene where the intrusion is detected. (4) UAVs gather image data through the embedded image sensors. (5) The embedded multicore sensors in UAVs process and compress the image data for transmission to the satellite backbone network in a bandwidth-efficient manner. (6) The satellite backbone network relays the processed information received from the UAVs to the CAC. (7) The satellite backbone network relays the commands (e.g., launching the UAVs' arsenals) from the CAC to the UAVs.

Ye et al. [54] have implemented an ATHSN prototype for an ecological study using temperature, humidity, photosynthetically active radiation (PAR), wind speed, and precipitation sensors. The prototype consists of a small satellite dish and a communication modem for integrating a terrestrial EWSN with the WildBlue satellite backbone network, which provides commercial service. The prototype uses Intel's Stargate processor as the sink node, which provides access control and manages the use of the satellite link.

The transformational satellite (TSAT) system is a future generation satellite system that is designed for military applications by NASA, the U.S. Department of Defense (DoD), and the Intelligence Community (IC) [53]. The TSAT system is a constellation of five satellites, placed in geostationary orbit, that constitute a high-bandwidth satellite backbone network, which allows terrestrial units to access optical and radar imagery from UAVs and satellites in real time. TSAT provides broadband, reliable, worldwide, and secure transmission of data. TSAT supports RF communication links with data rates up to 45 Mbps and laser communication links with data rates up to 10–100 Gbps [53].

2.4.6 Fault-Tolerant (FT) Sensor Networks

The sensor nodes in an EWSN are typically deployed in harsh and unattended environments, which makes fault tolerance (FT) an important consideration in EWSN design, particularly for space-based WSNs. For example, the temperature of aerospace vehicles varies from cryogenic to extremely high temperature, and pressure varies from vacuum to very high pressure. Additionally, shock and vibration levels during launch can cause component failures. Furthermore, high levels of ionizing radiation require electronics to be FT if not radiation-hardened (rad-hard). Multicore embedded sensors can provide hardware-based (e.g., triple modular redundancy (TMR) or self-checking pairs (SCP)) as well as software-based (e.g., algorithm-based fault tolerance (ABFT)) FT mechanisms for applications requiring high reliability. Computations, such as preprocessing and data fusion, can be replicated on multiple cores so that if radiation corrupts processing on one core, processing on other cores would still enable reliable computation of results.
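
The replication idea can be sketched as a triple modular redundancy voter. The example below is a minimal illustration under stated assumptions: `fuse` is a hypothetical stand-in for a preprocessing or data-fusion step, `faulty_fuse` simulates a radiation-induced bit flip in one replica, and the three replicas are simply called in sequence here, whereas on a multicore node each would run on its own core.

```python
from collections import Counter


def tmr_vote(results):
    """Return the majority value among three replica results, or raise if none agree."""
    if len(results) != 3:
        raise ValueError("TMR expects exactly three replica results")
    value, count = Counter(results).most_common(1)[0]
    if count < 2:
        raise RuntimeError("no majority: all replicas disagree")
    return value


def fuse(samples):
    """Hypothetical data-fusion step: integer mean of the sensor samples."""
    return sum(samples) // len(samples)


def faulty_fuse(samples):
    """Same computation with a simulated single-bit upset in the result."""
    return fuse(samples) ^ (1 << 3)


samples = [20, 22, 21, 23]

# One replica is corrupted; the other two still agree.
replica_results = [fuse(samples), faulty_fuse(samples), fuse(samples)]
voted = tmr_vote(replica_results)
print(f"replica results: {replica_results}, voted result: {voted}")
```

A voter of this kind masks a fault in any single replica but not simultaneous faults in two, which is the usual trade-off of TMR against its threefold computation cost.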

2.5 Multicore Embedded Sensor Nodes

Several initiatives toward multicore embedded sensor nodes have been undertaken by academia and industry for various real-time applications. In this section, we describe several state-of-the-art multicore embedded sensor node prototypes.

2.5.1 InstraNode

InstraNode is a dual-core sensor node for real-time health monitoring of civil structures, such as highway bridges and skyscrapers. InstraNode is equipped with a 4000 mA h lithium-ion battery, three accelerometers, a gyroscope, and an IEEE 802.11b (Wi-Fi) card for communication with other nodes. One low-power processor core in InstraNode runs at 3 V and 4 MHz and is dedicated to sampling data from sensors, whereas the other faster, high-power processor core runs at 4.3 V and 40 MHz and is responsible for networking tasks, such as transmission/reception of data and execution of a routing algorithm. Furthermore, InstraNode possesses multimodal operation capabilities such as wired/wireless and battery-powered/AC-adaptor powered options. Experiments indicate that the InstraNode outperforms single-core sensor nodes in terms of power efficiency and network performance [55].

2.5.2 Mars Rover Prototype Mote

Etchison et al. [56] have proposed a high-performance EWSN for the Mars Rover, which consists of dual-core mobile sensor nodes and a wireless cluster consisting of multiple processors to process image data gathered from the sensor nodes and to make decisions based on gathered information. The prototype mote consists of a Micro ATX motherboard with Intel's dual-core Atom processor, 2 GB of random-access memory (RAM), and is powered by a 12 V/5 A DC power supply for lab testing. Each mote performs data acquisition, processing, and transmission.

2.5.3 Satellite-Based Sensor Node (SBSN)

Vladimirova et al. [57] have developed a system-on-chip (SoC) satellite-based sensor node (SBSN). The SBSN prototype contains a SPARC V8 LEON3 soft processor core, which allows configuration in an SMP architecture [58]. The LEON3 processor core runs software applications and interfaces with the upper layers of the communication stack using the IEEE 802.11 protocol. The SBSN prototype uses a number of intellectual property (IP) cores, such as a hardware-accelerated Wi-Fi MAC, a transceiver core, and a Java coprocessor. The Java coprocessor enables distributed computing and Internet protocol (IP)-based networking functions in SBWSNs. The intersatellite communication module (ISCM) in the SBSN prototype adheres to IEEE 802.11 and CubeSat design specifications. The ISCM supports ground communication links and ISLs at variable data rates and configurable waveforms to adapt to channel conditions. The ISCM incorporates S-band (2.4 GHz) and a 434/144 MHz radio frontend interfaced to a single reconfigurable modem. The ISCM uses a high-end AD9861 ADC/digital-to-analog converter (DAC) for the 2.4 GHz radio frontend for a Maxim2830 radio and a low-end AD7731 for the 434/144 MHz frontend for an Alinco DJC-7E radio. Additionally, ISCM incorporates current and temperature sensors and a 16-bit microcontroller for housekeeping purposes.

2.5.4 Multi-CPU-Based Sensor Node Prototype

Ohara et al. [59] have developed a prototype for an embedded sensor node using three PIC18 central processing units (CPUs). The prototype is supplied by a configurable voltage stabilized power supply, but the same voltage is supplied to all CPUs. The prototype allowed each CPU's frequency to be statically changed by changing a corresponding ceramic resonator. Experiments revealed that the multi-CPU sensor node prototype consumed 76% less power as compared to a single-core sensor node for benchmarks that involved sampling, root mean square calculation, and preprocessing samples for transmission.

2.5.5 Smart Camera Mote

Kleihorst et al. [38] developed a smart camera mote, which consists of four basic components: color image sensors, an IC3D SIMD processor (a member of the Philips' Xetal family of SIMD processors) for low-level image processing, a general-purpose processor for intermediate and high-level processing and control, and a communication module. Both of the processors are coupled with a dual-port RAM that enables these processors to work in a shared workspace. The IC3D SIMD processor consists of a linear array of 320 RISC processors. The peak pixel performance of the IC3D processor is approximately 50 giga operations per second (GOPS). Despite high pixel performance, the IC3D processor is an inherently low-power processor, which makes the processor suitable for multicore embedded sensor nodes. The power consumption of the IC3D processor for typical applications, such as feature finding or face detection, is below 100 mW in active processing modes.

2.6 Research Challenges and Future Research Directions

Despite a few initiatives toward MCEWSNs, the domain is still in its infancy, and several challenges must be addressed to facilitate ubiquitous deployment of MCEWSNs. In this section, we discuss several research challenges and future research directions for MCEWSNs.

Application Parallelization: Parallelization of existing serial applications and algorithms can be challenging, considering the limited number of parallel programmers as compared to serial programmers. Parallel applications with limited scalability present challenges for efficient utilization of multicore and future manycore embedded sensor nodes. Furthermore, synchronization between different cores by the use of barriers and locks limits the attainable speedup from parallel applications. A poor speedup due to limited scalability as the number of cores increases can diminish the energy and performance benefits attained by parallelization of sensor applications. To minimize potential performance degradation for parallel applications with limited scalability, designers can restrict these applications to a limited number of cores while turning off remaining cores to save power or utilizing other cores by multiprogramming other sensor applications on those cores. Consequently, existing operating systems for embedded sensor nodes (e.g., TinyOS [60], MANTIS [61]) would require updating their schedulers for efficient scheduling of multiprogrammed workloads and would also require some middleware support (e.g., OpenMP) to support multithreading of parallel applications.
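
The effect of limited scalability can be illustrated with Amdahl's law, a standard model that the discussion above does not name but that captures the same point: the serial portion of an application (including time spent waiting at barriers and locks) bounds the attainable speedup. The sketch below is illustrative only; the 90% parallel fraction and the core counts are assumed values, not measurements from any sensor application.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Speedup predicted by Amdahl's law for a given parallel fraction and core count."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


def parallel_efficiency(parallel_fraction, cores):
    """Speedup per core; low values suggest the extra cores are poorly utilized."""
    return amdahl_speedup(parallel_fraction, cores) / cores


PARALLEL_FRACTION = 0.9  # assumed: 90% of the work parallelizes

for cores in (1, 2, 4, 8, 16):
    speedup = amdahl_speedup(PARALLEL_FRACTION, cores)
    efficiency = parallel_efficiency(PARALLEL_FRACTION, cores)
    print(f"{cores:2d} cores: speedup {speedup:.2f}, efficiency {efficiency:.2f}")
```

When efficiency falls off in this way, restricting the application to fewer cores and powering down or multiprogramming the rest, as suggested above, can be the better energy trade-off.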

Signal Processing and Computer Vision: Advances in sensor technology have led to a dramatic increase in the amount of data sensed, which is fueled by both the reduced cost of sensors and increased deployment over a large class of applications. This sensed data deluge problem is exacerbated in MCEWSNs and places immense stress on our ability to process, store, and obtain meaningful information from the data. The fundamental reason behind the data deluge problem comes from sensor designs that are based on the Nyquist sampling theorem [62], which has been the dogma in traditional signal processing. However, as we build sensors and sensing platforms with increasing capabilities (e.g., MCEWSNs involving hyper-spectral imaging), designs based on Nyquist sampling are prohibitively costly because of high-resolution sensors and extremely fast data processing requirements. The failure of Nyquist sampling lies in its inability to exploit redundant structures in signals. This redundancy and compressibility in signals forms the basis of Fourier and wavelet transforms. Research in sensing and processing systems that exploit the redundant structures in signals includes sparse models, union-of-subspace models, and low-dimensional manifold models. The data deluge problem in MCEWSNs can be addressed in three fundamental ways: (1) parsimonious signal representations that facilitate efficient processing of visual signals; (2) novel compressive and computational imaging systems for sensing of data; and (3) scalable algorithms for large-scale machine learning systems. These novel techniques to address the data deluge problem in MCEWSNs require further research.

Another related research avenue for MCEWSNs is compressive sensing for high-dimensional visual signals, which requires sensors with capabilities that go beyond sensing 2D images. Examples of these novel sensors include the Lytro camera for sensing light fields [63]; the Kinect system that provides scene depth [64]; and flexible camera-arrays that provide unique trade-offs in the spatial, temporal, and angular resolutions of the incident light. Design of novel models, sensors, and technologies is imperative to better characterize objects with complex visual properties.

Furthermore, distilling information from a large number of low-resolution video streams obtained from multiple video sensors requires novel algorithms since current computer vision and signal processing algorithms can only analyze a few high-resolution images.

Reconfigurability: Reconfigurability in MCEWSNs is an important research avenue that would allow the network to adapt to new requirements by integrating code upgrades (e.g., a more efficient algorithm for video compression may be discovered after deployment). Mobility and self-adaptability of embedded sensor nodes require further research to obtain the desired view of the sensor field (e.g., an image sensor facing downward toward the earth may not be desirable).

Energy Harvesting: Considering that the battery energy is the most critical resource constraint for sensor nodes in MCEWSNs, research and development in energy-efficient batteries and energy-harvesting systems would be beneficial for MCEWSNs.

Near-Threshold Computing (NTC): NTC refers to using a supply voltage ($V_{DD}$) that is close to a single transistor's threshold voltage $V_{T}$ (generally $V_{DD}$ is slightly above $V_{T}$ in near-threshold operation, whereas $V_{DD}$ is below $V_{T}$ for sub-threshold operation). Lowering the supply voltage reduces power consumption and increases energy efficiency by lowering the energy consumed per operation. With the advent of MCEWSNs leveraging manycore chips, sub- or near-threshold designs become a natural fit for these highly parallel architectures. Considering the stringent power constraints of the manycore chips leveraged in MCEWSNs, sub- or near-threshold designs may be the only practical way to power up all of the cores in these chips [65]. Hence, NTC provides a promising solution for the dark silicon problem (transistor underutilization) in manycore architectures. However, widespread adoption of NTC in MCEWSNs for reduced power consumption requires addressing NTC challenges such as increased process, voltage, and temperature variations, sub-threshold leakage power, and soft error rates.
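
The energy argument can be made concrete with the standard first-order model of CMOS switching energy, which is not taken from the text and is given here only as a sketch. Writing the supply voltage as $V_{DD}$, the switched capacitance as $C$, and the activity factor as $\alpha$ (the latter two symbols and the example voltages are assumptions for illustration), the dynamic energy per operation scales quadratically with the supply voltage:

```latex
% First-order dynamic (switching) energy per operation
E_{\mathrm{dyn}} = \alpha \, C \, V_{DD}^{2}

% Ratio when scaling from an assumed nominal 1.0 V to an assumed near-threshold 0.5 V
\frac{E_{\mathrm{dyn}}(0.5\,\mathrm{V})}{E_{\mathrm{dyn}}(1.0\,\mathrm{V})}
  = \left(\frac{0.5}{1.0}\right)^{2} = 0.25
```

Under this model, halving the supply voltage cuts switching energy per operation to one quarter. The model leaves out leakage energy and the slower circuit speed at low voltage, both of which grow in importance near the threshold and relate to the NTC challenges listed above.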

Heterogeneous Architectures: MCEWSNs would benefit from parallel computer architecture research. Specifically, a heterogeneous manycore architecture that could leverage both super-threshold computing and NTC to meet performance and energy requirements of sensing applications might provide a promising solution for MCEWSNs. The heterogeneous architecture can integrate super-threshold (nominal voltage) SMP cores and near-threshold SIMD cores [66]. Research indicates that a combination of NTC and parallel SIMD computations achieves excellent energy efficiency for easy-to-parallelize applications [67]. With this heterogeneous architecture, sensing applications' tasks with less parallelism can be scheduled to high-power SMP cores, whereas tasks with abundant parallelism will benefit from scheduling on low-power near-threshold SIMD cores. Hence, research in heterogeneous architectures would enable a single architecture to serve a broad range of sensing applications with varying degrees of parallelism.

Transistor Technology: With ongoing technology scaling, conventional planar CMOS devices suffer from increasing susceptibility to numerous variations, such as circuit performance, short channel effects, delay, or leakage. Research in novel transistor technologies that improve energy efficiency, provide better resistance to process variation, and are amenable to nanoscale fabrication would benefit sensor nodes in MCEWSNs. One of the promising transistor technologies for future process nodes (22 nm and below) is FinFET, in which the channel is a slab (fin) of undoped silicon perpendicular to the substrate [68]. The increased electrostatic control of the FinFET gate over the channel enables high on-current to off-current ratio, which improves carrier mobility, and is promising for near-threshold low-power designs. Other advantages of FinFET over planar CMOS include reduced random dopant fluctuations, lower parasitic junction capacitance, and suppression of short channel effects, leakage currents, and parametric variations. However, the widespread transition to FinFET requires further research in prediction models for performance, energy, and process variation for this transistor technology as well as a complete overhaul of the current fabrication process.

2.7 Chapter Summary

In this chapter, we proposed an architecture for heterogeneous hierarchical MCEWSNs. Compute-intensive tasks, such as information fusion, encryption, network coding, and SDR, will benefit in particular from the increased computational power offered by multicore embedded sensor nodes. Many wireless sensor networking application domains, such as WVSNs, WMSNs, satellite-based sensor networks, 3SNs, ATHSNs, and fault-tolerant sensor networks, can benefit from MCEWSNs. Perceiving the potential benefits of MCEWSNs, several initiatives have been undertaken in both academia and industry to develop multicore embedded sensor nodes, such as InstraNode, SBSNs, and smart camera motes. We further highlighted the research challenges and future research avenues for MCEWSNs. Specifically, MCEWSNs would benefit from advancements in application parallelization, signal processing, computer vision, reconfigurability, energy harvesting, NTC, heterogeneous architectures, and transistor technology.