Chapter 25

Service Transition Processes: Release and Deployment Management and Service Validation and Testing

THE FOLLOWING ITIL INTERMEDIATE EXAM OBJECTIVES ARE DISCUSSED IN THIS CHAPTER:

- ✓ Release and deployment management and service validation and testing are discussed in terms of

- Purpose

- Objectives

- Scope

- Value

- Policies

- Principles and basic concepts

- Process activities, methods, and techniques

- Triggers, inputs, outputs, and interfaces

- Critical success factors and key performance indicators

- Challenges

- Risks

The syllabus covers the managerial and supervisory aspects of service transition processes. It excludes the day-to-day operation of each process and the details of the process activities, methods, and techniques as well as its information management. More detailed process operation guidance is covered in the intermediate service capability courses. More details on the courses included in the ITIL exam framework can be found at www.axelos.com/qualifications/itil-qualifications. Each process is considered from the management perspective. That means that at the end of this section of the book, you should understand those aspects that would be required to understand each process and its interfaces, oversee its implementation, and judge its effectiveness and efficiency.

Release and Deployment Management

A release may include many different types of service assets and involve many people. Release and deployment management ensures that responsibilities for handover and acceptance of a release are defined and understood. It ensures that the requisite planning takes place, and controls the release of the new or changed CIs.

Purpose

The purpose of the release and deployment management process is to plan, schedule, and control the build, test, and deployment of releases and to deliver new functionality required by the business while protecting the integrity of existing services. This is an important process because a badly planned deployment can cause disruption to the business and fail to deliver the benefits of the release that is being deployed.

Objectives

The main objective of release and deployment management is to ensure that the purpose is achieved by delivering plans and managing the release effectively to meet the agreed standards. It aims to ensure that release packages are built, installed, tested, and deployed efficiently and on schedule and that the new or changed service delivers the agreed benefits. An important aspect of release and deployment is that it also seeks to protect the current services by minimizing any adverse impact of the release on the existing services. It aims to satisfy the different needs of customers, users, and service management staff.

The objectives of release and deployment management include defining and agreeing on plans for release and deployment with customers and stakeholders and then creating and testing release packages of compatible CIs. Another objective is to maintain the integrity of the release package and its components throughout transition. All release packages should be stored in and deployed from the DML following an established plan. They should also be recorded accurately in the CMS.

Other objectives of release and deployment management include ensuring that release packages can be tracked, installed, tested, verified, and/or uninstalled or backed out and that organization and stakeholder change is managed. The new or changed service must be able to deliver the agreed utility and warranty.

The process ensures that any deviations, risks, and issues are recorded and managed by taking the necessary corrective action. Customers and users are able to use the service to support their business activities because release and deployment ensures that the necessary knowledge transfer takes place as part of the transition. It also ensures that the required knowledge transfer takes place to enable the service operation functions to deliver, support, and maintain the service according to the required warranties and service levels.

Scope

The scope of release and deployment management includes the processes, systems, and functions to package, build, test, and deploy a release into production and establish the service specified in the service design package before final handover to service operations.

It also includes all the necessary CIs to implement a release. These may be physical, such as a server or network, or virtual, such as a virtual server or virtual storage. Other CIs included within the scope of this process are the applications and software. As we said previously, training for users and IT staff is also included, as are all contracts and agreements related to the service.

You should note that release and deployment is responsible for ensuring that appropriate testing takes place; the actual testing is carried out as part of the service validation and testing process. Release and deployment management also does not authorize changes. At various stages in the lifecycle of a release, it requires authorization from change management to move to the next stage.

Value to the Business

A well-planned approach to releasing and deploying new or changed services can make a significant difference in the overall costs to the organization. The release and deployment process delivers changes faster and at optimum cost and risk while providing assurance that the new or changed service supports the business goals. It ensures improved consistency in the implementation approach across teams and contributes to meeting audit requirements for traceability.

Poorly designed or managed release and deployment may force unnecessary expenditure and waste time.

Policies, Principles, and Basic Concepts

Release and deployment management policies should be in place. The correct policies will help ensure the correct balance between cost of the service, the stability of the service, and its ability to change to meet changing circumstances.

The relative importance of these different elements will vary between organizations. For some services, stability is crucial; for other services, the need to implement releases in order to support rapidly changing business requirements is the most important, and the business is willing to sacrifice some stability to achieve this flexibility. Deciding on the correct balance for an organization is a business decision; release and deployment management policies should therefore support the overall objectives of the business.

These policies can be applicable to all services provided or apply to an individual service, which may have a different desired balance between stability, flexibility, and cost.

Release Unit

The term release unit describes the portion of a service or IT infrastructure that is normally released as a single entity according to the organization’s release policy. The unit may vary, depending on the different type(s) of service asset or service component being released, such as software or hardware.

In Figure 25.1, you can see a simplified example showing an IT service made up of systems and service assets, which are in turn made up of service components. The actual components to be released on a specific occasion may include one or more release units and are grouped together into a release package for that specific release. Each service asset will have an appropriate release-unit level. The release unit for business-critical applications may be the complete application in order to ensure comprehensive testing, whereas the release unit for a website might be at the page level.

Figure 25.1 Simplified example of release units for an IT service

Copyright © AXELOS Limited 2010. All rights reserved. Material is reproduced under license from AXELOS.

Ease of deployment is a major deciding factor when specifying release units. This will depend on the complexity of the interfaces between the release unit and the rest of the services and IT infrastructure.

Releases should be uniquely numbered, and the numbering should be meaningful, referencing the CIs that the release represents and a version number, for example, Payroll-System v.1.1.1 or Payroll-System v.2.0. It is common for the first number to change when additional functionality is being released, whereas the later numbers show an update to an existing release.
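The numbering convention just described can be sketched in a few lines of Python. This is only an illustration: the function and class names are hypothetical, and the pattern assumes identifiers written exactly like the Payroll-System examples (a name, a space, then "v." and dot-separated numbers). Your own release policy may define a different format.

```python
import re
from typing import NamedTuple, Tuple


class ReleaseId(NamedTuple):
    name: str                 # the CI or service the release refers to
    version: Tuple[int, ...]  # for example (1, 1, 1) or (2, 0)


# Assumed format: "<name> v.<n>[.<n>...]", as in "Payroll-System v.1.1.1".
_PATTERN = re.compile(r"^(?P<name>.+?) v\.(?P<version>\d+(?:\.\d+)*)$")


def parse_release_id(text: str) -> ReleaseId:
    """Split a release identifier into its name and numeric version parts."""
    match = _PATTERN.match(text.strip())
    if match is None:
        raise ValueError(f"not a recognized release identifier: {text!r}")
    version = tuple(int(part) for part in match.group("version").split("."))
    return ReleaseId(match.group("name"), version)


def adds_functionality(old: ReleaseId, new: ReleaseId) -> bool:
    """True when the first number has increased for the same named release.

    Under the common convention described in the text, this signals
    additional functionality rather than an update to an existing release.
    """
    return old.name == new.name and new.version[0] > old.version[0]
```

Comparing Payroll-System v.1.1.1 with Payroll-System v.2.0 in this way reports a change in the first number, whereas v.1.1.1 to v.1.1.2 does not.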

Release Package

A release package is a set of configuration items that will be built, tested, and deployed together as a single release. It may be a single release unit or a structured set of release units such as the one shown in Figure 25.1. Release packages are useful when there are dependencies between CIs, such as when a new version of an application requires an operating system upgrade, which in turn requires a hardware change. In some cases, the release package may include documentation and procedures.
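To see why dependencies matter when a package is assembled, consider the example above: the application needs the operating system upgrade, which in turn needs the hardware change. The following sketch uses Python's standard `graphlib` module (Python 3.9 or later) to work out an order in which the release units could be built and deployed. The CI names are invented for illustration.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each release unit maps to the release units it depends on.
# The names are hypothetical; they mirror the example in the text.
DEPENDENCIES: Dict[str, Set[str]] = {
    "payroll-application": {"operating-system-upgrade"},
    "operating-system-upgrade": {"hardware-change"},
    "hardware-change": set(),
}


def build_order(dependencies: Dict[str, Set[str]]) -> List[str]:
    """Return the release units so that each one follows its prerequisites."""
    return list(TopologicalSorter(dependencies).static_order())


print(build_order(DEPENDENCIES))
# → ['hardware-change', 'operating-system-upgrade', 'payroll-application']
```

A circular dependency would raise `graphlib.CycleError`, which is a useful early warning that the release units need to be regrouped.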

Figure 25.2 shows how the architectural elements of a service may be changed from the current baseline to the new baseline with releases at each level. The release teams need to understand the relevant architecture to plan, package, build, and test a release to support the new or changed service. For example, the technology infrastructure needs to be ready—with service operation functions prepared to support it with new or changed procedures—before an application is installed.

Figure 25.2 Architecture elements to be built and tested

Copyright © AXELOS Limited 2010. All rights reserved. Material is reproduced under license from AXELOS.

The example in Figure 25.3 shows an application with its user documentation and a release unit for each technology platform. The customer service asset is supported by two supporting services: SSA for the infrastructure service and SSB for the application service. These release units will contain information about the service, its utilities and warranties, and release documentation.

Figure 25.3 Example of a release package

Copyright © AXELOS Limited 2010. All rights reserved. Material is reproduced under license from AXELOS.

Deployment Options and Considerations

Service design will define the adopted approach to transitioning; it will be documented in the SDP. The choices include a big bang versus a phased approach, using a push or pull deployment method, and automating the deployment or carrying it out manually. We are going to look at each of these now.

A big bang approach deploys a release to all user areas in one operation. This can be useful if it is important that everyone is working on the same version, but it will have a significant impact should the deployment fail. A phased approach deploys the release to different parts of the user base in a scheduled plan. This is less risky but takes longer, and it will mean that there will be more than one version running concurrently.

Using a push mechanism to deploy the release means that the components are deployed from the center and the recipient cannot “opt out” of receiving them. A pull mechanism allows users to choose if and when they want to download the new release.

An automated approach to deployment will help to ensure repeatability and consistency and may be the only practical option if the deployment is to hundreds or thousands of CIs. However, the automated approach requires the distribution tools to be set up, and this may not always be justified. If a manual mechanism is used, the greater risk of errors or inefficiency should be considered.
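The difference between the big bang and phased approaches can be made concrete with a small sketch. The function below is hypothetical and deliberately simple: it takes the list of user groups and returns the deployment waves, one wave for a big bang and several for a phased rollout. The `wave_size` parameter is an assumption introduced for the example.

```python
from typing import List, Sequence


def plan_waves(user_groups: Sequence[str], approach: str,
               wave_size: int = 1) -> List[List[str]]:
    """Group user areas into deployment waves.

    "big_bang" deploys to every user area in one operation.
    "phased" deploys to wave_size user areas at a time, in the order given.
    """
    if approach == "big_bang":
        return [list(user_groups)]
    if approach == "phased":
        if wave_size < 1:
            raise ValueError("wave_size must be at least 1")
        return [list(user_groups[i:i + wave_size])
                for i in range(0, len(user_groups), wave_size)]
    raise ValueError(f"unknown approach: {approach!r}")


groups = ["finance", "sales", "warehouse"]
print(plan_waves(groups, "big_bang"))             # one wave for everyone
print(plan_waves(groups, "phased", wave_size=2))  # two waves
```

With a phased plan, every wave after the first runs while earlier groups are already on the new version, which is the concurrency of versions mentioned above.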

Release and Deployment Models

As with other processes, the use of predefined models in release and deployment can be a useful way of achieving consistency in approach, no matter how many people are involved. The release and deployment models would contain a series of preagreed and predefined steps or activities to provide structure and consistency to the delivery of the process. Also included would be the exit and entry criteria for each stage, definitions of roles and responsibilities, controlled build and test environments, and baselines and templates. All the supporting systems and procedures would be defined in the model with the documented handover activities.

Process Activities, Methods, and Techniques

There are four phases to release and deployment management, shown in Figure 25.4. Let’s look at each one in turn:

- The first phase is release and deployment planning. During this phase, the plans for creating and deploying the release are drawn up. This phase starts when change management gives the authorization to begin planning for the release and ends with change management authorization to build the release.

- This then marks the start of the second phase, release build and test. During this phase, the release package is built, tested, and checked into the DML. This phase ends with change management authorization for the baselined release package to be checked into the DML by service asset and configuration management. This phase happens only once for each release. Once the release package is in the DML, it is ready to be deployed to the target environments when authorized by change management.

- During the deployment phase, the release package is deployed to the live environment. This phase ends with the handover to the service operation functions and early life support. There may be many separate deployment phases for each release, depending on the planned deployment options.

- The final phase is the review and close phase. During this phase, experience and feedback are captured, performance targets and achievements are reviewed, and lessons are learned. These activities may result in additions to the CSI register. Note how it is change management that controls when the release takes place, with multiple trigger points for the release and deployment management activity. This does not require a separate RFC at each stage.

Figure 25.4 Phases of release and deployment management

Copyright © AXELOS Limited 2010. All rights reserved. Material is reproduced under license from AXELOS.
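The way change management controls movement between the phases can be pictured as a set of gates. The sketch below is a simplified model, not an ITIL-defined mechanism: the authorization labels are hypothetical, only one gate is modeled per phase, and no gate is modeled for review and close because the text does not describe one.

```python
from enum import Enum
from typing import List, Optional, Set


class Phase(Enum):
    PLANNING = "release and deployment planning"
    BUILD_AND_TEST = "release build and test"
    DEPLOYMENT = "deployment"
    REVIEW_AND_CLOSE = "review and close"


# Authorization that change management must grant before a phase starts.
# The label strings are hypothetical; None means no gate is modeled here.
REQUIRED_AUTHORIZATION = {
    Phase.PLANNING: "authorize release planning",
    Phase.BUILD_AND_TEST: "authorize build and test",
    Phase.DEPLOYMENT: "authorize deployment",
    Phase.REVIEW_AND_CLOSE: None,
}


class Release:
    def __init__(self, name: str) -> None:
        self.name = name
        self.authorizations: Set[str] = set()
        self.history: List[Phase] = []

    def authorize(self, authorization: str) -> None:
        """Record an authorization granted by change management."""
        self.authorizations.add(authorization)

    def start(self, phase: Phase) -> None:
        """Start a phase, refusing if the required authorization is missing."""
        needed: Optional[str] = REQUIRED_AUTHORIZATION[phase]
        if needed is not None and needed not in self.authorizations:
            raise PermissionError(
                f"{self.name}: '{phase.value}' needs '{needed}' first")
        self.history.append(phase)
```

Because `start` can be called more than once for `Phase.DEPLOYMENT`, the model also allows the several separate deployment phases that a phased rollout would involve.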

Let’s look at each of these phases in a little more detail.

The plan release and deployment phase will include plans for all aspects of this phase; the plans will be mostly drawn up during service design and approved by change management. The plans must include not only the release and deployment plans themselves, but also the plans for pilot releases; the plans for building, testing, and packaging the release; and detailed deployment plans. Logistical considerations and financial plans must also be included. As part of this phase, the criteria for whether an activity has passed or failed to meet the required standard will be decided.

Next, let’s consider the build and test phase. This phase includes writing the release and build documentation, ensuring that the necessary contracts and agreements are in place, and obtaining the necessary configuration items and components. These items will all need to be tested. The release packages will then need to be built, and this is an opportunity to make sure build management procedures, methodologies, tools, and checklists are in place to ensure that the release packages are built in a standard, controlled, and reproducible way. This will help to ensure that the output of this activity matches the solution design defined in the service design package. The final activity in this phase is the building and management of the test environments. The test environments must be controlled to ensure that the builds and tests are performed in a consistent, repeatable, and manageable manner. Failure to control the test environments can jeopardize the testing activities and could necessitate significant rework. Dedicated build environments should be established for assembling and building the components to create the controlled test and deployment environments.

The definitive version of the release package (authorized by change management) must be placed in the DML. The release package must always be taken from the DML to deploy to the service operation readiness, service acceptance, and live environments.
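The rule that deployments always draw on the DML can be illustrated with a toy model. Everything here is an assumption made for the example: a real DML is a controlled store of media, not an in-memory dictionary, and the SHA-256 checksum is simply one plausible way to show that the package taken out is the one that was authorized and checked in.

```python
import hashlib
from typing import Dict, Tuple


class DefinitiveMediaLibrary:
    """Toy stand-in for a DML holding authorized release packages."""

    def __init__(self) -> None:
        # release identifier -> (package content, checksum at check-in)
        self._packages: Dict[str, Tuple[bytes, str]] = {}

    def check_in(self, release_id: str, content: bytes,
                 authorized: bool) -> None:
        """Store a package, but only if change management authorized it."""
        if not authorized:
            raise PermissionError(f"{release_id} is not authorized for the DML")
        digest = hashlib.sha256(content).hexdigest()
        self._packages[release_id] = (content, digest)

    def check_out(self, release_id: str) -> bytes:
        """Return the definitive package after verifying it is unchanged."""
        if release_id not in self._packages:
            raise LookupError(f"{release_id} is not in the DML")
        content, digest = self._packages[release_id]
        if hashlib.sha256(content).hexdigest() != digest:
            raise ValueError(f"{release_id} failed its integrity check")
        return content


def deploy(dml: DefinitiveMediaLibrary, release_id: str, environment: str) -> str:
    """Deploy by taking the package from the DML, never from another source."""
    package = dml.check_out(release_id)
    return f"deployed {release_id} ({len(package)} bytes) to {environment}"
```

The point of routing `deploy` through `check_out` is that the service acceptance and live environments both receive the same definitive version.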

The entry criteria for the plan and prepare for deployment phase include all stakeholders being confident that they are ready for the deployment and that they accept the deployment costs and the management, organization, and people implications of the release. The deployment includes activities required to deploy, transfer, or decommission/retire services or service assets. It may also include transferring a service or a service unit within an organization or between organizations as well as moving and disposal activities.

An example of the deployment activities that apply to the deployment for a target group is shown in Figure 25.5. Note the actions taken at each stage, the authorizations obtained from change management at different stages, and the baselines taken at key points.

Figure 25.5 Example of a set of deployment activities

Copyright © AXELOS Limited 2010. All rights reserved. Material is reproduced under license from AXELOS.

When the deployment activities are complete, it is important to verify that users, service operation functions, other staff, and stakeholders are capable of using or operating the service as planned. This is a good time to gather feedback on the deployment process to feed into future improvements.

Successful confirmation of the deployment verification triggers the early life support for the deployment group. If it’s unsuccessful, a decision may be made to remediate or back out of the release.

Now let’s look at the triggers, inputs, outputs, and process interfaces for release and deployment management.

Triggers

We’ll look first at the triggers for this process. Release and deployment management is triggered by the receipt of an authorized change to plan, build, and test a production-ready release package. Deployment is triggered by the receipt of an authorized change to deploy a release package to a target deployment group or environment, for example, a business unit, customer group, and/or service unit.

Inputs

The inputs to release and deployment management include the authorized change and the SDP containing a service charter. The charter defines the business requirements, expected utility and warranty, outline budgets and timescales, service models, and service acceptance criteria. Another input is the acquired service assets and components and their documentation and specifications for build, test, release, training, disaster recovery, pilot, and deployment. The release policy and design, release, and deployment models and exit and entry criteria for each stage are the remaining inputs.

Outputs

There are many outputs of this process; the main ones are the new, changed, and retired services; the release and deployment plan; and the updated service catalog. Other outputs include details of the new service capability and documentation, the service transition report, the release package, SLAs, OLAs, contracts, tested continuity plans, CI specifications, and the capacity plan.

Interfaces

The main interfaces are with service design coordination and with the other service transition processes:

- Service design coordination creates the SDP that defines the new service and how it should be created. This is a major input to release and deployment.

- Transition planning and support provides the framework in which release and deployment management will operate, and transition plans provide the context for release and deployment plans.

- Change management is tightly integrated with release and deployment. Change authorizes the release and deployment work. Release and deployment plans form part of the change schedule.

- Service asset and configuration management provides essential data and information from the CMS and provides updates to the CMS.

- Service validation and testing coordinates its actions with release and deployment to ensure that testing is carried out when necessary and that builds are available when required by service validation and testing.

Critical Success Factors and Key Performance Indicators

As with all processes, the performance of release and deployment management should be monitored and reported, and action should be taken to improve it. And as with the other processes we have discussed, each critical success factor (CSF) should have a small number of key performance indicators (KPIs) that will measure its success, and each organization may choose its own KPIs.

Let’s look at two examples of CSFs for release and deployment management and the related KPIs for each.

The success of the CSF “Ensuring integrity of a release package and its constituent components throughout the transition activities” can be measured using KPIs that measure the trends toward increased accuracy of CMS and DML information when audits take place. Other relevant KPIs for this CSF would be the accuracy of the proposed budget and a reduction in the number of incidents due to incorrect components being deployed.

The success of the CSF “Ensuring that there is appropriate knowledge transfer” can be measured using KPIs that measure a falling number of incidents categorized as “user knowledge,” an increase in the percentage of incidents solved by level 1 and level 2 support, and an improved customer satisfaction score when customers are questioned about release and deployment management.
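As an illustration of how two of these knowledge-transfer KPIs might be calculated from incident records, consider the following sketch. The record fields and function name are hypothetical, and a real service desk tool would supply its own data model. The figures only become meaningful when compared across reporting periods, because the KPIs describe trends.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class Incident:
    category: str           # for example "user knowledge" or "hardware"
    resolved_by_level: int  # support level that resolved the incident


def knowledge_transfer_kpis(incidents: Iterable[Incident]) -> Dict[str, float]:
    """Compute two KPI values for one reporting period."""
    records = list(incidents)
    total = len(records)
    user_knowledge = sum(1 for i in records if i.category == "user knowledge")
    level_1_or_2 = sum(1 for i in records if i.resolved_by_level in (1, 2))
    percent = 100.0 * level_1_or_2 / total if total else 0.0
    return {
        "user_knowledge_incidents": float(user_knowledge),
        "percent_resolved_by_level_1_or_2": percent,
    }
```

Running the function on last month's and this month's incidents and comparing the two results shows whether user knowledge incidents are falling and whether level 1 and level 2 resolution is rising.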

Challenges

Challenges for release and deployment management include developing standard performance measures and measurement methods across projects and suppliers, dealing with projects and suppliers where estimated delivery dates are inaccurate, and understanding the different stakeholder perspectives that underpin effective risk management. The final challenge is encouraging a risk management culture where people share information and take a pragmatic and measured approach to risk.

Risks

The main risks in release and deployment include a poorly defined scope and an incomplete understanding of dependencies, leading to scope creep during release and deployment management. The lack of dedicated staff can cause resource issues. Other risks include the circumvention of the process, insufficient financing, poor controls on software licensing and other areas, ineffective management of organizational and stakeholder change, and poor supplier management. There may also be risks arising from the hostile reaction of staff to the release. Finally, there is a risk that the application or technical infrastructure will be adversely affected, due to an incomplete understanding of the potential impact of the release.

Service Validation and Testing

The underlying concept behind service validation and testing is quality assurance—establishing that the service design and release will deliver a new or changed service or service offering that is fit for purpose and fit for use.

Purpose

The purpose of the service validation and testing process is to ensure that a new or changed IT service matches its design specification and will meet the needs of the business.

Objectives

The objectives of service validation and testing are to ensure that a release will deliver the expected outcomes and value within the projected constraints and to provide quality assurance by validating that a service is “fit for purpose” and “fit for use.” Another objective is to confirm that the requirements are correctly defined, remedying any errors or variances early in the service lifecycle. The process aims to provide objective evidence of the release’s ability to fulfill its requirements. The final objective is to identify, assess, and address issues, errors, and risks throughout service transition.

Scope

The service provider has a commitment to deliver the required levels of warranty as defined within the service agreement. Throughout the service lifecycle, service validation and testing can be applied to provide assurance that the required capabilities are being delivered and the business needs are met.

The testing activity of service validation and testing directly supports release and deployment by ensuring appropriate testing during the release, build, and deployment activities. It ensures that the service models are fit for purpose and fit for use before being authorized as live through the service catalog. The output from testing is used by the change evaluation process to judge whether the service is delivering the service performance with an acceptable risk profile.

Value to the Business

The key value to the business and customers from service validation and testing is the degree of confidence it establishes that a new or changed service will deliver the required value and outcomes, together with the understanding it provides of the risks.

Successful testing provides a measured degree of confidence rather than guarantees. Service failures can harm the service provider’s business and the customer’s assets and result in outcomes such as loss of reputation, loss of money, loss of time, injury, and death.

Policies, Principles, and Basic Concepts

Now we’ll look at the policies and principles of service validation and testing and the basic concepts behind it. The policies for this process reflect strategy and design requirements. The following list includes typical policy statements:

- All tests must be designed and carried out by people not involved in other design or development activities for the service.

- Test pass/fail criteria must be documented in a service design package before the start of any testing.

- Establish test measurements and monitoring systems to improve the efficiency and effectiveness of service validation and testing.

Service validation and testing is affected by policies from other areas of service management. Policies that drive and support service validation and testing include the service quality policy, the risk policy, and the service transition, release management, and change management policies.

Inputs from Service Design

A service is defined in the SDP. This includes the service charter defining agreed utility and warranty for the service, so the SDP is a key input to service validation and testing. The SDP also defines the service models that describe the structure and dynamics of a service delivered by service operation. The model can be used by service validation and testing to develop test models and plans.

The service design package defines a set of design constraints (as shown in Figure 25.6) against which the service release and new or changed service will be developed and built. Validation and testing should test the service at the boundaries to check that the design constraints are correctly defined and, particularly where a design improvement is proposed, whether a constraint should be added or removed.

Figure 25.6 Design constraints driven by strategy

Copyright © AXELOS Limited 2010. All rights reserved. Material is reproduced under license from AXELOS.

Service Quality and Assurance

Assurance of the service is achieved through a combination of testing and validation against an agreed standard, providing objective evidence that the service meets the defined requirements. Early in the service lifecycle, it confirms that customer needs, specified in the service charter, are translated correctly into the service design as service level requirements and constraints. Later in the service lifecycle, testing is carried out to assess whether the actual performance is achieved in terms of utility and warranty.

As noted earlier, service validation and testing is driven and supported by the service quality, risk, service transition, release, and change management policies. For service quality, ITIL defines four quality perspectives: the level of excellence, value for money, conformance to specifications, and meeting or exceeding expectations.

The risk policy will influence the degree and level of validation and testing of service level requirements, utility, and warranty, which covers availability, security, continuity, and capacity risks.

The type and frequency of releases will influence the testing approach. Should a policy of frequent releases (such as once a day) be adopted, this will drive requirements for reusable test models and automated testing. The change management policy will define the testing to be done and ensure that it is integrated into the project lifecycle to support the aim of reducing service risk. Other aspects of the policy will ensure that feedback from testing is captured and that automation is introduced where possible.

A test strategy defines the overall approach to organizing testing and allocating testing resources. It is agreed with the appropriate stakeholders to ensure that there is sufficient buy-in to the approach. Early in the lifecycle, the service validation and test role needs to work with service design and service change evaluation to plan and design the test approach using information from the service package, service level packages, the SDP, and the interim evaluation report.

A test model includes a test plan, information on what is to be tested, and the test scripts that define how each element will be tested. A test model ensures that testing is executed consistently in a repeatable way that is effective and efficient. It provides traceability back to the requirement or design criteria and an audit trail through test execution, evaluation, and reporting.
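The traceability that a test model provides can be sketched as a simple link between requirements and the results of the test scripts written for them. The names below are hypothetical, and the three-way classification is an assumption made for the example rather than an ITIL definition.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence


@dataclass(frozen=True)
class ScriptResult:
    script_id: str
    requirement_id: str            # the requirement this script traces to
    passed: Optional[bool] = None  # None means the script has not run yet


def trace(requirements: Sequence[str],
          results: Sequence[ScriptResult]) -> Dict[str, List[str]]:
    """Classify each requirement by the outcome of its linked test scripts."""
    report: Dict[str, List[str]] = {
        "verified": [], "failed": [], "not_verified": []}
    for requirement in requirements:
        linked = [r for r in results if r.requirement_id == requirement]
        if any(r.passed is False for r in linked):
            report["failed"].append(requirement)
        elif linked and all(r.passed is True for r in linked):
            report["verified"].append(requirement)
        else:
            # No script exists, or at least one script has not yet been run.
            report["not_verified"].append(requirement)
    return report
```

A requirement that ends up under `not_verified` is exactly the kind of gap that the audit trail is meant to expose before the exit criteria are evaluated.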

Levels of Testing and Test Models

Because testing is directly related to the building of the service assets and products that make up services, each one of the assets or products should have an associated acceptance test. This will ensure that the individual components will work effectively prior to use in the new or changed service. Each service model should be supported by a reusable test model that can be used for both release and regression testing in the future. Testing models should be introduced early in the lifecycle to ensure that there is a lifecycle approach to the management of testing and validation.

Testing determines if the service meets the functional and quality requirements of the end users by executing defined business processes in an environment that simulates the live operational environment. The entry and exit criteria for testing are defined in the service design package. Testing should cover business and IT perspectives; testing from the business perspective is basically acceptance testing, with users testing the application, system, and service. Operations and service improvement testing tests if the technology, processes, documentation, staff skills, and resources are in place to deliver the new or changed service.

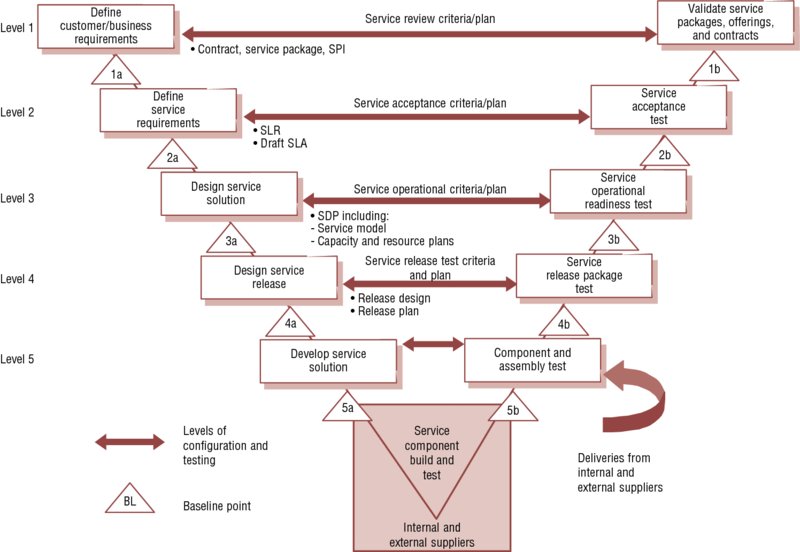

The diagram in Figure 25.7, sometimes called the service V-model, maps the types of tests to each stage of development. Using the V-model ensures that testing covers business and service requirements, as well as technical ones, so that the delivered service will meet customer expectations for utility and warranty. The left-hand side shows service requirements down to the detailed service design. The right-hand side focuses on the validation activities that are performed against these specifications. At each stage on the left-hand side, there is direct involvement by the equivalent party on the right-hand side. It shows that service validation and acceptance test planning should start with the definition of the service requirements. For example, customers who sign off on the agreed service requirements will also sign off on the service acceptance criteria and test plan.

Figure 25.7 Example of service lifecycle configuration levels and baseline points

Copyright © AXELOS Limited 2010. All rights reserved. Material is reproduced under license from AXELOS.

Types of Testing

There are many testing approaches and techniques that can be combined to conduct validation activities and tests. Examples include modeling or simulating situations where the service would be used, limiting testing to the areas of highest risk, testing compliance with the relevant standard, taking the advice of experts on what to test, and using waterfall or agile techniques. Other examples involve conducting a walk-through or workshop, a dress rehearsal, or a live pilot.

Functional and service tests are used to verify that the service meets the user and customer requirements as well as the service provider’s requirements for managing, operating, and supporting the service. Functional testing will depend on the type of service and channel of delivery. Service testing will include many nonfunctional tests. They include testing for usability and accessibility, testing of procedures, and testing knowledge and competence. Testing of the warranty aspects of the service, including capacity, availability, resilience, backup and recovery, and security and continuity, is also included.

Process Activities, Methods, and Techniques

There are seven phases to service validation and testing, shown in Figure 25.8. The basic activities are as follows:

- Validation and test management

- Plan and design tests

- Verify test plan and test design

- Prepare test environment

- Perform tests

- Evaluate exit criteria and report

- Test cleanup and closure

Figure 25.8 Example of a validation and testing process

Copyright © AXELOS Limited 2010. All rights reserved. Material is reproduced under license from AXELOS.

The test activities are not necessarily undertaken in sequence; several may be done in parallel. For example, test execution may begin before all the test design is complete. Figure 25.8 shows an example of a validation and testing process; each activity is described in the following list.

- The first activity is validation and test management, which includes the planning, control, and reporting of activities through the test stages of service transition. Also included are managing issues, mitigating risks, and implementing changes identified from the testing activities because these can impose delays.

- The next step, plan and design tests, starts early in the service lifecycle and covers many of the practical aspects of running tests, such as the resources required, any supporting services (including access, security, catering, and communications services), agreeing on the schedule of milestones, handover and delivery dates, agreeing on the time for consideration of reports and other deliverables, specifying the point and time of delivery and acceptance, and any financial requirements.

- The third step is to verify the test plan and test design, ensuring that the testing included in the test model is sufficient and appropriate for the service and covers the key integration points and interfaces. The test scripts should also be checked for accuracy and completeness.

- The next step is to prepare the test environment using the services of the build and test environment staff; use the release and deployment management processes to prepare the test environment where possible. This step also includes capturing a configuration baseline of the initial test environment.

- Next comes the perform tests step. During this stage, the tester carries out the tests using manual or automated techniques and records findings during the tests. In the case of failed tests, the reasons for failures must be fully documented, and testing should continue if at all possible. Should part of a test fail, the incident should be resolved or documented (e.g., as a known error) and the appropriate retests should be performed by the same tester.

- The next step is to evaluate the exit criteria and the report, which has been produced from the test metrics. In this stage, the actual results are compared to what was expected. The service may be considered as having passed or failed or it may be that the service will work but with higher risk or costs than planned. A decision is made as to whether the exit criteria have been met. The final action of this step is to capture the configuration baselines into the CMS.

- The final step is test cleanup and closure. During this step, the test environments are initialized. The testing process is reviewed, and any possible improvements are passed to CSI.
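
The comparison of actual and expected results in the evaluate exit criteria step can be illustrated with a minimal sketch. It assumes a simplified rule that is not prescribed by ITIL: any undocumented failure fails the service, while failures documented as known errors mean the service works but with higher risk or cost than planned. The names `ResultRecord`, `Outcome`, and `evaluate_exit_criteria` are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    PASSED = "passed"
    PASSED_WITH_RISK = "works, but with higher risk or cost than planned"
    FAILED = "failed"


@dataclass
class ResultRecord:
    """One recorded test finding (hypothetical structure)."""
    name: str
    expected: str
    actual: str
    known_error: bool = False  # True if the failure was documented as a known error


def evaluate_exit_criteria(results):
    """Compare actual with expected results and classify the outcome.

    Assumed rule for illustration only: an undocumented mismatch fails
    the service; documented known errors downgrade a pass to a pass
    with higher risk.
    """
    mismatches = [r for r in results if r.actual != r.expected]
    if any(not r.known_error for r in mismatches):
        return Outcome.FAILED
    if mismatches:
        return Outcome.PASSED_WITH_RISK
    return Outcome.PASSED
```

In practice the exit criteria come from the service design package, so the classification rule would be replaced by whatever the organization has agreed on there.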

Next we’ll look at the trigger, inputs and outputs, and process interfaces for service validation and testing.

Trigger

This process has only one trigger, a scheduled activity. The scheduled activity could be on a release plan, test plan, or quality assurance plan.

Inputs

A key input to this process is the service design package. This defines the agreed requirements of the service, expressed in terms of the service model and service operation plan. The SDP, as we have discussed previously, contains the service charter, including warranty and utility requirements, definitions of the interface between different service providers, acceptance criteria, and other information. The operation and financial models, capacity plans, and expected test results are further inputs.

The other main input consists of the RFCs that request the required changes to the environment within which the service functions or will function.

Outputs

The direct output from service validation and testing is the report delivered to change evaluation. This sets out the configuration baseline of the testing environment, identifies what testing was carried out, and presents the results. It also includes an analysis of the results (for example, a comparison of actual results with expected results) and any risks identified during testing activities.

Other outputs are the updated data and information and knowledge gained from the testing along with test incidents, problems, and known errors.

Interfaces

Service validation and testing supports all of the release and deployment management steps within service transition. It is important to remember that although release and deployment management is responsible for ensuring that appropriate testing takes place, the actual testing is carried out as part of the service validation and testing. The output from service validation and testing is then a key input to change evaluation. The testing strategy ensures that the process works well with the rest of the service lifecycle, for example, with service design, ensuring that designs are testable, and with CSI, managing improvements identified in testing. Service operation will use maintenance tests to ensure the continued efficacy of services, while service strategy provides funding and resources for testing.

Critical Success Factors and Key Performance Indicators

As with all processes, the performance of service validation and testing should be monitored and reported, and action should be taken to identify and implement improvements to the process. Each critical success factor (CSF) should have a small number of key performance indicators (KPIs) that will measure its success, and each organization may choose its own KPIs.

Let’s consider two examples of CSFs for service validation and testing and the related KPIs for each.

The success of the CSF “Achieving a balance between cost of testing and effectiveness of testing” can be measured using KPIs that measure:

- The reduction in budget variances and in the cost of fixing errors

- The reduced impact on the business due to fewer testing delays and more accurate estimates of customer time required to support testing

The success of the CSF “Providing evidence that the service assets and configurations have been built and implemented correctly in addition to the service delivering what the customer needs” can be measured using KPIs that measure:

- The improvement in the percentage of service acceptance criteria that have been tested for new and changed services

- The improvement in the percentage of services for which build and implementation have been tested separately from any tests of utility or warranty
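
As an illustration of how a percentage-based KPI of this kind might be calculated, here is a minimal sketch. The function names and the sample figures are hypothetical, and each organization would choose its own reporting periods and data sources.

```python
def percent_tested(criteria_tested, criteria_total):
    """Percentage of service acceptance criteria that have been tested."""
    if criteria_total == 0:
        return 0.0  # assumption: report 0% when no criteria are defined
    return 100.0 * criteria_tested / criteria_total


def improvement(previous_percent, current_percent):
    """Change between two reporting periods, in percentage points."""
    return current_percent - previous_percent


# Hypothetical figures: 30 of 40 criteria tested last period, 36 of 40 this period.
previous = percent_tested(30, 40)  # 75.0
current = percent_tested(36, 40)   # 90.0
print(improvement(previous, current))  # 15.0
```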

Challenges

The most frequent challenges to effective testing stem from other staff’s lack of respect for, and understanding of, the role of testing. Traditionally, testing has been starved of funding, and this results in an inability to maintain an adequate test environment and test data that matches the live environment, with not enough staff, skills, and testing tools to deliver adequate testing coverage. Testing is often squeezed due to overruns in other parts of the project so the go-live date can still be met. This impacts the level and quality of testing that can be done. Delays by suppliers in delivering equipment can also reduce the time available for testing.

All of these factors can result in inadequate testing, which, once again, feeds the commonly held feeling that testing has little real value.

Risks

The most common risks to the success of this process are as follows:

- A lack of clarity regarding expectations or objectives

- A lack of understanding of the risks, resulting in testing that is not targeted at critical elements

- Resource shortages (e.g., users, support staff), which introduce delays and have an impact on other service transitions

Summary

This chapter explored two more processes in the service transition stage: release and deployment management and service validation and testing. We examined how the release and deployment management process ensures that the required planning for the release is carried out and that the release activities are managed to minimize the risk to existing services. We also learned how the service validation and testing process ensures that the release is fit for use and fit for purpose.

We examined how each of these processes supports the other and the importance of these processes to the business and to the IT service provider.

Exam Essentials

Understand the purpose of release and deployment management. Release and deployment management ensures that releases are planned, scheduled, and controlled so the new or changed services are built, tested, and deployed successfully.

Understand and be able to list the objectives of release and deployment management. The objectives of release and deployment are to ensure successful transition of a new or changed service into production, including the management of issues and risks. It also ensures that knowledge transfer is completed for both recipients and support teams.

Understand the scope of release and deployment management. The scope of release and deployment management covers all aspects of packaging, building, and testing the release (including managing all CIs that are part of the release) to deploy the release into production.

Be able to explain the contents of a release policy. The release policy describes the manner in which releases will be carried out, provides definitions for release types, and specifies the activities that should be managed under the control of the process.

Be able to list the four phases of release and deployment management. The four phases of release and deployment are release and deployment planning, release build and test, deployment, and review and close. All four phases should be triggered by authorization from the change management process.

Understand and be able to list the objectives of service validation and testing. The objectives of service validation and testing are to ensure that a release will deliver the expected outcomes and value and to provide quality assurance by validating that a service is fit for purpose and fit for use. The process aims to provide objective evidence of the release’s ability to fulfill its requirements.

Understand the dependencies between the service transition processes of release and deployment management, service validation and testing, and change evaluation. Release and deployment management is responsible for ensuring that appropriate testing takes place, but the testing is carried out in service validation and testing. The output from service validation and testing is then a key input to change evaluation.

Be able to list the typical types of testing used in service validation and testing. The main testing approaches used are as follows:

- Simulation

- Scenario testing

- Role playing

- Prototyping

- Laboratory testing

- Regression testing

- Joint walk-through/workshops

- Dress/service rehearsal

- Conference room pilot

- Live pilot

Be able to describe the use and contents of a test model. A test model includes a test plan, what is to be tested, and the test scripts that define how each element will be tested. A test model ensures that testing is executed consistently in a repeatable way that is effective and efficient. It provides traceability back to the requirement or design criteria and an audit trail through test execution, evaluation, and reporting.
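
To make the idea of traceability concrete, a test model could be represented along the following lines. This is only a sketch under stated assumptions: the class names `Script` and `ReusableTestModel`, the field names, and the requirement identifiers are all hypothetical and do not come from ITIL.

```python
from dataclasses import dataclass, field


@dataclass
class Script:
    """A test script that defines how one element will be tested."""
    script_id: str
    requirement_id: str  # traceability back to the requirement or design criterion
    steps: list


@dataclass
class ReusableTestModel:
    """A test plan, what is to be tested, and the scripts that test it."""
    test_plan: str
    items_under_test: list
    scripts: list = field(default_factory=list)

    def untraced_requirements(self, requirement_ids):
        """Return the requirements that no script traces back to."""
        covered = {s.requirement_id for s in self.scripts}
        return sorted(set(requirement_ids) - covered)


model = ReusableTestModel(
    test_plan="Release 1 acceptance test plan",
    items_under_test=["order entry service"],
    scripts=[Script("TS-1", "REQ-1", ["log in", "place an order"])],
)
print(model.untraced_requirements(["REQ-1", "REQ-2"]))  # ['REQ-2']
```

A check such as `untraced_requirements` is one simple way to spot requirements that would otherwise go untested when the model is reused for regression testing.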

Review Questions

You can find the answers to the review questions in the appendix.

1. Which of the following is the correct definition of a release package?

2. The release and deployment process covers a concept called early life support. What is meant by early life support?

3. Which of these statements does NOT describe a recommended part of a release policy?

4. Which of these is NOT one of the phases of the release and deployment process?

5. Early life support is an important concept in the release and deployment management process. In which phase of the release and deployment process does early life support happen?

6. Which of the following is NOT provided as part of a test model?

7. Which is the correct order of actions when a release is being tested?

8. Where are the entry and exit criteria for testing defined?

9. The diagram mapping the types of test to each stage of development to ensure that testing covers business and service requirements as well as technical ones is known as what?

10. Which of the following are valid results of an evaluation of the test report against the exit criteria?