
Proper planning and design of your Configuration Manager (ConfigMgr) solution is crucial to its success. No matter how small or large your ConfigMgr deployment, there are many factors to consider to ensure the deployed solution meets its operational requirements without negatively affecting the network, computers, or users.

Historically, poor implementations and usage of Systems Management Server (SMS) have led to a negative perception of the product in general. SMS was frequently deployed in environments where the organization’s requirements were not known. This resulted in a long learning curve as administrators and support staff often discovered product features months or years after their SMS implementation.

A goal of this book is to address mistakes SMS administrators worldwide have made and to educate the reader on best practices and common misconceptions. Any project requires proper planning to deploy successfully. This chapter discusses Configuration Manager solution design, emphasizing the Microsoft Solutions Framework (MSF), a deployment methodology you can use to plan your ConfigMgr deployment.

The MSF Process Model depicts a series of high-level tasks that Microsoft recommends for deploying applications and technologies successfully. MSF is not specific to any technology; it is a broad representation of items to review and activities to perform. The MSF Process Model combines industry project management guidance with oversight of the technology’s implementation, from the envisioning phase, through deployment, and into production. For more information on the Microsoft Solutions Framework, refer to http://www.microsoft.com/msf.

By leveraging the MSF Process Model, administrators can implement ConfigMgr quickly and efficiently and be prepared to address requirements or issues as they arise. The model, illustrated in Figure 4.1, divides the process of creating and deploying a solution into five distinct phases:

• Envisioning

• Planning

• Developing

• Stabilizing

• Deploying

Although Figure 4.1 illustrates MSF as a waterfall model (a sequential development process), MSF is more typically envisioned as a spiral model, in which the deploying phase is iterative and loops back to the envisioning phase. The spiral approach is useful in rapid software development projects that lack clear milestones.

During the envisioning phase, the ConfigMgr design team identifies the high-level requirements and business goals for the project. Using this information, the team then develops a document that states the current state, project goals, and scope.

Think of the envisioning phase as a high-level whiteboard session. During this phase, team members discuss the pros and cons of various topologies, issues that may arise, test scenarios a feature may need to pass before it can be used, responsibilities, and timelines. The product of this phase, the Project Scope Document, should capture this information and present it in a simple, easily digested form. The envisioning phase examines a number of areas, which are discussed in the next sections.

The first step in designing your solution is to understand the current environment: you must know where you are before you can plan how to design your ConfigMgr solution. A detailed assessment gathers information from a variety of sources and results in a document you can easily review and update. Sources of information for an assessment document include the following:

• Interviews with networking, support, executive management, asset management, procurement, and security IT personnel

• Information from business stakeholders

• Current documentation on the network, security, servers, and client operating systems

Document the hardware, software, configurations, business, technical, and functional requirements, and overall network topology. In large enterprises, these tasks may need to occur multiple times (for instance, once per physical location).

The data collected from these sources should be compiled into an architecture document describing the current state. This document serves as a reference point going forward, as well as a snapshot when looking back. This process frequently uncovers real business needs that may not have been known when the project was first envisioned.

The network infrastructure must also be considered during the envisioning phase of the project. The network is one of the most fragile and complex parts of an IT infrastructure. Areas to consider include the following:

• What are the networks’ boundaries?

• What network protocols are used?

• What subnets make up the network?

• Is Active Directory (AD) in use?

• Are all the subnets in AD sites?

• Are there wide area network (WAN) links in the infrastructure and, if so, what are their speeds?

• Will the Configuration Manager solution span the WAN, or will you use ConfigMgr to manage the local location only?

• Will firewalls and routers allow the ports ConfigMgr requires to pass traffic, or is port filtering applied at this level?

• What will be the impact on end users when client agents or packages are pushed across the network?

• Are machine accounts in more than one AD domain?

• Are machine accounts in more than one AD forest?

• Will you be able to use a single service account with administrative rights on all site systems?

• Are there computers that are in workgroups?

• Is managing servers part of the plan?

• Can the ConfigMgr client be provisioned as part of the operating system deployment process on workstations and servers?

• Do users work from outside the firewall for long periods of time?

• How is patch management accomplished?

• Will remote control be deployed?

• Will support operators remote control users’ computers without their permission and, if so, is this allowed enterprisewide?

• What are management’s expectations of the solution?

• How quickly after deployment are ConfigMgr features expected to be available to the support staff?

Answering these questions gives the project team a better idea of the solution’s requirements. It also identifies items to review with upper management and the security team to determine whether company policies influence any of the answers.

Chapter 5, “Network Design,” discusses how you can design your ConfigMgr 2007 solution to address these areas.

The solution architecture needs to be conceptualized at this point of the project. This is when ConfigMgr topology conversations should occur. The decisions made here become a reference point for what the initial ConfigMgr architecture and topology will look like. Here are some key items to think about and discuss:

• How many ConfigMgr servers are necessary?

• Is there a server (or servers) that meets the hardware requirements or must it be procured?

• How many SQL servers are required?

• What are the licensing costs of the solution?

• Who will need access to the ConfigMgr console?

• Does the Active Directory schema need to be extended?

• Will ConfigMgr need to run in native mode, or is mixed mode acceptable?

Chapter 6, “Architecture Design Planning,” covers a number of these items. You will also want to refer to the “Site Design,” “Capacity Planning,” “Licensing Requirements,” and “Extending the Schema” sections of this chapter for additional information.

Server architecture follows solution architecture because the decisions made in the solution architecture phase affect the server architecture. Server architecture is where the ConfigMgr team conceptualizes server requirements. This includes CPUs, RAM, and most importantly drive configuration. Decisions such as whether SQL Server is installed locally or on a separate server are critical to the drive configuration and memory required for sufficient performance. Decisions made in this phase of the ConfigMgr deployment will have an effect on licensing and procurement. The “Developing the Server Architecture,” “Disk Performance,” and “Capacity Planning” sections of this chapter provide additional discussion in these areas.

Client architecture is where the ConfigMgr team and sponsors conceptualize how the agents will be loaded and the settings they will have. Corporate policy often drives many of the ConfigMgr agent policy settings, such as whether or not to prompt for remote control access to a user’s computer.

The corporate legal department frequently has stipulations about which agents may be loaded on certain systems. These corporate policies are often inflexible and time consuming to discover, and they may drive a different solution and server design than initially anticipated. The “Client Architecture” section of this chapter and Chapter 12, “Client Management,” discuss specifics of the client design and implementation.

Configuration Manager 2007 is a management solution and, as such, requires licenses for both management servers and managed clients (agents). Part of the requirements your organization may have for a given solution will include its licensing costs, and licensing is frequently an item driving part of the final ConfigMgr architecture.

Configuration Manager licensing is available with and without a SQL Server license. When the SQL Server installation is used only for ConfigMgr purposes, such as when it is installed on primary site servers, the combined SQL Server/ConfigMgr license offers substantial savings. When ConfigMgr is licensed with SQL Server, no databases other than the ConfigMgr database can use that SQL Server, and no SQL Server Client Access Licenses (CALs) are required.

ConfigMgr licensing consists of three types of licenses:

• Server license for each management server

• Server management license (ML) for each managed server operating system environment (OSE)

• Client management license for each managed client

Microsoft provided only one version of SMS 2003. Today, with ConfigMgr 2007, there are two versions of the product:

• Standard

• Enterprise

According to Microsoft’s ConfigMgr licensing page (http://www.microsoft.com/systemcenter/configurationmanager/en/us/pricing-licensing.aspx), the only real difference between the two versions lies in the server workload running in the OSE, which can be a single device, PC, terminal, PDA, or other device. An Enterprise Server ML is required to use the full Desired Configuration Management (DCM) functionality of the Configuration Manager solution: proactive management functionality in DCM is limited to the Enterprise Server ML, whereas the Standard Server ML provides operating system and basic DCM functionality.

To clarify further, based on conversations with Microsoft, the difference between the Standard and Enterprise MLs is the ability to use DCM on SKUs other than the Windows Base Operating System. Here are some specifics:

Standard Server ML:

• Inventory for any workload

• Software distribution for any workload

• Patch management for any workload

• DCM for basic workloads: base OS or system hardware; storage/file/print (including FTP, NFS, SMB, and CIFS); and networking (DHCP, DNS, WINS, and RADIUS)

Enterprise Server ML:

• All Standard Server ML functionality

• Any functionality not covered by a Standard Server ML, including DCM for applications and compliance
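The Standard/Enterprise split above lends itself to a quick tally when sizing server MLs. The following Python sketch assumes, per the lists above, that only DCM usage decides the tier, because inventory, software distribution, and patch management are covered by either ML. The workload labels (such as `base_os`) and the server names are hypothetical. Treat the sketch as a planning aid only, and confirm actual requirements against your licensing agreement.

```python
from collections import Counter
from enum import Enum


class ServerML(Enum):
    STANDARD = "Standard Server ML"
    ENTERPRISE = "Enterprise Server ML"


# Hypothetical labels for the "basic" DCM workloads covered by the Standard Server ML.
BASIC_DCM_WORKLOADS = {"base_os", "storage_file_print", "networking"}


def required_server_ml(dcm_workloads: set) -> ServerML:
    """Return the server ML tier implied by the DCM workloads planned for one server.

    Assumption: inventory, software distribution, and patch management are
    covered by either tier, so only DCM workloads affect the result.
    """
    if dcm_workloads <= BASIC_DCM_WORKLOADS:  # subset test: basic workloads only
        return ServerML.STANDARD
    return ServerML.ENTERPRISE  # DCM for applications/compliance needs Enterprise


# Hypothetical inventory of managed servers and the DCM workloads planned for each.
servers = {
    "FS01": {"base_os", "storage_file_print"},
    "DNS01": {"base_os", "networking"},
    "SQL01": {"base_os", "application_compliance"},
}

tally = Counter(required_server_ml(w) for w in servers.values())
for tier, count in tally.items():
    print(f"{tier.value}: {count}")
```

In this example, the file and DNS servers need only Standard Server MLs. Monitoring SQL01 with DCM for an application pushes that server to an Enterprise Server ML.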

See Chapter 16, “Desired Configuration Management,” for a discussion of DCM.

During the discovery phase of the project, you will want to review your organization’s Microsoft licensing agreements for desktops and servers. If your organization already has the Microsoft Core Client Access License (CAL) Suite (see http://www.microsoft.com/calsuites/core.mspx), it is possible you already own the management license for each managed client. The CAL Suite encompasses four fundamental Microsoft server product families:

• Windows Server

• Exchange Server

• Office SharePoint Server

• System Center Configuration Manager

On the Configuration Manager licensing page referenced earlier in this section, Microsoft provides a licensing guide for estimating the cost of the various technologies. Costs given are in U.S. dollars:

• Configuration Manager Server 2007 R2—$579

• Configuration Manager Server 2007 R2 with SQL Server Technology—$1,321

• Enterprise ML—$430

• Standard ML—$157

• Client ML—$41

To estimate the costs for a company with a single primary site server that intends to perform standard configuration management on 50 server clients and 950 workstation clients, use the following numbers:

• Configuration Manager Server 2007 R2 with SQL Server Technology: 1 × $1,321 = $1,321

• Standard ML: 50 × $157 = $7,850

• Client ML: 950 × $41 = $38,950

The total cost of this estimate comes to $48,121. This is only an estimate using a single site server and does not include any operating system or other CALs required in a networked environment. In addition, your organization’s licensing costs will vary depending on the size of the organization and the type of license agreement held.
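The estimate above is simple arithmetic: quantity times unit price, summed across license types. The following minimal Python sketch assumes the list prices quoted above. The dictionary keys (such as `server_with_sql`) are hypothetical names for each license type. With the sketch, you can rerun the numbers for other server and client counts.

```python
# List prices (USD) from the licensing guide quoted above; actual prices vary by agreement.
PRICES = {
    "server": 579,            # Configuration Manager Server 2007 R2
    "server_with_sql": 1321,  # ... with SQL Server Technology
    "enterprise_ml": 430,
    "standard_ml": 157,
    "client_ml": 41,
}


def estimate_license_cost(quantities: dict) -> int:
    """Sum quantity x unit price for each license type in `quantities`."""
    return sum(PRICES[item] * count for item, count in quantities.items())


# The example from the text: 1 site server with SQL, 50 server clients, 950 workstations.
example = {"server_with_sql": 1, "standard_ml": 50, "client_ml": 950}
total = estimate_license_cost(example)
print(f"${total:,}")  # $48,121
```

Moving the 50 servers from Standard to Enterprise MLs, for example, raises the total to $61,771.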

For more information on Microsoft Volume Licensing options and available discounts, visit http://www.microsoft.com/licensing/default.mspx.

Implementing any new tool brings a requirement for training administrative staff, support staff, and potentially even users. ConfigMgr is a technology that touches many areas: servers, databases, networks, and users’ workstations. Most groups in Information Technology (IT) will require some level of training specific to their job function and to the impact ConfigMgr will have on them or on the technologies for which they are responsible. At this point in the project, the design team should start identifying the training needs of these various audiences. Training should begin before Configuration Manager is implemented. Early training helps facilitate adoption and builds understanding of the tool, of its cost- and time-saving impact on the environment, and of its technical impact on the various pieces of the IT infrastructure.

Your specific environment will determine the level and amount of training required. You may need to consider both user and technical training.

If your ConfigMgr implementation will be pushing a considerable amount of software that requires user involvement, training end users will be paramount and decisions must be made regarding the type of training to provide, the medium, and so on. When end-user training is required, technologies available today make it very easy to provide web-based training in video form to users on a company intranet. This mechanism is very low cost, support for the media is inherent to the client operating systems, and most users will know how to access and use the posted video training sessions.

The links at http://technet.microsoft.com/en-us/library/bb694263.aspx provide access to documents to help you get started with Configuration Manager. There is also formal training using Microsoft Official Curriculum (MOC). The MOC for System Center Configuration Manager 2007 offers IT professionals the training needed to deploy, administer, and support Configuration Manager 2007. Course 6451A, “Planning, Deploying and Managing Microsoft System Center Configuration Manager 2007,” is a 5-day instructor-led course teaching students the skills needed to plan, deploy, and support ConfigMgr 2007 implementations. Course 6451A’s syllabus can be found at http://www.microsoft.com/learning/en/us/syllabi/6451a.aspx. For more information about the Microsoft Official Curriculum, visit the Microsoft training website at http://go.microsoft.com/fwlink/?LinkId=80347. For other ConfigMgr 2007 training options, see Appendix B, “Reference URLs.”

Microsoft offers a Technical Specialist ConfigMgr certification. The requirements and summary of Exam 70-401, “Microsoft System Center Configuration Manager 2007, Configuring,” can be viewed at http://www.microsoft.com/learning/en/us/exams/70-401.mspx. Completing Exam 70-401 will grant the individual a Microsoft Certified Technology Specialist (MCTS) endorsement in System Center Configuration Manager 2007. Exam 70-401 is also available as an elective for Microsoft Certified Systems Administrator (MCSA) and Microsoft Certified Systems Engineer (MCSE) certification on Windows Server 2008.

During the MSF planning phase, the team identifies the ConfigMgr features it will implement and decides how to implement them. These items drive what is known as the functional specification. The functional specification is the foundation of the design. It incorporates the current state of the environment, the business functional and technical requirements, and the proposed architecture of the solution, and it discusses how the solution will address the business requirements. The functional specification also addresses the order in which the solution is built, tested, and deployed, defining success factors for each tested scenario.

The team should include a project manager who will facilitate the validation of business requirements, log key decision factors, and log the construction and testing activities in a master project plan. The master project plan will map against a schedule known as the master project schedule. The master project schedule is a series of dates when key milestones should occur, such as the following:

• Server build

• Storage allocation

• SQL Server installation

• ConfigMgr installation

• Site System provisioning

• Test package creation and distribution

• Test client policies for expected behavior

• Test advertisement behavior

• Pilot client deployment

• Production deployment

In addition, the team should develop a risk management plan. Managing risk is a core discipline of the MSF, because change and resulting uncertainty are inherent in the IT life cycle. By having a risk management plan, you can proactively deal with uncertainty and continually assess risks.

When the functional specification, master project plan, master project schedule, and risk management plan deliverables are approved, the team can begin developing the solution. The planning phase ends when the master project plan (MPP, not to be confused with Microsoft Project files, which share that abbreviation) is approved.

Changes to the architecture are common after the MPP is approved, because some aspects of the proposed solution initially rest on assumptions. For this reason, the functional specification is a living document that continues to be updated after the MPP is approved. Each update should record the reason for modifying the initial architecture and the changes made, for future reference. The project manager owns all project-specific documentation, which also includes the individual test, training, pilot, communication, and release plans.

The communication plan has several purposes. The most important is notifying the community of the changes being implemented and the impact those changes will have on existing systems. The challenge is keeping communications short and to the point without inundating users with email. The communication plan is often overlooked, so much so that ConfigMgr implementations commonly occur without users and IT staff knowing about them! The communication plan for such a project has a unique audience that includes executives, management, project teams, IT, and users with various skill levels. It is important that the communication plan “sell” the overall project and technology to the user community. The ConfigMgr project team can increase its chance of a successful deployment by letting the various audiences know what the technology will do for the organization. The communications should explain how it will save time and money and improve the overall end-user support experience.

The proof of concept (POC) is where the solution’s architecture is validated. Any architectural features, processes, or options that do not meet the business and technical requirements will be flushed out during the POC. Evaluate each of the following items to determine whether it belongs in the POC and, ultimately, in the solution. The list is not comprehensive, because your business may have unique requirements beyond these, but it is a starting point for formulating the ConfigMgr solution:

• Are multiple sites in the hierarchy necessary?

• Should you extend the Active Directory schema?

• Is this an upgrade or a new installation?

• Choose between a simple or custom setup when installing Configuration Manager 2007.

• Do you require a custom website?

• Choose Configuration Manager boundaries.

• Choose a Configuration Manager client installation method.

• Choose which Configuration Manager features you need.

• Choose between local and remote Configuration Manager site database servers.

• Choose the SMS Provider installation location.

• Choose between native mode and mixed mode.

If you will implement native mode, consider the following:

• Determine if you can use your existing PKI (Public Key Infrastructure). For information on how PKI works, see Chapter 11, “Related Technologies and References.”

• Determine if you need to specify client certificate settings.

• Decide how to deploy the site server signing certificate to clients.

• Determine if you need to renew or change the site server signing certificate.

• Determine if you need to enable certificate revocation list (CRL) checking on clients.

• Determine if you need to configure a Certificate Trust List (CTL) with Internet Information Services (IIS).

• Decide if you need to configure HTTP communication for roaming and site assignment.

The proof of concept should be limited to a sandbox-type lab environment where administrators can freely manipulate settings and learn how the ConfigMgr solution can help them more effectively manage their IT assets. Key business requirements should be identified by this time; these should be verified in the POC.

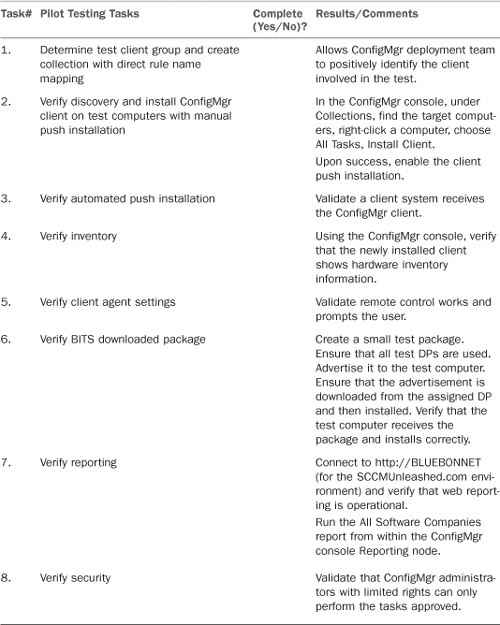

IT management, training, program management, and release management teams are typically involved in the pilot efforts. The pilot plan identifies the scope of the pilot, participants, locations, what will be tested, and success criteria. Planning the pilot phase involves project team members working out the logistics regarding the following:

• Who is in the pilot, and what is their contact information?

• Is there a timeline?

• Where are the servers and clients located?

• What will be tested?

• What will the communication plan say?

• When will the communication plan be ready?

• What is the rollback plan?

• Is staff training required?

• What will be expected of the pilot users?

Planning the pilot phase is essential to the success of the phase itself. The pilot is the first phase in which the solution is tested in the production environment. Users’ policies, the network infrastructure, and user skill levels all affect the overall deployment plan and processes and provide insight into them. A successful pilot shows that the project team has tested the design in the production environment, where users are working, and that the business and technical requirements have been met.

Once the project team and the business determine that the pilot is successful, the next step on the road to the ConfigMgr solution deployment is its implementation. The plans made for piloting the solution should be leveraged for the production implementation. Most of the tools, lessons, and processes established during the testing and pilot phases can be reused to simplify and standardize the production rollout. These include a list of tasks, issues encountered and their resolutions, and the overall monitoring of the server and client deployments. You can use the Microsoft Solutions Framework for guidance on deliverables for the various stages of MSF. Information on the MSF is available at http://www.microsoft.com/technet/solutionaccelerators/msf/default.mspx.

No two ConfigMgr implementations are the same. Between network topology, desired functionality, and requirements, each ConfigMgr implementation is unique in its own way. Some organizations have federated or distributed IT departments, lending themselves to a tiered ConfigMgr hierarchy where support personnel have ownership of the clients in their respective regions. Figure 4.2 illustrates a decentralized IT model, where sitewide management functions are typically handled at the regional level.

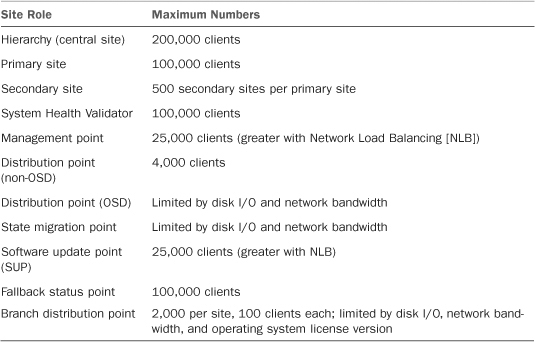

Alternatively, with the rising cost of IT in today’s economy, the push to consolidate and centralize resources is greater than ever. The virtualization market illustrates this point, leading the way in cost savings through consolidation, particularly in larger environments (see http://www.cio.com/article/471408/The_Tricky_Math_of_Server_Virtualization_ROI for a discussion on this). ConfigMgr scales to very large numbers, as listed in Table 4.1, which is taken from the ConfigMgr 2007 R2 help file.

With the scalability numbers as high as specified in Table 4.1, implementing a single site (which would be the central site) provides ConfigMgr administrators the ability to manage a very large number of clients. Most often, the number of site servers is driven by various demands—from the network team, management, political influences, and finally technical requirements. A centralized hierarchy is the easiest to manage if you have the bandwidth and hardware to support the client load.

Note

ConfigMgr Site Database Placement

Since the late 1990s, Microsoft has recommended installing SQL Server locally on primary site servers. The recommendation stems from the WBEM (Web-Based Enterprise Management) provider’s requirement for “fast access” to the site database. However, if a high-speed connection to a remote SQL Server is available, performance may be better than with a collocated database. In that configuration, the database-intensive processing runs on a dedicated server rather than competing with the site server’s own workload.

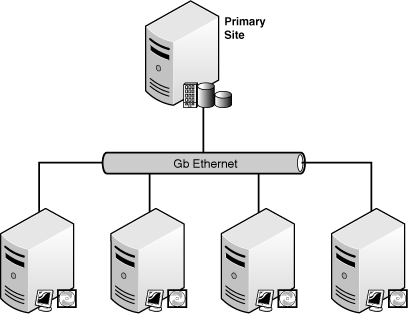

Centralized hierarchies, as displayed in Figure 4.3, come in several flavors. A good example of ConfigMgr centralization is a manufacturing company that has implemented one site in the United States, a second in Europe, and a third in Asia. The primary site server in Asia was driven by a technical requirement: several countries served by that site use native-language operating systems with double-byte character sets.

Using three primary sites and hundreds of distribution points, the organization depicted in Figure 4.3 manages thousands of clients. It rolls out patches monthly and deploys operating systems on demand in refresh and new-system Operating System Deployment (OSD) scenarios. The organization also provides remote support, performs detailed inventory, and uses a third-party asset management add-on. Approximately six people around the world manage this environment, supporting more than 100 locations.

In some cases, organizations implement a flat hierarchy to satisfy internal political considerations while still meeting management’s requirements for inventory management, patching, and so on. The flat hierarchy model provides a simple hierarchy with some measure of autonomy, while still allowing a higher level of management over child sites. This is a common approach when a complex hierarchy is required but the organization wants to isolate network communication between tiers. Flat hierarchies, such as the one illustrated in Figure 4.4, are particularly useful where the reliability of network communications is a concern.

Regardless of the hierarchy model implemented, the overall solution will work the same and provide the same robust level of management across the organization. The key difference is in the flexibility afforded by the solution as a whole to the enterprise. It is up to the design team to determine the hierarchy that will be best for the requirements, network, and usage profile.

The next sections discuss areas to consider when developing your solution architecture.

The design team should spend a considerable amount of time on the network infrastructure. Large amounts of data may be passed across the network, and the resulting traffic patterns can resemble those that network intrusion detection systems (IDS), intrusion prevention systems (IPS), and firewalls are designed to block. Major roadblocks in a managed client deployment often occur at the lower layers of the Open Systems Interconnection (OSI) model, yet large amounts of time are spent troubleshooting them at the application level.

Network media such as Frame Relay, ATM, and so on are usually transparent to the ConfigMgr solution. Little regard needs to be given to the network medium, but the same is not true of individual circuit speed. Circuit speeds, the number of clients at a given location, and the expectations for software distribution are the three most substantial factors in planning where to place distribution points (DPs). If client demands are significant enough, an additional primary or secondary ConfigMgr site may be warranted. One example is a location with a large number of systems that has inventory and software distribution requirements.

The following times are examples of how long a circuit could be saturated pushing software such as Microsoft Office to a distribution point:

• A 1GB file transferred over a 512Kbps link will take approximately 4 hours and 40 minutes.

• A 1GB file copied over a T1 will take only 1 hour and 33 minutes.

• Increase this circuit to a DS3, which is approximately 45Mbps, and the transfer time drops to just over 3 minutes.
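These figures follow from dividing file size by link speed. The following minimal Python sketch reproduces them under several assumptions. 1GB means 2**30 bytes, and 512Kbps means 512,000 bits per second. A T1 runs at its nominal 1.544Mbps, and a DS3 runs at the approximately 45Mbps quoted above. The link is assumed to be fully available, with no protocol overhead, so real transfers will be slower.

```python
# 1GB interpreted as 2**30 bytes, expressed in bits.
ONE_GB_BITS = 8 * 2**30

# Nominal line rates in bits per second (assumption: no protocol overhead).
LINKS_BPS = {
    "512Kbps": 512_000,
    "T1": 1_544_000,
    "DS3": 45_000_000,
}


def transfer_seconds(size_bits: int, link_bps: float) -> float:
    """Ideal time to push size_bits over a saturated link of link_bps."""
    return size_bits / link_bps


def format_duration(seconds: float) -> str:
    """Render seconds as 'Hh MMm SSs', rounded to the nearest second."""
    hours, rem = divmod(round(seconds), 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours}h {minutes:02d}m {secs:02d}s"


for name, bps in LINKS_BPS.items():
    print(f"{name:>8}: {format_duration(transfer_seconds(ONE_GB_BITS, bps))}")
```

This prints 4h 39m 37s, 1h 32m 43s, and 0h 03m 11s. These results match the approximate times listed above.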

This scenario demonstrates that additional bandwidth may be required in locations that previously did not need such capacity. The cost of this additional bandwidth can be recouped through lower overall administrative costs, such as the following:

• Reducing or eliminating the need for local server resources

• Reducing or eliminating the need for remote backup operations

• Reducing travel by IT staff to remote locations for administration or support

• Consolidating voice and data services over a single circuit

• Eliminating long-distance charges due to interoffice direct dialing

The topic of multiple site servers and additional management points introduces the use of addresses and senders, where traffic can be scheduled and throttled across network segments. More information on these topics is available in Chapter 6.

Leveraging Active Directory with schema extensions provides an always-available, redundant, easily accessible, and secure location for querying ConfigMgr information. This makes extending the schema the preferred approach when implementing ConfigMgr. If you previously extended the AD schema for SMS 2003, extend it again, because the SMS 2003 schema extensions do not include all the functionality ConfigMgr 2007 uses. Table 4.2 lists the ConfigMgr features that depend on or benefit from AD schema extensions.

When the schema is not extended, ConfigMgr administrators have to perform manual maintenance tasks that could otherwise be automated with ConfigMgr 2007. These include running scripts and maintaining group policy objects (GPOs) as well as other items required to roll out clients and have them perform with acceptable functionality. Many of the workarounds published in the ConfigMgr online help file do not even scale to support medium-size deployments. Chapter 3, “Looking Inside Configuration Manager,” provides additional information about the benefits of extending the schema and discusses the process of extending the AD schema.

Secondary site servers, discussed in Chapter 6, are used in ConfigMgr to lessen the load on the primary parent site and reduce network bandwidth utilization. It is extremely important to understand how to leverage secondary sites over slow links when defining the distribution point architecture across the WAN. Secondary site servers can host a role known as the proxy management point (PMP), which, to conserve bandwidth, caches a local copy of the policies stored on the parent primary site.

If you place distribution points in a secondary site, clients that are local to the secondary site and within its boundaries can request content from a local DP rather than downloading it across the WAN. The benefit here is that although multiple clients request the content, it is only sent across the slower WAN link once and then installed locally across the LAN by each client. This conserves bandwidth and improves the end-user experience.

You can also use branch DPs in this manner, which provide the added benefit of being supported on client operating systems such as Windows XP and Vista. If you’re deploying secondary site servers purely for the sake of throttling transmissions to DPs, use branch DPs rather than creating secondary sites. However, although branch DPs will keep traffic down, they do not provide the ability to cache and push client inventories, status messages, and policies, as does the PMP of a secondary site server. Branch DPs are limited to 10 concurrent connections when being run from a client operating system rather than a server.

Secondary site servers have a number of advantages and limitations, which should be understood to implement them in your organization effectively. Advantages of secondary site servers include the following:

• Do not require a ConfigMgr Server license.

• Do not require a SQL Server database.

• Can be managed remotely.

• Can have PMPs to optimize WAN traffic.

• Can have DPs to optimize WAN traffic.

Here are some of the disadvantages of secondary site servers you will want to consider:

• Cannot have child sites.

• Can only have a primary site as a parent site.

• Cannot be moved in the hierarchy without uninstalling and then reinstalling.

• Cannot be upgraded to a primary site.

• Cannot have ConfigMgr clients assigned to them; hence, client agent settings are inherited from the primary parent site.

• Although configuring the site address allows throttling and scheduling of inventories as well as status and policy transmissions, overly restrictive settings may result in undesirable behavior.

ConfigMgr provides two modes of security: mixed and native. If you are familiar with SMS 2003, that version used standard and advanced site security modes. Unlike SMS 2003, ConfigMgr lets you switch a site back to the less secure mode after moving it to the more secure one. ConfigMgr 2007’s mixed mode is functionally equivalent to SMS 2003’s advanced security. Because self-signing of transmissions by the management point or the site server using certificates is only available with ConfigMgr, Internet-Based Client Management (IBCM) is not available on ConfigMgr sites running in mixed mode, as IBCM requires manipulating the certificate templates. On the other hand, authentication between clients and site servers uses the same proprietary technology as SMS 2003 when ConfigMgr is in mixed mode.

Native mode introduces new functionality and complexity to ConfigMgr security, such as using industry-standard Public Key Infrastructure certificates and Secure Sockets Layer (SSL) authentication between clients and site systems. ConfigMgr also supports third-party certificates, as long as their template is modifiable. SSL does not encrypt transmissions of policies; these are signed by the site server and management point. Metering data, status messages, policy, and inventory are all signed and encrypted. Because IBCM requires clients to communicate over HTTPS across the Internet to the ConfigMgr hierarchy, it requires native mode. If you will be implementing IBCM, you will want to analyze carefully the various supported deployment scenarios discussed at http://technet.microsoft.com/en-us/library/bb693824.aspx. Chapter 6 provides additional information about planning for site security modes.

Note

Securely Publishing to the Web

When publishing HTTP or HTTPS to the Web from inside a network, you should use Microsoft Internet Security and Acceleration (ISA) Server to perform web publishing of these ports. ISA acts as a reverse proxy in this fashion and provides application layer packet inspection to protect internal systems from malicious code.

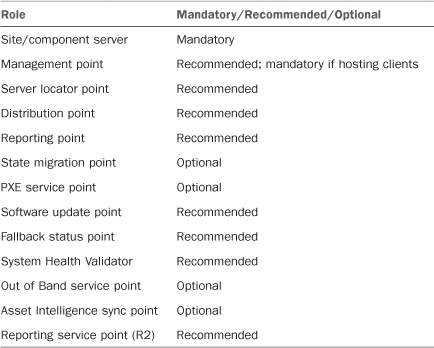

ConfigMgr 2007 has numerous roles that serve various purposes. Some roles are mandatory, some are optional, and some are recommended. Table 4.3 lists the ConfigMgr 2007 roles. Chapter 2, “Configuration Manager 2007 Overview,” introduces the different roles, and Chapter 8, “Installing Configuration Manager 2007,” covers the specifics of installing them.

Each role has different purposes that create unique loads on the site system housing that role. You will need to plan to ensure the site system can handle the load placed on it once in production. The following sections touch on many of the common design considerations that go into a ConfigMgr deployment.

Site servers are the most heavily planned role in the hierarchy. They typically include a local installation of SQL Server and the reporting point, may have thousands of systems reporting to them, run queries, update collection membership, house the management point and server locator point (SLP), and provide connections for ConfigMgr administration consoles across the organization. Site servers handle updating distribution points, process discovery data, and collect inventory from client systems. When a hierarchy of sites exists, site servers also replicate bidirectionally, sending collection, query, and various package data down the hierarchy and consuming inventory data from the lower tier of the hierarchy. Inventory data is only replicated from any given child to its parent and from that site to the next parent site if one exists. Eventually all inventory data makes it to the top of the hierarchy, the central site. In large implementations, the central site does not have any clients, and it is used primarily for reporting purposes.

Note

Placement of the Reporting Point Role

Microsoft recommends placing the reporting point role on a dedicated system in the central site position of the hierarchy for large deployments, to offload the reporting impact on SQL Server.

Collections are updated on a schedule in ConfigMgr, which defaults to every 24 hours beginning at the time the collection was created. Depending on the size of your site and the habits of your ConfigMgr administrators, there may be hundreds to thousands of collections. With so many collections updating against the SQL Server database, you may see a negative performance impact if too many collections are updating or collections are updating too often. This is a scenario often observed in the field, when large numbers of clients in collections are updating on a very aggressive schedule, such as hourly or every 15 minutes. Although these types of intervals are sometimes required, it is important to understand the load this puts on SQL Server and Windows disks. Adequate spindle counts will allow such aggressive collection evaluation. Monitoring various disk counters, covered in the “Developing the Server Architecture” section of this chapter, will provide insight into whether or not there is sufficient hardware to handle the load.
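To make the load from aggressive schedules concrete, the following Python sketch tallies how often each collection is evaluated per day and weights that figure by member count. The collection names, sizes, and the member-weighted figure are hypothetical illustrations, not values ConfigMgr reports. Treat the result as a relative ranking for spotting collections whose schedules could be relaxed.

```python
from dataclasses import dataclass

MINUTES_PER_DAY = 24 * 60


@dataclass
class Collection:
    """A hypothetical collection record: name, evaluation interval, and size."""
    name: str
    interval_minutes: int  # how often membership is re-evaluated
    members: int           # approximate number of members


def evaluations_per_day(c: Collection) -> float:
    """How many times the collection is evaluated in 24 hours."""
    return MINUTES_PER_DAY / c.interval_minutes


def member_evaluations_per_day(c: Collection) -> float:
    """Rough load proxy: evaluations per day multiplied by member count."""
    return evaluations_per_day(c) * c.members


def heaviest(collections: list[Collection], top: int = 3) -> list[Collection]:
    """Collections contributing the most to the load proxy, largest first."""
    return sorted(collections, key=member_evaluations_per_day, reverse=True)[:top]


collections = [
    Collection("All Workstations", 15, 20_000),  # every 15 minutes
    Collection("Accounting PCs", 60, 500),       # hourly
    Collection("All Servers", 1440, 300),        # default 24-hour schedule
]
for c in heaviest(collections):
    print(f"{c.name}: {evaluations_per_day(c):.0f} evaluations/day, "
          f"{member_evaluations_per_day(c):,.0f} member evaluations/day")
```

Moving the 15-minute collection to an hourly schedule would cut its share of the proxy by a factor of four. That is the kind of adjustment the following tip recommends.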

Tip

Collection Evaluation

Take note of the collection evaluation intervals and throttle back the evaluation intervals on those collections rarely used. It is common to find many collections that only need updating on a weekly basis, thus freeing resources on the site server for other pertinent tasks.

Distribution points are typically the most heavily used role from an I/O perspective in all of ConfigMgr. Hundreds to thousands of clients may download or install packages from any given distribution point. Because each environment has unique circumstances around the volume of packages pushed, the frequency with which packages are pushed, and the average size of the packages, it is difficult to create a standard recommendation for sizing distribution points. Here are several best practices for DPs:

• Use BITS whenever possible to download and execute packages.

• Configure DPs on slow links or in remote locations as protected DPs.

• Verify there are enough spindles on the DP to support the packages pulled from them.

• Group distribution points where possible, for simpler administration.

• Make sure DPs have sufficient drive space to accommodate packages.

• If DPs are placed on a system with multiple purposes, such as a domain controller, make sure the load will not hinder other services the system provides.

• When placed on systems providing other network services, the DPs should be placed on a dedicated logical drive, if possible, to segregate I/O.

If a WAN link exists between a site server and the distribution point, using a secondary site server for the remote DP has a number of advantages. The primary benefit is the scheduling and throttling of traffic between the primary site server and secondary site server in regard to package replication. Here are some points to keep in mind:

• When the WAN link is reliable but somewhat small (such as a T1) or the link hosts important services for users across the WAN, a secondary site server housing the DP is highly recommended, as shown in Figure 4.5.

• When the WAN link is unreliable or very slow (such as in a circuit under 1.5Mbps), it is best to use a branch DP, which enables leveraging BITS to replicate the package data.
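The placement guidance above can be summarized as a small decision function. This is a simplified sketch. The function name and parameters are hypothetical, and the 1.5Mbps threshold is the rule of thumb from the text. Real decisions also weigh other factors, such as whether you need a proxy management point at the location.

```python
from enum import Enum

# Threshold taken from the rule of thumb in the text (circuits under 1.5Mbps).
SLOW_LINK_MBPS = 1.5


class DpPlacement(Enum):
    LOCAL_DP = "standard DP on the local LAN"
    SECONDARY_SITE_DP = "secondary site server housing the DP"
    BRANCH_DP = "branch DP (package replication over BITS)"


def recommend_dp(across_wan: bool, link_reliable: bool, link_mbps: float) -> DpPlacement:
    """Suggest a DP placement for a location, following the guidance above."""
    if not across_wan:
        return DpPlacement.LOCAL_DP
    if not link_reliable or link_mbps < SLOW_LINK_MBPS:
        # Unreliable or very slow links: BITS transfers can resume after interruptions.
        return DpPlacement.BRANCH_DP
    # Reliable but modest links (such as a T1): schedule and throttle via a secondary site.
    return DpPlacement.SECONDARY_SITE_DP


print(recommend_dp(across_wan=True, link_reliable=True, link_mbps=1.544).value)
```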

Network Access Protection requires Windows Server 2008. The NAP role is dependent on the Server 2008 version because it obtains the network policy data from Windows Server 2008. Windows Server 2008 has a role within the operating system itself called the Network Policy Server (NPS) role. This role defines what is deemed as healthy and unhealthy as well as the remediation actions to take when unhealthy clients are found. NAP relies extensively on NPS and the ConfigMgr System Health Validator role. If you plan to implement NAP, Windows Server 2008 and SHV will be a critical piece of your ConfigMgr topology and architecture. Although Chapters 11 and 15 discuss NAP, NPS, and SHV in more detail, it is important at the planning stage to understand the relationship of the roles, the load the systems will have, and their dependencies on each other, as well as the underlying technologies (such as IIS and Windows Server 2008).

By default, clients on the intranet query Active Directory for their site assignment and resident management point. If the AD schema is not extended, server locator points become mandatory. SLPs are only required when you are managing clients in a workgroup or another AD forest, or have not extended the Active Directory schema. SLPs are not required when IBCM is used. If you implement SLPs, typically only one per site is necessary, and the site server can host this role adequately.

The management point is the most significant role in the ConfigMgr site. This role is the primary point of contact between clients and the site server. The management point requires IIS and WebDAV (Web Distributed Authoring and Versioning).

Note

MPs on Server 2008

You must download, install, and configure WebDAV manually on management points running Windows Server 2008.

Management points provide the following services to clients:

• Installation prerequisites

• Client installation files

• Configuration details

• Advertisements

• Software distribution package source file locations

• Receipt of inventory data

• Receipt of software-metering information

• Receipt of status and state messages from clients

When provisioning an MP, the ConfigMgr wizard prompts the administrator to choose whether the MP uses the site database (the default) or a database replica. Select the database replica option only when you intend to use NLB for the MPs. (Replicas are discussed further in Chapter 8.) The site systems hosting the MP roles must have permission to update their objects in AD.

Management points can support intranet, Internet, and device clients. Management points supporting Internet clients require a web server signing certificate. By default, and as a best practice, the management point computer account is used to access site database information.

The fallback status point (FSP) is the lifesaver of ConfigMgr 2007. It cannot be stressed enough how important it is to implement this role. The FSP lets administrators know when a client installation has failed, when a client is having communication issues with the MP, or when a client is left in an unmanaged state.

The FSP receives state messages from clients and relays them to the site. Because clients can be assigned to only one FSP, make sure to assign them prior to deployment.

Microsoft’s documentation states that the software update point (SUP) role is required within the ConfigMgr hierarchy if you are deploying software updates. The SUP interacts with WSUS for three purposes: to configure WSUS settings, to request synchronization with the upstream update source (on the central site), and to synchronize the software updates from the WSUS database to the site server database.

Note

ConfigMgr SP 1 Requirements

Configuration Manager 2007 SP 1 requires WSUS 3.0 SP 1 at the time of this writing. As service packs for both Configuration Manager and WSUS evolve, this requirement may change. Make sure to refer to the release notes and requirements prior to deploying either ConfigMgr or WSUS.

Never make changes to WSUS from within the WSUS Administration console. The active software update point is controlled via the ConfigMgr console. Changes made to WSUS will be overwritten hourly by ConfigMgr’s WSUS Configuration Manager component of the SMS Executive Service. More on ConfigMgr’s patch management capabilities is provided in Chapter 15, “Patch Management.”

A reporting point (RP) is a ConfigMgr role designed to host web reports that query the database of the primary site to which the RP belongs. Because RPs must run their queries against a SQL Server site database, they can only belong to primary sites, not secondary sites. When multiple primary sites are in a hierarchy, it is a good idea to implement an RP at each primary site. This gives the individual groups who manage the site servers the ability to run reports specific to their managed environment.

Reporting points have the following requirements:

• The site system computer must have IIS installed and enabled.

• Active Server Pages must be installed and enabled.

• Microsoft Internet Explorer 5.01 SP 2 or later must be installed on any server or client that uses Report Viewer.

• To use graphs in reports, Office Web Components (Microsoft Office 2000 SP 2, Microsoft Office XP, or Microsoft Office 2003) must be installed.

Note

Reporting Point Prerequisite Requirements

When you install ASP.NET on a Windows Server 2008 operating system reporting point, you must also manually enable Windows Authentication. For more information, see the “How to Configure Windows Server 2008 for Site Systems” section of the ConfigMgr R2 help file.

Office Web Components is not supported on 64-bit operating systems. If you want to use graphs in reports, use 32-bit operating systems for your reporting points.

Not every role is available all the time to clients. If you will be implementing IBCM, it is important to understand that only a few roles are available to clients out on the untrusted network. Only the following roles are available to IBCM clients:

• Management point

• Fallback status point

• Software update point

• Distribution point (if it is not a site system share), protected site system, or branch distribution point

CPU, RAM, and disk I/O are the three most important items when planning and configuring server hardware. The size, or robustness, of the server provisioned for any given role dictates how well it will handle the load. When discussing expectations of the overall solution, some level of understanding needs to be communicated and agreed on. ConfigMgr has many dependencies, including business and user requirements in addition to the overall infrastructure and network services requirements. This makes it difficult to predict expectations for the overall solution. Because each environment is different and has different requirements, there is no “one size fits all” solution.

The site database server is the most memory-intensive role in the ConfigMgr hierarchy. The amount of memory used is configurable in SQL Server and limited to 3GB, unless you are running SQL Server on an x64 platform and operating system. (Covering alternatives to this, such as AWE, is beyond the scope of this book.) If SQL Server will require more than 3GB, as in instances when it is not dedicated to ConfigMgr, using a separate SQL Server running on x64 becomes a compelling solution.

Several counters are listed in Table 4.4 that you will want to evaluate on your ConfigMgr database server.

You will also want to understand some basic SQL Server best practices. Some of these options will vary depending on your site size, hierarchy, which roles you are using, and how you are using them.

Microsoft has posted several SQL Server best practices as well as technical white papers at http://technet.microsoft.com/en-us/sqlserver/bb671430.aspx.

Microsoft has also produced a SQL Server 2005 Best Practice Analyzer (BPA) that gathers data from SQL Server configuration settings. The SQL BPA produces a report using predefined recommendations to determine if there are issues with the SQL Server implementation. The SQL 2005 BPA can be downloaded at http://www.microsoft.com/downloads. Search for SQL Server 2005 Best Practices. (No BPA is planned for SQL Server 2008.)

There is no ideal performance or target goal for a given ConfigMgr solution. Cost/benefit analysis should be performed to weigh the performance cost versus the actual requirements.

Across any ConfigMgr role, it is important to understand the overall load the role places on a system, and how the system will handle that load. Table 4.5 illustrates a general array of performance counters system administrators should be aware of and use to gauge the overall performance, or health, of their systems. These counters are not specific to servers or roles, and can be applied to any Microsoft Windows operating system.

Disks today are the weakest point in a computer’s performance, and you will want to give serious attention to designing the right disk subsystem for the various ConfigMgr roles. Due to the increasing demand to lower server prices, vendors now sell server systems built with hardware designed for desktop-level systems. This may lead to performance bottlenecks and disk failures on ConfigMgr site systems. SCSI (Small Computer System Interface) devices are designed to meet server specifications. Desktop-class technologies such as SATA (Serial Advanced Technology Attachment) typically have a much lower Mean Time Between Failure (MTBF), meaning they fail more often. MTBF is calculated during phase 2 of a hard drive’s life.

It is important to understand the implications of drive failure in servers. Although a drive may fail and the system may continue to run, if another drive fails, the entire volume goes down, ultimately creating an outage. If you are dealing with an enterprise environment, outages are never welcome. Here are the three phases of a drive’s life:

• Phase 1 of a drive’s life is the burn-in phase, and the failure rate is very high.

• In phase 2, the drive is run for a length of time and the failure rate is minimal. This equates to the normal operational lifetime of the drive and is how the MTBF value is calculated.

• Phase 3 is where failure rates increase and the drive is reaching the end of its life, or warranty (ironically).
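Because MTBF is quoted for phase 2, it can be converted into an annualized failure rate (AFR), which is easier to compare across drive types. The sketch below assumes a constant failure rate (the usual exponential model) and does not describe burn-in or wear-out. The MTBF ratings in the example are illustrative, not figures for any real drive.

```python
import math

HOURS_PER_YEAR = 24 * 365  # 8,760 hours of continuous operation


def annualized_failure_rate(mtbf_hours: float) -> float:
    """Probability that one drive fails within a year of phase-2 operation.

    Assumes a constant failure rate (exponential model); burn-in (phase 1)
    and wear-out (phase 3) are not modeled.
    """
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


# Illustrative MTBF ratings, not figures for any particular drive model.
for mtbf in (600_000, 1_200_000):
    print(f"MTBF {mtbf:,} h -> AFR {annualized_failure_rate(mtbf):.2%}")
```

Halving the MTBF roughly doubles the AFR at these magnitudes. This is why drives with a lower MTBF show up as noticeably more frequent replacements in a large server estate.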

Table 4.6 lists characteristics of several types of drives.

Smaller sites may be able to run sufficiently on a small array, such as a RAID1 array, which uses two disks. However, larger implementations will falter on such a small backend disk subsystem. As scale increases in the enterprise or demand increases on the disk subsystem used by ConfigMgr, a larger array becomes necessary to support the load. Unfortunately, there is no formula where x number of ConfigMgr clients equals y number of disks—there are just too many possible implementation paths in ConfigMgr 2007 to allow a standard formula to dictate disk I/O load.

When dealing with larger enterprises or more aggressive policy evaluation intervals, such as daily or hourly inventories, know that adding spindles generally increases the performance of the disk subsystem. Arrays composed of many disks will yield substantially better performance than arrays just several disks smaller. An easy way to understand this is to think of each disk as a worker going to find information. When there are more workers, the information is returned more quickly.
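The worker analogy can be put into numbers with the commonly used functional-IOPS estimate. Under this estimate, reads are served at the raw rate of all spindles, while each logical write costs several back-end I/Os depending on the RAID level. The per-disk IOPS figure and read/write mix below are assumptions for illustration, so measure your own workload with the disk counters discussed in this chapter. In this model, host-visible IOPS grow linearly with the number of spindles.

```python
# Commonly cited back-end write penalties per RAID level (I/Os per logical write).
RAID_WRITE_PENALTY = {"RAID0": 1, "RAID1": 2, "RAID10": 2, "RAID5": 4, "RAID6": 6}


def functional_iops(disks: int, iops_per_disk: float, read_fraction: float, raid: str) -> float:
    """Estimate host-visible IOPS for an array.

    Reads are served at the raw rate; each logical write costs `penalty` back-end I/Os.
    """
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be between 0 and 1")
    raw = disks * iops_per_disk
    penalty = RAID_WRITE_PENALTY[raid]
    return raw * read_fraction + raw * (1.0 - read_fraction) / penalty


# Assumed figures: about 180 IOPS per 15K RPM disk and a 60/40 read/write mix.
for disks in (2, 4, 8, 16):
    print(disks, "disks, RAID10:", round(functional_iops(disks, 180, 0.6, "RAID10")))
```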

If ConfigMgr console performance is important to your ConfigMgr administrators, you will want to explore SANs (Storage Area Networks) and other storage solutions for the ConfigMgr database and binaries. Although discussing SANs, iSCSI (Internet SCSI), and other disk technologies is beyond the scope of this book, you will want to explore them in large-scale enterprises with 20,000 or more clients reporting to a site server. This does not imply that if there are fewer than 20,000 clients that you should not look at using a SAN for your SQL Server databases or distribution points. Storage solutions offer a variety of other benefits, including disaster recovery, backup, and other options that are frequently vendor specific.

Disk optimization steps include the following:

• The ConfigMgr SQL database should be on its own array.

• The ConfigMgr SQL transaction log should be on its own array.

• The Windows operating system should be on its own array.

• Any distribution point should be on its own array.

• Any software update point should be on its own array.

Operating systems perform best when loaded on RAID1 arrays. Consult your company’s standards on whether to use RAID1+0 or external storage solutions such as SANs. The principle here is that two disks give good performance, redundancy, and the lowest possible failure rate. (That is correct. With only two disks in a RAID1 array, you are roughly one-quarter as likely to experience a drive failure as with an eight-disk RAID5 array!)
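The four-to-one figure follows from counting drives. Assume each drive fails independently with the same annualized failure rate; the 2% below is an assumed value. The chance that at least one drive in an n-disk array fails during a year is then 1 - (1 - AFR)^n, and the RAID5-to-RAID1 ratio approaches four as the rate gets small. This is the chance of having to replace a drive, not of losing the volume, because both RAID1 and RAID5 survive a single failure.

```python
def p_any_drive_fails(disks: int, afr: float) -> float:
    """Probability that at least one of `disks` drives fails in a year.

    Assumes independent failures with the same annualized failure rate `afr`.
    """
    return 1 - (1 - afr) ** disks


AFR = 0.02  # assumed 2% annualized failure rate per drive (illustrative)
raid1 = p_any_drive_fails(2, AFR)
raid5 = p_any_drive_fails(8, AFR)
print(f"RAID1 (2 disks): {raid1:.1%}  RAID5 (8 disks): {raid5:.1%}  ratio {raid5 / raid1:.2f}")
```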

Databases typically need to be placed on RAID5 or RAID10 arrays, due to the sheer number of disks required to support the database size. Fortunately, ConfigMgr has a relatively small database size, although its size is dependent on a multitude of variables such as inventories, packages, number of clients, features in use, and such. SMS 2003’s SQL sizing was based on 50MB + (N × 250KB), where N is the number of clients. This means that if there were 5,000 clients, the formula would read as follows:

50MB + (5,000 × 250KB) ≈ 1,271MB ≈ 1.24GB

This sizing formula was found to be unrealistically low, and most administrators doubled or tripled the value. With ConfigMgr 2007, you can use the same rule of thumb for database sizing, but should increase the 250KB multiplier to support the new features, including patch management, configuration management, and expanded inventory. Experience has shown that 2MB per client is a more realistic value to use than 250KB as a starting point for sizing the ConfigMgr database. This means you should use the following formula to determine the required database size:

50MB + (N × 2,048KB), where N is the number of clients

Using this new formula for the same size (5,000 clients) gives a considerably higher number:

50MB + (5,000 × 2,048KB) ≈ 9.8GB
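The sizing rule of thumb translates directly into a function. This sketch uses binary units throughout (1MB = 1,024KB, 1GB = 1,024MB), and the function name is illustrative. The result is only a starting point, since inventory settings and the features in use can move the real database size considerably.

```python
def estimated_db_size_gb(clients: int, per_client_kb: float = 2048, base_mb: float = 50) -> float:
    """Rule-of-thumb database size: base_mb + clients x per_client_kb, in GB.

    Uses binary units throughout: 1MB = 1,024KB and 1GB = 1,024MB.
    """
    total_mb = base_mb + clients * per_client_kb / 1024
    return total_mb / 1024


print(f"ConfigMgr 2007 (2,048KB/client): {estimated_db_size_gb(5_000):.2f}GB")      # 9.81GB
print(f"SMS 2003 (250KB/client):         {estimated_db_size_gb(5_000, 250):.2f}GB")  # 1.24GB
```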

SQL Server transaction logs can usually reside on a RAID1 array, because ConfigMgr rarely requires point-in-time restores. You can therefore select the simple database recovery model, so the transaction log will not need to be extraordinarily large.

DPs and state migration points are the most critical in terms of disk I/O. Memory and CPU on these roles are a minor concern, and are not an issue as long as there’s sufficient RAM on the system to prevent unnecessary swapping.

Distribution points can have the most widely varying requirements depending on how they are used. As an example, if a company performs routine software patching and package pushes, the size of its distribution point may be minimal, particularly if BITS is used extensively to download and execute content. Anything from a single disk to a RAID1 array could be effective in a branch DP or a conventional DP.

If you introduce the OSD functionality into your ConfigMgr solution, the requirements jump substantially. Conventional packages are relatively small, typically between 1MB and 200MB. Microsoft Office 2007 is usually one of the largest, at about 1GB. Operating system images in the ImageX WIM (Windows Imaging) format average around 1GB for Windows XP and 3GB to 4GB for Vista, whether applications are built into the image or installed separately. In addition, because download-and-execute is not an option for operating system deployments, a DP may face a very large data demand from many machines in parallel. The best solution for this scenario is many disk spindles. You should seriously consider SANs if your ConfigMgr implementation requires supporting large operating system deployments. State migration points may have similar disk I/O requirements. Disk I/O for this role is difficult to calculate, because each user’s state volume size is unknown.

Tip

Calculating User State Volume

You can use ConfigMgr to calculate user state volume size, which helps define capacity requirements and expected timeframes for OS migrations. Deploy a script as a package that measures the size of the user data you intend to capture, and have it store the result in Windows Management Instrumentation (WMI) on the client. The next hardware inventory will upload the data to the site server, where it can be used to populate reports. Microsoft partners such as SCCM Experts (formerly known as SMS Experts) specialize in solutions such as this.
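The following sketch covers the measurement half of that approach. In practice the script would more likely be written in VBScript or PowerShell; Python is used here only to show the logic. The folder list is a hypothetical placeholder. Writing the result to a custom WMI class and extending hardware inventory to collect it are not shown.

```python
import os


def folder_size_bytes(root: str) -> int:
    """Total size of regular files under `root`, skipping symlinks and unreadable files."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if not os.path.islink(path):
                    total += os.path.getsize(path)
            except OSError:
                # Locked or vanished files are skipped rather than aborting the scan.
                continue
    return total


def user_state_mb(folders: list[str]) -> float:
    """Combined size, in MB, of the folders that make up a user's state."""
    return sum(folder_size_bytes(f) for f in folders) / (1024 * 1024)


# Hypothetical capture set; which folders to include depends on what you plan to migrate.
profile = os.path.expanduser("~")
print(f"{user_state_mb([os.path.join(profile, 'Documents')]):.1f} MB")
```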

If available, utilize tools such as System Center Operations Manager (OpsMgr) 2007 to baseline performance and monitor ConfigMgr site health. When external monitoring solutions are not available, use a tool such as Performance Monitor (Perfmon), which is built into each version of Windows. Perfmon enables administrators to collect a myriad of performance data and log it to a file for later analysis. Realize that this method of using Performance Monitor can place a load on the system when the samples are captured at an aggressive interval! Because you only need to look at average performance over a broad period, sampling every 10 or 15 minutes is acceptable and provides a multitude of useful data to analyze when tuning the system.

Tip

Benchmarking

Consider periodically collecting performance metrics from the site systems when they are utilized during business hours. This data ultimately will provide a baseline by which you can measure performance. This data is useful for scaling out or up, depending on how the load increases on the site systems. CPU, memory, disk, and network throughput are the four areas to evaluate periodically.
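If you collect baselines with Performance Monitor, you can convert a binary log to CSV with relog (for example, relog site.blg -f csv -o site.csv) and summarize it with a short script. The sketch below assumes Perfmon’s CSV layout: a timestamp column followed by one column per counter. The file names are hypothetical.

```python
import csv
from statistics import mean
from typing import Iterable


def counter_averages(lines: Iterable[str]) -> dict[str, float]:
    """Average each counter column of a Perfmon log exported to CSV.

    The first column is the sample timestamp; every other column is one counter.
    Blank cells (samples Perfmon could not collect) are ignored.
    """
    reader = csv.reader(lines)
    header = next(reader)
    counters = header[1:]
    samples: dict[str, list[float]] = {c: [] for c in counters}
    for row in reader:
        for counter, cell in zip(counters, row[1:]):
            cell = cell.strip()
            if not cell:
                continue
            try:
                samples[counter].append(float(cell))
            except ValueError:
                continue  # skip any non-numeric cell
    return {c: mean(v) for c, v in samples.items() if v}


# Usage with a hypothetical export:
# with open("site.csv", newline="") as fh:
#     for counter, avg in counter_averages(fh).items():
#         print(f"{avg:10.2f}  {counter}")
```

Comparing these averages period over period gives the baseline the tip describes, without holding an aggressive sampling interval on the server.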

Capacity requirements are frequently miscalculated in the initial planning phases of ConfigMgr deployments because adequate thought is not given to the actual amount of data that will be kept on each site server and site system. Adding storage after the fact is a rather difficult situation to deal with; it frequently requires outages, possibly moving roles around, and in general is something that could have been dealt with in advance if properly architected.

A number of items contribute to ConfigMgr capacity requirements:

• Software inventory has the ability to do file collection.

In a 5,000-seat environment, collecting a 1MB file will add 5GB of storage to the server, backups, and the network load while transferring the data. ConfigMgr software inventory file collection can be configured to limit the maximum amount transferred per client, but the site server and network infrastructure will need to handle this size times the number of clients reporting to the site server or hierarchy.

Adding a software file extension such as .dll to your inventory can easily double the ConfigMgr database size. Tables in the ConfigMgr database such as softwarefile can grow very large, degrading reporting and Resource Explorer performance. When designing your ConfigMgr solution, it is important to know which software file types will be inventoried, to help determine backend storage requirements from both a capacity and a performance perspective.

• You can scale SUPs and MPs beyond 25,000 clients per site by implementing these site system roles with NLB, as illustrated in Figure 4.6.

If you are implementing NLB on an MP in a mixed mode site, IIS does not allow clients to authenticate to the site system using Kerberos authentication. To support an NLB implementation, you must reconfigure the website application pools running under the Local System account to run under a domain user account.

• Distribution points have disk I/O and network I/O constraints.

Considerations affecting the size of the volume needed include how many packages are planned to be kept on distribution points year-round, and the number of packages a given distribution point is expected to support. Although the ConfigMgr documentation states that a distribution point can handle up to 4,000 clients, network speed, disk performance, and package size greatly impact this value.

Tip

Capacity Planning Calculations

DPs and state migration points are site systems with unique capacity requirements. As a rule of thumb, take the current size of your existing software library volume and then triple it to use as a starting point for DPs or package source repository requirements.

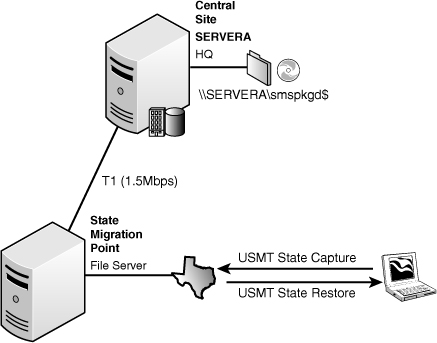

• The state migration point (SMP) is a Configuration Manager 2007 site role providing a secure location to store user state, data, and settings, prior to an operating system deployment.

You can store the user state on the SMP while the operating system deployment proceeds, and then restore the user state to the new computer from the state migration point, as illustrated in Figure 4.7.

Each SMP site server can only be a member of one Configuration Manager 2007 site. State migration points provide ConfigMgr administrators the ability to store users’ data and purge it automatically after it has become stale, a period defined by the ConfigMgr administrator. The concept behind this relies on the data being restored or backed up within the allotted threshold. Figure 4.8 shows the state migration point properties.

Although state migration points allow for automatic scheduled deletion of data considered stale, capacity planning is still needed to handle the volume of data sent to the state migration point within the defined retention period. Chapter 19, “Operating System Deployment,” discusses state migration points in more detail.
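The capacity items above can be rolled into a rough per-site estimate. The following sketch encodes three rules: the file-collection arithmetic, the triple-the-library rule of thumb for DPs, and SMP volume modeled as captures made within the retention period times average state size. All inputs are hypothetical. The SMP model is a simplification that ignores how quickly restored data is cleaned up.

```python
def file_collection_gb(clients: int, mb_per_client: float) -> float:
    """Server-side storage for software inventory file collection (binary GB)."""
    return clients * mb_per_client / 1024


def dp_volume_gb(current_library_gb: float, multiplier: float = 3) -> float:
    """DP / package source sizing: triple the current software library."""
    return current_library_gb * multiplier


def smp_volume_gb(captures_in_window: int, avg_state_gb: float) -> float:
    """State held on the SMP: captures made within the retention period x average size."""
    return captures_in_window * avg_state_gb


# Hypothetical inputs for a 5,000-client site.
estimate = {
    "file collection": file_collection_gb(5_000, 1),  # about 4.9GB, the "5GB" case above
    "distribution point": dp_volume_gb(120),          # assumed 120GB library today
    "state migration point": smp_volume_gb(200, 4),   # assumed 200 captures x 4GB
}
for item, gb in estimate.items():
    print(f"{item:>22}: {gb:8.1f}GB")
print(f"{'total':>22}: {sum(estimate.values()):8.1f}GB")
```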

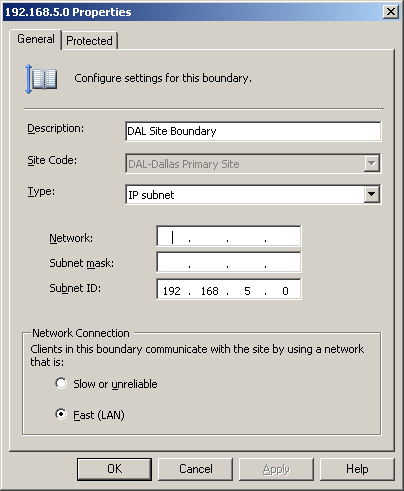

ConfigMgr boundaries, discussed in Chapter 6, are logical groupings defining where the site server has management capabilities. You can specify boundaries using AD sites, Internet Protocol (IP) subnets, IP address ranges, or IPv6 prefixes. Figure 4.9 depicts the Dallas site boundary on the Bluebonnet central site.

When defining boundaries, the ConfigMgr administrator must define whether these boundaries are slow or fast (which really means unreliable or reliable). Boundaries should be unique to each site server and ideally not overlap.
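When boundaries are defined as IP subnets, IP address ranges, or IPv6 prefixes, you can check for overlaps between sites before they cause assignment problems. The sketch below uses Python’s standard ipaddress module, with prefixes assumed to be written in CIDR form. The site codes and boundaries are hypothetical. AD-site boundaries are not modeled, because they would require the subnet-to-site mapping from Active Directory, and the slow/fast setting is ignored.

```python
import ipaddress
from itertools import combinations


def to_networks(boundary: str) -> list:
    """Expand an IP subnet/IPv6 prefix ('10.1.0.0/16') or IP range ('10.2.0.1-10.2.0.254')."""
    if "-" in boundary:
        first, last = (ipaddress.ip_address(part.strip()) for part in boundary.split("-"))
        return list(ipaddress.summarize_address_range(first, last))
    return [ipaddress.ip_network(boundary, strict=False)]


def find_overlaps(site_boundaries: dict[str, list[str]]) -> list[tuple[str, str, str, str]]:
    """Return (site, network, other_site, other_network) for every cross-site overlap."""
    expanded = {
        site: [net for b in bounds for net in to_networks(b)]
        for site, bounds in site_boundaries.items()
    }
    overlaps = []
    for (site_a, nets_a), (site_b, nets_b) in combinations(expanded.items(), 2):
        for a in nets_a:
            for b in nets_b:
                if a.version == b.version and a.overlaps(b):
                    overlaps.append((site_a, str(a), site_b, str(b)))
    return overlaps


def sites_for_address(site_boundaries: dict[str, list[str]], address: str) -> list[str]:
    """Sites whose boundaries contain the given client address."""
    ip = ipaddress.ip_address(address)
    return sorted(
        site
        for site, bounds in site_boundaries.items()
        if any(ip in net for b in bounds for net in to_networks(b))
    )


# Hypothetical site codes and boundaries.
boundaries = {
    "DAL": ["10.1.0.0/16"],
    "HOU": ["10.1.5.0-10.1.5.255", "10.2.0.0/16"],
}
for overlap in find_overlaps(boundaries):
    print("overlap:", overlap)
print(sites_for_address(boundaries, "10.1.5.10"))
```

An address that appears in more than one site’s boundaries, such as 10.1.5.10 here, is exactly the ambiguity to resolve before deployment.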

When planning your ConfigMgr site boundaries, you may discover unique requirements for some AD sites or subnets, which often leads to creating additional ConfigMgr sites. Because some client settings can be configured only at the site level, a setting that suits most of the site may not be allowed on certain subnets or AD sites. For example, remote controlling a computer without user interaction may be prohibited in the Accounting AD site or subnet. This would ultimately require an additional site server with unique settings for that location.

Although settings are often sitewide, they can be overridden using local policy. Local policies are described in Chapter 3.

Roaming in ConfigMgr is the capability that lets clients move between sites in the hierarchy yet still be managed, while making the best use of local network resources. Roaming clients can use services from a nearby site server in the hierarchy, so they do not have to traverse a WAN or “slow” network. For example, if a client is at a remote location served by a site server other than the one it is assigned to, the client can roam and install packages from that site server’s DP if the packages are present there, minimizing WAN impact and improving the end-user experience of software distribution.

Figure 4.10 illustrates how a client can roam to a different network that is managed by another site and defined as slow or unreliable. This scenario is common with traveling laptops, and roaming lets those ConfigMgr clients automatically download and run packages locally rather than installing them across the WAN.
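The roaming decision described above can be modeled roughly as follows. This is a deliberately simplified, hypothetical sketch, not ConfigMgr’s actual content-location algorithm: it assumes the client already knows which site’s boundary it is in, that each site has one DP with a known set of package IDs, and that a single flag stands in for the advertisement’s slow-boundary behavior. All names are illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class Site:
    code: str
    slow_boundary: bool = False                    # boundary flagged slow/unreliable
    dp_packages: set = field(default_factory=set)  # package IDs on this site's DP


def content_source(assigned: Site, current: Site, package_id: str,
                   run_on_slow: bool = True) -> str:
    """Hypothetical, simplified roaming decision for one package.

    Returns the site code whose DP should supply the content, or "skip".
    """
    if package_id in current.dp_packages:
        if current.slow_boundary and not run_on_slow:
            # Advertisement configured not to run on slow boundaries.
            return "skip"
        # Use the nearby DP instead of crossing the WAN.
        return current.code
    # Package not present locally: fall back to the assigned site's DP.
    return assigned.code


dal = Site("DAL", dp_packages={"DAL00012", "DAL00013"})
hou = Site("HOU", slow_boundary=True, dp_packages={"DAL00012"})
print(content_source(dal, hou, "DAL00012"))  # roaming laptop in Houston
```

The point of the model is the ordering: prefer a local DP that already has the package, and only then cross the WAN.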

Designing a site involves analyzing several items and deciding which settings to apply to them. You will need to analyze items such as client agent settings or policies to determine the best setting for the environment the site will manage. A good example is the notification options users see when they receive advertisements to run packages on their systems.

Site design items are not limited to client agent settings; they include many other things, such as the following:

• Is the server physical or virtual?

• Where is the server?

• What is the storage subsystem?

• Is SQL Server installed on the site server or a separate system?

• Is the site a primary or secondary?

• What are the site boundaries?

• Which ports will be used for client communication?

• Is the site in mixed or native mode?

• What is the hardware inventory frequency?

• Who are the administrators and operators of this site?

• What is the hardware inventory collecting information on?

• Which discovery methods will be used?

• What is the discovery method’s frequency?

• What LDAP path is being queried for discovery?

• What client push installation system types are selected?

• What client push installation account is being used?

• What rights does the client push installation account have?

• Which roles does the site hold?

• What site maintenance tasks are enabled, and what are their settings?

• What site systems are in the ConfigMgr site, and what are their roles?

• Is Wake On LAN (WOL) enabled, and what are its settings?
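Because these questions must be answered for every site, it can help to record the answers in a structured form and list what is still undecided. A minimal sketch, assuming a hypothetical per-site record that covers only a subset of the questions above; the field names are illustrative, not ConfigMgr settings.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class SiteDesign:
    site_code: str
    server_type: Optional[str] = None          # "physical" or "virtual"
    location: Optional[str] = None
    storage: Optional[str] = None
    sql_on_site_server: Optional[bool] = None
    site_type: Optional[str] = None            # "primary" or "secondary"
    boundaries: Optional[list] = None
    client_ports: Optional[dict] = None
    security_mode: Optional[str] = None        # "mixed" or "native"
    hw_inventory_interval_days: Optional[int] = None
    discovery_methods: Optional[list] = None
    wol_enabled: Optional[bool] = None


def open_questions(design: SiteDesign) -> list:
    """Names of design decisions not yet made (still None)."""
    return [f.name for f in fields(design) if getattr(design, f.name) is None]


dal = SiteDesign("DAL", server_type="physical", wol_enabled=False)
print(open_questions(dal))
```

An explicit False (for example, WOL disabled) counts as a decision; only None is treated as unanswered.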

Although some settings may only be useful in a disaster-recovery scenario, many of them can have negative impacts when used incorrectly. For example, when you perform discovery from multiple ConfigMgr sites within a hierarchy, a system can be discovered multiple times, with each discovery data record (DDR) sent up the hierarchy and processed by every site that handles it. This duplicates DDR processing, because each site must analyze every DDR and determine which is newer. It is best to let sites discover only the resources that belong to them, even for child sites, because discovered data flows up the hierarchy. You can observe this flow by viewing the properties of any discovered system or client in a collection.
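The cost of overlapping discovery can be illustrated by counting processing events. A minimal sketch under a simplified model: a DDR created at a site is processed there and again at every ancestor on its way to the central site. The hierarchy and site codes are hypothetical.

```python
def ddr_processing_count(parent_of: dict, discovering_sites: list) -> int:
    """Count DDR processing events for one resource.

    parent_of maps each site code to its parent (None for the central site).
    Each discovering site produces one DDR that is processed at that site
    and at every ancestor as it flows up the hierarchy.
    """
    total = 0
    for site in discovering_sites:
        while site is not None:
            total += 1
            site = parent_of.get(site)
    return total


hierarchy = {"CEN": None, "DAL": "CEN", "HOU": "CEN", "AUS": "DAL"}
# Resource belongs to AUS. Only AUS discovers it vs. every site discovering it.
print(ddr_processing_count(hierarchy, ["AUS"]))
print(ddr_processing_count(hierarchy, ["AUS", "DAL", "CEN", "HOU"]))
```

In this model, letting only the owning site discover the resource costs 3 processing events, while discovery from all four sites costs 8, and the gap grows with hierarchy depth and resource count.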

Because ConfigMgr is designed to scale, a single site server can host multiple ConfigMgr roles. In most implementations of 10,000 managed clients or fewer, you can use a single site server with specifications similar to those listed in the following sections. Microsoft documents these designs, which it tested for scaling and adequate performance in the listed configurations, at http://download.microsoft.com/download/4/b/9/4b97e9b7-7056-41ae-8fc8-dd87bc477b54/Sample_Configurations_and_Common_Performance_Related_Questions.pdf (this link is also available in Appendix B).

In a small ConfigMgr site, you can configure a site server containing a management point as follows:

In larger environments of approximately 25,000 seats, Microsoft found the following site design to be sufficient.

Site server:

• Dual Xeon 3GHz

• 4GB of RAM

• SAS drives with battery-backed cache

• RAID1 array for OS

• RAID1 array for ConfigMgr files

• RAID10 array (four disks) for SQL Server database, log, and TempDB

Management point:

• Dual Xeon 3GHz

• 4GB of RAM

• SAS drives with battery-backed cache

• RAID1 array for OS

• RAID1 array for ConfigMgr files

When you scale up to 50,000 clients, this site’s physical design adds management points in a Network Load Balancing (NLB) cluster to support the additional load. At 100,000 clients, the management points are load-balanced across four systems and read from a replica of the SQL site database. The following configuration details the hardware recommended to achieve this client density on a single site.

Site server:

• Quad Xeon 2.66GHz

• 16GB of RAM

• SAS drives with battery-backed cache

• RAID1 array for OS

• RAID10 array (four disks) for ConfigMgr files

• RAID10 array (four disks) for SQL data files

• RAID10 array (four disks) for SQL log files

• RAID1 array for SQL replication distribution database

Four management points in the NLB cluster:

• Dual Xeon 3GHz

• 4GB of RAM

• SAS drives with battery-backed cache

• RAID1 array for OS

• RAID1 array for ConfigMgr files

• RAID10 array (four disks) for SQL replication distribution database

When implementing ConfigMgr with more than 100,000 clients, use a central site with no clients reporting to it. Since SMS 2.0, the central site has been intended for inventory rollup, status processing, centralized administration, and reporting. The key difference is that during the SMS 2.0 timeframe, hierarchies of more than 500 clients were advised to implement a central site with no clients, whereas Microsoft now sets that baseline at hierarchies of more than 100,000 clients.
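The sample designs in this section can be summarized as a lookup by client count. A minimal sketch: the tier descriptions come from the text above, but the exact cut-over points between the published sample sizes (10,000, about 25,000, 50,000, and 100,000 clients) are an assumption made for illustration, not Microsoft guidance.

```python
def suggested_design(clients: int) -> str:
    """Map a client count to the closest sample design described above.

    Band edges between the published sample sizes are assumed.
    """
    if clients <= 0:
        raise ValueError("client count must be positive")
    if clients <= 10_000:
        return "single site server hosting the management point"
    if clients <= 25_000:
        return "site server plus a dedicated management point"
    if clients <= 50_000:
        return "site server plus NLB management points"
    if clients <= 100_000:
        return "site server, four NLB management points, SQL site database replica"
    return "central site with no clients reporting to it"


for n in (8_000, 40_000, 150_000):
    print(n, "->", suggested_design(n))
```

Use the result only as a first approximation; network topology and the per-location requirements discussed earlier may justify additional sites regardless of client count.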

When you define which agents will be loaded into the ConfigMgr client, you are actually defining the ConfigMgr client architecture. This architecture requires planning to ensure future initiatives will work without issues.

As an example, defining an initial ConfigMgr client cache value is an important task to perform before rolling out clients to the enterprise. Although you can modify the cache size on an individual client basis, initial packages may fail if they exceed the default cache size.
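Before rolling out clients, it is worth comparing planned package sizes with the cache size you intend to use. A minimal sketch: the 5,120 MB default is assumed here for the ConfigMgr 2007 client and should be verified in your environment; the cache size can be set at installation with the SMSCACHESIZE client installation property (in MB). The package names and sizes are hypothetical.

```python
# Assumed ConfigMgr 2007 client default cache size; verify in your environment.
DEFAULT_CACHE_MB = 5120


def packages_exceeding_cache(package_sizes_mb: dict,
                             cache_mb: int = DEFAULT_CACHE_MB) -> list:
    """Return the names of packages whose content will not fit in the cache."""
    return sorted(name for name, size in package_sizes_mb.items()
                  if size > cache_mb)


planned = {"Office": 900, "AutoCAD": 6200, "VisualStudio": 7400}
print(packages_exceeding_cache(planned))
print(packages_exceeding_cache(planned, cache_mb=8192))
```

If the first list is not empty, either raise the initial cache size or plan to adjust it on the affected clients before advertising those packages.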

Another setting determines whether the client displays a visual indicator, or even sounds an audible alert, while it is being remote-controlled. Users generally find the audible alert annoying, and some systems have been known to have problems with it. Displaying a visual indicator is recommended, because it tells the user the system is still being controlled and shows when the remote administrator has closed the session.

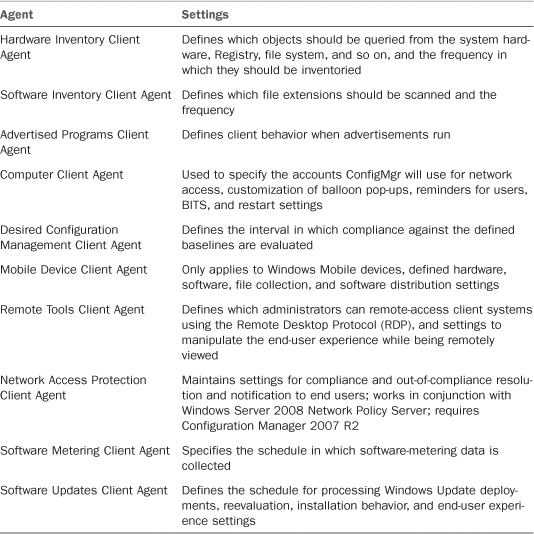

ConfigMgr clients consist of multiple agents, each with its own settings. When the settings are defined, ConfigMgr creates XML (eXtensible Markup Language) policies, which clients download from the management point. The agents listed in Table 4.7 have policies defined that collectively make up the ConfigMgr client architecture.

Client architecture is fairly flexible: if a setting is not deployed correctly at first, you can change it centrally, and clients pick up the new setting fairly quickly (as quickly as the Policy Polling Interval setting of the Computer Client Agent allows). Understanding the agents and their settings matters primarily because it lets you create a correct ConfigMgr site design and positions the ConfigMgr deployment team for success.
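How quickly a changed setting reaches clients can be estimated with a simple model. This sketch assumes each client polls once per interval at a uniformly random offset and that the new policy is already available on the management point; site-to-site replication and management point policy refresh delays are ignored. The 60-minute default is an assumption to verify against your Computer Client Agent settings.

```python
def fraction_updated(minutes_since_change: float,
                     polling_interval_min: float = 60) -> float:
    """Expected fraction of clients that have retrieved a changed policy.

    Simplified model: polling offsets are uniformly distributed across
    one interval, so the fraction grows linearly until it reaches 1.0.
    """
    if polling_interval_min <= 0:
        raise ValueError("polling interval must be positive")
    return max(0.0, min(1.0, minutes_since_change / polling_interval_min))


print(fraction_updated(30))       # half an interval after the change
print(fraction_updated(15, 30))   # with a shorter, custom interval
```

Under this model, every online client has the new setting within one polling interval; shortening the interval speeds propagation at the cost of more management point traffic.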

Chapter 12 further discusses client architecture.

In today’s global economy, more companies have networks spanning multiple countries than ever before. The variety of languages used from country to country presents new challenges for system administrators. When Microsoft Windows client operating systems are installed in the country’s native, non-English language, ConfigMgr administrators must look at the available options to address their systems management needs. The International Client Pack (ICP) is a specialized set of client files, split across two separate downloads, that supports the following 22 languages. You can download the ConfigMgr 2007 SP 1 ICPs by searching for ICP at www.microsoft.com/downloads.

ICP1 contains the following languages:

• English

• French

• German

• Japanese

• Spanish

ICP2 contains all languages from ICP1 plus the following:

• Chinese (Simplified)

• Chinese (Traditional)

• Czech

• Danish

• Dutch

• Finnish

• Greek

• Hungarian

• Italian

• Korean

• Norwegian

• Polish

• Portuguese

• Portuguese (Brazil)

• Russian

• Swedish

• Turkish

ICPs are client files only. The files consist of the following:

• Client.msi

• Client.mst

• Several small supporting files, including language resource files

ConfigMgr site and service pack versions must correlate to the ICP version implemented. As an example, you should only install the Microsoft System Center Configuration Manager 2007 SP 1 International Client Packs on a ConfigMgr 2007 SP 1 site.

Caution

ICPs and Service Packs

When using ICPs with ConfigMgr, you cannot upgrade to a new service pack level until the associated ICP is also available. ICPs are released shortly after service packs. Once the ICP is released for a new service pack, install the service pack and then install the new ICP.

ICPs are versioned so that the ICP client’s version number is higher than that of the ConfigMgr client at the same service pack level. You can identify the service pack, ICP, and hotfix numbers from the last four digits of the Configuration Manager 2007 version number. Let’s use a sample scenario to illustrate how clients know they need to upgrade to an ICP-versioned client. The following versions illustrate an existing ConfigMgr 2007 RTM installation being upgraded to SP 1:

• ConfigMgr 2007 RTM Client version—4.0.5931.0000

• ConfigMgr 2007 SP 1 Prerelease version—4.00.6086.1000

• ConfigMgr 2007 SP 1 Client version—4.00.6221.1000

• ConfigMgr 2007 SP 1 ICP1 Client version—4.00.6221.1400

• ConfigMgr 2007 SP 1 ICP2 Client version—4.00.6221.1700

• ConfigMgr 2007 R2 Client version—4.00.6355.1000
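The upgrade behavior follows from comparing the version fields numerically. A minimal sketch: the SP 1 build number (6221) and revision values (1000, 1400, 1700) are taken from the list above; mapping revisions for other releases is not attempted, and the function names are hypothetical.

```python
SP1_BUILD = 6221
SP1_REVISIONS = {1000: "SP 1 (no ICP)", 1400: "SP 1 ICP1", 1700: "SP 1 ICP2"}


def parse_version(v: str) -> tuple:
    """'4.00.6221.1400' -> (4, 0, 6221, 1400); leading zeros are ignored."""
    return tuple(int(part) for part in v.split("."))


def needs_upgrade(installed: str, available: str) -> bool:
    """True if the available client version is newer than the installed one."""
    return parse_version(available) > parse_version(installed)


def describe_sp1(v: str) -> str:
    """Name the SP 1 client flavor using the revision values listed above."""
    _major, _minor, build, revision = parse_version(v)
    if build != SP1_BUILD:
        return "not an SP 1 client"
    return SP1_REVISIONS.get(revision, "SP 1 (unrecognized revision)")


print(needs_upgrade("4.00.6221.1000", "4.00.6221.1400"))
print(describe_sp1("4.00.6221.1700"))
```

Because tuples compare field by field, an SP 1 client (revision 1000) sees the ICP1 client (revision 1400) as newer and upgrades, while an ICP2 client (revision 1700) does not downgrade to ICP1.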

To determine the version of an ICP on a site server, check the version value of HKEY_LOCAL_MACHINE\Software\Microsoft\SMS\Setup in the Registry. To validate that the management point has been updated, verify that language directories such as 00000409, 00000407, and so on exist and contain .mst files. To verify that clients have received the updated client binaries, create a custom query that includes the Client Version property from the System Resource class. To confirm the update on an individual client, open the Configuration Manager applet in Control Panel and view the version of each component on the Components tab.
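The language-directory check can be scripted. A minimal sketch, assuming you point it at the folder that holds the client installation files in your environment (the path is a hypothetical parameter, not a documented location). Directory names are eight hexadecimal digits encoding a locale ID; for example, 00000409 is English (United States) and 00000407 is German.

```python
from pathlib import Path

HEX_DIGITS = set("0123456789abcdefABCDEF")


def language_dirs_with_mst(client_root: str) -> dict:
    """Map each 8-hex-digit language directory to whether it holds .mst files."""
    result = {}
    for d in Path(client_root).iterdir():
        if d.is_dir() and len(d.name) == 8 and set(d.name) <= HEX_DIGITS:
            result[d.name] = any(p.suffix.lower() == ".mst"
                                 for p in d.iterdir() if p.is_file())
    return result


# Example (hypothetical path):
# print(language_dirs_with_mst(r"D:\ConfigMgr\Client"))
```

Any directory mapped to False exists but lacks a transform file, which suggests the ICP did not update that language completely.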

If an ICP is installed on a site server, the only prerequisite is that the site server’s operating system must be English.

End-user experience will vary depending on whether or not the ICP is deployed. The following scenarios could exist:

Scenario 1— The site server is running the English operating system without any ICP.

• Clients running native language OS will show the ConfigMgr client in English; users may not be able to read ConfigMgr dialog boxes.

• Clients running the Multilanguage User Interface (MUI) will have the same experience as those running native OS; users may not be able to read ConfigMgr dialog boxes.

Scenario 2—The site server is installed in English and ICP1 or ICP2 is installed.

• Clients running a native-language OS will be able to see the ConfigMgr client dialog boxes in their language as long as the ICP version includes their language.

• Clients running MUI will be able to see the ConfigMgr client dialog boxes in their language as long as the ICP version includes their language.
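The two scenarios, together with the ICP language lists earlier in this section, can be expressed as a small lookup. A minimal sketch: the language sets come from the ICP1 and ICP2 lists above, while the fallback to English for a language the installed ICP does not include is an assumption extrapolated from Scenario 1. Function names are hypothetical.

```python
from typing import Optional

ICP1 = {"English", "French", "German", "Japanese", "Spanish"}
ICP2 = ICP1 | {
    "Chinese (Simplified)", "Chinese (Traditional)", "Czech", "Danish", "Dutch",
    "Finnish", "Greek", "Hungarian", "Italian", "Korean", "Norwegian", "Polish",
    "Portuguese", "Portuguese (Brazil)", "Russian", "Swedish", "Turkish",
}
ICP_LANGUAGES = {None: {"English"}, "ICP1": ICP1, "ICP2": ICP2}


def dialog_language(installed_icp: Optional[str], client_language: str) -> str:
    """Language of ConfigMgr client dialog boxes on native-language or MUI clients."""
    supported = ICP_LANGUAGES[installed_icp]
    return client_language if client_language in supported else "English"


def minimum_icp(languages: set) -> Optional[str]:
    """Smallest ICP covering every language in the environment (None = no ICP)."""
    for icp in (None, "ICP1", "ICP2"):
        if languages <= ICP_LANGUAGES[icp]:
            return icp
    raise ValueError("not covered by any ICP: " + ", ".join(sorted(languages - ICP2)))


print(dialog_language("ICP1", "German"), dialog_language("ICP1", "Korean"))
print(minimum_icp({"English", "French", "Korean"}))
```

Running minimum_icp over the client OS languages found in inventory gives a quick first answer to which ICP download your hierarchy needs.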

Take special care when deploying ICPs to ConfigMgr hierarchies. Always read the release notes and product documentation, and test thoroughly in a lab and pilot environment.

You will want to establish a lab environment and implement ConfigMgr in a completely isolated lab scenario. Labs deliver a large return on investment (ROI) to the organizations that use them. A lab lets IT administrators work with, experiment with, and learn the new technology, configurations, and scenarios needed in production, while mitigating risk by isolating the lab from systems whose outage could cause a loss of revenue or service. In short, a lab should mirror the production environment as closely as possible, carry no risk, and be easy to reset so you can quickly repeat a process or test a given scenario.

A lab provides several options that build on each other to help ensure the success of the ConfigMgr implementation. In a lab, you can implement ConfigMgr without interference from network devices such as firewalls, routers, intrusion detection systems (IDS), and intrusion prevention systems (IPS). If resources allow, you can scale the solution in the lab to validate the expected performance and behavior of the design destined for production.