Lots of Data (Vectors)

The Intel® Xeon Phi™ coprocessor is designed with strong support for vector level parallelism with features such as 512-bit vector math operations, hardware prefetching, software prefetch instructions, caches, and high aggregate memory bandwidth. This rich support in hardware coupled with numerous software options may seem overwhelming at first glance, but the richness available offers many solutions for diverse needs. Processors and coprocessors benefit from vectorization. Exposing opportunities to use vector instructions for vector parallel portions of algorithms is the first programming effort. Even then, the layout of data, alignment of data, and the effectiveness of prefetching into caches can affect vectorization. This chapter covers the rich set of options available for processors and Intel Xeon Phi coprocessors with little specific Intel Xeon Phi knowledge required.

The best software options are using functions in the Intel® Math Kernel Library (MKL), or programming using the vector support from any of SIMD directives, prefetching, or Intel® Cilk™ Plus. Alternatives such as intrinsics and compiler auto-vectorization can deliver excellent performance results, but the limitations in portability and future maintenance can be substantial. Altogether, the methods to achieve vector performance on Intel Xeon Phi coprocessors are plentiful enough to help you use this important capability. In the first chapter we explained how you should aim to have a high usage of vector instructions on Intel Xeon Phi coprocessors. These vector instructions are critical to keeping the coprocessor busy.

We break down our discussion in this chapter as follows: we start by explaining why vectorization matters, and then we give an overview of five approaches to vectorization. We introduce a six-step methodology for looking for important and easy places where a few changes might improve vectorization. The rest of the chapter then walks through the key topics for writing vectorizable code:

• Streaming through caches: data layout, alignment, prefetching, streaming stores

• Compiler tips, options, and directives

Why vectorize?

Performance! Full use of the vector instructions means being able to do 16 single-precision or 8 double-precision mathematical operations at once instead of one. The performance boost that offers is substantial, and is a key to the performance we should expect from an Intel Xeon Phi coprocessor. Until a couple of years ago, with SSE, processors could only offer four single-precision or two double-precision operations per instruction. The advent of AVX has made that eight and four, respectively, but that is still half of what is found in Intel Xeon Phi coprocessors.
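The lane counts quoted above follow directly from dividing the register width by the element size. As a minimal sketch of that arithmetic (the function name `vector_lanes` is ours, purely for illustration):

```c
#include <stddef.h>

/* Number of elements of a given size that fit in one vector register.
   register_bits is 128 for SSE, 256 for AVX, and 512 for the
   Intel Xeon Phi coprocessor. */
size_t vector_lanes(size_t register_bits, size_t element_bytes)
{
    return register_bits / (8 * element_bytes);
}
```

With 4-byte floats and 8-byte doubles, `vector_lanes(512, sizeof(float))` gives 16 and `vector_lanes(512, sizeof(double))` gives 8, matching the figures in the text.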

How to vectorize

Effective vectorization comes from a combination of efficient data movement and recognition of vectorization opportunities in the program itself. Many things get in the way of vectorization: poor data layout, C/C++ language limitations, required compiler conservatism, and poor program structure. We’ll start with the five approaches to vectorization that should be of most interest, then look at a six-step method that may help you, and finally we’ll review the multiple obstacles and solutions such as data layout and compiler options, in order to vectorize your code! Table 5.1 summarizes techniques for achieving vectorization.

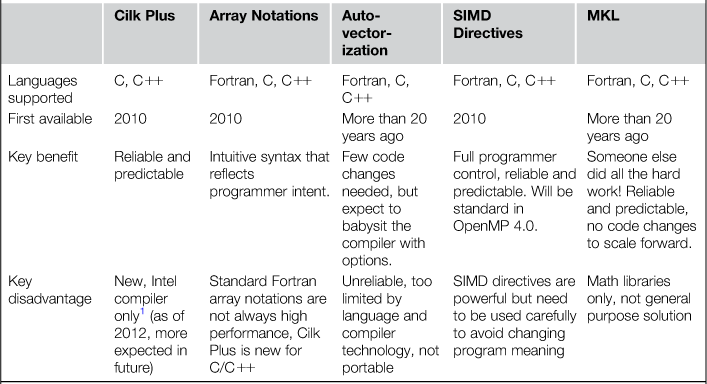

Table 5.1

Techniques to Achieve Vectorization

1 There has been work on the gcc compiler in an experimental branch to support this specification too.

Five approaches to achieving vectorization

Vectorization occurs when code makes use of vector instructions effectively. We encourage finding a method that has the compiler generate the instructions as opposed to manually writing in assembly code or with explicit intrinsics. The compiler can do everything automatically for a very small number of examples, but usually does much better with some help from programmers to overcome limitations in the programming language or algorithm implementation. As listed in Table 5.1, five approaches to consider are:

• Math library. See Chapter 11. The routines in the Intel Math Kernel Library (Intel MKL) have been tuned to use vectorization wherever possible.

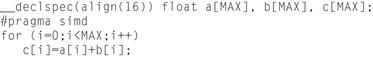

• Auto vectorization. Count on the compiler to figure it all out. This works for only the simplest loops, and usually only with a little help overcoming programming language limitations (for instance, using the -ansi-alias compiler option). Figure 5.1 shows the C code for a simple add of two vectors (illustrated later in Figure 5.6). We have inserted an alignment directive, which is important. We saw alignment directives in Chapters 2 through 4 for the same reason. There are additional tips for compiler vectorization in an upcoming section, General Compiler Tips and Comments for Vectorization, in this chapter.

Figure 5.1 Vector Addition Using Standard C, Similar Programming Can Be Done in Fortran.

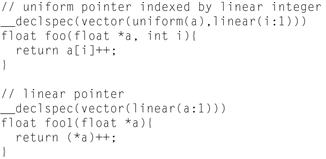

• Directives/pragmas to assist or require vectorization. See Figure 5.2. The SIMD directives (expected to be standard in OpenMP 4.0 in 2013, probably with slightly different syntax) give simple and effective controls to mandate vectorization. These are much more powerful than “IVDEP” directives that did not mandate vectorization. The catch in using these directives is that the vectorization of arbitrary loops is generally unsafe or will change the meaning of the program. Use of these directives has been very popular, but requires a firm understanding of their meaning and their dangers. SIMD directives are covered in an upcoming section, SIMD Directives, in this chapter.

Figure 5.2 Vector Addition Using Standard C, Similar Programming Can Be Done in Fortran.

• Array notations. See Figure 5.3 and Figure 5.4. Array notations convey programmer intent in an intuitive notation that reflects the array operations within an algorithm. The concise semantics of array notations match vector execution, thereby naturally avoiding unintended barriers to vectorization. Fortran has array notations in the standard, whereas array notations in C/C++ are extensions supported by compilers with support for Cilk Plus.

Figure 5.3 Vector Addition Using Cilk Plus Array Notation, Similar Programming Can Be Done in Fortran.

Figure 5.4 Vector Addition Using Cilk Plus Array Notation, with “Short Vector” Syntax, Similar Programming Can Be Done in Fortran.

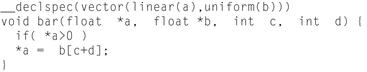

• Elemental functions. See Figure 5.5. Elemental functions are very useful when a program has an existing function that does one operation at a time that could be done in parallel with vector operations. This elemental function capability of Cilk Plus allows programs to maintain their current modularity but benefit from vectorization. Another advantage is that the simple code can be inlined but more complex operations may be left as functions for efficiency.

Figure 5.5 Vector Addition Using a Cilk Plus Elemental Function, No Fortran Equivalent Exists.
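To make the compiler-driven approaches concrete, here is a minimal sketch of the same vector addition written three ways. It is our own illustration and not a reproduction of the figures. It assumes the OpenMP 4.0 spellings (`#pragma omp simd` and `#pragma omp declare simd`) as portable stand-ins for the Intel SIMD directive and the Cilk Plus elemental function, and all function names are hypothetical. Compilers built without OpenMP SIMD support simply ignore the pragmas.

```c
#include <stddef.h>

/* Auto-vectorization: restrict promises the compiler that the arrays do
   not overlap, which removes a common language barrier to vectorization. */
void add_auto(float *restrict c, const float *restrict a,
              const float *restrict b, int n)
{
    for (int i = 0; i < n; i++)
        c[i] = a[i] + b[i];
}

/* SIMD directive: asks the compiler to vectorize this loop. This is only
   correct because every iteration is independent of the others. */
void add_simd(float *c, const float *a, const float *b, int n)
{
    #pragma omp simd
    for (int i = 0; i < n; i++)
        c[i] = a[i] + b[i];
}

/* Elemental-style function: written for one element at a time, but
   declared so the compiler may also generate a vector version of it. */
#pragma omp declare simd
float add_one(float x, float y)
{
    return x + y;
}

void add_elemental(float *c, const float *a, const float *b, int n)
{
    #pragma omp simd
    for (int i = 0; i < n; i++)
        c[i] = add_one(a[i], b[i]);
}
```

All three produce the same result. They differ in how much the programmer promises to the compiler, and therefore in how much responsibility for correctness moves from the compiler to the programmer.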

Six step vectorization methodology

Intel has published an interesting article suggesting a six-step process for vectorizing an application. It is not specific to Intel Xeon Phi coprocessors, but rather a general methodology designed for processors that is equally appropriate for Intel Xeon Phi coprocessors. It is neatly documented in an online Vectorization Toolkit that includes links to additional resources for each step. The URL is given at the end of this chapter. This section is a brief overview suitable to give a taste of some interesting tools Intel has to help evaluate and guide vectorization work.

This approach is very useful in cases where incremental work can yield strong results. Scientific codes that have successfully used vector supercomputers previously, or made use of SIMD instructions such as SSE or AVX, are easily the best candidates for this approach. This six-step methodology is no panacea because it can completely miss a bigger picture of overall algorithm redesign when that might be most appropriate. Parallelism may not be easily exposed. An effective parallel program may require a more holistic approach involving restructuring (refactoring) of the algorithm and data layout to get significant gains. Whether parallelism is easily exposed, or with great difficulty, it is wise to start by looking for the easiest opportunities to expose. These six steps help explain how to look for the most accessible and easiest opportunities.

Step 1. Measure baseline release build performance

We should start with a baseline for performance so we know if changes to introduce vectorization are effective. In addition, setting a goal can be useful at this point so we can celebrate later when we achieve it. A release build should be used instead of a debug build. A release build will contain all the optimizations for our final application and may alter the hotspots or even the code that is executed. For instance, a release build may optimize away a loop in a hotspot that otherwise would be a candidate for vectorization. Using a debug build would be a mistake when working to optimize. A release build is the default in the Intel Compiler. We have to specifically turn off optimizations by doing a DEBUG build on Windows (or using the /Od switch) or using the -O0 switch on Linux or OS X. If using the Intel Compiler, ensure you are using optimization levels 2 or 3 (-O2 or -O3) to enable the auto-vectorizer.
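A baseline can be as simple as timing the kernel of interest several times in a release build and keeping the best result. The following is a minimal sketch under our own assumptions: it uses the POSIX `clock_gettime` call, and the names `now_seconds`, `best_time`, and `sample_kernel` are hypothetical placeholders for whatever the application actually runs.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 199309L   /* expose clock_gettime in strict modes */
#endif
#include <time.h>

/* Wall-clock seconds read from a monotonic clock. */
double now_seconds(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec + (double)ts.tv_nsec * 1e-9;
}

/* Run kernel() `repeats` times and return the smallest elapsed time.
   Taking the minimum filters out noise from other system activity.
   Returns -1.0 if repeats is not positive. */
double best_time(void (*kernel)(void), int repeats)
{
    double best = -1.0;
    for (int r = 0; r < repeats; r++) {
        double t0 = now_seconds();
        kernel();
        double dt = now_seconds() - t0;
        if (best < 0.0 || dt < best)
            best = dt;
    }
    return best;
}

/* Stand-in for an application hotspot. The volatile sink keeps the
   optimizer from deleting the loop in a release build. */
volatile double sample_sink;

void sample_kernel(void)
{
    double s = 0.0;
    for (int i = 0; i < 1000000; i++)
        s += i * 0.5;
    sample_sink = s;
}
```

Calling `best_time(sample_kernel, 5)` before and after each vectorization change gives a number to compare against the goal set in this step.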

Step 2. Determine hotspots using Intel® VTune™ Amplifier XE

We can use Intel® VTune™ Amplifier XE, Intel's performance profiler, to find the most time-consuming functions in our application. The Hotspots analysis type is recommended, although Lightweight Hotspots would work as well (it profiles the whole system as opposed to just our application).

Identifying which areas of the code are taking the most time will allow us to focus on optimization efforts in the areas where performance improvements will have the most effect. Generally we want to focus on only the top few hotspots, or functions taking at least 10 percent of the application’s total time. Make note of the hotspots we want to focus on for the next step.

Step 3. Determine loop candidates using Intel Compiler vec-report

The vectorization report (or vec-report) of the Intel Compiler can tell us whether or not each loop in our code was vectorized. We should ensure that we are using compiler optimization level 2 or 3 (-O2 or -O3) to enable the auto-vectorizer, then run the vec-report and look at the output for the hotspots we determined in Step 2. If there are loops in the hotspots that did not vectorize, we can check whether they have math, data processing, or string calculations on data in parallel (for instance in an array). If they do, they might benefit from vectorization. Continue to Step 4 if any candidates are found. To run the vec-report, use the -vec-report2 or /Qvec-report2 option. The vec-report option, when used with offloading, can be easier to read if the reports for the host and the coprocessor are not interspersed. The section “Vec-report Option Used with Offloads” in Chapter 7 explains how to do this.

Step 4. Get advice using the Intel Compiler GAP report and toolkit resources

Run the Intel Compiler Guided Auto-parallelization (or GAP) report to see suggestions from the compiler on how to vectorize the loop candidates from Step 3. (Note: Intel named it guided auto-parallelization because it helps with both task and data (vector) parallelism; vectorization is really just a special type of parallel processing.) Examine the advice and refer to additional toolkit resources as needed. Run the GAP report using the -guide or /Qguide options for the Intel Compiler.

Step 5. Implement GAP advice and other suggestions (such as using elemental functions and/or array notations)

Now that we know the GAP report suggestions for the loop, it is time to implement them if possible. The report may suggest making a code change. Make sure the change would be “safe” to do. In other words, we must make sure the change does not affect the semantics or safety of the loop. The tool can definitely suggest changes that will yield vectorization but will change the program and get the wrong answer (but it will be faster!). One way to ensure that the loop has no dependencies that may be affected is to consider if executing the loop in backwards order would change the results. Another is to think about the calculations in the loop being in a scrambled order. If the results would be changed, the loop has dependencies and vectorization would not be “safe.” We may still be able to vectorize by eliminating dependencies in this case. We could modify the source to give additional information to the compiler or optimize a loop for better vectorization. At this point we may introduce some of the high-level constructs provided by Cilk Plus or Fortran to better expose the vector parallelism.
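The backwards-order test described above can be tried directly. The sketch below is our own illustration with hypothetical function names: the first pair of loops has independent iterations and gives the same answer in either direction, while the second pair carries a dependence from one iteration to the next and does not.

```c
/* Independent iterations: each a[i] depends only on b[i]. */
void scale_forward(float *a, const float *b, int n)
{
    for (int i = 0; i < n; i++)
        a[i] = 2.0f * b[i];
}

void scale_backward(float *a, const float *b, int n)
{
    for (int i = n - 1; i >= 0; i--)
        a[i] = 2.0f * b[i];
}

/* Loop-carried dependence: a[i] reads a[i-1], which the previous
   iteration has just written (a running sum). */
void prefix_forward(float *a, int n)
{
    for (int i = 1; i < n; i++)
        a[i] += a[i - 1];
}

/* Same body in reverse order: a[i-1] has NOT been updated yet when
   a[i] is computed, so the result differs from prefix_forward. */
void prefix_backward(float *a, int n)
{
    for (int i = n - 1; i >= 1; i--)
        a[i] += a[i - 1];
}
```

Starting from {1, 2, 3, 4}, `prefix_forward` yields {1, 3, 6, 10} while `prefix_backward` yields {1, 3, 5, 7}. That mismatch is the signal that forcing vectorization on this loop as written would not be safe.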

Step 6. Repeat!

Iterate through the process as needed until performance is achieved or there are no good candidates left in the identified hotspots. Please bear in mind that this six-step process does not consider opportunities that may be possible if algorithm and data restructuring are considered. This six-step approach does not attempt to identify such opportunities.

Streaming through caches: data layout, alignment, prefetching, and so on

In order for vectorization to occur efficiently, data needs to flow to and from the vector instructions without excessive overhead. Efficiency in data movement depends on data layout, alignment, prefetching and efficient store operations. Some investigation into the code produced by the compiler can be done by anyone, and is discussed in a later section in this chapter titled “Looking at What the Compiler Created.”

Why data layout affects vectorization performance

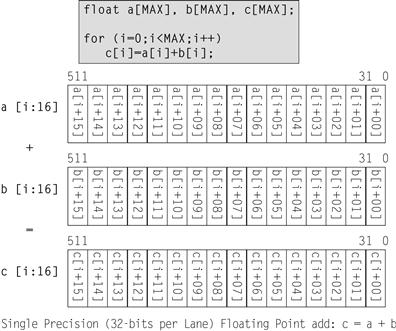

Vector parallelism comes from performing the same operation on multiple pairs (or triplets with FMA) of data elements simultaneously. Figure 5.6 illustrates the concept using the same vector registers we first showed in Figure 2.1. Figure 5.6 is not particular to Intel Xeon Phi coprocessors, other than the exact width of the registers. In fact, the optimizations discussed in the chapter are essentially the same as we would do for any modern microprocessors. The Intel Xeon Phi coprocessor does offer more vector level parallelism by having wider registers and more aggregate memory bandwidth, but with the correct programming methods an application can be written to map to processors or coprocessors through use of Intel MKL or the compiler without requiring different approaches in programming.

Figure 5.6 Vector Addition.

Figure 5.6 illustrates a vector addition of two registers, each holding 16 single-precision floating-point values yielding 16 single precision sums. In general, this math is done by loading the two input registers from data in memory and then performing the addition operation. There are several issues that need to be understood and optimized to make this possible:

• Data layout in memory, aligned and packed. Sixteen values have to be collected into each input register. If the data is laid out in memory in the same linear order and aligned on a 512-bit (64-byte) boundary, then a simple high-performance vector load will suffice. If not, the first consideration should be whether the data could be laid out in that order and with that alignment. Data that is not packed and aligned optimally will require more instructions and cache or memory accesses to collect and organize in registers in order to use the vector operations. The extra instructions and data accesses reduce performance. The compilers offer alignment directives and command line options, covered later in this chapter, to force or specify alignment of variables.

− Fetch data from cache, not memory. Data eventually comes from memory, but at the time a vector load instruction is issued it is much faster if the data has already been fetched into the closest cache (level 1, known as the L1 cache). On an Intel Xeon Phi coprocessor data actually travels from memory to L2 cache to L1 cache. Optimally this can be done by a prefetch of data from memory to L2, later followed by a prefetch from L2 to L1 cache, and later by the load instruction from L1 cache. The prefetches can be initiated by hardware, or by software prefetches inserted automatically by the compiler or manually by the programmer. L1 prefetch instructions can bring data from memory to L1, but far fewer L1 prefetch operations than L2 prefetch operations can be outstanding at the same time. The compiler inserts prefetches automatically, there are directives to give the compiler hints, and there are _mm_prefetch intrinsics to do the prefetching manually. These are covered in a later section in this chapter.

− Data reuse. If a program is fetching data into the cache that will be accessed more than once, then those accesses should occur close together. This is called temporal locality of reference. Ensuring that data is reused quickly helps reduce the chance that data will be evicted before it is needed again—resulting in a new fetch. Rearranging a program to increase temporal reuse is often a rewarding optimization. Intel MKL (Chapter 11) uses blocking in the library routines, which is a big help. For code we write explicitly, the challenge of blocking generally falls to the programmer. The good news is that cache blocking has been studied for decades for processors, and Intel Xeon Phi coprocessor relies on the same programming techniques. Before we decide to curse caches, it is worth noting that caches exist to lower power consumption and increase performance. The fact that our programming style can align with the cache designs to maximize performance is the price we pay to have more performance and power efficiency than would be possible without caches.

− Streaming stores. If a program is writing data out that it will not use again, and that occupies memory linearly without gaps, then making sure streaming stores are being generated is important. Streaming stores increase cache utilization for data that matters by preventing the data being written from needlessly using up cache space.
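The data reuse point is easiest to see in code. The following is a minimal sketch of cache blocking applied to a matrix multiply, assuming square row-major matrices. The function names and the block size parameter `bs` are our own, and a real code would tune `bs` so that the blocks in use fit in cache.

```c
static int min_int(int x, int y)
{
    return x < y ? x : y;
}

/* Reference version: streams through all of b for every row of a. */
void matmul_naive(double *c, const double *a, const double *b, int n)
{
    for (int i = 0; i < n; i++)
        for (int j = 0; j < n; j++) {
            double s = 0.0;
            for (int k = 0; k < n; k++)
                s += a[i * n + k] * b[k * n + j];
            c[i * n + j] = s;
        }
}

/* Blocked version: works on bs-by-bs tiles so that a tile of b is
   reused many times while it is still resident in cache. */
void matmul_blocked(double *c, const double *a, const double *b,
                    int n, int bs)
{
    for (int i = 0; i < n * n; i++)
        c[i] = 0.0;

    for (int ii = 0; ii < n; ii += bs)
        for (int kk = 0; kk < n; kk += bs)
            for (int jj = 0; jj < n; jj += bs)
                for (int i = ii; i < min_int(ii + bs, n); i++)
                    for (int k = kk; k < min_int(kk + bs, n); k++) {
                        double aik = a[i * n + k];
                        /* Unit-stride inner loop over j: a good
                           candidate for vectorization. */
                        for (int j = jj; j < min_int(jj + bs, n); j++)
                            c[i * n + j] += aik * b[k * n + j];
                    }
}
```

Both versions perform the same arithmetic. Only the order of the accesses changes, which is why blocking is a data movement optimization and not an algorithmic one.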

Data alignment

If poor vectorization is detected via compiler vectorization report or assembler inspection, we may decide to improve data alignment to increase vectorization opportunities. For alignment, what is important is often the relative alignment of one array compared to another, or of one row of a matrix compared to the next. The compiler can often compensate for an overall offset in absolute alignment. Though of course, absolute alignment of everything is one way of getting relative alignment. For matrices, we may want to pad the row length (or column length, for Fortran) to be a multiple of the alignment factor. For Intel Xeon Phi coprocessors, alignment to 64-byte boundaries of all data (usually arrays) is very important in order to reach maximum performance. Aligned heap memory allocation can be achieved with calls to:

void* _mm_malloc (int size, int base)

Alignment assertion align(base) can be used on statically allocated variables:

__attribute__((align(64))) float temp[SIZE]; /* array temp: 64byte aligned */

or

__declspec(align(64)) float temp[SIZE]; /* array temp: 64byte aligned */

Note: The Intel compilers accept either the __attribute__() or the __declspec() spelling for the alignment directives. The __attribute__() spelling is associated with gcc and Linux generally, whereas __declspec() originated with Microsoft compilers and Windows. The Intel compiler accepts either spelling on Linux, Windows, or Mac OS X systems, so source code can be used on any of them without change.

The equivalent Fortran directive is

!DIR$ ATTRIBUTES ALIGN: base :: variable

Alignment assertion __assume_aligned(pointer, base) can be used on pointers:

__assume_aligned(ptr, 64); /* ptr is 64B aligned */

Fortran programmers would use the following to assert that the address of A(1) is 64B aligned:

!DIR$ ASSUME_ALIGNED A(1): 64

Additionally, there are compiler command line options (“align”) to specify alignment of data. The Fortran compiler supports variations to force alignment of arrays and COMMON blocks. C/C++ programs generally use only the __attribute__() or __declspec() source code directives, whereas Fortran programmers often use the command line options as an alternative to the directives.
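As a minimal sketch of how aligned allocation and row padding fit together for a matrix of floats, consider the following. The helper names (`padded_row_length`, `alloc_matrix`, `is_aligned`) are ours, and the example assumes an x86 compiler that provides `_mm_malloc` and `_mm_free` through `<xmmintrin.h>`.

```c
#include <stddef.h>
#include <stdint.h>
#include <xmmintrin.h>   /* _mm_malloc, _mm_free */

#define ALIGNMENT 64     /* bytes: one 512-bit vector, one cache line */

/* Round a row length (in floats) up so that every row occupies a whole
   number of 64-byte lines. */
size_t padded_row_length(size_t cols)
{
    size_t per_line = ALIGNMENT / sizeof(float);
    return (cols + per_line - 1) / per_line * per_line;
}

/* Allocate a rows-by-cols matrix whose base address and every row start
   are 64-byte aligned. The padded row length is returned in *stride.
   Release the memory with _mm_free. */
float *alloc_matrix(size_t rows, size_t cols, size_t *stride)
{
    *stride = padded_row_length(cols);
    return (float *)_mm_malloc(rows * *stride * sizeof(float), ALIGNMENT);
}

/* True if p sits on a 64-byte boundary. */
int is_aligned(const void *p)
{
    return ((uintptr_t)p % ALIGNMENT) == 0;
}
```

Element (r, c) is then addressed as `m[r * stride + c]`. Because the stride is a multiple of 16 floats, aligning the base address gives every row the same relative alignment, which is the property the text identifies as the important one.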

Some examples of alignment directives (shown in Fortran) include:

!dir$ attributes align : 32 :: A, B

• Directs compiler to align the start of objects A, B to 32 bytes

• Does not work for objects inside common blocks or derived types with sequence attribute; can be used to align start of common block itself

!dir$ assume_aligned A:32, B:32

• Most obvious use is for an incoming subroutine argument

• Cannot be used for global objects declared elsewhere or sequential objects (risk of conflicts) such as within common blocks or within modules or within structures

!dir$ vector aligned

• Invites the compiler to vectorize a loop using aligned loads for all arrays, ignoring efficiency heuristics, provided that it is safe to do so.

Prefetching

The best performing applications will have well laid out data ready to be streamed to the math units to be processed. Moving the data from its tidy layout in memory to the math units is done using prefetching to avoid delays that occur as a mathematical operation has to wait for input data.

Prefetching is an important topic to consider regardless of what coding method we use to write an algorithm. To avoid having a vector load operation request data that is not in cache, we can make sure prefetch operations are happening. Any time a load requests data not in the L1 cache, a delay occurs to fetch the data from an L2 cache. If data is not in any L2 cache, an even longer delay occurs to fetch data from memory. The lengths of these delays are nontrivial, and avoiding the delays can greatly enhance the performance of an application. Optimized libraries such as Intel MKL will already utilize prefetches, so we will focus on controlling prefetches for code we are writing.

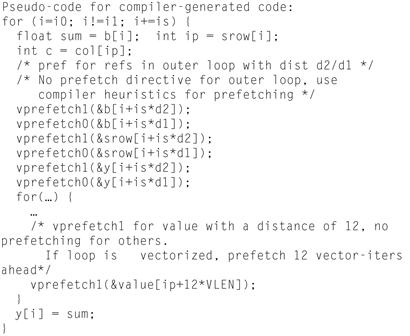

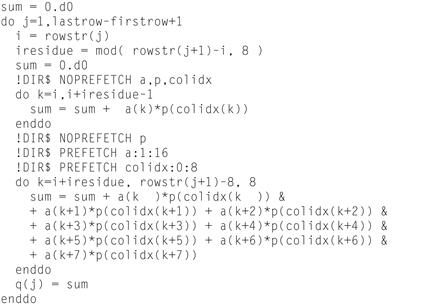

There are four sources of prefetches: automatic prefetching by the hardware to L2 caches (see Chapter 8), automatic software prefetch instructions by the compiler to L1 cache, compiler-generated prefetches (which were assisted by information from the programmer), and manual prefetch instructions by the programmer with both L2 and L1 fetch capabilities. The hardware prefetching is automatic and will automatically give way to software prefetches because they have higher priority. Figure 5.7 shows usage of prefetch directives. Figure 5.8 demonstrates the rough equivalent using manual prefetches. It is easy to see that pragmas are far simpler to use. We recommend starting by working with the compiler, and moving to coding of manual prefetches only if required.

Figure 5.7 Examples of Prefetching Directives.

Figure 5.8 Roughly the “Manual Prefetches” that Are Generated from the Example in Figure 5.7 by the Compiler.

Compiler prefetches

When using the Intel compiler with normal or elevated optimization levels (-O2 or greater), prefetching is automatically set to opt-prefetch=3. Compiler prefetching is enabled with the -opt-prefetch=n option with n being a value from 1 to 4 on Linux (Windows: /Qopt-prefetch:n). The higher the value, the more aggressive the compiler is with issuing prefetch instructions. If an algorithm is well blocked to fit in L2 cache, prefetching is less critical but generally still useful for Intel Xeon Phi coprocessors.

In general, we recommend using the compiler to issue prefetches. If this is not perfect, there are a number of ways to give the compiler additional hints or refinements but still have the compiler generate the prefetches. We consider manual insertion of prefetch directives to be a last resort if compiler prefetching cannot be utilized for a particular need.

The first tuning to try is to use different second-level prefetch (vprefetch0) distances. We recommend trying n=1,2,4,8 with -mP2OPT_hlo_use_const_second_pref_dist=n. In this case, n=2 means use a prefetch distance of two vectorized iterations ahead (if the loop is vectorized). Instead of the compiler option, prefetch pragmas can be utilized in the source code.

Compiler prefetch controls (prefetching via pragmas/directives)

Instead of relying only on the compiler option, prefetch pragmas can be utilized in the source code. The compiler can be given prefetch directives to modify its default behavior. Passing additional information through directives can improve the performance of an application. The formats for C/C++ and Fortran are, respectively:

#pragma prefetch var:hint:distance

!DIR$ prefetch var:hint:distance

The hint value can be 0 (L1 cache [and L2 due to inclusion]) or 1 (L2 cache, not L1). Figure 5.7 shows usage of prefetch directives and Figure 5.8 shows the roughly equivalent manual prefetches that we get from the compiler automatically with the simpler Figure 5.7 example syntax. Figures 5.9, 5.10a, 5.10b, and 5.11 give additional usage examples to examine.

Figure 5.9 A Prefetch Directive Example in Fortran.

Figure 5.10a Another Prefetch Directive Example in Fortran.

Figure 5.10b A Prefetch Directive Example in C.

Figure 5.11 C Prefetch Examples Illustrating Interoperation with Vector Intrinsics.

Manual prefetches (using vprefetch0 and vprefetch1)

Figures 5.12 and 5.13 show examples of using manual prefetch intrinsics. We recommend doing this only if the compiler cannot generate adequate prefetches even with the hints covered in the prior section. These intrinsics work on both processors and coprocessors and are not specific to Intel Xeon Phi coprocessors. The intrinsics for Fortran and C/C++, respectively, are:

Figure 5.12 C Prefetch Intrinsics Example, Manual Prefetching.

Figure 5.13 Fortran Prefetch Intrinsics Example, Manual Prefetching.

MM_PREFETCH (address [, hint])

void _mm_prefetch(char const*address, int hint)

The intrinsic issues a software prefetch to load one cache line of data located at address. The value hint specifies the type of prefetch operation. Values of _MM_HINT_T0 or 0 (for L1 prefetches) and _MM_HINT_T1 or 1 (for L2 prefetches) are most common and are the interesting ones for use with Intel Xeon Phi coprocessors.

If we do decide to insert prefetches manually, we will want to disable automatic compiler insertion of prefetch instructions. Doing both will generally create competition for the limited prefetch capabilities of the hardware, and will result in reduced performance. If we are going through the effort to control prefetching manually, we should have already determined the compiler prefetching was not optimal. This generally happens for more complex data processing than the compiler can determine automatically.

Compiler prefetching is disabled with the -opt-prefetch=0 or -no-opt-prefetch option on Linux (Windows: /Qopt-prefetch:0 or /Qopt-prefetch-).
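For illustration, here is a minimal sketch of manual prefetching with `_mm_prefetch` in a simple reduction loop. It is not the code from Figure 5.12. The function name, the two distance parameters, and the choice to issue one prefetch pair per 64-byte line are our own assumptions, and good distances have to be found by experiment on the target hardware.

```c
#include <stddef.h>
#include <xmmintrin.h>   /* _mm_prefetch, _MM_HINT_T0, _MM_HINT_T1 */

#define FLOATS_PER_LINE 16   /* 64-byte cache line / 4-byte float */

/* Sum an array while prefetching ahead of the current position.
   l2_dist and l1_dist are distances in elements; l2_dist should be the
   larger one so data reaches L2 first and is pulled into L1 later. */
float sum_with_prefetch(const float *a, size_t n,
                        size_t l1_dist, size_t l2_dist)
{
    float sum = 0.0f;
    for (size_t i = 0; i < n; i++) {
        /* One prefetch per cache line is enough, so only issue them
           when i starts a new group of 16 floats. */
        if (i % FLOATS_PER_LINE == 0) {
            if (i + l2_dist < n)   /* stay inside the array */
                _mm_prefetch((const char *)&a[i + l2_dist], _MM_HINT_T1);
            if (i + l1_dist < n)
                _mm_prefetch((const char *)&a[i + l1_dist], _MM_HINT_T0);
        }
        sum += a[i];
    }
    return sum;
}
```

The prefetches never change the computed result, only when the data arrives in cache. That makes it safe to experiment with distances, but it also means a poor choice fails silently as lost performance.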

Streaming stores

Streaming stores are a special consideration in vectorization. Streaming stores are instructions especially designed for a continuous stream of output data that fills in a section of memory with no gaps between data items. They are supported by many processors, including Intel Xeon processors, as well as Intel Xeon Phi coprocessors. An interesting property of an output stream is that the result in memory does not require knowledge of the prior memory content. This means that the original data does not need to be fetched from memory. This is the problem that streaming stores solve—the ability to output a data stream but not use memory bandwidth to read data needlessly.

Having the compiler generate streaming stores can improve performance by not having the processor or coprocessor fetch cache lines from memory that will be completely overwritten. This method stores data with instructions that use a nontemporal buffer, which minimizes memory hierarchy pollution. This optimization helps with memory bandwidth utilization and is present for processors and Intel Xeon Phi coprocessors and may improve performance for both.

Use of the compiler option -opt-streaming-stores keyword (Linux and OS X) or /Qopt-streaming-stores:keyword (Windows) controls the compiler's generation of streaming stores. The keyword can be always, never, or auto. The default is auto. It is useful to know how to help the compiler understand an application enough to use streaming stores effectively.

When streaming stores will be generated for Intel® Xeon Phi™ coprocessors

The compiler is smart enough not to generate prefetches for lines for the streaming store, so as to avoid negating the value of the streaming stores. The compiler will also inject L2 cache line eviction instructions when using streaming stores. The Intel compiler generates streaming store instructions for an Intel Xeon Phi coprocessor only when:

1. It is able to vectorize the loop and generate an aligned unit-strided vector unmasked store:

• If the store accesses in the loop are aligned properly, user can convey alignment information using pragmas/clauses

− Suggestion: Use vector aligned directive before loop to convey alignment of all memory references inside loop including the stores.

• In some cases, even when there is no directive to align the store-access, the compiler may align the store-access at runtime using a dynamic peel-loop based on its own heuristics.

• Based on alignment analysis, compiler could prove that the store accesses are aligned (at 64 bytes)

• Store has to be aligned and be writing to a full cache line (vstore – 64 bytes, no masks)

− Suggestion: it is the responsibility of the programmer to align the data appropriately at allocation time using align clauses, aligned_malloc, -align array64byte option on Fortran, and so on.

2. Vector-stores are classified as nontemporal using one of:

• We have specified a nontemporal pragma on the loop to mark the vector-stores as streaming

− Suggestion: Use vector nontemporal directive before loop to mark aligned stores, or communicate nontemporal-property of store using a vector nontemporal A directive where an assignment into array A is the store inside the loop.

• We have specified the compiler option -opt-streaming-stores always to force marking all aligned vector-stores as nontemporal.

− This has the implicit effect of adding the nontemporal pragma to all loops that are vectorized by the compiler in the compilation scope

− Using this option has few negative consequences even if used incorrectly for Intel Xeon Phi coprocessor since the data remains in the L2 cache (just not in the L1 cache)—so this option can be used if most aligned vector-stores are nontemporal. However, using this option on processors for cases where some accesses actually are temporal can cause significant performance losses since the streaming-store instructions on Intel Xeon bypass the cache altogether.
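The two conditions above can be combined in a loop such as the following sketch. It is an assumption-laden illustration, not compiler output: the function name is hypothetical, the Intel-specific directives are guarded by `__INTEL_COMPILER` so that other compilers still build the plain loop, and the caller is assumed to pass 64-byte aligned arrays (for example, from `_mm_malloc`) whenever those directives are active.

```c
/* Write out[i] = s * in[i] for data that will not be read again soon.
   Under the Intel compiler, the caller MUST pass 64-byte aligned
   pointers, because the directives below assert that alignment. */
void scale_stream(float *restrict out, const float *restrict in,
                  float s, int n)
{
#ifdef __INTEL_COMPILER
    __assume_aligned(out, 64);     /* condition 1: aligned accesses   */
    __assume_aligned(in, 64);
    #pragma vector aligned         /* all references in loop aligned  */
    #pragma vector nontemporal     /* condition 2: mark stores as     */
                                   /* streaming (nontemporal)         */
#endif
    for (int i = 0; i < n; i++)
        out[i] = s * in[i];
}
```

The loop is unit-stride and unmasked, and it overwrites `out` completely, which is the pattern the conditions describe. Whether streaming stores are actually emitted still depends on the compiler version and options, so checking the generated code is worthwhile.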

Compiler generation of clevicts

On streaming operations, the use of clevicts instructions allows the compiler to tell the hardware to not fetch data that will entirely be discarded (overwritten). This effectively avoids wasted prefetching efforts. The compiler has heuristics that determine whether a store is streaming. Usually this heuristic will kick in only when the loop has a large number of iterations that is known at compile time. This is very limiting, so for applications where the calculation for the number of iterations is symbolic, there is a nontemporal pragma that allows us to mark streaming stores:

#pragma vector nontemporal

For processors, this pragma generates the nontemporal hints on stores. For Intel Xeon Phi coprocessors, the compiler generates clevicts instead when it knows the store addresses are aligned. This feature is controlled via the option:

-mGLOB_default_function_attrs="clevict_level=N"

where N=0, 1, 2 or 3 (default is 3)

Compiler tips

There are a number of tips we can offer on how to get the most of the ability for the compiler to help with vectorization.

Avoid manual loop unrolling

The Intel® Compiler can typically generate efficient vectorized code if a loop structure is not manually unrolled. Unrolling means duplicating the loop body as many times as needed to operate on data using full vectors. For single precision on Intel Xeon Phi coprocessors, this commonly means unrolling 16 times: the loop body does 16 iterations at once, and the loop itself advances by 16 per iteration of the new loop.

It is better to let the compiler do the unrolls, and we can control unrolling using #pragma unroll N (C/C++) or !DIR$ UNROLL N (Fortran). If N is specified, the optimizer unrolls the loop N times. If N is omitted, or if it is outside the allowed range, the optimizer picks the number of times to unroll the loop. Manual unrolling will generally interfere with the compiler optimizations enough to hurt performance.

To add to this, manual loop unrolling tends to tune a loop for a particular processor or architecture, making it less than optimal for some future port of the application. Generally, it is good advice to write code in the most readable, straightforward manner. This gives the compiler the best chance of optimizing a given loop structure. Here is a Fortran example where manual unrolling is done in the source:

m = MOD(N,4)

if ( m /= 0 ) THEN

do i = 1 , m

Dy(i) = Dy(i) + Da*Dx(i)

end do

end if

mp1 = m + 1

do i = mp1 , N , 4

Dy(i) = Dy(i) + Da*Dx(i)

Dy(i+1) = Dy(i+1) + Da*Dx(i+1)

Dy(i+2) = Dy(i+2) + Da*Dx(i+2)

Dy(i+3) = Dy(i+3) + Da*Dx(i+3)

end do

It is better to express this in the simple form of:

do i=1,N

Dy(i)=Dy(i) + Da*Dx(i)

end do

This allows the compiler to generate efficient vector-code for the entire computation and also improves code readability. Here is a C/C++ example where manual unrolling is done in the source:

double accu1 = 0, accu2 = 0, accu3 = 0, accu4 = 0;

double accu5 = 0, accu6 = 0, accu7 = 0, accu8 = 0;

for (i = 0; i < NUM; i += 8) {

accu1 = src1[i+0]*src2 + accu1;

accu2 = src1[i+1]*src2 + accu2;

accu3 = src1[i+2]*src2 + accu3;

accu4 = src1[i+3]*src2 + accu4;

accu5 = src1[i+4]*src2 + accu5;

accu6 = src1[i+5]*src2 + accu6;

accu7 = src1[i+6]*src2 + accu7;

accu8 = src1[i+7]*src2 + accu8;

}

accu = accu1 + accu2 + accu3 + accu4

+ accu5 + accu6 + accu7 + accu8;

It is better to express this in the simple form of:

double accu = 0;

for (i = 0; i < NUM; i++ ) {

accu = src1[i]*src2 + accu;

}
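One practical difference between the two forms is easy to miss: the manually unrolled loop only covers every element when the trip count is a multiple of the unroll factor, so correct hand-unrolled code needs an extra remainder loop. The sketch below illustrates this under stated assumptions: the function names are hypothetical, and the unroll factor is 4 rather than 8 only to keep the listing short.

```c
#include <stddef.h>

/* Simple form: one accumulator, correct for any trip count. */
static double accumulate_simple(const double *src1, double src2, size_t num) {
    double accu = 0;
    for (size_t i = 0; i < num; i++) {
        accu = src1[i] * src2 + accu;
    }
    return accu;
}

/* Manually unrolled form (4-way here for brevity). The main loop stops
   before the last partial group, and a remainder loop finishes the job. */
static double accumulate_unrolled(const double *src1, double src2, size_t num) {
    double accu1 = 0, accu2 = 0, accu3 = 0, accu4 = 0;
    size_t i = 0;
    for (; i + 4 <= num; i += 4) {
        accu1 = src1[i + 0] * src2 + accu1;
        accu2 = src1[i + 1] * src2 + accu2;
        accu3 = src1[i + 2] * src2 + accu3;
        accu4 = src1[i + 3] * src2 + accu4;
    }
    double accu = accu1 + accu2 + accu3 + accu4;
    for (; i < num; i++) {            /* remainder iterations */
        accu = src1[i] * src2 + accu;
    }
    return accu;
}
```

The simple form leaves both the unrolling and the remainder handling to the compiler. Note that the two forms add the terms in a different order, so for general floating-point data the results may differ in the last bits.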

Requirements for a loop to vectorize (Intel® Compiler)

Since a single iteration of a loop generally operates on one element of an array, but the use of vector instructions depends on operating on multiple elements of an array at once (see Figure 5.6), the “vectorization” of a loop essentially requires unrolling the loop so that it can take advantage of packed SIMD instructions to perform the same operation on multiple data elements in a single instruction.

The Intel compilers may be among the most capable and aggressive at vectorizing programs, but they still have limitations. In the most recent Intel compilers, vectorization is one of many optimizations enabled by default. Here is a list of requirements in order for a loop to potentially vectorize:

• If a loop is part of a loop nest, it must be the inner loop. Outer loops can be parallelized using OpenMP or autoparallelization (-parallel), but they cannot be vectorized unless the compiler is able either to fully unroll the inner loop, or to interchange the inner and outer loops. Additional high level loop transformations such as these may require -O3.

• The loop must contain straight-line code (a single basic block). There should be no jumps or branches, but masked assignments are allowed. A “masked assignment” simply means an assignment controlled by a conditional (such as an IF statement).

• The loop must be countable, which means that the number of iterations must be known before the loop starts to execute although it need not be known at compile time. Consequently, there must be no data-dependent exit conditions.

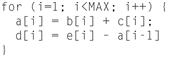

• There should be no backward loop-carried dependencies. For example, the loop must not require statement 2 of iteration 1 to be executed before statement 1 of iteration 2 for correct results. This allows consecutive iterations of the original loop to be executed simultaneously in a single iteration of the unrolled, vectorized loop. Figures 5.14 and 5.15 illustrate this requirement. The examples only make sense when thinking of doing multiple iterations at once due to vectorization. That is what would make Figure 5.15 illustrate a barrier to vectorization.

Figure 5.14 Vectorizable: a[i-1] Is Always Computed Before It Is Used.

Figure 5.15 Not Vectorizable: a[i-1] Might Be Needed Before It Has Been Computed (if Vectorized).

• There should be no special operators and no function or subroutine calls, unless these are inlined, either manually or automatically by the compiler. Intrinsic math functions such as sin(), log(), and fmax() are allowed, since the compiler runtime library contains vectorized versions of these functions. The following math functions may be vectorized by the Intel C/C++ compiler: sin, cos, tan, asin, acos, atan, log, log2, log10, exp, exp2, sinh, cosh, tanh, asinh, acosh, atanh, erf, erfc, erfinv, sqrt, cbrt, trunc, round, ceil, floor, fabs, fmin, fmax, pow, and atan2. The list may not be exhaustive. Single precision versions such as sinf may also vectorize. The Fortran equivalents, where available, should also vectorize.

Some additional advice on how to have a loop vectorize:

• Both reductions and vector assignments to arrays are allowed.

• Try to avoid mixing vectorizable data types in the same loop (except for integer arithmetic on array subscripts). Vectorization of type conversions may be either unsupported or inefficient. Support for the vectorization of loops containing mixed data types may be extended in a future version of the Intel compiler.

• Try to access contiguous memory locations. (So loop over the first array index in Fortran, or the last array index in C). While the compiler may sometimes be able to vectorize loops with indirect or non-unit stride memory addressing, the cost of gathering data from or scattering back to memory is often too great to make vectorization worthwhile.

• The ivdep pragma or directive may be used to advise the compiler that there are no loop-carried dependencies that would make vectorization unsafe.

• The vector always pragma or directive may be used to override the compiler’s heuristics that determine whether vectorization of a loop is likely to yield a performance benefit.

• To see whether a loop was or was not vectorized, and why, look at the vectorization report. This may be enabled by the command line switch /Qvec-report3 (Windows) or -vec-report3 (Linux or Mac OS X).

• The vec-report option, when used with offloading, can be easier to read if the reports for the host and the coprocessor are not interspersed. The section “Vec-report Option Used with Offloads” in Chapter 7 explains how to do this.
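As an illustration of the requirements and advice above, here is a small loop written to stay within them. It is only a sketch: the function name clamp_scale_sum is hypothetical, and whether a given compiler actually vectorizes it (possibly with a runtime overlap check, since the pointers are not declared independent) should be confirmed with the vectorization report.

```c
#include <stddef.h>

/* Hypothetical kernel that follows the rules listed above. */
static float clamp_scale_sum(const float *in, float *out, size_t n) {
    float sum = 0.0f;
    for (size_t i = 0; i < n; i++) {   /* countable: n is fixed before entry */
        float x = in[i];               /* unit-stride read, single data type */
        if (x < 0.0f) {
            x = 0.0f;                  /* masked assignment, no early exit   */
        }
        out[i] = 2.0f * x;             /* unit-stride vector assignment      */
        sum += out[i];                 /* reduction                          */
    }
    return sum;
}
```

Adding a break inside the loop, calling a function that cannot be inlined, or indexing in[] through a second array would each violate one of the listed requirements.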

Importance of inlining, interference with simple profiling

Because vectorization happens on innermost loops consisting of straight-line code (see the actual rules in the prior section), inlining of functions within the loop is critical. Turning off optimizations (the default is ON) will stop automatic inlining, and that will affect the ability of loops to vectorize if they contain function calls. Instrumenting for profiling using the -pg option will generally do the same, and stop optimization. Better profiling can be done using the less intrusive Intel VTune Amplifier XE (see Chapter 13). Because it requires no instrumentation that will defeat inlining, we can do profiling on the release version of an application. The -opt-report-phase ipo_inl option can show which functions are inlined.

Compiler options

There are many ways to share information that we know about our program that is not captured purely in the existing language. We selected a few of the most used and most effective compiler options to consider in order to boost the performance of an application:

• Use the ANSI aliasing option for C++ programs; this is not ON by default due to potential compatibility issues with older programs. This enables the compiler to do type-based disambiguation (it asserts that pointers and floats do not overlap). Without the flag, the compiler may assume that the count for the number of iterations is changing inside the loop if the upper bound is an object-field access. Obeying strict ANSI aliasing rules provides more room for optimization. Hence, it is highly recommended to enable it for ANSI-conforming code via -ansi-alias (Linux and OS X) or /Qansi-alias (Windows). This is already the default for the Intel Fortran Compiler. See an example coming up next in the section titled “Memory Disambiguation Inside Vector-Loops.”

• No aliasing of arguments: on Linux and Mac OS X the option -fargument-noalias acts in the same way as applying the keyword restrict to all pointers of all function parameters throughout a compilation unit. This option is not available on Windows.

• Use optimization reports to understand what the compiler is doing:

-opt-report-phase hlo -opt-report-phase hpo -opt-report 2

This lets us check whether a loop of interest is properly vectorized. The “Loop Vectorized” message is the first step. We can get extra information using -vec-report6.

• Use loop count directives to give hints to the compiler; this affects the prefetch distance calculation. For example, use #pragma loop count (200) before a loop.

• The restrict keyword is a feature of the C99 standard that can be useful to C/C++ programmers. It can be attributed to pointers to guarantee that no other pointer overlaps the referenced memory location. The Intel C++ compiler does not limit the keyword to C99: it is also available for C89 and even for C++, simply by enabling a dedicated option: -restrict (Linux and Mac OS X) or /Qrestrict (Windows).

• In order to allow vectorization to happen, we need to avoid converting 64-bit integers to/from floating point. Use 32-bit integers instead, preferably signed integers (the most efficient choice).

Memory disambiguation inside vector-loops

Consider vectorization for a simple loop:

void vectorize(float *a, float *b, float *c, float *d, int n) {

int i;

for (i=0; i<n; i++) {

a[i] = c[i] * d[i];

b[i] = a[i] + c[i] - d[i];

}

}

Here, the compiler has no idea as to where those four pointers are pointing. As programmers, we may know they point to totally independent locations. The compiler thinks differently. Unless the programmer explicitly tells the compiler that they point to independent locations, the compiler has to assume the worst case aliasing is happening. For example, c[1] and a[0] may be at the same address and thus the loop cannot be vectorized at all.

When the number of unknown pointers is very small, the compiler can generate a runtime check and generate optimized and unoptimized versions of the loops (with overhead in compile time, code size, and also runtime testing). Since the overhead grows quickly, that very small number has to be really small (like two), and even then we are paying the price for not telling the compiler that “pointers are independent.”
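To make the idea of a runtime check concrete, the following sketch writes out by hand roughly what such a two-version loop looks like for two pointers. It is an illustration of the concept, not the code the Intel compiler generates; the names ranges_disjoint and scale_checked are hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: returns 1 when the n-element float ranges that
   start at p and q share no bytes. Addresses are compared as integers. */
static int ranges_disjoint(const float *p, const float *q, size_t n) {
    uintptr_t pb = (uintptr_t)p;
    uintptr_t qb = (uintptr_t)q;
    uintptr_t len = (uintptr_t)(n * sizeof(float));
    return pb + len <= qb || qb + len <= pb;
}

/* Two versions of the same loop, selected by the runtime check. */
static void scale_checked(float *a, const float *c, float s, size_t n) {
    if (ranges_disjoint(a, c, n)) {
        /* Independent ranges: restrict tells the compiler it may vectorize. */
        float *restrict ra = a;
        const float *restrict rc = c;
        for (size_t i = 0; i < n; i++) {
            ra[i] = rc[i] * s;
        }
    } else {
        /* Possible overlap: keep the original element-by-element order. */
        for (size_t i = 0; i < n; i++) {
            a[i] = c[i] * s;
        }
    }
}
```

With four pointers, as in the vectorize function above, every pair would need its own test, which is why this approach stops scaling after a very small number of pointers.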

So, the better thing to do is to tell the compiler that “pointers are independent.” One way to do it is to use the C99 restrict pointer keyword. Even if we are not compiling with the C99 standard, we can use the -restrict (Linux) or /Qrestrict (Windows) flag to let the Intel compilers accept restrict as a keyword. In the following example, we tell the compiler that a isn’t aliased with anything else, and b isn’t aliased with anything else:

void vectorize(float *restrict a, float *restrict b, float *c, float *d, int n) {

int i;

for (i=0; i<n; i++) {

a[i] = c[i] * d[i];

b[i] = a[i] + c[i] - d[i];

}

}

Another way is to use the IVDEP pragma. The semantics of IVDEP are different from those of the restrict pointer, but it allows the compiler to eliminate some of the assumed dependencies, just enough to let the compiler think vectorization is safe.

void vectorize(float *a, float *b,

float *c, float *d, int n) {

int i;

#pragma ivdep

for (i=0; i<n; i++) {

a[i] = c[i] * d[i];

b[i] = a[i] + c[i] - d[i];

}

}

Compiler directives

When we work to get the key part of an algorithm (usually a loop) to vectorize, we may quickly realize that there are two barriers: (1) getting the code to be vectorizable, and (2) expressing the algorithm so the compiler accepts that it is vectorizable. The former is our responsibility as programmers. The nuances of programming languages complicate the latter so that even the most brilliant compiler cannot auto-vectorize most loops written with no hints at all. In general, the presence of pointers that are too flexible in the C/C++ languages tends to make this problem most significant in C/C++ code and less so for Fortran. Nevertheless, the challenge to completely specify our intent so that a compiler will vectorize is why we have directives. In a later section, we will review array notation and elemental functions as more elegant coding styles that can do much the same thing if we rewrite our code using a better notation. The directive is a bit more like a hammer: we use directives to force our current program to vectorize, assuming it should. Code transformations to make the code vectorizable are a prerequisite; the directive simply finishes reassuring the compiler so that it does the vectorization.

Traditionally, directives have been hints to the compiler to remove certain restrictions it may enforce. Assuming the concerns that blocked vectorization are removed, the compiler will vectorize the code. These offer safety, but can be less portable and less predictable, even from release to release, as the heuristics in a compiler shift and change. The VECTOR and IVDEP directives are examples: they give the compiler hints but still rely on the compiler checking and approving all concerns for which the hints do not apply.

The SIMD directives are a different approach; they force the compiler to vectorize and essentially place all the burden on the programmer to ensure correctness. Gone are the days of fighting the compiler? Perhaps, but the programmer has to do the work and ensure correctness. For many, it is a dream come true: no compiler to fight. For many others, it is difficult without the compiler to help verify correctness.

SIMD directives

The SIMD directives enforce vectorization of loops. SIMD directives are an important capability to understand for vectorization work. SIMD directives come in two forms for C/C++ and Fortran, respectively:

#pragma simd [clause[ [,] clause]…]

!DIR$ SIMD [clause[[,] clause]…]

Analogously to how cilk_for gives permission to parallelize a loop (see Chapter 6), but does not require it, marking a for loop with #pragma simd similarly gives a compiler permission to execute a loop with vectorization. Usually this vectorization will be performed in small chunks whose size will depend on the vector width of the machine. For example, writing:

extern float a[];

#pragma simd

for ( int i=0; i<1000; ++i ) {

a[i] = 2 * a[i+1];

}

grants the compiler permission to transform the code equivalently into:

extern float a[];

#pragma simd

for ( int i=0; i<1000; i+=4 ) {

float tmp[4];

tmp[0:4] = 2 * a[i+1:4];

a[i:4] = tmp[0:4];

}

The original loop in our example would not be legal to parallelize with cilk_for, because of the dependence between iterations. A #pragma simd is okay in the example because the chunked reads of locations still precede chunked writes of those locations. However, if the original loop body reversed the subscripts and assigned a[i+1] = 2 * a[i], then the chunked loop would not preserve the original semantics, because each iteration needs the value of the previous iteration.
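The difference between the two subscript orders can be checked by emulating the chunked execution in plain C: each chunk performs all of its reads into a temporary before any of its writes. This is a sketch for illustration only; the chunk width of 4 and all function names are assumptions, and n is assumed to be a multiple of CHUNK with the array holding n+1 elements.

```c
#include <stddef.h>

#define CHUNK 4  /* assumed vector width in elements, for illustration */

/* Original order of execution: a[i] = 2 * a[i+1]. */
static void forward_scalar(float *a, size_t n) {
    for (size_t i = 0; i < n; ++i) {
        a[i] = 2 * a[i + 1];
    }
}

/* Chunked emulation: read a whole chunk, then write a whole chunk. */
static void forward_chunked(float *a, size_t n) {
    for (size_t i = 0; i < n; i += CHUNK) {
        float tmp[CHUNK];
        for (size_t j = 0; j < CHUNK; ++j) tmp[j] = 2 * a[i + 1 + j];
        for (size_t j = 0; j < CHUNK; ++j) a[i + j] = tmp[j];
    }
}

/* Reversed subscripts: a[i+1] = 2 * a[i], each iteration needs the last. */
static void backward_scalar(float *a, size_t n) {
    for (size_t i = 0; i < n; ++i) {
        a[i + 1] = 2 * a[i];
    }
}

/* The same chunked emulation applied to the reversed loop. */
static void backward_chunked(float *a, size_t n) {
    for (size_t i = 0; i < n; i += CHUNK) {
        float tmp[CHUNK];
        for (size_t j = 0; j < CHUNK; ++j) tmp[j] = 2 * a[i + j];
        for (size_t j = 0; j < CHUNK; ++j) a[i + 1 + j] = tmp[j];
    }
}
```

Starting from a = {1, 2, ..., 9} with n = 8, the two forward versions produce identical arrays, while the backward versions already disagree at a[3]: the scalar loop has doubled its way to 8, whereas the chunked version reads the stale value 3 and stores 6.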

In general, #pragma simd is legal on any loop for which cilk_for is legal, but not vice versa. In cases where only #pragma simd appears to be legal, study dependences carefully to be sure that it is really legal.

Note that #pragma simd is not restricted to inner loops. For example, the following code grants the compiler permission to vectorize the outer loop:

#pragma simd

for ( int i=1; i<1000000; ++i ) {

while ( a[i]>1 )

a[i] *= 0.5f;

}

In theory, a compiler can vectorize the outer loop by using masking to emulate the control flow of the inner while loop. Whether a compiler actually does so depends on the implementation. The Intel compiler detects and handles many forms of masking.
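The masking idea can be emulated in scalar C to see why it preserves the result. In the sketch below, a group of LANES consecutive elements plays the role of one vector, and the inner loop keeps running until no lane's condition is true. The lane count and function names are assumptions made for illustration (n is assumed to be a multiple of LANES); real hardware would use mask registers rather than an if statement per lane.

```c
#include <stddef.h>

#define LANES 4  /* assumed number of elements per vector, for illustration */

/* Scalar reference: halve each element until it is no greater than 1. */
static void halve_scalar(float *a, size_t n) {
    for (size_t i = 0; i < n; ++i) {
        while (a[i] > 1) {
            a[i] *= 0.5f;
        }
    }
}

/* Emulated outer-loop vectorization with a per-lane mask. */
static void halve_masked(float *a, size_t n) {
    for (size_t i = 0; i < n; i += LANES) {
        int any_active;
        do {
            any_active = 0;
            for (size_t j = 0; j < LANES; ++j) {
                int mask = a[i + j] > 1;   /* lane condition of the while */
                if (mask) {                /* only active lanes are updated */
                    a[i + j] *= 0.5f;
                    any_active = 1;
                }
            }
        } while (any_active);              /* stop when every lane is done */
    }
}
```

The cost of this scheme is that every group iterates as many times as its slowest lane, so the benefit depends on how uniform the inner trip counts are.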

Requirements to vectorize with SIMD directives (Intel® Compiler)

The SIMD directives ask the compiler to relax some of its requirements and to make every possible effort to vectorize a loop. If an ASSERT clause is present, the compilation will fail if the loop is not successfully vectorized. This has led it to be sometimes called the “vectorize or die” directive.

The directive (#pragma simd in C/C++, or !DIR$ SIMD in Fortran) behaves somewhat like a combination of #pragma vector always and #pragma ivdep, but is more powerful. The compiler does not try to assess whether vectorization is likely to lead to performance gain, it does not check for aliasing or dependencies that might cause incorrect results after vectorization, and it does not protect against illegal memory references.

#pragma ivdep overrides potential dependencies, but the compiler still performs a dependency analysis, and will not vectorize if it finds a proven dependency that would affect results. With #pragma simd, the compiler does no such analysis, and tries to vectorize regardless. It is the programmer’s responsibility to ensure that there are no backward dependencies that might impact correctness.

The semantics of #pragma simd are rather similar to those of the OpenMP #pragma omp parallel for. It accepts optional clauses such as REDUCTION, PRIVATE, FIRSTPRIVATE, and LASTPRIVATE. SIMD-specific clauses are VECTORLENGTH (implies the loop unroll factor), and LINEAR, which can specify different strides for different variables. Pragma SIMD allows a wider variety of loops to be vectorized, including loops containing multiple branches or function calls. It is particularly powerful in conjunction with the vector functions of Intel Cilk Plus.

Nevertheless, the technology underlying the SIMD directives is still that of the compiler vectorizer, and some restrictions remain on what types of loop can be vectorized:

• The loop must be countable. This means that the number of iterations must be known before the loop starts to execute, although it need not be known at compile time. Consequently, there must be no data-dependent exit conditions, such as break (C/C++) or EXIT (Fortran) statements. This also excludes most while loops. Typical diagnostic messages for incorrect application are similar to

error: invalid simd pragma // warning #8410: Directive SIMD must be followed by counted DO loop.

• Certain special, nonmathematical operators are not supported, and also certain combinations of operators and of data types, with diagnostic messages such as “operation not supported,” “unsupported reduction,” and “unsupported data type.”

• Very complex array subscripts or pointer arithmetic may not be vectorized, a typical diagnostic message is “dereference too complex.”

• Loops with very low number of iterations (also referred to as a low trip count) may not be vectorized. Typical diagnostic: “remark: loop was not vectorized: low trip count.”

• Extremely large loop bodies (very many lines and symbols) may not be vectorized. The compiler has internal limits that prevent it from vectorizing loops that would require a very large number of vector registers, with many spills and restores to and from memory.

• SIMD directives may not be applied to Fortran array assignments or to Intel Cilk Plus array notation.

• SIMD directives may not be applied to loops containing C++ exception handling code.

A number of the requirements detailed in the prior section “Requirements for a Loop to Vectorize (Intel® Compiler)” are relaxed for SIMD directives, in addition to the above-mentioned ones relating to dependencies and performance estimates. Loops that are not the innermost loop may be vectorized in certain cases; more mixing of different data types is allowed; function calls are possible and more complex control flow is supported. Nevertheless, the advice in the prior section should be followed where possible, since it is likely to improve performance.

It is worth noting that with SIMD directives, loops are vectorized under the “fast” floating-point model, corresponding to /fp:fast (-fp-model fast). The command line option /fp:precise (-fp-model precise) is not respected by a loop vectorized with a SIMD directive; such a loop might not give identical results to a loop without the directive. For further information about the floating-point model, see “Consistency of Floating-Point Results using the Intel® Compiler” (listed in additional reading at the end of the chapter).

SIMD directive clauses

A SIMD directive can be modified by additional clauses, which control chunk size or allow for some C/C++ programmers’ fondness for bumping pointers or indices inside the loop. The SIMD directives come in two forms for C/C++ and Fortran, respectively:

#pragma simd [clause[ [,] clause]…]

!DIR$ SIMD [clause[[,] clause]…]

If we specify the SIMD directive with no clause, default rules are in effect for variable attributes, vector length, and so forth. The VECTORLENGTH and VECTORLENGTHFOR clauses are mutually exclusive: we cannot use both on the same directive.

If we do not explicitly specify a VECTORLENGTH or VECTORLENGTHFOR clause, the compiler will choose a VECTORLENGTH using its own cost model. Misclassification of variables into PRIVATE, FIRSTPRIVATE, LASTPRIVATE, LINEAR, and REDUCTION, or the lack of appropriate classification of variables, may lead to unintended consequences such as runtime failures and/or incorrect results.

We can only specify a particular variable in at most one instance of a PRIVATE, LINEAR, or REDUCTION clause. If the compiler is unable to vectorize a loop, a warning occurs by default. However, if ASSERT is specified, an error occurs instead. If the vectorizer has to stop vectorizing a loop for some reason, the fast floating-point model is used for the SIMD loop. A SIMD loop may contain one or more nested loops or be contained in a loop nest. Only the loop preceded by the SIMD directive is processed for SIMD vectorization.

The vectorization performed on this loop by the SIMD directive overrides any setting we may specify for options -fp-model (Linux OS and OS X) and /fp (Windows) for this loop.

VECTORLENGTH (n1 [, n2]…)

n is a vector length (VL). It must be an integer that is a power of 2 (16 or less for C/C++); that is, the value must be 2, 4, 8, or 16. If we specify more than one n, the vectorizer will choose the VL from the values specified. This clause causes each iteration in the vector loop to execute the computation equivalent to n iterations of scalar loop execution. Multiple VECTORLENGTH clauses will cause a syntax error.

VECTORLENGTHFOR (data-type)

data-type is one of the intrinsic data types in Fortran, or one of the built-in integer, pointer, float, double, or complex types in C/C++. This clause causes each iteration in the vector loop to execute the computation equivalent to n iterations of scalar loop execution, where n is computed from size_of_vector_register/sizeof(data_type).

For example, VECTORLENGTHFOR (REAL (KIND=4)) or vectorlengthfor(float) results in n=4 for SSE2 to SSE4.2 targets (packed float operations available on 128-bit XMM registers) and n=8 for AVX target (packed float operations available on 256-bit YMM registers) and n=16 for Intel Xeon Phi coprocessors. The VECTORLENGTHFOR and VECTORLENGTH clauses are mutually exclusive. We cannot use the VECTORLENGTHFOR clause with the VECTORLENGTH clause, and vice versa. Multiple VECTORLENGTHFOR clauses cause a syntax error.
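The arithmetic behind these values is simply the register width in bytes divided by the element size. The helper below, whose name lanes_for is hypothetical, reproduces the numbers quoted above; it assumes a 4-byte float and an 8-byte double, as on the platforms discussed in this chapter.

```c
#include <stddef.h>

/* Hypothetical helper: number of elements of a given size that fit in
   one vector register of the given width (in bits). */
static size_t lanes_for(size_t register_bits, size_t element_bytes) {
    return (register_bits / 8) / element_bytes;
}
```

For float this gives 4 lanes for 128-bit XMM registers, 8 lanes for 256-bit YMM registers, and 16 lanes for the 512-bit registers of the Intel Xeon Phi coprocessor; for double the counts are halved.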

LINEAR (var1:step1 [, var2:step2]…)

var is a scalar variable; step is a compile-time positive, integer constant expression. For each iteration of a scalar loop, var1 is incremented by step1, var2 is incremented by step2, and so on. Therefore, every iteration of the vector loop increments the variables by VL*step1, VL*step2, …, to VL*stepN, respectively. If more than one step is specified for a var, a compile-time error occurs. Multiple LINEAR clauses are merged as a union. A variable in a LINEAR clause cannot appear in a REDUCTION, PRIVATE, FIRSTPRIVATE, or LASTPRIVATE clause.

REDUCTION (oper:var1[, var2]…)

oper is a reduction operator (+, *, -, .AND., .OR., .EQV., or .NEQV.); var is a scalar variable. Applies the vector reduction indicated by oper to var1, var2, …, varN. A SIMD directive can have multiple reduction clauses using the same or different operators. If more than one reduction operator is associated with a var, a compile-time error occurs. A variable in a REDUCTION clause cannot appear in a LINEAR, PRIVATE, FIRSTPRIVATE, or LASTPRIVATE clause.

[NO]ASSERT

Directs the compiler to assert (produce an error) or not to assert (produce a warning) when the vectorization fails. The default is NOASSERT. If this clause is specified more than once, a compile-time error occurs.

PRIVATE (var1 [, var2]…)

var is a scalar variable. Causes each variable to be private to each iteration of a loop. Its initial and last values are undefined upon entering and exiting the SIMD loop. Multiple PRIVATE clauses are merged as a union. A variable that is part of another variable (for example, as an array or structure element) cannot appear in a PRIVATE clause. A variable in a PRIVATE clause cannot appear in a LINEAR, REDUCTION, FIRSTPRIVATE, or LASTPRIVATE clause.

FIRSTPRIVATE (var1 [, var2]…)

var is a scalar variable. Provides a superset of the functionality provided by the PRIVATE clause. Variables that appear in a FIRSTPRIVATE list are subject to PRIVATE clause semantics. In addition, its initial value is broadcast to all private instances for each iteration upon entering the SIMD loop. A variable in a FIRSTPRIVATE clause can appear in a LASTPRIVATE clause. A variable in a FIRSTPRIVATE clause cannot appear in a LINEAR, REDUCTION, or PRIVATE clause.

LASTPRIVATE (var1 [, var2]…)

var is a scalar variable. Provides a superset of the functionality provided by the PRIVATE clause. Variables that appear in a LASTPRIVATE list are subject to PRIVATE clause semantics. In addition, when the SIMD loop is exited, each variable has the value that resulted from the sequentially last iteration of the SIMD loop (which may be undefined if the last iteration does not assign to the variable). A variable in a LASTPRIVATE clause can appear in a FIRSTPRIVATE clause. A variable in a LASTPRIVATE clause cannot appear in a LINEAR, REDUCTION, or PRIVATE clause.

[NO]VECREMAINDER

Directs the compiler to vectorize (or not to vectorize) the remainder loop when the original loop is vectorized. If !DIR$ VECTOR ALWAYS is specified, the following occurs: if neither the VECREMAINDER nor the NOVECREMAINDER clause is specified, the compiler overrides the efficiency heuristics of the vectorizer and determines whether the loop can be vectorized. If VECREMAINDER is specified, the compiler vectorizes remainder loops when the original main loop is vectorized. If NOVECREMAINDER is specified, the compiler does not vectorize the remainder loop when the original main loop is vectorized.
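Two of the clauses described above, REDUCTION and LINEAR, are sketched below in C. This is a hedged illustration rather than a reference usage: the function names are hypothetical, and the pragmas are guarded so that a compiler that does not know #pragma simd compiles the plain loops unchanged. Because the SIMD directive performs no checking, it is our job to confirm that sum really is a reduction and that k really advances by exactly 2 per iteration.

```c
#include <stddef.h>

/* REDUCTION: sum is combined across vector lanes with the + operator. */
static float dot(const float *x, const float *y, size_t n) {
    float sum = 0.0f;
#ifdef __INTEL_COMPILER
#pragma simd reduction(+:sum)
#endif
    for (size_t i = 0; i < n; i++) {
        sum += x[i] * y[i];
    }
    return sum;
}

/* LINEAR: k is bumped inside the loop by a constant step of 2, so the
   vector loop may advance it by VL*2 per vector iteration. */
static void copy_even(const float *src, float *dst, size_t n) {
    size_t k = 0;
#ifdef __INTEL_COMPILER
#pragma simd linear(k:2)
#endif
    for (size_t i = 0; i < n; i++) {
        dst[i] = src[k];
        k += 2;
    }
}
```

Misclassifying either variable, for example omitting the reduction clause for sum, is exactly the kind of error that can silently produce incorrect results.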

Use SIMD directives with care

The SIMD directives are most powerful in forcing vectorization, but come with the danger that the implications of vectorization need to be understood to avoid changing the program behavior in unexpected ways. They should be used with care. First-time users generally make mistakes and learn from them. We advise working on loops where the output values can be tested during development to provide rapid feedback. Some instructors advise “stick in SIMD directives and start debugging.” We find that suggestion a little scary, but it seems to work with many developers quite well. Just be advised that it is hard to see the changes in a loop that are unexpected and problematic. On the other hand, SIMD directives free us from another evil: everything looks good, but we cannot figure out how to get the compiler to do the vectorization. Take your pick: SIMD to force the compiler to vectorize but we have to be careful, or auto-vectorization where the burden is on the compiler to keep things correct to the limits of its ability to analyze an application. The VECTOR and IVDEP directives give us some “in between” options, which share the burden by retaining some compiler checking but giving us more control.

The VECTOR and NOVECTOR directives

Unlike SIMD directives, VECTOR and NOVECTOR are generally hints to modify compiler heuristics. The VECTOR and NOVECTOR directives override the default heuristics for vectorization of Fortran DO loops and C/C++ for loops. They can also affect certain optimizations. Their format is:

!DIR$ VECTOR [clause[[,] clause]…]

!DIR$ NOVECTOR

#pragma vector [clause[[,] clause]…]

#pragma vector nontemporal[(var1[, var2, …])]

clause is an optional vectorization or optimizer clause. It can be one or more of the following:

ALWAYS [ASSERT]

Enables or disables vectorization of a loop. The ALWAYS clause overrides efficiency heuristics of the vectorizer, but it only works if the loop can actually be vectorized. If the ASSERT keyword is added, the compiler will generate an error-level assertion message saying that the compiler efficiency heuristics indicate that the loop cannot be vectorized. We should use the IVDEP directive to ignore assumed dependences or the SIMD directive to ignore virtually everything. This makes VECTOR ALWAYS safer for the programmer in a way but leaves room for the compiler to be more conservative than might be strictly necessary. IVDEP and SIMD both shift more burden to the programmer to check that the vectorization is safe (preserves the programmer’s intent).

ALIGNED | UNALIGNED

Specifies that all data is aligned or no data is aligned in a loop. These clauses override efficiency heuristics in the optimizer. The clauses ALIGNED and UNALIGNED instruct the compiler to use, respectively, aligned and unaligned data movement instructions for all array references. These clauses disable all the advanced alignment optimizations of the compiler, such as determining alignment properties from the program context or using dynamic loop peeling to make references aligned.

Be careful when using the ALIGNED clause. Instructing the compiler to implement all array references with aligned data movement instructions will cause a runtime exception if some of the access patterns are actually unaligned.
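One defensive pattern is to test the alignment at run time and only take the ALIGNED path when the test passes. The sketch below assumes 64-byte alignment (the cache line and vector size of the Intel Xeon Phi coprocessor); the helper names are hypothetical, and the pragma is guarded so other compilers see two identical plain loops.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: 1 if p lies on a 64-byte boundary. */
static int is_aligned64(const void *p) {
    return ((uintptr_t)p % 64u) == 0;
}

/* Copy with an aligned fast path and an unaligned fallback. */
static void copy_floats(float *dst, const float *src, size_t n) {
    if (is_aligned64(dst) && is_aligned64(src)) {
        /* Both arrays start on a 64-byte boundary and are accessed from
           index 0 with unit stride, so aligned moves are safe here. */
#ifdef __INTEL_COMPILER
#pragma vector aligned
#endif
        for (size_t i = 0; i < n; i++) {
            dst[i] = src[i];
        }
    } else {
        /* Unknown alignment: let the compiler choose the instructions. */
        for (size_t i = 0; i < n; i++) {
            dst[i] = src[i];
        }
    }
}
```

The extra branch costs very little compared with a runtime exception, and it keeps the aligned assertion true for every call that reaches the annotated loop.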

TEMPORAL | NONTEMPORAL [(var1 [, var2]…)]

var is an optional memory reference in the form of a variable name. This controls how the “stores” of register contents to storage are performed (streaming versus non-streaming). The TEMPORAL clause directs the compiler to use temporal (that is, non-streaming) stores. The NONTEMPORAL clause directs the compiler to use non-temporal (that is, streaming) stores. For Intel Xeon Phi coprocessors, the compiler generates clevict (cache-line-evict) instructions after the stores based on the non-temporal directive when the compiler knows that the store addresses are aligned.

By default, the compiler automatically determines whether a streaming store should be used for each variable. Streaming stores may enable significant performance improvements over non-streaming stores for large amounts of data on certain processors. However, the misuse of streaming stores can significantly degrade performance.

VECREMAINDER | NOVECREMAINDER

Same as the [NO]VECREMAINDER clause for SIMD directives (see above).

Use VECTOR directives with care

The VECTOR directive should be used with care. Overriding the efficiency heuristics of the compiler should only be done if we are absolutely sure the vectorization will improve performance.

For instance, the compiler normally does not vectorize loops that have a large number of non-unit stride references (compared to the number of unit stride references). In the following example, vectorization would be disabled by default, but the directive overrides this behavior:

!DIR$ VECTOR ALWAYS

do i = 1, 100, 2

! two references with stride 2 follow

a(i) = b(i)

enddo

There may be cases where we want to explicitly avoid vectorization of a loop; for example, if vectorization would result in a performance regression rather than an improvement. In these cases, we can use the NOVECTOR directive to disable vectorization of the loop.

In the following example, vectorization would be performed by default, but the directive overrides this behavior:

!DIR$ NOVECTOR

do i = 1, 100

a(i) = b(i) + c(i)

enddo
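The two Fortran examples above have C/C++ counterparts. The sketch below uses the #pragma vector always and #pragma novector spellings; the function names are hypothetical, and the pragmas are guarded so that other compilers ignore them. Whether vectorizing the stride-2 loop actually pays off still has to be measured.

```c
#include <stddef.h>

/* Stride-2 copy, mirroring the VECTOR ALWAYS example:
   the pragma overrides the efficiency heuristics for this loop. */
void copy_stride2(float *a, const float *b, size_t n)
{
#ifdef __INTEL_COMPILER
#pragma vector always
#endif
    for (size_t i = 0; i < n; i += 2)
        a[i] = b[i];
}

/* Unit-stride add, mirroring the NOVECTOR example:
   the pragma asks the compiler to keep this loop scalar. */
void add_scalar_only(float *a, const float *b, const float *c, size_t n)
{
#ifdef __INTEL_COMPILER
#pragma novector
#endif
    for (size_t i = 0; i < n; i++)
        a[i] = b[i] + c[i];
}
```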

The IVDEP directive

Unlike SIMD directives, IVDEP gives specific hints to modify compiler heuristics about dependencies. Specifically, the compiler will assume dependencies between loop iterations when it cannot prove they do not exist. The IVDEP directive instructs the compiler to ignore assumed vector dependencies. The format for C/C++ and Fortran, respectively, is:

#pragma ivdep

!DIR$ IVDEP [: option]

!DIR$ IVDEP with no option can also be spelled !DIR$ INIT_DEP_FWD (INITialize DEPendences ForWarD)

To ensure correct code, the compiler prevents vectorization in the presence of assumed dependencies. This pragma overrides that decision. Use this pragma only when we know that the assumed loop dependencies are safe to ignore.

In Fortran, the option LOOP implies no loop-carried dependencies and the option BACK implies no backward dependencies. When no option is specified, the compiler begins dependence analysis by assuming all dependences occur in the same forward direction as their appearance in the normal scalar execution order. This contrasts with normal compiler behavior, which is for the dependence analysis to make no initial assumptions about the direction of dependence.

IVDEP example in Fortran

In the following example, the IVDEP directive provides more information about the dependences within the loop, which may enable loop transformations to occur:

!DIR$ IVDEP

DO I=1, N

A(INDARR(I)) = A(INDARR(I)) + B(I)

END DO

In this case, the scalar execution order follows:

1. Retrieve the value of INDARR(I).

2. Use the result from step 1 to retrieve A(INDARR(I)).

3. Retrieve B(I).

4. Add the results from steps 2 and 3.

5. Store the results from step 4 into the location indicated by A(INDARR(I)) from step 1.

IVDEP directs the compiler to initially assume that when steps 1 and 5 access a common memory location, step 1 always accesses the location first because step 1 occurs earlier in the execution sequence. This approach lets the compiler reorder instructions, as long as it chooses an instruction schedule that maintains the relative order of the array references.
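The same indirect update can be written in C. The sketch below is an illustration under stated assumptions: the function names are hypothetical, indices are 0-based, and the uniqueness check is a debugging aid of our own, not something the compiler provides. If an index appears twice in indarr, two iterations read and write the same element of a, which is a real dependence rather than an assumed one, so it is worth checking the index array before relying on the hint.

```c
#include <stddef.h>

/* Returns 1 if no index appears twice in idx[0..n-1].
   Quadratic time, which is acceptable for a debug-time check. */
int indices_are_unique(const int *idx, size_t n)
{
    for (size_t i = 0; i < n; i++)
        for (size_t j = i + 1; j < n; j++)
            if (idx[i] == idx[j])
                return 0;
    return 1;
}

/* C version of A(INDARR(I)) = A(INDARR(I)) + B(I), 0-based. */
void indirect_add(float *a, const int *indarr, const float *b, size_t n)
{
#ifdef __INTEL_COMPILER
#pragma ivdep
#endif
    for (size_t i = 0; i < n; i++)
        a[indarr[i]] = a[indarr[i]] + b[i];
}
```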

IVDEP examples in C

The loop in this example will not vectorize without the ivdep pragma, since the value of k is not known; vectorization would be illegal if k<0:

void ignore_vec_dep(int *a, int k, int c, int m)

{

#pragma ivdep

for (int i = 0; i < m; i++)

a[i] = a[i + k] * c;

}
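To see why a negative k makes vectorization illegal, the following sketch compares the scalar execution order with a simulated vector order that loads a whole group of elements before storing any of them. The lane count of 4, the trip count of 8, and all function names are assumptions chosen for illustration; real hardware uses wider vectors, but the effect is the same.

```c
#include <string.h>

#define LANES 4   /* assumed vector width for the simulation */
#define M 8       /* trip count, a multiple of LANES */

/* Scalar order: each iteration loads, multiplies, and stores
   before the next iteration starts. */
static void shift_scalar(int *a, int k, int c, int m)
{
    for (int i = 0; i < m; i++)
        a[i] = a[i + k] * c;
}

/* Vector-like order: load LANES elements first, then store LANES results. */
static void shift_vector_like(int *a, int k, int c, int m)
{
    for (int i = 0; i < m; i += LANES) {
        int tmp[LANES];
        for (int l = 0; l < LANES; l++)
            tmp[l] = a[i + l + k] * c;
        for (int l = 0; l < LANES; l++)
            a[i + l] = tmp[l];
    }
}

/* Runs both orders on identical data and returns 1 if the results agree.
   k must be -1, 0, or 1 so that every access stays inside the buffers. */
int orders_agree(int k)
{
    int x[M + 2], y[M + 2];
    for (int i = 0; i < M + 2; i++)
        x[i] = y[i] = 1;
    /* Start at element 1 so that a[i + k] is valid for k = -1. */
    shift_scalar(x + 1, k, 2, M);
    shift_vector_like(y + 1, k, 2, M);
    return memcmp(x, y, sizeof x) == 0;
}
```

With k = 1 each iteration reads an element that has not been written yet, so both orders agree. With k = -1 each iteration reads the value stored by the previous iteration, and the vector-like order reads stale data instead.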

The pragma binds only the for loop contained in the current function. This includes a for loop contained in a sub-function called by the current function.

The following loop requires the parallel option in addition to the ivdep pragma to indicate there are no loop-carried dependencies:

#pragma ivdep

for (i=1; i<n; i++)

{

e[ix[2][i]] = e[ix[2][i]]+1.0;

e[ix[3][i]] = e[ix[3][i]]+2.0;

}

The following loop requires the parallel option in addition to the ivdep pragma to ensure there is no loop-carried dependency for the store into a[]:

#pragma ivdep

for (j=0; j<n; j++)

{

a[b[j]] = a[b[j]] + 1;

}

Random number function vectorization

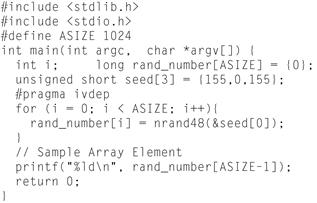

The Intel Compiler supports a vectorized version of the random number function. This is the drand48 family of random number functions in C/C++ and RANF and Random_Number functions (single and double precision) in Fortran. Figure 5.16 shows the list of supported C/C++ functions and Figures 5.17 through 5.22 show examples using them.

Figure 5.16 Supported C/C++ Functions.

Figure 5.17 Example of drand48 Vectorization.

Figure 5.18 erand48 Vectorization; Seed Value Is Passed as an Argument.

Figure 5.19 lrand48 Vectorization.

Figure 5.20 nrand48 Vectorization; Seed Value Is Passed as an Argument.

Figure 5.21 mrand48 Vectorization.

Figure 5.22 jrand48 Vectorization; Seed Value Is Passed as an Argument.
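As a rough illustration of the kind of loop these figures describe, the sketch below fills an array from drand48 in a simple counted loop. It is our own minimal example, not a reproduction of any figure; the function name fill_uniform is hypothetical, and it assumes a POSIX system where drand48 and srand48 are declared in stdlib.h.

```c
#ifndef _XOPEN_SOURCE
#define _XOPEN_SOURCE 600   /* exposes drand48/srand48 on POSIX systems */
#endif
#include <stddef.h>
#include <stdlib.h>

/* Fill a[0..n-1] with uniform doubles in [0.0, 1.0).
   The loop body is a single call to drand48, which is the pattern
   a compiler with a vectorized drand48 can replace. */
void fill_uniform(double *a, size_t n, long seed)
{
    srand48(seed);
    for (size_t i = 0; i < n; i++)
        a[i] = drand48();
}
```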

Utilizing full vectors, -opt-assume-safe-padding

Efficient vectorization involves making full use of the vector hardware. This implies that users should strive to get most code to be executed in the kernel-vector loop as opposed to peel loop and/or remainder loop.

Remainder loop

A remainder loop is created to execute the remaining iterations when the number of loop iterations (trip count) for a vectorized loop is not a multiple of the vector length. While this is unavoidable in many cases, having a large amount of time spent in remainder loops will lead to performance inefficiencies. For example, if the vectorized loop trip count is 20 and the vector length is 16, the kernel loop executes only once and the remaining 4 iterations have to be executed in the remainder loop. Though the Intel Xeon Phi compiler may vectorize the remainder loop (as reported by -vec-report6), it won’t be as efficient as the kernel loop. For example, the remainder loop will use masks, and may have to use gathers/scatters instead of unit-strided loads/stores (due to memory-fault-protection issues). The best way to address this is to refactor the algorithm/code in such a way that the remainder loop is not executed at runtime (by making trip counts a multiple of the vector length) and/or making the trip count large compared to the vector length (so that the overhead of any execution in the remainder loop is low).

The compiler optimizations also take into account any knowledge of actual trip count values. So if the trip count is 20, the compiler usually makes better decisions if it knows that the trip count is 20 (the trip count is a constant known statically to the compiler) as opposed to a trip count of n (a symbolic value) that happens to have a value of 20 at runtime (maybe it is an input value read in from a file). In the latter case, we can help the compiler by using a #pragma loop_count (20) in C/C++, or CDEC$ LOOP COUNT (20) in Fortran, before the loop.
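A minimal sketch of the hint in C follows. The function name axpy_hinted is hypothetical, and the pragma is guarded so that compilers that do not define __INTEL_COMPILER skip it. The value 20 is only a hint for the compiler's heuristics; the loop must still be correct for any n.

```c
/* n is known only at run time; the hint says it is typically 20. */
void axpy_hinted(float *y, const float *x, float alpha, int n)
{
#ifdef __INTEL_COMPILER
#pragma loop_count (20)
#endif
    for (int i = 0; i < n; i++)
        y[i] += alpha * x[i];
}
```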

Also take into account any unrolling of the vector loop done by the compiler (by studying the output from the -vec-report6 option). For example, if the compiler vectorizes a loop (of trip count n and vector length 16) and unrolls the loop by 2 (after vectorization), each pass through the kernel loop is designed to execute 32 iterations of the original source loop. If the dynamic trip count happens to be 20, the kernel loop gets skipped completely and all execution will happen in the remainder loop. If we encounter this issue, we can use #pragma nounroll in C/C++ or CDEC$ NOUNROLL in Fortran to turn off the unrolling of the vector loop. (We can also use the loop_count pragma described earlier instead to influence the compiler heuristics.)
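The arithmetic in the two examples above (trip count 20 with vector length 16, with and without unrolling by 2) can be sketched as follows. The type and function names are hypothetical, and the model deliberately ignores any peel loop.

```c
/* How a trip count divides between the vector kernel and the remainder loop. */
typedef struct {
    int kernel_passes;    /* times the (possibly unrolled) kernel body runs */
    int remainder_iters;  /* original iterations left for the remainder loop */
} loop_split;

/* Each kernel pass consumes vector_length * unroll original iterations. */
loop_split split_trip_count(int trip_count, int vector_length, int unroll)
{
    int per_pass = vector_length * unroll;
    loop_split s;
    s.kernel_passes = trip_count / per_pass;
    s.remainder_iters = trip_count % per_pass;
    return s;
}
```

For a trip count of 20 and a vector length of 16, an unroll factor of 1 gives one kernel pass and 4 remainder iterations, while an unroll factor of 2 gives no kernel pass and leaves all 20 iterations to the remainder loop.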

If we want to disable vectorization of the remainder loop generated by the compiler, we can place #pragma vector novecremainder (C/C++) or CDEC$ VECTOR NOVECREMAINDER (Fortran) before the loop; this also disables vectorization of any peel loop generated by the compiler for this loop. We can also use the compiler internal option -mP2OPT_hpo_vec_remainder=F to disable remainder loop vectorization (for all loops in the scope of the compilation). This is typically useful if we are analyzing the assembly code of the vector loop and want to clearly identify the vector-kernel loop from the line numbers; otherwise we have to carefully sift through multiple versions of the loop in the assembly (kernel, remainder, and peel) to identify which one we are looking at.

Peel loop

The compiler generates dynamic peel loops typically to align one of the memory accesses inside the loop. The peel loop peels a few iterations of the original source loop until the candidate memory access gets aligned. The peel loop is guaranteed to have a trip count that is smaller than the vector length. This optimization is done so that the kernel vector loop can utilize more aligned load/store instructions, thus increasing the performance efficiency of the kernel loop. But the peel loop itself (even though it may be vectorized by the compiler) is less efficient (study the -vec-report6 output from the compiler). The best way to address this is to refactor the algorithm/code in such a way that the accesses are aligned and the compiler knows about the alignment, following the vectorizer alignment best known methods (BKMs). If the compiler knows that all accesses are aligned (say if the user correctly uses #pragma vector aligned before the loop so that the compiler can safely assume all memory accesses inside the loop are aligned), then there will be no peel loop generated by the compiler.
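The number of iterations a peel loop has to run can be worked out from the starting address. The sketch below is a simplified model of that calculation, not the compiler's actual implementation; the function name is hypothetical, and it assumes the address and the alignment are both multiples of the element size.

```c
#include <stddef.h>
#include <stdint.h>

/* Iterations to peel so that the access starting at addr reaches an
   alignment-byte boundary. Assumes addr and alignment are multiples
   of elem_size. */
size_t peel_iterations(uintptr_t addr, size_t elem_size, size_t alignment)
{
    size_t misalign = (size_t)(addr % alignment);
    if (misalign == 0)
        return 0;   /* already aligned, nothing to peel */
    return (alignment - misalign) / elem_size;
}
```

For 4-byte floats and 64-byte alignment the result is at most 15, which is below the vector length of 16 and matches the guarantee that the peel trip count is smaller than the vector length.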

We can also use the loop_count pragma described earlier to influence the compiler decision of whether or not to create a peel loop.

We can instruct the compiler to not generate a dynamic peel loop by adding #pragma vector unaligned in C/C++ or CDEC$ vector unaligned in Fortran pragma/directive before the loop in the source.

We can use the vector pragma/directive with the novecremainder clause (as mentioned above) to disable vectorization of the peel loop generated by the compiler. We can also use the compiler internal option -mP2OPT_hpo_vec_peel=F to disable peel-loop vectorization (for all loops in the scope of the compilation).

% cat -n t2.c

#include <stdio.h>

void foo1(float *a, float *b, float *c, int n)

{

int i;

#pragma ivdep

for (i=0; i<n; i++) {

a[i] *= b[i] + c[i];

}

}

void foo2(float *a, float *b, float *c, int n)

{

int i;

#pragma ivdep

for (i=0; i<20; i++) {

a[i] *= b[i] - c[i];

}

}

For the loop in function foo1, the compiler generates a kernel-vector loop (unrolled after vectorization by a factor of 2), a peel loop and remainder loop, both of which are vectorized. For the loop in function foo2, the compiler takes advantage of the fact that the trip count is a constant (20) and generates a kernel loop that is vectorized (and not unrolled). The remainder loop (of 4 iterations) is completely unrolled by the compiler (and not vectorized). There is no peel loop generated.

Option -opt-assume-safe-padding

We can tell the compiler that arrays are padded by using the compiler option -opt-assume-safe-padding, which can improve performance. This option determines whether the compiler assumes that variables and dynamically allocated memory are padded past the end of the object.

When -opt-assume-safe-padding is specified, the compiler assumes that variables and dynamically allocated memory are padded. This means that code can access up to 64 bytes beyond what is specified in our program. The compiler does not add any padding for static and automatic objects when this option is used, but it assumes that code can access up to 64 bytes beyond the end of the object, wherever the object appears in the program. To satisfy this assumption, we must increase the size of static and automatic objects in our programs when we use this option.

One example of where this option can help is in the sequences generated by the compiler for vector-remainder and vector-peel loops. This option may improve performance of memory operations in such loops. If this option is used in the compilation above, the compiler will assume that the arrays a, b, and c have a padding of at least 64 bytes beyond n. If these arrays were allocated using malloc, such as:

ptr = (float *)malloc(sizeof(float) * n);

then they should be changed by the user to say:

ptr = (float *)malloc(sizeof(float) * n + 64);

After making such changes (to satisfy the legality requirements for using this option), we get a higher-performing sequence for the peel loop generated for the loop at line 7 of t2.c (the loop in function foo1).
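One way to keep the padding requirement from being forgotten is to route allocations through a small helper. The following is a minimal sketch; the names SAFE_PAD_BYTES, padded_bytes, and alloc_padded_floats are hypothetical, and the 64-byte figure is taken from the description of the option above.

```c
#include <stddef.h>
#include <stdlib.h>

#define SAFE_PAD_BYTES 64   /* bytes the compiler may touch past the end */

/* Bytes needed for n_floats elements plus the assumed padding. */
size_t padded_bytes(size_t n_floats)
{
    return n_floats * sizeof(float) + SAFE_PAD_BYTES;
}

/* Allocate n_floats elements followed by SAFE_PAD_BYTES of padding.
   Returns NULL on failure; release with free(). */
float *alloc_padded_floats(size_t n_floats)
{
    return (float *)malloc(padded_bytes(n_floats));
}
```

Static and automatic arrays need the same treatment by hand, for example by declaring them with extra elements that cover at least 64 bytes.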

Data alignment to assist vectorization

Data alignment is a method to force the compiler to create data objects in memory on specific byte boundaries. This is done to increase efficiency of data loads and stores to and from the processor. Without going into great detail, processors are designed to efficiently move data when that data can be moved to and from memory addresses that are on specific byte boundaries. For the Intel Xeon Phi coprocessor, memory movement is optimal when the data starting address lies on a 64-byte boundary. Thus, it is desirable to force the compiler to create data objects with starting addresses that are multiples of 64 bytes.

In addition to creating the data on aligned boundaries, the compiler is able to make optimizations when the data is known to be aligned by 64 bytes. By default, the compiler cannot know and cannot assume data alignment when that data is created outside of the current scope. Thus, we must also inform the compiler of this alignment via pragmas (C/C++) or directives (Fortran) so that the compiler can generate optimal code. The one exception is that Fortran module data receives alignment information at USE sites. To summarize, two steps are needed: