This chapter highlights some of the successes and challenges that government organizations (based in the United States or elsewhere) have experienced as they have sought to improve their acquisition processes. Links to elements of the CMMI for Acquisition (CMMI-ACQ) model are included in this discussion to demonstrate not only how these successes and challenges influenced the existing model, but also how they offer opportunities for future model development.

by Mike Phillips and Brian Gallagher

As the CMMI Product Team began to consider how to construct a CMMI model to address the acquisition area of interest, the U.S. Department of Defense (DoD) was considering a critical report, the “Defense Acquisition Performance Assessment Report” (a.k.a. the DAPA report) [Kadish 2006]. In his letter delivering the report to Deputy Secretary of Defense Gordon England, the panel chair, Gen. Kadish, noted:

“Although our Acquisition System has produced the most effective weapon systems in the world, leadership periodically loses confidence in its efficiency. Multiple studies and improvements to the Acquisition System have been proposed—all with varying degrees of success. Our approach was broader than most of these studies. We addressed the ‘big A’ Acquisition System because it includes all the management systems that DoD uses not only the narrow processes traditionally thought of as acquisition. The problems DoD faces are deeply imbedded in the ‘big A’ management systems not just the ‘little a’ processes. We concluded that these processes must be stable for incremental change to be effective—they are not.”

As the CMMI Product Team began to develop the CMMI-ACQ model—a model we wanted to apply to both commercial and government organizations—we considered tackling the issues raised in the DAPA report. However, successful model adoption requires an organization to embrace the ideas (i.e., best practices) of the model for effective improvement of the processes involved. As the preceding quote illustrates, in addition to organizations traditionally thought of as the “acquisition system” (“little a”) in the DoD, there are also organizations that are associated with the “big A,” including the Joint Capabilities Integration and Development System (JCIDS) and the Planning, Programming, Budgeting, and Execution (PPBE) system. These systems are governed by different stakeholders, directives, and instructions. To be effective, the model would need to be adopted at an extremely high level—by the DoD itself. Because of this situation, we resolved with our sponsor in the Office of the Secretary of Defense to focus on the kinds of “little a” organizations that are able to address CMMI best practices once the decision for a materiel solution has been reached.

However, we often find that models can have an impact beyond what might be perceived to be the “limits” of process control. The remainder of this section highlights some of the clear leverage points at which the model supports DAPA recommendations for improvement.

Figure 6.1 illustrates the issues and the relative importance of each issue as observed by the DAPA project team in reviewing previous recommendations and speaking to experts. The most important issues are labeled [Kadish 2006].

We would never claim that the CMMI-ACQ model offers a complete solution to all of the key issues in Figure 6.1; however, building the capabilities addressed in the CMMI-ACQ model does offer opportunities to address many of the risks captured in these issue areas. In the text that follows, we limit the discussion of each issue to one paragraph.

The acquisition strategy practice is one of the more significant additions to the CMMI Model Foundation (CMF) to support the acquisition area of interest. Multiple process area locations (including Acquisition Requirements Development and Solicitation and Supplier Agreement Development) were considered before the practice was placed in Project Planning. Feedback from various acquisition organizations noted that many different organizational elements are stakeholders in strategy development, but the strategy’s criticality to the overall plan suggested that it fit best in this process area. We also found that some projects might develop the initial strategy before full acquisition offices are formally established; those offices would then accept long-term responsibility for managing the strategy. Note that the practice does not demand a specific strategy, but it does expect the strategy to be a planning artifact. The full practice, “Establish and maintain the acquisition strategy,” in Project Planning specific practice 1.1, recognizes that the strategy may need to be updated as the environment changes. The DAPA report (p. 14) calls out some recommended strategies in the DoD environment [Kadish 2006].

Additional discussion is contained in the section Acquisition Strategy: Planning for Success, later in this chapter.

Although a model such as CMMI-ACQ should not dictate specific organizational structures, the CMMI Product Team looked for ways to encourage improved operations on programs. The most significant of these may be the practices for creating and managing integrated teams with effective rules and guidelines. The DAPA report (p. 76) noted deficiencies in program operations. Organizations following model guidance may be better able to address these shortfalls.

The CMMI-ACQ Product Team recognized the vital role played by effective requirements development and assigned Acquisition Requirements Development to maturity level 2 (Requirements Development is at maturity level 3 in the CMMI for Development, or CMMI-DEV, model). Key to this effectiveness is one of the significant differences between the CMMI-ACQ and CMMI-DEV versions of “Requirements Development,” played out in specific goal 2, “Customer requirements are refined and elaborated into contractual requirements.” The importance of this activity cannot be overemphasized, as poor requirements are likely to be the most troubling type of “defect” that the acquisition organization can inject into the system. The DAPA report notes that requirements errors are often injected late into the acquisition system; its examples included operational test requirements that the user had not specified. Although the model cannot address such issues directly, it does provide a framework that aids in tracing requirements to their source, which in turn helps issues be resolved more successfully. (The Requirements Management process area also aids in this traceability.)

Several features in CMMI-ACQ should help users address the oversight issue. Probably the most significant help is in the Acquisition Technical Management process area, which recognizes the need for the effective technical oversight of development activities. Acquisition Technical Management couples with the companion process area of Agreement Management, which addresses business issues with the supplier via contractual mechanisms. For those familiar with CMMI model construction, the generic practice associated with reviewing all model activities with senior management gives yet another opportunity to resolve issues.

We have grouped the leadership, program manager expertise, and process discipline issues together because they reflect the specific purpose of creating CMMI-ACQ: to provide best practices that enable leadership, to develop capabilities within acquisition organizations, and to instill process discipline where clarity might previously have been lacking. Here the linkage between DAPA report issues and CMMI-ACQ is general rather than specific.

Alas, for complex acquisition system and PPBE process issues, the model does not have any specific assistance to offer. However, the effective development of the high maturity elements of process-performance modeling, particularly if shared by both the acquirer and the system development team, may help address the challenges of “big A” budgetary exercises with more accurate analyses than otherwise would be produced.

Although CMMI-ACQ is aimed primarily at the acquisition of products and services, it also has some practices that would be especially important in addressing system of systems issues. Because of the importance of this topic in government acquisition, we decided to add this discussion and the Interoperable Acquisition section later in this chapter to assist readers who are facing the challenges associated with systems of systems.

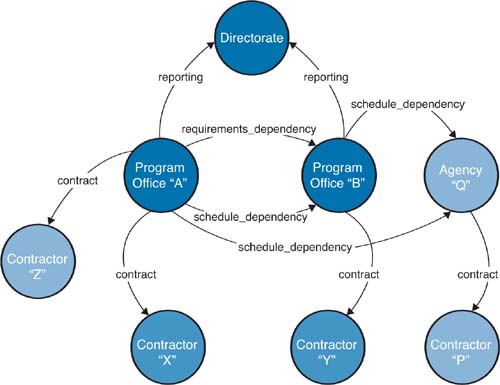

A source document for this segment is Interoperable Acquisition for Systems of Systems: The Challenges [Smith 2006], which describes the ever-increasing interdependence of the systems needed to provide capabilities to the user. In one example cited in the document, a key satellite communications program depended on at least five separate and independent acquisition programs, all of which had to be completed successfully before the actual capability could be delivered to the various military services. The increasing emphasis on net-centric operations and service-oriented architectures added to the challenge. Figure 6.2 provides a visual depiction of some of the complexity.

Figure 6.2 shows how quickly complexity grows as the number of critical programmatic interfaces grows. Each dependency creates a risk for at least one of the organizations. The figure suggests that for two of the programs, a shared reporting directorate can help mitigate risks. This kind of challenge can be described as one in which applying recursion is sufficient: the system is composed of subsystems, and a single overarching management structure controls the subordinate elements. In many large program offices, the system might be an aircraft with its supporting maintenance systems and required training systems. All elements may be separate programs, but coordination occurs within a single management structure. The figure, however, also shows the additional challenges that arise when there is no single management structure. Critical parts of the capability are then delivered by separate management structures, often with widely different motivators and priorities.
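As a rough worked illustration of that growth (our own simplifying assumption, not a figure from [Smith 2006] or the DAPA report): if any of n independently managed programs might depend on any other, the number of potential pairwise programmatic interfaces, written here with the illustrative symbol I(n), is the number of ways to choose two programs from n.

```latex
I(n) = \binom{n}{2} = \frac{n(n-1)}{2}, \qquad I(5) = \frac{5 \cdot 4}{2} = 10, \qquad I(10) = \frac{10 \cdot 9}{2} = 45
```

Under that assumption, the five programs in the satellite communications example could have up to 10 pairwise interfaces, and doubling to ten programs raises the bound to 45. Real programs rarely depend on every other program, so this is only an upper bound, but it shows why each added program brings more potential dependencies, and therefore more potential risk, than the one before it.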

Although the model was not constructed specifically to solve these challenges, especially the existence of separate management structures, the complexity was familiar to the CMMI-ACQ authors. Those of you who have used the Capability Maturity Model (CMM) or CMMI models in the past will recognize the Risk Management process area in this model. In addition to noting that there are significant risks in managing the delivery of a system through any outside agent, such as a supplier, the model also stresses the value of recognizing both internal and external sources of risk. The following note is part of Risk Management specific practice 1.1:

“Identifying risk sources provides a basis for systematically examining changing situations over time to uncover circumstances that impact the ability of the project to meet its objectives. Risk sources are both internal and external to the project. As the project progresses, additional sources of risk may be identified. Establishing categories for risks provides a mechanism for collecting and organizing risks as well as ensuring appropriate scrutiny and management attention to risks that can have serious consequences on meeting project objectives.”

Various approaches have been taken to address risks that span multiple programs and organizations. One of these, Team Risk Management, has been documented by the Software Engineering Institute (SEI) [Higuera 1994]. CMMI-ACQ anticipated the need for flexible organizational structures to address these kinds of issues, so it expects organizations to establish and maintain integrated teams that cross organizational boundaries, rather than treating such teams as optional, as CMMI-DEV does. The following is part of Organizational Process Definition specific practice 1.7:

“In an acquisition organization, integrated teams are useful not just in the acquirer’s organization but between the acquirer and supplier and among the acquirer, supplier, and other relevant stakeholders, as appropriate. Integrated teaming may be especially important in a system of systems environment.”

Perhaps the most flexible and powerful model feature for addressing the complexities of systems of systems is the effective use of the specific practices in the second specific goal of Acquisition Technical Management. These practices call for successfully managing the needed interfaces between the system of interest and other systems not under the project’s direct control. The groundwork for this goal is laid first in Acquisition Requirements Development:

“Develop interface requirements of the acquired product to other products in the intended environment.” (ARD SP 2.1.2)

Placing this specific goal in Acquisition Technical Management, as part of overseeing effective system development from the acquirer’s perspective, makes it a powerful tool for addressing system of systems problems. The following note is part of Acquisition Technical Management specific goal 2:

“Many integration and transition problems arise from unknown or uncontrolled aspects of both internal and external interfaces. Effective management of interface requirements, specifications, and designs helps to ensure implemented interfaces are complete and compatible.”

The supplier is responsible for managing the interfaces of the product or service it is developing. However, the acquirer identifies those interfaces, particularly external interfaces, that it will manage as well.

Although these model features provide a basis for addressing some of the challenges of delivering the capabilities desired in a system of systems environment, the methods best suited for various acquisition environments await further development in future documents. CMMI-ACQ is positioned to aid that development and mitigate the risks inherent in these acquisitions.

Adapted from “Techniques for Developing an Acquisition Strategy by Profiling Software Risks,” CMU/SEI-2006-TR-002

As previously discussed, CMMI-ACQ’s Project Planning process area asks the program to establish and maintain an acquisition strategy. You will also see the acquisition strategy referenced in many other CMMI-ACQ process areas.

What is an acquisition strategy, and how does it relate to other planning documents discussed in CMMI-ACQ?

The Defense Acquisition University (DAU) uses the following definition of acquisition planning:

“The process by which the efforts of all personnel responsible for an acquisition are coordinated and integrated through a comprehensive plan for fulfilling the agency need in a timely manner and at a reasonable cost. It is performed throughout the lifecycle and includes developing an overall acquisition strategy for managing the acquisition and a written acquisition plan (AP).”

Acquisition planning is the act of defining and maintaining an overall approach for a program. Acquisition planning guides all elements of program execution to transform the mission need into a fielded system that is fully supported and delivers the desired capability. The goal of acquisition planning is to provide a road map that is followed to maximize the chances of successfully fielding a system that meets users’ needs within cost and schedule constraints. Acquisition planning is an iterative process; feedback loops impact future acquisition planning activities.

An acquisition strategy, when formulated carefully, is a means of addressing program risks via program structure. The DAU uses the following definition of acquisition strategy:

“A business and technical management approach designed to achieve program objectives within the resource constraints imposed. It is the framework for planning, directing, contracting for, and managing a program. It provides a master schedule for research, development, test, production, fielding, modification, postproduction management, and other activities essential for program success. The acquisition strategy is the basis for formulating functional plans and strategies (e.g., test and evaluation master plan [TEMP], acquisition plan [AP], competition, systems engineering, etc.).”

The best acquisition strategy for a given program directly addresses that program’s highest-priority risks. High-priority risks can be technical if no one has yet built a component that meets some critical aspect of the system, or if mature components have never been combined in the way that is required. Risks can be programmatic if the system must be designed to accommodate predefined cost or schedule constraints. Or risks can be mission-related when the characteristics of a system that meets the need cannot be fully articulated and agreed on by stakeholders. Each program faces a unique set of risks, so the corresponding acquisition strategy must be unique to address them.

The risks a program faces also evolve through the life of the program. The acquisition strategy and the plans developed based on that strategy must also evolve. Figure 6.3 shows the iterative nature of acquisition strategy development and planning.

The process usually starts with an articulation of user needs requiring a materiel solution and identification of alternatives to satisfy those needs (e.g., an analysis of alternatives [AoA]). A technology development strategy is then developed to mature the technologies required for the selected alternative. Next, the acquisition strategy is developed to guide the program through its life. As the strategy is executed, refinements are made. In the DoD, the acquisition strategy is required and formally reviewed at major program milestones (e.g., Milestone B [MS B]).

The terms acquisition planning, acquisition strategy, and acquisition plan are frequently used interchangeably, which causes much confusion. A program’s acquisition strategy is different from its acquisition plan, but both are artifacts of acquisition planning. A strategy is a kind of plan, but not every plan is a strategy. In the context of acquisition, strategies are high-level decisions that direct the development of more detailed plans, which guide the execution of a program. Those plans require careful thought about what is to be done, who will do it, and when it will be done.

Developing an all-encompassing acquisition strategy for a program is a daunting activity. As with many complex endeavors, often the best way to begin is to break the complex activity down into simpler, more manageable tasks. When developing an acquisition strategy, a program manager’s first task is to define the elements of that strategy. When defining strategy elements, it is useful to ask the question, “What acquisition choices must I make in structuring this program?” Inevitably, asking this question leads to more detailed questions, such as the following.

• What acquisition approach should I use?

• What type of solicitation will work best?

• How will I monitor my contractor’s activities?

The result of these choices defines the acquisition strategy. Identifying strategy elements is the first step in this process.

The DAU’s Defense Acquisition Guidebook defines 18 strategy considerations that should be addressed when formulating an acquisition strategy [DoD 2006]. These considerations include the following:

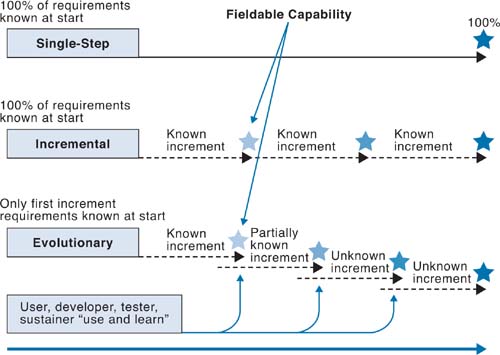

One of the most important strategy considerations is defining the acquisition approach. The acquisition approach strategy element defines the approach the program will use to achieve full capability. Typically, this approach is single-step, incremental, or evolutionary, as illustrated in Figure 6.4.

For readers who are experienced with development approaches, the decision to use single-step, incremental, or evolutionary approaches seems natural and is driven by program risk. When acquiring a capability that has been developed many times before and there are known solutions, a single-step approach may be warranted. However, most systems are more complex and require either an incremental or an evolutionary approach. The most important aspect to understand is the difference between the acquisition approach and the development approach used by your supplier. You can use a single-step approach as an acquisition strategy while the developer is using an incremental development approach. At times, a different approach may be selected for different parts of the program. Figure 6.5 depicts the acquirer using a single-step approach while the developer is using an incremental approach. Figure 6.6 shows the acquirer using an evolutionary approach and the developer using a mix of single-step, incremental, and evolutionary (spiral) approaches.

The acquisition approach is just one of many decisions an acquirer makes when developing the acquisition strategy, and most of these choices are based on a careful examination of risk. Because each project is unique, its acquisition strategy is also unique, and it becomes one of the most important guiding artifacts for all stakeholders. The decisions made and documented in the acquisition strategy help to mitigate the program’s highest risks and provide a road map that all stakeholders can understand, share, and analyze to help the project succeed.

When creating the CMMI-ACQ model, we wrestled with finding ways to properly discuss the myriad agreements that are needed for an acquisition program to be successful. In this section, I offer some thoughts related to the multiple types of agreements possible in acquisitions based on my experiences in government acquisition over the past 30 years.

Central to a successful acquisition process is the key contractual agreement between the acquirer and the supplier. To describe the agreements for this relationship, we built on the excellent foundation provided by the Supplier Agreement Management (SAM) process area from CMMI-DEV and legacy documents, such as the Software Acquisition CMM published in the 1990s [SEI 2002, SEI 2006a]. However, the product team knew that other agreements must be carefully developed and managed over the life of many acquisitions, and that the model had to cover these types of agreements as well. Figure 6.2 is a reminder of the need for multiple agreements given the complexity of many of today’s acquisitions.

In many government organizations, the acquisition discipline is established and maintained in specific organizations that are chartered to handle acquisition tasks for a variety of using organizations. Often, acquirers do not simply pass products delivered by the supplier to customers “as is.” Instead, they integrate delivered products with other systems or add services, such as training or upgrades, all of which may come from other agencies, departments, or organizations not typically thought of as suppliers. These additional acquirer activities increase as product complexity increases.

Because of this known complexity, the product team knew that the definition of supplier agreement had to be broad and cover more than just a contract. So, the product team settled on the following definition: “A documented agreement between the acquirer and supplier (e.g., contract, license, or memorandum of agreement).”

The focus of the Acquisition Requirements Development process area is to ensure that customer needs are translated into customer requirements and that those requirements are used to develop contractual requirements. Although the product team agreed to call these “contractual” requirements, they knew the application of these requirements had to be broader.

Thus, contractual requirements are defined as follows: “The result of the analysis and refinement of customer requirements into a set of requirements suitable to be included in one or more solicitation packages, formal contracts, or supplier agreements between the acquirer and other appropriate organizations.”

Practices in the Solicitation and Supplier Agreement Development and Agreement Management process areas are particularly helpful, as the need for other types of documented agreements increases with system complexity. An example of these kinds of agreements is the program-manager-to-program-manager agreement type that the U.S. Navy uses to help integrate combat systems and C4I in surface ships during new construction and modernization.

Another helpful process area to use when integrating a large product is Acquisition Technical Management. Specific goal 2 is particularly useful because it focuses on the critical interfaces that likely have become the responsibility of the acquisition organization. Notes in this goal state: “The supplier is responsible for managing the interfaces of the product or service it is developing. However, the acquirer identifies those interfaces, particularly external interfaces, that it will manage as well.”

This statement recognizes that the acquirer’s role often extends beyond simply ensuring a good product from a supplier. In the introductory notes of this process area, the acquirer is encouraged to use more of CMMI-DEV’s Engineering process areas if “the acquirer assumes the role of overall systems engineer, architect, or integrator for the product.”

Clearly, many acquisitions are relatively simple and direct, ensuring delivery of a new capability to the customer by establishing contracts with selected suppliers. However, the model provides an excellent starting point for the types of complex arrangements that are often seen in government today.

by Mike Phillips

Having been a military test pilot and director of tests for the B-2 Spirit Stealth Bomber acquisition program for the U.S. Air Force (USAF), I am particularly interested in the interactions between the testing aspects contained in the two companion models of CMMI-DEV and CMMI-ACQ. This section provides some perspectives I hope are helpful for those of you who need to work on verification issues across the acquirer–supplier boundary, particularly in government.

When we created the process areas for the Acquisition category, the product team knew we needed to expand the limited supplier agreement coverage in SAM (in the CMMI-DEV model) to address the business aspects critical to acquisition. However, we knew we also needed to cover the technical aspects that were “close cousins” to the process areas in the Engineering process area category in CMMI-DEV. We tried to maximize the commonality between the two models so that both collections of process areas would be tightly related in the project lifecycle. For a significant part of the lifecycle, the two models represent two “lenses” observing the same total effort. Certainly testing seemed to be a strongly correlated element in both models.

As you read through the CMMI-ACQ process areas of Acquisition Verification and Acquisition Validation, notice that the wording is either identical or quite similar to the wording in the CMMI-DEV process areas of Verification and Validation. This commonality was intentional to maintain bridges between the acquirer and supplier teams, which often must work together effectively.

Throughout my military career, I often worked on teams that crossed the boundaries of government and industry to conserve resources and employ the expertise needed for complex test events, such as flight testing. Combined test teams were commonly used, and these teams often conducted flight test missions in which both verification and validation tasks were performed. In these cases, the government helped the contractor test against the specification and provided critical insight into the usability of the system.

Each model, however, must maintain a distinct purpose from its perspective (whether supplier or acquirer) while maximizing desirable synergies. Consider Validation and Acquisition Validation first. There is little real distinction between these two process areas, since the purpose of both is the same: to satisfy the ultimate customer with products and services that provide the needed utility in the end-use environment. The value proposition is not different—dissatisfied customers are often as upset with the acquisition organization as with the supplier of the product that has not satisfied the users. So, the difference between Validation and Acquisition Validation is quite small.

With Verification and Acquisition Verification, however, the commonality is strong in one sense—both emphasize assurance that work products are properly verified by a variety of techniques, but each process area focuses on the work products produced and controlled in its own domain. So, whereas Verification focuses on the verification of supplier work products, Acquisition Verification focuses on the verification of acquirer work products.

The CMMI-ACQ product team saw the value of focusing on acquirer work products, such as solicitation packages, supplier agreements and plans, requirements documents, and design constraints developed by the acquirer. These work products require thoughtful verification using powerful tools, such as peer reviews, to remove defects. Defective requirements are among the most expensive defects to find and fix because they are often discovered late in development, so improvements in verifying requirements have great value.

Verifying the work products developed by the supplier is covered in Verification and is the responsibility of the supplier, who is presumably using CMMI-DEV. The acquirer may assist in verification activities, since such assistance may benefit both the acquirer and the supplier. Ultimately, however, the supplier performs the verification of the product or service it develops.

The acquirer’s interests during supplier verification activities are captured in two other process areas. During much of development, supplier deliverables are used as a way to gauge development progress. Monitoring that progress and reviewing the supplier’s technical solutions and verification results are covered by Acquisition Technical Management. The second, Agreement Management, covers ensuring that both parties perform according to the supplier agreement, including the acquirer’s acceptance of the acquired product.

All of the process areas discussed thus far (Verification, Validation, Acquisition Verification, Acquisition Validation, Acquisition Technical Management, and Agreement Management) have elements of the stuff we call “testing.” Testing is, in fact, a subset of the wider concept often known as “verification.” I’ll use my B-2 experience as an example.

Early in B-2 development, the B-2 System Program Office (SPO) prepared a test and evaluation master plan, or TEMP. This plan is required in DoD acquisition and demands careful attention to the various methods that the government requires for the contractor’s verification environment. The B-2 TEMP outlined the major testing activities necessary to deliver a B-2 to the using command in the USAF. This plan delineated a collection of classic developmental test activities called “developmental test and evaluation.” From a model perspective, these activities would be part of the supplier’s Verification activities during development. Fortunately, we had the supplier’s system test plan, an initial deliverable document to the government, to help us create the developmental test and evaluation portions of the TEMP. Receiving the right supplier deliverables to review and use is part of what the Solicitation and Supplier Agreement Development process area is about.

The TEMP also addressed operational test and evaluation activities, which included operational pilots and maintenance personnel to ensure operational utility. These activities map to both Validation and Acquisition Validation. From a model perspective, many of the operational elements included in the TEMP were the result of mission and basing scenarios codeveloped with the customer. These activities map to practices in Acquisition Requirements Development.

The TEMP also described a large number of ancillary test-related activities, such as wind tunnel testing and radar reflectivity testing. These earlier activities are best mapped to the practices of Acquisition Technical Management. They are the technical activities the acquisition organization uses to analyze the suppliers’ candidate solution and review the suppliers’ technical progress. As noted in Acquisition Technical Management, when all of the technical aspects have been analyzed and reviewed to the satisfaction of the acquirer, the system is ready for acceptance testing. In the B-2 example, that meant a rigorous physical configuration audit and functional configuration audit to account for all of the essential elements of the system. The testing and audits ensured that a fully capable aircraft was delivered to the using command. The physical configuration audit activities are part of the “Conduct Technical Reviews” specific practice in Acquisition Technical Management.

Much of the emphasis in CMMI-ACQ reminds acquirers of their responsibilities for the key work products and processes under their control. Many of us have observed the value of conducting peer reviews on critical requirements documents, from the initial delivery that becomes part of a Request for Proposal (RFP) to the oft-needed engineering change proposals. But my history with planning the testing efforts for the B-2 led me to point out a significant use of Acquisition Verification: the effective preparation of the TEMP. The test facilities typically were provided by the government, often a mix of DoD facilities and those of other government agencies, such as NASA and what is now the Department of Energy.

Many people have stated that “an untestable requirement is problematic.” Therefore, to complete a viable TEMP, the acquisition team must determine the criteria for requirements satisfaction and the verification environment that is needed to enable requirements satisfaction to be judged accurately. Here are a few of the questions that I believe complement Acquisition Verification best practices to help improve the quality of the government work product called the TEMP.

What confidence do we have that a specific verification environment, whether it is a government site or a contractor site, can accurately measure the performance we are demanding?

Are results obtained in a constrained test environment scalable to represent specified performance requirements?

Can limited experience be extrapolated to represent long-term needs for reliability and maintainability?

When might it be more cost-effective to accept data gathered at the suppliers’ facilities?

When might it be essential to use a government facility to ensure confidence in test results?

These and similar questions demonstrate the need for early attention to Acquisition Verification practices, particularly those under the first specific goal of Acquisition Verification, “Prepare for Verification.” These practices, of course, then support the specific goal 3 activities that verify acquisition work products. These work products, in turn, often provide or augment the verification environment in which the system being acquired demonstrates its performance. These questions also help to distinguish the acquiring organization’s need to ensure a viable verification environment from the more familiar need to support the suppliers’ verification of work products.

by Craig Meyers

The term interoperability has long been used in an operational context. For example, it relates to the ability of machines to “plug and play.” However, there is no reason to limit interoperability to an operational context.

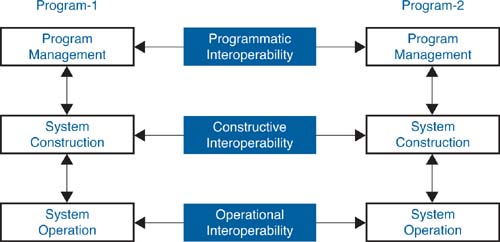

Recent work at the SEI has broadened the concept of interoperability to apply in other contexts. A main result of this work is the System of Systems Interoperability (SOSI) model [Morris 2004], a diagram of which appears in Figure 6.7. This model is designed for application to a system of systems context in which there are more and broader interactions than one typically finds in a program- and system-centric context.

Taking a vertical perspective of Figure 6.7, we introduce activities related to program management, system construction, and system operation. What is important to recognize, however, is the need for interaction among different acquisition functions. It is this recognition that warrants a broader perspective of the concept of interoperability. Figure 6.7 shows three aspects of interoperability: programmatic, constructive, and operational. Although operational interoperability is the traditional use of the term, we also introduce programmatic interoperability, constructive interoperability, and interoperable acquisition, which integrates them [Meyers 2008].

Programmatic interoperability is interoperability with regard to the functions of program management, regardless of the organization that performs those functions.

It may be natural to interpret programmatic interoperability as occurring only between program offices, but such an interpretation is insufficient. Multiple professionals collaborate on program management. In addition to the program manager, relevant participants may include staff members from a program executive office, a service acquisition executive, independent cost analysts, and so on. Each of these professionals performs a necessary function related to the management of a program. In fact, contractor representation may also be included. The report “Exploring Programmatic Interoperability: Army Future Force Workshop” [Smith 2005] provides an example of the application of the principles of programmatic interoperability.

The concept of constructive interoperability may be defined in a similar manner as programmatic interoperability. Constructive interoperability is interoperability with regard to the functions of system construction, regardless of the organization that performs those functions.

Again, it may be natural to think of constructive interoperability as something that occurs between contractors, but that would limit its scope. For example, other organizations that may participate in the construction of a system might include a subcontractor, a supplier of commercial off-the-shelf (COTS) products, independent consultants, an independent verification and validation organization, or a testing organization. And yes, a program management representative may also participate in the function of system construction. Each of these organizations performs a valuable function in the construction of a system.

An example of programmatic interoperability is the case in which one program is developing a software component that will be used by another program. For this transaction to occur, the program offices must interact on such tasks as schedule synchronization. Other interactions may be required, such as joint risk management. However, as we noted earlier, other organizations will play a role. For example, the development of an acquisition strategy may require interaction with the organizations responsible for its approval.

You can apply this example to the context of constructive interoperability as well. For one program office to provide a product to another, a corresponding agreement must exist among the relevant contractors. Such an agreement is often expressed in an associate contractor agreement (which would be called a supplier agreement in CMMI-ACQ). The separate contractors might also seek out suppliers jointly to maximize the chance of achieving an interoperable product.

The preceding example, although focusing on the aspects of interoperability, does not address the integration of those aspects. This topic enlarges the scope of discussion and leads us to define interoperable acquisition.

Interoperable acquisition is the collection of interactions that take place among the functions necessary to acquire, develop, and maintain systems to provide interoperable capabilities.

In essence, interoperable acquisition is the integration of the various aspects of interoperability. Returning to the previous example, although two program offices may agree on the need for the construction of a system, such construction will require interactions among the program offices and their respective contractors, as well as among the contractors themselves.

There are clearly implications for acquisition in the context of a system of systems[1] and CMMI-ACQ. For example, CMMI-ACQ includes the Solicitation and Supplier Agreement Development and Agreement Management process areas, which address how agreements are formed and managed. As the previous discussion illustrates, the nature of agreements (their formation and execution) is important in the context of a system of systems and interoperable acquisition. These agreements may be developed to serve acquisition management or to aid in the construction of systems that must participate in a larger context.

[1] We do not use the expression “acquisition of a system of systems,” as this implies acquisition of a monolithic nature that is counter to the concept of a system of systems in which autonomy of the constituents is a prime consideration. As a colleague once said, “Systems of systems are accreted, not designed.” This may be a bit strong, but it conveys a relevant and meaningful notion.

Other areas of relevance deal with requirements management and schedule management, as discussed in the reports “Requirements Management in a System of Systems Context: A Workshop” [Meyers 2006a] and “Schedule Considerations for Interoperable Acquisition” [Meyers 2006c], respectively. Another related topic is that of risk management considerations for interoperable acquisition. This topic is covered in the report “Risk Management Considerations for Interoperable Acquisition” [Meyers 2006b]. Some common threads exist among these topics, including the following:

• Developing and adhering to agreements that manage the necessary interactions

• Defining information, including its syntax, but also (and more importantly) its semantics

• Providing mechanisms for sharing information

• Engaging in collaborative behavior necessary to meet the needs of the operational community

Each of these topics must be considered in the application of CMMI-ACQ and CMMI-DEV to a system of systems context, perhaps with necessary extensions, to meet the goals of interoperable acquisition. Therefore, the application of maturity models to acquisition in a system of systems context is quite relevant!

by Brian Gallagher

CMMI-ACQ includes two practices, one each in Project Planning and Project Monitoring and Control, related to transitioning products and services into operational use. As shown in Figure 6.8, one of the primary responsibilities of the acquirer, in addition to the acceptance of products and services from suppliers, is to ensure a successful transition of capability into operational use, including logistical considerations initially as well as throughout the life of the product or service.

Planning and monitoring transition to operations and support activities involves the processes used to transition new or evolved products and services into operational use, as well as their transition to maintenance or support organizations. Many projects fail during later lifecycle phases because the operational user is ill-prepared to accept the capability into day-to-day operations. Failure also stems from the inability to support the capability delivered, due to inadequate initial sparing or the inability to evolve the capability as the operational mission changes.

Maintenance and support may not be the responsibility of the original supplier. Sometimes an acquisition organization decides to maintain the new capability in-house. In other cases, economic or other considerations may make it sensible to re-compete support activities. In these cases, the acquisition project must ensure that it has access to everything required to sustain the capability, including all design documentation as well as development environments, test equipment, simulators, and models.

The acquisition project is responsible for ensuring that acquired products not only meet their specified requirements (see the Acquisition Technical Management process area) and can be used in the intended environment (see the Acquisition Validation process area), but also that they can be transitioned into operational use to achieve the users’ desired operational capabilities and can be maintained and sustained over their intended lifecycles.

The acquisition project is responsible for ensuring that reasonable planning for transition into operations is conducted (see Project Planning specific practice 2.7, “Plan for Transition to Operations and Support”), clear transition criteria exist and are agreed to by relevant stakeholders, and planning is completed for product maintenance and support of products after they become operational. These plans should include reasonable accommodation for known and potential evolution of products and their eventual removal from operational use.

This transition planning should be conducted early in the acquisition lifecycle to ensure that the acquisition strategy and other planning documents reflect transition and support decisions. In addition, contractual documentation must reflect these decisions to ensure that operational personnel are equipped and prepared for the transition and that the product or service is supportable after delivery. The project also must monitor its transition activities (see Project Monitoring and Control specific practice 1.8, “Monitor Transition to Operations and Support”) to ensure that operational users are prepared for the transition and that the capability is sustainable once it is delivered.

Adequate planning and monitoring of transition activities is critical to success when delivering value to customers. The acquirer plays an important role in these activities by setting the direction, planning for implementation, and ensuring that the acquirer and supplier implement transition activities.

by Andrew D. Boyd and Richard Freeman

When the USAF consolidated various systems engineering assessment models into a single model for use across the entire USAF, the effort was significantly easier, largely because every model used to build the expanded USAF model was based on CMMI content and concepts.

In the late 1990s, pressure was mounting for the DoD to dramatically change the way it acquired, fielded, and sustained weapons systems. Faced with increasingly critical Government Accountability Office (GAO) reports and a barrage of media headlines reporting on multimillion-dollar program cost overruns, late deliveries, and systems that failed to perform at desired levels, the DoD began to implement what was called “acquisition reform.” Most believed that the root of the problem was the government’s hands-on approach, which kept it intimately involved in requirements generation, design, and production processes. The DoD exerted control primarily through government-published specifications, standards, methods, and rules, which were codified into contractual documents.

These documents directed, often in excruciating detail, how contractors were required to design and build systems. Many thought that the government was unnecessarily bridling commercial contractors, who argued strongly that, freed of these inhibitors, they could deliver better products at lower costs. This dual onslaught from the media and contractors resulted in sweeping changes that included rescinding a large number of military specifications and standards, slashing program documentation requirements, and greatly reducing the size of government acquisition program offices. The language of contracts was changed to specify the desired capability and to allow contractors the freedom to determine how best to deliver it. This action effectively transferred systems engineering to the sole purview of the contractors. Yet responsibility for the delivery of viable solutions remained squarely with the government, which was now relying heavily on contractors to deliver.

What resulted was a vacuum in which neither the government nor the contractors accomplished the necessary systems engineering. And over the following decade, the government’s organic systems engineering capabilities virtually disappeared. This absence of systems engineering capability became increasingly apparent on multiple-system (i.e., systems-of-systems) programs in which more than one contractor was involved. Overall acquisition performance did not improve, and in many instances it worsened. Although many acquisition reform initiatives were beneficial, it became increasingly clear that the loss of integrated systems engineering was a principal driver behind continued cost overruns, schedule slips, and performance failures.

One service’s response came from the USAF, which on February 14, 2003, announced the establishment of the Air Force Center for Systems Engineering (AF CSE). The AF CSE was chartered to “revitalize” systems engineering across the USAF. Simultaneously, three of the four major USAF acquisition centers initiated efforts to swing the systems engineering pendulum back the other way. To regain some level of capability, each center used a process-based approach to address the challenge. Independently, each of these three centers turned to the CMMI construct and tailored it to create a model for use within its program offices, molding it to meet the specific needs of that acquisition organization.

Recognizing the potential of this approach, and with an eye toward standardizing systems engineering processes across all of the USAF centers (i.e., acquisition, test, and sustainment), in 2006 the AF CSE was tasked to do the following:

• Develop and field a single Air Force Systems Engineering Assessment Model (AF SEAM).

• Involve all major USAF centers (acquisition, test, and sustainment).

• Leverage current systems engineering CMMI-based assessment models in various stages of development or use at USAF centers.

In summer 2007, following initial research and data gathering, the AF CSE established a working group composed of members from the eight major centers across the USAF (four acquisition centers, one test center, and three sustainment centers). Assembled members included those who had either built their individual center models or would be responsible for the AF SEAM going forward. The team’s first objective was to develop a consistent understanding of systems engineering through mutual agreement on process categories and associated definitions. Once this understanding was established, the team members would use these process areas and, building on the best practices of existing models, together develop a single AF SEAM with the following characteristics:

• It would be viewed by program managers as adding value and therefore would be “pulled” for use to aid in the following:

  ◦ Ensuring that standardized core systems engineering processes are in place and being followed

  ◦ Reducing technical risk

  ◦ Improving program performance

• It would be scalable for use by all programs and projects across the entire USAF:

  ◦ Based on self-assessment

  ◦ Capable of independent verification

• It would be a vehicle for sharing systems engineering lessons learned as well as best practices.

• It would be easy to maintain.

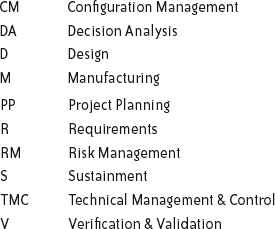

To ensure a consistent understanding of systems engineering across the USAF and to provide the foundation on which to build AF SEAM, the working group clearly defined the ten systems engineering process areas (presented in the following list in alphabetical order):

The model’s structure is based on the CMMI construct of process areas, specific goals, specific practices, and generic practices. AF SEAM has the ten process areas in the preceding list amplified by 33 specific goals, 119 specific practices, and 7 generic practices. Each practice includes a title, a description, typical work products, references to source requirements and/or guidance, and additional considerations to provide context. Although some process areas are largely an extract from CMMI, others are combinations of specific practices from multiple CMMI process areas and other sources. Additionally, two process areas not explicitly covered in CMMI were added, namely Manufacturing and Sustainment.

To aid in the establishment of a systems engineering process baseline and in turn achieve the various goals stated earlier, programs and projects will undergo one or more assessments followed by continuous process improvement. An assessment focuses on process existence and use and therefore serves as a leading indicator of potential future performance. The assessment is not a determination of product quality or a report card on the people or the organization (i.e., lagging indicators). To allow for full USAF-wide scalability, the assessment method is intentionally designed to support either a one- or two-step process. In the first step, each program or project performs a self-assessment using the model to verify process existence, identify local references, and identify work products for each specific practice and generic practice—in other words, “grading” itself. If leadership determines that verification is beneficial, a second independent assessment is performed. This verification is conducted by an external team that verifies the results of the self-assessment and rates each specific practice and generic practice as either “satisfied” or “not satisfied.” Results are provided back to the program or project as output of the independent assessment. The information is presented in a manner that promotes the sharing of best practices and facilitates reallocation of resources to underperforming processes. The AF SEAM development working group also developed training for leaders, self-assessors, and independent assessors, who are provided this training on a “just-in-time” basis.
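The one- or two-step assessment flow described above can be sketched as a simple data model. This Python is a hypothetical illustration, not part of AF SEAM itself: the practice identifiers are invented, the rule that a practice with no cited work products cannot be rated “satisfied” is an assumption, and the `verify` callback merely stands in for the independent team’s judgment.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Optional


class Rating(Enum):
    SATISFIED = "satisfied"
    NOT_SATISFIED = "not satisfied"


@dataclass
class PracticeRecord:
    """One specific or generic practice as recorded during an assessment."""
    practice_id: str  # hypothetical identifier, not an AF SEAM numbering scheme
    local_references: list[str] = field(default_factory=list)
    work_products: list[str] = field(default_factory=list)
    rating: Optional[Rating] = None  # set only by the independent team

    def has_evidence(self) -> bool:
        """Step 1 (self-assessment): at least one work product was identified."""
        return bool(self.work_products)


def independent_assessment(
    records: list[PracticeRecord],
    verify: Callable[[PracticeRecord], bool],
) -> dict[str, str]:
    """Step 2 (optional): an external team rates every practice.

    `verify` stands in for the team's review of the self-assessment evidence.
    """
    for record in records:
        satisfied = record.has_evidence() and verify(record)
        record.rating = Rating.SATISFIED if satisfied else Rating.NOT_SATISFIED
    return {r.practice_id: r.rating.value for r in records}


records = [
    PracticeRecord("SP-1", ["local procedure"], ["project plan"]),
    PracticeRecord("SP-2"),  # no evidence identified during self-assessment
]
print(independent_assessment(records, verify=lambda record: True))
# prints {'SP-1': 'satisfied', 'SP-2': 'not satisfied'}
```

Keeping the rating empty until step 2 mirrors the text’s distinction: the self-assessment “grades” the project by identifying evidence, while only the independent team assigns “satisfied” or “not satisfied.”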

The future of the AF SEAM looks bright. With a long history of using multiple CMMI-based models across the USAF, significant cultural inroads have been made to secure the acceptance of a process-based approach. The creation and use of a single USAF-wide Systems Engineering Assessment Model will aid significantly in the revitalization of sound systems engineering practices and in turn will facilitate the provision of mission-capable systems and systems of systems on time and within budget.

(The views expressed herein do not represent an official position of the DoD or the USAF.)

by Brian Gallagher

Most process improvement approaches share a pedigree that traces back to the foundation established by process improvement gurus such as Shewhart, Deming, Juran, Ishikawa, Taguchi, Humphrey, and others. The improvement approaches embodied in CMMI, Lean, Six Sigma, the Theory of Constraints, Total Quality Management, and other methods all embrace an improvement paradigm that can be boiled down to these simple steps:

- Define the system you want to improve.

- Understand the scope of the system.

- Define the goals and objectives for the system.

- Determine constraints to achieving objectives.

- Make a plan to remove the constraints.

- Learn lessons and do it again.

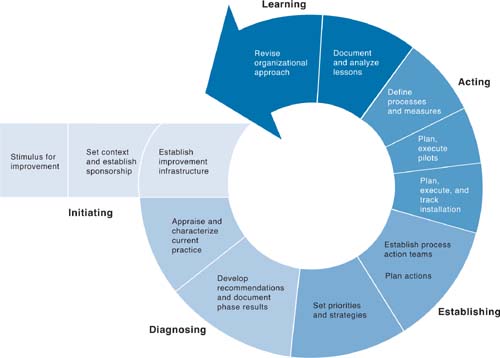

These simple steps form an improvement pattern that is evident in the Plan-Do-Check-Act (PDCA) improvement loop, Six Sigma’s Define, Measure, Analyze, Improve, Control (DMAIC) improvement methodology, the military’s Observe-Orient-Decide-Act (OODA) loop, and the SEI’s Initiating, Diagnosing, Establishing, Acting, and Learning (IDEAL) method shown in Figure 6.9.

All of these improvement paradigms share a common goal: to improve the effectiveness and efficiency of a system. That system can be a manufacturing assembly line, the decision process used by military pilots, a development organization, an acquisition project, or any other entity or process that can be defined, observed, and improved.

One mistake individuals and organizations make when embarking on an improvement path is to force a choice of one improvement methodology over another, as though the approaches were mutually exclusive. An example is committing an organization to CMMI or Lean Six Sigma or the Theory of Constraints before understanding how to take advantage of the tool kits each approach provides and selecting the tools that make the most sense for the culture of the organization and the problem at hand. The following case study illustrates how one organization took advantage of using the Theory of Constraints with CMMI.

One government agency had just completed a Standard CMMI Appraisal Method for Process Improvement (SCAMPI) appraisal with a CMMI model on its 12 most important customer projects, all managed within a Project Management Organization (PMO) under the direction of a senior executive. The PMO was a virtual organization consisting of a class of projects that the agency decided needed additional focus due to their criticality from a customer perspective. Although the entire acquisition and engineering staff numbered close to 5,000 employees, the senior executive committed to delivering these 12 projects on time, even at the expense of less important projects. Each project had a well-defined set of process requirements, including start-up and planning activities, and was subject to monthly Program Management Reviews (PMRs) to track progress, resolve work issues, and manage risk. With its clear focus on project success, the PMO easily achieved CMMI maturity level 2.

On the heels of the SCAMPI appraisal, the agency was directed to move much of its customer-facing functionality to the Web, enabling customers to take advantage of a wide variety of self-service applications. This Web-based system was a new technology for the agency, and many of its employees and contractors had experience only with mainframe or client/server systems. To learn how to successfully deliver capability using this new technology, the senior executive visited several Internet-based commercial organizations and found that one key factor of their success was the ability to deliver customer value within very short time frames, usually between 30 and 90 days. Armed with this information, the executive decreed that all Internet-based projects were to immediately establish a 90-day delivery schedule. Confident in this new direction and eager to see results, he asked that the Internet projects be included in the scope of the next SCAMPI appraisal to validate the approach.

The agency’s process improvement consultant realized that, given the timing of all the recent changes, a formal SCAMPI appraisal of an organization struggling to adopt a new, more agile methodology while learning a new Web-based technology under pressure to deliver in 90 days wasn’t the best option. She explored the idea of conducting a risk assessment or other technical intervention, but found that the word risk was overloaded in the agency’s context, so she needed a different approach. She finally suggested using the Theory of Constraints to help identify and mitigate some of the process-related constraints facing the agency.

The Theory of Constraints is a system-level management philosophy that suggests all systems may be compared to chains. Each chain is composed of various links with unique strengths and weaknesses. The weakest link (i.e., the constraint) in the chain is generally not eliminated; rather, it is strengthened by following an organized process, thus allowing for improvement in the system. By systematically identifying and strengthening the weakest link, an organization improves the system as a whole. The first step in improving the system is to identify all of the system’s constraints.
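The chain analogy can be made concrete with a small sketch. The Python below is illustrative only: the step names and capacity numbers are hypothetical, and “strengthening” is modeled simply as adding a fixed capacity boost to whichever link is currently weakest. It shows that the chain’s throughput equals its weakest link and that, once one constraint is strengthened, a different link may become the constraint.

```python
from dataclasses import dataclass


@dataclass
class Link:
    name: str
    capacity: float  # hypothetical units of work per period


def throughput(chain: list[Link]) -> float:
    """A chain can deliver no more than its weakest link allows."""
    return min(link.capacity for link in chain)


def find_constraint(chain: list[Link]) -> Link:
    """Identify the weakest link, i.e., the current constraint (first one on ties)."""
    return min(chain, key=lambda link: link.capacity)


def improve(chain: list[Link], boost: float, rounds: int) -> list[str]:
    """Repeatedly identify the constraint and strengthen it (not eliminate it).

    Returns the name of the link strengthened in each round.
    """
    history = []
    for _ in range(rounds):
        weakest = find_constraint(chain)
        history.append(weakest.name)
        weakest.capacity += boost
    return history


# Hypothetical delivery chain; names and numbers are illustrative only.
chain = [
    Link("requirements", 8),
    Link("development", 5),
    Link("testing", 6),
    Link("deployment", 9),
]
print(throughput(chain))                  # 5
print(improve(chain, boost=2, rounds=3))  # ['development', 'testing', 'development']
print(throughput(chain))                  # 8
```

In this run the constraint moves from development to testing and back again, which illustrates why the identify-and-strengthen cycle is repeated rather than performed once.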

A constraint is an obstacle to the successful completion of an endeavor. Think about how constraints would differ depending on the following endeavors:

• Driving from Miami to Las Vegas

• Walking across a busy street

• Digging a ditch

• Building a shed

• Acquiring a new space launch vehicle

• Fighting a battle

Constraints are not context-free; you can’t know your constraints until you know your endeavor. For the Internet project endeavor, the senior executive selected a rather wordy “picture of success”:

“The Internet acquisition projects are scheduled and defined based upon agency priorities and the availability of resources from all involved components, and the application releases that support those initiatives are planned, developed, and implemented within established time frames using established procedures to produce quality systems.”

The problem the consultant faced was how to systematically identify the constraints. Where should she look and how would she make sure she covered all the important processes employed by the agency? Since the agency was familiar with CMMI, she decided to use the practices in CMMI as a taxonomy to help identify constraints. Instead of looking for evidence of compliance with the practice statements, as you would in a SCAMPI appraisal, she asked interviewees to judge how well the practices were implemented and how that implementation affected successful achievement of the “picture of success.” Consider the difference between the two in an interview session:

[Table: SCAMPI Appraisal versus CMMI-Based Constraint Identification]

After six intense interview sessions involving 40 agency personnel and contractors, 103 individual constraint statements were gathered and affinity-grouped into the following constraint areas:

• Establishing, Communicating, and Measuring Agency Goals

• Legacy versus Internet

• Lack of Trained and Experienced Resources

• Lack of Coordination and Integration

• Internet-Driven Change

• Product Quality and Integrity

• Team Performance

• Imposed Standards and Mandates

• Requirements Definition and Management

• Unprecedented Delivery Paradigm

• Fixed 90-day Schedule

Further analysis using cause-and-effect tools helped to identify how the constraints affected one another. This analysis also allowed the consultant to produce the hierarchical interrelationship digraph depicted in Figure 6.10.

The results of this analysis helped the senior executive decide which constraints needed to be resolved to improve the quality of the delivered systems. The higher in the diagram the constraint you can address, the more impact you can achieve.
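One common way to build a hierarchical interrelationship digraph is to layer the cause-and-effect graph so that constraints driven by nothing else sit at the top. The following Python sketch assumes an acyclic digraph and uses made-up constraint names and edges; they are not the relationships shown in Figure 6.10. It also counts outgoing arrows, a frequently used signal that a constraint is a driver rather than an outcome.

```python
from collections import defaultdict

# Hypothetical cause -> effect edges; NOT the actual relationships in Figure 6.10.
edges = [
    ("goals", "coordination"),
    ("goals", "requirements"),
    ("coordination", "requirements"),
    ("requirements", "quality"),
    ("schedule", "quality"),
]


def levels(edges):
    """Layer an acyclic cause-and-effect digraph from the top down.

    Level 0 holds constraints that nothing else drives (root drivers); each
    later level holds constraints whose causes all sit in earlier levels.
    """
    nodes = {n for edge in edges for n in edge}
    indegree = {n: 0 for n in nodes}
    successors = defaultdict(list)
    for cause, effect in edges:
        successors[cause].append(effect)
        indegree[effect] += 1
    layers = []
    current = sorted(n for n in nodes if indegree[n] == 0)
    while current:
        layers.append(current)
        nxt = []
        for n in current:
            for m in successors[n]:
                indegree[m] -= 1
                if indegree[m] == 0:  # all of m's causes are now placed
                    nxt.append(m)
        current = sorted(nxt)
    if sum(len(layer) for layer in layers) != len(nodes):
        raise ValueError("digraph contains a cycle")
    return layers


def out_degree(edges):
    """Count how many other constraints each constraint drives."""
    counts = defaultdict(int)
    for cause, _ in edges:
        counts[cause] += 1
    return dict(counts)


print(levels(edges))      # [['goals', 'schedule'], ['coordination'], ['requirements'], ['quality']]
print(out_degree(edges))  # {'goals': 2, 'coordination': 1, 'requirements': 1, 'schedule': 1}
```

In this toy graph, “goals” sits at the top and drives the most other constraints, so addressing it would propagate furthest down the diagram.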

The senior executive decided that the “Imposed Standards and Mandates” constraint was outside his span of control. However, many of the other constraints were either directly within his control or at least within his sphere of influence. He decided to charter improvement teams to tackle the following constraints:

• Establishing, Communicating, and Measuring Goals

• Internet versus Legacy (combined with Lack of Coordination and Integration)

• Internet-Driven Changes

The improvement teams used a well-defined improvement approach that helped them explore each constraint in detail, establish an improvement goal, perform root cause analysis, identify barriers and enablers to success, and establish strategies and milestones to accomplish the goal.

The process improvement consultant recognized that CMMI alone wasn’t appropriate for the challenges facing the agency, and brought in concepts and tools from another improvement approach, the Theory of Constraints, to help the agency recognize and remove critical process-related constraints. She helped establish improvement strategies and helped the agency avert a pending crisis, setting it on an improvement course that served it well. Today, the agency’s PMO manages both legacy and Internet-based projects, is an exemplar for other agencies, and has demonstrated its process prowess by successfully achieving CMMI maturity level 3.

This case study also illustrates how using CMMI for process improvement fits into the DoD-wide Continuous Process Improvement (CPI)/Lean Six Sigma (LSS) Program, which includes the Lean, Six Sigma, and Theory of Constraints tools and methods for process improvement [DoD 2008].