15

Present and Defend: Second Mock

In this chapter, you will continue creating an end-to-end solution for your second full mock scenario. You already covered most of the scenario in Chapter 14, Practice the Review Board: Second Mock, but you still have plenty of topics to cover. In this chapter, you will continue analyzing and solutioning each of the shared requirements and then practice creating some presentation pitches.

To give you the closest possible experience to the real-life exam, you will go through a practice Q&A session and defend, justify, and change the solution based on the judges’ feedback.

After reading this chapter, you are advised to reread the scenario and try to solve it on your own. In this chapter, you are going to cover the following main topics:

- Continue analyzing the requirements and creating an end-to-end solution

- Presenting and justifying your solution

By the end of this chapter, you will have completed your second mock scenario. You will have become more familiar with the full mock scenario’s complexity and be better prepared for the exam. You will also have learned how to present your solution within the limited time and justify it during the Q&A.

Continue Analyzing the Requirements and Creating an End-to-End Solution

You covered the business process requirements earlier in Chapter 14, Practice the Review Board: Second Mock. By the end of that chapter, you had a good understanding of the potential data model and landscape. You have already marked some objects as potential LDVs.

Next, there is a set of data migration requirements that you need to solve. Once you complete them, you will have an even better understanding of the potential data volumes in each object of the data model, which will help you create an LDV mitigation strategy. Start with the data migration requirements.

Analyzing the Data Migration Requirements

This section’s requirements might impact some of your diagrams, such as the landscape architecture diagram or the data model.

The company has over 80 million customers in its legacy CRMs. The data is expected to contain a significant number of duplicates.

After analyzing this requirement, you can identify the following needs:

- Deduplicate customers in the legacy CRMs so that you end up with one record to represent each customer.

- Migrate active customers only. The resulting dataset is expected to contain nearly six million customers.

- Link the migrated active unique customers with their relevant records in the ERPs. This must be done without significant changes to the ERPs’ data.

Always keep in mind the full set of tools you can use to solve your client’s needs. You can read the preceding three requirements as follows:

- There is a need to deduplicate the legacy CRM customers and create a golden record for all the company’s customers.

- There is a need to select a subset of these customers (active customers only).

- There is a need to identify the customers in the ERPs and build a relationship between their records and the unique customer golden record. This will ensure that you can create a customer 360-degree view across all systems despite all of the ERPs’ redundancies.

In Chapter 2, Core Architectural Concepts: Data Life Cycle, you came across three different MDM implementation styles:

- Registry style: This style spots duplicates across connected systems by running matching and cleansing algorithms and assigning unique global identifiers to matched records.

- Consolidation style: In this style, the data is usually gathered from multiple sources and consolidated in a hub to create a single version of the truth, which is sometimes referred to as the golden record.

- Coexistence style: This style is similar to the consolidation style in the sense that it creates a golden record, but the master data changes can take place in either the MDM hub or the data source systems.

Now that you have been reminded of these three MDM styles, take a moment to think of your data strategy again, considering the shared requirements. Keep in mind that you can combine multiple MDM styles, probably in phases. Did you create a plan in your mind? Cross-check it with the following.

Deduplicating source records to create a consolidated, unique golden record can be achieved using an MDM tool that implements the consolidation style. Considering that deduplication can get complicated, especially in the absence of strong attributes (such as an email address or phone number in a specific format), you need a tool capable of executing fuzzy-logic matching rather than exact matching.
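To see why exact matching falls short, consider the minimal sketch below. It is an illustration only, not how Informatica MDM or any other product implements its match rules. The record fields (`name`, `postcode`) and the 0.85 threshold are assumptions, and the fuzzy score comes from Python's standard `difflib.SequenceMatcher`.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def similarity(a: str, b: str) -> float:
    """Return a similarity score between 0.0 and 1.0 for two strings."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def is_probable_duplicate(rec_a: dict, rec_b: dict, threshold: float = 0.85) -> bool:
    """Fuzzy-match on name, but require the normalized postcode to agree exactly.

    The fields and the threshold are hypothetical; real MDM tools let you tune
    match rules per attribute.
    """
    if normalize(rec_a["postcode"]) != normalize(rec_b["postcode"]):
        return False
    return similarity(rec_a["name"], rec_b["name"]) >= threshold


if __name__ == "__main__":
    a = {"name": "Jon Smith", "postcode": "SW1A 1AA"}
    b = {"name": "John Smith", "postcode": "sw1a 1aa"}
    print(normalize(a["name"]) == normalize(b["name"]))  # exact match: False
    print(is_probable_duplicate(a, b))                    # fuzzy match: True
```

An exact comparison treats "Jon Smith" and "John Smith" as two customers, while the fuzzy score (about 0.95 here) flags them as a probable duplicate. Requiring an exact postcode match is one way to keep false positives down when no strong identifier exists.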

Does that sound familiar? You came across the same challenge in Chapter 13, Present and Defend: First Mock. For this part of the solution, you can propose using Informatica MDM. Informatica’s ETL capabilities will also help you identify and select the active customers that will be migrated to Salesforce.

You finally reach the last part of the requirement, where there is a need to link the unique customers with the duplicated and redundant customer records in the ERPs. For that, you need an MDM tool that supports the registry style.

This tool will have its own fuzzy-logic matching and will use it to identify the different representations of the customer across all ERPs. Then, instead of merging records to create a golden record, it will simply generate a global ID and assign it to the customer records on all relevant systems. Once that is done, you can get a 360-degree view of the customer across all systems using the global ID, despite data redundancies. Informatica MDM supports the MDM registry style. There are also other well-known tools, such as Talend and IBM InfoSphere. Salesforce Customer 360 Data Manager (retired as of February 1st, 2021) was also one of these tools.
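The key difference from the consolidation style is that nothing gets merged. The sketch below shows that idea under simplifying assumptions: a hypothetical `match_key` (normalized name plus postcode) stands in for the MDM tool's fuzzy matching, and the `GID-` identifier format is invented for illustration.

```python
from collections import defaultdict
from itertools import count


def match_key(rec: dict) -> tuple:
    """Hypothetical match rule: normalized name plus postcode without spaces."""
    name = " ".join(rec["name"].lower().split())
    postcode = rec["postcode"].replace(" ", "").lower()
    return (name, postcode)


def assign_global_ids(records: list[dict]) -> dict:
    """Registry style: give every matched group one global ID; never merge records.

    Returns a cross-reference map of (system, local_id) -> global ID.
    The source records themselves are left untouched.
    """
    groups = defaultdict(list)
    for rec in records:
        groups[match_key(rec)].append(rec)

    next_id = count(1)
    registry = {}
    for members in groups.values():
        global_id = f"GID-{next(next_id):06d}"
        for rec in members:
            registry[(rec["system"], rec["local_id"])] = global_id
    return registry
```

Every ERP record keeps its own copy and local ID. The only new artifact is the cross-reference, which is what lets you assemble a 360-degree view without significant changes to the ERPs’ data.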

Note

Avoid proposing products just because they are new and assuming that they will solve all problems and challenges. Many challenges can be addressed using tools that have been on the market for years. Propose tools that you know would work based on your knowledge and experience.

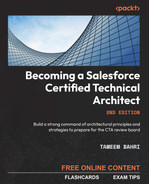

Figure 15.1 illustrates the difference between the consolidation and registry MDM styles:

Figure 15.1 – Example illustrating the difference between the MDM consolidation and registry styles

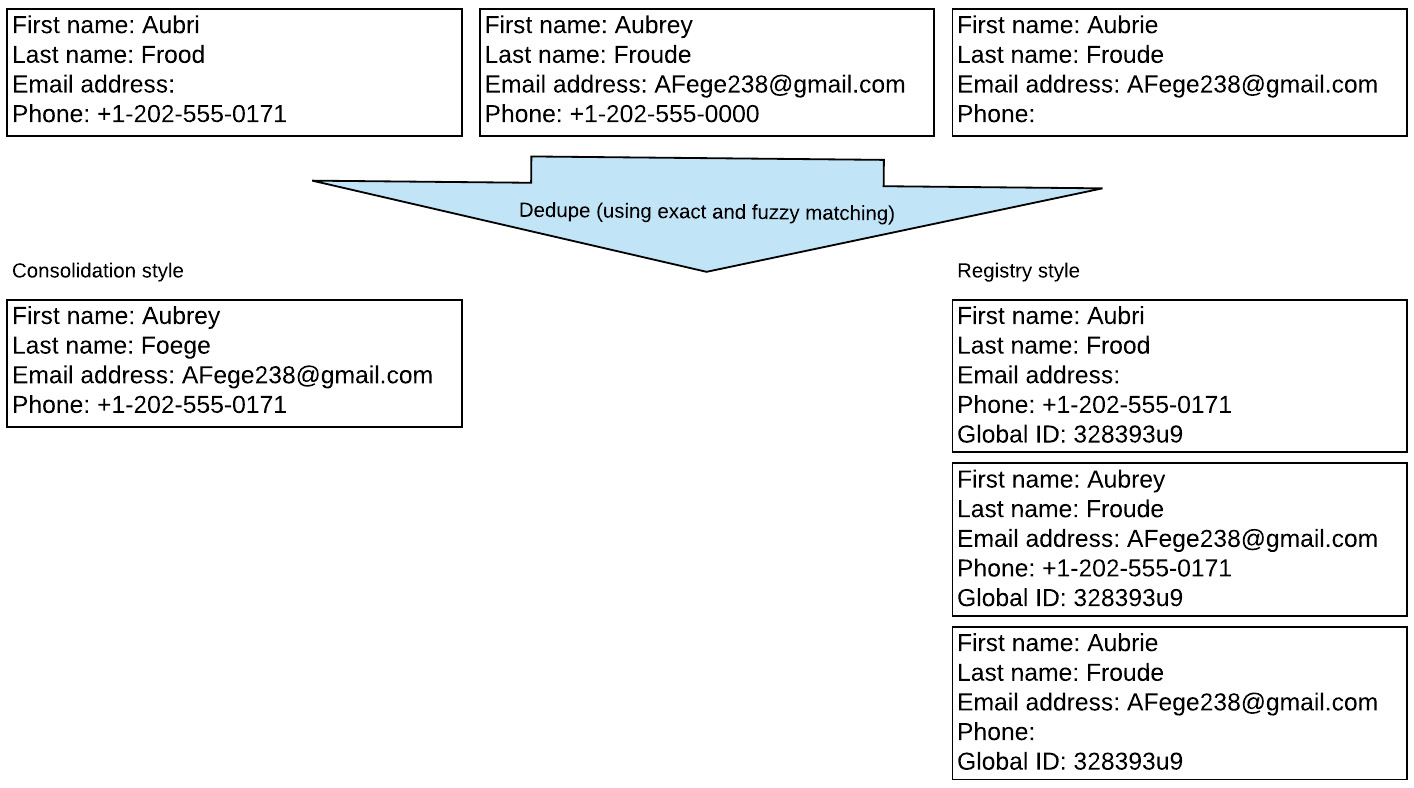

You are now clear on the proposed solution. To help make this even easier to communicate, you can create a simple diagram such as the following:

Figure 15.2 – Proposed data migration stages

Diagrams make it easier to present the solution and simpler for the audience to understand it. Feel free to add other details to your diagram, such as the expected input (for example, CSV files). You still need to explain the migration approach, plan, and considerations, but first go through the next requirement to get a better understanding of the scope.

The legacy CRMs have details for over 200 million meters. The vast number of records is due to significant record redundancy.

The actual number of meter records to migrate is expected to be less than 10 million. The company would like to clean up the data and maintain a single record for each meter to develop a 360-degree asset view.

This is another deduplication requirement. However, it is a bit different because meters are always strongly identified using a meter ID. The MDM tool still needs to consolidate the input records to create a unique golden record for each meter.
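Because the meter ID is a strong identifier, consolidation here is a grouping problem rather than a fuzzy-matching one. The following sketch assumes hypothetical field names and a simple survivorship rule (newer non-empty values win, while blanks keep older values); the real rules would be configured in the MDM tool.

```python
from datetime import date


def consolidate_meters(records: list[dict]) -> dict:
    """Collapse redundant meter records into one golden record per meter ID.

    Hypothetical survivorship rule: process records oldest first so that newer
    non-empty values overwrite older ones, while fields left blank in newer
    records keep their older values.
    """
    golden_by_meter: dict[str, dict] = {}
    for rec in sorted(records, key=lambda r: r["last_updated"]):
        meter_id = rec["meter_id"].strip().upper()  # the strong identifier
        golden = golden_by_meter.setdefault(meter_id, {})
        for field, value in rec.items():
            if value not in (None, ""):
                golden[field] = value
        golden["meter_id"] = meter_id  # store the normalized ID
    return golden_by_meter


if __name__ == "__main__":
    legacy = [
        {"meter_id": "m-001", "type": "gas", "location": "Basement", "last_updated": date(2019, 1, 1)},
        {"meter_id": "M-001", "type": "gas", "location": "", "last_updated": date(2022, 5, 1)},
        {"meter_id": "M-002", "type": "electricity", "location": "Garage", "last_updated": date(2021, 3, 3)},
    ]
    for meter_id, golden in consolidate_meters(legacy).items():
        print(meter_id, golden["location"], golden["last_updated"])
```

Applied to 200 million redundant legacy rows, the same grouping would yield one golden record per meter, in line with the fewer than 10 million expected.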

Luckily, you already have an MDM tool capable of delivering MDM consolidation. Update the landscape architecture diagram to include the migration interfaces. Also, update the list of integration interfaces. Your landscape architecture diagram could look like the following:

Figure 15.3 – Landscape architecture (fourth draft)

The list of data migration interfaces would look like the following:

![This shows the final version of Data migration interfaces. This lists the interface codes [Mig-001, 002, and 003] under Source/Destination, Integration Layer, Integration Pattern, Description, Security, and Authentication.](https://imgdetail.ebookreading.net/2023/10/9781803239439/9781803239439__9781803239439__files__image__B18391_15_04.jpg)

Figure 15.4 – Data migration interfaces (final)

You learned in Chapter 13, Present and Defend: First Mock, that you need to address the following questions:

- How are you planning to get the data from its data sources?

- How would you eventually load the data into Salesforce? What are the considerations that the client needs to be aware of, and how do you mitigate the potential risks?

- What is the proposed migration plan?

- What is your data migration approach (big bang versus ongoing accumulative approach)? Why?

You will cover these topics later in this chapter when you formulate the data migration presentation. For the time being, you need to review the LDV mitigation strategy. You have penciled in several objects as potential LDVs in the data model, but you still need to do the math to confirm whether they are indeed LDVs or not. Once you confirm the final set of LDVs, you need to craft a mitigation strategy. You will tackle this challenge next.

Reviewing Identified LDVs and Developing a Mitigation Strategy

Going through the scenario, you can identify several objects that could potentially be LDVs. List them all and do the math to determine whether they are LDVs or not. You can use a table such as the following:

| Object | Expected number of records | Is it an LDV? | Mitigation strategy |
| --- | --- | --- | --- |
| Account | 6,700,000 customer records (B2B and B2C). The company’s properties (a specific Account record type) are assumed to be 25% more than the number of customers, as some customers are related to more than one property. That is nearly 8.5 million records. In total, you can assume nearly 15,200,000 records. | Yes. | Keep this object as slim as possible by archiving inactive customers whenever possible. Accounts will be archived using Salesforce Big Objects. |
| Contact | Every Person Account consumes an account and a contact record. This means you have nearly six million B2C customer contacts. You can also have up to five contacts per B2B account, which is almost 1.4 million records. In total, you have nearly 7.4 million records. | Yes. Although the number of records is not massive, this object will be directly impacted by the Account archiving strategy. | Keep this object as slim as possible by archiving inactive contacts whenever possible. Contacts will be archived using Salesforce Big Objects. |
| Asset | There are six million B2C customers, who hold 1.5 subscriptions each on average, as some subscribe to both electricity and gas. 80% of B2B customers are signed up for both electricity and gas. Key customers will have an average of 10 properties. You roughly have 12,150,000 total assets. | Yes. | Keep this object as slim as possible by archiving inactive assets whenever possible. Assets will be archived using Salesforce Big Objects. |
| Contract_Line_item__c | This object is going to be similar in size to the Asset object. It will contain roughly 12,150,000 contract line items. | Yes. | Similar to the Asset object. This object will be archived using Salesforce Big Objects. |
| Contract | You can assume one active contract per customer (which covers one or more products). This means you have around 6,700,000 potential records. | Yes, although the number of records is not massive. | This object is tightly related to the Asset and Contract_Line_item__c objects. It should be archived in a similar way using Salesforce Big Objects. |
| Meter__c | There are 10 million records in this object. | No. | This object does not grow much in size; therefore, it is not considered an LDV. |
| Meter_Reading__c | There are 24.5 million records (per year). | Yes. | All meter readings more than two years old should be archived using Salesforce Big Objects. |
| Quote | A quote is generated for each new online signup process. It is assumed that there will be fewer than one million records per year. | No. | N/A |
| Opportunity | The Opportunity object is only used for B2B deals. It is assumed that there will be fewer than 500,000 records per year. | No. | N/A |
| Case | The company has an average of three cases per customer per year. This is nearly 20 million records, not including other types of cases (such as reporting a failure). | Yes. | All cases more than a year old should be archived using Salesforce Big Objects. |
| AccountContactRelation | This object will be heavily utilized to control record visibility. You can assume more than 10 million records (based on 8.5 million properties and 6.7 million customers; some customers are related to more than one property). | Yes. | This object is tightly related to the Account (Person Account, property) and Contact objects. It should be archived in a similar way to these objects. The AccountContactRelation object will be archived using Big Objects (for the sake of data integrity only, as it will no longer drive any record visibility). |

Table 15.1 – Main objects and identified LDVs
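It helps to have the arithmetic behind Table 15.1 at hand during the Q&A. The short script below re-derives the headline numbers from the table’s own inputs; it adds no new data. The only derived figure not stated in the table is the steady-state meter reading volume, which follows from keeping two years of readings on the platform.

```python
def ldv_estimates() -> dict:
    """Re-derive the headline volumes in Table 15.1 from the scenario's inputs."""
    b2c_customers = 6_000_000
    total_customers = 6_700_000                          # B2B + B2C
    b2b_customers = total_customers - b2c_customers      # 700,000

    # Properties are assumed to be 25% more than customers.
    properties = total_customers * 125 // 100            # 8,375,000 (rounded to ~8.5M in the table)
    accounts = total_customers + 8_500_000               # 15,200,000, using the table's rounded figure

    # One contact per Person Account plus the ~1.4M B2B contacts from the table.
    contacts = b2c_customers + 1_400_000                 # 7,400,000

    # Three cases per customer per year, before other case types.
    cases_per_year = total_customers * 3                 # 20,100,000 (~20M)

    # Readings are kept for two years before archiving: the steady state on-platform.
    meter_readings_on_platform = 24_500_000 * 2          # 49,000,000

    return {
        "b2b_customers": b2b_customers,
        "properties": properties,
        "accounts": accounts,
        "contacts": contacts,
        "cases_per_year": cases_per_year,
        "meter_readings_on_platform": meter_readings_on_platform,
    }


if __name__ == "__main__":
    for name, value in ldv_estimates().items():
        print(f"{name:>28}: {value:,}")
```

Note how the retention rule caps Meter_Reading__c at roughly 49 million on-platform records; without archiving, that object would keep growing by 24.5 million records every year.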

You have identified several LDV objects, many of which you cannot shift to an off-platform solution because the data must exist in Salesforce to execute business logic (such as auto-renewals and lead generation).

You can mitigate these objects’ LDV impact by keeping them as slim as possible and archiving records whenever possible. Some business rules have been shared in the scenario, and you can assume some others. The company does not currently have a data warehouse to archive the records. You can either propose one, archive to Heroku, or archive to Salesforce Big Objects.

You do not have Heroku in the landscape yet, but do not let that stop you from proposing it. Data archiving is reason enough to justify it. However, keep in mind that Salesforce Big Objects can also be used in such use cases. You came across a comparison of Salesforce Big Objects and Heroku PostgreSQL archival in Chapter 7, Designing a Scalable Salesforce Data Architecture. For this scenario, you can choose Salesforce Big Objects.

You can populate the Big Objects using Apex, but there is a more scalable and easier-to-manage way. You already have an ETL tool in the landscape, which you can utilize. Informatica will be handling all the archiving jobs from sObjects to Big Objects.
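Whichever tool runs the archiving jobs, it needs the same selection rule. Below is a minimal sketch of that rule using the retention periods from Table 15.1. It is not an Informatica mapping; the `record_date` field is hypothetical, and which date actually drives retention (created, closed, or reading date) is an assumption you would confirm with the business.

```python
from datetime import date

# Retention periods from Table 15.1, approximated in days.
RETENTION_DAYS = {
    "Case": 365,                  # cases older than one year
    "Meter_Reading__c": 2 * 365,  # readings older than two years
}


def select_for_archive(records: list[dict], object_name: str, today: date) -> list[str]:
    """Return the IDs of records that have outlived their on-platform retention.

    Each record is a dict with an "id" and a hypothetical "record_date".
    The archiving job would copy these rows to the matching Big Object
    before deleting them from the source object.
    """
    limit = RETENTION_DAYS[object_name]
    return [r["id"] for r in records if (today - r["record_date"]).days > limit]
```

Keeping the retention periods in one table makes it easy to explain, and later adjust, the archiving policy per object.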

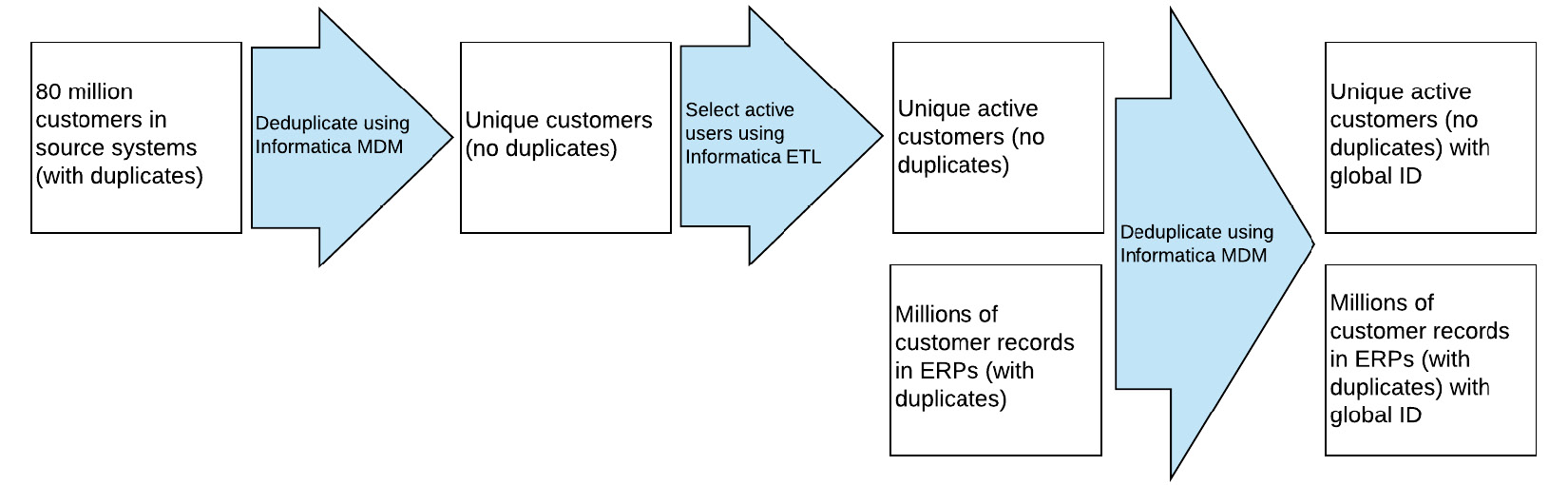

Objects mastered in the ERPs, such as Invoice_Header__x, Invoice_Line_Item__x, and Payment__x, are not a concern considering that the data is stored outside the Salesforce Platform. Update your landscape architecture, list of interfaces, and data model. Your data model could look like the following:

Figure 15.5 – Data model (fourth draft)

Continue onward and start analyzing the accessibility and security requirements, which is usually the second most tricky part of an exam scenario.

Analyzing the Accessibility and Security Requirements

The accessibility and security section requirements are usually the second most difficult to tackle after the business processes requirements. In contrast to the business process requirements, they do not tend to be lengthy, but rather more technically challenging.

You might need to adjust your data model, role hierarchy, and possibly your actors and licenses diagram. The good news is that there is a limited number of possible sharing and visibility capabilities available within the platform. You have a small pool of tools to choose from. However, you must know each of these tools well to select the correct combination. Now, start with the first shared requirement.

Key customers and their meter readings are only visible to the Key customer manager.

In the proposed solution for the key customer management process in Chapter 14, Practice the Review Board: Second Mock, you designed your solution so that a Key customer manager would eventually own the key customer’s business account. You also noted that other relevant Key customer managers would be added to the account team. This will ensure these users and their managers have access to the required Account record.

The OWD of the Account object is private, which means other users cannot access these records. You can grant the support agents visibility using criteria-based sharing rules. The support agents require access to all accounts, including key customers. You can create a sharing rule that shares all Account records with the support agent role; users above that role in the role hierarchy inherit the same access.

The Meter_Reading__c object is linked via a master/detail relationship with the relevant asset, which, in turn, is related to a property (a special Account record type). To fulfill the requirement, you need to ensure that the property record is visible to the right users only.

The parent account relationship is not enough to grant record visibility. A possible approach would be to copy the account team members to all relevant properties. You can rely on a Salesforce flow to do the job, but you need a condition to control it. The condition could simply rely on an Is_Key_Customer__c flag on the business account.

Once that flag is checked, the Salesforce flow will copy all account team members to the relevant properties. The flow will also run every time an account team member is added to, or removed from, the business account.
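The flow’s core logic is a set comparison. The Python sketch below is only a model of what the flow would compute, not flow metadata. It assumes that property teams are driven entirely by the business account, and it treats unchecking Is_Key_Customer__c as removing all propagated members, which is an assumption beyond the requirement.

```python
def sync_property_teams(is_key_customer: bool, account_team: set[str],
                        property_teams: dict[str, set[str]]) -> tuple[set, set]:
    """Work out which property team members to add or remove.

    account_team: user IDs on the business account's team.
    property_teams: property ID -> user IDs currently on that property's team.
    Returns (to_add, to_remove) as sets of (property_id, user_id) pairs.
    """
    current = {(pid, uid) for pid, team in property_teams.items() for uid in team}
    if is_key_customer:
        desired = {(pid, uid) for pid in property_teams for uid in account_team}
    else:
        desired = set()  # hypothetical: unflagging removes all propagated members
    return desired - current, current - desired
```

The same calculation also covers a newly linked property: it simply arrives with an empty team, so every account team member lands in `to_add`.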

You will also need another flow that fires upon linking a property to a business account to automatically copy the account team members to the newly connected property record. Update your data model diagram and move on to the next requirement, which begins with the following:

The Key customer manager should be able to delegate the visibility of a customer account to another manager for a specific period.

Once the specified period of visibility is passed, the record should not be visible anymore to the delegated manager.

Salesforce provides a way to delegate approvals to other users, but it does not offer the requested functionality out of the box. If you are not aware of a third-party product (for example, from AppExchange) that offers such a capability, you need to design it yourself.

You can propose doing this in multiple ways, including the following:

- Define a new account team role with a name that indicates a predefined duration (for example, Temp Admin – seven days). The Key customer manager can add a new account team member using that role, which grants the user access to the Account record. You can then have a scheduled job that queries records based on their creation date and team role (for example, query all AccountTeamMember records created more than seven days ago that have the Temp Admin – seven days role) and deletes them. This ensures that the delegated users do not keep access beyond the allowed period.

- Create similar logic using a new custom object. You can define the exact start and end dates on that custom object. A batch job would then create or delete account team member entries based on the dates in the custom object. You can even extend this solution beyond the Account object to include other standard or custom objects. In that case, you will not be utilizing account teams. Instead, you would create share records via Apex.

This solution offers more customization capabilities and enables more advanced use cases, such as defining time-based delegated sharing for all records of a particular object.

Pick the second option, as it is more flexible and future-proof. Do not hesitate to explain both options during the presentation if you have enough time.
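The heart of the second option is a daily comparison between the delegations that should be active today and the shares that currently exist. The sketch below models one row of the custom object as a hypothetical `Delegation` dataclass (the field names are illustrative, not the object’s final schema). It assumes that end dates are inclusive and that the job only tracks shares it created itself.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Delegation:
    """Hypothetical stand-in for one Delegated_Record_Access__c row."""
    record_id: str
    delegate_user_id: str
    start_date: date
    end_date: date  # inclusive: access is revoked the day after this date


def plan_share_changes(delegations, existing_shares, today):
    """Work out which share entries a daily batch job should create or delete.

    existing_shares holds the (record_id, user_id) pairs this job created
    earlier, so deleting from it never touches access granted by other means.
    """
    active = {
        (d.record_id, d.delegate_user_id)
        for d in delegations
        if d.start_date <= today <= d.end_date
    }
    return active - existing_shares, existing_shares - active
```

In the actual solution, the batch job would turn the two returned sets into share record inserts and deletes via Apex. Future-dated delegations are simply ignored until their start date arrives.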

Now, introduce a new custom object, Delegated_Record_Access__c, add it to the data model, and move on to the next requirement.

A complaint is only visible to the agent who is managing it and their manager.

Complaints are modeled as cases in your solution. This means the Case object’s OWD will be private. The agent managing a complaint will also own it, making the record visible to the agent and the agent’s manager by default.

To also give the designated superusers access to complaints, you can simply grant them the View All permission on the Case object. But this will grant them visibility to all types of cases, not just complaints. Alternatively, you can create a public group, add the designated users to it, and then create a criteria-based sharing rule to share all complaints with it.

Be careful while proposing a solution that relies on the View All or Modify All object permission. The advantage of using these permissions is that they allow users to access records without evaluating the record-sharing settings. This enhances performance. However, they might be granting the users more access than they should. It is recommended to avoid them unless there is a valid reason to justify using them. You covered that earlier in Chapter 6, Formulating a Secure Architecture in Salesforce.

Inquiries should be visible to all support agents.

Inquiries are modeled as cases in your solution. You can create a criteria-based sharing rule to share all inquiries with the support agents’ role.

Maintenance partners’ records should be visible to the support agents only.

Maintenance partner account records should be visible, but not editable, to all support agents. You can achieve that by creating a criteria-based sharing rule to share the relevant accounts with the support agents’ role. The access level on the sharing rule can be set to Read Only.

Support agents who manage the direct relationship with a partner will also own the partner’s Account record. Therefore, the support agents have edit permission by default.

B2B customers should be able to manage all properties and meters related to their account.

You already solved this requirement in Chapter 14, Practice the Review Board: Second Mock, while designing the data model. You utilized a combination of sharing sets and AccountContactRelation records to achieve the desired behavior.

It is worth mentioning that sharing sets were available for Customer Community licenses only in the past, but Salesforce extended that feature to both the Partner Community and Customer Community Plus licenses in Summer 2018 (at that time, it was in public beta).

Note

You can find more details about the enablement of sharing sets for the Partner Community licenses at the following link: https://packt.link/4N3Di.

Now, move on to the next and final requirement.

B2C customers should be able to manage all their related properties. It is common to have a B2C customer associated with more than one property.

You solved this requirement already in Chapter 14, Practice the Review Board: Second Mock, as part of the proposed data model. You used Person Accounts to represent B2C customers. You utilized a combination of sharing sets and AccountContactRelation records to allow community users to access multiple properties (an Account record type).

This concludes the accessibility and security section requirements. By now, you have covered nearly 80–85% of the scenario (complexity-wise). You still have a few more requirements to solve, but you have solved most of the tricky topics. Continue pushing forward as the next target is the reporting requirements.

Analyzing the Reporting Requirements

In Chapter 13, Present and Defend: First Mock, you came to know that reporting requirements could occasionally impact your data model, particularly when deciding to adopt a denormalized data model to reduce the number of generated records.

Reporting could also impact your landscape architecture, list of integrations, or the licenses associated with the actors. Go through the requirements shared by the company and solve them one at a time.

The Global SVP of service would like a report showing service requests handled by the maintenance partners for a given year compared to data from four other years.

Maintenance requests are modeled as cases in the proposed solution, where all cases more than a year old are going to be archived. Although the data technically still exists on the platform (in Big Objects), you cannot use standard reports and dashboards to report on it. There are two potential ways to fulfill this requirement:

- Using Batch Apex to extract aggregated data: You can use Batch Apex (or the Bulk API) to extract and aggregate your archived data and load it into a custom object. You can then use standard reports and dashboards with this object. In the past, it was also possible to use Async SOQL for the extraction and aggregation, but Salesforce has announced plans to retire Async SOQL by the Summer ’23 release.

- Using an analytics tool such as CRM Analytics: This option allows further flexibility and the ability to drill into the data behind dashboards. CRM Analytics (formerly Einstein Analytics and Tableau CRM) has a connector that can retrieve data from Big Objects.
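To make the first option concrete, the aggregation step boils down to grouping archived cases by year. The Python sketch below models what the Batch Apex job would produce; it is not Apex code. The `closed_date` and `handled_by_partner` fields are hypothetical, and the output rows stand in for records of the custom summary object.

```python
from collections import Counter
from datetime import date


def yearly_partner_case_counts(archived_cases, first_year, last_year):
    """Aggregate archived maintenance cases into one summary row per year.

    Only partner-handled cases in the requested range are counted. Years with
    no cases still get a row, so the report shows gaps explicitly.
    """
    counts = Counter(
        c["closed_date"].year
        for c in archived_cases
        if c["handled_by_partner"] and first_year <= c["closed_date"].year <= last_year
    )
    return [{"year": y, "case_count": counts[y]} for y in range(first_year, last_year + 1)]


if __name__ == "__main__":
    sample = [
        {"closed_date": date(2020, 2, 1), "handled_by_partner": True},
        {"closed_date": date(2021, 3, 5), "handled_by_partner": False},
    ]
    # A given year compared with four other years means a five-year window.
    for row in yearly_partner_case_counts(sample, 2019, 2023):
        print(row)
```

Because only a handful of summary rows are produced, standard reports can handle them easily. You would still need to build and maintain the job, which is part of the trade-off against CRM Analytics.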

The second approach does not require custom development and provides more flexibility to embrace future requirements. This is a justified reason to use it. Update your landscape architecture and list of integration interfaces accordingly, then move on to the next requirement.

The Global SVP of service would like a dashboard showing the number of inquiries and complaints received and resolved, broken down by country and region.

This requirement can be fulfilled using standard reports and dashboards, assuming that the past year’s data is enough. The SVP already has access to the mentioned data. The question did not specify a timeframe for the data. Avoid overcomplicating things for yourself and simply assume that data from the past year is good enough to meet expectations. Now, move on to the next requirement.

Key customer managers would like a set of business intelligence reports showing business improvements gained by switching key customers from the previous tariffs to new tariffs.

Reporting requirements that indicate business intelligence are generally beyond the grasp of the standard platform capabilities. Standard reports and dashboards will fall short of such requirements, except if you invest in developing a custom solution that creates aggregated data that you can report on.

For such requirements, you should consider an analytics and business intelligence tool such as CRM Analytics. Luckily, you already have it in your landscape architecture. You can use it for this requirement too. Ensure you call that out during your presentation.

The company would like to offer its customers a dashboard showing the change in their consumption across the past two years.

You can maintain meter readings on the platform for two years using the custom Meter_Reading__c object. You can easily develop a report that shows the change in consumption using that object.
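The calculation behind such a report or chart is simple. The sketch below assumes that each reading holds the consumption for its billing period rather than a cumulative meter index; if the readings are cumulative, you would first take differences between consecutive readings. The input shape is hypothetical.

```python
from collections import defaultdict


def consumption_change(readings: list[tuple[int, float]]) -> tuple[float, float, float]:
    """Compare total consumption in the latest year with the year before it.

    readings: (year, consumption) pairs, each covering one billing period.
    Returns (previous_total, latest_total, percent_change).
    """
    totals: dict[int, float] = defaultdict(float)
    for year, consumed in readings:
        totals[year] += consumed
    latest = max(totals)
    previous_total = totals.get(latest - 1, 0.0)
    if previous_total == 0:
        raise ValueError("no consumption recorded for the previous year")
    latest_total = totals[latest]
    percent = (latest_total - previous_total) * 100 / previous_total
    return previous_total, latest_total, percent
```

Two years of on-platform readings is exactly what this comparison needs, which matches the archiving rule for Meter_Reading__c.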

Are you missing anything here? Yes, Customer Community users do not have access to standard reports and dashboards, but Customer Community Plus users do. Should you adjust the proposed solution and use Customer Community Plus licenses with both B2C and B2B customers?

The answer is no. You selected the B2C licenses based on solid logic. You had millions of customers, and you needed a license suitable for that number of users. Do not lose faith in your logic because of such requirements. Hold your ground and think of a potential solution.

You can easily develop a custom Lightning component with a decent graph showing user consumption. This will require custom development (which is usually best avoided in the Salesforce ecosystem), but it is well justified.

Switching to Customer Community Plus license will have far more impact on the solution. The impact will not only be from a cost perspective but also, more importantly, from a technical perspective. Customer Community Plus users consume portal roles, which are limited. You covered that in detail in Chapter 6, Formulating a Secure Architecture in Salesforce.

Stick with the proposed Customer Community licenses and use a custom-developed Lightning component to fulfill this requirement. Based on the required number of users and the limitations of Customer Community Plus licenses, the rationale is clear and the design decision is justified.

That concludes the requirements of the reporting section. The last part of the scenario normally lists requirements related to the governance and development life cycle. Keep that momentum going and proceed to tackle the next set of requirements.

Analyzing the Project Development Requirements

This part of the scenario would generally focus on the tools and techniques used to govern the development and release process in a Salesforce project. As mentioned before in Chapter 13, Present and Defend: First Mock, the artifacts you created earlier in Chapter 10, Development Life Cycle and Deployment Planning, such as Figure 10.3 (Proposed CoE structure), Figure 10.4 (Proposed DA structure), and Figure 10.7 (Development life cycle diagram (final)), are handy for solving this part of the scenario.

You need to fully understand and believe in the values that organizational structures such as the CoE or the Design Authority (DA) bring. You need to know why customers should consider CI/CD—not just the market hype, but the actual value brought by these techniques to the development and release strategy.

Next, go through the different company requirements and figure out how to put these artifacts into action to resolve some of the raised challenges. Start with the first requirement.

The company would like to start realizing value quickly and get Salesforce functionalities as soon as possible.

This requirement represents a common trend in today’s enterprise world. Enterprises are all eager to switch to a more agile fashion of product adoption. It is simply not acceptable for enterprises to wait for years to start realizing their investment’s value. They are willing to embark on an understandably long journey to roll out a new system across the enterprise fully, but they want to start reaping some of the value as soon as possible.

This is why the concept of a minimum viable product (MVP) has gained so much traction in the past few years. The MVP concept promises to deliver value quickly with minimal effort and, therefore, minimal financial risk. An MVP contains the core functionalities that the full product would eventually include, and additional features can be developed around and extended from them over time.

Think about it this way. You can theoretically start reaping the benefits of your newly built house as long as you have it in a habitable form. You can later make it beautiful by adding decorations, tidying up the garden, setting up a nice garage, and so on.

The MVP allows enterprises to start gaining benefits without waiting until the end of the program. This effectively reduces the overall risk as the users will start using the new system in a matter of weeks or a few months rather than years.

Note

The art of determining what belongs to an MVP scope and what does not is out of scope for this book and is at the heart of modern product management. You can read more about this in other books, such as Lean Product Management by Mangalam Nandakumar.

Solid product management alone will not be enough to develop a scalable MVP solution. In real life, you need to combine that with the right technical guidance (offered by the DA), overall governance (provided by the CoE), and the right delivery methodology. Otherwise, you could risk developing an MVP that does not extend or scale well.

This is particularly relevant to Salesforce projects because the platform is flexible, dynamic, and full of ready-to-use functionalities. This makes it tempting to quickly put together an MVP solution that, without proper planning and governance, could easily miss its future potential.

Coming back to the company’s requirement, this can be met by adopting an MVP style of product design and development. In your presentation, you need to briefly explain what an MVP solution is, the value it brings, and how you can ensure it meets its future potential. Move on to the next requirement.

The team maintaining the ERPs works in a six-month release cycle, and they are unable to modify their timeline to suit this project.

This is a potential risk, and you need to bring up any foreseen risks during your presentation. Considering the ERPs’ release cycle, you might encounter situations where a Salesforce release is held back until the next ERP release. This means you will need to carefully select and manage the Salesforce features included in each release.

Moreover, you should have the right environment structure to control feature developments and the right integration strategy to enable loose coupling.

The proposed environment structure and development life cycle strategy should cater to these requirements and clearly explain how they would address this risk. You will cover this in the presentation pitch later in this chapter.
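To make the release-selection risk concrete, the following minimal sketch shows one way to reason about which Salesforce features can ship in a given release and which must be held back until the ERP release they depend on. It is purely illustrative: the `Feature` structure, the hold-back rule, and the feature names are hypothetical assumptions, not part of the scenario.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Feature:
    name: str
    # Hypothetical field: date of the ERP release this feature depends on (None = no ERP dependency).
    erp_dependency: Optional[date] = None


def releasable(features: list[Feature], salesforce_release: date) -> tuple[list[str], list[str]]:
    """Split features into those that can ship in a Salesforce release and those held back.

    Assumed rule for this sketch: a feature is held back when the ERP release it
    depends on has not shipped by the Salesforce release date.
    """
    ready, held = [], []
    for feature in features:
        if feature.erp_dependency is None or feature.erp_dependency <= salesforce_release:
            ready.append(feature.name)
        else:
            held.append(feature.name)
    return ready, held
```

For example, a console macro with no ERP dependency can ship immediately, while a feature that needs a new ERP interface waits for the ERP’s six-monthly release. A loosely coupled integration layer keeps that waiting list short.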

Historically, the customer support team is used to high-productivity systems, and they have a regulatory requirement to handle calls in no more than 10 minutes.

You can improve the productivity of the customer support team using the Service Cloud Console (also known as Lightning Service Console). The console also supports keyboard shortcuts, which help agents work faster and meet the company’s expectations. Service Cloud Voice allows automatic contact lookup based on the caller’s phone number. Salesforce macros can also be used to speed up repetitive tasks (such as sending an email to the customer and updating the case record with a single click).

All these features will further improve the productivity and response time of the customer support team.

The company would like to get support in identifying potential project risks.

You have already come across three potential risks:

- Designing and governing the MVP solution

- Aligning the relatively slow release cycle of the ERPs with the quick-release cycle adopted by Salesforce

- Managing the user expectations and performance while switching to a cloud-/browser-based solution compared to a high-productivity desktop application

You need to call each of these out during the presentation and clearly explain how you plan to mitigate each. You will do that later in this chapter.

The company would like to have a clear, traceable way to track features developed throughout the project life cycle.

In Chapter 10, Development Life Cycle and Deployment Planning, you came across several techniques to govern a release cycle, including tracking developed features.

You have also learned that the best mechanism to handle such situations in Salesforce is to implement source code-driven development using a multi-layered development environment.
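With source code-driven development, every change is a commit in source control, so feature traceability can be derived from the commit history. The sketch below assumes a hypothetical team convention in which each commit message references a user story ID such as `US-123`; it maps stories to commits and flags commits that reference no story.

```python
import re
from collections import defaultdict

# Assumed team convention (hypothetical): commit messages reference story IDs like "US-123".
STORY_ID = re.compile(r"\bUS-\d+\b")


def features_by_story(commit_log: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Map each user story ID to the commit hashes that reference it.

    commit_log is a list of (commit_hash, message) pairs, for example parsed from
    the output of `git log --pretty=format:%h%x09%s`.
    """
    trail: dict[str, list[str]] = defaultdict(list)
    for commit_hash, message in commit_log:
        for story in STORY_ID.findall(message):
            trail[story].append(commit_hash)
    return dict(trail)


def untracked_commits(commit_log: list[tuple[str, str]]) -> list[str]:
    """Return commits that reference no user story, which would break the audit trail."""
    return [commit_hash for commit_hash, message in commit_log if not STORY_ID.search(message)]
```

In practice, release management tools can enforce such a convention, but the principle is the same: the repository history, not a spreadsheet, is the source of truth for what was built and when.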

Source code-driven development will allow you to maintain a trackable audit trail that shows the developed features at any point in your project life cycle. This requirement is closely related to the next one, so go through both to formulate a comprehensive response. The next requirement is as follows:

The company is looking for recommendations for the right environment management strategy to ensure the proper tests are executed at each stage.

This requirement could be asked in several different ways. The answer will always lead to what you learned in Chapter 10, Development Life Cycle and Deployment Planning. The multi-layered development environment, automated release management, and test automation tools and techniques should be used to fulfill this requirement.
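One way to express "the proper tests are executed at each stage" is as a set of test gates per environment. The sketch below is hypothetical: the environment names and suite names (Apex unit tests, static analysis, and Provar regression and smoke packs) are illustrative assumptions, and it does not use any Copado or Provar API.

```python
# Hypothetical test gates per environment; suite names are illustrative only.
TEST_GATES = {
    "Local QA": {"apex_unit"},
    "CI": {"apex_unit", "static_analysis"},
    "Shared QA": {"apex_unit", "provar_regression"},
    "UAT": {"provar_smoke"},
    "Staging": {"apex_unit", "provar_smoke"},
}


def can_promote(environment: str, results: dict[str, bool]) -> bool:
    """A change may leave an environment only if every suite gated there has passed.

    Suites missing from `results` count as not passed; environments without a gate pass.
    """
    required = TEST_GATES.get(environment, set())
    return all(results.get(suite, False) for suite in required)
```

The design choice here is that unit tests run early and often (close to the developer), while slower end-to-end UI tests run in shared environments where all streams have been merged.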

You saw a sample development life cycle diagram in Chapter 10, Development Life Cycle and Deployment Planning (Figure 10.7, Development life cycle diagram (final)), which you used as the base for the proposed environment management and development life cycle diagram in Chapter 13, Present and Defend: First Mock. You will use the same diagram as the base for this scenario too. You can assume a single development stream in this case. Your diagram could look like the following:

Figure 15.6 – Development life cycle diagram (final)

You will notice that you still have a CI sandbox (also known as the build sandbox) and QA sandboxes before and after the CI environment. You might wonder why you need them. After all, there is only one development stream, and things will be more straightforward with fewer environments, right? Not exactly.

Your release strategy should be forward-looking. It should never be so short-sighted that it covers only near-term requirements. Your strategy should be able to extend and accommodate multiple project streams developing new or updated features concurrently, even if this is not currently an urgent requirement.

In most Salesforce projects, there will be at least two concurrent streams at any given time: one stream building new features and another maintaining the production org by fixing and patching any encountered bugs. All code changes should ideally be merged into the CI environment to follow the designed pipeline (apart from some exceptional cases). Your environment strategy should be able to cater to that and more.

Local QA environments help the development team test their functionalities before they can progress any further. Shared QA environments are typically managed by dedicated testing teams who ensure that everything fits together and works as expected.
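The convergence of streams at the CI environment can be sketched as a simple promotion model. The environment names below are assumptions loosely based on the description above (Figure 15.6 may differ), and the hotfix stream is hypothetical; the point is that both streams enter the shared path at CI.

```python
from typing import Optional

# Hypothetical shared path; every stream converges here, starting at CI.
SHARED_PATH = ["CI", "Shared QA", "UAT", "Staging", "Production"]

# Hypothetical per-stream entry environments before the shared path.
STREAM_ENTRY = {
    "feature": ["Dev", "Local QA"],  # new features: developer sandbox, then local QA
    "hotfix": ["Hotfix Dev"],        # production support: fixes built in a dedicated sandbox
}


def promotion_path(stream: str) -> list[str]:
    """Return the ordered environments a change from the given stream passes through."""
    return STREAM_ENTRY[stream] + SHARED_PATH


def next_environment(stream: str, current: str) -> Optional[str]:
    """Return the environment after `current`, or None once the change reaches production."""
    path = promotion_path(stream)
    position = path.index(current)  # raises ValueError if `current` is not on this stream's path
    return path[position + 1] if position + 1 < len(path) else None
```

Adding a third stream later means adding one entry to `STREAM_ENTRY`; the shared path, and the tests that guard it, stay unchanged. That is the extensibility argument for keeping CI and shared QA even with a single stream today.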

Things will indeed get more complicated with additional environments, unless you have the right tools in place to support you. This is why source control management (SCM) and automated build and release management tools are crucial for any enterprise-scale Salesforce project’s success.

For the company’s scenario, you need to suggest an automated build and release tool. You can propose a tool such as Copado. You also need to add an automated testing tool such as Provar. Keep in mind that you still need to explain how your unit tests would work side by side with Provar to provide a comprehensive testing experience. You will cover that later in this chapter while crafting the presentation pitch. Add Copado and Provar to your landscape diagram and move on to the next and final requirement.

The company is looking for an appropriate methodology to manage the project delivery and ensure proper technical governance.

This is a combined requirement that you can address using the knowledge you gained about the various development methodologies and the different governance bodies you learned about in Chapter 10, Development Life Cycle and Deployment Planning.

You need a delivery methodology capable of supporting the MVP solution, that is, a methodology that includes a blueprinting phase to plan the program’s outcome and define how it will be achieved, preferably with some proofs of concept. However, the methodology should also be flexible enough to accommodate and embrace other requirements beyond the MVP phase. All of this points to a hybrid methodology.

To ensure technical governance, you need a governing body capable of bringing together the right people at the right time to make decisions, empowered by executive sponsorship and supported by expertise from around the enterprise. This is precisely what the CoE is for.

In addition, you need an ongoing activity to validate, challenge, and ratify each design decision required during the project delivery, which is exactly what the DA is meant to do. MVP solutions accumulate additional functionalities over time and become more complex. With the DA structure in place, you ensure that every user story’s solution is challenged and technically validated by the right people before you start delivering it.

That concludes the requirements of the project development section. The next section is usually limited and contains other general topics and challenges. Maintain the momentum and continue to the next set of requirements.

Analyzing the Other Requirements

The other requirements section in a full mock scenario could contain further requirements about anything else that has not yet been covered by the other sections. There are usually a limited number of requirements in this section.

Your experience and broad knowledge of the Salesforce Platform and its ecosystem would play a vital role at this stage. Move on to explore the shared requirements.

The company has recently acquired another company working in renewable energy.

The acquired company manufactures and installs solar panels as well as electric batteries. This company also uses Salesforce as its central CRM. The company would like to know whether they should plan to merge this Salesforce instance with theirs or keep it separate. They are looking for your support with this decision.

The org strategy is something you need to consider for every review board scenario you come across. You should come up with a clear recommendation and a valid rationale, whether you propose a single-org or a multi-org strategy. You learned about some pros and cons of each in Chapter 5, Developing a Scalable System Architecture.

In this scenario, you come across a use case where a second org has already been inherited through a company acquisition. The effort required to merge this Salesforce org with the main company’s org should be carefully considered and never underestimated.

Moreover, the newly acquired company works in a slightly different business. Manufacturing, distributing, and installing solar panels and electric batteries will understandably have a completely different set of sales and service processes and activities. In such cases, you will typically find limited interaction between the two businesses.

The acquired company will likely have its own set of supporting systems, such as ERPs and HR solutions. It is also common to keep the two companies’ finances separate or embark on a three-to-five-year transition period.

For all these reasons, it is safer to assume a multi-org strategy. Global reporting requirements, if any, can be accommodated using business intelligence tools such as CRM Analytics.

As you do not know much about this new org, you will not include it in the landscape architecture diagram. You can simply explain the proposed org strategy and the rationale behind it during the presentation. Your landscape architecture diagram could now look like the following:

Figure 15.7 – Landscape architecture (final)

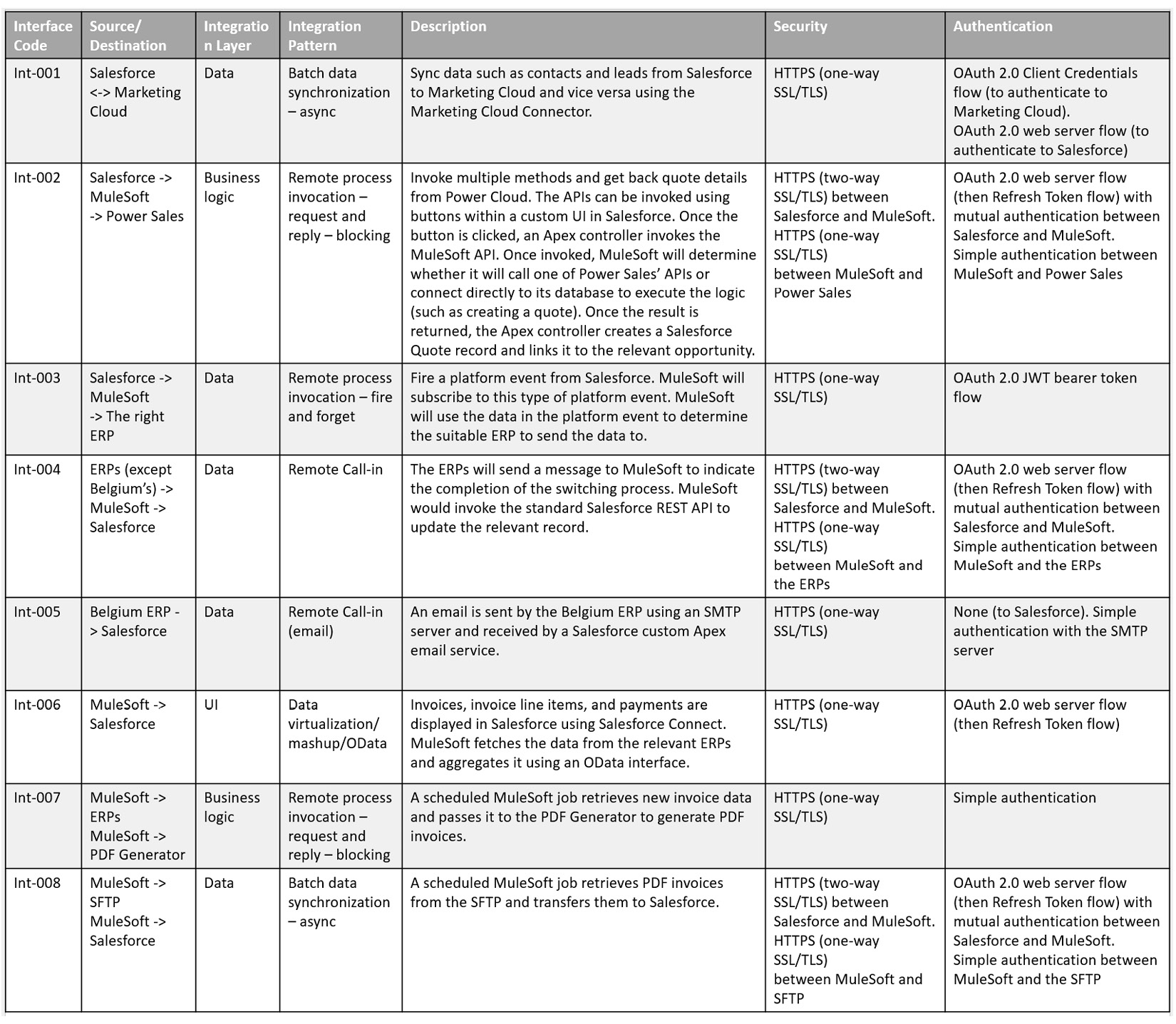

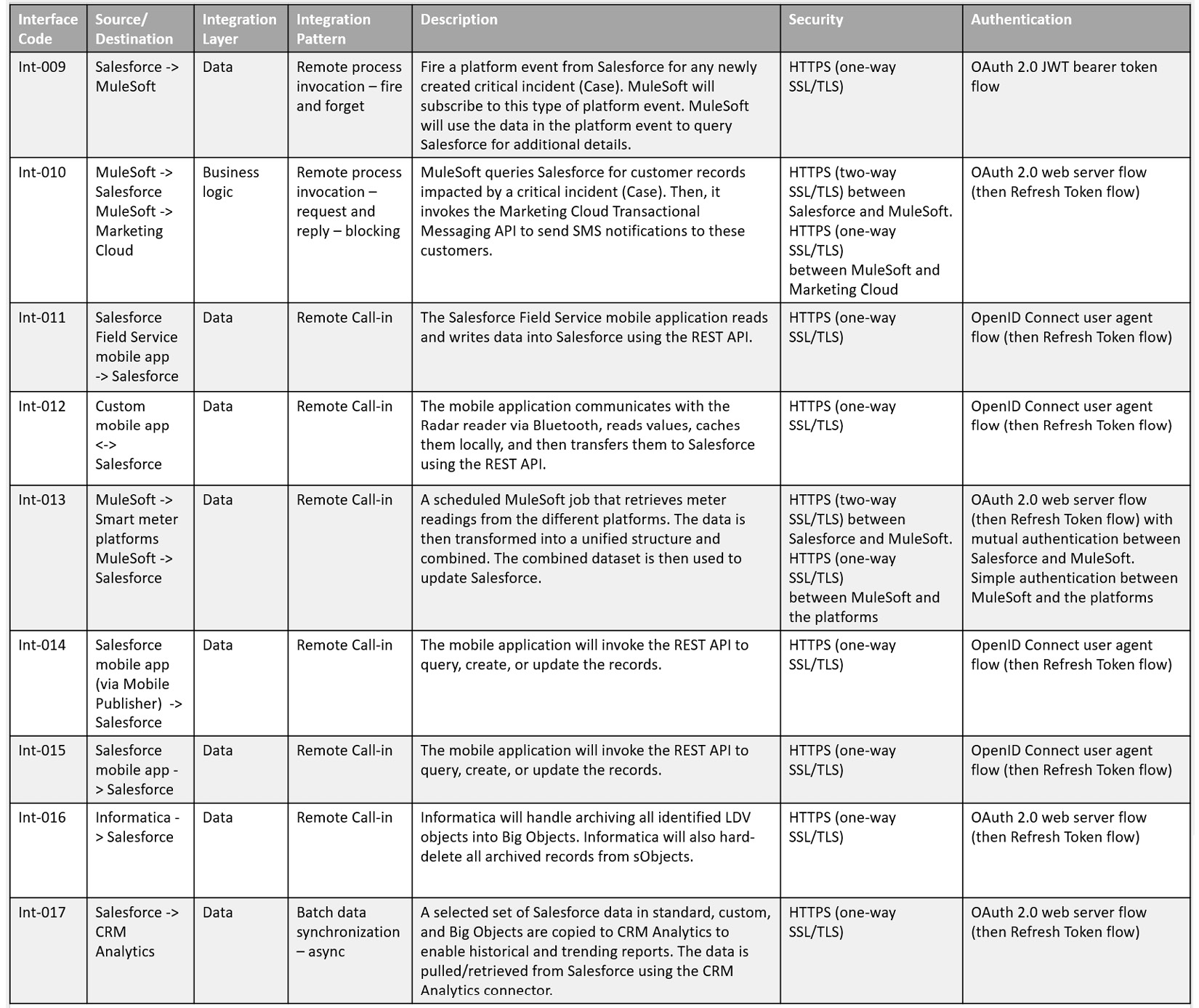

The list of integration interfaces has been split into two diagrams to improve readability. The first part of the list of integration interfaces could look like the following:

Figure 15.8 – Integration interfaces (part one – final)

The second part of the list of integration interfaces could look like the following:

Figure 15.9 – Integration interfaces (part two – final)

Your actors and licenses diagram could look like the following:

Figure 15.10 – Actors and licenses (final)

Your data model diagram could look like the following:

Figure 15.11 – Data model (final)

No changes occurred in the role hierarchy diagram.

Congratulations! You managed to complete your second full scenario. You have created a scalable and secure end-to-end solution. You now need to pair it with a strong, engaging, and attractive presentation to collect as many marks as possible, leaving only a few gaps for the Q&A stage to close.

Ready for the next stage? Proceed forward.

Presenting and Justifying Your Solution

It is now time to create your presentation. During the review board, you will have to do this on the fly, as you will likely spend the whole three hours creating the solution and supporting artifacts.

As you learned before in Chapter 13, Present and Defend: First Mock, you need to plan your presentation upfront and use your time management skills to ensure you describe the solution with enough detail in the given time. You will learn some time-keeping techniques in Appendix, Tips and Tricks, and the Way Forward.

You are now familiar with the structure of the board presentation. You start with the structured part of the presentation and then switch to the catch-all mode. As a reminder, these two different presentation phases include the following:

- Structured part: In this part, you describe specific pre-planned elements of your solution, starting with an overall solution introduction, including a brief walk-through of the created artifacts. Then, you tackle the business processes one by one and, using the diagrams and artifacts, explain how your solution will fulfill them. After that, you need to describe your LDV mitigation and data migration strategies.

You can add more topics as you see appropriate, assuming you have planned and budgeted enough time for that.

- Catch-all part: This is where you go through the requirements one by one and call out those that you have not covered in the first part. You do this to ensure you have not missed any requirements, particularly the straightforward ones.

In this chapter, you will create presentation pitches for the following topics: the overall solution and artifact introduction, one of the business processes, and the project development requirements. You will use the customer service process, as it is the most complex and lengthy one.

You will then hold your ground and defend and justify your proposed solution, knowing that you might need to adjust the solution based on newly introduced challenges.

In the following sections, you will be creating sample presentation pitches for the structured part. Start with an overall introduction of the solution and artifacts.

Introducing the Overall Solution and Artifacts

Similar to what you did in Chapter 13, Present and Defend: First Mock, start by introducing yourself; this should be no more than a single paragraph. It should be as simple as the following:

Then, proceed with another short paragraph that briefly describes the company’s business. This will help you to get into presentation mode and break the ice. You can simply utilize a shortened version of the first paragraph in the scenario, such as the following:

Remember that you should use no more than a minute to present yourself and set the scene. Now you can start explaining the key need of the company using a brief paragraph, such as the following:

It is now time to explain the current and to-be landscape architecture. Use and show your landscape architecture diagram to guide the audience through it; never just stand next to it and speak in a monotone. You should be able to look at your diagram and describe the as-is and to-be landscape. Your pitch could be as follows:

You have set the scene and briefly explained your solution. Remember to call out your org strategy (and the rationale behind it) during your presentation. You can now proceed by explaining the licenses they need to invest in. Switch to the actors and licenses diagram and continue with your pitch. Remember to use your body language to assert your authority on the stage by moving across it (if you are doing the presentation in person). Your presentation could be as follows:

That concludes the overall high-level solution presentation. This part should take five to six minutes to cover from end to end. You can use your own words, of course, but you need to adjust your speed to ensure that you cover all the required information in the given time. Keep practicing until you manage to do that. Time management is crucial for this exam.

Now, move on to the most significant part of your presentation, where you will explain how your solution will solve the shared business processes.

Presenting the Business Processes’ End-to-End Solution

You are still in the structured part of your presentation. If you have created a business process diagram for a business process, then this is the time to use it. Besides, you will typically use the landscape architecture diagram, the data model, and the list of interfaces to explain your end-to-end solution. You can start this part with a short intro, such as the following:

You will create a sample presentation for the customer service business process.

Customer Service

Similar to what you did in Chapter 13, Present and Defend: First Mock, you will try to tell a story that explains how their process will be realized using the new system. Your pitch could be as follows:

This is a lengthy business process, but presenting its solution should take five to six minutes. The other business processes should take less time. Practice the presentation using your own words and keep practicing until you are comfortable with delivering a similar pitch in no more than six minutes.

Some questions have also been seeded, such as the full description of the OAuth 2.0 web server flow. If the judges feel a need to get more details, they will come back to this point during the Q&A.
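To prepare for that follow-up, it helps to know the two HTTP legs that a sequence diagram of the OAuth 2.0 web server flow would show. The sketch below builds the authorization redirect and the token-exchange form body against the standard Salesforce endpoints. The client ID, secret, and callback URL are placeholders, and which application acts as the OAuth client in this scenario is not stated here.

```python
from urllib.parse import urlencode

AUTH_HOST = "https://login.salesforce.com"  # sandboxes use https://test.salesforce.com


def authorization_url(client_id: str, redirect_uri: str, state: str) -> str:
    """Leg 1: the user's browser is redirected here to log in and approve access.

    Salesforce then redirects back to redirect_uri with a short-lived authorization code.
    """
    query = urlencode({
        "response_type": "code",
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "state": state,  # echoed back to the callback; lets the client detect forged callbacks
    })
    return f"{AUTH_HOST}/services/oauth2/authorize?{query}"


def token_request_body(code: str, client_id: str, client_secret: str, redirect_uri: str) -> str:
    """Leg 2: the client's back end POSTs this form body to /services/oauth2/token,
    server to server, exchanging the authorization code for an access token."""
    return urlencode({
        "grant_type": "authorization_code",
        "code": code,
        "client_id": client_id,
        "client_secret": client_secret,
        "redirect_uri": redirect_uri,
    })
```

The key point to explain to the judges is that the client secret and the access token never pass through the browser; only the authorization code does.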

Note

You have included the assumptions in the context of the presentation. You can use this approach or simply list all your assumptions upfront. The former approach is preferable as it is more natural and engaging.

You are not going to create a pitch for the LDV mitigation strategy. However, you can utilize the table you created earlier in this chapter (Figure 15.4). It contains a list of all identified LDV objects, the expected number of records, and the mitigation strategy.

Move on to the next element of the structured presentation.

Presenting the Project Development and Release Strategy

At this stage, you have probably consumed most of the time allocated for your structured presentation. Proceed with your concise project development and release strategy pitch:

The judges will most probably ask questions related to this domain in the Q&A stage, such as the structure of a CoE, the proposed development methodology, the rationale behind the CI environment, the use of branches in the SCM, how to keep all development environments up to date, and how to ensure the tests are consistently being invoked.

You have seeded some questions here as well. For example, you have briefly mentioned that the CI setup will allow teams to avoid conflict, but you did not explain precisely how. You will tackle that question later in this chapter. Move on to the catch-all phase of the presentation.
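As background for that question, one hedged illustration: because every stream merges into the single CI branch, overlapping changes surface at merge time in a build environment rather than in production. The sketch below flags source-format metadata files changed by more than one stream since the last CI merge. The input (for example, collected with `git diff --name-only` per stream branch) and the file paths are illustrative assumptions.

```python
from collections import defaultdict


def conflicting_components(changes: dict[str, set[str]]) -> dict[str, list[str]]:
    """Return metadata files changed by more than one stream, with the streams involved.

    `changes` maps a stream name to the set of source-format file paths it modified
    since the last merge into the CI branch.
    """
    touched_by: dict[str, list[str]] = defaultdict(list)
    for stream, paths in sorted(changes.items()):  # sorted for a deterministic stream order
        for path in paths:
            touched_by[path].append(stream)
    return {path: streams for path, streams in touched_by.items() if len(streams) > 1}
```

A flagged file tells the teams to coordinate, and to re-run the CI tests after resolving the merge, before anything moves further down the pipeline.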

Going Through the Scenario and Catching All the Remaining Requirements

At this part of the presentation, you will go through the requirements one by one and ensure you have covered each aspect.

You learned about this approach’s benefits in Chapter 13, Present and Defend: First Mock, where it helped you spot and solve a missed requirement. Even if you have created a perfectly structured presentation, it is recommended to leave some room to go through the requirements again and ensure you have not missed any.

If you are doing an in-person review board, you will receive a printed version of the scenario. You will also have access to a set of pens and markers. When you read and solve the scenario, highlight or underline the requirements. If you have a solution in mind, write it next to the requirement. This will help you during the catch-all part of the presentation.

If you are doing a virtual review board, you will get access to a digital version of the scenario. You will also have access to Microsoft PowerPoint and Excel, so you can copy the requirements to your slides or sheets and then use them during the presentation.

That concludes the presentation. The next part is the Q&A, the stage where the judges will ask questions to fill in any gaps that you have missed or not clearly explained during the presentation.

Justifying and Defending Your Solution

Next, move on to the Q&A, the stage that should be your friend. Relax and be proud of what you have achieved so far. You are very close to achieving your dream.

During the Q&A, you should also expect that the judges will change some requirements to test your ability to solve on the fly. This is all part of the exam. Next, explore some example questions and answers.

The judges might decide to challenge one of your proposed solutions. This does not necessarily mean that it is wrong. Even if the tone suggests so, trust in yourself and your solution. Defend, but do not over-defend. If you figure out that you have made a mistake, accept it humbly and rectify your solution. The judges will appreciate that.

For example, a judge might ask the following question:

While answering such a question, make sure your answer is sharp and to the point. Do not try to muddy the water and hope to slip away. Your answer could be as follows:

Your answer should take no more than three minutes. Remember that you have a limited Q&A time, and you must ensure you leave room for the judges to ask as many questions as possible. The Q&A phase is your friend.

Next, explore another question raised by the judges:

MuleSoft is authenticating to and communicating with Salesforce. This is a server-to-server or machine-to-machine kind of authentication. This could have been accomplished using the JWT flow, which is commonly used for similar scenarios and supported by both platforms. Your answer could be as follows:

Be ready to draw the sequence diagram for all these authentication flows. You are likely to be asked to explain one or more of them. There is no better and more comprehensive way to describe them than using sequence diagrams. You can find these diagrams in Chapter 4, Core Architectural Concepts: Identity and Access Management.

Note

Some candidates know about sequence diagrams but feel that it is a bit of an overkill to use them. Remember that you are expected to explain the flow in detail in front of three seasoned CTAs. The sequence diagram is the best tool to achieve that.
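For the JWT bearer flow mentioned above, the single HTTP exchange that its sequence diagram describes can be sketched as follows. The integration client builds a JWT whose claims identify the connected app (`iss`), the pre-authorized integration user (`sub`), and the login host (`aud`), signs it with the private key whose certificate is uploaded to the connected app, and POSTs it to `/services/oauth2/token`. The consumer key and username are placeholders, and the RS256 signer is passed in as a function so the sketch stays dependency-free.

```python
import base64
import json
import time
from typing import Callable
from urllib.parse import urlencode


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def jwt_bearer_body(consumer_key: str, username: str, sign_rs256: Callable[[bytes], bytes],
                    audience: str = "https://login.salesforce.com", lifetime_s: int = 180) -> str:
    """Build the form body to POST to /services/oauth2/token for the JWT bearer flow."""
    header = {"alg": "RS256"}
    claims = {
        "iss": consumer_key,  # connected app consumer key
        "sub": username,      # integration user the token is issued for
        "aud": audience,      # https://test.salesforce.com for sandboxes
        "exp": int(time.time()) + lifetime_s,  # short-lived assertion
    }
    signing_input = f"{b64url(json.dumps(header).encode())}.{b64url(json.dumps(claims).encode())}"
    signature = b64url(sign_rs256(signing_input.encode("ascii")))
    return urlencode({
        "grant_type": "urn:ietf:params:oauth:grant-type:jwt-bearer",
        "assertion": f"{signing_input}.{signature}",
    })
```

In a real implementation, `sign_rs256` could wrap an RSA private key, for example `key.sign(data, padding.PKCS1v15(), hashes.SHA256())` with the `cryptography` package. Note that no user interaction and no client secret are involved, which is why this flow suits machine-to-machine integrations.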

Explore another question raised by the judges:

This is a question you have seeded during the presentation. You should be ready to answer it:

Remember not to get carried away with too many details, and keep an eye on the ticking clock. That concludes the Q&A stage. You covered a different set of questions from those you came across in Chapter 11, Communicating and Socializing Your Solution, and Chapter 13, Present and Defend: First Mock. As you would expect, the number of possible questions is vast, but you have now learned how to handle them.

Understand the questions, accept being challenged, defend and adjust your solution if needed, and answer with enough details without losing valuable time. Enjoy being challenged by such senior and talented architects. Cherish it as this is a worthwhile experience that you may not encounter many times in your career.

Summary

In this chapter, you continued developing the solution for the second full mock scenario. You explored more challenging data modeling, accessibility, and security requirements. You also created comprehensive LDV mitigation and data migration strategies. You tackled challenges with reporting and had a good look at the development and release management process and its supporting governance model.

You then dived into specific topics and created an engaging presentation pitch that described the proposed solution from end to end. You used diagrams to help explain the architecture more thoroughly and practiced the Q&A stage using various potential questions.

Congratulations! You have now completed two full mock scenarios. You can practice solving these scenarios as many times as you like. You can also add or remove requirements to make them more challenging. The mini-scenarios you used in this book are also handy for practicing a shorter version from time to time.

In Appendix, Tips and Tricks, and the Way Forward, you will go through some tips and tricks that can help you pass the review board. You will learn about some best practices and lessons learned. Finally, you will explore some potential next steps.