Chapter 7. Threads and Scheduling

The thread is the basic unit of execution in Windows, and a process can contain multiple, independent threads sharing the process’s address space and other resources. Chapter 6 limited processes to a single thread, but there are many situations in which multiple threads are desirable. This chapter describes and illustrates Windows thread management and introduces program parallelism. The example programs use threads to simplify program design and to enhance performance. Chapter 8 continues with a description of synchronization objects and the performance impact, positive and negative. Chapter 9 examines performance tuning and trade-off issues and describes new NT6 locking and thread pool features. Chapter 10 describes advanced synchronization programming methods and models that greatly simplify the design and development of reliable multithreaded programs. The remaining chapters and example programs use threads and synchronization as a basic tool.

This chapter ends with a very brief discussion of fibers, which allow you to create separate tasks within a thread, followed by an introduction to parallelism. Fibers are rarely used, and many readers might wish to skip the topic.

Thread Overview

A thread is an independent unit of execution within a process. The multithreaded programming challenge requires organization and coordination of thread execution to simplify programs and to take advantage of the inherent parallelism of the program and the host computer.

Traditionally, programs execute as a single thread of execution. While several processes can execute concurrently, as in the Chapter 6 examples, and even interact through mechanisms such as shared memory or pipes (Chapter 11), concurrent single-threaded processes have several disadvantages.

• It is expensive and time consuming for the OS to switch running processes, and, in cases such as the multiprocess search (grepMP, Program 6-1), the processes are all executing the same program. Threads allow concurrent file or other processing within a single process, reducing overall system overhead.

• Except in the case of shared memory, processes are not tightly coupled, and it is difficult to share resources, such as open files.

• It is difficult and inefficient for single-threaded processes to manage several concurrent and interacting tasks, such as waiting for and processing user input, waiting for file or network input, and performing computation.

• I/O-bound programs, such as the Caesar cipher conversion program in Chapter 2 (cci, Program 2-3) are confined to a simple read-modify-write model. When you’re processing sequential files, it can be more efficient to initiate as many read and write operations as possible. Windows also allows asynchronous overlapped I/O (Chapter 14), but threads can frequently achieve the same effect with less programming effort.

• The Windows executive will schedule independent threads on separate processors of a multiprocessor1 computer, frequently improving performance by exploiting the multiple processors to execute application components concurrently.

1 Multiple CPUs are common, even on laptops. Several processors may be on a single “multicore” chip, and, in turn, a computer may have several multicore chips. In Edition 3, we used the term “symmetric multiprocessing” (SMP), but this does not accurately describe multiple multicore chips in a single computer. We use both “multiprocessor” and “multicore” from now on to describe multiple processors accessing common, shared memory.

This chapter discusses Windows threads and how to manage them. The examples illustrate thread usage with parallel file searching and a multithreaded sort. These two examples contrast I/O- and compute-intensive concurrent activities performed with threads. The chapter also presents an overview of Windows process and thread scheduling and concludes with a brief introduction to parallelism.

Perspectives and Issues

This chapter and those that follow take the point of view that not only do threads make certain programs simpler to design and implement but, with attention to a few basic rules and programming models, threaded programs also can improve performance and be reliable, easy to understand, and maintainable. Thread management functions are very similar to the process management functions so that, as just one example, there is a GetThreadExitCode function that is comparable to GetProcessExitCode.

This point of view is not universally accepted. Many writers and software developers mention thread risks and issues and prefer to use multiple processes when concurrency is required. Common issues and concerns include the following.

• Threads share storage and other resources within a process, so one thread can accidentally modify another thread’s data, leading to defects such as race conditions and deadlocks.

• In certain circumstances, concurrency can drastically degrade, rather than improve, performance.

• Converting legacy single-threaded programs to exploit threads can be challenging, partly for the reasons above as well as lack of program understanding.

These concerns are real but are generally avoidable with careful design and programming, and many of the issues are inherent to concurrency, whether using threads within a process, multiple processes, or special-purpose techniques, such as Windows asynchronous I/O (Chapter 14).

Thread Basics

Figure 6-1 in the previous chapter shows how threads exist in a process environment. Figure 7-1 illustrates threads by showing a multithreaded server that can process simultaneous requests from multiple networked clients; a distinct thread is dedicated to each client. A Chapter 11 example implements this model.

Figure 7-1 Threads in a Server Environment

Threads within a process share data and code, but individual threads also have their own unique storage in addition to the shared data. Windows provides data for individual threads in several ways. Be aware, however, that the data is not totally protected from other threads within the process; the programmer must assure that threads do not access data assigned to other threads.

• Each thread has its own stack for function calls and other processing.

• The calling process can pass an argument (Arg in Figure 7-1), usually a pointer, to a thread at creation time. This argument is actually on the thread’s stack.

• Each thread can allocate its own Thread Local Storage (TLS) indexes and can read and set TLS values. TLS, described later, provides small pointer arrays to threads, and a thread can access only its own TLS array. Among other advantages, TLS assures that threads will not modify one another’s data.

The thread argument can point to an arbitrary data structure. In Figure 7-1’s server example, this structure might contain the current request and the thread’s response to that request as well as other working storage.

Windows programs can exploit multiprocessor systems by allowing different threads, even from the same process, to run concurrently on separate processors. This capability, if used properly, can enhance performance, but without sufficient care and a good strategy to exploit multiple processors, execution can actually be slower than on a single-processor computer, as we’ll see in Chapter 9.

Thread Management

It should come as no surprise that threads, like any other Windows object, have handles and that there is a CreateThread system call to create an executable thread in the calling process’s address space. As with processes, we will sometimes speak of “parent” and “child” threads, although the OS does not make any such distinction. CreateThread has several unique requirements.

CreateThread

The CreateThread function allows you to:

• Specify the thread’s start address within the process’s code.

• Specify the stack size, and the stack space is allocated from the process’s virtual address space. The default stack size is the parent’s virtual memory stack size (normally 1MB). One page is initially committed to the stack. New stack pages are committed as required until the stack reaches its maximum size and cannot grow anymore.

• Specify a pointer to a thread argument. The argument can be nearly anything and is interpreted by the thread and its parent.

• CreateThread returns a thread’s ID value and its handle. A NULL handle value indicates a failure.

Parameters

lpsa is the familiar security attributes structure.

dwStackSize is the byte size of the new thread’s stack. Use 0 to default to the primary thread’s stack size.

lpStartAddr points to the thread function (within the calling process) to be executed. This function accepts a single pointer argument and returns a 32-bit DWORD exit code. The thread can interpret the argument as a DWORD or a pointer. The thread function signature, then, is as follows:

lpThreadParm is the pointer passed as the thread argument and is interpreted by the thread and its parent, normally as a pointer to an argument structure.

dwCreationFlags, if 0, means that the thread is ready to run immediately. If dwCreationFlags is CREATE_SUSPENDED, the new thread will be in the suspended state, requiring a ResumeThread function call to move the thread to the ready state.

lpThreadId points to a DWORD that receives the new thread’s identifier. The pointer can also be NULL, indicating that no thread ID will be returned.

The CreateRemoteThread function allows a thread to be created in another process. Compared with CreateThread, there is an additional parameter for the process handle, and the function addresses must be in the target process’s address space. CreateRemoteThread is one of several interesting, and potentially dangerous, ways for one process to affect another directly, and it might be useful in writing, for example, a debugger. There is no way to call this function usefully and safely in normal applications. Avoid it!

ExitThread

All threads in a process can exit using the ExitThread function. A common alternative, however, is for a thread to return from the thread function using the exit code as the return value. The thread’s stack is deallocated and all handles referring to the thread are signaled. If the thread is linked to one or more DLLs (either implicitly or explicitly), then the DllMain functions (Chapter 4) of each DLL will be called with DLL_THREAD_DETACH as the “reason.”

When the last thread in a process exits, the process itself terminates.

One thread can terminate another thread with the TerminateThread function, but the thread’s resources will not be deallocated, completion handlers do not execute, and there is no notification to attached DLLs. It is best if the thread terminates itself with a return statement; TerminateThread usage is strongly discouraged, and it has the same disadvantages as TerminateProcess.

GetExitCodeThread

A terminated thread (again, a thread normally should terminate itself) will continue to exist until the last handle to it is closed using CloseHandle. Any other thread, perhaps one waiting for some other thread to terminate, can retrieve the exit code.

lpExitCode will contain the thread’s exit code. If the thread is still running, the value is STILL_ACTIVE.

Thread Identity

You can obtain thread IDs and handles using functions that are similar to those used with processes.

• GetCurrentThread returns a noninheritable pseudohandle to the calling thread.

• GetCurrentThreadId obtains the thread ID rather than the handle.

• GetThreadId obtains a thread’s ID from its handle.

• OpenThread creates a thread handle from a thread ID. OpenProcess was very useful in JobShell (Program 6-3), and you can use OpenThread in a similar fashion.

Additional Thread Management Functions

While the thread management functions just discussed are sufficient in most cases, including the examples in this book, two additional functions were introduced in XP and Windows 2003 (that is, NT5). Brief descriptions follow.

1. GetProcessIdOfThread, which requires Windows 2003 or later (XP does not support it), finds the process ID of a thread from the thread’s handle. You could use this function in a program that manages or interacts with threads in other processes. If necessary, use OpenProcess to obtain a process handle.

2. GetThreadIOPendingFlag determines whether the thread, identified by its handle, has any outstanding I/O requests. For example, the thread might be blocked on a ReadFile operation. The result is the status at the time that the function is executed; the actual status could change at any time if the target thread completes or initiates an operation.

Suspending and Resuming Threads

Every thread has a suspend count, and a thread can execute only if this count is 0. One thread can increment or decrement the suspend count of another thread using SuspendThread and ResumeThread. Recall that a thread can be created in the suspended state with a count of 1.

Both functions, if successful, return the previous suspend count. 0xFFFFFFFF indicates failure.

Waiting for Threads to Terminate

One thread can wait for another thread to terminate in the same way that threads wait for process termination (Chapter 6). Use a wait function (WaitForSingleObject or WaitForMultipleObjects) using thread handles instead of process handles. Note that the handles in the array passed to WaitForMultipleObjects do not all need to be of the same type; for example, thread, process, and other handle types can be mixed in a single call.

WaitForMultipleObjects can wait for only MAXIMUM_WAIT_OBJECTS (64) handles at one time, but you can perform a series of waits if you have a large number of threads. Program 6-1 already illustrated this technique; most programs in this book will perform single waits.

The wait function waits for the object, indicated by the handle, to become signaled. In the case of threads, ExitThread and TerminateThread set the object to the signaled state, releasing all other threads waiting on the object, including threads that might wait in the future after the thread terminates. Once a thread handle is signaled, it never becomes nonsignaled. The same is true of process handles but not of handles to some other objects, such as mutexes and events (see the next chapter).

Note that multiple threads can wait on the same object. Similarly, the ExitProcess function sets the process state and the states of all its threads to signaled.

Using the C Library in Threads

Most code requires the C library, even if it is just to manipulate strings. Historically, the C library was written to operate in single-threaded processes, so some functions use global storage to store intermediate results. Such libraries are not thread-safe because two separate threads might, for example, be simultaneously accessing the library and modifying the library’s global storage. Proper design of threaded code is discussed again in Chapter 8, which describes Windows synchronization.

The strtok function is an example of a C library function that is not thread-safe. strtok, which scans a string to find the next occurrence of a token, maintains persistent state between successive function calls, and this state is in static storage, shared by all the threads calling the function.

Microsoft C solves such problems by supplying a thread-safe C library implementation named LIBCMT.LIB. There is more. Do not use CreateThread; if you do, there is a risk of different threads accessing and modifying the same data that the library requires for correct operation. Instead, use a special C function, _beginthreadex, to start a thread and create thread-specific working storage for LIBCMT.LIB. Use _endthreadex in place of ExitThread to terminate a thread.

Note: There is a _beginthread function, intended to be simpler to use, but you should never use it. First, _beginthread does not have security attributes or flags and does not return a thread ID. More importantly, it actually closes the thread handle it creates, and the returned thread handle may be invalid by the time the parent thread stores it. Also avoid _endthread; it does not allow for a return value.

The _beginthreadex arguments are exactly the same as for the Windows functions but without the Windows type definitions; therefore, be sure to cast the _beginthreadex return value to a HANDLE to avoid warning messages. Be certain to define _MT before any include files; this definition is in Environment.h for the sample programs. That is all there is to it. When you’re using the Visual Studio development environment, be sure to do the following:

• Open the Project Properties pages.

• Under Configuration Properties, expand C/C++.

• Select Code Generation.

• For the Runtime Library, specify Multi-threaded DLL (/MD).

• Terminate threads with _endthreadex or simply use a return statement at the end of the thread routine.

All examples will operate this way, and the programs will never use CreateThread directly, even if the thread functions do not use the C library.

Thread-Safe Libraries

User-developed libraries must be carefully designed to avoid thread safety issues, especially when persistent state is involved. A Chapter 12 example (Program 12-5), where a DLL maintains state in a parameter, shows one strategy.

Another Chapter 12 example (Program 12-6) demonstrates an alternative approach that exploits the DllMain function and TLS, which is described later in this chapter.

Example: Multithreaded Pattern Searching

Program 6-1, grepMP, used processes to search multiple files simultaneously. Program 7-1, grepMT, includes the grep pattern searching source code so that threads can perform the searching within a single process. The pattern searching code relies on the C library for file I/O. The main control program is similar to the process implementation.

Program 7-1 grepMT: Multithreaded Pattern Searching

This example also shows that a form of asynchronous I/O is possible with threads without using the explicit methods of Chapter 14. In this example, the program is managing concurrent I/O to multiple files, and the main thread, or any other thread, can perform additional processing before waiting for I/O completion. In the author’s opinion, threads are a much simpler method of achieving asynchronous I/O, and Chapter 14 compares the methods, allowing readers to form their own opinions. We will see, however, that asynchronous I/O, combined with I/O completion ports, is useful and often necessary when the number of threads is large. Furthermore, as of NT6, extended asynchronous I/O often performs very well.

grepMT, for the purposes of illustration, differs in another way from grepMP. Here, WaitForMultipleObjects waits for a single thread to terminate rather than waiting for all the threads. The appropriate output is displayed before waiting for another thread to complete. The completion order will, in most cases, vary from one run to the next. It is easy to modify the program to display the results in the order of the command line arguments; just imitate grepMP.

Finally, notice that there is a limit of 64 threads due to the value of MAXIMUM_WAIT_OBJECTS, which limits the number of handles in the WaitForMultipleObjects call. If more threads are required, create the appropriate logic to loop on either WaitForSingleObject or WaitForMultipleObjects.

Caution: grepMT performs asynchronous I/O in the sense that separate threads are concurrently, and synchronously, reading different files with read operations that block until the read is complete. You can also concurrently read from the same file if you have distinct handles on the file (typically, one per thread). These handles should be generated by CreateFile rather than DuplicateHandle. Chapter 14 describes asynchronous I/O, with and without user threads, and an example in the Examples file (cciMT; see Chapter 14) has several threads performing I/O to the same file.

Note: You can perform all sorts of parallel file processing using this design. All that is required is to change the “ThGrep” function at the end of Program 7-1. An exercise suggests that you implement a parallel word count (wc) program this way, but you could also edit files or compile source code files in parallel.

Run 7-1 shows grepMT operation and compares the performance with grepMP, using the same four 640MB files.

Run 7-1 grepMT: Multithreaded Pattern Searching

Performance Impact

grepMP and grepMT are comparable in terms of program structure and complexity, but grepMT has the expected advantage of better performance; it is more efficient for the kernel to switch between threads than between processes. Run 7-1 shows that the theoretical advantage is real, but not large (12.554 versus 14.956 seconds). Specifically, if you are processing multiple large files of about the same size, with one thread per file, performance improves nearly linearly with the number of files up to the number of processors on the computer. You may not see this improvement with smaller files, however, because of the thread creation and management overhead. Chapter 9 shows how to improve performance slightly more with NT6 thread pools.

Both implementations exploit multiprocessor systems, giving a considerable improvement in the elapsed time; threads, whether in the same process or in different processes, run in parallel on the different processors. The measured user time actually exceeds the elapsed time because the user time is the total for all the processors.

The Examples file contains a word counting example, wcMT, which has the same structure as grepMT and, on a multiprocessor computer, is faster than the Cygwin wc command (Cygwin, an open source set of UNIX/Linux commands, implements wc).

There is a common misconception, however, that this sort of parallelism using either grepMP or grepMT yields performance improvements only on multiprocessor systems. You can also gain performance when there are multiple disk drives or some other parallelism in the storage system. In such cases, multiple I/O operations to different files can run concurrently.

The Boss/Worker and Other Threading Models

grepMT illustrates the “boss/worker” threading model, and Figure 6-3 illustrates the relationship if “thread” is substituted for “process.” The boss thread (the main thread in this case) assigns tasks for the worker threads to perform. Each worker thread is given a file to search, and the worker threads pass their results to the boss thread in a temporary file.

There are numerous variations, such as the work crew model in which the workers cooperate on a single task, each performing a small piece. The next example uses a work crew (see Figure 7-2). The workers might even divide up the work themselves without direction from the boss. Multithreaded programs can employ nearly every management arrangement that humans use to manage concurrent tasks.

Figure 7-2 Merge-Sort with Multiple Threads

The two other major models are the client/server model (illustrated in Figure 7-1 and developed in Chapter 11) and the pipeline model, where work moves from one thread to the next (see Chapter 10 and Figure 10-1 for an example of a multistage pipeline).

There are many advantages to using these models when designing a multithreaded program, including the following.

• Most multithreaded programming problems can be solved using one of the standard models, expediting design, development, and debugging.

• Not only does using a well-understood and tested model avoid many of the mistakes that are so easy to make in a multithreaded program, but the model also helps you obtain the best performance.

• The models correspond naturally to the structures of most programming problems.

• Programmers who maintain the program will be able to understand it much more easily if documentation describes the program in terms that everyone understands.

• Troubleshooting an unfamiliar program is much easier if you analyze it in terms of models. Frequently, an underlying problem is found when the program is seen to violate the basic principles of one of the models.

• Many common defects, such as race conditions and deadlocks, are also described by simple models, as are effective methods of using the synchronization objects described in Chapters 9 and 10.

These classical thread models are used in many OSs. The Component Object Model (COM), widely used in Windows systems, uses different terminology.

Example: Merge-Sort—Exploiting Multiple Processors

This example, diagrammed in Figure 7-2, shows how to use threads to get significant performance gains, especially on a multiprocessor computer. The basic idea is to divide the problem into component tasks, give each task to a separate thread, and then combine the results to get the complete solution. The Windows executive will automatically assign the threads to separate processors, so the threads will execute in parallel, reducing elapsed time.

This strategy, often called the divide and conquer strategy or the work crew model, is useful both for performance and as an algorithm design method. grepMT, Program 7-1, could be considered one example; it creates a thread for each file or pattern matching task.

Next, consider another example in which a single task, sorting a file, is divided into subtasks delegated to separate threads.

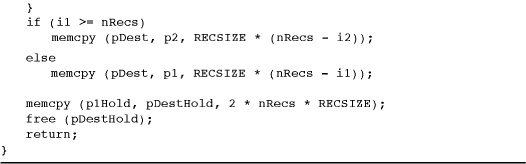

Merge-sort, in which the array to be sorted is divided into smaller arrays, is a classic divide and conquer algorithm. Each small array is sorted individually, and the individual sorted arrays are merged in pairs to yield larger sorted arrays. The pairwise merging continues until completion. Generally, merge-sort starts with small arrays, which can be sorted efficiently with a simple algorithm. This example starts with larger arrays so that there can be one array for each processor. Figure 7-2 is a sketch of the algorithm.

Program 7-2 shows the implementation details. The user specifies the number of tasks on the command line. Exercise 7–9 suggests that sortMT use GetSystemInfo to find the number of processors and then create one thread per processor.

Program 7-2 sortMT: Merge-Sort with Multiple Threads

Notice that the program runs efficiently on single-processor systems with sufficient memory and gains a significant performance improvement on multiprocessor systems. Caution: The algorithm as shown will work only if the number of records in the sort file is divisible by the number of threads and if the number of threads is a power of 2. Exercise 7–8 removes these limitations.

Note: In understanding this program, concentrate on the thread management logic separately from the logic that determines which portion of the array a thread should sort. Notice too that the C library qsort function is used, so there is no need to be concerned with developing an efficient basic sort function.

Additional Point to Notice: The thread creation loop (look for the comment “Create the sorting threads” on the second page of the listing) creates the worker threads in the suspended state. The threads are resumed only after all the worker threads are created. The reason for this can be seen from Figure 7-2; consider what would happen if Thread 0 waits for Thread 1 before Thread 1 is created. There would then be no handle to wait for. This is an example of a “race” condition where two or more threads make unsafe assumptions about the progress of the other threads. Exercises 7–10 and 7–13 investigate this further.

Run 7-2a shows sorting of large and small files, with 1, 2, 4, and 8 threads for the large file. The test computer has four processors, and four threads give the best results. Also note that the first single-thread run is slower than the second; this may be explained by the fact that the file is cached in memory during the second run. sortFL (Program 5-4) was the best previous result, 3.123 seconds.

Run 7-2a sortMT: Sorting with Multiple Threads

An additional screenshot, Run 7-2b, uses a 5,000,000 record (320MB) file so that the time improvements are more significant.

Run 7-2b sortMT: Sorting with Multiple Threads and a Larger File

Performance

Multiprocessor systems give good results when the number of threads is the same as the number of processors. Performance improves with more threads but not linearly because of the merging. Additional threads beyond the processor count slow the program.

Divide and conquer is more than just a strategy for algorithm design; it can also be the key to exploiting multiprocessors. The single-processor results can vary. On a computer with limited memory (that is, insufficient physical memory to hold the entire file), using multiple threads might increase the sort time because the threads contend for available physical memory. On the other hand, multiple threads can improve performance with a single processor when there is sufficient memory. The results are also heavily dependent on the initial data arrangement.

Introduction to Program Parallelism

Programs 7-1 and 7-2 share some common properties that permit “parallelization” so that subtasks can execute concurrently, or “in parallel” on separate processors. Parallelization is the key to future performance improvement, since we can no longer depend on increased CPU clock rates and since multicore and multiprocessor systems are increasingly common.

Chapter 10 discusses these technology trends and parallelism in more detail and relates these trends to the thread pools available in NT6 (Windows 7, Vista, and Server 2008). However, sortMT, wcMT, and grepMT have already illustrated the potential performance benefits from parallelism. The properties that enabled parallelism include the following:

• There are separate worker subtasks that can run independently, without any interaction between them. For example, grepMT can process each file independently, and sortMT can sort subsets of the entire array.

• As subtasks complete, a master program can combine, or “reduce,” the results of several subtasks into a single result. Thus, sortMT merges sorted arrays to form larger sorted arrays. grepMT and wcMT simply display the results from the individual files, in order.

• The programs are “lock-free” and do not need to use mutual exclusion locks, such as the mutexes described next in Chapter 8. The only synchronization required is for the boss thread to wait for the worker threads to complete.

• The worker subtasks run as individual threads, potentially running on separate processors.

• Program performance scales automatically, up to some limit, as you run on systems with more processors; the programs themselves do not, in general, determine the processor count on the host computer. Instead, the Windows kernel assigns worker subtasks to available processors.

• If you “serialize” the program by replacing the thread creation calls with direct function calls and remove the wait calls, you should get precisely the same results as the parallel program.2 The serialized program is, moreover, much easier to debug.

2 This statement fails, or is only approximately true, if operation order and associativity are important. For example, if you sum floating-point numbers, the order is important. In these cases, the multithreaded results will also vary from run to run, and the serial execution is one of many possible multithreaded execution sequences.

• The maximum performance improvement is limited by the program’s “parallelism,” thread management overhead, and computations that cannot be parallelized. The maximum parallelism for sortMT is determined by the command line parameter specifying the number of threads, although the merging steps do not use all the threads. grepMT parallelism cannot be larger than the number of files on the command line. Computations that cannot be parallelized include initialization and reducing worker results.

Be aware, however, that these two examples are relatively simple and “coarse grained.” The subtasks are easy to identify and run for a relatively long time period, although the subtasks will require different amounts of time, depending primarily on the file sizes. In general, correct program parallelization that improves performance significantly can be challenging.

Thread Local Storage

Threads may need to allocate and manage their own storage independently of and protected from other threads in the same process. One technique is to have the creating thread call CreateThread (or _beginthreadex) with lpvThreadParm pointing to a data structure that is unique for each thread. The thread can then allocate additional data structures and access them through lpvThreadParm. Program 7-1 used this technique.

Windows also provides TLS, which gives each thread its own array of pointers. Figure 7-3 shows this TLS arrangement.

Figure 7-3 Thread Local Storage within a Process

Initially, no TLS indexes (rows) are allocated, but new rows can be allocated and deallocated at any time, with at least TLS_MINIMUM_AVAILABLE (64) indexes for any process. The number of columns can change as new threads are created and old ones terminate.

The first issue is TLS index management. The primary thread is a logical place to do this, but any thread can manage thread indexes.

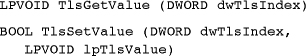

TlsAlloc returns the allocated index (≥ 0), with –1 (0xFFFFFFFF) if no index is available.

An individual thread can get and set its values (void pointers) from its slot using a TLS index.

The programmer must ensure that the TLS index parameter is valid—that is, that it has been allocated with TlsAlloc and has not been freed.

TLS provides a convenient mechanism for storage that is global within a thread but unavailable to other threads. Normal global storage is shared by all threads. Although no thread can access another thread’s TLS, any thread can call TlsFree and destroy an index for all threads, so use TlsFree carefully. TLS is frequently used by DLLs as a replacement for global storage in a library; each thread, in effect, has its own global storage. TLS also provides a convenient way for a calling program to communicate with a DLL function, and this is the most common TLS use. An example in Chapter 12 (Program 12-5) exploits TLS to build a thread-safe DLL; DLL thread and process attach/detach calls (Chapter 5) are another important element in the solution.

Process and Thread Priority and Scheduling

The Windows kernel always runs the highest-priority thread that is ready for execution. A thread is not ready if it is waiting, suspended, or blocked for some reason.

Threads receive priority relative to their process priority classes. Process priority classes are set initially by CreateProcess (Chapter 6), and each has a base priority, with values including:

• IDLE_PRIORITY_CLASS, for threads that will run only when the system is idle.

• NORMAL_PRIORITY_CLASS, indicating no special scheduling requirements.

• HIGH_PRIORITY_CLASS, indicating time-critical tasks that should be executed immediately.

• REALTIME_PRIORITY_CLASS, the highest possible priority.

The two extreme classes are rarely used, and the normal class can be used normally, as the name suggests. Windows is not a real-time OS, and using REALTIME_PRIORITY_CLASS can prevent other essential threads from running.

Set and get the priority class with:

You can use the values listed above as well as:

• Two additional priority classes, ABOVE_NORMAL_PRIORITY_CLASS (which is below HIGH_PRIORITY_CLASS) and BELOW_NORMAL_PRIORITY_CLASS (which is above IDLE_PRIORITY_CLASS).

• PROCESS_MODE_BACKGROUND_BEGIN, which lowers the priority of the process and its threads for background work without affecting the responsiveness of foreground3 processes and threads. The handle must represent the calling process; a process cannot put another into background mode. You need NT6 (Windows Vista or later) to use this mode.

3 Foreground threads and processes (“tasks”) are generally those that need to respond quickly, such as a thread that interacts directly with the user. Background tasks do not need to respond quickly; examples include file processing or time-consuming computations.

• PROCESS_MODE_BACKGROUND_END, which restores the process priority to the value before it was set with PROCESS_MODE_BACKGROUND_BEGIN.

A process can change or determine its own priority or that of another process, security permitting.

Thread priorities are either absolute or are set relative to the process base priority. At thread creation time, the priority is set to that of the process. The relative thread priorities are in a range of ±2 “points” from the process’s base. The symbolic names of the resulting common thread priorities, starting with the five relative priorities, are:

• THREAD_PRIORITY_LOWEST

• THREAD_PRIORITY_BELOW_NORMAL

• THREAD_PRIORITY_NORMAL

• THREAD_PRIORITY_ABOVE_NORMAL

• THREAD_PRIORITY_HIGHEST

• THREAD_PRIORITY_TIME_CRITICAL is 15, or 31 if the process class is REALTIME_PRIORITY_CLASS.

• THREAD_PRIORITY_IDLE is 1, or 16 for REALTIME_PRIORITY_CLASS processes.

• THREAD_MODE_BACKGROUND_BEGIN and THREAD_MODE_BACKGROUND_END are similar to PROCESS_MODE_BACKGROUND_BEGIN and PROCESS_MODE_BACKGROUND_END. You need Windows Vista, or later, to use these modes.

Use these values to set and read a thread’s relative priority. Note the signed integer priority argument.

There are actually two additional thread priority values. They are absolute rather than relative and are used only in special cases.

• THREAD_PRIORITY_IDLE is a value of 1 (or 16 for real-time processes).

• THREAD_PRIORITY_TIME_CRITICAL is 15 (or 31 for real-time processes).

Thread priorities change automatically with process priority. In addition, Windows may adjust thread priorities dynamically on the basis of thread behavior. You can enable and disable this feature with the SetThreadPriorityBoost function.

Thread and Process Priority Cautions

Use high thread priorities and process priority classes with caution or, better yet, not at all, unless there is a proven requirement. Definitely avoid real-time priorities for normal user processes; our examples never use real-time priorities, and real-time applications are out of scope. Among other dangers, user threads may preempt threads in the executive.

Furthermore, everything that we say in the following chapters about the correctness of threaded programs assumes, without comment, that thread scheduling is fair. Fairness ensures that all threads will, eventually, run. Without fairness, a low-priority thread could hold resources required by a high-priority thread. Thread starvation and priority inversion are terms used to describe the defects that occur when scheduling is not fair.

Thread States

Figure 7-4, which is taken from Custer’s Inside Windows NT, page 210 (also see Russinovich, Solomon, and Ionescu), shows how the executive manages threads and shows the possible thread states. This figure also shows the effect of program actions. Such state diagrams are common to all multitasking OSs and help clarify how a thread is scheduled for execution and how a thread moves from one state to another.

Figure 7-4 Thread States and Transitions

(From Inside Windows NT, by Helen Custer. Copyright © 1993, Microsoft Press. Reproduced by permission of Microsoft Press. All rights reserved.)

Here is a quick summary of the fundamentals; see the references for more information.

• A thread is in the running state when it is actually running on a processor. More than one thread can be in the running state on a multiprocessor computer.

• The executive places a running thread in the wait state when the thread performs a wait on a nonsignaled handle, such as a thread or process handle, or on a synchronization object handle (Chapter 8). I/O operations will also wait for completion of a disk or other data transfer, and numerous other functions can cause waiting. It is common to say that a thread is blocked, or sleeping, when in the wait state.

• A thread is ready if it could be running. The executive’s scheduler could put it in the running state at any time. The scheduler will run the highest-priority ready thread when a processor becomes available, and it will run the one that has been in the ready state for the longest time if several threads have the same high priority. The thread moves through the standby state before entering the ready state.

• Normally, the scheduler will place a ready thread on any available processor. The programmer can specify a thread’s processor affinity (see Chapter 9), which will limit the processors that can run that specific thread. In this way, the programmer can allocate processors to threads and prevent other threads from using these processors, helping to assure responsiveness for some threads. The appropriate functions are SetProcessAffinityMask and GetProcessAffinityMask. SetThreadIdealProcessor can specify a preferred processor that the scheduler will use whenever possible; this is less restrictive than assigning a thread to a single processor with the affinity mask.

• The executive will move a running thread to the ready state if the thread’s time slice expires without the thread waiting. Executing Sleep(0) will also move a thread from the running state to the ready state.

• The executive will place a waiting thread in the ready state as soon as the appropriate handles are signaled, although the thread actually goes through an intermediate transition state. It is common to say that the thread wakes up.

• There is no way for a program to determine the state of another thread (of course, a thread, if it is running, must be in the running state, so it would be meaningless for a thread to find its own state). Even if there were, the state might change before the inquiring thread would be able to act on the information.

• A thread, regardless of its state, can be suspended, and a ready thread will not be run if it is suspended. If a running thread is suspended, either by itself or by a thread on a different processor, it is placed in the ready state.

• A thread is in the terminated state after it terminates and remains there as long as there are any open handles on the thread. This arrangement allows other threads to interrogate the thread’s state and exit code.

Pitfalls and Common Mistakes

There are several factors to keep in mind as you develop threaded programs; lack of attention to a few basic principles can result in serious defects, and it is best to avoid the problems in the first place than try to find them during testing or debugging.

The essential factor is that the threads execute asynchronously. There is no sequencing unless you create it explicitly. This asynchronous behavior is what makes threads so useful, but without proper care, serious difficulties can occur.

Here are a few guidelines; there are more in later chapters. The example programs attempt to adhere to all these guidelines. There may be a few inadvertent violations, however, which illustrates the multithreaded programming challenges.

• Make no assumptions about the order in which the parent and child threads execute. It is possible for a child thread to run to completion before the parent returns from CreateThread, or, conversely, the child thread may not run at all for a considerable period of time. On a multiprocessor computer, the parent and one or more children may even run concurrently.

• Ensure that all initialization required by the child is complete before the CreateThread call, or else use thread suspension or some other technique. Failure by the parent to initialize data required by the child is a common cause of “race conditions” wherein the parent “races” the child to initialize data before the child needs it. sortMT illustrates this principle.

• Be certain that each distinct child has its own data structure passed through the thread function’s parameter. Do not assume that one child thread will complete before another (this is another form of race condition).

• Any thread, at any time, can be preempted, and any thread, at any time, may resume execution.

• Do not use thread priority as a substitute for explicit synchronization.

• Do not use reasoning such as “that will hardly ever happen” as an argument that a program is correct. If it can happen, it will, possibly at a very embarrassing moment.

• Even more so than with single-threaded programs, testing is necessary, but not sufficient, to ensure program correctness. It is common for a multithreaded program to pass extensive tests despite code defects. There is no substitute for careful design, implementation, and code inspection.

• Threaded program behavior varies widely with processor speed, number of processors, OS version, and more. Testing on a variety of systems can isolate numerous defects, but the preceding precaution still applies.

• Be certain that threads have a sufficiently large stack, although the default 1MB is usually sufficient.

• Threads should be used only as appropriate. Thus, if there are activities that are naturally concurrent, each such activity can be represented by a thread. If, on the other hand, the activities are naturally sequential, threads only add complexity and performance overhead.

• If you use a large number of threads, be careful, as the numerous stacks will consume virtual memory space and thread context switching may become expensive. “Large” is a relative term and could mean hundreds or thousands. In other cases, it could mean more threads than the number of processors.

• Fortunately, correct programs are frequently the simplest and have the most elegant designs. Avoid complexity wherever possible.

Timed Waits

The final function, Sleep, allows a thread to give up the processor and move from the running to the wait state for a specified period of time. A thread can, for example, perform a task periodically by sleeping after carrying out the task. Once the time period is over, the scheduler moves the thread back to the ready state. A program in Chapter 11 (Program 11-4) uses this technique.

The time period is in milliseconds and can even be INFINITE, in which case the thread will never resume. A 0 value will cause the thread to relinquish the remainder of the time slice; the kernel moves the thread from the running state to the ready state (Figure 7-4).

The function SwitchToThread provides another way for a thread to yield its processor to another ready thread if there is one that is ready to run.

Fibers

Note: Fibers are of specialized interest. See the comment after the first bulleted item below to determine if you want to skip this section.

A fiber, as the name implies, is a piece of a thread. More precisely, a fiber is a unit of execution that can be scheduled by the application rather than by the kernel. An application can create numerous fibers, and the fibers themselves determine which fiber will execute next. The fibers have independent stacks but otherwise run entirely in the context of the thread on which they are scheduled, having access, for example, to the thread’s TLS and any mutexes4 owned by the thread. Furthermore, fiber management occurs entirely in user space outside the kernel. Fibers can be thought of as lightweight threads, although there are numerous differences.

4 A mutex, as explained in Chapter 8, is a synchronization object that threads can own.

A fiber can execute on any thread, but never on two at one time. Therefore, a fiber should not access thread-specific data, such as TLS, as the data will have no meaning if the fiber is later rescheduled to run on another thread.

Fibers can be used for several purposes.

• Most importantly, many applications, especially some written for UNIX using proprietary thread implementations, now generally obsolete, are written to schedule their own threads. Fibers make it easier to port such applications to Windows but otherwise do not provide advantages over properly used threads. Most readers will not have such requirements and may want to skip this section.

• A fiber does not need to block waiting for a file lock, mutex, named pipe input, or other resource. Rather, one fiber can poll the resource and, if the resource is not available, switch control to another specific fiber.

• Fibers operate as part of a converted thread (see the first numbered item below) and have access to thread and process resources. A fiber is not, however, bound to a specific thread and can run on any thread (but not on more than one at a time).

• Unlike threads, fibers are not preemptively scheduled. The Windows executive, in fact, is not aware of fibers; fibers are managed within the fiber DLL entirely within user space.

• Fibers allow you to implement co-routines, whereby an application switches among several interrelated tasks. Threads do not allow this. The programmer has no direct control over which thread will be executed next.

• Major software vendors have used fibers and claim performance advantages. For example, Oracle Database 10g has an optional “fiber mode” (see http://download.oracle.com/owsf_2003/40171_colello.ppt; this presentation also describes the threading model).

Seven functions make up the fiber API. They are used in the following sequence and as shown in Figure 7-5.

1. A thread must first enable fiber operation by calling ConvertThreadToFiber or ConvertThreadToFiberEx. The thread then consists of a single fiber. This call provides a pointer to fiber data, which can be used in much the same way that the thread argument was used to create unique data for a thread.

2. The application can create additional fibers using CreateFiber. Each fiber has a start address, a stack size, and a parameter. Each new fiber is identified by an address rather than by a handle.

3. An individual fiber can obtain its data, as received from CreateFiber, by calling GetFiberData.

4. Similarly, a fiber can obtain its identity with GetCurrentFiber.

5. A running fiber yields control to another fiber by calling SwitchToFiber, indicating the address of the other fiber. Fibers must explicitly indicate the next fiber that is to run within the thread.

6. The DeleteFiber function deletes an existing fiber and all its associated data.

7. New functions, such as ConvertFiberToThread (which releases resources created by ConvertThreadToFiber), have been added to XP (NT 5.1), along with fiber local storage.

Figure 7-5 Control Flow among Fibers in a Thread

Figure 7-5 shows fibers in a thread. This example shows two ways in which fibers schedule each other.

• Master-slave scheduling. One fiber decides which fiber to run, and that fiber always yields control to the master fiber. Fiber 1 in Figure 7-5 behaves in this way. The Examples file contains grepMF, a grepMT variation, that uses master-slave scheduling.

• Peer-to-peer scheduling. A fiber determines the next fiber to run. The determination can be based on policies such as round-robin scheduling, priority scheduling based on a priority scheme, and so on. Co-routines would be implemented with peer-to-peer scheduling. In Figure 7-5, Fibers 0 and 2 switch control in this way.

Summary

Windows supports threads that are independently scheduled but share the same process address space and resources. Threads give the programmer an opportunity to simplify program design and to exploit parallelism in the application to improve performance. Threads can even yield performance benefits on single-processor systems. Fibers are units of execution that the program, rather than the Windows executive, schedules for execution.

Looking Ahead

Chapter 8 describes and compares the Windows synchronization objects, and Chapters 9 and 10 continue with more advanced synchronization topics, performance comparisons, and extended examples. Chapter 11 implements the threaded server shown in Figure 7-1.

Additional Reading

Windows

Multithreading Applications in Win32, by Jim Beveridge and Robert Wiener, is an entire book devoted to Win32 threads.

UNIX and Pthreads

Both Advanced Programming in the UNIX Environment, by W. Richard Stevens and Stephen A. Rago, and Programming with POSIX Threads, by David Butenhof, are recommended. The second book provides numerous guidelines for threaded program design and implementation. The information applies to Windows as well as to Pthreads, and many of the examples can be easily ported to Windows. There is also good coverage of the boss/worker, client/server, and pipeline threading models, and Butenhof’s presentation is the basis for the model descriptions in this chapter.

Exercises

7–1. Implement a set of functions that will suspend and resume threads but also allow you to obtain a thread’s suspend count.

7–2. Compare the performance of the parallel word count programs, one using threads (wcMT) and the other using processes (similar to Program 6-1, grepMP). Compare the results with those in Appendix C.

7–3. Perform additional performance studies with grepMT where the files are on different disk drives or are networked files. Also determine the performance gain on as many multiprocessor systems as are available.

7–4. Modify grepMT, Program 7-1, so that it puts out the results in the same order as that of the files on the command line. Does this affect the performance measurements in any way?

7–5. Further enhance grepMT, Program 7-1, so that it prints the time required by each worker thread. GetThreadTimes will be useful, and this function is similar to GetProcessTimes (Chapter 6).

7–6. The Examples file includes a multithreaded word count program, wcMT.c, that has a structure similar to that of grepMT.c. A defective version, wcMTx.c, is also included. Without referring to the correct solution, analyze and fix the defects in wcMTx.c, including any syntax errors. Also, create test cases that illustrate these defects and carry out performance experiments similar to those suggested for grepMT. If you use Cygwin (open source UNIX/Linux commands and shells), compare the performance of Cygwin’s wc with that of wcMT, especially on multiprocessor systems.

7–7. The Examples file includes grepMTx.c, which is defective because it violates basic rules for thread safety. Describe the failure symptoms, identify the errors, and fix them.

7–8. sortMT requires that the number of records in the array to be sorted be divisible by the number of threads and that the number of threads be a power of 2. Remove these restrictions.

7–9. Enhance sortMT so that if the number of threads specified on the command line is zero, the program will determine the number of processors on the host computer using GetSystemInfo. Set the number of threads to different multiples (1, 2, 4, and so on) of the number of processors and determine the effect on performance.

7–10. Modify sortMT so that the worker threads are not suspended when they are created. What failure symptoms, if any, does the program demonstrate as a result of the race condition defect?

7–11. sortMT reads the entire file in the primary thread before creating the sorting threads. Modify the program so that each thread reads the portion of the file that it requires. Next, modify the program to use mapped files.

7–12. Is there any performance benefit if you give some of the threads in sortMT higher priority than others? For example, it might be beneficial to give the threads that only sort and do not merge, such as Thread 3 in Figure 7-2, a higher priority. Explain the results.

7–13. sortMT creates all the threads in a suspended state so as to avoid a race condition. Modify the program so that it creates the threads in reverse order and in a running state. Are there any remaining race conditions? Compare performance with the original version.

7–14. Quicksort, the algorithm generally used by the C library qsort function, is usually fast, but it can be slow in certain cases. Most texts on algorithms show a version that is fastest when the array is reverse sorted and slowest when it is already sorted. The Microsoft C library implementation is different. Determine from the library code which sequences will produce the best and worst behavior, and study sortMT’s performance in these extreme cases. What is the effect of increasing or decreasing the number of threads? Note: The C library source code can be installed in the CRT directory under your Visual Studio installation. Look for qsort.c.

7–15. The Examples file contains a defective sortMTx.c program. Demonstrate the defects with test cases and then explain and fix the defects without reference to the correct solutions. Caution: The defective version may have syntax errors as well as errors in the thread logic.

7–16. One of the technical reviewers suggested an interesting sortMT enhancement that may provide improved performance. The idea is to modify the MergeArrays function so that it does not need to allocate the destination record storage. Instead, preallocate a second array as large as the array being sorted. Each worker thread can then use the appropriate portion of the preallocated array. Finally, eliminate the memcpy at the end. Hint: Alternate the merge direction on even and odd passes. Compare the resulting performance to Runs 7-2a and 7-2b.