Chapter 14. Asynchronous Input/Output and Completion Ports

Input and output are inherently slow compared with other processing due to factors such as the following:

• Delays caused by track and sector seek time on random access devices, such as disks

• Delays caused by the relatively slow data transfer rate between a physical device and system memory

• Delays in network data transfer using file servers, storage area networks, and so on

All I/O in previous examples has been thread-synchronous, so that the entire thread waits until the I/O operation completes.

This chapter shows how a thread can continue without waiting for an operation to complete—that is, threads can perform asynchronous I/O. Examples illustrate the different techniques available in Windows.

Waitable timers, which require some of the same techniques, are also described here.

Finally, and more important, once standard asynchronous I/O is understood, we are in a position to use I/O completion ports, which are extremely useful when building scalable servers that must be able to support large numbers of clients without creating a thread for each client. Program 14-4 modifies an earlier server to exploit I/O completion ports.

Overview of Windows Asynchronous I/O

There are three techniques for achieving asynchronous I/O in Windows; they differ in both the methods used to start I/O operations and those used to determine when operations are complete.

• Multithreaded I/O. Each thread within a process or set of processes performs normal synchronous I/O, but other threads can continue execution.

• Overlapped I/O (with waiting). A thread continues execution after issuing a read, write, or other I/O operation. When the thread requires the I/O results before continuing, it waits on either the file handle or an event specified in the ReadFile or WriteFile overlapped structure.

• Overlapped I/O with completion routines (or “extended I/O” or “alertable I/O”). The system invokes a specified completion routine callback function within the thread when the I/O operation completes. The term “extended I/O” is easy to remember because it requires extended functions such as WriteFileEx and ReadFileEx.

The terms “overlapped I/O” and “extended I/O” are used for the last two techniques; they are, however, two forms of overlapped I/O that differ in the way Windows indicates completed operations.

The threaded server in Chapter 11 uses multithreaded I/O on named pipes. grepMT (Program 7-1) manages concurrent I/O to several files. Thus, we have existing programs that perform multithreaded I/O to achieve a form of asynchronous I/O.

Overlapped I/O is the subject of the next section, and the examples implement file conversion (simplified Caesar cipher, first used in Chapter 2) with this technique in order to illustrate sequential file processing. The example is a modification of Program 2-3. Following overlapped I/O, we explain extended I/O with completion routines.

Note: Overlapped and extended I/O can be complex and seldom yield large performance benefits on Windows XP. Threads frequently overcome these problems, so some readers might wish to skip ahead to the sections on waitable timers and I/O completion ports (but see the next note), referring back as necessary. Before doing so, however, note that asynchronous I/O concepts appear in both old and very new technology, so it can be worthwhile to learn the techniques. Also, the asynchronous procedure call (APC) operation (Chapter 10) is very similar to extended I/O. There’s a final significant advantage to the two overlapped I/O techniques: you can cancel outstanding I/O operations, allowing cleanup.

NT6 Note: NT6 (including Windows 7) provides an exception to the comment about performance. NT6 extended and overlapped I/O provide good performance compared to simple sequential I/O; we’ll show the results here.

Finally, since I/O performance and scalability are almost always the principal objectives (in addition to correctness), remember that memory-mapped I/O can be very effective when processing files (Chapter 5), although it is not trivial to recover from memory-mapped I/O errors.

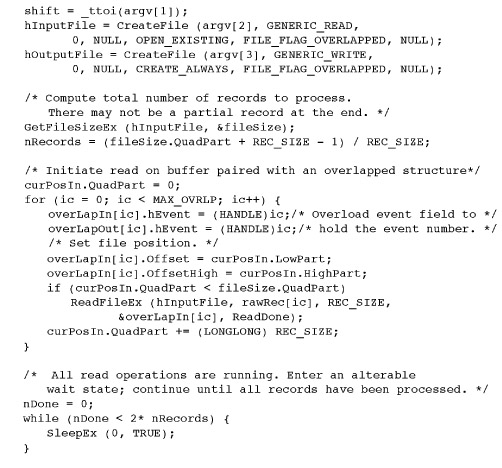

Overlapped I/O

The first requirement for asynchronous I/O, whether overlapped or extended, is to set the overlapped attribute of the file or other handle. Do this by specifying the FILE_FLAG_OVERLAPPED flag on the CreateFile or other call that creates the file, named pipe, or other handle. Sockets (Chapter 12), whether created by socket or accept, have the attribute set by default. An overlapped socket can be used asynchronously in all Windows versions.

Until now, overlapped structures have only been used with LockFileEx and as an alternative to SetFilePointerEx (Chapter 3), but they are essential for overlapped I/O. These structures are optional parameters on four I/O functions that can potentially block while the operation completes:

• ReadFile

• WriteFile

• TransactNamedPipe

• ConnectNamedPipe

Recall that when you’re specifying FILE_FLAG_OVERLAPPED as part of dwAttrsAndFlags (for CreateFile) or as part of dwOpenMode (for CreateNamedPipe), the pipe or file is to be used only in overlapped mode. Overlapped I/O does not work with anonymous pipes.

Consequences of Overlapped I/O

Overlapped I/O is asynchronous. There are several consequences when starting an overlapped I/O operation.

• I/O operations do not block. The system returns immediately from a call to ReadFile, WriteFile, TransactNamedPipe, or ConnectNamedPipe.

• A returned FALSE value does not necessarily indicate failure because the I/O operation is most likely not yet complete. In this normal case, GetLastError() will return ERROR_IO_PENDING, indicating no error. Windows provides a different mechanism to indicate status.

• The returned number of bytes transferred is also not useful if the transfer is not complete. Windows must provide another means of obtaining this information.

• The program may issue multiple reads or writes on a single overlapped file handle. Therefore, the handle’s file pointer is meaningless. There must be another method to specify file position with each read or write. This is not a problem with named pipes, which are inherently sequential.

• The program must be able to wait (synchronize) on I/O completion. In case of multiple outstanding operations on a single handle, it must be able to determine which operation has completed. I/O operations do not necessarily complete in the same order in which they were issued.

The last two issues—file position and synchronization—are addressed by the overlapped structures.

Overlapped Structures

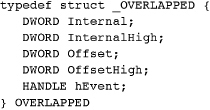

The OVERLAPPED structure (specified, for example, by the lpOverlapped parameter of ReadFile) indicates the following:

• The file position (64 bits) where the read or write is to start, as discussed in Chapter 3

• The event (manual-reset) that will be signaled when the operation completes

The OVERLAPPED structure has five members of interest: Internal, InternalHigh, Offset, OffsetHigh, and hEvent.

The file position (pointer) must be set in both Offset and OffsetHigh. Do not set Internal and InternalHigh, which are reserved for the system. Currently, Windows sets Internal to the I/O request error code and InternalHigh to the number of bytes transferred. However, MSDN warns that this behavior may change in the future, and there are other ways to get the information.
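Setting the two offset fields from a single 64-bit position is a frequent source of mistakes. Here is a minimal sketch in portable C, so that it can be checked on any system; split_file_position and join_file_position are hypothetical helper names, and in a Windows program the two halves would be assigned to the DWORD members ov.Offset and ov.OffsetHigh.

```c
#include <stdint.h>

/* Hypothetical helper: split a 64-bit file position into the low and high
   32-bit halves that OVERLAPPED stores in Offset and OffsetHigh. */
static void split_file_position(uint64_t position,
                                uint32_t *offsetLow, uint32_t *offsetHigh)
{
    *offsetLow  = (uint32_t)(position & 0xFFFFFFFFu);
    *offsetHigh = (uint32_t)(position >> 32);
}

/* Inverse operation, useful when logging or checking a completed request. */
static uint64_t join_file_position(uint32_t offsetLow, uint32_t offsetHigh)
{
    return ((uint64_t)offsetHigh << 32) | offsetLow;
}
```

A typical caller zeroes the whole structure first (for example, OVERLAPPED ov = {0};), so Internal and InternalHigh are never set by hand, then stores the two halves and sets hEvent as required.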

hEvent is an event handle (created with CreateEvent). The event can be named or unnamed, but it must be a manual-reset event (see Chapter 8) when used for overlapped I/O; the reasons are explained soon. The event is signaled when the I/O operation completes.

Alternatively, hEvent can be NULL; in this case, the program can wait on the file handle, which is also a synchronization object (see the upcoming list of cautions). Note: For convenience, the term “file handle” is used to describe the handle with ReadFile, WriteFile, and so on, even though this handle could refer to a pipe or device rather than to a file.

This event is immediately reset (set to the nonsignaled state) by the system when the program makes an I/O call. When the I/O operation completes, the event is signaled and remains signaled until it is used with another I/O operation. The event needs to be manual-reset because multiple threads might wait on it (although our example uses only one thread).

Even if the file handle is synchronous (it was created without FILE_FLAG_OVERLAPPED), the overlapped structure is an alternative to SetFilePointer and SetFilePointerEx for specifying file position. In this case, the ReadFile or other call does not return until the operation is complete. This feature was useful in Chapter 3.

Notice also that an outstanding I/O operation is uniquely identified by the combination of file handle and overlapped structure.

Here are a few cautions to keep in mind.

• Do not reuse an OVERLAPPED structure while its associated I/O operation, if any, is outstanding.

• Similarly, do not reuse an event while it is part of an OVERLAPPED structure.

• If there is more than one outstanding request on an overlapped handle, use events, rather than the file handle, for synchronization. We provide examples of both forms.

• As with any automatic variable, if the OVERLAPPED structure or event is an automatic variable in a block, be certain not to exit the block before synchronizing with the I/O operation. Also, close the event handle before leaving the block to avoid a resource leak.

Overlapped I/O States

An overlapped ReadFile or WriteFile operation—or, for that matter, one of the two named pipe operations—returns immediately. In most cases, the I/O will not be complete, and the read or write returns FALSE. GetLastError returns ERROR_IO_PENDING. However, test the read or write return; if it’s TRUE, you can get the transfer count immediately and proceed without waiting.

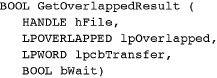

After waiting on a synchronization object (an event or, perhaps, the file handle) for the operation to complete, you need to determine how many bytes were transferred. This is the primary purpose of GetOverlappedResult.

The handle and overlapped structure combine to indicate the specific I/O operation. bWait, if TRUE, specifies that GetOverlappedResult will wait until the specified operation is complete; otherwise, it returns immediately. In either case, the function returns TRUE only if the operation has completed successfully. If bWait is FALSE and the operation is still in progress, GetOverlappedResult returns FALSE and GetLastError returns ERROR_IO_INCOMPLETE, so it is possible to poll for I/O completion with this function.

The number of bytes transferred is in *lpcbTransfer. Be certain that the overlapped structure is unchanged from when it was used with the overlapped I/O operation.
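The return-value rules above are easy to get backward, since FALSE is the normal result when an operation is started. The following sketch captures them in two hypothetical helpers, assuming the caller passes the BOOL result and the GetLastError value captured immediately after the call. The numeric error codes are the documented winerror.h values, defined here only so that the sketch also compiles where <windows.h> is not available.

```c
#ifdef _WIN32
#include <windows.h>
#endif

/* Documented winerror.h values; defined only for non-Windows builds. */
#ifndef ERROR_IO_INCOMPLETE
#define ERROR_IO_INCOMPLETE 996L
#endif
#ifndef ERROR_IO_PENDING
#define ERROR_IO_PENDING 997L
#endif

typedef enum { IO_COMPLETE, IO_IN_PROGRESS, IO_FAILED } io_state;

/* Interpret the result of starting an overlapped ReadFile or WriteFile. */
static io_state classify_start(int ok, unsigned long lastError)
{
    if (ok)
        return IO_COMPLETE;      /* finished at once; byte count is available */
    if (lastError == (unsigned long)ERROR_IO_PENDING)
        return IO_IN_PROGRESS;   /* the normal case, not an error */
    return IO_FAILED;            /* a genuine failure */
}

/* Interpret GetOverlappedResult called with bWait == FALSE (polling). */
static io_state classify_poll(int ok, unsigned long lastError)
{
    if (ok)
        return IO_COMPLETE;      /* *lpcbTransfer now holds the byte count */
    if (lastError == (unsigned long)ERROR_IO_INCOMPLETE)
        return IO_IN_PROGRESS;   /* still running; poll again later */
    return IO_FAILED;            /* completed with an error, such as EOF */
}
```

Call ReadFile or WriteFile in one statement and GetLastError in the next, rather than passing both calls as arguments to a single function call, because C does not specify the order in which function arguments are evaluated.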

Canceling Overlapped I/O Operations

The Boolean NT6 function CancelIoEx cancels outstanding overlapped I/O operations on the specified handle in the current process. The arguments are the handle and the overlapped structure. All pending operations issued by any thread in the process using the handle and overlapped structure are canceled. Use NULL for the overlapped structure to cancel all operations using the handle.

Unlike the older CancelIo function, which cancels only the requests issued by the calling thread, CancelIoEx can cancel requests issued by other threads in the process.

The canceled operations will usually complete with error code ERROR_OPERATION_ABORTED and status STATUS_CANCELLED, although the status would be STATUS_SUCCESS if the operation completed before the cancellation call.

CancelIoEx does not, however, wait for the cancellation to complete, so it’s still essential to wait in the normal way before reusing the OVERLAPPED structure for another I/O operation.
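Because CancelIoEx only requests cancellation, the bookkeeping must still treat the OVERLAPPED structure as in use until the normal wait reports completion. The following minimal sketch tracks that state for one structure; the state names and functions are hypothetical, and it assumes a single thread owns the bookkeeping.

```c
#include <stdbool.h>

/* Hypothetical state of one OVERLAPPED structure ("slot"). */
typedef enum { SLOT_IDLE, SLOT_PENDING, SLOT_CANCEL_REQUESTED } slot_state;

/* Record that an I/O request was started with this slot's structure. */
static bool slot_start(slot_state *s)
{
    if (*s != SLOT_IDLE)
        return false;            /* reusing an outstanding structure is a bug */
    *s = SLOT_PENDING;
    return true;
}

/* CancelIoEx was called; the request is NOT finished yet. */
static void slot_cancel_requested(slot_state *s)
{
    if (*s == SLOT_PENDING)
        *s = SLOT_CANCEL_REQUESTED;
}

/* The wait or GetOverlappedResult reported completion with any status
   (success, ERROR_OPERATION_ABORTED, or another error). */
static void slot_completed(slot_state *s)
{
    *s = SLOT_IDLE;
}

static bool slot_reusable(slot_state s)
{
    return s == SLOT_IDLE;
}
```

The design point is that slot_completed, not the CancelIoEx call, makes the structure reusable, whether the operation finished normally or was aborted.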

Program 14-4 (serverCP) exploits CancelIoEx.

Example: Synchronizing on a File Handle

Overlapped I/O can be useful and relatively simple when there is only one outstanding operation. The program can synchronize on the file handle rather than on an event.

The following code fragment shows how a program can initiate a read operation to read a portion of a file, continue to perform other processing, and then wait on the handle.

Example: File Conversion with Overlapped I/O and Multiple Buffers

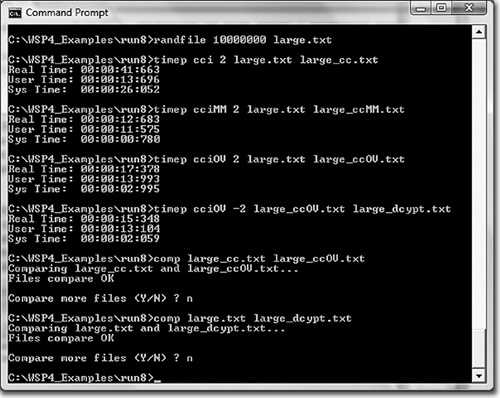

Program 2-3 (cci) encrypted a file to illustrate sequential file conversion, and Program 5-3 (cciMM) showed how to perform the same sequential file processing with memory-mapped files. Program 14-1 (cciOV) performs the same task using overlapped I/O and multiple buffers holding fixed-size records.

Program 14-1 cciOV: File Conversion with Overlapped I/O

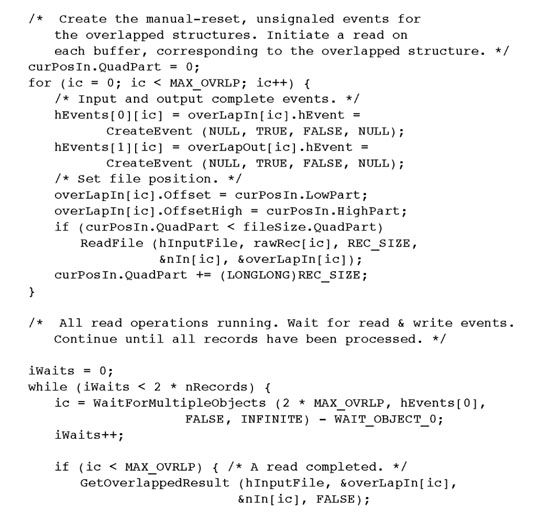

Figure 14-1 shows the program organization and an operational scenario with four fixed-size buffers. The program is implemented so that the number of buffers is defined in a preprocessor macro, but the following discussion assumes four buffers.

Figure 14-1 An Asynchronous File Update Model

First, the program initializes all the overlapped structures with events and file positions. There is a separate overlapped structure for each input and each output buffer. Next, an overlapped read is issued for each of the four input buffers. The program then uses WaitForMultipleObjects to wait until any one of the events is signaled, indicating that either a read or a write has completed. When a read completes, the buffer is copied and converted into the corresponding output buffer and the write is initiated. When a write completes, the next read is initiated. Notice that the events associated with the input and output buffers are arranged in a single array to be used as an argument to WaitForMultipleObjects.
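The event-array bookkeeping can be sketched as follows. This is not the cciOV listing: the layout (input events first, then output events), the N_BUFFERS name, and the rule that each buffer handles every fourth record are assumptions made for illustration. WaitForMultipleObjects returns WAIT_OBJECT_0 plus the index of the signaled handle, so the index below is that return value minus WAIT_OBJECT_0.

```c
#include <stdint.h>

#define N_BUFFERS 4   /* hypothetical name for the buffer-count macro */

/* Assumed event layout: events[0 .. N_BUFFERS-1] belong to input buffers,
   events[N_BUFFERS .. 2*N_BUFFERS-1] belong to output buffers. */
typedef struct {
    int buffer;    /* buffer slot, 0 .. N_BUFFERS-1 */
    int isWrite;   /* 1 if a write completed, 0 if a read completed */
} completion_info;

static completion_info decode_event_index(unsigned index)
{
    completion_info ci;
    ci.isWrite = index >= (unsigned)N_BUFFERS;
    ci.buffer  = (int)(index % N_BUFFERS);
    return ci;
}

/* Each buffer handles every N_BUFFERS-th record, so after its write
   completes, its next read starts N_BUFFERS records further on. */
static uint64_t next_read_position(uint64_t lastReadPos, uint32_t recSize)
{
    return lastReadPos + (uint64_t)N_BUFFERS * recSize;
}
```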

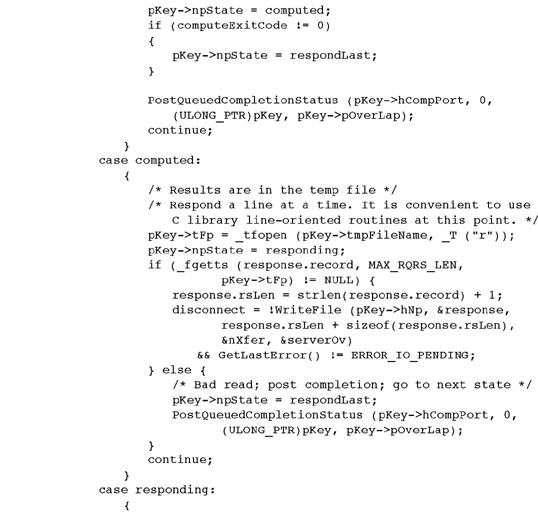

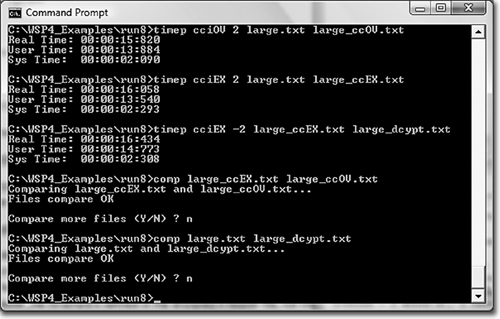

Run 14-1 shows the timings for converting the same 640MB file with cci, cciMM, and cciOV on a four-processor Windows Vista machine.

Run 14-1 cciOV: Comparing Performance and Testing Results

Memory mapping and overlapped I/O provide the best performance, with memory-mapped I/O showing a consistent advantage (12.7 seconds compared to about 16 seconds in this test). Run 14-1 also compares the converted files and the decrypted file as an initial correctness test.

The cciOV timing results in Run 14-1 depend on the record size (the REC_SIZE macro in the listing). The 0x4000 (16K) value worked well, as did 8K. However, 32K required twice the time. An exercise suggests experimenting with the record size on different systems and file sizes. Appendix C shows additional timing results on several systems for the different cci implementations.

Caution: The elapsed time in these tests can occasionally increase significantly, sometimes by factors of 2 or more. However, Run 14-1 contains typical results that I’ve been able to reproduce consistently. Nonetheless, be aware that you might see much longer times, depending on numerous factors such as other machine activity.

Extended I/O with Completion Routines

There is an alternative to using synchronization objects. Rather than requiring a thread to wait for a completion signal on an event or handle, the system can invoke a user-specified completion, or callback, routine when an I/O operation completes. The completion routine can then start the next I/O operation and perform any other bookkeeping. The completion or callback routine is similar to Chapter 10’s asynchronous procedure call and requires alertable wait states.

How can the program specify the completion routine? There are no remaining ReadFile or WriteFile parameters or data structures to hold the routine’s address. There is, however, a family of extended I/O functions, identified by the Ex suffix and containing an extra parameter for the completion routine address. The read and write functions are ReadFileEx and WriteFileEx, respectively. It is also necessary to use one of five alertable wait functions:

• WaitForSingleObjectEx

• WaitForMultipleObjectsEx

• SleepEx

• SignalObjectAndWait

• MsgWaitForMultipleObjectsEx

Extended I/O is sometimes called alertable I/O, and Chapter 10 used alertable wait states for thread cancellation. The following sections show how to use the extended functions.

ReadFileEx, WriteFileEx, and Completion Routines

The extended read and write functions work with open file, named pipe, and mailslot handles if FILE_FLAG_OVERLAPPED was used at open (create) time. Notice that the flag sets a handle attribute, and while overlapped I/O and extended I/O are distinguished, a single overlapped flag enables both types of asynchronous I/O on a handle.

Overlapped sockets (Chapter 12) operate with ReadFileEx and WriteFileEx.

The two functions are familiar but have an extra parameter to specify the completion routine. The completion routine could be NULL, but then there’s no easy way to get the results.

The overlapped structures must be supplied, but there is no need to specify the hEvent member; the system ignores it. It turns out, however, that this member is useful for carrying information, such as a sequence number, to identify the I/O operation, as shown in Program 14-2.

Program 14-2 cciEX: File Conversion with Extended I/O

In comparison to ReadFile and WriteFile, notice that the extended functions do not require the parameters for the number of bytes transferred. That information is conveyed as an argument to the completion routine.

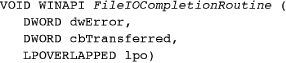

The completion routine has parameters for the byte count, an error code, and the overlapped structure. The last parameter is necessary so that the completion routine can determine which of several outstanding operations has completed. Notice that the same cautions regarding reuse or destruction of overlapped structures apply here as they did for overlapped I/O.

As was the case with CreateThread, which also specified a function name, FileIOCompletionRoutine is a placeholder and not an actual function name.

Common dwError values are 0 (success) and ERROR_HANDLE_EOF (when a read tries to go past the end of the file). The overlapped structure is the one used by the completed ReadFileEx or WriteFileEx call.
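The hEvent technique mentioned earlier amounts to storing a small integer in a pointer-sized field and recovering it in the completion routine. A minimal portable sketch follows; encode_sequence and decode_sequence are hypothetical names, and in a Windows program the encoded value would be assigned to ov.hEvent (a HANDLE) before ReadFileEx or WriteFileEx is called.

```c
#include <stdint.h>

/* Store a small sequence number in a pointer-sized field such as
   OVERLAPPED.hEvent, which ReadFileEx and WriteFileEx ignore. */
static void *encode_sequence(uintptr_t seq)
{
    return (void *)seq;
}

/* Recover the number inside the completion routine, for example
   VOID CALLBACK FileIOCompletionRoutine(DWORD dwError, DWORD cbTransferred,
                                         LPOVERLAPPED pOv)
   would call decode_sequence(pOv->hEvent). */
static uintptr_t decode_sequence(void *field)
{
    return (uintptr_t)field;
}
```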

Two things must happen before the completion routine is invoked by the system.

1. The I/O operation must complete.

2. The calling thread must be in an alertable wait state, notifying the system that it should execute any queued completion routines.

How does a thread get into an alertable wait state? It must make an explicit call to one of the alertable wait functions described in the next section. In this way, the thread can ensure that the completion routine does not execute prematurely. A thread can be in an alertable wait state only while it is calling an alertable wait function; on return, the thread is no longer in this state.

Once these two conditions have been met, completion routines that have been queued as the result of I/O completion are executed. Completion routines are executed in the same thread that made the original I/O call, and only while that thread is in an alertable wait state. Therefore, the thread should enter an alertable wait state only when it is safe for completion routines to execute.

Alertable Wait Functions

There are five alertable wait functions, and the three that relate directly to our current needs (WaitForSingleObjectEx, WaitForMultipleObjectsEx, and SleepEx) are described here.

Each alertable wait function has a bAlertable flag that must be set to TRUE when used for asynchronous I/O. The functions are extensions of the familiar Wait and Sleep functions.

Time-outs, as always, are in milliseconds. These three functions will return as soon as any one of the following situations occurs.

• Handle(s) are signaled so as to satisfy one of the two wait functions in the normal way.

• The time-out period expires.

• At least one completion routine or user APC (see Chapter 10) is queued to the thread and bAlertable is set. Completion routines are queued when their associated I/O operation is complete (see Figure 14-2) or QueueUserAPC queues a user APC. Windows executes all queued user APCs and completion routines before returning from an alertable wait function. Note how this allows I/O operation cancellation with a user APC, as was done in Chapter 10.

Figure 14-2 Asynchronous I/O with Completion Routines

Also notice that no events are associated with the ReadFileEx and WriteFileEx overlapped structures, so any handles in the wait call will have no direct relation to the I/O operations. SleepEx, on the other hand, is not associated with a synchronization object and is the easiest of the three functions to use. SleepEx is usually used with an INFINITE time-out so that the function will return only after one or more of the currently queued completion routines have finished.

Execution of Completion Routines and the Alertable Wait Return

As soon as an extended I/O operation is complete, its associated completion routine, with the overlapped structure, byte count, and error status arguments, is queued for execution.

All of a thread’s queued completion routines are executed when the thread enters an alertable wait state. They are executed sequentially but not necessarily in the same order as I/O completion. The alertable wait function returns only after the completion routines return. This property is essential to the proper operation of most programs because they assume that the completion routines can prepare for the next use of the overlapped structure and perform related operations to get the program to a known state before the alertable wait returns.

SleepEx and the wait functions will return WAIT_IO_COMPLETION if one or more queued completion routines were executed.
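A cciEX-style main thread typically loops on SleepEx(INFINITE, TRUE) until its completion routines report that all work is done. The decision made after each return can be sketched as follows; the function, the counters, and the loop structure are assumptions for illustration, not the cciEX listing. With an INFINITE time-out, SleepEx returns only after completion routines or user APCs have run, so WAIT_IO_COMPLETION is the only expected value.

```c
#ifdef _WIN32
#include <windows.h>
#endif

/* Documented value (STATUS_USER_APC); defined only for non-Windows builds. */
#ifndef WAIT_IO_COMPLETION
#define WAIT_IO_COMPLETION 0x000000C0L
#endif

typedef enum { KEEP_WAITING, ALL_DONE, UNEXPECTED } loop_action;

/* Decide what to do after SleepEx(INFINITE, TRUE) returns ret.
   recordsDone is a hypothetical counter updated by the completion routines,
   all of which have returned by the time SleepEx returns. */
static loop_action after_alertable_wait(unsigned long ret,
                                        unsigned long recordsDone,
                                        unsigned long recordsTotal)
{
    if (ret != (unsigned long)WAIT_IO_COMPLETION)
        return UNEXPECTED;   /* not expected with an INFINITE time-out */
    return recordsDone >= recordsTotal ? ALL_DONE : KEEP_WAITING;
}
```

Call SleepEx in its own statement, then pass its result and the current counters to after_alertable_wait; the counters are safe to read at that point because the completion routines run in the same thread and have all returned.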

Here are two final points.

1. Use an INFINITE time-out value with any alertable wait function. Without the possibility of a time-out, the wait function will return only after all queued completion routines have been executed or the handles have been signaled.

2. It is common practice to use the hEvent data member of the overlapped structure to convey information to the completion routine because Windows ignores this field.

Figure 14-2 illustrates the interaction among the main thread, the completion routines, and the alertable waits. In this example, three concurrent read operations are started, and two are completed by the time the alertable wait is performed.

Example: File Conversion with Extended I/O

Program 14-2, cciEX, reimplements Program 14-1, cciOV. These programs show the programming differences between the two asynchronous I/O techniques. cciEX is similar to Program 14-1 but moves most of the bookkeeping code to the completion routines, and many variables are global so as to be accessible from the completion routines.

Run 14-2 and Appendix C show that cciEX performs competitively with cciOV, which was slower than the memory-mapped version on the tested four-processor Windows Vista computer. Based on these results, asynchronous overlapped I/O is a good choice for sequential and possibly other file I/O but does not compete with memory-mapped I/O. The choice between the overlapped and extended overlapped I/O is somewhat a matter of taste (I found cciEX slightly easier to write and debug).

Run 14-2 cciEX: Overlapped I/O with Completion Routines

Asynchronous I/O with Threads

Overlapped and extended I/O achieve asynchronous I/O within a single user thread. These techniques are common, in one form or another, in many older OSs for supporting limited forms of asynchronous operation in single-threaded systems.

Windows, however, supports threads, so the same functional effect is possible by performing synchronous I/O operations in multiple, separate threads, possibly at a performance cost due to thread management overhead. Threads also provide a uniform and, arguably, much simpler way to perform asynchronous I/O. An alternative to Programs 14-1 and 14-2 is to give each of four threads its own handle to the file and have each thread synchronously process every fourth record.

The cciMT program, not listed here but included in the Examples file, illustrates how to use threads in this way. cciMT is simpler than the two asynchronous I/O programs because the bookkeeping is less complex. Each thread simply maintains its own buffers on its own stack and performs the read, convert, and write sequence synchronously in a loop. The performance is superior to the results in Run 14-2, so the potential thread management overhead does not materialize. In particular, the 640MB file conversions, which require about 16 seconds for cciOV and cciEX, require about 10 seconds for cciMT, running on the same machine. This is better than the memory-mapped performance (about 12 seconds).
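cciMT is not listed here, so the following is not its code; it is a sketch of the record-partitioning arithmetic implied by having each thread process every fourth record. The function names are hypothetical, and nRecords is assumed to be the file size divided by the record size, rounded up.

```c
#include <stdint.h>

/* Thread t of nThreads handles records t, t + nThreads, t + 2*nThreads, ... */

/* Byte offset of the k-th record (k = 0, 1, 2, ...) handled by thread t. */
static uint64_t record_offset(uint32_t t, uint64_t k,
                              uint32_t nThreads, uint32_t recSize)
{
    return ((uint64_t)t + k * nThreads) * recSize;
}

/* Number of records thread t processes when the file holds nRecords
   records (assumed: ceil(fileSize / recSize), so a partial last record
   counts as one). */
static uint64_t records_for_thread(uint32_t t, uint64_t nRecords,
                                   uint32_t nThreads)
{
    if (t >= nRecords)
        return 0;
    return (nRecords - t + nThreads - 1) / nThreads;
}
```

With 10 records and 4 threads, threads 0 and 1 each process 3 records and threads 2 and 3 each process 2, so every record is covered exactly once.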

What would happen if we combined memory mapping and multiple threads? cciMTMM, also in the Examples file, shows even better results, namely about 4 seconds for this case. Appendix C has results for several different machines.

My personal preference is to use threads rather than asynchronous I/O for file processing, and they provide the best performance in most cases. Memory mapping can improve things even more, although it’s difficult to recover from I/O errors, as noted in Chapter 5. The programming logic is simpler, but there are the usual thread risks.

There are some important exceptions to this generalization.

• The situation shown earlier in the chapter in which there is only a single outstanding operation and the file handle can be used for synchronization.

• Asynchronous I/O can be canceled. However, cciMT can easily be modified to use overlapped I/O, with the reading or writing thread waiting on the event immediately after the I/O operation is started. Exercise 14–10 suggests this modification.

• Multithreaded programs have many risks and can be a challenge to get right, as Chapters 7 through 10 describe. A source file, cciMT_dh.c, that’s in the unzipped Examples, documents several pitfalls I encountered while developing cciMT; don’t try to use this program because it is not correct!

• Asynchronous I/O completion ports, described at the end of this chapter, are useful with servers.

• NT6 executes asynchronous I/O programs very efficiently compared to normal file I/O (see Run 14-1 and Appendix C).

Waitable Timers

Windows supports waitable timers, a type of waitable kernel object.

You can always create your own timing signal using a timing thread that sets an event after waking from a Sleep call. serverNP (Program 11-3) also uses a timing thread to broadcast its pipe name periodically. Therefore, waitable timers are a redundant but useful way to perform tasks periodically or at specified absolute or relative times.

As the name suggests, you can wait for a waitable timer to be signaled, but you can also use a callback routine similar to the extended I/O completion routines. A waitable timer can be either a synchronization timer or a manual-reset (or notification) timer. Synchronization and manual-reset timers are comparable to auto-reset and manual-reset events; a synchronization timer becomes unsignaled after a wait completes on it, and a manual-reset timer must be reset explicitly. In summary:

• There are two ways to be notified that a timer is signaled: either wait on the timer or have a callback routine.

• There are two waitable timer types, which differ in whether or not the timer is reset automatically after a wait.

The first step is to create a timer handle with CreateWaitableTimer.

The second parameter, bManualReset, determines whether the timer is a synchronization timer or a manual-reset notification timer. Program 14-3 uses a synchronization timer, but you can change the comment and the parameter setting to obtain a notification timer. Notice that there is also an OpenWaitableTimer function that can use the optional name supplied in the third argument.

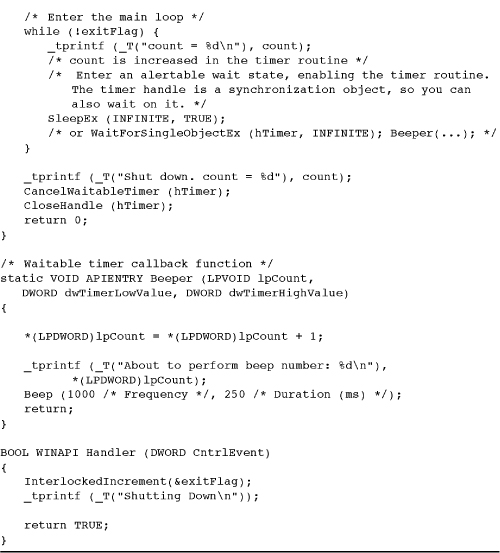

Program 14-3 TimeBeep: A Periodic Signal

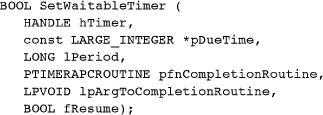

The timer is initially inactive, but SetWaitableTimer activates it, sets the timer to unsignaled, and specifies the initial signal time and the time between periodic signals.

hTimer is a valid timer handle created using CreateWaitableTimer.

The second parameter, pDueTime, points to either a positive absolute time or a negative relative time. The value is a LARGE_INTEGER expressed in FILETIME units, with a resolution of 100 nanoseconds. FILETIME variables were introduced in Chapter 3 and were used in Chapter 6’s timep (Program 6-2).

The third parameter specifies the interval between signals, using millisecond units. If this value is 0, the timer is signaled only once. A positive value indicates that the timer is a periodic timer and continues signaling periodically until you call CancelWaitableTimer. Negative interval values are not allowed.
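The due time and the period use different units, which makes scale errors easy. The following hypothetical helper computes a relative due time in the 100-nanosecond units that SetWaitableTimer expects through pDueTime (one millisecond is 10,000 such units).

```c
#include <stdint.h>

/* 1 ms = 10,000 intervals of 100 ns. */
#define HUNDRED_NS_PER_MS 10000LL

/* Relative due time "ms milliseconds from now", suitable for the QuadPart
   member of the LARGE_INTEGER passed to SetWaitableTimer. Negative means
   relative; a positive value would be an absolute FILETIME. */
static int64_t relative_due_time(uint32_t ms)
{
    return -(int64_t)ms * HUNDRED_NS_PER_MS;
}
```

On Windows, setting due.QuadPart = relative_due_time(5000) for a LARGE_INTEGER due and then calling SetWaitableTimer(hTimer, &due, 2000, NULL, NULL, FALSE) would signal the timer first after 5 seconds and then every 2 seconds; the 2000 is in milliseconds, not 100-nanosecond units.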

pfnCompletionRoutine, the fourth parameter, specifies the time-out callback function (completion routine) to be called when the timer is signaled and the thread enters an alertable wait state. The routine is called with the pointer specified in the fifth parameter, lpArgToCompletionRoutine, as an argument.

Having set a synchronization timer, you can now call SleepEx or other alertable wait function to enter an alertable wait state, allowing the completion routine to be called. Alternatively, wait on the timer handle. As mentioned previously, a manual-reset waitable timer handle will remain signaled until the next call to SetWaitableTimer, whereas Windows resets a synchronization timer immediately after the first wait after the set.

The complete version of Program 14-3 in the Examples file allows you to experiment with all four combinations: either timer type, with either a completion routine or a wait on the timer handle.

The final parameter, fResume, is concerned with power conservation. See the MSDN documentation for more information.

Use CancelWaitableTimer to deactivate a timer set by a previous SetWaitableTimer call. CancelWaitableTimer does not change the timer’s signaled state; use another SetWaitableTimer call to do that.

Example: Using a Waitable Timer

Program 14-3 shows how to use a waitable timer to signal the user periodically.

Comments on the Waitable Timer Example

There are four combinations based on timer type and whether you wait on the handle or use a completion routine. Program 14-3 illustrates using a completion routine and a synchronization timer. The four combinations can be tested using the version of TimeBeep.c in the Examples file by changing some comments.

Caution: The beep sound may be annoying, so you might want to test this program without anyone else nearby or adjust the frequency and duration.

Threadpool Timers

Alternatively, you can use a different type of timer, specifying that the timer callback function is to be executed within a thread pool (see Chapter 9). TimeBeep_TPT is a simple modification to TimeBeep, and it shows how to use CreateThreadpoolTimer and SetThreadpoolTimer. The new program requires a FILETIME structure for the timer due time, whereas the waitable timer used a LARGE_INTEGER.
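SetThreadpoolTimer takes its due time as a FILETIME, which holds the same signed 100-nanosecond value (negative for a relative time) as two 32-bit halves. The following hypothetical helper shows the split; in a Windows program the results would go into dwLowDateTime and dwHighDateTime.

```c
#include <stdint.h>

/* Split a signed 100-ns due time (negative = relative) into the low and
   high 32-bit halves that FILETIME stores. */
static void due_time_to_filetime_parts(int64_t due100ns,
                                       uint32_t *low, uint32_t *high)
{
    uint64_t bits = (uint64_t)due100ns;   /* keep the two's-complement pattern */
    *low  = (uint32_t)(bits & 0xFFFFFFFFu);
    *high = (uint32_t)(bits >> 32);
}
```

A relative 5-second due time (-50,000,000 units) becomes dwHighDateTime = 0xFFFFFFFF and dwLowDateTime = 0xFD050F80; the period argument of SetThreadpoolTimer is again in milliseconds.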

I/O Completion Ports

I/O completion ports combine features of both overlapped I/O and independent threads and are most useful in server programs. To see the requirement for this, consider the servers that we built in Chapters 11 and 12 (and converted to Windows Services in Chapter 13), where each client is supported by a distinct worker thread associated with a socket or named pipe instance. This solution works well when the number of clients is not large.

Consider what would happen, however, if there were 1,000 clients. The current model would then require 1,000 threads, each with a substantial amount of virtual memory space. For example, by default, each thread reserves 1MB of stack space, so 1,000 threads would require 1GB of virtual address space, and thread context switches could increase page fault delays.¹ Furthermore, the threads would contend for shared resources both in the executive and in the process, and the timing data in Chapter 9 showed the performance degradation that can result. Therefore, there is a requirement to allow a small pool of worker threads to serve a large number of clients. Chapter 9 used an NT6 thread pool to address this same problem.

¹ This problem is less severe, but should not be ignored, on systems with large amounts of memory.

I/O completion ports provide a solution on all Windows versions by allowing you to create a limited number of server threads in a thread pool while having a very large number of named pipe handles (or sockets), each associated with a different client. Handles are not paired with individual worker server threads; rather, a server thread can process data on any handle that has available data.

An I/O completion port, then, is a set of overlapped handles, and threads wait on the port. When a read or write on one of the handles is complete, one thread is awakened and given the data and the results of the I/O operation. The thread can then process the data and wait on the port again.

The first task is to create an I/O completion port and add overlapped handles to the port.

Managing I/O Completion Ports

A single function, CreateIoCompletionPort, both creates the port and adds handles. Since this one function must perform two tasks, the parameter usage is correspondingly complex.
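For reference, here is the prototype as documented in the Windows SDK (SAL annotations omitted), followed by a fragment showing the usual two-step use. The names `hPipe` and `pKey` in the fragment are hypothetical placeholders for an overlapped handle and its per-handle context.

```c
HANDLE CreateIoCompletionPort(
    HANDLE    FileHandle,                 /* overlapped handle, or INVALID_HANDLE_VALUE to create a port */
    HANDLE    ExistingCompletionPort,     /* port to add FileHandle to; NULL when creating */
    ULONG_PTR CompletionKey,              /* value returned with every completion on FileHandle */
    DWORD     NumberOfConcurrentThreads); /* used only when creating; 0 = one per processor */

/* Fragment: create the port once, then associate each overlapped handle. */
HANDLE hPort = CreateIoCompletionPort(INVALID_HANDLE_VALUE, NULL, 0, 0);
if (hPort == NULL) { /* creation failed; call GetLastError() */ }
if (CreateIoCompletionPort(hPipe, hPort, (ULONG_PTR)pKey, 0) == NULL) {
    /* association failed; call GetLastError() */
}
```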

An I/O completion port is a collection of file handles opened in OVERLAPPED mode. FileHandle is an overlapped handle to add to the port. If the value is INVALID_HANDLE_VALUE, a new I/O completion port is created and returned by the function. The next parameter, ExistingCompletionPort, must be NULL in this case.

ExistingCompletionPort is the port created on the first call, and it indicates the port to which the handle in the first parameter is to be added. The function also returns the port handle when the function is successful; NULL indicates failure.

CompletionKey specifies the key that will be included in the completion packet for FileHandle. The key could be a pointer to a structure containing information such as an operation type, a handle, and a pointer to the data buffer. Alternatively, the key could be an index to a table of structures, although this is less flexible.
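The following sketch shows the "key is a pointer to a structure" approach in portable C. `uintptr_t` plays the role of ULONG_PTR. `HandleContext`, `OpType`, `MakeKey`, `KeyToContext`, and `Dispatch` are hypothetical names, not the book's CP_KEY. `Dispatch` is roughly what a worker might do with a key returned by GetQueuedCompletionStatus.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical per-handle context that a completion key could point to. */
typedef enum { OP_READ, OP_WRITE } OpType;

typedef struct {
    int    clientId;    /* stands in for the pipe or socket handle */
    OpType op;          /* operation currently outstanding on the handle */
    char   buffer[64];  /* data buffer for that operation */
} HandleContext;

/* Store the context pointer in an integer wide enough to hold it. */
static uintptr_t MakeKey(HandleContext *ctx) { return (uintptr_t)ctx; }

/* Recover the context from a key delivered with a completion. */
static HandleContext *KeyToContext(uintptr_t key) { return (HandleContext *)key; }

/* Branch on the completed operation and record the next one.
 * Returns 1 for a completed read, 2 for a completed write. */
static int Dispatch(uintptr_t key)
{
    HandleContext *ctx = KeyToContext(key);
    switch (ctx->op) {
    case OP_READ:  ctx->op = OP_WRITE; return 1;  /* next: write the reply */
    case OP_WRITE: ctx->op = OP_READ;  return 2;  /* next: read a new request */
    }
    return 0;
}
```

The pointer form lets each handle carry arbitrarily rich state. An index into a table would require a fixed-size table, which is why the text calls it less flexible.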

NumberOfConcurrentThreads indicates the maximum number of threads allowed to execute concurrently. Any threads in excess of this number that are waiting on the port will remain blocked even if there is a handle with available data. If this parameter is 0, the number of processors in the system is the limit. The value is ignored except when ExistingCompletionPort is NULL (that is, the port is created, not when handles are added).

An unlimited number of overlapped handles can be associated with an I/O completion port. Call CreateIoCompletionPort initially to create the port and to specify the maximum number of threads. Call the function again for every overlapped handle that is to be associated with the port. There is no way to remove a handle from a completion port; the handle and completion port are associated permanently.

The handles associated with a port should not be used with ReadFileEx or WriteFileEx functions. The Microsoft documentation suggests that the files or other objects not be shared using other open handles.

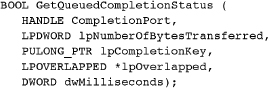

Waiting on an I/O Completion Port

Use ReadFile and WriteFile, along with overlapped structures (no event handle is necessary), to perform I/O on the handles associated with a port. When each operation completes, a completion packet is queued on the port.

A thread waits for a queued overlapped completion not by waiting on an event but by calling GetQueuedCompletionStatus, specifying the completion port. Upon completion, the function returns a key that was specified when the handle (the one whose operation has completed) was initially added to the port with CreateIoCompletionPort. This key can specify the identity of the actual handle for the completed operation and other information associated with the I/O operation.

Notice that the Windows thread that initiated the read or write is not necessarily the thread that will receive the completion notification; any waiting thread can receive it. Therefore, the receiving thread must identify the handle of the completed operation from the completion key.

Never hold a lock (mutex, CRITICAL_SECTION, etc.) when you call GetQueuedCompletionStatus, because the thread that releases the lock is probably not the same thread that acquired it. Owning the lock would not be a good idea in any case, as there is an indefinite wait before the completion notification.

GetQueuedCompletionStatus also takes a time-out, in milliseconds, for the wait.
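The documented prototype (SAL annotations omitted) is shown below, with a fragment that distinguishes the three possible outcomes of a call. `hPort` is a hypothetical port handle. A FALSE return with a NULL OVERLAPPED pointer means nothing was dequeued; on a time-out, GetLastError returns WAIT_TIMEOUT. A FALSE return with a non-NULL pointer means a packet was dequeued for an operation that failed.

```c
BOOL GetQueuedCompletionStatus(
    HANDLE       CompletionPort,
    LPDWORD      lpNumberOfBytesTransferred, /* bytes moved by the completed operation */
    PULONG_PTR   lpCompletionKey,            /* key given to CreateIoCompletionPort */
    LPOVERLAPPED *lpOverlapped,              /* OVERLAPPED used to start the operation */
    DWORD        dwMilliseconds);            /* time-out; INFINITE waits indefinitely */

/* Fragment: one iteration of a worker's wait. */
DWORD nBytes; ULONG_PTR key; LPOVERLAPPED pOv;
BOOL ok = GetQueuedCompletionStatus(hPort, &nBytes, &key, &pOv, INFINITE);
if (!ok && pOv == NULL) { /* nothing dequeued: time-out or port error */ }
else if (!ok)           { /* dequeued a packet for a failed I/O operation */ }
else                    { /* success: use key to locate the handle's context */ }
```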

It is sometimes convenient (as in an additional example, cciMTCP, in the Examples file) to keep an operation from being queued on the I/O completion port so that the issuing thread can wait for it synchronously. In such a case, the thread waits on the overlapped event. To specify that an overlapped operation should not be queued on the completion port, set the low-order bit of the valid event handle in the overlapped structure's hEvent member; you can then wait on the event for that specific operation. This is an unusual design, and while MSDN does document it, it does so only in passing.
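On Windows, the tagging is usually written as `ov.hEvent = (HANDLE)((DWORD_PTR)hEvent | 1);`. The portable sketch below isolates the bit manipulation, with `uintptr_t` standing in for HANDLE and DWORD_PTR. The function names are hypothetical.

```c
#include <stdint.h>

/* Set bit 0 of a valid event handle: the documented way to keep one
 * overlapped operation's completion off the completion port. */
static uintptr_t SuppressPortNotification(uintptr_t hEvent)
{
    return hEvent | (uintptr_t)1;
}

/* Nonzero if the handle value carries the "do not queue" tag. */
static int IsPortNotificationSuppressed(uintptr_t hEvent)
{
    return (int)(hEvent & (uintptr_t)1);
}

/* Clear the tag to recover the original handle value. */
static uintptr_t UntaggedHandle(uintptr_t hEvent)
{
    return hEvent & ~(uintptr_t)1;
}
```

A reasonable practice is to keep the original, untagged handle in a separate variable and use that one for WaitForSingleObject and CloseHandle. The tagged copy then appears only in the OVERLAPPED structure.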

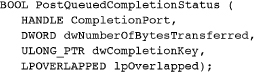

Posting to an I/O Completion Port

A thread can post a completion packet, with a key, to a port to satisfy an outstanding call to GetQueuedCompletionStatus. The PostQueuedCompletionStatus function supplies all the required information.
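The documented prototype (SAL annotations omitted) is below. The three values are delivered unchanged to whichever GetQueuedCompletionStatus call dequeues the packet. `hPort` in the fragment is a hypothetical port handle.

```c
BOOL PostQueuedCompletionStatus(
    HANDLE       CompletionPort,
    DWORD        dwNumberOfBytesTransferred, /* returned via lpNumberOfBytesTransferred */
    ULONG_PTR    dwCompletionKey,            /* returned via lpCompletionKey */
    LPOVERLAPPED lpOverlapped);              /* returned via lpOverlapped; may be NULL */

/* Fragment: post a packet that carries a bogus key and no OVERLAPPED. */
if (!PostQueuedCompletionStatus(hPort, 0, (ULONG_PTR)-1, NULL)) {
    /* posting failed; call GetLastError() */
}
```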

One common technique is to provide a bogus key value, such as -1, to wake up waiting threads, even though no operation has completed. Waiting threads should test for bogus key values, and this technique can be used, for example, to signal a thread to shut down.
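The sketch below is a toy, portable model of the post/wait/shutdown pattern. It is not the Windows API. `PortPost` and `PortGet` are hypothetical stand-ins for PostQueuedCompletionStatus and GetQueuedCompletionStatus, built on a pthreads mutex and condition variable, with no time-out and no concurrency limit. Workers exit when they dequeue the bogus key.

```c
#include <pthread.h>
#include <stdint.h>

#define PORT_CAPACITY 64
#define SHUTDOWN_KEY  ((uintptr_t)-1)   /* bogus key: "no I/O completed, exit" */

typedef struct { uint32_t bytes; uintptr_t key; } Packet;

typedef struct {
    Packet          q[PORT_CAPACITY];   /* FIFO ring of queued packets */
    int             head, count;
    pthread_mutex_t lock;
    pthread_cond_t  nonEmpty;
} Port;

static void PortInit(Port *p)
{
    p->head = p->count = 0;
    pthread_mutex_init(&p->lock, NULL);
    pthread_cond_init(&p->nonEmpty, NULL);
}

/* Queue a packet and wake one waiting worker; returns 0 if the ring is full. */
static int PortPost(Port *p, uint32_t bytes, uintptr_t key)
{
    int ok = 0;
    pthread_mutex_lock(&p->lock);
    if (p->count < PORT_CAPACITY) {
        p->q[(p->head + p->count) % PORT_CAPACITY] = (Packet){ bytes, key };
        p->count++;
        ok = 1;
        pthread_cond_signal(&p->nonEmpty);
    }
    pthread_mutex_unlock(&p->lock);
    return ok;
}

/* Block until a packet is available; the lock is released while waiting. */
static Packet PortGet(Port *p)
{
    pthread_mutex_lock(&p->lock);
    while (p->count == 0)
        pthread_cond_wait(&p->nonEmpty, &p->lock);
    Packet pk = p->q[p->head];
    p->head = (p->head + 1) % PORT_CAPACITY;
    p->count--;
    pthread_mutex_unlock(&p->lock);
    return pk;
}

typedef struct { Port *port; long processed; } Worker;

/* Worker loop: process packets until the shutdown key arrives. */
static void *WorkerMain(void *arg)
{
    Worker *w = arg;
    for (;;) {
        Packet pk = PortGet(w->port);
        if (pk.key == SHUTDOWN_KEY)
            break;
        w->processed += pk.bytes;       /* "process" the completion */
    }
    return NULL;
}
```

Posting one shutdown packet per worker, after all real work has been queued, lets every worker drain the FIFO and then exit exactly once.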

Alternatives to I/O Completion Ports

Chapter 9 showed how a semaphore can be used to limit the number of ready threads, and this technique is effective in maintaining throughput when many threads compete for limited resources.

We could use the same technique with serverSK (Program 12-2) and serverNP (Program 11-3). All that is required is to wait on the semaphore after the read request completes, perform the request, create the response, and release the semaphore before writing the response. This solution is much simpler than the I/O completion port example in the next section. One problem with this solution is that there may be a large number of threads, each with its own stack space, which will consume virtual memory. The problem can be partly alleviated by carefully measuring the amount of stack space required and then specifying a smaller stack size when creating the threads. Exercise 14–7 involves experimentation with this alternative solution, and there is an example implementation in the Examples file. I/O completion ports also have the advantage that waiting threads are released in last-in, first-out order, so a completion goes to the most recently active thread, whose memory is most likely still in the cache or at least does not need to be paged in.
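The semaphore throttle can be sketched portably. POSIX `sem_wait` and `sem_post` stand in for WaitForSingleObject and ReleaseSemaphore on a Windows semaphore. `ServeOneClient`, `MAX_ACTIVE`, and the busy loop are hypothetical placeholders for a server thread's request handling. Every client still has its own thread, but at most `MAX_ACTIVE` of them run the processing phase at once.

```c
#include <pthread.h>
#include <semaphore.h>

#define MAX_ACTIVE 2                    /* hypothetical limit on ready threads */

static sem_t throttle;
static pthread_mutex_t statsLock = PTHREAD_MUTEX_INITIALIZER;
static int active = 0;                  /* threads currently processing */
static int peakActive = 0;              /* highest value of active observed */

static int ThrottleInit(void) { return sem_init(&throttle, 0, MAX_ACTIVE); }

static void *ServeOneClient(void *arg)
{
    (void)arg;
    /* ... blocking read of the client request would go here (not throttled) ... */
    while (sem_wait(&throttle) != 0)
        ;                               /* retry if interrupted */
    pthread_mutex_lock(&statsLock);
    if (++active > peakActive) peakActive = active;
    pthread_mutex_unlock(&statsLock);

    volatile unsigned long work = 0;    /* stand-in for processing the request */
    for (unsigned long i = 0; i < 100000; i++) work += i;

    pthread_mutex_lock(&statsLock);
    active--;
    pthread_mutex_unlock(&statsLock);
    sem_post(&throttle);                /* release before writing the response */
    /* ... blocking write of the response would go here (not throttled) ... */
    return NULL;
}
```

The semaphore bounds how many threads compete for the processor at once, but not how many threads (and stacks) exist. That is the virtual-memory cost the paragraph above points out.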

There is yet another possibility when creating scalable servers. A limited number of worker threads can take work item packets from a queue (see Chapter 10). The incoming work items can be placed in the queue by one or more boss threads, as in Program 10-5.

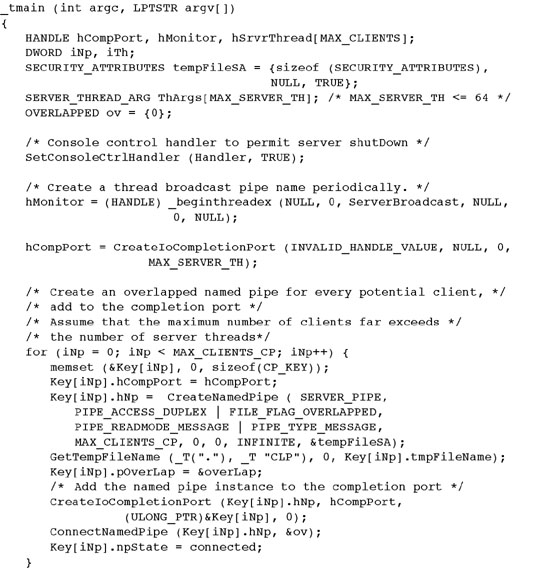

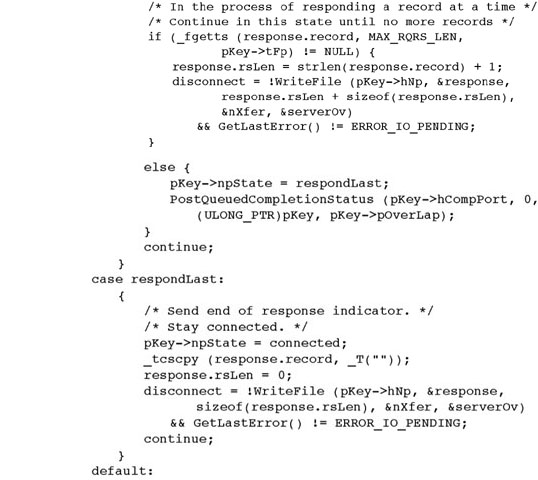

Example: A Server Using I/O Completion Ports

serverCP (Program 14-4) modifies serverNP (Program 11-3) to use I/O completion ports. This server creates a small server thread pool and a larger pool of overlapped pipe handles along with a completion key for each handle. The overlapped handles are added to the completion port and a ConnectNamedPipe call is issued. The server threads wait for completions associated with both client connections and read operations. After a read is detected, the associated client request is processed and returned to the client (clientNP from Chapter 11).

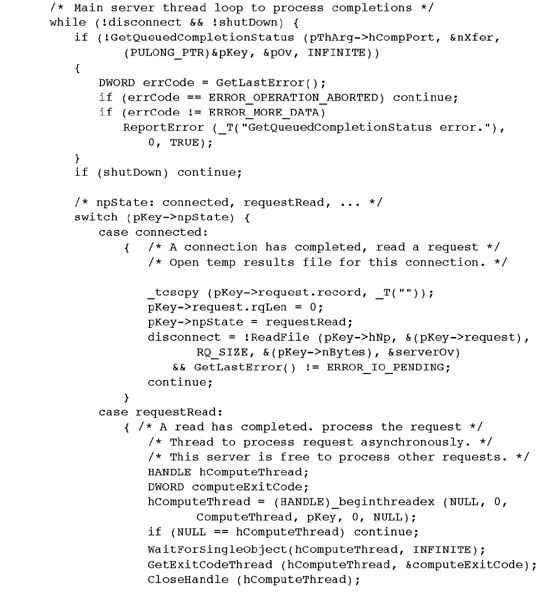

Program 14-4 serverCP: A Server Using a Completion Port

serverCP’s design prevents server threads from blocking during I/O operations or request processing (through an external process). Each client pipe goes through a set of states (see the enum CP_CLIENT_PIPE_STATE type in the listing), and different server threads may process the pipe through stages of the state cycle. The states, which are maintained in a per-pipe CP_KEY structure, proceed as follows:

• connected—The pipe is connected to a client.

• requestRead—The server thread reads a request from the client and starts the process from a separate “compute” thread, which calls PostQueuedCompletionStatus when the process completes. The server thread does not block, since the process management is in the compute thread.

• computed—The server thread reads the response's first record from the temporary file and writes that record to the client.

• responding—The server thread sends additional response records, one at a time, returning to the responding state until the last response record is sent to the client.

• respondLast—The server thread sends a terminating empty record to the client.
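The state cycle above can be sketched as a transition function. The state names follow the text, but the transition rules are an illustration, not the serverCP listing. In particular, the sketch assumes that after the terminating record is sent the pipe returns to `connected` to await the client's next request. `PipeState` and `NextState` are hypothetical names.

```c
/* Hypothetical sketch of the per-pipe state cycle; names follow the text. */
typedef enum { connected, requestRead, computed, responding, respondLast } PipeState;

/* Given the state in which a completion arrives, return the next state.
 * moreRecords: nonzero if the temporary file has another response record. */
static PipeState NextState(PipeState s, int moreRecords)
{
    switch (s) {
    case connected:   return requestRead;  /* connection made: read the request */
    case requestRead: return computed;     /* request read: compute thread posts when done */
    case computed:    return responding;   /* first response record written */
    case responding:  return moreRecords ? responding : respondLast;
    case respondLast: return connected;    /* assumption: await the next request */
    }
    return connected;
}
```

Because the state lives in the per-pipe structure rather than on any thread's stack, a different server thread can advance each step.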

The program listing does not show familiar functions such as the server mailslot broadcast thread function.

Summary

Windows has three methods for performing asynchronous I/O; there are examples of all three, along with performance results, throughout the book to help you decide which to use on the basis of programming simplicity and performance.

Threads provide the most general and simplest technique. Each thread is responsible for one or more sequential, blocking I/O operations. Furthermore, each thread should have its own file or pipe handle.

Overlapped I/O allows a single thread to perform asynchronous operations on a single file handle, but there must be an event handle, rather than a thread and file handle pair, for each operation. The thread must wait specifically for each I/O operation to complete and then perform any required cleanup or sequencing operations.

Extended I/O, on the other hand, automatically invokes the completion code, and it does not require additional events.

The one indispensable advantage provided by overlapped I/O is the ability to create I/O completion ports as mentioned previously and illustrated by a program, cciMTCP.c, in the Examples file. A single server thread can serve multiple clients, which is important if there are thousands of clients; there would not be enough memory for an equivalent number of server threads.

Looking Ahead

Chapter 15 completes the book by showing how to secure Windows objects.

Exercises

14–1. Use asynchronous I/O to merge several sorted files into a larger sorted file.

14–2. Does the FILE_FLAG_NO_BUFFERING flag improve cciOV or cciEX performance? Are there any restrictions on file size? Read the MSDN CreateFile documentation carefully.

14–3. Experiment with the cciOV and cciEX record sizes to determine the performance impact. Is the optimal record size machine-independent? What results do you get on Windows XP and Windows 7?

14–4. Modify TimeBeep (Program 14-3) so that it uses a manual-reset notification timer.

14–5. Modify the named pipe client in Program 11-2, clientNP, to use overlapped I/O so that the client can continue operation after sending the request. In this way, it can have several outstanding requests.

14–6. Rewrite the socket server, serverSK in Program 12-2, so that it uses I/O completion ports.

14–7. Rewrite either serverSK or serverNP so that the number of ready worker threads is limited by a semaphore. Experiment with a large thread pool to determine the effectiveness of this alternative.

14–8. Use JobShell (Program 6-3, the job management program) to bring up a large number of clients and compare the responsiveness of serverNP and serverCP. Networked clients can provide additional load. Determine an optimal range for the number of active threads.

14–9. Modify cciMT to use an NT6 thread pool rather than thread management. What is the performance impact? Compare your results with those in Appendix C.

14–10. Modify cciMT to use overlapped read/write calls with the event wait immediately following the read/write. This should allow you to cancel I/O operations with a user APC; try to do so. Also, does this change affect performance?

14–11. Modify serverCP so that there is no limit on the number of clients. Use PIPE_UNLIMITED_INSTANCES for the CreateNamedPipe nMaxInstances value. You will need to replace the array of CP_KEY structures with dynamically allocated structures.

14–12. Review serverCP’s error and disconnect processing to be sure all situations are covered. Fix any deficiencies.