HPC Benchmarks for CFD

Abstract

This chapter examines the use of high-performance computing in computational fluid dynamics (CFD), a field that makes heavy demands on computing resources. Several benchmark studies are analyzed in detail and discussed in terms of interconnect, storage, and memory requirements. The ANSYS® Fluent®, CFX®, and OpenFOAM benchmarks give an overview of the kinds of problems run on various types of hardware.

Keywords

ANSYS CFX; ANSYS FLUENT; Benchmarks; CFD; HPC; OpenFOAM

1. How Big Should the Problem Be?

Table 6.1

Number of cores suitable for a particular mesh size for Fluent simulation

| Cluster size (no. of cores) | Fluent case size (no. of cells/mesh size) | No. of simultaneous Fluent simulations |

| 8 | Up to 2–3 million | 1 |

| 16 | Up to 2–3 million | 2 |

| 16 | Up to 4–5 million | 1 |

| 32 | Up to 8–10 million | 1 |

| 32 | Up to 4–5 million | 2 |

| 64 | Up to 16–20 million | 1 |

| 64 | Up to 8–10 million | 2 |

| 64 | Up to 4–5 million | 4 |

| 128 | Up to 30–40 million | 1 |

| 256 | Up to 70–100 million | 1 |

| 256 | Up to 30–40 million | 2 |

| 256 | Up to 8–10 million | 4 |

| 256 | Up to 4–5 million | 16 |
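One way to read Table 6.1 is as a cells-per-core guideline. The following is a minimal sketch, assuming the mesh is split evenly across all cores and using only the single-simulation rows of the table; the function and variable names are illustrative and do not come from ANSYS.

```python
# Single-simulation rows of Table 6.1:
# (cores, lower mesh size, upper mesh size), mesh sizes in millions of cells.
TABLE_6_1_SINGLE_RUN = [
    (8, 2, 3),
    (16, 4, 5),
    (32, 8, 10),
    (64, 16, 20),
    (128, 30, 40),
    (256, 70, 100),
]


def cells_per_core(cores, cells_millions):
    """Cells handled by each core, assuming an even split of the mesh."""
    return cells_millions * 1_000_000 / cores


if __name__ == "__main__":
    for cores, low, high in TABLE_6_1_SINGLE_RUN:
        low_load = cells_per_core(cores, low)
        high_load = cells_per_core(cores, high)
        print(f"{cores:4d} cores: {low_load:,.0f} to {high_load:,.0f} cells per core")
```

Under these assumptions every single-simulation row works out to a few hundred thousand cells per core, which is one way to extrapolate the table to cluster sizes it does not list.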

2. Maximum capacity of the critical components of a cluster

2.1. Interconnect

2.2. Memory

2.3. Storage

3. Commercial Software Benchmarks

3.1. ANSYS Fluent Benchmarks

Table 6.3

Storage needs for a particular problem setup in ANSYS Fluent

| Fluent case size (no. of cells/mesh size) | Space requirements |

| 2 Million | 200 MB |

| 5 Million | 1 GB |

| 50 Million | 5 GB |

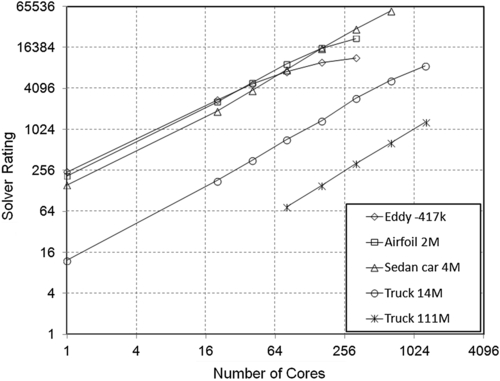

3.1.1. Flow of Eddy Dissipation

Table 6.4

Core solver rating, core solver speedup, and efficiency details for the problem of eddy dissipation

| Processes | Machines | Core solver rating | Core solver speedup | Core solver efficiency |

| Bull with 2.8 GHz turbo | ||||

| 1 | 1 | 243.9 | 1 | 100% |

| 20 | 1 | 2773.7 | 11.4 | 57% |

| 40 | 2 | 4753.8 | 19.5 | 49% |

| 80 | 4 | 7125.8 | 29.2 | 37% |

| 160 | 8 | 9846.2 | 40.4 | 25% |

| 320 | 16 | 11,443.7 | 46.9 | 15% |

| Fujitsu with 2.7 GHz processor | ||||

| 1 | 1 | 185.4 | 1 | 100% |

| 2 | 1 | 361.1 | 1.9 | 97% |

| 4 | 1 | 661.3 | 3.6 | 89% |

| 8 | 1 | 1185.6 | 6.4 | 80% |

| 10 | 1 | 1470.6 | 7.9 | 79% |

| 12 | 1 | 1489.7 | 8 | 67% |

| 24 | 1 | 2421.9 | 13.1 | 54% |

| 48 | 2 | 4670.3 | 25.2 | 52% |

| 96 | 4 | 7697.1 | 41.5 | 43% |

| 192 | 8 | 9573.4 | 51.6 | 27% |

| Fujitsu with 3 GHz processor | ||||

| 1 | 1 | 206.4 | 1 | 100% |

| 2 | 1 | 402 | 1.9 | 97% |

| 4 | 1 | 756.7 | 3.7 | 92% |

| 8 | 1 | 1318.1 | 6.4 | 80% |

| 10 | 1 | 1644.1 | 8 | 80% |

| 20 | 1 | 2769.2 | 13.4 | 67% |

| 40 | 2 | 5112.4 | 24.8 | 62% |

| 80 | 4 | 8093.7 | 39.2 | 49% |

| 160 | 8 | 7819 | 37.9 | 24% |

| 320 | 16 | 8037.2 | 38.9 | 12% |

| IBM with 2.6 GHz processor | ||||

| 16 | 1 | 2168.1 | N/A | N/A |

| 24 | 2 | 3083 | N/A | N/A |

| 32 | 2 | 3945.2 | N/A | N/A |

| 48 | 3 | 5228.4 | N/A | N/A |

| 64 | 4 | 6376.4 | N/A | N/A |

| 96 | 6 | 7783.8 | N/A | N/A |

| 128 | 8 | 9118.7 | N/A | N/A |

| 384 | 24 | 9959.7 | N/A | N/A |

| 1024 | 64 | 11,220.8 | N/A | N/A |


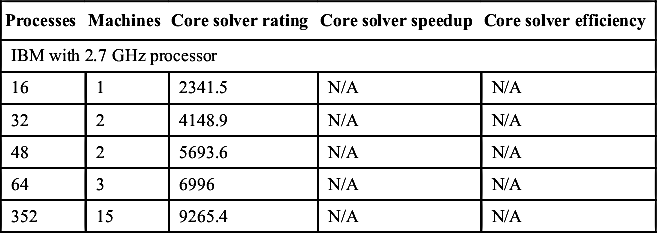

| IBM with 2.7 GHz processor | ||||

| 16 | 1 | 2341.5 | N/A | N/A |

| 32 | 2 | 4148.9 | N/A | N/A |

| 48 | 2 | 5693.6 | N/A | N/A |

| 64 | 3 | 6996 | N/A | N/A |

| 352 | 15 | 9265.4 | N/A | N/A |
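The speedup and efficiency columns appear to follow directly from the rating column: speedup is the rating divided by the single-process rating, and efficiency is the speedup divided by the number of processes. The sketch below recomputes both for the Bull rows of Table 6.4 under that assumption; the function name `scaling` is hypothetical.

```python
# (processes, core solver rating) for the Bull 2.8 GHz turbo rows of Table 6.4.
BULL_2_8_GHZ = [
    (1, 243.9),
    (20, 2773.7),
    (40, 4753.8),
    (80, 7125.8),
    (160, 9846.2),
    (320, 11443.7),
]


def scaling(rows):
    """Return (processes, speedup, efficiency in %) relative to the first row.

    Assumes speedup = rating / reference rating and
    efficiency = speedup * reference processes / processes.
    """
    base_procs, base_rating = rows[0]
    results = []
    for procs, rating in rows:
        speedup = rating / base_rating
        efficiency = speedup * base_procs / procs
        results.append((procs, round(speedup, 1), round(100 * efficiency)))
    return results


if __name__ == "__main__":
    for procs, speedup, efficiency in scaling(BULL_2_8_GHZ):
        print(f"{procs:4d} processes: speedup {speedup:5.1f}, efficiency {efficiency:3d}%")
```

The IBM rows list N/A for speedup and efficiency because they contain no single-process rating to use as the reference.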

3.1.2. Flow Over Airfoil

Table 6.5

Core solver rating, core solver speedup, and efficiency details for the problem of flow over aircraft

| Processes | Machines | Core solver rating | Core solver speedup | Core solver efficiency |

| Bull with 2.8 GHz turbo | ||||

| 1 | 1 | 210.3 | 1 | 100% |

| 20 | 1 | 2583 | 12.3 | 61% |

| 40 | 2 | 4958.4 | 23.6 | 59% |

| 80 | 4 | 9340.5 | 44.4 | 56% |

| 160 | 8 | 15,853.2 | 75.4 | 47% |

| 320 | 16 | 22,012.7 | 104.7 | 33% |

| Fujitsu with 2.7 GHz processor | ||||

| 1 | 1 | 164.9 | 1 | 100% |

| 2 | 1 | 329.9 | 2 | 100% |

| 4 | 1 | 640.4 | 3.9 | 97% |

| 8 | 1 | 1092.3 | 6.6 | 83% |

| 10 | 1 | 1442.4 | 8.7 | 87% |

| 12 | 1 | 1757 | 10.7 | 89% |

| 24 | 1 | 2921.4 | 17.7 | 74% |

| 48 | 2 | 5374.8 | 32.6 | 68% |

| 96 | 4 | 9735.2 | 59 | 61% |

| 192 | 8 | 14,521 | 88.1 | 46% |

| 384 | 16 | 25,985 | 157.6 | 41% |

| Fujitsu with 3 GHz processor | ||||

| 1 | 1 | 184.2 | 1 | 100% |

| 2 | 1 | 368.5 | 2 | 100% |

| 4 | 1 | 719.1 | 3.9 | 98% |

| 8 | 1 | 1327.2 | 7.2 | 90% |

| 10 | 1 | 1575.2 | 8.6 | 86% |

| 20 | 1 | 2721.3 | 14.8 | 74% |


| 40 | 2 | 4979.8 | 27 | 68% |

| 80 | 4 | 7500.3 | 43 | 50% |

| 160 | 8 | 16,225.4 | 88.1 | 55% |

| 320 | 16 | 25,600 | 139 | 43% |

| IBM with 2.6 GHz processor | ||||

| 16 | 1 | 2076.9 | N/A | N/A |

| 24 | 2 | 3110.7 | N/A | N/A |

| 32 | 2 | 3958.8 | N/A | N/A |

| 48 | 3 | 5877.6 | N/A | N/A |

| 64 | 4 | 7697.1 | N/A | N/A |

| 96 | 6 | 11,041.5 | N/A | N/A |

| 128 | 8 | 14,163.9 | N/A | N/A |

| 256 | 16 | 22,887.4 | N/A | N/A |

| 384 | 24 | 27,212.6 | N/A | N/A |

| 512 | 32 | 30,315.8 | N/A | N/A |

| IBM with 2.7 GHz processor | ||||

| 16 | 1 | 2192.9 | N/A | N/A |

| 24 | 1 | 3005.2 | N/A | N/A |

| 48 | 2 | 5798.7 | N/A | N/A |

| 64 | 3 | 7731.5 | N/A | N/A |

| 96 | 4 | 11,006.4 | N/A | N/A |

| 128 | 6 | 13,991.9 | N/A | N/A |

| 192 | 8 | 18,782.6 | N/A | N/A |

| 256 | 11 | 22,736.8 | N/A | N/A |

| 360 | 15 | 26,584.6 | N/A | N/A |

3.1.3. Flow Over Sedan Car

3.1.4. Flow Over Truck Body with 14 Million Cells

Table 6.6

Core solver rating, core solver speedup, and efficiency details for the problem of flow over a sedan car

| Processes | Machines | Core solver rating | Core solver speedup | Core solver efficiency |

| Bull with 2.8 GHz turbo | ||||

| 1 | 1 | 157.4 | 1 | 100% |

| 20 | 1 | 1882.4 | 12 | 60% |

| 40 | 2 | 3818.8 | 24.3 | 61% |

| 80 | 4 | 7663 | 48.7 | 61% |

| 160 | 8 | 15,926.3 | 101.2 | 63% |

| 320 | 16 | 30,315.8 | 192.6 | 60% |

| 640 | 32 | 55,741.9 | 354.1 | 55% |

| Fujitsu with 2.7 GHz processor | ||||

| 1 | 1 | 125.9 | 1 | 100% |

| 2 | 1 | 222 | 1.8 | 88% |

| 4 | 1 | 502.8 | 4 | 100% |

| 8 | 1 | 882.3 | 7 | 88% |

| 10 | 1 | 1175.9 | 9.3 | 93% |

| 12 | 1 | 1125.4 | 8.9 | 74% |

| 24 | 1 | 2053.5 | 16.3 | 68% |

| 48 | 2 | 4085.1 | 32.4 | 68% |

| 96 | 4 | 7944.8 | 63.1 | 66% |

| 192 | 8 | 16,149.5 | 128.3 | 67% |

| 384 | 16 | 29,793.1 | 236.6 | 62% |

| Fujitsu with 3 GHz processor | ||||

| 1 | 1 | 141.2 | 1 | 100% |

| 2 | 1 | 284 | 2 | 101% |

| 4 | 1 | 562.1 | 4 | 100% |

| 8 | 1 | 1066.7 | 7.6 | 94% |

| 10 | 1 | 1269.2 | 9 | 90% |

| 20 | 1 | 1916.8 | 13.6 | 68% |

| 40 | 2 | 3831.5 | 27.1 | 68% |

| 80 | 4 | 7464.4 | 52.9 | 66% |

| 160 | 8 | 14,961 | 106 | 66% |

| 320 | 16 | 27,648 | 195.8 | 61% |

| IBM with 2.6 GHz processor | ||||

| 16 | 1 | 1508.5 | N/A | N/A |

| 24 | 2 | 2285.7 | N/A | N/A |

| 32 | 2 | 3091.2 | N/A | N/A |

| 48 | 3 | 4632.7 | N/A | N/A |


| 64 | 4 | 6149.5 | N/A | N/A |

| 96 | 6 | 9118.7 | N/A | N/A |

| 256 | 16 | 23,351.4 | N/A | N/A |

| 384 | 24 | 32,000 | N/A | N/A |

| 512 | 32 | 38,831.5 | N/A | N/A |

| 1024 | 64 | 54,857.1 | N/A | N/A |

| IBM with 2.7 GHz processor | ||||

| 16 | 1 | 1519.8 | N/A | N/A |

| 24 | 1 | 2068.2 | N/A | N/A |

| 48 | 2 | 4199.3 | N/A | N/A |

| 64 | 3 | 5610.4 | N/A | N/A |

| 96 | 4 | 8307.7 | N/A | N/A |

| 128 | 6 | 11,184.5 | N/A | N/A |

| 192 | 8 | 16,776.7 | N/A | N/A |

| 256 | 11 | 21,735.8 | N/A | N/A |

| 360 | 15 | 29,042 | N/A | N/A |


Table 6.7

Core solver rating, core solver speedup, and efficiency details for the problem of flow over a truck body with 14 million cells

| Processes | Machines | Core solver rating | Core solver speedup | Core solver efficiency |

| Bull with 2.8 GHz turbo | ||||

| 20 | 1 | 180.4 | 14.8 | 74% |

| 40 | 2 | 360.3 | 29.5 | 74% |

| 80 | 4 | 723.6 | 59.3 | 74% |

| 160 | 8 | 1373.6 | 112.6 | 70% |

| 320 | 16 | 2958.9 | 242.5 | 76% |

| 640 | 32 | 5366.5 | 439.9 | 69% |

| 1280 | 64 | 8727.3 | 715.4 | 56% |

| Fujitsu with 2.7 GHz processor | ||||

| 1 | 1 | 8.8 | 1 | 100% |

| 2 | 1 | 19.8 | 2.2 | 112% |

| 4 | 1 | 41.1 | 4.7 | 117% |

| 8 | 1 | 74.6 | 8.5 | 106% |

| 10 | 1 | 93.5 | 10.6 | 106% |

| 12 | 1 | 108.4 | 12.3 | 103% |

| 24 | 1 | 206.4 | 23.5 | 98% |

| 48 | 2 | 371.9 | 42.3 | 88% |

| 96 | 4 | 811.3 | 92.2 | 96% |

| 192 | 8 | 1384.6 | 157.3 | 82% |

| 384 | 16 | 2814.3 | 319.8 | 83% |

| Fujitsu with 3 GHz processor | ||||

| 1 | 1 | 9.3 | 1 | 100% |

| 2 | 1 | 20.4 | 2.2 | 110% |

| 4 | 1 | 45 | 4.8 | 121% |

| 8 | 1 | 79 | 8.5 | 106% |

| 10 | 1 | 99.3 | 10.7 | 107% |

| 20 | 1 | 185.9 | 20 | 100% |

| 40 | 2 | 343 | 36.9 | 92% |

| 80 | 4 | 483 | 51.9 | 65% |

| 160 | 8 | 1259.5 | 135.4 | 85% |

| 320 | 16 | 1624.1 | 174.6 | 55% |

| IBM with 2.6 GHz processor | ||||

| 16 | 1 | 147.2 | N/A | N/A |

| 24 | 2 | 223.8 | N/A | N/A |

| 32 | 2 | 292 | N/A | N/A |

| 48 | 3 | 404.3 | N/A | N/A |

| 64 | 4 | 548.6 | N/A | N/A |

| 96 | 6 | 817.4 | N/A | N/A |


| 128 | 8 | 1082.7 | N/A | N/A |

| 256 | 16 | 2112.5 | N/A | N/A |

| 384 | 24 | 3130.4 | N/A | N/A |

| 512 | 32 | 4056.3 | N/A | N/A |

| 1024 | 64 | 6912 | N/A | N/A |

| IBM with 2.7 GHz processor | ||||

| 16 | 1 | 157 | N/A | N/A |

| 24 | 1 | 203.4 | N/A | N/A |

| 48 | 2 | 400 | N/A | N/A |

| 64 | 3 | 539.3 | N/A | N/A |

| 96 | 4 | 799.3 | N/A | N/A |

| 128 | 6 | 1057.5 | N/A | N/A |

| 192 | 8 | 1627.1 | N/A | N/A |

| 256 | 11 | 2138.6 | N/A | N/A |

| 360 | 15 | 2851.5 | N/A | N/A |

3.1.5. Truck with 111 Million Cells

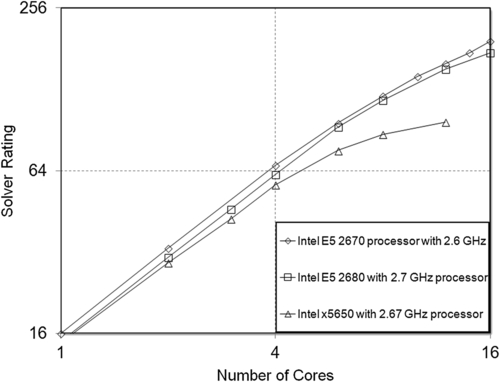

3.1.6. Performance of Different Problem Sizes with a Single Machine

Table 6.8

Core solver rating, core solver speedup, and efficiency details for the problem of flow over a truck body with 111 million cells

| Processes | Machines | Core solver rating | Core solver speedup | Core solver efficiency |

| Bull with 2.8 GHz turbo | ||||

| 80 | 4 | 74 | N/A | N/A |

| 160 | 8 | 154.2 | N/A | N/A |

| 320 | 16 | 322.7 | N/A | N/A |

| 640 | 32 | 654 | N/A | N/A |

| 1280 | 64 | 1303.2 | N/A | N/A |

| Fujitsu with 2.7 GHz processor | ||||

| 96 | 4 | 56.3 | N/A | N/A |

| 192 | 8 | 110 | N/A | N/A |

| 384 | 16 | 318.5 | N/A | N/A |

| Fujitsu with 3 GHz processor | ||||

| 80 | 4 | 56.3 | N/A | N/A |

| 160 | 8 | 128 | N/A | N/A |

| 320 | 16 | 208.2 | N/A | N/A |


| IBM with 2.6 GHz processor | ||||

| 64 | 4 | 56.7 | N/A | N/A |

| 96 | 6 | 79.6 | N/A | N/A |

| 128 | 8 | 107 | N/A | N/A |

| 256 | 16 | 249.9 | N/A | N/A |

| 384 | 24 | 378.9 | N/A | N/A |

| 512 | 32 | 501.7 | N/A | N/A |

| 1024 | 64 | 998.8 | N/A | N/A |

| IBM with 2.7 GHz processor | ||||

| 64 | 3 | 61.4 | N/A | N/A |

| 96 | 4 | 93 | N/A | N/A |

| 128 | 6 | 121.6 | N/A | N/A |

| 192 | 8 | 185.3 | N/A | N/A |

| 256 | 11 | 246.7 | N/A | N/A |

| 360 | 15 | 348.8 | N/A | N/A |

3.2. Benchmarks for CFX

3.2.1. Automotive Pump Simulation

3.2.2. Le Mans Car Simulation

Table 6.9

Benchmarks performed with CFX software for the pump simulation problem

| Processes | Machines | Core solver rating | Core solver speedup | Core solver efficiency |

| HP SL230sG8 with Intel Sandy Bridge (16-core dual CPU) with 64 GB RAM per machine, RHEL 6.2 using FDR Infiniband without turbo mode | ||||

| 1 | 1 | 88 | 1 | 100% |

| 2 | 1 | 162 | 1.84 | 92% |

| 4 | 1 | 285 | 3.24 | 81% |

| 6 | 1 | 381 | 4.33 | 72% |

| 8 | 1 | 483 | 5.49 | 69% |

| 10 | 1 | 580 | 6.59 | 66% |

| 12 | 1 | 691 | 7.85 | 65% |

| 16 | 1 | 847 | 9.63 | 60% |

| 32 | 2 | 1490 | 16.93 | 53% |

| 64 | 4 | 2618 | 29.75 | 46% |

| Intel Sandy Bridge (16-core dual CPU) with 28 GB RAM | ||||

| 1 | 1 | 96.9 | 1 | 100% |

| 2 | 1 | 180.8 | 1.87 | 93% |

| 3 | 1 | 262.6 | 2.71 | 90% |

| 4 | 1 | 317.6 | 3.28 | 82% |

| 6 | 1 | 421.5 | 4.35 | 73% |


| 8 | 1 | 533.3 | 5.51 | 69% |

| 12 | 1 | 732.2 | 7.56 | 63% |

| 16 | 1 | 881.6 | 9.1 | 57% |

| Intel Gulftown/Westmere (12-core dual CPU) with 39 GB RAM | ||||

| 1 | 1 | 83.4 | 1 | 100% |

| 2 | 1 | 149.2 | 1.79 | 89% |

| 3 | 1 | 214.9 | 2.58 | 86% |

| 4 | 1 | 265 | 3.18 | 79% |

| 6 | 1 | 334.9 | 4.02 | 67% |

| 8 | 1 | 407.5 | 4.89 | 61% |

| 12 | 1 | 496.6 | 5.95 | 50% |

Table 6.10

Core solver rating, core solver speedup, and efficiency details for the problem of flow over a Le Mans car body with ANSYS CFX software

| Processes | Machines | Core solver rating | Core solver speedup | Core solver efficiency |

| HP SL230sG8 with Intel Sandy Bridge (16-core dual CPU) with 64 GB RAM per machine, RHEL 6.2 using FDR Infiniband without turbo mode | ||||

| 1 | 1 | 53 | 1 | 100% |

| 2 | 1 | 111 | 2.09 | 105% |

| 4 | 1 | 211 | 3.98 | 100% |

| 6 | 1 | 294 | 5.55 | 92% |

| 8 | 1 | 374 | 7.06 | 88% |

| 10 | 1 | 441 | 8.32 | 83% |

| 12 | 1 | 514 | 9.7 | 81% |

| 14 | 1 | 580 | 10.94 | 78% |

| 16 | 1 | 617 | 11.64 | 73% |

| 32 | 2 | 1168 | 22.04 | 69% |

| 64 | 4 | 1920 | 36.23 | 57% |

| Intel Sandy Bridge (16-core dual CPU) with 28 GB RAM | ||||

| 1 | 1 | 57.9 | 1 | 100% |

| 2 | 1 | 120.8 | 2.09 | 104% |

| 3 | 1 | 169.7 | 2.93 | 98% |

| 4 | 1 | 230.4 | 3.98 | 100% |

| 6 | 1 | 322.4 | 5.57 | 93% |

| 8 | 1 | 413.4 | 7.14 | 89% |

| 12 | 1 | 543.4 | 9.39 | 78% |

| 16 | 1 | 640 | 11.06 | 69% |

| Intel Gulftown/Westmere (12-core dual CPU) with 39 GB RAM | ||||

| 1 | 1 | 50.4 | 1 | 100% |

| 2 | 1 | 103.1 | 2.04 | 102% |

| 3 | 1 | 139.1 | 2.76 | 92% |

| 4 | 1 | 187 | 3.71 | 93% |

| 6 | 1 | 244.8 | 4.85 | 81% |

| 8 | 1 | 296.9 | 5.89 | 74% |

| 12 | 1 | 347 | 6.88 | 57% |

3.2.3. Airfoil Simulation

Table 6.11

Core solver rating, core solver speedup, and efficiency details for the problem of flow over an airfoil

| Processes | Machines | Core solver rating | Core solver speedup | Core solver efficiency |

| HP SL230sG8 with Intel Sandy Bridge (16-core dual CPU) with 64 GB RAM per machine, RHEL 6.2 using FDR Infiniband without turbo mode | ||||

| 1 | 1 | 16 | 1 | 100% |

| 2 | 1 | 33 | 2.06 | 103% |

| 4 | 1 | 67 | 4.19 | 105% |

| 6 | 1 | 96 | 6 | 100% |

| 8 | 1 | 121 | 7.56 | 95% |

| 10 | 1 | 143 | 8.94 | 89% |

| 12 | 1 | 159 | 9.94 | 83% |

| 14 | 1 | 175 | 10.94 | 78% |

| 16 | 1 | 193 | 12.06 | 75% |

| Intel Sandy Bridge (16-core dual CPU) with 28 GB RAM | ||||

| 1 | 1 | 14.9 | 1 | 100% |

| 2 | 1 | 30.6 | 2.06 | 103% |

| 3 | 1 | 46.1 | 3.09 | 103% |

| 4 | 1 | 62 | 4.17 | 104% |


| 6 | 1 | 92.9 | 6.24 | 104% |

| 8 | 1 | 116.6 | 7.83 | 98% |

| 12 | 1 | 151.8 | 10.2 | 85% |

| 16 | 1 | 174.9 | 11.75 | 73% |

| Intel Gulftown/Westmere (12-core dual CPU) with 39 GB RAM | ||||

| 1 | 1 | 14.8 | 1 | 100% |

| 2 | 1 | 29.2 | 1.97 | 98% |

| 3 | 1 | 42.4 | 2.86 | 95% |

| 4 | 1 | 56.7 | 3.82 | 96% |

| 6 | 1 | 76.1 | 5.13 | 86% |

| 8 | 1 | 87.3 | 5.88 | 74% |

| 12 | 1 | 96.9 | 6.53 | 54% |

4. OpenFOAM® Benchmarking

Table 6.12

Flow conditions for the cavity flow problem

| Reynolds number | 1000 |

| Kinematic viscosity | 0.0001 m²/s |

| Cube dimension | 0.1 × 0.1 × 0.1 m |

| Lid velocity | 1 m/s |

| deltaT | 0.0001 s |

| Number of time steps | 200 |

| Solutions written to disk | 8 |

| Solver for pressure equation | Preconditioned conjugate gradient (PCG) with diagonal incomplete Cholesky smoother (DIC) |

| Decomposition method | Simple |
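The flow conditions in Table 6.12 can be cross-checked with a few lines of arithmetic. The sketch below recomputes the Reynolds number from the lid velocity, cube edge, and kinematic viscosity, and derives the simulated time and write interval. The Courant number estimate additionally assumes a uniform 100 × 100 × 100 mesh (1 million cells), which the table does not state; all names are illustrative.

```python
# Values taken from Table 6.12.
LID_VELOCITY = 1.0           # m/s
CUBE_EDGE = 0.1              # m
KINEMATIC_VISCOSITY = 1e-4   # m^2/s
DELTA_T = 1e-4               # s
TIME_STEPS = 200
SOLUTIONS_WRITTEN = 8


def reynolds_number(velocity, length, nu):
    """Re = U * L / nu."""
    return velocity * length / nu


def courant_number(velocity, dt, dx):
    """Co = U * dt / dx for a cell of width dx."""
    return velocity * dt / dx


if __name__ == "__main__":
    re = reynolds_number(LID_VELOCITY, CUBE_EDGE, KINEMATIC_VISCOSITY)
    print(f"Reynolds number: {re:.0f}")
    print(f"Simulated time: {TIME_STEPS * DELTA_T:.3f} s")
    print(f"Time steps between writes: {TIME_STEPS // SOLUTIONS_WRITTEN}")

    # Assumption (not in the table): uniform mesh with 100 cells per edge.
    cells_per_edge = 100
    dx = CUBE_EDGE / cells_per_edge
    co = courant_number(LID_VELOCITY, DELTA_T, dx)
    print(f"Courant number at lid speed: {co:.2f}")
```

The recomputed Reynolds number matches the value of 1000 listed in the table.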

Table 6.13

Problem scale span and number of cores

| Nodes (cores) | Mesh size 1M | Mesh size 3.4M | Mesh size 8M | Mesh size 15.6M | Mesh size 27M |

| 1N (16 cores) | Yes | Yes | Yes | Yes | No |

| 2N (32 cores) | Yes | Yes | Yes | Yes | Yes |

| 4N (64 cores) | Yes | Yes | Yes | Yes | Yes |

| 9N (144 cores) | Yes | Yes | Yes | Yes | Yes |

| 18N (288 cores) | Yes | Yes | Yes | Yes | Yes |

| 27N (432 cores) | Yes | Yes | Yes | Yes | Yes |

| 36N (576 cores) | Yes | Yes | Yes | Yes | Yes |

| 72N (1152 cores) | Yes | Yes | Yes | Yes | Yes |

| 144N (2304 cores) | Yes | Yes | Yes | Yes | Yes |

| 288N (4608 cores) | No | No | Yes | Yes | Yes |
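The node and core counts in Table 6.13 are consistent with 16 cores per node. As a rough illustration, the sketch below converts a node count to cores and estimates the number of cells per core for a given mesh, assuming an even decomposition and the nominal mesh sizes from the table header; the helper names are hypothetical.

```python
CORES_PER_NODE = 16  # implied by the 1N (16 cores) row of Table 6.13

# Nominal mesh sizes from the table header, in cells.
MESH_SIZES = {
    "1M": 1_000_000,
    "3.4M": 3_400_000,
    "8M": 8_000_000,
    "15.6M": 15_600_000,
    "27M": 27_000_000,
}


def cores_for_nodes(nodes):
    """Total cores for a given number of nodes."""
    return nodes * CORES_PER_NODE


def cells_per_core(mesh_cells, nodes):
    """Average cells per core, assuming an even decomposition."""
    return mesh_cells / cores_for_nodes(nodes)


if __name__ == "__main__":
    for nodes in (1, 36, 288):
        for label in ("8M", "27M"):
            load = cells_per_core(MESH_SIZES[label], nodes)
            print(f"{nodes:3d}N, {label} mesh: {load:,.0f} cells per core")
```

At the largest scale each core holds only a few thousand cells, so communication is likely to dominate. This may be why the smallest meshes were not run on 288 nodes, although the table itself gives no reason.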

5. Case Studies of Some Renowned Clusters

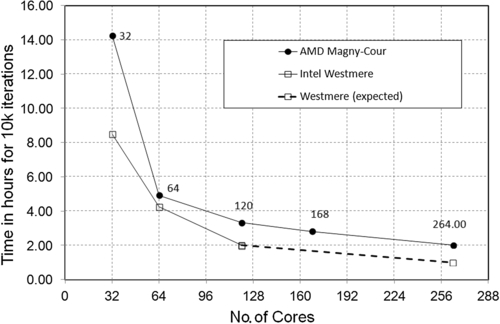

5.1. t-Platforms' Lomonosov HPC

5.2. Lomonosov

5.2.1. Engineering Infrastructure

5.2.2. Infrastructure Facts

Table 6.14

Key features of the Lomonosov cluster

| Features | Values |

| Peak performance | 420 teraflops (heterogeneous nodes) |

| Real performance | 350 teraflops |

| LINPACK efficiency | 83% |

| Number of compute nodes | 4446 |

| Number of processors | 8892 |

| Number of processor cores | 35,776 |

| Primary compute nodes | T-Blade 2 |

| Secondary compute nodes | T-Blade 1.1, PeakCell S |

| Processor type of primary compute node | Intel Xeon X5570 |

| Processor type of secondary compute node | Intel Xeon X5570, PowerXCell 8i |

| Total RAM installed | 56 TB |

| Primary interconnect | QDR Infiniband |

| Secondary interconnect | 10G Ethernet, Gigabit Ethernet |

| External storage | Up to 1350 TB, t-Platforms ready storage SAN 7998–Lustre |

| Operating system | ClusterX t-Platforms edition |

| Total covered area occupied by system | 252 m² |

| Power consumption | 1.36 MW |
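The LINPACK efficiency in Table 6.14 is the ratio of real to peak performance. A small sketch recomputes it, along with a performance-per-watt figure derived from the listed power consumption. The per-watt figure is a derived estimate, not a value reported in the table, and the function names are illustrative.

```python
# Values taken from Table 6.14.
PEAK_TFLOPS = 420.0
REAL_TFLOPS = 350.0
POWER_MW = 1.36


def linpack_efficiency(real_tflops, peak_tflops):
    """Fraction of peak performance achieved in the LINPACK run."""
    return real_tflops / peak_tflops


def gflops_per_watt(real_tflops, power_mw):
    """Sustained gigaflops delivered per watt of power consumption."""
    gflops = real_tflops * 1e3   # 1 teraflops = 1000 gigaflops
    watts = power_mw * 1e6       # 1 MW = 1,000,000 W
    return gflops / watts


if __name__ == "__main__":
    efficiency = linpack_efficiency(REAL_TFLOPS, PEAK_TFLOPS)
    print(f"LINPACK efficiency: {100 * efficiency:.0f}%")
    print(f"Energy efficiency: {gflops_per_watt(REAL_TFLOPS, POWER_MW):.3f} gigaflops per watt")
```

The recomputed efficiency rounds to the 83% listed in the table.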

5.2.3. Key Features

5.3. Benchmarking on TB-1.1 Blades