4. Conversational Interactions Using Java

The last two chapters covered service-based development using the SCA Java programming model. Putting this into practice in Chapter 3, “Service-Based Development Using Java,” we refactored the BigBank loan application to reflect SCA best practices by taking advantage of loose coupling and asynchronous interactions. In this chapter, we continue our coverage of the Java programming model, focusing on building conversational services.

Conversational Interactions

Services in distributed applications are often designed to be stateless. In stateless architectures, the runtime does not maintain state on behalf of a client between operations. Rather, contextual information needed by the service to process a request is passed as part of the invocation parameters. This information may be used by the service to access additional information required to process a request in a database or backend system. In the previous chapters, the BigBank application was designed with stateless services: LoanService and CreditService use a loan ID as a key to manually store and retrieve loan application data for processing.

There are, however, many distributed applications that are designed around stateful services or could benefit from stateful services, as they are easier to write and require less code than stateless designs. Perhaps the most common example of such a distributed stateful application is a Java EE-based web application that enables users to log in and perform operations. The operations—such as filling a shopping cart and making a purchase—involve a client and server code (typically servlets and JSPs) sharing contextual information via an HTTP session. If a web application had to manage state manually, as opposed to relying on the session facilities provided by the servlet container, the amount of code and associated complexity would increase.

SCA provides a number of facilities for creating stateful application architectures. In this chapter, we explore how the SCA Java programming model can be used to have the runtime manage and correlate state so that service implementations do not need to do so in code. Chapter 10, “Service-Based Development Using BPEL,” covers how to write conversational services using BPEL.

A Conversation

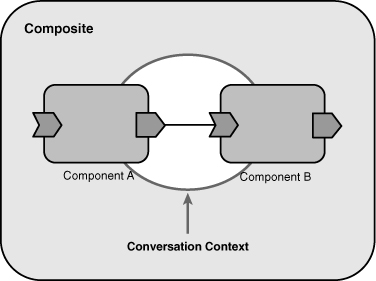

In SCA, a conversation is defined as a shared context between a client and service. For example, the interactions between LoanComponent and CreditService could be modeled as conversational, as shown in Figure 4.1.

Figure 4.1 A conversational interaction

The conversation shown in Figure 4.1 has a start operation (LoanComponent requests a credit check), a series of continuing operations (get status and request for more information), and two end operations (cancel or result).

Conversations in SCA have a number of unique characteristics. First, in line with loosely coupled interactions, conversations may last for a brief period of time (for example, milliseconds) or for a long duration (for example, months). A second characteristic is that conversations involve shared state between two parties: a client and a service. In the previous example, the shared context is the loan applicant information.

The main difference between conversational and stateless interactions is that the former automatically generate and pass contextual information for the conversation as part of the messages sent between the client and service (typically as message headers). With conversational interactions, the client does not need to generate the context information, and neither the client nor the service needs to “remember” or track this information and send it with each operation invocation. Runtimes support this by passing a conversation ID, which is used to correlate contextual information such as the loan applicant information. This is similar to a session ID in Java EE web applications, which is passed between a browser and a servlet container to correlate session information.

An application may be composed of multiple conversational services. How is conversational context shared between clients and service providers? Before delving into the details of implementing conversational services, we first need to consider how conversational contexts are created and propagated among multiple services.

In Figure 4.2, Component A acts as a client to the conversational service offered by Component B, creating a shared conversation context. As long as the conversation is active, for each invocation A makes to the service offered by B, a single conversational context is in effect.

Figure 4.2 A conversation visualized

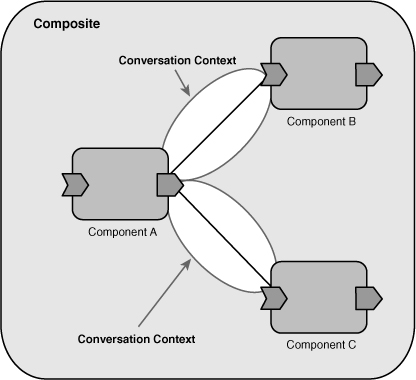

Because a conversation is between a client and a single service, if Component A invokes a conversational service offered by a third component, C, a separate conversational context is created, as shown in Figure 4.3.

Figure 4.3 Multiple conversations

It is important to note that conversational context is not propagated across multiple invocations. If Component A invoked a conversational service on B, which in turn invoked another conversational service on C, two conversations would be created, as shown in Figure 4.4.

Figure 4.4 Conversations are not propagated across multiple invocations.

In this situation, although SCA considers there to be two different conversations, it is likely that the two conversations will have closely coupled lifetimes. When the conversation between Components B and C is complete, Component B will probably also complete its conversation with A. However, this is not always the case, and there are situations where multiple conversations between B and C will start and stop during a single conversation between A and B.

The preceding discussion of conversations may seem somewhat complex on first read. A simple way to grasp conversations is to remember that a conversation in SCA is always between two parties (a client and service). If the client or service interacts with another service, a second conversation is created.

Conversational Services

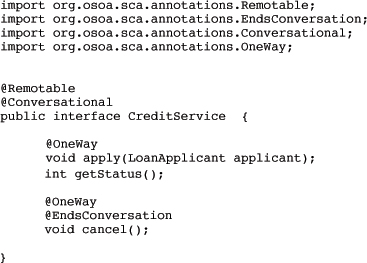

In Java, conversational services are declared using the @Conversational annotation on an interface, as shown in Listing 4.1.

Listing 4.1 A Conversational Service
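A minimal sketch of such an interface, based on the operations described in this section, might look as follows. The two annotations are declared locally as stand-ins so that the sketch compiles without an SCA runtime; in an SCA application they would be imported from org.osoa.sca.annotations. The LoanApplicant type is a hypothetical placeholder.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Stand-ins for the SCA annotations of the same names (assumption: declared
// locally only so this sketch compiles without an SCA runtime on the classpath).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface Conversational {}

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface EndsConversation {}

// Hypothetical data type describing the loan applicant.
class LoanApplicant {
    final String name;

    LoanApplicant(String name) {
        this.name = name;
    }
}

@Conversational
interface CreditService {

    // Intended to start a conversation.
    void apply(LoanApplicant applicant);

    // Intended for a conversation that is already underway; continues it.
    int getStatus();

    // Intended for a conversation that is already underway; ends it.
    @EndsConversation
    void cancel();
}
```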

The preceding interface also makes use of the @EndsConversation annotation. This annotation is used to indicate which service operation or operations (@EndsConversation may be used on multiple operations) end a conversation. It is not necessary to use an annotation to mark operations that may start or continue a conversation, because any operation on a conversational interface will continue an existing conversation, if one is underway, or start a conversation if one is not.

In the previous listing, CreditService.apply(..) is intended to be used for initiating a conversation, whereas CreditService.getStatus() and CreditService.cancel() are intended to be used for already existing conversations (applications in process). The cancel() operation will also end the conversation. After an operation marked with @EndsConversation is invoked and completes processing, conversational resources such as the shared context may be cleaned up and removed. A subsequent invocation of another operation will result in a new conversation being started.

The fact that the getStatus() and cancel() operations have to be used only for existing conversations is not captured by an annotation, so it should be noted in the documentation on those methods. It is up to the application code for those operations to generate an exception if they are used to start a conversation.

SCA doesn’t define annotations for starting or continuing conversations because they are really just a special case for more complex rules regarding which operations should be called before which other operations. For example, if the loan application service were more complicated and it included an operation for locking in an interest rate, that operation would only be legal during certain phases of the application process. This kind of error needs to be checked by the application code. SCA only includes the @EndsConversation annotation because the infrastructure itself needs to know when the conversation has ended, so that it can free up the resources that were associated with the conversation.

Implementing Conversational Services

Having covered the key aspects of defining a conversational service, we now turn to implementing one. When writing a conversational service implementation, the first decision that needs to be made is how conversational state is maintained. In SCA, developers have two options: They can use the state management features provided as part of SCA, or they can write custom code as part of the implementation.

Conversation-Scoped Implementations

Developers can have the SCA runtime manage conversational state by declaring an implementation as conversation-scoped. This works the same way as other scopes—that is, via the use of the @Scope("CONVERSATION") annotation, as in the extract shown in Listing 4.2.

Listing 4.2 A Conversation-Scoped Implementation
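An extract along these lines could look like the following sketch. The @Scope annotation is a local stand-in for the SCA annotation of the same name, and the trimmed-down CreditService contract and placeholder method bodies are assumptions made to keep the example short.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Stand-in for the SCA @Scope annotation (assumption: declared locally so the
// sketch compiles without an SCA runtime).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface Scope {
    String value();
}

// Hypothetical, trimmed-down service contract for this extract.
interface CreditService {
    int getStatus();

    void cancel();
}

// Asks the runtime to keep one instance of this class per conversation.
@Scope("CONVERSATION")
class CreditComponent implements CreditService {

    public int getStatus() {
        return 0; // placeholder status
    }

    public void cancel() {
        // placeholder: nothing to clean up in this extract
    }
}
```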

Conversation-scoped implementations work just like other scopes. As discussed in Chapter 3, the SCA runtime is responsible for dispatching to the correct instance based on the client making the request. Because conversations exist over multiple service invocations, the runtime will dispatch to the same instance as long as the conversation remains active. If multiple clients invoke a conversational service, the requests will be dispatched to multiple implementation instances, as illustrated in Figure 4.5.

Figure 4.5 Runtime dispatching to conversation-scoped instances
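The dispatching behavior can be pictured with a toy model that keys implementation instances by conversation ID. This is only an illustration of the idea, not how any particular SCA runtime is implemented; the ConversationDispatcher class and its method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Supplier;

// Toy model of per-conversation dispatching (hypothetical; a real runtime also
// deals with concurrency, expiration, and possibly persistence).
final class ConversationDispatcher<T> {

    private final Map<String, T> instances = new HashMap<>();
    private final Supplier<T> factory;

    ConversationDispatcher(Supplier<T> factory) {
        this.factory = factory;
    }

    // Returns the instance bound to the conversation, creating it on first use.
    T instanceFor(String conversationId) {
        return instances.computeIfAbsent(conversationId, id -> factory.get());
    }

    // Releases the instance, as could happen after an @EndsConversation operation.
    void end(String conversationId) {
        instances.remove(conversationId);
    }
}
```

Two requests carrying the same conversation ID reach the same instance, while a request from a different conversation gets its own instance.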

Note that by default, a component implementation is considered conversation-scoped if it implements a conversational interface. That is, if a service contract is annotated with @Conversational, the component implementation class does not need to specify @Scope("CONVERSATION").

Because the runtime handles dispatching to the correct implementation instance based on the current conversation, conversation state can be stored in member variables on the component implementation. This allows service contracts to avoid having to pass contextual data as part of the operation parameters. A modified version of CreditComponent demonstrates the use of conversational state (see Listing 4.3).

Listing 4.3 Storing Conversational State in Instance Variables
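A sketch of what such an implementation might look like is shown next. SCA annotations are omitted so that the code compiles on its own; in an SCA application the contract would be marked @Conversational and the class would be conversation-scoped. The status codes, the LoanApplicant type, and the guard that rejects calls made before apply(..) are assumptions for illustration.

```java
// Hypothetical data type describing the loan applicant.
class LoanApplicant {
    final String name;

    LoanApplicant(String name) {
        this.name = name;
    }
}

// Trimmed contract; in SCA it would be @Conversational, with cancel() marked
// @EndsConversation.
interface CreditService {
    void apply(LoanApplicant applicant);

    int getStatus();

    void cancel();
}

// With conversation scope, the runtime routes every call in a conversation to
// the same instance, so plain fields can hold the conversational state.
class CreditComponent implements CreditService {

    static final int SUBMITTED = 1; // hypothetical status codes
    static final int CANCELLED = 2;

    private LoanApplicant applicant;
    private int status;

    public void apply(LoanApplicant applicant) {
        this.applicant = applicant;
        this.status = SUBMITTED;
    }

    public int getStatus() {
        requireStarted();
        return status;
    }

    public void cancel() {
        requireStarted();
        status = CANCELLED;
    }

    // Application-level check: these operations must not start a conversation.
    private void requireStarted() {
        if (applicant == null) {
            throw new IllegalStateException("No application in process");
        }
    }
}
```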

In this implementation of CreditComponent, the applicant and status variables are associated with the current conversation and will be available as long as the conversation remains active.

In loosely coupled systems, interactions between clients and conversational services may occur over longer periods of time, perhaps months. Although SCA says nothing about how conversational instances are maintained, some SCA runtimes may choose to persist instances to disk or some other form of storage (for example, a database). Persistence may be done to free memory and, more importantly, provide reliability for conversational services; information stored in memory may be lost if a hardware failure occurs. Some runtimes may also allow conversational persistence to be configured, for example by disabling it when reliability is not required. As demonstrated in the previous example, however, how conversational state is stored is transparent from the perspective of application code. The developer needs only to be aware that Java serialization and deserialization may occur between operations of the component.

Custom State Management

In some situations, implementations may elect to manage state as opposed to relying on the SCA runtime. This is often done for performance reasons or because the conversational state must be persisted in a particular way. Implementations that manage their own state must correlate the current conversation ID with any conversation state. This will usually involve obtaining the conversation ID and performing a lookup of the conversational state based on that ID.

In this scenario, the conversation ID is still system-generated, and it is passed in a message header rather than as a parameter (using whatever approach to headers is appropriate for the binding being used). Because of this, the interface is still considered to be conversational. The fact that the conversational state is explicitly retrieved through application logic, rather than automatically maintained by the infrastructure, is an implementation detail that does not need to be visible to the client of the service. The fact that it is conversational, however, is still visible, because the client needs to know that a sequence of operations will be correlated to each other without being based on any information from the messages.

The current conversation ID can be obtained through injection via the @ConversationID annotation. The following version of the CreditComponent uses the conversation ID to manually store state using a special ConversationalStorageService. The latter could store the information in memory using a simple map or persistently using a database (see Listing 4.4).

Listing 4.4 Manually Maintaining Conversational State
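The approach could be sketched as follows. The @ConversationID annotation is a local stand-in for the SCA annotation of the same name, and the field is set by hand where an SCA runtime would inject it. The map-backed ConversationalStorageService and its method names are hypothetical; the text names the service but does not define its API.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.HashMap;
import java.util.Map;

// Stand-in for the SCA @ConversationID annotation (assumption: declared locally
// so the sketch compiles without an SCA runtime).
@Retention(RetentionPolicy.RUNTIME)
@Target({ElementType.FIELD, ElementType.METHOD})
@interface ConversationID {}

// Hypothetical data type describing the loan applicant.
class LoanApplicant {
    final String name;

    LoanApplicant(String name) {
        this.name = name;
    }
}

// Hypothetical storage service; backed by a map here, a database is equally possible.
class ConversationalStorageService {
    private final Map<String, LoanApplicant> store = new HashMap<>();

    void put(String conversationId, LoanApplicant applicant) {
        store.put(conversationId, applicant);
    }

    LoanApplicant get(String conversationId) {
        return store.get(conversationId);
    }

    void remove(String conversationId) {
        store.remove(conversationId);
    }
}

class CreditComponent {

    // Injected by the runtime in SCA; assigned by hand in this sketch.
    @ConversationID
    protected String conversationID;

    // Would typically be an injected reference in SCA.
    private final ConversationalStorageService storage;

    CreditComponent(ConversationalStorageService storage) {
        this.storage = storage;
    }

    public void apply(LoanApplicant applicant) {
        storage.put(conversationID, applicant); // state is keyed by conversation ID
    }

    public LoanApplicant currentApplicant() {
        return storage.get(conversationID);
    }

    public void cancel() {
        storage.remove(conversationID); // release state when the conversation ends
    }
}
```

Because the state lives in the storage service rather than in the component, any instance that receives the same conversation ID sees the same applicant.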

Similar to other injectable SCA-related information, the @ConversationID annotation may be used on public and protected fields or setter methods.

Expiring Conversations

In loosely coupled systems, conversational services cannot rely on clients to be well behaved and call an operation marked with @EndsConversation to signal that conversational resources can be released. Clients can fail or a network interruption could block an invocation from reaching its target service. To handle these scenarios, SCA provides mechanisms for expiring a conversation using the @ConversationAttributes annotation. @ConversationAttributes is placed on a Java class and can be used to specify a maximum idle time and maximum age of a conversation. The maxIdleTime of a conversation defines the maximum time that can pass between operation invocations within a single conversation. The maxAge of a conversation denotes the maximum time a conversation can remain active. If the container is managing conversational state, it may free resources, including removing implementation instances, associated with an expired conversation. In the example shown in Listing 4.5, the maxIdleTime between invocations in the same conversation is set to 30 days.

Listing 4.5 Setting Conversation Expiration Based on Idle Time
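A sketch of this setting follows. The @ConversationAttributes annotation is a local stand-in for the SCA annotation of the same name, reduced to the two attributes discussed here; the CreditService contract is trimmed to a single placeholder operation.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Stand-in for the SCA @ConversationAttributes annotation (assumption: declared
// locally, with only the two attributes discussed in the text).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface ConversationAttributes {
    String maxIdleTime() default "";

    String maxAge() default "";
}

// Hypothetical, trimmed-down service contract.
interface CreditService {
    int getStatus();
}

// The conversation may be expired if more than 30 days pass between invocations.
@ConversationAttributes(maxIdleTime = "30 days")
class CreditComponent implements CreditService {

    public int getStatus() {
        return 0; // placeholder status
    }
}
```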

Similarly, the example in Listing 4.6 demonstrates setting the maxAge of a conversation to 30 days.

Listing 4.6 Setting Conversation Expiration Based on Duration
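The maxAge variant might be sketched in the same way. As before, @ConversationAttributes is a local stand-in for the SCA annotation, and the trimmed CreditService contract is an assumption.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Stand-in for the SCA @ConversationAttributes annotation (assumption: declared
// locally, with only the two attributes discussed in the text).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface ConversationAttributes {
    String maxIdleTime() default "";

    String maxAge() default "";
}

// Hypothetical, trimmed-down service contract.
interface CreditService {
    int getStatus();
}

// The conversation may be expired 30 days after it starts, however active it is.
@ConversationAttributes(maxAge = "30 days")
class CreditComponent implements CreditService {

    public int getStatus() {
        return 0; // placeholder status
    }
}
```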

The @ConversationAttributes annotation allows maxAge and maxIdleTime to be specified in seconds, minutes, hours, days, or years. The value of the attribute is an integer followed by the scale, as in “15 minutes.”

Conversational Services and Asynchronous Interactions

Conversational services can be used in conjunction with non-blocking operations and callbacks to create loosely coupled interactions that share state between the client and service provider.

Non-Blocking Invocations

Operations can be made non-blocking on a conversational service using the @OneWay annotation discussed in Chapter 3 (see Listing 4.7).

Listing 4.7 Using Conversations with Non-Blocking Operations
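A sketch of the service contract with non-blocking operations follows. All three annotations are local stand-ins for the SCA annotations of the same names, and LoanApplicant is a hypothetical placeholder type.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Stand-ins for the SCA annotations of the same names (assumption: declared
// locally so the sketch compiles without an SCA runtime).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface Conversational {}

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface EndsConversation {}

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface OneWay {}

// Hypothetical data type describing the loan applicant.
class LoanApplicant {
    final String name;

    LoanApplicant(String name) {
        this.name = name;
    }
}

@Conversational
interface CreditService {

    // Non-blocking: control returns to the client immediately.
    @OneWay
    void apply(LoanApplicant applicant);

    // Still a blocking request-response operation.
    int getStatus();

    // Non-blocking, and ends the conversation once processed.
    @OneWay
    @EndsConversation
    void cancel();
}
```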

The preceding example makes the CreditService.apply(..) and CreditService.cancel() operations non-blocking, where control is returned immediately to the client, even before the operation request has been sent to the service provider. This is different from just having the operation return void without having been marked with the @OneWay annotation. Without the @OneWay annotation, the client doesn’t regain control until the operation completes, so the client developer knows that if the operation returns without throwing an exception, the operation has successfully completed.

By contrast, a @OneWay operation may not start until long after the client program has moved beyond the point where the operation was called. This means that the client cannot assume that the operation has successfully completed, or even that the operation request has been able to reach the service provider. Often, in this scenario, it will be advisable to require reliable delivery of the message so that the request is not lost merely because the client or the service provider crashes at an inopportune time. Reliable delivery can be guaranteed by using SCA’s policy intent mechanism. In this case, the annotation would be @Requires("ExactlyOnce"). This will constrain the deployer to configure the runtime to use some form of reliable delivery. Policy intents are described in more detail in Chapter 6, “Policy.”

Using reliable delivery will not, however, help with the fact that the client code can’t see exceptions that are raised by the @OneWay operation. When developing these operations, if an error is discovered in the way the client has invoked the service (an invalid parameter, for example), the error must be sent back to the client through the callback interface, which is described in the next section.

Note that the CreditComponent implementation does not need to change; if the implementation is conversation-scoped, the SCA runtime will continue to manage state, even if the invocation is made in an asynchronous manner.

Callbacks

In addition to non-blocking operations, conversational services may also be used in conjunction with callbacks. Like operations on CreditService, callback operations can be annotated with @EndsConversation. Invoking a callback method marked with @EndsConversation will end the current conversation started by the client. Listing 4.8 shows the CreditServiceCallback interface.

Listing 4.8 Using Conversations with Callbacks
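One possible shape for the callback interface and its link to the service contract is sketched below. The annotations are local stand-ins for the SCA annotations of the same names. The operation name onCreditResult and the use of a plain int score are assumptions; the text states only that the credit score result is passed back.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Stand-ins for the SCA annotations of the same names (assumption: declared
// locally so the sketch compiles without an SCA runtime).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface Conversational {}

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface EndsConversation {}

@Retention(RetentionPolicy.RUNTIME)
@Target({ElementType.TYPE, ElementType.FIELD, ElementType.METHOD})
@interface Callback {
    Class<?> value() default Void.class;
}

// Forward contract: names the interface the client must implement for callbacks.
@Conversational
@Callback(CreditServiceCallback.class)
interface CreditService {
    void apply(String applicantName);
}

// Callback contract implemented by the client.
interface CreditServiceCallback {

    // Delivering the result ends the conversation started by the client.
    @EndsConversation
    void onCreditResult(int score); // hypothetical operation name and parameter
}
```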

Accessing the callback from the CreditComponent is no different than accessing it from a stateless implementation (see Listing 4.9).

Listing 4.9 Accessing a Callback During a Conversation
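A sketch of a component that uses an injected callback might look as follows. The @Callback annotation is a local stand-in for the SCA annotation, and the field is assigned by hand where an SCA runtime would inject a proxy. The onCreditResult operation, the LoanApplicant type, and the fixed placeholder score are assumptions for illustration.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;

// Stand-in for the SCA @Callback annotation (assumption: declared locally so
// the sketch compiles without an SCA runtime).
@Retention(RetentionPolicy.RUNTIME)
@Target({ElementType.TYPE, ElementType.FIELD, ElementType.METHOD})
@interface Callback {
    Class<?> value() default Void.class;
}

// Hypothetical data type describing the loan applicant.
class LoanApplicant {
    final String name;

    LoanApplicant(String name) {
        this.name = name;
    }
}

interface CreditServiceCallback {
    void onCreditResult(int score); // hypothetical operation name
}

@Callback(CreditServiceCallback.class)
interface CreditService {
    void apply(LoanApplicant applicant);
}

class CreditComponent implements CreditService {

    static final int PLACEHOLDER_SCORE = 700; // hypothetical fixed score

    // Injected by the runtime in SCA; assigned by hand in this sketch.
    @Callback
    protected CreditServiceCallback callback;

    private LoanApplicant applicant; // conversational state

    public void apply(LoanApplicant applicant) {
        this.applicant = applicant;
        // A real implementation would compute a score; only the result goes back.
        callback.onCreditResult(PLACEHOLDER_SCORE);
    }
}
```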

Callbacks to Conversational and Stateless Clients

Perhaps the most common conversational interaction pattern involving callbacks is when both the client and service provider are conversation-scoped. In this case, callbacks from the service provider will be dispatched to the originating client instance. This allows clients and service providers to avoid passing context information as service parameters. The CreditComponent in the previous listing was written with the assumption that the client is conversational. When CreditServiceCallback is called, only the credit score result is passed back and not the entire loan application. Because the LoanComponent is conversation-scoped, it can keep a reference to the loan application in an instance variable prior to making the original credit score request and access it when the callback is received.
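The client side of this pattern could be sketched as follows. SCA annotations are omitted so that the code compiles on its own; in an SCA application LoanComponent would be conversation-scoped and creditService would be an injected reference. The LoanApplication type, the onCreditResult operation, and the approval threshold are assumptions for illustration.

```java
// Hypothetical loan application data held by the client.
class LoanApplication {
    final String id;
    final String applicantName;

    LoanApplication(String id, String applicantName) {
        this.id = id;
        this.applicantName = applicantName;
    }
}

// Trimmed contracts; in SCA both would be conversational and linked by @Callback.
interface CreditService {
    void apply(String applicantName);
}

interface CreditServiceCallback {
    void onCreditResult(int score); // hypothetical operation name
}

// Conversation scope means the callback reaches the same instance that made
// the forward call, so the application can be read back from a field.
class LoanComponent implements CreditServiceCallback {

    static final int MIN_SCORE = 650; // hypothetical approval threshold

    protected CreditService creditService; // a reference in SCA; set by hand here

    private LoanApplication application; // conversational state
    private String decision;

    public void apply(LoanApplication application) {
        this.application = application; // remember it before the credit request
        creditService.apply(application.applicantName);
    }

    public void onCreditResult(int score) {
        // Only the score is passed back; the rest comes from instance state.
        String outcome = score >= MIN_SCORE ? "APPROVED" : "DECLINED";
        decision = application.id + ":" + outcome;
    }

    public String getDecision() {
        return decision;
    }
}
```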

Although conversation-scoped clients and service providers are likely to be the norm when callbacks are used in conversational interactions, it is possible to have a stateless client and conversational service provider. We conclude this section with a brief discussion of this scenario.

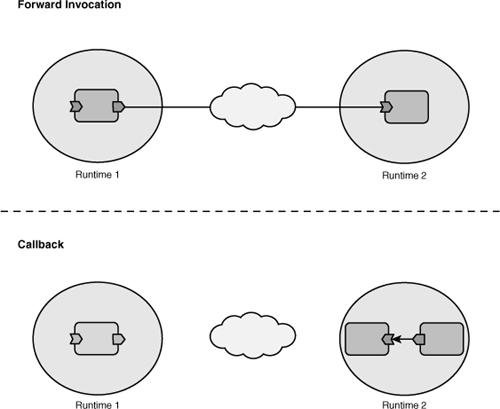

If the original LoanComponent client were stateless, the callback invocation would most likely be dispatched by the runtime to a different instance. Figure 4.6 illustrates how callbacks are dispatched when the client is stateless.

Figure 4.6 Callback dispatching to a stateless client

The advantage to using stateless clients is that the runtime can perform a callback optimization. Because a callback to a stateless client does not have to be dispatched to the same client instance that originated the forward invocation, the runtime can route the callback to an instance co-located with the service provider. Figure 4.7 depicts how this optimization is performed.

Figure 4.7 Routing a callback to a co-located stateless client

Conversation Propagation

In SCA, conversations are between two parties: a client and service provider. However, there are situations where it is useful to allow other services to participate in a conversation. Fabric3 provides the capability to propagate conversations to other services.

Figure 4.8 shows a conversation initiated by an interaction between Components A and B propagated to C.

Figure 4.8 Conversation propagation

When the conversation is propagated, all requests from A to B and B to C will be dispatched to the same component instances. Conversational propagation can be enabled on individual components (for example, on Components A and B in the preceding example) or on an entire composite. For simplicity, it is recommended that conversation propagation be enabled on a per-composite basis. This is done using the requires attribute in a composite file (see Listing 4.10).

Listing 4.10 Setting Conversation Propagation for a Composite

We haven’t yet discussed what the requires attribute is, and it may seem a bit strange that it is used instead of setting an attribute named propagatesConversation to true. In SCA, the requires attribute is the way to declare a policy, in this case for a composite. We will cover policy in Chapter 6, but for now think of the requires attribute as a way to declare that for all components in the composite, conversation propagation is required to be in effect. The other thing to note is the use of the Fabric3 namespace, http://fabric3.org/xmlns/sca/1.0. Because conversation propagation is a proprietary Fabric3 feature (we could say “policy”), it is specified using the Fabric3 namespace.

The lifecycle of a conversation that is propagated to multiple participant services is handled in the same way as a two-party conversation. That is, it can be expired using the @ConversationAttributes annotation or by calling a method annotated with @EndsConversation. There are two caveats to note, however, with multiparty conversations. First, if @ConversationAttributes is used, the expiration time is determined by the values set on the first component starting the conversation. Second, @EndsConversation should generally be used only on services initiating a conversation. In our A-B-C example, @EndsConversation should be specified on the service contract for A, or a callback interface implemented by A. Otherwise, if a conversation is ended on B or C and there is a callback to A, a conversation expiration error will be raised.

To conclude our discussion of conversation propagation, it is worth briefly taking into account the diamond problem. The diamond problem arises when interactions among four or more components form a “diamond.” For example, Figure 4.9 illustrates A invoking B and C, which in turn invoke D.

Figure 4.9 The diamond problem

As shown in Figure 4.9, if conversation propagation is enabled for the composite, when B and C invoke D, they will dispatch to the same instance of D. Similarly, if D invoked callbacks to B and C, which in turn invoked a callback to A, the same instance of A would be called. Finally, if the callback from C to A ended the conversation, conversational resources held by the runtime for A, B, C, and D would be cleaned up.

Summary

In this chapter, we covered designing and implementing conversational services. The conversational capabilities provided by SCA simplify application code by removing the need to pass context information as service operation parameters and manually manage state in component implementations. Having concluded the majority of our discussion of the SCA Java programming model, in Chapter 5, “Composition,” we return to assembling composites and in particular deal with how to architect application modularity through the SCA concept of “composition.”