In this chapter we will explore game audio implementation. Armed with an understanding of the theory and practice of game audio asset creation from previous chapters, we will explore planning for audio implementation by considering engine capabilities, target platforms, and resources. The core of this chapter will cover asset preparation, implementation, and dynamic mixing, and will finish with testing, optimization, and reiteration.

Implementation Basics

In previous chapters we explored the challenges presented in nonlinear media and how the player’s input affects the game state, leaving audio designers unable to determine exactly when a particular audio event will trigger. However, we can plan ahead to answer these challenges during the implementation process. Implementation is essentially the process of assimilating audio into the game engine so that audio can trigger synchronously with other game events. Using a variety of implementation methods we can create dynamic audio systems that adapt seamlessly to gameplay events.

Implementation Methods

There are several foundational methods within the process of audio implementation. As an audio designer you will work with one or more programmers to determine the best tools and techniques for implementation. The budget and schedule, as well as your team’s work capacity and level of knowledge and experience, will all be factors in deciding these tools and techniques. Implementation tools include the game engine1 (Unity, Unreal, or a proprietary engine), which is a software development environment for building games, and game audio middleware (Wwise, FMOD), which is a third-party audio-focused software environment that sits between the game engine and the audio designer. The term middleware in the tech world means software that acts as a bridge or connection between other software or systems. Essentially, audio middleware puts more control into the audio designer’s hands, with less scripting and programming to deal with.

Let’s break down a few typical scenarios in which audio assets are implemented into games. The “asset cannon” approach is the simplest form as it consists of the audio designer delivering assets on spec (concerning file type and compression) to the programmer, who will then implement the audio into the game engine natively. While this approach may seem like a good choice for those who feel a bit uncomfortable with the more technical side of game audio, the downside is a lack of control over assets once delivered. It also places the burden of finalizing audio on the programmer. Not all programmers have a deep understanding of audio concepts and theory, so by working in this way the team’s expertise is not being fully exploited. This approach can also lead to many more iterative reviews and adjustments as the programmer becomes the primary workhorse for completing modifications and updates as advised and requested by the sound team.

This method of implementation can also result in the wrong real-time effects being applied, or inconsistencies in compression and volume. With this delivery process you should insist on testing builds once the sounds are in place. Also be sure to build time into the schedule to make changes if necessary. Testing and iteration are the best way to deliver effective game audio using the asset cannon approach.

Implementation of audio natively into an engine is a step above the asset cannon approach. With this approach the audio designer has direct access to the game engine and can import assets and tweak components herself. Compared to the asset cannon approach, direct native implementation saves a lot of the back-and-forth time with the programmer when mixing and making revisions. However, an audio designer not familiar with the engine or uncomfortable with scripting might find it a bit more difficult to achieve all of their audio goals.

Audio implementation natively into the engine is limited to the resources of said engine, and heavily dependent on scripting for audio behaviours beyond play and loop. However, there are plugins like Tazman-Audio’s Fabric and Dark Tonic’s Master Audio which extend the game engine’s native audio functionality, allowing for complex and rich audio behaviours while reducing time spent scripting.

When the programmer agrees to use an audio middleware solution such as Audiokinetic Wwise (Audiokinetic), FMOD (Firelight Technologies), Criware ADX2 (CRI Middleware), or Elias Studio (Elias), it can be the best-case scenario for both the programming team and the audio designer. It’s important to note that these middleware solutions have licensing costs which are indie-dev friendly but should be considered in the process.

Middleware solutions are built to better utilize a game development team’s expertise. With any middleware, whether it is for audio, physics, or anything else, the goal is to allow the experts in each discipline to do what they do best. An audio middleware solution will allow you to control the creation of the sound and music as well as the implementation and mixing of those assets. During development you will spend more time working on audio (as you should) and less time trying to explain to the programmer how the audio should work. The development team will then have more time to spend on other programming tasks and the audio team will have more capacity to make the game sound great!

Later in the chapter we will explore the methods discussed above in greater detail. At times we may generalize the game engine’s audio function and/or audio middleware with the term audio engine.

Integration and Testing

Later in the chapter we will break down the specific functions of implementation and testing, but for now let’s take a macro look at what is involved.

Regardless of the implementation method utilized, the game engine sits at the core of development. A considerable amount of scripting will be required, regardless of who is implementing the audio assets. Audio assets imported into the game engine can be easily attached to game objects – empty containers placed in a game engine that “hold” gameplay instructions (see “Game Engines” later in this chapter for more details). Scripts are then attached as a component onto each game object. A script is basically a set of instructions which tell the engine what to do with the audio assets. At the time of writing this text, game engines are “smart,” but do not operate with much common sense. The future of AI and machine learning might tip that scale, but for now we can assume we need to provide specific instructions to the engine for proper triggering of audio events. Later in the chapter we will break down the key features and functions that make up native engine implementation. We will then take you through a tutorial on the companion site to tie it all together.

As we mentioned previously, audio middleware offers the audio designer control over many functions like randomization of pitch and volume, playlists, beat sync, and more. These features are made easily accessible without scripting in a graphical user interface. Later on we will discuss in detail the various features available in middleware, but for now let’s talk about how it is integrated into the game engine.

Integration is the process of synchronizing a middleware tool with a game engine. Some middleware developers offer integration packages for supported game engines, while others rely on a comprehensive API. Either approach will configure the two pieces of software so they can communicate and share data. A programmer typically completes the integration before sharing the project with the team. After an audio event is created and configured in a middleware program, the audio designer can export what is called a sound bank (or bank) to be shared along with the engine. The sound bank provides the audio events and configuration information, which can be used to hook them into the game engine.

During the integration package installation, a specific set of file paths is set up to tell the game engine where to find these sound banks once exported. The sound bank contains all of the information the game engine needs to trigger the audio events as instructed in middleware. The programmer will need to do some light scripting to trigger the events and connect game parameters. Middleware offers users precise control over specific audio event properties through features such as real-time parameter control (RTPC), which is driven by data values generated by the game engine. RTPC is fundamentally a way to automate multiple audio parameters in real time, and can be used to create fades, volume and effects automation, and mixes that change on the fly.
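As a rough illustration of the RTPC idea, the sketch below maps one game value onto two audio parameters using piecewise-linear curves. The parameter name `player_health`, the curve points, and the function names are all hypothetical; real middleware exposes this through its own authoring tools and API rather than through code like this.

```python
from bisect import bisect_right

def evaluate_curve(points, x):
    """Piecewise-linear interpolation through sorted (x, y) points, clamped at both ends."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)

# Hypothetical curves: as health drops, the music gets quieter and more muffled.
VOLUME_DB_CURVE = [(0.0, -12.0), (50.0, -3.0), (100.0, 0.0)]
LOWPASS_HZ_CURVE = [(0.0, 800.0), (100.0, 20000.0)]

def apply_rtpc(player_health):
    """Return the audio parameter values driven by a single game parameter."""
    return {
        "volume_db": evaluate_curve(VOLUME_DB_CURVE, player_health),
        "lowpass_hz": evaluate_curve(LOWPASS_HZ_CURVE, player_health),
    }
```

In this sketch the game would call `apply_rtpc` whenever the health value changes, and the audio engine would apply the returned values. One game parameter drives several audio parameters at once, which is the behaviour described above.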

It’s important to note that native audio implementation into a game engine offers some control of audio based on parameter values, but typically offers limited flexibility and requires additional scripting.

Implementation Cycle

Implementation is a very important step, and (like game audio itself) it is not linear. Your audio assets will be created in a DAW, then imported directly into the game engine or middleware tool for testing. The process is circular in that you will continue to move between these software applications as you create, implement, and test, and then create again. Testing often leaves the audio designer with revisions or re-works, which takes the process back to the audio engine or DAW, and so on.

Visiting Artist: Jeanine Cowen, Composer, Sound Designer

Thoughts on Implementation

Implementation, when it’s done right, opens up new opportunities that the audio designer hadn’t imagined at the outset. Finding a balance between the creativity of sound design and music and the problem solving of implementation is often delicate. Working in game audio implementation is both a technical and a creative job. There is no wrong or right way, there is only, how do we get this audio to sound great and help to enhance the game. Rather than view it as a struggle, the good implementers I know see it as controlled guidance. Like the painter who only had the natural dyes found in the real world, they work with the materials they have rather than trying to force their resources to be something they aren’t. If you find yourself struggling with getting something to work, it might be time to step back and re-ask yourself the question “what is it that I want the player to experience right now?” This question should guide you and allow you to get out of the weeds that can sometimes consume our focus as implementation specialists.

Implementation for Immersion

The level of sonic immersion (or aural cohesion) in a game comes down to the level of detail and planning in the audio implementation. In Chapter 2 as an exercise we asked you to close your eyes and listen. Sound is all around us. Since the days when we heard our very first sounds we understood these details to be cues with valuable information. The same is true of the sonic experience in games. Sounds give players details about the environment, immersing them in the game world.

While sound is clearly used to create affective response in films, in games there is a level of interactivity that also influences the player’s emotional state. In a game scene the player will experience a different level of intensity of music and sound when they are exploring than they will when experiencing an enemy attack. Sound will act as a warning of danger to the player; it prepares them for this transition in gameplay intensity. Sound reacts to the player’s choices and in turn provides the player with information that influences their actions. In this way audio creates momentum for the narrative, and reinforces it with aural feedback. This idea is supported by the Gamasutra article, “How Does In-Game Audio Affect Players?”2 Here, Raymond Usher examines the results of a study in which players were monitored while playing games with and without sound. The results showed higher heart rates and slightly higher respiration rates in the audio group. This suggests that audio plays a crucial part in our integration and immersion into game scenes.

Playlists and Randomization

Without variation and a certain amount of generative aspects in the sound, games would feel static and lifeless. Gone are the days of the Super Mario Bros. footstep bleeps that repeat over and over again. Variety in even the smallest sonic details can go a long way to making the experience more believable. In film and other linear media we can add variation manually as we build each sequence and it stays synced to the visuals. With interactive media we must create dynamic systems and implement them into the audio engine to vary the soundscape. Sound effects are typically delivered with a few slight audio variations and then grouped into playlists. These playlists can then be randomized in the engine. In addition, the audio engine can also apply randomization to certain properties like pitch and volume. But this is just the tip of the iceberg.

By breaking down a single sound into multiple playlists (sometimes called random containers or multi-instruments) we can add variety and dynamism. For example, an explosion sound could be broken down into attack, sustain, and release tail playlists. The game engine or middleware tool would then randomly choose a sound within each playlist to trigger at the right moment, thus creating unpredictable combinations each time the event is triggered. On top of that, the three containers can be programmed with slight randomizations on pitch and volume as well. This takes our random possibilities and exponentially increases their variability (see Figure 8.1).

Figure 8.1 Screenshot of multi-instruments in FMOD Studio.
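The layered-playlist idea can be sketched in a few lines of code. The example below is only an illustration: the file names, the three variations per layer, and the randomization ranges are all assumptions, and a middleware tool would normally handle this through its random containers or multi-instruments.

```python
import random

# Hypothetical file names for the three layers of one explosion event.
EXPLOSION_LAYERS = {
    "attack": ["exp_attack_01", "exp_attack_02", "exp_attack_03"],
    "sustain": ["exp_sustain_01", "exp_sustain_02", "exp_sustain_03"],
    "tail": ["exp_tail_01", "exp_tail_02", "exp_tail_03"],
}

def count_combinations(layers):
    """Number of distinct file combinations, before pitch/volume randomization."""
    total = 1
    for variations in layers.values():
        total *= len(variations)
    return total

def trigger_event(layers, rng, pitch_range_cents=100.0, volume_range_db=2.0):
    """Pick one variation per layer and give each a small random pitch and volume offset."""
    voices = []
    for layer, variations in layers.items():
        voices.append({
            "layer": layer,
            "file": rng.choice(variations),
            "pitch_cents": rng.uniform(-pitch_range_cents, pitch_range_cents),
            "volume_db": rng.uniform(-volume_range_db, 0.0),
        })
    return voices
```

With three variations in each of three layers, `count_combinations(EXPLOSION_LAYERS)` gives 27 distinct combinations from only nine files, and the pitch and volume offsets add further variation on top of that.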

Loops

In Chapter 2 on the companion site we discussed creating and preparing looped assets for use in game. Here we will focus on implementing them. By default, implemented audio events will play once from beginning to end. Depending on the method of implementation, it will be necessary to set instructions for the audio engine to loop an asset instead of triggering it just once. This process is usually relatively easy, regardless of the implementation medium, and loops can be set to either repeat indefinitely or a predetermined number of times.

Audio designers make use of loop functions within the audio engine to get more mileage out of audio assets, which helps keep the memory footprint minimal. Loops also compensate for the differences in timing of each playthrough. Imagine if the soundtrack needed to be as long as the game. Sixty hours of music would certainly take up a large amount of memory and be CPU intensive to stream, not to mention the time it would take to compose. Implementing loops instead of longer linear cues makes the soundtrack more efficient and adaptable. Looping events aren’t just for music either; they can also be used for continuity of background ambience and continuous triggered sound effects.

Looping events is a great solution for keeping memory and CPU usage in check, but the designer must be mindful of avoiding repetition. The length of the loop is something to consider based on how long a player might spend in a specific area where the loop is triggered. We will discuss these benefits and some challenges of looping later in the chapter.
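To put the sixty-hour soundtrack mentioned above into numbers, here is a small worked calculation. It assumes uncompressed 48 kHz, 24-bit stereo audio; a shipped game would normally compress and stream its music, so treat the result as an upper bound rather than a real-world figure.

```python
def pcm_size_bytes(seconds, sample_rate=48_000, bit_depth=24, channels=2):
    """Size of uncompressed PCM audio: samples per second x bytes per sample x channels."""
    return int(seconds * sample_rate * (bit_depth // 8) * channels)

SIXTY_HOURS = 60 * 60 * 60   # sixty hours expressed in seconds
TWO_MINUTE_LOOP = 2 * 60     # a hypothetical two-minute music loop, in seconds

linear_score_gb = pcm_size_bytes(SIXTY_HOURS) / 1e9
loop_mb = pcm_size_bytes(TWO_MINUTE_LOOP) / 1e6
```

Under these assumptions the sixty-hour score comes to roughly 62 GB of raw audio, while the two-minute loop is about 35 MB. The exact numbers will change with format and compression, but the difference in scale is the point.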

Dynamic Mixing

Mixing for linear media is a process implemented during the post-production stage of film or TV. At this stage designers ensure that signal level, dynamics, frequency content, and positioning of audio are all polished and ready for distribution. However, because games are nonlinear we are essentially working with a moving target when mixing. Dynamic mixing is a multi-faceted process which requires the same attention to signal level, dynamics, frequency content, and positioning, but also requires that the mix be flexible enough to change along with the game state. To do this designers often use snapshots, which allow audio engines to trigger mix changes in synchronization with visual events. Later in this chapter we will discuss in greater detail dynamic mix systems and resource management.
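A snapshot can be thought of as a named set of mix values that the engine moves toward when the game state changes. The sketch below is a simplified illustration: the bus names, the dB values, and the linear blend in decibels are all assumptions, and real audio engines offer their own snapshot or mixer-state systems with configurable transition curves.

```python
def blend_snapshots(current, target, progress):
    """Linearly interpolate bus volumes (in dB) from one snapshot toward another.

    progress is clamped to the range 0.0 (all current) to 1.0 (all target).
    """
    progress = max(0.0, min(1.0, progress))
    return {
        bus: current[bus] + (target[bus] - current[bus]) * progress
        for bus in current
    }

# Hypothetical snapshots: bus name -> volume in dB.
EXPLORE = {"music": -6.0, "ambience": -9.0, "sfx": -3.0}
COMBAT = {"music": -3.0, "ambience": -18.0, "sfx": 0.0}
```

Calling `blend_snapshots(EXPLORE, COMBAT, 0.5)` halfway through a transition returns a mix in which the ambience is already ducking while the music and sound effects are rising, which is the kind of state-driven change described above.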

Implementation Preparation

Before we dive into the micro details of audio engines and asset implementation, let’s discuss preparing assets. We will start with the process of mapping out the implementation system, and then we will move into exporting assets ensuring proper volume level, format, and file naming.

Sound Spotting

In the film and television world spotting is the process of determining how the musical score and sound effects will be tied to visuals. During this process the sound designer and/or composer will sit down with the director to review scenes and determine the hit points, or moments that require audio emphasis. In games there is a similar process, but it is often less formal. A game design document (or a GDD) may be provided as a guide for narrative and gameplay. It may contain a synopsis of the story, concept art, and gameplay mechanics. A style of music or a list of musical references may be included to guide the sonic direction. Early gameplay in the form of a build (a pre-release playable version of the game) may be provided to the audio team to prepare concept audio for review. Regardless of how formal or informal the process might be, there is still a need for this pre-production phase.

If audio references from other games are provided, the audio designer should have a good idea of the direction to take. Direction is an important part of the process as visuals can often work well with different sonic styles. Choosing a direction during the spotting process will clarify exactly what style and mood the audio needs to be in to best support the game. For example, a sci-fi shooter as a descriptive can be interpreted in a few ways. Metal Gear Solid and Dead Space are both in the sci-fi genre, but the former is militaristic and technology-driven while the latter is dark and at times horrific. These two games would each require their own particular audio direction to be chosen during the spotting stage.

The spotting session might simply consist of the audio designer playing a build and creating an asset list to review with the developer. Sometimes the game developer will already have a list of assets in mind and present these to the audio designer. Should you receive an asset list as an audio designer it is wise to review the list with the build to ensure sound events have not been overlooked. These asset lists should be kept in a spreadsheet and shared with the rest of the team. A good asset list will allow for new content to be added and the status of assets to be tracked and shared over a cloud-based app like Google Docs for real-time updates. In Chapter 2 we directed you to the Sound Lab (companion site) for a review of a typical asset list.

Building the Sonic Maze

After spotting the game you should have a clearer understanding of the types of assets required. The next step is planning how those assets can be implemented so they sell the scene and support the narrative. We call this building the sonic maze. Essentially you are building the walls of the maze through which the player will move. Your game will consist of a number of assets including sound effects, music, and dialogue. As the audio designer it’s your job to ensure all audio events are implemented with purpose, and are responsive to the player’s input. You must identify the placement of these sound events in the game world by determining where in the scene a player should hear the various sounds as they move about the environment. Taking into account the full player experience and how it impacts the way sounds are triggered is key to effective audio implementation.

To understand how to map out the sounds we must break down the game and its core mechanic(s), just as we did with the animations in Chapter 3. To better understand this, let’s explore a hypothetical sci-fi game. The game will be a third-person shooter, which consists of a player character armed with an automatic weapon and four types of robot as the non-player characters (NPCs). The game is set in a high-tech lab containing five rooms per level with a total of three levels. Inside each room there is various machinery and technology such as LCD monitors (with visual static on the screen) and air vents.

The target platform is mobile and we will be using middleware along with our game engine. Since we know our game is being developed for a mobile platform we will need to be resourceful with memory and CPU usage. Later in the chapter we will discuss ways to manage resources per platform, but for now we just need to understand that there are processing and memory limitations on mobile platforms which are not as much of an issue on desktop or console platforms.

Now that we have an idea of our game, let’s start with mapping out the ambient soundscape of our lab. We can take a macro look at all three game levels and decide what the ambience will be in each of the five rooms. We should first break down room size based on how much technology is in each room. This will help us define our background ambient loop, which will act as the glue to all of our other in-game sounds.

Next, we can take a more micro look at the elements that will emit sound from localized spaces. Let’s imagine one of the rooms contains an air vent, two LCD monitors, and a large mainframe computer system. We would plan to hook sound emitters on those game objects to add some variation to the scene. They will blend with our background ambience, but will work as detailed focal points that bring the ambience to life. Just as sounds in the real world originate from various sources, we need individual sounds in the game world to add depth to the ambience. Once we have a working version of this game (vertical slice pre-alpha build) we can experiment with more complex adaptivity in the environment. We can decide how close or far away a player needs to be to hear certain sounds, and whether or not the acoustics of the location will require reverb or echo effects.
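Deciding how close a player must be to hear an emitter usually comes down to a minimum distance, a maximum distance, and a rolloff between them. The following sketch uses a simple linear rolloff; the emitter names, positions, and distances are hypothetical, and engines typically offer several rolloff curves (often logarithmic) rather than this exact formula.

```python
import math

def distance_gain(distance, min_distance=1.0, max_distance=15.0):
    """Linear rolloff: full volume inside min_distance, silent at or beyond max_distance."""
    if distance <= min_distance:
        return 1.0
    if distance >= max_distance:
        return 0.0
    return 1.0 - (distance - min_distance) / (max_distance - min_distance)

# Hypothetical emitters in one lab room: name -> (x, y) position in meters.
EMITTERS = {
    "air_vent": (0.0, 0.0),
    "lcd_monitor_a": (6.0, 0.0),
    "mainframe": (12.0, 9.0),
}

def emitter_gains(listener_pos, emitters):
    """Gain (0.0 to 1.0) of each emitter as heard from the listener's position."""
    return {
        name: distance_gain(math.dist(listener_pos, pos))
        for name, pos in emitters.items()
    }
```

A player standing at the air vent would hear it at full volume, the nearby monitor at a reduced level, and the distant mainframe not at all. Adjusting `min_distance` and `max_distance` per emitter is one way to experiment with how localized each sound feels.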

Next we can plan player character sounds, which include weapon fire and footsteps. Starting with footsteps, we would look to the gameplay and visuals to see how to create variation. In our game scene let’s say there are two different floor types, one being solid metal and the other being a thin, wooden platform. With this information we can adapt the footsteps so the sounds change with each floor type. We can continue to think about adding layers of cloth movement and using randomization within our middleware to ensure our footsteps transition and vary smoothly per terrain.
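The surface-dependent footsteps described above can be sketched as a lookup from floor type to playlist, with a rule that the same file never plays twice in a row. The surface names, file names, and the `FootstepPlayer` class are hypothetical, and how the game reports the current surface is left out of this sketch.

```python
import random

# Hypothetical playlists, one per floor type in the lab.
FOOTSTEPS = {
    "metal": ["fs_metal_01", "fs_metal_02", "fs_metal_03", "fs_metal_04"],
    "wood": ["fs_wood_01", "fs_wood_02", "fs_wood_03", "fs_wood_04"],
}

class FootstepPlayer:
    """Chooses a random footstep for a surface while avoiding immediate repeats."""

    def __init__(self, playlists, rng=None):
        self.playlists = playlists
        self.rng = rng or random.Random()
        self.last_played = None

    def next_step(self, surface):
        # Exclude the previously played file so the same sample never repeats back to back.
        candidates = [f for f in self.playlists[surface] if f != self.last_played]
        choice = self.rng.choice(candidates)
        self.last_played = choice
        return choice
```

Each animation footfall would call `next_step` with the surface under the character. Cloth layers and pitch or volume randomization could be added on top in the same way.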

At this point we have taken a very broad look at the sonic maze we would create for this game. From here we would continue with the weapon and robot enemy sounds. These are in a sense simpler because the animation and gameplay will give us specifics in how to create and implement the proper assets. These sounds would be the focal point of the audio because the gameplay is based around combat. We would then employ our numerous techniques from Chapter 3 to create complex and detailed assets for implementation. Although this was a quick overview of the process of implementation, it has served the purpose of introducing a general workflow which we will dive into in the following sections. For now, let’s continue by discussing the preparation of assets for implementation.

Preparing Assets

Delivering quality audio assets begins at the very start of your recording and editing process. A poorly recorded sound that is then trimmed and normalized is not going to sound as polished as a sound that was recorded at a healthy volume to begin with. Working in games is no different than working in a music or post-production studio in this respect. You will want to optimize your workflow and the path the audio takes from beginning to end as much as possible. When preparing assets for implementation there are a few things that should be considered to make the workflow a smooth process. Let’s explore those here.

Mastering Assets

Mastering is a process you may be familiar with in regard to preparing a final soundtrack mix for distribution. The process ensures consistency across all the music tracks in the album. Typically a mastering engineer will work with a full stereo mix to enhance the sonic character and correct any balance issues with EQ, compression, and limiting. This level of polish is what you are used to hearing when you download an album.

With game audio the assets aren’t in a linear mix that can be processed in a static stereo or surround file. Game audio is dynamic, and assets are cut up and implemented individually into the engine. In this sense, mastering game audio does not produce audio in its final format, the game engine does. Because of this, the mastering process is really meant to prepare the individual assets for interactive mixing. The goal at this stage is then to ensure a cohesive and balanced soundscape once implemented. EQ, compression, and limiting are common tools used to maintain continuity in terms of frequency and volume between all assets. Reverb is sometimes applied to assets to add a sense of “space” to each sound.

During this phase of asset preparation, the audio designer must think about the full mix including music, dialogue, sound effects, Foley, ambiences, UI sounds, etc., and get a sense of the frequency spectrum the assets will occupy. The upper mid-range, roughly 2 kHz to 5 kHz, is where our ears are most sensitive, and it usually holds the core elements of a soundscape. You may have been introduced to the Fletcher-Munson curves when you first started working in audio. These equal-loudness curves are a good representation of where our ears are most and least sensitive. The midrange is also the part of the frequency spectrum that is most consistent across different speaker types. It’s important to ensure that the assimilation of all assets into the soundscape won’t leave any holes (or buildups) within the 20 Hz to 20 kHz range of human hearing.

The mastering process can also prepare the assets for the lossy compression we mentioned above. This process of encoding attempts to remove frequency content undetectable to the human ear through psychoacoustic modeling. If certain groups of sounds need to be compressed (e.g. low-frequency effects), you can improve the audio quality of the compressed sound by applying high-pass or low-pass filters to remove portions of the frequency spectrum preemptively. This then allows the lossy encoding process to dedicate more space and bandwidth to the frequency ranges you, the audio designer, have already identified as important. Different lossy compression encoders, schemes, and formats will provide very different results. It can be helpful to experiment with compression formats beforehand, and evaluate their effect on various categories of sound. A certain amount of trial and error should be expected, and (as always) listen critically to your work to assess whether it suits the needs of the game.

It’s important to note that the mastering stage often carries into the dynamic mixing stage as you test and adjust the audio systems integrated with the game engine. We will cover dynamic mixing in more depth later in this chapter.

Exporting Assets

An important part of bouncing down the assets and preparing them for implementation is ensuring the audio is loud enough so it will not need to have gain applied in the audio engine. Our DAWs are built to provide good gain-staging and signal flow, so doing this work in our specialized audio tool (the DAW) will result in the best-sounding audio in game. Working with a range of reference volumes for each asset type is a helpful way to ensure you have enough audio resolution to work with in the final mix. If you must boost the volume in the audio engine beyond 0 dB, you risk introducing artifacts and system noise. There is only so much gain that can be boosted on an asset that was bounced out of a DAW at a very low volume.

When delivering assets to be integrated by the developer (asset cannon approach), you may want to control the loudness by exporting assets at specific levels for different categories of sound. Here are some rough reference volumes to use as a starting point when you are delivering assets to be integrated by the developer:

- Dialog -6 dBFS

- SFX -6/-9 dBFS

- Music and ambience -20 dBFS

- Foley -18 dBFS

If the audio designer is in charge of implementing natively into the engine or middleware, typically all of the assets can be exported from the DAW around -3 to -5 dBFS. If the project has an audio director, she may offer her preference on the matter. Some might require setting up a limiter with a ceiling of -1 dBTP (decibels true peak). The idea here is to ensure you have plenty of audio resolution for fine-tuning volume levels in the audio engine.

Use these signal references as a starting point and adapt them to each project’s unique needs.
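For readers who like to see the arithmetic, the sketch below converts between decibels and linear amplitude and works out how much gain an asset needs to reach its category reference. It treats the reference volumes as peak levels and uses -6 dBFS for SFX, both of which are assumptions made for illustration; the category keys and function names are hypothetical.

```python
import math

def db_to_linear(db):
    """Convert a level in decibels to a linear amplitude ratio."""
    return 10.0 ** (db / 20.0)

def linear_to_db(amplitude):
    """Convert a linear amplitude ratio (greater than zero) to decibels."""
    return 20.0 * math.log10(amplitude)

# Rough reference levels from the list above, interpreted here as peak dBFS.
REFERENCE_PEAK_DBFS = {
    "dialog": -6.0,
    "sfx": -6.0,
    "music_ambience": -20.0,
    "foley": -18.0,
}

def gain_to_reach(current_peak_dbfs, category):
    """Gain in dB needed to move an asset's measured peak to its category reference."""
    return REFERENCE_PEAK_DBFS[category] - current_peak_dbfs
```

For example, a dialog file peaking at -12 dBFS would need 6 dB of gain in the DAW to reach the -6 dBFS reference, which corresponds to roughly doubling its amplitude.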

Effects Processing

Next we can explore effects processing. The decision to bake in effects processing or to apply real-time effects in the audio engine will influence how the final assets are rendered for implementation. Generally speaking, effects processing in engine can be very CPU intensive. Therefore, baking effects into the audio asset is helpful when you are working with memory- and CPU-limited platforms like mobile. Baking in effects also works for the asset cannon implementation method since you may not have control over defining the effects settings in engine. The negative side of this method is, of course, the effects not adapting to the player’s location. If you apply reverb on footsteps they will sound with the same reverb indoors or outdoors, which doesn’t really make sense. Later in this chapter we will discuss in more detail resource management and optimization, which will allow you as the audio designer to make more educated decisions in regard to planning effects processing.

File Format and Compression

Now that we have signal level and effects processing covered, let’s talk about file format.

Audio implementation takes into consideration not only what sound events will trigger and when, but also looks at the complete audio storage footprint to ensure that the sounds will fit in their allotted memory. In the past, the limitation might have been the size of the delivery format (CD/DVD/Blu-ray). In today’s world we must also consider the size of the install package, or the DLC download target size. This forces us to use file compression (not to be confused with a compressor, the audio processing tool), which reduces the file size of our audio assets. When the target platform requires us to compress our audio we try to intelligently target different compression approaches for sound categories. For instance it may be acceptable to compress a folder of footstep sounds far more than the background soundtrack.

Most modern game engines can utilize a variety of audio file types and formats. In many instances a decision will need to be made about whether the application itself can handle all audio at its highest resolution. Different hardware platforms (console vs. PC vs. mobile, etc.) may run natively in a particular sample rate and bit depth, or even with an expected audio file format.

Let’s explore the different ways we might handle file formats for two different delivery methods. If you are working within the “asset cannon” scenario, you may want to handle file compression ahead of time. If this is the case, ask that the developer do no further compression of the audio in the game engine. The file format would then be determined ahead of time by the programmer based on the target platform and memory allocated for audio. If you are also in charge of implementation, the files should be exported from your DAW at the highest possible quality as the audio engine will handle conversion settings which can be defined per target platform. We recommend 48 kHz, 24 bit .wav files.

File Naming Standards

File naming is an important part of the asset preparation process. Naming conventions set rules for the expected character sequence of audio file names. This is important for a few reasons, the first of which has to do with being organized when working with team members. The second, and more critically important, has to do with how the assets are embedded into and called from game engine components or scripts. Operating systems, programming languages, and programmers have their own rules and best practices for file naming. If you aren’t well versed in these it’s a good idea to discuss any file-naming specifics with the programmer prior to delivery. This will save you and your development team a lot of back and forth to communicate what is what and where it should be placed when delivering a folder of audio assets.

In the Sound Lab (companion site) we discuss file-naming practices in more detail. Be sure to check it out when you can. For now, we leave you with some of Damian Kastbauer’s thoughts on file-naming standards.

Visiting Artist: Damian Kastbauer, Technical Sound Designer

Thoughts on File-Naming Standards

The opportunity to understand the direct connection of an event-based audio system and game engine begins by leveraging a consistent naming standard. Drawing this line between the input and output is the clearest way to understand their relationship. Building systems with a naming standard in place allows for the flowing of text-based strings from the game to be received by the audio engine. These audio events then arrive loaded with context about what system it came from, what it might represent, and where to find the place it originated from.

This can be as simple as adding a prefix to define the system of origin, for example:

play_vfx_ = the name of the gameplay system originating the audio event (prefix)

explosion_barrel_fire_17* = the name of the object requesting the audio event

Audio event: play_vfx_explosion_barrel_fire_17

This can be further extended to include available actions specific to a system (in this case, as a suffix):

play_vfx_explosion_barrel_fire_17_start

play_vfx_explosion_barrel_fire_17_stop

*Leveraging other disciplines’ naming standards can also help lead you to the asset where an audio event has been authored. If you have the name of the object built into your audio event name, it can easily be used to search within the game engine.

Whether you choose to concatenate (build) different aspects of the event name dynamically or establish the rules up front for use by programmers, the effort of establishing a naming standard is rewarded with a clearer understanding of a sound’s origin during debug. When you find yourself digging through an audio event log trying to understand what, where, and why a sound is playing, the ability to parse the event name and begin your search with a map leading right to the sound is a tremendous way to navigate the project and solve problems.

In the world of abstractions at the core of game development, anything that can be done to build clarity into a pipeline, which can be easily understood, is of value to the process.
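The naming standard described above can be sketched in a few lines of code. This is only an illustration: the helper names `build_event_name` and `parse_event_name`, the set of known systems, and the set of actions are assumptions made for the example, not part of any particular engine or middleware.

```python
KNOWN_SYSTEMS = {"vfx"}            # hypothetical gameplay systems
KNOWN_ACTIONS = {"start", "stop"}  # hypothetical action suffixes


def build_event_name(system, obj, action=None):
    """Concatenate prefix, system, object name, and optional action suffix."""
    parts = ["play", system, obj]
    if action:
        parts.append(action)
    return "_".join(parts)


def parse_event_name(event):
    """Split an event name back into system, object, and action."""
    tokens = event.split("_")
    if len(tokens) < 3 or tokens[0] != "play" or tokens[1] not in KNOWN_SYSTEMS:
        raise ValueError(f"event does not follow the naming standard: {event}")
    action = tokens.pop() if tokens[-1] in KNOWN_ACTIONS else None
    obj = "_".join(tokens[2:])
    if not obj:
        raise ValueError(f"event has no object name: {event}")
    return {"system": tokens[1], "object": obj, "action": action}
```

For example, parsing `play_vfx_explosion_barrel_fire_17_stop` from an event log yields the system (`vfx`), the object name to search for in the game engine (`explosion_barrel_fire_17`), and the action (`stop`).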

Asset Delivery Methods

Delivery for the asset cannon method is often via a secured shared drive on a cloud-based storage site. An asset list in the form of a spreadsheet should be used to keep track of the status of assets, provide notes for integration, and collect feedback from the developer.

When importing assets natively into the game engine (or when using audio middleware) the process usually involves source control as a delivery method. We will discuss source control in more detail later in this chapter, but for now let’s define it as a server or cloud-based system that hosts source files. In our case the files would consist of game assets and project sessions shared by everyone on the team. It offers control over versioning, like a backup for all of the changes made to the project. It’s important to note that sound banks generated from middleware can be shared across cloud-based storage as an alternative for smaller teams that do not wish to put source control in place. Whether through source control or a drive share, the process allows for sharing sound banks without the programmer needing to have a copy of the middleware.

Source Control

Source control (also known as revision or version control) is an important part of the game development workflow, but is not often discussed. Working with teams means that multiple people need a way to collaborate on a project. This method will need to allow for merging new assets, making updates, and making changes to a shared repository – a central location where data is stored.

Whether you are working on an indie or AAA game, you may be given source control credentials and left wondering what that means. Each development team might have their own preference for source control, so you should familiarize yourself with software like Git, SVN, Mercurial, and Perforce. Git is used quite often among indie teams and there are quite a few “Getting Started” guides and video tutorials on the internet.3

Whenever you have more than one person editing and interacting with assets, you will need a safeguard to avoid changes that might break the game. Source control offers merging of assets and rolling back or reverting to a previous version of an asset, since it keeps a version history. It sounds pretty straightforward, right? Well, it is and it isn’t. For some it can be tricky checking out a repository and creating a local copy of it. The workflow can be a bit daunting as the local repository will consist of trees. The trees are simply objects that create a hierarchy of the files in the repository. You will need to get comfortable working with a directory, checking out files, as well as adding and committing them. We highly recommend starting with exploring Git and the corresponding tutorials.4 Source control is something you have to jump in and use to become comfortable in the workflow.

QA before Asset Implementation/Delivery

Prior to delivering or implementing audio assets, we prefer to audition assets bounced from the DAW in a two-track editor (Adobe Audition, Audacity, etc.). Keep in mind this isn’t a workflow everyone chooses, but we find it useful to ensure our assets are ready for implementation. While introducing another piece of software into the workflow does add an extra step, two-track editors are destructive, which allows any last-minute editing of the file to be easily saved to disk and quickly shared for delivery. The two-track editor outside of the DAW offers a quick look at the head and tail of the assets to ensure the fades are at the zero crossing. Loops can also be checked for seamless playback and levels can be reviewed. Sometimes listening to the asset outside of the DAW environment can reveal issues like tiny pops and clicks, or issues with reverb tails.

This is also a great time to close your eyes and assess the relative levels across similar groups of sounds. By playing all of your swoosh variations for example, you may start to hear the slight volume differences that will make one audio file stand out in game – and not in a good way! Auditioning in the two-track editor lets you rebalance the volume of any sounds that are poking out more than the others. It also allows you to make the final determination of a sound that isn’t quite working with the others, and should not be brought into the game at all. This can also be done visually using loudness meters as part of your mastering process.
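Some of these checks can be roughed out in code as a complement to listening. The sketch below assumes each asset has already been decoded into a list of floating-point samples between -1.0 and 1.0. The function names, the threshold, and the 1.5 dB tolerance are illustrative assumptions, and a simple RMS measurement is only a stand-in for a proper loudness meter.

```python
import math
from statistics import median


def starts_and_ends_near_zero(samples, threshold=0.001):
    """True if the first and last samples sit close to the zero crossing."""
    return abs(samples[0]) <= threshold and abs(samples[-1]) <= threshold


def rms_db(samples):
    """RMS level in dB relative to full scale."""
    mean_square = sum(s * s for s in samples) / len(samples)
    if mean_square == 0:
        return float("-inf")
    return 10 * math.log10(mean_square)


def level_outliers(assets, tolerance_db=1.5):
    """Names of assets whose RMS level strays from the group's median."""
    levels = {name: rms_db(samples) for name, samples in assets.items()}
    reference = median(levels.values())
    return sorted(
        name for name, level in levels.items()
        if abs(level - reference) > tolerance_db
    )
```

A flagged file is not necessarily wrong. It is just a prompt to listen again before the asset goes into the game.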

Game Engines

Game engines manage numerous tasks to render and run the virtual world of every video game. At any given time while an engine is running it will be handling 2D or 3D graphics, animations, physics, shading, rendering, artificial intelligence, streaming, visual effects, interactive logic, memory management, and audio. The game engine tracks the location of media assets (including music, sound effects, and voice-over assets) as well as when (and when not) to call events. Even in a simple game, this can add up to a large amount of data that needs to be coordinated. In a more complex game the scope can extend into hundreds or even thousands of assets. To render the game the engine might also handle porting of the game to multiple platforms.

There are many game engines available for use and, by extension, there are many plugins and tools to use with them. This means you will find a good amount of variety in the workflow for each project. You might find yourself familiar with some of the more widely mentioned game engines like Unity or Unreal, but there are a variety of other engines like Corona, GameMaker Studio, CryEngine, and Amazon Lumberyard being used by game developers all over the world. Refer to the “List of Game Engines” Wikipedia page5 for a more detailed list. Some developers decide to design and build their own proprietary engines, but third-party software provides programmers with the necessary tools to build games more efficiently.

These engines make use of various programming, scripting, and markup languages like C#, C++, Python, XML, JavaScript, and Lua. As you can see it helps to be flexible and open to learning new tech when working in game audio. This doesn’t mean you will need to learn all of these engines and languages, but keeping abreast of the latest trends in the industry and familiarizing yourself with the most utilized tools will help you position yourself as an artisan in the field.

In this chapter we will focus on the Unity engine and its nomenclature as we examine the process of implementing audio natively. At times we will mention similar features in Unreal to demonstrate adaptability between tools. We will also demonstrate the power of audio middleware (specifically Wwise and FMOD) by showing how it reduces the time programmers need to spend on the scripting side and gives the audio designer much more control over sonic events.

Regardless of the engine used for development, there are common techniques and workflows across the discipline. In Table 8.1 we will break down these common elements, which are an important part of game audio implementation and can be applied to projects of varied configurations.

Table 8.1 Outline of the terminology differences between Unity and Unreal.

| UNITY | UNREAL |
|---|---|
| Project Browser | Content Browser |
| Scene | Viewport |
| Hierarchy | World Outliner |
| Inspector | Details Panel |
| GameObject | Actor / Pawn |

The Engine Editor

The game engine is defined as the software application that provides the necessary tools and features to allow users to build games quickly and efficiently. The game engine editor is a creative hub visualized as a graphical interface with customizable layouts of windows and views. These layouts are used by designers and programmers to piece together elements of the game.

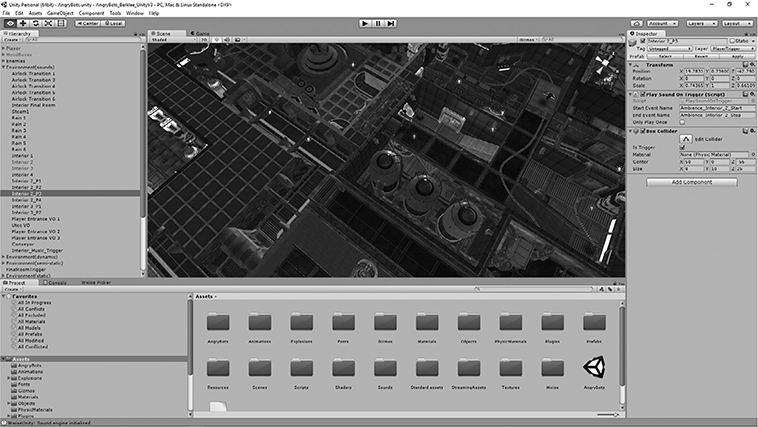

Figure 8.2 Screenshot of the Unity engine editor.

The Sound Lab

A typical Unity engine layout contains a Hierarchy, Project, Console, Scene, Inspector, Audio Mixer, and a Game window. They all work in conjunction with each other in this creative hub. In the Sound Lab we will break down how they are linked. There are also some important components integrated into the engine to help the user define and provide audio functionality within the game. We also discuss the views and components as well as provide a walkthrough of the Unity engine. When you are finished, come back and move on to 2D versus 3D events. If you skip past this companion site study you may not fully understand the information to come.


2D and 3D Events

In linear media the final mix contains volume attenuations that are “burned” in. This means a sound coming from an object or person far away from the camera will sound softer and more distant, and objects closer to the camera will sound louder and closer. This is typically accomplished during the post-production stage. In interactive media instead of “burning in” those automations, we make use of 2D and 3D events. These events define the attenuation and panning of sound in real time to approximate distance and spatial information relative to the audio listener. The audio listener is essentially a virtual surrogate for our ears in the game environment. It is almost always hooked into the game engine via the camera so that what the player is seeing matches what the player is hearing.

A 2D (or two-dimensional) event is “unaware” of the three-dimensional, 360-degree environment. 2D events don’t have a specific location as a source, so they don’t adapt spatially as the player moves about the game environment. In a sense, 2D events are non-local – the only way they can adapt is to pan left or right, not forward, backward, up, or down. Instead they play through the listener’s headphones or speakers just as if they were playing out of a DAW. This makes them effective for audio that is intended to surround the player at all times. Common uses for 2D events are background music and environmental ambiences.

3D (three-dimensional) events do include spatial information. They set the volume attenuation and pan based on the source distance and direction from the audio listener. In other words, 3D events are localized. 3D events are great for specific sound effects where location is important to the gameplay. However, they can also be implemented with a wide spread so the sound source simulates a stereo image when the player or camera is within a certain radius of the emitter. We will explore uses for hybrid techniques in the “Ambient Zones and Sound Emitters” section later in the chapter.

A simple way to look at 2D and 3D events is by thinking of diegetic and non-diegetic sounds. Underscore is non-diegetic. Players can’t interact with the sound source because there is no source! So 2D events work well for that scenario. However, if a sound is diegetic, then the player will likely be able to interact with its source. In this case we can guide the player to the location of an interactable object (like a radio that can be picked up and added to your inventory) by setting the event to 3D, thereby allowing it to adapt to distance and direction. There are many more ways to use 2D and 3D events, but these examples are the most typical.

Spatial Settings

The audio engine will need a spatial setting in order for the audio to behave as described above. In Unity the Audio Source (as explained in “The Engine Editor” section on the companion site) offers a Spatial Blend function which allows the user to define 2D, 3D, or a blend of the two. The blend would be used in specific situations where the spatialization of the sound needs to change based on the perspective. When a player character (PC) is driving a vehicle in game, music might be a 2D event to fill the space of the vehicle’s interior. When the PC exits the vehicle the sound will behave as a 3D event so it can be spatially located, emanating from the vehicle. When a pure 3D spatial blend is selected there are a few options to control the behavior of the sound’s playback. We will discuss those options a bit later in the chapter.

Doppler level is another important setting within a 2D or 3D event. The Doppler level will raise the pitch of an audio source as the audio listener approaches the object, and then lower the pitch as it moves away from the object. This emulates the way we hear sound coming from moving objects (or as we are moving) in the real world. Keep in mind that when the value is set to zero the effect is disabled. You may want the option to disable the effect when dealing with music as underscore is most often thought of as non-diegetic.
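The pitch change described here follows the classic Doppler relationship, which can be sketched as below. Treating the Doppler level as a multiplier on the velocities is an assumption made for illustration. It is a common way to exaggerate or reduce the effect, but each engine may scale it differently. The function name and parameters are our own.

```python
SPEED_OF_SOUND = 343.0  # meters per second in air at roughly 20 degrees Celsius


def doppler_pitch(listener_closing_speed, source_closing_speed, doppler_level=1.0):
    """Pitch multiplier heard by the listener.

    Speeds are in meters per second and are positive when that object is
    moving toward the other, negative when moving away.
    """
    if doppler_level <= 0.0:
        return 1.0  # a level of zero disables the effect
    v_listener = listener_closing_speed * doppler_level
    # Keep the source below the speed of sound to avoid dividing by zero.
    v_source = min(source_closing_speed * doppler_level, 0.99 * SPEED_OF_SOUND)
    return max(0.0, (SPEED_OF_SOUND + v_listener) / (SPEED_OF_SOUND - v_source))
```

A multiplier above 1.0 means the sound is heard higher in pitch (approaching), and below 1.0 means lower (receding). With the level at zero the function always returns 1.0, which matches the disabled behavior you would want for underscore.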

Typically 2D event assets will be imported as stereo or multi-channel files, while 3D event assets are imported as mono files. The mono assets will sound more consistent and localized when positioned in the 3D space. Multi-channel sounds and sounds that need to cover a wide stereo field can be utilized as a 3D asset, but you will have to use Spread Control (as explained in “The Engine Editor” section on the companion site) to separate the channels in virtual 3D space. If done properly, the perceived width of the sound will diminish as the source moves further away from the audio listener, just as it does in the real world. The Spread will allow you to mimic the effect on multi-channel sound.

The Volume Rolloff function controls the attenuation as the listener moves toward or away from the audio source. The volume curve offers logarithmic, linear, and user-defined custom slope settings. Minimum and maximum distance settings offer further control over how loud a sound is over a given distance. The minimum distance is the range within which the sound plays at its loudest, so a lower minimum means the listener must be very close to the object to hear it at full volume. The values are in meters, so a minimum of one means that when the audio listener is within one meter of the object the sound plays at the full level of the imported file, subject to the Audio Source volume and mixer level settings. The maximum distance value determines the distance at which the sound will stop attenuating. If the slope falls off to zero the sound will become inaudible.
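To see how the minimum and maximum distances shape attenuation, here is a minimal sketch of two rolloff curves. The inverse-distance formula is a common approximation of a logarithmic rolloff and is an assumption here, not a statement of Unity’s exact internal curve. The function names and default distances are illustrative.

```python
def _clamp(value, low, high):
    return max(low, min(value, high))


def logarithmic_rolloff(distance, min_distance=1.0, max_distance=500.0):
    """Inverse-distance gain: full volume inside min_distance, and no
    further attenuation beyond max_distance."""
    d = _clamp(distance, min_distance, max_distance)
    return min_distance / d


def linear_rolloff(distance, min_distance=1.0, max_distance=500.0):
    """Straight-line gain from 1.0 at min_distance to 0.0 at max_distance."""
    d = _clamp(distance, min_distance, max_distance)
    return 1.0 - (d - min_distance) / (max_distance - min_distance)
```

Note that with the inverse curve the gain never quite reaches zero at the maximum distance, while the linear curve does. This is why a sound on a logarithmic curve can remain faintly audible far from its source unless the curve is customized.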

To determine the best settings we need an understanding of how sound behaves in the real world. Lower frequencies travel further because higher frequencies lose their energy faster. In the real world it’s difficult to discern the difference at shorter ranges unless there is high-density matter absorbing the sound waves. This behavior dictates an inverse curve, which is exactly what the logarithmic setting in Unity offers. Most often this will be the most natural sounding option. The linear roll-off attenuates a bit too quickly. Adding a low-pass filter curve to a logarithmic roll-off that attenuates (or reduces) the high-end frequencies as the volume fades out most closely simulates real-world sound. However, there will be times the natural-sounding curve will not work best in game. As always, the definitive answer for which curve to use will come from listening yourself and deciding on the one that best fits the game. If you aren’t happy with the logarithmic results, the curve can be further defined and saved as a custom value.

As you can see, there is much more that goes into implementing a sound than simply choosing the asset and dropping it in the audio engine. Be sure to always test in game to ensure the 3D settings are accurate, and that they add immersion to the environment.

Audio Middleware

The GameSoundCon yearly survey6 polls audio middleware usage between AAA, indie, and pro casual game developers. It’s a great reference for keeping up with the latest implementation trends. It also includes custom and proprietary engines as well as native engine implementation in the poll for a well-rounded snapshot of how audio is being integrated into games. Currently, Wwise and FMOD are the two most popular middleware options for both sound and music. Elias is focused on music integration, but can be easily integrated with Wwise or FMOD to combine a sound design and music solution. It isn’t hard to find numerous games developed with these tools.

The Sound Lab

In the Sound Lab, Table 8.2 compares middleware implementation tasks broken out into FMOD and Wwise terminology.


The choice of audio engine for a project is usually determined by the game developer or programmer, but a knowledgeable audio designer can help sway this choice. Larger developers with an audio director might rely on their recommendation, or they might decide a custom and proprietary engine is best for the project.

There are many reasons a developer might prefer native engine integration to audio middleware. These reasons include the additional licensing costs for using middleware or a programmer’s concern for handling bugs or errors they may be unfamiliar with. As an advocate for working with audio middleware, you should be familiar with licensing costs and the indie budget-friendly options. You should also be well versed in the software and able to assist the programmer with any issues that may arise. Middleware developers do offer support and there are plenty of forums on the internet to provide assistance.

The point of middleware is to offer the sound designer or composer (who may not be familiar with programming) more control over the final in-game mix. It also works to offload the detailed and time-consuming process of audio implementation to the audio designer as opposed to the programmer. Having this control over the implementation process will make your game sound better, period. Middleware allows you to bypass the asset cannon and native engine implementation approaches, and get right into the business of triggering and mixing adaptive audio.

Here are a few other advantages to using audio middleware.

- You have better control over the audio footprint with file compression.

- Dynamic music systems are much easier to configure and implement.

- Debugging and profiling allows for quick ways to uncover issues and resolve them.

- It allows for easier testing for revision and iteration.

- You have control over the final mix.

- You can easily add variety to sound events.

- Flexible licensing makes middleware accessible for smaller teams.

The Sound Lab

In the Sound Lab, Table 8.3 looks at some advantages of using middleware over the native audio function in most game engines.


FMOD and Wwise have similar functions, but their graphical user interfaces look a bit different, and naming conventions for certain modules and functions vary. We will also have a look at some middleware tasks and how they are referred to in each application.

Integration Packages

As mentioned earlier, by default the game engine and the audio middleware won’t automatically connect and speak to each other. The middleware software developers provide an integration package, which needs to be installed into the game engine. Because the integration process connects two different software packages, the packages are specific to individual software versions. If you update either the game engine software or the middleware version you should reintegrate the package. For this reason, it is best to hold off on upgrades to your tools until you are finished with the game if at all possible. Once the integration package is in place there are several components that can be used in the game engine without any additional scripting. The audio designer will have instant access to add an audio listener, load banks, trigger game state switches, and place audio event emitters in a scene.

The integration package also provides a few classes or libraries of pre-programmed functionality, which can be used with minimal code. As you use these integration packages you will appreciate things like calling banks, events, triggers, and real-time control parameters all without needing any support from the programmer. An API (Application Programming Interface) offers the game’s programmer deeper control, while the provided stand-alone app offers the audio designer a graphical hub in which to import sound assets, configure them for playback in game, and test the audio systems.

Middleware Events

The audio functions we discussed in the Sound Lab earlier in this chapter can be applied to middleware functions and modules. Events in middleware are similar to the Audio Source component in the game engine. The Audio Source acts as a container that holds information for the audio to be triggered in game. Middleware events act similarly in that they hold the audio assets and the data that determines how and when those assets are triggered in game. Additionally, middleware events contain more advanced functionality like scatter sounds, RTPC (real-time parameter control), and nested events, which allow the user to organize large and complex events into one or more master events. We will discuss this further on the companion site.

Firelight Technologies’ FMOD allows the user to determine if an event will be 2D or 3D upon creation. When a 3D event is selected a spatializer panner will be available on the master track. In Wwise an audio object can be defined as 2D or 3D in the Position Editor. Once the user has a solid understanding of the functions of audio implementation that knowledge can be applied when acclimating to different tools.

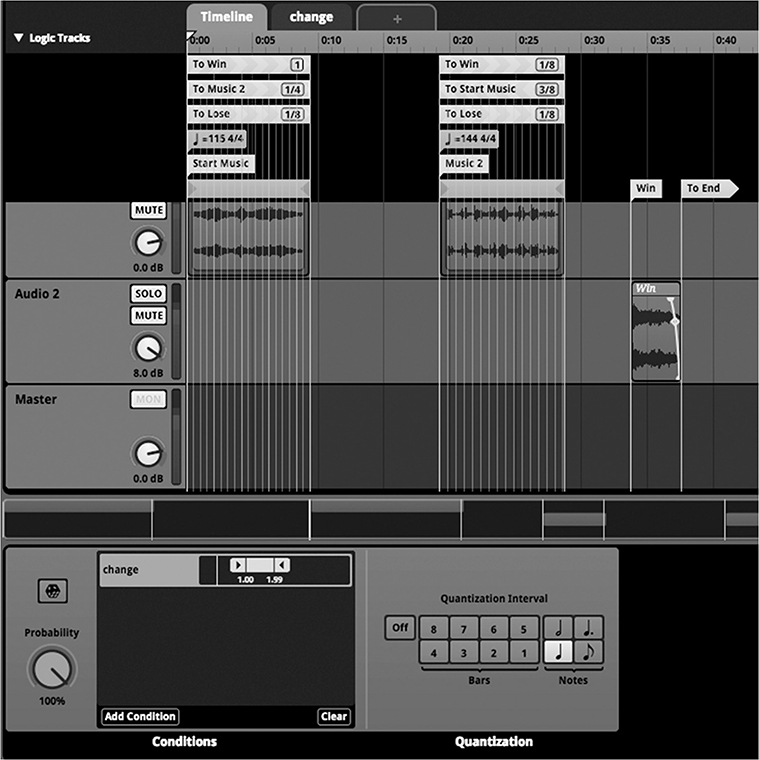

Using middleware, audio designers can easily configure tempo sync transitions, set loop points, create randomization and delays, and apply real-time effects to events. Programs like Wwise and FMOD accomplish this by taking game data (values that originate from the game code) and synchronizing it to various audio events. The audio events are made up of all kinds of audio assets and automation stored within containers (a broad term used to describe the instruments and modules that can be created with middleware) and triggered in real time. Each middleware program has a different method of synchronizing with game data. FMOD uses parameters, tabs with a pre-set value range that mirrors the game data. Wwise uses game syncs, which function similarly, but without the visual tab that FMOD offers. Native integration into a game engine requires programmer support to create these elements. Additionally, the mixer system allows for grouping events into buses that allow the use of sends and returns. Snapshots can provide pre-configured audio commands which will be applied to specific actions in game. These audio configurations will act as a dynamic mixing board, adapting the mix on the fly. Figure 8.3 demonstrates the flow of data from game engine to middleware.

Figure 8.3 The flow of data from the game engine into middleware and back into the game engine.
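The idea of a parameter that mirrors game data and drives automation can be sketched generically. The code below does not use the Wwise or FMOD APIs. The `Parameter` class, the `automation` function, and the health-to-heartbeat mapping are all hypothetical and exist only to show the flow from a game value to an audio property.

```python
from bisect import bisect_right


class Parameter:
    """A named value with a pre-set range that mirrors a piece of game data."""

    def __init__(self, name, minimum, maximum):
        self.name = name
        self.minimum = minimum
        self.maximum = maximum
        self.value = minimum

    def set(self, value):
        # Game data outside the range is clamped, never rejected.
        self.value = max(self.minimum, min(value, self.maximum))
        return self.value


def automation(points, x):
    """Piecewise-linear automation curve through sorted (x, y) points."""
    xs = [p[0] for p in points]
    if x <= xs[0]:
        return points[0][1]
    if x >= xs[-1]:
        return points[-1][1]
    i = bisect_right(xs, x)
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


# Hypothetical mapping: a heartbeat loop gets louder as player health drops.
HEARTBEAT_VOLUME = [(0, 1.0), (30, 0.5), (100, 0.0)]

health = Parameter("player_health", 0, 100)
heartbeat_gain = automation(HEARTBEAT_VOLUME, health.set(15))
```

In middleware the curve would be drawn in the graphical interface by the audio designer, and the programmer would only need to send the health value to the parameter.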

Integrated Profiling

A profiler is a tool that provides detailed information about how a game (or in our case a game’s audio) is performing. This tool can uncover various problems or resource management issues and provide some information on how to fix them. Later in the chapter in the “Testing, Debugging and QA” section we discuss profiling as a quality-control and bug-fixing tool. Here, we will explore using profilers for resource management and other tools to audition a project’s events and snapshots in a game-like context prior to connecting to a running instance of the game.

In addition to a profiler, the Wwise Soundcaster and FMOD Sandbox features provide exactly this kind of functionality. These tools provide the audio designer with a way to configure various game state changes without the need to connect to the game. By being certain that the state changes and triggers are working correctly before updating a project into the main development branch, it is far easier for the audio designer to ensure the cohesion of the mix without interrupting workflow for the rest of the team.

Once the designer has created and configured events to her liking, sound banks can be generated based on the target platform. This will create a package from which the game engine can interpret all the necessary audio functions and trigger them appropriately in game.

Software development is never simple. During these tests, issues with implementation often occur. Any small discrepancy such as a mislabeled event can cause an expected sound to improperly trigger. This is where the profiler comes in. A profiler offers a connection to the game through the game engine editor or stand-alone build of the game. A profiler lets the audio designer track the communications between the game and the middleware to verify what data is being sent between the two programs. This is a crucial step toward optimizing your game’s audio and uncovering bugs or issues with implementation. Later in the chapter we will further explore resource management and optimization.

The Sound Lab

Before moving on, head over to the Sound Lab for tutorials on Wwise and FMOD. When you are finished there come back here to explore individual components and features of middleware that help improve the final in-game mix. If you skip this companion site study, you will not fully understand the information to come.


Achieving Variation within Limits

Since we have limited resources per development platform, it isn’t possible to have 24 hours of streaming music and infinitely varied sound effects for every possible action in the game.7 This means we have to be creative in how we generate audio content. To account for longer play times, we can create continuous loops of music and ambience. We can also string together shorter clips, triggered randomly, that fit together to make a longer more generative loop. This offers a bit more variety. Adding short linear sounds (typically referred to as one-shot sounds) on top of our continuous loops can help further improve the variation. This can be done via random playlists, which we discussed at the beginning of the chapter. For example, a playlist of gunshot sounds may contain four to six one-shot assets. If the same gunshot sound were to be triggered ten times in a row, it would sound synthetic, and the player would lose her sense of immersion. The random playlist instead chooses an arbitrary gunshot sound from the playlist. Every time the player chooses to fire the weapon in game a different sound triggers, adding variety and maintaining a sense of immersion. Designers can also set containers to randomize the pitch, volume, pan, and delay time for the sound to trigger, and multiply the output so that it sounds varied and scattered around 3D space (like simulating a forest of birds chirping). Random settings on pitch and volume should not be overly exaggerated. Similar sounding assets will produce an organic experience, but sound that is too heavily randomized will feel confusing to the player. A small 2 dB fluctuation in volume and a few cents in pitch are enough to provide the subtle randomization necessary to avoid listener fatigue and add generative detail.
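A random playlist with subtle pitch and volume variation can be sketched as follows. Avoiding an immediate repeat of the previous asset is an assumption we have added, since it is a common option in middleware containers. The class and function names, the asset names, and the default ranges are illustrative.

```python
import random


class RandomPlaylist:
    """Pick a random asset, never the same one twice in a row."""

    def __init__(self, assets, rng=None):
        if len(assets) < 2:
            raise ValueError("a random playlist needs at least two assets")
        self.assets = list(assets)
        self.rng = rng if rng is not None else random.Random()
        self.last = None

    def next(self):
        choices = [a for a in self.assets if a != self.last]
        self.last = self.rng.choice(choices)
        return self.last


def randomized_playback(asset, rng, volume_range_db=2.0, pitch_range_cents=10.0):
    """Playback settings with a small random offset in volume and pitch.

    Volume varies by up to half of volume_range_db either way, and pitch
    by up to pitch_range_cents either way.
    """
    db = rng.uniform(-volume_range_db / 2, volume_range_db / 2)
    cents = rng.uniform(-pitch_range_cents, pitch_range_cents)
    return {
        "asset": asset,
        "gain": 10 ** (db / 20),       # decibels to linear gain
        "pitch": 2 ** (cents / 1200),  # cents to playback-rate multiplier
    }
```

Each time the weapon fires, the game would call `next()` on the playlist and pass the result through `randomized_playback`, so that no two shots in a row are identical.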

Randomization can and should be applied to a variety of sounds in game, but some thought should be put into what kinds of sound need randomization in addition to how much randomization should be applied. Some things will sound consistent with volume and pitch randomizations, others will sound chaotic. For example, UI button presses in game with random pitch or volume applied won’t sound consistent to the player and therefore will not relay the intended effect. It will actually be confusing to the player. Ambient bird chirps however are great candidates for subtle pitch and volume randomization.

In the Sound Lab (companion site) we will provide a video tutorial on randomization. You can visit the site now or come back to it later in the chapter. We will be sure to remind you.

Ambient Zones and Sound Emitters

To create an immersive soundscape in a game scene we start with an ambient zone (see Figure 8.4). An ambient zone is a defined area within a game scene to which designated components, such as audio event emitters, can be added to trigger background sounds that match the environment. Instantiating an ambient zone creates a looping background event which triggers over that defined area. To bring the space to life we can then introduce positional sounds by placing sound emitters throughout the scene. These emitters add detail and specificity. When combined with the looping ambience, these events define the sonic setting for a given scene.

Figure 8.4 An ambient zone defined by a spherical game object in a Unity scene set to trigger an audio event when the player enters the area.

Let’s take a look at a scenario in an FPS (first-person shooter). We will need to start with an ambient zone. We’ll add a simple ambient loop of a deserted city. However, our battlefield won’t sound very realistic with just a static ambience looping in the background. By adding positional sound emitters scattered around the environment we can provide a heightened sense of realism. For example, our battlefield might need some randomized debris to crumble now and then, or the shouts and cries of unseen NPCs still fleeing the city. By placing emitters on game objects we can add those sonic details to the scene. When the player moves through the environment and into the radius of the emitters, she will hear the soundscape change and adapt.

One important function of emitters is to approximate sounds relative to distance. As the audio listener (if you recall, the audio listener is almost always attached to the camera, which we are controlling in an FPS) moves closer to a distant emitter, the mix responds appropriately. As we previously mentioned, as the source moves closer, the volume of the sound event must increase to mimic the way we hear in real life. Additionally, a filter can be applied to re-introduce the sound’s higher frequencies as the listener approaches the source, which adds more detail and realism. Back in our FPS example, the player will see an NPC shouting in the distance and, as she moves closer, she will hear the shouts grow louder and the low-pass filter (LPF) will open up to include higher frequencies. On top of that, the left–right spatialization (and in some cases even up–down) will automatically adapt to the angle of the audio listener. She will perceive all of these as cohesive and realistic details in the soundscape.
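As a rough sketch of the distance behavior described above, the function below maps listener-to-emitter distance to a gain and a low-pass cutoff. Everything here is an assumption made for illustration: the name `emitter_mix`, the linear volume rolloff, the logarithmic cutoff sweep, and the default distances and frequencies. Real engines and middleware expose their own attenuation curves.

```python
def emitter_mix(distance, min_distance=1.0, max_distance=50.0,
                far_cutoff_hz=800.0, near_cutoff_hz=20000.0):
    """Return (gain, lowpass_cutoff_hz) for a listener at `distance` meters.

    Hypothetical curve: full volume and a fully open filter at min_distance,
    silence and a heavily filtered sound at max_distance.
    """
    # Clamp the distance into the emitter's attenuation range.
    d = max(min_distance, min(distance, max_distance))
    # t is 0.0 when the listener is closest and 1.0 when farthest.
    t = (d - min_distance) / (max_distance - min_distance)
    gain = 1.0 - t  # linear rolloff (an assumption; many tools use other curves)
    # Sweep the cutoff on a logarithmic scale so the change sounds even.
    cutoff_hz = near_cutoff_hz * (far_cutoff_hz / near_cutoff_hz) ** t
    return gain, cutoff_hz


far_gain, far_cutoff = emitter_mix(40.0)   # distant NPC: quiet and dull
near_gain, near_cutoff = emitter_mix(3.0)  # nearby NPC: loud and bright
```

Left-right spatialization is omitted; it would be driven by the angle between the listener's facing direction and the emitter rather than by distance.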

It’s important to note that sound emitters don’t necessarily need to be hooked directly to a visual object in the game scene. The audio designer can place sound event emitters in a scene to create positional random sound triggers within an ambient zone. This approach is great when a game object is not visible to the player, but exists as an element regardless (e.g. wind, bird chirps, crickets). These randomized triggers add detail to the soundscape without cluttering the visuals.

The ambient zone/emitter system can be implemented into the game in a few ways. One way is to tie the ambience and emitters to a single event. One middleware event can handle multiple audio objects, so we can set an ambient zone in the game engine that triggers a single event in the middleware program. In the event we might combine one or more looped assets and layer them with multiple one-shot sounds. Spatial settings can then be applied to each asset as well. The static loop would be a 2D event, covering the full area of the ambient zone, while the one-shot sounds would be set to scatter around 3D space. The second method is to place one or more looped assets into one event and create a separate event for the sound emitters. Just like the first method we can define the spatial settings so the static loop is 2D and the emitters trigger 3D events. The difference here is that the ambient zone will trigger the static loop event over a defined region, while the positional emitters will be independently placed and separately triggered around the game scene. This method is a bit more work, but it offers more flexibility with the emitters.

A third option for a responsive soundscape involves a more dynamic ambient system in which multiple loops and emitters trigger sounds according to the game state. This kind of dynamic system can adapt to game context, and can be controlled by real-time parameters (see the section below). A good example of this is Infinity Ward’s Call of Duty 4 (COD4). The team used a dynamic ambient system which streams four-channel ambiences and switches between them based on the amount of action happening in the scene.8 This system helps to create a detailed soundscape that evolves as the battle intensifies or vice versa.

Some games take advantage of quad ambiences for ambient zones, which make up two or more sets of stereo environments of the same recordings. This can provide a denser sonic background in game. When using quad ambiences it’s best to utilize captured audio that doesn’t have much in the way of easily identified sonic elements (bird chirps, tonal artifacts, etc.). This helps avoid the player picking up on a small detail in the loop as it repeats over and over.

Reverb Zones

Reverb can make a notable difference in how realistic a scene sounds and how well the scene conveys a sense of space. Reverb zones, just like ambient zones, are defined regions to which reverb settings can be applied. This offers the audio designer a way to create specific reverb effects on sounds triggered within this area.

As we mentioned previously, smaller mobile game projects may require the audio designer to bake the reverb into the sound effect before importing into the engine. The problem with this is lack of adaptability to the player’s location. When resources are available the best way to create space in a location is by setting up reverb regions that will trigger different reverb presets in predetermined locations with predefined effect settings.

Reverb utilization in games has evolved, and is still evolving, as CPU and memory limitations ease. Now, more games can benefit from realistic-sounding ambient spaces. Games are already using ray tracing and convolution reverb to simulate truer reflections in a space. As we push forward we can look at more advanced ways to create believable aural spaces. Auralization9 is the process of simulating sound propagation through the use of physics and graphical data. This is another example of a more advanced technique for implementing reverb zones.

To conclude our thoughts on reverb zones, let’s discuss applying reverb to the zones. Presets are a great starting point, but reverb settings should be tweaked to fit the scene. Pre-delay and reverb tail can make a huge difference in how the scene sounds. Pre-delay is the amount of time between the dry sound and the audible early reflections. Adjusting the pre-delay can add more clarity to the mix by opening up space around the initial sound. It can also change the perceived room size without changing the decay time, which helps avoid a washed-out mix from too much reverb relative to the dry signal.

Looped Events

Middleware offers the audio designer an easy way to define the start and end points of a looped asset by setting a loop region on the container. When the event is triggered, the loop will continue to play back that region until the event is stopped. The audio designer can specify a particular number of times to complete the loop, or set it to infinite for continuous looping.

Loops are an important part of game audio for assets like music and ambiences, but there are a variety of other sounds that benefit from looping. An automatic weapon fire sound would usually consist of a start, a loop, and a stop. These modules (sometimes called sound containers) could be single files, playlists, or separate events entirely. The start sound would contain the initial fire and the stop sound would contain the last bullet fire and tail. The loop would contain a stream of the weapon’s consecutive bursts, and a loop region would wrap around the entire duration. All three modules triggered in synchrony will allow the player to fire the weapon (thus triggering the start module into the loop) and hear a continuous barrage of bullets until the player ceases firing and the stop module is triggered, ending the event.
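The start, loop, and stop structure of the automatic weapon can be pictured as a small state machine. The sketch below is purely illustrative: `AutoWeaponEvent` and its method names are hypothetical, and the `played` list stands in for actual playback calls.

```python
class AutoWeaponEvent:
    """Hypothetical start/loop/stop event for an automatic weapon."""

    def __init__(self):
        self.state = "idle"
        self.played = []  # record of modules triggered, in order

    def trigger_pressed(self):
        # Pressing the trigger plays the start module, which leads into the loop.
        if self.state == "idle":
            self.played.append("start")
            self.played.append("loop")
            self.state = "looping"

    def trigger_released(self):
        # Releasing the trigger leaves the loop and plays the stop module (tail).
        if self.state == "looping":
            self.played.append("stop")
            self.state = "idle"


weapon = AutoWeaponEvent()
weapon.trigger_pressed()   # start module, then the loop region repeats
weapon.trigger_released()  # stop module ends the event
```

Guarding each transition on the current state keeps a repeated press from restarting the start module while the loop is already running.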

Variation and contrast in a looped event can help avoid listener fatigue. Avoiding specific tonal elements can also prevent the listener from picking out the loop point. Using a playlist of one-shot sounds in lieu of a static loop can add even more variation. In this case the user can define the random playlist so it doesn’t play the same asset twice in a row. If we move back to our automatic weapon example, the loop module would then be replaced by a playlist of short single-fire sounds. The loop region would remain an essential element of the event.

Looped events can be stopped and started based on ambient zone triggers. This is useful in managing resources because looped sounds will not continue to play in the background if the audio listener is not inside the defined ambient zone. Imagine that our PC is approaching a forest. As the audio listener moves closer to the forest and into the ambient zone, the forest ambience event will trigger and loop. The problem with this scenario is that the event sound starts from the beginning of the loop each time. If the player decides to run to and from the forest six to ten times in a row that loop start might become aggravating. By using a random seek variable, a different point in the loop will randomly be chosen to initiate the playback every time the event is re-triggered. This can also be a great solution for when two positional sound emitters in close proximity are triggering the same looped event. Phasing issues can be avoided if the files start their playback from different points in the loop.
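A minimal sketch of a zone-triggered loop with a random seek might look like the following. It assumes a spherical zone, and the names `AmbientZoneLoop`, `update`, and `start_offset_s` are hypothetical rather than taken from any engine or middleware.

```python
import math
import random


class AmbientZoneLoop:
    """Hypothetical looped ambience that plays only while the listener is
    inside a spherical zone, starting from a random point on each entry."""

    def __init__(self, center, radius, loop_length_s, rng=None):
        self.center = center
        self.radius = radius
        self.loop_length_s = loop_length_s
        self.rng = rng if rng is not None else random.Random()
        self.playing = False
        self.start_offset_s = None

    def update(self, listener_pos):
        inside = math.dist(listener_pos, self.center) <= self.radius
        if inside and not self.playing:
            # Random seek: start somewhere in [0, loop_length_s) rather than at 0.
            self.start_offset_s = self.rng.random() * self.loop_length_s
            self.playing = True
        elif not inside and self.playing:
            # Stop the loop so it does not use resources outside the zone.
            self.playing = False
            self.start_offset_s = None
        return self.playing


forest = AmbientZoneLoop(center=(0.0, 0.0, 0.0), radius=25.0, loop_length_s=60.0)
forest.update((40.0, 0.0, 0.0))  # outside the zone: nothing plays
forest.update((10.0, 0.0, 0.0))  # inside: loop starts at a random offset
```

Giving two nearby emitters their own instances means each draws its own offset, which is the property that helps avoid the phasing issue mentioned above.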

Real-Time Parameter Control

Earlier in this chapter we briefly defined real-time parameter control (RTPC) as the ability to control specific properties of audio events based on real-time game data. Here we will take a microscopic look into some examples of RTPC usage. To put it concisely, RTPC is a method of taking values from the game engine, and using them to automate volume, panning, effects, or really any other sonic properties found within a middleware event. The only difference between automation in a DAW and automation in middleware is that your DAW is locked into a fixed timeline – the SMPTE code. The playhead only moves forward as time passes. In middleware we can use any in-game value that we want to move the playhead forward, and we can have multiple playheads that correspond to multiple parameters, each independent of one another. In the following sections we’ll dig into what these values are and where they come from, and then we’ll share some examples of RTPC usage.

Game engines manage a large amount of information when the game is in run-time. At any time an engine can pass values that define the game state. These could include information on player location, time of day, velocity of an object, player health, vehicle RPM, and more. Pretty much any action or change in the game state can provide data to which the audio can adapt. For example, when 3D events attenuate and position sound, what is actually happening is that the game engine is tracking the distance (typically in meters) from the audio listener to all objects in the scene. These distance values are then passed from the engine to the banks generated by your middleware program. There they can be linked to parameters in FMOD (or game syncs in Wwise), allowing the volume and panning to adapt in real time, as needed. Game data isn’t limited to distance. Information can include number of enemies in range, time of day, or mission completion.

Another of the many applications of RTPC is to adapt footstep playlists to different types of terrain. The programmer may pass the values for the terrain types to you, or you can do it yourself using the profiler. Either way, these terrain values must be linked to a parameter (let’s call it “terrain,” although you can technically name it whatever you like) via a short scripting process. Usually this part is done by the audio programmer, but it’s important to communicate your ideas first so you can synchronize event and parameter names and ensure appropriate values are being called. Once this is accomplished you can use the parameter values to trigger the corresponding footstep sounds in an event. Random adjustments to the parameters for pitch and volume can also be applied to the footstep playlists. Additionally you can add layers of cloth or armor movement to the event and position the modules with a slight delay. The delay will prevent the footfall and cloth from playing at the same time and sounding too robotic. The delay setting can also be randomized so it plays back with a slightly different delay time for every trigger.
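To make the terrain example concrete, here is a small sketch of a “terrain” parameter selecting a footstep playlist and adding a cloth layer with a randomized delay. The terrain values, asset names, delay range, and the function `footstep_trigger` are all assumptions chosen for illustration; in practice the mapping lives inside the middleware event and the value arrives from the game engine.

```python
import random

# Hypothetical mapping from the "terrain" parameter value to a footstep playlist.
TERRAIN_PLAYLISTS = {
    0: ["step_grass_01", "step_grass_02", "step_grass_03"],
    1: ["step_gravel_01", "step_gravel_02", "step_gravel_03"],
    2: ["step_wood_01", "step_wood_02", "step_wood_03"],
}


def footstep_trigger(terrain_value, rng, min_delay_s=0.02, max_delay_s=0.08):
    """Build one footstep trigger: a random footfall for the current terrain
    plus a cloth layer offset by a small randomized delay."""
    footstep = rng.choice(TERRAIN_PLAYLISTS[terrain_value])
    # Delaying the cloth layer keeps it from landing exactly on the footfall.
    cloth_delay_s = rng.uniform(min_delay_s, max_delay_s)
    return {"footstep": footstep, "cloth": "cloth_move", "cloth_delay_s": cloth_delay_s}


rng = random.Random()
trigger = footstep_trigger(terrain_value=1, rng=rng)  # player is walking on gravel
```

Agreeing on the parameter name and its integer values with the programmer up front is what keeps a table like `TERRAIN_PLAYLISTS` in sync with what the engine actually sends.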

You can also use RTPC to adapt music and sound design based on the player character’s health. Lower health values on a parameter can be used to automate a low-pass filter (LPF), which can then affect all sounds in game. When the health parameter falls below a predetermined threshold, the LPF will kick in. This will give the player a feeling of losing control. It will also force the player to focus on the game mechanics as opposed to more superficial sound effects. Alternatively, low health parameters can also be used to add layers. In this case, instead of using a parameter to automate the cutoff of an LPF down, try using it to automate up a layer of music when health falls below a certain value. This can increase the drama of a battle scene or boss fight.
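Both health-driven automations can be expressed as a simple mapping from the health parameter to a filter cutoff and a music layer level. The sketch below is one possible curve, not a prescribed one: the function name `health_to_mix`, the threshold of 30, and the cutoff range are hypothetical values.

```python
def health_to_mix(health, threshold=30.0, open_cutoff_hz=20000.0, low_cutoff_hz=500.0):
    """Map a 0-100 health parameter to (lowpass_cutoff_hz, music_layer_gain).

    Above the threshold nothing changes. Below it, the cutoff sweeps down and
    the extra music layer fades up as health approaches zero.
    """
    health = max(0.0, min(health, 100.0))
    if health >= threshold:
        return open_cutoff_hz, 0.0
    # t runs from 0.0 at the threshold to 1.0 at zero health.
    t = (threshold - health) / threshold
    # Logarithmic sweep so the filter movement sounds even across the range.
    cutoff_hz = open_cutoff_hz * (low_cutoff_hz / open_cutoff_hz) ** t
    layer_gain = t  # linear fade-in of the added music layer
    return cutoff_hz, layer_gain


cutoff_hz, layer_gain = health_to_mix(12.0)  # badly wounded: darker mix, layer rising
```

In a real project you would likely use one of the two outputs rather than both, depending on whether the design calls for filtering the mix or adding a layer.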

The automations we’ve mentioned are drawn in with curves on the parameter window. Just like volume curves in a DAW, the curve can be defined so it rolls off smoothly. Sometimes the parameter value changes quickly or unevenly in game. This could cause the audio to follow along abruptly, making the transitions feel rough. In FMOD, a seek speed setting will allow you to set the speed at which the value is chased as it moves across the timeline. Small details such as this will help the audio adapt smoothly to game states.
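The idea of chasing a parameter value at a limited speed can be sketched as follows. This is not FMOD's API or its internal implementation; `chase` is a hypothetical helper that shows the general technique of limiting how far a smoothed value may move per update.

```python
import math


def chase(current, target, seek_speed, dt):
    """Move `current` toward `target` by at most seek_speed * dt.

    seek_speed is in parameter units per second; dt is the time since the
    last update in seconds.
    """
    max_step = seek_speed * dt
    delta = target - current
    if abs(delta) <= max_step:
        return target  # close enough to land exactly on the target
    return current + math.copysign(max_step, delta)


# Health drops instantly from 100 to 20 in game, but the audio parameter
# follows at 10 units per second, updated every half second.
smoothed = 100.0
for _ in range(4):
    smoothed = chase(smoothed, 20.0, seek_speed=10.0, dt=0.5)
```

After the four updates in the usage example the smoothed value has only moved from 100 to 80, so the filter or layer it drives changes gradually instead of jumping.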