5

The Entropy of Systems

5.1. System entropy: general considerations

5.1.1. Introduction

This chapter raises the issues of sustainability, reversibility, diversity and perpetuation of organisms and systems over time. We discuss several application areas related to automated processes in a company. More precisely, we discuss some mechanisms, principles and concepts related to entropy and transpose them to different areas such as:

- basic information used in any process;

- reasoning and solution determination in decision-making;

- hardware, manufactured products, software, services and applications, and more generally, information systems (IS);

- evolution and impact of changes in industrial systems, organizations, economic or administrative structures.

This chapter is thus intended to define some concepts related to entropy and sustainability, and then to better understand how to design a best-of-breed production system, why we use a particular approach to designing and developing decision support systems (DSS) for the management and control of complex systems, and how they can be improved and enhanced over time.

5.1.2. Information and its underlying role in message and decision significance

Information is a basic concept widely used in every system: IS, DSS, planning/scheduling, etc. Its main principles, formalized in information theory and associated with information technologies, were first developed in the telecommunication industry. There was initially a pressing need related to the coding, processing and transmission of messages through an electromagnetic signal carried by physical cables or wireless media.

Claude Shannon, an engineer with Bell Telephone Laboratories, defined a mathematical approach in the 1940s that is still today the basis of the “scientific” information concept. His approach was first established to optimize telecommunication systems and message encryption.

Information theory does not rely on the elaboration or on the physical and intrinsic properties of raw material or energy. It takes into account the notion of message processing itself, the message being seen as a set of bits. As an example, the information contained in a message consisting of one letter, repeated many times, such as “aaaaaaaaa…”, is almost zero: it can be compressed. Similarly, this theory does not cover the cognitive content of a given message sent to somebody; hence, there are some difficulties in understanding the significance of some messages such as:

- The two sentences “Fido is a dog” and “the sales rate of gold is climbing” contain more information than “Fido has four legs” or “the rate of gold is an economic indicator”. In both pairs of sentences, the second formulation contains a higher number of letters (that is to say, more “data”) than the first one, yet it carries less information. It is important to consider that raw data are not information: they contain less information or implicit knowledge in a given context. Here, semantics plays a key role in the interpretation of the facts: it is not the length of a raw message (set of data) but the couple (message and context) that carries information. This corroborates the fact that a set of varied data contains some information: it is only slightly compressible, and it first needs to be interpreted and integrated in context before any processing.

- In decision theory, it is assumed that information is a set of organized data that reduces the uncertainty of a situation, likely to cause, modify or affect decisions. This explains why we tend to collect and store lots of data but, finally, few of them bear significant meaning and are usefully exploited; in a changing context, they would only be considered as noise. Here, we are simply introducing the notion of “low-noise” data.

- On the Web, an estimate is that over 50 billion web pages are stored in servers; additionally, our electronic mail files look almost saturated. Under these conditions, the huge databases containing our excessive individual information may mask useful low noises and prevent suitable decision-making.
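The remark above that a message such as “aaaaaaaaa…” carries almost no information because it can be compressed can be checked with a short experiment. The sketch below is only an illustration: the helper name `compressed_ratio` and the choice of the `zlib` compressor are our assumptions, not part of the original discussion, and a general-purpose compressor gives only a rough upper bound on information content.

```python
import os
import zlib


def compressed_ratio(data: bytes) -> float:
    """Return compressed size / original size (smaller means more redundant)."""
    return len(zlib.compress(data, 9)) / len(data)


# One letter repeated many times: highly redundant, almost no information.
repetitive = b"a" * 10_000
# Random bytes stand in for "varied" data: essentially incompressible.
varied = os.urandom(10_000)

print(f"repetitive message: ratio = {compressed_ratio(repetitive):.4f}")
print(f"varied message:     ratio = {compressed_ratio(varied):.4f}")
```

The repetitive message shrinks to a tiny fraction of its size, whereas the varied one hardly shrinks at all. Note that this says nothing about meaning: as stressed above, the compressor, like the theory, ignores semantics and context.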

On another level, nobody is protected from a particular deviation known as asymmetric information. To explain this fact, we can consider a problem in economy or industry where the decision-maker possesses information about the problem to be addressed.

This information, however, is often different from that held by the counterpart:

- The after-sales service department of a car manufacturer knows precisely the reliability of its vehicles, their strengths and weaknesses, which is not the case for the customer who has found a problem with his vehicle: negotiations about a claim are thus distorted.

- In searching for investors to develop or save a company, or in an LBO, the CEO knows exactly the financial situation, business potential and strength of his enterprise, much better than the shareholders he will seek.

- Planning and scheduling: computer-aided techniques (CAPPs) enable us to elaborate a precise production program at each stage of a manufacturing system; the workforce is organized accordingly, while the production manager already knows that customer disturbances will shake up the established orders in a specific way.

It is, therefore, obvious that the presence of asymmetric information relates directly to the professional ethics of the leaders involved and leads to a distortion of the decisions to be taken, sometimes called “anti-decisions”. Indeed, in a decision-making system, such as in risk management, it is possible to reach decisions or results opposite to those desired. Such a process is said to be of the “principal-agent” type. In this situation, the problem of adverse, or reverse, selection (made by the principal agent) is mainly based on the uncertainty about the information available to the other agent (opponent or partner): his level of knowledge or ignorance in a given context is a key factor in game theory and sometimes corresponds to a moral hazard situation.

5.1.3. Consequences

Information technologies are now integrated into the life and work habits of each of us: it is an immersion into a new world. They first enabled the automation of our processes; they also changed our behaviors and living conditions. When using a mobile internet device (MID), we do not perceive how many innovative technologies it embeds: for instance, GPS for localization, quantum physics in electronics, encryption, etc. New sensors and data processors also make use of advanced electronic devices based on atomic physics, or of artificial neural networks (ANNs), to restore distorted information embedded in noise or interference; they also use very complicated mathematical algorithms, signal theory, and so on, for signal or source separation, information processing and coding, etc.

This paradigm change is considered normal, and people do not realize what kind of a leap forward was made by scientific progress and human beings.

Similarly, we already mentioned the Internet. We are living with the Web; within the Web, there are more than 40,000 servers and several tens of billions of pages of data stored in many international networks. Continuously, the notion of information is called into question because there are a multitude of sources of data: some of them can be false, biased, contradictory, incomplete, interpreted or presented in such a way that people tend to be influenced, manipulated and forced to make bad decisions.

It is, therefore, vital to ensure the relevance and consistency of the information, and then to organize information channels so that the available information is adequately processed and distributed to the right people. To achieve this objective, the information must be factual, clear, precise and concise in order to minimize subjective interpretations and avoid distortion during transmission and information exchanges. As we can understand, there is a link between this requirement and entropy generation. Such facts have been known for a long time; nevertheless, with new technologies and the loss of written language control, these basic principles need to be recalled and adapted to new types of communication.

This problem of information (associated with its notions of consistency, uncertainty, symmetry, etc.) naturally leads us to introduce the concept of entropy, which is currently a useful indicator for assessing the importance and evolution of decisional information, and also the sustainability of a DSS. This is essential for the development of organizational and decision-making strategies in a company, without going on to argue that “the one who holds the information also holds the power”. We could also write that “the one who controls the information controls the system” [MAS 10]; here again, entropy will play a key role.

5.1.3.1. Entropy: a reminder about historic and basic considerations

In the 1850s and 1860s, German physicist Rudolf Clausius described entropy as transformation-content, i.e. dissipative energy or heat use, of a thermodynamic system or working body of a chemical or living species during a change of state [WIK 15a].

Entropy is a thermodynamic property that can be used to determine the energy available for useful work in a process, such as in energy conversion devices, engines or machines. Such devices can only be driven by convertible energy, and have a theoretical maximum efficiency when converting energy into work. During this work, entropy accumulates in the system, but has to be removed by dissipation in the form of waste heat.

In classical thermodynamics, the concept of entropy is phenomenologically defined by the second law of thermodynamics, which states that the entropy of an isolated system always increases or remains constant. Thus, entropy is also a measure of the tendency of a process, such as a chemical reaction, to be entropically favored, or to proceed in a particular direction.

Entropy determines that thermal energy always flows spontaneously from regions of higher temperature to regions of lower temperature, in the form of heat. These processes reduce the state of order of the initial systems, and therefore entropy is an expression of disorder or randomness.

This example is the basis of the modern microscopic interpretation of entropy in statistical mechanics. Here, we define entropy as the amount of information needed to specify the exact physical state of a system, given its thermodynamic specification. The second law is then a consequence of this definition and the fundamental postulate of statistical mechanics.

Thermodynamic entropy has the dimension of energy divided by temperature, and a unit of Joules per Kelvin (J/K) in the International System of Units.
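To make the statement about heat flow and the J/K unit concrete, the following sketch computes the total entropy change when a quantity of heat leaves a hot body and enters a cold one. It assumes the classical Clausius relation (entropy change equals heat divided by absolute temperature) and two reservoirs large enough for their temperatures to stay constant; the function name and the numerical values are purely illustrative.

```python
def entropy_change_of_heat_transfer(q_joules: float,
                                    t_hot_kelvin: float,
                                    t_cold_kelvin: float) -> float:
    """Total entropy change (J/K) when heat q flows from t_hot to t_cold.

    Assumption: both bodies are reservoirs whose temperatures do not change.
    The hot reservoir loses q / t_hot, the cold one gains q / t_cold.
    """
    if q_joules < 0 or t_hot_kelvin <= 0 or t_cold_kelvin <= 0:
        raise ValueError("heat must be >= 0 and temperatures > 0 K")
    return q_joules / t_cold_kelvin - q_joules / t_hot_kelvin


# 1000 J flowing spontaneously from 400 K to 300 K: total entropy increases.
print(entropy_change_of_heat_transfer(1000.0, 400.0, 300.0))
# The reverse direction would give a negative total, which the second law forbids
# for an isolated system.
print(entropy_change_of_heat_transfer(1000.0, 300.0, 400.0))
```

The sign of the result is what matters here: it is positive exactly when heat goes from the higher to the lower temperature, which is the direction described above.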

5.2. The issue and context of entropy within the framework of this book

What we understand today, in fact, is that the world is not a linear, near equilibrium system like a gas in a box, but is instead nonlinear and far-from equilibrium, and that neither the second law nor the world itself is reducible to a stochastic collision function. As the next section outlines, rather than being infinitely improbable, we can now see that spontaneous ordering is the expected consequence of physical law.

As everybody knows, entropy changes lead to a progressive disorganization of the physical world, while evolutionary changes produce progressively higher organization. For instance, autonomous systems have to constantly optimize their behavior involving the combination of nonlinear and dynamic processes. Thus, self-organization allows dynamic self-configuration (adaptation to changing conditions by changing their own configuration permitting additional/removal of resources on fly and without service disruption), self-optimization (self-tuning in a proactive way to respond to environmental stimuli) and self-healing (capacity to diagnose deviations from normal conditions and take proactive actions to normalize them and avoid service disruptions).

The consequence is, if the world selects those above dynamics that minimize potentials at the fastest rate enabled by its constraints, and if an ordered flow is more efficient at reducing potentials than a disordered flow, then the world will select order whenever it gets this opportunity. The world is in an “order production” business because ordered flow produces entropy faster than a disordered flow: this means nature, and the world as a consequence, can be expected to produce as much order as it can. Autocatakinetic systems are self-amplifying sinks that by pulling potentials or resources into their own self-production extend the space-time dimensions and thus the dissipative surfaces of the fields (system and environment) from which they emerge and thereby increase the dissipative rate.

It is not a surprise: there are lots of examples around us which can be quoted. For instance, agriculture (e.g. in wine-making) is facing the issue. Sustainable agriculture is aimed at creating more wealth and employment per unit of output, on a fairer trade basis while being more environmentally friendly. These principles are based on the fact that natural resources are finite and must be used wisely to ensure lasting economic profitability or social well-being, with regard to the ecological balance: the three actual pillars of a sustainable development.

For a long time, agriculture was a human activity able to generate more wealth than it consumed (production is based on photosynthesis due to solar radiation, which is free). Life can thus evolve and develop, following a basic rule of our earthly evolution. Thus, “natural” and moderate agriculture could increase the availability of healthy food, develop new varieties of plants, extend the humus layer and thus soil fertility, provide positive social networking in the communities, etc.

Today, modern urban practices and the agriculture industry are living on credit: they produce much more diversified information and services while consuming more resources (fossil energy, destruction of soils by human beings, etc.) than they physically produce or than generated by nature. The issue is about the excessive selection of plants, invasion of specific living species, standardization of specific practices and environments, etc. What about the balance sheet in terms of entropy and global sustainability? Nobody knows. And who is winning, human beings alone or nature?

5.3. Entropy: definitions and main principles – from physics to Shannon

5.3.1. Entropy: introduction and principles

Initially, in thermodynamics, when considering a physical system, entropy means a heat exchange phenomenon that homogenizes the temperature, or the dissipation of energy into heat. In nature, entropy is all that inexorably is “elapsing, wearing and breaking”, that is to say all that is related to the degradation or loss of information, leading to death; in short, all that is related to the irreversibility of time. However, to get to death, we first need life, thus creating and accumulating some order (or information, as soon as an IS is involved).

Under the second principle of thermodynamics, the entropy of an isolated system increases with time. In the example of the Boltzmann gas enclosure, energy is always confined in the box, but it is less and less concentrated and usable. Only the difference in energy level (Δ temperature) is available: this difference weakens over time, as we return to an equilibrium, or an average, after the dissipation or mixing of the gas. This leads to a maximum disorder level: for each collision, we do not store and remember the nature of the interaction and the trace of the different tracks that would enable us to go back to the initial condition; this is considered as a loss of information. We can lose some energy, but also information, that is, differences in the distribution of information, heat or particles; finally, what we lose is the stability of a given order as the number of possible states increases. In some cases, the heat generated during such a process can rise (in terms of energy dissipation in a system, entropy is defined as “the total amount of added heat divided by the temperature”), etc.

However, the concept related to a given order is always a relative one. In a decision-making process or physical system elaborated by a human being: this notion is subjective, contextual and is dependent on the perception we have about the evolution of a system under study or the time scale considered. For example, returning to the Boltzmann experiment:

- for some people, an order is obtained when the gas enclosed in a sealed box is evenly distributed and the pressure on the walls of the enclosure (which is the result of the residual impacts of molecules on the walls) is stabilized;

- for others, the gas inside the enclosure continues to move and circulate due to convection, microturbulences, etc. The complete set of all the various configurations obtained over time is constantly growing in number and reflects a continuous increase in disorder.

By convention, the flow of time, or the increase in entropy, is of course related to damage, destruction and diffusion/homogenization of an orderly identity, but it also corresponds to the creation of unlikely events, or the generation of potential differentiation and diversifications; it also reflects the complexity of a given system, the gradual evolution of more elaborate structures, the building of structured networks of networks, phenomena of organizational evolution, etc. over time.

In the case of a nonlinear dynamic system (NLDS), for instance, a minimum level of information (such as a minimum set of energy at a right place, and at the right time) can lead to a bifurcation (or a catastrophe) and make the entire system converge toward an unpredictable attractor; this may possess a much lower or stronger potential energy level (e.g. a non-significant information, or a low noise, may lead to critical information or a critical event).
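The idea that a negligible piece of information (a “low noise”) can drive a nonlinear dynamic system to a completely different outcome can be illustrated numerically. The sketch below uses the logistic map, a standard textbook NLDS that we choose here only as an assumption for illustration; it is not the system discussed in this chapter, and the parameter values are arbitrary.

```python
from typing import List


def logistic_trajectory(r: float, x0: float, steps: int) -> List[float]:
    """Iterate x -> r * x * (1 - x), starting from x0, for a number of steps."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def max_gap(r: float, x0: float, eps: float, steps: int = 100) -> float:
    """Largest distance between two runs whose initial states differ by eps."""
    a = logistic_trajectory(r, x0, steps)
    b = logistic_trajectory(r, x0 + eps, steps)
    return max(abs(u - v) for u, v in zip(a, b))


tiny_noise = 1e-9
# Regular regime (r = 2.5): both runs settle on the same fixed point.
print("r = 2.5:", max_gap(2.5, 0.2, tiny_noise))
# Chaotic regime (r = 4.0): the same tiny perturbation grows to a macroscopic gap.
print("r = 4.0:", max_gap(4.0, 0.2, tiny_noise))
```

In the regular regime the perturbation stays negligible, whereas in the chaotic regime it is amplified until the two trajectories bear no resemblance to each other, which is the qualitative behavior described above.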

Similarly, when a self-organization phenomenon appears in a system after a bifurcation or a break, this indicates that an order emerges from a deterministic chaos: the system will converge to a limited number of possible states and the entropy will decrease. On the other hand, when additional knowledge is created from an original order, or when innovative opportunities, products or services are generated in a system, its entropy increases.

5.3.2. A comment

Again, as in all the previous examples, the measurement of time is relative, but above all … fractal. Indeed, there is scale invariance: the time scale is tied to the scale of the phenomenon it accompanies. At the level of an atom, we reason in terms of “nanoseconds”; at the creation of matter (gravitational fluctuations and, below them, quantum fluctuations), we are in the range of $10^{-20}$ down to $10^{-43}$ s. Conversely, at large scales (e.g. the cosmological level), we speak of light years for galaxies and of billions of light years for clusters of galaxies, etc. Here, the variation of entropy generation in a system (and hence its sustainability) will differ accordingly, since the dissipation volumes depend on the cubic power of a distance.

5.4. Some application fields with consequences

To provide a suitable measurement technique associated with the aforementioned context, two application fields will be covered:

- entropy in the telecommunication field;

- entropy in the DSS.

Given what is stated above, all the concepts used in our evolving systems are increasingly complex (due to interactions and virtualization of the organizations); they are multidimensional, subjective, etc.; they have a fractal and relativistic structure, and are subject to uncertainties, etc. This is a fairly general fact: the systems themselves are becoming more complex and diversified, difficult to control and heavy, that is to say energy consuming and dissipating. Since sustainability is a key factor to ensure the survival and development of our species, it is appropriate to measure this growth in complexity and this sustainability.

5.4.1. Entropy in the telecommunications systems

In an attempt to provide a suitable measurement technique associated with some indicators, in the telecommunication field, we will use “entropy”. Its definition, hereafter given, concerns the notion of “amount of relevant information contained in a given message”. It is widely used in communication theory or computer science, and is closely related to the so-called entropy as defined by Shannon’s works. It measures the average amount of information contained in a set of events (especially messages) and its uncertainty level, as well. This entropy is denoted by “H”.

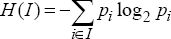

Let us consider N different, or successive events, whose probability is p1, p2 … pN, Let us consider that these events are independent of each other. Thus, Shannon’s entropy is expressed by:

where “I” represents the set of events.

There is a direct relationship between an entropy increase and the information gain. There is thus a parallel between this notion and the Boltzmann entropy in thermodynamics (through the second principle).
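As a minimal sketch of how H can be computed in practice, the function below assumes the standard form of Shannon’s entropy, i.e. minus the sum of each probability times its base-2 logarithm, expressed in bits. The function name and the sample distributions are ours and serve only as an illustration.

```python
import math
from typing import Sequence


def shannon_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in bits: the sum over events of -p * log2(p).

    Events with probability 0 contribute nothing, by the usual convention.
    """
    if any(p < 0 for p in probs) or not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must be non-negative and sum to 1")
    return sum(-p * math.log2(p) for p in probs if p > 0)


# Four equally likely events: maximum uncertainty for N = 4.
print(shannon_entropy([0.25, 0.25, 0.25, 0.25]))
# One certain event: no uncertainty, hence no information gained on reception.
print(shannon_entropy([1.0, 0.0, 0.0, 0.0]))
# An unbalanced distribution lies in between.
print(shannon_entropy([0.7, 0.1, 0.1, 0.1]))
```

The measure depends only on the probabilities of the events, never on what the events mean, which is precisely the limitation discussed next.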

As we have already stated, this entropy has a number of limitations:

- it excludes the notion of semantics;

- it does not take into account the meaning of a message;

- it is limited to the scope of a messenger whose function is to transfer an object.

Information theory, according to Shannon, is always relative to a data set, or a specific character string, characterized by a peculiar statistical distribution law. This string, therefore, gives an average “information content”, making it a probabilistic theory; it is particularly well suited to the context of message passing based on the transmission of a sequence of characters or data. Thus, we may have an idea that can qualitatively “claim” a given volume of information even if we cannot precisely quantify the deep informational content of an individual string or that of a data set stored in a network.

Finally, Shannon’s approach is quite similar to the Boltzmann’s approach when defining an entropy: “instead of probabilities about the presence of molecules in a given state, it is, more generally, question of probabilities of presence of knowledge significance in a given location of a message”. Both this significance and associated message are only analyzed through probabilities, and their meaning is never taken into account.

5.4.2. Entropy in decision-making (for DSS applications)

The adequacy and sustainability of a decision-making process can be evaluated by means of an information entropy-based approach. Indeed, let us consider a set of experiments or decisions taken by an agent called D-Ag, in a given production process, for a given period of time. The information gained can be defined in terms of a measure, from an information theory point of view, i.e. information entropy. This measure can be used to indicate how certain a decision agent (D-Ag) is about the truth value of some concept, approach or solution.

For example, let us consider the set of experiments where D-Ag is associated with an information need denoted by “h”:

- If D-Ag has completely satisfied its information need, it is certain either that h is (now) true or that ¬h is (now) true; in both cases, the entropy is equal to 0.

- If D-Ag does not have a clue about the truth value of h, the entropy is equal to 1: in this case, the agent’s experience does not provide any indication of the truth value of h, i.e. h appears to be randomly true half of the time.

Here, the decision information entropy is formalized as follows:

- Given an information need h, and a set of time points Δ (or domain information), the entropy related to the attribute h is expressed by:

$$\mathrm{Entropy}(\Delta) = -P \log_2 P - N \log_2 N$$

where P is the proportion of time points in Δ where h is true, and N is the proportion of time points in Δ where h is not true.
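The two limiting cases described above (entropy 0 when the agent is certain, entropy 1 when h is true half of the time) can be verified with a few lines of code. This is a sketch only: representing Δ as a list of Booleans, one per time point, is our assumption about how the observations might be stored.

```python
import math
from typing import Sequence


def decision_entropy(truth_values: Sequence[bool]) -> float:
    """Entropy(Δ) = -P log2 P - N log2 N over the observed time points.

    truth_values[i] is True when h holds at time point i (assumed encoding).
    """
    if not truth_values:
        raise ValueError("need at least one time point")
    p = sum(truth_values) / len(truth_values)  # proportion where h is true
    n = 1.0 - p                                # proportion where h is not true
    # A zero proportion contributes nothing, by the usual convention.
    return sum(-q * math.log2(q) for q in (p, n) if q > 0)


print(decision_entropy([True] * 10))                 # agent is certain that h is true
print(decision_entropy([False] * 10))                # agent is certain that h is false
print(decision_entropy([True] * 5 + [False] * 5))    # no clue: h true half of the time
print(decision_entropy([True] * 8 + [False] * 2))    # intermediate case
```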

It is important to emphasize that this heuristic will attempt to minimize the number of questions or experiments necessary to reach a goal, because the information gain is defined as the expected reduction in entropy from obtaining the truth value of a concept or solution. Here, an agent satisfies its information needs most efficiently by reducing its information entropy to 0 with the fewest messages.

Said differently, we need fewer requests if the accuracy of a process or decision is good because the collection of true information is made easier. Therefore, there is a structuring effect and a decision agent will choose the concept or solution with the highest information gain. Under this interpretation, this entropy formula reflects the overall performance of the system (which is not always necessarily the case).
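The selection rule stated above, namely choosing the concept or attribute with the highest information gain, where the gain is the expected reduction in entropy, can be sketched as follows. The records, the attribute names (`machine`, `shift`) and the target key `h` are hypothetical examples introduced only for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence


def entropy(labels: Sequence[bool]) -> float:
    """Binary entropy (bits) of a list of truth values; 0 for an empty list."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return sum(-q * math.log2(q) for q in (p, 1.0 - p) if q > 0)


def information_gain(records: List[Dict[str, Hashable]],
                     attribute: str, target: str = "h") -> float:
    """Expected reduction in the entropy of `target` once `attribute` is known."""
    groups: Dict[Hashable, List[bool]] = defaultdict(list)
    for record in records:
        groups[record[attribute]].append(bool(record[target]))
    before = entropy([bool(r[target]) for r in records])
    after = sum(len(g) / len(records) * entropy(g) for g in groups.values())
    return before - after


# Hypothetical observations: does the delivery date hold (h) for each job?
records = [
    {"machine": "A", "shift": "day", "h": True},
    {"machine": "A", "shift": "night", "h": True},
    {"machine": "B", "shift": "day", "h": False},
    {"machine": "B", "shift": "night", "h": False},
]

gains = {a: information_gain(records, a) for a in ("machine", "shift")}
best = max(gains, key=gains.get)
print(gains, "-> ask about:", best)
```

In this toy data set, knowing the machine removes all uncertainty about h, while knowing the shift removes none, so a single question about the machine is enough. This is the sense in which the heuristic minimizes the number of questions.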

To illustrate this general and important statement, let us apply this formula in a similar application field. Here, we are involved in an IS where a user is receiving a set of symbols. Each symbol could be the result of an observation or statistical result in a population (distributed as per a Pareto distribution). This means that a symbol can take two complementary values denoted by s1 (true) and s2 (false), with two associated probabilities: respectively, p1 = 0.8 and p2 = 0.2. The quantity of information contained in a specific symbol (result) is similarly expressed by:

$$H = -p_1 \log_2 p_1 - p_2 \log_2 p_2 = -0.8 \log_2 0.8 - 0.2 \log_2 0.2 \approx 0.72 \text{ bit}$$

Now, in a complete experiment, or in a message, if each symbol is independent of the next one, a message, or statistics, including N symbols, contains an average amount of information equal to 0.72 × N.

Again, if the symbol s1 is coded “0” while the symbol s2 is coded “1”, then the coded message has a length equal to N bits, which is wasteful: it is a loss compared with the 0.72 N bits of information the message is actually carrying. Shannon’s theorems state that it is impossible to find a code whose average length is less than 0.72 N, but that it is possible to encode the message (or to initialize control parameters) so that the coded message has an average length close to 0.72 N (which is what we want) as N increases.
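The bound of 0.72 N, and the fact that it can be approached, can be explored numerically. The sketch below groups symbols into blocks and builds a Huffman code for the blocks; Huffman coding is our choice of illustration (the text does not name a specific code), and all function names are hypothetical. The probabilities 0.8 and 0.2 are those of the example above.

```python
import heapq
import itertools
import math
from typing import Dict, List

# s1 is written "0" and s2 is written "1", with the probabilities of the example.
P: Dict[str, float] = {"0": 0.8, "1": 0.2}


def source_entropy(p: Dict[str, float]) -> float:
    """Entropy of the source in bits per symbol."""
    return sum(-q * math.log2(q) for q in p.values() if q > 0)


def huffman_average_length(probs: List[float]) -> float:
    """Expected codeword length (bits) of a Huffman code for these probabilities.

    Each merge of the two least likely nodes adds one bit to every leaf below
    the merged node, so the expected length is the sum of the merged weights.
    """
    if len(probs) == 1:
        return 1.0
    heap = list(probs)
    heapq.heapify(heap)
    total = 0.0
    while len(heap) > 1:
        merged = heapq.heappop(heap) + heapq.heappop(heap)
        total += merged
        heapq.heappush(heap, merged)
    return total


def bits_per_symbol(block_size: int) -> float:
    """Average coded length per source symbol when coding blocks of symbols."""
    blocks = itertools.product(P, repeat=block_size)
    # Symbols are assumed independent, so a block's probability is a product.
    block_probs = [math.prod(P[s] for s in block) for block in blocks]
    return huffman_average_length(block_probs) / block_size


print(f"entropy: {source_entropy(P):.4f} bits/symbol")
for k in (1, 2, 3, 4, 6, 8):
    print(f"block size {k}: {bits_per_symbol(k):.4f} bits/symbol")
```

With a block size of 1, the code is the naive one (one bit per symbol, hence N bits for N symbols). As the block size grows, the average length per symbol stays above the entropy but within 1/k of it for blocks of k symbols, which is the behavior the theorem describes.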

5.4.2.1. A first comment

The rationale behind this approach is that symbols (si), experiments or attributes (h) with a higher entropy also have a higher probability of providing a significant reduction of the search space Δ, since entropy increases with the size of the set of values belonging to a given attribute. Most importantly, however, performances are better for attributes which have an adequately balanced distribution of values. At IBM, we had the same result in logistics when studying the flow of product deliveries in an assembly line (the same as for the flow of cars on a highway); we get the same performance results, through NLDS simulation, based on given traffic saturation capacity rates (vs. the caterpillar effect). It is the same in plant layout with an adequate location and grouping, or distribution, of physical resources in an assembly line.

In IS, an anti-entropy approach can be used to achieve eventual consistency through pairwise experiments: the relevant algorithm is called anti-entropy since it constantly decreases the disorder among the different data stores.
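A minimal sketch of such a pairwise anti-entropy exchange is given below. It is not the algorithm of any particular product: the store layout (each key mapped to a version number and a value), the “highest version wins” rule and the `divergence` measure are all simplifying assumptions made for illustration.

```python
from typing import Dict, List, Tuple

# Hypothetical replica layout: key -> (version, value).
Store = Dict[str, Tuple[int, str]]


def anti_entropy_exchange(a: Store, b: Store) -> None:
    """Pairwise reconciliation: for every key, both replicas keep the newest entry."""
    for key in set(a) | set(b):
        # A missing key is treated as version -1, so any real entry wins.
        newest = max(a.get(key, (-1, "")), b.get(key, (-1, "")))
        a[key] = newest
        b[key] = newest


def divergence(stores: List[Store]) -> int:
    """Number of keys on which the replicas disagree (a crude 'disorder' measure)."""
    keys = set().union(*stores)
    return sum(1 for k in keys if len({s.get(k) for s in stores}) > 1)


replicas: List[Store] = [
    {"order-17": (2, "shipped")},
    {"order-17": (1, "planned"), "order-18": (1, "planned")},
    {},
]
print("disagreements before:", divergence(replicas))

# One round of pairwise exchanges around the ring of replicas.
for i in range(len(replicas)):
    anti_entropy_exchange(replicas[i], replicas[(i + 1) % len(replicas)])

print("disagreements after: ", divergence(replicas))
```

Each exchange can only bring two stores closer together, so the disagreement count falls until every replica holds the same data, which is what "eventual consistency" means here.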

5.4.2.2. A second comment

Mathematics is a powerful tool to abstract the modeling of complex systems and improve their understanding. The application of its principles is, however, a problem since a quantitative approach has a lot of limitations: quite often, it is complicated to model a complete system and to solve a set of equations related to a problem; also, the analysis of numbers or statistics is always subject to interpretation, speculation and rumor. This means, concerning entropy, that our reasoning will remain the same: we understand the meaning of entropy; we understand the philosophy and underlying principles behind this approach, but we have to stay pragmatic. Using precise numbers (from computerized programs) is good to appraise a vision or a trend, for instance; it does not always bring a lot of essential information to the decision-making process, since we are more often deciding in terms of comparing, discriminating, sorting or classifying possible solutions. Consequently, in the next section of this chapter, we will focus on the qualitative aspect of entropy and will discuss it more in terms of “entropy generation”.

5.5. Generalization of the entropy concept: link with sustainability

Here, we will recall some informal definitions assigned to entropy and sustainability within the humankind activities context [CHO 12]:

- Entropy: this is defined as the state of systemic disorder caused by the failure of any part of a system in maintaining its organic connection with the other parts. For example, forest depletion is entropy for the environment because of its organic severance from resource supply in maintaining forms of life and livelihood.

- Sustainability: this is the state of continuous reproduction of the dissipated energy. The meaning of sustainability can then be understood as recovery from entropy, or replenishing the decreased energy supply. Sustainability is thereby a dynamic process-oriented idea. The sustainable state of development maintains the resource stock by its continuous recovery.

This definition can easily be compared with the definition specified by the United Nations: “sustainable development is a development that meets the needs of the present without compromising the ability of future generations to meet their own needs” [UNG 87]. This interpretation identifies the need for economic growth, but without damage to the environment via pollution and wasteful management of natural resources.

5.5.1. A comment

We can make the following observation: the finite world we live in is managed unsustainably; resources are not used to their greatest potential, which leads to waste. Trying to live unsustainably would not be such an issue if our universe was not governed by the second law of thermodynamics (in statistical mechanics, entropy is a measure of the number of microscopic configurations corresponding to a macroscopic state. As thermodynamic equilibrium corresponds to a vastly greater number of microscopic configurations than any non-equilibrium state, it has the maximum entropy; the second law follows because random chance alone practically guarantees that the system will evolve toward such thermodynamic equilibrium).
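The statistical statement in parentheses above, that equilibrium corresponds to vastly more microscopic configurations than any non-equilibrium state, can be made tangible with a toy model. The sketch below assumes N distinguishable particles, each sitting in the left or right half of a box, and uses Boltzmann’s relation (entropy equals the Boltzmann constant times the logarithm of the number of configurations); the model and the function names are illustrative assumptions, not taken from the text.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)


def multiplicity(n_total: int, n_left: int) -> int:
    """Number of microscopic configurations with n_left particles in the left half."""
    return math.comb(n_total, n_left)


def boltzmann_entropy(n_total: int, n_left: int) -> float:
    """S = k_B * ln(W) for the macrostate 'n_left particles on the left' (J/K)."""
    return K_B * math.log(multiplicity(n_total, n_left))


N = 100
for n_left in (0, 10, 25, 50):
    print(f"{n_left:3d} on the left: W = {multiplicity(N, n_left):.3e}, "
          f"S = {boltzmann_entropy(N, n_left):.3e} J/K")

# The evenly mixed macrostate has the largest number of configurations.
most_likely = max(range(N + 1), key=lambda n: multiplicity(N, n))
print("macrostate with maximum entropy:", most_likely, "particles on the left")
```

Even with only 100 particles, the evenly mixed state outnumbers the fully ordered one by a factor of about 10^29, which is why random chance alone practically guarantees the drift toward equilibrium.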

Here, we can see that the activity of human beings is involved in such a situation. The main way is to develop consciousness, and now ethics: they are the main factors involving actualization of self-governed balances and responsibility in the relations between self and other, the present and future generations. Thus, they will highly influence the values of these two above concepts.

5.5.2. An interpretation of entropy

In the definition we have given in this chapter, we understand that the universe as a whole is an open system: it drifts from a state of order to a state of disorder; any ordered system, structure and pattern in the universe breaks down, decays and dissipates: its entropy increases over time. We can better understand what is happening in Figure 5.1.

Figure 5.1. The Sun-Earth system, image courtesy of Counter Currents [JON 12]

Our planet can be treated as an open system as it sits in the middle of a river of energy streaming out from the Sun (let us recall that a closed system has no connection, in terms of energy or matter, with the outside). This allows it to create complex structures and patterns, such as ordered crystals from unordered real materials. Also, nature created particles, and therefore molecules, organisms, human bodies and eventually economies. These are low entropy products.

However, nothing is free: an industrial process has a changing entropy. For instance, over time, cars rust, buildings crumble, mountains erode, apples rot and cream poured into coffee dissipates until it is evenly mixed. They are converted into higher entropy products. Also, in industry, every manufacturing operation and chemical process is associated with “production costs”: waste, pollution, greenhouse gases, heat, etc. Thus, entropy increases accordingly [MIL 13].

Since all our complex structures and organisms are produced through given processes over a period of time, the transformational processes from high disorder (loosely ordered assemblies, raw materials and energy) toward high order (ordered assemblies, products and services) are associated with a reduction of entropy. Later, when the product is dismantled, destroyed or consumed, the ecosystem again becomes more entropic.
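This local reduction can be reconciled with the second law through a simple entropy balance. The notation below is an assumption introduced for illustration and does not appear elsewhere in this chapter: ΔS_prod denotes the entropy change of the ordered product, and ΔS_env that of its surroundings.

```latex
\begin{align}
\Delta S_{\text{total}} &= \Delta S_{\text{prod}} + \Delta S_{\text{env}} \geq 0, \\
\Delta S_{\text{prod}} < 0 \;&\Longrightarrow\; \Delta S_{\text{env}} \geq \lvert \Delta S_{\text{prod}} \rvert .
\end{align}
```

In words, a production process can lower the entropy of what it assembles only by raising the entropy of its surroundings by at least as much, for example through the waste, pollution and heat counted among the “production costs”.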

In an open system, the global balance remains unchanged. However, thinking locally, at the level of our ecosystem (the Earth being considered as a closed system), the context is different: within this framework, sustainability will consist of reducing pollution and damage to our environment and thereby reducing the overall entropy of our local system.

5.5.3. Diversity in measuring entropy

When defining the entropy of a system, we have to consider several parameters such as the nature of the system, the irreversibility of the products (services, economy, etc.) and the efficiency of the global transformation process.

Above, we have defined entropy in two different ways: in terms of thermodynamics and in terms of information. In fact, entropy is a measure of the order of a structure: since there are many contexts to be considered (characteristics of the products and processes, application fields, etc.), entropy is mathematically defined in various ways [FLE 97]:

- in physics, entropy is a measure of the usability of energy (Clausius’ macroconcept as well as Boltzmann’s combined micro-macro approach);

- in communication theory (Shannon), entropy is a measure of the degree of surprise or novelty of a message;

- in biology and sociology, entropy is closely connected with the concept of order and structure;

- in economics, production processes, distribution and consumption of goods, maintenance, etc. necessarily transform free energy into dissipated heat. Entropy is therefore calculated by measuring these activities in terms of available or free energy, which is transformed into energy and waste heat;

- in agriculture, production can be used as an energy source again, either for consumption (where chemical energy is used to maintain a temperature gradient between the body and the environment in many mammals and in human beings, although that gradient is finally transformed into waste heat) or for starting new production activities, etc.
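Of the definitions listed above, the communication-theory one is the easiest to compute directly. The following is a minimal sketch, assuming that the symbol probabilities are estimated from character frequencies within the message itself; the function name `shannon_entropy` is illustrative and not part of the chapter. It shows that a message made of one repeated letter carries no surprise at all.

```python
import math
from collections import Counter

def shannon_entropy(message: str) -> float:
    """Average surprise of a message, in bits per symbol.

    Assumption: each symbol's probability is estimated by its
    relative frequency within the message.
    """
    if not message:
        return 0.0
    total = len(message)
    counts = Counter(message)
    # H = sum over symbols of p * log2(1 / p), with p = count / total
    return sum((c / total) * math.log2(total / c) for c in counts.values())

print(shannon_entropy("aaaaaaaaa"))  # one repeated letter: no surprise
print(shannon_entropy("abab"))       # two equally likely symbols
print(shannon_entropy("abcd"))       # four equally likely symbols
```

With these frequency estimates, the three messages yield 0, 1 and 2 bits per symbol respectively: the more evenly the symbols are spread, the higher the degree of surprise.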

We will not detail the different approaches for measuring these entropies [FLE 97]. We will just recall that Georgescu-Roegen used the entropy concept to construct a fourth law of thermodynamics [ROE 93], in which he extended the entropy concept to matter and arrived at very pessimistic conclusions: there is no possibility of completely recycling matter once it is dispersed. He states that in a system like the Earth (nearly no exchange of matter with the environment), mechanical work cannot proceed at a constant rate forever; in other words, there is a law of increasing material entropy. This means that it is not possible to get back all the dissipated matter of, for instance, tires worn out by friction.

The laws concerning the degradation of energy in a physical sense are applicable to every open and dynamic system with regard to the physical aspects of the system. However, the laws do not determine the specific way in which a dissipative structure, a living system or a human society obeys them. If entropy holds for the universe as a whole, it is by far the most distant boundary that mankind will ever come close to reaching.

5.6. Proposal for a new information theory approach

As seen before, thermodynamics can account for a number of phenomena related to information transfer. However, it can hardly account for information in its entirety. Shannon himself pointed out a first limitation: the absence of meaning (non-signification) in a message.

Henri Atlan pointed out the same restriction: “we know well that a message without meaning bears no interest and, in the end, doesn’t exist. And the containment of that Shannonian information to probabilistic uncertainty retains only operational value”. For the same reason, relationships between some chemical molecules and information would be limited.

Could information react on information itself so as to give rise to new concepts? This would only be possible by means of a preliminary assimilation process (almost a digestion process) within a cognitive corpus. However, what prevents the analogy between chemical thermodynamics and an informational thermodynamics from going further is the following:

- The process of assimilating information is not reversible: we may fail to understand something, or fail to use a piece of information, yet once information is integrated within our cognitive domain, it is not possible to unlearn it (except in the case of mental diseases such as Alzheimer’s). The basic reversibility condition of chemical thermodynamics does not exist here.

- Even if we could bypass this handicap, which stems from applying a thermodynamic model of reversible processes, a second objection appears. Chemical reactions tend toward a stable state (in principle unique, at best binary in the case of oscillating reactions) dubbed “maximum entropy”. By contrast, multiple stability levels exist with information, which we dub “metastable”. Each stability level is the logical result of a knowledge corpus, where knowledge is not necessarily dependent on other knowledge and therefore cannot form a leveled hierarchy. This is why C-K theory traditionally cites an “archipelagic structure” for knowledge [COR 13a].

- Finally, the argument that most hampers thermodynamic views of information arises when various elements of a homogeneous environment communicate, for example in science. The way global knowledge progresses, simply by exchanging information in writing through publications or orally via presentations, demonstrates this. Information passes through the filter of peer criticism and then becomes integrated into the common knowledge heritage. However, by examining what lies between the information produced by a given scientist and its effects on other members of the same scientific community, we observe, unlike for physical phenomena, that:

- the “wearing effect”, whereby a molecule can take part in only a restricted number of reactions, does not appear. On the contrary, the same information can reach other people once, 10 times or 100 times with the same intensity and efficacy;

- “targeting”, whether plain or masked, between the emitter and the receivers does not exist. People publish in the open without knowing who will read them and, above all, without being able to measure the full impact that the published information has on the readers’ thinking. Beyond the scientific confirmation of its exactness, the reasoning or the risks that a piece of information may spark off often exceed what its producer anticipated. Were Planck and Einstein in a position to imagine all that their “information” was to generate in terms of hypotheses, theories and knowledge?

We see that a thermodynamics, even one of non-reversible or biological phenomena, cannot integrate these contradictions. Given that the mathematical models no longer provide a satisfactory image of reality, it is useful to change the model. Physicists proceed no differently: they create ever more complex models in the course of their experiments because these models need to be more complete. As we want to integrate the cognitive and decisional aspects, it is useful to abandon a thermodynamic vision of information.

The core question is then what kind of model could account more exactly for the cognitive processes to which we are subject every day. According to Bernat [BER 99], such a model must satisfy four types of constraint:

- Universality. Information must be understandable by a homogeneous sample of persons and only cultural or linguistic barriers should prevail.

- Timelessness. Information that is accepted can only be called into question by new information that brings a new understanding of the system at hand. This constraint aims at ensuring the permanency of scientific thinking, in which any new work draws on commonly agreed knowledge and is not bound to prove its principled basis again.

- Permanency. Information possesses an intrinsic value that is independent of the ideas that may be associated with it. This value does not necessarily depend on the use made of the information. It is not an easy concept to explain; in short, information can be reused many times, always with the same efficacy: it does not wear out, whatever its usage.

- Interdependency. Information possesses a value that is a function of its possible interactions with a knowledge corpus. This is a well-known fact, as it underpins the entire information market: a technician may see no value in information that is otherwise vital for a financier.

In order to satisfy space-time notions, quantitative models are a necessity. Conversely, the “systemic and behavioral” approach leans toward qualitative models. We indeed follow a “complex systems” approach, which calls for geometrical and topological notions. Many works have been conducted on the basis of these considerations, for example on Lie groups in group theory.

5.7. Main conclusions

The topics tackled above do not yet yield fundamental notions, given that much basic work and exploration is still needed. Going beyond information thermodynamics as defined by Shannon is important if a quantum theory of information is to begin.

As in physics (note that thought is only the extension of what happens in matter), this argues in favor of other approaches, even if these may be iconoclastic. (We may refer to a paper by Jean-Pierre Bernat [BER 99] signed by Catherine Vincent in Le Monde newspaper entitled “La pensée en quanta”, or “thinking in quanta”.)

Sir John Eccles was a foremost neurobiologist of the 20th century who obtained the Nobel Prize in Medicine in 1963 for his fundamental discoveries on nervous impulse transmission. He asked a number of questions on the functioning of the brain, more precisely on the emergence of thought. Having gone through the brain’s evolution in Hominidae and cerebral lobe specialization, he says:

If the uniqueness of the self is to be derived from the genetic uniqueness which built the brain, then the odds against one existing in the uniqueness of the consciousness in which one actually finds oneself, are huge.

Eccles’ calculations gave him odds of 10 to the power 10,000 against. As a result, Eccles thought it only remotely possible that the uniqueness of the consciousness associated with a given brain arises as an emergent phenomenon of the brain’s genetically determined physics and chemistry; it must therefore rather arise externally (he used the term “supernatural origin”).

In short, he is asking how the uniqueness of a human consciousness can be explained. In fact, the “self”, or mind, associated with a given brain is unique to each of us; it emerges from billions of neuronal configurations and endures throughout our specific life.

Beyond his statements, we note a difference between unity of consciousness, which is the ability to be conscious of several correlated events at the same time, and unicity or, better, uniqueness of consciousness, which relates to one and the same consciousness structure and a specific and continuous way of thinking. Both kinds of consciousness may be subject to some diversity, yet they proceed differently.

Finally, what about self-consciousness? In the words of the Ukrainian-born evolutionist Theodosius Dobzhansky, “a being who knows he will die one day is born from ancestors who didn’t know it”. The debate is certainly not new, but the “soul” of Plato and Aristotle, a non-material entity supposed to interact with the body, was not yet opposed to science. So, the Latin expression “Cogito ergo sum” from Descartes, meaning “I think therefore I am”, remains more philosophical than scientific since we lack sufficient knowledge about how the brain functions.

Taking into account the advances made by contemporary biology, the scientist notes that the internal structure of the brain is quite well known nowadays, but its strikingly complex structure does not enable us to understand the gap between mental and neuronal activities.

This is where Sir John Eccles comes into play. Recalling a doctoral work by his old friend, the philosopher and epistemologist Karl Popper, he hypothesizes that thought could be considered a “consciousness field” without mass or energy, which would nevertheless influence nervous impulse transmission by activating some elementary biological particles that reside in the nervous synapses: tiny contact points through which nervous excitation is transmitted from one neuron to another.

One assumption is that the hexagonal structure of such a network determines a series of “microsites” whose activation by the “consciousness field” would play a part in nervous impulse transmission.

The problem to resolve is to determine how to make the action of an immaterial element (thought) on material organs (neurons) compatible with the energy conservation laws, which are imposed by classical mechanics. To bypass this contradiction, Sir John Eccles calls upon theoretical physics and compares his “consciousness field” with the probabilistic fields described in quantum mechanics: “Mind would thus take place on the neocortex by increasing the probability of occurrence of some neuronal events and would give to the brain not the role of an emitter but of the receiver of consciousness, not the radio set but the magnetic tape”.

Sir John Eccles himself admits that the validity of his hypothesis, however well it is backed by irrefutable knowledge of cerebral physiology, remains entirely speculative. Experts in theoretical mechanics will surely fire back by pointing out how far a synapse stands from the atom, and a microsite from the electron, and that the laws of quantum physics, which deal with objects involving both wave and corpuscle properties, cannot be applied to the life domains out of the blue.

After all, atomic physics went through the same trials and errors over about a century by stumbling on the much talked-about issue of dark matter, and dared to open the door to an eminently more complex world. This analogy leads us to ask a number of questions again, among them: what is information?

Let us simply recall the now-famous expression from Henri Bloch Lainé:

Any decision is born from the conjunction of information and competence.

What is competence if not the fruit of experience (learnt or lived), born of the assimilation of information? The answers are as complex and laborious as those raised by the introduction of quantum physics. There are no good or bad answers, only well or poorly formulated problems. It is an open field of research. We will just conclude by saying that failures and crises are the results of a lack of skill, ignorance or a greedy attitude on the part of some decision makers [MAS 10].