SOA with P2P

As stated earlier, one of the biggest challenges with the SOA approach is deciding how to deploy services. For example, when designing a solution, should you take the more traditional n-tier (DNA) approach with a middle tier, should each service be a completely independent entity on the network in a fully distributed design, or should the approach fall somewhere between those two extremes? Although SOA does not mandate any particular technology, platform, or protocol, we traditionally treat the entities involved, such as the service provider, the consumer, the service broker, and so on, as separate from one another. A better approach is to treat these roles as different aspects of a service rather than as explicit boundaries.

Instead of implementing a service on a single server, if that service is implemented on every node of a network (that is, on every peer), then the server's requirements, such as availability and scalability, shift from one particular server to the network as a whole. Hence, as the number of peers hosting the service increases, the capabilities of the network increase proportionally, making the overall system more scalable and robust. Also, unlike in a traditional approach, in the P2P world there is nothing to deploy other than the peer itself.
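To make the idea concrete, here is a minimal Python sketch, assuming a toy in-process network in which every peer hosts an identical copy of the service. The names `Peer`, `call_service`, and `network_availability` are hypothetical and are not part of WCF or any P2P library. The availability helper also assumes that peers fail independently, which real networks rarely guarantee.

```python
import random
from dataclasses import dataclass


@dataclass
class Peer:
    """Hypothetical peer that hosts a full copy of the same service."""
    name: str
    alive: bool = True

    def handle(self, request: str) -> str:
        # Every peer runs the same service logic; there is no central server.
        if not self.alive:
            raise ConnectionError(f"{self.name} is unreachable")
        return f"{self.name} handled {request!r}"


def call_service(peers: list[Peer], request: str) -> str:
    """Send the request to any live peer; fail only if every peer is down."""
    candidates = peers[:]
    random.shuffle(candidates)  # spread load across the network
    for peer in candidates:
        try:
            return peer.handle(request)
        except ConnectionError:
            continue  # try the next peer instead of failing outright
    raise RuntimeError("no peer in the network could handle the request")


def network_availability(peer_availability: float, peer_count: int) -> float:
    """Probability that at least one peer is up, assuming independent failures."""
    return 1 - (1 - peer_availability) ** peer_count


if __name__ == "__main__":
    network = [Peer("peer-a", alive=False), Peer("peer-b"), Peer("peer-c", alive=False)]
    print(call_service(network, "getQuote"))
    for n in (1, 2, 5):
        print(n, round(network_availability(0.9, n), 5))
```

Because any live peer can answer, the request succeeds as long as one copy of the service is reachable. Under the independence assumption, five peers that are each up 90 percent of the time give roughly 99.999 percent availability for the service as a whole.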

This is not to downplay the challenges that services in a P2P environment face in an enterprise, where there is typically a disparate set of technologies and products running on numerous runtimes. These will require different authentication and authorization approaches, and in many cases a pure P2P design would not be practical, because every service must also carry the weight of the network functionality that a peer provides in a P2P implementation. Also, operational requirements such as reliability and management will be difficult to standardize because of the disparate technologies. Lastly, the development team would carry a significant overhead to ensure that every service peer can handle process, reliability, management, and similar concerns each time a new service is built.

If an enterprise can use a single runtime environment that provides a standardized implementation and a common set of libraries, such as WCF, that would help eliminate many of these challenges. The caveat is that all the services need to adhere to this standard. Another option is to be more creative and implement "smart" networking intermediaries that take ownership of areas of control such as security, reliability, and operational management when the services delegate these concerns to them. This frees the service endpoints to focus on consuming and providing services.

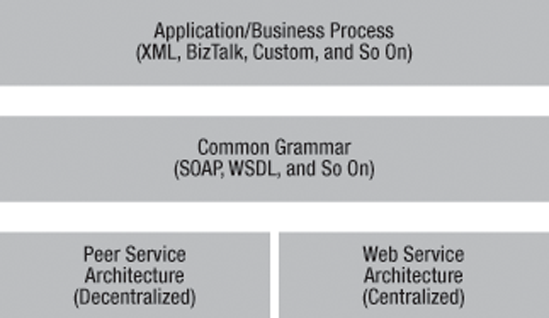
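The following Python sketch illustrates the "smart" intermediary idea under simplifying assumptions: the intermediary is an in-process wrapper rather than a network node, authorization is a plain token lookup, and only errors of the hypothetical `TransientError` type are retried. `SmartIntermediary`, `TransientError`, and `make_flaky_endpoint` are illustrative names, not WCF types.

```python
from typing import Callable


class TransientError(Exception):
    """Hypothetical error type for failures that are worth retrying."""


class SmartIntermediary:
    """Hypothetical intermediary that owns security, reliability, and
    operational management on behalf of a plain service endpoint."""

    def __init__(self, endpoint: Callable[[str], str], allowed_tokens: set[str],
                 max_attempts: int = 3) -> None:
        if max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")
        self.endpoint = endpoint
        self.allowed_tokens = allowed_tokens
        self.max_attempts = max_attempts
        # Management: simple counters an operator could inspect.
        self.stats = {"calls": 0, "rejected": 0, "retries": 0}

    def invoke(self, token: str, request: str) -> str:
        self.stats["calls"] += 1
        # Security: authorization is checked here, not inside the service.
        if token not in self.allowed_tokens:
            self.stats["rejected"] += 1
            raise PermissionError("caller is not authorized")
        # Reliability: transient failures are retried here, not inside the service.
        attempt = 1
        while True:
            try:
                return self.endpoint(request)
            except TransientError:
                if attempt >= self.max_attempts:
                    raise
                attempt += 1
                self.stats["retries"] += 1


def make_flaky_endpoint(failures: int) -> Callable[[str], str]:
    """Hypothetical service endpoint that fails `failures` times, then succeeds."""
    remaining = [failures]

    def endpoint(request: str) -> str:
        # The service itself contains only business logic.
        if remaining[0] > 0:
            remaining[0] -= 1
            raise TransientError("temporary network glitch")
        return f"processed {request}"

    return endpoint


if __name__ == "__main__":
    gateway = SmartIntermediary(make_flaky_endpoint(1), allowed_tokens={"secret"})
    print(gateway.invoke("secret", "order-42"))
    print(gateway.stats)
```

The service endpoint contains no security or retry code. Those concerns live in one place and can change without touching the services that delegate to the intermediary.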

Effectively, we are trying to combine the best aspects of how centralized and decentralized systems work. There are clearly many synergies between an SOA implementation and a P2P implementation. Today, SOA implementations have concentrated heavily on web services, which in turn rely on a centralized computing paradigm. By contrast, most P2P implementations have concentrated on resilience. You are likely to see a convergence in which both peer services and web services rely on a common set of standards for service description and invocation, probably based on WSDL and SOAP. Figure 12-8 shows a high-level view of this convergence of web- and peer-based service orientation.

Figure 12-8. Web+peer service orientation
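As a rough illustration of a shared invocation format, the Python sketch below builds a single SOAP 1.1 request envelope and hands the identical bytes to either a web-style or a peer-style transport. The `GetQuote` operation, the `urn:example:quotes` namespace, and both send functions are hypothetical placeholders; a real system would derive the message shape from a WSDL contract and use an actual HTTP or peer channel.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
SERVICE_NS = "urn:example:quotes"  # hypothetical service contract namespace
ET.register_namespace("soap", SOAP_ENV)
ET.register_namespace("q", SERVICE_NS)


def build_envelope(operation: str, **params: str) -> bytes:
    """Build one SOAP 1.1 request envelope that does not depend on the transport."""
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    op = ET.SubElement(body, f"{{{SERVICE_NS}}}{operation}")
    for name, value in params.items():
        ET.SubElement(op, f"{{{SERVICE_NS}}}{name}").text = value
    return ET.tostring(envelope, encoding="utf-8")


def send_over_http(url: str, message: bytes) -> tuple[str, str, bytes]:
    # Placeholder for a web-service call, e.g. an HTTP POST of `message` to `url`.
    return ("web", url, message)


def send_over_peer_mesh(mesh_name: str, message: bytes) -> tuple[str, str, bytes]:
    # Placeholder for delivering the same message to the peers in a named mesh.
    return ("peer", mesh_name, message)


if __name__ == "__main__":
    request = build_envelope("GetQuote", symbol="MSFT")
    for kind, target, payload in (
        send_over_http("http://example.com/quotes", request),
        send_over_peer_mesh("quotes-mesh", request),
    ):
        print(kind, target, payload.decode("utf-8"))
```

The point of the sketch is that the message format, not the transport, is the shared contract: the same envelope can travel to a centralized web service or across a peer mesh.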