Optimization of an IBM AIX operating system

In this chapter we discuss performance considerations on AIX. We also describe some basic general tuning for AIX. Some tuning may not apply to all workloads. It is important to check best practices documentation and guidelines for each type of workload (database, web server, fileserver) and follow the recommendations for each product.

We show parameters that can be changed in AIX and features that can be enabled:

4.1 Processor folding, Active System Optimizer, and simultaneous multithreading

In this section we discuss some concepts related to processor performance in AIX.

4.1.1 Active System Optimizer

Active System Optimizer (ASO) is an AIX feature introduced in AIX 6.1 TL7 and AIX 7.1 TL1, which monitors and dynamically optimizes the system. ASO needs POWER7 hardware running in POWER7 mode. For more information, refer to 6.1.2, “IBM AIX Dynamic System Optimizer” on page 288.

4.1.2 Simultaneous multithreading (SMT)

First referred to as SMT with the introduction of POWER5. Prior to that, a previous incarnation of SMT was known as HMT (or hardware multithreading). There are many sources of information regarding SMT, some of which we reference later. This section complements these existing sources by highlighting some key areas and illustrating some scenarios when hosting them on the POWER7 platform.

POWER5, POWER6 and POWER7

The implementation of SMT has matured and evolved through each generation. It is important to understand which SMT component is provided by a given platform, but equally how it differs from the implementations in other generations. Such understanding can be critical when planning to migrate existing workloads from older hardware onto POWER7.

For example, both POWER5 and POWER6 provide SMT2 (although remember that prior to POWER7, it was just referred to as SMT). However, while there is no difference in naming to suggest otherwise, the implementation of SMT2 is significantly different between the two platforms. Similarly, SMT4 is not simply a parallel implementation of SMT2.

A confusion can easily arise between the acronyms of SMT and Symmetric Multi Processing (SMP). As we demonstrate in “SMT4” on page 120, inefficiencies can be introduced by confusing the two.

For a detailed comparison of the three implementations of SMT, refer to 2.14.1 of IBM PowerVM Introduction and Configuration, SG24-7940.

SMT4

An important characteristic of SMT4 is that the four hardware threads are ordered in a priority hierarchy. That is, for each core or virtual processor, there is one primary hardware thread, one secondary hardware thread, and two tertiary hardware threads in SMT4 mode. This means that work will not be allocated to the secondary threads until consumption of the primary threads exceeds a threshold (controlled by schedo options); similarly the tertiary threads will not have work scheduled to them until enough workload exists to drive the primary and secondary threads. This priority hierarchy provides best raw application throughput on POWER7 and POWER7+. Thus the default AIX dispatching behavior is to dispatch across primary threads and then pack across the secondary and tertiary threads.

However, it is possible to negate or influence the efficiency offered by SMT4, through suboptimal LPAR profile configuration. Also, the default AIX dispatching behavior can be changed via schedo options, which are discussed in 4.1.4, “Scaled throughput” on page 124.

|

Note: The following scenario illustrates how inefficiencies can be introduced. There are other elements of PowerVM such as processor folding, Active System Optimizer (ASO), and power saving features that can provide compensation against such issues.

|

An existing workload is hosted on a POWER6-based LPAR, running AIX 6.1 TL02. The uncapped LPAR is configured to have two virtual processors (VP) and 4 GB of RAM. The LPAR is backed up and restored to a new POWER7 server and the LPAR profile is recreated with the same uncapped/2 VP settings as before. All other processor settings in the new LPAR profile are default.

At a later date, the POWER7-based LPAR is migrated from AIX6.1 TL02 to AIX6.1 TL07. On reboot, the LPAR automatically switches from SMT2 to SMT4 due to the higher AIX level allowing the LPAR to switch from POWER6+™ to POWER7 compatibility mode.

To illustrate this we used a WMB workload. Figure 4-1 shows how the application is only using two of the eight available threads.

Figure 4-1 WMB workload with two VPs and SMT4

Reconfiguring the LPAR to have only a single VP (Figure 4-2) shows that the WMB workload is using the same amount of resource, but now more efficiently within one core. In our example, we were able to achieve a comparable throughput with one VP as with two VPs. AIX would only have to manage two idle threads, not six, so the resource allocation would be more optimal in that respect.

Figure 4-2 WMB workload with one VP and SMT4

Scaling the WMB workload to produce double the footprint in the same processor constraints again demonstrated similar efficient distribution. Figure 4-3 on page 122 illustrates the difference in consumption across the four SMT threads.

Figure 4-3 Increased WMB workload with one VP and SMT4

However, the throughput recorded using this larger footprint was around 90% less with one VP than with two VPs because the greater workload consumed the maximum capacity at some times. Remember that even if an LPAR is configured as uncapped, the amount of extra entitlement it can request is limited by the number of VPs. So one VP allows up to 1.0 units of allocation.

We observed that other smaller workloads could not take advantage of the larger number of SMT threads, thus it was more efficient to reconfigure the LPAR profile to have fewer VPs (potential example of our NIM server). Allocating what is required is the best approach compared to over-allocating based on a legacy viewpoint. Fewer idle SMT threads or VPs is less overhead for the hypervisor too. Just because your old POWER5-based LPAR had four dedicated processors, it does not always follow that your POWER7-based LPAR requires the same.

Where workloads or LPARs will be migrated from previous platform generations, spending time evaluating and understanding your workload footprint is important; investing time post-migrating is equally important. Regular monitoring of LPAR activity will help build a profile of resource usage to help assess the efficiency of your configuration and also will allow detection of footprint growth. While it is common for an LPAR to be allocated too many resources, it is also common for footprint growth to go undetected.

It is primarily beneficial in commercial environments where the speed of an individual transaction is not as important as the total number of transactions that are performed. Simultaneous multithreading is expected to increase the throughput of workloads with large or frequently changing working sets, such as database servers and web servers.

Workloads that do not benefit much from simultaneous multithreading are those in which the majority of individual software threads uses a large amount of any specific resource in the processor or memory. For example, workloads that are floating-point intensive are likely to gain little from simultaneous multithreading and are the ones most likely to lose performance.

AIX allows you to control the mode of the partition for simultaneous multithreading with the smtctl command. By default, AIX enables simultaneous multithreading.

In Example 4-1, in the smtctl output, we can see that SMT is enabled and the mode is SMT4. There are two virtual processors, proc0 and proc4, and four logical processors associated with each virtual one, giving a total of eight logical processors.

Example 4-1 Verifying that SMT is enabled and what the mode is

# smtctl

This system is SMT capable.

This system supports up to 4 SMT threads per processor.

SMT is currently enabled.

SMT boot mode is set to enabled.

SMT threads are bound to the same virtual processor.

proc0 has 4 SMT threads.

Bind processor 0 is bound with proc0

Bind processor 1 is bound with proc0

Bind processor 2 is bound with proc0

Bind processor 3 is bound with proc0

proc4 has 4 SMT threads.

Bind processor 4 is bound with proc4

Bind processor 5 is bound with proc4

Bind processor 6 is bound with proc4

Bind processor 7 is bound with proc4

4.1.3 Processor folding

On a shared-processor LPAR, AIX monitors the utilization of virtual processors. It watches each virtual processor and the LPAR as a whole. By default AIX will take action when the aggregate utilization drops below 49% (schedo option vpm_fold_threshold). When current load drops below this threshold, AIX will start folding away virtual processors to make more efficient use of fewer resources. The opposite reaction occurs when the workload increases and breaches the 49% threshold, in which case AIX dynamically unfolds virtual processors to accommodate the increased load.

The aim of this feature is to improve efficiency of thread and virtual processor usage within the LPAR. The folding and unfolding encourages the LPAR to make best use of its processing resources. Improved performance is achieved by attempting to reduce cache misses in the physical processors by efficiently distributing the processes.

Thus, processor folding is a feature introduced in AIX 5.3 ML3 that allows the kernel scheduler to dynamically increase and decrease the use of virtual processors. During low workload demand, some virtual processors are deactivated. Every second, the kernel scheduler evaluates the number of virtual processors that should be activated to accommodate the physical utilization of the partition.

When virtual processors are deactivated, they are not removed from the partition as with dynamic LPAR. The virtual processor is no longer a candidate to run on or receive unbound work; however, it can still run bound jobs. The number of online logical processors and online virtual processors that are visible to the user or applications does not change. There are no impacts to the middleware or the applications running on the system.

Some benefits of processor folding are:

•Better processor affinity

•Less overhead to the hypervisor due to lower number of context switches

•Less virtual processors being dispatched in physical processors implies more physical processors available to other partitions

•Improved energy resources consumption when the processors are idle

Processor folding is enabled by default. In specific situations where you do not want to have the system folding and unfolding all the time, the behavior can be controlled using the schedo command to modify the vpm_xvcpus tunable.

To determine whether or not processor folding is enabled, use the command shown in Example 4-2.

Example 4-2 How to check whether processor folding is enabled

# schedo -o vpm_xvcpus

vpm_xvcpus = 0

If vpm_xvcpus is greater than or equal to zero, processor folding is enabled. Otherwise, if it is equal to -1, folding is disabled. The command to enable is shown in Example 4-3.

Example 4-3 How to enable processor folding

# schedo -o vpm_xvcpus=0

Setting vpm_xvcpus to 0

Each virtual processor can consume a maximum of one physical processor. The number of virtual processors needed is determined by calculating the sum of the physical processor utilization and the value of the vpm_xvcpus tunable, as shown in the following equation:

Number of virtual processors needed = roundup (physical processor utilization) + vpm_xvcpus

If the number of virtual processors needed is less than the current number of enabled virtual processors, a virtual processor is disabled. If the number of virtual processors needed is greater than the current number of enabled virtual processors, a disabled virtual processor is enabled. Threads that are attached to a disabled virtual processor are still allowed to run on it.

Currently, there is no way to monitor the folding behavior on an AIX partition. The nmon tool does some attempt to track VP folding behavior based on the measured processor utilization but again, that is an estimation, not a value reported by any system component.

|

Important: Folding is available for both dedicated and shared mode partitions. On AIX 7.1, folding is disabled for dedicated-mode partitions and enabled for shared-mode.

|

4.1.4 Scaled throughput

This setting is an alternative dispatcher scheduling mechanism introduced with AIX 6.1 TL08 and AIX 7.1 TL02; the new logic affects how AIX utilizes SMT threads and directly dictates how and when folded VPs will be unfolded. This feature was added based on client requirements and is controlled by a schedo tunable. Therefore, it is enabled on an LPAR, by LPAR basis.

The implication of enabling this tunable is that AIX will utilize all SMT threads on a given VP before unfolding additional VPs. The characteristics we observed during tests are best described as a more scientific, controlled approach to what we achieved by forceably reducing VP allocation in “SMT4” on page 120.

The scaled_throughput_mode tunable has four settings: 0, 1, 2 and 4. A value of 0 is the default and disables the tunable. The three other settings enable the feature and dictate the desired level of SMT exploitation (that is SMT1, SMT2, or SMT4).

We tested the feature using our WebSphere Message Broker workload, running on an AIX 7.1 TL02 LPAR configured with four VPs. Two sizes of Message Broker workload were profiled to see what difference would be observed by running with two or four application threads.

Table 4-1 Message Broker scaled_throughput_mode results

|

|

0

|

1

|

2

|

4

|

|

TPS for four WMB threads

|

409.46

|

286.44

|

208.08

|

243.06

|

|

Perf per core

|

127.96

|

149.18

|

208.08

|

243.06

|

|

TPS for two WMB threads

|

235.00

|

215.28

|

177.55

|

177.43

|

|

Perf per core

|

120.51

|

130.47

|

177.55

|

177.43

|

Table 4-1details the statistics from the eight iterations. In both cases the TPS declined as utilization increased. In the case of the 4-thread workload the trade-off was a 41% decline in throughput against an 89% increase in core efficiency. Whereas for the 2-thread workload it was a 25% decline in throughput against a 47% increase in core efficiency.

So the benefit of implementing this feature is increased core throughput, because AIX maximizes SMT thread utilization before dispatching to additional VPs. But this increased utilization is at the expense of overall performance. However, the tunable will allow aggressively dense server consolidation; another potential use case would be to implement this feature on low load, small footprint LPARs of a noncritical nature, reducing the hypervisor overhead for managing the LPAR and making more system resources available for more demanding LPARs.

|

Note: Use of the scaled_throughput_mode tunable should only be implemented after understanding the implications. While it is not a restricted schedo tunable, we strongly suggest only using it under the guidance of IBM Support.

|

4.2 Memory

Similar to other operating systems, AIX utilizes virtual memory. This allows the memory footprint of workloads to be greater than the physical memory allocated to the LPAR. This virtual memory is composed of several devices with different technology:

•Real Memory - Composed of physical memory DIMMs (SDRAM or DDRAM)

•Paging device - One or more devices hosted on storage (SATA, FC, SSD, or SAN)

Size of virtual memory = size of real memory + size of paging devices

All memory pages allocated by processes are located in real memory. When the amount of free physical memory reaches a certain threshold, the virtual memory manager (VMM) through the page-replacement algorithm will search for some pages to be evicted from RAM and sent to paging devices (this operation is called paging out). If a program needs to access a memory page located on a paging device (hard disk), this page needs to be first copied back to the real memory (paging in).

Because of the technology difference between real memory (RAM) and paging devices (hard disks), the time to access a page is much slower when it is located on paging space and needs a disk I/O to be paged in to the real memory. Paging activity is one of the most common reasons for performance degradation.

Example 4-4 Monitoring paging activity with vmstat

{D-PW2k2-lpar1:root}/ #vmstat -w 2

kthr memory page faults cpu

------- --------------------- ------------------------------------ ------------------ -----------

r b avm fre re pi po fr sr cy in sy cs us sy id wa

1 0 12121859 655411 0 0 0 0 0 0 2 19588 3761 4 0 95 0

2 0 12387502 389768 0 0 0 0 0 0 1 13877 3731 4 0 95 0

1 0 12652613 124561 0 46 0 0 0 0 48 19580 3886 4 1 95 0

3 9 12834625 80095 0 59 54301 81898 586695 0 13634 9323 14718 3 10 78 9

2 13 12936506 82780 0 18 50349 53034 52856 0 16557 223 19123 2 6 77 16

1 18 13046280 76018 0 31 49768 54040 53937 0 16139 210 20793 2 6 77 16

2 19 13145505 81261 0 33 51443 48306 48243 0 16913 133 19889 1 5 77 17

With vmstat, the paging activity can be monitored by looking at the column po (number of pagings out per second) and pi (number of pagings in per second).

Figure 4-4 Monitoring paging activity with nmon

In Figure 4-4, we started nmon in interactive mode. We can monitor the number of paging in and out by looking at the values in to Paging Space in and out. This number is given in pages per second.

4.2.1 AIX vmo settings

The AIX virtual memory is partitioned into segments sized 256 MB (default segment size) or 1 TB. Note that 1 TB segment size is only used for 16 GB huge pages, and is similar but a different concept from what is mentioned in 4.2.3, “One TB segment aliasing” on page 129, which still uses a 256 MB segment size.

Depending on the backing storage type, the virtual memory segments can be classified into three types, as described in Table 4-2.

Table 4-2 VMM segments classification depending on backing storage

|

Segment type

|

Definition

|

|

Persistent

|

The pages of persistent segments have permanent storage locations on disk (JFS file systems). The persistent segments are used for file caching of JFS file systems.

|

|

Client

|

The client segments also have permanent storage locations, which are backed by a JFS2, CD-ROM file system, or remote file systems such as NFS. The client segments are used for file caching of those file systems.

|

|

Working

|

Working segments are transitory and exist only during their use by a process. They have no permanent disk storage location and are stored on paging space when they are paged out.

Typical working segments include process private segments (data, BSS, stack, u-block, heap), shared data segments (shmat or mmap), shared libary data segments, and so on. The kernel segments are also classified as working segments.

|

Computation memory, also known as computational pages, consists of the pages that belong to working segments or program text (executable files or shared libary files) segments.

File memory, also known as file pages or non-computation memory, consists of the remaining pages. These are usually pages belonging to client segments or persistent segments.

Some AIX tunable parameter can be modified via the vmo command to change the behavior of the VMM such as:

•Change the threshold to start or stop the page-replacement algorithm.

•Give more or less priority for the file pages to stay in physical memory compared to computational pages.

Since AIX 6.1, the default values of some vmo tunables were updated to fit most workloads. Refer to Table 4-3.

Table 4-3 vmo parameters: AIX 5.3 defaults vs. AIX 6.1 defaults

|

AIX 5.3 defaults

|

AIX 6.1/7.1 defaults

|

|

minperm% = 20

maxperm% = 80

maxclient% = 80

strict_maxperm = 0

strict_maxclient = 1

lru_file_repage = 1

page_steal_methode = 0

|

minperm% = 3

maxperm% = 90

maxclient% = 90

strict_maxperm = 0

strict_maxclient = 1

lru_file_repage = 0

page_steal_methode = 1

|

With these new parameters, VMM gives more priority to the computational pages to stay in the real memory and avoid paging. When the page replacement algorithm starts, it steals only the file pages as long as the percentage of file pages in memory is above minperm%, regardless of the repage rate. This is controlled by the vmo parameter lru_file_repage=0 and it guarantees 97% memory (minperm%=3) for computational pages. If the percentage of file pages drops below minperm%, both file and computational pages might be stolen.

|

Note: In the new version of AIX 7.1, lru_file_repage=0 is still the default, but this parameter disappears from the vmo tunables and cannot be changed any more.

|

The memory percentage used by the file pages can be monitored with nmon by looking at the value numperm, as shown in Figure 4-4 on page 126.

The page_steal_method=1 specification improves the efficiency of the page replacement algorithm by maintaining several lists of pages (computational pages, file pages, and workload manager class). When used with lru_file_repage=0, the page replacement algorithm can directly find file pages by looking at the corresponding list instead of searching in the entire page frame table. This reduces the number of scanned pages compared to freed pages (scan to free ratio).

The number of pages scanned and freed can be monitored in vmstat by looking at the sr column (pages scanned) and fr column (pages freed). In nmon, these values are reported by Pages Scans and Pages Steals. Usually, with page_steal_method=1, the ratio

Pages scanned to Pages freed should be between 1 and 2.

|

Conclusion: On new systems (AIX 6.1 and later), default parameters are usually good enough for the majority of the workloads. If you migrate your system from AIX 5.3, undo your old changes to vmo tunables indicated in /etc/tunables/nextboot, restart with the default, and change only if needed.

If you still have high paging activity, go through the perfpmr process (“Trace tools and PerfPMR” on page 316), and do not tune restricted tunables unless guided by IBM Support.

|

4.2.2 Paging space

Paging space or swap space is a special type of logical volume that is used to accommodate pages from RAM. This allows the memory footprint of workloads and processes to be greater than the physical memory allocated to the LPAR. When physical memory utilization reaches a certain threshold, the virtual memory manager (VMM) through the page-replacement algorithm will search for some pages to be evicted from RAM and sent to paging devices. This is called a page-out. When a program makes reference to a page, that page needs to be in real memory. If that page is on disk, a page-in must happen. This delays the execution of the program because it requires disk I/O, which is time-consuming. So it is important to have adequate paging devices.

The best situation is, where possible, to run the workload in main memory. However, it is important to have well dimensioned and good performing paging space to ensure that your system has the best performance when paging is inevitable. Note that some applications have a requirement on paging space, regardless of how much physical RAM is allocated. Therefore, performance of paging space is still valid today as it was previously.

Paging space size considerations can be found at:

http://www.ibm.com/developerworks/aix/library/au-aix7memoryoptimize3/

•Look for any specific recommendation from software vendors. Products such as IBM DB2 and Oracle have minimum requirements.

•Monitor your system frequently after going live. If you see that you are never approaching 50 percent of paging space utilization, do not add the space.

•A more sensible rule is to configure the paging space to be half the size of RAM plus 4 GB, with an upper limit of 32 GB.

Performance considerations for paging devices:

•Use multiple paging spaces.

•Use as many physical disks as possible.

•Avoid to use a heavily accessed disk.

•Use devices of the same size.

•Use a striped configuration with 4 KB stripe size.

•Use disks from your Storage Area Network (SAN).

4.2.3 One TB segment aliasing

One TB segment aliasing or Large Segment Aliasing (LSA) improves performance by using 1-TB segment translations for shared memory segments. 64-bit applications with large memory footprint and low spatial locality are likely to benefit from this feature. Both directed and undirected shared memory attachments are eligible for LSA promotion.

In this section, we introduce how 1-TB segment aliasing works, when to enable it, and how to observe the benefits of using it.

Introduction to 1-TB segment aliasing

To understand how LSA works, you need to get some knowledge about 64-bit memory addressing.

Virtual address space of 64-bit applications

64-bit architecture has an addressable range of 2**64, from 0x0000000000000000 to 0xFFFFFFFFFFFFFFFF, which is 16 exabytes (EB) in size. The address space is organized in segments, and there are 2**36 segments, each segment being 256 MB in size.

Table 4-4 shows the 64-bit effective address space.

Table 4-4 64-bit effective address space

|

Segment Number (hex)

|

Segment usage

|

|

0x0000_0000_0

|

System call tables, kernel text

|

|

0x0000_0000_1

|

Reserved for system use

|

|

0x0000_0000_2

|

Reserved for user mode loader (process private segment)

|

|

0x0000_0000_3 - 0x0000_0000_C

|

shmat or mmap use

|

|

0x0000_0000_D

|

Reserved for user mode loader

|

|

0x0000_0000_E

|

shmat or mmap use

|

|

0x0000_0000_F

|

Reserved for user mode loader

|

|

0x0000_0001_0 - 0x06FF_FFFF_F

|

Application text, data, BSS and heap

|

|

0x0700_0000_0 - 0x07FF_FFFF_F

|

Default application shmat and mmap area if 1-TB Segment Aliasing (LSA) is not enabled.

Directed application shmat and mmap area if LSA is enabled.

|

|

0x0800_0000_0 - 0x08FF_FFFF_F

|

Application explicit module load area

|

|

0x0900_0000_0 - 0x09FF_FFFF_F

|

Shared library text and per-process shared library data

|

|

0x0A00_0000_0 - 0x0AFF_FFFF_F

|

Default (undirected) shmat and mmap area if LSA is enabled

|

|

0x0B00_0000_0 - 0x0EFF_FFFF_F

|

Reserved for future use

|

|

0x0F00_0000_0 - 0x0FFF_FFFF_F

|

Application primary thread stack

|

|

0x1000_0000_0 - 0XEFFF_FFFF_F

|

Reserved for future use

|

|

0xF000_0000_0 - 0xFFFF_FFFF_F

|

Additional kernel segments

|

64-bit hardware address resolution

Figure 4-5 gives an explanation of how the effective address of one process is translated to a virtual address, and finally the real hardware address in AIX.

As mentioned in “Virtual address space of 64-bit applications” on page 129, each 64 bit effective address uses the first 36 bits as the effective segment ID (ESID), and then it is mapped to a 52-bit virtual segment ID (VSID) using a segment lookaside buffer (SLB) or a segment table (STAB).

After the translation, we get a 52-bit VSID. Combine this VSID with the 16-bit page index, and we get a 68-bit virtual page number. Then the operating system uses TLB and other tables to translate the virtual page number into a real page number, which is combined with the 12-bit page offset to eventually form a 64-bit real address.

Figure 4-5 64-bit hardware address resolution

ESID and VSID mapping can be found with the svmon command, as shown in Example 4-5. Note that the VSID is unique in the operating system, while different processes may have the same ESID.

Example 4-5 VSID and ESID mapping in svmon

#svmon -P 9437198

-------------------------------------------------------------------------------

Pid Command Inuse Pin Pgsp Virtual 64-bit Mthrd 16MB

9437198 lsatest 24990 9968 0 24961 Y N N

PageSize Inuse Pin Pgsp Virtual

s 4 KB 11374 0 0 11345

m 64 KB 851 623 0 851

Vsid Esid Type Description PSize Inuse Pin Pgsp Virtual

20002 0 work kernel segment m 671 620 0 671

9d001d 90000000 work shared library text m 175 0 0 175

50005 9ffffffd work shared library sm 2544 0 0 2544

9f001f 90020014 work shared library s 166 0 0 166

840fa4 70000004 work default shmat/mmap sm 135 0 0 135

890fc9 70000029 work default shmat/mmap sm 135 0 0 135

9d0efd 70000024 work default shmat/mmap sm 135 0 0 135

8c0f6c 70000012 work default shmat/mmap sm 135 0 0 135

9b0edb 70000008 work default shmat/mmap sm 135 0 0 135

980f38 7000000d work default shmat/mmap sm 135 0 0 135

8e0f0e 7000003b work default shmat/mmap sm 135 0 0 135

870ec7 70000036 work default shmat/mmap sm 135 0 0 135

9504b5 7000002d work default shmat/mmap sm 135 0 0 135

Hardware limits on SLB entries and benefits of LSA

Now you know that SLB is used to translate ESID to VSID when doing address translation. Because SLB is in processor cache, the translation will be very efficient if we hit the SLB when accessing memory.

However, POWER6 and POWER7 processor has limited SLB entries, as follows:

•POWER6

– SLB has 64 entries

– 20 reserved for the kernel

– 44 available for user processes, which yields 11 GB of accessible memory

– Many client workloads do not fit into 11 GB

•POWER7

– SLB has 32 entries; architectural trend towards smaller SLB sizes

– 20 still reserved for the kernel

– 12 available for user processes, which yields 3 GB of accessible memory

– Potential for performance regression

As the SLB entries are limited, you can only address 3 GB of user memory directly from SLB in POWER7, which is usually not enough for most applications. And if you failed to address memory directly from SLB, the performance deteriorates.

This is why LSA is introduced in AIX. Through LSA, you can address 12 TB of memory using 12 SLB entries, and SLB faults should be rare. Because this is transparent to the application, you can expect an immediate performance boost for many applications that have a large memory footprint (Figure 4-6 on page 132).

Figure 4-6 Process address space example with LSA

Enabling LSA and verification

In the following sections, we introduce how to enable LSA and check whether LSA has taken effect.

Enabling LSA

There are vmo options as well as environment variables available to enable LSA. For most cases, you need to set esid_allocator=1 when in AIX 6.1, and do nothing in AIX 7.1 because the default is on already. You can also change the environment variables on a per process basis. The option details are as follows:

•esid_allocator, VMM_CNTRL=ESID_ALLOCATOR=[0,1]

Default off (0) in AIX 6.1 TL06, on (1) in AIX 7.1. When on, indicates that the large segment aliasing effective address allocation policy is in use. This parameter can be changed dynamically but will only be effective for future shared memory allocations.

•shm_1tb_shared, VMM_CNTRL=SHM_1TB_SHARED=[0,4096]

Default set to 12 (3 GB) on POWER7, 44 (11GB) on POWER6 and earlier. This is in accord with the hardware limit of POWER6 and POWER7. This parameter sets the threshold number of 256 MB segments at which a shared memory region is promoted to use a 1-TB alias.

•shm_1tb_unshared, VMM_CNTRL=SHM_1TB_UNSHARED=[0,4096]

Default set to 256 (64 GB). This parameter controls the threshold number of 256 MB segments at which multiple homogeneous small shared memory regions will be promoted to an unshared alias. Use this parameter with caution because there could be performance degradation when there are frequent shared memory attaches and detaches.

•shm_1tb_unsh_enable

Default set to on (1) in AIX 6.1 TL06 and AIX 7.1 TL01; Default set to off (0) in AIX 7.1 TL02 and later releases. When on, indicates unshared aliases are in use.

|

Note: Unshared aliases might degrade performance in case there are frequent shared memory attaches and detaches. We suggest you turn unshared aliasing off.

|

You can also refer to the Oracle Database and 1-TB Segment Aliasing in the following website for more information:

http://www-03.ibm.com/support/techdocs/atsmastr.nsf/WebIndex/TD105761

Verification of LSA

This section shows the LSA verification steps:

1. Get the process ID of the process using LSA, which can be any user process, for example a database process.

2. Use svmon to confirm that the shared memory regions are already allocated, as shown in Example 4-6.

Example 4-6 svmon -P <pid>

#svmon -P 3670250

-------------------------------------------------------------------------------

Pid Command Inuse Pin Pgsp Virtual 64-bit Mthrd 16MB

3670250 lsatest 17260 10000 0 17229 Y N N

PageSize Inuse Pin Pgsp Virtual

s 4 KB 3692 0 0 3661

m 64 KB 848 625 0 848

Vsid Esid Type Description PSize Inuse Pin Pgsp Virtual

20002 0 work kernel segment m 671 622 0 671

990019 90000000 work shared library text m 172 0 0 172

50005 9ffffffd work shared library sm 2541 0 0 2541

9b001b 90020014 work shared library s 161 0 0 161

b70f37 f00000002 work process private m 5 3 0 5

fb0b7b 9001000a work shared library data sm 68 0 0 68

a10021 9fffffff clnt USLA text,/dev/hd2:4225 s 20 0 - -

e60f66 70000004 work default shmat/mmap sm 14 0 0 14

fd0e7d 70000023 work default shmat/mmap sm 14 0 0 14

ee0fee 70000007 work default shmat/mmap sm 14 0 0 14

ea0f6a 7000003f work default shmat/mmap sm 14 0 0 14

e50e65 70000021 work default shmat/mmap sm 14 0 0 14

d10bd1 7000001a work default shmat/mmap sm 14 0 0 14

fb0f7b 70000002 work default shmat/mmap sm 14 0 0 14

ff0fff 70000009 work default shmat/mmap sm 14 0 0 14

f20e72 7000003d work default shmat/mmap sm 14 0 0 14

e50fe5 70000028 work default shmat/mmap sm 14 0 0 14

f00e70 7000000e work default shmat/mmap sm 14 0 0 14

8a0c8a 7000001e work default shmat/mmap sm 14 0 0 14

f80f78 7000002f work default shmat/mmap sm 14 0 0 14

Example 4-7 Running kdb

#kdb

START END <name>

0000000000001000 00000000058A0000 start+000FD8

F00000002FF47600 F00000002FFDF9C8 __ublock+000000

000000002FF22FF4 000000002FF22FF8 environ+000000

000000002FF22FF8 000000002FF22FFC errno+000000

F1000F0A00000000 F1000F0A10000000 pvproc+000000

F1000F0A10000000 F1000F0A18000000 pvthread+000000

read vscsi_scsi_ptrs OK, ptr = 0xF1000000C02D6380

(0)>

Example 4-8 tpid -d <pid>

(0)> tpid -d 3670250

SLOT NAME STATE TID PRI RQ CPUID CL WCHAN

pvthread+019500 405!lsatest RUN 1950075 071 4 0

5. Choose any of the thread SLOT numbers listed (only one available above), and run “user -ad <slot_number>” in kdb. As in Example 4-9, the LSA_ALIAS in the command output means LSA is activated for the shared memory allocation. If LSA_ALIAS flag does not exist, LSA is not in effect.

Example 4-9 user -ad <slot_number>

(0)> user -ad 405

User-mode address space mapping:

uadspace node allocation......(U_unode) @ F00000002FF48960

usr adspace 32bit process.(U_adspace32) @ F00000002FF48980

segment node allocation.......(U_snode) @ F00000002FF48940

segnode for 32bit process...(U_segnode) @ F00000002FF48BE0

U_adspace_lock @ F00000002FF48E20

lock_word.....0000000000000000 vmm_lock_wait.0000000000000000

V_USERACC strtaddr:0x0000000000000000 Size:0x0000000000000000

ESID Allocator version (U_esid_allocator)........ 0001

shared alias thresh (U_shared_alias_thresh)...... 000C

unshared alias thresh (U_unshared_alias_thresh).. 0100

vmmflags......00400401 SHMAT BIGSTAB LSA_ALIAS

Identify LSA issues

In the following sections, we introduce how to identify LSA issues using hpmstat and tprof.

Using hpmstat to identify LSA issues

The hpmstat command provides system-wide hardware performance counter information that can be used to monitor SLB misses. Refer to “The hpmstat and hpmcount utilities” on page 334 for more information about hpmstat. If there are a lot of SLB misses, then enabling LSA should help.

You can get the supported event groups from the pmlist command in AIX, as shown in Example 4-10 on page 135.

Example 4-10 Supported hardware performance event groups

#pmlist -g -1|pg

...

Group #10: pm_slb_miss

Group name: SLB Misses

Group description: SLB Misses

Group status: Verified

Group members:

Counter 1, event 77: PM_IERAT_MISS : IERAT Reloaded (Miss)

Counter 2, event 41: PM_DSLB_MISS : Data SLB misses

Counter 3, event 89: PM_ISLB_MISS : Instruction SLB misses

Counter 4, event 226: PM_SLB_MISS : SLB misses

Counter 5, event 0: PM_RUN_INST_CMPL : Run instructions completed

Counter 6, event 0: PM_RUN_CYC : Run cycles

...

Group #10 is used for reporting SLB misses. Use hpmstat to monitor the SLB misses events as shown in Example 4-11. Generally you should further investigate the issue when the SLB miss rate per instruction is greater than 0.5%. The DSLB miss rate per instruction is 1.295%, and is not acceptable. You can enable LSA by setting vmo -p -o esid_allocator=1 and seeing the effect.

Example 4-11 hpmstat before LSA is enabled

#hpmstat -r -g 10 20

Execution time (wall clock time): 20.010013996 seconds

Group: 10

Counting mode: user+kernel+hypervisor+runlatch

Counting duration: 160.115119955 seconds

PM_IERAT_MISS (IERAT Reloaded (Miss)) : 20894033

PM_DSLB_MISS (Data SLB misses) : 72329260

PM_ISLB_MISS (Instruction SLB misses) : 15710

PM_SLB_MISS (SLB misses) : 72344970

PM_RUN_INST_CMPL (Run instructions completed) : 5584383071

PM_RUN_CYC (Run cycles) : 66322682987

Normalization base: time

Counting mode: user+kernel+hypervisor+runlatch

Derived metric group: Translation

[ ] % DSLB_Miss_Rate per inst : 1.295 %

[ ] IERAT miss rate (%) : 0.374 %

[ ] % ISLB miss rate per inst : 0.000 %

Derived metric group: General

[ ] Run cycles per run instruction : 11.876

[ ] MIPS : 34.877 MIPS

u=Unverified c=Caveat R=Redefined m=Interleaved

Example 4-12 shows the hpmstat output after we set esid_allocator=1 and restarted the application. You can see that the SLB misses are gone after LSA is activated.

Example 4-12 hpmstat output after LSA is enabled

#hpmstat -r -g 10 20

Execution time (wall clock time): 20.001231826 seconds

Group: 10

Counting mode: user+kernel+hypervisor+runlatch

Counting duration: 160.005281724 seconds

PM_IERAT_MISS (IERAT Reloaded (Miss)) : 189529

PM_DSLB_MISS (Data SLB misses) : 25347

PM_ISLB_MISS (Instruction SLB misses) : 15090

PM_SLB_MISS (SLB misses) : 40437

PM_RUN_INST_CMPL (Run instructions completed) : 2371507258

PM_RUN_CYC (Run cycles) : 66319381743

Normalization base: time

Counting mode: user+kernel+hypervisor+runlatch

Derived metric group: Translation

[ ] % DSLB_Miss_Rate per inst : 0.001 %

[ ] IERAT miss rate (%) : 0.008 %

[ ] % ISLB miss rate per inst : 0.001 %

Derived metric group: General

[ ] Run cycles per run instruction : 27.965

[ ] MIPS : 14.821 MIPS

u=Unverified c=Caveat R=Redefined m=Interleaved

Using tprof to identify LSA issues

An -E option is available for monitoring such events. When there is a notable amount of SLB misses, you should be able to see a lot of kernel processor time spent in set_smt_pri_user_slb_found. In Example 4-13 you can see 13.92% set_smt_pri_user_slb_found, and you can find that lsatest caused the problem.

Example 4-13 tprof before LSA is enabled

#tprof -E -sku -x sleep 10

Configuration information

=========================

System: AIX 7.1 Node: p750s1aix2 Machine: 00F660114C00

Tprof command was:

tprof -E -sku -x sleep 10

Trace command was:

/usr/bin/trace -ad -M -L 1073741312 -T 500000 -j 00A,001,002,003,38F,005,006,134,210,139,5A2,5A5,465,2FF,5D8, -o -

Total Samples = 1007

Traced Time = 10.02s (out of a total execution time of 10.02s)

Performance Monitor based reports:

Processor name: POWER7

Monitored event: Processor cycles

Sampling interval: 10ms

<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<<

Process Freq Total Kernel User Shared Other

======= ==== ===== ====== ==== ====== =====

lsatest 1 99.50 24.85 74.65 0.00 0.00

/usr/bin/sh 2 0.20 0.10 0.00 0.10 0.00

gil 1 0.10 0.10 0.00 0.00 0.00

...

Total % For All Processes (KERNEL) = 25.25

Subroutine % Source

========== ====== ======

set_smt_pri_user_slb_found 13.92 noname

start 8.05 low.s

.user_slb_found 1.79 noname

slb_stats_usr_point 0.80 noname

._ptrgl 0.20 low.s

slb_user_tmm_fixup 0.10 noname

.enable 0.10 misc.s

.tstart 0.10 /kernel/proc/clock.c

.v_freexpt 0.10 rnel/vmm/v_xptsubs.c

After we enabled LSA, set_smt_pri_user_slb_found was gone (Example 4-14).

Example 4-14 tprof after LSA is enabled

#tprof -E -sku -x sleep 10

Configuration information

=========================

System: AIX 7.1 Node: p750s1aix2 Machine: 00F660114C00

Tprof command was:

tprof -E -sku -x sleep 10

Trace command was:

/usr/bin/trace -ad -M -L 1073741312 -T 500000 -j 00A,001,002,003,38F,005,006,134,210,139,5A2,5A5,465,2FF,5D8, -o -

Total Samples = 1007

Traced Time = 10.02s (out of a total execution time of 10.02s)

Performance Monitor based reports:

Processor name: POWER7

Monitored event: Processor cycles

Sampling interval: 10ms

...

Total % For All Processes (KERNEL) = 0.10

Subroutine % Source

========== ====== ======

ovlya_addr_sc_ret 0.10 low.s

Sample program illustration

The sample program used in this section is in Appendix C, “Workloads” on page 341. In the sample scenario, we got about a 30% performance gain. Note that real workload benefits can vary.

4.2.4 Multiple page size support

In AIX, the virtual memory is split into pages, with a default page size of 4 KB. The POWER5+ processor supports four virtual memory page sizes: 4 KB (small pages), 64 KB (medium pages), 16 MB (large pages), and 16 GB (huge pages). The POWER6 processor also supports using 64 KB pages in segments with base page size of 4 KB. AIX uses this process to provide the performance benefits of 64 KB pages when useful or resorting to 4 KB pages where 64 KB pages would waste too much memory, such as allocated but not used by the application.

Using a larger virtual memory page size such as 64 KB for an application’s memory can improve the application's performance and throughput due to hardware efficiencies associated with larger page sizes. Using a larger page size can decrease the hardware latency of translating a virtual page address to a physical page address. This decrease in latency is due to improving the efficiency of hardware translation caches such as a processor’s translation lookaside buffer (TLB). Because a hardware translation cache only has a limited number of entries, using larger page sizes increases the amount of virtual memory that can be translated by each entry in the cache. This increases the amount of memory that can be accessed by an application without incurring hardware translation delays.

POWER6 supports mixing 4 KB and 64 KB page sizes. AIX 6.1 takes advantage of this new hardware capability automatically without user intervention. This AIX feature is called Dynamic Variable Page Size Support (DVPSS). To avoid backward compatibility issues, VPSS is disabled in segments that currently have an explicit page size selected by the user.

Some applications may require a configuration to take advantage of multiple page support, while others will take advantage by default. SAP, for example, needs some additional configuration to make use of 64 KB pages. Information regarding the required configuratin can be found in the “Improving SAP performance on IBM AIX: Modification of the application memory page size to improve the performance of SAP NetWeaver on the AIX operating system” whitepaper at:

|

Note: The use of multiple page support cannot be combined with Active Memory Sharing (AMS) or Active Memory Expansion (AME). Both only support 4 KB pages. AME can optionally support 64 K pages, but the overhead in enabling that support can cause poor performance.

|

Large pages

Large pages are intended to be used in specific environments. AIX does not automatically use these page sizes. AIX must be configured to do so, and the number of pages of each of these page sizes must also be configured. AIX cannot automatically change the number of configured 16 MB or 16 GB pages.

Not all applications benefit from using large pages. Memory-access-intensive applications such as databases that use large amounts of virtual memory can benefit from using large pages (16 MB). DB2 and Oracle require specific settings to use this. IBM Java can take advantage of medium (64 K) and large page sizes. Refer to section 7.3, “Memory and page size considerations” in the POWER7 and POWER7+ Optimization and Tuning Guide, SG24-8079.

AIX maintains different pools for 4 KB and 16 MB pages. An application (at least WebSphere) configured to use large pages can still use 4 KB pages. However, other applications and system processes may not be able to use 16 MB pages. In this case, if you allocate too many large pages you can have contention for 4 KB and high paging activity.

AIX treats large pages as pinned memory and does not provide paging support for them. Using large pages can result in an increased memory footprint due to memory fragmentation.

|

Note: You should be extremely cautious when configuring your system for supporting large pages. You need to understand your workload before using large pages in your system.

|

Since AIX 5.3, the large page pool is dynamic. The amount of physical memory that you specify takes effect immediately and does not require a reboot.

Example 4-15 shows how to verify the available page sizes.

Example 4-15 Display the possible page sizes

# pagesize -a

4096

65536

16777216

17179869184

Example 4-16 shows how to configure two large pages dynamically.

Example 4-16 Configuring two large pages (16 MB)

# vmo -o lgpg_regions=2 -o lgpg_size=16777216

Setting lgpg_size to 16777216

Setting lgpg_regions to 2

Example 4-17 shows how to disable large pages.

Example 4-17 Removing large page configuration

# vmo -o lgpg_regions=0

Setting lgpg_regions to 0

The commands that can be used to monitor different page size utilization are vmstat and svmon. The flag -P of vmstat followed by the page size shows the information for that page size, as seen in Example 4-18. The flag -P ALL shows the overall memory utilization divided into different page sizes, as seen in Example 4-19 on page 140.

Example 4-18 vmstat command to verify large page utilization

# vmstat -P 16MB

System configuration: mem=8192MB

pgsz memory page

----- -------------------------- ------------------------------------

siz avm fre re pi po fr sr cy

16M 200 49 136 0 0 0 0 0 0

Example 4-19 vmstat command to show memory utilization grouped by page sizes

# vmstat -P ALL

System configuration: mem=8192MB

pgsz memory page

----- -------------------------- ------------------------------------

siz avm fre re pi po fr sr cy

4K 308832 228825 41133 0 0 0 13 42 0

64K 60570 11370 51292 0 0 39 40 133 0

16M 200 49 136 0 0 0 0 0 0

Example 4-20 shows that svmon with the flag -G is another command that can be used to verify memory utilization divided into different page sizes.

Example 4-20 svmon command to show memory utilization grouped by page sizes

# svmon -G

size inuse free pin virtual mmode

memory 2097152 1235568 861584 1129884 611529 Ded

pg space 655360 31314

work pers clnt other

pin 371788 0 0 135504

in use 578121 0 38951

PageSize PoolSize inuse pgsp pin virtual

s 4 KB - 267856 3858 176364 228905

m 64 KB - 9282 1716 8395 11370

L 16 MB 200 49 0 200 49

In the three previous examples, the output shows 200 large pages configured in AIX and 49 in use.

4.3 I/O device tuning

When configuring AIX I/O devices for performance, there are many factors to take into consideration. It is important to understand the underlying disk subsystem, and how the AIX system is attached to it.

In this section we focus only on the tuning of disk devices and disk adapter devices in AIX. AIX LVM and file system performance tuning are discussed in detail in 4.4, “AIX LVM and file systems” on page 157.

4.3.1 I/O chain overview

Understanding I/O chain specifically regarding disks and disk adapters is important because it ensures that all devices in the stack have the appropriate tuning parameters defined.

We look at three types of disk attachments:

•Disk presented via dedicated physical adapters

•Virtualized disk using NPIV

•Virtualized disk using virtual SCSI

Refer to IBM PowerVM Virtualization Introduction and Configuration, SG24-7940-04, which describes in detail how to configure NPIV and Virtual SCSI. In this section we only discuss the concepts and how to tune parameters related to performance.

In 3.6.1, “Virtual SCSI” on page 75, 3.6.2, “Shared storage pools” on page 76, 3.6.3, “N_Port Virtualization” on page 79 we discuss in detail the use cases and potential performance implications of using NPIV and Virtual SCSI.

Dedicated physical adapters

When we referred to disk storage presented via direct physical adapters, this implies that the disk is attached to the AIX system without the use of Virtual I/O. This means that the AIX system has exclusive access to fiber channel adapters, which are used to send I/O to an external storage system.

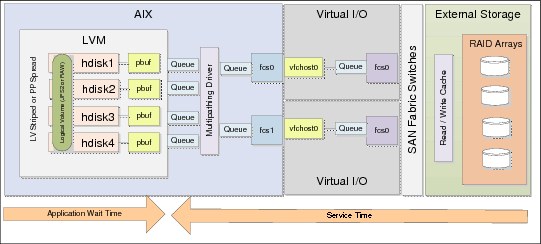

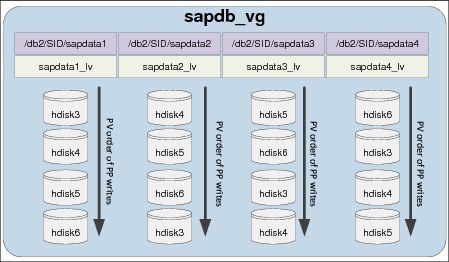

Looking at Figure 4-7 from left to right, when a write or a read operation is issued to AIX, LVM uses one physical buffer (pbuf) for each request. The physical buffers are described in 4.3.3, “Pbuf on AIX disk devices” on page 148. The I/O is then queued to the physical volume (PV), then handed to the multipathing driver and queued to the disk adapter device. The I/O is then passed through one or more SAN fabric switches (unless the storage is direct-attached to the AIX system) and reaches the external storage. If the I/O can be written to or read from the storage system’s cache, it is, otherwise it goes to disk.

Figure 4-7 Dedicated adapters

NPIV

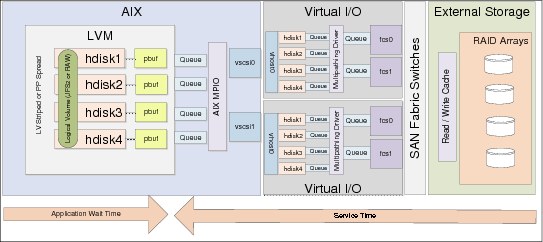

NPIV is a method where disk storage is implemented using PowerVM’s N_Port virtualization capability. In this instance, the Virtual I/O servers act as a passthrough enabling multiple AIX LPARs to access a single shared fiber channel (FC) port. A single FC adapter port on a Virtual I/O server is capable of virtualizing up to 64 worldwide port names (WWPN), meaning there are a maximum of 64 client logical partitions that can connect.

The I/O sequence is very similar to that of using dedicated physical adapters with the exception that there is an additional queue on each fiber channel adapter per Virtual I/O server, and there might be competing workloads on the fiber channel port from different logical partitions.

Figure 4-8 illustrates the I/O chain when NPIV is in use.

Figure 4-8 N_Port virtualization

Virtual SCSI

Virtual SCSI is a method of presenting a disk assigned to one or more Virtual I/O servers to a client logical partition. When an I/O is issued to the AIX LVM, the pbuf and hdisk queue is used exactly the same as in the dedicated physical adapter and NPIV scenarios. The difference is that there is a native AIX SCSI driver used and I/O requests are sent to a virtual SCSI adapter. The virtual SCSI adapter is a direct mapping to the Virtual I/O server’s vhost adapter, which is allocated to the client logical partition.

The hdisk device exists on both the client logical partition and the virtual I/O server, so there is also a queue to the hdisk on the virtual I/O server. The multipathing driver installed on the virtual I/O server then queues the I/O to the physical fiber channel adapter assigned to the VIO server and the I/O is passed to the external storage subsystem as described in the dedicated physical adapter and NPIV scenarios. There may be some limitation with copy services from a storage system in the case that a device driver is required to be installed on the AIX LPAR for this type of functionality.

Figure 4-9 on page 143 illustrates the I/O chain when virtual SCSI is in use.

Figure 4-9 Virtual SCSI

|

Note: The same disk presentation method applies when presenting disks on a Virtual I/O server to a client logical partition as well as using shared storage pools.

|

4.3.2 Disk device tuning

The objective of this section is to discuss which AIX hdisk device settings can be tuned and what their purpose is. Most of the settings discussed here are dependant on the type of workload and the performance capabilities of the storage system.

Understanding your I/O workload is important when performing an analysis of which settings need to be tuned. Your workload may be an OLTP type workload processing small block random I/O or conversely a data warehouse type workload that processes large block sequential I/O. The tuning parameters here differ depending on the type of workload running on the system.

It is also important to understand that changing these values here may enable AIX to send more I/O and larger I/O to the storage system, but this adds additional load to the storage system and SAN fabric switches. We suggest that you work with your SAN and storage administrator to understand the effect that tuning the device will have on your storage system.

Table 4-5 provides a summary of the tuning parameters available on an hdisk device and their purpose.

Table 4-5 Tuning parameters on an AIX hdisk device

|

Setting

|

Description

|

|

algorithm

|

This determines the method by which AIX distributes I/O down the paths that are available. The typical values are failover, where only one path is used, and round_robin where I/O is distributed across all available paths. Some device drivers add additional options, such as SDDPCM, which adds load_balance, which is similar to round_robin except it has more intelligent queueing. We suggest that you consult your storage vendor to find out the optimal setting.

|

|

hcheck_interval

|

This is the interval in seconds that AIX sends health check polls to a disk. If failed MPIO paths are found, the failed path will also be polled and re-enabled when it is found to be responding. It is suggested to confirm with the storage vendor what the recommended value to use here is.

|

|

max_transfer

|

This specifies the maximum amount of data that can be transmitted in a single I/O operation. If an application makes a large I/O request, the I/O is broken down into multiple I/Os the size of the max_transfer tunable. Typically, for applications transmitting small block I/O the default 256 KB is sufficient. However, in cases where there is large block streaming workload, the max_transfer size may be increased.

|

|

max_coalesce

|

This value sets the limit for the maximum size of an I/O that the disk driver will create by grouping together smaller adjacent requests. We suggest that the max_coalesce value match the max_transfer value.

|

|

queue_depth

|

The service queue depth of an hdisk device specifies the maximum number of I/O operations that can be in progress simultaneously on the disk. Any requests beyond this number are placed into another queue (wait queue) and remain in a pending state until an earlier request on the disk completes. Depending on how many concurrent I/O operations the backend disk storage can support, this value may be increased. However, this will place additional workload on the storage system.

|

|

reserve_policy

|

This parameter defines the reservation method used when a device is opened. The reservation policy is required to be set appropriately depending on what multipathing algorithm is in place. We suggest that you consult your storage vendor to understand what this should be set to based on the algorithm. Possible values include no_reserve, single_path, PR_exclusive, and PR_shared. This reservation policy is required to be set to no_reserve in a dual VIO server setup with virtual SCSI configuration, enabling both VIO servers to access the device.

|

As described in Table 4-5 on page 143, the max_transfer setting specifies the maximum amount of data that is transmitted in a single I/O operation. In Example 4-21, a simple I/O test is performed to demonstrate the use of the max_transfer setting. There is an AIX system processing a heavy workload of 1024 k block sequential I/Os with a read/write ratio of 80:20 to an hdisk (hdisk1) which has the max_transfer set to the default of 0x40000, which equates to 256 KB.

Typically, the default max_transfer value is suitable for most small block workloads. However, in a scenario with large block streaming workloads, it is suggested to consider tuning the max_transfer setting.

This is only an example test with a repeatable workload—the difference between achieving good performance and having performance issues is to properly understand your workload, establishing a baseline and tuning parameters individually and measuring the results.

Example 4-21 Simple test using 256KB max_transfer size

root@aix1:/ # lsattr -El hdisk1 -a max_transfer

max_transfer 0x40000 Maximum TRANSFER Size True

root@aix1:/ # iostat -D hdisk1 10 1

System configuration: lcpu=32 drives=3 paths=10 vdisks=2

hdisk1 xfer: %tm_act bps tps bread bwrtn

100.0 2.0G 7446.8 1.6G 391.4M

read: rps avgserv minserv maxserv timeouts fails

5953.9 8.2 0.6 30.0 0 0

write: wps avgserv minserv maxserv timeouts fails

1492.9 10.1 1.2 40.1 0 0

queue: avgtime mintime maxtime avgwqsz avgsqsz sqfull

20.0 0.0 35.2 145.0 62.0 7446.8

--------------------------------------------------------------------------------

root@aix1:/ #

The resulting output of iostat -D for a single 10-second interval looking at hdisk1 displays the following:

•Observed throughput is 2 GB per second. This is described as bytes per second (bps).

•This is made up of 7446.8 I/O operations per second. This is described as transfers per second (tps).

•The storage shows an average read service time of 8.2 milliseconds and an average write of 10.1 milliseconds. This is described as average service time (avgserv).

•The time that our application has to wait for the I/O to be processed in the queue is 20 milliseconds. This is described as the average time spent by a transfer request in the wait queue (avgtime). This is a result of our hdisk queue becoming full, which is shown as sqfull. The queue has filled up as a result of each I/O 1024 KB I/O request consisting of four 256 KB I/O operations. Handling the queue depth is described later in this section.

•The service queue for the disk was also full, due to the large number of I/O requests.

We knew that our I/O request size was 1024 KB, so we changed our max_transfer on hdisk1 to be 0x100000 which is 1 MB to match our I/O request size. This is shown in Example 4-22.

Example 4-22 Changing the max_transfer size to 1 MB

root@aix1:/ # chdev -l hdisk1 -a max_transfer=0x100000

hdisk1 changed

root@aix1:/

On completion of changing the max_transfer, we ran the same test again, as shown in Example 4-23, and observed the results.

Example 4-23 Simple test using 1MB max_transfer size

root@aix1:/ # lsattr -El hdisk1 -a max_transfer

max_transfer 0x100000 Maximum TRANSFER Size True

root@aix1:/ # iostat -D hdisk1 10 1

hdisk1 xfer: %tm_act bps tps bread bwrtn

100.0 1.9G 1834.6 1.5G 384.8M

read: rps avgserv minserv maxserv timeouts fails

1467.6 24.5 14.4 127.2 0 0

write: wps avgserv minserv maxserv timeouts fails

367.0 28.6 16.2 110.7 0 0

queue: avgtime mintime maxtime avgwqsz avgsqsz sqfull

0.0 0.0 0.3 0.0 61.0 0.0

--------------------------------------------------------------------------------

root@aix1:/ #

The output of iostat -D for a single 10-second interval looking at hdisk1 in the second test displayed the following:

•Observed throughput is 1.9 Gb per second. This is almost the same as the first test, shown in bps.

•This is made up of 1,834 I/O operations per second, which is shown in tps in the output in Example 4-23 on page 145. You can see that the number of I/O operations has been reduced by a factor of four, which is a result of moving from a max_transfer size of 256 KB to 1 MB. This means our 1024 KB I/O request is now processed in a single I/O operation.

•The storage shows an average read service time of 24.5 milliseconds and an average write service time of 28.6 milliseconds. This is shown as avgserv. Notice here that our service time from the storage system has gone up by a factor of 2.5, while our I/O size is four times larger. This demonstrates that we placed additional load on our storage system as our I/O size increased, while overall the time taken for the 1024 KB read I/O request to be processed was reduced as a result of the change.

•The time that our application had to wait for the I/O to be retrieved from the queue was 0.0 shown as avgtime. This was a result of the amount of I/O operations being reduced by a factor of four and their size increased by a factor of four. In the first test for a single read 1024 KB I/O request to be completed, this consisted of four 256 KB I/O operations with a 8.2 millisecond service time and a 20 millisecond wait queue time, giving an overall average response time to the I/O request of 52.8 milliseconds since a single 1024 KB I/O request consists of four 256 KB I/Os.

•In the second test after changing the max_transfer size to 1 MB, we completed the 1024 KB I/O request in a single I/O operation with an average service time of 24.5 milliseconds, giving an average of a 28.3 millisecond improvement per 1024 KB I/O request. This can be calculated by the formula avg IO time = avgtime + avgserv.

The conclusion of this test is that for our large block I/O workload, increasing the value of the max_transfer size to enable larger I/Os to be processed without filling up the disk’s I/O queue provided a significant increase in performance.

|

Important: If you are using virtual SCSI and you change to max_transfer on an AIX hdisk device, it is critical that these settings are replicated on the Virtual I/O server to ensure that the changes take effect.

|

The next setting that is important to consider is queue_depth on an AIX hdisk device. This is described in Table 4-5 on page 143 as the maximum number of I/O operations that can be in progress simultaneously on a disk device.

To be able to tune this setting, it is important to understand whether your queue is filling up on the disk and what value to set queue_depth to. Increasing queue_depth also places additional load on the storage system, because a larger number of I/O requests are sent to the storage system before they are queued.

Example 4-24 shows how to display the current queue_depth and observe what the maximum queue_depth is that can be set on the disk device. In this case the range is between 1 and 256. Depending on what device driver is in use, the maximum queue_depth may vary. It is always good practice to obtain the optimal queue depth for the storage system and its configuration from your storage vendor.

Example 4-24 Display current queue depth and maximum supported queue depth

root@aix1:/ # lsattr -El hdisk1 -a queue_depth

queue_depth 20 Queue DEPTH True

root@paix1:/ # lsattr -Rl hdisk1 -a queue_depth

1...256 (+1)

root@aix1:/ #

|

Note: In the event that the required queue_depth value cannot be assigned to an individual disk, as a result of being beyond the recommendation by the storage vendor, it is suggested to spread the workload across more hdisk devices because each hdisk has its own queue.

|

In Example 4-25 a simple test is performed to demonstrate the use of the queue_depth setting. There is an AIX system processing a heavy workload of 8 k small block random I/Os with an 80:20 read write ratio to an hdisk (hdisk1) which has its queue_depth currently set to 20. The iostat command issued here shows hdisk1 for a single interval of 10 seconds while the load is active on the system.

Example 4-25 Test execution with a queue_depth of 20 on hdisk1

root@aix1:/ # iostat -D hdisk1 10 1

System configuration: lcpu=32 drives=3 paths=10 vdisks=2

hdisk1 xfer: %tm_act bps tps bread bwrtn

99.9 296.5M 35745.1 237.2M 59.3M

read: rps avgserv minserv maxserv timeouts fails

28534.2 0.2 0.1 48.3 0 0

write: wps avgserv minserv maxserv timeouts fails

7210.9 0.4 0.2 50.7 0 0

queue: avgtime mintime maxtime avgwqsz avgsqsz sqfull

1.1 0.0 16.8 36.0 7.0 33898.5

--------------------------------------------------------------------------------

Looking at the resulting output of iostat -D in Example 4-25, you can observe the following:

•Our sample workload is highly read intensive and performing 35,745 I/O requests per second (tps) with a throughput of 296 MB per second (bps).

•The average read service time from the storage system is 0.2 milliseconds (avgserv).

•The average wait time per I/O transaction for the queue is 1.1 milliseconds (avgtime) and the disk’s queue in the 10-second period iostat was monitoring the disk workload filled up a total of 33,898 times (sqfull).

•The average amount of I/O requests waiting in the service wait queue was 36 (avgwqsz).

Based on this we could add our current queue depth (20) to the number of I/Os on average in the service wait queue (36), and have a queue_depth of 56 for the next test. This should stop the queue from filling up.

Example 4-26 shows changing the queue_depth on hdisk1 to our new queue_depth value. The queue_depth value is our target queue_depth of 56, plus some slight headroom bringing the total queue_depth to 64.

Example 4-26 Changing the queue_depth to 64 on hdisk1

root@aix1:/ # chdev -l hdisk1 -a queue_depth=64

hdisk1 changed

root@aix1:/ #

Example 4-27 on page 148 demonstrates the same test being executed again, but with the increased queue_depth of 64 on hdisk1.

Example 4-27 Test execution with a queue_depth of 64 on hdisk1

root@aix1:/ # iostat -D hdisk1 10 1

System configuration: lcpu=32 drives=3 paths=10 vdisks=2

hdisk1 xfer: %tm_act bps tps bread bwrtn

100.0 410.4M 50096.9 328.3M 82.1M

read: rps avgserv minserv maxserv timeouts fails

40078.9 0.4 0.1 47.3 0 0

write: wps avgserv minserv maxserv timeouts fails

10018.0 0.7 0.2 51.6 0 0

queue: avgtime mintime maxtime avgwqsz avgsqsz sqfull

0.0 0.0 0.3 0.0 23.0 0.0

--------------------------------------------------------------------------------

Looking at the resulting output of iostat -D in Example 4-27, you can observe the following:

•Our sample workload is highly read intensive and performing 50,096 I/O requests per second (tps) with a throughput of 410 MB per second (bps). This is significantly more than the previous test.

•The average read service time from the storage stem is 0.4 milliseconds (avgserv), which is slightly more than it was in the first test, because we are processing significantly more I/O operations.

•The average wait time per I/O transaction for the queue is 0 milliseconds (avgtime) and the disk’s queue in the 10-second period iostat was monitoring the disk workload did not fill up at all. In contrast to the previous test, where the queue filled up 33,898 times and the wait time for each I/O request was 1.1 milliseconds.

•The average amount of I/O requests waiting in the wait queue was 0 (avgwqsz), meaning our queue was empty; however; additional load was put on the external storage system.

Based on this test, we can conclude that each I/O request had an additional 0.2 millisecond response time from the storage system, while the 1.1 millisecond service queue wait time has gone away, meaning that after making this change, our workload’s response time went from 1.3 milliseconds to 0.4 milliseconds.

|

Important: If you are using virtual SCSI and you change to queue_depth on an AIX hdisk device, it is critical that these settings are replicated on the Virtual I/O server to ensure that the changes take effect.

|

|

Note: When you change the max_transfer or queue_depth setting on an hdisk device, it will be necessary that the disk is not being accessed and that the disk is not part of a volume group that is varied on. To change the setting either unmount any file systems and vary off the volume group, or change the queue_depth option with the -P flag of the chdev command to make the change active at the next reboot.

|

4.3.3 Pbuf on AIX disk devices

AIX Logical Volume Manager (LVM) uses a construct named pbuf to control a pending disk I/O. Pbufs are pinned memory buffers and one pbuf is always used for each individual I/O request, regardless of the amount of data that is supposed to be transferred. AIX creates extra pbufs when a new physical volume is added to a volume group.

Example 4-28 shows the AIX volume group data_vg with two physical volumes. We can see that the pv_pbuf_count is 512, which is the pbuf size for each physical volume in the volume group, and the total_vg_pbufs is 1024, which is because there are two physical volumes in the volume group, each with a pbuf size of 512.

Example 4-28 lvmo -av output

root@aix1:/ # lsvg -p data_vg

data_vg:

PV_NAME PV STATE TOTAL PPs FREE PPs FREE DISTRIBUTION

hdisk1 active 399 239 79..00..00..80..80

hdisk2 active 399 239 79..00..00..80..80

root@aix1:/ # lvmo -av data_vg

vgname = data_vg

pv_pbuf_count = 512

total_vg_pbufs = 1024

max_vg_pbufs = 524288

pervg_blocked_io_count = 3047

pv_min_pbuf = 512

max_vg_pbuf_count = 0

global_blocked_io_count = 3136

root@aix1:/ #

Also seen in Example 4-28, you can see that the pervg_blocked_io_count is 3047 and the global_blocked_io_count is 3136. This means that the data_vg volume group has 3047 I/O requests that have been blocked due to insufficient pinned memory buffers (pervg_blocked_io_count). Globally across all of the volume groups, 3136 I/O requests have been blocked due to insufficient pinned memory buffers.

In the case where the pervg_blocked_io_count is growing for a volume group, it may be necessary to increase the number of pbuf buffers. This can be changed globally by using ioo to set pv_min_pbuf to a greater number. However, it is suggested to handle this on a per volume group basis.

pv_pbuf_count is the number of pbufs that are added when a physical volume is added to the volume group.

Example 4-29 demonstrates increasing the pbuf buffers for the data_vg volume group from 512 per physical volume to 1024 per physical volume. Subsequently, the total number of pbuf buffers for the volume group is also increased.

Example 4-29 Increasing the pbuf for data_vg

root@aix1:/ # lvmo -v data_vg -o pv_pbuf_count=1024

root@aix1:/ # lvmo -av data_vg

vgname = data_vg

pv_pbuf_count = 1024

total_vg_pbufs = 2048

max_vg_pbufs = 524288

pervg_blocked_io_count = 3047

pv_min_pbuf = 512

max_vg_pbuf_count = 0

global_blocked_io_count = 3136

root@aix1:/ #

If you are unsure about changing these values, contact IBM Support for assistance.

|

Note: If at any point the volume group is exported and imported, the pbuf values will reset to their defaults. If you have modified these, ensure that you re-apply the changes in the event that you export and import the volume group.

|

4.3.4 Multipathing drivers

Drivers for IBM storage include SDDPCM for IBM DS8000, DS6000, SAN Volume Controller, and Storwize® V7000 as well as the XIV® Host Attachment kit for an XIV Storage System.

The best source of reference for which driver to use is the IBM System Storage Interoperation Center (SSIC), which provides details on drivers for IBM storage at:

Third-party drivers should be obtained from storage vendors and installed to deliver the best possible performance.

4.3.5 Adapter tuning

The objective of this section is to detail what AIX storage adapter device settings can be tuned to and their purpose. Three scenarios are covered here:

•Dedicated fiber channel adapters

•NPIV virtual fiber channel adapters

•Virtual SCSI

The most important thing to do when tuning the adapter settings is to understand the workload that the disks associated with the adapters are handling and what their configuration is. 4.3.2, “Disk device tuning” on page 143 details the configuration attributes that are applied to hdisk devices in AIX.

Dedicated fiber channel adapters

The scenario of dedicated fiber channel (FC) adapters entails an AIX system or logical partition (LPAR) with exclusive use of one or more FC adapters. There are two devices associated with an FC adapter:

fcsN This is the actual adapter itself, and there is one of these devices per port on a fiber channel card. For example, you may have a dual port fiber channel card. Its associated devices could be fcs0 and fcs1.

fscsiN This is a child device that the FC adapter has which acts as a SCSI software interface to handle SCSI commands related to disk access. If you have a dual port fiber channel card associated with devices fcs0 and fcs1, their respective child devices will be fscsi0 and fscsi1.

Table 4-6 on page 151 describes attributes of the fcs device which it is advised to consider tuning.

Table 4-6 fcs device attributes

|

Attribute

|

Description

|

|

lg_term_dma

|

The attribute lg_term_dma is the size in bytes of the DMA memory area used as a transfer buffer. The default value of 0x800000 in most cases is sufficient unless there is a very large number of fiber channel devices attached. This value typically should only be tuned under the direction of IBM Support.

|

|

max_xfer_size

|

The max_xfer_size attribute dictates the maximum transfer size of I/O requests. Depending on the block size of the workload, this value may be increased from the default 0x100000 (1 MB) to 0x200000 (2 MB) when there are large block workloads, and the hdisk devices are tuned for large transfer sizes. This attribute must be large enough to accommodate the transfer sizes used by any child devices, such as an hdisk device.

|

|

num_cmd_elems

|

The attribute num_cmd_elems is the queue depth for the adapter. The maximum value for a fiber channel adapter is 2048 and this should be increased to support the total amount of I/O requests that the attached devices are sending to the adapter.

|

When tuning the attributes described in Table 4-6, the fcstat command can be used to establish whether the adapter is experiencing any performance issues (Example 4-30).

Example 4-30 fcstat output

root@aix1:/ # fcstat fcs0

FIBRE CHANNEL STATISTICS REPORT: fcs0

Device Type: 8Gb PCI Express Dual Port FC Adapter (df1000f114108a03) (adapter/pciex/df1000f114108a0)

Serial Number: 1C041083F7

Option ROM Version: 02781174

ZA: U2D1.11X4

World Wide Node Name: 0x20000000C9A8C4A6

World Wide Port Name: 0x10000000C9A8C4A6

FC-4 TYPES:

Supported: 0x0000012000000000000000000000000000000000000000000000000000000000

Active: 0x0000010000000000000000000000000000000000000000000000000000000000

Class of Service: 3

Port Speed (supported): 8 GBIT

Port Speed (running): 8 GBIT

Port FC ID: 0x010000

Port Type: Fabric

Seconds Since Last Reset: 270300

Transmit Statistics Receive Statistics

------------------- ------------------

Frames: 2503792149 704083655

Words: 104864195328 437384431872

LIP Count: 0

NOS Count: 0

Error Frames: 0

Dumped Frames: 0

Link Failure Count: 0

Loss of Sync Count: 8

Loss of Signal: 0

Primitive Seq Protocol Error Count: 0

Invalid Tx Word Count: 31

Invalid CRC Count: 0

IP over FC Adapter Driver Information

No DMA Resource Count: 3207

No Adapter Elements Count: 126345

FC SCSI Adapter Driver Information

No DMA Resource Count: 3207

No Adapter Elements Count: 126345

No Command Resource Count: 133

IP over FC Traffic Statistics

Input Requests: 0

Output Requests: 0

Control Requests: 0

Input Bytes: 0

Output Bytes: 0

FC SCSI Traffic Statistics