IBM Power Systems virtualization

In this chapter, we describe some of the features and tools for optimizing virtualization on POWER Systems running AIX, including PowerVM components and AIX Workload Partitions (WPAR). When deployed correctly, virtualization provides the best combination of performance and utilization of a system. The relationships and dependencies between some of the components need to be understood and observed, and it is critical that the different elements are correctly configured and tuned to deliver optimal performance.

We discuss the following topics in this chapter:

3.1 Optimal logical partition (LPAR) sizing

A common theme throughout this book is to understand your workload and size your logical partitions appropriately. In this section we focus on some of the processor and memory settings available in the LPAR profile and provide guidance on how to set them to deliver optimal performance.

This section is divided into two parts: processor and memory.

Processor

There are a number of processor settings available. Some have more importance than others in terms of performance. Table 3-1 provides a summary of the processor settings available in the LPAR profile, a description of each, and some guidance on what values to consider.

Table 3-1 Processor settings in LPAR profile

| Setting | Description | Recommended value |
|---|---|---|
| Minimum Processing Units | This is the minimum number of processing units that must be available for the LPAR to be activated. Using DLPAR, processing units can be removed down to this value. | This value should be set to the minimum number of processing units that the LPAR would realistically be assigned. |
| Desired Processing Units | This is the number of processing units reserved for the LPAR; it is also known as the LPAR’s entitled capacity (EC). | This value should be set to the average utilization of the LPAR during peak workload. |
| Maximum Processing Units | This is the maximum number of processing units that can be added to the LPAR using a DLPAR operation. | This value should be set to the maximum number of processing units that the LPAR would realistically be assigned. |
| Minimum Virtual Processors | This is the minimum number of virtual processors that can be assigned to the LPAR with DLPAR. | This value should be set to the minimum number of virtual processors that the LPAR would realistically be assigned. |
| Desired Virtual Processors | This is the number of virtual processors (VPs) assigned to the LPAR when it is activated. | This value should be set to the upper limit of processor resources used during peak workload. |
| Maximum Virtual Processors | This is the maximum number of virtual processors that can be assigned to the LPAR using a DLPAR operation. | This value should be set to the maximum number of virtual processors that the LPAR would realistically be assigned. |
| Sharing Mode | Uncapped LPARs can use processing units that are not being used by other LPARs, up to the number of virtual processors assigned to the uncapped LPAR. Capped LPARs can use only the processing units that are assigned to them. In this section we focus on uncapped LPARs. | LPARs that will consume processing units above their entitled capacity should be configured as uncapped. |
| Uncapped Weight | This is the priority this LPAR has when it contends with other LPARs for shared virtual resources. | This is the relative weight of the LPAR during resource contention. Set it based on the importance of the LPAR compared with the other LPARs in the system. It is suggested that the VIO servers have the highest weight. |

Some situations require a Power system to have multiple shared processor pools. A common reason is licensing: software is licensed per processor, and different applications run on the same system. In this case, it is important to size each shared processor pool so that it can accommodate the peak workload of the LPARs in that pool.

In addition to limiting the maximum number of virtual processors that can be assigned to an LPAR, the entitled capacity is a very important setting that must be set correctly. The best practice is to set it to the average processor utilization during peak workload. The sum of the entitled capacity assigned to all the LPARs in a Power system should not be more than the number of physical processors in the system or shared processor pool.

The virtual processors in an uncapped LPAR dictate the maximum amount of idle processor resources that can be taken from the shared pool when the workload goes beyond the capacity entitlement. The number of virtual processors should not exceed the processor resources required by the LPAR, and it should not be greater than the total number of processors in the Power system or in the shared processor pool.
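The sizing rules above can be expressed as a simple pre-deployment check. The following is a minimal Python sketch, not an IBM tool: `LparCpuConfig` and `check_pool_sizing` are hypothetical names, and the rule that entitled capacity should not exceed the VP count is an added assumption (each VP is taken to consume at most 1.0 processing unit).

```python
from dataclasses import dataclass


@dataclass
class LparCpuConfig:
    """Shared-processor settings for one LPAR (hypothetical helper type)."""
    name: str
    entitled_capacity: float  # desired processing units (EC)
    virtual_processors: int   # desired virtual processors (VPs)


def check_pool_sizing(lpars, pool_processors):
    """Return warnings for LPARs that break the sizing guidelines in this section."""
    warnings = []
    # The sum of EC should not exceed the processors in the system or pool.
    total_ec = sum(lpar.entitled_capacity for lpar in lpars)
    if total_ec > pool_processors:
        warnings.append(f"total EC {total_ec:.2f} exceeds {pool_processors} processors")
    for lpar in lpars:
        # VPs should not exceed the processors in the system or pool.
        if lpar.virtual_processors > pool_processors:
            warnings.append(f"{lpar.name}: {lpar.virtual_processors} VPs exceed "
                            f"{pool_processors} processors")
        # Assumption: a VP consumes at most 1.0 processing unit, so EC above
        # the VP count could never be used.
        if lpar.entitled_capacity > lpar.virtual_processors:
            warnings.append(f"{lpar.name}: EC {lpar.entitled_capacity} exceeds "
                            f"{lpar.virtual_processors} VPs")
    return warnings


# The low-EC configuration from the test later in this section passes these checks.
print(check_pool_sizing([LparCpuConfig("ec_low", 6.4, 64)], 64))  # prints []
```

Note that passing these checks says nothing about whether EC is *well* sized; the folding test later in this section shows a configuration that is valid but still performs poorly.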

Figure 3-1 on page 44 shows a sample workload with the following characteristics:

•The system begins its peak workload at 8:00 am.

•The system’s peak workload stops at around 6:30 pm.

•The ideal entitled capacity for this system is 25 processing units, which is the average utilization during peak workload.

•The ideal number of virtual processors is 36, which is the maximum number of processors used during peak workload.

Figure 3-1 Graph of a workload over a 24-hour period
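The reasoning behind the Figure 3-1 values can be sketched in a few lines: average the utilization inside the peak window to get the entitled capacity, and round the peak maximum up to get the number of virtual processors. The input format and the `size_from_samples` function are hypothetical, and the sample values below are invented to mirror the figure's description, not measured data.

```python
import math
from statistics import mean


def size_from_samples(samples, peak_start, peak_end):
    """Suggest (entitled capacity, virtual processors) from utilization samples.

    samples is a hypothetical list of (hour_of_day, processors_consumed) pairs;
    only samples inside [peak_start, peak_end) are considered.
    """
    peak = [used for hour, used in samples if peak_start <= hour < peak_end]
    if not peak:
        raise ValueError("no samples fall inside the peak window")
    entitled_capacity = round(mean(peak), 2)   # average use during peak
    virtual_processors = math.ceil(max(peak))  # highest use during peak
    return entitled_capacity, virtual_processors


# Hypothetical samples; peak window 8:00 am to 6:30 pm.
samples = [(2.0, 3.0), (8.0, 20.0), (12.0, 36.0), (15.0, 24.0), (18.0, 20.0), (22.0, 4.0)]
print(size_from_samples(samples, 8.0, 18.5))  # prints (25.0, 36)
```

In practice you would feed this from real monitoring data collected over a representative period, with many more samples than shown here.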

For LPARs with dedicated processors (these processors are not part of the shared processor pool), there is an option to allow the LPAR, after it is activated, to donate idle processing resources to the shared pool. This can be useful for dedicated-processor LPARs that do not always use 100% of their assigned processing capacity.

Figure 3-2 shows where this option is set in an LPAR’s properties. Note that sharing of idle capacity when the LPAR is not activated is enabled by default, whereas sharing of idle capacity when the LPAR is activated is not enabled by default.

Figure 3-2 Dedicated LPAR sharing processing units

There are performance implications in the values you choose for the entitled capacity and the number of virtual processors assigned to the partition. These are discussed in detail in the following sections:

We performed a simple test to demonstrate the implications of sizing the entitled capacity of an AIX LPAR. The first test is shown in Figure 3-3, and the following observations were made:

•The entitled capacity (EC) is 6.4 and the number of virtual processors is 64. There are 64 processors in the POWER7 780 on which this test was performed.

•When the test was executed, it took 30 seconds for the workload to gain access to the required cores, because of the time the AIX scheduler needed to perform processor unfolding.

Figure 3-3 Folding effect with EC set too low

The same test was performed again, with the entitled capacity raised from 6.4 processing units to 50 processing units. The second test is shown in Figure 3-4 on page 46 and the following observations were made:

•The entitled capacity is 50 and the number of virtual processors is still 64.

•The amount of processor unfolding AIX had to perform was significantly reduced.

•The time taken for the workload to access the processing capacity went from 30 seconds to 5 seconds.

Figure 3-4 Folding effect with EC set higher; fasten your seat belts

In conclusion, correctly sizing the entitled capacity in this case provided a 16% performance improvement, simply because of the reduced unfolding. Further gains related to memory access would also be possible through better LPAR placement, because there is an affinity reservation for the capacity entitlement.

Memory

Sizing memory is also an important consideration when configuring an AIX logical partition.

Table 3-2 provides a summary of the memory settings available in the LPAR profile.

Table 3-2 Memory settings in LPAR profile

| Setting | Description | Recommended value |
|---|---|---|
| Minimum memory | This is the minimum amount of memory that must be available for the LPAR to be activated. Using DLPAR, memory can be removed down to this value. | This value should be set to the minimum amount of memory that the LPAR would realistically be assigned. |
| Desired memory | This is the amount of memory assigned to the LPAR when it is activated. If this amount is not available, the hypervisor assigns as much available memory as possible to get close to this number. | This value should reflect the amount of memory that is assigned to this LPAR under normal circumstances. |
| Maximum memory | This is the maximum amount of memory that can be added to the LPAR using a DLPAR operation. | This value should be set to the maximum amount of memory that the LPAR would realistically be assigned. |
| AME expansion factor | See 3.2, “Active Memory Expansion”. | See 3.2.2, “Sizing with the active memory expansion planning tool”. |

When sizing the desired amount of memory, it is important that this amount will satisfy the workload’s memory requirements. Adding more memory using dynamic LPAR can have an effect on performance due to affinity. This is described in 2.2.3, “Verifying processor memory placement” on page 14.

Another factor to consider is the maximum memory assigned to a logical partition, because it affects the hardware page table (HPT) of the POWER system. The HPT is allocated from the memory reserved by the POWER hypervisor. If the maximum memory for an LPAR is set very high, the amount of memory required for the HPT increases, causing a memory overhead on the system.

On POWER5, POWER6, and POWER7 systems, the HPT is calculated by the following formula, where the sum of all the LPARs’ maximum memory is divided by a factor of 64:

HPT = sum_of_lpar_max_memory / 64

On POWER7+ systems the HPT is calculated using a factor of 64 for IBM i and any LPARs using Active Memory Sharing. However, for AIX and Linux LPARs the HPT is calculated using a factor of 128.
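To see how the maximum memory setting drives HPT overhead, the formula and the POWER7+ ratios above can be applied per LPAR. This is a hypothetical Python sketch; the `hpt_ratio` and `hpt_size_mb` names and the tuple input format are illustrative only, and the ratios are exactly those stated in this section.

```python
def hpt_ratio(system, os_type, uses_ams=False):
    """Divisor used to size an LPAR's HPT, per the ratios quoted above."""
    if system == "POWER7+" and os_type in ("AIX", "Linux") and not uses_ams:
        return 128
    # POWER5, POWER6, POWER7, and IBM i or AMS partitions on POWER7+.
    return 64


def hpt_size_mb(system, lpars):
    """Estimate total HPT memory in MB.

    lpars is a hypothetical list of (os_type, max_memory_mb, uses_ams) tuples.
    """
    return sum(max_mb / hpt_ratio(system, os_type, uses_ams)
               for os_type, max_mb, uses_ams in lpars)


# Raising one LPAR's maximum memory to 64 GB quadruples its share of the HPT.
lpars = [("AIX", 16384, False), ("AIX", 65536, False)]
print(hpt_size_mb("POWER7", lpars))   # prints 1280.0
print(hpt_size_mb("POWER7+", lpars))  # prints 640.0
```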

Example 3-1 demonstrates how to display the default HPT ratio from the HMC command line for the managed system 750_1_SN106011P, which is a POWER7 750 system.

Example 3-1 Display the default HPT ratio on a POWER7 system

hscroot@hmc24:~> lshwres -m 750_1_SN106011P -r mem --level sys -F default_hpt_ratios

1:64

hscroot@hmc24:~>

Figure 3-5 provides a sample of the properties of a POWER7 750 system. The amount of memory installed in the system is 256 GB, all of which is activated.

The memory allocations are as follows:

•200.25 GB of memory is not assigned to any LPAR.

•52.25 GB of memory is assigned to LPARs currently running on the system.

•3.50 GB of memory is reserved for the hypervisor.

Figure 3-5 Memory assignments for a managed system

Important: Do not set your LPAR’s maximum memory too high, because a larger maximum increases the amount of memory reserved for the HPT.

3.2 Active Memory Expansion

Active Memory Expansion (AME) is an optional feature of IBM POWER7 and POWER7+ systems for expanding a system’s effective memory capacity by performing memory compression. AME is enabled on a per-LPAR basis. Therefore, AME can be enabled on some or all of the LPARs on a Power system. POWER7 systems use LPAR processor cycles to perform the compression in software.

AME enables memory to be allocated beyond the amount that is physically installed in the system, where memory can be compressed on an LPAR and the memory savings can be allocated to another LPAR to improve system utilization, or compression can be used to oversubscribe memory to potentially improve performance.

AME is available on POWER7 and POWER7+ systems with AIX 6.1 Technology Level 4 and AIX 7.1 Service Pack 2 and above.

Active Memory Expansion is not ordered as part of any PowerVM edition. It is licensed as a separate feature code, and can be ordered with a new system, or added to a system at a later time. Table 3-3 provides the feature codes to order AME at the time of writing.

Table 3-3 AME feature codes

| Feature code | Description |
|---|---|
| 4795 | Active Memory Expansion Enablement POWER 710 and 730 |
| 4793 | Active Memory Expansion Enablement POWER 720 |
| 4794 | Active Memory Expansion Enablement POWER 740 |
| 4792 | Active Memory Expansion Enablement POWER 750 |
| 4791 | Active Memory Expansion Enablement POWER 770 and 780 (1) |
| 4790 | Active Memory Expansion Enablement POWER 795 |

(1) This includes the Power 770+ and Power 780+ server models.

In this section we discuss the use of active memory expansion compression technology in POWER7 and POWER7+ systems. A number of terms are used in this section to describe AME. Table 3-4 provides a list of these terms and their meaning.

Table 3-4 Terms used in this section

| Term | Meaning |
|---|---|
| LPAR true memory | The amount of real memory assigned to the LPAR before compression. |
| LPAR expanded memory | The amount of memory available to an LPAR after compression. This is the amount of memory an application running on AIX sees as the total memory in the system. |
| Expansion factor | The single setting that must be set in the LPAR’s profile to enable AME. It dictates the target memory capacity for the LPAR, calculated by this formula: LPAR_EXPANDED_MEM = LPAR_TRUE_MEM * EXP_FACTOR |
| Uncompressed pool | When AME is enabled, the operating system’s memory is broken up into two pools, an uncompressed pool and a compressed pool. The uncompressed pool contains memory that is uncompressed and available to the application. |
| Compressed pool | The compressed pool contains memory pages that are compressed by AME. When an application needs to access compressed pages, AME uncompresses them and moves them into the uncompressed pool for application access. The size of the pools varies based on memory access patterns and the memory compression factor. |
| Memory deficit | A memory deficit can occur when an LPAR is configured with an AME expansion factor that is too high for the compressibility of the workload. When the LPAR cannot reach the LPAR expanded memory target, the amount of memory that cannot fit into the memory pools is known as the memory deficit, which might cause paging activity. The expansion factor and the true memory can be changed dynamically, and when the expansion factor is set correctly, no memory deficit should occur. |
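The expansion factor formula and the memory deficit definition above translate directly into code. This is a minimal Python sketch with hypothetical function names; in `memory_deficit`, the achieved expanded memory is simply an input, because how AIX measures it is not covered here.

```python
def expanded_memory(true_memory, expansion_factor):
    """LPAR_EXPANDED_MEM = LPAR_TRUE_MEM * EXP_FACTOR (same units as true_memory)."""
    if not 1.0 <= expansion_factor <= 10.0:
        raise ValueError("the AME expansion factor must be between 1.0 and 10.0")
    return true_memory * expansion_factor


def memory_deficit(target_expanded, achieved_expanded):
    """Expanded memory that could not be reached (0 when the target is met)."""
    return max(0.0, target_expanded - achieved_expanded)


# 8192 MB of true memory with a factor of 1.25, as reported later by lparstat -i.
print(expanded_memory(8192, 1.25))  # prints 10240.0
```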

Figure 3-6 on page 50 provides an overview of how AME works. The application always accesses memory directly from the uncompressed pool. When pages that exist in the compressed pool need to be accessed, they are first moved into the uncompressed pool. Pages in the uncompressed pool that are no longer being accessed are moved into the compressed pool and subsequently compressed.

Figure 3-6 Active Memory Expansion overview

The memory gain from AME is determined by the expansion factor. The minimum expansion factor is 1.0, meaning no compression, and the maximum value is 10.0, meaning 90% compression.

Each expansion factor has an associated processor overhead that depends on the type of workload. The higher the expansion factor, the more processing is required to handle memory compression and decompression. The AIX kernel process that performs AME compression and decompression is named cmemd, and its processor usage can be monitored from topas or nmon. Section 3.2.2, “Sizing with the active memory expansion planning tool” on page 52, describes how to use the AME planning tool amepat to estimate and monitor the cmemd processor usage.

The AME expansion factor can be set in increments of 0.01. Table 3-5 gives an overview of some of the possible expansion factors to demonstrate the memory gains associated with the different expansion factors.

Note: These are only a subset of the expansion factors. The expansion factor can be set anywhere from 1.00 to 10.00 in increments of 0.01.

Table 3-5 Sample expansion factors and associated memory gains

| Expansion factor | Memory gain |
|---|---|
| 1.0 | 0% |
| 1.2 | 20% |
| 1.4 | 40% |
| 1.6 | 60% |
| 1.8 | 80% |
| 2.0 | 100% |
| 2.5 | 150% |
| 3.0 | 200% |
| 3.5 | 250% |
| 4.0 | 300% |
| 5.0 | 400% |
| 10.0 | 900% |
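The two percentages used in this section follow from the expansion factor: the memory gain in Table 3-5 is (factor - 1) x 100, and the "compression" quoted for the maximum factor (10.0 meaning 90%) is (1 - 1/factor) x 100. The Python sketch below computes both and checks the 0.01 granularity; the function names are hypothetical.

```python
def memory_gain_pct(expansion_factor):
    """Extra memory AIX sees, as a percentage of true memory."""
    return round((expansion_factor - 1.0) * 100, 2)


def compression_pct(expansion_factor):
    """How much smaller true memory is than expanded memory, in percent."""
    return round((1.0 - 1.0 / expansion_factor) * 100, 2)


def is_valid_factor(expansion_factor):
    """True if the factor lies in 1.00-10.00 and is a multiple of 0.01."""
    hundredths = round(expansion_factor * 100)
    return 100 <= hundredths <= 1000 and abs(expansion_factor * 100 - hundredths) < 1e-6


for factor in (1.0, 1.2, 2.0, 4.0, 10.0):
    print(factor, memory_gain_pct(factor), compression_pct(factor))
```

The output shows why high factors are demanding: a factor of 2.0 already requires every page to shrink by 50% on average, while 10.0 requires 90%.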

3.2.1 POWER7+ compression accelerator

A new feature in POWER7+ processors is the nest accelerator (NX). The nest accelerator contains accelerators also known as coprocessors, which are shared resources used by the hypervisor for the following purposes:

•Encryption for JFS2 Encrypted file systems

•Encryption for standard cryptography APIs

•Random number generation

•AME hardware compression

Each POWER7+ chip contains a single NX unit and multiple cores, depending on the model, and these cores all share the same NX unit. The NX unit allows some of the AME processing to be off-loaded to significantly reduce the amount of processor overhead involved in compression and decompression. Where multiple LPARs are accessing the NX unit for compression at once, the priority is on a first in first out (FIFO) basis.

As with the relationship between processor and memory affinity, optimal performance is achieved when the physical memory is in the same affinity domain as the NX unit. AIX creates compressed pools on affinity domain boundaries and makes the best effort to allocate from the local memory pool.

AIX automatically leverages hardware compression for AME when it is available. Configuring AME on POWER7+ follows exactly the same process as on POWER7. However, hardware compression requires AIX 6.1 Technology Level 8, AIX 7.1 Technology Level 2, or later.

The active memory expansion planning tool amepat has also been updated as part of these same AIX Technology Levels to suggest compression savings and associated processing overhead using hardware compression. Example 3-4 on page 54 illustrates this amepat enhancement.

Figure 3-7 on page 52 demonstrates how to confirm that hardware compression is enabled on a POWER7+ system.

Figure 3-7 Confirming that hardware compression is available

Note: The compression accelerator only handles the compression of memory in the compressed pool. The LPAR’s processor is still used to manage the moving of memory between the compressed and the uncompressed pool. The benefit of the accelerator is dependent on your workload characteristics.

3.2.2 Sizing with the active memory expansion planning tool

The active memory expansion planning tool amepat is a utility that should be run on the system on which you are evaluating the use of AME. When executed, amepat records system configuration and various performance metrics to provide guidance on possible AME configurations, and their processing impact. The tool should be run prior to activating AME, and run again after activating AME to continually evaluate the memory configuration.

The amepat tool provides a report with possible AME configurations and a recommendation based on the data it collected during the time it was running.

For best results, consider the following points:

•Run amepat during peak workload.

•Ensure that you run amepat for the full duration of the peak workload.

•The tool can be run in the foreground, or in recording mode.

•It is best to run the tool in recording mode, so that multiple configurations can be evaluated against the record file rather than running the tool in the foreground multiple times.

•After the tool has been run once, it is recommended to run it again with a range of expansion factors to find the optimal value.

•Once AME is active, it is suggested to continue running the tool, because the workload may change resulting in a new expansion factor being recommended by the tool.

The amepat tool is available as part of AIX starting at AIX 6.1 Technology Level 4 Service Pack 2.

Example 3-2 demonstrates running amepat with the following input parameters:

•Run the report in the foreground.

•Run the report with a starting expansion factor of 1.20.

•Run the report with an upper limit expansion factor of 2.0.

•Include only POWER7 software compression in the report.

•Run the report to monitor the workload for 5 minutes.

Example 3-2 Running amepat with software compression

root@aix1:/ # amepat -e 1.20:2.0:0.1 -O proc=P7 5

Command Invoked : amepat -e 1.20:2.0:0.1 -O proc=P7 5

Date/Time of invocation : Tue Oct 9 07:33:53 CDT 2012

Total Monitored time : 7 mins 21 secs

Total Samples Collected : 3

System Configuration:

---------------------

Partition Name : aix1

Processor Implementation Mode : Power7 Mode

Number Of Logical CPUs : 16

Processor Entitled Capacity : 2.00

Processor Max. Capacity : 4.00

True Memory : 8.00 GB

SMT Threads : 4

Shared Processor Mode : Enabled-Uncapped

Active Memory Sharing : Disabled

Active Memory Expansion : Enabled

Target Expanded Memory Size : 8.00 GB

Target Memory Expansion factor : 1.00

System Resource Statistics: Average Min Max

--------------------------- ----------- ----------- -----------

CPU Util (Phys. Processors) 1.41 [ 35%] 1.38 [ 35%] 1.46 [ 36%]

Virtual Memory Size (MB) 5665 [ 69%] 5665 [ 69%] 5665 [ 69%]

True Memory In-Use (MB) 5880 [ 72%] 5880 [ 72%] 5881 [ 72%]

Pinned Memory (MB) 1105 [ 13%] 1105 [ 13%] 1105 [ 13%]

File Cache Size (MB) 199 [ 2%] 199 [ 2%] 199 [ 2%]

Available Memory (MB) 2303 [ 28%] 2303 [ 28%] 2303 [ 28%]

AME Statistics: Average Min Max

--------------- ----------- ----------- -----------

AME CPU Usage (Phy. Proc Units) 0.00 [ 0%] 0.00 [ 0%] 0.00 [ 0%]

Compressed Memory (MB) 0 [ 0%] 0 [ 0%] 0 [ 0%]

Compression Ratio N/A

Active Memory Expansion Modeled Statistics :

-------------------------------------------

Modeled Implementation : Power7

Modeled Expanded Memory Size : 8.00 GB

Achievable Compression ratio :0.00

Expansion Modeled True Modeled CPU Usage

Factor Memory Size Memory Gain Estimate

--------- ------------- ------------------ -----------

1.00 8.00 GB 0.00 KB [ 0%] 0.00 [ 0%] << CURRENT CONFIG

1.28 6.25 GB 1.75 GB [ 28%] 0.41 [ 10%]

1.40 5.75 GB 2.25 GB [ 39%] 1.16 [ 29%]

1.46 5.50 GB 2.50 GB [ 45%] 1.54 [ 39%]

1.53 5.25 GB 2.75 GB [ 52%] 1.92 [ 48%]

1.69 4.75 GB 3.25 GB [ 68%] 2.68 [ 67%]

1.78 4.50 GB 3.50 GB [ 78%] 3.02 [ 75%]

1.89 4.25 GB 3.75 GB [ 88%] 3.02 [ 75%]

2.00 4.00 GB 4.00 GB [100%] 3.02 [ 75%]

Active Memory Expansion Recommendation:

---------------------------------------

The recommended AME configuration for this workload is to configure the LPAR

with a memory size of 6.25 GB and to configure a memory expansion factor

of 1.28. This will result in a memory gain of 28%. With this

configuration, the estimated CPU usage due to AME is approximately 0.41

physical processors, and the estimated overall peak CPU resource required for

the LPAR is 1.86 physical processors.

NOTE: amepat's recommendations are based on the workload's utilization level

during the monitored period. If there is a change in the workload's utilization

level or a change in workload itself, amepat should be run again.

The modeled Active Memory Expansion CPU usage reported by amepat is just an

estimate. The actual CPU usage used for Active Memory Expansion may be lower

or higher depending on the workload.

Rather than running the report in the foreground each time you want to compare different AME configurations and expansion factors, it is suggested to run the tool in the background and record the statistics in a recording file for later use, as shown in Example 3-3.

Example 3-3 Create a 60-minute amepat recording to /tmp/ame.out

root@aix1:/ # amepat -R /tmp/ame.out 60

Continuing Recording through background process...

root@aix1:/ # ps -aef |grep amepat

root 4587544 1 0 07:48:28 pts/0 0:25 amepat -R /tmp/ame.out 5

root@aix1:/ #

Once amepat has completed its recording, you can run the same amepat command used in Example 3-2 on page 53, except that you use the -P option to specify the recording file to be processed instead of a time interval.

Example 3-4 demonstrates how to run amepat against a recording file with the same AME expansion factor input parameters used in Example 3-2 on page 53, to compare software compression with hardware compression. The -O proc=P7+ option tells amepat to model POWER7+ hardware with the compression accelerator.

Example 3-4 Running amepat against the record file with hardware compression

root@aix1:/ # amepat -e 1.20:2.0:0.1 -O proc=P7+ -P /tmp/ame.out

Command Invoked : amepat -e 1.20:2.0:0.1 -O proc=P7+ -P /tmp/ame.out

Date/Time of invocation : Tue Oct 9 07:48:28 CDT 2012

Total Monitored time : 7 mins 21 secs

Total Samples Collected : 3

System Configuration:

---------------------

Partition Name : aix1

Processor Implementation Mode : Power7 Mode

Number Of Logical CPUs : 16

Processor Entitled Capacity : 2.00

Processor Max. Capacity : 4.00

True Memory : 8.00 GB

SMT Threads : 4

Shared Processor Mode : Enabled-Uncapped

Active Memory Sharing : Disabled

Active Memory Expansion : Enabled

Target Expanded Memory Size : 8.00 GB

Target Memory Expansion factor : 1.00

System Resource Statistics: Average Min Max

--------------------------- ----------- ----------- -----------

CPU Util (Phys. Processors) 1.41 [ 35%] 1.38 [ 35%] 1.46 [ 36%]

Virtual Memory Size (MB) 5665 [ 69%] 5665 [ 69%] 5665 [ 69%]

True Memory In-Use (MB) 5881 [ 72%] 5881 [ 72%] 5881 [ 72%]

Pinned Memory (MB) 1105 [ 13%] 1105 [ 13%] 1106 [ 14%]

File Cache Size (MB) 199 [ 2%] 199 [ 2%] 199 [ 2%]

Available Memory (MB) 2302 [ 28%] 2302 [ 28%] 2303 [ 28%]

AME Statistics: Average Min Max

--------------- ----------- ----------- -----------

AME CPU Usage (Phy. Proc Units) 0.00 [ 0%] 0.00 [ 0%] 0.00 [ 0%]

Compressed Memory (MB) 0 [ 0%] 0 [ 0%] 0 [ 0%]

Compression Ratio N/A

Active Memory Expansion Modeled Statistics :

-------------------------------------------

Modeled Implementation : Power7+

Modeled Expanded Memory Size : 8.00 GB

Achievable Compression ratio :0.00

Expansion Modeled True Modeled CPU Usage

Factor Memory Size Memory Gain Estimate

--------- ------------- ------------------ -----------

1.00 8.00 GB 0.00 KB [ 0%] 0.00 [ 0%]

1.28 6.25 GB 1.75 GB [ 28%] 0.14 [ 4%]

1.40 5.75 GB 2.25 GB [ 39%] 0.43 [ 11%]

1.46 5.50 GB 2.50 GB [ 45%] 0.57 [ 14%]

1.53 5.25 GB 2.75 GB [ 52%] 0.72 [ 18%]

1.69 4.75 GB 3.25 GB [ 68%] 1.00 [ 25%]

1.78 4.50 GB 3.50 GB [ 78%] 1.13 [ 28%]

1.89 4.25 GB 3.75 GB [ 88%] 1.13 [ 28%]

2.00 4.00 GB 4.00 GB [100%] 1.13 [ 28%]

Active Memory Expansion Recommendation:

---------------------------------------

The recommended AME configuration for this workload is to configure the LPAR

with a memory size of 5.50 GB and to configure a memory expansion factor

of 1.46. This will result in a memory gain of 45%. With this

configuration, the estimated CPU usage due to AME is approximately 0.57

physical processors, and the estimated overall peak CPU resource required for

the LPAR is 2.03 physical processors.

NOTE: amepat's recommendations are based on the workload's utilization level

during the monitored period. If there is a change in the workload's utilization

level or a change in workload itself, amepat should be run again.

The modeled Active Memory Expansion CPU usage reported by amepat is just an

estimate. The actual CPU usage used for Active Memory Expansion may be lower

or higher depending on the workload.

Note: The -O proc=value option in amepat is available in AIX 6.1 TL8, AIX 7.1 TL2, and later.

This shows that for an identical workload, POWER7+ hardware compression significantly reduces the processor overhead compared with POWER7 software compression. For example, at an expansion factor of 1.28, the modeled AME CPU usage drops from 0.41 to 0.14 physical processors.
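The size of the reduction can be quantified from the two modeled tables. The sketch below copies the Modeled CPU Usage Estimate columns from Examples 3-2 and 3-4 and computes the percentage reduction per expansion factor; the dictionary and function names are hypothetical, and the figures are amepat estimates, not measurements.

```python
# Modeled AME CPU usage (physical processors) from Examples 3-2 and 3-4.
SOFTWARE_P7 = {1.28: 0.41, 1.40: 1.16, 1.46: 1.54, 1.53: 1.92,
               1.69: 2.68, 1.78: 3.02, 1.89: 3.02, 2.00: 3.02}
HARDWARE_P7PLUS = {1.28: 0.14, 1.40: 0.43, 1.46: 0.57, 1.53: 0.72,
                   1.69: 1.00, 1.78: 1.13, 1.89: 1.13, 2.00: 1.13}


def overhead_reduction(software, hardware):
    """Percentage reduction in modeled AME CPU usage for each expansion factor."""
    return {factor: round((software[factor] - hardware[factor]) / software[factor] * 100, 1)
            for factor in software
            if factor in hardware and software[factor] > 0}


for factor, pct in overhead_reduction(SOFTWARE_P7, HARDWARE_P7PLUS).items():
    print(f"{factor:.2f}: {pct}% less modeled AME CPU")
```

For this workload the modeled reduction stays in roughly the 62-66% range across all factors, which suggests the benefit is fairly uniform rather than concentrated at high expansion factors.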

3.2.3 Suitable workloads

Before enabling AME on an LPAR to benefit a given workload, consider the workload’s memory characteristics, because they determine how much benefit AME can provide.

•The better a workload’s data can be compressed, the higher the memory expansion factor that can be achieved with AME. The amepat tool can analyze how compressible the workload’s data is. Data stored in memory that is not already compressed in any form is a good candidate for AME.

•Memory access patterns affect how well AME performs. When compressed memory is accessed, it is moved from the compressed pool to the uncompressed pool. Workloads that frequently access a small portion of their memory and rarely access the rest perform best with AME.

•Workloads that use a large portion of memory for a file system cache will not benefit substantially from AME, because file system cache memory will not be compressed.

•Workloads that have pinned memory or large pages may not experience the full benefit of AME because pinned memory and large memory pages cannot be compressed.

•Memory resource provided by AME cannot be used to create a RAMDISK in AIX.

•By default, compression of 64 KB pages is disabled, because the vmm_mpsize_support tunable is set to -1. This can be changed to enable compression of 64 KB pages. However, the overhead of decompressing 64 KB pages (each treated as 16 x 4 KB pages) outweighs the performance benefit of using medium 64 KB pages, so in most cases compressing 64 KB pages is not advised.

Note: The amepat tool provides guidance on the memory savings achievable with Active Memory Expansion.

3.2.4 Deployment

After you have run the amepat tool and chosen an expansion factor, you must modify the LPAR’s partition profile and reactivate the LPAR to activate AME for the first time. After this step, the AME expansion factor can be modified dynamically.

Figure 3-8 demonstrates how to enable active memory expansion with a starting expansion factor of 1.4. This means that there will be 8 GB of real memory, multiplied by 1.4 resulting in AIX seeing a total of 11.2 GB of expanded memory.

Figure 3-8 Enabling AME in the LPAR profile

Once the LPAR is re-activated, confirm that the settings took effect by running the lparstat -i command. This is shown in Example 3-5.

Example 3-5 Running lparstat -i

root@aix1:/ # lparstat -i

Node Name : aix1

Partition Name : 750_2_AIX1

Partition Number : 20

Type : Shared-SMT-4

Mode : Uncapped

Entitled Capacity : 2.00

Partition Group-ID : 32788

Shared Pool ID : 0

Online Virtual CPUs : 4

Maximum Virtual CPUs : 8

Minimum Virtual CPUs : 1

Online Memory : 8192 MB

Maximum Memory : 16384 MB

Minimum Memory : 4096 MB

Variable Capacity Weight : 128

Minimum Capacity : 0.50

Maximum Capacity : 8.00

Capacity Increment : 0.01

Maximum Physical CPUs in system : 16

Active Physical CPUs in system : 16

Active CPUs in Pool : 16

Shared Physical CPUs in system : 16

Maximum Capacity of Pool : 1600

Entitled Capacity of Pool : 1000

Unallocated Capacity : 0.00

Physical CPU Percentage : 50.00%

Unallocated Weight : 0

Memory Mode : Dedicated-Expanded

Total I/O Memory Entitlement : -

Variable Memory Capacity Weight : -

Memory Pool ID : -

Physical Memory in the Pool : -

Hypervisor Page Size : -

Unallocated Variable Memory Capacity Weight: -

Unallocated I/O Memory entitlement : -

Memory Group ID of LPAR : -

Desired Virtual CPUs : 4

Desired Memory : 8192 MB

Desired Variable Capacity Weight : 128

Desired Capacity : 2.00

Target Memory Expansion Factor : 1.25

Target Memory Expansion Size : 10240 MB

Power Saving Mode : Disabled

root@aix1:/ #

The output of Example 3-5 on page 57 shows the following:

•The memory mode is Dedicated-Expanded. This means that we are not using Active Memory Sharing (AMS), but we are using Active Memory Expansion (AME).

•The desired memory is 8192 MB. This is the true memory allocated to the LPAR.

•The AME expansion factor is 1.25.

•The size of the expanded memory pool is 10240 MB.
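These checks are easy to script when you review many LPARs. The Python sketch below parses the "Name : value" lines of `lparstat -i` and confirms that the expanded size matches true memory times the factor. It recognizes only the Dedicated-Expanded mode shown in this example. The 1% tolerance is our own assumption, and the function names are hypothetical.

```python
from __future__ import annotations

# A subset of the lparstat -i output from Example 3-5
SAMPLE = """\
Memory Mode : Dedicated-Expanded
Desired Memory : 8192 MB
Target Memory Expansion Factor : 1.25
Target Memory Expansion Size : 10240 MB
"""


def parse_lparstat_i(text: str) -> dict[str, str]:
    """Turn the 'Name : value' lines of lparstat -i into a dictionary."""
    fields = {}
    for line in text.splitlines():
        if ":" in line:
            key, value = line.split(":", 1)  # split on the first colon only
            fields[key.strip()] = value.strip()
    return fields


def ame_summary(fields: dict[str, str]) -> dict[str, object]:
    """Summarize the AME-related fields discussed in the text."""
    mode = fields["Memory Mode"]
    true_mb = int(fields["Desired Memory"].split()[0])
    factor = float(fields["Target Memory Expansion Factor"])
    expanded_mb = int(fields["Target Memory Expansion Size"].split()[0])
    return {
        "dedicated_expanded": mode == "Dedicated-Expanded",  # AME on, AMS off
        "true_mb": true_mb,
        "factor": factor,
        "expanded_mb": expanded_mb,
        # The expanded size should be (approximately) true memory x factor
        "consistent": abs(true_mb * factor - expanded_mb) <= 0.01 * expanded_mb,
    }


print(ame_summary(parse_lparstat_i(SAMPLE)))
```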

After AME is activated, the workload may change, so we suggest running amepat regularly to check whether the expansion factor currently set is still the one amepat recommends. Example 3-6 shows a portion of the amepat output, with the amepat tool's recommendation being 1.38.

Example 3-6 Running amepat after AME is enabled for comparison

Expansion    Modeled True      Modeled              CPU Usage
Factor       Memory Size       Memory Gain          Estimate
---------    -------------     ------------------   -----------
1.25         8.00 GB           2.00 GB [ 25%]       0.00 [ 0%]   << CURRENT CONFIG
1.30         7.75 GB           2.25 GB [ 29%]       0.00 [ 0%]
1.38         7.25 GB           2.75 GB [ 38%]       0.38 [ 10%]
1.49         6.75 GB           3.25 GB [ 48%]       1.15 [ 29%]
1.54         6.50 GB           3.50 GB [ 54%]       1.53 [ 38%]
1.67         6.00 GB           4.00 GB [ 67%]       2.29 [ 57%]
1.74         5.75 GB           4.25 GB [ 74%]       2.68 [ 67%]
1.82         5.50 GB           4.50 GB [ 82%]       3.01 [ 75%]
2.00         5.00 GB           5.00 GB [100%]       3.01 [ 75%]
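The first three columns of the amepat table are related by simple arithmetic. The expanded memory size is held at 10 GB (8 GB × 1.25). Each modeled true memory size, divided into that 10 GB, lands within 0.01 of the printed factor. The modeled gain is the difference between the two, expressed as a percentage of true memory. The Python sketch below recomputes the gain column from the table. It is our reconstruction from the numbers shown, not amepat's documented algorithm.

```python
from __future__ import annotations

EXPANDED_GB = 10.0  # current configuration: 8.00 GB true memory x 1.25

# (expansion factor, modeled true memory size in GB), taken from Example 3-6
ROWS = [(1.25, 8.00), (1.30, 7.75), (1.38, 7.25), (1.49, 6.75), (1.54, 6.50),
        (1.67, 6.00), (1.74, 5.75), (1.82, 5.50), (2.00, 5.00)]


def modeled_gain(expanded_gb: float, true_gb: float) -> tuple[float, int]:
    """Return (memory gain in GB, gain as a whole percentage of true memory)."""
    gain_gb = expanded_gb - true_gb
    return gain_gb, round(100 * gain_gb / true_gb)


for factor, true_gb in ROWS:
    gain_gb, pct = modeled_gain(EXPANDED_GB, true_gb)
    # expanded / true lands within 0.01 of the factor that amepat prints
    assert abs(EXPANDED_GB / true_gb - factor) < 0.01
    print(f"{factor:4.2f}  {true_gb:5.2f} GB  {gain_gb:5.2f} GB [{pct:3d}%]")
```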

Once AME is enabled, you can change the expansion factor dynamically using Dynamic Logical Partitioning (DLPAR), for example by reducing the amount of true memory and increasing the expansion factor.

Figure 3-9 demonstrates changing the AME expansion factor to 1.38 and reducing the amount of real memory to 7.25 GB.

Figure 3-9 Dynamically modify the expansion factor and true memory

After the change, you can now see the memory configuration using the lparstat -i command as demonstrated in Example 3-5 on page 57. The lsattr and vmstat commands can also be used to display this information. This is shown in Example 3-7 on page 60.

Example 3-7 Using lsattr and vmstat to display memory size

root@aix1:/ # lsattr -El mem0
ent_mem_cap                I/O memory entitlement in Kbytes            False
goodsize       7424        Amount of usable physical memory in Mbytes  False
mem_exp_factor 1.38        Memory expansion factor                     False
size           7424        Total amount of physical memory in Mbytes   False
var_mem_weight             Variable memory capacity weight             False
root@aix1:/ # vmstat |grep 'System configuration'
System configuration: lcpu=16 mem=10240MB ent=2.00
root@aix1:/ #

You can see that the true memory is 7424 MB, the expansion factor is 1.38, and the expanded memory pool size is 10240 MB.
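The three values can be cross-checked against each other: 7424 MB × 1.38 is about 10245 MB, within 0.1% of the 10240 MB that vmstat reports. The Python sketch below parses the relevant lines of Example 3-7 to perform that check. The function names are hypothetical.

```python
from __future__ import annotations

import re

# Lines copied from Example 3-7
LSATTR = """\
goodsize 7424 Amount of usable physical memory in Mbytes False
mem_exp_factor 1.38 Memory expansion factor False
size 7424 Total amount of physical memory in Mbytes False
"""
VMSTAT = "System configuration: lcpu=16 mem=10240MB ent=2.00"


def lsattr_values(text: str, names: set[str]) -> dict[str, str]:
    """Return the value column for selected attributes of lsattr -El mem0."""
    values = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] in names:
            values[parts[0]] = parts[1]
    return values


def vmstat_config(line: str) -> dict[str, str]:
    """Extract the numeric part of each key=value pair (mem=10240MB -> '10240')."""
    return dict(re.findall(r"(\w+)=([\d.]+)", line))


attrs = lsattr_values(LSATTR, {"size", "mem_exp_factor"})
true_mb = int(attrs["size"])
factor = float(attrs["mem_exp_factor"])
expanded_mb = int(vmstat_config(VMSTAT)["mem"])
# True memory x factor should be close to the expanded size vmstat reports
print(true_mb, factor, expanded_mb, round(true_mb * factor))
```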

Note: Additional information about AME usage can be found at:

3.2.5 Tunables

There are a number of AME tunables that can be modified with the vmo command; Table 3-6 describes them. The default values are suitable for most workloads, and these tunables should be modified only under the guidance of IBM support. Typically, the only value that needs tuning is the AME expansion factor.

Table 3-6 AME tunables

Tunable | Description
ame_minfree_mem | If processes are being delayed waiting for compressed memory to become available, increase ame_minfree_mem to improve response time. Note that the value used for ame_minfree_mem must be at least 257 KB less than ame_maxfree_mem.
ame_maxfree_mem | Excessive shrink and grow operations can occur if the compressed memory pool size tends to change significantly, which can happen if a workload's working set size changes frequently. Increase this tunable to raise the threshold at which the VMM shrinks a compressed memory pool, and thus reduce the overall number of shrink and grow operations.
ame_cpus_per_pool | Lower ratios can be used to reduce contention on compressed memory pools. This ratio is not the only factor used to determine the number of compressed memory pools (the amount of memory and its layout are also considered), so certain changes to this ratio may not change the number of compressed memory pools at all.
ame_min_ucpool_size | If the compressed memory pool grows too large, there may not be enough space in memory to house uncompressed memory, which can slow down application performance due to excessive use of the compressed memory pool. Increase this value to limit the size of the compressed memory pool and make more uncompressed pages available.

Example 3-8 shows the default and possible values for each of the AME vmo tunables.

Example 3-8 AME tunables

root@aix1:/ # vmo -L ame_minfree_mem
NAME                      CUR    DEF    BOOT   MIN    MAX    UNIT           TYPE
     DEPENDENCIES
--------------------------------------------------------------------------------
ame_minfree_mem           n/a    8M     8M     64K    4095M  bytes             D
     ame_maxfree_mem
--------------------------------------------------------------------------------
root@aix1:/ # vmo -L ame_maxfree_mem
NAME                      CUR    DEF    BOOT   MIN    MAX    UNIT           TYPE
     DEPENDENCIES
--------------------------------------------------------------------------------
ame_maxfree_mem           n/a    24M    24M    320K   4G     bytes             D
     ame_minfree_mem
--------------------------------------------------------------------------------
root@aix1:/ # vmo -L ame_cpus_per_pool
NAME                      CUR    DEF    BOOT   MIN    MAX    UNIT           TYPE
     DEPENDENCIES
--------------------------------------------------------------------------------
ame_cpus_per_pool         n/a    8      8      1      1K     processors        B
--------------------------------------------------------------------------------
root@aix1:/ # vmo -L ame_min_ucpool_size
NAME                      CUR    DEF    BOOT   MIN    MAX    UNIT           TYPE
     DEPENDENCIES
--------------------------------------------------------------------------------
ame_min_ucpool_size       n/a    0      0      5      95     % memory          D
--------------------------------------------------------------------------------
root@aix1:/ #

3.2.6 Monitoring

When using Active Memory Expansion, in addition to monitoring the processor usage of AME, it is also important to monitor paging space and memory deficit. Memory deficit is the amount of memory that cannot fit into the compressed pool because AME cannot reach the target expansion factor, which happens when the expansion factor is set too high.

The lparstat -c command can be used to display specific information related to AME. This is shown in Example 3-9.

Example 3-9 Running lparstat -c

root@aix1:/ # lparstat -c 5 5
System configuration: type=Shared mode=Uncapped mmode=Ded-E smt=4 lcpu=64 mem=14336MB tmem=8192MB psize=7 ent=2.00
%user  %sys %wait %idle physc %entc lbusy   app  vcsw phint %xcpu xphysc   dxm
----- ----- ----- ----- ----- ----- ----- ----- ----- ----- ----- ------ -----
 66.3  13.4   8.8  11.5  5.10 255.1  19.9  0.00 18716  6078   1.3 0.0688     0
 68.5  12.7  10.7   8.0  4.91 245.5  18.7  0.00 17233  6666   2.3 0.1142     0
 69.7  13.2  13.1   4.1  4.59 229.5  16.2  0.00 15962  8267   1.0 0.0481     0
 73.8  14.7   9.2   2.3  4.03 201.7  34.6  0.00 19905  5135   0.5 0.0206     0
 73.5  15.9   7.9   2.8  4.09 204.6  28.7  0.00 20866  5808   0.3 0.0138     0
root@aix1:/ #

The items of interest in the lparstat -c output are the following:

mmode This is how the memory of our LPAR is configured. In this case Ded-E means the memory is dedicated, meaning AMS is not active, and AME is enabled.

mem This is the expanded memory size.

tmem This is the true memory size.

physc This is how many physical processor cores our LPAR is consuming.

%xcpu This is the percentage of the overall processor usage that AME is consuming.

xphysc This is the amount of physical processor cores that AME is consuming.

dxm This is the memory deficit, which is the number of 4 KB pages that cannot fit into the expanded memory pool. If this number is greater than zero, it is likely that the expansion factor is too high, and paging activity will be present on the AIX system.
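To track these columns over a longer interval, you can summarize captured lparstat -c output. The Python sketch below maps each interval row of Example 3-9 onto the header names. It then reports the average physc and xphysc and the largest dxm value, and warns if any deficit appears. The function names are hypothetical.

```python
from __future__ import annotations

from statistics import mean

# Column header and interval rows copied from Example 3-9
HEADER = "%user %sys %wait %idle physc %entc lbusy app vcsw phint %xcpu xphysc dxm"
ROWS = """\
66.3 13.4 8.8 11.5 5.10 255.1 19.9 0.00 18716 6078 1.3 0.0688 0
68.5 12.7 10.7 8.0 4.91 245.5 18.7 0.00 17233 6666 2.3 0.1142 0
69.7 13.2 13.1 4.1 4.59 229.5 16.2 0.00 15962 8267 1.0 0.0481 0
73.8 14.7 9.2 2.3 4.03 201.7 34.6 0.00 19905 5135 0.5 0.0206 0
73.5 15.9 7.9 2.8 4.09 204.6 28.7 0.00 20866 5808 0.3 0.0138 0
"""


def parse_samples(header: str, rows: str) -> list[dict[str, float]]:
    """Map each interval row onto the column names of the header."""
    names = header.split()
    return [dict(zip(names, map(float, line.split())))
            for line in rows.splitlines() if line.strip()]


def ame_report(samples: list[dict[str, float]]) -> dict[str, float]:
    """Average the LPAR and AME processor use and report the worst deficit."""
    return {
        "avg_physc": mean(s["physc"] for s in samples),
        "avg_xphysc": mean(s["xphysc"] for s in samples),
        "max_dxm": max(s["dxm"] for s in samples),
    }


report = ame_report(parse_samples(HEADER, ROWS))
print(report)
if report["max_dxm"] > 0:
    print("Memory deficit seen: the expansion factor is probably too high")
```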

The vmstat -sc command also provides some AME-specific information, including the number of compressed pool page-ins and page-outs. This is important to check because such activity could be a sign of memory deficit and of the expansion factor being set too high. Example 3-10 gives a demonstration of running the vmstat -sc command.

Example 3-10 Running vmstat -sc

root@aix1:/ # vmstat -sc
5030471 total address trans. faults
72972 page ins
24093 page outs
0 paging space page ins
0 paging space page outs
0 total reclaims
3142095 zero filled pages faults
66304 executable filled pages faults
0 pages examined by clock
0 revolutions of the clock hand
0 pages freed by the clock
132320 backtracks
0 free frame waits
0 extend XPT waits
23331 pending I/O waits
97065 start I/Os
42771 iodones
88835665 cpu context switches
253502 device interrupts
4793806 software interrupts
92808260 decrementer interrupts
68395 mpc-sent interrupts
68395 mpc-receive interrupts
528426 phantom interrupts
0 traps
85759689 syscalls
0 compressed pool page ins
0 compressed pool page outs
root@aix1:/ #
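The vmstat -s counters are absolute counts accumulated since system initialization. A zero tells you there has been no such activity since boot, but recent activity is better judged by comparing two snapshots. The Python sketch below parses the counters and computes the difference for the paging and compressed pool counters. The function names, and the choice of which counters to watch, are our own.

```python
from __future__ import annotations

# A few lines from Example 3-10
SAMPLE = """\
72972 page ins
24093 page outs
0 paging space page ins
0 paging space page outs
0 compressed pool page ins
0 compressed pool page outs
"""
WATCHED = ("paging space page ins", "paging space page outs",
           "compressed pool page ins", "compressed pool page outs")


def vmstat_counters(text: str) -> dict[str, int]:
    """Parse '<count> <description>' lines from vmstat -s style output."""
    counters = {}
    for line in text.splitlines():
        count, _, label = line.strip().partition(" ")
        if count.isdigit():
            counters[label.strip()] = int(count)
    return counters


def watched_deltas(before: dict[str, int], after: dict[str, int]) -> dict[str, int]:
    """Counters are cumulative, so subtract an earlier snapshot from a later one."""
    return {key: after.get(key, 0) - before.get(key, 0) for key in WATCHED}


snapshot = vmstat_counters(SAMPLE)
print({key: snapshot[key] for key in WATCHED})
```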

The vmstat -vc command also provides some information specific to AME. This command displays information related to the size of the compressed pool and an indication of whether AME is able to achieve the expansion factor that has been set. Items of interest include the following:

•Compressed pool size

•Percentage of true memory used for the compressed pool

•Free pages in the compressed pool (in 4 KB pages)

•Target AME expansion factor

•The AME expansion factor that is currently being achieved

Example 3-11 demonstrates running the vmstat -vc command.

Example 3-11 Running vmstat -vc

root@aix1:/ # vmstat -vc
3670016 memory pages
1879459 lruable pages
880769 free pages
8 memory pools
521245 pinned pages
95.0 maxpin percentage
3.0 minperm percentage
80.0 maxperm percentage
1.8 numperm percentage
33976 file pages
0.0 compressed percentage
0 compressed pages
1.8 numclient percentage
80.0 maxclient percentage
33976 client pages
0 remote pageouts scheduled
0 pending disk I/Os blocked with no pbuf
1749365 paging space I/Os blocked with no psbuf
1972 filesystem I/Os blocked with no fsbuf
1278 client filesystem I/Os blocked with no fsbuf
0 external pager filesystem I/Os blocked with no fsbuf
500963 Compressed Pool Size
23.9 percentage of true memory used for compressed pool
61759 free pages in compressed pool (4K pages)
1.8 target memory expansion factor
1.8 achieved memory expansion factor
75.1 percentage of memory used for computational pages
root@aix1:/ #
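The AME lines in this output can be cross-checked. The Python sketch below recomputes the compressed pool percentage and compares the target and achieved expansion factors. It makes two assumptions. First, Compressed Pool Size is taken to be a count of 4 KB pages. Second, true memory is taken as the 8192 MB reported as tmem in Example 3-9; the 3670016 memory pages here correspond to the 14336 MB expanded size shown there. Both assumptions reproduce the 23.9% that vmstat prints. The 0.05 tolerance on the expansion factor is hypothetical.

```python
from __future__ import annotations

PAGES_PER_MB = 256  # 4 KB pages per MB

# AME lines from Example 3-11
SAMPLE = """\
500963 Compressed Pool Size
23.9 percentage of true memory used for compressed pool
61759 free pages in compressed pool (4K pages)
1.8 target memory expansion factor
1.8 achieved memory expansion factor
"""


def vmstat_values(text: str) -> dict[str, float]:
    """Parse '<number> <description>' lines from vmstat -v style output."""
    values = {}
    for line in text.splitlines():
        number, _, label = line.strip().partition(" ")
        try:
            values[label.strip()] = float(number)
        except ValueError:
            continue  # not a '<number> <label>' line
    return values


def check_ame(values: dict[str, float], true_memory_mb: int,
              tolerance: float = 0.05) -> dict[str, object]:
    """Recompute the pool percentage and compare target and achieved factors."""
    pool_pct = 100 * values["Compressed Pool Size"] / (true_memory_mb * PAGES_PER_MB)
    shortfall = (values["target memory expansion factor"]
                 - values["achieved memory expansion factor"])
    return {"pool_pct_of_true": round(pool_pct, 1), "factor_met": shortfall <= tolerance}


print(check_ame(vmstat_values(SAMPLE), true_memory_mb=8192))
```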

Note: Additional information about AME performance can be found at:

3.2.7 Oracle batch scenario

We performed a test on an Oracle batch workload to determine the memory saving benefit of AME. The LPAR started with 120 GB of memory assigned and 24 virtual processors (VP) allocated.

Over the course of three tests, we increased the AME expansion factor and reduced the amount of memory with the same workload.

Figure 3-10 provides an overview of the three tests carried out.

Figure 3-10 AME test on an Oracle batch workload

The baseline batch run, without AME, completed in 124 minutes. The batch time grew slightly in the following two tests, but the amount of true memory allocated was significantly reduced.

Table 3-7 provides a summary of the test results.

Table 3-7 Oracle batch test results

Test run | Processor (VPs) | Memory assigned | Runtime | Avg. processor
Test 0 | 24 | 120 GB (AME disabled) | 124 min | 16.3
Test 1 | 24 | 60 GB (AME expansion 2.00) | 127 min | 16.8
Test 2 | 24 | 40 GB (AME expansion 3.00) | 134 min | 17.5

Conclusion: With three times less memory, AME increased the batch duration by less than 10%, with a processor overhead of about 7%.
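The percentages in the conclusion follow directly from Table 3-7. The Python sketch below computes, for each run relative to the AME-disabled baseline, three values: the runtime increase, the increase in average processor consumption, and the memory reduction factor. Test 2 comes out at about 8.1% longer runtime, 7.4% more processor, and one third of the memory. The function name is hypothetical.

```python
from __future__ import annotations

# Test run: (memory assigned in GB, runtime in minutes, avg. processor), Table 3-7
TESTS = {
    "Test 0": (120, 124, 16.3),  # AME disabled (baseline)
    "Test 1": (60, 127, 16.8),   # AME expansion 2.00
    "Test 2": (40, 134, 17.5),   # AME expansion 3.00
}


def relative_to_baseline(tests: dict[str, tuple[float, float, float]],
                         baseline: str = "Test 0") -> dict[str, tuple[float, float, float]]:
    """Return (runtime increase %, processor increase %, memory reduction factor)."""
    base_mem, base_runtime, base_cpu = tests[baseline]
    result = {}
    for name, (mem, runtime, cpu) in tests.items():
        result[name] = (
            round(100 * (runtime / base_runtime - 1), 1),
            round(100 * (cpu / base_cpu - 1), 1),
            round(base_mem / mem, 1),
        )
    return result


for name, values in relative_to_baseline(TESTS).items():
    print(name, values)
```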

3.2.8 Oracle OLTP scenario

We performed a test on an Oracle OLTP workload in a scenario where, with 100 users, the LPAR had less than 1% free memory. We enabled Active Memory Expansion while keeping the real memory the same, increasing the expanded memory pool so that the LPAR could support additional users.

The objective of the test was to increase the number of users and TPS without affecting the application’s response time.

Three tests were performed: the first with AME turned off, the second with an expansion factor of 1.25, providing 25% additional memory through compression, and the third with an expansion factor of 1.6, providing 60% additional memory through compression. The amount of true memory assigned to the LPAR remained at 8 GB during all three tests.

Figure 3-11 provides an overview of the three tests.

Figure 3-11 AME test on an Oracle OLTP workload

In the test, our LPAR had 8 GB of real memory and the Oracle SGA was sized at 5 GB.

With 100 concurrent users and AME disabled, 99% of the 8 GB of assigned memory was consumed. When the AME expansion factor was set to 1.25, the LPAR supported 300 users, with 0.1 processor cores consumed by AME overhead.

At this point of the test, we ran the amepat tool to obtain its recommendation for our workload. Example 3-12 shows a subset of the amepat report, where our current expansion factor is 1.25 and amepat recommended an expansion factor of 1.54.

Example 3-12 Output of amepat during test 1

Expansion    Modeled True      Modeled              CPU Usage
Factor       Memory Size       Memory Gain          Estimate
---------    -------------     ------------------   -----------
1.03         9.75 GB           256.00 MB [ 3%]      0.00 [ 0%]
1.18         8.50 GB           1.50 GB [ 18%]       0.00 [ 0%]
1.25         8.00 GB           2.00 GB [ 25%]       0.01 [ 0%]   << CURRENT CONFIG
1.34         7.50 GB           2.50 GB [ 33%]       0.98 [ 6%]
1.54         6.50 GB           3.50 GB [ 54%]       2.25 [ 14%]
1.67         6.00 GB           4.00 GB [ 67%]       2.88 [ 18%]
1.82         5.50 GB           4.50 GB [ 82%]       3.51 [ 22%]
2.00         5.00 GB           5.00 GB [100%]       3.74 [ 23%]

It is important to note that the amepat tool's objective is to reduce the amount of real memory assigned to the LPAR by using compression based on the expansion factor. This explains why amepat's estimate of 2.25 processor cores of overhead (for a factor of 1.54) is higher than the 1.65 cores of overhead that we actually measured (at a factor of 1.6): we did not reduce our true memory.

Table 3-8 provides a summary of our test results.

Table 3-8 OLTP results

Test run | Processor | Memory assigned | TPS | No. of users | Avg CPU
Test 0 | VP = 16 | 8 GB (AME disabled) | 325 | 100 | 1.7 (AME=0)
Test 1 | VP = 16 | 8 GB (AME expansion 1.25) | 990 | 300 | 4.3 (AME=0.10)
Test 2 | VP = 16 | 8 GB (AME expansion 1.60) | 1620 | 500 | 7.5 (AME=1.65)

Conclusion: With the same memory footprint, AME enabled our AIX LPAR to support 5 times more users and 5 times more TPS on our Oracle OLTP workload.
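The "5 times" figures in the conclusion can be derived from Table 3-8. The Python sketch below computes four values for each run: the expanded memory (8 GB of true memory times the factor), the TPS and user multiples relative to Test 0, and the share of the average CPU consumed by AME. Treating the AME-disabled baseline as a factor of 1.0 is our own simplification for the arithmetic, and the function name is hypothetical.

```python
from __future__ import annotations

TRUE_MEMORY_GB = 8  # unchanged in all three tests

# (test run, expansion factor, TPS, users, avg CPU, AME CPU) from Table 3-8.
# Test 0 had AME disabled; we treat that as a factor of 1.0 for the arithmetic.
TESTS = [
    ("Test 0", 1.00, 325, 100, 1.7, 0.00),
    ("Test 1", 1.25, 990, 300, 4.3, 0.10),
    ("Test 2", 1.60, 1620, 500, 7.5, 1.65),
]


def summarize(tests: list[tuple[str, float, int, int, float, float]]) -> dict[str, dict]:
    """Compare each run with the first (AME-disabled) run."""
    _, _, base_tps, base_users, _, _ = tests[0]
    summary = {}
    for name, factor, tps, users, cpu, ame_cpu in tests:
        summary[name] = {
            "expanded_gb": round(TRUE_MEMORY_GB * factor, 2),
            "tps_x": round(tps / base_tps, 1),
            "users_x": round(users / base_users, 1),
            "ame_pct_of_cpu": round(100 * ame_cpu / cpu),
        }
    return summary


for name, row in summarize(TESTS).items():
    print(name, row)
```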

3.2.9 Using amepat to suggest the correct LPAR size

During our testing with AME, we observed cases where the recommendations of the amepat tool could be biased by an incorrect LPAR size. We found that if the memory allocated to an LPAR far exceeded the amount consumed by the running workload, the ratio suggested by amepat was unrealistic. Such cases become apparent when running successive iterations of amepat: each suggested ratio contradicts the previous result.

To illustrate this point, Example 3-13 lists a portion of the amepat output from a 5-minute sample of an LPAR running a WebSphere Message Broker workload. The LPAR was configured with 8 GB of memory.

Example 3-13 Initial amepat iteration for an 8 GB LPAR

The recommended AME configuration for this workload is to configure the LPAR
with a memory size of 1.00 GB and to configure a memory expansion factor
of 8.00. This will result in a memory gain of 700%. With this
configuration, the estimated CPU usage due to AME is approximately 0.21
physical processors, and the estimated overall peak CPU resource required for
the LPAR is 2.50 physical processors.

The LPAR was configured with 8 GB of memory and with an AME expansion ratio of 1.0. The LPAR was reconfigured based on the recommendation and reactivated to apply the change. The workload was restarted and amepat took another 5-minute sample. Example 3-14 lists the second recommendation.

Example 3-14 Second amepat iteration

WARNING: This LPAR currently has a memory deficit of 6239 MB.
A memory deficit is caused by a memory expansion factor that is too
high for the current workload. It is recommended that you reconfigure
the LPAR to eliminate this memory deficit. Reconfiguring the LPAR
with one of the recommended configurations in the above table should
eliminate this memory deficit.

The recommended AME configuration for this workload is to configure the LPAR
with a memory size of 3.50 GB and to configure a memory expansion factor
of 2.29. This will result in a memory gain of 129%. With this
configuration, the estimated CPU usage due to AME is approximately 0.00
physical processors, and the estimated overall peak CPU resource required for
the LPAR is 2.25 physical processors.

The LPAR was once again reconfigured, reactivated, and the process repeated. Example 3-15 shows the third recommendation.

Example 3-15 Third amepat iteration

The recommended AME configuration for this workload is to configure the LPAR
with a memory size of 1.00 GB and to configure a memory expansion factor
of 8.00. This will result in a memory gain of 700%. With this
configuration, the estimated CPU usage due to AME is approximately 0.25
physical processors, and the estimated overall peak CPU resource required for
the LPAR is 2.54 physical processors.

We stopped this particular test cycle at this point. The LPAR was reconfigured with 8 GB of dedicated memory and with Active Memory Expansion disabled (its checkbox cleared). The first amepat recommendation was now more realistic, as shown in Example 3-16.

Example 3-16 First amepat iteration for the second test cycle

The recommended AME configuration for this workload is to configure the LPAR
with a memory size of 4.50 GB and to configure a memory expansion factor
of 1.78. This will result in a memory gain of 78%. With this
configuration, the estimated CPU usage due to AME is approximately 0.00
physical processors, and the estimated overall peak CPU resource required for
the LPAR is 2.28 physical processors.

However, reconfiguring the LPAR and repeating the process produced a familiar result, as shown in Example 3-17.

Example 3-17 Second amepat iteration for second test cycle

The recommended AME configuration for this workload is to configure the LPAR
with a memory size of 1.00 GB and to configure a memory expansion factor
of 8.00. This will result in a memory gain of 700%. With this
configuration, the estimated CPU usage due to AME is approximately 0.28
physical processors, and the estimated overall peak CPU resource required for
the LPAR is 2.56 physical processors.

The Message Broker workload being used had been intentionally configured to provide a small footprint; this was to provide an amount of load on the LPAR without being excessively demanding on processor or RAM. We reviewed the other sections in the amepat reports to see if there was anything to suggest why the recommendations were unbalanced.

Because the LPAR was originally configured with 8 GB of RAM, all the AME projections were based on that size. However, from reviewing all the reports, we saw that the workload consumed nowhere near 8 GB of RAM. The System Resource Statistics section details memory usage during the sample period. Example 3-18 on page 68 lists the details from the initial report, part of which was shown in Example 3-13 on page 66.

Example 3-18 Average system resource statistics from initial amepat iteration

System Resource Statistics:      Average
---------------------------    -----------
CPU Util (Phys. Processors)    1.82 [ 46%]
Virtual Memory Size (MB)       2501 [ 31%]
True Memory In-Use (MB)        2841 [ 35%]
Pinned Memory (MB)             1097 [ 13%]
File Cache Size (MB)            319 [ 4%]
Available Memory (MB)          5432 [ 66%]

From Example 3-18, we can conclude that only around a third of the allocated RAM is being consumed. However, in the extreme cases where the LPAR was configured with less than 2 GB of actual RAM, the allocation was too small for the workload to run healthily.
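The observation above comes down to a ratio: True Memory In-Use divided by the allocated memory. The Python sketch below computes that ratio as amepat presents it. It flags an LPAR as possibly oversized for amepat purposes when less than half of its memory is in use. The 50% threshold is our own illustrative assumption, not an amepat rule or IBM guidance, and the function names are hypothetical.

```python
def in_use_pct(true_in_use_mb: float, allocated_mb: float) -> int:
    """Percentage of the allocated memory actually in use, as amepat reports it."""
    return round(100 * true_in_use_mb / allocated_mb)


def looks_oversized(true_in_use_mb: float, allocated_mb: float,
                    threshold_pct: int = 50) -> bool:
    """Hypothetical rule of thumb: flag LPARs using less than threshold_pct of memory."""
    return in_use_pct(true_in_use_mb, allocated_mb) < threshold_pct


print(looks_oversized(2841, 8192))  # Example 3-18, 8 GB LPAR: 35% in use
print(looks_oversized(2705, 4096))  # Example 3-19, 4 GB LPAR: 66% in use
```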

Taking the usage profile into consideration, the LPAR was reconfigured to have 4 GB of dedicated RAM (no AME). The initial amepat recommendations were now more realistic (Example 3-19).

Example 3-19 Initial amepat results for a 4-GB LPAR

System Resource Statistics:      Average
---------------------------    -----------
CPU Util (Phys. Processors)    1.84 [ 46%]
Virtual Memory Size (MB)       2290 [ 56%]
True Memory In-Use (MB)        2705 [ 66%]
Pinned Memory (MB)             1096 [ 27%]
File Cache Size (MB)            392 [ 10%]
Available Memory (MB)          1658 [ 40%]

Active Memory Expansion Recommendation:
---------------------------------------
The recommended AME configuration for this workload is to configure the LPAR
with a memory size of 2.50 GB and to configure a memory expansion factor
of 1.60. This will result in a memory gain of 60%. With this
configuration, the estimated CPU usage due to AME is approximately 0.00
physical processors, and the estimated overall peak CPU resource required for
the LPAR is 2.28 physical processors.

So the recommendation of 2.5 GB is still larger than the quantity actually consumed, but the amount of free memory is much more reasonable. Reconfiguring the LPAR and repeating the process now produced more productive results. Example 3-20 lists the expansion factor on which amepat settled.

Example 3-20 Final amepat recommendation for a 4-GB LPAR

Active Memory Expansion Recommendation:
---------------------------------------
The recommended AME configuration for this workload is to configure the LPAR
with a memory size of 2.00 GB and to configure a memory expansion factor
of 1.50. This will result in a memory gain of 50%. With this
configuration, the estimated CPU usage due to AME is approximately 0.13
physical processors, and the estimated overall peak CPU resource required for
the LPAR is 2.43 physical processors.

Note: Once the LPAR had been configured to the size shown in Example 3-20 on page 68, the amepat recommendations were consistent for additional iterations. So if successive amepat recommendations contradict each other, we suggest reviewing the size of your LPAR.
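One way to spot contradictory iterations is to record the memory size recommended by each amepat run and check whether the recommendations reverse direction. The Python sketch below does this. It is a heuristic of our own, not an amepat feature, and the function name is hypothetical. It would not catch a single large jump, such as the move from 4.50 GB in Example 3-16 to 1.00 GB in Example 3-17.

```python
from __future__ import annotations


def recommendations_oscillate(memory_gb: list[float]) -> bool:
    """Return True if successive recommended memory sizes reverse direction.

    Repeated identical recommendations are ignored; only actual changes count.
    """
    changes = [b - a for a, b in zip(memory_gb, memory_gb[1:]) if b != a]
    return any((first > 0) != (second > 0)
               for first, second in zip(changes, changes[1:]))


# Recommended memory sizes from Examples 3-13, 3-14 and 3-15 (8 GB LPAR)
print(recommendations_oscillate([1.00, 3.50, 1.00]))
# Example 3-19, then Example 3-20 repeated, as the note describes
print(recommendations_oscillate([2.50, 2.00, 2.00, 2.00]))
```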

3.2.10 Expectations of AME

When considering AME and using amepat, remember to take into consideration what data is used to calculate any recommendations:

•Memory size (allocated to LPAR)

•Memory usage

•Type of data stored in memory

•Processor usage

However, amepat has no understanding of application throughput or time sensitivity. Its recommendations aim to provide the optimal use of memory allocation at the expense of some processor cycles. amepat cannot base recommendations on optimal application performance, because it has no way to derive such an attribute from AIX.

In the scenario discussed in 3.2.9, “Using amepat to suggest the correct LPAR size” on page 66, the concluding stable recommendation provided 96% of the application throughput of the original 4 GB dedicated configuration. However, an intermediate recommendation actually produced 101%, whereas the scenario discussed in 3.2.8, “Oracle OLTP scenario” on page 64 resulted in more throughput with the same footprint.

Different workloads will produce different results, both in terms of resource efficiency and application performance. Our previous sections illustrate some of the implications, which can be used to set expectations for your own workloads based on your requirements.

3.3 Active Memory Sharing (AMS)

Active Memory Sharing is a feature of Power Systems that allows better memory utilization, similar to the processor optimization of Shared Processor Partitions (SPLPAR). Just as with processors, instead of dedicating memory to partitions, memory can be assigned as shared. The PowerVM hypervisor manages real memory across multiple AMS-enabled partitions, distributing memory from the Shared Memory Pool to partitions based on their workload demand.

AMS requirements:

•Enterprise version of PowerVM

•POWER6: AIX 6.1 TL 3 or later, VIOS 2.1.0.1-FP21

•POWER7: AIX 6.1 TL 4 or later, VIOS 2.1.3.10-FP23

For additional information about Active Memory Sharing, refer to IBM PowerVM Virtualization Active Memory Sharing, REDP-4470 at:

3.4 Active Memory Deduplication (AMD)

A system might have a considerable amount of duplicated information stored in its memory. Active Memory Deduplication allows the PowerVM hypervisor to dynamically map identical partition memory pages to a single physical memory page. AMD requires the Active Memory Sharing (AMS) feature, and it uses processor cycles to identify duplicated pages, with hints taken directly from the operating system.

The Active Memory Deduplication feature requires the following minimum components:

•POWER7

•PowerVM Enterprise edition

•System firmware level 740

•AIX Version 6: AIX 6.1 TL7, or later

•AIX Version 7: AIX 7.1 TL1 SP1, or later

For more information about Active Memory Deduplication, refer to:

3.5 Virtual I/O Server (VIOS) sizing

In this section we highlight some suggested sizing guidelines and tools to ensure that a VIOS on a POWER7 system is allocated adequate resources to deliver optimal performance.

It is essential to continually monitor the resource utilization of the VIOS and review the hardware assignments as workloads change.

3.5.1 VIOS processor assignment

The VIOS uses processor cycles to deliver I/O to client logical partitions. This includes running the VIO server's own instance of the AIX operating system and processing shared Ethernet traffic, as well as shared disk I/O traffic, including virtual SCSI and N_Port ID Virtualization (NPIV).

Shared Ethernet Adapters (SEAs) backed by 10 Gb physical adapters in particular can consume a large amount of processor resources on the VIOS, depending on the workload. High-speed 8 Gb Fibre Channel adapters in environments with a heavy disk I/O workload also consume large amounts of processor cycles.

In most cases, to provide maximum flexibility and performance capability, we suggest configuring the VIOS partition with the settings described in Table 3-9.

Table 3-9 Suggested Virtual I/O Server processor settings

Setting | Suggestion
Processing mode | There are two processing modes: shared or dedicated. In most cases, the suggestion is to use shared mode, which takes advantage of PowerVM and enables the VIOS to use additional processor capacity during peak workloads.
Entitled capacity | Ideally, set the entitled capacity to the average processing units that the VIOS partition is using. If your VIOS constantly consumes beyond 100% of its entitled capacity, the suggestion is to increase the capacity entitlement to match the average consumption.
Desired virtual processors | Set the number of virtual processors to the number of cores that the VIOS consumes during peak workload, plus some headroom.
Sharing mode | The suggested sharing mode is uncapped. This enables the VIOS partition to consume additional processor cycles from the shared pool when it is under load.
Weight | The VIOS partition is sensitive to processor allocation: when the VIOS is starved of resources, all virtual client logical partitions are affected. The VIOS should typically have a higher weight than all of the other logical partitions in the system. The weight ranges from 0 to 255; the suggested value for the Virtual I/O Server is in the upper part of the range.
Processor compatibility mode | The suggestion is to configure the VIOS partition profile with the default compatibility mode. This allows the LPAR to run in whichever mode is best suited to the level of VIOS code installed.
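The entitled capacity and virtual processor guidance above can be turned into starting values from measured physc samples. The Python sketch below computes the entitled capacity as the average consumption rounded up to the 0.01 capacity increment seen in Example 3-5. It computes the virtual processor count as peak consumption plus headroom, rounded up to whole cores. The 20% default headroom and the sample values are hypothetical. The arithmetic is done in integer hundredths so that floating-point rounding cannot push a result up by 0.01.

```python
from __future__ import annotations


def ceil_div(numerator: int, denominator: int) -> int:
    """Integer division rounded up."""
    return -(-numerator // denominator)


def suggest_vios_cpu(physc_samples: list[float], headroom_pct: int = 20) -> tuple[float, int]:
    """Suggest (entitled capacity, virtual processors) from physc samples.

    Entitled capacity: average consumption, rounded up to 0.01 units.
    Virtual processors: peak consumption plus headroom_pct, rounded up to
    whole cores. The 20% default headroom is an assumption, not an IBM figure.
    """
    hundredths = [round(sample * 100) for sample in physc_samples]
    entitled = ceil_div(sum(hundredths), len(hundredths)) / 100
    virtual_cpus = ceil_div(max(hundredths) * (100 + headroom_pct), 100 * 100)
    return entitled, max(1, virtual_cpus)


# Hypothetical physc samples for a VIOS taken during a busy period
print(suggest_vios_cpu([0.80, 1.10, 1.40, 0.90]))
```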

At the time of writing, processor folding is not supported for VIOS partitions. With processor folding, the virtual processors of an uncapped partition that are not in use are “folded” so that their capacity is available for use by other logical partitions.

It is important to size the entitled capacity and virtual processors of the VIOS partition appropriately so that no processor cycles are wasted on the system.

When VIOS is installed from a base of 2.1.0.13-FP23 or later, processor folding is already disabled by default. If the VIOS has been upgraded or migrated from an older version, then processor folding may remain enabled.

The schedo command can be used to query whether processor folding is enabled, as shown in Example 3-21.

Example 3-21 How to check whether processor folding is enabled

$ oem_setup_env
# schedo -o vpm_fold_policy
vpm_fold_policy = 3

If the value is anything other than 4, then processor folding needs to be disabled.
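When you check several VIOS partitions, this rule is easy to apply to captured schedo output. The Python sketch below extracts vpm_fold_policy from text such as Example 3-21 and reports whether folding still needs to be disabled. How you collect the output from each VIOS is not covered here, and the function name is hypothetical.

```python
import re


def folding_needs_disabling(schedo_output: str) -> bool:
    """Return True if vpm_fold_policy, as printed by schedo -o, is not 4."""
    match = re.search(r"vpm_fold_policy\s*=\s*(\d+)", schedo_output)
    if match is None:
        raise ValueError("vpm_fold_policy not found in the schedo output")
    return int(match.group(1)) != 4


print(folding_needs_disabling("vpm_fold_policy = 3"))  # output from Example 3-21
```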

Processor folding is discussed in 4.1.3, “Processor folding” on page 123.

Example 3-22 demonstrates how to disable processor folding. This change is dynamic, so no reboot of the VIOS LPAR is required.

Example 3-22 How to disable processor folding

$ oem_setup_env
# schedo -p -o vpm_fold_policy=4
Setting vpm_fold_policy to 4 in nextboot file
Setting vpm_fold_policy to 4

3.5.2 VIOS memory assignment

The VIOS also has some specific memory requirements, which need to be monitored to ensure that the LPAR has sufficient memory.

The VIOS Performance Advisor, which is covered in 5.9, “VIOS performance advisor tool and the part command” on page 271, provides recommendations regarding sizing and configuration of a running VIOS.

However, for earlier VIOS releases or as a starting point, the following guidelines can be used to estimate the required memory to assign to a VIOS LPAR:

•2 GB of memory for every 16 processor cores in the machine. For most VIOS this should be a workable base to start from.

•For more complex implementations, start with a minimum allocation of 768 MB. Then add increments based on the quantities of the following adapters:

– For each Logical Host Ethernet Adapter (LHEA) add 512 MB, and an additional 102 MB per configured port.

– For each non-LHEA 1 Gb Ethernet port add 102 MB.

– For each non-LHEA 10 Gb port add 512 MB.

– For each 8 Gb Fibre Channel adapter port add 512 MB.

– For each NPIV Virtual Fibre Channel adapter add 140 MB.

– For each Virtual Ethernet adapter add 16 MB.

In the cases above, even if a given adapter is idle or not yet assigned to an LPAR (in the case of NPIV), still base your sizing on the intended scaling.
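The two guidelines above can be expressed as a small calculator. In the Python sketch below, the per-adapter increments are exactly those listed above. The class and function names are hypothetical. Rounding a partial block of 16 cores up to a full 2 GB is our own interpretation of the "every 16 processor cores" rule.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ViosAdapters:
    """Adapter counts for one VIOS, sized for the intended scaling."""
    lhea_adapters: int = 0         # Logical Host Ethernet Adapters
    lhea_ports: int = 0            # configured LHEA ports
    eth_1gb_ports: int = 0         # non-LHEA 1 Gb Ethernet ports
    eth_10gb_ports: int = 0        # non-LHEA 10 Gb ports
    fc_8gb_ports: int = 0          # 8 Gb Fibre Channel adapter ports
    npiv_adapters: int = 0         # NPIV virtual Fibre Channel adapters
    virtual_eth_adapters: int = 0  # virtual Ethernet adapters


def simple_estimate_mb(machine_cores: int) -> int:
    """2 GB for every 16 cores; rounding partial blocks up is our assumption."""
    return 2048 * -(-machine_cores // 16)


def detailed_estimate_mb(a: ViosAdapters) -> int:
    """768 MB base plus the per-adapter increments from the guideline list."""
    return (768
            + 512 * a.lhea_adapters + 102 * a.lhea_ports
            + 102 * a.eth_1gb_ports
            + 512 * a.eth_10gb_ports
            + 512 * a.fc_8gb_ports
            + 140 * a.npiv_adapters
            + 16 * a.virtual_eth_adapters)


# Hypothetical VIOS: 2 x 10 Gb ports, 4 x 8 Gb FC ports, 20 NPIV adapters,
# and 10 virtual Ethernet adapters
vios = ViosAdapters(eth_10gb_ports=2, fc_8gb_ports=4,
                    npiv_adapters=20, virtual_eth_adapters=10)
print(detailed_estimate_mb(vios))  # 6800
print(simple_estimate_mb(32))      # 4096
```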

3.5.3 Number of VIOS

Depending on your environment, the number of VIOS on your POWER7 system will vary. In some cases, hardware constraints allow only a single VIOS to be deployed, such as non-HMC-managed systems using Integrated Virtualization Manager (IVM).

Note: With version V7R7.6.0.0 and later, an HMC can be used to manage POWER processor-based blades.

As a general best practice, deploy Virtual I/O Servers in redundant pairs. This provides both additional availability and additional performance for the logical partitions using virtualized I/O. First, you can shut down one VIOS for maintenance while the second VIOS continues to serve I/O to client logical partitions. Second, if a single VIOS has an unexpected outage, the other VIOS can continue to serve I/O and keep the client logical partitions running.

Configuring VIOS in this manner is covered in IBM PowerVM Virtualization Introduction and Configuration, SG24-7940-04.

In most cases, a single pair of VIOS on a POWER7 system is sufficient. However, there may be situations where a second or even third pair is required.

Following are some situations where additional pairs of Virtual I/O servers may be a consideration on larger machines where there are additional resources available:

•Due to heavy workload, a pair of VIOS may be deployed for shared Ethernet and a second pair may be deployed for disk I/O using a combination of N_Port ID Virtualization (NPIV), Virtual SCSI, or shared storage pools (SSP).

•Due to different types of workloads, there may be a pair of VIOS deployed for each type of workload, to cater to multitenancy situations or situations where workloads must be totally separated by policy.

•There may be production and nonproduction LPARs on a single POWER7 frame with a pair of VIOS for production and a second pair for nonproduction. This would enable both workload separation and the ability to test applying fixes in the nonproduction pair of VIOS before applying them to the production pair. Obviously, where a single pair of VIOS are deployed, they can still be updated one at a time.

Note: Typically a single pair of VIOS per Power system will be sufficient, so long as the pair is provided with sufficient processor, memory, and I/O resources.

3.5.4 VIOS updates and drivers

New enhancements and fixes are added to the VIOS code on a regular basis, so it is important to keep your Virtual I/O servers up to date by checking the IOS level regularly and updating it when needed. Example 3-23 demonstrates how to check the VIOS level.

Example 3-23 How to check your VIOS level

```
$ ioslevel
2.2.2.0
$
```
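When a feature depends on a minimum VIOS level (for example, shared storage pools require VIOS 2.2.1.3 or later), it can help to compare the value printed by ioslevel against that minimum in a script. The following is a minimal sketch, assuming the level is a dot-separated list of numbers as in Example 3-23; ioslevel_at_least is a hypothetical helper, not a VIOS command.

```sh
#!/bin/sh
# ioslevel_at_least HAVE WANT (hypothetical helper)
# Succeeds (exit status 0) if the dotted VIOS level HAVE is greater than or
# equal to WANT. Fields are compared numerically, left to right, so
# 2.2.10.0 is newer than 2.2.9.0. Missing trailing fields count as 0.
ioslevel_at_least() {
    awk -v have="$1" -v want="$2" 'BEGIN {
        n = split(have, h, ".")
        m = split(want, w, ".")
        max = (n > m) ? n : m
        for (i = 1; i <= max; i++) {
            hv = (i <= n) ? h[i] + 0 : 0
            wv = (i <= m) ? w[i] + 0 : 0
            if (hv > wv) exit 0
            if (hv < wv) exit 1
        }
        exit 0
    }'
}
```

For example, `ioslevel_at_least 2.2.2.0 2.2.1.3 && echo "level supports shared storage pools"` prints the message for the level shown in Example 3-23. Numeric, field-by-field comparison is used instead of a plain string comparison because a string comparison would wrongly rank 2.2.10.0 below 2.2.9.0.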

For optimal disk performance, it is also important to install the AIX device driver for your disk storage system on the VIOS. Example 3-24 illustrates a VIOS on which the storage device drivers are not installed. In this case, AIX uses a generic device definition because no definition for this disk type exists in the Object Data Manager (ODM).

Example 3-24 VIOS without correct device drivers installed

```
$ lsdev -type disk
name     status      description
hdisk0   Available   MPIO Other FC SCSI Disk Drive
hdisk1   Available   MPIO Other FC SCSI Disk Drive
hdisk2   Available   MPIO Other FC SCSI Disk Drive
hdisk3   Available   MPIO Other FC SCSI Disk Drive
hdisk4   Available   MPIO Other FC SCSI Disk Drive
hdisk5   Available   MPIO Other FC SCSI Disk Drive
$
```
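On a VIOS with many LUNs, a quick way to find disks that still use the generic definition is to filter the lsdev output for the description shown in Example 3-24. The following is a minimal sketch; generic_fc_disks is a hypothetical helper that reads lsdev output on standard input (for example, output saved to a file) and assumes the disk name is in the first column, as above.

```sh
#!/bin/sh
# generic_fc_disks (hypothetical helper)
# Reads `lsdev -type disk` output on standard input and prints the names of
# disks that use the generic "Other FC SCSI Disk Drive" definition.
# Only lines whose first field starts with "hdisk" are considered, which
# skips the "name status description" header line.
generic_fc_disks() {
    awk '$1 ~ /^hdisk/ && /Other FC SCSI Disk Drive/ { print $1 }'
}
```

For example, `generic_fc_disks < lsdev_disk.txt` prints hdisk0 through hdisk5 for the output in Example 3-24, and nothing once the correct driver is installed. The file name lsdev_disk.txt is only an illustration.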

In this case, the correct device driver must be installed so that AIX handles I/O on the disk device optimally. For IBM DS6000™, DS8000®, Storwize V7000, and SAN Volume Controller, this driver is SDDPCM. For third-party storage systems, obtain the device drivers from the storage vendor, for example HDLM for Hitachi or PowerPath for EMC.

Example 3-25 verifies that the SDDPCM filesets for IBM SAN Volume Controller LUNs are installed, and that the disks now use the correct ODM definition (MPIO FC 2145, where 2145 is the SAN Volume Controller machine type).

Example 3-25 Virtual I/O server with SDDPCM driver installed

```
$ oem_setup_env
# lslpp -l devices.fcp.disk.ibm.mpio.rte
  Fileset                      Level  State      Description
  ----------------------------------------------------------------------------
Path: /usr/lib/objrepos
  devices.fcp.disk.ibm.mpio.rte
                             1.0.0.23  COMMITTED  IBM MPIO FCP Disk Device
# lslpp -l devices.sddpcm*
  Fileset                      Level  State      Description
  ----------------------------------------------------------------------------
Path: /usr/lib/objrepos
  devices.sddpcm.61.rte      2.6.3.0  COMMITTED  IBM SDD PCM for AIX V61

Path: /etc/objrepos
  devices.sddpcm.61.rte      2.6.3.0  COMMITTED  IBM SDD PCM for AIX V61
# exit
$ lsdev -type disk
name     status      description
hdisk0   Available   MPIO FC 2145
hdisk1   Available   MPIO FC 2145
hdisk2   Available   MPIO FC 2145
hdisk3   Available   MPIO FC 2145
hdisk4   Available   MPIO FC 2145
hdisk5   Available   MPIO FC 2145
$
```
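The fileset check in Example 3-25 can also be scripted from the root shell obtained with oem_setup_env. The following is a minimal sketch; fileset_committed is a hypothetical helper that reads `lslpp -l` output on standard input and succeeds if the named fileset is listed in the COMMITTED state. It assumes the layout shown above, including the case where a long fileset name is printed on its own line and its level and state appear on the next line.

```sh
#!/bin/sh
# fileset_committed NAME (hypothetical helper)
# Reads `lslpp -l` output on standard input; exit status 0 if fileset NAME
# is listed with state COMMITTED, 1 otherwise.
#  - If NAME is alone on a line (wrapped output), the level and state are
#    expected on the next line: field 1 is the level, field 2 the state.
#  - Otherwise field 1 is the name, field 2 the level, field 3 the state.
fileset_committed() {
    awk -v want="$1" '
        pending { pending = 0; if ($2 == "COMMITTED") found = 1; next }
        $1 == want && NF == 1 { pending = 1; next }
        $1 == want && $3 == "COMMITTED" { found = 1 }
        END { exit (found ? 0 : 1) }'
}
```

For example, `lslpp -l devices.sddpcm.61.rte | fileset_committed devices.sddpcm.61.rte && echo "SDDPCM installed"`. The fileset name here matches the VIOS in Example 3-25; it differs with the AIX level on which your VIOS is based.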

Note: IBM System Storage® device drivers are free to download for your IBM Storage System. Third-party vendors may supply device drivers at an additional charge.

3.6 Using Virtual SCSI, Shared Storage Pools, and N_Port ID Virtualization

PowerVM and VIOS can share physical resources among multiple logical partitions, making efficient use of those physical resources. For disk I/O, several methods are available to do this.

In this section, we provide a brief overview and comparison of the different I/O device virtualizations available in PowerVM. The topics covered in this section are as follows:

•Virtual SCSI

•Virtual SCSI using Shared Storage Pools

•N_Port ID Virtualization (NPIV)

Live Partition Mobility (LPM) is supported with all three implementations. Where required, these technologies can be combined on the same machine to virtualize different devices.

Note: This section does not cover in detail how to tune disk and adapter devices in each scenario. This is covered in 4.3, “I/O device tuning” on page 140.

3.6.1 Virtual SCSI

Virtual SCSI maps devices that are allocated to one or more VIOS to a client logical partition using the SCSI protocol. Any device drivers that the device (such as a LUN) requires are installed on the Virtual I/O server, and the client logical partition sees a generic virtual SCSI device.

On POWER5, this was the only way to share disk storage devices through VIOS, and it is still commonly used in POWER6 and POWER7 environments.

The advantages and performance considerations of using Virtual SCSI are as follows.

Advantages

•It enables file-backed optical devices to be presented to a client logical partition as a virtual CD-ROM; that is, an ISO image residing on the VIO server is mounted in the client logical partition as a virtual CD-ROM.

•It does not require specific FC adapters or fabric switch configuration.

•It can virtualize internal disk.

•It can map disks from a storage device that does not support the 520-byte sector format to an IBM i LPAR as supported generic SCSI disks.

•It does not require any disk device drivers to be installed on the client logical partitions; only the Virtual I/O server requires disk device drivers.
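The file-backed optical capability in the first advantage above can be sketched as a short sequence of VIOS commands. This is a minimal dry-run sketch that prints the commands rather than executing them (set DRY_RUN=0 to execute). It assumes the mkrep, mkvopt, mkvdev -fbo, and loadopt padmin commands as we understand them; verify the flags against the VIOS command reference for your ioslevel. The repository location (rootvg), its 10G size, the image name, vhost0, and vtopt0 are hypothetical values.

```sh
#!/bin/sh
# Dry-run sketch: present an ISO image on the VIOS to a client LPAR as a
# virtual CD-ROM. With DRY_RUN unset or 1, commands are only printed.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# present_iso IMAGE ISO_FILE VHOST VTD  (all values hypothetical)
present_iso() {
    image=$1 iso_file=$2 vhost=$3 vtd=$4
    run mkrep -sp rootvg -size 10G                  # virtual media repository, once per VIOS
    run mkvopt -name "$image" -file "$iso_file" -ro # import the ISO image, read-only
    run mkvdev -fbo -vadapter "$vhost"              # file-backed optical device on the vhost
    run loadopt -disk "$image" -vtd "$vtd"          # load the image into the virtual CD-ROM
}
```

For example, `present_iso aix_dvd1 /home/padmin/aix_dvd1.iso vhost0 vtopt0` prints the four commands for review. The name of the virtual target device (vtopt0 here) is the one reported when the file-backed optical device is created.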

Performance considerations

•Disk device and adapter tuning are required on both the VIO server and the client logical partition. If a tunable is set on the VIOS but not in the AIX client, there can be a significant performance penalty.

•When multiple VIO servers are in use, I/O to a given disk cannot be load balanced across them: a virtual SCSI disk performs I/O operations through only one VIO server at a time.

•If virtual SCSI CD-ROM devices are mapped to a client logical partition, all devices on that VSCSI adapter must use a block size of 256 KB (0x40000).
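As a check that the hexadecimal value in the last item matches the stated size, assuming KB here means 1024 bytes:

```latex
\texttt{0x40000} = 4 \times 16^{4} = 4 \times 65\,536 = 262\,144 \text{ bytes},
\qquad \frac{262\,144}{1024} = 256 \text{ KB}
```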

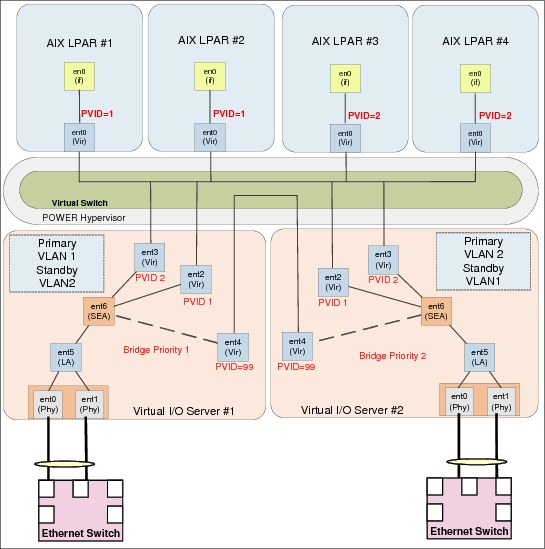

Figure 3-12 on page 76 describes a basic Virtual SCSI implementation consisting of four AIX LPARs and two VIOS. The process to present a storage Logical Unit (LUN) to the LPAR as a virtual disk is as follows:

1. Assign the storage LUN to both VIO servers and detect them using cfgdev.

2. Apply any tunables such as the queue depth and maximum transfer size on both VIOS.

3. Set the LUN’s reserve policy to no_reserve to enable I/O to enable both VIOS to map the device.

4. Map the device to the desired client LPAR.

5. Configure the device in AIX using cfgmgr and apply the same tunables as defined on the VIOS such as queue depth and maximum transfer size.

Figure 3-12 Virtual SCSI (VSCSI) overview
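The five provisioning steps above can be sketched as a dry-run script that prints, rather than runs, the commands for one LUN (set DRY_RUN=0 to execute). This is a minimal sketch under stated assumptions: the VIOS commands (cfgdev, chdev -dev ... -attr, mkvdev -vdev ... -vadapter) are entered as padmin on each VIOS, and the client commands (cfgmgr, chdev -l ... -a) as root on the AIX LPAR. The names hdisk4, vhost0, and hdisk1, and the values queue_depth=32 and max_transfer=0x100000, are hypothetical placeholders, not recommendations. The same LUN can have a different hdisk name on each VIOS.

```sh
#!/bin/sh
# Dry-run sketch of the Virtual SCSI provisioning steps for one LUN.
# With DRY_RUN unset or 1, each command is printed instead of executed.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# Steps 1-4, repeated on each VIOS (padmin commands).
# vios_provision DISK VHOST QUEUE_DEPTH MAX_TRANSFER  (values hypothetical)
vios_provision() {
    disk=$1 vhost=$2 qd=$3 mt=$4
    run cfgdev                                                    # step 1: detect the new LUN
    run chdev -dev "$disk" -attr queue_depth="$qd" max_transfer="$mt"  # step 2: tunables
    run chdev -dev "$disk" -attr reserve_policy=no_reserve        # step 3: allow both VIOS to map it
    run mkvdev -vdev "$disk" -vadapter "$vhost"                   # step 4: map to the client's vhost
}

# Step 5, on the client LPAR (AIX commands, as root).
# client_configure DISK QUEUE_DEPTH MAX_TRANSFER  (values hypothetical)
client_configure() {
    disk=$1 qd=$2 mt=$3
    run cfgmgr                                                    # discover the virtual SCSI disk
    run chdev -l "$disk" -a queue_depth="$qd" -a max_transfer="$mt"   # match the VIOS tunables
}
```

For example, `vios_provision hdisk4 vhost0 32 0x100000` on each VIOS, followed by `client_configure hdisk1 32 0x100000` on the client. Because the disk is not yet in use, the sketch omits the -perm (VIOS) and -P (AIX) flags, which record a change in the ODM only and defer it until the next restart.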

Note: This section does not cover how to configure Virtual SCSI. For details on the configuration steps, refer to IBM PowerVM Virtualization Introduction and Configuration, SG24-7940-04.

3.6.2 Shared storage pools

Shared storage pools build on the virtual SCSI provisioning method, except that the VIOS are added to a cluster, and one or more external disk devices (LUNs) are assigned to the VIOS participating in the cluster. The LUNs assigned to the cluster must be protected by back-end RAID for availability. Shared storage pools have been available since VIOS 2.2.1.3.

A shared storage pool is then created from the disks assigned to the cluster of VIO servers, and virtual disks can be provisioned from the pool.

Shared storage pools are ideal where the overhead of SAN administration for Power systems needs to be reduced: large SAN volumes can simply be allocated to all the VIO servers, and the Power system administrator then performs the provisioning tasks for individual LPARs.

Shared storage pools also provide thin provisioning and snapshot capabilities, which can add value if your external storage system does not have these capabilities.

The advantages and performance considerations related to the use of shared storage pools are:

Advantages

•There can be one or more large pools of storage from which virtual disks can be provisioned. This enables the administrator to see how much storage has been provisioned and how much is free in the pool.

•All the virtual disks that are created from a shared storage pool are striped across all the disks in the shared storage pool, reducing the likelihood of hot spots in the pool. The virtual disks are spread over the pool in 64 MB chunks.

•Shared storage pools use Cluster Aware AIX (CAA) technology for clustering, which is also used in IBM PowerHA, the IBM clustering product for AIX. This also means that a LUN must be presented to all participating VIO servers in the cluster for exclusive use as the CAA repository.

•Thin provisioning and snapshots are included in shared storage pools.

•Management is simplified: volumes can be created and mapped from either the VIOS command line or the Hardware Management Console (HMC) GUI.
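To get a feel for the 64 MB striping described above, the following sketch computes how many chunks a virtual disk occupies once fully allocated, and how those chunks would divide across the pool disks if they were spread perfectly evenly. The even spread is an idealization and an assumption; the actual placement is managed by the VIOS. The sketch also takes 1 GB as 1024 MB, and for a thin-provisioned disk, as we understand it, chunks are only allocated as data is written, so the result is the fully written case. ssp_chunk_spread is a hypothetical helper.

```sh
#!/bin/sh
# ssp_chunk_spread SIZE_GB NDISKS (hypothetical helper)
# Idealized arithmetic: number of 64 MB chunks for a fully allocated virtual
# disk of SIZE_GB, and an even split of those chunks across NDISKS pool disks.
ssp_chunk_spread() {
    size_gb=$1 ndisks=$2
    chunk_mb=64
    chunks=$(( (size_gb * 1024 + chunk_mb - 1) / chunk_mb ))  # round up to whole chunks
    per_disk=$(( chunks / ndisks ))                           # chunks every disk receives
    remainder=$(( chunks % ndisks ))                          # disks that receive one extra chunk
    echo "chunks=$chunks per_disk=$per_disk remainder=$remainder"
}
```

For a 100 GB virtual disk in a pool built from a hypothetical four LUNs, `ssp_chunk_spread 100 4` prints `chunks=1600 per_disk=400 remainder=0`.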

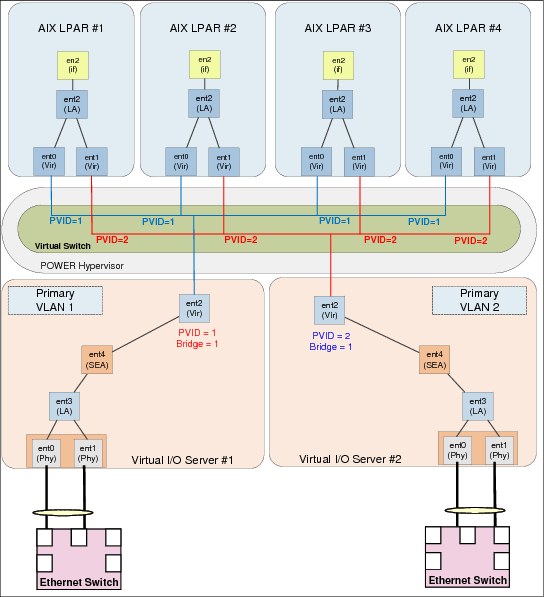

Figure 3-13 on page 78 shows the creation of a virtual disk from a shared storage pool. The following is a summary of our setup and the provisioning steps:

•Two VIOS, p24n16 and p24n17, are participating in the cluster.

•The name of the cluster is bruce.

•The name of the shared storage pool is ssp_pool0 and it is 400 GB in size.

•The virtual disk we are creating is 100 GB in size and called aix2_vdisk1.

•The disk is mapped via virtual SCSI to the logical partition 750_2_AIX2, which is partition ID 21.

•750_2_AIX2 has a virtual SCSI adapter mapped to each of the VIO servers, p24n16 and p24n17.

•The virtual disk is thin provisioned.

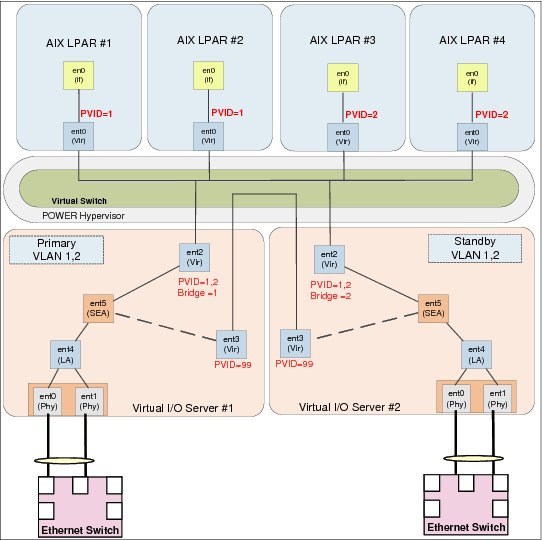

Figure 3-13 Shared storage pool virtual disk creation
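The setup summarized for Figure 3-13 can also be performed from the VIOS command line. The following is a dry-run sketch that prints the commands (set DRY_RUN=0 to execute). The cluster, pool, VIOS, and virtual disk names come from our example. The repository and pool disks (hdisk2, hdisk3, hdisk4) and the vhost adapters are hypothetical, and the cluster, mkbdsp, and lssp flags reflect our understanding of the padmin commands; verify them against the VIOS command reference for your level. On the VIOS levels we are familiar with, mkbdsp creates thin-provisioned virtual disks by default, and running it without a size maps an existing virtual disk.

```sh
#!/bin/sh
# Dry-run sketch of the shared storage pool setup in Figure 3-13.
# With DRY_RUN unset or 1, each command is printed instead of executed.
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# On p24n16: create cluster "bruce" with a CAA repository disk and the pool
# disks for ssp_pool0, then add the second VIOS. Disk names are hypothetical.
create_cluster() {
    run cluster -create -clustername bruce -repopvs hdisk2 \
        -spname ssp_pool0 -sppvs hdisk3 hdisk4 -hostname p24n16
    run cluster -addnode -clustername bruce -hostname p24n17
}

# On p24n16: create the 100 GB virtual disk and map it to the vhost adapter
# that serves 750_2_AIX2 on this VIOS ($1, hypothetical).
create_vdisk() {
    run mkbdsp -clustername bruce -sp ssp_pool0 100G -bd aix2_vdisk1 -vadapter "$1"
}

# On p24n17: map the existing virtual disk (no size given) to this VIOS's
# vhost adapter for 750_2_AIX2 ($1, hypothetical).
map_vdisk() {
    run mkbdsp -clustername bruce -sp ssp_pool0 -bd aix2_vdisk1 -vadapter "$1"
}

# Show pool capacity and free space.
show_pool() {
    run lssp -clustername bruce
}
```

For example, run `create_cluster` and `create_vdisk vhost0` on p24n16, then `map_vdisk vhost0` on p24n17, and check the pool with `show_pool`. Mapping the same virtual disk through both VIOS gives 750_2_AIX2 a path through each of them, as in our setup.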