Testing the environment

In this chapter we provide information on how to establish test plans, test the different components of your environment at the operating system level, how to interpret information reported by the analysis tools, how to spot bottlenecks, and how to manage your workload. This information can be used by those who are either building a system from scratch, applying all the concepts from the previous chapters, or those who are looking for an improvement of a production environment.

We help you establish and implement tests of the environment, and give you some guidance to understand the results of the changes implemented.

The following topics are discussed in this chapter:

5.1 Understand your environment

Submitting an environment for performance analysis is often a complex task. It usually requires a good knowledge of the workloads running, system capacity, technologies available, and it involves a lot of tuning and tests.

To understand the limits of the different components of the environment is crucial to establish baselines and targets and set expectations.

5.1.1 Operating system consistency

While keeping AIX levels up to date is an obvious concern, keeping things consistent is often overlooked. One LPAR might have been updated with a given APAR or fix, but how to be sure it was also applied to other LPARs of the same levels? Not maintaining consistency is a way of introducing performance problems, as some LPARs can remain backlevel or unpatched. There are many ways to track levels of installed AIX across LPARs. One often overlooked option is provided by NIM.

The niminv command allows administrators to gather, conglomerate, compare and download fixes based on installation inventory of NIM objects. It provides an easy method to ensure systems are at an expected level.

niminv can use any NIM object that contains installation information. Examples include standalone client, SPOT, lpp_source and mksysb objects.

Using niminv has the following benefits:

•Hardware installation inventory is gathered alongside the software installation inventory.

•Data files are saved with a naming convention that is easily recognizable.

Example 5-1 illustrates using niminv to compare one NIM client (aix13) with another (aix19). For each NIM client there will be a column. The value will either be listed “same” if the level for the file set is the same for the target as the base, and “-” if missing and the actual level if existing but different (higher or lower).

Example 5-1 Using niminv with invcom to compare installed software levels on NIM clients

root@nim1: /usr/sbin/niminv -o invcmp -a targets=‘aix13,aix19' -a base=‘aix13' -a location='/tmp/123‘

Comparison of aix13 to aix13:aix19 saved to /tmp/123/comparison.aix13.aix13:aix19.120426230401.

Return Status = SUCCESS

root@nim1: cat /tmp/123/comparison.aix13.aix13:aix19.120426230401

# name base 1 2

----------------------------------------- ---------- ---------- ----------

AIX-rpm-7.1.0.1-1 7.1.0.1-1 same same

...lines omitted...

bos.64bit 7.1.0.1 same same

bos.acct 7.1.0.0 same same

bos.adt.base 7.1.0.0 same same

bos.adt.include 7.1.0.1 same same

bos.adt.lib 7.1.0.0 same same

...lines omitted...

bos.rte 7.1.0.1 same same

...lines omitted...

base = comparison base = aix13

1 = aix13

2 = aix19

'-' = name not in system or resource

same = name at same level in system or resource

5.1.2 Operating system tunable consistency

In environments where tunables beyond the defaults are required, it is important to maintain an overview of what is applied across an environment, and to ensure that tunables are consistent and not removed. Also, to keep track of what is applied as a reminder in case some only need to be temporarily enabled.

System tunable consistency check can be done using the AIX Runtime Expert (ARTEX). The existing samples in the /etc/security/artex/samples directory can be used to create a new profile with the artexget command, which can be customized. The corresponding catalog in /etc/security/artex/catalogs is referred to for retrieving and setting values for that parameter.

|

Note: The artexget and artexset commands execute the <GET> and <SET> sections, respectively, in the cfgMethod of the Catalog which is defined for a particular parameter.

|

Example 5-2 shows a simple profile, which can be used with ARTEX tools.

Example 5-2 AIX Runtime Expert sample profile

root@nim1: cat /etc/security/artex/samples/aixpertProfile.xml

<?xml version="1.0" encoding="UTF-8"?>

<Profile origin="reference" readOnly="true" version="2.0.0">

<Catalog id="aixpertParam" version="2.0">

<Parameter name="securitysetting"/>

</Catalog>

</Profile>

Example 5-3 shows a simple catalog that can be used with the ARTEX tools with a corresponding profile. Note the Get and Set stanzas and Command and Filter attributes, which can be modified and used to create customized catalogues to extend the capabilities of ARTEX.

Example 5-3 AIX Runtime Expert sample catalog

root@nim1: cat /etc/security/artex/catalogs/aixpertParam.xml

<?xml version="1.0" encoding="UTF-8"?>

<Catalog id="aixpertParam" version="2.0" priority="-1000">

<ShortDescription><NLSCatalog catalog="artexcat.cat" setNum="2" msgNum="1">System security level configuration.</NLSCatalog></ShortDescription>

<Description><NLSCatalog catalog="artexcat.cat" setNum="2" msgNum="2">The aixpert command sets a variety of system configuration settings to enable the desired security level.</NLSCatalog></Description>

<ParameterDef name="securitysetting" type="string">

<Get type="current">

<Command>/etc/security/aixpert/bin/chk_report</Command>

<Filter>tr -d '

'</Filter>

</Get>

<Get type="nextboot">

<Command>/etc/security/aixpert/bin/chk_report</Command>

<Filter>tr -d '

'</Filter>

</Get>

<Set type="permanent">

<Command>/usr/sbin/aixpert -l %a</Command>

<Argument>`case %v1 in 'HLS') echo 'h';; 'MLS') echo 'm';; 'LLS') echo 'l';; 'DLS') echo 'd';; 'SCBPS') echo 's';; *) echo 'd';; esac`</Argument>

</Set>

</ParameterDef>

</Catalog>

One method to employ these capabilities is to use NIM to perform an ARTEX operation on a group of systems (Example 5-4); this would provide a centralized solution to GET, SET and compare (DIFF) the attribute values across the group.

Example 5-4 Using NIM script to run AIX Runtime Expert commands on NIM clients

root@nim1: cat /export/scripts/artex_diff

root@nim1: artexget -r -f txt /etc/security/artex/samples/viosdevattrProfile.xml

root@nim1: nim -o define -t script -a server=master -a location=/export/scripts/artex_diff artex_diff

root@nim1: nim -o allocate -a script=artex_diff nimclient123

root@nim1: nim -o cust nimclient123

Component name Parameter name Parameter value Additional Action

----------------- ------------------- ----------------- -----------------------

viosdevattrParam reserve_policy no_reserve NEXTBOOT

viosdevattrParam queue_depth 3 NEXTBOOT

...lines omitted...

5.1.3 Size that matters

The world is dynamic. Everything changes all the time and businesses react in the same way. When you do performance analysis on the environment, you will eventually find that the problem is not how your systems are configured, but instead how they are sized. The initial sizing for a specific workload may not fit your business needs after a while. You may find out that some of your infrastructure is undersized or even oversized for different workloads and you have to be prepared to change.

5.1.4 Application requirements

Different applications are built for different workloads. An application server built for a demand of ten thousand users per month may not be ready to serve one hundred thousand users. This is a typical scenario where no matter how you change your infrastructure environment, you do not see real benefits of the changes you have made unless your application is also submitted to analysis.

5.1.5 Different workloads require different analysis

One of the most important factors when you analyze your systems is that you have a good understanding of the different types of workloads that you are running. Having that knowledge will lead you to more objective work and concise results.

5.1.6 Tests are valuable

Each individual infrastructure component has its own limitations, and understanding these different limits is never easy. For example, similar network adapters show different throughputs depending on other infrastructure components like the number of switches, routers, firewalls, and their different configurations. Storage components are not different, they behave differently depending on different factors.

•Individual tests

A good way to understand the infrastructure limits is by testing the components individually so that you know what to expect from each of them.

•Integration tests

Integration tests are good to get an idea about how the infrastructures interact and how that affects the overall throughput.

|

Note: Testing your network by transmitting packets between two ends separated by a complex infrastructure, for example, can tell you some data about your environment throughput but may not tell you much about your individual network components.

|

5.2 Testing the environment

This section offers some suggestions on how to proceed with testing your environment. By doing so systematically you should be able to determine whether the changes made based on the concepts presented throughout this book have beneficial results on your environment.

A good thing to keep in mind is that not every system or workload will benefit from the same tuning.

5.2.1 Planning the tests

When the environment is going to be tested, it is good practice to establish goals and build a test plan.

The following topics are important things to be considered when building a test plan:

•Infrastructure

Knowledge about the type of machines, their capacity, how they are configured, partition sizing, resource allocation, and about other infrastructure components (network, storage) is important. Without this information it is just hard to establish baselines and goals, and to set expectations.

•Component tests

Test one component at a time. Even though during the tests some results may suggest that other components should be tuned, testing multiple components may not be a good idea since it involves a lot of variables and may lead to confusing results.

•Correct workload

The type of workload matters. Different workloads will have different impact on the tests, and thus it is good to tie the proper workload to the component being tested as much as possible.

•Impact and risk analysis

Tests may stress several components at different levels. The impact analysis of the test plan should consider as many levels as possible to mitigate any major problems with the environment.

In the past years, with all the advance of virtualized environments, shared resources have become a new concern when testing. Stressing a system during a processor test may result in undesired resource allocations. Stressing the disk subsystem might create bottlenecks for other production servers.

•Baselines and goals

Establishing a baseline is not always easy. The current environment configuration has to be evaluated and monitored before going through tests and tuning. Without a baseline, you have nothing to compare with your results.

Defining the goals you want to achieve depends on understanding of the environment. Before establishing a performance gain on network throughput of 20%, for instance, you must first know how the entire environment is configured.

Once you have a good understanding of how your environment behaves and its limitations, try establishing goals and defining what is a good gain, or what is a satisfactory improvement.

•Setting the expectations

Do not assume that a big boost in performance can always be obtained. Eventually you may realize that you are already getting the most out of your environment and further improvements can only be obtained with new hardware or with better-written applications.

Be reasonable and set expectations of what is a good result for the tests.

Expectations can be met, exceeded, or not met. In any case, tests should be considered an investment. They can give you a good picture of how the environment is sized, its ability to accommodate additional workload, estimation of future hardware needs, and the limits of the systems.

5.2.2 The testing cycle

A good approach to test the environment is to establish cycles of tests, broken into the following steps:

•Establish a plan

Set the scope of your tests, which components will be tested, which workloads will be applied, whether they are real or simulation, when tests will be made, how often the system will be monitored, and so on.

•Make the changes

Change the environment according to the plan, trying to stay as much as possible inside the scope of the defined plan.

•Monitor the components

Establish a period to monitor the system and collect performance data for analysis. There is no best period of time for this, but usually a good idea is to monitor the behavior of the system for a few days at least and try to identify patterns.

•Compare the results

Compare the performance data collected with the previous results. Analysis of the results can be used as an input to a new cycle of tests with a new baseline.

You can establish different plans, test each one in different cycles, measure and compare the results, always aiming for additional improvement. The cycle can be repeated as many times as necessary.

5.2.3 Start and end of tests

This section provides information on when to start and end the tests.

When to start testing the environment

A good time to start profiling the environment is now. Unless you have a completely static environment, well sized and stable, tests should be a constant exercise.

Workload demands tend to vary either by increasing or decreasing with time, and analyzing the environment is a good way to find the right moment to review the resource distribution.

Imagine a legacy system being migrated to a new environment. The natural behavior is for a new system to demand more resources with time, and the legacy system demanding less.

When to stop testing the environment

Testing the environment takes time, requires resources, and has costs. At some point, tests will be interrupted by such restrictions.

Despite these restrictions, assuming that a plan has been established at the beginning, the best moment to stop the tests is when the results achieve at least some of the established goals.

The reasons why an environment is submitted to tests can vary and no matter what the goals of the tests are, their results should be meaningful and in accordance with the goals defined, even if you cannot complete all the tests initially planned.

Systems have limits

Every environment has its limits but only tests will tell what your environment’s are. Eventually you may find that even though everything has been done on the system side, the performance of the applications is still not good. You may then want to take a look at the application architecture.

5.3 Testing components

In this section we try to focus on simple tests of the components, and which tools you can use to monitor system behavior, to later demonstrate how to read and interpret the measured values.

Testing the system components is usually a simple task and can be accomplished by using native tools available on the operating system by writing a few scripts. For instance, you may not be able to simulate a multithread workload with the native tools, but you can spawn a few processor-intensive processes and have an idea of how your system behaves.

Basic network and storage tests are also easy to perform.

|

Note: It is not our intention to demonstrate or compare the behavior of processes and threads. The intention of this section is to put a load on the processor of our environment and use the tools to analyze the system behavior.

|

How can I know, for example, that the 100 MB file retrieval response time is reasonable? Its response time is composed of network transmission + disk reading + application overhead. I should be able to calculate that, in theory.

5.3.1 Testing the processor

Before testing the processing power of the system, it is important to understand the concepts explained in this book because there are a lot of factors that affect the processor utilization of the system.

To test the processor effectively, the ideal is to run a processor-intensive workload. Running complex systems that depend on components such as disk storage or networks might not result in an accurate test of the environment and can result in misleading data.

The process queue

The process queue is a combination of two different queues: the run queue and wait queue. Threads on the run queue represent either threads ready to run (awaiting for a processor time slice) or threads already running. The wait queue holds threads waiting for resources or I/O requests to complete.

Running workloads with a high number of processes is good for understanding the response of the system and to try to establish the point at which the system starts to become unresponsive.

In this section, the nstress suite has been used to put some load on the system. The tests are made running the ncpu command starting with 16 processes. On another window we monitored the process queue with the vmstat command, and a one-line script to add time information at the front of each line to check the output. Table 5-1 illustrates the results.

Table 5-1 Tests run on the system

|

Processes

|

System response

|

|

16

|

Normal

|

|

32

|

Normal

|

|

64

|

Minimal timing delays

|

|

96

|

Low response. Terminals not responding to input.

|

|

128

|

Loss of output from vmstat.

|

The system performed well until we put almost a hundred processes on the queue. Then the system started to show slow response and loss of output from vmstat, indicating that the system was stressed.

A different behavior is shown in Example 5-5. In this test, we started a couple of commands to create one big file and several smaller files. The system has only a few processes on the run queue, but this time it also has some on the wait queue, which means that the system is waiting for I/O requests to complete. Notice that the processor is not overloaded, but there are processes that will keep waiting on the queue until their I/O operations are completed.

Example 5-5 vmstat output illustrating processor wait time

vmstat 5

System configuration: lcpu=16 mem=8192MB ent=1.00

kthr memory page faults cpu

----- ----------- ------------------------ ------------ -----------------------

r b avm fre re pi po fr sr cy in sy cs us sy id wa pc ec

2 1 409300 5650 0 0 0 23800 23800 0 348 515 1071 0 21 75 4 0.33 33.1

1 3 409300 5761 0 0 0 23152 75580 0 340 288 1030 0 24 67 9 0.37 36.9

3 4 409300 5634 0 0 0 24076 24076 0 351 517 1054 0 21 66 12 0.34 33.8

2 3 409300 5680 0 0 0 24866 27357 0 353 236 1050 0 22 67 11 0.35 34.8

0 4 409300 5628 0 0 0 22613 22613 0 336 500 1036 0 21 67 12 0.33 33.3

0 4 409300 5622 0 0 0 23091 23092 0 338 223 1030 0 21 67 12 0.33 33.3

5.3.2 Testing the memory

This topic addresses some tests that can be made at the operating system level to measure how much workload your current configuration can take before the system becomes unresponsive or kills processes.

The system we were using for the tests was a partition with 8 GB of RAM and 512 Mb of paging-space running AIX 7.1. To simulate the workload, we used the stress tool, publicly available under GPLv2 license at:

http://weather.ou.edu/~apw/projects/stress/

Packages ready for the AIX can be found at:

http://www.perzl.org/aix

The following tests were intended to test how much memory load our system could take before starting to swap, become unresponsive, and kill processes.

The first set of tests tried to establish how many processes we could dispatch using different memory sizes. Before starting the tests, it is important to have a good understanding of virtual memory concepts and how the AIX Virtual Memory Manager works.

There are a few tunables that will affect the behavior of our system during the tests.

The npswarn, npskill, and nokilluid tunables

When AIX detects that memory resource is running out, it might kill processes to release a number of paging space pages to continue running. AIX controls this behavior through the npswarn, npskill and nokilluid tunables.

•npswarn

The npswarn tunable is a value that defines the minimum number of free paging space pages that must be available. When this threshold is exceeded, AIX will start sending the SIGDANGER signal to all processes except kernel processes.

The default action for SIGDANGER is to ignore this signal. Most processes will ignore this signal. However, the init process does register a signal handler for the SIGDANGER signal, which will write the warning message Paging space low to the defined system console.

The kernel processes can be shown using ps -k. Refer to the following website for more information about kernel processes (kprocs):

•npskill

If consumption continues, this tunable is the next threshold to trigger; it defines the minimum number of free paging-space pages to be available before the system starts killing processes.

At this point, AIX will send SIGKILL to eligible processes depending on the following factors:

– Whether or not the process has a SIGDANGER handler

By default, SIGKILL will only be sent to processes that do not have a handler for SIGDANGER. This default behavior is controlled by the vmo option low_ps_handling.

– The value of the nokilluid setting, and the UID of the process, which is discussed in the following section.

– The age of the process

AIX will first send SIGKILL to the youngest eligible process. This helps to prevent long running processes against a low paging space condition caused by recently created processes. Now you understand why you cannot establish telnet or ssh connections to the system, but still ping it at this point?

However, note that the long running processes could also be killed if the low paging space condition (below npskill) persists.

When a process is killed, the system logs a message with the label PGSP_KILL, as shown in Example 5-6.

Example 5-6 errpt output - Process killed by AIX due to lack of paging space

LABEL: PGSP_KILL

IDENTIFIER: C5C09FFA

Date/Time: Thu Oct 25 12:49:32 2012

Sequence Number: 373

Machine Id: 00F660114C00

Node Id: p750s1aix5

Class: S

Type: PERM

WPAR: Global

Resource Name: SYSVMM

Description

SOFTWARE PROGRAM ABNORMALLY TERMINATED

Probable Causes

SYSTEM RUNNING OUT OF PAGING SPACE

Failure Causes

INSUFFICIENT PAGING SPACE DEFINED FOR THE SYSTEM

PROGRAM USING EXCESSIVE AMOUNT OF PAGING SPACE

Recommended Actions

DEFINE ADDITIONAL PAGING SPACE

REDUCE PAGING SPACE REQUIREMENTS OF PROGRAM(S)

Detail Data

PROGRAM

stress

USER'S PROCESS ID:

5112028

PROGRAM'S PAGING SPACE USE IN 1KB BLOCKS

8

The error message gives the usual information with timestamp, causes, recommended actions and details of the process.

In the example, the process stress has been killed. For the sake of our tests, it is indeed the guilty process for inducing shortages on the system. However, in a production environment the process killed is not always the one that is causing the problems. Whenever this type of situation is detected on the system, a careful analysis of all processes running on the system must be done during a longer period. The nmon tool is a good resource to assist with collecting data to identify the root causes.

In our tests, when the system was overloaded and short on resources, AIX would sometimes kill our SSH sessions and even the SSH daemon.

|

Tip: The default value for this tunable is calculated with the formula:

npskill = maximum(64, number_of_paging_space_pages/128)

|

•nokilluid

This tunable accepts a UID as a value. All processes owned by UIDs below the defined value will be out of the killing list. Its default value is zero (0), which means that even processes owned by the root ID can be killed.

Now that we have some information about these tunables, it is time to proceed with the tests.

One major mistake that people make is to think that a system with certain amounts of memory can take a load matching that same size. This viewpoint is incorrect; if your system has 16 GB of memory, it does not mean that all the memory can be made available to your applications. There are several other processes and kernel structures that also need memory to work.

In Example 5-7, we illustrate the wrong assumption by adding a load of 64 processes, with 128 MB of size each to push the system to its limits (64 x 128 = 8192). The expected result is an overload of the virtual memory and a reaction from the operating system.

Example 5-7 stress - 64x 128MB

# date ; stress -m 64 --vm-bytes 128M -t 120 ; date

Thu Oct 25 15:22:15 EDT 2012

stress: info: [15466538] dispatching hogs: 0 cpu, 0 io, 64 vm, 0 hdd

stress: FAIL: [15466538] (415) <-- worker 4259916 got signal 9

stress: WARN: [15466538] (417) now reaping child worker processes

stress: FAIL: [15466538] (451) failed run completed in 46s

Thu Oct 25 15:23:01 EDT 2012

As seen in bold, the process receives a SIGKILL less than a minute after being started. The reason is that the resource consumption levels reached the limits defined by the npswarn and npskill parameters. This is illustrated in Example 5-8. At 15:22:52 (time is in the last column), the system is exhausted of free memory pages and showing some paging space activity. At the last line, the system had a sudden increase on the paging out and replacement, indicating that the operating system had to make some space by freeing some pages to accommodate the new allocation.

Example 5-8 vmstat output - 64 x 128 MB

# vmstat -t 1

System configuration: lcpu=16 mem=8192MB ent=1.00

kthr memory page faults cpu time

----- ----------- ------------------------- ------------ ----------------------- --------

r b avm fre re pi po fr sr cy in sy cs us sy id wa pc ec hr mi se

67 0 1128383 1012994 0 1 0 0 0 0 9 308 972 99 1 0 0 3.82 381.7 15:22:19

65 0 1215628 925753 0 0 0 0 0 0 2 50 1104 99 1 0 0 4.01 400.7 15:22:20

65 0 1300578 840779 0 0 0 0 0 0 10 254 1193 99 1 0 0 3.98 398.2 15:22:21

65 0 1370827 770545 0 0 0 0 0 0 11 54 1252 99 1 0 0 4.00 400.2 15:22:22

64 0 1437708 703670 0 0 0 0 0 0 20 253 1304 99 1 0 0 4.00 400.0 15:22:23

66 0 1484382 656996 0 0 0 0 0 0 11 50 1400 99 1 0 0 4.00 399.6 15:22:24

64 0 1554880 586495 0 0 0 0 0 0 12 279 1481 99 1 0 0 3.99 398.9 15:22:25

64 0 1617443 523931 0 0 0 0 0 0 4 47 1531 99 1 0 0 3.99 398.7 15:22:26

...

38 36 2209482 4526 0 383 770 0 54608 0 467 138 1995 85 15 0 0 3.99 398.7 15:22:52

37 36 2209482 4160 0 364 0 0 62175 0 317 322 1821 87 13 0 0 3.99 399.5 15:22:53

33 40 2209482 4160 0 0 0 0 64164 0 7 107 1409 88 12 0 0 4.00 399.7 15:22:54

34 42 2209544 4173 0 49 127 997 50978 0 91 328 1676 87 13 0 0 4.01 400.8 15:22:55

31 48 2211740 4508 0 52 2563 3403 27556 0 684 147 2332 87 13 0 0 3.98 398.5 15:22:56

Killed

This is normal behavior and indicates that the system is very low on resources (based on VMM tunable values). In sequence, the system would just kill the vmstat process along with other application processes in an attempt to free more resources.

Example 5-9 has the svmon output for a similar example (the header has been added manually to make it easier to identify the columns). This system has 512 MB of paging space, divided into 131072 x 4096 KB pages. The npswarn and npskill values are 4096 and 1024, respectively.

Example 5-9 svmon - system running out of paging space

# svmon -G -i 5 | egrep "^(s)"

PageSize PoolSize inuse pgsp pin virtual

s 4 KB - 188996 48955 179714 229005

s 4 KB - 189057 48955 179725 229066

s 4 KB - 442293 50306 181663 482303

s 4 KB - 942678 51280 182637 982682

s 4 KB - 1222664 51825 183184 1262663

s 4 KB - 1445145 52253 183612 1485143

s 4 KB - 1660541 52665 184032 1700504

s 4 KB - 1789863 52916 184283 1829823

s 4 KB - 1846800 53196 184395 1887575

s 4 KB - 1846793 78330 184442 1912289

s 4 KB - 1846766 85789 184462 1921204

s 4 KB - 1846800 94455 184477 1929270

s 4 KB - 1846800 110796 184513 1948082

s 4 KB - 1846800 128755 184543 1963861

s 4 KB - 185921 49540 179756 229097

s 4 KB - 185938 49536 179756 229097

Subtracting the number of paging-space pages allocated from the total number of paging spaces, the number of free paging-space frames will be:

131072 - 128755 = 2317 (free paging-space frames)

The resulting value is between npswarn and npskill. Thus, at that specific moment, the system was about to start killing processes and the last two lines of Example 5-9 on page 218 show a sudden drop of the paging-space utilization indicating that some processes have terminated (in this case they were killed by AIX).

The last example illustrated the behavior of the system when we submitted a load of processes matching the size of the system memory. Now, let us see what happens when we use bigger processes (1024 MB each), but with a fewer number of processes (7).

The first thing to notice in Example 5-10 is that the main process got killed by AIX.

Example 5-10 stress output - 7x1024 MB processes

# stress -m 7 --vm-bytes 1024M -t 300

stress: info: [6553712] dispatching hogs: 0 cpu, 0 io, 7 vm, 0 hdd

Killed

Although our main process got killed, we still had six processes running, each 1024 MB in size, as shown in Example 5-11, which also illustrates the memory and paging space consumption.

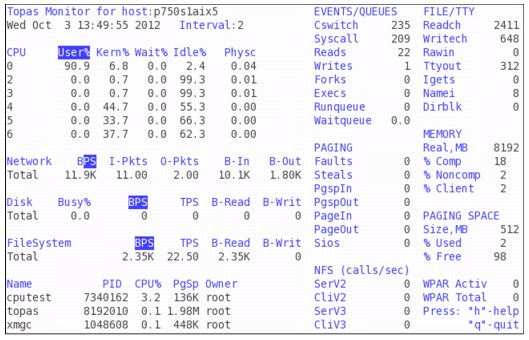

Example 5-11 topas output - 7x1024 MB processes

Topas Monitor for host:p750s1aix5 EVENTS/QUEUES FILE/TTY

Tue Oct 30 15:38:17 2012 Interval:2 Cswitch 226 Readch 1617

Syscall 184 Writech 1825

CPU User% Kern% Wait% Idle% Physc Entc% Reads 9 Rawin 0

Total 76.7 1.4 0.0 21.9 4.00 399.72 Writes 18 Ttyout 739

Forks 0 Igets 0

Network BPS I-Pkts O-Pkts B-In B-Out Execs 0 Namei 0

Total 2.07K 11.49 8.50 566.7 1.52K Runqueue 7.00 Dirblk 0

Waitqueue 0.0

Disk Busy% BPS TPS B-Read B-Writ MEMORY

Total 0.5 56.0K 13.99 56.0K 0 PAGING Real,MB 8192

Faults 5636 % Comp 94

FileSystem BPS TPS B-Read B-Writ Steals 0 % Noncomp 0

Total 1.58K 9.00 1.58K 0 PgspIn 13 % Client 0

PgspOut 0

Name PID CPU% PgSp Owner PageIn 13 PAGING SPACE

stress 11927714 15.0 1.00G root PageOut 0 Size,MB 512

stress 13893870 14.9 1.00G root Sios 13 % Used 99

stress 5898362 12.5 1.00G root % Free 1

stress 9109570 12.2 1.00G root NFS (calls/sec)

stress 11206792 11.1 1.00G root SerV2 0 WPAR Activ 0

stress 12976324 10.9 1.00G root CliV2 0 WPAR Total 2

svmon 13959288 0.4 1.13M root SerV3 0 Press: "h"-help

sshd 4325548 0.2 1.05M root CliV3 0 "q"-quit

In Example 5-12, the svmon output illustrates the virtual memory. Even though the system still shows some free pages, it is almost out of paging space. During this situation, dispatching a new command could result in a fork() error.

Example 5-12 svmon - 7x 1024MB processes

size inuse free pin virtual mmode

memory 2097152 1988288 108864 372047 2040133 Ded

pg space 131072 130056

work pers clnt other

pin 236543 0 0 135504

in use 1987398 0 890

PageSize PoolSize inuse pgsp pin virtual

s 4 KB - 1760688 130056 183295 1812533

m 64 KB - 14225 0 11797 14225

Figure 5-1 illustrates a slow increase in memory pages consumption during the execution of six processes with 1024 MB each. We had almost a linear increase for a few seconds until the resources were exhausted and the operating system killed some processes.

The same tests running with memory sizes lower than 1024 MB would keep the system stable.

Figure 5-1 Memory pages slow increase

This very same test, running with 1048 MB processes for example, resulted in a stable system, with very low variation in memory page consumption.

These tests are all intended to understand how much load the server could take. Once the limits were understood, the application could be configured according to its requirements, behavior, and system limits.

5.3.3 Testing disk storage

When testing the attachment of an IBM Power System to an external disk storage system, and the actual disk subsystem itself, there are some important considerations before performing any meaningful testing.

Understanding your workload is a common theme throughout this book, and this is true when performing meaningful testing. The first thing is to understand the type of workload you want to simulate and how you are going to simulate it.

There are types of I/O workload characteristics that apply to different applications (Table 5-2).

Table 5-2 I/O workload types

|

I/O type

|

Description

|

|

Sequential

|

Sequential access to disk storage is where typically large I/O requests are sent from the server, where data is read in order, one block at a time one after the other. An example of this type of workload is performing a backup.

|

|

Random

|

Random access to disk storage is where data is read in random order from disk storage, typically in smaller blocks, and it is sensitive to latency.

|

An OLTP transaction processing-based workload typically will have a smaller random I/O request size between 4 k and 8 k. A data warehouse or batch type workload will typically have a larger sequential I/O request size of 16 k and larger. Again, a workload such as a backup server may have a sequential I/O block size of 64 k or greater.

Having a repeatable workload is key to be able to perform a test, make an analysis of the results, perform any attribute changes, and repeat the test. Ideally if you can perform an application-driven load test simulating the actual workload, this is going to be the most accurate method.

There are going to be instances where performing some kind of stress test without any application-driven load is going to be required. This can be performed with the ndisk64 utility, which requires minimal setup time and is available on IBM developerworks at:

|

Important: When running the ndisk64 utility against a raw device (such as an hdisk) or an existing file, the data on the device or file will be destroyed.

|

It is imperative to have an understanding of what the I/O requirement of the workload will be, and the performance capability of attached SAN and storage systems. Using SAP as an example, the requirement could be 35,000 SAPS, which equates to a maximum of 14,500 16 K random IOPS on a storage system with a 70:30 read/write ratio (these values are taken from the IBM Storage Sizing Recommendation for SAP V9).

Before running the ndisk64 tool, you need to understand the following:

•What type of workload are you trying to simulate? Random type I/O or sequential type I/O?

•What is the I/O request size you are trying to simulate?

•What is the read/write ratio of the workload?

•How long will you run the test? Will any production systems be affected during the running of the test?

•What is the capability of your SAN and storage system? Is it capable of handling the workload you are trying to simulate? We found that the ndisk64 tool was cache intensive on our storage system.

Example 5-13 demonstrates running the ndisk64 tool for a period of 5 minutes with our SAP workload characteristics on a test logical volume called ndisk_lv.

Example 5-13 Running the ndisk64 tool

root@aix1:/tmp # ./ndisk64 -R -t 300 -f /dev/ndisk_lv -M 20 -b 16KB -s 100G -r 70%

Command: ./ndisk64 -R -t 300 -f /dev/ndisk_lv -M 20 -b 16KB -s 100G -r 70%

Synchronous Disk test (regular read/write)

No. of processes = 20

I/O type = Random

Block size = 16384

Read-WriteRatio: 70:30 = read mostly

Sync type: none = just close the file

Number of files = 1

File size = 107374182400 bytes = 104857600 KB = 102400 MB

Run time = 300 seconds

Snooze % = 0 percent

----> Running test with block Size=16384 (16KB) ....................

Proc - <-----Disk IO----> | <-----Throughput------> RunTime

Num - TOTAL IO/sec | MB/sec KB/sec Seconds

1 - 136965 456.6 | 7.13 7304.84 300.00

2 - 136380 454.6 | 7.10 7273.65 300.00

3 - 136951 456.5 | 7.13 7304.08 300.00

4 - 136753 455.8 | 7.12 7293.52 300.00

5 - 136350 454.5 | 7.10 7272.05 300.00

6 - 135849 452.8 | 7.08 7245.31 300.00

7 - 135895 453.0 | 7.08 7247.49 300.01

8 - 136671 455.6 | 7.12 7289.19 300.00

9 - 135542 451.8 | 7.06 7228.26 300.03

10 - 136863 456.2 | 7.13 7299.38 300.00

11 - 137152 457.2 | 7.14 7314.78 300.00

12 - 135873 452.9 | 7.08 7246.57 300.00

13 - 135843 452.8 | 7.08 7244.94 300.00

14 - 136860 456.2 | 7.13 7299.19 300.00

15 - 136223 454.1 | 7.10 7265.29 300.00

16 - 135869 452.9 | 7.08 7246.39 300.00

17 - 136451 454.8 | 7.11 7277.23 300.01

18 - 136747 455.8 | 7.12 7293.08 300.00

19 - 136616 455.4 | 7.12 7286.20 300.00

20 - 136844 456.2 | 7.13 7298.40 300.00

TOTALS 2728697 9095.6 | 142.12 Rand procs= 20 read= 70% bs= 16KB

root@aix1:/tmp #

Once the ndisk testing has been completed, if it is possible to check the storage system to compare the results, and knowing the workload you generated was similar to the workload on the storage, it is useful to validate the test results.

Figure 5-2 shows the statistics displayed on our storage system, which in this case is an IBM Storwize V7000 storage system.

Figure 5-2 V7000 volume statistics

|

Note: 5.6, “Disk storage bottleneck identification” on page 251 describes how to interpret the performance data collected during testing activities.

|

It is also important to recognize that disk storage technology is evolving. With the introduction of solid state drives (SSD), new technologies have been adopted by most storage vendors, such as automated tiering. An example of this is the Easy Tier® technology used in IBM storage products such as IBM SAN Volume Controller, IBM DS8000 and IBM Storwize V7000.

Automated tiering monitors a workload over a period of time, and moves blocks of data in and out of SSD based on how frequently accessed they are. For example, if you run a test for 48 hours, and during that time the automated tiering starts moving blocks into SSD, the test results may vary. So it is important to consult your storage administrator on the storage system’s capabilities as part of the testing process.

5.3.4 Testing the network

Performing network tests on the environment is simpler than the other tests. From the operating system point of view, there is not much to be tested. Although some tuning can be performed on both AIX and Virtual I/O Server layers, for example, the information to be analyzed is more simple. However, when talking about networks, you should always consider all the infrastructure that may affect the final performance of the environment. Eventually you may find that the systems themselves are OK but some other network component, such as a switch, firewall, or router, is affecting the performance of the network.

Latency

Latency can be defined as the time taken to transmit a packet between two points. For the sake of tests, you can also define latency as the time taken for a packet to be transmitted and received between two points (round trip).

Testing the latency is quite simple. In the next examples, we used tools such as tcpdump and ping to test the latency of our infrastructure, and a shell script to filter data and calculate the mean latency (Example 5-14).

Example 5-14 latency.sh - script to calculate the mean network latency

#!/usr/bin/ksh

IFACE=en0

ADDR=10.52.78.9

FILE=/tmp/tcpdump.icmp.${IFACE}.tmp

# number of ICMP echo-request packets to send

PING_COUNT=10

# interval between each echo-request

PING_INTERVAL=10

# ICMP echo-request packet size

PING_SIZE=1

# do not change this. number of packets to be monitored by tcpdump before

# exitting. always PING_COUNT x 2

TCPDUMP_COUNT=$(expr "${PING_COUNT}*2")

tcpdump -l -i ${IFACE} -c ${TCPDUMP_COUNT} "host ${ADDR} and (icmp[icmptype] == icmp-echo or icmp[icmptype] == icmp-echoreply)" > ${FILE} 2>&1 &

ping -c ${PING_COUNT} -i ${PING_INTERVAL} -s ${PING_SIZE} ${ADDR} 2>&1

MEANTIME=$(cat ${FILE} | awk -F "[. ]" 'BEGIN { printf("scale=2;("); } { if(/ICMP echo request/) { REQ=$2; getline; REP=$2; printf("(%d-%d)+", REP, REQ); } } END { printf("0)/1000/10

"); }' | bc)

echo "Latency is ${MEANTIME}ms"

The script in Example 5-14 has a few parameters that can be changed to test the latency. This script can be changed to accept some command line arguments instead of having to change it every time.

Basically the script monitors the ICMP echo-request and echo-reply traffic while performing some ping with small packet sizes, and calculate the mean round-trip time from a set of samples.

Example 5-15 latency.sh - script output

# ksh latency.sh

PING 10.52.78.9 (10.52.78.9): 4 data bytes

12 bytes from 10.52.78.9: icmp_seq=0 ttl=255

12 bytes from 10.52.78.9: icmp_seq=1 ttl=255

12 bytes from 10.52.78.9: icmp_seq=2 ttl=255

12 bytes from 10.52.78.9: icmp_seq=3 ttl=255

12 bytes from 10.52.78.9: icmp_seq=4 ttl=255

12 bytes from 10.52.78.9: icmp_seq=5 ttl=255

12 bytes from 10.52.78.9: icmp_seq=6 ttl=255

12 bytes from 10.52.78.9: icmp_seq=7 ttl=255

12 bytes from 10.52.78.9: icmp_seq=8 ttl=255

12 bytes from 10.52.78.9: icmp_seq=9 ttl=255

--- 10.52.78.9 ping statistics ---

10 packets transmitted, 10 packets received, 0% packet loss

Latency is .13ms

Example 5-15 on page 224 shows the output of the latency.sh script containing the mean latency time of 0.13 ms. This test has been run between two servers connected on the same subnet sharing the same Virtual I/O server.

In Example 5-16, we used the tcpdump output to calculate the latency. The script filters each pair of requests and reply packets, extracts the timing portion required to calculate each packet latency, and finally sums all latencies and divides by the number of packets transmitted to get the mean latency.

Example 5-16 latency.sh - tcpdump information

# cat tcpdump.icmp.en0.tmp

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on en0, link-type 1, capture size 96 bytes

15:18:13.994500 IP p750s1aix5 > nimres1: ICMP echo request, id 46, seq 1, length 12

15:18:13.994749 IP nimres1 > p750s1aix5: ICMP echo reply, id 46, seq 1, length 12

15:18:23.994590 IP p750s1aix5 > nimres1: ICMP echo request, id 46, seq 2, length 12

15:18:23.994896 IP nimres1 > p750s1aix5: ICMP echo reply, id 46, seq 2, length 12

15:18:33.994672 IP p750s1aix5 > nimres1: ICMP echo request, id 46, seq 3, length 12

15:18:33.994918 IP nimres1 > p750s1aix5: ICMP echo reply, id 46, seq 3, length 12

15:18:43.994763 IP p750s1aix5 > nimres1: ICMP echo request, id 46, seq 4, length 12

15:18:43.995063 IP nimres1 > p750s1aix5: ICMP echo reply, id 46, seq 4, length 12

15:18:53.994853 IP p750s1aix5 > nimres1: ICMP echo request, id 46, seq 5, length 12

15:18:53.995092 IP nimres1 > p750s1aix5: ICMP echo reply, id 46, seq 5, length 12

7508 packets received by filter

0 packets dropped by kernel

Latency times depend mostly on the network infrastructure complexity. This information can be useful if you are preparing the environment for applications that transmit a lot of small packets and demand low network latency.

Transmission tests - TCP_RR

Request and Response tests measure the number of transactions (basic connect and disconnect) that your servers and network infrastructure are able to handle. These tests were performed with the netperf tool (Example 5-17).

Example 5-17 netperf - TCP_RR test

# ./netperf -t TCP_RR -H 10.52.78.47

Netperf version 5.3.7.5 Jul 23 2009 16:57:35

TCP REQUEST/RESPONSE TEST: 10.52.78.47

(+/-5.0% with 99% confidence) - Version: 5.3.7.5 Jul 23 2009 16:57:41

Local /Remote ----------------

Socket Size Request Resp. Elapsed Response Time

Send Recv Size Size Time (iter) ------- --------

bytes Bytes bytes bytes secs. TRs/sec millisec*host

262088 262088 100 200 4.00(03) 3646.77 0.27

262088 262088

Transmission tests - TCP_STREAM

These tests attempt to send the most data possible from one side to another in a certain period and give the total throughput of the network. These tests were performed with the netperf tool (Example 5-18).

Example 5-18 netperf - TCP_STREAM test

# ./netperf -t TCP_STREAM -H 10.52.78.47

Netperf version 5.3.7.5 Jul 23 2009 16:57:35

TCP STREAM TEST: 10.52.78.47

(+/-5.0% with 99% confidence) - Version: 5.3.7.5 Jul 23 2009 16:57:41

Recv Send Send ---------------------

Socket Socket Message Elapsed Throughput

Size Size Size Time (iter) ---------------------

bytes bytes bytes secs. 10^6bits/s KBytes/s

262088 262088 100 4.20(03) 286.76 35005.39

Several tests other than TCP_STREAM and TCP_RR are available with the netperf tool that can be used to test the network. Remember that network traffic also consumes memory and processor time. The netperf tool can provide some processor utilization statistics as well, but we suggest that the native operating system tools be used instead.

|

Tip: The netperf tool can be obtained at:

http://www.netperf.org

|

5.4 Understanding processor utilization

This section provides details regarding processor utilization.

5.4.1 Processor utilization

In past readings, the processor utilization on old single-threaded systems used to be straight forward. Tools such as topas, sar and vmstat provided simple values that would let you know exactly how much processor utilization you had.

With the introduction of multiple technologies in the past years, especially the simultaneous multithreading on POWER5 systems, understanding processor utilization on systems became a much more complex task—first because of different new concepts such as Micro-Partitioning®, Virtual Processors and Entitled Capacity, and second because the inherent complexity of parallel processing on SMT.

Current technologies, for instance, allow a logical partition to go in a few seconds from a single idle logical processor to sixteen fully allocated processes to fulfill a workload demand, triggering several components on hypervisor and hardware levels and in less than a minute, go back to its stationary state.

The POWER7 technology brought important improvements of how processor utilization values are reported, offering more accurate data to systems administrators.

This section focuses on explaining some of the concepts involved in reading the processor utilization values on POWER7 environments and will go through a few well-known commands, explaining some important parameters and how to read them.

5.4.2 POWER7 processor utilization reporting

POWER7 introduces an improved algorithm to report processor utilization. This algorithm is based on calibrated Processor Utilization Resource Register (PURR) compared to PURR that is used for POWER5 and POWER6. The aim of this new algorithm is to provide a better view of how much capacity is used, and how much capacity is still available. Thus you can achieve linear PURR utilization and throughput (TPS) relationships. Clients would benefit from the new algorithm, which emphasizes more on PURR utilization metrics than other targets such as throughput and response time.

Figure 5-3 explains the difference between the POWER5, POWER6, and POWER7 PURR utilization algorithms. On POWER5 and POWER6 systems, when only one of the two SMT hardware threads is busy, the utilization of the processor core is reported 100%. While on POWER7, the utilization of the SMT2 processor core is around 80% in the same situation. Furthermore, when one of the SMT4 hardware thread is busy, the utilization of the SMT4 processor core is around 63%. Also note that the POWER7 utilization algorithm persists even if running in POWER6 mode.

Figure 5-3 POWER7 processor utilization reporting

|

Note: The utilization reporting variance (87~94%) when two threads are busy in SMT4 is due to occasional load balancing to tertiary threads (T2/T3), which is controlled by a number of schedo options including tertiary_barrier_load.

The concept of the new improved PURR algorithm is not related to Scaled Process Utilization of Resource Register (SPURR). The latter is a conceptually different technology and is covered in 5.4.5, “Processor utilization reporting in power saving modes” on page 234.

|

POWER7 processor utilization example - dedicated LPAR

Example 5-19 demonstrates processor utilization when one hardware thread is busy in SMT4 mode. As in the example, the single thread application consumed an entire logical processor (CPU0), but not the entire capacity of the physical core, because there were still three idle hardware threads in the physical core. The physical consumed processor is about 0.62. Because there are two physical processors in the system, the overall processor utilization is 31%.

Example 5-19 Processor utilization in SMT4 mode on a dedicated LPAR

#sar -P ALL 1 100

AIX p750s1aix2 1 7 00F660114C00 10/02/12

System configuration: lcpu=8 mode=Capped

18:46:06 0 100 0 0 0 0.62

1 0 0 0 100 0.13

2 0 0 0 100 0.13

3 0 0 0 100 0.13

4 1 1 0 98 0.25

5 0 0 0 100 0.25

6 0 0 0 100 0.25

7 0 0 0 100 0.25

- 31 0 0 69 1.99

Example 5-20 demonstrates processor utilization when one thread is busy in SMT2 mode. In this case, the single thread application consumed more capacity of the physical core (0.80), because there was only one idle hardware thread in the physical core, compared to three idle hardware threads in SMT4 mode in Example 5-19. The overall processor utilization is 40% because there are two physical processors.

Example 5-20 Processor utilization in SMT2 mode on a dedicated LPAR

#sar -P ALL 1 100

AIX p750s1aix2 1 7 00F660114C00 10/02/12

System configuration: lcpu=4 mode=Capped

18:47:00 cpu %usr %sys %wio %idle physc

18:47:01 0 100 0 0 0 0.80

1 0 0 0 100 0.20

4 0 1 0 99 0.50

5 0 0 0 100 0.49

- 40 0 0 60 1.99

Example 5-21 demonstrates processor utilization when one thread is busy in SMT1 mode. Now the single thread application consumed the whole capacity of the physical core, because there is no other idle hardware thread in ST mode. The overall processor utilization is 50% because there are two physical processors.

Example 5-21 Processor utilization in SMT1 mode on a dedicated LPAR

sar -P ALL 1 100

AIX p750s1aix2 1 7 00F660114C00 10/02/12

System configuration: lcpu=2 mode=Capped

18:47:43 cpu %usr %sys %wio %idle

18:47:44 0 100 0 0 0

4 0 0 0 100

- 50 0 0 50

POWER7 processor utilization example - shared LPAR

Example 5-22 demonstrates processor utilization when one thread is busy in SMT4 mode on a shared LPAR. As in the example, logical processor 4/5/6/7 consumed one physical processor core. Although logical processor 4 is 100% busy, the physical consumed processor (physc) is only 0.63. Which means the LPAR received a whole physical core, but is not fully driven by the single thread application. The overall system processor utilization is about 63%. For details aout system processor utilization reporting in a shared LPAR environment, refer to 5.4.6, “A common pitfall of shared LPAR processor utilization” on page 236.

Example 5-22 Processor utilization in SMT4 mode on a shared LPAR

#sar -P ALL 1 100

AIX p750s1aix2 1 7 00F660114C00 10/02/12

System configuration: lcpu=16 ent=1.00 mode=Uncapped

18:32:58 cpu %usr %sys %wio %idle physc %entc

18:32:59 0 24 61 0 15 0.01 0.8

1 0 3 0 97 0.00 0.2

2 0 2 0 98 0.00 0.2

3 0 2 0 98 0.00 0.3

4 100 0 0 0 0.63 62.6

5 0 0 0 100 0.12 12.4

6 0 0 0 100 0.12 12.4

7 0 0 0 100 0.12 12.4

8 0 52 0 48 0.00 0.0

12 0 57 0 43 0.00 0.0

- 62 1 0 38 1.01 101.5

Example 5-23 demonstrates processor utilization when one thread is busy in SMT2 mode on a shared LPAR. Logical processor 4/5 consumed one physical processor core. Although logical processor 4 is 100% busy, the physical consumed processor is only 0.80, which means the physical core is still not fully driven by the single thread application.

Example 5-23 Processor utilization in SMT2 mode on a shared LPAR

#sar -P ALL 1 100

AIX p750s1aix2 1 7 00F660114C00 10/02/12

System configuration: lcpu=8 ent=1.00 mode=Uncapped

18:35:13 cpu %usr %sys %wio %idle physc %entc

18:35:14 0 20 62 0 18 0.01 1.2

1 0 2 0 98 0.00 0.5

4 100 0 0 0 0.80 80.0

5 0 0 0 100 0.20 19.9

8 0 29 0 71 0.00 0.0

9 0 7 0 93 0.00 0.0

12 0 52 0 48 0.00 0.0

13 0 0 0 100 0.00 0.0

- 79 1 0 20 1.02 101.6

Example 5-24 on page 230 demonstrates processor utilization when one thread is busy in SMT1 mode on a shared LPAR. Logical processor 4 is 100% busy, and fully consumed one physical processor core. That is because there is only one hardware thread for each core, and thus there is no idle hardware thread available.

Example 5-24 Processor utilization in SMT1 mode on a shared LPAR

#sar -P ALL 1 100

AIX p750s1aix2 1 7 00F660114C00 10/02/12

System configuration: lcpu=4 ent=1.00 mode=Uncapped

18:36:10 cpu %usr %sys %wio %idle physc %entc

18:36:11 0 12 73 0 15 0.02 1.6

4 100 0 0 0 1.00 99.9

8 26 53 0 20 0.00 0.2

12 0 50 0 50 0.00 0.1

- 98 1 0 0 1.02 101.7

|

Note: The ratio is acquired using the ncpu tool. The result might vary slightly under different workloads.

|

5.4.3 Small workload example

To illustrate some of the various types of information, we created a simplistic example by putting a tiny workload on a free partition. The system is running a process called cputest that puts a very small workload, as shown in Figure 5-4.

Figure 5-4 single process - Topas simplified processor statistics

In the processor statistics, the graphic shows a total of 3.2% of utilization at the User% column. In the process table you can see that the cputest is consuming 3.2% of the processor on the machine as well, which seems accurate according to our previous read.

|

Note: The information displayed in the processor statistics is not intended to match any specific processes. The fact that it matches the utilization of cputest is just because the system does not have any other workload.

|

There are a few important details shown in Figure 5-4 on page 230:

•Columns User%, Kern%, and Wait%

The column User% refers to the percentage of processor time spent running user-space processes. The Kern% refers to the time spent by the processor in kernel mode, and Wait% is the time spent by the processor waiting for some blocking event, like an I/O operation. This indicator is mostly used to identify storage subsystem problems.

These three values together form your system utilization. Which one is larger or smaller will depend on the type of workload running on the system.

•Column Idle%

Idle is the percent of time that the processor spends doing nothing. In production environments, having long periods of Idle% may indicate that the system is oversized and that it is not using all its resources. On the other hand, a system near to 0% idle all the time can be an alert that your system is undersized.

There are no rules of thumb when defining what is a desired idle state. While some prefer to use as much of the system resources as possible, others prefer to have a lower resource utilization. It all depends on the users’ requirements.

For sizing purposes, the idle time is only meaningful when measured for long periods.

|

Note: Predictable workload increases are easier to manage than unpredictable situations. For the first, a well-sized environment is usually fine while for the latter, some spare resources are usually the best idea.

|

•Column Physc

This is the quantity of physical processors currently consumed. Figure 5-4 on page 230 shows Physc at 0.06 or 6% of physical processor utilization.

•Column Entc%

This is the percentage of the entitled capacity consumed. This field should always be analyzed when dealing with processor utilization because it gives a good idea about the sizing of the partition.

A partition that shows the Entc% always too low or always too high (beyond the 100%) is an indication that its sizing must be reviewed. This topic is discussed in 3.1, “Optimal logical partition (LPAR) sizing” on page 42.

Figure 5-5 on page 232 shows detailed statistics for the processor. Notice that the reported values this time are a bit different.

Figure 5-5 Single process - Topas detailed processor statistics

Notice that topas reports CPU0 running at 90.9% in the User% column and only 2.4% in the Idle% column. Also, the Physc values are now spread across CPU0 (0.04), CPU2 (0.01), and CPU3 (0.01), but the sum of the three logical processors still matches the values of the simplified view.

In these examples, it is safe to say that cputest is consuming only 3.2% of the total entitled capacity of the machine.

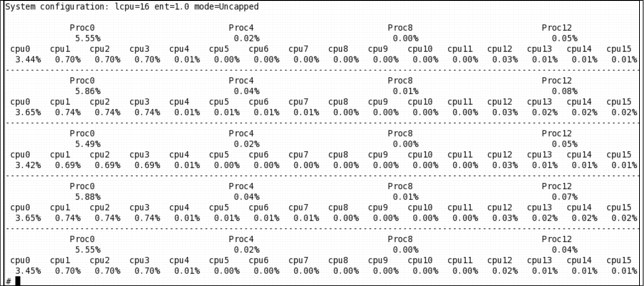

In an SMT-enabled partition, the SMT distribution over the available cores can also be checked with the mpstat -s command, as shown in Figure 5-6.

Figure 5-6 mpstat -s reporting a small load on cpu0 and using 5.55% of our entitled capacity

The mpstat -s command gives information about the physical processors (Proc0, Proc4, Proc8, and Proc12) and each of the logical processors (cpu0 through cpu15). Figure 5-6 on page 232 shows five different readings of our system processor while cputest was running.

|

Notes:

•The default behavior of mpstat is to present the results in 80 columns, thus wrapping the lines if you have a lot of processors. The flag -w can be used to display wide lines.

•The additional sections provide some information about SMT systems, focusing on the recent POWER7 SMT4 improvements.

|

5.4.4 Heavy workload example

With the basic processor utilization concepts illustrated, we now take a look at a heavier workload and see how the processor reports changed.

The next examples provide reports of an eight-processes workload with intensive processors.

In Figure 5-7 User% is now reporting almost 90% of processor utilization, but that information itself does not tell much. Physc and Entc% are now reporting much higher values, indicating that the partition is using more of its entitled capacity.

Figure 5-7 Topas simplified processor statistics - Eight simultaneous processes running

Looking at the detailed processor statistics (Figure 5-8), you can see that the physical processor utilization is still spread across the logical processors of the system, and the sum would approximately match the value seen in the simplified view in Figure 5-7.

Figure 5-8 Topas detailed processor statistics - Eight simultaneous processes running

The thread distribution can be seen in Figure 5-9. This partition is an SMT4 partition, and therefore the system tries to distribute the processes as best as possible over the logical processors.

Figure 5-9 mpstat threads view - Eight simultaneous processes running

For the sake of curiosity, Figure 5-10 shows a load of nine processes distributed across only three virtual processors. The interesting detail in this figure is that it illustrates the efforts of the system to make the best use of the SMT4 design by allocating all logical processors of Proc0, Proc4 and Proc2 while Proc6 is almost entirely free.

Figure 5-10 mpstat threads view - Nine simultaneous processes running

5.4.5 Processor utilization reporting in power saving modes

This section shows processor utilization reporting in power saving mode.

Concepts

Before POWER5, AIX calculated processor utilization based on decrementer sampling which is active every tick (10ms). The tick is charged to user/sys/idle/wait buckets, depending on the execution mode when the clock interrupt happens. It is a pure software approach based on the operating system, and not suitable when shared LPAR and SMT are introduced since the physical core is no longer dedicated to one hardware thread.

Since POWER5, IBM introduced Processor Utilization Resource Registers (PURR) for processor utilization accounting. Each processor has one PURR for each hardware thread, and the PURR is counted by hypervisor in fine-grained time slices at nanosecond magnitude. Thus it is more accurate than decrementer sampling, and successfully addresses the utilization reporting issue in SMT and shared LPAR environments.

Since POWER6, IBM introduced power saving features, that is, the processor frequency might vary according to different power saving policies. For example, in static power saving mode, the processor frequency will be at a fixed value lower than nominal; in dynamic power saving mode, the processor frequency can vary dynamically according to the workload, and can reach a value larger than nominal (over-clocking).

Because PURR increments independent of processor frequency, each PURR tick does not necessarily represent the same capacity if you set a specific power saving policy other than the default. To address this problem, POWER6 and later chips introduced the Scaled PURR, which is always proportional to process frequency. When running at lower frequency, the SPURR ticks less than PURR, and when running at higher frequency, the SPURR ticks more than PURR. We can also use SPURR together with PURR to calculate the real operating frequency, as in the equation:

operating frequency = (SPURR/PURR) * nominal frequency

There are several monitoring tools based on SPURR, which can be used to get an accurate utilization of LPARs when in power saving mode. We introduce these tools in the following sections.

Monitor tools

Example 5-25 shows an approach to observe the current power saving policy. You can see that LPAR A is in static power saving mode while LPAR B is in dynamic power saving (favoring performance) mode.

Example 5-25 lparstat -i to observe the power saving policy of an LPAR

LPAR A:

#lparstat -i

…

Power Saving Mode : Static Power Saving

LPAR B:

#lparstat –i

…

Power Saving Mode : Dynamic Power Savings (Favor Performance)

Example 5-26 shows how the processor operating frequency is shown in the lparstat output. There is an extra %nsp column indicating the current ratio compared to nominal processor speed, if the processor is not running at the nominal frequency.

Example 5-26 %nsp in lparstat

#lparstat 1

System configuration: type=Dedicated mode=Capped smt=4 lcpu=32 mem=32768MB

%user %sys %wait %idle %nsp

----- ----- ------ ------ -----

76.7 14.5 5.6 3.1 69

80.0 13.5 4.4 2.1 69

76.7 14.3 5.8 3.2 69

65.2 14.5 13.2 7.1 69

62.6 15.1 14.1 8.1 69

64.0 14.1 13.9 8.0 69

65.0 15.2 12.6 7.2 69

|

Note: If %nsp takes a fixed value lower than 100%, it usually means you have enabled static power saving mode. This might not be what you want, because static power saving mode cannot fully utilize the processor resources despite the workload.

%nsp can also be larger than 100 if the processor is over-clocking in dynamic power saving modes.

|

Example 5-27 shows another lparstat option, -E, for observing the real processor utilization ratio in various power saving modes. As in the output, the actual metrics are based on PURR, while the normalized metrics are based on SPURR. The normalized metrics represent what the real capacity would be if all processors were running at nominal frequency. The sum of user/sys/wait/idle in normalized metrics can exceed the real capacity in case of over-clocking.

Example 5-27 lparstat -E

#lparstat -E 1 100

System configuration: type=Dedicated mode=Capped smt=4 lcpu=64 mem=65536MB Power=Dynamic-Performance

Physical Processor Utilisation:

--------Actual-------- ------Normalised------

user sys wait idle freq user sys wait idle

---- ---- ---- ---- --------- ---- ---- ---- ----

15.99 0.013 0.000 0.000 3.9GHz[102%] 16.24 0.014 0.000 0.000

15.99 0.013 0.000 0.000 3.9GHz[102%] 16.24 0.013 0.000 0.000

15.99 0.009 0.000 0.000 3.9GHz[102%] 16.24 0.009 0.000 0.000

|

Note: AIX introduces lparstat options -E and -Ew since AIX 5.3 TL9, AIX 6.1 TL2, and AIX 7.1

|

Refer to IBM EnergyScale for POWER7 Processor-Based Systems at:

http://public.dhe.ibm.com/common/ssi/ecm/en/pow03039usen/POW03039USEN.PDF

5.4.6 A common pitfall of shared LPAR processor utilization

For dedicated LPAR, the processor utilization reporting uses the same approach as in no virtualization environment. However, the situation is more complicated in shared LPAR situations. For shared LPAR, if the consumed processor is less than entitlement, the system processor utilization ratio uses the processor entitlement as the base.

As in Example 5-28, %user, %sys, %wait, and %idle are calculated based on the entitled processor, which is 1.00. Thus, 54% user percentage actually means that 0.54 physical processor is consumed in user mode, not 0.54 * 0.86 (physc).

Example 5-28 Processor utilization reporting when consumed processors is less than entitlement

#lparstat 5 3

System configuration: type=Shared mode=Uncapped smt=4 lcpu=16 mem=8192MB psize=16 ent=1.00

%user %sys %wait %idle physc %entc lbusy vcsw phint

----- ----- ------ ------ ----- ----- ------ ----- -----

54.1 0.4 0.0 45.5 0.86 86.0 7.3 338 0

54.0 0.3 0.0 45.7 0.86 85.7 6.8 311 0

54.0 0.3 0.0 45.7 0.86 85.6 7.2 295 0

If the consumed processor is larger than entitlement, the system processor utilization ratio uses the consumed processor as the base. Refer to Example 5-29 on page 237. In this case, %usr, %sys, %wait, and %idle are calculated based on the consumed processor. Thus 62.2% user percentage actually means that 2.01*0.622 processor is consumed in user mode.

Example 5-29 Processor utilization reporting when consumed processors exceeds entitlement

#lparstat 5 3

System configuration: type=Shared mode=Uncapped smt=4 lcpu=16 mem=8192MB psize=16 ent=1.00

%user %sys %wait %idle physc %entc lbusy vcsw phint

----- ----- ------ ------ ----- ----- ------ ----- -----

62.3 0.2 0.0 37.6 2.00 200.3 13.5 430 0

62.2 0.2 0.0 37.6 2.01 200.8 12.7 569 0

62.2 0.2 0.0 37.7 2.01 200.7 13.4 550 0

|

Note: The rule above applies to overall system processor utilization reporting. The specific logical processor utilization ratios in sar -P ALL and mpstat -a are always based on their physical consumed processors. However, the overall processor utilization reporting in these tools still complies with the rule.

|

5.5 Memory utilization

This section covers a suggested approach of looking at memory usage, how to read the metrics correctly and how to understand paging space utilization. It shows how to monitor memory in partitions with dedicated memory, active memory sharing, and active memory expansion. It also presents some information about memory leaks and memory size simulation.

5.5.1 How much memory is free (dedicated memory partitions)

In AIX, memory requests are managed by the Virtual Memory Manager (VMM). Virtual memory includes real physical memory (RAM) and memory stored on disk (paging space).

Virtual memory segments are partitioned into fixed-size units called pages. AIX supports four page sizes: 4 KB, 64 KB, 16 MB and 16 GB. The default page size is 4 KB. When free memory becomes low, VMM uses the Last Recently Used (LRU) algorithm to replace less frequently referenced memory pages to paging space. To optimize which pages are candidates for replacement, AIX classifies them into two types:

•Computational memory

•Non-computational memory

Computational memory, also known as computational pages, consists of the pages that belong to working-storage segments or program text (executable files) segments. Non-computational memory or file memory is usually pages from permanent data files in persistent storage.

AIX tends to use all of the physical memory available. Depending on how you look at your memory utilization, you may think you need more memory.

In Example 5-30, the fre column shows 8049 pages of 4 KB of free memory = 31 MB and the LPAR has 8192 MB. Apparently, the system has almost no free memory.

Example 5-30 vmstat shows there is almost no free memory

# vmstat

System configuration: lcpu=16 mem=8192MB ent=1.00

kthr memory page faults cpu

----- ----------- ------------------------ ------------ -----------------------

r b avm fre re pi po fr sr cy in sy cs us sy id wa pc ec

1 1 399550 8049 0 0 0 434 468 0 68 3487 412 0 0 99 0 0.00 0.0

Using the command dd if=/dev/zero of=/tmp/bigfile bs=1M count=8192, we generated a file of the size of our RAM memory (8192 MB). The output of vmstat in Example 5-31 presents 6867 frames of 4 k free memory = 26 MB.

Example 5-31 vmstat still shows almost no free memory

kthr memory page faults cpu

----- ----------- ------------------------ ------------ -----------------------

r b avm fre re pi po fr sr cy in sy cs us sy id wa pc ec

1 1 399538 6867 0 0 0 475 503 0 60 2484 386 0 0 99 0 0.000 0.0

Looking at the memory report of topas, Example 5-32, you see that the non-computational memory, represented by Noncomp, is 80%.

Example 5-32 Topas shows non-computational memory at 80%

Topas Monitor for host:p750s2aix4 EVENTS/QUEUES FILE/TTY

Thu Oct 11 18:46:27 2012 Interval:2 Cswitch 271 Readch 3288

Syscall 229 Writech 380

CPU User% Kern% Wait% Idle% Physc Entc% Reads 38 Rawin 0

Total 0.1 0.3 0.0 99.6 0.01 0.88 Writes 0 Ttyout 178

Forks 0 Igets 0

Network BPS I-Pkts O-Pkts B-In B-Out Execs 0 Namei 23

Total 1.01K 9.00 2.00 705.0 330.0 Runqueue 0.50 Dirblk 0

Waitqueue 0.0

Disk Busy% BPS TPS B-Read B-Writ MEMORY

Total 0.0 0 0 0 0 PAGING Real,MB 8192

Faults 0 % Comp 19

FileSystem BPS TPS B-Read B-Writ Steals 0 % Noncomp 80

Total 3.21K 38.50 3.21K 0 PgspIn 0 % Client 80

PgspOut 0

Name PID CPU% PgSp Owner PageIn 0 PAGING SPACE

topas 5701752 0.1 2.48M root PageOut 0 Size,MB 2560

java 4456586 0.1 20.7M root Sios 0 % Used 0

getty 5308512 0.0 640K root % Free 100

gil 2162754 0.0 960K root NFS (calls/sec)

slp_srvr 4915352 0.0 472K root SerV2 0 WPAR Activ 0

java 7536870 0.0 55.7M pconsole CliV2 0 WPAR Total 1

pcmsrv 8323232 0.0 1.16M root SerV3 0 Press: "h"-help

java 6095020 0.0 64.8M root CliV3 0 "q"-quit

After using the command rm /tmp/bigfile, we saw that the vmstat output, shown in Example 5-33, shows 1690510 frames of 4 k free memory = 6603 MB.

Example 5-33 vmstat shows a lot of free memory

kthr memory page faults cpu

----- ----------- ------------------------ ------------ -----------------------

r b avm fre re pi po fr sr cy in sy cs us sy id wa pc ec

1 1 401118 1690510 0 0 0 263 279 0 35 1606 329 0 0 99 0 0.000 0.0

What happened with the memory after we issued the rm command? Remember non-computational memory? It is basically file system cache. Our dd filled the non-computational memory and rm wipes that cache from noncomp memory.

AIX VMM keeps a free list—real memory pages that are available to be allocated. When process requests memory and there are not sufficient pages in the free list, AIX first removes pages from non-computational memory.

Many monitoring tools present the utilized memory without discounting non-computational memory. This leads to potential misunderstanding of statistics and incorrect assumptions about how much of memory is actually free. In most cases it should be possible to make adjustments to give the right value.

In order to know the utilized memory, the correct column to look at, when using vmstat, is active virtual memory (avm). This value is also presented in 4 KB pages. In Example 5-30 on page 237, while the fre column of vmstat shows 8049 frames (31 MB), the avm is 399,550 pages (1560 MB). For 1560 MB used out of 8192 MB of the total memory of the LPAR, there are 6632 MB free. The avm value can be greater than the physical memory, because some pages might be in RAM and others in paging space. If that happens, it is an indication that your workload requires more than the physical memory available.

Let us play more with dd and this time analyze memory with topas. In Example 5-34, topas output shows 1% utilization of Noncomp (non-computational) memory.

Using the dd command again:

dd if=/dev/zero of=/tmp/bigfile bs=1M count=8192

The topas output in Example 5-34 shows that the sum of computational and non-computational memory is 99%, so almost no memory is free. What will happen if you start a program that requests memory? To illustrate this, in Example 5-35, we used the stress tool from:

Example 5-34 Topas shows Comp + Noncomp = 99% (parts stripped for better reading)

Disk Busy% BPS TPS B-Read B-Writ MEMORY

Total 0.0 0 0 0 0 PAGING Real,MB 8192

Faults 78 % Comp 23

FileSystem BPS TPS B-Read B-Writ Steals 0 % Noncomp 76

Total 2.43K 28.50 2.43K 0 PgspIn 0 % Client 76

Example 5-35 Starting a program that requires 1024 MB of memory

# stress --vm 1 --vm-bytes 1024M --vm-hang 0

stress: info: [11600010] dispatching hogs: 0 cpu, 0 io, 1 vm, 0 hdd

In Example 5-36, non-computational memory dropped from 76% (Example 5-34) to 63% and the computational memory increased from 23% to 35%.

Example 5-36 Topas output while running a stress program

Disk Busy% BPS TPS B-Read B-Writ MEMORY

Total 0.0 0 0 0 0 PAGING Real,MB 8192

Faults 473 % Comp 35

FileSystem BPS TPS B-Read B-Writ Steals 0 % Noncomp 63

After cancelling the stress program, Example 5-37 shows that non-computational memory remains at the same value and the computational returned to the previous mark. This shows that when a program requests computational memory, VMM allocates this memory as computational and releases pages from non-computational.

Example 5-37 Topas after cancelling the program

Disk Busy% BPS TPS B-Read B-Writ MEMORY

Total 0.0 0 0 0 0 PAGING Real,MB 8192

Faults 476 % Comp 23

FileSystem BPS TPS B-Read B-Writ Steals 0 % Noncomp 63

Using nmon, in Example 5-38, the sum of the values Process and System is approximately the value of Comp. Process is memory utilized by the application process and System is memory utilized by the AIX kernel.

Example 5-38 Using nmon to analyze memory

??topas_nmon??b=Black&White??????Host=p750s2aix4?????Refresh=2 secs???18:49.27??

? Memory ???????????????????????????????????????????????????????????????????????

? Physical PageSpace | pages/sec In Out | FileSystemCache?

?% Used 89.4% 2.4% | to Paging Space 0.0 0.0 | (numperm) 64.5%?

?% Free 10.6% 97.6% | to File System 0.0 0.0 | Process 15.7%?

?MB Used 7325.5MB 12.1MB | Page Scans 0.0 | System 9.2%?

?MB Free 866.5MB 499.9MB | Page Cycles 0.0 | Free 10.6%?

?Total(MB) 8192.0MB 512.0MB | Page Steals 0.0 | -----?

? | Page Faults 0.0 | Total 100.0%?

?------------------------------------------------------------ | numclient 64.5%?

?Min/Maxperm 229MB( 3%) 6858MB( 90%) <--% of RAM | maxclient 90.0%?

?Min/Maxfree 960 1088 Total Virtual 8.5GB | User 73.7%?

?Min/Maxpgahead 2 8 Accessed Virtual 2.0GB 23.2%| Pinned 16.2%?

? | lruable pages ?

????????????????????????????????????????????????????????????????????????????????

svmon

Another useful tool to see how much memory is free is svmon. Since AIX 5.3 TL9 and AIX 6.1 TL2, svmon has a new metric called available memory, representing the free memory. Example 5-39 shows svmon output. The available memory is 5.77 GB.

Example 5-39 svmon output shows available memory

# svmon -O summary=basic,unit=auto

Unit: auto

--------------------------------------------------------------------------------------

size inuse free pin virtual available mmode

memory 8.00G 7.15G 873.36M 1.29G 1.97G 5.77G Ded

pg space 512.00M 12.0M

work pers clnt other

pin 796.61M 0K 0K 529.31M

in use 1.96G 0K 5.18G

One svmon usage is to show the top 10 processes in memory utilization, shown in Example 5-40 on page 241.

Example 5-40 svmon - top 10 memory consuming processes

# svmon -Pt10 -O unit=KB

Unit: KB

-------------------------------------------------------------------------------

Pid Command Inuse Pin Pgsp Virtual

5898490 java 222856 40080 0 194168

7536820 java 214432 40180 0 179176