Chapter 2 Manage databases and instances

Managing relational databases on the Azure platform requires the understanding of several key concepts and technologies that are crucial to a successful cloud implementation. While several of these concepts apply to both on-premises as well as the cloud, a more comprehensive understanding of effectively configuring security, monitoring database performance, and managing server instances in a PaaS offering is necessary.

Skills in this chapter:

Skill 2.1: Configure secure access to Microsoft Azure SQL databases

Skill 2.2: Configure SQL Server performance settings

Skill 2.3: Manage SQL Server instances

Skill 2.1: Configure secure access to Microsoft Azure SQL databases

Azure SQL Database secures your data at multiple layers to provide enterprise-level security and to meet industry security standards. SQL Database provides encryption for data in motion using Transport Layer Security, for data at rest using TDE (Transparent Data Encryption), and for data in use using Always Encrypted.

This skill focuses on the approach and steps necessary to effectively secure your relational databases and appropriately implement key security features in Azure SQL Database. Some of these steps can be quite different from an on-premises implementation of SQL Server.

This skill covers how to:

Configure firewall rules

As explained in Chapter 1, “Implement SQL in Azure,” one of the key concepts and benefits of Azure SQL Database is that it is accessible from nearly anywhere by exposing it over the internet with a TCP endpoint via port 1433., Microsoft provides multiple layers and levels of security to ensure that your database and data is secure and protected. One of those layers is firewall rules. The firewall is the means by which access is granted to a server and or database based on the originating IP address of the incoming request.

By default, all Transact-SQL (T-SQL) access (incoming requests) to your Azure SQL server is blocked by the firewall, and in order to allow incoming requests at least one server-level firewall rule is needed. Firewall rules specify which IP address ranges from the internet are allowed and can be applied at both the server and database levels.

Chapter 1 also provides an overview of how the firewall rules process works. Incoming connection attempts must pass through the firewall in order to access the specified database or server. The firewall first checks that the IP address of the incoming client request falls in the range of any of the specified firewall rules specified at the database-level of the database the client is trying to connect to (as specified in the connection string). If there is a match, the connection is allowed only on the specified database. If there is no match, the firewall makes the same request to the rules specified at the server-level. If there is still no match, the connection fails. If there is a match at the server level (the logical instance level), the client has access to all the databases on the Azure SQL server.

Server-level firewall rules

Server-level firewall rules grant access to all the databases within the same logical server. Server-level firewall rules are stored in the master database and can be created and configured through the following methods:

Azure Portal

T-SQL

Azure PowerShell

Azure CLI

REST API

Portal

Server-level firewall rules can be created and updated via the Azure portal through the Firewall Settings page, which can be accessed from either the Server Overview page shown in Figure 2-1, or the Database Overview page show in Figure 2-2.

From the Server Overview page, you can access the Firewall Settings page by either clicking Firewall in the left-hand menu under Settings, or by clicking the Show Firewall Settings link in the Server Overview page, as shown in Figure 2-1.

Figure 2-1 Accessing the Firewall Settings page via the Server Overview page

From the Database Overview page, you can access the Firewall Settings page by clicking Set Server Firewall on the toolbar, as shown in Figure 2-2.

Figure 2-2 Accessing the Firewall Settings page via the Database Overview page

Regardless of whether you go through the Server Overview page or the Database Overview page, either option will open the Firewall Settings page, shown in Figure 2-3. The Firewall Settings page is where firewall rules are managed at the server-level. By default, when a server and database are first created, no firewall rules exist and therefore at least one server-level firewall rule must be created, even before adding any database-level firewall rules.

Figure 2-3 Configuring Server-level firewall rules

The Firewall Settings page will automatically list your client IP address, and clicking the Add Client IP button on the toolbar will add a single firewall rule using the client IP address as the Start IP and End IP (don’t forget to click Save). In order to create server-level firewall rules via the Azure portal, you must be the subscription owner or a subscription contributor.

The Allow Access To Azure Services option, when enabled, allows applications and connections from Azure to connect to the Azure SQL server. Using this option, an internal firewall rule with a starting and ending IP address of 0.0.0.0 is created, indicating that connections from within Azure are allowed, such as from Azure App Services. It is important to understand that enabling this option allows connections from Azure, including connections from other subscriptions. Thus, care and best practices should be implemented to make sure login and user permissions are only allowed to authorized users.

Azure SQL Database supports a maximum of 128 server-level firewall rules, but creating a large number of server-level firewall rules is not recommended. Uses for server-level firewall rules will be discussed shortly.

The Firewall Settings page only allows one operation per save action. For example, adding multiple IP address ranges, or adding an IP address range and deleting another range before saving the changes is not permitted. A single create/delete/edit operation is permitted per save action.

Server-level firewall rule names must be unique. When adding a firewall rule via the portal, and the name of the new firewall rule matches the name of an existing rule, you will be informed that firewall rule names must be unique and you will not be allowed to create the new rule as shown in Figure 2-4. Existing rules can be edited simply by clicking in the appropriate field.

It is a best practice to name the firewall rule so it will help you remember what the server-level firewall setting is for.

Figure 2-4 Unique firewall rule names

T-SQL

Server-level firewall rules can be managed and maintained using T-SQL through a set of system stored procedures and catalog views, including:

sp_set_firewall_rule System stored procedure to create a new or update an existing server-level firewall rule.

sp_delete_firewall_rule System stored procedure to delete server-level firewall rules.

sys.firewall_rules Catalog view that lists the current server-level firewall rules.

The following code example uses the sp_set_firewall_rule system stored procedure to create a new firewall rule with the name “accting,” a starting IP address of 192.168.1.11, and an ending IP address of 192.168.1.30. The sp_set_firewall_rule system stored procedure must be run in the master database.

EXECUTE sp_set_firewall_rule @name = N'accting', @start_ip_address = '192.168.1.11', @end_ip_address = '192.168.1.30'

Figure 2-5 shows the results of the T-SQL execution. First, the sys.firewall_rules catalog view is called to display the existing firewall rules, followed by the execution of sp_set_firewall_rule system stored procedure to create the new firewall rule. The procedure sys.firewall_rules is again called to show the creation of the new firewall rule.

Figure 2-5 Creating a new server-level firewall rule in T-SQL

Both system stored procedures and the catalog view is available only in the master database to the server-level principal login or Azure Active Directory principal.

Unlike the Azure portal, when creating a new firewall rule via T-SQL and specifying an existing firewall rule name as a parameter to sp_set_firewall_rule system stored procedure, Azure will update the existing firewall rule and not generate an error. It should also be noted that the very first server-level firewall rule cannot be created using T-SQL, but all subsequent rules can be. Initial server-level firewall rules must be created using the Azure portal, the Azure PowerShell, the Azure CLI, or the REST API.

When creating server-level firewall rules via T-SQL, you must connect to the SQL Database instance as a server-level principal or an Active Directory Administrator.

Azure Powershell

Azure PowerShell provides a set of cmdlets in which to manage server-level firewall rules, including:

Get-AzureRmSqlServerFirewallRule Returns a list of the existing server-level firewall rules.

New-AzureRmSqlServerFirewallRule Creates a new server-level firewall rule.

Set-AzureRmSqlServerFirewallRule Updates an existing server-level firewall rule.

Remove-AzureRmSqlServerFirewallRule Deletes the specified server-level firewall rule.

Microsoft provides two ways to execute these PowerShell cmdlets; through the PowerShell IDE or through the Azure Cloud Shell in the Azure Portal. The Azure Cloud Shell brings the PowerShell experience into the Azure Portal and allows you to easily discover and work with all Azure resources. The above PowerShell cmdlets work seamlessly in both, but the example below uses the Azure Cloud Shell.

The following code example creates a new server-level firewall rule named “engineering” on the server “demo908” in the RG-WestUS resource group with a starting IP address of 192.168.1.31 and an ending IP address of 192.168.1.40. Be sure to replace the resource group name with the appropriate name for your resource group.

New-AzureRmSqlServerFirewallRule -ResourceGroupName "RG-WestUS" -ServerName "demo908" -FirewallRuleName "engineering" -StartIpAddress "192.168.1.31" -EndIpAddress "192.168.1.40"

Figure 2-6 shows the execution of the New-AzureRmSqlServerFirewallRule PowerShell cmdlet to create a new firewall rule. The cmdlet was executed in the Azure Cloud Shell which creates a Cloud Shell and a PowerShell environment. The benefit to this is that because you are authenticated in the portal already, you can execute cmdlets such as New-AzureRmSqlServerFirewallRule without the necessity of executing additional cmdlets to authenticate and obtain other Azure environment and subscription information. In addition, the Azure Cloud Shell maintains the latest version of the Azure PowerShell cmdlets, thus you can be confident that you are working with the latest version in every Cloud Shell session.

Figure 2-6 also shows the Firewall Settings page with the newly created server-level firewall rule. The takeaway here is that PowerShell makes it easy to manage server-level firewall rules through a small set of cmdlets.

Figure 2-6 Creating a new Server-level firewall rule in PowerShell

You’ll notice that there are differences in creating and managing firewall rules when using T-SQL versus PowerShell. For example, PowerShell provides individual cmdlets to create, update, and delete firewall rules, whereas T-SQL uses a single system stored procedure to create and update a firewall rule. Items such as these are good to keep in mind when navigating between the technologies.

Azure CLI

The Azure CLI 2.0 is Azure’s new command-line interface for working with and managing Azure resources. It is optimized to work with Azure resources via the command line that work against the Azure Resource Manager. The following commands are used with the Azure CLI to manage server-level firewall rules:

az sql server firewall create Creates a server-level firewall rule.

az sql server firewall delete Deletes a server-level firewall rule.

az sql server firewall list Lists current server-level firewall rules.

az sql server firewall rule show Shows the details of a server-level firewall rule.

az sql server firewall rule update Updates an existing server-level firewall rule.

Similar to PowerShell, there are two ways in which to work with the Azure CLI. The first is to download and install the Azure CLI installer, which provides the command-line experience through a command window. This client can be installed on Windows, Linux, and the macOS.

You can either run the Azure CLI through the Bash Shell or through a normal Windows command window. If using a command window, open a command prompt as an administrator and execute the following to log in with your default subscription:

az login

You will be prompted to log in and enter an authentication code. Once authenticated, you can execute commands simply and easily. The following code example uses the Azure CLI to create a new server-level firewall rule directly within the command prompt window. Be sure to replace the resource group name with the appropriate name for your resource group.

az sql server firewall-rule create --resource-group RG-WestUS --server demo908 -n mrking --start-ip-address 192.168.1.41 --end-ip-address 192.168.1.50

Figure 2-7 shows the execution of the Azure CLI command and the new firewall rule in the Firewall Settings page in the Azure portal as the result of the Azure CLI command execution.

Figure 2-7 Creating a new Server-level firewall rule with the Azure CLI 2.0

Similar to PowerShell, the Azure CLI can be accessed through the Azure portal via the Azure Cloud Shell. Launch the Cloud shell from the top navigation bar in the Azure portal, then select the Bash option from the shell drop-down list, as shown in Figure 2-8.

Figure 2-8 Using the Azure CLI in the Azure Cloud Shell

The Azure CLI via the Azure Cloud Shell provides a streamlined experience similar to the PowerShell experience. The Azure CLI is best used for building automation scripts to work with the Azure Resource Manager.

Here are some closing thoughts on server-level firewall rules. As firewall rules are temporarily cached, it is recommended that you execute DBCC FLUSHAUTHCACHE on occasion, which will remove any cached entries and clean up the firewall rule list, thus improving connection performance.

Server-level firewall rules should be used sparingly. Consider the following for using server-level firewall rules:

For administrative functions

Multiple databases have the same access requirements

Amount of time spent configuring each database individually

It is highly recommended that no firewall rules be created with a starting IP address of 0.0.0.0 and an ending IP address of 255.255.255.255.

Database-level firewall rules

Database-level firewall rules provide a more granular level of security by allowing access only to a specified database. Unlike server-level firewall rules, database-level firewall rules can only be created using T-SQL.

The following T-SQL system stored procedures and catalog views are used to manage database-level firewall rules:

sys.database_firewall_rules Catalog view which lists the current database-level firewall rules.

sp_set_database_firewall_rule System stored procedure to create a new or update an existing database-level firewall rule.

sp_delete_database_firewall_rule System stored procedure to delete database-level firewall rules.

The following code example uses the sp_set_database_firewall_rule system stored procedure to create a new firewall rule with the name “accting,” a starting IP address of 192.168.1.11, and an ending IP address of 192.168.1.30.

EXECUTE sp_set_database_firewall_rule @name = N'accting', @start_ip_address = '192.168.1.1', @end_ip_address = '192.168.1.10'

Figure 2-9 shows the results of the T-SQL execution. First, the sys.database_firewall_rules catalog view is called to display the existing firewall rules for the selected database, followed by the execution of sp_set_database_firewall_rule system stored to create the new firewall rule. The catalog view sys.database_firewall_rules is again called to show the creation of the new firewall rule.

Figure 2-9 Creating a database-level firewall rule with T-SQL

Similar to server-level firewall rules, you can have a maximum of 128 database-level firewall rules. It is also recommended that database-level firewall rules be used whenever possible to help ensure the portability of your database.

As a reminder, the order in which the incoming connection checks the firewall rules is important. The firewall first checks the incoming IP against the ranges specified at the database-level. If the incoming IP address is within one of the ranges, the connection is allowed to the SQL Database. If the incoming IP does not match one of the specified ranges at the database-level, the server-level firewall rules are then checked. If there is still no match, the connection request fails. If there is a match at the server-level, the connection is granted and the connection is granted to all databases on the logical server.

Troubleshooting the database firewall

Even though you may have your firewall rules configured correctly, there may still be times when you cannot connect, and the connections experience does not behave as you would expect. As such, the following points can help you pinpoint the connection issue.

Local firewall configuration Azure SQL Database operates over TCP endpoint 1433. If this port is not opened and enabled on your computer or company firewall, you will not be able to connect.

Login and Password issues Many times the connections issues are login and password related. For example, perhaps the user name and password are not typed correctly, or the login does not have permissions on the Azure SQL Database or server.

NAT (Network address translation) There will be times when the IP address displayed on the Firewall Settings page is different from the IP address being used to connect to Azure. You can see an example of this in Figure 2-10. This typically happens when your computer is behind a company firewall due to NAT. An example of this can be seen in Figure 2-10. The IP address that should be used is the external (NAT) IP address, shown in Figure 2-10, is the one specified from the SQL Server login dialog.

Figure 2-10 Different IP address due to NAT

Microsoft states that it may take up to five minutes for the new firewall rules to take effect. Additionally, if your IP address is a dynamic IP (for example, your network provider changes your IP address every few days), this could also be a symptom.

A key takeaway from these points is that the firewall rules only provide clients with an opportunity to attempt to connect to the server and database. Appropriate and necessary credentials must still be provided to connect.

Configure Always Encrypted for Azure SQL Database

SQL Database provides encryption for data in use using Always Encrypted, a featured designed specifically to protect and safe-guard sensitive data such as social security numbers, national identification numbers, credit card numbers, and phone numbers, just to name a few.

One of the main benefits of Always Encrypted is that it provides client applications to safely and securely encrypt and decrypt data without ever revealing the encryption keys to the database engine. Thus, by providing a necessary separation between those who can view the data and those who manage the data, Always Encrypted ensures that no unauthorized users have access to the encrypted data.

Always Encrypted is achieved by installing an Always Encrypted enabled driver on the client machine (for example, .NET Framework 4.6 or later, JDBC, or Windows ODBC), making encryption completely transparent to the application. The driver has the responsibility of automatically encrypting and decrypting sensitive data at the client within the application. When data is generated at the client, the driver encrypts the data before sending it to the database engine. Likewise, the driver transparently decrypts incoming data from query results retrieved from encrypted database columns.

As mentioned earlier, Always Encrypted uses keys to encrypt and decrypt data. Two types of keys are used; a column encryption key (CEK) and a column master key (CMK). Column encryption keys are used to encrypt data in an encrypted column. Column master keys are key-protecting “keys,” in that they encrypt one or more column encryption keys. It is up to you to specify the information about the encryption algorithm and cryptographic keys to be used when configuring column encryption.

The database engine never stores or uses the keys of either type, but it does store information about the location of the column master keys. Always Encrypted can use external trusted key stores such as Azure Key Value, Windows Certificate Store on a client machine, or a third-party hardware security module. Since this skill focuses on Azure SQL Database, the example will use Azure Key Vault.

Whenever the client driver needs to encrypt and decrypt data, the driver will contact the specified key store, which contains the column master key. It then uses the plaintext column encryption key to encrypt the parameters. The retrieved key is cached to reduce the number of trips to the key store and improve performance. The driver substitutes the plaintext values of the parameters for the encrypted columns with their encrypted values, which it then sends to the entire query for processing.

To enable Always Encrypted within the application, you must first set up the required authentication for the Azure Key Vault (for this example), or whatever key store you are using. When the application requests the key from the key store, it needs authentication to do so. Thus, the first step is to set up a user that will be used to authenticate the application.

In the Azure portal, select the Azure Active Directory option from the left navigation pane. In the App registrations pane, click the New application registration button on the toolbar, which will open the Create pane, shown in Figure 2-11. In the Create pane, provide a Name and Sign-on URL. The Sign-on URL can be anything as long as it is a valid URL. For example, in Figure 2-11 the Name is myClientApp and the Sign-On URL is http://myClientApp. Leave the Application type as Web App / API. Click Create.

Figure 2-11 Registering a new Azure active directory application

Back in the App registrations pane, click on your newly created app, which will open the Settings pane. In the Settings pane, click on the Required Permissions option, which will open the Required Permissions pane, shown in Figure 2-12.

Figure 2-12 Adding application permissions

In the Required Permissions pane, click on the Add button on the toolbar, which will open the Add API access pane. In the API access pane, select the Select API option, which will open the Select an API pane shown in Figure 2-13.

Figure 2-13 Selecting the Windows Azure Service Management API

In the Select an API pane, select Windows Azure Service Management API option, then click Select to close the Select an API pane. The Enable Access pane will automatically open, shown in Figure 2-14. Check the box in the Delegated Permission section for Access Azure Service Management as organization users (preview), then click Select.

Figure 2-14 Enabling delegated permissions to the API

Back on the Add API access pane, ensure that there are green checkmarks for items 1 and 2, then click Done. The Required Permission pane should now list two APIs: the Windows Azure Service Management API which you just added, and the Windows Azure Active Directory API. Close the Required Permissions pane.

Back on the App registrations settings pane for the client application, click the Keys option which will open the Keys pane, shown in Figure 2-15.

Figure 2-15 Creating the Application Key

In the Keys pane you need to create a new key. Enter a description and set the expiration date. Your options for the expiration date are 1 year, 2 years, or Never Expires. The key value will be assigned with the key that is saved. Select 1 year, then click the Save button. As shown in Figure 2-16, the key is automatically generated.

Figure 2-16 Copying the new Application key

You will need this key in your application, so copy this key and save it somewhere, then close the Keys blade. You also need the Application ID for this application, so back on the Settings blade, click the Properties option and copy the Application ID from the Properties pane, shown in Figure 2-17. Save this value somewhere as you will use it shortly as well.

Figure 2-17 Getting the Application ID

The next step is to create the Azure Key Value in which to store the Always Encrypted keys. In the Azure portal, click New, then select Security + Identity, then select the Key Vault option shown in Figure 2-18.

Figure 2-18 Creating a new Azure Key Vault

The Create key vault pane will open, shown in Figure 2-19. In this pane, provide a Name, select the appropriate Resource Group (or leave the Create new option selected if a Resource Group does not exists), accept the default values for the Pricing Tier Access Policies, and Advanced Access Policies, ensure the Pin to dashboard option is checked, then click Create.

Figure 2-19 Configuring the Azure Key Vault

When the Key Vault is created, open the Key Vault by clicking on the tile on the dashboard. In the Key Vault Properties pane, click the Access Policies tile, which will open the Access Policies pane. One user should be listed, which should be you, becauseyou are the creator and owner of the Key Vault.

However, the client application needs to authenticate to the Key Vault via a user that has permissions to the Key Vault. That could be you and your user listed, but that is not best practice. This is the reason you went through the steps of creating a new Active Directory application for authenticating to the Key Vault.

Click Add New in the Access Policies pane, then in the Add Access Policy pane, expand the Select principal option that opens the Principal pane, shown in figure 2-20.

Figure 2-20 Adding a new user access policy

In the Principal pane, start typing the name of the application you created in Azure Active Directory. In this example, the application was named myClientApp, so as I started to type “myc,, the myClientApp was displayed. Click on the appropriate principal and click Select.

In the Add access policy pane, click on the dropdown arrow for the Key permissions. Select the following Key permissions as shown in Figure 2-21:

Get

List

Create

Unwrap Key

Wrap Key

Verify

Sign

The above list are the minimal permissions needed for the principal to access and use the keys for Always Encrypted. You may choose more, but the list above is the minimum needed.

The Wrap Key permission uses the associated key to protect a symmetric key, while the Unwrap Key permission uses the associated key to unprotect the wrapped symmetric keys.

Figure 2-21 Configuring access policy permissions

Click OK on the Add access policy pane. The Access policies pane should now look like Figure 2-22.

Figure 2-22 Newly added User Access Policy

So far you have used the portal to configure the Azure Key Vault, but this configuration can also be done via PowerShell. PowerShell contains two cmdlets that allow you to create the Azure Key Vault and set the access policy. Again, in your code be sure to replace the name of the Resource Group with the name of your Resource Group. If you already have an Azure Key Vault with the name specified below, be sure to supply a different name in the code.

New-AzureRmKeyVault -VaultName 'aekeyvault' -ResourceGroupName 'RG-WestUS' -Location 'West US' Set-AzureRmKeyVaultAccessPolicy -VaultName 'aekeyvault' -ResourceGroupName 'RG-WestUS' -ServicePricipleName 'myClientApp' -PermissionsTokeys get,wrapkey,unwrapkey,sign,verify,list,get,create

At this point you are ready to implement and configure Always Encrypted. First, create a database in which you can work with. You can do that via the Azure portal or via T-SQL.

CREATE DATABASE [database1] (EDITION = 'Basic', SERVICE_OBJECTIVE = 'Basic', MAXSIZE = 2 GB); GO

With the database created, use the following T-SQL to create a table.

CREATE TABLE [dbo].[Customer]( [CustomerId] [int] IDENTITY(1,1), [FirstName] [nvarchar](50) NULL, [LastName] [nvarchar](50) NULL, [MiddleName] [nvarchar](50) NULL, [StreetAddress] [nvarchar](50) NULL, [City] [nvarchar](50) NULL, [ZipCode] [char](5) NULL, [State] [char](2) NULL, [Phone] [char](10) NULL, [CCN] [nvarchar](16) NOT NULL, [BirthDate] [date] NOT NULL PRIMARY KEY CLUSTERED ([CustomerId] ASC) ON [PRIMARY] ); GO

The created table has several columns in which sensitive data is gathered, such as credit card number (CCN) and birth date. This example will use those columns to implement and configure Always Encrypted.

Once the table is created, right-mouse click on the table in the Object Explorer window in SQL Server Management Studio and select Encrypt Columns, as shown in Figure 2-23.

Figure 2-23 Starting the Always Encrypted wizard

The Always Encrypted Wizard opens with the Introduction page. Click Next to move to the Column Selection page, shown in Figure 2-24. The Column Selection page is where you select the columns you want to encrypt, the type of encryption, and what column encryption key to use. Select the CCN and Birthdate columns as shown in Figure 2-24.

Once those columns are selected, you now need to select the encryption type. Click the encryption type dropdown arrow for each column. Notice that you have two options for the encryption type: Deterministic and Randomized.

Deterministic encryption uses a method that always generates the same encrypted value for any given plain text value. Deterministic also allows you to group, filter, join tables, and do equity searches on encrypted values.

Randomized encryption uses a method that encrypts data in a less predictable manner. This encryption is more secure, but does not allow grouping, indexing, joining, or equity searches.

Notice that the Encryption Key value defaults to CEK_Auto1 (New), meaning that since you have yet to create any keys, the wizard will create a new column encryption key for you.

Figure 2-24 Selecting the columns to be encrypted

Also notice in Figure 2-24 that there is a warning on the CCN column. This is just for informational purposes, alerting how it will change collation of the column to binary collation. Always Encrypted only supports binary collations, so the wizard will be changing the collation for that column so that it can encrypt the column. You should take note of what the current collation of the column is in case you need to roll it back. The wizard has no knowledge of the columns prior state.

Also note that indexed columns encrypted using randomized encryption is not supported. Additionally, the following column characteristics are not supported:

Columns that are keys for nonclustered indices using a randomized encrypted column as a key column.

Columns that are keys for clustered indices using a randomized encrypted column as a key column.

Primary key columns when using randomized encryption.

Click Next to take you to the Master Key Configuration page of the wizard, shown in Figure 2-25. Since a column master key has not been created previously, the default value is to auto generate a column master key, which is what is needed.

By default, the wizard selects to store the column master key in a Windows certificate store, so change the option to store the key in Azure Key Vault. When selecting the Azure Key Vault option, you will be asked to authenticate and sign in to Microsoft Azure, at which point the wizard will retrieve your Azure Key Vault names. If you have multiple Azure subscriptions, select the appropriate subscription in which you created the Azure Key Vault.

Select the appropriate Azure Key Vault from the dropdown and then click Next.

Figure 2-25 Master Key configuration

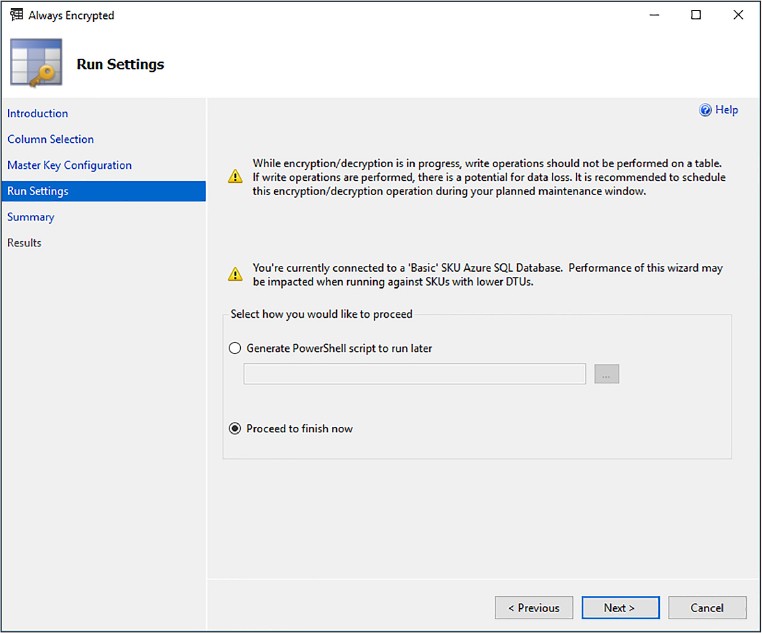

The Run Settings page of the wizard simply gives you a couple of options of proceeding. One of the options will generate a PowerShell script that you can then run later to set up and configure Always Encrypted, and the other option is to proceed and finish now.

Also on this page is several warnings. The first warning states that while the encryption/decryption is taking place, no write operations should be performed on the table. Any write operations that are performed during the encryption/decryption process may cause a loss of data.

The other warning simply states that depending on the SQL Database performance SKU (Basic, Standard, Premium), the performance may vary. In this case, it’s a small table with no data so it does not take too long. Click Next on the Run Settings wizard to kick off the process and take you to the Results page, shown in Figure 2-26.

There are three steps in the process. The first step creates the column master key, the next step creates the column encryption key, and the last step performs the actual encryption. Again, since there is no data to encrypt, the process is quite fast. Depending on how much data exists in the table and the type of data, the last step in the process could take some time.

Figure 2-26 Always Encrypted process completion

When the encryption process is complete, click Close. At this point, Always Encrypted for Azure SQL Database has been configured. The following code snippets show how to implement Always Encrypted in your application. The full code can be downloaded from this book’s homepage.

First, you will need the database connection string, which you can get from the Azure portal. You will then need the ClientId and Secret key. The ClientId is the ApplicationId you copied in Figure 2-17. The Secret is the key value you copied in Figure 2-17.

static string connectionString = @"<connection string from portal>"; static string clientId = @""; static string clientSecret = "";

The following code snippet is the critical piece of code that enables Always Encrypted in your database connection string. You can either use the SqlConnectionStringBuilder class as shown in the code snipped below, or you can simply add the keywords “Column Encryption Setting=Enabled” manually to your connection string. Either is fine, but to enable Always Encrypted, you must use one of these methods.

SqlConnectionStringBuilder connStringBuilder = new SqlConnectionStringBuilder(connectionString); connStringBuilder.ColumnEncryptionSetting = SqlConnectionColumnEncryptionSetting.Enabled; connectionString = connStringBuilder.ConnectionString;

The code below registers the Azure Key Vault as the application’s key provider and uses the ClientId and Secret to authenticate to the Azure Key Vault.

_clientCredential = new ClientCredential(clientId, clientSecret); SqlColumnEncryptionAzureKeyVaultProvider azureKeyVaultProvider = new SqlColumnEncryptionAzureKeyVaultProvider(GetToken); Dictionary<string, SqlColumnEncryptionKeyStoreProvider> providers = new Dictionary<string, SqlColumnEncryptionKeyStoreProvider>(); providers.Add(SqlColumnEncryptionAzureKeyVaultProvider.ProviderName, azureKeyVaultProvider); SqlConnection.RegisterColumnEncryptionKeyStoreProviders(providers);

As discussed previously, client applications must use SqlParameter objects when passing plaintext data. Passing literal values without using the SqlParameter object will generate an exception. Thus, the following code shows how to use parameterized queries to insert data into the encrypted columns. Using SQL parameters allows the underlying data provider to detect data targeted encrypted columns.

string sqlCmdText = @"INSERT INTO [dbo].[Customer] ([CCN], [FirstName], [LastName], [BirthDate]) VALUES (@CCN, @FirstName, @LastName, @BirthDate);"; SqlCommand sqlCmd = new SqlCommand(sqlCmdText); SqlParameter paramCCN = new SqlParameter(@"@CCN", newCustomer.CCN); paramCCN.DbType = DbType.String; paramCCN.Direction = ParameterDirection.Input; paramCCN.Size = 19; sqlCmd.ExecuteNonQuery();

When running the full application code, the encrypted data is decrypted at the client and displayed in clear text as seen in Figure 2-27.

Figure 2-27 Viewing decrypted data via an application

However, as seen in Figure 2-28, querying the data directly from within SQL Server Management Studio shows the data encrypted, as it should be.

Figure 2-28 Viewing encrypted data in the database

To test this further, right mouse click in the query window in SQL Server Management Studio and select Connection > Change Connection from the context menu, opening up the SQL Server connection dialog, shown in Figure 2-29. In the connection dialog, click the Options button to display the connection properties. Click the Additional Connection Parameters tab and add the following (as shown in Figure 2-29):

Column Encryption Settings=Enabled

Click the Login tab and type in your authentication password, then click Connect. Once authenticated, re-execute the SELECT statement to query the table, and you will see that the encrypted data now comes back as clear text, as shown in Figure 2-30.

Figure 2-29 Setting the Always Encrypted additional connection string parameter in SSMS

The data comes back as clear text because the same client driver used in the client application was also called and used when the query was executed in the query window. When the SELECT statement was issued, the Column Encryption Setting=Enabled connection string parameter was added to the query connection string, at which point the data was encrypted.

Figure 2-30 Viewing decrypted data in the database

The principal objective of Always Encrypted is to ensure that sensitive data is safe and secure, regardless of where your data resides (on-premises or in the cloud). A key value-proposition of Always Encrypted is that it assures users that sensitive data can be stored in the cloud safely and securely.

To further ensure proper security, there are a few key management considerations to keep in mind.

Never generate column master keys or column encryption keys on a computer that is hosting your database. Use a separate computer to generate the keys which should be dedicated for key management.

Implement a key management process. Identify roles of who should and should not have access to keys. A DBA, for example, may not generate keys, but may manage the key metadata in the database since the metadata does not contain plaintext keys.

Periodically replace existing keys with new keys, either due to compliance regulations or if your keys have been compromised.

Always Encrypted can be configured either using SQL Server Management Studio, PowerShell, or T-SQL. T-SQL has a limitation in that you cannot provision column master or column encryption keys, nor can you encrypt existing data in selected database columns. The following table details what tasks can be accomplished with SSMS, PowerShell, and T-SQL.

Table 2-1 Always Encrypted functionality with the different tools

Task |

SSMS |

PowerShell |

T-SQL |

Provision CMK and CEK |

Yes |

Yes |

No |

Create key metadata in the database |

Yes |

Yes |

Yes |

Create new tables with encrypted columns |

Yes |

Yes |

Yes |

Encrypt existing data in selected database columns |

Yes |

Yes |

No |

Configure cell level encryption

Another method for encrypting your data is via cell-level encryption (CLE) to help protect and secure your data at the data tier. Similar to Always Encrypted, in which specific columns are encrypted, cell-level encryption is used to encrypt specific columns or cells. Cell-level encryption uses a symmetric encryption and is often referred to or called column-level encryption. A key benefit of cell-level encryption, like Always Encrypted, is that you can encrypt individual cells/columns with different keys. Cell-level encryption is also quite fast and is a great option when working with large amounts of data.

With Cell-level Encryption, encryption and decryption is done by explicitly calling the ENCRYPTBYKEY or DECRYPTBYKEY functions. These functions require the use of a symmetric key, which must be opened to be used. Both ENCRYPTBYKEY or DECRYPTBYKEY return a varbinary type, thus when storing CLE-encrypted data, the column type must be varbinary with a maximum size of 8000 bytes.

Cell-level encryption uses a database master key for its encryption, which is a symmetric key used to protect private keys and asymmetric keys within the database. When created, the database master key is encrypted using the AES_256 algorithm along with a user-supplied password. A database master key is created by issuing the following T-SQL statement:

CREATE DATABASE [database2] (EDITION = 'Basic', SERVICE_OBJECTIVE = 'Basic', MAXSIZE = 2 GB); GO USE database2 GO CREATE MASTER KEY ENCRYPTION BY PASSWORD = 'AwesomeP@ssw0rd' GO

In order to create the database master key and any certificates, you need the following permisions:

CONTROL permission on the database

CREATE CERTIFICATE permission on the database

ALTER permission on the table

With the master key created, you need to create a certificate and symmetric key, which can be done via the following T-SQL:

CREATE CERTIFICATE CreditCardCert01

WITH SUBJECT = 'Customer Credit Card Numbers';

GO

CREATE SYMMETRIC KEY CreditCards_Key01

WITH ALGORITHM = AES_256

ENCRYPTION BY CERTIFICATE CreditCardCert01;

GO

The Create Certificate statement creates a database-level securable that follows the X.509 standards. Because the certificate was created with the database master key, the ENCRYPTION BY PASSWORD option is not required.

The certificate can be time-based, meaning, by supplying a START_DATE and EXPIRY_DATE parameter you can specify when the certificate becomes valid and when it expires. By default, if the START_DATE parameter is not specified it becomes valid when the certificate is created. Likewise, if the EXPIRY_DATE parameter is not specified, the certificate expires one year from when after the START_DATE.

The Create Symmetric statement creates a symmetric key in the database and is encrypted using the certificate created above. A symmetric key must be encrypted using either a certificate, password, another symmetric key, or asymmetric key. A single symmetric key actually be encrypted using multiple encryption types.

In this example, the key was encrypted with the AES_256 algorithm. Starting with SQL Server 2016, all algorithms other than AES_128, AES_192, and AES_256 are no longer supported.

To be cell-level encryption to work, execute the following T-SQL to create a table and insert records into the table.

CREATE TABLE [dbo].[Customer](

[CustomerId] [int] IDENTITY(1,1),

[FirstName] [nvarchar](50) NULL,

[LastName] [nvarchar](50) NULL,

[MiddleName] [nvarchar](50) NULL,

[StreetAddress] [nvarchar](50) NULL,

[City] [nvarchar](50) NULL,

[ZipCode] [char](5) NULL,

[State] [char](2) NULL,

[Phone] [char](10) NULL,

[CCN] [nvarchar](19) NOT NULL,

[BirthDate] [date] NOT NULL

PRIMARY KEY CLUSTERED ([CustomerId] ASC) ON [PRIMARY] );

GO

INSERT INTO Customer (FirstName, LastName, CCN, BirthDate)

VALUES ('Brady', 'Hunter', '1234-5678-1234-5678', '01/04/1964')

INSERT INTO Customer (FirstName, LastName, CCN, BirthDate)

VALUES ('Scott', 'Gaster', '5678-1234-5678-1234', '06/20/1976')

INSERT INTO Customer (FirstName, LastName, CCN, BirthDate)

VALUES ('Phillip', 'Green', '7890-1234-7890-1234', '09/02/1973')

INSERT INTO Customer (FirstName, LastName, CCN, BirthDate)

VALUES ('Joey', 'Klein', '3456-7890-3456-7890', '08/31/1985')

INSERT INTO Customer (FirstName, LastName, CCN, BirthDate)

VALUES ('Robert', 'DAntoni', '6789-4321-6789-4321', '05/06/1991')

GO

Next, execute the following T-SQL, which will modify the table and add a column in which to store the encrypted credit card numbers. As mentioned earlier, the EncryptByKey and DecryptByKey functions return a varbinary type and since the encrypted data will be stored in this new column, the data type will be varbinary.

ALTER TABLE Customer

ADD CCN_Encrypted varbinary(128);

GO

In order to use the EncryptByKey function, the symmetric key must first be opened to encrypted data. The next statement encrypts the values in the CCN column using the EncryptByKey function (which uses the symmetric key) and saves the results in the CCN_Encrypted column.

OPEN SYMMETRIC KEY CreditCards_Key01

DECRYPTION BY CERTIFICATE CreditCardCert01;

UPDATE Customer

SET CCN_Encrypted = EncryptByKey(Key_GUID('CreditCards_Key01')

, CCN, 1, HashBytes('SHA1', CONVERT( varbinary

, CustomerId)));

GO

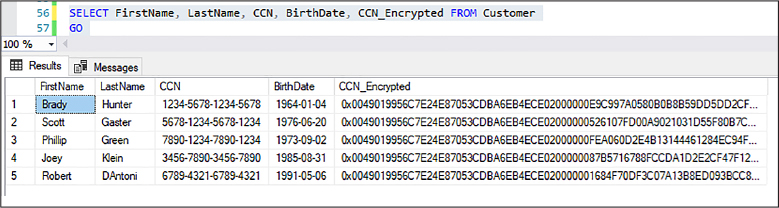

You can view the results of the encryption by executing a simple SELECT statement. The encrypted data is shown in Figure 2-31.

SELECT FirstName, LastName, CCN, BirthDate, CCN_Encrypted FROM Customer GO

Figure 2-31 Viewing encrypted data with Cell-level Encryption

To verify that the encryption, reopen the symmetric key and then issue the following T-SQL which uses the DecryptByKey function to decrypt the values in the CCN_Encrypted column. If the decryption was successful, the original number will match the decrypted number, as shown in Figure 2-32.

SELECT CCN, CCN_Encrypted

AS 'Encrypted card number', CONVERT(nvarchar,

DecryptByKey(CCN_Encrypted, 1 ,

HashBytes('SHA1', CONVERT(varbinary, CustomerID))))

AS 'Decrypted card number' FROM Customer;

GO

Figure 2-32 Using symmetric keys to decrypt data

In this example, the HashBytes function was used to hash the input. When using the HashBytes function, algorithms of MD2, MD4, MD5, SHA, SHA1, and SHA2 can be used. Also, in this example an authenticator was used. Authenticators are additional data that gets encrypted along with the data to be stored encrypted. When the data is decrypted, the authenticator is also specified. If the incorrect authenticator is not specified, the data is not decrypted and a NULL is returned. In this example, the column CreditCardID is used as the authenticator.

You likewise could encrypt data using simple symmetric encryption. For example:

UPDATE Customer

SET CCN_Encrypted = EncryptByKey(Key_GUID('CreditCards_Key01'), CCN);

GO

While a case for using TDE and CLE together could be made, they are typically used for different purposes. CLE has advantages over TDE when encrypting small amounts of data, but when performance is not too much of a concern, then CLE should be considered. With CLE, the data is still encrypted when it is loaded into memory and allows for a higher degree of customization.

On the other hand, TDE can be very simple to deploy with no changes to the application or database and the performance is better over CLE.

Configure Dynamic Data Masking

Dynamic Data Masking (DDM) is a security feature that limits data exposure by masking it to non-privileged users. It provides the ability to designate how much of the sensitive data should be readable with minimal impact on the application layer. Dynamic data masking simply hides the sensitive data in the result set of a query, while keeping the database unchanged. Masks are applied at query time when the results are returned.

Dynamic data masking can be applied to any data deemed as sensitive data by you, such as credit card numbers, social security or national identification numbers, email address, or phone numbers. Dynamic data masking includes several built-in masking functions, but also provides the ability to create a custom mask.

The following example will walk through implementing Dynamic Data Masking. To begin, create a new Azure SQL Database either via SQL Server Management Studio or the Azure portal. Once created, connect to that database with SQL Server Management studio and execute the following T-SQL which creates a Customer table with several columns that will contain sensitive data (such as email, credit card number, and social security number). The script then inserts five rows of data.

CREATE TABLE [dbo].[Customer](

[CustomerId] [int] IDENTITY(1,1),

[FirstName] [nvarchar](50) NULL,

[LastName] [nvarchar](50) NULL,

[MiddleName] [nvarchar](50) NULL,

[StreetAddress] [nvarchar](50) NULL,

[City] [nvarchar](50) NULL,

[ZipCode] [char](5) NULL,

[State] [char](2) NULL,

[Phone] [char](10) NULL,

[Email] [nvarchar] (50) NULL,

[SSN] [char] (11) NOT NULL,

[CCN] [nvarchar](19) NOT NULL,

[BirthDate] [date] NOT NULL

PRIMARY KEY CLUSTERED ([CustomerId] ASC) ON [PRIMARY] );

GO

INSERT INTO Customer (FirstName, LastName, Email, SSN, CCN, BirthDate)

VALUES ('Brady', 'Hunter', '[email protected]', '999-99-0001', '4833-1200-7350-8070',

'01/04/1964')

INSERT INTO Customer (FirstName, LastName, Email, SSN, CCN, BirthDate)

VALUES ('Scott', 'Gaster', '[email protected]', '999-99-0002', '5145-1800-0184-8667',

'06/20/1976')

INSERT INTO Customer (FirstName, LastName, Email, SSN, CCN, BirthDate)

VALUES ('Phillip', 'Green', '[email protected]', '999-99-0003', '3767-6401-5782-0031',

'09/02/1973')

INSERT INTO Customer (FirstName, LastName, Email, SSN, CCN, BirthDate)

VALUES ('Joey', 'Klein', '[email protected]', '999-99-0004',

'3797-0931-5791-0032', '08/31/1985')

INSERT INTO Customer (FirstName, LastName, Email, SSN, CCN, BirthDate)

VALUES ('Robert', 'DAntoni', '[email protected]', '999-99-0005',

'4854-1299-2820-4506', '05/06/1991')

GO

Once the script executes, log in to the Azure Portal and go to the database you created and click on the Dynamic Data Masking option that will open the Masking Rules pane, shown in Figure 2-33.

Figure 2-33 Selecting the columns to mask

No masking rules have been created, but the pane does show recommended columns to mask. You can click on the Add Mask button for the specific column, or you can click on the Add Mask button on the toolbar.

Clicking the Add Mask button next to the recommended field is a quick way to add the mask. Many times the portal is smart enough to recognize what type of field it is and apply the appropriate mask. However, it is not guaranteed, so the quickest and most efficient way to add the column mask is to click the Add Mask button on the top toolbar that opens the Add masking rule pane, shown in Figure 2-34. This pane will also open if the portal can’t appropriately apply the mask when clicking the Add Mask button next to the recommended field.

In the Add masking rule pane, simply select the column you want to mask and then select the appropriate mask. In Figure 2-34, the credit card number column is select and thus the credit card mask is selected.

Figure 2-34 Configuring a column mask

Notice the different types of default masks, including:

Default Full masking according to the data types of the designated fields.

Credit Card Exposes the last four digits of the credit card number and adds a constant string as a prefix in the form of a credit card.

Email Exposes the first letter, then replaces the domain with XXX.com.

Random Number Generates a random number based on the supplied upper and lower boundaries.

Custom Text Exposes the first and last characters based on the supplied Prefix and Suffix, then adds a custom padding string in the middle.

The custom text masking function can be used to mask a social security number. Figure 2-35 shows how to use the Prefix and Suffix and padded string to mask all but the last four numbers of a social security number.

Figure 2-35 Creating a custom data mask

Be sure to click the Add button on the Add masking rule pane once the masking rule is configured. After all the masks are applied, click the Save button back on the Masking rules pane. If you don’t click Save, the masking rules will not be applied.

Querying the data shows data unmasked, as shown in Figure 2-36. Even though the masking rules have been applied, the data is returned in clear text because the user that is logged in is an administrator.

Figure 2-36 Query data with unmasked results

To test the masking, a new user can be created, which simply has SELECT permissions on the table. The following T-SQL creates a new user called TestUser and grants SELECT permission on the Customer table.

CREATE USER TestUser WITHOUT LOGIN; GRANT SELECT ON Customer TO TestUser;

To test masking, the execution context of the current session can be changed to the TestUser, as shown in the following T-SQL. Once the session execution context is changed, the SELECT statement can be reissued, which will be executed in the context of the TestUser.

Execute AS USER = 'TestUser' SELECT FirstName, LastName, Email, SSN, CCN FROM Customer

As shown in Figure 2-37, the Email, SSN, and CCN columns are displayed with their appropriate masks. It should be noted that the data in the underlying table is not masked, but rather the data is displayed with the corresponding mask.

Figure 2-37 Querying data with masked results

The session execution context can be switched back simply be executing the following T-SQL statement:

REVERT;

Dynamic Data Masking policies are made up of three components:

Users excluded from masking These are either SQL users or Azure Active Directory identities that get automatically unmasked data.

Masking rules Rules that define the designated fields to be masked and their corresponding masking functions.

Masking functions The methods/functions that control the data exposure.

Figure 2-33 shows these three components on the Masking rules blade. The top portion of that blade lists any defined masking rules. Once a masking rule is defined, such as the ones defined in Figures 2-34 and 2-35, it will be listed in the Masking Rules section.

Directly below that section is a section on the blade titled SQL Users Excluded From Masking. This is where you can specify a semicolon-separated list of users (either SQL users or Azure Active Directory identities) in which data masking will not apply.

Lastly, the masking functions are the built-in functions used to mask the data, which you can see in Figure 2-34. Together, these three components help define the data masking policies.

Managing DDM using T-SQL

Dynamic Data Masking can also be configured using T-SQL. The following T-SQL creates a table called Customer2 and defines built-in masking functions applied during table creation.

CREATE TABLE [dbo].[Customer2]( [CustomerId] [int] IDENTITY(1,1), [FirstName] [nvarchar](50) NULL, [LastName] [nvarchar](50) NULL, [MiddleName] [nvarchar](50) NULL, [StreetAddress] [nvarchar](50) NULL, [City] [nvarchar](50) NULL, [ZipCode] [char](5) NULL, [State] [char](2) NULL, [Phone] [char](10) NULL, [Email] [nvarchar] (50) MASKED WITH (FUNCTION = 'email()') NULL, [SSN] [char] (11) MASKED WITH (FUNCTION = 'partial(0, "XXX-XX-", 4)') NOT NULL, [CCN] [nvarchar](19) MASKED WITH (FUNCTION = 'partial(0, "xxxx-xxxx-xxxx-", 4)') NOT NULL, [BirthDate] [date] NOT NULL PRIMARY KEY CLUSTERED ([CustomerId] ASC) ON [PRIMARY] );

A mask can be removed from a column by using the DROP MASKED statement. For example:

ALTER TABLE Customer ALTER COLUMN Email DROP MASKED;

Managing DDM using PowerShell

Dynamic data masking can be configured using PowerShell cmdlets. Azure PowerShell comes with six cmdlets to create and configure masking rules and policies.

Get-AzureRmSqlDatabaseDataMaskingPolicy Gets the data masking policy for a database.

Get-AzureRmSqlDatabaseDaaMaskingRule Gets the data masking rules from a database.

New-AzureRmSqlDatabaseDataMaskingRule Creates a data masking rule for a database.

Remove-AzureRmSqlDatabaseDataMaskingRule Removes a data masking rule from a database.

Set-AzureRmSqlDatabaseDataMaskingRule Sets the properties of a data masking rule for a database.

Set-AzureRmSqlDatabaseDataMaskingPolicy Sets data masking for a database.

A masking policy is simply the combination of the set of rules that define the columns to be masked, the SQL or AAD users that get unmasked data in the query results, and the masking functions that control the exposure of data for the different scenarios. Thus, by creating a rule that applies the credit card mask to a CCN column, and optionally specifying the SQL or AAD users, a policy has been created. The PowerShell cmdlets allow the creation, modification, and retrieval of the rules and policies.

For example, you can create a new masking rule by executing the following (replacing the appropriate resource group and server names to match your names):

New-AzureRmSqlDatabaseDataMaskingRule -ResourceGroupName "RG-WestUS" -ServerName "demo908" -DatabaseName "database3" -SchemaName "dbo" -TableName "Customer2" -ColumnName "Email" -MaskingFunction "Email"

It should be clear that dynamic data masking should be used in conjunction with other security features to better secure sensitive data. Dynamic data masking can be used and is a complimentary security feature along with Always Encrypted, Row Level Security, and other security features. The purpose of dynamic data masking exists to limit the exposure of sensitive data to those who should not have access to it.

Creating a mask on a column does not prevent updates to that column. Meaning, even though a user many see the data as masked, that same user can update the data if they have permissions to do so. This means that a proper access control policy should be implemented to limit update permissions.

When using the SELECT INTO or INSERT INTO statements to copy data, if the source data has a masked column, the destination table will result in masked data in the target table. Also, Dynamic Data Masking is applied when running an import or export. Any database that contains masked columns will result in a backup file with masked data.

The system view sys.masked_columns can be queried to see what columns have a mask applied to them and what masking function is used. This view inherits from the sys.columns view that contains an is_masked column and masking_functions column. The following T-SQL returns a good summary view into the columns that are masked and their corresponding masking functions.

SELECT c.name, tbl.name as table_name, c.is_masked, c.masking_function

FROM sys.masked_columns AS c

JOIN sys.tables AS tbl

ON c.[object_id] = tbl.[object_id]

WHERE is_masked = 1;

For some final notes, a masking rule cannot be defined on the following column types:

A column encrypted with Always Encrypted

FILESTREAM

COLUMN_SET or a sparse column that is part of a column set

A computed column

There are a couple of caveats. If a computed column depends on a column with a MASK, then the computed column will return the masked data. Also, a column with data masking applied cannot be part of a FULLTEXT index.

Configure Transparent Data Encryption

Similar to cell-level encryption, Transparent Data Encryption (TDE) is used to encrypt data at rest. There are several differences between cell-level encryption (CLE) and TDE, one of which is that TDE will automatically encrypt and decrypt the data when it reads and writes the data to/from disk, whereas CLE required the use of the EncryptByKey and DecryptByKey functions. Transparent data encryption also differs from cell-level encryption by encrypting the storage of an entire database, not just a single cell or column. Other differences between CLE and TDE were discussed at the conclusion of the CLE section.

Transparent data encryption helps secure and protect your data by performing database encryption real-time without requiring changes to an application. Encryption is accomplished through a symmetric key called a database encryption key (DEK). The DEK key is protected by the transparent data encryption protector, which is either a service-managed certificate or an asymmetric key stored in Azure Key Vault. The transparent data encryption protector is set at the server level.

When the database starts up, the encrypted DEK is decrypted and then used for the encryption and decryption of the database files. Transparent data encryption performs real-time I/O encryption and decryption of the data at the page level, thus each page is encrypted before it is written to disk, and decrypted when read from disk and into memory.

For Azure SQL Database, TDE is enabled by default at the database level, as shown in Figure 2-38. At this level, TDE can either be turned on or off.

Figure 2-38 Configuring TDE

At the server level, the default setting for TDE in Azure SQL Database is for the database encryption key to be protected by a built-in server certificated. This certificate is unique for each server, but if geo-replication is enabled and a database is participating in a geo-replication relationship, the geo-secondary database will be protected by the primary server key. As a best practice, Microsoft rotates these certificates every 90 days. As shown in Figure 2-39, the Use Your Own Key option is disabled by default, thus the database encryption key is protected by a built-in certificate.

Figure 2-39 configuring bring your own key encryption

As mentioned previously, Azure SQL Database also supports Bring Your Own Key (BYOK), which provides the ability to have control over the TDE encryption keys and stores them in Azure Key Vault. To turn on BYOK, simply select Yes for the Use Your Own Key option for TDE at the server level, as shown in Figure 2-40.

Figure 2-40 selecting the key for bring your own encryption key

When selecting the option to Use Your Own Key, you will be prompted to select the Azure Key Vault and the symmetric key from that vault. When selecting the key, an existing key can be selected, or you have the option to create a new key, as shown in Figure 2-41.

Figure 2-41 creating a new encryption key

Once a key is selected or a new key is created, click Save back on the TDE pane.

Figure 2-42 saving the new encryption key

Clicking save will save the Transparent Data Encryption settings for the server, which is essentially setting the appropriate permissions, ensuring that the database encryption key is protected by the asymmetric key stored in the key vault.

When TDE is configured to use a key from the Azure Key Vault, the server sends the database encryption key of each TDE-enabled database to the Key Vault for a wrapkey request. Key Vault returns the encrypted database encryption key, which is stored in the user database. It is vital to remember that once a key is stored in the Key Vault, that key never leaves the Key Vault. The server can only send key operation requests to the TDE protector material with Key Vault.

The benefits of using Azure Key Vault are many, including:

Support for key rotation.

Key Vault is designed such that no one sees or extracts any encryption keys.

Central management of TDE encryption keys.

More granular control and increased transparency to self-manage the TDE protector.

Separation of keys and data management.

Managing TDE using T-SQL

Transparent Data Encryption can be managed with T-SQL at the database level through a small handful of T-SQL statements and DMVs (dynamic management views). TDE can be enabled and disabled at the database level through the ALTER DATABASE command as follows:

ALTER DATABASE Database1 SET ENCRYPTION OFF

The sys.dm_database_encryption_encryption_keys DMV shows information about the database encryption state and associated encryption keys. A corresponding DMV for the SQL Data Warehouse exists that provides the same information: sysdm_pdw_nodes_database_encryption_keys.

Currently there are no T-SQL statements that allow you to manage TDE at the server level.

Managing TDE using PowerShell

PowerShell provides a nice set of cmdlets with which to configure TDE. In order to use these cmdlets, you must be connected as an Azure owner, contributor, or SQL Security manager.

Set-AzureRmSqlDatabaseTransparentDataEncryption Enables or disables TDE for a database.

Get-AzureRmSqlDatabaseTransparentDataEncryption Gets the TDE state for as the database.

Get-AzureRmSqlDatabaseTransparentDataEncryptionActivity Checks the encryption progress for a database.

Add-AzureRmSqlServerKeyVaultKey Adds an Azure Key Vault key to a SQL server.

Get-AzureRmSqlServerKeyVaultKey Gets a SQL server’s Azure Key Vault keys.

Set-AzureRmSqlServerTransparentDataEncryptionProtector Sets the TDE Protector for a SQL server.

Get-AzureRmSqlServerTransparentDataEncryptionProtector Gets the TDE protector.

Remove-AzurermSqlServerKeyVaultKey Removes an Azure Key Vault key from a SQL server.

The following code snipped uses the Set-AzureRmSqlDatabaseTransparentDataEncryption cmdlet to enable TDE on database on server demo908 and database database4. When executing, be sure to replace the resources with the appropriate names in your environment.

Set-AzureRmSqlDatabaseTransparentDataEncryption -ResourceGroupName "RG-WestUS" -ServerName "demo908" -DatabaseName "database4" -State Enabled

Skill 2.2: Configure SQL Server performance settings

The most recent releases of SQL Server have come with significant built-in performance enhancements to ensure databases perform well. However, simply installing SQL Server is not enough, and there is not a built-in “make it go faster switch.” There are several post-installation steps to ensure SQL Server itself, as well as the databases, perform appropriately and effectively.

The knowledge and skill necessary to effectively optimize and improve database performance whether you are on-premises and in the cloud, and the skills in this section focus on the approach and steps necessary to monitor and configure your database for optimum database performance.

This skill covers how to:

Configure database performance settings

This section will focus primarily on the common configuration steps and tasks necessary to improve overall database performance. While the majority of the configuration settings can be done at the database level, there is one or two which are configured at the server level and should not be overlooked. The server and database level performance configuration settings include:

Power Plan (Server)

Trace Flags

Parallelism

Query Plan

Many of these performance configuration settings can be done with very little effort but can have a significant performance impact overall, but left unchecked (and improperly configured) can cause your SQL Server to unnecessarily slow down and work harder than it needs to.

Power Plan

Windows Power Plan is a Control Panel configuration setting that was introduced in Windows 2008. A “Power Plan” is a collection of hardware and software settings with the responsibility of managing how your computer manages power. The goal with Power Plan is two-fold; save energy and maximize performance. Thus, the idea behind the “Power Plan” is that Windows may, and does, throttle power to the CPUs to save energy.

The idea and concept behind the Windows Power Plan is good and for the most part, Windows Power Plan does a great job. However, it can wreak havoc on SQL Server. Power Plan throttles CPUs down when they are idle, and throttles them back up when the server is busy, essentially running your server, and SQL Server, at anywhere between 50-70% power.

The reality of this is that CPU-throttling does not respond well to CPU pressure, because in order to get the CPUs back up to 100% utilization, the CPUs need a sustained period of high CPU utilization (of the existing 50-70%) in order to trigger the throttle-up back up to 100% CPU utilization. This does not bode well for SQL Server because overall SQL Server performance will suffer. Queries will take longer to run, transactions will take longer, and on down the line. This applies to both on-premises environments as well as cloud-based IaaS environments.

By default, Windows sets the Power Plan to Balanced, meaning, that Windows will manage the hardware and software and come up with a plan to save energy and maximize performance. Luckily, there are two ways to check the throttling of your CPUs. The first is a third-party tool called CPU-Z (https://www.cpuid.com), and the other is through a Windows performance counter.

Figure 2-43 show the output from the CPU pressure on a real SQL Server box. The Specification is the speed that the processor is rated for, and the Core Speed is the actual running speed of the CPU. Here, the rated speed is 4.00 GHz, but the Core Speed is well below that.

In this case there is not a lot of activity on the server so the CPU was fluctuating quite a bit, and would fluctuate as low as 20-25% for the Core Speed.

Figure 2-43 Comparing rated CPU speed with actual CPU speed

Similar CPU performance information can be obtained through the Windows performance counter Processor Information\% of Maximum Frequency which shows current power level of the CPUs of the maximum frequency. As seen in Figure 2-44, the blue graph bar is tracking the % of Maximum Frequency, and while there are occasional spikes, the average level is around 20%.

Figure 2-44 Using Performance Monitor to track actual CPU utilization

While the Windows Power Plan is not a SQL Server configuration setting, nor is it a setting that is configured at the database level, it is something that needs to be addressed when configuring performance for SQL Server.

This configuration setting can be changed either through the Power Settings option in the Control Panel, or through the BIOS. Depending on the server model, and if the configuration is via the BIOS, power throttling might need to be disable in the BIOS.

To configure the Power Plan in the Control Panel, open the Control Panel and search for Power Options. In the search results, select Power Options, which should show that the Balanced power option is selected. Click the Change plan settings option, then select the Change advanced power settings option, which opens the Power Options dialog.

Figure 2-45 shows the Power Options dialog and the Balanced power plan selected by default. To change the plan, select the drop down, and select High performance to make that power plan the active plan.

Figure 2-45 Configuring Windows Power Plan

With no other changes to the system, either Windows or SQL Server, simply changing this setting alone can improve SQL Server and database performance.

While in the Advanced Settings dialog, also change the setting to Turn off hard disks. By default, the value for this setting is 20 minutes. Set this value to 0.

For Microsoft Azure virtual machines that are created from the Azure portal, both of these settings (Power Plan and Disk sleep setting) are configured appropriately and do not need to be changed. This applies to Windows VMs and SQL Server VMs.

Parallelism

Parallelism is both a server-level and database-level configuration setting that affects the database performance and applies to scenarios where SQL Server runs on a server that has more than one CPU.

Parallelism is the number of processors used to run a single T-SQL statement for each parallel plan execution. When SQL Server is installed on a multi-CPU system, SQL Server does its best to detect the best degree of parallelism.

Parallelism is configured by setting the Maximum Degree of Parallelism, or MaxDOP, value. There is both a server-level configuration value and a database configuration value for MaxDOP. Setting the server-level configuration value sets MaxDOP for all databases on the server. Setting MaxDOP at the database level configures MaxDOP and overrides the server-level configuration value for that database. This section will discuss configuring the setting at the server level.

By default, SQL Server sets the value to 0, which tells SQL Server to determine the degree of parallelism that essentially tells SQL Server to use all the available processors up to 64 processors.

Most PCs today that SQL Server is installed on are NUMA (Non-Uniform Memory Access), meaning that the CPUs are clustered in a way in which they can share memory locally, thus improving performance. Typically, a cluster will consist of four CPUs that are interconnected on a local bus to shared memory.

The easiest way to tell how many NUMA nodes you have is to open Task Manager and select the Performance tab. On the Performance tab, click on the CPU graph that displays a graph on the right. Right-click the graph on the right and select Change Graph To from the context menu. You should see a NUMA node option. If the NUMA node option is grayed out, you have one NUMA node. If it isn’t grayed out, then select that option. The number of graphs you see on the right hand side is how many NUMA nodes the computer has.

Knowing the number of NUMA nodes is important to appropriately configuring the MaxDOP setting. Best practice states that for NUMA computers, MaxDOP should be set at the number of CPUs per NUMA node, or eight, whichever is less. Put simply, the proper setting comes down to whether or not you have eight logical cores inside a NUMA node. For a single NUMA node with less than eight logical processors, keep the MaxDOP at or below the number of logical processors. If more than eight, set it to eight. The same thing applies to Multiple NUMA nodes.

Configuring MaxDOP appropriately can have a significant performance implication. Setting the value too high can decrease concurrency, causing queries to back up on worker threads, thus awaiting time to be executed on a CPU. When the number of parallel threads is high, SQL Server will take the path of keeping parallelized tasks within a single NUMA node. Often times this will cause threads of the same query to share CPU time with other threads of the same query. This can cause an imbalance if some CPU threads are handling parallel threads and other threads are handling single threads, resulting in some threads finishing much more quickly than others.

It is recommended that Maximum Degree of Parallelism, or MaxDOP, not be set to a value of one, because this will suppress parallel plan generation.

Another parallelism setting that should be looked at is Cost Threshold for Parallelism, which is an estimate of how much work SQL Server will do to complete a particular query plan task. The default value is five, which may or may not be good because at this value, queries do not need to be particularly large to be a considered for parallelism.

Cost Threshold for Parallelism is a server-level configuration setting. There is no database-level configuration setting for Cost Threshold for Parallelism.

Best practice states that this value be larger, which will prevent smaller queries being parallelized, thus freeing up threads on the CPU to concentrate on larger queries. Smaller queries might take a bit more time to complete, but it will increase concurrency without reducing MaxDOP for larger queries.

While the default value for this configuration setting is five, this is known to be low in most environments. Best practice states that this value be configured with a higher value depending on your workload, with suggested starting values ranging from 25 to 50 and adjusting appropriately.

Cost Threshold for Parallelism does not apply if your computer has only one logical processor, or if your MaxDOP is configured with a value of one.

Both of these settings can be configured through the Advanced page in the Server Properties page in SQL Server Management Studio, as shown in Figure 2-46. In this example, Maximum Degree of Parallelism has been set to a value of four and the Cost Threshold for Parallelism has been configured with a value of 30 for all the databases on the server.

Figure 2-46 Configuring Max Degree of Parallelism

Cost Threshold for Parallelism can also be configured in SQL Server using T-SQL as follows:

USE master GO EXEC sp_configure 'show advanced options', 1 ; GO RECONFIGURE GO EXEC sp_configure 'cost threshold for parallelism', 30 ; EXEC sp_configure 'max degree of parallelism', 4; GO RECONFIGURE GO

The Cost Threshold for Parallelism cannot be configured in Azure SQL Database, because this is a server-level setting. Configuring MaxDOP at the database level will be discussed later in this chapter.

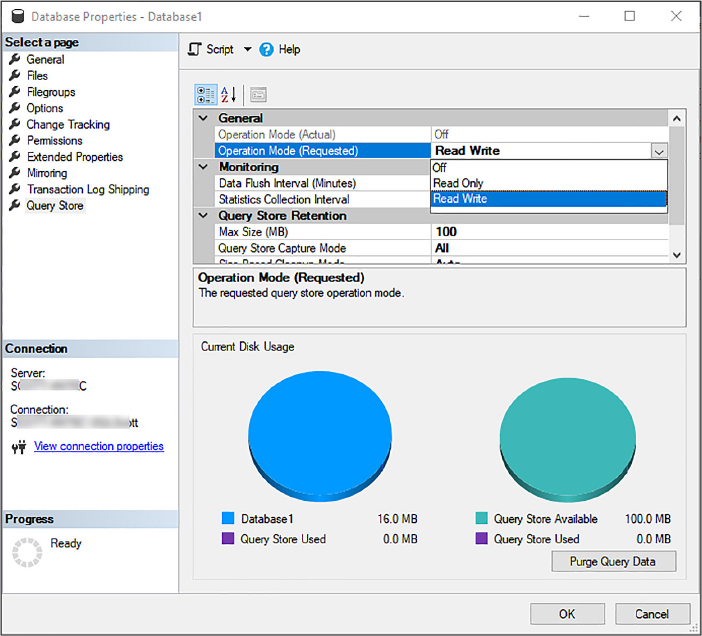

Query Store

The SQL Server Query Store is a feature introduced in SQL Server 2016 that provides real-time insights into query performance proactively. The idea and goal with Query Store is that it aims to simplify performance troubleshooting of queries by providing detailed look at query plan changes quickly and efficiently to identify poor performing queries.

Query store does this by capturing a history of all executed queries, their plans, and associated runtime statistics. This information is then available for you to examine through a nice user interface. The data is viewable via time slices so that you can see over a given period of time what changed and when and obtain detailed information about the usage patterns of each query.

It is commonly known that query execution plans can change over time for a variety of reasons. The most common reason is due to the change in statistics, but other reasons can include the modification of indexes or schema changes. Regardless of the reason, the problem has been that the cache where the cache plans are stored only stores the most recent execution plan. Additionally, plans can also be removed from the cache for a number of reasons as well, such as memory pressure. Add these all up and it makes troubleshooting query performance a problem.