Chapter 1 Implement SQL in Azure

Moving or provisioning new databases on the Azure platform requires a different set of skills than managing traditional on-premises installations. You need to have a broader understanding of cloud computing concepts and technologies like platform as a service, infrastructure as a service, and scripting.

Important: Have you read page xiii?

It contains valuable information regarding the skills you need to pass the exam.

Skills in this chapter:

Skill 1.1: Deploy a Microsoft Azure SQL Database

Skill 1.2: Plan for SQL Server installation

Skill 1.3: Deploy SQL Server instances

Skill 1.4: Deploy SQL Server databases to Azure virtual machines

Skill 1:1: Deploy a Microsoft Azure SQL Database

This skill deals with the process of setting up an Azure SQL Database. Azure SQL Database is a Platform as a Service (PaaS) offering that can be quite different from a traditional on-premises implementation of SQL Server.

This skill covers how to:

Choose a service tier

Unlike traditional on-premises architecture, or even Infrastructure as a Service (IaaS) architecture, Azure SQL Database is not configured by choosing CPU, RAM, and storage metrics. Microsoft has categorized several different service tiers:

Basic

Standard

Premium

Premium-RS

Your service tier affects several critical factors about your database including size, performance level, availability, and concurrency. Each tier of service has limits on sizing and performance capacity, which is measure in Database Transaction Units (DTUs). Let us examine each performance level in detail.

Basic The basic service tier is best suited for small databases that are in early stages of development. The size of this tier is limited to 2 gigabytes (GB) and computing resources are extremely limited.

Standard The standard tier offers a wide range of performance and is good for applications with moderate performance needs and tolerance for small amounts of latency. Your database can be up to 250 GB in size.

Premium The premium tier is designed for low latency, high throughput, mission critical databases. This service tier offers the broadest range of performance, high input/output (I/O) performance, and parallelism. This service tier offers databases up to 4 terabytes (TB) in size.

Premium RS The premium RS service tier is designed for databases that have I/O intensive workloads, but may not have the same availability requirements of premium databases. This could be used for performance testing of new applications, or analytical applications.

The fundamental concept of performance in Azure SQL Database is the Database Transaction Unit or DTU (you are introduced to this concept when you learn about elastic pools with the elastic Database Transaction Unit or eDTU). As mentioned earlier, when sizing an Azure SQL Database, you do not choose based on various hardware metrics, instead you choose a performance level based on DTUs.

There is one other significant feature difference as it relates to standard and basis tiers versus the premium performance tiers—in-memory features of SQL Server. Both columnstore and in-memory OLTP, which are features that are used for analytic and high throughput OLTP workloads are limited only to the premium and premium RS tiers. This is mainly due to resource limitations—at the lower service tiers there is simply not enough physical memory available to take advantage of these features, which are RAM intensive.

The basic performance level has a max DTU count as shown in Table 1-1.

Table 1-1 Basic performance level limits

Performance level |

Basic |

Max DTUs |

5 |

Max database size |

2 GB |

Max in-memory OLTP storage |

N/A |

Max concurrent workers (requests) |

30 |

Max concurrent logins |

30 |

Max concurrent sessions |

300 |

The standard performance level offers size increases, and increased DTU counts and supports increased concurrency (see Table 1-2).

Table 1-2 Standard performance tier limits

Performance level |

S0 |

S1 |

S2 |

S3 |

Max DTUs |

10 |

20 |

50 |

100 |

Max database size |

250 GB |

250 GB |

250 GB |

1024 GB |

Max in-memory OLTP storage |

N/A |

N/A |

N/A |

N/A |

Max concurrent workers (requests) |

60 |

90 |

120 |

200 |

Max concurrent logins |

60 |

90 |

120 |

200 |

Max concurrent sessions |

600 |

900 |

1200 |

2400 |

Recently, Microsoft made several additions to the standard database performance offerings (Table 1-3), both increasing the size and performance limits of the standard tier.

Table 1-3 Extended Standard Performance Tier Limits

Performance level |

S4 |

S6 |

S7 |

S9 |

S12 |

Max DTUs |

200 |

400 |

800 |

1600 |

3000 |

Max Database Storage |

1024 GB |

1024 GB |

1024 GB |

1024 GB |

1024 GB |

Max in-memory OLTP storage (GB) |

N/A |

N/A |

N/A |

N/A |

N/A |

Max concurrent workers (requests) |

400 |

800 |

1600 |

3200 |

6000 |

Max concurrent logins |

400 |

800 |

1600 |

3200 |

6000 |

Max concurrent sessions |

4800 |

9600 |

19200 |

30000 |

30000 |

The Premium performance tier (see Table 1-4) offers larger capacity, and greatly increased storage performance, making it ideal for I/O intensive workloads.

Table 1-4 Premium Performance Tier Limits

Performance level |

P1 |

P2 |

P4 |

P6 |

P11 |

P15 |

Max DTUs |

125 |

250 |

500 |

1000 |

1750 |

4000 |

Max database size |

500 GB |

500 GB |

500 GB |

500 GB |

4096 GB |

4096 GB |

Max in-memory OLTP storage |

1 GB |

2 GB |

4 GB |

8 GB |

14 GB |

32 GB |

Max concurrent workers (requests) |

200 |

400 |

800 |

1600 |

2400 |

6400 |

Max concurrent logins |

200 |

400 |

800 |

1600 |

2400 |

6400 |

Max concurrent sessions |

30000 |

30000 |

30000 |

30000 |

30000 |

30000 |

The Premium RS tier (see Table 1-5) is similar to the Premium tier in terms of performance, but with lower availability guarantees, making it ideal for test environments.

Table 1-5 Premium RS performance tier limits

Performance level |

PRS1 |

PRS2 |

PRS4 |

PRS6 |

Max DTUs |

125 |

250 |

500 |

1000 |

Max database size |

500 GB |

500 GB |

500 GB |

500 GB |

Max in-memory OLTP storage |

1 GB |

2 GB |

4 GB |

8 GB |

Max concurrent workers (requests) |

200 |

400 |

800 |

1600 |

Max concurrent logins |

200 |

400 |

800 |

1600 |

Max concurrent sessions |

30000 |

30000 |

30000 |

30000 |

Exam Tip

Exam Tip

It is important to understand the relative performance levels and costs of each service tier. You do not need to memorize the entire table, but you should have a decent understanding of relative performance and costs.

More Info Database Transaction Units

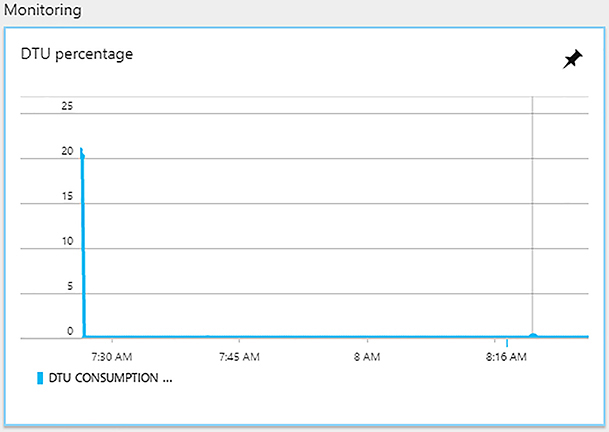

For a single database at a given performance level, Microsoft offers a performance level based on a specific, predictable level of performance. This amount of resources is a blended measure of CPU, memory, data, and transaction log I/O. Microsoft built this metric based on an online transaction processing benchmark workload. When your application exceeds the amount of any of the allocated resources, your throughput around that resource is throttled, resulting in slower overall performance. For example, if your log writes exceed your DTU capacity, you may experience slower write speeds, and your application may begin to experience timeouts. In the Azure Portal you can see your current and recent DTU utilization, shown in Figure 1-1.

Figure 1-1 A screen shot of the DTU percentage screen for an Azure SQL Database from the Azure Portal

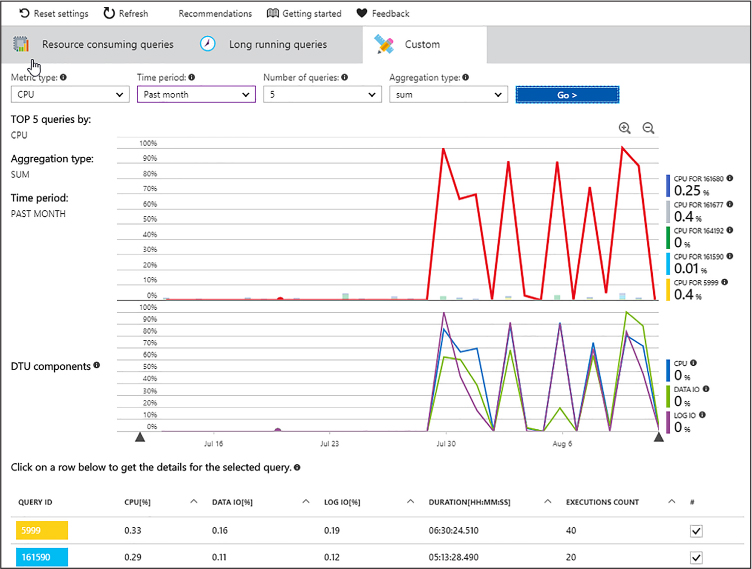

The Azure Portal offers a quick glance, but to better understand the components of your application’s DTU consumption by taking advantage of Query Performance Insight feature in the Azure Portal, you can click Performance Overview from Support and Troubleshooting menu, which shows you the individual resource consumption of each query in terms of resources consumed (see Figure 1-2).

Figure 1-2 A screen shot of the DTU percentage screen for an Azure SQL Database from the Azure Portal

The graphic in Figure 1-2 is built on top of the data collected by the Query Store feature that is present in both Azure SQL Database and SQL Server. This feature collects both runtime data like execution time, parallelism, and execution plan information for your queries. The powerful part of the Query Store is combining these two sets of data to make intelligent decisions about query execution. This feature supports the Query Performance Insight blade on the Azure Portal. As part of this feature you can enable the performance recommendations feature, which creates and removes indexes based on the runtime information in your database’s Query Store, and can changes query execution plans based on regression of a given query’s execution time.

More Info About Query Performance Insight

You can learn more about query performance insight at: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-query-performance.

The concept of a DTU can be very confusing to a DBA or developer who is used to choosing hardware based on specific requirements like amount of RAM and number of CPU cores. Microsoft has built the DTU model to abstract those hardware decisions away from the user. It is important to understand that DTUs represent relative performance of your database—a database with 200 DTUs is twice as powerful as one with 100 DTUs. The DTUs are based on the Azure SQL Database benchmark, which is a model that Microsoft has built to be a representative online transaction processing (OLTP) application, which also scales with service tier, and runs for at least one hour (see Table 1-6).

Table 1-6 Azure SQL Database Benchmark information

Class of Service |

Throughput Measure |

Response Time Requirement |

Premium |

Transactions per second |

95th percentile at 0.5 seconds |

Standard |

Transactions per minute |

90th percentile at 1.0 seconds |

Basic |

Transactions per hour |

80th percentile at 2.0 seconds |

More Info About SQL Database Benchmark

You can learn more about SQL Database Benchmark insight at: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-benchmark-overview.

Performance tuning

Before the Query Store and Query Performance Insight was available, a database administrator would have had to either use a third-party monitoring tool or build their own repositories to store information about the runtime history of their database. With these features in conjunction with auto-tuning features that have been released, the administrator can focus efforts on deeper tuning, building more optimal data structures, and developing more robust applications.

Automatic tuning

This is a feature that is unique to Azure SQL Database, and is only possible because of the power of cloud computing and machine learning elements that support Microsoft Azure. Proper index design and management is the key to relational database performance, whether you are in an on-premises environment or a platform as a service one. By monitoring your workloads Azure SQL Database can teach itself to identify and create indexes that should be added to your database.

In a traditional environment, this process consisted of the database administrator trying to track many queries, write scripts that would periodically collect data from various system views, and then take a best guess effort at creating the right set of indexes. The Azure SQL Database automated tuning model analyzes the workload proactively, and identifies queries that could potentially be run faster with a new index, and identifies indexes that may be unused or duplicated.

Azure also continually monitors your database after it builds new indexes to ensure that the changes help the performance of your queries. Automatic tuning also reverts any changes that do not help system performance. This ensures that changes made by this tuning process have no negative impact against your workloads. One set of relatively new automatic tuning features came with the introduction of compatibility level 140 into Azure SQL Database.

Even though Azure SQL Database does not have versions, it does allow the administrator or developer to set the compatibility level of the database. It does also support older compatibility levels for legacy applications. Compatibility level does tie back to the level at which the database optimizer operates, and has control over what T-SQL syntax is allowed. It is considered a best practice to run at the current compatibility level.

Azure SQL Database currently supports compatibility levels from 100 (SQL Server 2008 equivalent) to 140 (SQL Server 2017 equivalent). It is important to note that if you are dependent on an older compatibility level, Microsoft could remove them as product versions go off support. You can check and change the compatibility level of your database by using SQL Server Management studio, or the T-SQL, as shown in Figure 1-3.

Figure 1-3 Options Windows from SQL Server Management Studio showing compatibility level options

To determine the current compatibility levels of the database in T-SQL, you can execute the following query:

SELECT compatibility_level FROM sys.databases WHERE [name] = 'Your Database Name';

To change the compatibility level of the database using T-SQL, you would execute the following command replacing “database_name” with the name of your database:

ALTER DATABASE database_name SET COMPATIBILITY_LEVEL = 140;

Performance enhancements in compatibility level 140

Compatibility level 140 introduces several new features into the query optimization process that further improve the automated tuning process. These features include:

Batch mode memory grant feedback

Batch mode adaptive join

Interleaved query execution

Plan change regression analysis

Let’s look at each of these features in detail.

Batch mode memory grant feedback

Each query in SQL database gets a specific amount of memory allocated to it to manage operations like sorting and shuffling of pages to answer the query results. Sometimes the optimizer grants too much or too little memory to the query based on the current statistics it has on the data, which may affect the performance of the query or even impact overall system throughput. This feature monitors that allocation, and dynamically changes it based on improving future executions of the query.

Batch mode adaptive join

This is a new query operator, which allows dynamic selection to choose the most optimal join pattern based on the row counts for the queries at the time the query is executed.

Interleaved Execution

This is designed to improve the performance of statements that use multi-statement table valued functions (TVFs), which have traditionally had optimization issues (in the past the optimizer had no way of knowing how many rows were in one of these functions). This feature allows the optimizer to take count of the rows in the individual TVF to use an optimal join strategy.

Plan change regression analysis

This is probably the most interesting of these new features. As data changes, and perhaps the underlying column statistics have not been updated, the decisions the query optimizer makes may be based on bad information, and lead to less than optimal execution plans. Because the Query Store is maintaining runtime information for things like duration, it can monitor for queries that have suddenly had execution plan changes, and had regression in performance. If SQL Database determines that the plan has caused a performance problem, it reverts to using the previously used plan.

More Info About Database Compatibility Levels

You can learn more about database compatibility levels at: https://docs.microsoft.com/en-us/sql/t-sql/statements/alter-database-transact-sql-compatibility-level.

Choosing an initial service tier

While Microsoft gives guidance for the type of application that should use each database, there is a wide range of potential performance tiers and costs associated with that decision. Given the importance of the database tier to overall application performance, it is important to choose correctly.

The first part of making this decision is understanding the nature of your application—is it an internet-facing application that will see large scale and requires the database to store session state? Or is it a batch processing application that needs to complete its work in an eight-hour window? The former application requires extremely low levels of latency and would mostly be placed in the premium storage tier, with adjustments to up the performance curve for peak times to optimize cost. An example of this might mean keeping the database at the P4 performance level during off-peak times, but using P11 for high loads like peak business hours, or holidays for retailers. For the batch processing application, an S2 or S3 may be a good starting point. The latency incurred does not matter so long as the batch processing occurs within its eight-hour window.

For most applications, the S2 or S3 tiers are a good starting point. For applications that rely on intensive CPU and I/O operations, the premium tier is a better fit, offering more CPU and starting at 10x I/O performance over the standard tier. The premium RS tier can be a good fit for performance testing your application because it offers the same performance levels as the premium tier, but with a reduced uptime service level agreement (SLA).

More Info About Azure SQL Database Performance Tiers

You can learn more about Azure SQL Database service tiers at https://docs.microsoft.com/en-us/azure/sql-database/sql-database-service-tiers.

Changing service levels

Changing the service level of the database is always an option—you are not locked into the initial size you chose at the time of creation. You review elastic pools later in this chapter, which give more flexibility in terms of scale. However, scaling an individual database is still an option.

When you change the scale of an individual database, it requires the database to be copied on the Azure platform as a background operation. A new replica of your database is created, and no data is lost. The only outage that may occur is that in-flight transactions may be lost during the actual switchover (should be under four seconds, and is under 30 seconds 99 percent of the time). It is for this reason that it is important to build retry logic into applications that use Azure SQL Database. During the rest of the resizing process the original database is available. This change in service can last a few minutes to several hours depending on the size of the database. The duration of the process is dependent on the size of the database and its original and target service tiers. For example, if your database is approaching the max size for its service, the duration will be significantly longer than for an empty database. You can resize your database via the portal (Figure 1-4), T-SQL, or PowerShell. Additional options for making these changes include using the Azure Command Line Interface or the Rest API for Azure SQL Database.

Exam Tip

Exam Tip

Remember how to choose the right service tier based on the application workload and performance requirements.

Figure 1-4 Portal experience for changing the size of Azure SQL DB

You can also execute this change in T-SQL.

ALTER DATABASE [db1] MODIFY (EDITION = 'Premium', MAXSIZE = 1024 GB, SERVICE_OBJECTIVE = 'P15');

The only major limitation around resizing individual databases is size. Performance level has some correlation with storage. For example, the database that is being resized in the above T-SQL example is now a P15, which supports up to 4 TB of data. If for example the database contained 2.5 TB of data, you would be limited to the P11 or P15 performance level because those support 4 TB, whereas the P1-P6 databases only supports 500 GB.

Create servers and databases

When talking about Platform as a Service offerings there are always many abstractions of things like hardware and operating systems. Remember, nearly everything in Microsoft Azure is virtualized or containerized. So, what does this mean for your Azure SQL Database? When you create a “server” with multiple databases on it, those databases could exist in different virtual machines than your “server.” The server in this example is simply a logical container for your databases; it is not specific to any piece of hardware.

Now that you understand that your “server” is just a logical construct, you can better understand some of the concepts around building a server (see Figure 1-5). To create your server, you need a few things:

Server Name Any globally unique name.

Server admin login Any valid name.

Password Any valid password.

Subscription The Azure subscription you wish to create this server in. If your account has access to multiple subscriptions, you are in the correct place.

Resource Group The Azure resource group associated with this server and databases. You may create a new resource group, or use an existing resource group.

Location The server can only exist in one Azure region.

Figure 1-5 Creating an Azure SQL Database Server in the Azure Portal

In earlier editions of Azure SQL Database, you were required to use a system-generated name; this is no longer the case; however, your name must be globally unique. Remember, your server name will always be servername.database.windows.net.

Other Options for creating a logical server

Like most services in Azure, Azure SQL Database offers extensive options for scripting to allow for automated deployment. You can use the following PowerShell command to create a new server:

PS C:>New-AzureSqlDatabaseServer -Location "East US" -AdministratorLogin "AdminLogin" -AdministratorLoginPassword "Password1234!" -Version "12.0"

The Azure CLI is another option for creating your logical server. The syntax of that command is:

az sql server create --name YourServer--resource-group DemoRG --location $location

--admin-user "AdminLogin" --admin-password "Password1234!"

To run these demos you need Azure PowerShell. If you are on an older version of Windows you may need to install Azure PowerShell. You can download the installer at: https://www.microsoft.com/web/handlers/webpi.ashx/getinstaller/WindowsAzurePowershellGet.3f.3f.3fnew.appids.

You can also install using the following PowerShell cmdlet:

# Install the Azure Resource Manager modules from the PowerShell Gallery Install-Module AzureRM

More Info About Azure CLI and SQL Database

You can learn more the Azure CLI and database creation at: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-get-started-cli.

Database and server firewall rules

One of the concepts of Azure SQL Database is that it is exposed over the Internet via a TCP endpoint over port 1433. This can sound a little bit scary—your database is open over the Internet? However, Microsoft provides you with multiple levels of security to secure your data and databases. Figure 1-6 provides an overview of how this security process works. There are two sets of firewall rules. The first is the database level firewall rule, which is the more granular of these two rules. The database level rule is set within the individual database where it can be viewed in the catalog view sys.database_firewall_rules. You can set these database rules using T-SQL within the database, however they may also be set using PowerShell, the Azure CLI, or the REST API interface. These rules as mentioned are specific to an individual database, if you need to replicate them across multiple databases you need to include that as part of your deployment scripts. You may also delete and update these firewall rules using all aforementioned methods. An example of the T-SQL to create a database level firewall rule is as follows:

EXECUTE sp_set_firewall_rule @name = N'ContosoFirewallRule', @start_ip_address = '192.168.1.1', @end_ip_address = '192.168.1.10'

Server level firewall rules on the other hand, can only be set through the Azure Portal, PowerShell, Azure CLI, the Rest API, or in the master database of the logical server. You can view server level firewall rules from within your Azure SQL Database by querying the catalog view sys.firewall_rules.

A server-level firewall rule is less granular than the database rule—an example of where you might use these two features in conjunction would be a Software as a Service application (SaaS) where you have a database for each of your customers in a single logical server. You might whitelist your corporate IP address with a server-level firewall rule so that you can easily manage all your customer databases, whereas you would have an individual database rule for each of your customers to gain access to their database.

Figure 1-6 Azure SQL Database firewall schematic

As mentioned, there are several ways to set a firewall rule at the server level. Here is an example using PowerShell.

New-AzureRmSqlServerFirewallRule -ResourceGroupName "Group-8" ' -ServerName "servername" -FirewallRuleName "AllowSome" -StartIpAddress "192.168.1.0" -EndIpAddress "192.168.1.4"

Here is an example using the Azure CLI

az sql server firewall-rule create --resource-group myResourceGroup

--server yourServer -n AllowYourIp --start-ip-address 192.168.1.0 --end-ip-address

192.168.1.4

In both examples, a range of four IP addresses is getting created. All firewall rules can either be a range or a single IP address. Server level firewall rules are cached within Azure to improve connection performance. If you are having issues connecting to your Azure SQL Database after changing firewall rules consider executing the DBCC FLUSHAUTHCACHE command to remove any cached entries that may be causing problems, from a machine that can successfully connect to your database.

Exam Tip

Exam Tip

Remember how to configure firewall settings using both PowerShell and the Azure Portal.

Connecting to Azure SQL Database from inside of Azure

You may have noticed that in Figure 1-5 there was a check box that says, Allow Azure Services To Access This Server.” This creates a server level firewall rule for the IP range of 0.0.0.0 to 0.0.0.0, which indicates internal Azure services (for example Azure App Services) to connect to your database server. Unfortunately, this means all of Azure can connect to your database, not just your subscription. When you select this option, which may be required for some use cases, you need to ensure that the security within your database(s) is properly configured, and that you are auditing traffic to look for anomalous logins.

Auditing in Azure SQL Database

One of the benefits of Azure SQL Database is its auditing functionality. In an on-premises SQL Server, auditing was commonly associated with large amounts of performance overhead, and was used rarely in heavily regulated organizations. With Azure SQL Database, auditing runs external to the database, and audit information is stored on your Azure Storage account, eliminating most concerns about space management and performance.

Auditing does not guarantee your regulatory compliance; however, it can help you maintain a record of what changes occurred in your environment, who accessed your environment, and from where, and allow you to have visibility into suspected security violations. There are two types of auditing using different types of Azure storage—blob and table. The use of table storage for auditing purposes has been deprecated, and blob should be used going forward. Blob storage offers greater performance and supports object-level auditing, so even without the deprecation, it is the better option.

More Info About Azure Compliance

You can learn more about Azure compliance practices at the Azure Trust Center: https://azure.microsoft.com/support/trust-center/compliance/.

Much like with firewall rules, auditing can be configured at the server or the database level. There are some inheritance rules that apply here. An auditing policy that is created on the logical server level applies to all existing and newly created databases on the server. However, if you enable blob auditing on the database level, it will not override and change any of the settings of the server blob auditing. In this scenario, the database would be audited twice, in parallel (once by the server policy, and then again by the database policy). Your blob auditing logs are stored in your Azure Storage account in a container named “sqldbauditlogs.”

More Info About Azure SQL Db Audit File Formats

You can learn more about Azure SQL Database Auditing here: https://go.microsoft.com/fwlink/?linkid=829599.

You have several options for consuming these log files from Azure SQL Database. You can view them within the Azure Portal, as seen in Figure 1-7. Or you can consume them using the Sys.fn_get_audit_file system function within your database, which will return them in tabular format. Other options include using SSMS to save the audit logs into an XEL or CSV file, or even a SQL Database table, or using the Power BI template created by Microsoft to access your audit log files.

Figure 1-7 View Audit Log option in Azure SQL Database Blade in Azure Portal

Much like the rest of the Azure SQL Database platform, auditing can be configured using PowerShell or the Rest API, depending on your automation needs.

More Info About Azure SQL Db Audit Data Analysis

Learn more about auditing, and automation options for configuring auditing here: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-auditing.

SQL Database Threat Detection

Unlike auditing, which is mostly replicating the behavior of auditing in an on-premises SQL Server, Threat Detection is a feature that was born in Azure, and is very dependent on background Azure compute resources to provide higher levels of security for your databases and applications. SQL Threat Detection uses more advanced methodology to protect your database from common security threats like SQL Injection, suspicious database activities, and anomalous login patterns.

SQL Injection is one of the most common vulnerabilities among web applications. This occurs when a hacker determines that a website is passing unchecked SQL into its database, and takes advantage of this by generating URLs that would escalate the privileges of an account, or get a list of users, and then change one of their passwords.

Threat detection gives you several types of threats to monitor and alert on:

All

SQL Injection

SQL Injection Vulnerability

Anomalous client login

The best practice recommendation is just to enable all threat types for your threat detection so you are broadly protected. You can also supply an email address to notify in the event of a detected threat. A sample email from a SQL Injection vulnerability is in Figure 1-8.

Figure 1-8 SQL Injection Vulnerability email

Microsoft will link to the event that triggered the alert to allow you to quickly assess the threat that is presented. Threat detection is an additional cost option to your Azure SQL Database, and integrates tightly with Azure Security Center. By taking advantage of machine learning in the Azure Platform, Threat Detection will become smarter and more reactive to threats over time.

Backup in Azure SQL Database

One of the benefits of Azure SQL Database is that your backup process is fully automated.

As soon as your database is provisioned it is backed up, and the portal allows for easy point in time recovery with no manual intervention. Azure SQL Database also uses Azure read-access geo-redundant storage (RA-GRS) to provide redundancy across regions. Much like you might configure in an on-premises SQL Server environment Azure SQL Database takes full, differential, and transaction log backups of your database. The log backups take place based on the amount of activity in the database, or at a fixed time interval. You can restore a database to any point-in-time within its retention period. You may also restore a database that was deleted, if you are within the retention period for that database.

It is important to note that the service tier of your database determines your backup retention (the basic tier has a five-day retention period, standard and premium have 35 days). In many regulated industries backups are required to be retained for much longer periods—including up to seven years for some financial and medical systems. So, what is the solution? Microsoft has a solution that is used in conjunction with the Azure Recovery Services component that allows you to retain weekly copies of your Azure SQL Database backups for up to 10 years (see Figure 1-9).

Figure 1-9 Azure Portal Long-Term Backup Retention configuration

To take advantage of the long-term retention feature, you need to create an Azure Recovery Vault in the same Azure region as your Azure SQL Database. You will then define a retention policy based on the number of years you need to retain your backups. Because this feature uses the Azure Backup services infrastructure, pricing is charged at those rates. There is a limit of 1000 databases per vault. Additionally, there is a limit of enabling 200 databases per vault in any 24-hour period. It is considered a best practice to use a separate vault for each Azure SQL Database server to simplify your management.

Restoring a database from long-term storage involves connecting to the backup vault where your database backups are retained and restoring the database to its server, much like the normal Azure SQL Database restore process.

More Info About Restoring Long-Term Azure SQL Database Backups

Restoring from long-term backup involves a different process than normal restores—learn more here: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-long-term-backup-retention-configure.

Azure SQL Database pricing includes up to 200 percent of your maximum provisioned database storage for your backups. For example, a standard tier database would have 500 GB of backup associated with it. If your database exceeds that 200 percent threshold, you can either choose to have Microsoft support reduce your retention period, or pay extra for additional backup storage, which is priced at the standard RA-GRS pricing tier. Reasons why your database may exceed the 200 percent threshold are databases that are close to the maximal size of the service tier that have a lot of activity increasing the size of transaction log and differential backups.

Azure SQL Database backups are encrypted if the underlying database is using transparent data encryption (TDE). As of early 2017, Microsoft has automatically enabled TDE for all new Azure SQL Databases. If you created your database before then, you may want to ensure that TDE is enabled, if you have a requirement for encrypted backups.

Exam Tip

Exam Tip

Remember how to configure long-term backup retention and how to restore an Azure SQL Database to a point-in-time.

High availability and disaster recovery in Azure SQL Database

One of the benefits of using the platform as a service offering is that many things are done for you. One of those includes high availability—local high availability is configured automatically for you. There are always three copies of your database to manage things like patching and transient hardware failures. This protects you in the event of any failures that happen within a local Azure region. However, to protect your database and application against broader regional failures or to give your application global read-scale, you will want to take advantage of the Active Geo-Replication feature in Azure SQL Database.

Active geo-replication allows you to have up to four readable secondary replicas of your database in the region of your choosing (see Figure 1-10). These secondary replicas can be used strictly for disaster recovery or can be used for active querying. This protects your application against larger regional failures, and provides resiliency to allow you to perform operations like rolling application upgrades and schema changes. Azure makes recommendations as to the best region for your geo-replica—this is based on the paired region concept in Azure. This paired region concept is not a limiter—you can build your replicas in any supported region in Azure. Many organizations do this to provide global read-scale for applications that are distributed globally. You can put a replica of the database much closer to your users reducing latency and improving overall performance.

More Info About Azure Paired Regions

Azure uses paired regions as a key DR concept that respects geo-political boundaries, learn more about this concept here: https://docs.microsoft.com/en-us/azure/best-practices-availability-paired-regions.

Figure 1-10 Geo-Replication for Azure SQL Database from Azure Portal

Configuring geo-replication requires you to have a logical server in each region you want to geo-replicate to. Configuring a second logical server is the only configuration that is required; no network or other infrastructure components are required. Your secondary database can run at a lower DTU level than your primary to reduce costs, however it is recommended to run with no less than half of the DTUs of the primary so that the replication process can keep up. The important metric to monitor for this is log IO percentage. For example if your primary database is an S3 (with 100 DTUs) and its log IO percentage was at 75 percent, your secondary would need to have at least 75 DTUs. Since there is no performance level with 75 DTUs, you would need an S3 as your secondary. Azure SQL Database requires that your secondary be on the same performance tier as the primary, for example it would not be supported to have a P1 primary and an S0 secondary, but you could have a S3 primary and an S0 secondary.

The administrator typically manages the failover process under normal circumstances, however in the event of an unplanned outage, Azure automatically moves the primary to one of the secondary copies. If after the failure, the administrator would like to be moved back to the preferred region, the administrator would need to perform a manual failover.

Automatic failover with failover groups

Failover groups increase the utility of geo-replicas by supporting group level replication for databases and automated failover processing. More importantly this feature allows applications to use a single connection string after failover. There are few key components to failover groups:

Failover Group Unit of failover can be one or many databases per server, which are recovered as a single unit. A failover group must consist of two logical servers.

Primary Server The logical server for the primary databases.

Secondary Server The logical server, which hosts the secondary databases. This server cannot be in the same region as the primary server.

There are a few things to keep in mind with failover groups—because data replication is an asynchronous process, there may be some data loss at the time of failure. This is configurable using the GracePeriodWithDataLossHours parameter. There are also two types of listener endpoints: read-write and read-only to route traffic to either the primary (for write activity) or to a group of secondary replicas (for read activities). These are DNS CNAME records that are FailoverGroupName.database.windows.net.

Geo-replication and failover groups can be configured in the Azure Portal, using PowerShell (see below example), or the REST API.

# Establish Active Geo-Replication $database = Get-AzureRmSqlDatabase -DatabaseName mydb -ResourceGroupName ResourceGroup1 -ServerName server1 $database | New-AzureRmSqlDatabaseSecondary -PartnerResourceGroupName ResourceGroup2 -PartnerServerName server2 -AllowConnections "All"

Create a sysadmin account

Unlike SQL Server, where many users can be assigned the System Admin role, in Azure SQL Database there is only one account that can be assigned server admin. If your Azure subscription is configured with Azure Active Directory, this account can be an Azure Active Directory (AAD) group (not to be confused with on-premises Active Directory). Using an AAD group is the best practice for this admin account, because it allows multiple members of a team to share server admin access without having to use a shared password.

You can set the Active Directory admin for a logical server using the Azure Portal as seen in Figure 1-11. The only requirement for implementing this configuration is that an Azure Active Directory must be configured as part of the subscription.

Figure 1-11 Azure Portal Azure Active Directory Admin configuration screen

Azure Active Directory and Azure SQL Database

Azure Active Directory gives a much more robust and complete security model for Azure SQL Database than merely using SQL logins for authentication. Azure AD allows you to stop the spread of identities across your database platform. The biggest benefit of this solution is the combination of your on-premises Active Directory being federated to your Azure Active Directory and offering your users a single-sign on experience.

In configurations with Active Directory Federation Services (ADFS), users can have a very similar pass-through authentication experience to using a Windows Authentication model with SQL Server. One important thing to note with ADFS versus non-ADFS implementations of hybrid Active Directory—in non-ADFS implementations the hashed values of on-premises user passwords are stored in the Azure AD because authentication is performed within Azure. In the example shown in Figure 1-12, where the customer is using ADFS, the authentication first routes to the nearest ADFS server, which is behind the customer’s firewall. You may notice the ADALSQL in that diagram which is the Active Directory Authentication Library for SQL Server, which you can use to allow your custom applications to connect to Azure SQL Database using Azure Active Directory authentication.

Azure Active Directory offers additional benefits, including easy configuration for multi-factor authentication, which can allow verification using phone calls, text messages, or mobile application notification. Multi-factor authentication is part of the Azure Active Directory premium offering.

Figure 1-12 Azure AD Authentication Model for Azure SQL Database

Configuring logins and users with Azure AD is similar to using Windows Authentication in SQL Server. There is one major difference concerning groups—even though you can create a login from an on-premises Active Directory user, you cannot create one from an on-premises Active Directory group. Group logins must be created based on Azure Active Directory groups. In most cases, where you will want to replicate the on-premises group structure, you can just create holding groups in your Azure AD that have a single member, the on-premises Active Directory group. There are several options for authentication to your Azure SQL Database, as shown in Figure 1-13.

Figure 1-13 SQL Server Management Studio Options for Authentication

Windows Authentication Not supported for Azure SQL Database.

SQL Server Authentication Traditional authentication model where the hashed credentials are stored in the database.

Active Directory Universal Authentication This model is used when multi-factor authentication is in place, and generates a browser-based login experience that is similar to logging into the Azure Portal.

Active Directory Password Authentication This model has the user enter their username in [email protected] format with their Azure Active Directory password. If MFA is enabled this will generate an error.

Active Directory Integrated This model is used when ADFS is in place, and the user is on a domain joined machine. If ADFS is not in place, connecting with this option will generate an error.

Some other recommendations from Microsoft for this include setting timeout values to 30 seconds because the initial authentication could be delayed. You also want to ensure that you are using newer versions of tools like SQL Server Management Studio, SQL Server Data Tools, and even bcp and sqlcmd command line tools because older versions do not support the Azure Active Directory authentication model.

Exam Tip

Exam Tip

Remember how to configure Azure Active Directory authentication for Azure SQL Database.

Configure elastic pools

All the topics you have read about so far refer to single database activities. Each database must be sized, tuned, and monitored individually. As you can imagine, in a larger organization or SaaS application that supports many customers it can be problematic to manage each database individually, and it may lead to overprovisioning of resources and additional costs associated with meeting performance needs. Elastic pools resolve this problem by provisioning a shared pool of resources that is shared by a group; like individual databases, elastic pools use a concept of eDTUs, which is simply the concept of DTUs applied to a group of databases. This concept allows databases to better share resources and manage peak processing loads. An easy thought comparison is that of a traditional SQL Server instance housing multiple databases from multiple applications.

Within a given pool a set eDTU is allocated and shared among all of the databases in the pool. The administrator can choose to set a minimum and maximum eDTU quota to prevent one database from consuming all the eDTUs in the pool and impacting overall system performance.

When to choose an elastic pool

Pools are a well suited to application patterns like Software as a Service (SaaS) where your application has many (more than 10) databases. The performance pattern that you are looking for is where DTU consumption Is relatively low with some spikes. This pattern can lead to cost savings even with as few as two S3 databases in a single pool. There are some common elements you want to analyze when deciding whether or not to put databases in a pool:

Size of the databases Pools do not have a large amount of storage. If your databases are near the max size of their service tier, you may not get enough density to be cost effective.

Timing of peak workloads Elastic pools are ideal for databases that have different peak workloads. If all of your databases have the same peak workload time, you may need to allocate too many eDTUs.

Average and peak utilization For databases that have minimal difference between their average and peak workloads, pools may not be a good architectural fit. An ideal scenario is where the peak workload is 1.5x its average utilization.

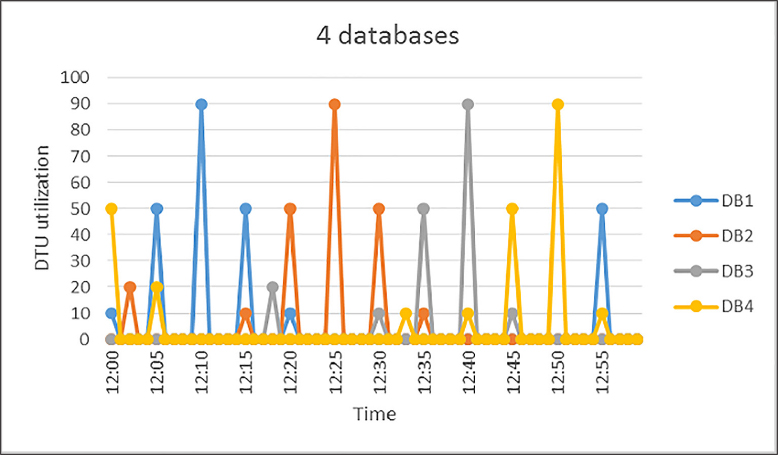

Figure 1-14 shows an example of four databases that are a good fit for an elastic pool. While each database has a maximum utilization of 90 percent, the average utilization of the pool is quite low, and each of the databases has their peak workloads at different times.

Figure 1-14 Image showing DTU workload for four databases

In Figure 1-15 you can see the workload utilization of 20 databases. The black line represents aggregate DTU usage for all databases; it never exceeds 100 DTUs. Cost and management are the key inputs into this decision—while eDTUs costs 1.5x more than DTUs used by single databases, they are shared across databases in the pool. In the scenario in Figure 1-15, the 20 databases could share 100 eDTUs versus each database having to be allocated 100 DTUs, which would reduce cost by 20x (this relies on S3 performance level for individual databases).

Figure 1-15 Chart showing the DTU workload for twenty databases

Exam Tip

Exam Tip

Understand when to choose an elastic pool versus a standalone database, both from the perspective of management and cost.

Sizing elastic pools

Sizing your elastic pool can be challenging, however elastic pools are flexible and can be changed dynamically. As a rule of thumb, you want a minimum of 10-15 databases in your pool, however in some scenarios, like the S3 databases mentioned earlier, it can be cost effective with as few as two databases. The formula for this is if the sum of the DTUs for the single databases is more than 1.5x the eDTUs needed for the pool, the elastic pool will be a cost benefit (this relating to the cost difference per eDTU versus DTUs). There is fixed upper limit to the number of databases you can include in a pool (shown in Table 1-7), based on the performance tier of the pool. This relates to the number of databases that reach peak utilization at the same time, which sets the eDTU number for your pool. For example, if you had a pool with four S3 databases (which would have a max of 100 DTUs as standalone) that all have a peak workload at the same time, you would need to allocate 400 eDTUs, as each database is consuming 100 eDTUs at exactly the same time. If as in Figure 1-14, they all had their peak at different times (each database was consuming 100 eDTUs, while the other 3 databases were idle), you could allocate 100 eDTUs and not experience throttled performance.

Table 1-7 Elastic Pool Limits

Tier |

Max DBs |

Max Storage Per Pool (GB) |

Max DTUs per Pool |

Basic |

500 |

156 |

1600 |

Standard |

500 |

1536 |

3000 |

Premium |

100 |

4096 |

4000 |

Premium-RS |

100 |

1024 |

1000 |

Configuring elastic pools

Building an elastic pool is easy—you allocate the number of eDTUs and storage, and then set a minimum and maximum eDTU count for each database. Depending on how many eDTUs you allocate, the number of databases you can place in the elastic pool will decrease (see Figure 1-16). Premium pools are less dense than standard pools, as shown by the maximum number of databases shown in Table 1-7.

Figure 1-16 Elastic Pool Configuration Screen from the Azure Portal

You can also build elastic pools using PowerShell and the Azure CLI as shown in the next two examples.

Login-AzureRmAccount

New-AzureRmSqlElasticPool -ResourceGroupName "ResourceGroup01" -ServerName "Server01"

-ElasticPoolName "ElasticPool01" -Edition "Standard" -Dtu 400 -DatabaseDtuMin 10

-DatabaseDtuMax 100

az sql elastic-pool create ñname "ElasticPool01" --resource-group "RG01"

--server "Server01" --db-dtu-max 100 --db-dtu-min 100 --dtu 1000 --edition

"Standard" --max-size 2048

The process for creating an elastic pool is to create the pool on an existing logical server, then to either add existing databases to the pool, or create new databases within the pool (see Figure 1-17). You can only add databases to the pool that are in the same logical server. If you are working with existing databases the Azure Portal makes recommendations for service tier, pool eDTUs, minimum, and maximum eDTUs based on the telemetry data from your existing databases. You should note that these recommendations are based on the last 30 days of data. There is a requirement for a database to have existed for at least seven days to appear in these recommendations.

Figure 1-17 Elastic Pool Configuration Screen from the Azure Portal

Managing and monitoring an elastic pool is like managing an individual database. The best place to go for pool information is the Azure Portal, which shows pool eDTU utilization and enables you to identify databases that may be negatively impacting the performance of the pool. By default, the portal shows you storage and eDTU utilization for the last hour, but you can configure this to show more historical data. You can also use the portal to create alerts and notifications on various performance metrics. You may also move a database out of an elastic pool, if after monitoring it is not a good fit for the profile of the pool.

More Info About Azure SQL Database Elastic Pool Limits

The elastic pool limits and resources are changing regularly. You can learn more about the limits and sizing of elastic pools here: https://docs.microsoft.com/en-us/azure/sql-database/sql-database-elastic-pool.

It is important to know that the limits of the pools are changing frequently as Microsoft makes updates to the Azure platform, so you should refer to books online and the portal before making decisions around designing your architecture.

If all the eDTUs in the elastic pool are consumed, performance in the pool is throttled. Each database receives an equal amount of resources for processing. The Azure SQL Database service ensures that each database has equal amounts of compute time. The easiest comparison to how this works is the use of the resource governor feature in an on-premises or IaaS SQL Server environment.

Changing pool sizing

There are two tiers of changing sizes in an elastic pool—one is changing the minimum and maximum eDTU settings for individual databases. These changes typically take less than five minutes. Changing the size of the elastic pool takes longer, and is dependent on the size of the databases in the pool, but in general a rule of thumb is that changing pool eDTUs takes around 90 minutes per 100 GB of data in your pool. It is important to keep this in mind if planning to dynamically alter the size of your pools for varying workloads—you may need to do this far in advance for larger pools.

Elastic jobs

One of the challenges to Azure SQL Database has been the inability to perform cross-database transactions. If we use the SaaS example, where each customer has a database, if you need to update master data, or issue a schema change across all customer databases, your deployment process would require a query to be issued against each individual database. While this process could be automated, it is still messy. Elastic pools allow for the concept of elastic jobs you can execute SQL across a group of databases in an elastic pool, and manage the results in a single place (see Figure 1-18).

Figure 1-18 Elastic Database Jobs workflow

Running elastic jobs as a few requirements—you need to install elastic jobs components in your Azure environment, and your jobs must be idempotent, which means for the script to succeed and run again it must have the same result. For example, if you were creating a new stored procedure, you would want to use the CREATE OR ALTER PROCEDURE syntax versus simply using the CREATE PROCEDURE option, which would fail the second time the script was executed.

Elastic jobs do require an additional database, which is designated a control database to store all metadata and state data. In an elastic pool scenario, this database would have minimal additional cost. The job service talks to the control database and launches and tracks jobs. If you are using elastic pools, you can access the elastic jobs interface through the portal, however if you wish to use this feature for group of standalone databases, you need to use PowerShell or the Azure CLI.

Elastic pools and geo-replication

Databases that are in an Elastic pools do have the same architecture as standalone databases, so features like backup and high availability are already in place. Just like standalone databases, you have the option to configure active geo-replication for databases in an elastic pool. The geo-replication is quite flexible because you do not have to include every database in the pool in your geo-replication set, and you may geo-replicate either to a standalone database, or an elastic pool. The only hard requirement is that the service tiers of the pools be the same.

Skill 1:2: Plan for SQL Server installation

This skill deals with planning for SQL Server installation. This includes choosing the right infrastructure, whether you are on-premises or in the cloud, configuring storage to meet your performance needs, and understanding best practices for installation.

This skill covers how to:

Plan for an IaaS or on-premises SQL Server deployment

Planning is needed for any SQL Server deployment, whether it is in the cloud or on-premises. It can be quite a challenge to gather the information you need to properly size and build out your environment. There are many questions you want to ask before beginning any deployment project:

What is your licensing situation?

What edition are you licensed for, and for how many cores?

If Azure, are you renting the license or bringing your own?

Do you have Software Assurance?

Are you migrating an existing application?

Are you upgrading versions of SQL Server?

Are you able to capture workload from the existing application?

What are the availability needs of the application?

Do you need a multiple data center solution?

Do you need high availability?

How long can the application be down?

What are the performance needs of the application?

Is this a customer facing latency-sensitive application?

Or is it a batch processing application that only needs to complete its jobs in a large time window?

How much data are you going to have to store?

How much data is accessed regularly?

How many concurrent sessions are you expecting?

Licensing

Licensing is not the most interesting topic; however, it is very important to many architectural discussions. You do not need to be a licensing expert; however, you do need to understand the basic rules and how they work. An important change Microsoft made with SQL Server 2016 is that all non-production environments (development, QA, test) only need to be licensed with developer edition. They also made developer edition free—this combination can greatly reduce license costs. Express Edition is also free for production use, but is limited to database sizes of 10 GB.

Standard Edition is licensed by either a Server/Client Access License (CAL) model or a core based licensing model. The server/CAL model is best suited for smaller organizations who do not have a lot of users (the “users” here refer to users of the application and not database users), where the core model is best suited for Internet facing applications that effectively have an unlimited number of users. One important thing to note is as of Service Pack 1 of SQL Server 2016, Standard Edition has the same programming surface area as Enterprise Edition.

Enterprise Edition is licensed in the core model only. Enterprise Edition is designed for applications that need scaling. It supports unlimited memory, larger scale-out farms, and the support needed for tier-1 workloads.

Software Assurance is effectively an annual support contract with Microsoft that entitles you to upgrades and more importantly grants you the right to have a second idle server for either high availability or disaster recovery. For example, if you have a license of Enterprise Edition for 16 cores, and you ran your production application with that, you would be entitled to have a second server (as part of an Availability Groups, Log Shipping Partner, Failover Cluster Instance, etc.) with no additional licensing charge. You may not use that server for any operations like backups, integrity checks, or restores, otherwise a license is required.

More Info About SQL Server Editions and Features

You can learn about each edition of SQL Server and what the differences are in this post: https://docs.microsoft.com/en-us/sql/sql-server/editions-and-components-of-sql-server-2017.

Azure VMs have two options for licensing SQL Server—you can rent the license as part of your monthly Azure cost, or you can bring your own license (BYOL), which lowers your monthly cost (see Figure 1-19).

Figure 1-19 Bring Your Own License SQL Server VMs in Azure Portal

You do need to have Software Assurance to choose this option.

It is important to choose a BYOL image if you are using an existing license; otherwise, you will be charged for the cost of the SQL Server licensing and a support ticket will be required to switch to your own license. Additionally, you can bring your own Windows license; this is a different process that you can see in Figure 1-20 that happens at VM deployment. Similarly, this option must be configured at deployment, or you will be charged the cost of the Windows license as part of your monthly bill. A support ticket can also resolve this issue, if you need to change after the fact.

Exam Tip

Exam Tip

Have a good understanding of the comparative costs associated with running SQL Server on Azure including infrastructure (compute and storage) and licensing. Understand the licensing rules as they relate to high availability and disaster recovery.

Figure 1-20 Bring Your Own License for WINDOWS VMs in Azure Portal

More Info About SQL Server Licensing

To learn more about the costs of SQL Server licensing by edition and software assurance visit: https://www.microsoft.com/en-us/sql-server/sql-server-2016-pricing.

Existing application

If you are migrating an existing application to a new SQL Server, you can bypass a lot of assessment. Whether as part of a migration to an Azure VM or a new on-premises server, you have access to all the working data you need. The first thing you want to do is use performance monitor to gather a performance profile of the source system. To do that, you can execute the following steps:

Launch Performance Monitor from the Windows menu.

If you are running this remotely, select Action > Connect To Another Computer and enter the name of the server in the Select Compute dialog box. (Note: you will need permission to run Perfmon on a remote server)

Click Data Collector Sets.

Right click User Defined and select New > Data Collector Set as shown in Figure 1-21.

Figure 1-21 New Data Collector Set Performance Monitor

Name your collection set SQL Server Baseline and click the radio button to create manually.

On the screen that says, What Kind Of Data Do You Want To Include? click the radio button next to Performance Counters.

On the next screen click, Add, and you should then see the screen in Figure 1-22.

Figure 1-22 Performance Monitor counter add screen

Add the following counters:

Processor Information Processor(*)% Processor Time Process(sqlservr)% Processor Time Processor(*)% Privileged Time Process(sqlservr)% Privileged Time Memory Available Mbytes Memory Pages/sec Process(sqlservr)Private Bytes Process(sqlservr)Working Set SQLServer: Memory Manager Total Server Memory SQLServer: Memory Manager Target Server Memory Physical Disk PhysicalDisk(*)Avg. Disk sec/Read PhysicalDisk(*)Avg. Disk sec/Write PhysicalDisk Avg. Disk Queue Length Disk Bytes/sec Avg Disk Bytes/Transfer Process(sqlservr)IO Data Operations/sec Network Network InterfaceBytes Received/sec Network InterfaceBytes Sent/sec Network Interface(*)Output Queue Length SQL Server: SQL Statistics Batch Requests/sec SQL Server: SQL Statistics SQL Compilations/sec SQL Server: SQL Statistics SQL Recompilations/sec SQL Server: Wait Statistics Latch Waits > 15 sec Locks > 30 sec IO Latch Timeouts

Typically, when assessing a server workload you want to capture this performance monitor data over a period of at least a week, or even a month for systems that have heavy month end processing. Once you have completed your data capture you can use the performance analysis of logs (PAL) tool, an open-source project to analyze and format your logs. You can use this analysis to help you design your target environment.

More Info About Pal Tool

The Performance Analysis of Logs tool is a Microsoft created open-source tool which allows for easier analysis of Performance Monitor input: https://github.com/clinthuffman/PAL.

Managing availability

You need to plan your SQL Server deployment for high availability and disaster recovery. If your application is critical to the business you need to design your underlying infrastructure to reflect that criticality. SQL Server offers many features for that high availability and disaster recovery in the form of AlwaysOn Availability Groups and Failover Cluster Instances. Or you can choose to deploy a more manual solution like log shipping either by itself or in conjunction with another option. Both of these options are dependent on Windows Server Failover Cluster (WSFC), though starting with SQL Server 2017, Availability Groups may be deployed for migration purposes only, without an underlying WSFC. These techniques all protect against machine and operating system failure, and allow you to minimize downtime during maintenance operations like patching Windows and SQL Server.

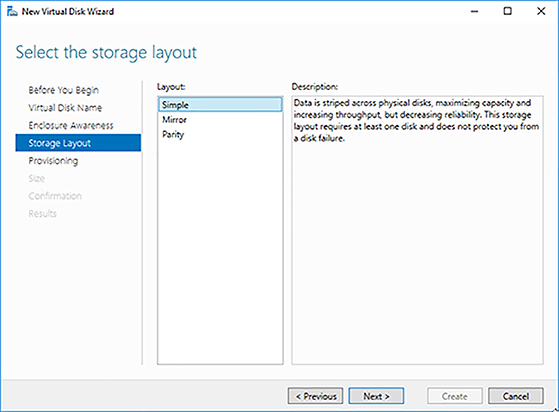

Failover Cluster Instances are an option that provides local high availability. This option depends on shared storage, where a single copy of your instance floats between two or more nodes running SQL Server. Failover cluster instances require a dedicated cluster installation of SQL Server. Most installations of failover cluster instance are in one location; however, they may be combined with storage replication to provide disaster recovery. To implement failover cluster instances in Azure, you need Windows Server 2016 Storage Spaces Direct to provide shared storage. A failover cluster instance has a single point of failure at the shared storage layer, so you need to use an additional technique like Availability Groups if you need additional data protection.

A failover cluster instance encompasses your while instance. This means things like logins and SQL Agent jobs are always on all nodes where your instance is installed. There is no process required to sync because the instance contains a single copy of that master data. The unit of failover is at the instance level.

Availability Groups are an option that can provide a level of high availability locally and disaster recovery in a remote data center. Like Failover Cluster Instances, Availability Groups (AGs) are built on top of a WSFC. However, AGs use standalone instances of SQL Server, and do not encompass the entire instance. The AG is a group of databases that are organized together to send transaction data to one or more secondary replicas. An AG can have up to eight replicas for a total of nine nodes. These replicas can be used for reading and backups to offload work from the primary replica. An AG provides an additional measure of protection over a failover cluster instance because there are inherently multiple copies of the data in the environment. Additionally, there is automatic page repair for any data page that is deemed corrupt from the primary replica.

Each AG has its own listener, which serves as a virtual IP address for the group. By default, the listener always routes a connection to the primary instance, however if used in conjunction with read-only routing and the application intent flag in a SQL Server connection string, connections can be routed to a secondary for read offload. An AG offers two types of replicas, synchronous and asynchronous, which are typically used for local and remote copies respectively. If you are using synchronous mode, you should be aware that a write transaction will not complete on the primary replica until it reaches the transaction log on the secondary replica, so it is important to ensure that there is minimal latency between the replicas. Additionally, you can only have automatic failover between nodes that are synchronous. Asynchronous replicas require manual failover due to the potential data loss of in-flight transactions.

One challenge with AGs is keeping users and jobs in sync between replicas—because the feature uses standalone instances there is nothing to keep the jobs or users in sync. For users, the solution is relatively straightforward—you can use contained database users, which are local to the database in question, rather than stored in the master database.

More Info Contained Database Users

You can learn about creating contained database users and the security requirements around them here: https://docs.microsoft.com/en-us/sql/relational-databases/security/contained-database-users-making-your-database-portable.

Agent jobs are a little bit more challenging because you would need to build some intelligence into the job to determine which node is currently primary. One solution is to regularly copy the agent jobs from the primary to the secondary server(s), and to add logic to the job to ensure that it only operates on the primary replica.

As shown in Figure 1-23, these two technologies (FCIs and AGs) can be combined to provide higher levels of data protection. The one caveat to combining the techniques is that automatic failover can no longer be used within the AG due to the presence of the FCI. It should be noted that the WSFC is using a file share for quorum. This is common configuration for multi-subnet distributed clusters. Another option if you are using Windows Server 2016 is to use Azure Blob Storage for your cloud quorum option. You should always try to use the latest supported version of Windows for your SQL Server installations because enhancements to the failover clustering software stack have been numerous in recent releases.

Choosing the right high availability solution comes down to budget and the needs of your business for your application. You should have an understanding from your business of what the availability needs for each application are, and design your architecture appropriately.

Figure 1-23 Availability Group and Failover Cluster Instance Combined Architecture

Managing performance

Performance is one of the more challenging components in developing new deployments. Whether you are in Azure or on-premises, or on physical hardware or virtual hardware, all database systems have differing performance requirements, based on both available hardware resources, workload of the application, and business requirements of the application.

For example, a database that paints web pages for a customer facing application needs to have extremely low latency, whereas an application that does overnight back office processing typically just needs to have enough resources to finish its batch within an eight-hour timeframe.

The official requirements for installing SQL Server are as follows.

Table 1-8 SQL Server Installation Requirements

Component |

Requirement |

MEMORY |

Minimum: |

Express Editions: 512 MB |

|

All other editions: 1 GB |

|

Recommended: |

|

Express Editions: 1 GB |

|

All other editions: At least 4 GB and should be increased as database size increases to ensure optimal performance. |

|

PROCESSOR SPEED |

Minimum: x64 Processor: 1.4 GHz |

PROCESSOR TYPE |

Recommended: 2.0 GHz or faster x64 Processor: AMD Opteron, AMD Athlon 64, Intel Xeon with Intel EM64T support, Intel Pentium IV with EM64T support |

STORAGE |

A minimum of 6 GB is required to install SQL Server |

Table 1-8 shows the minimum hardware requirements as specified by Microsoft for SQL Server 2016. It is important to note that these are absolute minimum supported values, especially when talking about memory—4 GB is required just to get SQL Server up and running. In a production environment, you should use at least 16 GB, and adjust depending on your workload.

Storage

You will notice the only disk requirement is the 6 GB required to install SQL Server. You learn more about proper disk layout for SQL Server on Azure later in this section, however there are two other important metrics around disk to know about—I/O Operations Per Second (IOPs) and latency. IOPs are a measure of how fast your disk can read and write data, while latency is a measure of how long it takes the data to be read from disk. Latency will be reduced greatly when using solid state drives—in fact in Azure, it is effectively a requirement to use Premium Storage (which is SSD based) with SQL Server workloads. More and more on-premises SQL Server workloads are using SSD based storage due to the reduced latency and higher IOPs that the SSDs offer. Storage performance is critical to database performance, so it is important to properly design your storage subsystem.

More Info About SQL Server Storage Configuration

You can learn more about SQL Server storage performance at: https://technet.microsoft.com/en-us/library/cc298801.aspx.

Storage architecture is complex, especially given the number of different storage options on the market. The important thing is to perform testing of your storage configuration to ensure that it meets the expected needs of your application. Microsoft supplies a tool named DiskSpeed, which is a benchmarking tool to help you understand the performance of your storage subsystem, and lets you assess any changes. If you are migrating an existing application, you can use the following Performance Monitor (Performance Monitor) counters from SQL Server to get an assessment of your current IOPs:

SQL Server Resource Pool Stats: Disk Read IO/Sec

SQL Server Resource Pool Stats: Disk Write IO/Sec

Those two counters will get the number of IOPs performed by your SQL Server instance. If you want latency metrics you can also use Performance Monitor to get that data:

Physical Disk/Logical Disk->Avg. Disk Sec/Read

Physical Disk/Logical Disk->Avg. Disk Sec/Write

You can also get this data from the SQL Server dynamic management views and functions (DMVs and DMFs) including sys.dm_io_virtual_file_stats, however, that DMF is not resettable (its stats exist for the life of the instance) so using Performance Monitor gives you more real-time information.

Memory

One of the biggest keys to database performance is having enough available memory so that the working set of data does not have to be read from disk. As fast as modern disk is, it is still orders of magnitude slower than reading and writing from memory. It is a common myth that database servers are memory hogs, while a SQL Server instance will use all the memory allocated to it, which is by design as opposed to any runaway processing. Memory does tend to be a little bit of a black box, however there is a Performance Monitor metric from SQL Server that can give you a good idea of memory utilization.

So how do you decide how much memory to include in your SQL Server? If you are running in Azure, this is relatively straightforward. Choose what you think is best and then adjust the size of your server up or down to better suit the needs of your application. If you are running the standard edition of SQL Server you are limited to 128 GB, however, with Enterprise Edition having unlimited memory, this can be a tough decision. There are a few inputs you can use to guide this:

What is your largest table?

Are you using in-memory features like OLTP and columnstore?

Are you using data compression?

There is no hard and fast rule for how much memory your SQL Server instance needs. There are a couple things you will want to monitor: disk usage and page life expectancy (PLE) in SQL Server (how long a page is expected to last in the buffer pool). If you do not have enough RAM, you will see significantly more disk activity as the database needs to reach out to the storage to bring pages into memory. Additionally, you will see PLE decease rapidly when you do not have enough memory. If you are using data compression, that can give you better utilization of RAM, by allowing your largest tables in indexes to fit into fewer data pages. If you are using features like in-memory OLTP or columnstore, you need to account for that in your calculation. Sizing your system’s memory is challenging and does require changing over time, however, buying additional RAM tends to be cheaper than buying additional storage IOPs, and can mask a lot of bad development practice, so it is best to err on the high side of RAM.

More Info About SQL Server Memory Utilization

SQL Server memory utilization can be complicated; here are some examples of how to monitor SQL Server’s memory internals: https://docs.microsoft.com/en-us/sql/relational-databases/performance-monitor/monitor-memory-usage.

CPU

Like memory, CPU for a new application tends to be a black box. Unlike memory, due to the way SQL Server is licensed, increasing the amount of CPU allocated to your SQL Server can carry a very expensive cost beyond the hardware involved. If you are migrating an existing workload, you can look at the output from your Performance Monitor around processor utilization, and adjust up or down based on current processor utilization. As a rule of thumb for adequate throughput, you would like to see your database servers running at under 70-80 percent processor utilization during normal operation. There will be spikes during heavy processing, but if your server is constantly running at 80-90 percent, your throughput will be limited and latency may increase.

If helps to understand the nature of your application as well—most online analytical processing databases (OLAP) benefit from a higher CPU count, due to the parallel nature of reporting. On the other hand, OLTP systems benefit from pure CPU speed, so when building your servers, you may want to go with a lower core count, but with faster individual cores. Also, on some virtual platforms over allocating CPU can lead to poorer performance because of scheduling operations. So, do not needlessly over allocate CPU in virtualized environments.

Select the appropriate size for a virtual machine