12

Developing Event-Based Solutions

In this chapter, we will be introduced to various event-based services available in Azure. We will become familiar with Azure Event Grid, Azure Event Hubs, Azure IoT Hub, and Azure Notification Hubs. We will also learn about the advantages and limitations of these services and find out which service is better to use in business scenarios. Furthermore, we will look at use case scenarios where we can leverage event-based services. Then, we will be able to compare event-driven solutions and detect their pros and cons.

By the end of this chapter, you will be able to configure event-processing services in Azure and you will know how to leverage them in your solution. By using the provided code snippets and scripts, you will be able to provision and connect to the services, generate events, and consume the events from the code.

In this chapter, we will cover the following topics:

- Understanding the role of event-driven solutions

- Discovering Azure Event Hubs

- Consuming event streams with Azure IoT Hub

- Exploring Azure Event Grid

- Comparing Azure event-based services

Technical requirements

The scripts provided in the chapter can be run in Azure Cloud Shell, but they can also be executed locally. The Azure CLI and Visual Studio Code are ideal tools to execute the code and commands provided in the following repository: https://github.com/PacktPublishing/Developing-Solutions-for-Microsoft-Azure-AZ-204-Exam-Guide/tree/main/Chapter12.

The code and scripts in this repository will provide you with examples of provisioning and developing applications for Event Hubs, IoT Hub, and Azure Event Grid.

Code in Action videos for this chapter: https://bit.ly/3xBkdmt

Understanding the role of event-driven solutions

Event-driven solutions play a significant role in helping services that run on different platforms and environments communicate with each other. An event is a small chunk of data that producers transfer rapidly and consumers process. Events can form a stream in which each event represents the current state of the remote system. For instance, IoT sensors can generate measurements in a real production environment. Azure Stream Analytics can then implement the event-processing platform and leverage Azure Machine Learning services to monitor and predict trends and recommend adjustments and maintenance.

Another common example of leveraging events is the reactive programming model. Imagine a website that needs to scale at times of peak load. You could build a service that consumes the events received from the monitoring system. When the workload hits a threshold, the event triggers your system to scale the website. This is scaling by reacting to incoming telemetry events. There are many other examples of leveraging event-based solutions, including streaming from IoT platforms, reactive programming, big data ingestion, and logging user activities. To better understand where you can leverage event-based solutions, let’s look at the services available in Azure.

There is some terminology that needs to be clarified before you go down the rabbit hole:

- Event: A small piece of valuable data that contains information about what is happening. Usually, the event body is represented in JSON format.

- Event source or publisher: The services where the event takes place.

- Event consumer, subscriber, or handler: The services that receive the event for processing or react to the event.

- Event schema: The key-value pairs that must be provided in the event body by the event publisher.

There are several services in Azure that allow you to build event-based solutions. Some of these services will be described in detail in this chapter. Other services will be described briefly because they will be proposed as part of the solution to exam questions:

- Azure Event Hubs is commonly used in big data ingestion scenarios that involve event source systems and big data services such as Azure Synapse Analytics and Databricks. Event Hubs can also be used to collect diagnostic logs in a heavily loaded networking environment where millions of requests and packets need to be tracked for later processing.

- Azure IoT Hub is an extension of Event Hubs and is designed as a two-way communication resource between IoT devices and Azure. IoT Hub provides services to consume and analyze the streaming of events from IoT sensors. It also helps to manage device settings, call methods on the device to update firmware, and restart the device. Event Hubs also supports event streaming, but it does not support cloud-to-device communication.

- Azure Notification Hubs is designed to support notification delivery to mobile applications. Notification Hubs is an orchestration and broadcast service responsible for delivering notifications to a large number of devices across different platforms such as Android, iOS, and Windows. The Azure-hosted notification hub acts as the publisher, and mobile devices are registered as notification consumers.

- Azure Event Grid is a service that implements a reactive programming platform. It is also a bridge between many Azure PaaS services. Azure Event Grid is also commonly used for creating event processing flows to react to changes and activity in Azure subscriptions. For example, a file uploaded to Azure Blob storage can trigger an event that is processed by Azure Functions and submitted as a message to Azure Service Bus to be consumed by a third-party system.

From a developer standpoint, you need to learn how to leverage the event-based services available in Azure to achieve high performance, scale the processing system, and develop applications that leverage stream processing and reactive programming models. In the following section, you will learn how to leverage the most common Azure event-based services in your solution.

Discovering Azure Event Hubs

Azure Event Hubs is an event-based processing service hosted in Azure. Event Hubs is designed to implement the classic publisher-subscriber pattern, where multiple publishers generate millions of events that need to be processed and temporarily stored until the consuming services pull them. Ingress connections are made by services (publishers) to produce events and event streams. Egress connections are used by services (consumers) to receive the events for further processing. The events received from Event Hubs can be transformed, persisted, and analyzed with streaming solutions and big data storage.

For instance, Event Hubs can be connected to Azure Stream Analytics to analyze the content of an event, use a window function to detect any anomalies, and push them to a Power BI dashboard for real-time monitoring. Another common use of Event Hubs is ingesting data into big data solutions. For instance, Event Hubs exposes an Apache Kafka-compatible endpoint, so Kafka clients can connect to Event Hubs. From a telemetry standpoint, Event Hubs is a real hub for a variety of monitoring streams, where metrics data can be temporarily persisted and pulled by the analytics application. Event Hubs is commonly used as the target of log forwarding from Azure services such as VMs and networking resources. Logs can be forwarded from the source and ingested into external Security Information and Event Management (SIEM) tools.

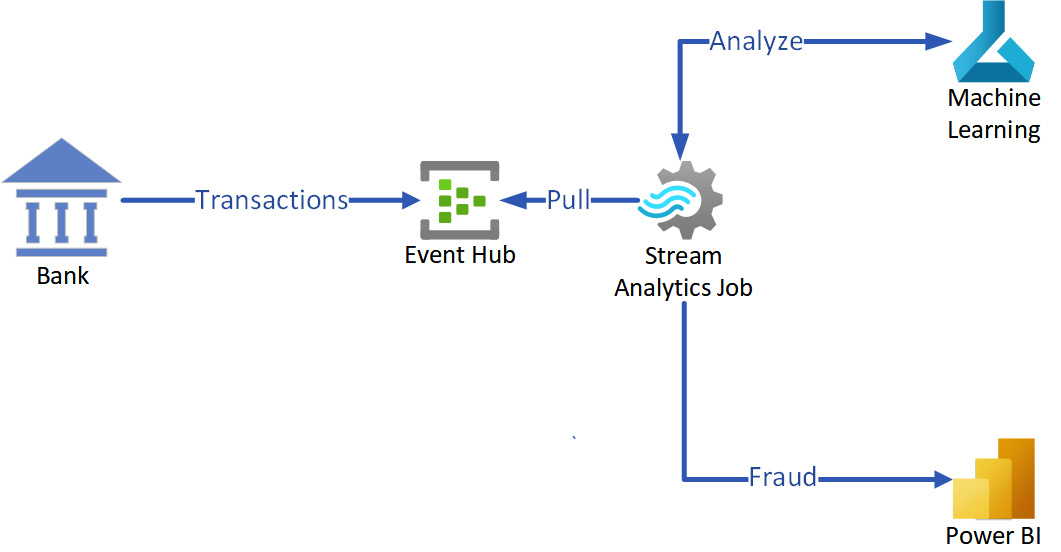

The following diagram depicts the common scenario of processing a stream of incoming events (bank transactions) to detect fraudulent activity and update a live Power BI dashboard:

Figure 12.1 – Detecting fraud transactions with Event Hubs and ML algorithms

In the next subsection, you will learn about Event Hubs connectivity, configuration, scaling, and pricing to successfully leverage event-based services for your enterprise solutions.

Provisioning namespaces

An Event Hubs namespace is a virtual server that you need to deploy in Azure before you can provision event hubs. A namespace works as a logical container for hubs and provides isolation and management features for access control. When you provision a namespace, you receive a Fully Qualified Domain Name (FQDN) endpoint for connections. Network access to the namespace can be restricted with firewall rules. The namespace accepts connections over Advanced Message Queuing Protocol (AMQP), AMQP over WebSockets, and HTTPS. The Standard tier and above also accept the Apache Kafka protocol. Devices and services that support these protocols can send events directly to Event Hubs.

Pricing model

The Event Hubs namespace provides a different set of features, depending on the price tier:

- Basic tier: An affordable tier with minimum performance. It does not support dynamic partition scaling, event capture, or VNet integration. The retention policy is limited to 1 day.

- Standard tier: This tier allows you to capture events in a storage account, integrate with VNets, and has increased retention for up to 7 days.

- Premium tier: A higher-cost tier that includes the full feature set and is not bound by throughput-unit limits. The retention policy is limited to 90 days.

- Dedicated tier: This tier has the same features as the Premium tier and is provisioned in an exclusive single-tenant environment.

Scaling

Event Hubs is a highly scalable solution designed for high-load data ingestion. The scaling process includes extending the number of throughput units and processing units.

The Basic and Standard price tiers can scale up to 40 throughput units, which govern both ingress and egress traffic. To increase throughput limits, admins can manually adjust the number of throughput units from the Azure portal. Each throughput unit is charged individually per hour. When the load exceeds the available throughput, requests are throttled and clients receive errors.
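The throughput-unit arithmetic is easier to see in a worked example. The following Python sketch estimates how many throughput units (TUs) a workload needs. It assumes the commonly documented per-unit capacity: 1 MB/s or 1,000 events/s for ingress, and 2 MB/s or 4,096 events/s for egress. It also applies the 40-unit ceiling mentioned above. The function name and the workload figures are hypothetical.

```python
import math

# Assumed per-throughput-unit capacity for the Basic and Standard tiers.
INGRESS_MB_PER_TU = 1.0
INGRESS_EVENTS_PER_TU = 1000
EGRESS_MB_PER_TU = 2.0
EGRESS_EVENTS_PER_TU = 4096
MAX_TUS = 40  # ceiling for the Basic and Standard tiers


def required_throughput_units(ingress_mb_s, ingress_events_s,
                              egress_mb_s=0.0, egress_events_s=0):
    """Return the smallest TU count that covers every limit (hypothetical helper)."""
    needed = max(
        1,  # at least one TU is always provisioned
        math.ceil(ingress_mb_s / INGRESS_MB_PER_TU),
        math.ceil(ingress_events_s / INGRESS_EVENTS_PER_TU),
        math.ceil(egress_mb_s / EGRESS_MB_PER_TU),
        math.ceil(egress_events_s / EGRESS_EVENTS_PER_TU),
    )
    if needed > MAX_TUS:
        # Larger workloads need the Premium or Dedicated tier.
        raise ValueError(f"{needed} TUs exceed the {MAX_TUS}-unit limit")
    return needed


if __name__ == "__main__":
    # 5,000 events/s at 2.5 MB/s in, read twice (5 MB/s and 10,000 events/s out).
    print(required_throughput_units(2.5, 5000, 5.0, 10000))  # 5
```

The calculation takes the maximum across all four limits. As a result, a workload with many small events can need more units than its byte rate alone suggests.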

In the Premium tier, Event Hubs runs in a resource-isolated environment and scales horizontally by adding processing units, each representing a share of that isolated environment. Because the Premium tier has no throughput-unit limits, ingress and egress requests are accepted but may wait to be processed under heavy load. One processing unit can handle roughly the traffic of 10 throughput units in the Basic and Standard tiers. The Premium tier can scale up to 16 processing units.

Leveraging partitions

The number of partitions also affects the performance of Event Hubs, because events in different partitions can be processed in parallel. The number of partitions is configured in the provisioning step, with a minimum of one, and cannot be changed later in the Basic and Standard tiers. The number of provisioned partitions should correspond to the number of throughput units at a 1:1 ratio.

In Event Hubs, a partition is a logically separate queue where events are stored for the retention period. Reading an event does not remove it from the partition. Events within a partition are retrieved in First-In, First-Out (FIFO) order. When an event is sent without a partition ID or partition key, it is distributed across the available partitions. Events can later be read from each partition individually.
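The following Python sketch models this behavior in memory. It is a simplified illustration, not the service's actual assignment algorithm. Events with a partition key are mapped to a partition by hashing the key, so events that share a key stay in order. Events without a key are assigned round-robin. The class and method names are hypothetical.

```python
import hashlib
from itertools import count


class PartitionedEventHub:
    """In-memory model of an event hub with a fixed number of partitions (hypothetical)."""

    def __init__(self, partition_count):
        if partition_count < 1:
            raise ValueError("partition_count must be positive")
        # Each partition is an append-only list; reading never removes events.
        self.partitions = [[] for _ in range(partition_count)]
        self._round_robin = count()

    def send(self, body, partition_key=None):
        """Append an event and return the index of the partition that stored it."""
        if partition_key is None:
            # No key: spread events across the partitions in turn.
            index = next(self._round_robin) % len(self.partitions)
        else:
            # Same key -> same partition, which preserves per-key FIFO order.
            digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
            index = int.from_bytes(digest[:4], "big") % len(self.partitions)
        self.partitions[index].append(body)
        return index


if __name__ == "__main__":
    hub = PartitionedEventHub(4)
    print([hub.send(f"event-{i}") for i in range(6)])  # [0, 1, 2, 3, 0, 1]
    first = hub.send("reading-1", partition_key="sensor-42")
    second = hub.send("reading-2", partition_key="sensor-42")
    print(first == second)  # True
```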

Provisioning Azure Event Hubs

Provisioning Event Hubs consists of two steps. The first is namespace provisioning, which requires selecting a pricing tier, throughput units or processing units, and a location. After provisioning a namespace, event hubs can be provisioned with a certain number of partitions and an event retention period in days. The remaining features, including event capture, network integration, and retrieving a shared access signature (SAS) key for connections, can all be configured from the Azure portal.

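The bash script in this chapter's repository (https://github.com/PacktPublishing/Developing-Solutions-for-Microsoft-Azure-AZ-204-Exam-Guide/tree/main/Chapter12) will help you provision a namespace and an event hub in your Azure subscription by leveraging the Azure CLI.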

The script also provisions a storage account and configures event capture. Later, when you run the publisher, you will be able to observe the captured events. The Avro file that contains the captured events should look as follows:

Figure 12.2 – Captured events from the Avro file opened from the Azure portal

Now, let’s look at the event capturing feature.

Capturing events

Azure Event Hubs receives events from publishers and retains them for the configured retention period so that consumers can pull them. When capture is enabled, all events received by Event Hubs are copied to a buffer and then flushed to a blob. A copy of each event remains in the blob and is available for analysis. This holds even after a consumer has read the event or the event has been deleted because its retention period expired.

Event capture is available in the Standard and Premium price tiers of Event Hubs. Be aware that capturing can produce extra charges in the Standard tier, whereas those charges are included in the Premium tier. To minimize charges, you can specify a size quota and a time window for capturing. You can capture events to Azure Blob storage or Azure Data Lake. Capture generates files in the provided container, with date- and time-based names per partition. Captured events are stored in the Apache Avro format, a compact binary format that can be consumed in Visual Studio Code and third-party tools.
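To illustrate the date-based naming, the following Python sketch builds the blob path that capture produces with the default naming convention, {Namespace}/{EventHub}/{PartitionId}/{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}, followed by an .avro extension. The sketch assumes that the timestamps are in UTC and zero-padded. The namespace and event hub names are hypothetical. Because the file name format is configurable, check the capture settings of your own event hub.

```python
from datetime import datetime, timezone

# Default capture file name format (assumed); the format is configurable per event hub.
DEFAULT_CAPTURE_FORMAT = ("{Namespace}/{EventHub}/{PartitionId}/"
                          "{Year}/{Month}/{Day}/{Hour}/{Minute}/{Second}")


def capture_blob_name(namespace, event_hub, partition_id, window_start,
                      name_format=DEFAULT_CAPTURE_FORMAT):
    """Return the .avro blob path for one partition and capture window (illustrative)."""
    if window_start.tzinfo is None:
        raise ValueError("window_start must be timezone-aware")
    t = window_start.astimezone(timezone.utc)  # assumed: capture paths use UTC
    return name_format.format(
        Namespace=namespace, EventHub=event_hub, PartitionId=partition_id,
        Year=f"{t.year:04d}", Month=f"{t.month:02d}", Day=f"{t.day:02d}",
        Hour=f"{t.hour:02d}", Minute=f"{t.minute:02d}", Second=f"{t.second:02d}",
    ) + ".avro"


if __name__ == "__main__":
    start = datetime(2024, 3, 5, 7, 9, 2, tzinfo=timezone.utc)
    print(capture_blob_name("contoso-ns", "telemetry", 0, start))
    # contoso-ns/telemetry/0/2024/03/05/07/09/02.avro
```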

In the previous script execution, you provisioned Event Hubs and also configured event capturing in a storage account. Later, when you build an application to send and receive events, you will also observe captured events in the storage account of the same resource group.

Consumer groups

Consumer groups enable applications to read events independently. Each consumer group is a separate view of the event hub, with its own position (offset) in each partition. Publishers do not target consumer groups; every consumer group sees all the events in the event hub. A $Default consumer group is always created for each event hub, and other groups can be added up to the limit allowed by the pricing tier. The recommended best practice is to create a separate consumer group for each downstream application that consumes the event stream. Multiple readers in the same consumer group reading the same partition can complicate event consumption and cause duplicates. No more than five concurrent readers are allowed per group, per partition.
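The following Python sketch shows why consumer groups read independently: the partition is an append-only log, and each consumer group keeps its own offset. For brevity, the sketch stores offsets inside the partition object. In the real SDK, the consumer persists its checkpoints itself, which is why the subscriber application needs a storage account connection string. All names except $Default are hypothetical.

```python
from collections import defaultdict


class Partition:
    """Append-only event log with a read offset per consumer group (hypothetical model)."""

    def __init__(self):
        self.events = []                  # reading never removes events
        self.offsets = defaultdict(int)   # consumer group -> next offset to read

    def append(self, body):
        self.events.append(body)

    def read(self, consumer_group, max_count=10):
        """Return up to max_count unread events for this group and advance its checkpoint."""
        start = self.offsets[consumer_group]
        batch = self.events[start:start + max_count]
        self.offsets[consumer_group] = start + len(batch)
        return batch


if __name__ == "__main__":
    partition = Partition()
    for body in ("a", "b", "c"):
        partition.append(body)
    print(partition.read("$Default"))        # ['a', 'b', 'c']
    print(partition.read("analytics", 2))    # ['a', 'b']
    print(partition.read("$Default"))        # []
    print(partition.read("analytics"))       # ['c']
```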

Connections with SAS tokens

The authentication and authorization of publishers and consumers are implemented with SAS tokens. These tokens are generated from shared access policies that grant Listen, Send, or Manage rights, and the Manage right also includes Listen and Send. Based on the principle of least privilege, publishers should only be allowed to send events, and consumers should only be allowed to listen for events. A default policy with Manage rights (RootManageSharedAccessKey) is created for every Event Hubs namespace during provisioning. Its key works for all event hubs in the namespace but should only be used for management purposes. After provisioning an event hub, you can create a policy with only the rights the service requires and use it in the connection string for publishers and consumers. Because each producer can use its own policy, the SAS token can also identify the producer when event streams are processed.
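The following Python sketch shows how a SAS token is derived from a shared access policy key. It follows the token format Microsoft documents for Event Hubs: an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry timestamp. The namespace, event hub, policy name, and key in the example are hypothetical. In practice, the Azure SDKs build the token for you from the connection string.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse


def generate_sas_token(resource_uri, policy_name, policy_key,
                       ttl_seconds=3600, now=None):
    """Build an Event Hubs SAS token that is valid for ttl_seconds."""
    issued_at = time.time() if now is None else now
    expiry = str(int(issued_at + ttl_seconds))
    encoded_uri = urllib.parse.quote_plus(resource_uri)
    # The string to sign is the encoded URI and the expiry, separated by a newline.
    string_to_sign = f"{encoded_uri}\n{expiry}".encode("utf-8")
    digest = hmac.new(policy_key.encode("utf-8"), string_to_sign,
                      hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    return (f"SharedAccessSignature sr={encoded_uri}&sig={signature}"
            f"&se={expiry}&skn={policy_name}")


if __name__ == "__main__":
    # A token for a send-only policy used by a publisher (hypothetical names and key).
    print(generate_sas_token(
        "https://contoso-ns.servicebus.windows.net/telemetry",
        "send-only", "<policy-key>", ttl_seconds=3600))
```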

Event consumption services

Many services are available in Azure to consume events from Event Hubs:

- Azure Event Grid can be connected to Event Hubs to publish and consume events and is usually used as a bridge to Azure Queue Storage, Azure Service Bus, Azure Functions, Azure Cosmos DB, and many others.

- Azure Stream Analytics is the service most commonly used with Event Hubs to process events and ingest them into Azure Blob storage, Azure Queue Storage, Azure Service Bus, Azure Cosmos DB, Azure Synapse Analytics, and Power BI datasets. Compared to Event Grid, Azure Stream Analytics can apply sophisticated filtering algorithms, including ones that leverage Azure Machine Learning services.

- Big data ingestion solutions also support Event Hubs as a source of streaming data. For instance, Azure Synapse Data Explorer can be connected to Event Hubs directly to pull and process events. Another example is Azure Databricks, whose notebooks can connect to Event Hubs to submit and receive events.

Developing applications for Event Hubs

From the previous sections, you already know enough about Event Hubs to leverage it in your solutions. Now, you have a chance to observe how events can be submitted and consumed by C# applications.

From the repository linked below, you can build a publisher project and a subscriber project that connect to the Event Hubs instance provisioned earlier. To configure the projects, you need to retrieve the connection string for Event Hubs with Listen and Send rights. You also need the Azure Storage connection string, which enables your subscriber to persist checkpoints. Both strings were generated in the previous script run. Copy them from the output and update the Program.cs file.

To receive events from Event Hubs, you need to start the consumer (the subscriber project). It stays connected and shows the body of each event in the output when it is received. Then, you can run the publisher project, which produces 10 messages and outputs each message to the console. When you switch back to the subscriber console, you will see the received messages. These projects are located in the following repository: https://github.com/PacktPublishing/Developing-Solutions-for-Microsoft-Azure-AZ-204-Exam-Guide/tree/main/Chapter12/02-eventhub-process.

This example has demonstrated how a connection to Event Hubs is made with code. From the code, you can observe the following classes leveraged for communication:

| Class | Description |
| --- | --- |
| EventHubClient | This class is responsible for generating events. It should be configured with connection strings to Event Hubs. |
| EventProcessorHost | This class performs event listening and should be configured with connection strings to Event Hubs and a storage account. |
| SimpleEventProcessor | This is a custom class that implements the IEventProcessor interface and functions to open and process received events and output their body. |

Table 12.1 – C# SDK classes for event management with Event Hubs

Azure Event Hubs is quite a sophisticated service that we won’t discuss in depth, though we have covered enough for you to be familiar with it according to the exam requirements. In the next section, you will learn how to consume events with Azure IoT Hub and Azure Stream Analytics.

Consuming event streams with Azure IoT Hub

Azure IoT Hub is another event-based service that we’ll take a close look at. The Azure IoT Hub platform is very similar to Event Hubs, but it has important differences worth mentioning. First of all, Azure IoT Hub is designed for consuming streaming telemetry from IoT devices and also for managing the devices that produce the streams. Azure IoT Hub can communicate with IoT devices when the devices need to be restarted or their firmware needs to be updated. Azure IoT Hub can also register devices to set up a secure communication channel and deregister devices if they are stolen. Azure IoT Hub also supports industry-standard communication protocols.

There are a variety of devices available on the market. Some IoT devices are powerful enough to connect to the internet directly and send telemetry from their sensors. Other small, low-power IoT devices communicate with the hub through a gateway. Devices can communicate over MQTT, AMQP, or HTTPS, and MQTT and AMQP can also be tunneled over WebSockets. The number of supported devices is quite high, and telemetry streams can provide live updates with a large number of events. For these reasons, performance is the most valuable metric for Azure IoT Hub.

Another advantage of Azure IoT Hub is its integration with other Azure services. Azure IoT Hub can be integrated with the powerful Stream Analytics service. Stream Analytics jobs can pull events from Event Hubs and IoT Hub and submit them to a SQL database, a storage account, Cosmos DB, or Power BI. Moreover, you can deploy Azure IoT Central, a service that provides UI visualization of telemetry and lets you manage device parameters and control the devices' sensors.

Pricing model

Azure IoT Hub provides Basic and Standard pricing tiers, plus a Free tier. The tiers differ in features and in the number of messages they can accept. You can plan your deployment once you know the number of devices you are going to support and the number of messages they will send per day. IoT Hub will stop accepting messages if the daily message quota allowed by the tier is exceeded. In that case, a scale-up will be required. The following price tiers are available:

- Basic (B1-B3): This tier allows you to ingest from 400,000 (B1) to 300 million (B3) messages per day per unit and to leverage all communication protocols (HTTPS, AMQP, and MQTT). It also allows messages to be routed to Event Grid directly. However, it does not support cloud-to-device messaging.

- Standard (S1-S3): This tier allows you to ingest the same number of messages and has the same features as the Basic tier. The Standard tier also supports cloud-to-device messaging, device management through device enrolment, and device twins for configuration. The main difference between the Standard and Basic tiers is Azure IoT Edge support. The IoT Edge functionality allows you to orchestrate containers and their configuration on the device itself. For example, self-driving cars can leverage IoT Edge to spin up containers with AI in the car and minimize communication latency.

- Free (F1): This tier has the same features as the Standard pricing tier but is limited to 8,000 messages per day. Only one instance can be deployed per subscription.
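For capacity planning, the following Python sketch estimates how many IoT Hub units a device fleet needs. It assumes these daily quotas per unit: 8,000 messages for F1, 400,000 for B1/S1, 6 million for B2/S2, and 300 million for B3/S3. It also assumes that paid tiers meter messages in 4 KB blocks and the free tier in 0.5 KB blocks. Verify these figures against the current pricing page. The fleet numbers in the example are hypothetical.

```python
import math

# Assumed daily message quota per unit for each IoT Hub tier.
DAILY_QUOTA_PER_UNIT = {
    "F1": 8_000,
    "B1": 400_000, "S1": 400_000,
    "B2": 6_000_000, "S2": 6_000_000,
    "B3": 300_000_000, "S3": 300_000_000,
}


def meter_block_kb(tier):
    """Size of one billable message: 0.5 KB on the free tier, 4 KB otherwise (assumed)."""
    return 0.5 if tier == "F1" else 4.0


def units_needed(tier, devices, messages_per_device_per_day, message_size_kb):
    """Return how many units of `tier` keep the fleet under the daily quota."""
    blocks_per_message = math.ceil(message_size_kb / meter_block_kb(tier))
    billable_per_day = devices * messages_per_device_per_day * blocks_per_message
    units = max(1, math.ceil(billable_per_day / DAILY_QUOTA_PER_UNIT[tier]))
    if tier == "F1" and units > 1:
        raise ValueError("the free tier is limited to a single unit")
    return units


if __name__ == "__main__":
    # 1,000 devices, each sending one 1 KB message per minute (1,440 per day).
    print(units_needed("S1", 1000, 1440, 1))  # 4
    print(units_needed("S2", 1000, 1440, 1))  # 1
```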

Device registration

The device registration process can be performed manually from the Azure portal or by using automation options. When you manage a large fleet of devices, the IoT Hub Device Provisioning Service (DPS) should be used for device enrolment. Registering devices provides authentication and authorization for devices to send telemetry streams and receive control messages from the cloud. Two authentication options are supported: symmetric keys, which are used to generate SAS tokens, and X.509 certificates. Both options can be revoked if a device is lost or stolen. In the provisioning script for Azure IoT Hub, you will register a device with a SAS key.

Azure IoT Edge

Azure IoT Edge is a service and software that enables you to bring logic and AI services to a device. The Azure IoT Edge runtime needs to be installed on an IoT device registered in Azure IoT Hub and configured with a connection string. The runtime is based on two modules that run as containers: the IoT Edge agent and the IoT Edge hub. The agent is responsible for deploying and monitoring modules, restarting them, and applying configuration updates. The hub is responsible for providing interfaces for communication. The IoT Edge runtime enables you to run custom code in separate containers and to download updated container images when a new version is available.

Provisioning Azure IoT Hub

Provisioning IoT Hub does not require provisioning any namespaces as Event Hubs does. It only requires selecting a pricing tier and location with a unique name. All other settings, including enrolling devices, can be configured after provisioning. For device enrolment management, you also can leverage the IoT Hub DPS, but it’s not required for managing a single device, which we will use in the following script.

Provisioning IoT Hub and enrolling a single device are automated with the Azure CLI in the following script. The commands in the script should be run in Bash. The script’s output should provide the connection string for the virtual device. Save the connection string so that you can configure the upcoming code example: https://github.com/PacktPublishing/Developing-Solutions-for-Microsoft-Azure-AZ-204-Exam-Guide/blob/main/Chapter12/05-iot-hub/demo.azcli.
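A device connection string typically takes the form HostName=...;DeviceId=...;SharedAccessKey=.... The following Python sketch shows how a device could parse such a string and derive a device-scoped SAS token. It assumes the token format Microsoft documents for IoT Hub devices: the signed resource is {HostName}/devices/{DeviceId}, and the base64-encoded device key is decoded before signing. The hub name, device ID, and key are hypothetical. The device SDKs normally do this work for you.

```python
import base64
import hashlib
import hmac
import time
import urllib.parse


def parse_connection_string(connection_string):
    """Split 'Key=Value;Key=Value' into a dict, keeping '=' padding inside values."""
    return dict(part.split("=", 1)
                for part in connection_string.split(";") if part)


def device_sas_token(connection_string, ttl_seconds=3600, now=None):
    """Derive a device-scoped IoT Hub SAS token from a device connection string."""
    settings = parse_connection_string(connection_string)
    resource = f"{settings['HostName']}/devices/{settings['DeviceId']}"
    issued_at = time.time() if now is None else now
    expiry = str(int(issued_at + ttl_seconds))
    encoded_resource = urllib.parse.quote_plus(resource)
    string_to_sign = f"{encoded_resource}\n{expiry}".encode("utf-8")
    # IoT Hub device keys are base64-encoded, so decode them before signing.
    key = base64.b64decode(settings["SharedAccessKey"])
    digest = hmac.new(key, string_to_sign, hashlib.sha256).digest()
    signature = urllib.parse.quote_plus(base64.b64encode(digest).decode("ascii"))
    # Device-scoped tokens carry no policy name (skn).
    return f"SharedAccessSignature sr={encoded_resource}&sig={signature}&se={expiry}"


if __name__ == "__main__":
    example = ("HostName=contoso-hub.azure-devices.net;DeviceId=sim-01;"
               "SharedAccessKey=" + base64.b64encode(b"example-device-key").decode())
    print(device_sas_token(example))
```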

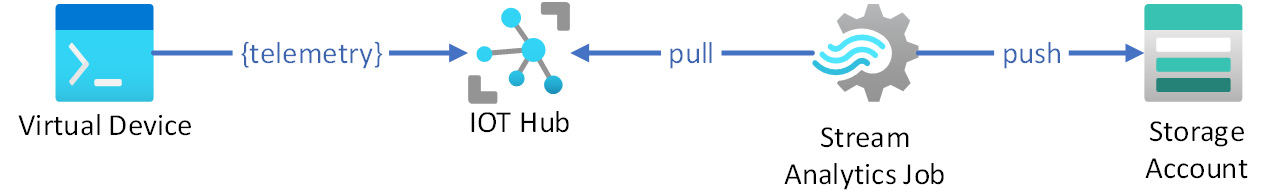

After running the commands in the script, you can observe the deployed resources in the Azure portal. The following resources should be provisioned: Azure IoT Hub with a virtual device, an Azure storage account for monitoring output, and an Azure Stream Analytics job to pull events from Azure IoT Hub. The device reports telemetry, and the job persists to the storage account only the messages with a humidity of more than 70%. The following schema will help you understand the flow of the event stream from an IoT device:

Figure 12.3 – Processing an event stream with IoT Hub and a Stream Analytics job

In the next section, we will learn how the IoT device can be configured to produce telemetry. The connection string from the script’s output should be saved for the next step.

Developing applications for Azure IoT Hub

IoT devices are available on a variety of platforms and for many of them, Microsoft provides SDKs that can be leveraged for connection. The main functionality of the SDK is scoped for the following tasks:

- Telemetry streaming: A telemetry stream is usually provided with an event sent to Azure IoT Hub. The body of an event is formatted in JSON and contains values collected from sensors.

- Receiving controlling messages: A device can be registered to receive cloud-to-device messages. The messages contain a body and key-value properties used to transfer commands and state. IoT Hub guarantees at-least-once delivery of these messages.

- Providing methods for invocation: Invocation methods are another way of managing a device from the cloud. They are commonly used to restart a device and update the firmware. They can also be leveraged for remote control.

- Handling configuration changes: The device configuration (named the device twin) describes the current device settings and contains the desired and reported properties for the device. The device itself should update its settings to the desired values and report the changes back to Azure IoT Hub. The twin is stored as a JSON document in IoT Hub.
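The desired/reported handshake can be sketched in Python as a reconciliation step over a simplified twin document. The sketch assumes a plain dictionary with properties.desired and properties.reported sections, and it skips metadata entries such as $version. The telemetryInterval property and the apply_setting callback are hypothetical. A real device would receive the desired properties and send the reported patch through the device SDK.

```python
def reconcile_twin(twin, apply_setting):
    """Apply desired properties that differ from reported ones and return the reported patch."""
    desired = twin["properties"]["desired"]
    reported = twin["properties"]["reported"]
    patch = {}
    for name, value in desired.items():
        if name.startswith("$"):  # metadata such as $version is not a setting
            continue
        if reported.get(name) != value:
            apply_setting(name, value)  # device-specific change (hypothetical hook)
            patch[name] = value
    reported.update(patch)  # what the device would report back to IoT Hub
    return patch


if __name__ == "__main__":
    twin = {"properties": {
        "desired": {"telemetryInterval": 10, "$version": 3},
        "reported": {"telemetryInterval": 60},
    }}
    applied = []
    print(reconcile_twin(twin, lambda n, v: applied.append((n, v))))  # {'telemetryInterval': 10}
    print(reconcile_twin(twin, lambda n, v: applied.append((n, v))))  # {}
```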

In the following example, you will learn how to connect your virtual device to Azure IoT Hub and stream telemetry. Because a real device is difficult to set up and configure, we will use a browser-based simulator that mimics the communication between an IoT device and IoT Hub. The simulator runs JavaScript code that you can modify. To complete the task, open the following link and replace the value of connectionString in line 15 with the connection string from the previous script run: https://azure-samples.github.io/raspberry-pi-web-simulator/.

Once you have replaced the connection string, you can hit the Run button on the gray output console to start the telemetry stream. The JavaScript code will start producing messages with temperature and humidity values following this format:

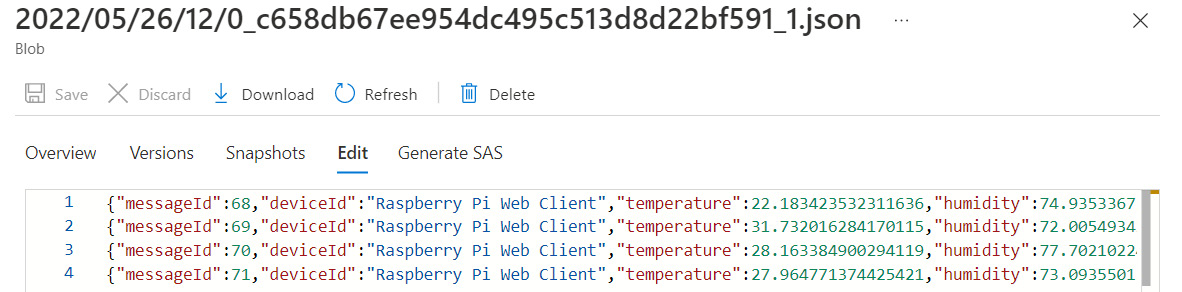

```
Sending message: {"messageId":3,"deviceId":"Raspberry Pi Web Client","temperature":29.837685611725128,"humidity":62.19790641562577}
```

You can observe the messages in the console. The messages will be delivered to Azure IoT Hub, pulled by the Stream Analytics job, and stored in Azure Blob storage in a container named state. The filename will depend on the current date and time. The blob file should only contain messages in which humidity is higher than 70%. The file’s content should look as follows:

Figure 12.4 – The collected telemetry data from the virtual device in Azure Blob storage
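You can reason about what ends up in the blob without deploying anything. The following Python sketch generates messages in the same shape as the simulator output and keeps only those with humidity above 70, the condition the Stream Analytics job applies. The value ranges (20 to 35 for temperature and 60 to 80 for humidity) are assumptions chosen to resemble the sample message. They are not taken from the simulator's actual code.

```python
import json
import random


def simulate_messages(count, seed=0, device_id="Raspberry Pi Web Client"):
    """Yield telemetry messages shaped like the simulator output (assumed value ranges)."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    for message_id in range(1, count + 1):
        yield {
            "messageId": message_id,
            "deviceId": device_id,
            "temperature": 20 + rng.random() * 15,
            "humidity": 60 + rng.random() * 20,
        }


def high_humidity(messages, threshold=70.0):
    """Keep only the messages the job would persist: humidity above the threshold."""
    return [message for message in messages if message["humidity"] > threshold]


if __name__ == "__main__":
    for message in high_humidity(simulate_messages(10)):
        print(json.dumps(message))
```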

The Raspberry Pi simulator is not the only way to mimic event streaming between an IoT device and Azure IoT Hub. There are many other options available, including the Azure code samples on GitHub: https://github.com/Azure-Samples/Iot-Telemetry-Simulator.

This simulator is written in C# and enables you to learn about additional aspects of Azure IoT Hub device-to-cloud and cloud-to-device collaboration. In the next section, you will learn more about event-based technologies and how to receive and submit events to Azure Event Grid.

Exploring Azure Event Grid

Azure Event Grid implements a reactive programming model, in which a specific algorithm is triggered by the events processed by Event Grid. For instance, let’s say that a blob stored in Azure Storage is modified (the file changes its access tier from Cool to Archive). Event Grid monitors the storage account for modifications, so it raises the appropriate event. The Azure function that monitors events from Event Grid receives the event and processes the blob according to the business logic (sending an email to the administrator). Access tiers were introduced in Chapter 6, Developing Solutions That Use Azure Blob Storage.

This example of monitoring blobs uses a pure reactive programming model. The Azure function does not repeatedly poll the blob for changes. Instead, it waits to be triggered by Azure Event Grid and reacts according to its logic. The solution schema is shown in the following diagram:

Figure 12.5 – Monitoring changes in Azure Storage with Event Grid and an Azure function

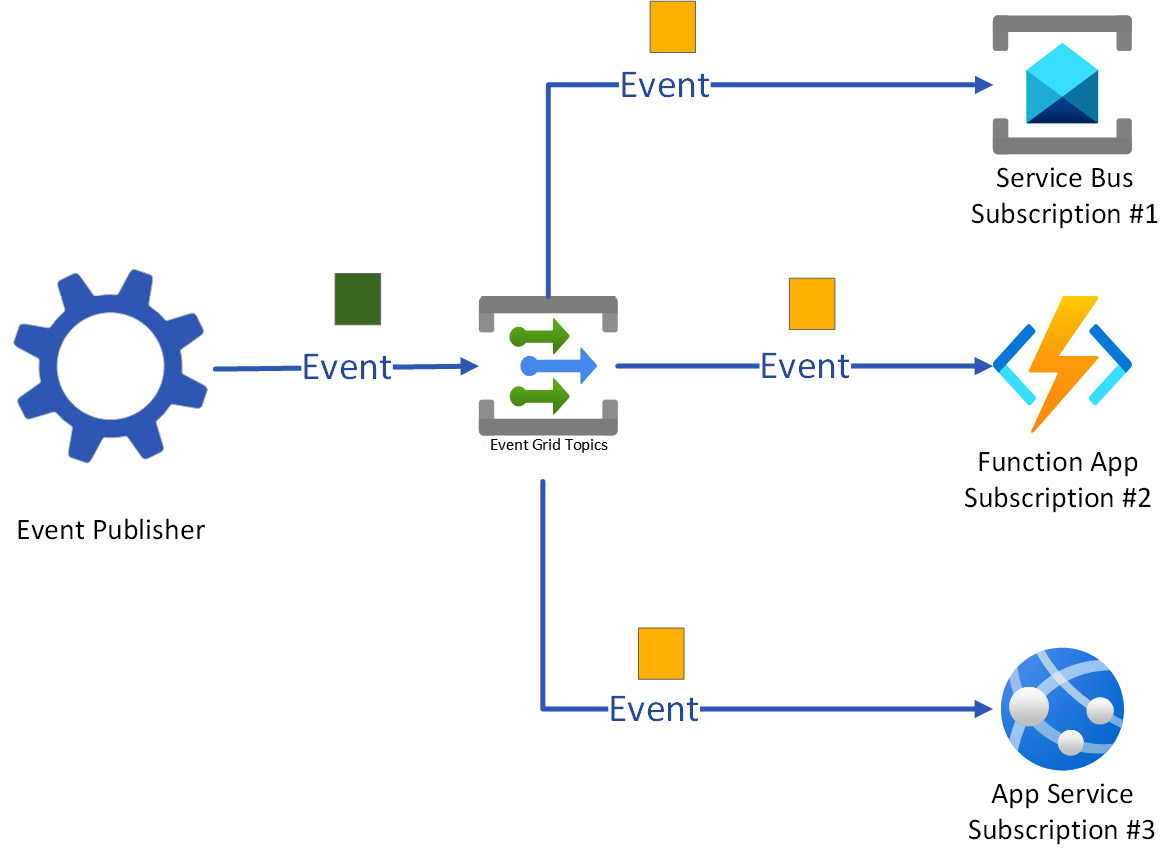

Event Grid can also be used for simple publish-subscribe event processing, similar to Azure Event Hubs. The significant difference is that Event Grid can duplicate an event for all registered subscribers, so the event is delivered to each service that subscribed to it. The Event Grid component that receives an event and delivers a copy to every registered subscriber is known as a topic.

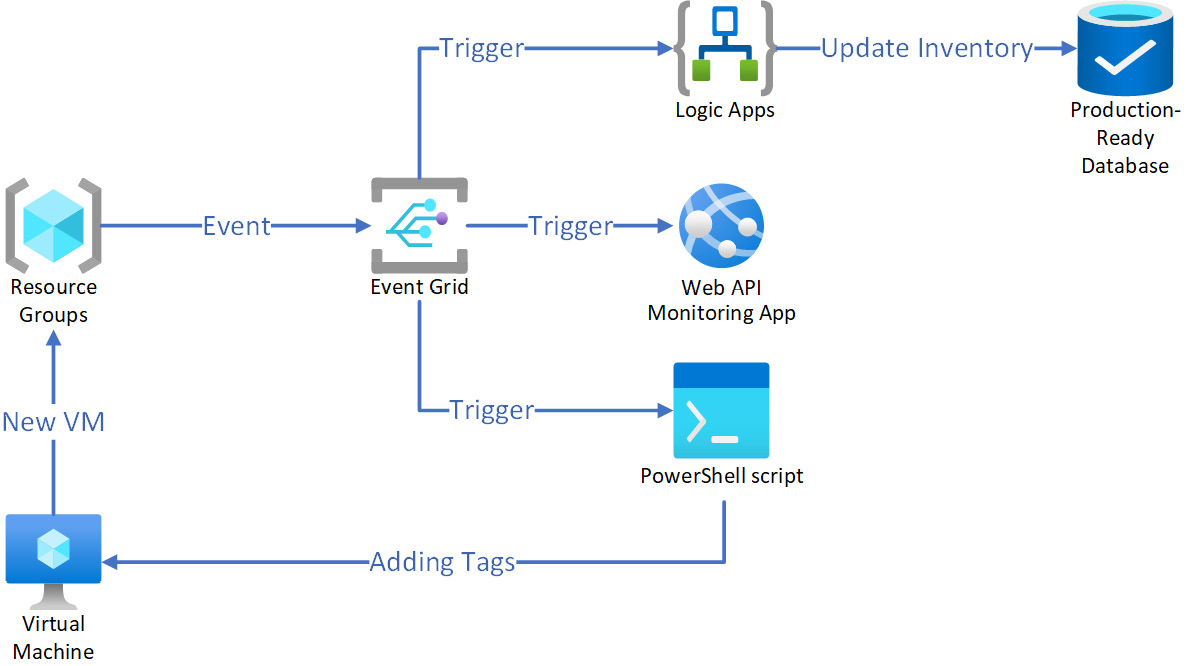

For example, let’s say there is a request to monitor a resource group for modifications (tracking quotas per type of resource). A new VM is provisioned in the resource group, and Event Grid triggers all subscribers for this type of event. An Azure logic app is triggered to update the third-party inventory system. An Azure Automation account is also triggered to run a PowerShell script that updates the resource tag on the VM. Finally, a custom tracking portal is triggered to log the monitoring event. Three subscribers are registered for updates on the resource group, and each of them receives its own copy of the event notifying it that the VM has been provisioned. Because every subscription receives a copy, subscribers do not compete with each other for events. This is unlike Event Hubs, where consumers pull events from partitions and the events are kept only until the retention period expires.

The solution schema with three subscriptions (Logic App, Automation, and web portal) can be seen in the following diagram:

Figure 12.6 – Resource group change monitoring with Event Grid

Since you are already familiar with most of the event-based processing terminology, in the next few sections, only new terms will be explained.

Event sources and handlers

Based on the preceding examples, there is a need to clarify Event Grid terminology to explain configuration aspects:

- Event source or publisher: For Event Grid, this is one of the following services: Azure Blob storage, Azure Resource Manager, Event Hubs or IoT Hub, Azure Service Bus, Azure Media Services, Azure Maps, or another Azure PaaS service. Moreover, Event Grid can work as a message broker. Your application can submit custom events to the public endpoint and they can be delivered to subscribers.

- Event handlers or subscribers: For Event Grid, these can be registered in one or more of the following services: Azure Automation runbooks and Logic Apps, Azure Functions, Event Hubs, Service Bus, and Azure Queue Storage. Moreover, you can register a custom webhook that points to a third-party endpoint, and it is triggered in the same way as an Azure service handler.

The relationship between the event source and the event handler is implemented by an Event Grid topic. The endpoint that’s used by the event source to send events is named the event topic. On the other hand, the event subscription is a record that contains event handler registration, the kind of topic it subscribes to, and the type of event it wants to receive.

The following schema represents event processing by Event Grid topics:

Figure 12.7 – Topic functionality – event processing from publisher to handlers

As you can see, event processing with topics in Event Grid is quite different from event processing in Event Hubs. The topic provides copies of events for each of the subscribers. The subscribers are represented by the different Azure services and process events according to the values defined by the schema.

Schema formats

The schema is a set of rules that defines the event structure. Event Grid supports two schema formats: the default Event Grid schema and the CloudEvents schema. CloudEvents is an open specification that is supported for interoperability across clouds and platforms. In the default event schema, the events processed by Event Grid are represented in JSON format and contain information such as the event type, subject, topic, ID, and version. The main payload of the event is stored in the data field, and its format depends on the publisher. The following JSON structure represents an event received from Event Grid:

```json
[
  {
    "topic": string,
    "subject": string,
    "id": string,
    "eventType": string,
    "eventTime": string,
    "data": {
      object-unique-to-each-publisher
    },
    "dataVersion": string,
    "metadataVersion": string
  }
]
```

According to this schema, an event carries a custom payload in the data field whose structure depends on the event publisher. The fields defined in the default event schema can also be used for filtering. An application that subscribes to the event should parse the JSON according to the schema and extract the data payload from the event. The payload can carry enough data to deserialize an object and its state in code; the maximum size of an event is 1 MB. You will see a real event payload in the next few sections as you provision your Event Grid instance.
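As a minimal sketch of the parsing step described above, the following Python function reads a request body in the default schema and returns the event type and data payload of each event. The function name and the sample event values are hypothetical; only the field names come from the schema shown above.

```python
import json
from typing import Any, List, Tuple

# Fields this sketch insists on; "data" is read with .get() because its shape depends on the publisher.
REQUIRED_FIELDS = ("id", "eventType", "subject", "eventTime")


def extract_payloads(body: str) -> List[Tuple[str, Any]]:
    """Parse a request body in the default Event Grid schema and return (eventType, data) pairs."""
    events = json.loads(body)
    if not isinstance(events, list):  # events arrive as a JSON array, even when there is only one
        raise ValueError("expected a JSON array of events")
    payloads = []
    for event in events:
        missing = [name for name in REQUIRED_FIELDS if name not in event]
        if missing:
            raise ValueError(f"event {event.get('id')!r} is missing fields: {missing}")
        payloads.append((event["eventType"], event.get("data")))
    return payloads


# Usage with a hypothetical custom event.
sample = json.dumps([{
    "topic": "/subscriptions/<subscription-id>/resourceGroups/demo-rg/providers/Microsoft.EventGrid/topics/demo-topic",
    "subject": "demo/console-app",
    "id": "b7e0c1a2-0000-0000-0000-000000000001",
    "eventType": "Demo.CustomEvent",
    "eventTime": "2024-01-01T00:00:00Z",
    "data": "This is the event data",
    "dataVersion": "1.0",
    "metadataVersion": "1",
}])
print(extract_payloads(sample))  # [('Demo.CustomEvent', 'This is the event data')]
```

In a real handler, you would then deserialize the data value into your own type based on the eventType.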

Access management

Authentication and authorization in Event Grid are handled by Azure Active Directory (Azure AD) and by access keys or shared access signature (SAS) tokens. Publishers use these credentials to submit events to topics. An access key grants full access to publish to the topic, so a SAS token, which is generated from the key and is valid only for a specific resource until an expiration time, should be used when more limited access is required. Alternatively, Event Grid supports Azure AD identities, which can be used to provide role-based access to topics and subscriptions. In the provisioning script shown in the Provisioning Azure Event Grid section, you will see examples of retrieving the keys with the Azure CLI. You can also copy the access keys from the Azure portal.
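The difference between an access key and a SAS token is easier to see in code. The following sketch builds a token with the general shape Event Grid uses: the resource, the expiration time, and an HMAC-SHA256 signature computed with the base64-decoded access key. It is modeled on the C# sample in Microsoft’s Event Grid authentication documentation. The expiry format (the .NET en-US date string), the URL-encoding details, the endpoint, and the key are assumptions for illustration, so verify the exact format against the current documentation before relying on it.

```python
import base64
import hashlib
import hmac
from datetime import datetime, timezone
from urllib.parse import quote_plus


def en_us_timestamp(moment: datetime) -> str:
    """Format like .NET's en-US DateTime.ToString(), for example '1/2/2030 3:04:05 PM'."""
    hour12 = moment.hour % 12 or 12
    suffix = "AM" if moment.hour < 12 else "PM"
    return f"{moment.month}/{moment.day}/{moment.year} {hour12}:{moment.minute:02d}:{moment.second:02d} {suffix}"


def build_sas_token(resource: str, expires_utc: datetime, key_b64: str) -> str:
    """Sketch of an Event Grid-style SAS token: r=<resource>&e=<expiry>&s=<HMAC-SHA256 signature>."""
    unsigned = f"r={quote_plus(resource)}&e={quote_plus(en_us_timestamp(expires_utc))}"
    digest = hmac.new(base64.b64decode(key_b64), unsigned.encode("utf-8"), hashlib.sha256).digest()
    signature = base64.b64encode(digest).decode("ascii")
    return f"{unsigned}&s={quote_plus(signature)}"


# Usage with a hypothetical topic endpoint and a dummy key (never hard-code real keys).
endpoint = "https://demo-topic.westus2-1.eventgrid.azure.net/api/events"
dummy_key = base64.b64encode(b"not-a-real-key").decode("ascii")
token = build_sas_token(endpoint, datetime(2030, 1, 2, 15, 4, 5, tzinfo=timezone.utc), dummy_key)
headers = {"aeg-sas-token": token}  # an access key would go in the aeg-sas-key header instead
```

Because the token embeds an expiration time and is tied to one resource, leaking it exposes less than leaking the access key itself.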

Event Grid event domains

The event domain is a logical structure that helps you manage a high number of event recipients and provides security isolation for subscribers by managing authorization and authentication settings. An Event Grid domain is provisioned as a separate resource, in the same way as an Event Grid topic. A domain contains multiple domain topics, which subscribers use to receive copies of events. Event Grid also supports domain-scoped subscriptions, in which the subscriber receives events submitted to all the topics in the domain. From an access management standpoint, Azure RBAC is used to manage subscription access for each tenant of your application.
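The routing behavior of a domain can be sketched in a few lines. In this hypothetical in-memory model (the `EventDomain` class and the tenant names are illustrative only), all events are published to one domain endpoint, the topic field of each event selects the domain topic, and a domain-scoped subscription receives events from every topic.

```python
from collections import defaultdict
from typing import Dict, List


class EventDomain:
    """Hypothetical in-memory model of an Event Grid domain; names are illustrative only."""

    def __init__(self) -> None:
        self.topic_subscribers: Dict[str, List[str]] = defaultdict(list)  # domain topic -> subscribers
        self.domain_subscribers: List[str] = []  # domain-scoped subscriptions see every topic
        self.inbox: Dict[str, List[dict]] = defaultdict(list)

    def subscribe_to_topic(self, topic: str, subscriber: str) -> None:
        self.topic_subscribers[topic].append(subscriber)

    def subscribe_to_domain(self, subscriber: str) -> None:
        self.domain_subscribers.append(subscriber)

    def publish(self, events: List[dict]) -> None:
        # All events go to the single domain endpoint; each event's "topic" field routes it.
        for event in events:
            targets = self.topic_subscribers.get(event["topic"], []) + self.domain_subscribers
            for subscriber in targets:
                self.inbox[subscriber].append(event)


# Usage: one domain topic per tenant, plus a domain-scoped auditing subscriber.
domain = EventDomain()
domain.subscribe_to_topic("tenant-a", "tenant-a-app")
domain.subscribe_to_topic("tenant-b", "tenant-b-app")
domain.subscribe_to_domain("auditor")
domain.publish([
    {"topic": "tenant-a", "id": "1", "eventType": "Demo.OrderPlaced"},
    {"topic": "tenant-b", "id": "2", "eventType": "Demo.OrderPlaced"},
])
print({name: len(events) for name, events in domain.inbox.items()})
# {'tenant-a-app': 1, 'auditor': 2, 'tenant-b-app': 1}
```

Each tenant only sees its own topic, which is the isolation that domains provide, while the auditor sees everything.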

Delivery retries

Errors can occur while Event Grid is delivering events, and they can delay or prevent delivery. The most common error occurs when the subscriber is unavailable, in which case Event Grid keeps retrying the delivery. You can customize how many times and for how long Event Grid retries; by default, it makes up to 30 delivery attempts within 24 hours. You can also specify a dead-letter location, a blob container in Azure Storage, to persist the events that are not delivered after the retries end. When you design your solution with Event Grid, be aware that events can be delivered to the subscriber out of sequence, because retry attempts for event delivery happen asynchronously. Retries are enabled by default, but dead-lettering is optional and is not configured by default. If dead-lettering is not configured, Event Grid drops the events that could not be delivered once the retry policy is exhausted.
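The following simulation sketches the retry and dead-letter logic described above. It does not call Azure and does not actually sleep: it returns the waits that would be applied between attempts. The delay values and the function name are illustrative assumptions, not Event Grid’s exact retry schedule.

```python
from typing import Callable, List, Optional

# Illustrative waits (seconds) between attempts; not the exact Event Grid schedule.
RETRY_DELAYS = [10, 30, 60, 300, 600]


def deliver_with_retries(event: dict, send: Callable[[dict], bool], max_attempts: int,
                         dead_letter: Optional[List[dict]] = None) -> List[int]:
    """Simulate delivery; return the waits that would be applied between attempts.

    If every attempt fails, the event goes to dead_letter when one is configured;
    otherwise it is dropped, mirroring Event Grid's behavior without dead-lettering.
    """
    waits: List[int] = []
    for attempt in range(1, max_attempts + 1):
        if send(event):
            return waits
        if attempt < max_attempts:
            waits.append(RETRY_DELAYS[min(attempt - 1, len(RETRY_DELAYS) - 1)])
    if dead_letter is not None:
        dead_letter.append(event)  # in Azure, a blob container in a storage account
    return waits


# Usage: a subscriber that is down for good, and one that recovers on the second attempt.
dead_letters: List[dict] = []
print(deliver_with_retries({"id": "1"}, lambda e: False, 3, dead_letters))  # [10, 30]
outcomes = iter([False, True])
print(deliver_with_retries({"id": "2"}, lambda e: next(outcomes), 3, dead_letters))  # [10]
print(dead_letters)  # [{'id': '1'}]
```

Because a failed event waits before its next attempt, an event published later can reach the subscriber first, which is why delivery order is not guaranteed.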

Filters

Another important concept that affects delivery to handlers is event filtering. Filters allow you to subscribe only to specific events (for example, successful or failed resource deployment, update, or deletion). Filters are based on specific fields (keys) of the event. These field values are provided in the JSON payload of the event and are evaluated by Event Grid before delivery. You can build a filter based on the event type, the subject, and the values of specific fields. Moreover, you can combine multiple filter conditions and use numeric, Boolean, and string operators. Filtering is a powerful feature that can significantly reduce the number of delivered events and handler runs, which lowers the cost of the solution.
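The following sketch shows how such a filter narrows down the events a handler receives. The parameter names mirror Event Grid’s filter options (included event types, subject begins with/ends with, and a StringIn-style advanced filter), but the matching logic is a simplified local model: case sensitivity and the other operators are not represented. The subjects are shortened, hypothetical resource paths.

```python
from typing import Any, Dict, Iterable, List, Optional


def get_field(event: dict, key: str) -> Any:
    """Resolve a dotted key such as 'data.operationName'; returns None if it is absent."""
    value: Any = event
    for part in key.split("."):
        if not isinstance(value, dict) or part not in value:
            return None
        value = value[part]
    return value


def matches(event: dict,
            included_event_types: Optional[Iterable[str]] = None,
            subject_begins_with: str = "",
            subject_ends_with: str = "",
            string_in: Optional[Dict[str, List[str]]] = None) -> bool:
    """Simplified filter check: every configured condition must hold."""
    if included_event_types is not None and event.get("eventType") not in included_event_types:
        return False
    subject = event.get("subject", "")
    if not (subject.startswith(subject_begins_with) and subject.endswith(subject_ends_with)):
        return False
    # Each StringIn-style condition passes if the field equals any of the listed values.
    for key, allowed in (string_in or {}).items():
        if get_field(event, key) not in allowed:
            return False
    return True


events = [
    {"eventType": "Microsoft.Resources.ResourceWriteSuccess",
     "subject": "/resourceGroups/demo-rg/providers/Microsoft.Compute/virtualMachines/vm1",
     "data": {"operationName": "Microsoft.Compute/virtualMachines/write"}},
    {"eventType": "Microsoft.Resources.ResourceWriteSuccess",
     "subject": "/resourceGroups/demo-rg/providers/Microsoft.Storage/storageAccounts/st1",
     "data": {"operationName": "Microsoft.Storage/storageAccounts/write"}},
    {"eventType": "Microsoft.Resources.ResourceDeleteSuccess",
     "subject": "/resourceGroups/demo-rg/providers/Microsoft.Compute/virtualMachines/vm2",
     "data": {"operationName": "Microsoft.Compute/virtualMachines/delete"}},
]
vm_writes = [e for e in events
             if matches(e,
                        included_event_types=["Microsoft.Resources.ResourceWriteSuccess"],
                        subject_begins_with="/resourceGroups/demo-rg/",
                        string_in={"data.operationName": ["Microsoft.Compute/virtualMachines/write"]})]
print(len(vm_writes))  # 1
```

Only one of the three events would trigger the handler, so the other two generate no handler runs.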

Pricing model

The Event Grid pricing model is consumption-based: you pay per operation, and the first 100,000 operations each month are free. Operations include event ingress and every attempt to deliver an event, including retries. Because each subscription receives its own copy of an event, the number of delivery operations grows with the number of processed events multiplied by the number of subscribed handlers.
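A quick back-of-the-envelope calculation helps when estimating costs. This sketch uses a simplified model (one ingress operation per event, one delivery per event per subscription, plus retries) and ignores other billable operations and event-size considerations. The price per million operations is a placeholder parameter, not a quoted rate; look up the current price for your region and tier.

```python
FREE_OPERATIONS_PER_MONTH = 100_000


def billable_operations(events: int, subscribers: int, retries: int = 0) -> int:
    """Simplified count: one ingress per event, one delivery per event per subscriber, plus retries."""
    total = events + events * subscribers + retries
    return max(0, total - FREE_OPERATIONS_PER_MONTH)


def monthly_cost(events: int, subscribers: int, price_per_million: float, retries: int = 0) -> float:
    """price_per_million is a placeholder; look up the current rate for your region and tier."""
    return billable_operations(events, subscribers, retries) / 1_000_000 * price_per_million


# 1,000,000 events, 3 subscriptions, no retries: 1M ingress + 3M deliveries - 100K free.
print(billable_operations(1_000_000, 3))  # 3900000
print(billable_operations(1_000_000, 1))  # 1900000
```

Dropping from three subscriptions to one roughly halves the billable operations in this example, which is also why filtering out unneeded deliveries reduces cost.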

Provisioning Azure Event Grid

Provisioning an Event Grid solution involves two resources: the Event Grid topic and the event subscription. If you start by provisioning the Event Grid topic, you can create an event subscription directly from the topic page. Alternatively, you can create an event subscription and choose which service will be used as the event publisher; in that case, the topic for that service (a system topic) is created automatically.

Manual topic creation is usually required for custom event processing scenarios. When Event Grid implements the event broker pattern, the event subscription for a custom topic usually points to a web-hosted service that receives events through a webhook. Instead of a general web-hosted service, the handler can also be Azure Functions, Azure App Service, Logic Apps, Event Hubs, and many others. Each topic can deliver events to more than one subscribed service. When the custom topic is provisioned, you can retrieve its public endpoint and access key to submit events. This scenario will be introduced later in this chapter.

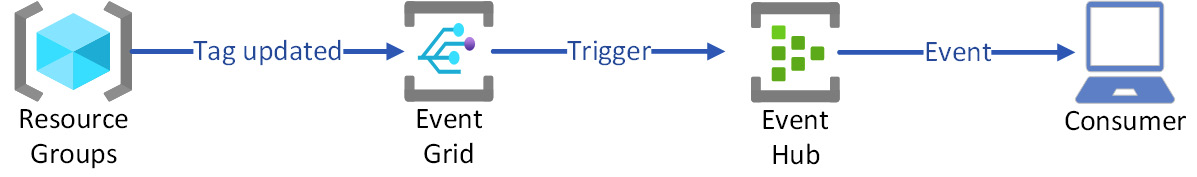

When you create an event subscription from the Azure portal, you have to select the event source. You can choose from several available services and objects, including a resource group or an Azure subscription. Resource group and Azure subscription sources publish events for all resource activities within their scope to the subscribed consumers. In the following example, you’ll build an event subscription for activities in a specific resource group and then trigger an event by updating the group’s tag. The subscription does not use any filters, so every activity generates an event.

During script execution, you connect to the Event Hubs instance that you previously deployed in your subscription. You can take the name of this Event Hubs instance from the output of the previous script. You also need to run the consumer application for the Event Hubs instance that you built earlier. It should be connected to the Event Hubs instance by using the connection string that was retrieved previously. No changes or code updates are required for Event Hubs or the consumer. The following script helps you provision an event subscription, connect Event Hubs as a subscriber, and trigger events by updating the tag value. Be aware that delivering the event to the Event Hubs consumer can take about a minute, so keep the consumer running while you update the tag. This is a PowerShell script; you can execute it in PowerShell mode in Cloud Shell, or locally if you install the Azure PowerShell module: https://github.com/PacktPublishing/Developing-Solutions-for-Microsoft-Azure-AZ-204-Exam-Guide/blob/main/Chapter12/03-eventgrid-provision/demo.ps1.

From the demo script, you will learn how to use event subscriptions. You’ll become familiar with the event structure by observing it in your consumer application. The following diagram will help you understand the event processing solution you have provisioned:

Figure 12.8 – Resource group change processing with Event Grid

The previously demonstrated solution does not involve any development skills, but the next example will leverage custom event handling in the reactive programming model. Let’s take a look at how the event broker can be implemented with Event Grid.

Developing applications for custom event handling

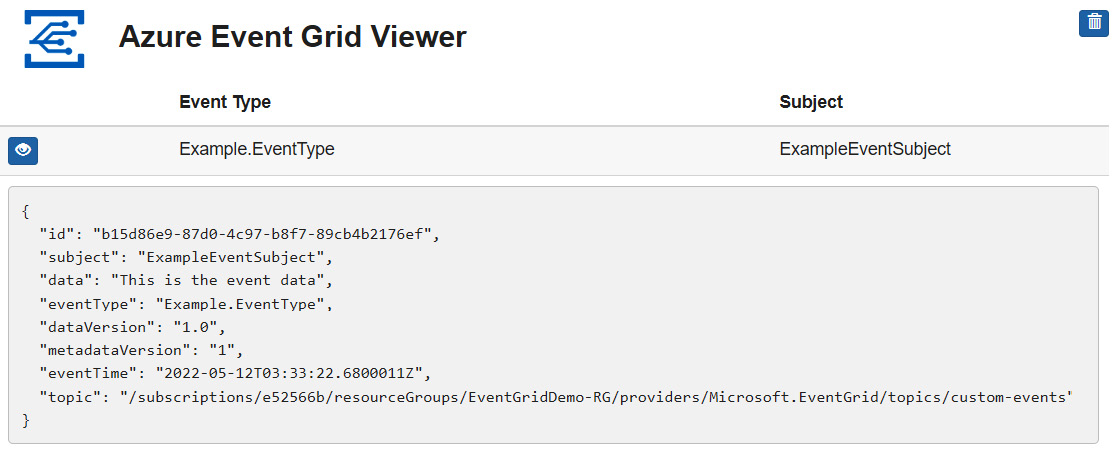

The custom event handling process requires a web-hosted application that provides a webhook URL for event subscriptions. That URL will be used to deliver the events via an HTTP POST request. For event handling, you can leverage the well-known project, Azure Event Grid Viewer (https://github.com/Azure-Samples/azure-event-grid-viewer). This tool can be used to receive an event, persist it in memory, and demonstrate the event’s payload. This tool is implemented as an ASP.NET Core MVC application and deserializes the event from the webhook request by using EventGridEvent from the Azure.Messaging.EventGrid package.
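Before Event Grid delivers events to a new webhook, it sends a Microsoft.EventGrid.SubscriptionValidationEvent, and the endpoint must prove that it owns the URL, for example, by echoing the validationCode from the event data back as validationResponse. Event Grid Viewer already handles this step. The following framework-independent function sketches what any custom webhook has to do. The function name is hypothetical, and wiring it into an actual HTTP server is not shown.

```python
import json
from typing import List, Optional, Tuple

VALIDATION_EVENT_TYPE = "Microsoft.EventGrid.SubscriptionValidationEvent"


def handle_webhook(body: str, received: List[dict]) -> Tuple[int, Optional[str]]:
    """Return (HTTP status, response body) for an Event Grid webhook POST in the default schema."""
    for event in json.loads(body):
        if event.get("eventType") == VALIDATION_EVENT_TYPE:
            # Echo the validation code back so Event Grid activates the subscription.
            return 200, json.dumps({"validationResponse": event["data"]["validationCode"]})
        received.append(event)  # keep ordinary events in memory, much like Event Grid Viewer does
    return 200, None


received: List[dict] = []
handshake = json.dumps([{
    "id": "1", "eventType": VALIDATION_EVENT_TYPE, "subject": "", "eventTime": "2024-01-01T00:00:00Z",
    "data": {"validationCode": "example-validation-code"}, "dataVersion": "1",
}])
print(handle_webhook(handshake, received))
```

Once the subscription is validated, later POST requests carry regular events, which the function stores for display.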

The following script will help you provision an event topic and deploy Event Grid Viewer to the App Service instance. Then, you can use the App Service URL to subscribe to the events. The script leverages Azure CLI commands and works better when run from Cloud Shell: https://github.com/PacktPublishing/Developing-Solutions-for-Microsoft-Azure-AZ-204-Exam-Guide/blob/main/Chapter12/04-eventgrid-process/demo.azcli.

Event submission to the custom topic is implemented by the console application. The application needs to be configured with the topic endpoint and access key provided in the output of the script run. Update the Program.cs file with appropriate values from the output. The code of the application is available at https://github.com/PacktPublishing/Developing-Solutions-for-Microsoft-Azure-AZ-204-Exam-Guide/tree/main/Chapter12/04-eventgrid-process/publisher.
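The console application uses the C# SDK, but it helps to see what actually goes over the wire. The following sketch builds, without sending, the HTTP POST that publishes one event in the default schema to a custom topic, authenticating with the aeg-sas-key header. The endpoint, key placeholder, event type, and subject are hypothetical stand-ins for the values from the script output. The Python azure-eventgrid package offers SDK equivalents (EventGridPublisherClient and EventGridEvent) if you prefer not to work with raw HTTP.

```python
import json
import uuid
from datetime import datetime, timezone
from urllib.request import Request


def build_publish_request(endpoint: str, access_key: str, data: object) -> Request:
    """Build, but do not send, a POST that publishes one event to a custom topic."""
    event = {
        "id": str(uuid.uuid4()),
        "eventType": "Demo.CustomEvent",   # hypothetical event type
        "subject": "demo/console-app",     # hypothetical subject
        "eventTime": datetime.now(timezone.utc).isoformat(),
        "data": data,
        "dataVersion": "1.0",
    }
    body = json.dumps([event]).encode("utf-8")  # the default schema expects an array of events
    return Request(endpoint, data=body, method="POST",
                   headers={"Content-Type": "application/json", "aeg-sas-key": access_key})


request = build_publish_request(
    "https://demo-topic.westus2-1.eventgrid.azure.net/api/events",  # hypothetical endpoint
    "<access-key-from-script-output>",
    "This is the event data",
)
# To actually send it: urllib.request.urlopen(request)
```

Keeping the request construction separate from sending makes it easy to inspect the payload before pointing it at a real topic.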

The preceding example demonstrated how to submit custom events to an Event Grid topic endpoint. The following SDK classes were used in the project:

| Class | Description |
|---|---|
| EventGridPublisherClient | Builds a client that sends events; configured with the topic endpoint and access key information. |
| EventGridEvent | Represents an event that is serialized to JSON according to the Event Grid event schema. |
| AzureKeyCredential | Holds the access key credential used to configure the client. |

Table 12.2 – C# SDK classes for event management with Event Grid

When you run the application, it generates a single event with the "This is the event data" text payload. You should be able to observe the event on the Azure Event Grid Viewer page. The following screenshot shows the viewer page with the event structure:

Figure 12.9 – Received custom event

In the previous examples, you learned how to provision Azure Event Grid and how to use custom Event Grid topics to submit events. You also learned how to create an event subscription to monitor resource group activities. You consumed the events delivered to Event Hubs through an Event Grid subscription by using the consumer application you built earlier. The other application you built submitted events to a custom Event Grid topic, where they were received by Event Grid Viewer.

In the next section, the available event-based services will be summarized in a table to help you understand their usage patterns.

Comparing Azure event-based services

This chapter has introduced you to several event processing services available in Azure. Some of these services can be used for the same task. For example, Azure Event Hubs and IoT Hub can both process streams of data, and both Event Grid and Event Hubs can be used as message brokers. However, a service may not be the optimal choice for a specific task. For example, Event Grid is designed to distribute discrete events to handlers and can introduce delivery delays, so it is not the best fit for high-volume data streams; you’re better off choosing Event Hubs for high-scale streaming workloads.

The following table will help you understand which of the services works best:

| Service | Main pattern | Pros | Cons |
|---|---|---|---|
| Event Hubs | Big data ingestion and streaming | High scale. Event persistence. Event capture. Low cost. | Consumers pull events and must manage their own position (checkpoints). Ordering is guaranteed only within a partition, which complicates ordering across multiple partitions. |
| IoT Hub | Telemetry streaming | Two-way communication. Device registration and settings management. Free tier with Standard-tier features. | Less flexible pricing model. Complex device integration with Azure IoT Edge. |
| Notification Hubs | Event broadcasting | Multi-platform support. Free tier. | Lack of troubleshooting tools. A limited set of supported platform notification services. |
| Event Grid | Pub/Sub and reactive programming | Consumption-based pricing. Integration with other services. Topic support. | Event delivery order is not guaranteed. |

Table 12.3 – Comparing Azure event-based services

Knowing the pros and cons of the different event-based services will help you select the optimal service for your solution and choose the appropriate answers in the exam.

Summary

Event-based technology plays a significant role in the data processing solutions hosted in Azure. The solutions based on event processing provide high-scale and high-availability services that are maintained in Azure with minimum administrative effort. Event-based services are commonly used for big data ingestion, telemetry stream processing, reactive programming, and mobile platform notifications.

Connectivity to the services and receiving events are implemented in custom applications based on SDKs and involve a variety of Azure services. Plug and play integration of Azure services is the biggest advantage of using event-based technology in Azure for asynchronous processing scenarios. By completing this chapter, you can select the appropriate service based on your requirements and leverage it to process events in an enterprise solution for your company.

In the next chapter, you will learn about the differences between message and event processing and will be able to maximize outcomes from the message-based services in Azure, such as Azure Queue Storage and Azure Service Bus.

Questions

Answer the following questions to test your knowledge of this chapter:

- What is a namespace?

- How is Azure IoT Hub different from Event Hubs?

- How long does an event persist in Event Hubs?

- What service helps you connect Azure Functions and Event Hubs?

- What type of authentication can be used to connect IoT devices to Azure IoT Hub?

- What is a possible misconfiguration for an event handler that will not allow it to receive all events sent by Event Grid?

Further reading

To learn more about the topics that were covered in this chapter, take a look at the following resources:

- The following article will help you learn about Event Hubs features and terminology:

https://docs.microsoft.com/en-us/azure/event-hubs/event-hubs-features

- The Event Hubs price tiers are discussed in the following document:

https://docs.microsoft.com/en-us/azure/event-hubs/compare-tiers

- You can learn about Event Grid terminology in the following article:

https://docs.microsoft.com/en-us/azure/event-grid/concepts

- The Event Grid event schema is explained in the following article:

https://docs.microsoft.com/en-us/azure/event-grid/event-schema

- Learn how the capturing of events is implemented in Event Hubs:

- The following article explains cloud-to-device communication options for Azure IoT Hub:

https://docs.microsoft.com/en-us/azure/iot-hub/iot-hub-devguide-c2d-guidance

- You can learn more about the combination of event-based and message-based solutions from the following article:

https://docs.microsoft.com/en-us/azure/event-grid/compare-messaging-services