14

Mock Exam Questions

At this point, we trust you have gained the knowledge and confidence to sit and pass the AZ-204 exam – we wish you the best of luck! Congratulations on making it to the end. We encourage you to take some time to review the Further reading sections of each chapter and do your own exploration. A single book can only cover so much – exercise your intellectual curiosity to expand your knowledge and understanding.

In this chapter, you’ll go through a mock exam that will give you exposure to some of the types of questions you might encounter in the exam. You can find the answers to these questions in the Mock Exam Answers section at the end of this book. The exam itself has between 40 and 60 questions, and you’ll have 100 or 120 minutes to answer them, depending on whether or not there are labs.
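As a rough planning aid, the figures above translate into an average time budget per question. The following is a minimal sketch only: the function name is hypothetical, and which duration pairs with which question count is an assumption, since the exam format can vary.

```python
# Rough per-question time budget for the question counts and durations quoted above.
# Assumption: any of the quoted durations may pair with any quoted question count.
def seconds_per_question(total_minutes: int, question_count: int) -> float:
    """Return the average number of seconds available per question."""
    if question_count <= 0:
        raise ValueError("question_count must be positive")
    return total_minutes * 60 / question_count


if __name__ == "__main__":
    for minutes in (100, 120):
        for questions in (40, 60):
            budget = seconds_per_question(minutes, questions)
            print(f"{questions} questions in {minutes} min: about {budget:.0f} s each")
```

Labs and case studies typically take longer than single-answer questions, so treat the average as an upper bound for the simpler question types.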

The questions in this mock exam are intended to give you an idea of the types of questions you might face in the exam. We can only cover certain types of questions in this book, but the exam can include other types of questions, such as drag and drop, simulations, and reordering lists.

A useful tool that Microsoft has made available for you to practice interacting with the different question types in a sandbox environment is the exam sandbox: https://aka.ms/examdemo. You don’t need a login to access it. The questions are samples and not reflective of the exam questions, but they give a good idea of what question types you might be presented with during the exam.

We wish you the very best of luck! Please remember to revisit chapters where needed and do your own exploration and testing to cement your understanding.

Implementing IaaS solutions

- You’re developing a solution that requires multiple Azure VMs to communicate with each other with the lowest possible latency between them.

Which one of the following features will meet this low-latency requirement?

- Availability zones

- Availability sets

- Proximity placement groups

- VM scale sets

- Your company uses ARM templates to define resources and their configurations that should be present in your resource group. After adding some additional resources to the resource group for testing, someone else deploys the same ARM template to the same resource group via the CLI, and you discover that the new resources you created have now been deleted while all other resources remain unimpacted.

Which one of the following commands could have had this impact?

- az deployment group create -g "RG-AZ-204" -n "Deployment_45" -f ./template.json -p deletenew="true"

- az deployment group create -g "RG-AZ-204" -n "Deployment_45" -f ./template.json --mode Complete

- az deployment group create -g "RG-AZ-204" -n "Deployment_45" -f ./template.json --mode Incremental

- az deployment group create -g "RG-AZ-204" -n "Deployment_45" -f ./template.json --force-no-wait

- You have a Dockerfile that you’d like to test locally before pushing it to a registry.

Which of the following commands should you use to build the container image on your local machine (assuming all of the prerequisites are in place)?

- docker run -it --im docker:file

- az acr build --image dockerfile/myimage:latest --file Dockerfile .

- docker build -t myimage:latest .

- az acr run --file Dockerfile .

- You’ve deployed an ASP.NET Core application to a single container in Azure Container Instances and need to collect the logs from the application and send them to long-term storage. A second container should be used to collect the logs from the application. You need to select a service that has the lowest cost and the fewest changes required to deploy the application.

Which one of the following services should you use to implement the solution?

- An Azure Container Instances container group

- A Kubernetes Pod

- Azure Container Registry

- A Log Analytics workspace

- The following ARM template successfully deploys a storage account with the correct configuration, and you would now like to store it centrally for others to use. In its current state, colleagues would need to change the name of the resource within the template each time to create a new storage account.

What changes should you make to the template so that colleagues can specify a name for the storage account without having to hardcode the value in the template each time?

{
  "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
  "contentVersion": "1.0.0.0",
  "resources": [
    {
      "type": "Microsoft.Storage/storageAccounts",
      "apiVersion": "2021-09-01",
      "name": "mysta",
      "location": "West Europe",
      "kind": "StorageV2",
      "sku": {
        "name": "Standard_LRS"
      },
      "properties": {
        "accessTier": "Hot"
      }
    }
  ]
}

- Create a new version of the template with different values for the name each time a new name is required and store it in a source control repository.

- No changes to the template are required. You can specify the name in the command line when deploying it.

- Create a parameters section in the template and add the name so that it can be specified at deployment time.

- Create a variables section in the template and add the name so that it can be specified at deployment time.

Creating Azure App Service web apps

- You’re developing a web app with the intention of deploying it to App Service and have been told that the messages generated by your application code need to be logged for review at a later date.

Which two of the following would be required to meet this application logging requirement?

- A Linux App Service plan

- A Windows App Service plan

- Blob application logging

- Filesystem application logging

- You’re developing an API application that runs in an App Service named myapi, which has the https://myapi.az204.com custom domain configured. This API is consumed by a JavaScript single-page app called myapp with the https://az204.com custom domain configured.

The part of the app trying to consume myapi isn’t working, giving an error that includes the phrase The Same Origin Policy disallows reading the remote resource at https://myapi.az204.com.

Which one of the following is likely to be required to resolve this?

- Change the custom domain of the app to https://myapp.az204.com.

- Run the az webapp up command from myapp.

- Run the az webapp cors command from myapi.

- Move myapi to the same resource group as myapp.

- Run the az webapp cors command from myapp.

- An App Service web app you’re developing needs to make outbound calls to a TCP endpoint over a specific port, which is hosted on an on-premises Windows Server 2019 Datacenter server. Outbound traffic to Azure over port 443 is allowed from the on-premises server. Which Azure service can be used to provide this access?

- Private endpoint

- Hybrid Connection

- Private Link

- VNet integration

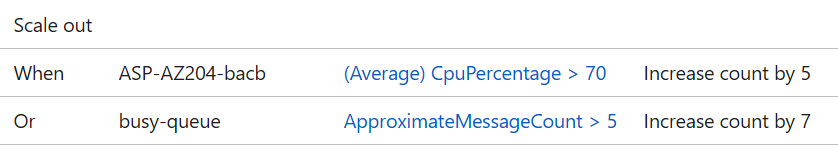

- An App Service web app has been configured with the scale-out autoscale rules shown in the following screenshot. One is monitoring a storage queue, and another is monitoring the CPU percentage of the App Service plan:

Figure 14.1 – Two scale-out autoscale rules in App Service

In a scenario where both rules are triggered at the same time, assuming the current instance count is 1 and the maximum is set to 15, which one of the following scale actions would be performed?

- The instance count increases to 7.

- The instance count increases to 5.

- The instance count increases to 12.

- The instance count doesn’t change as the two rules conflict.

Implementing Azure Functions

- You notice that a function hosted on a Consumption plan is always slow to run first thing in the morning. After the first run, the function runs again as expected.

What is the most likely reason for this behavior?

- A long-running function that started overnight needs to finish before processing the early morning request.

- The Always on setting is turned off overnight and enabled at a scheduled time automatically.

- The function instances scaled to 0 due to being idle, and it takes some time for a cold startup.

- You’ve inherited responsibility for a function that someone else created. This function uses a timer trigger, and you need to identify the schedule on which this function triggers.

The trigger has been configured with the following NCrontab syntax: 0 0 */2 * * mon-fri.

On what schedule does this timer trigger?

- Every 2 days between Monday and Friday (Monday, Wednesday, and Friday)

- Every Monday–Friday for a month every 2 months

- Every 2 hours from Monday–Friday

- Where do you configure triggers and bindings for a function written in Python?

- Update the function.json file.

- Decorate methods and parameters with the required attributes in code.

- Update the app configuration within the Azure portal.

Developing solutions that use Cosmos DB storage

- Your company uses Cosmos DB with the SQL API to store JSON documents. The documents are often updated and sometimes deleted. You want to use the Cosmos DB change feed to track changes.

Which statement from the following list about the Cosmos DB change feed is incorrect?

- The change feed is enabled in Cosmos DB by default.

- All modified documents are tracked in the change feed.

- The change feed is implemented in a first-in-first-out order.

- You can use SQL to query records in the change feed.

- Which of the following factors determines the number of physical partitions in Azure Cosmos DB with Core (SQL) API provisioned with exact throughput?

- The storage and consistency level

- The storage and throughput

- The throughput and consistency level

- The number of documents and throughput

- You develop a C# application to process credit card payments. You choose Cosmos DB with Core (SQL) API as a database to persist the payment info. The document schema is provided here:

{
  "Id": "",
  "customer": "",
  "cardNumber": "",
  "amount": ""
}

After reviewing your application design, the security engineer requests that you enable encryption for card payment information. The encryption technology must implement the following requirements:

- Encryption must be applied to all documents in the container.

- Encryption must protect sensitive payment information.

- Encryption keys must be controlled by the organization.

- The data must be encrypted in transit.

What encryption technology can you implement to meet the requirements?

- Enable Transparent Data Encryption.

- Enable encryption at rest with service-managed keys.

- Enable encryption at rest with customer-managed keys.

- Use Always Encrypted technology and configure the encryption policy with a /* path.

- Use Always Encrypted technology and configure the encryption policy with a /cardNumber path.

- You provision Cosmos DB with the Core (SQL) API in two Azure regions. You keep Session as the default consistency level for the collection. Meanwhile, one of the applications that works with Cosmos DB must ensure that no data is lost and that updates are applied to both regional instances in real time.

What action should you take to meet the requirements?

- Implement transactions and update documents in both regions individually.

- Change the default consistency level for the collection to Strong.

- Change the default consistency level for the collection to Consistent Prefix.

- In the application’s code, provide a Strong consistency level with RequestOptions when calling updates.

- Your application is tracking payment transactions with a single instance of Cosmos DB with the Core (SQL) API. Hundreds of transactions arrive at the end of the business day and need to be ingested into Cosmos DB with the Core (SQL) API as a single transaction per document. The business process requires the data to be ingested with maximum speed.

What action should you complete to meet the requirements?

- Change the default consistency level to Eventual.

- Change the default consistency level to Strong.

- Change the indexing mode to Consistent.

- Change the indexing mode to None.

- Implement a pre-trigger for create operations.

Developing solutions that use Azure Blob Storage

- Your company is going to host a single-page web application in Azure. You are required to provide a service with the following corresponding features:

- Availability in at least two regions.

- Support versioning for HTML and JS code.

- Register the website with the yourcompany.com custom domain.

- Use out-of-the-box features where possible.

- Minimum cost.

Which of the following services will you recommend?

- App Service on the Free tier

- App Service on the Basic tier

- An Azure Storage account

- An Azure VM

- Azure Container Instances

- Your application generates log files that should be stored in Azure Blob Storage. Which access and pricing tier do you recommend? The solution must minimize cost.

- The Hot tier for Premium storage

- The Cold tier for Premium storage

- The Archive tier for Standard storage

- The Hot tier for Standard storage

- You develop an application for IoT devices that persist files on Azure Blob Storage. You created a connection string using a single SAS key that allows read and write operations with the blobs. After the testing phase, one of the IoT devices was stolen, and the security engineer requested you revoke write access to the storage account from the stolen device.

What will you do to revoke the access?

- Regenerate the access keys for the storage account.

- Revoke the SAS from the list of generated signatures on the Azure portal.

- Generate another SAS token with read-only permission.

- Update the SAS expiration time from the Azure portal.

- Your application is connected to Azure Blob Storage and periodically crashes with an exception. You discover that the following code produces the exception:

BlobContainerClient containerClient =
    client.GetBlobContainerClient(containerName);
Dictionary<string, string> metaData
    = new Dictionary<string, string>()
    {
        { "Department", "Marketing" }
    };
await containerClient.SetMetadataAsync(metaData);

What might be the reason for the exception?

- The container does not exist.

- The container’s metadata format is not set properly.

- The organization has not registered the marketing department in Azure AD.

- Metadata can only be assigned to the blob, not to the containers.

Implementing user authentication and authorization

- An ASP.NET Core application that you’ve successfully deployed to an App Service needs to authenticate users with their Azure Active Directory (AAD) accounts. When you enable authentication for your application within the Azure portal, which one of the following types of resources is required to integrate your app with AAD?

- An application gateway

- An application proxy

- An app registration

- You’re developing an internal .NET Core application that will run on users’ devices and require them to authenticate with their AAD accounts. You are using the Microsoft.Identity.Client NuGet package in your project:

var app = [missing word]
    .Create(_clientId)
    .WithAuthority(AzureCloudInstance.AzurePublic, _tenantId)
    .WithRedirectUri("http://localhost")
    .Build();

Which one of the following is the missing word from the application code in the preceding code sample?

- PublicClientApplicationBuilder

- PrivateClientApplicationBuilder

- ConfidentialClientApplicationBuilder

- ClientApplication

- An ASP.NET Core application you’re developing on App Service connects to a container in an Azure Storage account using a SAS token, which is stored in an application setting of the App Service.

Your security team has advised that SAS tokens need to be managed in a way that allows you to modify the permissions and validity of those tokens.

Which three of the following can be performed to meet this requirement?

- Run the az storage container generate-sas command.

- Run the az storage container lease change command.

- Run the az storage container policy create command.

- Run the az webapp config appsettings set command.

- Run the az webapp config access-restriction add command.

- Run the az appconfig create command.

Implementing secure cloud solutions

- An App Service web app you’re developing needs to access a secret from one of your key vaults using an identity that shares the same life cycle as the web app.

Which two of the following commands should be used to enable this (in addition to any code changes that might be required)?

- az webapp identity assign

- az keyvault set-policy

- az identity create

- az policy assignment create

- You’re developing a solution that implements several Azure functions and an App Service web app.

You need to centrally manage the application features and configuration settings for all the functions and the web app. You’re already using Azure Key Vault for the central management of application secrets.

Which one of the following services is most appropriate to use in this scenario?

- Azure Key Vault

- Application groups

- API connections

- App Configuration

- Developers within your company have been doing local development and testing with application configuration key-value pairs in a local JSON file.

You need to import the key-value pairs into an App Configuration resource.

Which one of the following commands can be used to achieve this?

- az webapp config backup restore

- az appconfig kv import

- az keyvault backup start

- az kv import config

Integrating caching and content delivery within solutions

- You are deploying a web application that uses Azure Cache for Redis to an Azure VM. You need to configure firewall rules to let the VM communicate with instances of the cache. What protocol should be enabled to allow communication?

- TCP

- UDP

- HTTP

- MQTT

- You build an instance of Azure Cache for Redis and connect your web application to the instance. After starting the web application, you want to observe the values stored in the cache without exporting the full cache content.

What tool can you not use to view data stored in Azure Cache for Redis?

- Visual Studio Code

- redis-cli.exe

- The Redis console from the Azure portal

- Cloud Shell

- The Azure CLI

- Your application uses Azure Cognitive Services to analyze communication with clients. The requests and responses use RESTful services and are formatted in JSON. Your manager notices that many requests from clients are identical and responses for them can be reused.

You have been asked to recommend an Azure service to do the following:

- Minimize the number of requests from your application to Azure Cognitive Services.

- Configure how long the response can be reused.

- Use out-of-the-box solutions where possible.

- Minimize costs and provide the best performance.

What service can you recommend?

- Azure CDN

- Azure Front Door

- Azure Cache for Redis

- API Management

- An Azure Storage account

- Your company is developing an antivirus solution. Your application is used by millions of clients spread across the world. Whenever a new virus is detected, you update the binaries of your application. The updates are delivered as files of about 50 MB in size that need to be installed on the client machine. The update must be delivered ASAP. You have been asked to recommend an Azure PaaS service to distribute the updates.

Which of the following services will help you implement the distribution of updates and minimize the cost?

- An Azure Storage account

- Azure App Service

- Azure Data Lake

- Azure CDN

- Azure DevOps

- Azure Container Registry

- Your company is hosting a website on Azure App Service. Additionally, your company is using a CDN to store JavaScript and media files. Recently, users have complained about JS errors occurring on the main page. After troubleshooting the error, you find the bug and fix it in the JS file. However, users are still complaining about the errors. After additional troubleshooting, you discover that users are still using the old version of the JS file with the bug.

What should you do to make sure that the issue is fixed for the users?

- Restart the VM of users who are still complaining about errors.

- Ask the user to install the Edge Insider browser.

- Restart your web app.

- Purge the Azure CDN endpoint.

- Delete the CDN and create a new CDN profile.

Instrumenting solutions to support monitoring and logging

- Your console application is using Azure SQL Database. Your application is one of many other applications that query databases during the day. You suspect that the performance of the SQL database has been degraded. You want to collect information on query performance and compare it with the previous day.

What actions should you take with minimum administrative overhead?

- From the Azure SQL instance, choose Enable Query Performance Insight to investigate the query performance.

- From Application Insights, choose Application Map and investigate the performance of SQL Server.

- From Application Insights, choose Performance, and then select Profiler to profile the request to SQL Server.

- From the Log Analytics workspace, connect your Azure SQL instance and choose Query Performance Insight.

- From Azure Monitor, choose the CPU percentage metric to monitor CPU utilization per request.

- Your ASP.NET Core MVC application contains the following code in the controller class:

// GET: Get Clients list
public async Task<IActionResult> Index()
{
    TelemetryClient telemetry = new TelemetryClient();
    Stopwatch stopwatch = new Stopwatch();
    stopwatch.Start();
    var clients = _context.Clients.ToList();
    stopwatch.Stop();
    telemetry.TrackMetric("TimeToTrack",
        stopwatch.ElapsedMilliseconds);
    return View(clients);
}

What will the result of the execution of this code be?

- Application Insights creates a performance record for the Index page view.

- Application Insights traces a custom event when the Index page is requested.

- Application Insights traces a custom event when the client list is loaded for the Index page.

- Application Insights tracks the custom metric to log the time of loading the Index page.

- Application Insights tracks the custom metric to log the time of loading the clients’ list for the Index page.

- You need to collect and query IIS logs from an Azure VM (IaaS) that runs your website. What are the activities you need to perform on the Azure portal and VM?

- From the VM page on the Azure portal, configure log exporting in the storage account.

- From the VM page on the Azure portal, configure log exporting to Event Hubs.

- From the VM page on the Azure portal, export the activity log in CSV format.

- Deploy the Azure Log Analytics workspace. From the workspace’s page, connect the VM and enable IIS log collection.

- From the VM page on the Azure portal, open the log interface and write a KQL query to find the log activities.

- You are observing frequent crashes on your production ASP.NET Core web application hosted on the Azure App Service platform. When you try to run the application locally, you cannot reproduce the issue. Which of the following Azure techniques gives you the best troubleshooting experience? Choose the most effective and least invasive approach:

- Add the tracing commands to the crashing function. Update the web app and reproduce the issue. Analyze the application logs with tracing commands.

- Enable remote debugging, connect to the production web application, and set a breakpoint on the function that crashes. Reproduce the issue and investigate the variables and parameters.

- Configure and collect the Detailed error messages and Failed requests tracing logs from App Service.

- Configure Application Insights to collect a debugging snapshot and analyze it on the Application Insights page.

- Configure Application Insights to collect a profiling trace for the crashed function.

Implementing API Management

- You find the following code snippet of an APIM policy:

<policies>
    <inbound>
        <rate-limit-by-key calls="7"
            renewal-period="60"
            increment-condition="@(context.Response.StatusCode == 200)"
            counter-key="@(context.Request.IpAddress)"
            remaining-calls-variable-name="remainingCallsPerIP"/>
    </inbound>
</policies>

What is the result of applying the preceding policy?

- Any calls from any customer with any IP address will be accepted at a rate of seven calls per hour.

- Any seven successful calls per minute will be accepted from customers with the same IP address.

- Any seven calls per minute will be accepted from customers with the same IP address.

- Any seven calls per hour will be accepted from customers with the same IP address.

- If APIM returns an error, the call will be counted toward the rate limit of seven calls per minute.

- Your company exposes a weather forecast web API on an API Management instance. Your APIM instance should call a backend web API that supports authentication. What type of backend authentication is not supported by APIM?

- Managed identities

- Basic authentication

- Client certificate authentication

- Windows Authentication

- Your company creates an API Management instance and releases products to allow access to the analytics web API. Which of the following listed features is not supported by API Management?

- Import endpoint definition.

- Allow developers to test your analytics API.

- Provide public access to the web API endpoints.

- Back up the usage data.

- Generate a response for a request without querying the underlying web API.

- Track policy changes in source control.

Developing event-based solutions

- You need to diagnose the content of incoming events to detect malfunctioning applications. Thousands of incoming events from hundreds of applications are collected by Azure Event Hubs. Event Hubs is provisioned with the Basic pricing tier. What solution can you recommend to minimize cost and administrative effort?

- Provision Event Grid, which is triggered whenever the event is received by Event Hubs and triggers an Azure function that logs the event in Cosmos DB.

- Scale Event Hubs to the Standard tier to increase the partition count, and reconfigure each of the connected applications to send the event for an individual partition.

- Scale Event Hubs to the Standard tier and enable the capturing of events in a Data Lake account.

- Enable Azure Monitor to track the received events by Event Hubs.

- Enable Azure Application Insights to track the received events by Event Hubs.

- Connect Event Hubs to a Log Analytics workspace, and use KQL to query the content of events.

- Your IoT device constantly generates a stream of telemetry values collected by Azure IoT Hub (Basic SKU). You notice that the temperature value provided by the device is rising, and the device should be able to turn on the cooling system to avoid overheating. The device logic should be dynamically adjusted to the changes in temperature threshold. The threshold that is configured should be controlled on the server.

You need to recommend a solution that minimizes the reaction time and minimizes the total cost of the solution. Which of the following would you choose?

- Collect historical data on the temperature, and train an ML model in Azure ML Studio. When the temperature value is received, the ML model can recommend turning on the cooling system.

- You configure an Azure Stream Analytics job to monitor reported temperature values. When the value exceeds the threshold, the temperature value is logged in a file located in an Azure Blob Storage. Azure Functions running on an IoT Edge device triggers when the file is updated and starts the cooling system.

- Azure IoT Hub can use cloud-to-device communication and send a command to the device to start cooling when the temperature exceeds a certain threshold.

- Azure IoT Hub can use twin settings to provide the temperature threshold, and the device will start cooling when the collected temperature exceeds the threshold. Upgrading Azure IoT Hub to the Standard SKU is required.

- You can use Azure IoT Edge with a custom Docker container that measures the temperature and starts cooling when the temperature exceeds the threshold hardcoded in the container. Updating the threshold can be performed by rebuilding the container image.

- You provisioned a new Event Hubs namespace named namespace1 and a new Event Hubs instance named eventhub1 in your Azure subscription. Which of the following commands will help you generate the connection string for applications that are going to send events to Azure Event Hubs? The principle of least privilege should be used to select the required command:

- az eventhubs eventhub show --name eventhub1

- az eventhubs eventhub authorization-rule keys create --eventhub-name eventhub1

- az eventhubs eventhub authorization-rule create --eventhub-name eventhub1 --rights Send

- az eventhubs eventhub authorization-rule create --eventhub-name eventhub1 --rights Manage

- az eventhubs georecovery-alias authorization-rule keys list --namespace-name namespace1

Developing message-based solutions

- You’re developing a message consumer for Azure Queue Storage. The consumer runs on the App Service WebJobs platform in Azure. You need to implement autoscaling rules to scale out the consumer instances horizontally when the total amount of messages in the queue increases by 100 messages.

What is the name of the metric you should use in the configuration of criteria for scaling?

- Approximate message count

- Count of active messages in the queue

- Completed messages

- Incoming messages

- You develop a consumer application to process money transfer transactions from a bank. Each transaction is delivered in a single message. All generated transactions need to be processed in sequence. Losing any transactions is unacceptable. The solution should provide maximum tolerance for connection losses experienced by the application.

What service do you recommend for implementing transaction processing?

- Event Grid with a custom topic

- Event Hubs with an increased retention period

- Azure Queue Storage with the Geo-Redundant option

- Azure Service Bus queue with increased maximum deliveries

- You are developing an application based on the reactive programming model. The application should monitor Azure Service Bus and trigger custom logic when a message is received. The application should always run as a service and react to messages in real time without restarts.

Which of the following C# SDK classes should you use to implement the requirements?

- ServiceBusSender

- ServiceBusReceiver

- ServiceBusProcessor

- ServiceBusClient