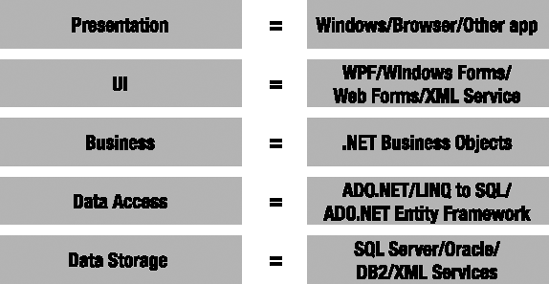

In Chapter 1, I discussed some general concepts about physical and logical n-tier architecture, including a 5-layer model for describing systems logically. In this chapter, I take that 5-layer logical model and expand it into a framework design. Specifically, this chapter will map the logical layers against the technologies illustrated in Figure 2-1.

The CSLA .NET framework itself will focus on the Business Logic and Data Access layers. This is primarily due to the fact that there are already powerful technologies for building Windows, web (browser-based and XML-based services), and mobile interface layers. Also, there are already powerful data-storage options available, including SQL Server, Oracle, DB2, XML documents, and so forth.

Recognizing that these preexisting technologies are ideal for building the Presentation and UI layers, as well as for handling data storage, allows business developers to focus on the parts of the application that have the least technological support, where the highest return on investment occurs through reuse. Analyzing, designing, implementing, testing, and maintaining business logic is incredibly expensive. The more reuse achieved, the lower long-term application costs become. The easier it is to maintain and modify this logic, the lower costs will be over time.

Note

This is not to say that additional frameworks for UI creation or simplification of data access are bad ideas. On the contrary, such frameworks can be very complementary to the ideas presented in this book; and the combination of several frameworks can help lower costs even further.

When I set out to create the architecture and framework discussed in this book, I started with the following set of high-level guidelines:

The task of creating object-oriented applications in a distributed .NET environment should be simplified.

The interface developer (Windows, web service, or workflow) should never see or be aware of SQL, ADO.NET, or other raw data concepts but should instead rely on a purely object-oriented model of the problem domain.

Business object developers should be able to use "natural" coding techniques to create their classes—that is, they should employ everyday coding using fields, properties, and methods. Little or no extra knowledge should be required.

The business classes should provide total encapsulation of business logic, including validation, manipulation, calculation, and authorization. Everything pertaining to an entity in the problem domain should be found within a single class.

It should be possible to achieve clean separation between the business logic code and the data access code.

It should be relatively easy to create code generators, or templates for existing code generation tools, to assist in the creation of business classes.

An n-layer logical architecture that can be easily reconfigured to run on one to four physical tiers should be provided.

Complex features in .NET should be used, but they should be largely hidden and automated (WCF, serialization, security, deployment, etc.).

The concepts present in the framework from its inception should carry forward, including validation, authorization, n-level undo, and object-state tracking (

IsNew, IsDirty, IsDeleted).

In this chapter, I focus on the design of a framework that allows business developers to make use of object-oriented design and programming with these guidelines in mind. After walking through the design of the framework, Chapters 6 through 16 dive in and implement the framework itself, focusing first on the parts that support UI development and then on the providing of scalable data access and object-relational mapping for the objects. Before I get into the design of the framework, however, let's discuss some of the specific goals I am attempting to achieve.

When creating object-oriented applications, the ideal situation is that any nonbusiness objects already exist. This includes UI controls, data access objects, and so forth. In that case, all developers need to do is focus on creating, debugging, and testing the business objects themselves, thereby ensuring that each one encapsulates the data and business logic needed to make the application work.

As rich as the .NET Framework is, however, it doesn't provide all the nonbusiness objects needed in order to create most applications. All the basic tools are there but there's a fair amount of work to be done before you can just sit down and write business logic. There's a set of higher-level functions and capabilities that are often needed but aren't provided by .NET right out of the box.

These include the following:

Validation and maintaining a list of broken business rules

Standard implementation of business and validation rules

Tracking whether an object's data has changed (is it "dirty"?)

Integrated authorization rules at the object and property levels

Strongly typed collections of child objects (parent-child relationships)

N-level undo capability

A simple and abstract model for the UI developer

Full support for data binding in WPF, Windows Forms, and Web Forms

Saving objects to a database and getting them back again

Custom authentication

Other miscellaneous features

In all of these cases, the .NET Framework provides all the pieces of the puzzle, but they must be put together to match your specialized requirements. What you don't want to do, however, is to have to put them together for every business object or application. The goal is to put them together once so that all these extra features are automatically available to all the business objects and applications.

Moreover, because the goal is to enable the implementation of object-oriented business systems, the core object-oriented concepts must also be preserved:

Abstraction

Encapsulation

Polymorphism

Inheritance

The result is a framework consisting of a number of classes. The design of these classes is discussed in this chapter and their implementation is discussed in Chapters 6 through 16.

Tip

The Diagrams folder in the Csla project in the code download includes FullCsla.cd, which shows all the framework classes in a single diagram. You can also get a PDF document showing that diagram at www.lhotka.net/cslanet/download.aspx.

Before getting into the details of the framework's design, let's discuss the desired set of features in more detail.

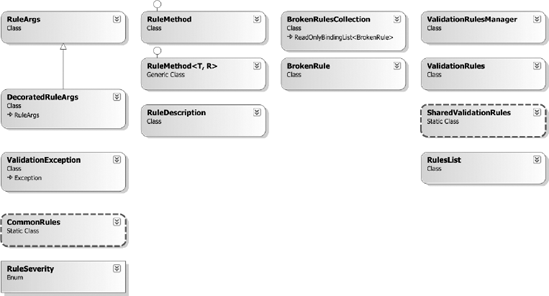

A lot of business logic involves the enforcement of validation rules. The fact that a given piece of data is required is a validation rule. The fact that one date must be later than another date is a validation rule. Some validation rules involve calculations and others are merely toggles. You can think about validation rules as being either broken or not. And when one or more rules are broken the object is invalid.

A similar concept is the idea of business rules that might alter the state of the object. The fact that a given piece of text data must be all uppercase is a business rule. The calculation of one property value based on other property values is a business rule. Most business rules involve some level of calculation.

Because all validation rules ultimately return a Boolean value, it is possible to abstract the concept of validation rules to a large degree. Every rule is implemented as a bit of code. Some of the code might be trivial, such as comparing the length of a string and returning false if the value is zero. Other code might be more complex, involving validation of the data against a lookup table or through a numeric algorithm. Either way, a validation rule can be expressed as a method that returns a Boolean result.

Business rules typically alter the state of the object and usually don't enforce validation at the same time. Still, every business rule is implemented as a bit of code, and that code might be trivial or very complex.

The .NET Framework provides the delegate concept, making it possible to formally define a method signature for a type of method. A delegate defines a reference type (an object) that represents a method. Essentially, delegates turn methods into objects, allowing you to write code that treats the method like an object; and of course they also allow you to invoke the method.

I use this capability in the framework to formally define a method signature for all validation and business rules. This allows the framework to maintain a list of validation rules for each object, enabling relatively simple application of those rules as appropriate. With that done, every object can easily maintain a list of the rules that are broken at any point in time and has a standardized way of implementing business rules.

Note

There are commercial business rule engines and other business rule products that strive to take the business rules out of the software and keep them in some external location. Some of these are powerful and valuable. For most business applications, however, the business rules are typically coded directly into the software. When using object-oriented design, this means coding them into the objects.

A fair number of validation rules are of the toggle variety: required fields, fields that must be a certain length (no longer than, no shorter than), fields that must be greater than or less than other fields, and so forth. The common theme is that validation rules, when broken, immediately make the object invalid. In short, an object is valid if no rules are broken but is invalid if any rules are broken.

Rather than trying to implement a custom scheme in each business object in order to keep track of which rules are broken and whether the object is or isn't valid at any given point, this behavior can be abstracted. Obviously, the rules themselves are often coded into an application, but the tracking of which rules are broken and whether the object is valid can be handled by the framework.

Tip

Defining a validation rule as a method means you can create libraries of reusable rules for your application. The framework in this book includes a small library with some of the most common validation rules so you can use them in applications without having to write them.

The result is a standardized mechanism by which the developer can check all business objects for validity. The UI developer should also be able to retrieve a list of currently broken rules to display to the user (or for any other purpose).

Additionally, this provides the underlying data required to implement the System.ComponentModel.IDataErrorInfo interface defined by the .NET Framework. This interface is used by the data binding infrastructure in WPF and Windows Forms to automate the display of validation errors to the user.

Some validation rules may interact with the database or could be very complex in other ways. In these cases, you may want to allow the user to move on to editing other data while a validation rule is running in the background. To this end, you can choose to implement a validation rule (though not a business rule) to run asynchronously on a background thread.

The reason this only works with validation rules is that an async rule method won't have access to the real business object. For thread safety reasons, it is provided with a copy of the property values to be validated so it can do its work. Since a business rule manipulates the business object's data, I don't allow them to be implemented as an async operation.

Another concept is that an object should keep track of whether its state data has been changed. This is important for the performance and efficiency of data updates. Typically, data should only be updated into the database if the data has actually changed. It's a waste of effort to update the database with values it already has. Although the UI developer could keep track of whether any values have changed, it's simpler to have the object take care of this detail and it allows the object to better encapsulate its behaviors.

This can be implemented in a number of ways, ranging from keeping the previous values of all fields (allowing comparisons to see if they've changed) to saying that any change to a value (even "changing" it to its original value) will result in the object being marked as having changed.

Rather than having the framework dictate one cost over the other, it will simply provide a generic mechanism by which the business logic can tell the framework whether each object has been changed. This scheme supports both extremes of implementation, allowing you to make a decision based on the requirements of a specific application.

Applications also need to be able to authorize the user to perform (or not perform) certain operations or view (or not view) certain data. Such authorization is typically handled by associating users with roles and then indicating which roles are allowed or disallowed for specific behaviors.

Note

Authorization is just another type of business logic. The decisions about what a user can and can't do or can and can't see within the application are business decisions. Although the framework will work with the .NET Framework classes that support authentication, it's up to the business objects to implement the rules themselves.

Later, I discuss authentication and how the framework supports both integrated Windows and AD authentication and custom authentication. Either way, the result of authentication is that the application has access to the list of roles (or groups) to which the user belongs. This information can be used by the application to authorize the user as defined by the business.

While authorization can be implemented manually within the application's business code, the business framework can help formalize the process in some cases. Specifically, objects must use the user's role information to restrict what properties the user can view and edit. There are also common behaviors at the object level—such as loading, deleting, and saving an object—that are subject to authorization.

As with validation rules, authorization rules can be distilled to a set of fairly simple yes/no answers. Either a user can or can't read a given property. Either a user can or can't delete the object's data. The business framework includes code to help a business object developer easily restrict which object properties a user can or can't read or edit and what operations the user can perform on the object itself. In Chapter 12, you'll also see a common pattern that can be implemented by all business objects to control whether an object can be retrieved, deleted, or saved.

Not only does this business object need access to this authorization information but the UI does as well. Ideally, a good UI will change its display based on how the current user is allowed to interact with an object. To support this concept, the business framework will help the business objects expose the authorization rules such that they are accessible to the UI layer without duplicating the authorization rules themselves.

The .NET Framework includes the System.Collections.Generic namespace, which contains a number of powerful collection objects, including List<T>, Dictionary<TKey, TValue>, and others. There's also System.ComponentModel.BindingList<T>, which provides collection behaviors and full support for data binding, and the less capable System.ComponentModel.ObservableCollection<T>, which provides support only for WPF data binding.

A Short Primer on Generics

Generic types are a feature introduced in .NET 2.0. A generic type is a template that defines a set of behaviors but the specific data type is specified when the type is used rather than when it is created. Perhaps an example will help.

Consider the ArrayList collection type. It provides powerful list behaviors but it stores all its items as type object. While you can wrap an ArrayList with a strongly typed class or create your own collection type in many different ways, the items in the list are always stored in memory as type object.

The new List<T> collection type has the same behaviors as ArrayList but it is strongly typed—all the way to its core. The type of the indexer, enumerator, Remove(), and other methods are all defined by the generic type parameter, T. Even better, the items in the list are stored in memory as type T, not type object.

So what is T? It is the type provided when the List<T> is created:

List<int> myList = new List<int>();

In this case, T is int, meaning that myList is a strongly typed list of int values. The public properties and methods of myList are all of type int, and the values it contains are stored internally as int values.

Not only do generic types offer type safety due to their strongly typed nature, but they typically offer substantial performance benefits because they avoid storing values as type object.

Strongly Typed Collections of Child Objects

Sadly, the basic functionality provided by even the generic collection classes isn't enough to integrate fully with the rest of the framework. The business framework supports a set of relatively advanced features such as validation and n-level undo capabilities. Supporting these features requires that collections of child objects interact with the parent object and the objects contained in the collection in ways not implemented by the basic collection and list classes provided by .NET.

For example, a collection of child objects needs to be able to indicate if any of the objects it contains have been changed. Although the business object developer could easily write code to loop through the child objects to discover whether any are marked as dirty, it makes a lot more sense to put this functionality into the framework's collection object. That way the feature is simply available for use. The same is true with validity: if any child object is invalid, the collection should be able to report that it's invalid. If all child objects are valid, the collection should report itself as being valid.

As with the business objects themselves, the goal of the business framework is to make the creation of a strongly typed collection as close to normal .NET programming as possible, while allowing the framework to provide extra capabilities common to all business objects. What I'm defining here are two sets of behaviors: one for business objects (parent and/or child) and one for collections of business objects. Though business objects will be the more complex of the two, collection objects will also include some very interesting functionality.

Many Windows applications provide users with an interface that includes OK and Cancel buttons (or some variation on that theme). When the user clicks an OK button, the expectation is that any work the user has done will be saved. Likewise, when the user clicks a Cancel button, he expects that any changes he's made will be reversed or undone.

Simple applications can often deliver this functionality by saving the data to a database when users click OK and discarding the data when they click Cancel. For slightly more complex applications, the application must be able to undo any editing on a single object when the user presses the Esc key. (This is the case for a row of data being edited in a DataGridView: if the user presses Esc, the row of data should restore its original values.)

When applications become much more complex, however, these approaches won't work. Instead of simply undoing the changes to a single row of data in real time, you may need to be able to undo the changes to a row of data at some later stage.

Note

It is important to realize that the n-level undo capability implemented in the framework is optional and is designed to incur no overhead if it is not used.

Consider the case of an Invoice object that contains a collection of LineItem objects. The Invoice itself contains data that the user can edit plus data that's derived from the collection. The TotalAmount property of an Invoice, for instance, is calculated by summing up the individual Amount properties of its LineItem objects. Figure 2-2 illustrates this arrangement.

The UI may allow the user to edit the LineItem objects and then press Enter to accept the changes to the item or Esc to undo them. However, even if the user chooses to accept changes to some LineItem objects, she can still choose to cancel the changes on the Invoice itself. Of course, the only way to reset the Invoice object to its original state is to restore the states of the LineItem objects as well, including any changes to specific LineItem objects that might have been "accepted" earlier.

As if this isn't enough, many applications have more complex hierarchies of objects and subobjects (which I'll call child objects). Perhaps the individual LineItem objects each has a collection of Component objects beneath it. Each Component object represents one of the components sold to the customer that makes up the specific line item, as shown in Figure 2-3.

Now things get even more complicated. If the user edits a Component object, the changes ultimately impact the state of the Invoice object itself. Of course, changing a Component also changes the state of the LineItem object that owns the Component.

The user might accept changes to a Component but cancel the changes to its parent LineItem object, thereby forcing an undo operation to reverse accepted changes to the Component. Or in an even more complex scenario, the user may accept the changes to a Component and its parent LineItem only to cancel the Invoice. This would force an undo operation that reverses all those changes to the child objects.

Implementing an undo mechanism to support such n-level scenarios isn't trivial. The application must implement code to take a snapshot of the state of each object before it's edited so that changes can be reversed later on. The application might even need to take more than one snapshot of an object's state at different points in the editing process so that the object can revert to the appropriate point, based on when the user chooses to accept or cancel any edits.

Note

This multilevel undo capability flows from the user's expectations. Consider a typical word processor, where the user can undo multiple times to restore the content to ever earlier states.

And the collection objects are every bit as complex as the business objects themselves. The application must handle the simple case in which a user edits an existing LineItem, but it must also handle the case in which a user adds a new LineItem and then cancels changes to the parent or grandparent, resulting in the new LineItem being discarded. Equally, it must handle the case in which the user deletes a LineItem and then cancels changes to the parent or grandparent, thereby causing that deleted object to be restored to the collection as though nothing had ever happened.

Things get even more complex if you consider that the framework keeps a list of broken validation rules for each object. If the user changes an object's data so that the object becomes invalid but then cancels the changes, the original state of the object must be restored. The reverse is true as well: an object may start out invalid (perhaps because a required field is blank), so the user must edit data until it becomes valid. If the user later cancels the object (or its parent, grandparent, etc.), the object must become invalid once again because it will be restored to its original invalid state.

Fortunately, this is easily handled by treating the broken rules and validity of each object as part of that object's state. When an undo operation occurs, not only is the object's core state restored but so is the list of broken rules associated with that state. The object and its rules are restored together.

N-level undo is a perfect example of complex code that shouldn't be written into every business object. Instead, this functionality should be written once, so that all business objects support the concept and behave the way we want them to. This functionality will be incorporated directly into the business object framework—but at the same time, the framework must be sensitive to the different environments in which the objects will be used. Although n-level undo is of high importance when building sophisticated Windows user experiences, it's virtually useless in a typical web environment.

In web-based applications, users typically don't have a Cancel button. They either accept the changes or navigate away to another task, allowing the application to simply discard the changed object. In this regard, the web environment is much simpler, so if n-level undo isn't useful to the web UI developer, it shouldn't incur any overhead if it isn't used. The framework design takes into account that some UI types will use the concept while others will simply ignore it.

At this point, I've discussed some of the business object features that the framework will support. One of the key reasons for providing these features is to make the business object support Windows and web-style user experiences with minimal work on the part of the UI developer. In fact, this should be an overarching goal when you're designing business objects for a system. The UI developer should be able to rely on the objects to provide business logic, data, and related services in a consistent manner.

Beyond all the features already covered is the issue of creating new objects, retrieving existing data, and updating objects in some data store. I discuss the process of object persistence later in the chapter, but first this topic should be considered from the UI developer's perspective. Should the UI developer be aware of any application servers? Should he be aware of any database servers? Or should he simply interact with a set of abstract objects? There are three broad models to choose from:

UI-in-charge

Object-in-charge

Class-in-charge

To a greater or lesser degree, all three of these options hide information about how objects are created and saved and allow us to exploit the native capabilities of .NET. In the end, I settle on the option that hides the most information (keeping development as simple as possible) and best allows you to exploit the features of .NET.

Note

Inevitably, the result will be a compromise. As with many architectural decisions, there are good arguments to be made for each option. In your environment, you may find that a different decision would work better. Keep in mind though that this particular decision is fairly central to the overall architecture of the framework, so choosing another option will likely result in dramatic changes throughout the framework.

To make this as clear as possible, the following discussion assumes the use of a physical n-tier configuration, whereby the client or web server is interacting with a separate application server, which in turn interacts with the database. Although not all applications will run in such configurations, it is much easier to discuss object creation, retrieval, and updating in this context.

UI in Charge

One common approach to creating, retrieving, and updating objects is to put the UI in charge of the process. This means that it's the UI developer's responsibility to write code that will contact the application server in order to retrieve or update objects.

In this scheme, when a new object is required, the UI will contact the application server and ask it for a new object. The application server can then instantiate a new object, populate it with default values, and return it to the UI code. The code might be something like this:

Customer result = null;

var factory =

new ChannelFactory<BusinessService.IBusinessService>("BusinessService");

try

{

var proxy = factory.CreateChannel();

using (proxy as IDisposable)

{

result = proxy.CreateNewCustomer();

}

}

finally

{

factory.Close();

}Here the object of type IBusinessService is anchored, so it always runs on the application server. The Customer object is mobile, so although it's created on the server, it's returned to the UI by value.

Note

This code example uses Windows Communication Foundation to contact an application server and have it instantiate an object on the server. In Chapter 15, you'll see how CSLA .NET abstracts this code into a much simpler form, effectively wrapping and hiding the complexity of WCF.

This may seem like a lot of work just to create a new, empty object, but it's the retrieval of default values that makes it necessary. If the application has objects that don't need default values, or if you're willing to hard-code the defaults, you can avoid some of the work by having the UI simply create the object on the client workstation. However, many business applications have configurable default values for objects that must be loaded from the database; and that means the application server must load them.

Retrieving an existing object follows the same basic procedure. The UI passes criteria to the application server, which uses the criteria to create a new object and load it with the appropriate data from the database. The populated object is then returned to the UI for use. The UI code might be something like this:

Customer result = null;

var factory =

new ChannelFactory<BusinessService.IBusinessService>("BusinessService");

try

{

var proxy = factory.CreateChannel();

using (proxy as IDisposable)

{

result = proxy.GetCustomer(criteria);

}

}

finally

{

factory.Close();

}Updating an object happens when the UI calls the application server and passes the object to the server. The server can then take the data from the object and store it in the database. Because the update process may result in changes to the object's state, the newly saved and updated object is then returned to the UI. The UI code might be something like this:

Customer result = null;

var factory =

new ChannelFactory<BusinessService.IBusinessService>("BusinessService");

try

{

var proxy = factory.CreateChannel();

using (proxy as IDisposable)

{

result = proxy.UpdateCustomer(customer);

}

}

finally

{

factory.Close();

}Overall, this model is straightforward—the application server must simply expose a set of services that can be called from the UI to create, retrieve, update, and delete objects. Each object can simply contain its business logic without the object developer having to worry about application servers or other details.

The drawback to this scheme is that the UI code must know about and interact with the application server. If the application server is moved, or some objects come from a different server, the UI code must be changed. Moreover, if a Windows UI is created to use the objects and then later a web UI is created that uses those same objects, you'll end up with duplicated code. Both types of UI will need to include the code in order to find and interact with the application server.

The whole thing is complicated further if you consider that the physical configuration of the application should be flexible. It should be possible to switch from using an application server to running the data access code on the client just by changing a configuration file. If there's code scattered throughout the UI that contacts the server any time an object is used, there will be a lot of places where developers might introduce a bug that prevents simple configuration file switching.

Object in Charge

Another option is to move the knowledge of the application server into the objects themselves. The UI can just interact with the objects, allowing them to load defaults, retrieve data, or update themselves. In this model, simply using the new keyword creates a new object:

Customer cust = new Customer();

Within the object's constructor, you would then write the code to contact the application server and retrieve default values. It might be something like this:

public Customer()

{

var factory =

new ChannelFactory<BusinessService.IBusinessService>("BusinessService");

try

{

var proxy = factory.CreateChannel();

using (proxy as IDisposable)

{

var tmp = proxy.GetNewCustomerDefaults();

_field1 = tmp.Field1Default;

_field2 = tmp.Field2Default;

// load all fields with defaults here

}

}

finally

{

factory.Close();

}

}Notice that the previous code does not take advantage of the built-in support for passing an object by value across the network. In fact, this technique forces the creation of some other class that contains the default values returned from the server.

Given that both the UI-in-charge and class-in-charge techniques avoid all this extra coding, let's just abort the discussion of this option and move on.

Class-in-Charge (Factory Pattern)

The UI-in-charge approach uses .NET's ability to pass objects by value but requires the UI developer to know about and interact with the application server. The object-in-charge approach enables a very simple set of UI code but makes the object code prohibitively complex by making it virtually impossible to pass the objects by value.

The class-in-charge option provides a good compromise by providing reasonably simple UI code that's unaware of application servers while also allowing the use of .NET's ability to pass objects by value, thus reducing the amount of "plumbing" code needed in each object. Hiding more information from the UI helps create a more abstract and loosely coupled implementation, thus providing better flexibility.

Note

The class-in-charge approach is a variation on the Factory design pattern, in which a "factory" method is responsible for creating and managing an object. In many cases, these factory methods are static methods that may be placed directly into a business class—hence the class-in-charge moniker.[1]

In this model, I make use of the concept of static factory methods on a class. A static method can be called directly without requiring an instance of the class to be created first. For instance, suppose that a Customer class contains the following code:

[Serializable()]

public class Customer

{

public static Customer NewCustomer()

{

var factory =

new ChannelFactory<BusinessService.IBusinessService>("BusinessService");

try

{

var proxy = factory.CreateChannel();

using (proxy as IDisposable)

{

return = proxy.CreateNewCustomer ();

}

}

finally

{

factory.Close();

}

}

}The UI code could use this method without first creating a Customer object, as follows:

Customer cust = Customer.NewCustomer();

A common example of this tactic within the .NET Framework itself is the Guid class, whereby a static method is used to create new Guid values, as follows:

Guid myGuid = Guid.NewGuid();

This accomplishes the goal of making the UI code reasonably simple; but what about the static method and passing objects by value? Well, the NewCustomer() method contacts the application server and asks it to create a new Customer object with default values. The object is created on the server and then returned back to the NewCustomer() code, which is running on the client. Now that the object has been passed back to the client by value, the method simply returns it to the UI for use.

Likewise, you can create a static method in the class in order to load an object with data from the data store as shown:

public static Customer GetCustomer(string criteria)

{

var factory =

new ChannelFactory<BusinessService.IBusinessService>("BusinessService");

try

{

var proxy = factory.CreateChannel();

using (proxy as IDisposable)

{

return = proxy.GetCustomer (criteria);

}

}

finally

{

factory.Close();

}

}Again, the code contacts the application server, providing it with the criteria necessary to load the object's data and create a fully populated object. That object is then returned by value to the GetCustomer() method running on the client and then back to the UI code.

As before, the UI code remains simple:

Customer cust = Customer.GetCustomer(myCriteria);

The class-in-charge model requires that you write static factory methods in each class but keeps the UI code simple and straightforward. It also takes full advantage of .NET's ability to pass objects across the network by value, thereby minimizing the plumbing code in each object. Overall, it provides the best solution, which is used (and refined further) in the chapters ahead.

For more than a decade, Microsoft has included some kind of data binding capability in its development tools. Data binding allows developers to create forms and populate them with data with almost no custom code. The controls on a form are "bound" to specific fields from a data source (such as an entity object, a DataSet, or a business object).

Data binding is provided in WPF, Windows Forms, and Web Forms. The primary benefits or drivers for using data binding in .NET development include the following:

Data binding offers good performance, control, and flexibility.

Data binding can be used to link controls to properties of business objects.

Data binding can dramatically reduce the amount of code in the UI.

Data binding is sometimes faster than manual coding, especially when loading data into list boxes, grids, or other complex controls.

Of these, the biggest single benefit is the dramatic reduction in the amount of UI code that must be written and maintained. Combined with the performance, control, and flexibility of .NET data binding, the reduction in code makes it a very attractive technology for UI development.

In WPF, Windows Forms, and Web Forms, data binding is read-write, meaning that an element of a data source can be bound to an editable control so that changes to the value in the control will be updated back into the data source as well.

Data binding in .NET is very powerful. It offers good performance with a high degree of control for the developer. Given the coding savings gained by using data binding, it's definitely a technology that needs to be supported in the business object framework.

Enabling the Objects for Data Binding

Although data binding can be used to bind against any object or any collection of homogeneous objects, there are some things that object developers can do to make data binding work better. Implementing these "extra" features enables data binding to do more work for you and provide a superior experience. The .NET DataSet object, for instance, implements these extra features in order to provide full data binding support to WPF, Windows Forms, and Web Forms developers.

The IEditableObject Interface

All editable business objects should implement the interface called System.ComponentModel.IEditableObject. This interface is designed to support a simple, one-level undo capability and is used by simple forms-based data binding and complex grid-based data binding alike.

In the forms-based model, IEditableObject allows the data binding infrastructure to notify the business object before the user edits it so that the object can take a snapshot of its values. Later, the application can tell the object whether to apply or cancel those changes based on the user's actions. In the grid-based model, each of the objects is displayed in a row within the grid. In this case, the interface allows the data binding infrastructure to notify the object when its row is being edited and then whether to accept or undo the changes based on the user's actions. Typically, grids perform an undo operation if the user presses the Esc key, and an accept operation if the user presses Enter or moves off that row in the grid by any other means.

The INotifyPropertyChanged Interface

Editable business objects need to raise events to notify data binding any time their data values change. Changes that are caused directly by the user editing a field in a bound control are supported automatically—however, if the object updates a property value through code, rather than by direct user editing, the object needs to notify the data binding infrastructure that a refresh of the display is required.

The .NET Framework defines System.ComponentModel.INotifyPropertyChanged, which should be implemented by any bindable object. This interface defines the PropertyChanged event that data binding can handle to detect changes to data in the object.

The INotifyPropertyChanging Interface

In .NET 3.5, Microsoft introduced the System.ComponentModel.INotifyPropertyChanging interface so business objects can indicate when a property is about to be changed. Strictly speaking, this interface is optional and isn't (currently) used by data binding. For completeness, however, it is recommended that this interface be used when implementing INotifyPropertyChanged.

The INotifyPropertyChanging interface defines the PropertyChanging event that is raised before a property value is changed, as a complement to the PropertyChanged event that is raised after a property value has changed.

The IBindingList Interface

All business collections should implement the interface called System.ComponentModel.IBindingList. The simplest way to do this is to have the collection classes inherit from System.ComponentModel.BindingList<T>. This generic class implements all the collection interfaces required to support data binding:

IBindingListIListICollectionIEnumerableICancelAddNewIRaiseItemChangedEvents

As you can see, being able to inherit from BindingList<T> is very valuable. Otherwise, the business framework would need to manually implement all these interfaces.

This interface is used in grid-based binding, in which it allows the control that's displaying the contents of the collection to be notified by the collection any time an item is added, removed, or edited so that the display can be updated. Without this interface, there's no way for the data binding infrastructure to notify the grid that the underlying data has changed, so the user won't see changes as they happen.

Along this line, when a child object within a collection changes, the collection should notify the UI of the change. This implies that every collection object will listen for events from its child objects (via INotifyPropertyChanged) and in response to such an event will raise its own event indicating that the collection has changed.

The INotifyCollectionChanged Interface

In .NET 3.0, Microsoft introduced a new option for building lists for data binding. This new option only works with WPF and Silverlight and is not supported by Windows Forms or Web Forms. The System.ComponentModel.INotifyCollectionChanged interface defines a CollectionChanged event that is raised by any list implementing the interface. The simplest way to do this is to have the collection classes inherit from System.ComponentModel.ObservableCollection<T>. This generic class implements the interface and related behaviors.

When implementing a list or a collection you must choose to use either IBindingList or INotifyCollectionChanged. If you implement both, data binding in WPF will become confused, as it honors both interfaces and will always get duplicate events for any change to the list.

You should only choose to implement INotifyCollectionChanged or use ObservableCollection<T> if you are absolutely certain your application will only need to support WPF or Silverlight and never Windows Forms.

Because CSLA .NET supports Windows Forms and Web Forms along with WPF, the list and collection types defined in the framework implement IBindingList by subclassing BindingList<T>.

Events and Serialization

The events that are raised by business collections and business objects are all valuable. Events support the data binding infrastructure and enable utilization of its full potential. Unfortunately, there's a conflict between the idea of objects raising events and the use of .NET serialization via the Serializable attribute.

When an object is marked as Serializable, the .NET Framework is told that it can pass the object across the network by value. As part of this process, the object will be automatically converted into a byte stream by the .NET runtime. It also means that any other objects referenced by the object will be serialized into the same byte stream, unless the field representing it is marked with the NonSerialized attribute. What may not be immediately obvious is that events create an object reference behind the scenes.

When an object declares and raises an event, that event is delivered to any object that has a handler for the event. WPF forms and Windows Forms often handle events from objects, as illustrated in Figure 2-4.

How does the event get delivered to the handling object? It turns out that behind every event is a delegate—a strongly typed reference that points back to the handling object. This means that any object that raises events can end up with bidirectional references between the object and the other object/entity that is handling those events, as shown in Figure 2-5.

Even though this back reference isn't visible to developers, it's completely visible to the .NET serialization infrastructure. When serializing an object, the serialization mechanism will trace this reference and attempt to serialize any objects (including forms) that are handling the events. Obviously, this is rarely desirable. In fact, if the handling object is a form, this will fail outright with a runtime error because forms aren't serializable.

Note

If any nonserializable object handles events that are raised by a serializable object, you'll be unable to serialize the object because the .NET runtime serialization process will error out.

Solving this means marking the events as NonSerialized. It turns out that this requires a bit of special syntax when dealing with events. Specifically, a more explicit block structure must be used to declare the event. This approach allows manual declaration of the delegate field so it is possible to mark that field as NonSerialized. The BindingList<T> class already declares its event in this manner, so this issue only pertains to the implementation of INotifyPropertyChanged and INotifyPropertyChanging (or any custom events you choose to declare in your business classes).

The IDataErrorInfo Interface

Earlier I discussed the need for objects to implement business rules and expose information about broken rules to the UI. The System.ComponentModel.IDataErrorInfo interface is designed to allow data binding to request information about broken validation rules from a data source.

We will already have the tools needed to easily implement IDataErrorInfo, given that the object framework already helps the objects manage a list of all currently broken validation rules. This interface defines two methods. The first allows data binding to request a text description of errors at the object level, while the second provides a text description of errors at the property level.

By implementing this interface, the objects will automatically support the feedback mechanisms built into the Windows Forms DataGridView and ErrorProvider controls.

One of the biggest challenges facing a business developer building an object-oriented system is that a good object model is almost never the same as a good relational data model. Because most data is stored in relational databases using a relational model, we're faced with the significant problem of translating that data into an object model for processing and then changing it back to a relational model later on to persist the data from the objects back into the data store.

Note

The framework in this book doesn't require a relational model, but since that is the most common data storage technology, I focus on it quite a bit. You should remember that the concepts and code shown in this chapter can be used against XML files, object databases, or almost any other data store you are likely to use.

Relational vs. Object Modeling

Before going any further, let's make sure we're in agreement that object models aren't the same as relational models. Relational models are primarily concerned with the efficient storage of data, so that replication is minimized. Relational modeling is governed by the rules of normalization, and almost all databases are designed to meet at least the third normal form. In this form, it's quite likely that the data for any given business concept or entity is split between multiple tables in the database in order to avoid any duplication of data.

Object models, on the other hand, are primarily concerned with modeling behavior, not data. It's not the data that defines the object but the role the object plays within your business domain. Every object should have one clear responsibility and a limited number of behaviors focused on fulfilling that responsibility.

Tip

I recommend the book Object Thinking by David West (DV-Microsoft Professional, 2004) for some good insight into behavioral object modeling and design. Though my ideas differ somewhat from those in Object Thinking, I use many of the concepts and language from that book in my own object-oriented design work and in this book.

For instance, a CustomerEdit object may be responsible for adding and editing customer data. A CustomerInfo object in the same application may be responsible for providing read-only access to customer data. Both objects will use the same data from the same database and table, but they provide different behaviors.

Similarly, an InvoiceEdit object may be responsible for adding and editing invoice data. But invoices include some customer data. A naïve solution is to have the InvoiceEdit object make use of the aforementioned CustomerEdit object. That CustomerEdit object should only be used in the case where the application is adding or editing customer data—something that isn't occurring while working with invoices. Instead, the InvoiceEdit object should directly interact with the customer data it needs to do its job.

Through these two examples, it should be clear that sometimes multiple objects will use the same relational data. In other cases, a single object will use relational data from different data entities. In the end, the same customer data is being used by three different objects. The point, though, is that each one of these objects has a clearly defined responsibility that defines the object's behavior. Data is merely a resource the object needs to implement that behavior.

Behavioral Object-Oriented Design

It is a common trap to think that data in objects needs to be normalized like it is in a database. A better way to think about objects is to say that behavior should be normalized. The goal of object-oriented design is to avoid replication of behavior, not data.

At this point, most people are struggling. Most developers have spent years programming their brains to think relationally, and this view of object-oriented design flies directly in the face of that conditioning. Yet the key to the successful application of object-oriented design is to divorce object thinking from relational or data thinking.

Perhaps the most common objection at this point is this: if two objects (e.g., CustomerEdit and InvoiceEdit) both use the same data (e.g., the customer's name), how do you make sure that consistent business rules are applied to that data? And this is a good question.

The answer is that the behavior must be normalized. Business rules are merely a form of behavior. The business rule specifying that the customer name value is required, for instance, is just a behavior associated with that particular value.

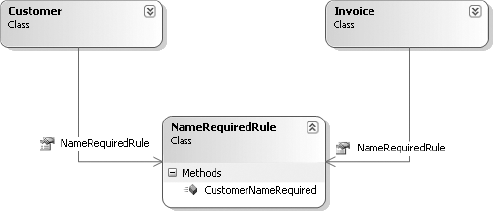

Earlier in the chapter I discuss the idea that a validation rule can be reduced to a method defined by a delegate. A delegate is just an object that points to a method, so it is quite possible to view the delegate itself as the rule. Following this train of thought, every rule then becomes an object.

Behavioral object-oriented design relies heavily on the concept of collaboration. Collaboration is the idea that an object should collaborate with other objects to do its work. If an object starts to become complex, you can break the problem into smaller, more digestible parts by moving some of the sub-behaviors into other objects that collaborate with the original object to accomplish the overall goal.

In the case of a required customer name value, there's a Rule object that defines that behavior. Both the CustomerEdit and InvoiceEdit objects can collaborate with that Rule object to ensure that the rule is consistently applied. As you can see in Figure 2-6, the actual rule is only implemented once but is used as appropriate—effectively normalizing that behavior.

It could be argued that the CustomerName concept should become an object of its own and that this object would implement the behaviors common to the field. While this sounds good in an idealistic sense, it has serious performance and complexity drawbacks when implemented on development platforms such as .NET. Creating a custom object for every field in your application can rapidly become overwhelming, and such an approach makes the use of technologies such as data binding very complex.

My approach of normalizing the rules themselves provides a workable compromise: providing a high level of code reuse while still offering good performance and allowing the application to take advantage of all the features of the .NET platform.

In fact, the idea that a string value is required is so pervasive that it can be normalized to a general StringRequired rule that can be used by any object with a required property anywhere in an application. In Chapter 11, I implement a CommonRules class containing several common validation rules of this nature.

Object-Relational Mapping

If object models aren't the same as relational models (or some other data models that we might be using), some mechanism is needed by which data can be translated from the Data Storage and Management layer up into the object-oriented Business layer.

Note

This mismatch between object models and relational models is a well-known issue within the object-oriented community. It is commonly referred to as the impedance mismatch problem, and one of the best discussions of it can be found in David Taylor's book, Object-Oriented Technology: A Manager's Guide (Addison-Wesley, 1991).

Several object-relational mapping (ORM) products from various vendors, including Microsoft, exist for the .NET platform. In truth, however, most ORM tools have difficulty working against object models defined using behavioral object-oriented design. Unfortunately, most of the ORM tools tend to create "superpowered" DataSet equivalents, rather than true behavioral business objects. In other words, they create a data-centric representation of the business data and wrap it with business logic.

The differences between such a data-centric object model and what I am proposing in this book are subtle but important. Responsibility-driven object modeling creates objects that are focused on the object's behavior, not on the data it contains. The fact that objects contain data is merely a side effect of implementing behavior; the data is not the identity of the object. Most ORM tools, by contrast, create objects based around the data, with the behavior being a side effect of the data in the object.

Beyond the philosophical differences, the wide variety of mappings that you might need and the potential for business logic driving variations in the mapping from object to object make it virtually impossible to create a generic ORM product that can meet everyone's needs.

Consider the CustomerEdit object example discussed earlier. While the customer data may come from one database, it is totally realistic to consider that some data may come from SQL Server while other data comes through screen scraping a mainframe screen. It's also quite possible that the business logic will dictate that some of the data is updated in some cases but not in others. Issues such as these are virtually impossible to solve in a generic sense, and so solutions almost always revolve around custom code. The most a typical ORM tool can do is provide support for simple cases, in which objects are updated to and from standard, supported, relational data stores. At most, they provide hooks by which you can customize their behavior. Rather than trying to build a generic ORM product as part of this book, I'll aim for a much more attainable goal.

The framework in this book defines a standard set of four methods for creating, retrieving, updating, and deleting objects. Business developers will implement these four methods to work with the underlying data management tier by using the ADO.NET Entity Framework, LINQ to SQL, raw ADO.NET, the XML support in .NET, XML services, or any other technology required to accomplish the task. In fact, if you have an ORM (or some other generic data access) product, you'll often be able to invoke that tool from these four methods just as easily as using ADO.NET directly.

Note

The approach taken in this book and the associated framework is very conducive to code generation. Many people use code generators to automate the process of building common data access logic for their objects, thus achieving high levels of productivity while retaining the ability to create a behavioral object-oriented model.

The point is that the framework will simplify object persistence so that all developers need to do is implement these four methods in order to retrieve or update data. This places no restrictions on the object's ability to work with data and provides a standardized persistence and mapping mechanism for all objects.

Preserving Encapsulation

As I noted at the beginning of the chapter, one of my key goals is to design this framework to provide powerful features while following the key object-oriented concepts, including encapsulation. Encapsulation is the idea that all of the logic and data pertaining to a given business entity is held within the object that represents that entity. Of course, there are various ways in which one can interpret the idea of encapsulation—nothing is ever simple.

One approach is to encapsulate business data and logic in the business object and then encapsulate data access and ORM behavior in some other object: a persistence object. This provides a nice separation between the business logic and data access and encapsulates both types of behavior, as shown in Figure 2-7.

Although there are certainly some advantages to this approach, there are drawbacks, too. The most notable of these is that it can be challenging to efficiently get the data from the persistence object into or out of the business object. For the persistence object to load data into the business object, it must be able to bypass business and validation processing in the business object and somehow load raw data into it directly. If the persistence object tries to load data into the object using the object's public properties, you'll run into a series of issues:

The data already in the database is presumed valid, so a lot of processing time is wasted unnecessarily revalidating data. This can lead to a serious performance problem when loading a large group of objects.

There's no way to load read-only property values. Objects often have read-only properties for things such as the primary key of the data, and such data obviously must be loaded into the object, but it can't be loaded via the normal interface (if that interface is properly designed).

Sometimes properties are interdependent due to business rules, which means that some properties must be loaded before others or errors will result. The persistence object would need to know about all these conditions so that it could load the right properties first. The result is that the persistence object would become very complex, and changes to the business object could easily break the persistence object.

On the other hand, having the persistence object load raw data into the business object breaks encapsulation in a big way because one object ends up directly tampering with the internal fields of another. This could be implemented using reflection or by designing the business object to expose its private fields for manipulation. But the former is slow and the latter is just plain bad object design: it allows the UI developer (or any other code) to manipulate these fields, too. That's just asking for the abuse of the objects, which will invariably lead to code that's impossible to maintain.

What's needed is a workable compromise, where the actual data access code is in one object while the code to load the business object's fields is in the business object itself. This can be accomplished by creating a separate data access layer (DAL) assembly that is invoked by the business object. The DAL defines an interface the business object can use to retrieve and store information, as shown in Figure 2-8.

This is a nice compromise because it allows the business object to completely manage its own fields and yet keeps the code that communicates with the database cleanly separated into its own location.

There are several ways to implement such a DAL, including the use of raw ADO.NET and the use of LINQ to SQL. The raw ADO.NET approach has the benefit of providing optimal performance. In this case the DAL simply returns a DataReader to the business object and the object can load its fields directly from the data stream.

Creating the DAL using LINQ to SQL or the ADO.NET Entity Framework provides a higher level of abstraction and simplifies the DAL code. However, this approach is slower, because the data must pass through a set of data transfer objects (DTOs) or entity objects as it flows to and from the business object.

Note

In many CSLA .NET applications, the data access code is directly embedded within the business object. While this arguably blurs the boundary between layers, it often provides the best performance and the simplest code.

The examples in this book use a formal data access layer created using LINQ to SQL, but the same architecture supports creation of a DAL that uses raw ADO.NET or many other data access technologies.

Supporting Physical N-Tier Models

The question that remains then is how to support physical n-tier models if the UI-oriented and data-oriented behaviors reside in one object.

UI-oriented behaviors almost always involve a lot of properties and methods, with a very fine-grained interface with which the UI can interact in order to set, retrieve, and manipulate the values of an object. Almost by definition, this type of object must run in the same process as the UI code itself, either on the Windows client machine with WPF or Windows Forms or on the web server with Web Forms.

Conversely, data-oriented behaviors typically involve very few methods: create, fetch, update, delete. They must run on a machine where they can establish a physical connection to the data store. Sometimes this is the client workstation or web server, but often it means running on a physically separate application server.

This point of apparent conflict is where the concept of mobile objects enters the picture. It's possible to pass a business object from an application server to the client machine, work with the object, and then pass the object back to the application server so that it can store its data in the database. To do this, there needs to be some black-box component running as a service on the application server with which the client can interact. This black-box component does little more than accept the object from the client and then call methods on the object to retrieve or update data as required. But the object itself does all the real work. Figure 2-9 illustrates this concept, showing how the same physical business object can be passed from application server to client, and vice versa, via a generic router object that's running on the application server.

In Chapter 1, I discussed anchored and mobile objects. In this model, the business object is mobile, meaning that it can be passed around the network by value. The router object is anchored, meaning that it will always run on the machine where it's created.

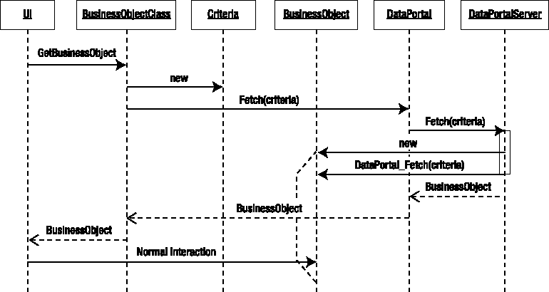

In the framework, I'll refer to this router object as a data portal. It will act as a portal for all data access for all the objects. The objects will interact with this portal in order to retrieve default values (create), fetch data (read), update or insert data (update), and remove data (delete). This means that the data portal will provide a standardized mechanism by which objects can perform all create, read, update, delete (CRUD) operations.

The end result will be that each business class will include a factory method that the UI can call in order to load an object based on data from the database, as follows:

public static Customer GetCustomer(string customerId)

{

return DataPortal.Fetch<Customer>(

new SingleCriteria<Customer, string>(customerId));

}Notice how the data portal concept abstracts the use of WCF, and so this code is far simpler than the WCF code used earlier in the chapter.

The actual data access code will be contained within each of the business objects. The data portal will simply provide an anchored object on a machine with access to the database server and will invoke the appropriate CRUD methods on the business objects themselves. This means that the business object will also implement a method that will be called by the data portal to actually load the data. That method will look something like this:

private void DataPortal_Fetch(SingleCriteria<Customer, string> criteria)

{

// Code to load the object's fields with data goes here

}The UI won't know (or need to know) how any of this works, so in order to create a Customer object, the UI will simply write code along these lines:

var cust = Customer.GetCustomer("ABC");The framework, and specifically the data portal, will take care of all the rest of the work, including figuring out whether the data access code should run on the client workstation or on an application server.

Using the data portal means that all the logic remains encapsulated within the business objects, while physical n-tier configurations are easily supported. Better still, by implementing the data portal correctly, you can switch between having the data access code running on the client machine and placing it on a separate application server just by changing a configuration file setting. The ability to change between 2- and 3-tier physical configurations with no changes to code is a powerful and valuable feature.

Application security is often a challenging issue. Applications need to be able to authenticate the user, which means that they need to verify the user's identity. The result of authentication is not only that the application knows the identity of the user but that the application has access to the user's role membership and possibly other information about the user. Collectively, I refer to this as the user's profile data. This profile data can be used by the application for various purposes, most notably authorization.

CSLA .NET directly supports integrated security. This means that you can use objects within the framework to determine the user's Windows identity and any domain or Active Directory (AD) groups to which they belong. In some organizations, this is enough; all the users of the organization's applications are in AD, and by having users log in to a workstation or a website using integrated security, the applications can determine the user's identity and roles (groups).

In other organizations, applications are used by at least some users who are not part of the organization's NT domain or AD. They may not even be members of the organization in question. This is very often the case with web and mobile applications, but it's surprisingly common with Windows applications as well. In these cases, you can't rely on Windows integrated security for authentication and authorization.

To complicate matters further, the ideal security model would provide user profile and role information not only to server-side code but also to the code on the client. Rather than allowing the user to attempt to perform operations that will generate errors due to security at some later time, the UI should gray out the options, or not display them at all. This requires that the developer has consistent access to the user's identity and profile at all layers of the application, including the UI, Business, and Data Access layers.

Remember that the layers of an application may be deployed across multiple physical tiers. Due to this fact, there must be a way of transferring the user's identity information across tier boundaries. This is often called impersonation.

Implementing impersonation isn't too hard when using Windows integrated security, but it's often problematic when relying on roles that are managed in a custom SQL Server database, an LDAP store, or any other location outside of AD.

The CSLA .NET framework will provide support for both Windows integrated security and custom authentication, in which you define how the user's credentials are validated and the user's profile data and roles are loaded. This custom security is a model that you can adapt to use any existing security tables or services that already exist in your organization. The framework will rely on Windows to handle impersonation when using Windows integrated or AD security and will handle impersonation itself when using custom authentication.

So far, I have focused on the major goals for the framework. Having covered the guiding principles, let's move on to discuss the design of the framework so it can meet these goals. In the rest of this chapter, I walk through the various classes that will combine to create the framework. After covering the design, in Chapters 6 through 16 I dive into the implementation of the framework code.

A comprehensive framework can be a large and complex entity. There are usually many classes that go into the construction of a framework, even though the end users of the framework—the business developers—only use a few of those classes directly. The framework discussed here and implemented in Chapters 6 through 16 accomplishes the goals I've just discussed, along with enabling the basic creation of object-oriented n-tier business applications. For any given application or organization, this framework will likely be modified and enhanced to meet specific requirements. This means that the framework will grow as you use and adapt it to your environment.

The CSLA .NET framework contains a lot of classes and types, which can be overwhelming if taken as a whole. Fortunately, it can be broken down into smaller units of functionality to better understand how each part works. Specifically, the framework can be divided into the following functional groups:

Business object creation

N-level undo functionality

Data binding support

Validation and business rules

A data portal enabling various physical configurations

Transactional and nontransactional data access

Authentication and authorization

Helper types and classes

For each functional group, I'll focus on a subset of the overall class diagram, breaking it down into more digestible pieces.

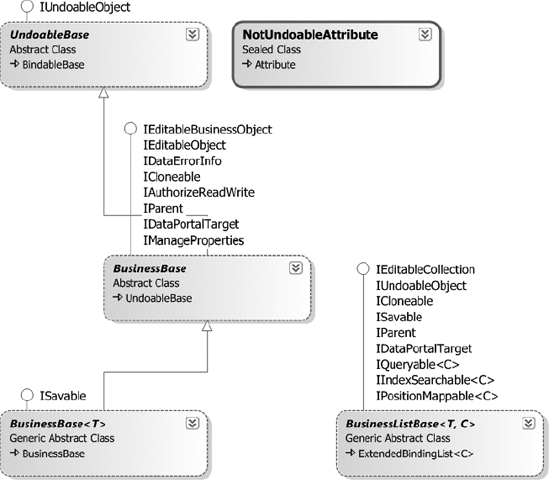

First, it's important to recognize that the key classes in the framework are those that business developers will use as they create business objects but that these are a small subset of what's available. In fact, many of the framework classes are never used directly by business developers. Figure 2-10 shows only those classes the business developer will typically use.

Obviously, the business developer may periodically interact with other classes as well, but these are the ones that will be at the center of most activity. Classes or methods that the business developer shouldn't have access to will be scoped to prevent accidental use.

Table 2-1 summarizes each class and its intended purpose.

Table 2.1. Business Framework Base Classes

Class | Purpose |

|---|---|

| Inherit from this class to create a single editable business object such as |

| Inherit from this class to create an editable collection of business objects such as |

| Inherit from this class to implement a collection of business objects, where changes to each object are committed automatically as the user moves from object to object (typically in a data bound grid control). |

| Inherit from this class to implement a command that should run on the application server, such as implementation of a |

| Inherit from this class to create a single read-only business object such as |

| Inherit from this class to create a read-only collection of objects such as |

| Inherit from this class to create a read-only collection of key/value pairs (typically for populating drop-down list controls) such as |

These base classes support a set of object stereotypes. A stereotype is a broad grouping of objects with similar behaviors or roles. The supported stereotypes are listed in Table 2-2.

Table 2.2. Supported Object Stereotypes

Let's discuss each stereotype in a bit more detail.

Editable Root

The BusinessBase class is the base from which all editable (read-write) business objects will be created. In other words, to create a business object, inherit from BusinessBase, as shown here:

[Serializable]

public class CustomerEdit : BusinessBase<CustomerEdit>

{

}When creating a subclass, the business developer must provide the specific type of new business object as a type parameter to BusinessBase<T>. This allows the generic BusinessBase type to expose strongly typed methods corresponding to the specific business object type.

Behind the scenes, BusinessBase<T> inherits from Csla.Core.BusinessBase, which implements the majority of the framework functionality to support editable objects. The primary reason for pulling the functionality out of the generic class into a normal class is to enable polymorphism. Polymorphism is what allows you to treat all subclasses of a type as though they were an instance of the base class. For example, all Windows Forms—Form1, Form2, and so forth—can be treated as type Form. You can write code like this:

Form form = new Form2(); form.Show();

This is polymorphic behavior, in which the variable form is of type Form but references an object of type Form2. The same code would work with Form1 because both inherit from the base type Form.

It turns out that generic types are not polymorphic like normal types.

Another reason for inheriting from a non-generic base class is to make it simpler to customize the framework. If needed, you can create alternative editable base classes starting with the functionality in Core.BusinessBase.

Csla.Core.BusinessBase and the classes from which it inherits provide all the functionality discussed earlier in this chapter, including n-level undo, tracking of broken rules, "dirty" tracking, object persistence, and so forth. It supports the creation of root objects (top-level) and child objects. Root objects are objects that can be retrieved directly from and updated or deleted within the database. Child objects can only be retrieved or updated in the context of their parent object.

Note

Throughout this book, it is assumed that you are building business applications, in which case almost all objects are ultimately stored in the database at one time or another. Even if an object isn't persisted to a database, you can still use BusinessBase to gain access to the n-level undo and business, validation, and authorization rules and change tracking features built into the framework.

For example, an InvoiceEdit is typically a root object, though the LineItem objects contained by an InvoiceEdit object are child objects. It makes perfect sense to retrieve or update an InvoiceEdit, but it makes no sense to create, retrieve, or update a LineItem without having an associated InvoiceEdit.

The BusinessBase class provides default implementations of the data access methods that exist on all root business objects.

Note

The default implementations are a holdover from a very early version of the framework. They still exist to preserve backward compatibility to support users who have been using CSLA .NET for many years and over many versions.

These methods will be called by the data portal mechanism. These default implementations all raise an error if they're called. The intention is that the business objects can opt to override these methods if they need to support, create, fetch, insert, update, or delete operations. The names of these methods are as follows:

DataPortal_Create()DataPortal_Fetch()DataPortal_Insert()DataPortal_Update()DataPortal_DeleteSelf()DataPortal_Delete()

Though virtual implementations of these methods are in the base class, developers will typically implement strongly typed versions of DataPortal_Create(), DataPortal_Fetch(), and DataPortal_Delete(), as they all accept a criteria object as a parameter. The virtual methods declare this parameter as type object, of course; but a business object will typically want to use the actual data type of the criteria object itself. This is discussed in more detail in Chapters 15 and 18.

The data portal also supports three other (optional) methods for pre- and post-processing and exception handling. The names of these methods are as follows:

DataPortal_OnDataPortalInvoke()DataPortal_OnDataPortalInvokeComplete()DataPortal_OnDataPortalException()

Editable root objects are very common in most business applications.

Editable Child

Editable child objects are always contained within another object and they cannot be directly retrieved or stored in the database. Ultimately there's always a single editable root object that is retrieved or stored.