Optimum System Safety and Optimum System Resilience: Agonistic or Antagonistic Concepts?

Introduction: Why are Human Activities Sometimes Unsafe?

It is a simple fact of life that the variety of human activities at work corresponds to a wide range of safety levels.

For example, considering only the medical domain, we may observe on the one hand that audacious grafts carry a risk of fatal adverse events (due to unsecured innovative protocols, infection, or graft rejection) greater than one in ten cases (10⁻¹) and that surgery has an average risk of adverse events close to one in a thousand (10⁻³), while on the other hand fatal adverse events in blood transfusion, or in the anaesthesia of young pregnant women during delivery, are well below one per million cases (see Amalberti, 2001, for a detailed review). Note that, strictly speaking, we are not referring here to differences due to the inherent severity of illnesses, but merely to differences due to the rate of errors and adverse events.

Another example is flying. It is usually said that aviation is an ‘ultra safe’ system. However, a thorough analysis of statistics shows that only commercial fixed-wing scheduled flights are ultra safe (meaning that the risk is 10⁻⁶ or lower). Chartered flights are about one order of magnitude below (10⁻⁵), helicopters, scheduled flights and business aviation are about two orders of magnitude below (10⁻⁴), and some aeronautical leisure activities are at least three orders of magnitude below (10⁻³), cf. Figures 16.1 and 16.2.
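As a worked illustration of what these orders of magnitude mean, the ratios below compare each risk level with the ultra-safe benchmark of one in a million. This assumes that all figures share the same exposure unit (for example, fatal accidents per flight), which the text does not specify, and treats each order of magnitude as exact:

```latex
\begin{aligned}
\frac{10^{-5}}{10^{-6}} &= 10 && \text{(one order of magnitude: chartered flights)} \\
\frac{10^{-4}}{10^{-6}} &= 10^{2} = 100 && \text{(two orders of magnitude)} \\
\frac{10^{-3}}{10^{-6}} &= 10^{3} = 1000 && \text{(three orders of magnitude: some leisure activities)}
\end{aligned}
```

Under that assumption, a million leisure flights would be expected to produce on the order of a thousand fatal accidents or more, against about one for a million scheduled commercial fixed-wing flights.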

Figure 16.1: Raw number of accidents in 2004 in France. The general aviation accident rate is about two orders of magnitude higher than that of commercial aviation

In both cases, neither a lack of safety solutions nor a lack of opportunity to learn about their use can explain the differences. Most safety tools are well known to stakeholders, available ‘off the shelf’, and implemented in daily activities. These tools comprise safety audits and evaluation techniques, a combination of mandatory and voluntary reporting systems, enforced recommendations, protocols and rules, and significant changes in the governance of systems and in corporate safety cultures.

This slightly bizarre situation raises the central question debated in this chapter. If safety tools are in practice available to everyone, then the differences in safety levels among industrial activities must be due to a precisely balanced approach aimed at obtaining a maximum resilience effect, rather than to the ignorance of end users.

One element in support of that interpretation is that the relative ranking of safety across human activities at work has remained remarkably stable for decades. Safety is improving only slowly, and in parallel, for most of these activities; available figures show an improvement of one order of magnitude over the last two decades for most human activities.
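To give a feel for how slow this improvement is, one order of magnitude over two decades can be converted into an equivalent annual rate. This is only an illustration, assuming a constant yearly reduction factor r, which the source does not claim:

```latex
\begin{aligned}
r^{20} &= 10^{-1} \\
r &= 10^{-1/20} \approx 0.891 \\
1 - r &\approx 0.109 \quad \text{(roughly an 11\% reduction in risk per year)}
\end{aligned}
```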

Figure 16.2: Accident rate in several types of aviation

Only a small set of human activities have seen their safety improve faster than average, and when considering these activities (e.g., medicine), the reason is usually a severe threat to the economy and the resilience of the system, rather than a perception that safety as such was not good enough.

For example, the impressive effort made in the US on patient safety since 1998 was driven by the growing medical insurance crisis, not by any spontaneous willingness of the medical community to improve safety (Kohn et al., 1999). Consistent with this, once a new level of resilience has been reached, the system re-stabilises the balance and does not continue to improve. This levelling off can be seen in medicine. After an initial burst of enthusiasm and an impressive series of actions that led to a number of objective improvements, the second stage of the ‘safety rocket’ is failing because very few medical managers want to continue. It is as if a new level of resilience had been reached, with a new set of rules and a new economic balance, and therefore no need to go further (Amalberti et al., 2005; Amalberti & Hourlier, 2005).

To sum up, this chapter suggests two complementary interpretations:

• Existence of various types of resilience. There are various types of resilience, corresponding to classes of human activities. Resilience should be understood as a stable property of a given professional system, i.e., the ability to do good business in the present and near future, with the capacity to survive an occasional crisis without changing the main characteristics of the business. Of course, the level of safety achieved may be extremely different from one type of resilience to another: frequent and dreadful accidents may be tolerated and even disregarded by the rest of the profession (ultralight aircraft spraying biological agents against locusts, or mining accidents in third-world countries), whereas a no-accident situation is the only hope for others (nuclear industry). The following section proposes a tentative classification of the types of resilience.

• Incentives to change. Jumping from one stage of resilience to another, and adopting a more demanding safety logic, is not a natural and spontaneous phenomenon. A professional system changes its level of resilience only when it can no longer do effective business at its present level of resilience. It is hypothesised in this chapter that there are two major types of incentive to change. One is external and stems from the emergence of an economic and/or political crisis. The crisis can follow a ‘big accident’, a sudden media revelation of a severe threat or a scandal (blood transfusion in France, the unsafe electricity system in the northern US, medical insurance in other Western countries), or a political threat (patient safety in the UK and the re-election of Tony Blair). The other is internal and relates to the age of the system. Indeed, systems have a life cycle similar to that of humans. As they age, systems ‘freeze’, not necessarily at the highest level of safety, but with the feeling that no more innovation or resources can be expected, and that a new paradigm is required to make the business effective in the future. The following sections detail these two incentives to change, external and internal.

Examples: Concorde and the Fireworks Industry

For example, when the Concorde aeroplane was designed in the late 1960s, it complied fully with the regulations existing at the time. Since no retrofit was possible later in the aircraft’s life cycle (because so few aeroplanes were built in the series and retrofits would have been unaffordable), Concorde progressively fell out of compliance as civil aviation regulations improved. By the end of its lifetime, in the late 1990s, the aircraft was unable to comply with many of the regulations. For example, adhering to the new rule of flying at a maximum of 250 kts below flight level 100 was not possible (there would not have been enough fuel to cross the Atlantic). It was also unable to carry passengers’ heavy luggage (choices had to be made between removing some fuel, some seats, or some luggage), a limitation in total contradiction with the increasing use of Concorde for prestige charter flights. In both cases, solutions were found to resist and adapt to the growing contradictions, by redesigning the operating instructions so that they stayed on the borderline of regulations while remaining compatible with the desired outcome. To sum up, for Concorde flight operations to continue, adopting all of the new civil aviation safety standards without compromise would have meant grounding the aeroplane. The resilience of Concorde, playing with the boundaries of legality, was so good that Concorde survived for three decades under borderline tolerated conditions of use (BTCUs) before being definitively grounded (see Polet et al., 2003, for a theory of BTCUs). And even after the Paris crash on 25 July 2000, which killed 113 people, Concorde continued to fly for two more years despite evidence that it should have been definitively grounded.
Again, this strange result can be explained by the superb resilience of Concorde operations at British Airways and Air France (of course, in this case resilience does not apply to the Concorde that crashed, but to the survival of operations as a whole). Concorde was more than an aeroplane; it was a European flag. As such, it was considered that the priority of resilience should be placed on the continuation of operations around the world, not on ultimate safety.

The story of Concorde is just one example among many in industry. For another, consider the fireworks industry. The average safety level of this type of industry remains two orders of magnitude below that of advanced chemical industries. However, a careful look at its logic shows that it deals with extremely unstable conditions of work. The job relies on a difficult, craft-based and innovative savoir-faire for assembling a mix of chemical products and powders. Innovation is required to remain competitive, and products (made for recreational use only) must be cheap enough to be affordable to individuals and municipal corporations. All of these conditions make the fireworks industry a niche of small companies with low salaries. As a result, a common resilience strategy for ensuring survival is to adapt to the risk first, save money, and refuse drastic safety solutions. One modality of adaptation was illustrated by the national inquiry into the chemical industry in France conducted after the AZF explosion in Toulouse in September 2001. The report showed how firework factories leave big cities in favour of small, isolated villages where a significant number of inhabitants tolerate the risk because they work in the firework factory. In that case, resilience (i.e., industry survival) has reached a safety deadlock: accepting improved safety would kill the industry. That is exactly what its representatives argued before the commission, threatening to leave France if the law had to be respected. (Note that safety requirements already existed; the problem was, in that case, either to enforce them or to continue tolerating borderline compliance.)

Mapping the Types of Resilience

The analysis of the various human activities at work and their safety-related policies leads us to consider four classes of system resilience (Table 16.1).

Ultra-Performing Systems

A first category of human activities is based on the constant search for, and expression of, maximum performance. These activities are typically crafts practised by individuals or small teams (extreme sports, audacious grafts in medicine, etc.). When they fail, the number of fatalities is limited. Moreover, the risk of failure, including fatal failure, is inherent to the activity and accepted as such in order to permit maximum performance. Safety is a concern only at the individual level. People experiencing accidents are losers; those who survive reap maximum rewards and are winners.

Table 16.1: Types of systems and resilience

Egoistic Systems

A second category of human activities corresponds to a type of resilience based on the apparent contradiction between global governance and local anarchy. The system is like a ‘governed market’ open to ‘individual customers’. This is, for instance, the case for drivers using road facilities or patients choosing their doctors. The governance of the system is quite active via a series of co-ordinations, influence on roles, and safety requirements. However, the job is done at the individual level with a limited vision of the whole, and logically the losses are also at the individual level, with fatalities here and there.

One of the main characteristics of this system is that choices are made at the user’s level, to his or her direct benefit, and these choices therefore expose most of the safety loopholes. For example, the patient may choose his/her doctor, or even decide between proposed medical strategies, weighing pros and cons. General requirements, such as quality procedures, are sold as additional criteria that may influence the customer’s choice. Since accident causes mix the choices of end users and of professionals, accidents tend to be considered as isolated problems with little or no consequence for the rest of the activity. The usual view is that losers are poor workers or unlucky customers. There are very few inquiries, and most complaints are based on individual customers suing individual professionals. Systems of that type are stable and do not seek better safety, at least as long as the economic relationships remain encapsulated in these inter-individual professional exchanges. However, should the choice no longer be the prerogative of individuals, the whole system is immediately put at risk and therefore moves to a new balance, adopting the rules of resilience of the next safety level. Regarding the safety tools used at this stage, the priority is to standardise people (competence), work (procedures) and technology (ergonomics), with recommended procedures in design (ergonomics) and operations (guidelines, protocols) as the main generic tools. These standardisation tools then move on to more official prescriptions at the next level, and ultimately are turned into new federal or national laws in the last stage.

Systems of Collective Expectation

The third category of human activities corresponds to public services and large, low-risk public industries. Here, people in the street do not make direct choices. This is typically the case for food, postal services, energy supply, banking, and a series of medical support services (general hygiene conditions, sanitary prevention, biology, etc.). These services are considered necessary at the town and regional level. Their failures can lead to social chaos, directly engaging the competence and commitment of local top managers and politicians. This is why resilience at this level consistently incorporates high public visibility and a communication effort on risk management. Safety bureaus with safety officers regularly deliver public reports giving proof of their safety value. To sum up, resilience of this type mainly consists of showing a higher commitment, developing transparency and communication on safety, managing the media, pushing evolution rather than revolution, and never abandoning local control of governance. However, with this evolution of governance, safety strategies receive new impetus. A continuous audit is required to address and control residual problems. This may require the extensive development of monitoring tools such as in-service experience feedback, reporting systems, sentinel events, and morbidity and mortality conferences. It is an opportunity to consider safety at a systemic level, enhancing communication and the safety culture at all levels, including management. Teaching non-technical skills (such as Crew Resource Management) becomes a priority in order to get people to work as a team. Macro-ergonomics tends to replace local or micro-ergonomics, and policies tend to replace simple guidelines. The audit procedure can identify a series of recurrent system and human loopholes that resist safety solutions based on standardisation, education, and ergonomics.
All solutions follow an inverted U-curve in efficiency. When the cost-benefit balance of a solution becomes negative (for example, when the ratio of time spent on recurrent education to effective duty time grows too large), the governance of the system has to envisage a transition to the next step, and the present system of resilience is called into question.
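The inverted U-curve can be made concrete with a toy model. The sketch below is purely illustrative and is not the author’s model: the functional forms and the parameters A, K and C are hypothetical, chosen only to show how a saturating benefit combined with a linearly growing cost (framed here as recurrent training time) yields a net benefit that rises, peaks, and then falls, eventually turning negative.

```python
import math

# Hypothetical toy model, NOT taken from the chapter: the safety benefit of
# x units of recurrent training saturates, while its cost grows linearly.
A = 100.0  # hypothetical maximum achievable benefit
K = 0.1    # hypothetical saturation rate of the benefit
C = 2.0    # hypothetical cost per unit of training time


def net_benefit(x: float) -> float:
    """Saturating benefit minus linear cost: an inverted-U curve in x."""
    return A * (1.0 - math.exp(-K * x)) - C * x


def marginal_net_benefit(x: float) -> float:
    """Derivative of net_benefit; positive before the peak, negative after."""
    return A * K * math.exp(-K * x) - C


def peak_effort() -> float:
    """Effort where the marginal net benefit is zero: x* = ln(A*K/C) / K."""
    return math.log(A * K / C) / K


if __name__ == "__main__":
    x_star = peak_effort()
    # Sample the curve before, at, and after the peak.
    for x in (0, 10, x_star, 30, 60):
        print(f"x={x:6.2f}  net={net_benefit(x):7.2f}  "
              f"marginal={marginal_net_benefit(x):6.2f}")
```

Past the peak effort x*, each additional unit of training costs more than it returns; further out, the net benefit itself becomes negative, which corresponds to the point where, in the text’s terms, the governance of the system has to envisage moving to the next step.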

This is typically the effect of the new French law on industrial risk prevention (loi Bachelot-Narquin, July 2003), voted after the AZF explosion in Toulouse in September 2001. The AZF explosion destabilised the resilience of the whole chemical industry in France, calling into question its presence on French territory. However, the final content of the law, issued two years after the accident, is the result of a series of severe and contradictory debates on the type of action to be conducted at the national level to reinforce safety in the chemical industry (see the Fiterman report, 2003). This law produced a very prudent ‘baby’. Instead of changing the foundations and recommending a radical change in, or restrictions on, techniques and operations to make the job safer (considered an unaffordable effort and a major threat by the chemical industry unions), the law in the end asks almost exclusively for better visibility, communication, and information to neighbours about the risk around factories. The communication procedure relies on the creation of ‘local citizen consulting committees’ with a mixture of industrial and citizen representatives. In other words, the letter of the law matches the characteristics of the resilience of this industry, with a conscious acceptance that the very core of the business, and its inherent associated risk, cannot change.

Another example is provided by the electricity power supply in the northern US. This power supply system is known to be severely at risk after multiple failures in the past three winters; however, the plan of corrective actions adopted by the US government is a prudent compromise that preserves the present balance and resilience, taking most initiatives at the local or regional level rather than at the national level, or even at the continental level with Canada.

Ultra-Safe Systems

The fourth and last category of systems corresponds to those for which the risk of multiple fatalities is so high that even single accidents are unacceptable. If one accident occurs to one operator, it may mean the end of business for all operators. This is the case for the energy industry (chemical, nuclear) and for public transportation. In addition to the characteristics seen in the previous category, the resilience of such systems requires a transfer of safety authority to the national or international level, often leading to the creation of new agencies. Safety becomes a high priority at all levels of the system and an object of action in itself. Here the watchword of safety is supervision. Supervision means full traceability by means of information technology (IT) to enforce standardisation and personal accountability for errors, together with growing automation that progressively changes the role of technical people, freeing them from repetitive, time-consuming and error-prone techniques, and making them more focused on decisions.

For instance, advances in IT and computers have provided aviation with an end-to-end supervisory system based on the systematic analysis of on-board black boxes, leading to the eventual deployment of a global, centralised and automated sky management system referred to as data link. The impact on people and their jobs, whether pilots, controllers, or mechanics, is far-reaching.

To conclude this section, there are multiple examples in industry showing that safety managers and professionals are perfectly aware of how jobs could be done more safely, yet fail to do so in practice. In most cases, they limit their safety actions because the system can survive economically despite the remaining accidents, and because the constraints associated with better safety would not be affordable. This is a typical logic of losers and winners. As long as the losers stay isolated in a competitive market and their failures do not contaminate the winners, the system is not ready to change, and its resilience continues to give priority to competitiveness.

When the reality of accidents affects not only the unlucky losers but also propagates to the profession or to politicians, the system tunes its resilience differently and increases the priority given to safety. The first move consists in keeping control of performance while increasing transparency on risk management. The second move is transferring the ultimate authority for risk management away from the workplace, gradually giving power to national and international agencies. Only very robust, organised, and money-making systems can withstand this last move, which generates an escalation of safety standards with little consideration for their cost.

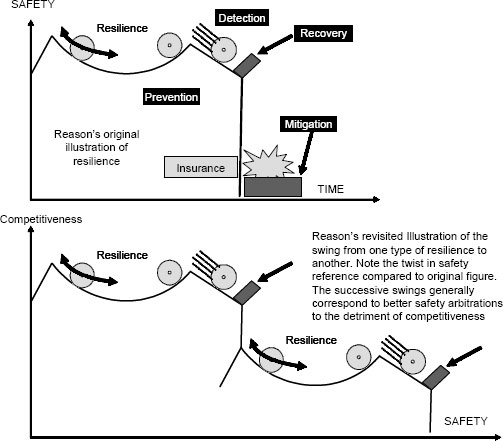

Figure 16.3 illustrates a revision of Reason’s general framework model of safety and resilience to fit this idea of a cascade of resilience types, with a paradoxical inversion of the paradigm. The spontaneous initial stage of resilience corresponds to highly competitive and relatively unsafe systems. When this stage is no longer viable, the system moves to the next type of resilience, accepting more constraints and regulation and better safety, but losing some capacity for exceptional performance, and so on. The cascade continues until the system reaches a plateau where no further solutions exist to improve safety. This is generally the time of the death of the system, followed by the emergence of a new system (see the section below). Note that the longer a system can remain in the first stages of resilience, with high performance and low safety values, the longer its total life cycle will be (in that case, the system extends its life cycle and postpones the arrival of the final asymptote).

Figure 16.3: Revisiting Jim Reason’s illustration of system resilience, turning to a cascade model of resiliencies

Understanding the Transition from One Type of Resilience to Another

As noted above, there are two families of causes, external and internal, that may provoke the transition from one stage of resilience to another. The reader should note the paradox inherent in this concept of shift. Resilience is, indeed, by itself a concept that expresses reluctance or resistance to shift. Therefore, each of the stages or types of resilience described in the previous section opposes the shift as long as possible. However, no stage of resilience can exist forever in a given system. The external and internal forces will sooner or later make the current type of resilience inadequate and call for a new one. The conditions of these breakdown periods are described below.

External Causes of Transition

In this case, the external destabilising factors are sudden and unpredictable: they can be a specific accident or an economic crisis.

For example, a series of listeriosis infections occurred in France in the early 1990s owing to improper preparation and storage of farm cheeses and milk products by farmers or (very) small food companies. Six young pregnant women died in 1992 and 1993, and the media reaction was so strong that this episode became the start of a large change in the farm product business. Within two years after the problem occurred, a new law prescribed restrictions on farmers’ food production, conservation, and conditions of food presentation in marketplaces. Within five years after the problem, a new national agency, the AFSSA (‘Agence Française de Sécurité Sanitaire des Aliments’, the French Food Safety Agency), was created (April 1999), concentrating the authority for food quality and control and reinforcing the requirements for inspections (new directive dated 16 May 2000). From that date, the business changed drastically. Most small, isolated fresh food companies collapsed or had to merge or sell their activities to bigger trusts. The traditional French farm business, which had for years bet on a wide and cheap variety of products, has now cut that variety by 30%, been re-concentrated in the hands of a large combination of collective unions, and definitively changed its working methods, moving to a new setting of resilience that gives greater priority to safety.

However, the numerous examples given in the first section of this paper (e.g., Concorde, AZF follow-up actions) suggest that such a reactive shift after an accident is far from standard in industry. Precisely because each type of human activity has adopted a system of resilience, it can resist perturbations and accidents for a long time. In general, the forces that push professional activities to adopt a different system of resilience are more likely to be rooted in the history of the profession. This is why it is important to consider a more global framework model of the evolution of industry life cycles.

Internal Causes of Transition

The Life Cycle of Industry and Safety-Related Concepts. Socio-technical systems, like humans, have a limited life span. This is particularly true if we adopt a teleological point of view in which a social system is defined as a solution (a means) to satisfy a function (an end) (see Rasmussen, 1997; Rasmussen & Svedung, 2000; Amalberti, 2001). The solution is usually based on a strategy for coupling humans to technology, which can be termed the ‘master coupling paradigm’.

For example, the master coupling paradigm for train driving is based on a train driver being informed of danger by means of instructions and signals along the railway. However, some suburban trains have already adopted a different master coupling, becoming fully automated, hence with no drivers on board.

The master coupling paradigm for photography was stable for a century, based on argentic (silver-halide) printing. Nevertheless, the system collapsed completely within a short period in the early 2000s, replaced by the new business of digital cameras and photos. Note also that the function of taking pictures was fully maintained for end users throughout this drastic change.

Another example of a change in the master coupling paradigm is the move from balloons (airships) to aeroplanes in air transportation. For nearly half a century, between 1875 and 1925, commercial public air transportation was successfully provided by airships. The airship era collapsed completely within a short period after the Hindenburg accident at Lakehurst, New Jersey, in 1937, and was immediately replaced by the emerging aeroplane industry. Again, this change fully preserved the main function of the system (transporting passengers by air).

Note that the aeroplanes, and the associated master coupling paradigm, that replaced balloons are themselves at the end of their life cycle. The present cockpit design is based on the presence of front-line actors (pilots, controllers) who have a large autonomy in decision-making. The deployment of satellite-based air traffic guidance via data link, which is just around the corner, will drastically change the coupling paradigm and redefine all of the front-line professions, on board and across the board.

The total lifespan of a given working activity based on a master coupling technological paradigm is likely to be comparable to a human lifespan: half a century in the 19th century, and now about a century. However, during the life cycle, while the master coupling paradigm remains macroscopically stable, the quality of the paradigm continuously improves. Each period corresponds to a set of safety characteristics and a type of resilience. This vision is another way of looking at the different steps of resilience described above. We have seen that there are several categories of resilience with associated safety properties, and that certain conditions lead to a transition from one to another. In a sense, we are restating the idea of that section, adding only the inevitability of these transitions within the life cycle of systems. All systems will transition and finally die. Some external events may precipitate this cycle, which will inevitably run its course. The most paradoxical result is that speeding up the cycle will result in rapidly improving safety, but also in rapidly exposing the system to its death.

It is also important to bear in mind that the usual way of looking at system resilience and safety relies much more on audits and instantaneous ‘snapshots’.

With the information contained in the previous section of the chapter, such audits make it possible to classify the observed system in one of the four categories of resilience. It is then possible to infer the pragmatic behaviour after accidents, the limit of requirements that can be reasonably put on this system, and certain conditions that clearly announce the looming need to transition to the next category of resilience.

With the information presented in the remainder of this section, it will be possible to go beyond this instantaneous classification and build a perspective on the dynamic, long-term evolution of system resilience. Four successive periods seem to characterise the life cycle of most systems (see Figure 16.4).

A short initial period corresponds to the pioneering efforts. This is typical of the pre-industrial time when the master coupling paradigm is being elaborated. At that stage, the specimens of the system are very few and confined to laboratories. Accidents are very frequent (relative to the low number of existing systems), but safety is not a central concern, and pioneers are likely to escape legal action when making errors.

A long period of optimisation can be termed the ‘hope period’. Hughes (1983, 1994) says that pioneers are fired at that stage and replaced by businessmen and production engineers. The system enters a long, continuous momentum of improvement of the initial master coupling paradigm to fit growing commercial market demand. Safety is systematically improved in parallel with technical changes. It is a time for hope: ‘The upgraded version of the system, already planned and available for tomorrow, will certainly avoid the accident of today’. Despite remaining accidents, very few victims sue the professionals. As long as clients share the perception that progress is rapid, they tend to excuse loopholes and accept that errors and approximations are an inherent part of this momentum of hope and innovation. Conversely, the safer a system is, the more likely it is that society will seek to blame someone or seek legal recourse when injuries occur. For instance, it is only recently that patient lawsuits against doctors have accelerated. In France, the litigation rate rose from 2.5 per 100 physicians in 1988 to 4.5 per 100 physicians in 2001 (source: MACSF).
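The MACSF figures can be read as a relative change and, assuming for illustration only a steady compound growth over the 13 years from 1988 to 2001 (the source gives just the two end points), as an approximate annual growth rate g:

```latex
\begin{aligned}
\frac{4.5}{2.5} &= 1.8 && \text{(an 80\% relative increase, 1988 to 2001)} \\
g &= 1.8^{1/13} - 1 \approx 0.046 && \text{(about 4.6\% per year)}
\end{aligned}
```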

Figure 16.4: Evolution of risk acceptance along the life cycle of systems

The third period of the life cycle corresponds to the ‘safety period’. The public now perceives the system as reaching an asymptotic level of knowledge corresponding to a high level of potential performance. Customers expect to reap the benefits of this performance and no longer hesitate to sue workers. In particular, they sue failing workers and institutions whenever they consider that these people have failed to provide the expected service owing to an incorrect arbitration of priorities in applying the available knowledge. A further step in this escalation is the shift from requirements for means to requirements for ends: people demand good results.

The increase in legal pressure and media scrutiny is a paradoxical characteristic of systems at this stage: the safer they get, the more they are scrutinised. The first paradox is that the accidents that still occur tend to be more serious on account of the enhanced performance of the systems. Such accidents in safe systems are often massively more expensive in terms of compensation for the victims, to such an extent that in many sectors they can give rise to public insurance crises. Accidents therefore become intolerable on account of their consequences rather than their frequency. The skewing of policy caused by the interplay of technical progress and the increasing intolerance of residual risks is problematic. On the one hand, the system experiences higher profits and better objective safety; on the other hand, infrequent but serious accidents cause an overreaction among the general public, which is capable of censoring and firing people, sweeping away policies, and sometimes even destroying industries. It is worth noting that these residual safety problems can often bias objective risk analysis, assigning a low value to deaths that are sparsely distributed, even though they may be numerous, and a high value to cases where a massive concentration of fatalities occurs, even if such cases are rare, such as aircraft accidents, blood transfusion problems in France, or fire risks in hospitals. In essence, 100 isolated, singular deaths may have far less emotional impact than 10 deaths in a single event.

In medicine, for example, a consequence of this paradox is that the patient injuries that do occur tend to be massively more expensive in terms of patient compensation, which fuels the liability crisis.

The very last period of the life cycle is the death of the present coupling paradigm and the rebirth of the system with a new coupling paradigm. The longer the life cycle of a system has lasted, the smaller the event needed to cause its death. The last event that causes the system to die can be termed the ‘big one’. The social life cycle of a system and of a paradigm can be extended for years when the conditions for shifting to and adopting a new system are not met. The two basic prerequisites for the change are the availability of a new technical paradigm and an acceptable cost of change (that is, the insurance and social crises associated with the ageing present system weighed against the cost of deploying the future system). Paradoxically, the adoption of the new coupling paradigm starts a new cycle and renews tolerance of accidents, with a clear step back in the resilience space and a relative degradation of safety.

Changing the Type of Resilience, Changing the Model of an Accident. When the safety of a system improves, new accidents become less likely, and also less predictable from past ones (see Table 16.2). Processing in-service experience requires an increasingly complex recombination of the available information to imagine the story of the next accident (Amalberti, 2001; Auroy et al., 2004).

The growing difficulty of foreseeing the next accident creates room for inadequate resilience strategies as the system becomes safer and safer. In other words, the lack of visibility makes resilience much more difficult in safe systems than in unsafe ones. This is also one factor that explains the usually short duration of the last stage of resilience in a system’s life cycle. When systems have become ultra safe, the lack of visibility of their remaining risks may lead them towards their own death.

Table 16.2: Evolution of the prediction model based on past accidents

Risk level | Prediction model for the next accident
Up to 10⁻³ | The next accident will repeat the previous accidents
10⁻³ to 10⁻⁵ | The next accident is a recombination of parts of already existing accidents or incidents, in particular using the same precursors
10⁻⁶ or better | The next accident has never been seen before. Its decomposition may invoke a series of already seen micro-incidents, although most have been deemed inconsequential for safety

Conclusion: Adapt the Resilience – and Safety – to the Requirements and Age of Systems

The important messages of this chapter can be summarised by the following five points:

• Forcing a system to adopt the safety standards of the best performers is not only naïve but could easily accelerate the collapse of the system.

• Respect the ecology of resilience instead of systematically adopting forcing functions; native or spontaneous resilience corresponds to well-performing but unsafe systems, at least in the sense that safety is not their first priority.

• All systems will transition from their native resilience to new stages with much better associated safety. However, the ultimate stage will be so constraining that it will lead to the system’s death.

• It is crucial to have a good knowledge of the characteristics and the causal events announcing the transition from one resilience stage to another. These factors are both external and internal.

• Smart safety solutions depend on the stage of resilience. Standardise, audit, and supervise are three key families of solutions that have to be deployed successively according to the safety level. Continuing standardisation beyond necessity will have either no effect or negative consequences.