CHAPTER 3

What're You Trying to Answer?: Mapping a User Test Approach to Your Desired Learnings

Getting meaningful, actionable, high‐quality human insight from user testing is all about asking the right questions of the right people. We desperately want more businesses to seek and apply human insight, but we know from experience that asking misguided, unfocused, or badly formulated questions of your customers can be disastrous.

Remember in 2009 when Walmart surveyed their customers about store layout? The retail giant asked its shoppers what seemed like a straightforward, customer‐centric question: “Would you like Walmart to be less cluttered?”

When the answer turned out to be a resounding “Yes!” leadership swung into action. Hoping both to declutter stores for current customers and potentially to attract higher‐income Target shoppers in the process, they rolled out an initiative called Project Impact.

This five‐year plan for streamlining stores, improving navigation, and upgrading interior aesthetics was ambitious and aggressive. Millions were spent replacing fixtures and renovating existing stores, but the most noticeable change was removing the merchandise that traditionally sat in the aisles between fixtures. To do this, the leaders of Project Impact had to remove nearly 15 percent of the items sold in physical Walmart stores.

Although initial customer survey responses to these changes were positive, the eventual results were catastrophic. Once the Project Impact renovations had transformed around 600 Walmart stores, it became clear that reducing store inventory was a mistake. Year‐over‐year same‐store sales plummeted, and the project was put on indefinite pause. Walmart lost an estimated $1.85 billion in sales, plus the hundreds of millions of dollars it spent renovating the stores themselves.1

All because they asked a poorly worded question.

We know that sounds ominous, but don't worry. We're going to show you exactly how to avoid misfires like Project Impact. And the key is carefully calibrating your questions before you ask them.

Here's how.

Understand How Your Question Relates to the Business

Curiosity is an important ingredient in better understanding your customers, but product and marketing teams must be careful about letting it run wild in user testing. The user testing process is about finding answers to questions meant to improve the customer experience. That means formulating the approach with direct links to business outcomes so you can tie your experiments and findings to what matters most.

In practice, this means ensuring that your immediate questions around a campaign or product—the ones that rise to the surface naturally as you hone and develop—map back to high‐level organizational goals.

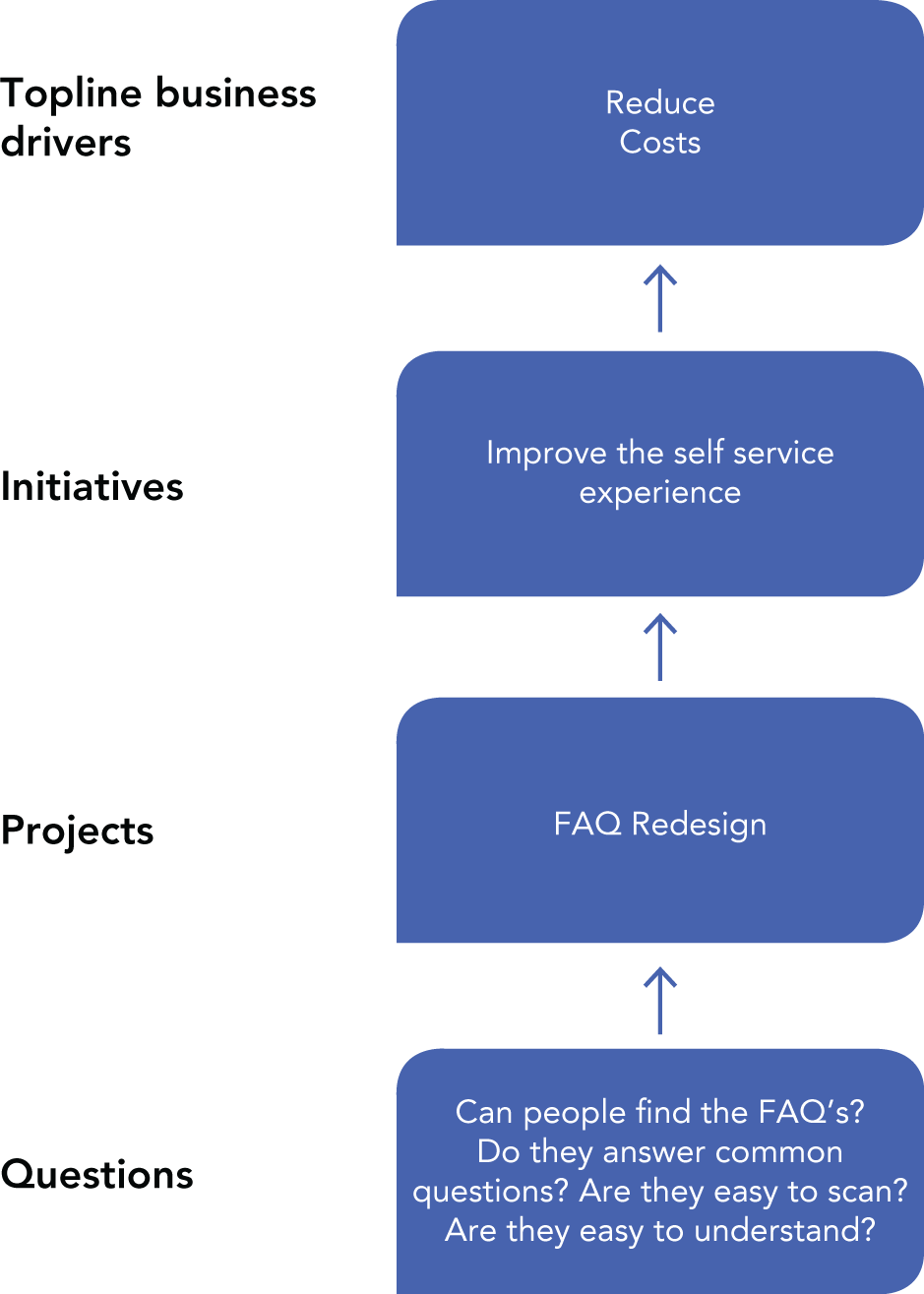

For example, if you're responsible for your company's self‐service experience and notice that people are dropping off after viewing the FAQ section of your site, you'll want to know why so you can address it. That's the question at the testing level. One logical step up is the project, which could be something like an FAQ redesign. One level above that is the larger initiative: improving the self‐service experience. Level up once more and you've linked to the topline business driver, which is reducing costs.

FIGURE 3.1 Mapping Your Question to a Topline Business Driver

And that's the level that C‐suite execs will be eager to discuss.

To make their value clear to leaders, the questions you ask customers must ladder up to something that matters. Every question you explore costs time and energy; it's an investment. Spend it wisely. Spend it on a question that will help you understand how to get to market faster, sell to new customers, drive customer loyalty, cut costs, or obliterate the competition through great customer experiences.

Consider How and Where You Plan to Apply Your Learnings

The questions we formulate and ask of our customers don't just need to be tied to the business; they also need to be tied to where you are in your workflow or development phase and to how you plan to apply your learnings. For example, it's wildly inappropriate to ask about feature prioritization when you're still sussing out what customer problem you'll solve. Try not to get ahead of yourself. Instead, stay grounded in the work you're doing right now.

Wondering what questions are appropriate for various stages of common workflows and processes? Here are our suggestions:

Questions to Ask Before You Create

Since you're in the early phase of developing your product, offering, or new brand positioning, the questions you ask of your customers should help you develop the right approach. Input from real people is valuable at every stage of this process, but it's especially important here.

You've got a hypothesis about a pain point, problem, or need, but until you connect with some customers and pick their brains, you don't have enough context or deep understanding to act on that hypothesis. You must talk to people outside the company walls to understand it deeply so you can respond appropriately.

A consumer‐packaged goods company recently modeled this important strategy with a CEO‐led initiative to change single‐use products to reduce waste. Instead of jumping to a solution right away, the packaging design team performed a series of user tests to better understand the problem they were trying to solve.

The tests started by connecting with people who were willing to show the team their bathroom supplies, including how and where they were stored. The team focused on drain cleaner to make sure they weren't gathering information that would prove too broad to be useful. Then, they formulated a series of questions, including, “How often do you clean your drain? Do you do it only when the drain becomes clogged or regularly? Would you show us how you tackle this task? Where do you store the product you use to clean your drain?” They were able to find out how often drain cleaning products were needed and used, whether people bought large or small bottles to fit their storage space, and even whether refillable drain cleaner would appeal to them.

By getting customer perspectives that provided a glimpse into their worlds, the team could solve a problem they understood intimately, and this helped them bring the best solution to market.

Questions to Ask While You Create

Before narrowing to a specific solution or idea, it's time to vet different solution ideas and pick a path. You should be using divergent thinking, design thinking (see sidebar in this chapter for more details), or other exploratory methodologies to sketch out rough concepts. You're likely tinkering with multiple ideas, which means this is a great time to get some user input.

Without sufficient testing and user input during the solutioning phase, you run the risk of building a suboptimal solution. Take Juicero, an at‐home cold‐pressed juice device that cost $400 back in 2016 at the height of the juicing craze. Founded by Doug Evans, Juicero raised $120 million and counted respected VC firms among its backers, including KPCB and Google Ventures, and yet it failed spectacularly once on the market.

The inner workings of the machine were overengineered, brimming with specialized custom‐tooled parts, and yet it still failed to do the one thing it was designed to do: create juice. A Bloomberg video review showed that Juicero's QR code‐stamped fruit bags could be juiced more easily by squeezing them by hand than by feeding them into the machine. Adding insult to injury, the fruit bags had strictly timed lives, which meant the machine could reject a full bag on “freshness” grounds after a short period. Had the creators of Juicero run even a handful of user tests, they likely would have gotten an earful about these frustrating features and been able to course‐correct. Alas, the product sat on shelves for just a year before the founder decided it was a lost cause.2

While exploring various ideas, we've seen some teams and companies build landing pages to get customer reactions on proposed products or offerings. They haven't built a product or a true prototype yet, but they've hosted a landing page on their site so it looks relatively “real” to customers offering input.

Marketing teams can get feedback on early ideas and concepts, too. For example, something as simple as a moodboard—a visual collage that reflects a creative direction and language choices—is a perfect candidate for user testing. Teams can create multiple boards to show to users and collect their input. Teams may offer several options and ask, “Which of these makes you think of our brand?” or “If each of these were a famous person, who would they be?” This exercise can provide insight that informs campaigns, specific messaging, and branding discussions.

Ultimately, this is an interim phase. The best possible course of action is to get lots of feedback on the array of possible solutions you're considering, so you can home in on the one that will be most successful at launch.

Once you've selected the right solution path, now it's all about building it the right way. Building prototypes of physical products, websites, and apps makes everything feel more real—for you and for the customers who user test it. It can be tough to remain objective when you run user tests this late in the game since you've invested so much time and effort in concepting and building your offering, but you need to remain open to honest feedback.

If you're a veteran product manager or marketer, you likely know that products do get pulled at this phase. If user testing reveals an issue or hiccup that's big enough to cause major concerns, entire designs may get scrapped. When they're pushed through to release despite known issues, disaster may follow.

For example, video conferencing service Zoom offers virtual backgrounds to its users, but in late 2020 people noticed that the algorithm running the backgrounds didn't register Black users' hair. Or sometimes their entire heads.3 An issue this severe should have been caught and addressed long before release.

Questions to Ask After You Launch

No offering is ever perfect. Regardless of how much you (or your customers) love a solution, there's always something that can be fixed or improved, which is why we encourage companies to continue gathering feedback and running user tests after the launch of a new experience. Continual optimization based on regular collection of human insight leads to the best experiences.

Again, it may seem like post‐launch user testing is overkill, but we promise you it's not. Here's an example to prove it: A mortgage service provider chose to continually user test its flagship app after launch.

After several rounds of user tests, they discovered that there were parts of the application checklist that people found confusing. For example, after completing the checklist and clicking “Apply Now,” people expected to be done providing information, but instead were surprised to find even more form fields they needed to fill out.

Based on these learnings, the team immediately took measures to better communicate all of the required steps in the application checklist.

Today, when customers complete all the steps in the application checklist, they are presented with a “Congratulations!” page. It now clearly conveys that nothing else is required and that their application has been submitted for review.

To confirm that the changes actually improved overall customer satisfaction, the company measured the app's Net Promoter Score (NPS) before and after making them. Before the changes, the app's NPS was −43. After the changes, it was 67: a 110‐point improvement, or more than 250 percent measured against the magnitude of the starting score. Clearly, testing post‐launch was a worthwhile endeavor.
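NPS comes up often in post‐launch testing, so it helps to see how the score and the before/after comparison are computed. The sketch below assumes the standard NPS definition (respondents rate how likely they are to recommend you on a 0 to 10 scale; 9 and 10 are promoters, 0 through 6 are detractors; NPS is the percentage of promoters minus the percentage of detractors, so it ranges from −100 to 100). The function names and the sample responses are hypothetical and for illustration only; they are not the mortgage provider's data or method.

```python
def net_promoter_score(scores):
    """Return NPS (-100..100) from 0-10 'likelihood to recommend' ratings."""
    if not scores:
        raise ValueError("need at least one response")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # 9-10
    detractors = sum(1 for s in scores if s <= 6)  # 0-6; 7-8 are passives
    return 100 * (promoters - detractors) / len(scores)


def nps_change(before, after):
    """Hypothetical helper: point change, plus that change relative to
    the magnitude of the starting score (needed when 'before' is negative)."""
    points = after - before
    relative_pct = 100 * points / abs(before) if before != 0 else float("inf")
    return points, relative_pct


# Hypothetical survey responses, for illustration only.
sample = [10, 9, 9, 8, 7, 6, 3, 10]
print(net_promoter_score(sample))  # 4 promoters, 2 detractors of 8 -> 25.0

points, rel = nps_change(-43, 67)
print(points, round(rel, 1))  # 110 255.8
```

Because a starting NPS can be negative, an ordinary percentage change is not well defined; dividing by the absolute value of the starting score is one way to produce a figure like “more than 250 percent,” but the point change (here, 110 points) is usually the clearer number to report.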

Don’t Ask Leading Questions or Go in to “Prove” Your Hypothesis

There's a big difference between performing user testing to explore customer perspectives and doing it to confirm your own beliefs. If internal teams are convinced that their new offering is “the next big thing” and run user tests to “prove” their idea, the learnings will be misleading. The questions will lead customers toward the answers that the company wants and hopes to hear. For more best practices on conducting interviews, see the following sidebar.

Apologies to Walmart, but their question in the anecdote mentioned earlier (“Would you like Walmart to be less cluttered?”) is a perfect example of one that nudges the respondent toward a particular answer. Even shoppers who hadn't given a single thought to the layout of stores might find themselves thinking, “Well, now that you mention it, yeah. Walmart does feel cluttered.”

So how do you avoid biased questions? Here are some tips for you.

- Avoid questions that require a yes or no response. If you perform an exit poll and ask, “Did you enjoy your experience today?” you may inadvertently influence answers, because people will likely just agree with you. A better way is to frame your question as, “Would you tell us about your experience today? What worked well? What didn't?” Or ask them to rate their experience on a scale.

- Be careful with positive or negative framing. Questions like “How delightful was your interaction with us today?” are framed in a positive light and will likely lead to favorable answers. In Walmart's case, “clutter” is a negative frame that was embedded in the question itself, and it certainly skewed the resulting data and sent the teams down a disastrous path.

- Don't give away the answer in your question. If you ask, “Have you spent at least $100 online in the past month?” you're pointing respondents toward the answer you want and expect. It's better to ask open‐ended questions like, “How much have you spent online in the past month?”

Keep It Focused

One of the biggest stumbling blocks we see when teams run user testing is that they cover too much ground. They decide to ask a myriad of questions in a single user test, such as:

FIGURE 3.3 Too Many Broad Questions in a User Test Can Overwhelm

Questions that cover a wide range of topics in a single sitting yield answers that are more overwhelming than illuminating. And when you go too broad, it's exhausting for both the questioner and the questioned. Remember that you're not writing a thesis here. Keep the focus narrow and the question snackable, and maintain a clear line back to the business outcome at hand. If you do need to cover a lot, consider breaking it up across multiple user test sessions. Three thirty‐minute sessions, each focused on a specific topic, are a better approach than a single ninety‐minute session that covers several meaty topics.

Keep Your Question Top‐of‐Mind

Before you start gathering information, commit to holding yourself accountable to your question. User testing is fascinating and compelling work that tends to breed tangents and rabbit holes. Once you start asking your question of actual users and hearing their replies, you may want to start piling on related questions or see if you can quickly get their feedback on a more general matter. Don't.

Veering away from your original question will do two things:

It Will Distract You

When you're coming up with your questions, do your best to anticipate possible distractions. Are there certain related ideas that you need to filter out? Are there aspects of the user test that are likely to yield the best signals and therefore merit more of your attention? Remind yourself of your narrowly focused, business‐linked question so you don't get distracted and charge off in some new direction.

For example, let's say your question is: “How do we differentiate from the competition?” As you begin to gather customer input, you may start wondering about the customer support experience your company provides, which leads you to ask customers about their thoughts on chatbots. Occasionally, this yields new initiatives that are more valuable than the original ones. More often, it eats up your time and resources while your carefully formulated question languishes unanswered.

It Will Drown You

Adding more questions to the mix means generating more data to sift through. Sometimes much, much more data than you have the time or resources to examine. If you lose sight of your original question or add onto it, you'll be overwhelmed by the amount of information that comes back to you.

To illustrate, if your original question is “How do we clarify our pricing?” but you allow feedback on shipping options to sneak in, you've just doubled your scope. That second question is definitely related, but is it germane to your larger objective? More specifically, do you have the bandwidth to tabulate and analyze those auxiliary responses? And does the team have the capacity to make improvements to any issues you identify?

Once it's formulated, your question should become your beacon. Revisit it whenever you launch a new experiment, start working with a new panel of customers, or enter a new phase of development. Make it your mantra. Ensure it's with you at every step of the user testing journey.

Ask Questions with the Intention of Sharing with Others

Plenty of the stories and perspectives you capture will only be relevant to you and your team, but the broad themes and learnings can and should be disseminated. With that in mind, consider including a handful of questions in your user test that will yield some intriguing and shareable perspectives. These shouldn't be the only questions you ask, obviously, but they can be part of your overall approach.

A few of our favorites include:

- Use three words to describe how this experience makes you feel. This is a great way to prompt customers to generalize and offer up language that can be repurposed into marketing efforts.

- Describe this [value prop, product, company mission] in your own words. This gives you a peek into how the customer's mind works and may also pinpoint areas of misunderstanding that can be proactively addressed.

- Capture a few meaningful or important interactions to grab the interest of viewers. Consider including activities that will be compelling to watch and tie to business performance; think initial onboarding, downloading and installing an app, searching for information on your website, or reacting to an ad.

Socializing these customer perspectives builds broad buy‐in, and sharing snackable human insight ensures that team members across the organization understand the people they're serving.

Always Ask Why

As we've mentioned, numeric data can give you tons of information about what people are doing, but it cannot tell you why they're doing it. Fortunately, running user tests gives you the opportunity to gather that perspective. Understanding customer motivations, complicating factors, and reasoning helps you craft offerings and experiences that truly serve them. Without this level of insight, your assumptions are driving the train.

Consider this example: You notice a trend of customers opting out of your text alert program, so you decide to investigate and send them a multiple‐choice survey. Even if the majority select “I don't like getting texts from companies,” you still haven't reached a true “why.” If you interview customers directly, you may find that many of them have mobile phone plans that charge a fee whenever you ping them. Or maybe your texts arrive at inopportune times, such as when they're running a daily stand‐up meeting or putting the kids down for a nap. In the case of irritating text fees, you could direct people opting out of texts toward signing up for email alerts instead. In the case of bad timing, you could let customers choose the time of day text alerts hit their phones. But if you never ask, and just stop at “I don't like getting texts from companies,” you'll likely assume all of those people are lost causes and let them drift away instead of actively cultivating their loyalty.

The nature of this work is to do everything in your power to deeply understand the people you serve. If you're looking to get yes or no responses, there are other, more efficient ways to do that. User testing is about getting context, understanding motives, and digging into the “why” so you can make better business decisions.

When You’ve Formulated a Decent Approach, Reuse It

Here's some good news: You can reuse many of the questions you formulate for future user tests. Save common questions and approaches so you can refer back to them. There's no need to start from zero every time you plan a new user test. As you ask customers questions more regularly, you'll develop a trusted approach or template that makes future tests quicker and easier.

And now that you've got the tools you need to formulate and ask questions that yield insightful customer answers, you need to go out and start asking them. But who should you ask? And how can you find those people?

Keep reading to find out.