Chapter 10

MAC Layer Design

THE FOLLOWING CWDP EXAM TOPICS ARE COVERED IN THIS CHAPTER:

- Demonstrate a detailed knowledge of WLAN architectures and solutions. Identify best practice design concepts for each architecture including the following considerations:

- Management solutions

- Protocols for communication and discovery

- Data forwarding models

- Scalability and bottlenecks

- Redundancy Strategies

- Device location in the network

- Encryption and decryption

- VLANs

- QoS

- Roaming considerations

- Architecture-specific security considerations

- RF and channel planning

- Capacity planning

- AP-Controller associations

- Licensing

- Advantages and limitations

- Explain best practices for common WLAN feature support, configuration, and deployment strategies.

- Demonstrate a detailed understanding of the role that the wired network infrastructure plays in WLAN design.

- Explain design approaches related to specific layers of the OSI model.

- Explain the significance of QoS in multi-service WLANs and illustrate a comprehensive understanding of the following:

- WLAN arbitration

- WMM and EDCA operations and parameters

- Policy-based queuing

- 802.1p (802.1D/Q) CoS priority tagging

- Differentiated Services Code Point (DSCP)

- Admission control

- End-to-end QoS

- Airtime fairness mechanisms

- Understand and describe VLAN use in wired and wireless network segmentation.

- Describe load balancing, what purpose it serves for the network, and when and how it should be implemented.

- Describe common design practices for high availability and redundancy.

- Illustrate best practices for roaming support in a WLAN.

- Understand the basics of 802.11 arbitration processes and wireless contention domains, and describe how these factors influence network design.

- Discuss design concepts related to frequencies and bands used for WLAN communications.

- Demonstrate a detailed knowledge of the common problems related to high user densities and describe effective strategies to address them.

- Illustrate best practices for data rate/MCS configurations to manage client connectivity.

- Describe the role of Transmit Power Control (TPC) in WLANs and explain when and how it should be implemented.

- Demonstrate the importance of, and design considerations related to, Fast BSS Transition (Fast/Secure Roaming).

Application performance demands continue to increase, and new features are required to keep pace. The aim of this chapter is to highlight proper network design and feature selection as they relate to the many MAC technologies and protocols that have established Wi-Fi as a compelling access technology.

As you probably know already, the 802.11 specification addresses WLAN protocols and operation at the MAC and PHY layers of the OSI model, so it should come as no surprise that MAC-layer design will be a major focus in the deployment of an 802.11 WLAN. A stock set of standardized MAC functionality will often serve adequately for simple Wi-Fi networks, but optimization for high-performance multiservice networks usually requires additional features and design considerations at this level.

Quality of service (QoS) is possibly the most important MAC feature in use today, largely because QoS is usually required to support sensitive applications like voice and video. Wi-Fi protocols use QoS at the MAC layer, but QoS functionality also depends on reliable RF handling at the physical layer (layer 1), infrastructure support at the MAC and Network layers (layers 2 and 3, respectively), as well as data classification from layers 4–7. This chapter will look at the many details of QoS design and will include a discussion about airtime fairness, a proprietary feature that fits generally into the category of QoS-like features.

With VoWiFi phones, tablet computers, handheld devices, and other battery-sensitive devices requiring service from wireless networks, power conservation features have become very significant. There are currently a few power-saving methods available, and network designers should understand how each one works, why they are significant, when they should be used, and what other power save considerations should be made. This chapter will address deployment considerations and best practices for power save features.

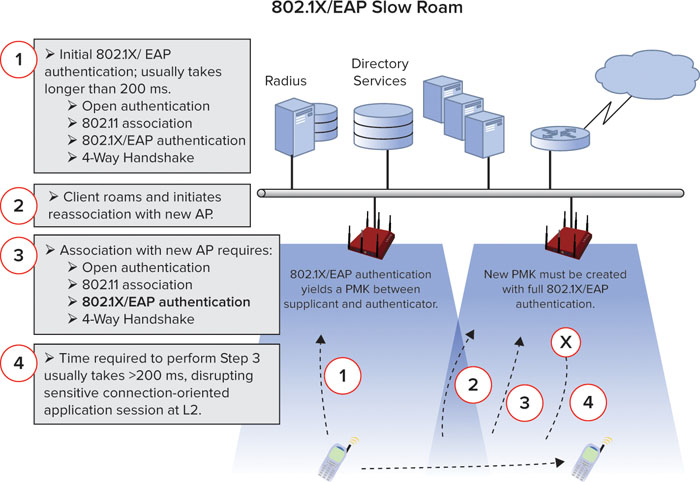

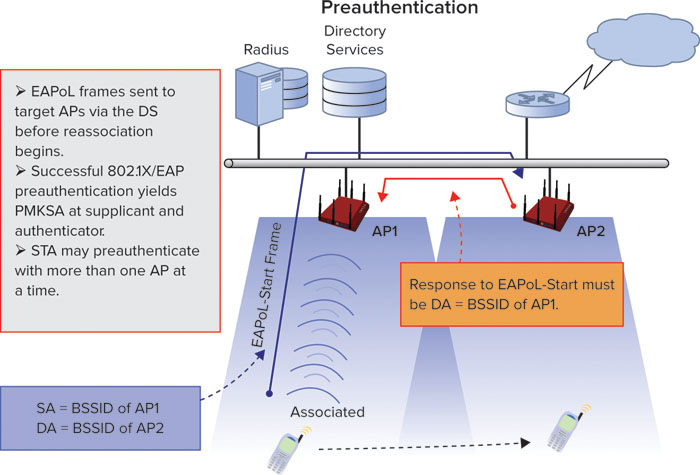

Many battery-sensitive devices are also highly mobile and pose other challenges to network design. Session-based applications such as voice and real-time client/server applications have also gained popularity on mobile Wi-Fi devices, which places new emphasis on minimizing latency during inter-AP roaming. With a litany of network architectures offering proprietary and standardized roaming features, network designers must stay abreast of the technical merits, limitations, and functionality of each roaming method. We will cover this topic here as well.

Understanding Quality of Service

If we look at changes in network uses over the course of the last 10 years, it is quite clear that simple, data-only networks are a thing of the past. In an effort to maximize efficiency, mobility, and productivity, competitive businesses are continuing to adopt wireless technologies to support mission-critical, high-performance, and often sensitive applications. Although this trend toward wireless mobility has motivated the progress of Wi-Fi standards and proprietary feature development by Wi-Fi vendors, it has also created new burdens that were previously only experienced by a select few enterprises at the leading edge of the technology. Enterprise network applications have never been more demanding, and as the role of wireless changes from convenience to mission-critical, network design must change with it.

Generally speaking, QoS is a network function designed to optimize application performance by providing differentiated services to devices, users, applications, or service sets. In other words, applications vary in their performance requirements, and QoS helps control the distribution of resources to meet application-specific needs. For example, some applications, devices, or service sets require more bandwidth than others. At the same time, some applications require less latency than others. Some applications tolerate low bandwidth and high latency but require little or no loss. To meet these varying application needs in a network with limited resources, you need differentiated services.

Application Performance Requirements

Chapter 3, “Designing for Applications,” addresses the details of application-specific design requirements, so we will not cover them in detail here. But QoS design is dependent on an intimate understanding of the applications that will be supported on a network. Each application requires a certain performance commitment from the network, and MAC design must take these performance requirements into consideration. Bandwidth, latency, jitter, and loss are all important performance factors that may be directly correlated to, and dependent on, properly provisioned networks. Understanding application performance requirements is the first step to planning the resources that will meet the application’s needs.

Bandwidth

Some applications demand a lot of throughput. However, high bandwidth usage does not necessarily make an application demanding. In most cases, high throughput is only challenging when it is paired with a requirement for low latency and low jitter. For example, an application like FTP file transfers may be somewhat bandwidth hungry, but FTP is tolerant to high latency and jitter, so the throughput requirement can be spread across a longer period of time, which preserves precious bandwidth for other applications. HD video teleconferencing is a good example of an application that can require high throughput while also requiring low latency, low jitter, and low loss. This type of application is more sensitive to properly provisioned and controlled network throughput.

One simple way to protect the bandwidth of a WLAN is to configure rate limits for devices or service sets that do not require special priority handling. Traffic control has many relevant uses in WLANs, and when network congestion is causing higher-priority traffic streams to experience performance problems, rate limits may be an effective way of managing excess traffic.
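A token bucket is one common way to police traffic against a rate limit. The following Python sketch illustrates the idea. The class name, its parameters, and the "drop or queue" outcome are illustrative assumptions, not any vendor's implementation.

Tokens (measured in bits) refill at the sustained rate, up to a maximum burst depth. A frame passes only if enough tokens are available to cover it.

```python
class TokenBucket:
    """Hypothetical per-client or per-SSID rate limiter.

    rate_bps:   sustained rate in bits per second
    burst_bits: bucket depth, i.e. the largest burst allowed at once
    """

    def __init__(self, rate_bps: float, burst_bits: float, now: float = 0.0):
        self.rate_bps = rate_bps
        self.burst_bits = burst_bits
        self.tokens = burst_bits  # start with a full bucket
        self.last = now

    def allow(self, frame_bytes: int, now: float) -> bool:
        """Return True if a frame of frame_bytes conforms at time `now` (seconds)."""
        # Refill for the time elapsed since the last check, capped at the bucket depth.
        elapsed = now - self.last
        self.tokens = min(self.burst_bits, self.tokens + elapsed * self.rate_bps)
        self.last = now
        needed = frame_bytes * 8
        if self.tokens >= needed:
            self.tokens -= needed
            return True
        return False  # non-conforming: drop or queue, depending on policy


# Hypothetical limit: 8 kbps sustained with a 1,000-byte burst.
bucket = TokenBucket(rate_bps=8_000, burst_bits=8_000)
```

Consider three 1,000-byte frames under this limit. The frame at t = 0 s passes. A second frame at t = 0.5 s is held back because only half the bucket has refilled. A third frame at t = 1.0 s passes again.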

Latency

Latency is the same as delay and is a measurement of the time it takes for a frame to reach its destination (end-to-end delay). Alternatively, latency can be measured as the time it takes a frame to reach its destination plus the time for the response to return to the original transmitter (round-trip latency). In either case, there are generally two contributors to latency: fixed and variable factors.

Fixed Latency This includes static elements that do not change, such as preparing a signal for transmission, encoding/decoding, and translation.

Variable Latency This is often the most important type of latency and includes queuing delays, errors and retransmissions, contention and congestion, and more.

Voice and video are two of the most common applications that are sensitive to latency. In an optimum deployment, total end-to-end delay for voice would be less than 150 ms.
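One way to use the 150 ms voice target is as a budget: add up the fixed and variable contributors and check the remaining headroom. The following sketch does this arithmetic. Every millisecond value in it is a hypothetical placeholder, not a measurement or recommendation.

```python
# Hypothetical one-way delay contributors for a voice call, in milliseconds.
FIXED_MS = {
    "encoding/decoding": 30.0,
    "preparing signal for transmission": 1.0,
    "translation": 2.0,
}
VARIABLE_MS = {
    "queuing delays": 10.0,
    "errors and retransmissions": 5.0,
    "contention and congestion": 12.0,
}
VOICE_BUDGET_MS = 150.0  # end-to-end target for voice cited in the text


def total_delay_ms(*components: dict) -> float:
    """Sum every contributor across the given component groups."""
    return sum(value for group in components for value in group.values())


def headroom_ms(budget_ms: float, *components: dict) -> float:
    """Budget remaining after all contributors; negative means over budget."""
    return budget_ms - total_delay_ms(*components)


delay = total_delay_ms(FIXED_MS, VARIABLE_MS)
remaining = headroom_ms(VOICE_BUDGET_MS, FIXED_MS, VARIABLE_MS)
```

Because the fixed portion cannot be tuned away, the variable contributors (queuing, retries, contention) are where WLAN design recovers headroom.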

Jitter

Jitter is the variation in latency between packets. When jitter is high, an application's jitter buffer may not be large enough to maintain a steady supply of data for application delivery. This may lead to choppy voice calls, abnormal pace in audio delivery, or video delays or quality impairment. Some applications provide adaptive jitter buffers that change along with jitter conditions. Others have a user-defined jitter buffer, which can be helpful if jitter is a suspected cause of poor application performance. For voice, less than 5 ms of jitter is typically optimal.
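A widely used way to quantify jitter is the interarrival jitter estimator defined for RTP in RFC 3550. For each pair of consecutive packets, it compares the change in transit time and smooths the result with a gain of 1/16.

The following Python sketch applies that formula to send and receive timestamps in milliseconds. The function name and the sample timestamps are hypothetical.

```python
def interarrival_jitter(send_ms: list, recv_ms: list) -> float:
    """RFC 3550-style interarrival jitter estimate, in milliseconds.

    D is the difference in one-way transit time between consecutive packets;
    J is updated as J += (|D| - J) / 16.
    """
    if len(send_ms) != len(recv_ms):
        raise ValueError("timestamp lists must be the same length")
    jitter = 0.0
    for i in range(1, len(send_ms)):
        transit_prev = recv_ms[i - 1] - send_ms[i - 1]
        transit_cur = recv_ms[i] - send_ms[i]
        d = abs(transit_cur - transit_prev)
        jitter += (d - jitter) / 16.0
    return jitter


# Packets sent every 20 ms (a common voice packetization interval, assumed here).
steady = interarrival_jitter([0, 20, 40], [50, 70, 90])  # constant transit time
bumpy = interarrival_jitter([0, 20], [50, 86])           # transit jumps by 16 ms
```

Constant transit time yields zero jitter however large the latency is. This is why latency and jitter must be provisioned for separately.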

Loss

Loss is a measurement of the difference between packets transmitted and packets received by the destination. Loss can be caused by channel congestion, poor signal quality (low SNR, excessive multipath, collisions), buffer overflow, normal routing procedures, and more. 802.11 MAC protocols provide resiliency against packet loss by virtue of acknowledgments and retransmissions. Of course, this applies only to unicast traffic. Multicast and broadcast traffic are not acknowledged, so higher levels of loss are far more likely.
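A rough calculation shows why acknowledgments and retries matter so much. It assumes that every transmission attempt fails independently with the same probability. Under that assumption, a frame is lost only if all of its attempts fail.

This independence is a simplification, since real RF errors are often bursty. The retry limit used below is also a hypothetical value.

```python
def residual_loss(frame_error_rate: float, retry_limit: int) -> float:
    """Probability that a frame is never delivered.

    Assumes independent, identically distributed failures per attempt.
    retry_limit=0 models multicast/broadcast, which are sent once and
    never acknowledged or retried.
    """
    if not 0.0 <= frame_error_rate <= 1.0:
        raise ValueError("frame_error_rate must be between 0 and 1")
    attempts = retry_limit + 1  # the initial transmission plus each retry
    return frame_error_rate ** attempts


multicast_loss = residual_loss(0.10, retry_limit=0)
unicast_loss = residual_loss(0.10, retry_limit=3)  # hypothetical retry limit
```

Take a 10 percent per-attempt error rate. Under this model, the unacknowledged multicast frame is lost 10 percent of the time, while the retried unicast frame is lost about 0.01 percent of the time.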

As most networking folks already know, the use of TCP or UDP at the transport layer also makes a difference here. With TCP, loss is less significant because TCP recovers from loss with acknowledgments and retransmissions. With UDP, no provision is made to recover lost packets, so application quality can suffer when network conditions create loss. While TCP does minimize loss, its acknowledgments and retransmissions may also impact performance. The application in use will determine whether group addresses or a unicast address are used, and it will also determine the transport-layer protocol.

Identifying Application Requirements for Video

As we discuss application requirements and network provisioning, let’s quickly look at the example of video over wireless. You might be tempted to think that video streams on the Wi-Fi network have a pretty standard set of performance requirements, characterized by moderate bandwidth, low latency, low jitter, and low loss. In some cases, this assumption would be right, but not all video is the same.

Wireless users are consuming different kinds of video media all the time, and companies are relying on wireless connectivity to serve varying video needs, such as:

- Streaming video (e.g., surveillance or webcasts)

- On-demand video sources (e.g., Internet-based media or locally hosted media)

- Interactive video (conferencing and collaboration)

Each of these categories of video includes several subtypes, and each subtype of video delivery has a unique traffic fingerprint. Many require moderate to high bandwidth, but some are low-quality video streams, demanding only small amounts of bandwidth. Some applications can tolerate high latency or jitter, whereas others are very sensitive to high latency or jitter.

As an example, consider the differences between consumption-based video such as YouTube and collaboration-based video like video teleconferencing. Though both of these applications fall under the category of video, the application requirements are completely different. On-demand video like YouTube requires moderate bandwidth, but since these videos are buffered, they can tolerate high latency and jitter. Conversely, teleconferencing applications are real-time, demanding high bandwidth as well as low delay, jitter, and loss. These are stringent demands, but the demands must be known if the network is to be properly provisioned.

Table 10.1 shows a common set of applications along with their typical performance requirements. As a disclaimer, it can be a challenge to identify an application with a specific set of performance requirements because some application types have many variant implementations. It is also often difficult to obtain specific information about the application's network requirements from the application vendor, for a variety of reasons. For clarification, Table 10.1 uses the terms Low, Medium, and High to refer to the application's needs or tolerances. For example, low throughput indicates that the application does not require much throughput. Low jitter, latency, or loss indicates that the application requires low jitter, latency, or loss. High jitter, latency, or loss means the application is tolerant of high jitter, latency, or loss.

TABLE 10.1 Application Performance Requirements

Application requirements are an important determining factor in QoS design. Some applications are tolerant, and others are demanding. Understanding the application's needs is the proper first step toward deploying network resources and ensuring that those resources will yield a positive end-user experience.

End-to-End QoS Flow

Very few applications originate on a wireless station, cross a wireless medium, and terminate on a wireless station without also traversing a wired medium somewhere along the way. For that reason, WLANs that support sensitive applications usually depend on systemwide QoS support by all networking systems from endpoint to endpoint, which is known as end-to-end QoS.

However, before application data makes it onto the wireless medium, it must first be classified and prioritized within the initiating station. Intra-station QoS begins at the application itself (layer 7) and must continue, without disruption, all the way down the protocol stack (in OSI speak) and onto the outgoing transmission interface. Preserving QoS within a station requires that classifying traits are passed between management layer entities. Similarly, QoS protocols at each layer within the station have internal contention and collision procedures that serve to maintain priority between different transmission queues within the station.

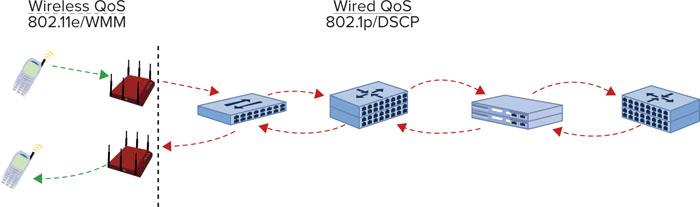

After the data is transmitted onto the carrier medium, it must also be classified (identified as traffic requiring QoS prioritization) and honored (scheduled or queued) with the proper QoS parameters across all network links. There may be several links in the transmission path, and prioritization must usually occur across switched (layer 2) and routed (layer 3) network hops, sometimes including the public Internet, where service is unpredictable. WLAN specifications (802.11e and WMM) define how QoS works within and between wireless stations, but in most cases, the wireless frame is translated by the AP and/or WLAN controller to a wired networking format (Ethernet), as shown in Figure 10.1.

FIGURE 10.1 Demarcation of wireless and QoS

In other words, QoS is an end-to-end endeavor and must be planned as such. One weak link (no QoS or poorly implemented QoS) in the network path can ruin the service quality that network designers work so hard to protect.

It is also important to consider that QoS depends on reliable and highly available carrier media. In several places in this book we discuss the dynamic and unstable nature of the wireless medium, and for good reason: the RF domain is an important foundation on which wireless technologies are built. QoS protocols are not an adequate answer to an unreliable RF domain. All the proper classification, queuing, and prioritization may occur within stations and on the wired network. If the wireless domain is unreliable (high collisions/retries, low bandwidth, interference, etc.), QoS still won't be effective at ensuring application performance. For that reason, wireless designers must ensure optimum planning and use of the RF medium so that applications can use the wireless resources effectively and end users can keep smiling. QoS is end to end, or it is nothing at all.

Classifying and Marking

Classifying and marking are two terms related to the way in which packets are identified as needing differentiated treatment across network hops. Classification is generally considered to be the interpretation of QoS settings for incoming packets. Marking is generally considered the designation of QoS parameters for outgoing packets. So, you classify incoming traffic, and you mark (or tag) outgoing traffic.

There are a number of ways to classify QoS data, and these methods largely depend on the medium type of the network, the protocol in use, and the capabilities (and configuration) of the infrastructure equipment. In some cases, application data can be classified on a per-hop basis by some characteristic of the data (e.g., IP addresses, MAC addresses or OUIs, or network service). It is more common (and often easier) to rely on trusted markings in frame or packet headers as specified by a common MAC- or IP-layer protocol, such as DSCP. After classifying data, appropriate QoS policies can be applied to the data.
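To illustrate classification based on header markings, the following sketch reads the DSCP value from an IPv4 header. In IPv4, the second octet is the Differentiated Services field. Its upper six bits carry the DSCP, and its lower two bits carry ECN.

The function name and sample header are hypothetical. Deciding whether to trust the marking is a policy decision outside this sketch.

```python
def classify_ipv4(header: bytes) -> dict:
    """Return the DSCP and ECN values from an IPv4 header's DS field."""
    if len(header) < 20 or header[0] >> 4 != 4:
        raise ValueError("expected at least 20 bytes of an IPv4 header")
    ds_field = header[1]
    return {"dscp": ds_field >> 2, "ecn": ds_field & 0x03}


# Hypothetical header: version 4, IHL 5, DS field 0xB8 (DSCP 46, Expedited
# Forwarding). The remaining 18 bytes are zeroed; only the first two octets matter here.
sample = bytes([0x45, 0xB8]) + bytes(18)
marking = classify_ipv4(sample)
```

A classifier would then use the DSCP value (46 here) to select a queue and policy for the packet.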

Queuing and Scheduling

Queuing and scheduling refer to the way in which classified data is handled and the order in which it is processed for outgoing transmission. In other words, how is priority actually provided? There are several techniques designed to facilitate priority. Among others, these include:

- Scheduled polling

- Weighting mechanisms

- Round-robin

These are generic types of QoS scheduling, and there are many different variations on these types. Data arrives at a station, is classified into a queue, and is then selected from queues in accordance with the scheduling or queuing algorithm or mechanism. A queue is sometimes called a bucket, where frames wait to be processed for transmission by a network node.
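Weighted round-robin is one of the generic scheduling types listed above. The following Python sketch shows one simple variant: in each round, a queue may send up to its weight in frames before the scheduler moves on.

The queue names and weights are hypothetical. This is not how any particular switch or AP schedules its hardware queues.

```python
from collections import deque


def weighted_round_robin(queues: dict, weights: dict, max_frames: int) -> list:
    """Dequeue up to max_frames, serving each queue up to its weight per round."""
    out = []
    while len(out) < max_frames and any(queues[name] for name in queues):
        for name, q in queues.items():
            for _ in range(weights[name]):
                if not q or len(out) >= max_frames:
                    break
                out.append(q.popleft())
    return out


# Hypothetical queues: voice gets two transmit slots for every one data slot.
queues = {
    "voice": deque(["v1", "v2", "v3"]),
    "data": deque(["d1", "d2", "d3"]),
}
order = weighted_round_robin(queues, {"voice": 2, "data": 1}, max_frames=6)
```

Voice frames are favored without starving data. When the voice queue empties, the remaining data frames get all the slots.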

In accordance with the network medium type, networking protocols, and QoS method(s) in use, network nodes perform frame and/or packet translation (conversion from one type to another). As a part of translation, stations must take the incoming data classification and convert it into an outgoing QoS marking or tag. This translation process facilitates end-to-end QoS at each hop in the data's transmission path. In some cases, translation takes one type of classification and converts it into another type of marking. In other cases, the same protocol(s) may be in use on the incoming and outgoing interfaces, so the classification translation is a direct transfer.
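As an example of translating one type of classification into another type of marking, consider an AP converting an incoming DSCP value into an outgoing 802.11 user priority (UP). One common simple approach copies the three most significant DSCP bits (the class selector) into the UP.

That shortcut maps Expedited Forwarding (DSCP 46) to UP 5 rather than UP 6, which is why RFC 8325 recommends explicit mappings for such codepoints. The override table below includes only that one entry, as an illustration. It is not a complete RFC 8325 mapping, and the function name is hypothetical.

```python
# Illustrative, deliberately incomplete override table.
# RFC 8325 recommends mapping EF (DSCP 46) to UP 6.
UP_OVERRIDES = {46: 6}


def dscp_to_up(dscp: int, overrides: dict = UP_OVERRIDES) -> int:
    """Translate an incoming DSCP classification into an outgoing 802.11 UP marking."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be in the range 0-63")
    # Default rule: the three most significant DSCP bits become the UP.
    return overrides.get(dscp, dscp >> 3)
```

For example, `dscp_to_up(46)` yields 6 with the override, or 5 when the override table is empty. `dscp_to_up(34)` (AF41) yields 4 either way.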

In the next sections, we will look at the intricate details of WLAN QoS as well as some of the most common wired QoS protocols. As we flesh out the details of these protocols, it will help us to discuss network design practices from a technical perspective.

WLAN Arbitration

If we compare wired and wireless technologies today, it becomes apparent fairly quickly that wired networking technologies generally offer greater capacity and performance than wireless networks. Wireless networks come with many benefits as well—such as mobility and low cost—but their primary drawback is the constraint of a shared wireless medium. As 802.11n has demonstrated, wireless technologies are always improving and capacity is increasing, but resources remain limited.

In this section, we will take a deeper dive into WLAN QoS by looking at the technologies, processes, and protocols that are used on WLANs. The foundational element of this discussion is WLAN arbitration and 802.11 channel access. This is a hefty topic by itself, so we will limit it to the components most essential to this treatment and draw out the design applications in a later section.

Our intention here is not to bog you down with the details but to provide the details that any professional WLAN designer should know. An understanding of 802.11 arbitration is fundamental to proper network design and QoS provisioning.

Channel Access Methods

The 802.11 specification defines a MAC architecture for 802.11 channel access. This architecture includes four different channel access methods that dictate how wireless stations should use the shared wireless medium in a “good neighborly” way.

These channel access methods (or functions) dictate the rules or patterns in which a wireless station can access the wireless medium. Each method has different rules for different network environments. In general, they are designed to facilitate relative “fairness” or to create statistical priority for devices or applications to access the wireless medium. Here’s a description of each:

Distributed Coordination Function (DCF) The Distributed Coordination Function (DCF) is the fundamental, required contention-based access function for all networks. DCF does not support QoS.

Point Coordination Function (PCF) The Point Coordination Function (PCF) is an optional, contention-free function, used for non-QoS STAs. PCF is not currently implemented in the market.

Hybrid Coordination Function (HCF) Enhanced Distributed Channel Access (EDCA) Hybrid Coordination Function (HCF) Enhanced Distributed Channel Access (EDCA) is optional, but it is the method used to provide prioritized contention-based QoS services. EDCA is the way to provide QoS for modern WLANs.

Hybrid Coordination Function (HCF) Controlled Channel Access (HCCA) Hybrid Coordination Function (HCF) Controlled Channel Access (HCCA) is optional, and provides parameterized contention-free QoS services. HCCA is not currently implemented in the market.

Of the four channel access methods, only two (DCF and EDCA) are used today. The other two access methods, PCF and HCCA, have not been implemented by WLAN vendors, so we will avoid discussing these coordination functions here. The MAC channel access architecture is shown in Figure 10.2.

FIGURE 10.2 802.11 MAC architecture

The foundational access method is called Distributed Coordination Function (DCF). In DCF, the coordination function logic is generally the same in every station (STA) in a basic service set (BSS), except for PHY-specific parameters such as slot times. Stated differently, each station within a DCF follows the same channel access rules. DCF is contention based, which means that each device "competes" with the other devices to gain access to the wireless medium. After contention is won, the STA can transmit a frame, or series of frames, depending on the access rules. Then the contention process resumes. As the original 802.11 network access method, DCF is the simplest channel access method; however, because it predates QoS, it lacks support for QoS. To maintain support for non-QoS devices in QoS-enabled networks, support for DCF is required in all 802.11 networks.

As an optional access method that may be used in addition to DCF, HCF was introduced to support QoS. HCF EDCA offers prioritized contention-based wireless medium access. In other words, EDCA provides a way to prioritize 802.11 traffic so that certain traffic types are statistically more or less likely to be transmitted first. This is done by classifying 802.11 traffic types by user priorities (UP) and access categories (AC), which are associated with more or less aggressive contention parameters, depending on the desired priority.

A user priority (UP) is a value associated with a medium access control (MAC) service data unit (MSDU) that indicates how the MSDU is to be handled. The UP is assigned to an MSDU in the layers above the MAC. There are eight UPs, and these eight UPs map to four WMM access categories.

An access category (AC) is a label for a set of parameters used by QoS stations to contend for priority access to the medium. There are four ACs.
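The eight UPs map to the four access categories as shown below, following the UP-to-AC mapping in the 802.11 standard. UPs 1 and 2 (background) rank below UP 0 (best effort, the default), so the numbering is not strictly ascending in priority. The function name is hypothetical.

```python
# UP-to-AC mapping as defined in IEEE 802.11 (derived from 802.1D priorities).
UP_TO_AC = {
    1: "AC_BK", 2: "AC_BK",  # background
    0: "AC_BE", 3: "AC_BE",  # best effort (UP 0 is the default)
    4: "AC_VI", 5: "AC_VI",  # video
    6: "AC_VO", 7: "AC_VO",  # voice
}


def access_category(up: int) -> str:
    """Return the WMM access category for an 802.11 user priority."""
    if up not in UP_TO_AC:
        raise ValueError("UP must be in the range 0-7")
    return UP_TO_AC[up]
```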

Contention Mechanisms

Both DCF and EDCA use the same basic contention mechanisms to arbitrate access to the wireless medium. We will look at these mechanisms in greater detail in this section, and we will discuss how differentiated priority is provided within EDCA.

Carrier Sense

To be friendly users of the shared frequency space in which they operate, Wi-Fi devices listen before transmitting. This process of listening is called carrier sense, and as the term implies, it is a way for stations to sense the activity on the medium. There are two modes of carrier sense: physical and virtual. Carrier sense works the same way with all channel access mechanisms.

Physical Carrier Sense—CCA There are actually two subtypes of Clear Channel Assessment (CCA)—carrier sense (CS) and energy detect (ED):

Carrier Sense (CS) The first type is simply called carrier sense (CS). CCA’s CS mechanism is used to monitor the RF domain for incoming WLAN traffic. In other words, when a STA is not transmitting, its receiver is always listening and ready to process modulated WLAN frames, beginning with the PHY preamble (the start of a WLAN frame). If a STA detects an incoming WLAN frame at an RF amplitude above its CCA CS threshold, the STA will identify the RF medium as “busy” and it will not contend for the medium or transmit a frame. If there are no incoming frames or the amplitude of an incoming frame is lower than the CCA CS threshold, the STA will perceive the wireless medium as “idle” and continue to freely contend for the medium and transmit frames if it wins contention.

Energy Detect (ED) The second type of CCA is called energy detect (ED). ED is similar to CCA CS, but instead of listening for WLAN frames, the ED busy/idle state is dependent on raw RF energy. For ED to indicate a “busy” medium, the RF energy on the wireless channel must be pretty substantial.

With either method (CS or ED), a “busy” medium will prevent the STA from attempting to win contention. During a busy medium, WLAN stations sit quietly and wait to begin “contending” again. As you can see, CCA is a bit like a permission slip for WLAN transmissions. When the medium is idle, STAs can contend and transmit frames. If the medium is busy, they must wait. CCA is a critical aspect of WLAN arbitration.

Physical Carrier Sense Threshold Values

As an example of the CCA thresholds for a specific PHY, let’s look at 802.11a details in Clause 17.2.10.5 (CCA sensitivity) of the 802.11-2007 specification:

CCA CS CCA indicates a busy medium when the start of a valid OFDM transmission is received at a level equal to or greater than the minimum modulation and coding rate sensitivity (−82 dBm).

CCA ED If the PHY preamble of the frame is missed, the CCA holds a busy medium for any signal 20 dB above the minimum modulation and coding rate sensitivity, which equates to −62 dBm.

Clause 17's CCA sensitivity is much higher (meaning a weaker signal can trigger a busy indication) when the frame's PHY preamble is received and processed correctly. The sensitivity threshold for other RF energy, including 802.11 frames whose preamble was missed as well as non-802.11 RF sources, is 20 dB lower, at −62 dBm. Thus, it takes a much stronger signal to indicate a busy medium when CCA ED is used instead of CCA CS.

A real-world example of this would be if an 802.11b/g/n client station were attempting to transmit a frame and a microwave oven in the area caused the 802.11 station to indicate a “busy” medium and cease transmitting. That is, if the microwave oven’s amplitude was measured by the wireless station at a level above its ED threshold, the station would not be able to transmit on the affected channel.
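The two 802.11a thresholds can be summarized as a simple decision function. This sketch is a simplification: real radios implement CCA in hardware, and thresholds differ by PHY and channel width.

The constant values come from the Clause 17 example above. The function name is hypothetical, and treating the ED threshold as "at or above" is an assumption.

```python
CCA_CS_THRESHOLD_DBM = -82.0  # valid OFDM preamble detected (802.11a example)
CCA_ED_THRESHOLD_DBM = -62.0  # preamble missed, or non-802.11 energy


def cca_busy(rx_power_dbm: float, preamble_detected: bool) -> bool:
    """Return True if physical carrier sense reports a busy medium."""
    if preamble_detected and rx_power_dbm >= CCA_CS_THRESHOLD_DBM:
        return True  # carrier sense: a decodable WLAN frame is on the air
    return rx_power_dbm >= CCA_ED_THRESHOLD_DBM  # energy detect
```

A −75 dBm WLAN frame with a detected preamble marks the medium busy. The same −75 dBm of microwave oven energy does not, though −60 dBm of it would.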

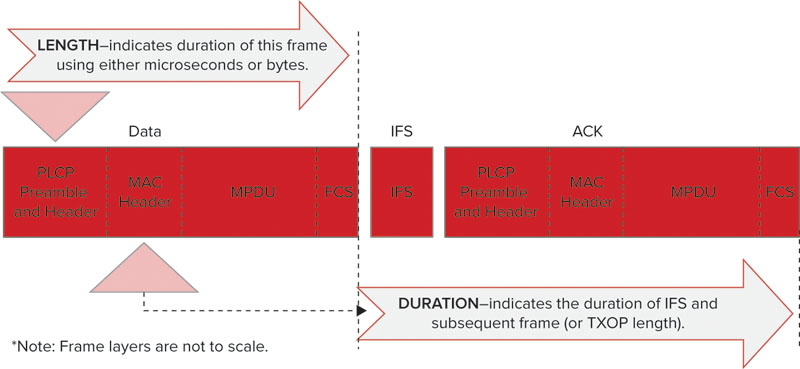

Virtual Carrier Sense—Network Allocation Vector (NAV) In addition to the physical carrier sense mechanisms, the 802.11 specification also defines a virtual carrier sense. Within the MAC header of WLAN frames is a field called the Duration field. This field carries a value that is used to indicate the duration of time before STAs can contend for the wireless medium again. To be completely accurate, the duration value covers the interframe space (required quiet period) after the frame in which it resides, as well as all remaining frames and interframe spaces that are a part of the current frame exchange or transmission opportunity. Figure 10.3 shows a simple frame exchange and the values that are used by the STA to virtually identify the busy/idle state of the wireless medium.

FIGURE 10.3 Purpose and location of the Duration field

When STAs process the Duration value, they set a network allocation vector (NAV) timer and count down that timer until it reaches zero. The NAV timer must equal zero for a STA to contend for the wireless medium and transmit a frame. The NAV timer is used in concert with the CCA to inform the STA of the status of the wireless medium, whether busy or idle.
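The following sketch models how the NAV and CCA work together. It follows the standard's rule that a STA updates its NAV from a received Duration value only when the new value is greater than the current NAV. It omits other details, such as ignoring frames addressed to the STA itself.

The class and function names are hypothetical, and time is advanced manually in microseconds.

```python
class VirtualCarrierSense:
    """Simplified NAV timer (hypothetical model)."""

    def __init__(self) -> None:
        self.nav_us = 0

    def on_duration(self, duration_us: int) -> None:
        # A received Duration value can extend the NAV but never shorten it.
        self.nav_us = max(self.nav_us, duration_us)

    def tick(self, elapsed_us: int) -> None:
        # Count the NAV down toward zero as time passes.
        self.nav_us = max(0, self.nav_us - elapsed_us)


def medium_idle(cca_busy: bool, nav: VirtualCarrierSense) -> bool:
    """The medium is idle only if physical CCA is idle and the NAV has expired."""
    return not cca_busy and nav.nav_us == 0


vcs = VirtualCarrierSense()
vcs.on_duration(300)  # overheard frame reserves 300 us
vcs.tick(100)
vcs.on_duration(50)   # smaller value is ignored; NAV stays at 200 us
```

Even when CCA reports an idle channel, this STA must keep waiting until the remaining 200 microseconds of NAV have expired.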

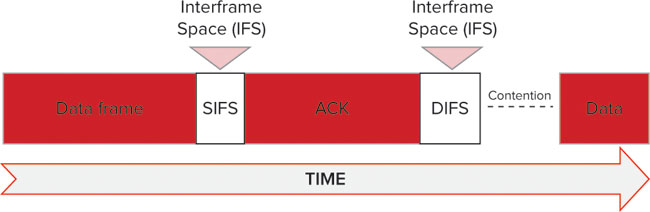

Interframe Spacing

Interframe spaces are an important part of the channel access rules that dictate when a STA can access the medium or begin contending for access. After a frame has been transmitted on the wireless medium, there must be a short idle period before another frame may be transmitted by any station. This idle period is called an interframe space (IFS) and is measured in microseconds (μs). Interframe spaces are used to provide granular, controlled access to the wireless medium. For example, some frames must be immediately acknowledged. Thus, before an ACK frame is transmitted, a short IFS (SIFS) is observed by the station sending the ACK. This ensures that the ACK frame takes priority over other frames, which require longer IFS intervals before transmission.

The length of an IFS depends on the frame being transmitted and the access method in use. There are six types of IFS:

- Short interframe space (SIFS)

- PCF interframe space (PIFS)

- DCF interframe space (DIFS)

- Arbitration interframe space (AIFS)

- Extended interframe space (EIFS)

- Reduced interframe space (RIFS)

For our discussion here, we will only look at three of the interframe spaces: SIFS, DIFS, and AIFS.

The basic concept of an IFS is shown in Figure 10.4, which shows a data frame, SIFS, and ACK. Following the ACK frame, a DIFS would be observed (assuming DCF); then contention would continue until the next station transmits a frame.

FIGURE 10.4 The basic concept of an interframe space

SHORT INTERFRAME SPACE (SIFS)

SIFS is used within both DCF and EDCA. In 802.11-2007, SIFS is the shortest of the IFS values and is used prior to ACK and clear to send (CTS) frames. However, 802.11n introduced an even shorter IFS (RIFS, which we will not discuss here). The purpose of SIFS is for stations to maintain control of the wireless medium during a frame exchange sequence that warrants priority. Because SIFS is a small amount of time, no other stations (which must use other, longer IFS) will be able to win contention and disturb an ongoing exchange.

In other words, SIFS is used as a priority interframe space once a frame exchange sequence has begun. It allows the participants of a frame exchange sequence to complete their conversation uninterrupted. This is true when multiple frames are transmitted within a transmission opportunity (TXOP) (as with frame bursting), and it is also true when a single frame is transmitted (as with typical Data-ACK exchanges).

DCF INTERFRAME SPACE (DIFS)

When a STA desires to transmit a data frame (MAC protocol data unit, or MPDU) or management frame (MMPDU) for the first time (not a retry) within a DCF network, the duration of a DIFS must be observed after the previous frame’s completion. As the IFS for DCF, a DIFS does not provide QoS differentiation. To provide relative equality in channel contention, DIFS values are the same for all STAs with similar PHY capabilities. The DIFS values are shown in Table 10.2.

TABLE 10.2 Calculations for IFS Values

ARBITRATION INTERFRAME SPACE (AIFS)

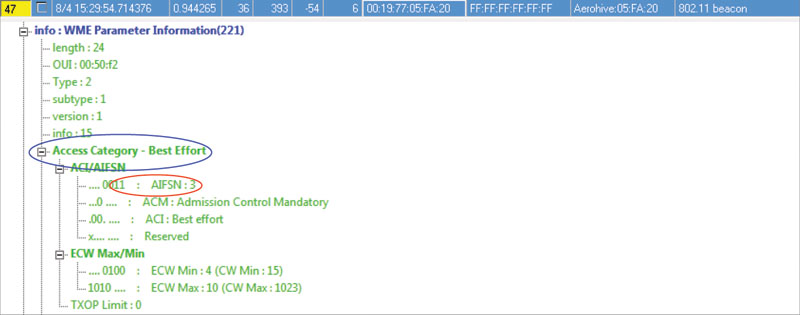

With EDCA, the basic contention logic is the same as with non-QoS networks, but EDCA introduces some notable differences to facilitate QoS differentiation. While DCF designates a single DIFS value for each PHY to use for contention, as shown in Table 10.2, EDCA establishes a unique IFS duration for each AC within a BSS. These per-AC IFS values are called AIFS, and because each AC has its own AIFS, it is written as AIFS[AC]. QoS STAs obtain TXOPs for a specific AC, so each STA contends for medium access with multiple ACs simultaneously. QoS stations use an AIFS interval when attempting to transmit data frames, management frames, and some control frames.

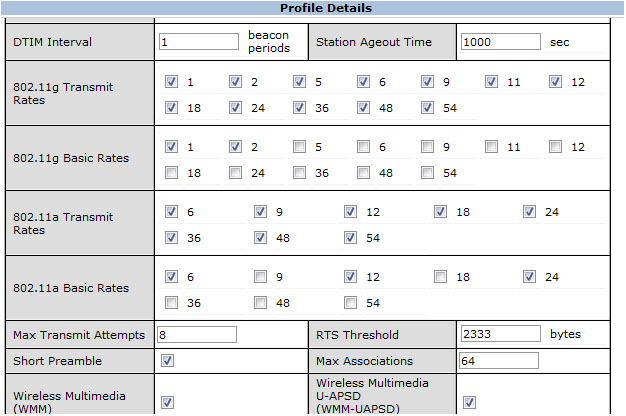

For improved control of QoS mechanisms, AIFS values are user-configurable. By default, QoS APs announce an EDCA parameter set in the Beacon frame that notifies stations in the BSS about these QoS values. When you change these values in the AP configuration, the AP will broadcast a different set of parameters to the BSS. These parameters have a direct impact on the amount of priority given to a specific AC.

Figure 10.5 shows a Beacon frame that includes the AIFSN value for the Best Effort AC. As the figure shows, these values can be found for each AC in the EDCA Parameter Set element within a Beacon frame. In Figure 10.5, one highlight shows that this is the best-effort AC, and the second shows that the AIFSN is currently set to 3, the default setting. The AIFSN value determines the duration of the AIFS[AC], as we will discuss next.

FIGURE 10.5 Default AIFSN value as seen in a Beacon frame

As a quick disclaimer, some of the calculations related to IFS values can be a bit intimidating at first. Other materials cover this topic in more depth, and we highly recommend that you study them.


For a more thorough and in-depth treatment of this topic, refer to the whitepaper titled “802.11 Arbitration,” authored by Marcus Burton, which is available at http://www.cwnp.com/pdf/802.11_arbitration.pdf.

An AIFSN (AIFS number) is a user-configurable value that determines how short or long an AIFS interval is. AIFSN values are set for each access category, giving each AIFS[AC] a shorter or longer duration in accordance with the desired priority of the AC. This is demonstrated by the formula used to calculate an AIFS[AC]:

AIFS[AC] = AIFSN[AC] × aSlotTime + aSIFSTime

These calculations can be made using the Slot Time and SIFS values from Table 10.2. The default values for each AIFSN[AC] are shown in Table 10.3 along with the resulting AIFS[AC] value for each PHY. By modifying the AIFSN[AC] shown in Table 10.3, administrators can manipulate the AIFS[AC] duration.

TABLE 10.3 Default AIFS Parameter Set

In comparison with Table 10.2, which shows the fixed SIFS and DIFS values, you can see in Table 10.3 that AIFS values are always longer than a SIFS for a given PHY. This ensures that SIFS-separated frame exchanges take priority.
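To make the AIFS formula concrete, the following minimal Python sketch computes AIFS[AC] for each default AIFSN and compares it with DIFS. Because Table 10.2 is not reproduced in this text, the slot and SIFS times below are commonly cited 802.11 values and should be treated as assumptions, as should the non-AP STA default AIFSNs (2, 2, 3, 7).

```python
# Sketch of the AIFS calculation: AIFS[AC] = AIFSN[AC] x aSlotTime + aSIFSTime.
# All times are in microseconds. The PHY timing values are commonly cited
# 802.11 values, assumed here because Table 10.2 is not reproduced.
PHY_TIMING_US = {
    "HR-DSSS (802.11b)": {"slot": 20, "sifs": 10},
    "ERP-OFDM, short slot (802.11g)": {"slot": 9, "sifs": 10},
    "OFDM (802.11a, 5 GHz)": {"slot": 9, "sifs": 16},
}

# Default WMM AIFSN values for a non-AP QoS STA (assumed defaults).
DEFAULT_AIFSN = {"AC_VO": 2, "AC_VI": 2, "AC_BE": 3, "AC_BK": 7}


def aifs_us(aifsn: int, slot_us: int, sifs_us: int) -> int:
    """AIFS duration for one access category."""
    return aifsn * slot_us + sifs_us


def difs_us(slot_us: int, sifs_us: int) -> int:
    """Non-QoS DCF interval: DIFS = aSIFSTime + 2 x aSlotTime."""
    return sifs_us + 2 * slot_us


if __name__ == "__main__":
    for phy, t in PHY_TIMING_US.items():
        per_ac = {ac: aifs_us(n, t["slot"], t["sifs"]) for ac, n in DEFAULT_AIFSN.items()}
        print(f"{phy}: DIFS={difs_us(t['slot'], t['sifs'])} us, AIFS={per_ac}")
```

With the 5 GHz OFDM values, this yields 34 μs for AC_VO and AC_VI (the same as DIFS), 43 μs for AC_BE, and 79 μs for AC_BK, so best effort waits one extra slot and background five extra slots before their backoff countdown can begin.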

Although much of this discussion about IFS has been academic, understanding these principles is relevant to network design. Figure 10.6 shows how the IFS values differ, and how a change in these values is directly related to a device’s ability to win contention and transmit frames.

FIGURE 10.6 Relationship of interframe spaces in contention framework

Backoff Timers

The concept of a backoff timer is fairly complex, and we will avoid all of the intricate details for this discussion. Instead, we will focus on the simple purpose of a backoff timer, some basics on its functionality, and how it is relevant to network design.

A random backoff timer is a way to add randomness to the 802.11 contention process to minimize collisions between competing stations. The backoff timer is a value that is randomly selected from a range of values called the contention window (CW). The CW is based on EDCA parameters that are specified by the 802.11 standard and are user-configurable for QoS control. Here’s how it works.

A STA randomly selects a backoff value from the CW. The backoff value represents the number of slot times (we will discuss this value in the next section) that must be observed with the wireless medium remaining idle before a STA can transmit a frame. So, if a STA selects a random backoff value of 7, it must wait seven slot times. The size of the CW varies in accordance with the PHY technology in use on a STA as well as the AC for QoS STAs. As you might guess, higher-priority ACs have smaller CWs, which gives them a higher probability of selecting a lower backoff value. For example, if you have a voice AC with a CW from 0–7 and you have a best-effort AC with a CW from 0–31, the voice AC is far more likely to select a lower backoff value than the best-effort AC. Lower backoff values represent a greater likelihood to win contention and transmit a frame.

The lower range of the CW is always 0. The upper range of the CW is based on one of two values: the CWmin and the CWmax. For the first attempt at a frame transmission, the CWmin value is used as the upper limit of the CW. For example, if the CWmin is 15, the CW for the first attempt at a frame transmission would be 0–15. If a collision occurs and a frame must be retransmitted, the upper limit of the CW increases exponentially for each retry until this value reaches the CWmax, as shown in Figure 10.7. In other words, collisions and retries are bad. Collisions require that STAs wait longer (statistically) for each subsequent attempt at a frame transmission. This helps us to see why high retry rates in latency-sensitive environments can be problematic for application performance.

FIGURE 10.7 Relationship between retries and a CWmax

Just to reiterate, the CW is the range of values from which a STA randomly chooses a backoff value. This backoff value is equal to the number of slot times the transmission medium must be idle before the STA can transmit a frame.
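The CW growth and backoff selection described above can be sketched in a few lines of Python. This is a simplified illustration, not a full EDCA state machine: it only shows how the upper bound of the CW grows after each failed attempt until it reaches CWmax, and how a backoff value is drawn from 0 to that bound. The example values (CWmin 15, CWmax 1023) are assumed typical AC_BE defaults for OFDM PHYs.

```python
import random


def contention_windows(cw_min: int, cw_max: int, attempts: int) -> list[int]:
    """Upper CW bound used for each successive transmission attempt.

    The first attempt uses CWmin. After each failed attempt the window grows as
    CW = 2 * (CW + 1) - 1, capped at CWmax. (After a successful transmission the
    CW is reset to CWmin, which this sketch does not model.)
    """
    windows = []
    cw = cw_min
    for _ in range(attempts):
        windows.append(cw)
        cw = min(2 * (cw + 1) - 1, cw_max)
    return windows


def pick_backoff(cw: int, rng: random.Random) -> int:
    """Draw a backoff value (in slot times) uniformly from 0..cw, inclusive."""
    return rng.randint(0, cw)


if __name__ == "__main__":
    rng = random.Random(7)
    # Example values: CWmin = 15, CWmax = 1023 (typical AC_BE defaults for OFDM PHYs).
    for attempt, cw in enumerate(contention_windows(15, 1023, 8), start=1):
        print(f"attempt {attempt}: CW = 0-{cw}, backoff drawn = {pick_backoff(cw, rng)}")
```

For these values the window sequence is 15, 31, 63, 127, 255, 511, 1023, 1023, which shows why repeated retries quickly push a frame toward much longer expected waits.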

So, as we mentioned in passing before, the CW represents a statistical probability for priority. ACs or STAs with smaller CWs are more likely to select lower backoff values and thus win contention more frequently. Higher-priority ACs, such as those for voice and video, have small CWs. To make this concept more tangible, Table 10.4 shows the CW parameters for an 802.11n STA using default EDCA parameters.

TABLE 10.4 802.11n STA Default Contention Window Values

As you can see from Table 10.4, AC_VO and AC_VI have a significantly higher priority than AC_BE and AC_BK due to a lower AIFSN[AC] and smaller CWmin and CWmax values. As this plays out in the arbitration process, high-priority ACs are far more likely to win contention than low-priority ACs and STAs.
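As a rough illustration of how these parameters combine, the sketch below derives the default per-AC CWmin and CWmax from a PHY’s aCWmin and aCWmax (15 and 1023 are assumed here, as for OFDM/HT PHYs) and estimates the average idle time before a first transmission attempt. The estimate assumes an otherwise idle medium and ignores frozen countdowns and competing traffic, so it is a simplified comparison, not a prediction of real access delay.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EdcaParams:
    aifsn: int
    cw_min: int
    cw_max: int


def default_edca(a_cw_min: int = 15, a_cw_max: int = 1023) -> dict[str, EdcaParams]:
    """Default EDCA parameters for a non-AP QoS STA, derived from the PHY's
    aCWmin and aCWmax (15 and 1023 are the OFDM/HT values assumed here)."""
    half = (a_cw_min + 1) // 2 - 1     # 7 when aCWmin = 15
    quarter = (a_cw_min + 1) // 4 - 1  # 3 when aCWmin = 15
    return {
        "AC_BK": EdcaParams(aifsn=7, cw_min=a_cw_min, cw_max=a_cw_max),
        "AC_BE": EdcaParams(aifsn=3, cw_min=a_cw_min, cw_max=a_cw_max),
        "AC_VI": EdcaParams(aifsn=2, cw_min=half, cw_max=a_cw_min),
        "AC_VO": EdcaParams(aifsn=2, cw_min=quarter, cw_max=half),
    }


def mean_first_attempt_wait_us(p: EdcaParams, slot_us: int, sifs_us: int) -> float:
    """Average idle time before a first attempt, assuming an otherwise idle medium:
    AIFS plus the mean of a uniform backoff drawn from 0..CWmin slots."""
    aifs = p.aifsn * slot_us + sifs_us
    return aifs + (p.cw_min / 2) * slot_us


if __name__ == "__main__":
    for ac, p in default_edca().items():
        wait = mean_first_attempt_wait_us(p, slot_us=9, sifs_us=16)
        print(f"{ac}: AIFSN={p.aifsn}, CW {p.cw_min}-{p.cw_max}, mean wait {wait} us")
```

With 9 μs slots and a 16 μs SIFS, the average first-attempt wait is about 47.5 μs for AC_VO versus 110.5 μs for AC_BE. Passing `a_cw_min=31` gives the corresponding windows for a PHY with a larger aCWmin, such as DSSS.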

Slot Times

As we discussed previously, a slot time is a PHY-specific time value, measured in microseconds (μs), that is used as a part of the arbitration process. Specifically, the random backoff value reflects the number of slot times that must be observed before a frame may be transmitted. So, a lower slot time reflects a contention advantage over other devices with higher slot times. Refer to Table 10.2 to see the slot times for each PHY. As you can see from Table 10.2, legacy PHYs have higher slot times than modern PHYs.
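The effect of slot time on backoff is simple multiplication, as this small sketch shows. The 20 μs and 9 μs slot times are assumed values for a long-slot legacy PHY and a short-slot OFDM PHY; check Table 10.2 for the values used in this chapter.

```python
# Assumed slot times in microseconds (see Table 10.2 for the chapter's values).
LONG_SLOT_US = 20   # long slot time, e.g., DSSS/HR-DSSS (802.11b)
SHORT_SLOT_US = 9   # short slot time, e.g., OFDM-based PHYs


def backoff_duration_us(backoff_slots: int, slot_us: int) -> int:
    """Idle time a countdown of backoff_slots needs before the frame can be sent."""
    if backoff_slots < 0:
        raise ValueError("backoff must be non-negative")
    return backoff_slots * slot_us


def mean_backoff_us(cw: int, slot_us: int) -> float:
    """Average backoff for a uniform draw from 0..cw slots."""
    return (cw / 2) * slot_us


if __name__ == "__main__":
    for name, slot in (("long slot", LONG_SLOT_US), ("short slot", SHORT_SLOT_US)):
        print(f"{name}: backoff of 7 = {backoff_duration_us(7, slot)} us, "
              f"mean for CW 0-15 = {mean_backoff_us(15, slot)} us")
```

The same backoff value of 7 costs 140 μs with a 20 μs slot but only 63 μs with a 9 μs slot.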

Implications for WLAN Design

Let’s now look at some of the ways in which we can apply this knowledge at a high level:

Contention Domains and Probabilistic Contention The first obvious fact about wireless networks is that they operate within a shared contention domain. Contention domains can be segmented—generally speaking—by isolating devices physically, by using nonoverlapping channels, and by shaping RF propagation with antennas and transmit power configurations. Operating within the constraints of 802.11 arbitration, we must use these controllable design elements to maximize capacity and minimize contention within the RF domain. As the number of devices within a contention domain increases, collisions will inevitably increase. Of course, a modest number of collisions and retries is acceptable and normal, but in excess, collisions and retries can bring application performance to unacceptable levels.

Standardized WLAN arbitration is probabilistic. This means that QoS only provides a statistical advantage for some devices to access the network; it does not provide guaranteed priority. Based on that knowledge, you should approach network design by assessing the load that will be placed on your network and the mission-criticality of sensitive applications. If voice is the most important application on your WLAN, you may want to consider taking extra steps toward isolating VoWiFi in its own contention domain or ensuring that the statistical priority is sufficient for application performance requirements. Many experts in the field recommend deploying latency-sensitive applications like voice in an entirely different frequency band than data. This is an option, but it may lead to other problems, such as what to do with devices that carry both data and voice (such as smartphones and laptops with softphones). Also, the advances of 802.11n in 5 GHz are most beneficial to data applications, whereas voice applications enjoy only a modest benefit. Further, if you prioritize voice applications by supporting them in 5 GHz, your data applications will likely see a performance impact if they are relegated to 2.4 GHz. If there is a clear priority for one application over another, this may be a viable option, but in most cases, several applications are mission-critical, which prevents complete isolation of contention domains by application.

SSIDs and Contention Domains As you assess the options for segmenting applications into different contention domains, remember that SSIDs do not divide a network into different RF domains. All SSIDs supported on a radio operate within the same contention domain. SSIDs are one way to control the access rules by which client devices compete for the medium (such as by preventing certain access categories, or by supporting proprietary access control mechanisms like airtime fairness), but SSID “segmentation” has limited benefit to wireless contention.

Customizing EDCA Parameter Sets While the standardized set of EDCA parameters is usually sufficient for WLAN QoS, some networks may require fine-tuning of these parameters. Thankfully, most WLAN infrastructure vendors allow administrators to change these settings, so you can provide greater differentiation between low- and high-priority access categories.

Channel Capacity and User Density Designers should also consider AP-to-client load expectations with arbitration in mind. When there is a high number of high-priority devices (such as VoWiFi phones), designers should try to minimize the load on each AP by decreasing AP coverage areas, performing load balancing, or using other load management practices. The traffic patterns of some low-latency, high-priority applications can be demanding at high user densities, because these applications transmit frames frequently and observe short contention periods. For this reason, a single AP can typically support fewer of these devices. When CWs range from 0–3 (the default for AC_VO) and devices attempt to access the medium frequently, the likelihood that two devices will select the same backoff value and transmit at the same time increases dramatically. This may lead to a significant number of collisions when client density is too high, which is why WLAN voice client vendors typically publish a recommended maximum client density for each AP.
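To see why small CWs and high densities interact badly, the following sketch computes, under a deliberately simplified model, the probability that the earliest chosen backoff slot is picked by two or more stations. The model is an assumption: all n stations start counting down together with the same AIFS, each draws uniformly from 0 to CW, and retries and CW growth are ignored.

```python
from fractions import Fraction


def first_slot_collision_probability(n: int, cw: int) -> Fraction:
    """Probability that two or more of n stations pick the earliest chosen backoff slot.

    Simplified model (an assumption, not the full 802.11 state machine): all n
    stations begin counting down at the same instant with the same AIFS, and each
    draws a backoff uniformly from 0..cw.
    """
    if n < 2:
        return Fraction(0)
    w = cw + 1  # number of possible backoff values
    success = Fraction(0)
    for k in range(w):
        # Exactly one station picks slot k and every other station picks a later slot.
        success += n * Fraction(1, w) * Fraction(w - 1 - k, w) ** (n - 1)
    return 1 - success


if __name__ == "__main__":
    for n in (2, 5, 10, 20):
        vo = float(first_slot_collision_probability(n, 3))    # AC_VO CWmin
        be = float(first_slot_collision_probability(n, 15))   # AC_BE CWmin
        print(f"{n:2d} stations: CW 0-3 -> {vo:.2f}, CW 0-15 -> {be:.2f}")
```

Even with only two contenders, a 0–3 window collides one time in four in this model, versus one time in sixteen for a 0–15 window, and the gap widens as more stations contend.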

WLAN QoS: WMM and 802.11e

The WLAN QoS mechanisms described in the previous section and later sections were introduced to 802.11 WLANs by the 802.11e amendment, which is now a part of 802.11-2007. However, in the real world, only a subset of 802.11e functionality has been implemented by vendors. The Wi-Fi Alliance introduced a specification based on 802.11e QoS, called Wi-Fi Multimedia (WMM). WMM represents the functionality we see in the real-world implementations.

The Wi-Fi Alliance’s WMM knowledgebase can be found at www.wi-fi.org/knowledge_center/wmm. Some of this content requires a Wi-Fi Alliance membership.

As a subset of 802.11e, WMM makes implementation of WLAN QoS easier for infrastructure and client vendors. As with other Wi-Fi Alliance specifications, a certification program validates device compliance with WMM functionality. Device certification is an important assurance for device selection and for planning QoS-enabled WLANs. Today, all 802.11n certified devices support WMM.

In the wireless domain, WMM should be supported by both the AP and the client for maximum effectiveness; however, support on both sides is not strictly required. In a QoS BSS, non-QoS traffic is assigned best effort (AC_BE) priority. Most WLAN vendors’ APs can still perform downstream QoS even if the recipient client does not support WMM. Remember, 802.11 QoS is largely designed to provide prioritized access to the transmission medium, so even downlink-only QoS will help some applications. If an application requires bidirectional prioritization, as with most voice implementations, performance will suffer if the client does not support WMM. Applications like unidirectional video will not be as noticeably impacted if WMM is supported only on the downlink. WMM admission control requires WMM support on both the client and the AP. Similarly, many facets of WMM are lost when either the client or the AP does not support it.

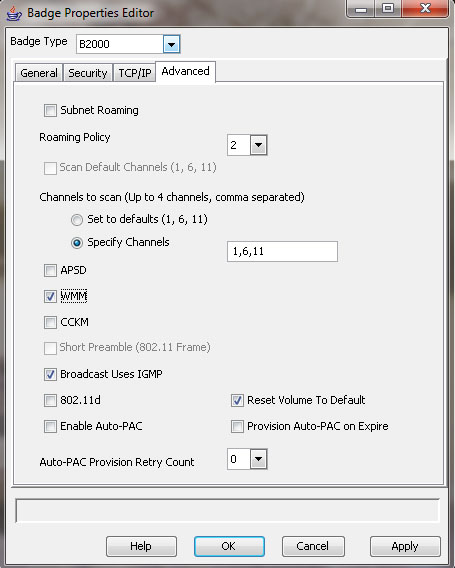

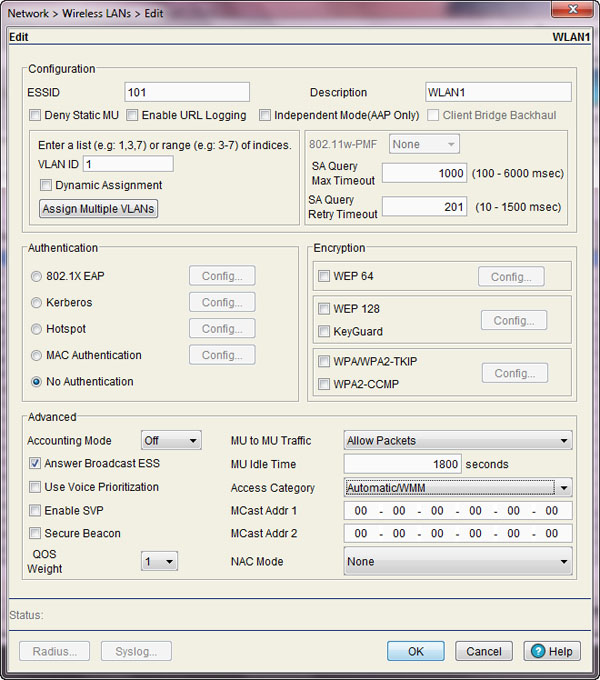

In many client devices supporting WMM, very few configuration parameters are provided. In some cases, there aren’t any client configuration variables. In others, configuration is limited to enabling or disabling WMM, as is shown in the Vocera badge configuration utility in Figure 10.8. In that regard, the client application and software driver dictate the operation of a specific NIC.

FIGURE 10.8 WMM configuration options for Vocera VoWiFi badges

There are many important aspects to WMM operation, so we will explore them in the following sections.

WMM QoS Classification

In our introduction to QoS in the WLAN arbitration section, we mentioned that there are eight user priorities (UPs) and four access categories (ACs). As data is passed from an application down the protocol stack within a STA, it is classified with a UP in accordance with the coding of the application. This UP is passed to the MAC layer for classification and queuing.

Because of the relationship between WLANs and Ethernet LANs, WMM classification is correlated with 802.1p (now part of 802.1D) UPs. We will look briefly at 802.1p UPs later in the section entitled “QoS in Wired Networks.” In WMM, these UP classifications are mapped to four ACs, which possess their own queues and access parameters. The UP-to-AC mapping and naming conventions are shown in Table 10.5. To preserve a frame’s UP value when it is translated to different media types, WMM stations also include the UP (which is more granular than the AC) in the QoS Control field of transmitted QoS frames. The QoS Control field enables the receiving STA (usually an AP) to read the UP classification and then properly mark the frame when it is translated and transmitted onto the wired medium. 802.11 management frames do not include the QoS Control field; they are transmitted using AC_VO access parameters on the wireless medium and are not forwarded to the wired interface.

TABLE 10.5 Relationship Between 802.11 Access Categories and 802.1p User Priorities

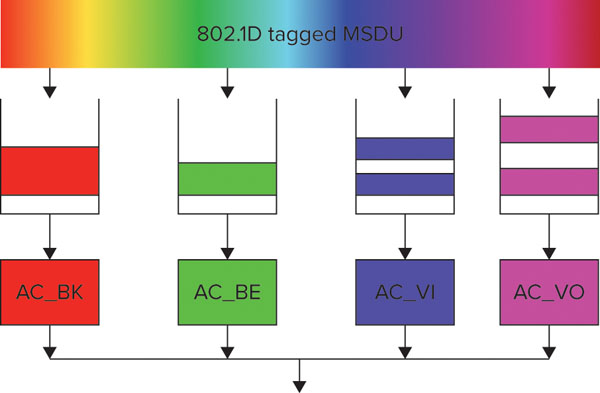
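The classification rules in the preceding paragraph can be sketched as a small lookup. The UP-to-AC mapping is the standard WMM mapping; the `frame_kind` string and function names are hypothetical conveniences for illustration, and the QoS Control helper assumes the TID occupies the low four bits of the field.

```python
from typing import Optional

# WMM mapping of 802.1D user priorities (UP 0-7) to access categories.
UP_TO_AC = {
    1: "AC_BK", 2: "AC_BK",
    0: "AC_BE", 3: "AC_BE",
    4: "AC_VI", 5: "AC_VI",
    6: "AC_VO", 7: "AC_VO",
}


def tid_from_qos_control(qos_control: int) -> int:
    """Return the TID subfield (bits 0-3) of a 16-bit QoS Control field.
    For EDCA traffic, the TID carries the UP (values 0-7)."""
    return qos_control & 0x0F


def access_category_for(frame_kind: str, up: Optional[int] = None) -> str:
    """Pick the AC whose contention parameters a frame uses (hypothetical helper)."""
    if frame_kind == "management":
        # Management frames carry no QoS Control field and use AC_VO parameters.
        return "AC_VO"
    if up is None:
        # Non-QoS traffic in a QoS BSS is treated as best effort.
        return "AC_BE"
    if up not in UP_TO_AC:
        raise ValueError(f"user priority must be 0-7, got {up}")
    return UP_TO_AC[up]
```

Note that UP 0 (best effort) ranks above UPs 1 and 2 (background), which is why the mapping is not simply ordered by numeric value.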

Each of the four ACs specified by WMM has its own contention parameters and frame queues. The intra-station contention and queuing concept is shown in Figure 10.9.

FIGURE 10.9 WMM queuing within a STA

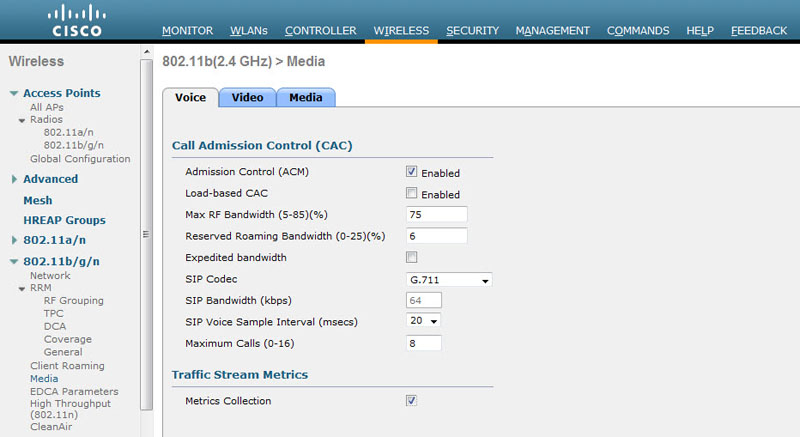
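Because each AC within a STA runs its own backoff, two ACs in the same station can reach zero in the same slot. In that case the higher-priority AC gets the TXOP, and the lower-priority AC behaves as if it had collided. The sketch below models only that internal resolution step; the class and method names are hypothetical.

```python
from collections import deque
from typing import Optional

# Access categories ordered from highest to lowest priority.
AC_PRIORITY = ["AC_VO", "AC_VI", "AC_BE", "AC_BK"]


class StationAcQueues:
    """Per-AC transmit queues inside one QoS STA (illustrative sketch)."""

    def __init__(self) -> None:
        self.queues = {ac: deque() for ac in AC_PRIORITY}

    def enqueue(self, ac: str, frame: str) -> None:
        self.queues[ac].append(frame)

    def resolve_internal_collision(self, expired: set[str]) -> tuple[Optional[str], list[str]]:
        """Given the ACs whose backoff counters reached zero in the same slot,
        return (winner, losers). The highest-priority AC with queued traffic
        gets the TXOP; each loser would then back off as if it had collided
        (doubling its CW), which this sketch leaves to the caller."""
        contenders = [ac for ac in AC_PRIORITY if ac in expired and self.queues[ac]]
        if not contenders:
            return None, []
        return contenders[0], contenders[1:]
```

For example, if AC_BE and AC_VO both expire at once, AC_VO transmits and AC_BE must draw a new backoff.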

Admission Control and Traffic Streams

Admission control is a configurable option in which stations must request permission prior to using an AC. Support for admission control is indicated by use of an “admission control mandatory” bit, so you will often see the abbreviation ACM referring to admission control. In busy network environments, when too many users are attempting to access the network resources at the same time, quality will suffer for everyone. The purpose of admission control is for the AP to maintain control of the use of network resources so as to preserve application quality for the maximum number of stations. Clients must check in with the AP to see if an acceptable amount of resources is available. The AP’s admission control algorithms are vendor specific and depend on channel capacity, RF link conditions, network performance indicators (errors, retransmissions, etc.), and other criteria. Some user-defined admission control parameters are also commonly used, such as the number of permitted calls and maximum bandwidth allocation; these configuration elements are shown for a Cisco 5508 WLAN controller in Figure 10.10. Figure 10.11 shows a Beacon frame with admission control disabled for all ACs.

FIGURE 10.10 Cisco admission control settings for voice traffic

FIGURE 10.11 Admission Control disabled for all ACs

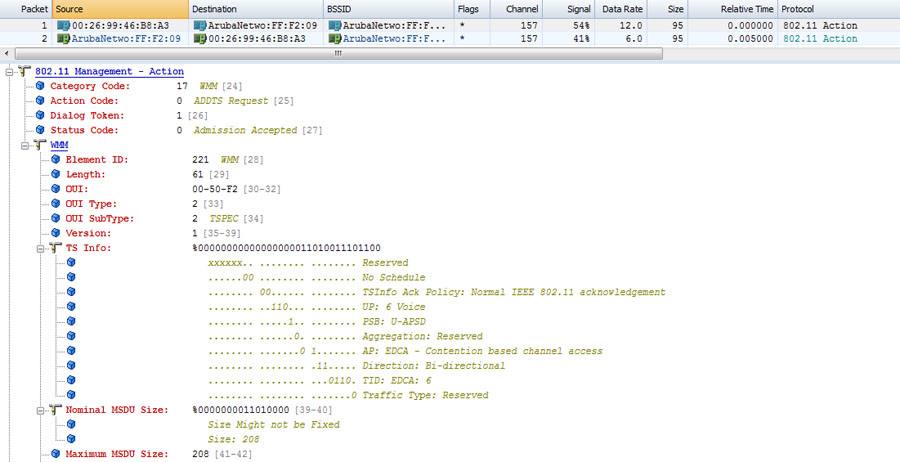

If admission control is enabled, associated clients seek the AP’s permission to use a network resource by sending an ADDTS (short for Add Traffic Stream) Request Action frame to the AP. ADDTS frames are designed to create new traffic streams (TSs) with custom service parameters. Within an ADDTS is a TSPEC (short for Traffic Specification) element, which is a set of parameters that classify the performance requirements of a traffic stream. A sample ADDTS Request frame is shown in Figure 10.12.

FIGURE 10.12 ADDTS Request frame

The AP processes the ADDTS Request (by using the information in the TSPEC element), compares it with the admission control algorithms, and either accepts, rejects, or modifies the TSPEC information. The AP’s response is sent in an ADDTS Response frame, which informs the client how to proceed. A sample ADDTS Response frame is shown in Figure 10.13. If clients are joining a BSS, a TSPEC can be established by means of a TSPEC element in (re)association request frames. The AP’s response to the TSPEC comes in the (re)association response frame. The details of admission control processes are beyond the scope of the CWDP exam, but proper design always requires that designers understand the technology and processes that underlie the deployment.

FIGURE 10.13 ADDTS Response frame

Even when admission control is not required, traffic streams with custom TSPEC parameters may still be established. If the upper layers of a STA determine that a set of frames requires some specific treatment beyond the basic set of contention parameters defined for an AC, the upper layers can indicate this requirement in the instructions to the MAC. The STA then follows the same steps described in the previous paragraph to set up a TSPEC to meet the traffic stream’s performance requirements.
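Since AP admission control algorithms are vendor specific, the following is only a hypothetical sketch of the kind of bookkeeping an AP might do when it receives ADDTS and DELTS requests. It mirrors the two user-defined limits mentioned earlier (a maximum number of calls and a bandwidth cap), expressed here as an airtime budget. The `TspecRequest` type is a heavily simplified stand-in for a real TSPEC element, and the sketch does not model the AP’s option to modify a TSPEC.

```python
from dataclasses import dataclass, field


@dataclass
class TspecRequest:
    """Hypothetical, heavily simplified stand-in for the TSPEC in an ADDTS Request."""
    tsid: int
    airtime_us_per_s: int  # requested medium time per second, in microseconds


@dataclass
class HypotheticalAdmissionControl:
    """Illustrative budget-based admission control; real AP algorithms are vendor specific."""
    airtime_budget_us_per_s: int  # e.g., share of each second reserved for admitted streams
    max_streams: int              # e.g., a "number of permitted calls" setting
    admitted: dict[int, int] = field(default_factory=dict)

    def handle_addts(self, req: TspecRequest) -> str:
        """Return 'accepted' or 'rejected' for an ADDTS Request."""
        if len(self.admitted) >= self.max_streams:
            return "rejected"
        if sum(self.admitted.values()) + req.airtime_us_per_s > self.airtime_budget_us_per_s:
            return "rejected"
        self.admitted[req.tsid] = req.airtime_us_per_s
        return "accepted"

    def handle_delts(self, tsid: int) -> None:
        """Release the airtime of a deleted traffic stream (DELTS)."""
        self.admitted.pop(tsid, None)
```

A request is refused either because the stream count is exhausted or because admitting it would exceed the airtime budget; removing a stream frees capacity for the next request.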

Identifying Traffic for Prioritization

When the upper layers of a station have data to send, they pass the data down the protocol stack along with instructions that tell the MAC layer about the data to be transmitted. In the instructions from the Logical Link Control (LLC) to the MAC, each MSDU includes a traffic identifier (TID), as determined by the upper layers. The TID indicates the type of treatment desired for a specific MSDU. TID values range from 0–15; however, only TID values 0–7 are currently used with EDCA. TID values 8–15 correspond to HCCA (or HEMM) traffic streams. The information sent from the upper layers also indicates whether additional prioritization measures are required for the set of MSDUs. These additional measures would call for a unique set of traffic requirements, as defined within a TSPEC.
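The TID ranges described above reduce to a simple classification rule. This minimal sketch (the function name is hypothetical) encodes only what the paragraph states.

```python
def classify_tid(tid: int) -> str:
    """Classify an MSDU's traffic identifier:
    TIDs 0-7 are user priorities handled by EDCA; 8-15 identify traffic
    streams used with HCCA or HEMM."""
    if not 0 <= tid <= 15:
        raise ValueError(f"TID must be 0-15, got {tid}")
    if tid <= 7:
        return "EDCA user priority"
    return "HCCA/HEMM traffic stream"
```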

Assigning QoS Policies

Within a typical WLAN infrastructure, QoS policies may define traffic classifications, admission control parameters, bandwidth contracts, scheduling policies, and more. These QoS policies are then tied either to SSIDs for ESS-wide QoS policy enforcement or to groups and users for more granular QoS. AAA attributes, or other user-based authentication methods, are typically used to assign a QoS profile to a user or group. As you plan your method of QoS application, realize that user-based QoS is far more granular and allows for more differentiated services within a service set. However, applying QoS directly to a WLAN profile (SSID) may reflect an easier administrative approach. The decision ultimately comes down to the way in which you are dividing your traffic and service types up within the WLAN profiles.

QoS in Wired Networks

After all of our discussion about WLAN QoS, we must remember that WLAN QoS is useless if it isn’t translated to the wired network. For that reason, if we’re to understand wireless QoS, we must also understand at least the basics of wired QoS.

In general, WLANs are an extension of 802.3 Ethernet LANs that support the IP protocol at Layer 3. With that in mind, we will provide a quick overview of the predominant QoS protocols and considerations that are relevant to those medium types.

The Difference Between Wired and Wireless QoS

We should point out one significant difference between wired and wireless QoS. It is a common practice in wired network design to gratuitously overprovision networks so that there are enough resources to accommodate the expected traffic load without stressing the network’s capacity. In any network with sufficient resources, throughput, latency, jitter, and loss requirements are likely to be met for most applications if the network is not being overly taxed. In practice, overprovisioning usually adds cost to a network deployment and is embraced as a tactic to avoid the complexities of configuring systemwide QoS. Unfortunately, overprovisioning is not an option with WLANs, as bandwidth is limited by virtue of a crowded, shared contention domain; therefore, end users are forced to use the available resources with utmost discrimination. That discrimination leads us to the necessity of QoS.

802.1D, 802.1Q, and 802.1p Standards

802.11 WLANs may be deployed into many different types of wired networks, and the overwhelming majority of deployments are as an extension of 802.3 Ethernet LANs. End-to-end QoS implementations are heavily dependent on compatibility of standardized QoS protocols between different media types, so the IEEE 802.1 working group specifies standards that are intended to facilitate compatibility between different 802.x mediums, such as 802.11 and 802.3. 802.x standards are primarily MAC-layer protocols, and these protocols are an important part of QoS homogeneity across an enterprise. For the wired QoS discussion with Ethernet, 802.1p UPs are our primary topic of interest, but we should briefly discuss 802.1D and 802.1Q before looking specifically at 802.1p.

802.1D The 802.1D standard defines IEEE MAC bridging. The original 802.1D standard did not specify a way for bridged networks to signal user priority within a frame, so 802.1p was specified as an addition to 802.1D for that purpose.

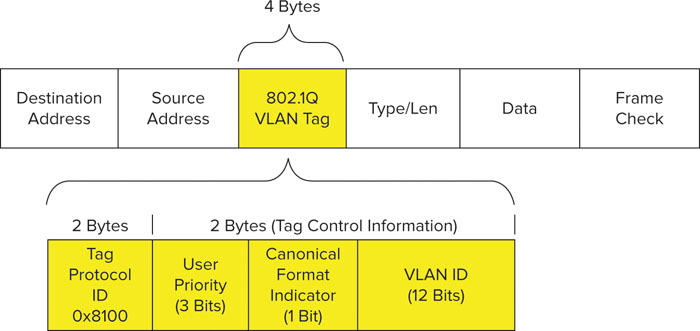

802.1Q 802.1Q is a standard that defines virtual LANs within the context of the bridging framework defined by 802.1D. At this point, you may be asking what VLANs have to do with QoS. The answer is that they don’t really have much to do with QoS, but 802.1Q modifies MAC frame formats in such a way that 802.1p user priorities can be included in the modified frames. 802.1Q provides VLAN support by extending the MAC header of 802 frames with a 4-byte (32-bit) field, which includes a VLAN identifier. 802.1p was developed separately from 802.1Q, but 802.1p is used as a part of 802.1Q frame expansion. Specifically, 3 of the 32 bits that are added to an 802.1Q-expanded frame are used for the 802.1p user priority, also known as a priority code point (PCP). For a reference, the 802.1Q expansion field is shown in Figure 10.14.

FIGURE 10.14 802.1Q frame expansion

802.1p As you can see in Figure 10.14, the PCP (User Priority, in the figure) subfield is 3 bits in length, which accommodates values from 0 to 7. The eight PCP subfield values are 802.1p UPs, and correspond to different classes of service (CoSs), which are also known as traffic categories. These eight UPs are shown in Table 10.6 with their corresponding traffic type. As we discussed earlier, these eight UPs are mapped to 802.11 AC classifications.

TABLE 10.6 802.1p User Priority Classifications

| User Priority | Acronym | Traffic Type |
|---|---|---|
| 1 | BK | Background |
| 2 | -- | Spare |
| 0 (Default) | BE | Best Effort |
| 3 | EE | Excellent Effort |
| 4 | CL | Controlled Load |
| 5 | VI | Video |
| 6 | VO | Voice |
| 7 | NC | Network Control |

IEEE Std 802.11-2007, Copyright 2007 IEEE. All rights reserved.
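The 802.1Q tag layout in Figure 10.14 can be illustrated by packing and unpacking the 4-byte tag. This sketch uses Python’s `struct` module: a 16-bit Tag Protocol Identifier (0x8100) followed by a 16-bit Tag Control Information field holding the 3-bit PCP (the 802.1p UP), a 1-bit flag (CFI in older texts, DEI in current ones), and the 12-bit VLAN ID. The function names are illustrative.

```python
import struct

TPID_8021Q = 0x8100  # Tag Protocol Identifier for an 802.1Q tag


def build_dot1q_tag(pcp: int, vid: int, dei: int = 0) -> bytes:
    """Build the 4-byte 802.1Q tag: 16-bit TPID, then a 16-bit TCI made of
    PCP (3 bits, the 802.1p UP), DEI (1 bit, formerly CFI) and VID (12 bits)."""
    if not 0 <= pcp <= 7:
        raise ValueError("PCP must be 0-7")
    if dei not in (0, 1):
        raise ValueError("DEI must be 0 or 1")
    if not 0 <= vid <= 0x0FFF:
        raise ValueError("VID must be 0-4095")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", TPID_8021Q, tci)


def parse_dot1q_tag(tag: bytes) -> dict[str, int]:
    """Decode a 4-byte 802.1Q tag into its PCP, DEI and VID subfields."""
    tpid, tci = struct.unpack("!HH", tag)
    if tpid != TPID_8021Q:
        raise ValueError(f"not an 802.1Q tag (TPID 0x{tpid:04x})")
    return {"pcp": tci >> 13, "dei": (tci >> 12) & 0x1, "vid": tci & 0x0FFF}
```

A tag for UP 5 on VLAN 100 encodes as the bytes 81 00 A0 64. These 4 bytes are the reason 802.3ac raised the maximum Ethernet frame size from 1518 to 1522 bytes.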

Keeping 802.x Standards Organized

It can be a challenge to keep the myriad 802.x standards organized. The numbering and lettering formats can get confusing. While we don’t have a quick and easy way to keep it all organized, repetition may be helpful. As it relates to 802.x standards, let’s review:

802.1D Specifies IEEE MAC Bridging. 802.1p was added to the 802.1D standard.

802.1Q A standard that defines virtual LANs within the framework of the 802.1D Bridging standard.

802.1p Defines eight UPs, which are signaled in a 3-bit priority field that is a part of 802.1Q-tagged Ethernet frames. Despite its relationship to 802.1Q, 802.1p was written as an addition to the 802.1D standard, and was rolled into 802.1D in 1998.

802.3ac An amendment to the Ethernet (802.3) standard that expanded the maximum frame size from 1518 to 1522 bytes. This change accommodates the 4 additional bytes of the 802.1Q tag, which includes the 3-bit PCP (802.1p) CoS field.

802.11e An amendment to the 802.11 standard; defines QoS for WLANs. 802.11e was rolled into 802.11-2007.

DiffServ Protocols

All of the QoS protocols we’ve discussed thus far have been MAC-layer protocols. At the network layer, DiffServ is the primary QoS mechanism used in today’s IP-based networks. To meet the needs of a highly diverse set of IP networks, DiffServ is designed to be very flexible.

DiffServ Resources

The IETF defines DiffServ and provides additional help in a number of RFCs, as the following list shows. A simple Internet search will also yield a number of informative articles and links about DiffServ.

RFC 2474 Definition of the Differentiated Services field (DS field) in the IPv4 and IPv6 headers

RFC 2475 An architecture for Differentiated Services

RFC 2597 An assured forwarding PHB group

RFC 3140 Per-hop behavior identification codes (obsoletes RFC 2836)

RFC 3246 An expedited forwarding PHB (obsoletes RFC 2598)

RFC 3260 New terminology and clarifications for DiffServ

RFC 4594 Configuration guidelines for DiffServ service classes

DiffServ classification can be performed in a number of ways, including by IP source or destination address, by network service, or, most commonly, by the Differentiated Services Code Point (DSCP) bits in the IP packet header. Classification assigns each packet to a traffic class, which is associated with policies that determine the services provided to that traffic class. The frame handling policies for a traffic class are referred to as per-hop behaviors (PHBs). The IETF provides recommended PHBs for each traffic class. Because DiffServ is designed to be highly flexible and granular, network designers may also apply other traffic engineering policies, such as rate limiting and traffic shaping, to IP traffic.

Within an IPv4 packet header (and, for IPv6, the Traffic Class field), there is an 8-bit differentiated services field. Six of these bits carry the DSCP value used for classification, which provides up to 64 different traffic classes. Half of the DSCP values are reserved for standardized service definitions, and the other half are set aside for experimental or locally defined services.
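The bit layout of the DS field is easy to see in code. In this sketch, the low two bits are treated as ECN (defined in RFC 3168), and the pool breakdown follows the layout as originally described in RFC 2474; later RFCs have revised how the third pool is administered, so treat the pool labels as a historical illustration.

```python
def split_ds_field(ds: int) -> tuple[int, int]:
    """Split the 8-bit DS field into (DSCP, ECN): DSCP is the upper 6 bits and
    the low 2 bits are used for ECN (RFC 3168)."""
    if not 0 <= ds <= 0xFF:
        raise ValueError("DS field is one byte")
    return ds >> 2, ds & 0b11


def dscp_pool(dscp: int) -> int:
    """Code-point pool as originally laid out in RFC 2474:
    pool 1 = xxxxx0 (standards action), pool 2 = xxxx11 (experimental/local use),
    pool 3 = xxxx01 (experimental/local use, possibly standards later)."""
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP is a 6-bit value")
    if (dscp & 0b1) == 0:
        return 1
    return 2 if (dscp & 0b11) == 0b11 else 3
```

For example, a DS byte of 0xB8 carries DSCP 46 (Expedited Forwarding) with ECN bits of 0, and exactly 32 of the 64 code points fall in the standards pool.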

Each implementation is tailored to the needs of a specific network, which makes its relationship to wireless networks slightly more flexible and fluid. In other words, the DSCP policies that are applied at the network layer do not have a fixed correlation to the policies on the 802.11 MAC. Whereas 802.1p and WMM share a common mapping of 3-bit UP values, DSCP and WMM do not share a consistent one-to-one priority mapping for all networks. However, the IETF does provide fairly extensive documentation and deployment recommendations for DSCP traffic classes, services, and code-point assignments, and these recommendations pave the way for a reasonable amount of consistency across different networks. This allows WLAN vendors to use some consistent UP-to-DSCP conversion.

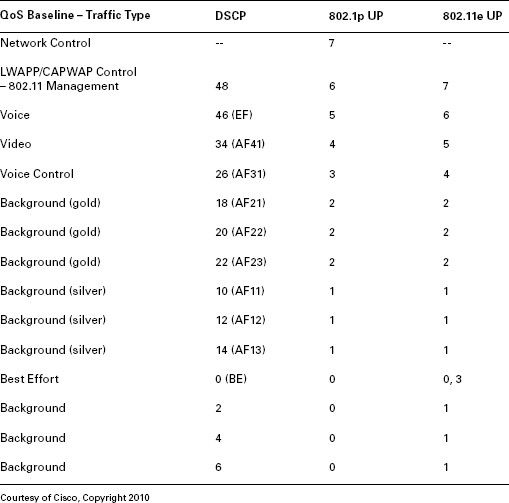

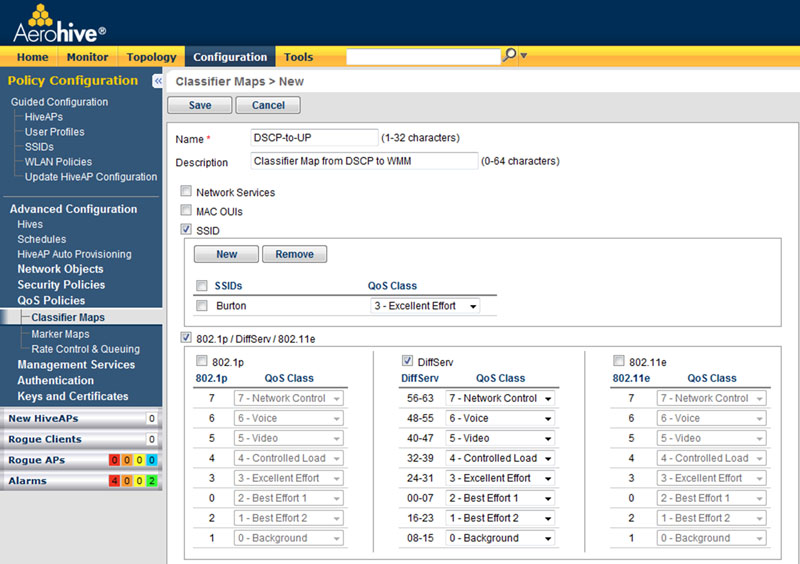

Because there are far more DSCP traffic classes than UPs, classifier maps do not use 1:1 DSCP-to-UP conversions; instead, they may translate a range of DSCP values to a single UP value for 802.1p or WMM. For example, DSCP values 40–47 may all be mapped to a UP value of 5 (which maps to WMM AC_VI), and vice versa. To demonstrate how this might look in an actual implementation, Table 10.7 shows Cisco’s suggested DSCP to 802.1p and 802.11e UP baseline conversion maps, and Figure 10.15 shows Aerohive Networks’ default classifier map policy, which maps DSCP ranges to UP values.

TABLE 10.7 Cisco’s Suggested DSCP Conversion Map

FIGURE 10.15 Classifier map configuration menu
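The range-based example in the text (DSCP 40–47 to UP 5) corresponds to taking the upper three bits of the DSCP. The sketch below uses that rule as an assumed default and allows per-code-point exceptions. Under the naive rule, EF (46) lands in UP 5 and therefore AC_VI, so a classifier map might add an exception such as 46 to UP 6 to place voice in AC_VO. That override is purely illustrative and is not claimed to be Cisco’s or Aerohive’s default.

```python
from typing import Optional


def dscp_to_up(dscp: int, overrides: Optional[dict[int, int]] = None) -> int:
    """Map a 6-bit DSCP to a 3-bit UP.

    Default rule (an assumption for illustration): use the DSCP's upper three
    bits, so each block of eight code points (e.g., 40-47) shares one UP.
    `overrides` holds hypothetical per-code-point exceptions.
    """
    if not 0 <= dscp <= 63:
        raise ValueError("DSCP must be 0-63")
    if overrides and dscp in overrides:
        return overrides[dscp]
    return dscp >> 3
```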

Network Control Priority

You may notice from Table 10.7 that Cisco’s baseline QoS mapping reserves the highest-priority 802.1p value for network control traffic. This traffic type is necessary for maintaining the functionality and availability of network resources, so it takes precedence over all types of user traffic. For that reason, it is assigned the highest priority, and all other services (such as voice and video) are shifted down to the next lower priority class to accommodate it. This illustrates that vendor-specific design practices are an important part of network design, as is integration of the WLAN into the wired LAN.

Integrating WLANs into Wired Networks

There are several considerations to make as you design the wireless network’s integration with the wired network. Many are architectural in nature and relate to translation of frame markings from one protocol or OSI layer to another. Also, many others are related to integrating with existing wired QoS practices and configuring QoS trust on infrastructure devices.

There are two primary models for data forwarding at the AP: distributed and centralized. When distributed forwarding is employed, APs must have sufficient processing and memory capacity to apply sophisticated QoS scheduling and queuing policies to each frame. This was previously a limitation for some deployments because of underpowered APs. Today, many vendors are moving intelligence into the AP to accommodate this need. QoS configuration on the WLAN will largely depend on the QoS policy on the wired LAN. Some WLAN infrastructure vendors provide granular control of classification and marking maps. In other cases, these maps are fixed and are based on common mappings. If these maps don’t coincide with the desired QoS policy for the network, the wired infrastructure will have to be configured to reclassify traffic that originated from the WLAN or is destined for the WLAN. Let’s look more closely at QoS design considerations for distributed and centralized forwarding models.

Distributed Forwarding One of the first QoS design practices in distributed forwarding deployments is to ensure that the AP is configured to translate between WMM (L2) markings and 802.1p (L2) and/or DSCP (L3). In addition, the AP’s Ethernet switch must be configured to support and honor the QoS priority sent by the AP. If the network uses 802.1p CoS bits to provide QoS at the MAC layer, the switch port should be configured with appropriate L2 trust and with 802.1Q tagging enabled, because the 802.1p priority bits are carried inside the 802.1Q tag. If network layer QoS (DSCP) is preferred instead, the switch should honor the DSCP values provided by the AP, and 802.1Q tagging becomes irrelevant for QoS. Depending on the systemwide approach to QoS, the network infrastructure may apply its own classification policies instead of, or in addition to, code-point values, which may mean that DSCP values are ignored or replaced with new markings. These QoS actions are usually already defined by the company’s routing and switching policies, so the WLAN’s primary responsibility is to translate WMM classifications to 802.1p and/or DSCP. The wired infrastructure will do the rest.

When a WLAN client constructs an MPDU, it marks the DSCP value in the IP header, encapsulates the packet in an 802.11 frame, and passes it to the AP. In normal L2 operation, the AP does not inspect DSCP values sent from the wireless client. Thus, in a distributed forwarding model, when the AP forwards the frame onto the wired network, the client’s original DSCP markings remain intact.
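The distributed-forwarding translation described above can be sketched as a pair of lookup tables: upstream, the AP derives an 802.1p CoS value from the frame’s WMM user priority (UP) and leaves the client’s DSCP untouched; downstream, it derives a UP from the DSCP of the wired frame. This is a minimal sketch, not any vendor’s implementation. The specific UP, CoS, and DSCP pairings below are assumptions chosen for illustration, and the function names `upstream` and `downstream` are hypothetical.

```python
from typing import Optional, Tuple

# Hypothetical example maps; real maps are vendor-specific and often configurable.
# Upstream: 802.11 user priority (UP) -> 802.1p CoS.
UP_TO_COS = {7: 5, 6: 5, 5: 4, 4: 4, 3: 0, 0: 0, 2: 1, 1: 1}
# Downstream: DSCP -> UP (EF, AF41, CS1; anything else falls back to best effort).
DSCP_TO_UP = {46: 6, 34: 5, 8: 1, 0: 0}

def upstream(up: int, client_dscp: int, port_tagged: bool) -> Tuple[Optional[int], int]:
    """Markings on a frame the AP bridges from the WLAN to the wired LAN.

    CoS exists only inside an 802.1Q tag, so an untagged port carries none.
    The client's DSCP passes through untouched.
    """
    cos = UP_TO_COS[up] if port_tagged else None
    return cos, client_dscp

def downstream(dscp: int) -> int:
    """UP the AP assigns to a wired frame it is about to send over the air."""
    return DSCP_TO_UP.get(dscp, 0)

print(upstream(6, 46, port_tagged=True))   # (5, 46)
print(upstream(6, 46, port_tagged=False))  # (None, 46)
print(downstream(34))                      # 5
```

The untagged case returns no CoS at all, which is why a switch port that relies on 802.1p trust must also carry 802.1Q tags.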

Centralized Forwarding In a centralized forwarding model, however, frames are forwarded through the WLAN controller. The AP encapsulates the original 802.11 MPDU with new IP and Ethernet headers (using L3 tunneling to the WLAN controller) and marks the new headers based on the UP in the 802.11 frame’s MAC header and the AP’s QoS translation table. In other words, the AP takes the UP from the 802.11 frame and converts it into DSCP for the new IP header and, if 802.1p is used at all, into 802.1p for the new MAC header. These encapsulated frames are then sent through the network to the WLAN controller, while the wireless client’s original DSCP markings are carried intact inside the tunnel. When L3 tunneling is used between the AP and WLAN controller, DSCP classification is favored and 802.1p may not be marked at all.

When the WLAN controller receives these encapsulated packets, the outer L2 and L3 headers are removed and the original 802.11 frame is handled by the WLAN controller. In accordance with the QoS policy for that frame, the WLAN controller will apply the appropriate 802.1p and/or DSCP markings on the outgoing frame if it is not destined to return to the AP.

If a frame is destined to go from the WLAN controller to the AP, the controller will encapsulate that frame with an outer MAC and IP header (again, if L3 tunneling is used), marking 802.1p and/or DSCP on the outer headers for proper handling through the network to the AP.
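The AP-to-controller half of this exchange can be sketched as follows. The point it illustrates is that the outer tunnel header is marked from the frame’s UP through the AP’s translation table, while the client’s inner DSCP travels untouched and is recovered by the controller. This is a simplified model under stated assumptions: the UP-to-DSCP table is hypothetical, the outer CoS is left unset to reflect L3 tunneling, and the class and function names are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical AP translation table: UP -> DSCP for the outer (tunnel) IP header.
UP_TO_OUTER_DSCP = {7: 46, 6: 46, 5: 34, 4: 34, 3: 0, 0: 0, 2: 8, 1: 8}

@dataclass
class Dot11Frame:
    up: int          # user priority from the 802.11 QoS Control field
    inner_dscp: int  # DSCP the client wrote into its own IP header

@dataclass
class TunnelPacket:
    outer_dscp: int
    outer_cos: Optional[int]  # frequently left unmarked with L3 tunneling
    payload: Dot11Frame       # original 802.11 frame, carried unchanged

def ap_encapsulate(frame: Dot11Frame) -> TunnelPacket:
    """AP: wrap the frame and derive outer markings from its UP, not its DSCP."""
    return TunnelPacket(UP_TO_OUTER_DSCP[frame.up], None, frame)

def controller_decapsulate(packet: TunnelPacket) -> Dot11Frame:
    """Controller: strip the outer headers and recover the original frame."""
    return packet.payload

# A client that sets UP 6 but leaves its IP header at DSCP 0.
tunnel = ap_encapsulate(Dot11Frame(up=6, inner_dscp=0))
original = controller_decapsulate(tunnel)
print(tunnel.outer_dscp, original.inner_dscp)  # 46 0
```

In this example the tunnel receives voice treatment on the wired path even though the client never marked its IP header, so once the controller strips the outer header, it must apply its own policy to the outgoing frame.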

When a centralized forwarding model is used, the switch to which the WLAN controller is connected must also be properly configured to handle QoS tags from the controller. This switch port, or set of switch ports, must be configured with QoS trust that matches the desired L2 or L3 QoS policy for the network. If the WLAN controller marks both 802.1p and DSCP, the switch may use only 802.1p (CoS trust), or it may ignore the MAC header CoS bits and rely on the controller’s DSCP markings. Alternatively, the switch may reclassify the traffic and mark it with new QoS tags to comply with the rest of the wired network configuration.
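The three switch-port options above can be compared with a small sketch that returns the DSCP a switch would assign to a frame arriving from the controller under each trust mode. It is illustrative only. The `TrustMode` names are hypothetical, and the CoS-to-DSCP conversion assumes a simple CoS × 8 map; actual switches use their own configurable maps.

```python
from enum import Enum
from typing import Optional

class TrustMode(Enum):
    TRUST_COS = "cos"          # honor 802.1p bits in the 802.1Q tag
    TRUST_DSCP = "dscp"        # ignore CoS, honor the IP header DSCP
    RECLASSIFY = "reclassify"  # ignore both and apply local policy

def ingress_dscp(mode: TrustMode, cos: Optional[int], dscp: int,
                 local_policy_dscp: int = 0) -> int:
    """DSCP the switch assigns to a frame arriving from the WLAN controller."""
    if mode is TrustMode.TRUST_COS:
        # Assumed CoS-to-DSCP map of CoS x 8; untagged frames count as CoS 0.
        return (cos if cos is not None else 0) * 8
    if mode is TrustMode.TRUST_DSCP:
        return dscp
    return local_policy_dscp

print(ingress_dscp(TrustMode.TRUST_COS, cos=5, dscp=46))   # 40
print(ingress_dscp(TrustMode.TRUST_DSCP, cos=5, dscp=46))  # 46
print(ingress_dscp(TrustMode.RECLASSIFY, cos=5, dscp=46, local_policy_dscp=34))  # 34
```

Under CoS trust with this assumed map, the controller’s EF (46) marking becomes DSCP 40. This shows why the trust mode must match the QoS layer the network has chosen.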

QoS Challenges

It is difficult to cover the full scope of challenges that can arise with Wi-Fi QoS, but here are some of the biggest high-level headaches:

RF is an unstable and dynamic medium. RF obstructions are introduced and removed. RF interference comes and goes. Office partitions are built, removed, or otherwise modified. Doors open and close. Furniture is rearranged. The RF environment is the foundation on which upper-layer protocols and mechanisms, like QoS, are built. If the RF environment is unstable or unreliable, the upper-layer features built on it will also be unreliable; conversely, greater stability at this layer generally improves application performance.

Users are mobile and user populations are dynamic. Users constantly move throughout a service set. Laptops and other Wi-Fi devices come and go and are turned on and off. Legacy devices, with legacy PHYs or no QoS support, join and leave. Neighboring networks are installed, removed, or modified. For many reasons, data rates are always changing. All of these changes affect network capacity and contention.

WLAN QoS is largely probabilistic. We’ve already mentioned it, but it bears repeating: WLAN QoS is probabilistic. Contention mechanisms provide statistical priority based on a device’s or access category’s contention parameters; they do not guarantee service. One way to combat this is to isolate high-priority devices in their own contention domain. However, when you use separate SSIDs to differentiate services, what do you do with devices that don’t fit entirely into one service category (e.g., data or voice)? Many end users run voice services on their laptops along with interactive video, email, and web browsing. Similarly, smartphones, tablets, and similar devices run many different types of applications. As a result, restricting services to a specific band is becoming increasingly difficult.

Standardized QoS may be insufficient. Standardized QoS mechanisms are usually adequate for maintaining service quality in most WLANs, but they fall short in some areas, such as balancing airtime among clients with high and low data rates to maximize efficiency. Proprietary features may be required for this purpose.
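To make the probabilistic nature of contention concrete, the following sketch enumerates every backoff combination for a single contention round between a voice (AC_VO) station and a best-effort (AC_BE) station. It uses the default OFDM EDCA values for non-AP stations: AIFSN 2 and CWmin 3 for AC_VO, and AIFSN 3 and CWmin 15 for AC_BE. The model is deliberately simplified. It assumes both stations become ready at the same instant, considers only the first attempt, and counts equal waits as collisions.

```python
from fractions import Fraction
from itertools import product

# Default OFDM EDCA parameters for non-AP stations: (AIFSN, CWmin).
AC_VO = (2, 3)
AC_BE = (3, 15)

def contention_odds(a, b):
    """Exact odds for one contention round between two stations.

    Each station waits AIFSN + backoff slots, with backoff drawn uniformly
    from 0..CWmin. SIFS is common to both and cancels out.
    """
    (aifsn_a, cw_a), (aifsn_b, cw_b) = a, b
    wins_a = wins_b = collisions = 0
    for bo_a, bo_b in product(range(cw_a + 1), range(cw_b + 1)):
        wait_a, wait_b = aifsn_a + bo_a, aifsn_b + bo_b
        if wait_a < wait_b:
            wins_a += 1
        elif wait_b < wait_a:
            wins_b += 1
        else:
            collisions += 1
    total = (cw_a + 1) * (cw_b + 1)
    return (Fraction(wins_a, total), Fraction(wins_b, total),
            Fraction(collisions, total))

vo, be, coll = contention_odds(AC_VO, AC_BE)
print(float(vo), float(be), float(coll))  # 0.90625 0.046875 0.046875
```

Voice wins about 91 percent of these rounds, but best effort still wins about 4.7 percent, and the same share ends in a collision. Priority is statistical, not guaranteed.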

Application-Specific Challenges

When standardized QoS falls short, we must often turn to proprietary solutions implemented by WLAN vendors.

Increased Demand for Video

One of the hot topics in modern WLANs is the increased demand for video. Video poses a unique challenge for WLANs because some video applications rely on multicast traffic. In fact, any application that relies on multicast for sensitive traffic may cause problems, because wireless multicast has some significant QoS drawbacks:

- Multicast traffic is assigned to AC_BE. Network administrators cannot designate a higher-priority access category for multicast and broadcast (MC/BC) traffic. This is a major drawback, because contention management is the primary QoS mechanism in WLANs.

- All WLAN traffic with an MC/BC destination address must be transmitted at a rate in the Basic Rate Set. This often constrains multicast and broadcast traffic to low data rates, such as 1, 2, 5.5, 6, or 11 Mbps.

- MC/BC traffic in a WLAN is not acknowledged, so lost frames are never retransmitted. This leads to high loss and low reliability.

- When any station in the BSS uses power save mode, power save operation can severely delay the delivery of MC/BC traffic. Specifically, the AP buffers MC/BC traffic until after a Delivery Traffic Indication Message (DTIM) Beacon, when all power-saving stations wake to receive these frames. Even if every Beacon is a DTIM, the resulting delay is still problematic for sensitive traffic.

- The AP cannot easily determine multicast group membership unless it snoops client traffic for multicast join requests (such as IGMP membership reports). As a result, every member of a multicast group may have disconnected from an AP while the AP continues to transmit the still-active multicast stream, consuming valuable airtime.
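The DTIM delay in the list above is easy to quantify. A time unit (TU) is 1,024 microseconds, and a group-addressed frame that arrives just after a DTIM Beacon waits until the next DTIM. The sketch below computes that worst case. It assumes a 100 TU Beacon interval, which is a commonly used default, and ignores any additional contention delay after the Beacon.

```python
TU_US = 1024  # one time unit (TU) is 1,024 microseconds

def worst_case_mcbc_delay_ms(beacon_interval_tu: int, dtim_period: int) -> float:
    """Approximate worst-case buffering delay for group-addressed frames.

    A frame arriving just after a DTIM Beacon waits for the next DTIM,
    which comes dtim_period Beacon intervals later.
    """
    return beacon_interval_tu * TU_US * dtim_period / 1000

for dtim in (1, 2, 3):
    print(dtim, worst_case_mcbc_delay_ms(100, dtim))
# 1 102.4
# 2 204.8
# 3 307.2
```

Even with a DTIM in every Beacon, a frame can sit in the AP’s buffer for more than 100 ms before it is sent.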

To address some of these concerns, vendors have responded with proprietary solutions. The primary approach is to convert multicast traffic into unicast traffic, which addresses many of the problems in the previous list and allows these data flows to receive proper QoS treatment. A word of caution about multicast-to-unicast conversion: if many clients in a BSS subscribe to the multicast flow, network capacity can quickly become overwhelmed when a single multicast flow is converted into many high-priority unicast flows. If all the unicast flows use high data rates, the adverse effect may be minimized, but if a few clients are connected at low data rates, the impact on network utilization could be severe.
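The trade-off in multicast-to-unicast conversion can be approximated by comparing airtime for one multicast frame at a basic rate against one unicast copy per subscriber at each client’s own rate. This sketch counts only payload bits (1 Mbps equals 1 bit per microsecond) and ignores preambles, headers, ACKs, and contention, so it understates real airtime. The 1,500-byte payload, 6 Mbps basic rate, and client rates are assumptions chosen for illustration.

```python
def payload_airtime_us(payload_bytes: int, rate_mbps: float) -> float:
    """Time to send the payload bits alone (1 Mbps == 1 bit per microsecond).

    Ignores preambles, MAC headers, ACKs, and contention, so it understates
    real airtime, especially at high data rates.
    """
    return payload_bytes * 8 / rate_mbps

def multicast_vs_unicast(payload_bytes, basic_rate_mbps, client_rates_mbps):
    """Return (multicast airtime, total airtime of converted unicast copies)."""
    multicast = payload_airtime_us(payload_bytes, basic_rate_mbps)
    unicast = sum(payload_airtime_us(payload_bytes, r) for r in client_rates_mbps)
    return multicast, unicast

# Five fast clients: conversion saves airtime.
print(multicast_vs_unicast(1500, 6, [130] * 5))
# One slow client among four fast ones: conversion costs more than multicast.
print(multicast_vs_unicast(1500, 6, [130] * 4 + [6]))
```

In the first case, five unicast copies take about 462 µs compared with 2,000 µs for the multicast frame. In the second case, a single 6 Mbps client pushes the unicast total to about 2,369 µs, which is more than the multicast frame it replaced.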

Other Demands

In addition to multicast-related problems, some applications have other demands that are difficult to meet with standardized QoS. For example, some applications are sensitive to latency and loss, and it may be advantageous to intentionally transmit these flows at a lower data rate to improve reception and reduce loss and retries. Similarly, in video applications, some frames are more significant than others. These frames should also be prioritized in a way that reduces retries and maximizes efficiency, but this type of functionality requires application-level insight by the WLAN infrastructure. These application-specific hurdles are minor, but many networks can benefit from any available QoS improvements. To offer these advanced QoS features, either the application and network infrastructure vendors must integrate their products tightly, or the same vendor must provide both.

Time Sensitivity

With voice and video, timeliness matters more than loss for some data. If retries are high, a frame should at some point be dropped, because it may no longer be useful to the application flow. This behavior can be tuned with retry count limits and CWmax settings, but ideally, the WLAN infrastructure would be aware of application requirements and adjust its behavior accordingly. Proprietary features are required for these custom application optimizations.
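Retry limits and CWmax together bound how long a frame can spend contending before it is dropped. The sketch below computes an upper bound on deferral and backoff time across all attempts for a given access category. It doubles the contention window after each failure (CW = 2 × (CW + 1) − 1, capped at CWmax) and assumes 5 GHz OFDM timing (9 µs slot, 16 µs SIFS). It excludes transmission time and any time the medium is busy. The attempt counts in the example are assumptions, not recommended values.

```python
def cw_sequence(cwmin: int, cwmax: int, attempts: int) -> list:
    """Contention window for each attempt: CW = 2*(CW+1) - 1, capped at CWmax."""
    cw, seq = cwmin, []
    for _ in range(attempts):
        seq.append(cw)
        cw = min(2 * (cw + 1) - 1, cwmax)
    return seq

def worst_case_contention_us(aifsn, cwmin, cwmax, attempts,
                             slot_us=9, sifs_us=16):
    """Upper bound on time spent deferring and backing off across all attempts.

    Each attempt waits AIFS (SIFS + AIFSN slots) plus at most CW slots.
    Transmission time and time the medium is busy are excluded.
    """
    return sum(sifs_us + aifsn * slot_us + cw * slot_us
               for cw in cw_sequence(cwmin, cwmax, attempts))

print(cw_sequence(3, 7, 4))                      # [3, 7, 7, 7]
print(worst_case_contention_us(2, 3, 7, 4))      # 352
print(worst_case_contention_us(3, 15, 1023, 7))  # 18526
```

The small CWmax for AC_VO keeps this bound to a few hundred microseconds. The large CWmax for AC_BE allows nearly 19 ms of backoff before the frame is abandoned.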

Basic QoS Troubleshooting

When application performance isn’t meeting expectations but QoS is enabled and configured, what do you do?

First, consider all the factors that impact network traffic from start to finish, among them:

- Proper marking and tagging

- Maintaining and honoring classification across all network hops

- QoS trust

- Transport delays

- Translation delays

- Queuing delays

- Utilization of network links (congestion)

- End-to-end bandwidth resources

- RF interference

- Data forwarding models

- Roaming

As you encounter specific problems and try to isolate the root cause, it may help to consider each of these variables and how they may affect the network.
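To check the second item in the list (whether classification survives every hop), you can compare the DSCP observed at successive points along the path. The sketch below reports the first hop where the marking changes. It assumes you have already gathered one (hop name, observed DSCP) pair per capture point; the hop names are hypothetical. A change is not necessarily an error, because it may be an intentional reclassification, but it tells you where to look.

```python
from typing import List, Optional, Tuple

def first_remark(hops: List[Tuple[str, int]]) -> Optional[Tuple[str, int, int]]:
    """Find the first hop where the observed DSCP differs from the previous hop.

    hops is an ordered list of (hop_name, observed_dscp) pairs, for example
    built from captures taken at each point along the path.
    Returns (hop_name, previous_dscp, new_dscp), or None if nothing changed.
    """
    for (_, prev_dscp), (name, dscp) in zip(hops, hops[1:]):
        if dscp != prev_dscp:
            return name, prev_dscp, dscp
    return None

path = [("client", 46), ("ap-uplink", 46), ("distribution", 0), ("core", 0)]
print(first_remark(path))  # ('distribution', 46, 0)
```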

Keep in mind that many WLAN QoS problems are caused by poor RF design and resource management. A simple analysis of channel traffic may help identify whether the wireless medium is saturated, whether RF interference is causing issues, whether clients with sensitive applications are roaming excessively, or whether the wireless domain is operating as it should. Is there too much management traffic (too many SSIDs)? Are you supporting the lowest basic rates (try disabling 1, 2, and 5.5 Mbps)? Are there too many errors and retries? Look for indicators that help you isolate the problem to the wireless or wired domain.
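The questions about SSID count and low basic rates can be answered with rough arithmetic. The sketch below estimates the fraction of airtime one AP spends on Beacons. It assumes a 300-byte Beacon, one Beacon per SSID every 100 TU, and an approximate PHY overhead of 192 µs for a long DSSS preamble at 1 Mbps and about 20 µs for an OFDM preamble at 6 Mbps. It ignores OFDM symbol padding, and real Beacon sizes vary with the information elements they carry.

```python
BEACON_INTERVAL_US = 100 * 1024  # 100 TU, a common default

def beacon_airtime_us(beacon_bytes: int, rate_mbps: float, preamble_us: float) -> float:
    """Approximate on-air time: PHY preamble/header plus body bits at the basic rate."""
    return preamble_us + beacon_bytes * 8 / rate_mbps

def beacon_utilization(ssids: int, beacon_bytes: int, rate_mbps: float,
                       preamble_us: float) -> float:
    """Fraction of airtime one AP spends on Beacons (one Beacon per SSID)."""
    per_interval = ssids * beacon_airtime_us(beacon_bytes, rate_mbps, preamble_us)
    return per_interval / BEACON_INTERVAL_US

# Five SSIDs, assumed 300-byte Beacons.
print(round(beacon_utilization(5, 300, 1, 192), 3))  # 0.127
print(round(beacon_utilization(5, 300, 6, 20), 3))   # 0.021
```

Under these assumptions, five SSIDs beaconing at 1 Mbps consume about 12.7 percent of the channel before any user data is sent. Each co-channel AP that is in range adds its own share on top of that.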

You should also consider whether other applications are functioning properly. If they are, you are likely dealing with sensitive application requirements and insufficient resource provisioning. Ensure that your application’s traffic is being marked and prioritized properly on both transmit and receive. If the flow is bidirectional, analyze latency and jitter in each direction. If lower-priority devices are consuming bandwidth, explore ways to limit their consumption (move some stations back to the wired network, use rate limiting or service level agreement (SLA) policies, and so forth) and free up resources for the sensitive applications.
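For jitter analysis, one widely used estimator is the RTP interarrival jitter from RFC 3550 (section 6.4.1). For each pair of consecutive packets, it takes the change in one-way transit time D and moves a running estimate one-sixteenth of the way toward |D|. The sketch below applies that formula to send and receive timestamps in milliseconds. The sample timestamps are invented for illustration. Run it separately for each direction of a bidirectional flow.

```python
def interarrival_jitter(send_ms, recv_ms):
    """RTP-style interarrival jitter estimate (RFC 3550, section 6.4.1).

    D is the change in one-way transit time between consecutive packets;
    the running estimate moves 1/16 of the way toward each new |D|.
    """
    jitter = 0.0
    for i in range(1, len(send_ms)):
        d = (recv_ms[i] - recv_ms[i - 1]) - (send_ms[i] - send_ms[i - 1])
        jitter += (abs(d) - jitter) / 16
    return jitter

send = [0, 20, 40, 60]   # 20 ms packetization interval
recv = [5, 25, 50, 65]   # third packet arrives 5 ms late
print(interarrival_jitter(send, recv))  # 0.60546875
```

Because the receiver-side clock offset cancels out in D, the sender and receiver clocks do not need to be synchronized.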

Another issue you may observe is one-way QoS. For instance, your phone sends properly tagged and prioritized upstream traffic, but downstream traffic from the AP is not prioritized. This is often the result of improperly planned end-to-end bidirectional QoS. Because there are several potential points of failure along the end-to-end QoS path, reassess your AP’s QoS configuration, including conversion of markings, and ensure that your access switches are configured with proper trust. One way to test end-to-end QoS during a site assessment is to place a voice call to another voice station in the same BSS. If both clients transmit and receive frames with proper markings, your end-to-end QoS should be intact.
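When reviewing a capture of that test call, a quick tally per direction shows whether QoS is one-way. The sketch assumes you have already extracted (direction, UP) pairs for the call’s voice frames, and it treats UP 6 as the expected voice priority. Both the direction labels and the expected UP are assumptions to adjust to your own policy.

```python
from collections import defaultdict

def missing_priority(frames, expected_up=6):
    """Count voice frames per direction that lack the expected UP.

    frames: iterable of (direction, up) pairs, e.g. ("upstream", 6),
    taken from a capture of a test call. Direction labels are hypothetical.
    Returns {direction: count_of_frames_with_wrong_up}.
    """
    wrong = defaultdict(int)
    for direction, up in frames:
        if up != expected_up:
            wrong[direction] += 1
    return dict(wrong)

capture = [("upstream", 6), ("downstream", 0), ("upstream", 6), ("downstream", 0)]
print(missing_priority(capture))  # {'downstream': 2}
```

An empty result means both directions carried the expected marking. In this example, only the downstream direction fails, which points to the AP’s translation or the switch trust configuration rather than to the phone.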

Finally, what if your wireless station isn’t sending properly tagged frames? Begin by ensuring that your device supports QoS. Is it WMM certified? Is WMM enabled on both the client and the infrastructure? For VoIP, check the VoIP server’s QoS settings as well. Is your application properly tagging the data? Does your driver support WMM?
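To check whether an application is tagging its data, it helps to know what application-side marking looks like. The sketch below shows one common approach, a UDP socket that sets DSCP EF (46) through the IPv4 `IP_TOS` socket option. DSCP occupies the upper six bits of the former ToS byte, so the value is shifted left by two (46 becomes 184). `IP_TOS` is honored on Linux and macOS but may be ignored on some platforms. Whether the WMM driver then maps this DSCP to a voice UP depends on the driver.

```python
import socket

DSCP_EF = 46  # Expedited Forwarding, commonly used for voice

def voice_socket() -> socket.socket:
    """Create a UDP socket whose outgoing IPv4 packets carry DSCP EF.

    DSCP occupies the upper six bits of the ToS byte, so shift left by 2.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.IPPROTO_IP, socket.IP_TOS, DSCP_EF << 2)
    return sock

s = voice_socket()
print(s.getsockopt(socket.IPPROTO_IP, socket.IP_TOS))  # 184
s.close()
```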

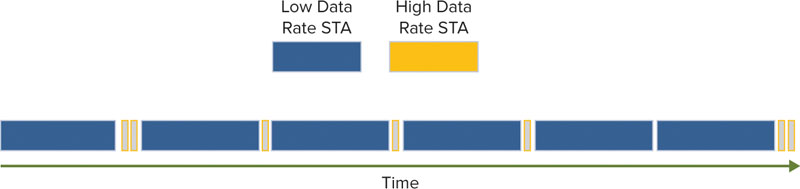

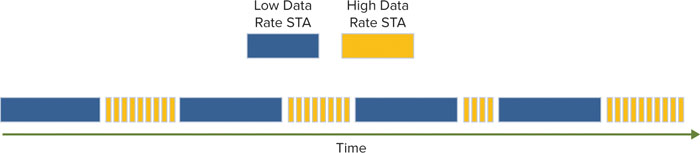

Airtime Fairness

In the “WLAN Arbitration” section, we discussed the standardized methods for managing wireless contention. The IEEE specified standardized contention parameters to handle normal traffic loads and to give priority to some devices or access categories, but newer network technologies demand new solutions. In many cases, standardized arbitration mechanisms cannot provide the kind of differentiated service that many enterprises require, and other solutions, such as airtime fairness, are needed.
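The motivation for airtime fairness can be shown with simple arithmetic. This sketch compares per-client throughput for saturated downlink clients under frame (packet) fairness, where clients alternate equal-size frames, and under a policy that gives each client an equal share of airtime. It assumes two clients at 54 and 6 Mbps and ignores all protocol overhead. Equal airtime shares are only one possible policy, since vendor airtime fairness features are proprietary and vary.

```python
def per_client_throughput(rates_mbps, airtime_fair):
    """Throughput each saturated client gets, ignoring protocol overhead.

    Frame (packet) fairness: clients alternate equal-size frames, so the
    slowest client's long frames dominate the cycle time.
    Airtime fairness: each client gets an equal share of time.
    """
    n = len(rates_mbps)
    if airtime_fair:
        return [r / n for r in rates_mbps]
    cycle_s = sum(1 / r for r in rates_mbps)  # seconds to send 1 Mb to each client
    return [1 / cycle_s] * n

print(per_client_throughput([54, 6], airtime_fair=False))
print(per_client_throughput([54, 6], airtime_fair=True))
```

With frame fairness, each client gets about 5.4 Mbps (10.8 Mbps total) because the slow client’s frames occupy most of the time. With equal airtime, the clients get 27 and 3 Mbps (30 Mbps total).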