2

Introduction: Brain–Computer Interface and Deep Learning

Muskan Jindal1, Eshan Bajal1* and Areeba Kazim2

1Amity School of Engineering and Technology, Amity University, Noida, Uttar Pradesh, India

2Noida Institute of Engineering and Technology, Greater Noida, Uttar Pradesh, India

Abstract

Brain signals are a relatively new source of information that captures human brain and cognitive activity. Like the brain itself, these signals are influenced by physical, geographical, emotional, and cognitive factors, are individual to each person, and follow numerous patterns. Brain–computer interface (BCI) is the area of science that studies these signals to advance human–computer interaction applications. This area of research connects human cognition with the processing power and speed of computers, aiming to create a distinctive field in biomedical sciences with a wide range of applications such as neural rehabilitation, biometric authentication, educational programs, and entertainment. Although nascent, BCI has four major processing steps: signal acquisition, signal pre-processing, feature extraction, and classification. This study first elaborates upon these processing steps while exploring the origin, need, and current stage of development of BCI with respect to brain signals. After establishing the basic concepts and terminology of brain signals, BCI, and their interconnection in the field of biomedicine, this study describes the early methodologies of BCI, with their respective merits and demerits, and classifies them in detail. A comparative analysis then provides insight into newer deep learning-based methods in BCI and compares them with these early techniques.
A comprehensive, data-intensive review of new deep learning techniques is performed, classifying them into multiple groups based on the deep learning framework and the variants implemented, namely convolutional neural networks (CNN), recurrent neural networks (RNN), long short-term memory (LSTM) architectures, and U-Net, among others. This chapter also aims to present real-world applications, challenges, scope for future growth, avenues of expansion, and an industry-specific guide for implementing insights gathered from brain signals in the nascent area of BCI. Lastly, to gauge the performance of BCI, this study presents its application in multiple case studies of serious health conditions, namely brain tumor, dementias, epilepsy and other seizure disorders, stroke and transient ischemic attack (TIA), Alzheimer's disease, and Parkinson's disease and other movement disorders. The chapter aims to provide not only background, current status, future challenges, and case studies but also an application-specific perspective on BCI in the biomedical field.

Keywords: Brain–computer interface (BCI), brain signals, convolutional neural networks (CNN), recurrent neural networks (RNN), long short-term memory (LSTM) architecture, functional magnetic resonance imaging (fMRI), electroencephalogram (EEG), electrocardiogram (ECG or EKG)

2.1 Introduction

Brain–computer interfaces primarily rely on brain signals, i.e., biometric information generated and processed by the human brain. These signals can result from any thought or activity associated with an active or passive mental state. A normal human brain emits signals constantly, because it remains active even when the body is completely at rest; the brain emits signals during sleep as well. Thus, there is no paucity of brain signals [1]. Psychologists have often used the emotions or thoughts a person reports to understand or interpret the meaning of these signals, but the human mind often does not allow complete visibility into them [2]. Precise brain signal decoding can therefore make it possible to interpret the actual meaning of these signals without disturbing the subject's current mental and physical state. When interpreted correctly, brain signals can improve a subject's quality of life or provide insight into their inner mindset or psychology [3]. Based on research by various experts and psychologists, brain signal acquisition falls into two kinds: invasive and non-invasive [4]. Invasive signals require surgical penetration and are collected with electrodes implanted beneath the scalp, on or within the brain. Non-invasive signals are acquired more easily, without any penetration, typically with electrodes placed over the scalp [5].

Brain–computer interface (BCI) systems use these brain signals to create a continuous channel of communication between the human brain and a computer, so that the messages, commands, and emotions of the brain are conveyed to the computer without any physical movement [6]. BCI systems commonly do this by monitoring electrical brain activity through electroencephalogram (EEG) signals, which can detect impulses of thought or activity occurring in the brain [7]. Neurologists have long used EEG signals in healthcare to diagnose multiple diseases, identify abnormal brain activity, support medical procedures such as surgery, provide insight into the functional anatomy of the brain, and serve other medical and psychological applications [8, 9]. In BCI systems, EEG signals are captured, digitized, and processed with various algorithms so that they can be converted into real-time control signals [10]. This establishes a link between the subject's brain and the computer, such that active or passive activities in the brain can be detected by the computer system. This connection enables the computer to interpret the activities, emotions, or demands that the brain exhibits, enabling many revolutionary tasks; for example, it can help people with permanent or temporary physical disabilities perform almost all everyday tasks [11]. This makes them more independent, self-sufficient, and confident, and improves their quality of life in ways that conventional medical treatment alone cannot [12–14].
Because the human brain performs diverse activities, different BCI systems decode different types of brain activity, and BCI systems can be classified by the activities they support or the function they replace [15]. BCI systems can also be classified by the kind of signal they use, namely electroencephalogram (EEG), electrocardiogram (ECG or EKG), functional magnetic resonance imaging (fMRI), or a hybrid input of two or more of these signals [16]. Well-established EEG-based BCI paradigms include the P300, the steady-state visual evoked potential (SSVEP), event-related desynchronization (ERD), and slow cortical potential based BCI systems [17–20]. This chapter evaluates classical BCI systems such as the P300 and its various hybrids because of their strong user adaptability compared with other paradigms, their wide range of applications, and their economic viability [20].
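As a concrete illustration of the classical P300 paradigm, the sketch below implements the decision rule of a row/column P300 speller: rows and columns of a character matrix flash in turn, and the character at the intersection of the row and the column that elicit the strongest P300 response is selected. The 6 x 6 layout, the number of repetitions, and the simulated classifier scores are hypothetical; in a real system the scores would come from a classifier applied to the EEG epoch following each flash.

```python
import numpy as np

# Hypothetical 6 x 6 character matrix; real spellers use their own layouts.
MATRIX = np.array([list("ABCDEF"), list("GHIJKL"), list("MNOPQR"),
                   list("STUVWX"), list("YZ1234"), list("56789_")])


def select_character(row_scores, col_scores, matrix=MATRIX):
    """Return the character at the intersection of the best row and column.

    row_scores, col_scores: arrays of shape (n_repetitions, 6) holding a
    classifier's P300 score for the epoch after each row/column flash.
    Averaging over repetitions raises the signal-to-noise ratio.
    """
    best_row = int(np.argmax(row_scores.mean(axis=0)))
    best_col = int(np.argmax(col_scores.mean(axis=0)))
    return str(matrix[best_row, best_col])


# Simulated scores: noise everywhere, plus a P300-like boost for the
# flashes of row 2 and column 3 (the user is attending to "P").
rng = np.random.default_rng(0)
row_scores = rng.normal(0.0, 1.0, size=(10, 6))
col_scores = rng.normal(0.0, 1.0, size=(10, 6))
row_scores[:, 2] += 3.0
col_scores[:, 3] += 3.0
print(select_character(row_scores, col_scores))
```

Averaging over several flash repetitions is what makes the rule usable: a single-trial P300 is often buried in background EEG, so spellers typically trade speed for accuracy by repeating the flashes.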

2.1.1 Current Stance of P300 BCI

This study surveys the development of the P300 BCI, which was introduced in 1988 [21]; however, the following two years saw no publications on the topic in recognized journals. From 2000 to 2005, the number of publications increased modestly, most of them focused on processing data in different formats or from disparate sources [22, 23]. The following decade, however, witnessed pathbreaking work on P300 BCIs, with a large number of peer-reviewed studies continuously improving previously published models. With each passing year, the proposed models became more efficient, more robust, easier to implement, more economically viable, and less demanding in time and space complexity, allowing them to run on limited computing hardware [24–26].

To give a broader, more factual picture, the graph below shows the number of research publications on P300 BCI listed on Google Scholar and indexed by Scopus or another reputed index such as SCI from 2000 to 2020:

This study first elaborates on the processing steps of BCI while exploring its origin, need, and current stage of development with respect to brain signals. After establishing the basic concepts and terminology of brain signals, BCI, and their interconnection in biomedicine, it describes the early methodologies of BCI with their respective merits and demerits and classifies them in detail. A comparative analysis then examines newer deep learning-based methods in BCI against these early techniques. A data-intensive review classifies the deep learning techniques by framework and variant, namely convolutional neural networks (CNN), recurrent neural networks (RNN), long short-term memory (LSTM) architectures, and U-Net, among others. The chapter also presents real-world applications, challenges, scope for future growth, avenues of expansion, and an industry-specific guide for implementing insights gathered from brain signals in the nascent area of BCI. To further clarify the applications, the study additionally groups them by the technique used to acquire the signals, namely EEG, ECG (EKG), fMRI, or a hybrid of two or more of these techniques; this grouping supports a finer, industry-specific classification of applications.

2.2 Brain–Computer Interface Cycle

This section evaluates the steps involved in the processing cycle of a classical BCI system, summarized in Figure 2.1, together with their respective details. A basic understanding of these processes is essential before examining the various BCI approaches and their respective applications [27, 28].

Step 1: Task and Stimuli

In an ideal BCI system, the user would only need to produce strong, deliberate brain activity, a task that requires limited effort and does not physically fatigue the user. In theory, such a system would be very easy to control and use: the measured brain signals could even indicate the strength of the intended activity, and the user would only have to consciously intend an action for the computer to carry out the corresponding task. In practice, most BCI systems are far from ideal, and no existing system provides all of the features above [29]. Most users are not ideal either; even trained users often produce brain signals without intending any action, or carry out the action manually when the BCI fails to do so [30]. The environment in which the signals are recorded also poses a problem: when the recording is contaminated or the signals are scattered, they are either misinterpreted or lost in environmental noise. Thus, a well-performing BCI requires voluntarily generated, active brain signals and a low-noise environment. The first such BCI was developed as a spelling device that enabled users with paralysis to write, after a large amount of training [31]. Another way of training a BCI system is neurofeedback training, in which the features extracted from the user's brain signals are fed back to the user to validate his or her intentions, giving the user full control of the activity [32].

Figure 2.1 The ideal cycle of any Brain–Computer Interface (BCI) system begins with a stimulus in the user's brain, i.e., the activity in the active brain that precedes an action. This stimulus produces brain signals that can be detected by sensors connected to the computer. The computer's processors then predict the outcome of these brain signals, i.e., the action that the brain intended.

As noted above and in various publications that discuss the challenges of BCI, training such a system is a huge task, as it requires a large amount of time, attention, and training data [33]. Thus, more recent BCI models are looking for alternative instructive cognitive tasks that require comparatively less training [34]. Another approach is to mark a particular stimulus as important by watermarking it, i.e., giving it a neuronal signature. For instance, once the brain has registered a particular sight or entity through a neuronal signature, it can identify that entity even in a very noisy setting, such as among fast-moving cars or a profusion of signs [35]. Neuronal signatures can take different forms, ranging from visual signatures to musical signatures or mental navigation. Higher cognitive tasks can also be encoded as signatures, which are then converted into cue-based (synchronous) or non-cue-based (asynchronous) stimuli through either active or passive signals. Cue-based, synchronous BCI systems have a defined response time, whereas in non-cue-based, asynchronous BCI systems the response time is not defined; this further delays the process and creates other problems [36]. Researchers in the field are also expanding beyond conventional brain signals, for example to internal speech, because it is the most direct, easy, and effective way to communicate one's thoughts to a BCI and could be as effective and robust as detecting the thoughts themselves. This approach comes with its own set of challenges, however, such as understanding different dialects and languages and their actual meaning, since humans use sarcasm, metaphor, and other figures of speech [37].

Step 2: Brain signal detection and measurement

Devices for capturing and measuring brain signals are classified by the type of signal they capture: invasive or non-invasive. Electroencephalography (EEG) and magnetoencephalography (MEG) are non-invasive methods; they capture average brain activity by detecting the activity of neural cells and their dendritic currents. Although they capture brain activity and the corresponding signals convincingly, non-invasive methods cannot determine the exact location where a signal originates. This is due to spatial noise in the medium the signal traverses, such as bone, tissue, skin, and cortical areas that are not responsible for producing the signal. Non-invasive signals are also very sensitive to noise created by minute body movements, such as muscle activity or slight eye movements [38]. Apart from EEG and MEG, recent BCI systems also use functional magnetic resonance imaging (fMRI) [39, 40]. fMRI measures changes in the magnetic properties of hemoglobin in the blood, which depend on blood oxygenation and are coupled to neural activity in the brain. It offers much finer spatial resolution than EEG and MEG, but poorer temporal resolution [41].

An approach that achieves better spatial and temporal resolution is to replace non-invasive capture with invasive electrode implantation [42]. Implanted electrodes can record both minor and major spikes in brain signals, such as spikes in multiple regions and multiple spikes in the same region. They can identify increases or decreases in signal intensity and also determine the exact origin of a signal. After promising theoretical results, this technique was implemented in monkeys [43, 44], but when it was approved for human trials, some risks were identified, and these later turned out to be severe for almost all such BCI systems. The technique also faces operational challenges: because the electrodes are complicated and delicate, a team of experts and heavy hardware are needed for continuous daily monitoring. Recent advances in devices for capturing brain signals are driving innovation in optimized BCI techniques, but several issues remain open challenges: tissue scarring, operational challenges, economic viability, the use of complicated hardware, and risk to human life. Moreover, reducing environmental noise, improving spatial and temporal resolution, and building lightweight scanners are further gaps to address in future BCI research [45].

Step 3: Signatures

The next step in the BCI cycle is feature extraction, i.e., detecting characteristic features in the brain signals received from the user. Feature extraction provides the first insight into the received signals, and features extracted incorrectly make it difficult to detect the user's intended action. These features or characteristics are called neural signatures, or simply signatures. A signature is tied to a particular task or activity, such that its presence directly indicates that the task is occurring. For instance, during sleep the brain produces a characteristic signal known as a sleep spindle; when sensors detect this signature, it indicates that the subject is asleep [46] (refer to Figure 2.2).
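To make the idea of a signature concrete, the following sketch flags sleep-spindle-like events in a single EEG channel. It assumes a commonly used description of a spindle as a burst of roughly 11–16 Hz activity lasting about 0.5–2 s; the idealized FFT filter, the moving-RMS envelope, and the threshold of three times the median envelope are illustrative choices rather than a validated clinical detector, and the recording is synthetic.

```python
import numpy as np


def bandpass_fft(x, fs, lo, hi):
    """Idealized band-pass: zero every FFT bin outside [lo, hi] Hz."""
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spectrum[(freqs < lo) | (freqs > hi)] = 0
    return np.fft.irfft(spectrum, n=x.size)


def detect_spindles(x, fs, band=(11.0, 16.0), win_s=0.2, k=3.0,
                    min_dur=0.5, max_dur=2.0):
    """Return (start_s, end_s) pairs for spindle-like events.

    Assumed rule: the moving RMS of the band-passed signal must exceed
    k times its median for between min_dur and max_dur seconds.
    """
    sigma = bandpass_fft(x, fs, *band)
    w = max(1, int(win_s * fs))
    rms = np.sqrt(np.convolve(sigma ** 2, np.ones(w) / w, mode="same"))
    above = (rms > k * np.median(rms)).astype(int)
    # +1 marks where a supra-threshold run starts, -1 where it ends.
    edges = np.diff(np.concatenate(([0], above, [0])))
    starts = np.flatnonzero(edges == 1)
    ends = np.flatnonzero(edges == -1)
    return [(s / fs, e / fs) for s, e in zip(starts, ends)
            if min_dur <= (e - s) / fs <= max_dur]


# Synthetic 30 s recording: white noise plus a 1 s, 13 Hz burst at t = 10 s.
fs = 100
t = np.arange(30 * fs) / fs
rng = np.random.default_rng(0)
eeg = rng.normal(0.0, 1.0, t.size)
burst = (t >= 10) & (t < 11)
eeg[burst] += 5.0 * np.sin(2 * np.pi * 13 * t[burst])
events = detect_spindles(eeg, fs)
print(events)
```

The duration limits matter as much as the threshold: brief noise excursions can cross the threshold, but they rarely stay above it for half a second, so the duration check rejects them.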

Medical science is still searching for the brain signal signatures of many periodic or frequently occurring activities. Signatures in BCI can be classified into two categories: evoked and induced. Evoked signatures are time-locked and phase-locked to the stimulus, whereas induced signatures are time-locked but not phase-locked, so they appear as changes in signal power rather than in the averaged waveform. Evoked responses are typically quantified as an event-related potential (ERP) in EEG or an event-related field (ERF) in MEG [47]. Both kinds of signature have their own merits and drawbacks; most of the issues stem from internal and external noise, such as similar signatures or signals occurring in other parts of the brain or brain tissue, continuous and unpredictable movement of the subject, or any sudden emotion or hormone secretion in the subject's body.
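The distinction between evoked and induced signatures can be illustrated with synthetic trials. In the sketch below, an evoked 10 Hz burst has the same phase on every trial, while an induced burst has the same timing but a random phase; averaging the raw trials (the ERP approach) preserves only the evoked burst, whereas averaging the squared signal preserves both. The sampling rate, burst frequency, timing, and noise level are arbitrary assumptions.

```python
import numpy as np


def erp_and_power(trials):
    """trials: (n_trials, n_samples), each time-locked to stimulus onset.

    Returns the trial average (the ERP, which keeps only phase-locked,
    i.e. evoked, activity) and the trial average of squared amplitude
    (which keeps both evoked and induced activity).
    """
    return trials.mean(axis=0), (trials ** 2).mean(axis=0)


fs, n_trials = 250, 200
t = np.arange(fs) / fs                      # 1 s epoch
window = (t > 0.3) & (t < 0.5)              # when the 10 Hz burst occurs
rng = np.random.default_rng(1)

# Evoked burst: identical phase on every trial.
evoked_trials = rng.normal(0, 0.5, (n_trials, t.size))
evoked_trials[:, window] += np.sin(2 * np.pi * 10 * t[window])

# Induced burst: same timing and frequency, random phase on each trial.
phases = rng.uniform(0, 2 * np.pi, (n_trials, 1))
induced_trials = rng.normal(0, 0.5, (n_trials, t.size))
induced_trials[:, window] += np.sin(2 * np.pi * 10 * t[window] + phases)

erp_ev, pow_ev = erp_and_power(evoked_trials)
erp_in, pow_in = erp_and_power(induced_trials)
print(np.abs(erp_ev[window]).max(), np.abs(erp_in[window]).max())
print(pow_in[window].mean(), pow_in[~window].mean())
```

The induced burst nearly vanishes from the ERP because its random phases cancel in the average, yet it still roughly triples the mean power inside the window; this is why induced activity is usually analyzed with power or time-frequency measures.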

Progress requires a more in-depth understanding of how the various parts of the brain communicate with each other, how and when particular neural activities take place, what triggers sudden activity, how visual, sensory, and temporal stimuli are processed in the brain, and other intricacies [48]. More anatomy-focused research is needed for BCI systems to achieve a breakthrough.

Figure 2.2 A typical sleep spindle – between dashed lines.

Step 4: Feature Processing or Pre-Processing

After the features have been extracted, they must be processed to recover the intended action from the brain signals by maximizing the signal-to-noise ratio. This step is the most important and the most demanding in terms of time and computation. Besides knowledge of the incoming signals and their signatures, it requires appropriate signal acquisition technology. This study focuses on feature processing for electromagnetic signals among the available technologies for capturing brain signals. The process mainly deals with denoising, i.e., filtering unwanted artifacts out of the captured signals. These unwanted components, known as noise, can corrupt the captured signals and hinder identification of the user's intended action. They include brain signals related to other activities, eye blinks, involuntary actions of the user, signals distorted by brain tissue, and other kinds of activity. This section discusses two main categories: spectral filtering and spatial filtering. Spectral filtering handles denoising, i.e., removing noise and unrelated signals from the required brain signal to clear the way for feature recognition. Spatial filtering is ideally applied after spectral filtering; it linearly combines signals from multiple channels to turn weak, diffuse signals into a concentrated one. During feature extraction, the received signals are pre-processed by robustly identifying their spectral and temporal characteristics. Spectral features are derived from the frequency content of the captured signals or of the signals averaged over time, while temporal features are derived from the strength or intensity of the captured signals over time. An alternative approach is time-frequency representations (TFRs), which combine spectral and temporal features, as shown in Figure 2.3.
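The two filtering stages described above can be sketched with NumPy on synthetic multichannel data. Here spectral filtering is an idealized FFT band-pass (8–30 Hz, an assumed band) and spatial filtering is the common average reference (CAR), one simple fixed linear combination of channels; practical systems often use designed IIR or FIR filters and data-driven spatial filters such as common spatial patterns instead. The synthetic recording contains 50 Hz line noise, a 12 Hz component shared by all channels, and a 20 Hz component local to one channel.

```python
import numpy as np


def spectral_filter(x, fs, lo, hi):
    """Idealized band-pass of each channel (rows of x) via the FFT."""
    spectrum = np.fft.rfft(x, axis=-1)
    freqs = np.fft.rfftfreq(x.shape[-1], d=1.0 / fs)
    spectrum[..., (freqs < lo) | (freqs > hi)] = 0
    return np.fft.irfft(spectrum, n=x.shape[-1], axis=-1)


def common_average_reference(x):
    """Spatial filter: subtract the across-channel mean from every channel.

    This is a fixed linear combination of channels that cancels activity
    shared by all electrodes while keeping activity local to one electrode.
    """
    return x - x.mean(axis=0, keepdims=True)


fs, n_ch = 250, 8
t = np.arange(2 * fs) / fs
rng = np.random.default_rng(2)
x = 0.1 * rng.normal(size=(n_ch, t.size))
x += np.sin(2 * np.pi * 50 * t)            # line noise on every channel
x += np.sin(2 * np.pi * 12 * t)            # in-band activity shared by all channels
x[3] += 0.5 * np.sin(2 * np.pi * 20 * t)   # local source on channel 3

# Spectral filtering first (removes 50 Hz), then spatial filtering (removes 12 Hz).
clean = common_average_reference(spectral_filter(x, fs, 8.0, 30.0))
```

Neither stage alone would isolate the local 20 Hz source: the band-pass cannot remove the shared 12 Hz activity because it lies inside the band, and the CAR would not remove line noise that differs slightly between electrodes in real recordings.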

Figure 2.3 A general representation of time-frequency representations (TFRs).
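A time-frequency representation such as the one in Figure 2.3 can be computed with a short-time Fourier transform (STFT). The sketch below, with assumed window and hop lengths, slides a Hann window along a synthetic signal whose frequency switches from 10 Hz to 20 Hz halfway through and returns the power in each time-frequency cell; wavelet transforms are a common alternative.

```python
import numpy as np


def stft_power(x, fs, win_len, hop):
    """Short-time Fourier transform power of a 1-D signal.

    Returns (times, freqs, power) with power[i, j] the power at freqs[i]
    in the Hann-windowed segment centred at times[j].
    """
    window = np.hanning(win_len)
    starts = np.arange(0, x.size - win_len + 1, hop)
    frames = np.stack([x[s:s + win_len] * window for s in starts])
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    times = (starts + win_len / 2) / fs
    return times, freqs, power.T


# Synthetic signal: 10 Hz for the first 2 s, then 20 Hz for the next 2 s.
fs = 200
t = np.arange(4 * fs) / fs
x = np.where(t < 2, np.sin(2 * np.pi * 10 * t), np.sin(2 * np.pi * 20 * t))
times, freqs, power = stft_power(x, fs, win_len=100, hop=50)
print(freqs[power[:, 0].argmax()], freqs[power[:, -1].argmax()])  # 10.0 20.0
```

The window length sets the trade-off between the two axes: a 0.5 s window gives 2 Hz frequency resolution, and a longer window would sharpen frequency at the cost of blurring when the switch happens.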

Thus, feature extraction and feature recognition are the two most intensive and equally important steps in the BCI cycle, as they are vital for identifying the user's intent.

Step 5: Prediction

The next, penultimate step predicts the outcome from the processed and extracted features, generally by applying machine learning algorithms. The final output is either discrete or continuous, depending on the algorithm implemented: a regression-based algorithm produces a continuous output, whereas a classification-based algorithm produces a discrete result, as shown in Figure 2.4.
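The difference between continuous and discrete outputs can be seen with a single linear model. In the sketch below, a least-squares fit maps synthetic feature vectors to a continuous target (standing in for, e.g., cursor velocity); the raw model output is the regression result, and thresholding it yields a discrete, classification-style command. The features, weights, threshold, and command names are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
n_trials = 100
X = rng.normal(size=(n_trials, 3))                 # synthetic feature vectors
true_w = np.array([1.5, -2.0, 0.5])                # assumed ground-truth mapping
y = X @ true_w + 0.1 * rng.normal(size=n_trials)   # e.g. a cursor velocity

Xb = np.column_stack([X, np.ones(n_trials)])       # append a bias column
w, *_ = np.linalg.lstsq(Xb, y, rcond=None)         # least-squares fit

continuous_output = Xb @ w                         # regression: real-valued control signal
discrete_output = np.where(continuous_output > 0.0, "right", "left")  # classification
print(continuous_output[:3].round(2), discrete_output[:3])
```

A continuous output suits proportional control such as moving a cursor smoothly, while a discrete output suits selecting among a fixed set of commands.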

In the past decade, many machine learning algorithms have been applied to the prediction step of BCI systems, popular choices being linear discriminant analysis (LDA) and the support vector machine (SVM) [49, 50]. However, performance does not depend solely on the algorithm selected; it also depends on the quality of the extracted features, the availability of training data, and the validation technique implemented.
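Because LDA is one of the most common BCI classifiers, a minimal two-class Fisher LDA is sketched below in NumPy. It assumes equal class priors and a shared covariance, and it is trained on synthetic two-dimensional features; it is an illustration of the technique, not the configuration used in the cited studies.

```python
import numpy as np


def fit_lda(X, y):
    """Two-class Fisher LDA with equal priors; y holds labels 0 and 1.

    Returns (w, b) for the rule: predict class 1 when X @ w + b > 0.
    """
    X0, X1 = X[y == 0], X[y == 1]
    m0, m1 = X0.mean(axis=0), X1.mean(axis=0)
    # Shared within-class scatter; its overall scale does not change the decision.
    S = np.cov(X0, rowvar=False) + np.cov(X1, rowvar=False)
    w = np.linalg.solve(S, m1 - m0)
    b = -w @ (m0 + m1) / 2.0          # threshold halfway between class means
    return w, b


def predict_lda(X, w, b):
    return (X @ w + b > 0).astype(int)


rng = np.random.default_rng(4)
X = np.vstack([rng.normal([0.0, 0.0], 1.0, (100, 2)),
               rng.normal([3.0, 3.0], 1.0, (100, 2))])
y = np.repeat([0, 1], 100)
w, b = fit_lda(X, y)
accuracy = (predict_lda(X, w, b) == y).mean()
print(f"training accuracy: {accuracy:.2f}")
```

Training accuracy is optimistic; as noted above, the validation technique matters, so in practice accuracy would be estimated on held-out trials, for example with cross-validation.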

Figure 2.4 Graphical representation of discrete and continuous distribution.

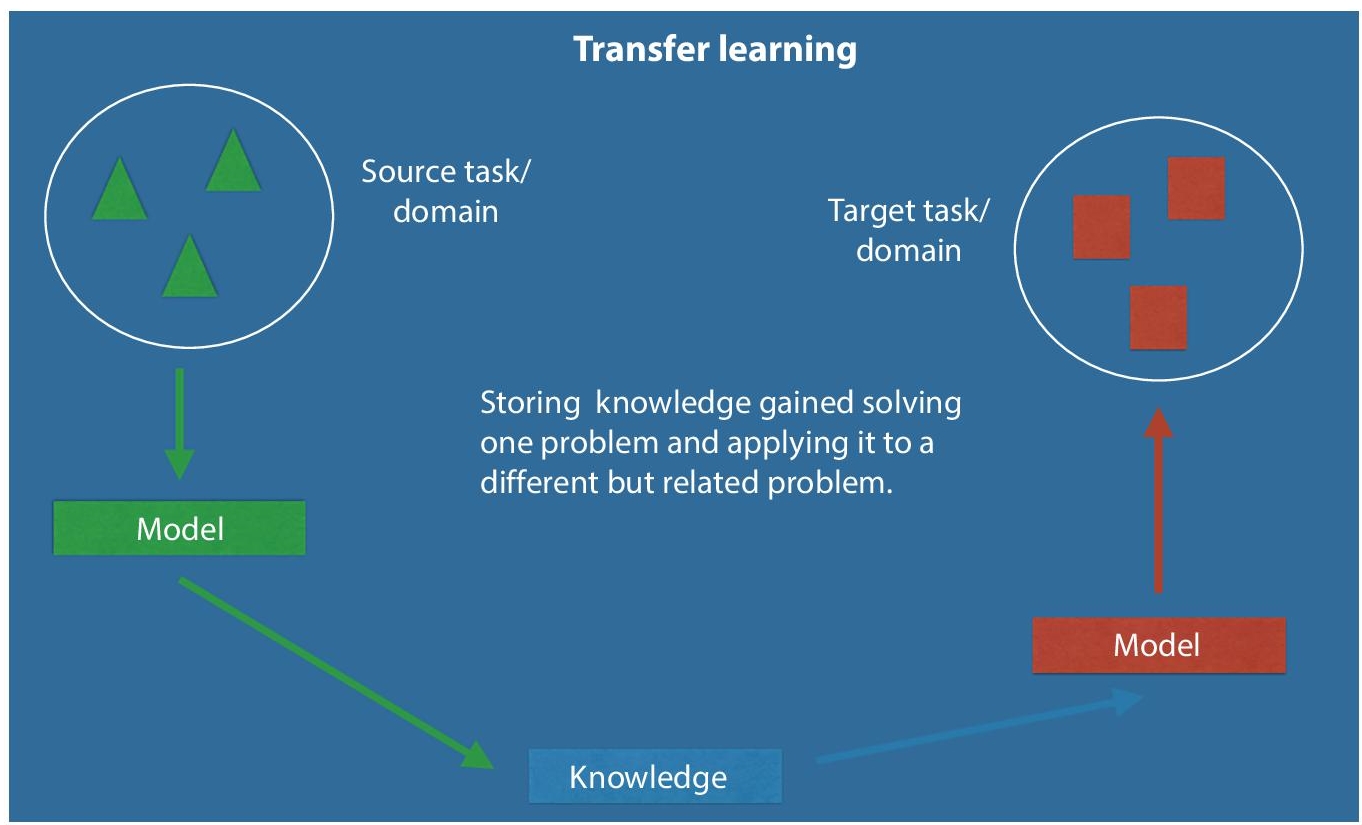

Neural signal data are idiosyncratic: they have many features, each with its own characteristics, and because the amount of data is limited, model overfitting becomes a problem [51]. Solutions include using a simpler, linear model or using feature selection to remove unhelpful features. Another concept that has attracted the attention of BCI researchers in machine learning is transfer learning (Figure 2.5), or multi-task learning. It improves performance by reducing overfitting to the signal data, but it can also exclude new data or outlier data from processing [52]. Researchers are also exploring hybrid algorithms that combine classification and regression, but the human brain is a complex system with an enormous number of internal processes, signals, and concepts that medical science is still trying to unveil. Thus, deeper knowledge of the brain's anatomy will allow the BCI community to innovate further. Other algorithmic solutions that have addressed these issues in BCI include hybrid classification algorithms, namely hidden Markov models and optimized Bayesian networks [53, 54], which allow continuous monitoring or mapping and are flexible enough to adapt to continuously changing brain signals [55].
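Feature selection, one of the remedies for overfitting mentioned above, can be as simple as ranking features by how well each one separates the classes on its own. The sketch below uses the Fisher score (squared difference of class means over the sum of class variances) on synthetic data with 50 features of which only two, chosen arbitrarily, are informative; the choice of score and of k are assumptions for illustration.

```python
import numpy as np


def fisher_scores(X, y):
    """Per-feature Fisher score for two classes (labels 0 and 1):
    squared difference of class means over the sum of class variances."""
    X0, X1 = X[y == 0], X[y == 1]
    between = (X1.mean(axis=0) - X0.mean(axis=0)) ** 2
    within = X0.var(axis=0) + X1.var(axis=0) + 1e-12   # avoid division by zero
    return between / within


def select_top_k(X, y, k):
    """Indices (ascending) of the k features with the highest Fisher score."""
    ranked = np.argsort(fisher_scores(X, y))[::-1]
    return np.sort(ranked[:k])


# 40 trials x 50 features; only features 3 and 7 differ between classes.
rng = np.random.default_rng(5)
X = rng.normal(size=(40, 50))
y = np.repeat([0, 1], 20)
X[y == 1, 3] += 3.0
X[y == 1, 7] += 3.0
keep = select_top_k(X, y, k=2)
print(keep)                 # expect the informative features
X_reduced = X[:, keep]      # smaller design matrix for a simple classifier
```

With more features than trials, a classifier trained on all 50 columns would easily fit noise; reducing to a handful of informative columns lets even a linear model generalize. To avoid an optimistic bias, the selection itself should be repeated inside each cross-validation fold.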

In conclusion, selecting an algorithm is not the main issue, since overfitting can be reduced by using a linear or simple algorithm; rather, including all relevant features, mapping continuously, and adapting to changing brain signals are what create problems for almost all classification algorithms used in the BCI community.

Figure 2.5 A regular transfer learning set up for any machine learning environment.
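One simple way to prepare EEG from different subjects for transfer learning is to align each subject's data before pooling it. The sketch below implements a technique known in the BCI literature as Euclidean alignment: each subject's trials are whitened by the inverse square root of that subject's mean spatial covariance, so that every subject's aligned data share an identity reference covariance. The two synthetic "subjects" with different mixing matrices are assumptions standing in for real inter-subject differences; this is not the specific transfer learning setup of Figure 2.5.

```python
import numpy as np


def euclidean_alignment(trials):
    """Align one subject's trials, shape (n_trials, n_channels, n_samples),
    so that the mean spatial covariance of the aligned trials is the identity."""
    covs = np.array([x @ x.T / x.shape[1] for x in trials])
    R = covs.mean(axis=0)                       # subject's reference covariance
    vals, vecs = np.linalg.eigh(R)              # R is symmetric positive definite
    R_inv_sqrt = vecs @ np.diag(vals ** -0.5) @ vecs.T
    return np.array([R_inv_sqrt @ x for x in trials])


def mean_covariance(trials):
    return np.mean([x @ x.T / x.shape[1] for x in trials], axis=0)


# Two synthetic "subjects" whose sources reach the electrodes through
# different mixing matrices (a stand-in for inter-subject differences).
rng = np.random.default_rng(6)
n_ch = 4
mix_a = rng.normal(size=(n_ch, n_ch)) + 2 * np.eye(n_ch)
mix_b = rng.normal(size=(n_ch, n_ch)) + 2 * np.eye(n_ch)
subject_a = np.array([mix_a @ rng.normal(size=(n_ch, 200)) for _ in range(30)])
subject_b = np.array([mix_b @ rng.normal(size=(n_ch, 200)) for _ in range(30)])

aligned_a = euclidean_alignment(subject_a)
aligned_b = euclidean_alignment(subject_b)
# After alignment both subjects share the same reference covariance, so
# trials from a source subject can be pooled to train a target classifier.
print(np.round(mean_covariance(aligned_a), 3))
```

Alignment needs only unlabeled trials from the new subject, which is attractive for BCIs because collecting labeled calibration data is the costly part of training.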

Step 6: Results and Action Performed

The final stage of a BCI system is reached when the user's intended action has been predicted correctly through feature extraction, pre-processing, and a machine learning algorithm; the action is then performed by a computer or a physical device such as a prosthetic arm or wheelchair, as seen in Figure 2.6. After the external output device performs the predicted action, the user gives feedback to further improve the BCI system. Multiple types of output are available, depending on the activity performed or the needs of the user, including audio [56], text [57], graphical [58], motor commands [59], and vibrotactile [60] output, among others.

Figure 2.6 Some examples for output devices of brain–computer interface.

2.3 Classification of Techniques Used for Brain–Computer Interface

This section discusses the research literature on the use of different techniques for brain–computer interfaces. The collection is organized into four core domains, namely predictors and diagnostics for mental and neurological disorders, observation and analysis of sleep patterns, brain activity associated with mood, and miscellaneous topics.

2.3.1 Application in Mental Health

Epileptic seizures are the most common subject of interest to researchers in this domain, and many methods that apply CNNs to various degrees have been used to study them [61]. Johansen et al. used a custom CNN setup to detect epileptic episodes in EEG high-pass filtered at 1 Hz, reporting performance as the area under the curve (AUC). Morabito et al. [62] applied a CNN to the detection of Alzheimer's disease and associated motor decline; this setup used rapid eye movement data and achieved 82% accuracy. Chu et al. [63] combined traditional and stacking models to detect schizophrenia [64]. In the domain of psychological disorders, Acharya et al. [65] devised a CNN of more than 10 layers to detect depression. Ruffini et al. [66] described an echo state network (ESN) model, a particular class of RNN, to distinguish patients with REM sleep behavior disorder (RBD) from healthy individuals.

Hosseini et al. [67] modeled a sparse two-layer deep autoencoder (D-AE) to study the EEG during epileptic seizures. Similarly, Lin [68] created a model with three hidden layers to parse EEG data. Page et al. [69] designed a system that uses a deep belief network (DBN) representation model to analyze seizure EEG signals, combining it with a regression-based model to detect seizure onset. Al et al. [70] used a depression detection system based on a DBN built from restricted Boltzmann machines (DBN-RBM) that processed two streams of EEG, which were later merged with PCA.

2.3.2 Application in Motor-Imagery

In the field of motor imagery (MI), i.e., recordings of motor cortex activity while a movement is imagined, different techniques are used because conventional EEG analysis has certain shortcomings. This field also has the largest number of CNN implementations. Wang et al. [71] used a CNN on MI-EEG to map neural connections. Zhang et al. [72] and Lee et al. [73] made general performance improvements to the CNN structure. Hartmann et al. [74] documented MI-EEG images and their spectral features, showing gradient features proportional to the strength of the EEG signal.
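Most of the CNN models cited above share a basic building block: learned kernels that slide along the time axis of multichannel EEG. The sketch below implements only the forward pass of one such temporal convolution layer (with ReLU) followed by global average pooling; the kernel size, number of filters, and random untrained weights are assumptions, and a real model would learn the weights by backpropagation, typically in a deep learning framework.

```python
import numpy as np


def temporal_conv_layer(eeg, kernels, bias):
    """Forward pass of one 1-D convolutional layer over time.

    eeg: (n_channels, n_samples); kernels: (n_filters, n_channels, k);
    bias: (n_filters,). Each filter sums a cross-correlation over all
    channels ('valid' mode) and applies a ReLU, as in typical CNN layers.
    """
    n_filters, n_channels, k = kernels.shape
    out = np.zeros((n_filters, eeg.shape[1] - k + 1))
    for f in range(n_filters):
        for c in range(n_channels):
            out[f] += np.correlate(eeg[c], kernels[f, c], mode="valid")
        out[f] += bias[f]
    return np.maximum(out, 0.0)


def global_average_pool(feature_maps):
    """Collapse time so each filter yields one feature for a classifier."""
    return feature_maps.mean(axis=1)


rng = np.random.default_rng(7)
eeg = rng.normal(size=(8, 500))                 # 8 channels, 2 s at 250 Hz
kernels = 0.1 * rng.normal(size=(4, 8, 25))     # untrained, random weights
maps = temporal_conv_layer(eeg, kernels, bias=np.zeros(4))
features = global_average_pool(maps)
print(maps.shape, features.shape)               # (4, 476) (4,)
```

A 25-sample kernel at 250 Hz spans 100 ms, so each learned filter can act like a band-pass tuned to motor-imagery rhythms, which is one reason temporal convolutions suit this application.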

Among representation-learning approaches, DBN-based systems have shown the most impressive results [75]. Following this direction, Li et al. [76] applied a DBN-AE to wavelet-transformed EEG, followed by denoising techniques. Similarly, Ren et al. [77] learned feature representations of EEG signals by means of a convolutional DBN (CDBN). Other notable works include LSTM with reinforcement learning [78], XGBoost augmentation [79], genetic algorithms [80], and KNN classifiers [81] for signal analysis.

2.3.3 Application in Sleep Analysis

EEG data generated during sleep form the most thoroughly researched domain among all fields using BCI. Sleep EEG can provide information about a person's sleep patterns and mental and physical wellbeing, and can act as a predictor for the onset of disorders [82–84].

Most of the work in this field involves EEG recordings of a sleep phase at constant frequency [85]. Viamala [86] analyzed time-frequency features and achieved 82% prediction accuracy. Tan et al. [87] achieved an F1 score of 92% on sleep spectral patterns using power spectral density analysis. Zang et al. [88] performed multi-step sleep feature extraction by combining DBN and CNN. Further work has implemented KNN [89] and LSTM [90].
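Power spectral density features of the kind used in sleep analysis can be sketched as relative band powers computed from a periodogram. The example below analyzes one synthetic 30 s epoch, the epoch length conventionally used in sleep scoring; the band limits are approximate and vary between studies, and the signal, dominated by 2 Hz slow waves as in deep sleep, is invented for illustration.

```python
import numpy as np

# Approximate EEG bands in Hz; exact limits vary between studies.
BANDS = {"delta": (0.5, 4.0), "theta": (4.0, 8.0), "alpha": (8.0, 12.0),
         "sigma": (12.0, 16.0), "beta": (16.0, 30.0)}


def relative_band_powers(epoch, fs, bands=BANDS):
    """Relative power per band from a Hann-windowed periodogram of one epoch."""
    x = (epoch - epoch.mean()) * np.hanning(epoch.size)
    psd = np.abs(np.fft.rfft(x)) ** 2
    freqs = np.fft.rfftfreq(epoch.size, d=1.0 / fs)
    powers = {name: psd[(freqs >= lo) & (freqs < hi)].sum()
              for name, (lo, hi) in bands.items()}
    total = sum(powers.values())
    return {name: p / total for name, p in powers.items()}


# Synthetic 30 s epoch dominated by 2 Hz slow waves, as in deep sleep.
fs = 100
t = np.arange(30 * fs) / fs
rng = np.random.default_rng(8)
epoch = (3.0 * np.sin(2 * np.pi * 2 * t) + 0.5 * np.sin(2 * np.pi * 10 * t)
         + 0.2 * rng.normal(size=t.size))
features = relative_band_powers(epoch, fs)
print(max(features, key=features.get))   # delta
```

Using relative rather than absolute power makes the feature vector less sensitive to differences in electrode impedance and overall amplitude between recordings; the resulting five numbers per epoch can be fed to any of the classifiers mentioned above.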

2.3.4 Application in Emotion Analysis

Emotions are a core aspect of humans and other higher mammals. Although research into the exact mechanism by which emotions develop is ongoing, the presence of an emotional state can be readily detected with the help of EEG recordings. Accordingly, many deep learning techniques have been proposed, and many studies have been conducted, to investigate emotional changes in the brain.

RNNs and CNNs are gaining popularity in the domain of emotion analysis [91, 92], while classic MLP techniques have a long history of use for the same purpose [93]. Zhang et al. [94] proposed a spatial-temporal recurrent neural network that employs a multi-directional RNN layer to discover long-range contextual cues and a bi-directional RNN layer to capture the sequential features produced by the preceding spatial RNN. Talathi [95] proposed a deep learning model based on gated recurrent unit (GRU) cells. Li et al. [96] studied the spatial configuration of EEG by transforming it into a two-dimensional matrix.

Yan et al. [97] created a novel Bimodal Deep AutoEncoder (BDAE) model for gender-based research in which the EEG patterns of males and females were fused with eye movement data and classified with an SVM to discover variations in emotional response. Chai et al. [98] addressed the problem of training dependency by designing a Subspace Alignment AutoEncoder (SAAE) that uses unsupervised domain adaptation; the technique had a mean accuracy of 77.8%. Zheng [99] combined a DBN with a hidden Markov model, while Zhao et al. [100] combined it with an SVM, to address emotional state in the unsupervised learning subspace.

2.3.5 Hybrid Methodologies

It has been observed that hybrid techniques, that is, amalgamations of two or more techniques, can synergize with each other and provide better results. This synergy also opens up new avenues for the evaluation and processing of EEG data. In this domain, the majority of improvements have been made in the last 7 years due to advances in sensor technology, improved neural network implementations, and the development of innovative hardware.

Popular models combine two of the most widely used techniques: CNN and RNN. Shah et al. [101] did this for a multi-channel seizure detection system with 33% sensitivity. Golmohammadi et al. [102] combined the temporal and spatial features of EEG by pairing CNN and LSTM to interpret EEG from the TUH seizure corpus, with a specificity of 96.85%. Morabito et al. [103] implemented a combination of MLP and D-AE for early-stage detection of Creutzfeldt-Jakob disease. In this system, the EEG was processed by the D-AE, with additional hidden layers for feature representation, and the MLP classified the results after training, with a mean accuracy of 82%. In unsupervised learning models, CNN has been used instead of generic AE layers with favorable results [104].
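Seizure-detection results are reported with different metrics: sensitivity for Shah et al. and specificity for Golmohammadi et al. These numbers are not directly comparable. The short sketch below computes sensitivity, specificity and F1 from binary labels using their standard confusion-matrix definitions. The label convention (1 = seizure) and the toy vectors are assumptions for illustration.

```python
def binary_metrics(y_true, y_pred):
    """Sensitivity, specificity and F1 for binary labels (1 = seizure, 0 = non-seizure)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0   # fraction of seizure segments caught
    specificity = tn / (tn + fp) if tn + fp else 0.0   # fraction of non-seizure segments kept
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    return {"sensitivity": sensitivity, "specificity": specificity, "f1": f1}


metrics = binary_metrics([1, 1, 1, 0, 0, 0, 0, 0], [1, 0, 0, 0, 0, 0, 0, 1])
```

In this toy case the detector misses two of three seizures (sensitivity 1/3) yet still has 80% specificity. This illustrates why a low sensitivity and a high specificity can coexist in the results above.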

Tan et al. [105] implemented a combination of CNN and RNN with a DBN-AE for dimensionality reduction to uncover hidden details in the spatial domain, with an average accuracy of 73%. Tabar et al. [106] combined a CNN and a seven-layer DBN-AE to extract frequency-domain and location representations with high precision.

Dong et al. [107] trained a hybrid LSTM-MLP model to deduce hierarchical features of temporal sleep stages. Manzano et al. [108] devised a CNN-MLP algorithm to predict sleep states; the CNN processed the EEG waveform, while the MLP was fed a pre-processed signal limited to frequencies below 32 Hz. Fraiwan et al. [109] designed a similar model for neonatal sleep. Supratak et al. [110] proposed an automated scoring system to assess sleep quality, which used a bi-directional LSTM to parse sleep features.

2.3.6 Recent Notable Advancements

The relation of EEG to changes in auditory and visual stimuli is of great interest to researchers. Stimuli have been presented in many ways, ranging from repeated sounds at a fixed frequency to a flickering light. They are also used to distinguish between the different moods of a subject [111]. Many studies have been conducted at different institutes around the world; the most prominent ones are mentioned here.

Stober et al. [112] focused on the effect of repeated stimuli and its relation to changes in EEG. The study used a CNN together with an AE for 12- and 24-category classification, reaching 24.4% to 27% accuracy. Sternin et al. [113] used a CNN to map the EEG changes that occur when a subject hears music. Sarkar et al. [114] designed a CNN and a three-stage DBN-RBM to classify audiovisual stimuli with 91.70% accuracy. EEG changes during intense cognitive activity and strenuous physical workload have also been studied [115, 116]. In this regard, Bashivan et al. published two studies [117, 118]: the former extracted spatial features via wavelet entropy and fed them to an MLP, and the latter analyzed general changes in wave-pattern formation during a dynamic workload. Li et al. [119] mapped variations across mental states of fatigue and alertness, while Yin et al. [120] applied a binary classifier, combined with D-AE refinement, to EEG recorded under varied mental workloads. This research has practical implications, such as detecting the response time of drunk drivers from their EEG, as done by Huang et al. [121]. Similarly, Hajinoroozi et al. [122] processed EEG with ICA followed by a DBN-RBM network, achieving a satisfactory accuracy of 85%. San et al. [123] developed another DBN-RBM method for the same case of alcohol influence on brain function, with 73.29% accuracy. Almogbel et al. [124] proposed a method that directly infers the mental condition of a subject from raw EEG signals parsed by a CNN.

Event-related desynchronization and synchronization refer to changes in EEG signals, specifically in the power of the waveforms, across different brain states [125]. In this context, there are two important factors: ERD and ERS. The former denotes a decrease in the power of the EEG signal, while the latter denotes an increase. Research based on these factors is limited owing to low accuracy across subjects [125]. However, there are promising avenues with great potential. For example, Baltatzis et al. [126] trained a CNN to detect, from EEG patterns, whether a subject is a victim of school bullying. Similar work by Volker et al. [127] performed on par with or beyond other existing frameworks. Furthering this stream of work, Zhang et al. [128] discovered latent information by incorporating graph theory into the CNN.
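ERD and ERS are conventionally quantified as the percentage change in band power during an event relative to a reference (baseline) interval: ERD/ERS% = (A − R) / R × 100. Here A is the power in the activity interval and R is the power in the reference interval. Negative values indicate ERD and positive values indicate ERS. The sketch below applies this definition to trials that are assumed to be already band-pass filtered. The interval slices and the synthetic trials are illustrative assumptions.

```python
import numpy as np


def erd_ers_percent(trials, ref, act):
    """ERD/ERS (%) = (A - R) / R * 100 for one channel.

    trials: (n_trials, n_samples) EEG already band-pass filtered to the band of interest.
    ref, act: slices selecting the reference (baseline) and activity intervals.
    """
    power = np.asarray(trials, dtype=float) ** 2   # squared amplitude = instantaneous power
    avg = power.mean(axis=0)                       # average power across trials
    R = avg[ref].mean()                            # mean power in the reference interval
    A = avg[act].mean()                            # mean power in the activity interval
    return (A - R) / R * 100.0                     # negative -> ERD, positive -> ERS


# Toy example: amplitude halves after the event, so power drops to a quarter.
trials = np.tile(np.r_[np.full(100, 2.0), np.full(100, 1.0)], (5, 1))
value = erd_ers_percent(trials, slice(0, 100), slice(100, 200))
```

Here the power falls from 4 to 1, giving −75%, that is, a strong desynchronization. Because the value is normalized to each subject's own baseline, it is partly robust to amplitude differences, although the inter-subject variability noted above remains.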

Mood Detection

Similar to the previous examples, the most common technique combines CNN with RNN to predict mood and relate mood changes to external stimuli. For example, Miranda-Correa et al. [129] built a cascaded CNN-RNN framework to predict personality characteristics. Attempts have also been made to study the efficacy of such systems in identifying gender from EEG signals [130]; that study reached an accuracy of 81% using a non-augmented CNN on public data libraries. Hernandez et al. [131] proposed a framework that used CNN algorithms to deduce the state of a driver, namely the time taken to form the intention to brake after a visual cue, with a resulting accuracy of 71.8%. Lawhern et al. [132] introduced EEGNet, a CNN-based model, to evaluate various robust aspects of brain signals in response to perceived changes. Teo et al. [133] created a recommendation system that learned from the like-to-dislike ratio when a subject was shown an image of a product.

ERP

The strategies in the previous section were based on the analysis of EEG signals. The studies reviewed in this section pertain to ERP (event-related potential). ERP studies mostly use the P300 phenomenon, so most studies here concern P300 scenarios. ERP has two subcategories, VEP and AEP, which are discussed in the following paragraphs.

Ma et al. [134] combined a genetic algorithm with a multi-level sensory system to compress raw data, which was then sent to a DBN-RBM for analysis of higher-level features. Maddula et al. [135] analyzed low-frequency P300 signals using a 2D CNN to extract spatial features in combination with an LSTM layer. Liu et al. [136] observed a high accuracy of 97.3% using a DBN-RBM model with an SVM classifier. The experiment by Gao et al. [137] used a combination of SVM classifiers followed by an AE for filtering; it achieved an accuracy of 88.1% with 150 points per data segment. Cecotti et al. [138] applied a CNN to low-pass-filtered P300 signals in an attempt to improve alphabet (character) detection from the P300. Liu et al. [139] proposed a batch-normalized variant of the CNN method; the framework comprised six independent batch layers. Kawasaki et al. [140] achieved an accuracy of 90.8% using an MLP to distinguish P300 responses from other patterns.
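Most P300 pipelines start by cutting the continuous EEG into epochs time-locked to each stimulus and averaging them. Activity that is not phase-locked to the stimulus tends to cancel out, leaving the event-related potential. The sketch below shows this baseline-corrected averaging on synthetic data. The −100 ms to 600 ms window, the 250 Hz rate and the injected P300-like bump at 300 ms are assumptions for illustration.

```python
import numpy as np


def average_erp(signal, onsets, fs, tmin=-0.1, tmax=0.6):
    """Baseline-corrected average of stimulus-locked epochs (assumes tmin < 0)."""
    start, stop = int(round(tmin * fs)), int(round(tmax * fs))
    epochs = np.array([signal[o + start:o + stop] for o in onsets
                       if o + start >= 0 and o + stop <= len(signal)], dtype=float)
    baseline = epochs[:, :-start].mean(axis=1, keepdims=True)  # mean of pre-stimulus samples
    erp = (epochs - baseline).mean(axis=0)        # averaging attenuates non-time-locked activity
    times = np.arange(start, stop) / fs           # seconds relative to stimulus onset
    return times, erp


fs = 250
rng = np.random.default_rng(0)
signal = rng.normal(0.0, 0.5, 20000)              # synthetic background EEG (arbitrary units)
onsets = np.arange(500, 19000, 500)               # hypothetical stimulus onsets (samples)
for o in onsets:
    signal[o + 60:o + 91] += 5.0 * np.hanning(31) # synthetic P300-like bump peaking at 300 ms
times, erp = average_erp(signal, onsets, fs)
```

Deep models such as the CNN and DBN variants above usually classify single epochs rather than averages. They typically still rely on this same stimulus-locked epoching step.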

Works focused on AEP include Carabez et al. [141], who proposed an experiment to test AEP with CNN models, based on the auditory oddball paradigm. Eighteen different models were tested, showing accuracy comparable to other competing models. Better results were obtained when the AEP signal was downsampled to 25 Hz.
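Downsampling to 25 Hz keeps only content below 12.5 Hz (the new Nyquist frequency), where the slow AEP components lie. It also shrinks the input to the CNN. The sketch below decimates by averaging non-overlapping blocks, which acts as a crude anti-aliasing low-pass filter. The 250 Hz original rate is an assumption. A proper FIR/IIR decimator such as `scipy.signal.decimate` is usually preferred in practice.

```python
import numpy as np


def block_downsample(x, fs_in, fs_out):
    """Downsample by averaging non-overlapping blocks (crude anti-aliasing low-pass)."""
    if fs_in % fs_out:
        raise ValueError("this sketch assumes an integer decimation factor")
    factor = fs_in // fs_out
    x = np.asarray(x, dtype=float)
    n = (x.size // factor) * factor            # drop an incomplete final block
    return x[:n].reshape(-1, factor).mean(axis=1)


y = block_downsample(np.arange(250), fs_in=250, fs_out=25)  # 1 s at 250 Hz -> 25 samples
```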

RSVP

The rapid serial visual presentation (RSVP) paradigm has also garnered much attention due to its recent success [142, 143]. Most models band-pass filter the signal between 0.1 Hz and 50 Hz. Cecotti et al. [144] performed an experiment in which cars and faces were labeled as target and non-target. The images were presented at 2 Hz in each session, and a specialized CNN model was used to detect targets in the RSVP stream. The experiment gave promising results, with an AUC score of 86.1%. Mao et al. [145] created a trio of MLP, CNN, and DBN models to differentiate between a subject's perceptions of a target, with accuracies of 81.7%, 79.6%, and 81.6%, respectively. Another work by Mao et al. [146] addresses person identification in the RSVP paradigm. Vareka et al. [147] verified the performance of a P300 system in different scenarios where target identification was the main goal. The model was a DBN-AE with five hidden AE layers followed by two softmax layers, and it achieved an accuracy of 69%. A deep neural network applied by Manor et al. [148] to low-pass-filtered RSVP data achieved an accuracy of 85.06%.
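The 0.1 Hz to 50 Hz band-pass used by most RSVP models removes slow drift (including the DC offset) and high-frequency noise such as muscle activity. The sketch below implements it as a zero-phase "brick-wall" filter in the frequency domain, chosen for brevity. Studies more commonly use FIR or Butterworth filters, which avoid the ringing a brick-wall filter can cause. The 250 Hz rate and the test signal are assumptions.

```python
import numpy as np


def fft_bandpass(x, fs, low=0.1, high=50.0):
    """Zero-phase brick-wall band-pass: zero every FFT bin outside [low, high] Hz."""
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spectrum[(freqs < low) | (freqs > high)] = 0.0   # drop drift/DC and high-frequency content
    return np.fft.irfft(spectrum, n=x.size)


fs = 250
t = np.arange(10 * fs) / fs
raw = 3.0 + np.sin(2 * np.pi * 10 * t) + np.sin(2 * np.pi * 80 * t)  # offset + 10 Hz + 80 Hz
clean = fft_bandpass(raw, fs)
```

Only the 10 Hz component survives. The constant offset falls below 0.1 Hz and the 80 Hz component lies above 50 Hz, so both are removed.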

SSEP

This system focuses on the SSVEP (steady-state visual evoked potential), the brain oscillation observed when a flickering visual stimulus is presented. The phenomenon mainly concerns the activity of the occipital lobe, which is responsible for processing vision. Notable examples in this field include the CNN-RNN hybrid method by Attia et al. [149] to capture and process information from this region of the brain. Waytowich et al. [150] used a CNN to process SSVEP signals and study accuracy across subjects. Thomas et al. [151] processed raw low-pass-filtered SSVEP data using a CNN and an LSTM, obtaining 69.03% and 66.89% accuracy, respectively. Aznan et al. [152] applied a discriminative CNN to standard SSVEP signals to reach a high accuracy of 96%; this study used signals from dry electrodes, which are more challenging to process than standard EEG signals. Hachem et al. [153] studied wheelchair automation based on the fatigue level of the user, using SSVEP and an MLP to detect the patient's fatigue. Kulasingham et al. [154] applied DBN-AE and DBN-RBM to study the results of a guilty knowledge test, achieving accuracies of 86.02% and 86.89%, respectively. Prez et al. [155] achieved an accuracy of 97.78% using softmax layers to process SSVEP signals from multi-source stimuli.
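Because an SSVEP oscillates at the flicker frequency of the attended stimulus and its harmonics, the simplest decoder just compares spectral power at each candidate frequency. The sketch below is this non-learning baseline, not the method of any cited study. The deep models above replace this step with learned features, and canonical correlation analysis (CCA) is another common baseline. The candidate frequencies, the number of harmonics and the synthetic occipital signal are assumptions.

```python
import numpy as np


def detect_ssvep(x, fs, candidates, harmonics=2):
    """Return the candidate flicker frequency with the most power at f, 2f, ..."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(x)) ** 2
    scores = []
    for f0 in candidates:
        # sum the power in the bins nearest to the fundamental and its harmonics
        bins = [np.argmin(np.abs(freqs - h * f0)) for h in range(1, harmonics + 1)]
        scores.append(power[bins].sum())
    return candidates[int(np.argmax(scores))]


fs = 250
t = np.arange(4 * fs) / fs
occipital = np.sin(2 * np.pi * 12 * t) + 0.5 * np.sin(2 * np.pi * 24 * t)  # 12 Hz SSVEP
selected = detect_ssvep(occipital, fs, [8.0, 10.0, 12.0, 15.0])
```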

fNIRS

In [156], Naseer et al. extracted fNIRS data of the brain during rest and workload (mathematical tasks). Six features were extracted from the prefrontal cortex signals and analyzed by a number of different models. Of the models tested, the MLP gave the best result, with 96.3% accuracy, outperforming the other systems such as KNN, SVM, and Bayes. Similarly, Huve et al. [157] designed an MLP model and extracted data during three states: rest, cognitive activity, and vocabulary activity. The model achieved an accuracy of 66.48%. In another study, the same authors examined the binary classification capabilities of this model and obtained an accuracy of 66%. Chiarelli et al. [158] combined EEG signals with an fNIRS scan for left/right motor imagery (MI) classification. The study used a 16-lead system with eight leads for each of OxyHb and DeoxyHb. Furthermore, Hiroyasu et al. [159] carried out gender detection using a D-AE as a denoising tool followed by an MLP for classification. The model achieved an accuracy of 81% on the local datasets. A corollary of this paper was that fNIRS is more affordable than PET (Positron Emission Tomography) scans.

fMRI

fMRI is of great use in detecting brain impairment, including both injury-related damage and psychological and cognitive problems [160, 161].

In this case too, CNN is the most prevalent approach, with varying results. Havaei et al. [162] created a CNN-based model that reads both local and global data to analyze brain tumors; the model obtained a score of 88% when applied to the BRATS dataset. Different filters have been used for different cases, providing more accurate results. In another diagnostic field, Sarraf et al. [163] implemented a deep CNN to recognize signs of Alzheimer's disease in fMRI scans. Similarly, Hosseini et al. [164] applied a CNN with SVM classifiers to detect epileptic seizures. Moreover, Li et al. [165] used fMRI to buttress the data from PET scans; in this model, a two-layer CNN mapped the connection between the two modalities and outperformed the non-combined model, reaching 92.87% accuracy. In a different study, Koyamada et al. [166] extracted the features common to all subjects in the HCP (Human Connectome Project). These models are also used for disease detection. Hu et al. [167] divided the brain into 90 regions and converted the data derived from these regions into a correlation matrix capturing the functional activity between the different segments. This use of fMRI allowed Alzheimer's disease to be detected in its early stages. The system was later improved by adding an AE as a filter, which gave a net accuracy of 87.5%. Plis et al. [168] used a multi-layer DBN-RBM to extract features from ICA-processed fMRI signals, achieving average F1 scores of 90% across multiple datasets. Suk et al. [169] fed fMRI data from cognitively impaired subjects into an SVM to discover new features; after training, the SVM classifier achieved an accuracy of 72.58%. Similarly, Ortiz et al. [170] merged multiple SVM classifiers to achieve greater accuracy in analyzing fMRI data. Generative models in this field focus on the relation between a stimulus and the corresponding fMRI data. For example, Seeliger et al. [171] devised a convolutional GAN trained to generate the perceived visual image from fMRI data. Studies of this type have great potential for helping people with disabilities in the future. Shen et al. [172] presented an alternative approach that maps the relation between fMRI activity and the distance of the target from the observer. Han et al. [173] compared two GANs, DCGAN (Deep Convolutional GAN) and WGAN (Wasserstein GAN), and concluded that DCGAN outperformed WGAN.
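The region-wise correlation matrix described for Hu et al. is a standard functional-connectivity representation. Each region's mean BOLD time series is correlated with every other region's, and the unique off-diagonal entries become a feature vector. The sketch below shows this with random data standing in for 90 regional time series. The 120-volume length and the function name are assumptions, and this is not Hu et al.'s code.

```python
import numpy as np


def connectivity_features(roi_ts):
    """Functional-connectivity features from (n_timepoints, n_regions) ROI time series."""
    corr = np.corrcoef(roi_ts, rowvar=False)      # (n_regions, n_regions) Pearson matrix
    upper = np.triu_indices_from(corr, k=1)       # each region pair once, diagonal excluded
    return corr[upper]


rng = np.random.default_rng(0)
roi_ts = rng.normal(size=(120, 90))               # hypothetical: 120 volumes, 90 regions
features = connectivity_features(roi_ts)
```

With 90 regions this yields 90 × 89 / 2 = 4005 pairwise features per subject. That high dimensionality relative to typical cohort sizes is one reason an AE filter or other dimensionality reduction is added before classification.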

MEG

Cichy et al. [174] combined MEG with fMRI for object recognition. Shu et al. [175] demonstrated a newer approach to single-word recognition using a sparse AE to decode the MEG; however, it did not prove any better than conventional means. Hasasneh et al. [176] combined an MLP with a simple CNN to extract features from MEG recordings. Garg et al. [177] streamlined MEG data by refining the video and purging unnecessary artifacts such as blinking. A single-level CNN decoded the MEG components obtained by ICA. On a local dataset, this model showed a sensitivity of 85% and a specificity of 96%.

2.4 Case Study: A Hybrid EEG-fNIRS BCI

The model under review is a multimodal EEG-fNIRS system combined with a deep learning classification algorithm. It is trained on left- and right-hand motor imagery with a 1 second frame difference, and it is then compared with similar models based on fNIRS, EEG, and MI.

Equipment and Training

Brain activity was recorded using a 128-channel EEG system with electrode impedances at 50 kΩ, as per the manufacturer's recommendations. The HydroCel Geodesic Sensor Net [178] provided highly accurate signals. Real-time EEG processing at 250 Hz was done with a 1 second window. Brain hemodynamic activity was recorded over the sensorimotor region using a standard NIRS system. Optical fibers with 0.4 mm and 3 mm cores were used for the input and output channels, respectively.
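Real-time processing at 250 Hz with a 1 second window means the classifier receives the most recent 250 samples each time a window is complete. The sketch below buffers an incoming sample stream with a fixed-length `deque` and yields windows. The step size (non-overlapping by default) and the function name are assumptions, since the case study does not state whether windows overlap.

```python
from collections import deque


def sliding_windows(stream, fs=250, window_s=1.0, step_s=1.0):
    """Yield the latest `window_s` seconds of samples every `step_s` seconds."""
    size, step = int(fs * window_s), int(fs * step_s)
    buffer = deque(maxlen=size)          # oldest samples drop out automatically
    since_last = 0
    for sample in stream:
        buffer.append(sample)
        since_last += 1
        if len(buffer) == size and since_last >= step:
            yield list(buffer)           # one window ready for feature extraction
            since_last = 0


windows = list(sliding_windows(range(1000)))   # 4 s of samples at 250 Hz
```

Setting `step_s=0.5` gives 50% overlap between windows, which doubles the decision rate at the cost of more computation.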

The model was trained on data from a group of 15 volunteers who were free of psychoneurological diseases. The squeezing motion of each subject was recorded for both hands, with 5 s active and 10 s inactive phases: the hand was clenched during the active phase and relaxed during the inactive phase. The experiment recorded 200 training sets per subject, giving a total of 3000 sets.

Classifiers

The DNN model was customized as a feed-forward network. The hidden layers apply the ReLU activation function, which mitigates the vanishing-gradient problem seen with saturating activations such as the sigmoid. These are followed by a softmax layer whose output is a prediction of the left or right arm. The classifier was trained using a supervised learning approach in which the parameters were adjusted according to the difference between the desired and actual outputs.
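The classifier described above consists of ReLU hidden layers, a two-way softmax output and error-driven parameter updates. The minimal NumPy sketch below assumes one hidden layer trained by full-batch gradient descent on cross-entropy, which is the usual way to adjust weights from the difference between desired and actual outputs. The layer width, learning rate, epoch count and synthetic features are illustrative assumptions, not the case study's settings.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)            # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_mlp(X, y, hidden=16, lr=0.1, epochs=500, seed=0):
    """Full-batch gradient descent for a 1-hidden-layer ReLU network with softmax output."""
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=int)
    d = X.shape[1]
    W1 = rng.normal(0.0, np.sqrt(2.0 / d), (d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, np.sqrt(2.0 / hidden), (hidden, 2))
    b2 = np.zeros(2)
    Y = np.eye(2)[y]                                # one-hot targets: 0 = left, 1 = right
    for _ in range(epochs):
        H = np.maximum(0.0, X @ W1 + b1)            # ReLU hidden layer
        P = softmax(H @ W2 + b2)                    # class probabilities
        dZ2 = (P - Y) / len(y)                      # gradient of mean cross-entropy w.r.t. logits
        dZ1 = (dZ2 @ W2.T) * (H > 0)                # propagate the error back through the ReLU
        W2 -= lr * (H.T @ dZ2)
        b2 -= lr * dZ2.sum(axis=0)
        W1 -= lr * (X.T @ dZ1)
        b1 -= lr * dZ1.sum(axis=0)
    return W1, b1, W2, b2


def predict(params, X):
    W1, b1, W2, b2 = params
    H = np.maximum(0.0, np.asarray(X, dtype=float) @ W1 + b1)
    return np.argmax(H @ W2 + b2, axis=1)


rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-2, 1, (50, 4)), rng.normal(2, 1, (50, 4))])  # synthetic features
y = np.array([0] * 50 + [1] * 50)
params = train_mlp(X, y)
accuracy = float(np.mean(predict(params, X) == y))
```

In the real system, `X` would hold EEG and fNIRS features per trial. Deeper stacks simply repeat the ReLU layer before the softmax.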

Performance and Analysis

The model was tested thoroughly using 10-fold cross-validation on 200 trials covering both arms, with 20 trials per fold. For comparison, the other models (SVM, KNN) were trained with the same parameters as the experimental model.
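With 200 trials and 10 folds, each iteration holds out 20 trials for testing and trains on the remaining 180. Every trial is tested exactly once. The sketch below implements this splitting with NumPy and accepts any `fit`/`predict` pair. A nearest-centroid classifier is used as a hypothetical stand-in for the DNN, SVM or KNN models, and the synthetic data are assumptions.

```python
import numpy as np


def k_fold_indices(n, k=10, seed=0):
    """Shuffle trial indices and split them into k roughly equal folds."""
    return np.array_split(np.random.default_rng(seed).permutation(n), k)


def cross_validate(X, y, fit, predict, k=10, seed=0):
    """Mean accuracy over k folds; each fold is held out once for testing."""
    folds = k_fold_indices(len(y), k, seed)
    scores = []
    for i, test_idx in enumerate(folds):
        train_idx = np.concatenate([f for j, f in enumerate(folds) if j != i])
        model = fit(X[train_idx], y[train_idx])
        scores.append(float(np.mean(predict(model, X[test_idx]) == y[test_idx])))
    return float(np.mean(scores)), scores


# Hypothetical stand-in classifier: nearest class centroid.
def fit_centroids(X, y):
    return np.array([X[y == c].mean(axis=0) for c in (0, 1)])


def predict_centroids(centroids, X):
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return np.argmin(dists, axis=1)


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-1, 1, (100, 8)), rng.normal(1, 1, (100, 8))])  # 200 synthetic trials
y = np.array([0] * 100 + [1] * 100)                                       # 0 = left, 1 = right
mean_acc, fold_scores = cross_validate(X, y, fit_centroids, predict_centroids)
```

Passing the same folds (same `seed`) to every model being compared keeps the comparison fair.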

2.5 Conclusion, Open Issues and Future Endeavors

Theoretically, the brain–computer interface community has seen many path-breaking developments, such as hybrid algorithms, state-of-the-art output devices, and noise removal or robust filtering techniques [179, 180]. Some of the open issues are listed below:

- Functional gaps in implementation of the framework – Many functional gaps have been identified in the currently used brain signal capturing and implementation framework, which uses EEG, ERP, and fMRI as brain signals, machine learning for feature processing, and classical filtering techniques for feature extraction or artifact removal [181]. Using deep learning for feature extraction, feature processing, and prediction could solve many current challenges, as it is capable of handling multiple features without overfitting, obtaining optimized results on a small dataset, adapting to the changing features of brain signals, and continuously mapping user feedback [182]. The past few years have seen some research in this direction [183, 184], but using deep learning for feature extraction and feature processing is still a nascent research domain.

- Prospect-independent or signature-dependent brain signal processing – The current stance of brain–computer interfaces is focused on deliberating over the signals detected or collated for a single person/prospect/entity. A single brain is analyzed for any present signature and features, and then the intended action for those particular features is identified. An open research area for future researchers is to consider multiple prospects and their multiple features instead of deliberating over a single one. This will help researchers map a particular signal for a specific activity back to its respective signature, thereby building a signature library, which will not only expedite the brain–computer interface process but also create room for understanding the anatomy of the human brain from a fresh perspective. Moreover, it also solves the challenge of adapting to a prospect's changing brain signals and then starting afresh with another prospect, giving research a process-contingent view instead of a prospect-contingent one [185].

- Real-time brain signal processing – Present research setups mostly work offline with previously collected data that is later processed in the BCI units. With advancing technology, however, almost everything works on real-time data: web applications, machine learning models, and even prosthetic systems work with live streams of data continuously flowing in real time [186, 187]. BCI setups, by contrast, first collect the data and then process it later, depending on the availability of the processing units. Thus, a research domain open to future researchers is implementing brain–computer interface setups with online resources and live data streams, i.e., brain signals flowing in real time to the processing units for feature extraction, filtering, processing, and prediction, and straight on to the output devices to perform the intended actions [188].

- Lightweight and flexible hardware – Most brain–computer interfaces require very heavy, complex, and expensive hardware that is neither flexible, easy to handle, nor portable to other devices or technical frameworks. The brain signal capturing devices are very heavy, unattractive, and large, making users uncomfortable (Figure 2.7). In most cases, users are very hesitant to wear such devices out of sheer fear or disgust.

Figure 2.7 Wearable BCI devices.

References

- 1. Kumar, Y., Dewal, M.L., Anand, R.S., Relative wavelet energy and wavelet entropy based epileptic brain signals classification. Biomed. Eng. Lett., 2, 3, 147–157, 2012.

- 2. Sanei, S., Adaptive processing of brain signals, John Wiley & Sons, 23–28, 2013.

- 3. Soon, C.S., Allefeld, C., Bogler, C., Heinzle, J., Haynes, J.D., Predictive brain signals best predict upcoming and not previous choices. Front. Psychol., 5, 406, 2014.

- 4. Waldert, S., Invasive vs. non-invasive neuronal signals for brain-machine interfaces: Will one prevail? Front. Neurosci., 10, 295, 2016.

- 5. Ball, T., Kern, M., Mutschler, I., Aertsen, A., Schulze-Bonhage, A., Signal quality of simultaneously recorded invasive and non-invasive EEG. Neuroimage, 46, 3, 708–716, 2009.

- 6. Schalk, G., McFarland, D.J., Hinterberger, T., Birbaumer, N., Wolpaw, J.R., BCI2000: a general-purpose brain–computer interface (BCI) system. IEEE Trans. Biomed. Eng., 51, 6, 1034–1043, 2004.

- 7. Wolpaw, J.R., McFarland, D.J., Vaughan, T.M., Schalk, G., The Wadsworth Center brain–computer interface (BCI) research and development program. IEEE Trans. Neural Syst. Rehabil. Eng., 11, 2, 1–4, 2003.

- 8. Curran, E.A. and Stokes, M.J., Learning to control brain activity: A review of the production and control of EEG components for driving brain–computer interface (BCI) systems. Brain Cogn., 51, 3, 326–336, 2003.

- 9. Fabiani, G.E., McFarland, D.J., Wolpaw, J.R., Pfurtscheller, G., Conversion of EEG activity into cursor movement by a brain–computer interface (BCI). IEEE Trans. Neural Syst. Rehabil. Eng., 12, 3, 331–338, 2004.

- 10. Sathyamoorthy, M., Kuppusamy, S., Dhanaraj, R.K. et al., Improved K-means based Q learning algorithm for optimal clustering and node balancing in WSN. Wirel. Pers. Commun., 122, 2745–2766, 2022.

- 11. Moore, M.M., Real-world applications for brain–computer interface technology. IEEE Trans. Neural Syst. Rehabil. Eng., 11, 2, 162–165, 2003.

- 12. Jena, S.R., Shanmugam, R., Saini, K., Kumar, S., Cloud computing tools: Inside views and analysis. Procedia Comput. Sci., 173, 382–391, 2020.

- 13. Hwang, H.J., Kwon, K., Im, C.H., Neurofeedback-based motor imagery training for brain–computer interface (BCI). J. Neurosci. Methods, 179, 1, 150–156, 2009.

- 14. Pfurtscheller, G. and Neuper, C., Future prospects of ERD/ERS in the context of brain–computer interface (BCI) developments. Prog. Brain Res., 159, 433–437, 2006.

- 15. Pfurtscheller, G., Müller-Putz, G.R., Scherer, R., Neuper, C., Rehabilitation with brain–computer interface systems. Computer, 41, 10, 58–65, 2008.

- 16. Fatourechi, M., Bashashati, A., Ward, R.K., Birch, G.E., EMG and EOG artifacts in brain computer interface systems: A survey. Clin. Neurophysiol., 118, 3, 480–494, 2007.

- 17. Käthner, I., Ruf, C.A., Pasqualotto, E., Braun, C., Birbaumer, N., Halder, S., A portable auditory P300 brain–computer interface with directional cues. Clin. Neurophysiol., 124, 2, 327–338, 2013.

- 18. İşcan, Z. and Nikulin, V.V., Steady state visual evoked potential (SSVEP) based brain–computer interface (BCI) performance under different perturbations. PloS One, 13, 1, e0191673, 2018.

- 19. Huang, D., Qian, K., Fei, D.Y., Jia, W., Chen, X., Bai, O., Electroencephalography (EEG)-based brain–computer interface+ (BCI): A 2-D virtual wheelchair control based on event-related desynchronization/synchronization and state control. IEEE Trans. Neural Syst. Rehabil. Eng., 20, 3, 379–388, 2012.

- 20. Brouwer, A.M. and Van Erp, J.B., A tactile P300 brain–computer interface. Front. Neurosci., 4, 19, 2010.

- 21. Donchin, E. and Coles, M.G., Is the P300 component a manifestation of context updating. Behav. Brain Sci., 11, 3, 357–427, 1988.

- 22. Bayliss, J.D., Inverso, S.A., Tentler, A., Changing the P300 brain computer interface. Cyber Psychol. Behav., 7, 6, 694–704, 2004.

- 23. Serby, H., Yom-Tov, E., Inbar, G.F., An improved P300-based brain–computer interface. IEEE Trans. Neural Syst. Rehabil. Eng., 13, 1, 89–98, 2005.

- 24. Jin, J., Sellers, E.W., Zhou, S., Zhang, Y., Wang, X., Cichocki, A., A P300 brain–computer interface based on a modification of the mismatch negativity paradigm. Int. J. Neural Syst., 25, 03, 1550011, 2015.

- 25. Münßinger, J.I., Halder, S., Kleih, S.C., Furdea, A., Raco, V., Hösle, A., Kübler, A., Brain painting: First evaluation of a new brain–computer interface application with ALS-patients and healthy volunteers. Front. Neurosci., 4, 182, 2010.

- 26. Käthner, I., Ruf, C.A., Pasqualotto, E., Braun, C., Birbaumer, N., Halder, S., A portable auditory P300 brain–computer interface with directional cues. Clin. Neurophysiol., 124, 2, 327–338, 2013.

- 27. Tong, J. and Zhu, D., Multi-phase cycle coding for SSVEP based brain–computer interfaces. Biomed. Eng. Online, 14, 1, 1–13, 2015.

- 28. Van Gerven, M., Farquhar, J., Schaefer, R., Vlek, R., Geuze, J., Nijholt, A., Desain, P., The brain–computer interface cycle. J. Neural Eng., 6, 4, 041001, 2009.

- 29. Neuper, C., Scherer, R., Wriessnegger, S., Pfurtscheller, G., Motor imagery and action observation: Modulation of sensorimotor brain rhythms during mental control of a brain–computer interface. Clin. Neurophysiol., 120, 2, 239–247, 2009.

- 30. Ramakrishnan, V., Chenniappan, P., Dhanaraj, R.K., Hsu, C.H., Xiao, Y., Al-Turjman, F., Bootstrap aggregative mean shift clustering for big data anti-pattern detection analytics in 5G/6G communication networks. Comput. Electr. Eng., 95, 107380, 2021.

- 31. Birbaumer, N., Ghanayim, N., Hinterberger, T., Iversen, I., Kotchoubey, B., Kübler, A., Flor, H., Freeing the mind: Brain communication that bypasses the body, in: Pioneering studies in cognitive neuroscience, vol. 67, 2009.

- 32. Hwang, H.J., Kwon, K., Im, C.H., Neurofeedback-based motor imagery training for brain–computer interface (BCI). J. Neurosci. Methods, 179, 1, 150–156, 2009.

- 33. Dhanaraj, R.K. et al., Random forest bagging and x-means clustered antipattern detection from SQL query log for accessing secure mobile data. Wirel. Commun. Mob. Comput., 3–7, 2021, 2021.

- 34. Jensen, O., Bahramisharif, A., Oostenveld, R., Klanke, S., Hadjipapas, A., Okazaki, Y.O., van Gerven, M.A., Using brain–computer interfaces and brain-state dependent stimulation as tools in cognitive neuroscience. Front. Psychol., 2, 100, 2011.

- 35. Sellers, E.W., Kubler, A., Donchin, E., Brain–computer interface research at the University of South Florida Cognitive Psychophysiology Laboratory: The P300 speller. IEEE Trans. Neural Syst. Rehabil. Eng., 14, 2, 221–224, 2006.

- 36. Palaniappan, R., Brain computer interface design using band powers extracted during mental tasks, in: Conference Proceedings. 2nd International IEEE EMBS Conference on Neural Engineering, 2005, IEEE, pp. 321–324, 2005, March.

- 37. Formisano, E., De Martino, F., Bonte, M., Goebel, R., “Who” is saying “what”? Brain-based decoding of human voice and speech. Science, 322, 5903, 970–973, 2008.

- 38. Lotte, F., Congedo, M., Lécuyer, A., Lamarche, F., Arnaldi, B., A review of classification algorithms for EEG-based brain–computer interfaces. J. Neural Eng., 4, 2, R1, 2007.

- 39. Scharnowski, F. and Weiskopf, N., Cognitive enhancement through real-time fMRI neurofeedback. Curr. Opin. Behav. Sci., 4, 122–127, 2015.

- 40. Hinterberger, T., Veit, R., Wilhelm, B., Weiskopf, N., Vatine, J.J., Birbaumer, N., Neuronal mechanisms underlying control of a brain–computer interface. Eur. J. Neurosci., 21, 11, 3169–3181, 2005.

- 41. Hinterberger, T., Veit, R., Lal, T.N., Birbaumer, N., Neural mechanisms underlying control of a Brain–Computer-Interface (BCI): Simultaneous recording of bold-response and EEG, in: 44th Annual Meeting of the Society for Psychophysiological Research, Blackwell Publishing Inc, p. S100, 2004, September.

- 42. Carmena, J.M., Lebedev, M.A., Crist, R.E., O’Doherty, J.E., Santucci, D.M., Dimitrov, D.F., Nicolelis, M.A., Learning to control a brain–machine interface for reaching and grasping by primates. PloS Biol., 1, 2, e42, 2003.

- 43. Musallam, S., Andersen, R.A., Corneil, B.D., Greger, B., Scherberger, H., U.S. Patent and Trademark Office, Washington, DC, 2010.

- 44. Hochberg, L.R., Serruya, M.D., Friehs, G.M., Mukand, J.A., Saleh, M., Caplan, A.H., Donoghue, J.P., Neuronal ensemble control of prosthetic devices by a human with tetraplegia. Nature, 442, 7099, 164–171, 2006.

- 45. Schwartz, A.B., Cortical neural prosthetics. Annu. Rev. Neurosci., 27, 487–507, 2004.

- 46. Johnson, L.A., Blakely, T., Hermes, D., Hakimian, S., Ramsey, N.F., Ojemann, J.G., Sleep spindles are locally modulated by training on a brain–computer interface. Proceedings of the National Academy of Sciences, vol. 109, pp. 18583–18588, 2012.

- 47. Middendorf, M., McMillan, G., Calhoun, G., Jones, K.S., Brain–computer interfaces based on the steady-state visual-evoked response. IEEE Trans. Rehabil. Eng., 8, 2, 211–214, 2000.

- 48. Petersen, S.E., Fox, P.T., Posner, M.I., Mintun, M., Raichle, M.E., Positron emission tomographic studies of the cortical anatomy of single-word processing. Nature, 331, 6157, 585–589, 1988.

- 49. McFarland, D.J. and Wolpaw, J.R., Sensorimotor rhythm-based brain–computer interface (BCI): Feature selection by regression improves performance. IEEE Trans. Neural Syst. Rehabil. Eng., 13, 3, 372–379, 2005.

- 50. Kohlmorgen, J., Dornhege, G., Braun, M., Blankertz, B., Müller, K.R., Curio, G., Kincses, W., Improving human performance in a real operating environment through real-time mental workload detection. Toward Brain–Comput. Interfacing, 409–422, 2007.

- 51. Raina, R., Ng, A.Y., Koller, D., Constructing informative priors using transfer learning, in: Proceedings of the 23rd international conference on Machine learning, pp. 713–720, 2006, June.

- 52. van Gerven, M., Hesse, C., Jensen, O., Heskes, T., Interpreting single trial data using groupwise regularisation. NeuroImage, 46, 3, 665–676, 2009.

- 53. Sykacek, P., Roberts, S.J., Stokes, M., Adaptive BCI based on variational Bayesian Kalman filtering: an empirical evaluation. IEEE Trans. Biomed. Eng., 51, 5, 719–727, 2004.

- 54. Vidaurre, C., Schlogl, A., Cabeza, R., Scherer, R., Pfurtscheller, G., A fully on-line adaptive BCI. IEEE Trans. Biomed. Eng., 53, 6, 1214–1219, 2006.

- 55. Nijboer, F., Furdea, A., Gunst, I., Mellinger, J., McFarland, D.J., Birbaumer, N., Kübler, A., An auditory brain–computer interface (BCI). J. Neurosci. Methods, 167, 1, 43–50, 2008.

- 56. Birbaumer, N., Ghanayim, N., Hinterberger, T., Iversen, I., Kotchoubey, B., Kübler, A., Flor, H., A spelling device for the paralysed. Nature, 398, 6725, 297–298, 1999.

- 57. Nicolelis, M.A., Actions from thoughts. Nature, 409, 6818, 403–407, 2001.

- 58. Birbaumer, N. and Cohen, L.G., Brain–computer interfaces: Communication and restoration of movement in paralysis. J. Physiol., 579, 3, 621–636, 2007.

- 59. Mason, S.G., Bashashati, A., Fatourechi, M., Navarro, K.F., Birch, G.E., A comprehensive survey of brain interface technology designs. Ann. Biomed. Eng., 35, 2, 137–169, 2007.

- 60. Wolpaw, J.R. and McFarland, D.J., Control of a two-dimensional movement signal by a noninvasive brain–computer interface in humans. Proceedings of the national academy of sciences, vol. 101, pp. 17849–17854, 2004.

- 61. Yuan, Y., Xun, G., Ma, F., Suo, Q., Xue, H., Jia, K., Zhang, A., A novel channel-aware attention framework for multi-channel EEG seizure detection via multi-view deep learning, in: 2018 IEEE EMBS International Conference on Biomedical & Health Informatics (BHI), IEEE, pp. 206–209, 2018, March.

- 62. Johansen, A.R., Jin, J., Maszczyk, T., Dauwels, J., Westover, M.B., Epileptiform spike detection via convolutional neural networks, in: IEEE International Conference on Acoustics, Sp, .

- 63. Morabito, F.C., Campolo, M., Ieracitano, C., Ebadi, J.M., Bonanno, L., Bramanti, A., Bramanti, P., Deep convolutional neural networks for classification of mild cognitive impaired and Alzheimer’s disease patients from scalp EEG recordings, in: 2016 IEEE 2nd International Forum on Research and Technologies for Society and Industry Leveraging a better tomorrow (RTSI), IEEE, pp. 1–6, 2016, September.

- 64. Chu, L., Qiu, R., Liu, H., Ling, Z., Zhang, T., Wang, J., Individual recognition in schizophrenia using deep learning methods with random forest and voting classifiers: Insights from resting state EEG streams. arXiv preprint arXiv:1707.03467, 2017.

- 65. Acharya, U.R., Oh, S.L., Hagiwara, Y., Tan, J.H., Adeli, H., Subha, D.P., Automated EEG-based screening of depression using deep convolutional neural network. Comput. Methods Programs Biomed., 161, 103–113, 2018.

- 66. Ruffini, G., Ibañez, D., Castellano, M., Dunne, S., Soria-Frisch, A., EEG-driven RNN classification for prognosis of neurodegeneration in at-risk patients, in: International Conference on Artificial Neural Networks, Springer, Cham, pp. 306–313, 2016, September.

- 67. Hosseini, M.P., Soltanian-Zadeh, H., Elisevich, K., Pompili, D., Cloud-based deep learning of big EEG data for epileptic seizure prediction, in: 2016 IEEE global conference on signal and information processing (GlobalSIP), IEEE, pp. 1151–1155, 2016, December.

- 68. Lin, Q., Ye, S.Q., Huang, X.M., Li, S.Y., Zhang, M.Z., Xue, Y., Chen, W.S., Classification of epileptic EEG signals with stacked sparse autoencoder based on deep learning, in: International Conference on Intelligent Computing, Springer, Cham, pp. 802–810, 2016, August.

- 69. Page, A., Turner, J.T., Mohsenin, T., Oates, T., Comparing raw data and feature extraction for seizure detection with deep learning methods, in: The Twenty-Seventh International FLAIRS Conference, 2014, May.

- 70. Al-kaysi, A.M., Al-Ani, A., Boonstra, T.W., A multichannel deep belief network for the classification of EEG data, in: International Conference on Neural Information Processing, Springer, Cham, pp. 38–45, 2015, November.

- 71. Wang, Q., Hu, Y., Chen, H., Multi-channel EEG classification based on fast convolutional feature extraction, in: International Symposium on Neural Networks, Springer, Cham, pp. 533–540, 2017, June.

- 72. Zhang, X., Yao, L., Huang, C., Sheng, Q.Z., Wang, X., Intent recognition in smart living through deep recurrent neural networks, in: International Conference on Neural Information Processing, Springer, Cham, pp. 748–758, 2017, November.

- 73. Lee, H.K. and Choi, Y.S., A convolution neural networks scheme for classification of motor imagery EEG based on wavelet time-frequency image, in: 2018 International Conference on Information Networking (ICOIN), IEEE, pp. 906–909, 2018, January.

- 74. Hartmann, K.G., Schirrmeister, R.T., Ball, T., Hierarchical internal representation of spectral features in deep convolutional networks trained for EEG decoding, in: 2018 6th International Conference on Brain–Computer Interface (BCI), IEEE, pp. 1–6, 2018, January.

- 75. Lu, N., Li, T., Ren, X., Miao, H., A deep learning scheme for motor imagery classification based on restricted Boltzmann machines. IEEE Trans. Neural Syst. Rehabil. Eng., 25, 6, 566–576, 2016.

- 76. Li, J. and Cichocki, A., Deep learning of multifractal attributes from motor imagery induced EEG, in: International Conference on Neural Information Processing, Springer, Cham, pp. 503–510, 2014, November.

- 77. Ren, Y. and Wu, Y., Convolutional deep belief networks for feature extraction of EEG signal, in: 2014 International joint conference on neural networks (IJCNN), IEEE, pp. 2850–2853, 2014, July.

- 78. Zhang, X., Yao, L., Huang, C., Wang, S., Tan, M., Long, G., Wang, C., Multi-modality sensor data classification with selective attention. arXiv preprint arXiv:1804.05493, 2018.

- 79. Zhang, X., Yao, L., Zhang, D., Wang, X., Sheng, Q.Z., Gu, T., Multi-person brain activity recognition via comprehensive EEG signal analysis, in: Proceedings of the 14th EAI International Conference on Mobile and Ubiquitous Systems: Computing, Networking and Services, pp. 28–37, 2017, November.

- 80. Nurse, E.S., Karoly, P.J., Grayden, D.B., Freestone, D.R., A generalizable brain–computer interface (BCI) using machine learning for feature discovery. PLoS One, 10, 6, e0131328, 2015.

- 81. Redkar, S., Using deep learning for human computer interface via electroencephalography. IAES Int. J. Robot. Automat., 4, 4, 2015.

- 82. Massa, R., de Saint-Martin, A., Hirsch, E., Marescaux, C., Motte, J., Seegmuller, C., Metz-Lutz, M.N., Landau–Kleffner syndrome: Sleep EEG characteristics at onset. Clin. Neurophysiol., 111, S87–S93, 2000.

- 83. Kang, S.G., Mariani, S., Marvin, S.A., Ko, K.P., Redline, S., Winkelman, J.W., Sleep EEG spectral power is correlated with subjective-objective discrepancy of sleep onset latency in major depressive disorder. Prog. Neuropsychopharmacol. Biol. Psychiatry, 85, 122–127, 2018.

- 84. Dahl, R.E., Ryan, N.D., Matty, M.K., Birmaher, B., Al-Shabbout, M., Williamson, D.E., Kupfer, D.J., Sleep onset abnormalities in depressed adolescents. Biol. Psychiatry, 39, 6, 400–410, 1996.

- 85. Sors, A., Bonnet, S., Mirek, S., Vercueil, L., Payen, J.F., A convolutional neural network for sleep stage scoring from raw single-channel EEG. Biomed. Signal Process. Control, 42, 107–114, 2018.

- 86. Vilamala, A., Madsen, K.H., Hansen, L.K., Deep convolutional neural networks for interpretable analysis of EEG sleep stage scoring, in: 2017 IEEE 27th International Workshop on Machine Learning for Signal Processing (MLSP), IEEE, pp. 1–6, 2017, September.

- 87. Tan, D., Zhao, R., Sun, J., Qin, W., Sleep spindle detection using deep learning: a validation study based on crowdsourcing, in: 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE, pp. 2828–2831, 2015, August.

- 88. Zhang, J., Wu, Y., Bai, J., Chen, F., Automatic sleep stage classification based on sparse deep belief net and combination of multiple classifiers. Trans. Inst. Meas. Control, 38, 4, 435–451, 2016.

- 89. Biswal, S., Kulas, J., Sun, H., Goparaju, B., Westover, M.B., Bianchi, M.T., Sun, J., SLEEPNET: Automated sleep staging system via deep learning. arXiv preprint arXiv:1707.08262, 2017.

- 90. Tsiouris, K.M., Pezoulas, V.C., Zervakis, M., Konitsiotis, S., Koutsouris, D.D., Fotiadis, D.I., A long short-term memory deep learning network for the prediction of epileptic seizures using EEG signals. Comput. Biol. Med., 99, 24–37, 2018.

- 91. Li, J., Zhang, Z., He, H., Implementation of EEG emotion recognition system based on hierarchical convolutional neural networks, in: International Conference on Brain Inspired Cognitive Systems, Springer, Cham, pp. 22–33, 2016, November.

- 92. Liu, W., Jiang, H., Lu, Y., Analyze EEG signals with convolutional neural network based on power spectrum feature selection. Proceedings of Science, 2017.

- 93. Frydenlund, A. and Rudzicz, F., Emotional affect estimation using video and EEG data in deep neural networks, in: Canadian Conference on Artificial Intelligence, Springer, Cham, pp. 273–280, 2015, June.

- 94. Zhang, T., Zheng, W., Cui, Z., Zong, Y., Li, Y., Spatial–temporal recurrent neural network for emotion recognition. IEEE Trans. Cybern., 49, 3, 839–847, 2018.

- 95. Talathi, S.S., Deep recurrent neural networks for seizure detection and early seizure detection systems. arXiv preprint arXiv:1706.03283, 2017.

- 96. Wang, F., Zhong, S.H., Peng, J., Jiang, J., Liu, Y., Data augmentation for EEG-based emotion recognition with deep convolutional neural networks, in: International Conference on Multimedia Modeling, Springer, Cham, pp. 82–93, 2018, February.

- 97. Tang, H., Liu, W., Zheng, W.L., Lu, B.L., Multimodal emotion recognition using deep neural networks, in: International Conference on Neural Information Processing, Springer, Cham, pp. 811–819, 2017, November.

- 98. Chai, X., Wang, Q., Zhao, Y., Liu, X., Bai, O., Li, Y., Unsupervised domain adaptation techniques based on auto-encoder for non-stationary EEG-based emotion recognition. Comput. Biol. Med., 79, 205–214, 2016.

- 99. Zheng, W.L., Zhu, J.Y., Peng, Y., Lu, B.L., EEG-based emotion classification using deep belief networks, in: 2014 IEEE International Conference on Multimedia and Expo (ICME), IEEE, pp. 1–6, 2014, July.

- 100. Zhao, Y. and He, L., Deep learning in the EEG diagnosis of Alzheimer’s disease, in: Asian conference on computer vision, Springer, Cham, pp. 340–353, 2014, November.

- 101. Golmohammadi, M., Shah, V., Obeid, I., Picone, J., Deep learning approaches for automated seizure detection from scalp electroencephalograms, in: Signal processing in medicine and biology, pp. 235–276, Springer, Cham, 2020.

- 102. Golmohammadi, M., Ziyabari, S., Shah, V., de Diego, S.L., Obeid, I., Picone, J., Deep architectures for automated seizure detection in scalp EEGs, in: 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), IEEE, pp. 745–750, 2018.

- 103. Morabito, F.C., Campolo, M., Mammone, N., Versaci, M., Franceschetti, S., Tagliavini, F., Aguglia, U., Deep learning representation from electroencephalography of early-stage Creutzfeldt-Jakob disease and features for differentiation from rapidly progressive dementia. Int. J. Neural Syst., 27, 02, 1650039, 2017.

- 104. Wen, T. and Zhang, Z., Deep convolution neural network and autoencoders-based unsupervised feature learning of EEG signals. IEEE Access, 6, 25399–25410, 2018.

- 105. Tan, C., Sun, F., Zhang, W., Chen, J., Liu, C., Multimodal classification with deep convolutional-recurrent neural networks for electroencephalography, in: International Conference on Neural Information Processing, Springer, Cham, pp. 767–776, 2017, November.

- 106. Tabar, Y.R. and Halici, U., A novel deep learning approach for classification of EEG motor imagery signals. J. Neural Eng., 14, 1, 016003, 2016.

- 107. Dong, H., Supratak, A., Pan, W., Wu, C., Matthews, P.M., Guo, Y., Mixed neural network approach for temporal sleep stage classification. IEEE Trans. Neural Syst. Rehabil. Eng., 26, 2, 324–333, 2017.

- 108. Manzano, M., Guillén, A., Rojas, I., Herrera, L.J., Combination of EEG data time and frequency representations in deep networks for sleep stage classification, in: International Conference on Intelligent Computing, Springer, Cham, pp. 219–229, 2017, August.

- 109. Fraiwan, L. and Lweesy, K., Neonatal sleep state identification using deep learning autoencoders, in: 2017 IEEE 13th International Colloquium on Signal Processing & its Applications (CSPA), IEEE, pp. 228–231, 2017, March.

- 110. Supratak, A., Dong, H., Wu, C., Guo, Y., DeepSleepNet: A model for automatic sleep stage scoring based on raw single-channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng., 25, 11, 1998–2008, 2017.

- 111. Miranda-Correa, J.A. and Patras, I., A multi-task cascaded network for prediction of affect, personality, mood and social context using EEG signals, in: 2018 13th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2018), IEEE, pp. 373–380, 2018, May.

- 112. Stober, S., Cameron, D.J., Grahn, J.A., Classifying EEG recordings of rhythm perception, in: ISMIR, pp. 649–654, 2014.

- 113. Sternin, A., Stober, S., Grahn, J.A., Owen, A.M., Tempo estimation from the EEG signal during perception and imagination of music, in: International Workshop on Brain–Computer Music Interfacing/International Symposium on Computer Music Multidisciplinary Research (BCMI/CMMR), 2015.

- 114. Sternin, A., Stober, S., Grahn, J.A., Owen, A.M., Tempo estimation from the EEG signal during perception and imagination of music, in: International Workshop on Brain–Computer Music Interfacing/International Symposium on Computer Music Multidisciplinary Research (BCMI/CMMR), 2015.

- 115. Shang, J., Zhang, W., Xiong, J., Liu, Q., Cognitive load recognition using multi-channel complex network method, in: International Symposium on Neural Networks, Springer, Cham, pp. 466–474, 2017, June.

- 116. Gordienko, Y., Stirenko, S., Kochura, Y., Alienin, O., Novotarskiy, M., Gordienko, N., Deep learning for fatigue estimation on the basis of multimodal human-machine interactions. arXiv preprint arXiv:1801.06048, 2017.

- 117. Bashivan, P., Yeasin, M., Bidelman, G.M., Single trial prediction of normal and excessive cognitive load through EEG feature fusion, in: 2015 IEEE Signal Processing in Medicine and Biology Symposium (SPMB), pp. 1–5, 2015, December.

- 118. Bashivan, P., Rish, I., Yeasin, M., Codella, N., Learning representations from EEG with deep recurrent-convolutional neural networks, in: International Conference on Learning Representations (ICLR), 2016. arXiv preprint arXiv:1511.06448.

- 119. Li, P., Jiang, W., Su, F., Single-channel EEG-based mental fatigue detection based on deep belief network, in: 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE, pp. 367–370, 2016, August.

- 120. Yin, Z. and Zhang, J., Cross-session classification of mental workload levels using EEG and an adaptive deep learning model. Biomed. Signal Process. Control, 33, 30–47, 2017.

- 121. Hung, Y.C., Wang, Y.K., Prasad, M., Lin, C.T., Brain dynamic states analysis based on 3D convolutional neural network, in: 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), IEEE, pp. 222–227, 2017, October.

- 122. Hajinoroozi, M., Jung, T.P., Lin, C.T., Huang, Y., Feature extraction with deep belief networks for driver’s cognitive states prediction from EEG data, in: 2015 IEEE China Summit and International Conference on Signal and Information Processing (ChinaSIP), IEEE, pp. 812–815, 2015, July.

- 123. San, P.P., Ling, S.H., Chai, R., Tran, Y., Craig, A., Nguyen, H., EEG-based driver fatigue detection using hybrid deep generic model, in: 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE, pp. 800–803, 2016, August.

- 124. Almogbel, M.A., Dang, A.H., Kameyama, W., EEG-signals based cognitive workload detection of vehicle driver using deep learning, in: 2018 20th International Conference on Advanced Communication Technology (ICACT), IEEE, pp. 256–259, 2018, February.