Android for Game Developers

Android’s application framework is vast and can be confusing at times. For every possible task you can think of, there’s an API you can use. Of course, you have to learn the APIs first. Luckily, we game developers only need an extremely limited set of these APIs. All we want is a window with a single UI component that we can draw to, and from which we can receive input, as well as the ability to play back audio. This covers all of our needs for implementing the game framework that we designed in Chapter 3, and in a rather platform-agnostic way.

In this chapter, you’ll learn the bare minimum number of Android APIs that you need to make Mr. Nom a reality. You’ll be surprised at how little you actually need to know about these APIs to achieve that goal. Let’s recall what ingredients we need:

- Window management

- Input

- File I/O

- Audio

- Graphics

For each of these modules, there’s an equivalent in the application framework APIs. We’ll pick and choose the APIs needed to handle those modules, discuss their internals, and finally implement the respective interfaces of the game framework that we designed in Chapter 3.

If you happen to be coming from an iOS/Xcode background, we have a little section at the end of this chapter that will provide some translation and guidance. Before we can dive into window management on Android, however, we have to revisit something we discussed only briefly in Chapter 2: defining our application via the manifest file.

Defining an Android Application: The Manifest File

An Android application can consist of a multitude of different components:

- Activities: These are user-facing components that present a UI with which to interact.

- Services: These are components that work in the background and don’t have a visible UI. For example, a service might be responsible for polling a mail server for new e-mails.

- Content providers: These components make parts of your application data available to other applications.

- Intents: These are messages created by the system or applications themselves. They are then passed on to any interested party. Intents might notify us of system events such as the SD card being removed or the USB cable being connected. Intents are also used by the system for starting components of our application, such as activities. We can also fire our own intents to ask other applications to perform an action, such as opening a photo gallery to display an image or starting the Camera application to take a photo.

- Broadcast receivers: These react to specific intents, and they might execute an action, such as starting a specific activity or sending out another intent to the system.

An Android application has no single point of entry, as we are used to having on a desktop operating system (for example, in the form of Java’s main() method). Instead, components of an Android application are started up or asked to perform a certain action by specific intents.

What components comprise our application and to which intents these components react are defined in the application’s manifest file. The Android system uses this manifest file to get to know what makes up our application, such as the default activity to display when the application is started.

Note We are only concerned with activities in this book, so we’ll only discuss the relevant portions of the manifest file for this type of component. If you want to make yourself dizzy, you can learn more about the manifest file on the Android Developers site (http://developer.android.com).

The manifest file serves many more purposes than just defining an application’s components. The following list summarizes the relevant parts of a manifest file in the context of game development:

- The version of our application as displayed and used on Google Play

- The Android versions on which our application can run

- Hardware profiles our application requires (for example, multitouch, specific screen resolutions, or support for OpenGL ES 2.0)

- Permissions for using specific components, such as for writing to the SD card or accessing the networking stack

In the following subsections we will create a template manifest file that we can reuse, in a slightly modified manner, in all the projects we’ll develop throughout this book. For this, we’ll go through all the relevant XML tags that we need to define our application.

The <manifest> Element

The <manifest> tag is the root element of an AndroidManifest.xml file. Here’s a basic example:

<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.helloworld"
    android:versionCode="1"
    android:versionName="1.0"
    android:installLocation="preferExternal">
    ...
</manifest>

We are assuming that you have worked with XML before, so you should be familiar with the first line. The <manifest> tag specifies a namespace called android, which is used throughout the rest of the manifest file. The package attribute defines the root package name of our application. Later on, we’ll reference specific classes of our application relative to this package name.

The versionCode and versionName attributes specify the version of our application in two forms. The versionCode attribute is an integer that we have to increment each time we publish a new version of our application. It is used by Google Play to track our application’s version. The versionName attribute is displayed to users of Google Play when they browse our application. We can use any string we like here.

The installLocation attribute is only available to us if we set the build target of our Android project in Eclipse to Android 2.2 or newer. It specifies where our application should be installed. The string preferExternal tells the system that we’d like our application to be installed to the SD card. This only works on Android 2.2 or newer; the attribute is ignored by all earlier Android versions, which always install the application to internal storage. On Android 2.2 or newer, the application will be installed to external storage where possible.
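As a sketch of the alternatives, the installLocation attribute also accepts the values auto and internalOnly. The fragment below reuses the hypothetical com.helloworld package from the preceding example and leaves the choice of install location to the system.

```xml
<!-- Sketch only: same hypothetical package as above, but with
     installLocation set to "auto" so the system picks the location.
     "internalOnly" would keep the application on internal storage. -->
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.helloworld"
    android:versionCode="1"
    android:versionName="1.0"
    android:installLocation="auto">
    ...
</manifest>
```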

All attributes of the XML elements in a manifest file are generally prefixed with the android namespace, as shown previously. For brevity, we will not specify the namespace in the following sections when talking about a specific attribute.

Inside the <manifest> element, we then define the application’s components, permissions, hardware profiles, and supported Android versions.

The <application> Element

As in the case of the <manifest> element, let’s discuss the <application> element in the form of an example:

<application android:icon="@drawable/icon" android:label="@string/app_name">
    ...
</application>

Now doesn’t this look a bit strange? What’s up with the @drawable/icon and @string/app_name strings? When developing a standard Android application, we usually write a lot of XML files, where each defines a specific portion of our application. Full definition of those portions requires that we are also able to reference resources that are not defined in the XML file, such as images or internationalized strings. These resources are located in subfolders of the res/ folder, as discussed in Chapter 2 when we dissected the Hello World project in Eclipse.

To reference resources, we use the preceding notation. The @ specifies that we want to reference a resource defined elsewhere. The string that follows identifies the type of the resource we want to reference, which directly maps to one of the folders or files in the res/ directory. The final part specifies the name of the resource. In the preceding case, this is an image called icon and a string called app_name. In the case of the image, it’s the actual filename we specify, as found in the res/drawable-xxx/ folders. Note that the image name does not have a suffix like .png or .jpg. Android will infer the suffix automatically based on what’s in the res/drawable-xxx/ folder. The app_name string is defined in the res/values/strings.xml file, a file where all the strings used by the application are stored. The name of the string is the one it was given in the strings.xml file.
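For illustration, a minimal res/values/strings.xml that would satisfy the @string/app_name reference might look like the following. The string value Hello World is an assumption; your project will contain whatever name you entered when creating it.

```xml
<?xml version="1.0" encoding="utf-8"?>
<resources>
    <!-- Referenced from the manifest as @string/app_name;
         the value shown here is only a placeholder -->
    <string name="app_name">Hello World</string>
</resources>
```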

Note Resource handling on Android is an extremely flexible, but also complex thing. For this book, we decided to skip most of resource handling for two reasons: it’s utter overkill for game development, and we want to have full control over our resources. Android has the habit of modifying resources placed in the res/ folder, especially images (called drawables). That’s something we, as game developers, do not want. The only use we’d suggest for the Android resource system in game development is internationalizing strings. We won’t get into that in this book; instead, we’ll use the more game development-friendly assets/ folder, which leaves our resources untouched and allows us to specify our own folder hierarchy.

The meaning of the attributes of the <application> element should become a bit clearer now. The icon attribute specifies the image from the res/drawable/ folder to be used as an icon for the application. This icon will be displayed in Google Play as well as in the application launcher on the device. It is also the default icon for all the activities that we define within the <application> element.

The label attribute specifies the string being displayed for our application in the application launcher. In the preceding example, this references a string in the res/values/strings.xml file, which is what we specified when we created the Android project in Eclipse. We could also set this to a raw string, such as My Super Awesome Game. The label is also the default label for all of the activities that we define in the <application> element. The label will be shown in the title bar of our application.

We have only discussed a very small subset of the attributes that you can specify for the <application> element. However, these are sufficient for our game development needs. If you want to know more, you can find the full documentation on the Android Developers site.

The <application> element contains the definitions of all the application components, including activities and services, as well as any additional libraries used.

The <activity> Element

Now it’s getting interesting. Here’s a hypothetical example for our Mr. Nom game:

<activity android:name=".MrNomActivity"
    android:label="Mr.Nom"
    android:screenOrientation="portrait"
    android:configChanges="keyboard|keyboardHidden|orientation">
    <intent-filter>
        <action android:name="android.intent.action.MAIN" />
        <category android:name="android.intent.category.LAUNCHER" />
    </intent-filter>
</activity>

Let’s have a look at the attributes of the <activity> tag first:

- name: This specifies the name of the activity’s class relative to the package attribute we specified in the <manifest> element. You can also specify a fully qualified class name here.

- label: We already specified the same attribute in the <application> element. This label is displayed in the title bar of the activity (if it has one). The label will also be used as the text displayed in the application launcher if the activity we define is an entry point to our application. If we don’t specify it, the label from the <application> element will be used instead. Note that we used a raw string here instead of a reference to a string in the strings.xml file.

- screenOrientation: This attribute specifies the orientation that the activity will use. Here we specified portrait for our Mr. Nom game, which will only work in portrait mode. Alternatively, we could specify landscape if we wanted to run in landscape mode. Both configurations will force the orientation of the activity to stay the same over the activity’s life cycle, no matter how the device is actually oriented. If we leave out this attribute, then the activity will use the current orientation of the device, usually based on accelerometer data. This also means that whenever the device orientation changes, the activity will be destroyed and restarted—something that’s undesirable in the case of a game. We usually fix the orientation of our game’s activity either to landscape mode or portrait mode.

- configChanges: Reorienting the device or sliding out the keyboard is considered a configuration change. In the case of such a change, Android will destroy and restart our activity to accommodate the change. That’s not desirable in the case of a game. The configChanges attribute of the <activity> element comes to the rescue. It allows us to specify which configuration changes we want to handle ourselves, without destroying and re-creating our activity. Multiple configuration changes can be specified by using the | character to concatenate them. In the preceding case, we handle the changes keyboard, keyboardHidden, and orientation ourselves.

As with the <application> element, there are, of course, more attributes that you can specify for an <activity> element. For game development, we get away with the four attributes just discussed.

Now, you might have noticed that the <activity> element isn’t empty, but it houses another element, which itself contains two more elements. What are those for?

As we pointed out earlier, there’s no notion of a single main entry point to your application on Android. Instead, we can have multiple entry points in the form of activities and services that are started in response to specific intents being sent out by the system or a third-party application. Somehow, we need to communicate to Android which activities and services of our application will react (and in what ways) to specific intents. That’s where the <intent-filter> element comes into play.

In the preceding example, the intent filter contains two elements: an <action> and a <category>. The <action> element tells Android that our activity is a main entry point to our application. The <category> element specifies that we want that activity to be added to the application launcher. Both elements together allow Android to infer that, when the icon in the application launcher for the application is pressed, it should start that specific activity.

For both the <action> and <category> elements, the only thing that gets specified is the name attribute, which identifies what the activity will react to. The action android.intent.action.MAIN is a special intent action that the Android system uses to start the main activity of an application. The category android.intent.category.LAUNCHER tells Android whether a specific activity of an application should have an entry in the application launcher.

Usually, we’ll only have one activity that specifies this intent filter. However, a standard Android application will almost always have multiple activities, and these need to be defined in the AndroidManifest.xml file as well. Here’s an example definition of this type of subactivity:

<activity android:name=".MySubActivity"
    android:label="Sub Activity Title"
    android:screenOrientation="portrait"
    android:configChanges="keyboard|keyboardHidden|orientation"/>

Here, no intent filters are specified—only the four attributes of the activity we discussed earlier. When we define an activity like this, it is only available to our own application. We start this type of activity programmatically with a special kind of intent; say, when a button is pressed in one activity to cause a new activity to open. We’ll see in a later section how we can start an activity programmatically.

To summarize, we have one activity for which we specify two intent filters so that it becomes the main entry point of our application. For all other activities, we leave out the intent filter specification so that they are internal to our application. We’ll start these programmatically.
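Put together, the two kinds of activity definitions might sit side by side in the <application> element as sketched below. Combining the Mr. Nom activity and the subactivity in one application is an assumption made purely for illustration; the individual definitions are the ones shown earlier.

```xml
<application android:icon="@drawable/icon" android:label="@string/app_name">
    <!-- Main entry point: MAIN action plus LAUNCHER category -->
    <activity android:name=".MrNomActivity"
        android:label="Mr.Nom"
        android:screenOrientation="portrait"
        android:configChanges="keyboard|keyboardHidden|orientation">
        <intent-filter>
            <action android:name="android.intent.action.MAIN" />
            <category android:name="android.intent.category.LAUNCHER" />
        </intent-filter>
    </activity>

    <!-- Internal activity: no intent filter, started programmatically -->
    <activity android:name=".MySubActivity"
        android:label="Sub Activity Title"
        android:screenOrientation="portrait"
        android:configChanges="keyboard|keyboardHidden|orientation" />
</application>
```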

Note As indicated earlier, we’ll only ever have a single activity in our game. This activity will have exactly the same intent filter specification as shown previously. The reason we discussed how to specify multiple activities is that we are going to create a special sample application in a minute that will have multiple activities. Don’t worry—it’s going to be easy.

The <uses-permission> Element

We are leaving the <application> element now and coming back to elements that we normally define as children of the <manifest> element. One of these elements is the <uses-permission> element.

Android has an elaborate security model. Each application is run in its own process and virtual machine (VM), with its own Linux user and group, and it cannot influence other applications. Android also restricts the use of system resources, such as networking facilities, the SD card, and the audio-recording hardware. If our application wants to use any of these system resources, we have to ask for permission. This is done with the <uses-permission> element.

A permission always has the following form, where string specifies the name of the permission we want to be granted:

<uses-permission android:name="string"/>

Here are a few permission names that might come in handy:

- android.permission.RECORD_AUDIO: This grants us access to the audio-recording hardware.

- android.permission.INTERNET: This grants us access to all the networking APIs so we can, for example, fetch an image from the Internet or upload high scores.

- android.permission.WRITE_EXTERNAL_STORAGE: This allows us to read and write files on the external storage, usually the SD card of the device.

- android.permission.WAKE_LOCK: This allows us to acquire a wake lock. With this wake lock, we can keep the device from going to sleep if the screen hasn’t been touched for some time. This could happen, for example, in a game that is controlled only by the accelerometer.

- android.permission.ACCESS_COARSE_LOCATION: This is a very useful permission as it allows you to get non-GPS-level access to things like the country in which the user is located, which can be useful for language defaults and analytics.

- android.permission.NFC: This allows applications to perform I/O operations over near field communication (NFC), which is useful for a variety of game features involving the quick exchange of small amounts of information.

To get access to the networking APIs, we’d thus specify the following element as a child of the <manifest> element:

<uses-permission android:name="android.permission.INTERNET"/>

For any additional permissions, we simply add more <uses-permission> elements. You can specify many more permissions; we again refer you to the official Android documentation. We’ll only need the set just discussed.
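As a short sketch, a manifest requesting two permissions could look like this. The package name is the hypothetical one from the earlier <manifest> example, and the comments describe assumed uses of each permission.

```xml
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.helloworld"
    android:versionCode="1"
    android:versionName="1.0">
    ...
    <!-- Assumed use: uploading high scores over the network -->
    <uses-permission android:name="android.permission.INTERNET"/>
    <!-- Assumed use: keeping the device awake during accelerometer-only play -->
    <uses-permission android:name="android.permission.WAKE_LOCK"/>
</manifest>
```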

Forgetting to add a permission for something like accessing the SD card is a common source of error. It manifests itself as a message in the device log, so it might go undetected due to all the clutter in the log. In a subsequent section we’ll describe the log in more detail. Think about the permissions your game will need, and specify them when you initially create the project.

Another thing to note is that, when a user installs your application, he or she will first be asked to review all of the permissions your application requires. Many users will just skip over these and happily install whatever they can get hold of. Some users are more conscious about their decisions and will review the permissions in detail. If you request suspicious permissions, like the ability to send out costly SMS messages or to get a user’s location, you may receive some nasty feedback from users in the Comments section for your application when it’s on Google Play. If you must use one of those problematic permissions, your application description also should tell the user why you’re using it. The best thing to do is to avoid those permissions in the first place or to provide functionality that legitimately uses them.

The <uses-feature> Element

If you are an Android user yourself and possess an older device with an old Android version like 1.5, you will have noticed that some awesome applications won’t show up in the Google Play application on your device. One reason for this can be the use of the <uses-feature> element in the manifest file of the application.

The Google Play application will filter all available applications by your hardware profile. With the <uses-feature> element, an application can specify which hardware features it needs; for example, multitouch or support for OpenGL ES 2.0. Any device that does not have the specified features will trigger that filter so that the end user isn’t shown the application in the first place.

A <uses-feature> element has the following attributes:

<uses-feature android:name="string"
    android:required=["true" | "false"]
    android:glEsVersion="integer" />

The name attribute specifies the feature itself. The required attribute tells the filter whether we really need the feature under all circumstances or if it’s just nice to have. The last attribute is optional and only used when a specific OpenGL ES version is required.

For game developers, the following features are most relevant:

- android.hardware.touchscreen.multitouch: This requests that the device have a multitouch screen capable of basic multitouch interactions, such as pinch zooming and the like. These types of screens have problems with independent tracking of multiple fingers, so you have to evaluate if those capabilities are sufficient for your game.

- android.hardware.touchscreen.multitouch.distinct: This is the big brother of the last feature. This requests full multitouch capabilities suitable for implementing things like onscreen virtual dual sticks for controls.

We’ll look into multitouch in a later section of this chapter. For now, just remember that, when our game requires a multitouch screen, we can weed out all devices that don’t support that feature by specifying a <uses-feature> element with one of the preceding feature names, like so:

<uses-feature android:name="android.hardware.touchscreen.multitouch" android:required="true"/>

Another useful thing for game developers to do is to specify which OpenGL ES version is needed. In this book, we’ll be concerned with OpenGL ES 1.0 and 1.1. For these, we usually don’t specify a <uses-feature> element because they aren’t much different from each other. However, any device that implements OpenGL ES 2.0 can be assumed to be a graphics powerhouse. If our game is visually complex and needs a lot of processing power, we can require OpenGL ES 2.0 so that the game only shows up for devices that are able to render our awesome visuals at an acceptable frame rate. Note that we don’t use OpenGL ES 2.0, but we just filter by hardware type so that our OpenGL ES 1.x code gets enough processing power. Here’s how we can do this:

<uses-feature android:glEsVersion="0x00020000" android:required="true"/>

This will make our game only show up on devices that support OpenGL ES 2.0 and are thus assumed to have a fairly powerful graphics processor.

Note This feature is reported incorrectly by some devices out there, which will make your application invisible to otherwise perfectly fine devices. Use it with caution.
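For reference, the glEsVersion value stores the major version in its upper 16 bits and the minor version in its lower 16 bits, which is why 0x00020000 stands for version 2.0. As a sketch, a game that wanted to filter for OpenGL ES 1.1 instead could use the following declaration; whether that filter is worthwhile for your game is a separate question.

```xml
<!-- Upper 16 bits 0x0001 (major), lower 16 bits 0x0001 (minor): OpenGL ES 1.1 -->
<uses-feature android:glEsVersion="0x00010001" android:required="true"/>
```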

Let’s say you want to have optional support of USB peripherals for your game so that the device can be a USB host and have controllers or other peripherals connected to it. The correct way of handling this is to add the following:

<uses-feature android:name="android.hardware.usb.host" android:required="false"/>

Setting "android:required" to false says to Google Play, “We may use this feature, but it’s not necessary to download and run the game.” Setting usage of the optional hardware feature is a good way to future-proof your game for various pieces of hardware that you haven’t yet encountered. It allows manufacturers to limit the apps only to ones that have declared support for their specific hardware, and, if you declare optional support for it, you will be included in the apps that can be downloaded for that device.

Now, every specific requirement you have in terms of hardware potentially decreases the number of devices on which your game can be installed, which will directly affect your sales. Think twice before you specify any of the above. For example, if the standard mode of your game requires multitouch, but you can also think of a way to make it work on single-touch devices, you should strive to have two code paths—one for each hardware profile—so that your game can be deployed to a bigger market.
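One way to express the two-code-path approach in the manifest is to declare multitouch as optional, as in this sketch. It assumes the game checks at runtime whether multitouch is available and falls back to its single-touch controls otherwise.

```xml
<!-- Multitouch is used when present, but single-touch devices
     are not filtered out on Google Play -->
<uses-feature android:name="android.hardware.touchscreen.multitouch"
    android:required="false"/>
```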

The <uses-sdk> Element

The last element we’ll put in our manifest file is the <uses-sdk> element. It is a child of the <manifest> element. We defined this element when we created our Hello World project in Chapter 2 and made sure our Hello World application works from Android 1.5 onward with some manual tinkering. So what does this element do? Here’s an example:

<uses-sdk android:minSdkVersion="3" android:targetSdkVersion="16"/>

As we discussed in Chapter 2, each Android version has an integer assigned, also known as an SDK version. The <uses-sdk> element specifies the minimum version supported by our application and the target version of our application. In this example, we define our minimum version as Android 1.5 and our target version as Android 4.1. This element allows us to deploy an application that uses APIs only available in newer versions to devices that have a lower version installed. One prominent example would be the multitouch APIs, which are supported from SDK version 5 (Android 2.0) onward. When we set up our Android project in Eclipse, we use a build target that supports that API; for example, SDK version 5 or higher (we usually set it to the latest SDK version, which is 16 at the time of writing). If we want our game to run on devices with SDK version 3 (Android 1.5) as well, we specify the minSdkVersion, as before, in the manifest file. Of course, we must be careful not to use any APIs that are not available in the lower version, at least on a 1.5 device. On a device with a higher version, we can use the newer APIs as well.

The preceding configuration is usually fine for most games (unless you can’t provide a separate fallback code path for the higher-version APIs, in which case you will want to set the minSdkVersion attribute to the minimum SDK version you actually support).
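For example, if a game relied on the multitouch APIs and had no single-touch fallback, the declaration might be tightened as in this sketch, which raises the minimum to SDK version 5 (Android 2.0):

```xml
<!-- No fallback for the multitouch APIs: require SDK version 5 (Android 2.0) -->
<uses-sdk android:minSdkVersion="5" android:targetSdkVersion="16"/>
```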

Android Game Project Setup in Eight Easy Steps

Let’s now combine all of the preceding information and develop a simple step-by-step method to create a new Android game project in Eclipse. Here’s what we want from our project:

- It should be able to use the latest SDK version’s features while maintaining compatibility with the lowest SDK version that some devices still run. That means that we want to support Android 1.5 and above.

- It should be installed to the SD card when possible so that we don’t fill up the internal storage of the device.

- It should have a single main activity that will handle all configuration changes itself so that it doesn’t get destroyed when the hardware keyboard is revealed or when the orientation of the device is changed.

- The activity should be fixed to either portrait or landscape mode.

- It should allow us to access the SD card.

- It should allow us to get a hold of a wake lock.

These are some easy goals to achieve with the information you just acquired. Here are the steps:

- Create a new Android project in Eclipse by opening the New Android Project wizard, as described in Chapter 2.

- Once the project is created, open the AndroidManifest.xml file.

- To make Android install the game on the SD card when available, add the installLocation attribute to the <manifest> element, and set it to preferExternal.

- To fix the orientation of the activity, add the screenOrientation attribute to the <activity> element, and specify the orientation you want (portrait or landscape).

- To tell Android that we want to handle the keyboard, keyboardHidden, and orientation configuration changes, set the configChanges attribute of the <activity> element to keyboard|keyboardHidden|orientation.

- Add two <uses-permission> elements to the <manifest> element, and specify the name attributes android.permission.WRITE_EXTERNAL_STORAGE and android.permission.WAKE_LOCK.

- Set the minSdkVersion and targetSdkVersion attributes of the <uses-sdk> element (e.g., minSdkVersion is set to 3 and targetSdkVersion is set to 16).

- Create a folder called drawable/ in the res/ folder and copy the res/drawable-mdpi/ic_launcher.png file to this new folder. This is the location Android 1.5 will search for the launcher icon. If you don’t want to support Android 1.5, you can skip this step.

There you have it. Eight easy steps that will generate a fully defined application that will be installed to the SD card (on Android 2.2 and over), will have a fixed orientation, will not explode on a configuration change, will allow you to access the SD card and wake locks, and will work on all Android versions starting from 1.5 up to the latest version. Here’s the final AndroidManifest.xml content after executing the preceding steps:

<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.badlogic.awesomegame"
    android:versionCode="1"
    android:versionName="1.0"
    android:installLocation="preferExternal">
    <application android:icon="@drawable/icon"
        android:label="Awesomnium"
        android:debuggable="true">
        <!-- Single main activity with fixed orientation -->
        <activity android:name=".GameActivity"
            android:label="Awesomnium"
            android:screenOrientation="landscape"
            android:configChanges="keyboard|keyboardHidden|orientation">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>
    </application>
    <!-- SD card access and wake lock -->
    <uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE"/>
    <uses-permission android:name="android.permission.WAKE_LOCK"/>
    <!-- Android 1.5 and above, targeting Android 4.1 -->
    <uses-sdk android:minSdkVersion="3" android:targetSdkVersion="16"/>
</manifest>

As you can see, we got rid of the @string/app_name in the label attributes of the <application> and <activity> elements. This is not really necessary, but having the application definition in one place is preferred. From now on, it’s all about the code! Or is it?

Google Play Filters

There are so many different Android devices, with so many different capabilities, that it’s necessary for the hardware manufacturers to allow only compatible applications to be downloaded and run on their device; otherwise, the user would have the bad experience of trying to run an application that’s just not compatible with the device. To deal with this, Google Play filters out incompatible applications from the list of available applications for a specific device. For example, if you have a device without a camera, and you search for a game that requires a camera, it simply won’t show up. For better or worse, it will appear to you, the user, as if the app just doesn’t exist.

Many of the previous manifest elements we’ve discussed are used as filters, including <uses-feature>, <uses-sdk>, and <uses-permission>. The following are three more elements that are specific to filtering that you should keep in mind:

- <supports-screens>: This allows you to declare the screen sizes and densities your game can run on. Ideally, your game will work on all screens, and we’ll show you how to make sure that it will. However, in the manifest file, you will want to declare support explicitly for every screen size you can.

- <uses-configuration>: This lets you declare explicit support for an input configuration type on a device, such as a hard keyboard, QWERTY-specific keyboard, touchscreen, or maybe trackball navigation input. Ideally, you’ll support all of the above, but if your game requires very specific input, you will want to investigate and use this tag for filtering on Google Play.

- <uses-library>: This allows for the declaration that a third-party library, on which your game is dependent, must be present on the device. For example, you might require a text-to-speech library that is quite large, but very common, for your game. Declaring the library with this tag ensures that only devices with that library installed can see and download your game. A common use of this is to allow GPS/map-based games to work only on devices with the Google Maps library installed.
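To make the three filter elements more concrete, here is a hedged sketch of how they might appear in a manifest. The package name is the hypothetical one used earlier, and the specific values are assumptions chosen for illustration: a game that claims support for all screen sizes, requires a finger-operated touchscreen and a hardware keyboard, and depends on the Google Maps library. Note that <uses-library> is a child of <application>, while the other two are children of <manifest>.

```xml
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.helloworld">

    <!-- Declare every screen size the game is known to work on -->
    <supports-screens
        android:smallScreens="true"
        android:normalScreens="true"
        android:largeScreens="true"
        android:xlargeScreens="true"
        android:anyDensity="true"/>

    <!-- Only for games that truly need this input hardware;
         requiring a hardware keyboard excludes many devices -->
    <uses-configuration
        android:reqTouchScreen="finger"
        android:reqHardKeyboard="true"/>

    <application android:icon="@drawable/icon" android:label="@string/app_name">
        <!-- The Google Maps library must be present on the device -->
        <uses-library android:name="com.google.android.maps"
            android:required="true"/>
        ...
    </application>
</manifest>
```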

As Android moves forward, more filter tags are likely to become available, so make sure to check the official Google Play filters page at http://developer.android.com/guide/google/play/filters.html to get up-to-date information before you deploy.

Defining the Icon of Your Game

When you deploy your game to a device and open the application launcher, you will see that its entry has a nice, but not really unique, Android icon. The same icon would be shown for your game on Google Play. How can you change it to a custom icon?

Have a closer look at the <application> element. There, we defined an attribute called icon. It references an image in the res/drawable-xxx directory called icon. So, it should be obvious what to do: replace the icon image in the drawable folder with your own icon image.

Following through the eight easy steps to create an Android project, you’ll see something similar to Figure 4-1 in the res/ folder.

Figure 4-1. What happened to my res/ folder?

We saw in Chapter 1 that devices come in different sizes, but we didn’t talk about how Android handles those different sizes. It turns out that Android has an elaborate mechanism that allows you to define your graphical assets for a set of screen densities. Screen density relates the physical size of a screen to the number of pixels it has. We’ll look into that topic in more detail in Chapter 5. For now, it suffices to know that Android defines four densities: ldpi for low-density screens, mdpi for standard-density screens, hdpi for high-density screens, and xhdpi for extra-high-density screens. For lower-density screens, we usually use smaller images; for higher-density screens, we use higher-resolution assets.

So, in the case of our icon, we need to provide four versions: one for each density. But how big should each of those versions be? Luckily, we already have default icons in the res/drawable folders that we can use to re-engineer the sizes of our own icons. The icon in res/drawable-ldpi has a resolution of 36×36 pixels, the icon in res/drawable-mdpi has a resolution of 48×48 pixels, the icon in res/drawable-hdpi has a resolution of 72×72 pixels, and the icon in res/drawable-xhdpi has a resolution of 96×96 pixels. All we need to do is create versions of our custom icon with the same resolutions and replace the icon.png file in each of the folders with our own icon.png file. We can leave the manifest file unaltered as long as we call our icon image file icon.png. Note that file references in the manifest file are case sensitive. Always use all lowercase letters in resource files, to play it safe.
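The four icon sizes follow a simple pattern: each one is the 48-pixel mdpi icon scaled by the density of its folder. The following sketch shows the arithmetic. The IconSizes class is a hypothetical helper of our own, not part of the Android SDK, and the dpi values per density (120, 160, 240, and 320) are taken from the Android documentation rather than from the project itself.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical helper (not an Android API) that derives launcher icon sizes. */
class IconSizes {
    // Edge length of the launcher icon at the mdpi baseline, in pixels.
    static final int BASELINE_PX = 48;
    // Assumed dpi of the mdpi baseline density.
    static final int BASELINE_DPI = 160;

    /** Scales the 48-pixel mdpi icon to the given density. */
    static int iconSizeFor(int dpi) {
        return BASELINE_PX * dpi / BASELINE_DPI;
    }

    /** Returns the icon edge length for each density folder named in the text. */
    static Map<String, Integer> allSizes() {
        Map<String, Integer> sizes = new LinkedHashMap<String, Integer>();
        sizes.put("ldpi", iconSizeFor(120));
        sizes.put("mdpi", iconSizeFor(160));
        sizes.put("hdpi", iconSizeFor(240));
        sizes.put("xhdpi", iconSizeFor(320));
        return sizes;
    }

    public static void main(String[] args) {
        // Prints one line per res/drawable-xxx folder.
        for (Map.Entry<String, Integer> e : allSizes().entrySet()) {
            System.out.println("res/drawable-" + e.getKey() + "/icon.png: "
                    + e.getValue() + "x" + e.getValue());
        }
    }
}
```

The results match the sizes of the default icons, so the same scaling can be used as a rule of thumb for other density-specific assets.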

For true Android 1.5 compatibility, we need to add a folder called res/drawable/ and place the icon image from the res/drawable-mdpi/ folder there. Android 1.5 does not know about the other drawable folders, so it might not find our icon.

Finally, we are ready to get some Android coding done.

For Those Coming from iOS/Xcode

Android’s environment differs greatly from Apple’s. Where Apple’s is very tightly controlled, Android relies on a number of different modules from different sources that define many of the APIs, control the formats, and dictate which tools are best suited for a specific task, such as building the application.

Eclipse/ADT vs. Xcode

Eclipse is a multiproject, multidocument interface. You can have many Android applications in a single workspace, all listed together under your Package Explorer view. You can also have multiple files open from these projects all tabbed out in the Source Code view. Just like forward/back in Xcode, Eclipse has some toolbar buttons to help with navigation, and even a navigation option called Last Edit Location that will bring you back to the last change you made.

Eclipse has many language features for Java that Xcode does not have for Objective-C. Whereas in Xcode you have to click “Jump to definition,” in Eclipse you simply press F3 or click Open Declaration. Another favorite is the reference search feature. Want to find out what calls a specific method? Just click to select it and then either press Ctrl+Shift+G or choose Search > References > Workspace. All renaming or moving operations are classified as “Refactor” operations, so before you get frustrated by not seeing any way to rename a class or file, look at the Refactor options. Because Java does not have separate header and implementation files, there is no “jump to header/impl” shortcut. Compiling of Java files is automatic if you have Project > Build Automatically enabled. With that setting enabled, your project will be compiled incrementally every time you make a change. To autocomplete, just press Ctrl+Space.

One of the first things you’ll notice as a new Android developer is that to deploy on a device, you don’t have to do too much other than enabling a setting. Any executable code on Android still needs to be signed with a private key, just like in iOS, but the keys don’t need to be issued from a trusted authority like Apple, so the IDE actually creates a “debug” key for you when you run test code on your device. This key will be different from your production key, but not having to mess around with anything to get the application testing is very helpful. The key is located in the user home directory under a sub-directory called .android/debug.keystore.
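If you ever need to locate the debug key outside the IDE, the path can be assembled from the user home directory. The following is a minimal sketch in plain Java. The DebugKeystoreLocator class is a hypothetical name of our own, and the code only builds the path described above; it does not read or validate the keystore.

```java
import java.io.File;

/** Hypothetical helper that builds the default location of the debug keystore. */
class DebugKeystoreLocator {
    /** Returns <user home>/.android/debug.keystore, whether or not it exists yet. */
    static File debugKeystore() {
        String home = System.getProperty("user.home");
        return new File(new File(home, ".android"), "debug.keystore");
    }

    public static void main(String[] args) {
        File keystore = debugKeystore();
        // The file is only created once the tools have signed a debug build.
        System.out.println(keystore.getAbsolutePath()
                + (keystore.exists() ? " (found)" : " (not created yet)"));
    }
}
```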

Like Xcode, Eclipse supports Subversion (SVN), though you’ll need to install a plug-in. The most common plug-in is called Subclipse, which is available at http://subclipse.tigris.org. All SVN functionality is available either under the Team context menu option or by opening a view by choosing Window > Show View > Other > SVN. Check there first to get to your repositories and start checking out or sharing projects.

Most everything in Eclipse is contextual, so you will want to right-click (or double-click/Ctrl-click) the names of projects, files, classes, methods, and just about anything else to see what your options are. For instance, running a project for the first time is best done by just right-clicking the project name and choosing Run As > Android Application.

Locating and Configuring Your Targets

Xcode can have a single project with multiple targets, like My Game Free and My Game Full, that have different compile-time options and can produce different applications based on these options. Android has no such thing in Eclipse, because Eclipse is project-oriented in a very flat manner. To do the same thing in Android, you will need to have two different projects that share all code except maybe one special piece of configuration code for that project. Sharing code is very easy and can be done using the simple “linked source” features of Eclipse.

If you’re used to Xcode plists and pages of configuration, you’ll be happy to hear that most everything you can possibly need in Android is located in one of two locations: AndroidManifest.xml (covered in this chapter) and the project’s Properties window. The Android manifest file covers things very specific to the app, just like Summary and Info for the Xcode target, and the project’s Properties window covers features of the Java language (such as which libraries are linked, where classes are located, etc.). Right-clicking the project and selecting Properties presents you with a number of categories to configure from. The Android and Java Build Path categories deal with libraries and source code dependencies, much like many of the Build Settings, Build Phases, and Build Rules tab options in Xcode. Things will surely be different, but understanding where to look can save a great deal of time.

Other Useful Tidbits

Of course, there are more differences between Xcode and Eclipse. The following list covers those that we find most useful to know about.

- Eclipse shows the actual filesystem structure, but caches many things about it, so get good with the F5/refresh feature to get an up-to-date picture of your project files.

- File location does matter, and there is no virtualization of locations equivalent to groups. It’s as if all folders are folder references, and the only way to not include files is to set up exclusion filters.

- Settings are per-workspace, so you can have several workspaces each with different settings. This is very useful when you have both personal and professional projects and you want to keep them separate.

- Eclipse has multiple perspectives. The current perspective is identified by the active icon in the upper-right area of the Eclipse window, which is Java by default. As discussed in Chapter 2, a perspective is a preconfigured set of views and some associated contextual settings. If things seem to get weird at any point, check to make sure you are in the correct perspective.

- Deploying is covered in this book, but it is not like changing the scheme or target as you do in Xcode. It’s an entirely separate operation that you do via the right-click context menu for the project (Android Tools > Export Signed Application Package).

- If code edits simply don’t seem to be taking effect, most likely your Build Automatically setting is turned off. You will usually want that enabled for desired behavior (Project > Build Automatically).

- There is no direct equivalent to XIB. The closest thing is the Android layout, but Android doesn’t do outlets like XIB does, so just assume you’ll always use the ID convention. Most games don’t need to care about more than one layout, but it’s good to keep in mind.

- Eclipse uses mostly XML-based configuration files in the project directory to store project settings. Check for “dot” files like .project if you need to make changes manually or build automation systems. This plus AndroidManifest.xml is very similar to the project.pbxproj file in Xcode.

Android API Basics

In the rest of the chapter, we’ll concentrate on playing around with those Android APIs that are relevant to our game development needs. For this, we’ll do something rather convenient: we’ll set up a test project that will contain all of our little test examples for the different APIs we are going to use. Let’s get started.

Creating a Test Project

From the previous section, we already know how to set up all our projects. So, the first thing we do is execute the eight steps outlined earlier. Create a project named ch04-android-basics, using the package name com.badlogic.androidgames with a single main activity called AndroidBasicsStarter. We are going to use some older and some newer APIs, so we set the minimum SDK version to 3 (Android 1.5) and the build SDK version to 16 (Android 4.1). You can fill in any values you like for the other settings, such as the title of the application. From here on, all we’ll do is create new activity implementations, each demonstrating parts of the Android APIs.

However, remember that we only have one main activity. So, what does our main activity look like? We want a convenient way to add new activities, and we want the ability to start a specific activity easily. With one main activity, it should be clear that that activity will somehow provide us with a means to start a specific test activity. As discussed earlier, the main activity will be specified as the main entry point in the manifest file. Each additional activity that we add will be specified without the <intent-filter> child element. We’ll start those programmatically from the main activity.

The AndroidBasicsStarter Activity

The Android API provides us with a special class called ListActivity, which derives from the Activity class that we used in the Hello World project. The ListActivity class is a special type of activity whose single purpose is to display a list of things (for example, strings). We use it to display the names of our test activities. When we touch one of the list items, we’ll start the corresponding activity programmatically. Listing 4-1 shows the code for our AndroidBasicsStarter main activity.

Listing 4-1. AndroidBasicsStarter.java, Our Main Activity Responsible for Listing and Starting All Our Tests

package com.badlogic.androidgames;

import android.app.ListActivity;
import android.content.Intent;
import android.os.Bundle;
import android.view.View;
import android.widget.ArrayAdapter;
import android.widget.ListView;

public class AndroidBasicsStarter extends ListActivity {
    // Simple class names of all test activities in this package.
    String tests[] = { "LifeCycleTest", "SingleTouchTest", "MultiTouchTest",
            "KeyTest", "AccelerometerTest", "AssetsTest",
            "ExternalStorageTest", "SoundPoolTest", "MediaPlayerTest",
            "FullScreenTest", "RenderViewTest", "ShapeTest", "BitmapTest",
            "FontTest", "SurfaceViewTest" };

    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        // Show one standard text list item per test name.
        setListAdapter(new ArrayAdapter<String>(this,
                android.R.layout.simple_list_item_1, tests));
    }

    @Override
    protected void onListItemClick(ListView list, View view, int position,
            long id) {
        super.onListItemClick(list, view, position, id);
        String testName = tests[position];
        try {
            // Resolve the activity class by its fully qualified name and start it.
            Class<?> clazz = Class
                    .forName("com.badlogic.androidgames." + testName);
            Intent intent = new Intent(this, clazz);
            startActivity(intent);
        } catch (ClassNotFoundException e) {
            e.printStackTrace();
        }
    }
}

The package name we chose is com.badlogic.androidgames. The imports should also be pretty self-explanatory; these are simply all the classes we are going to use in our code. Our AndroidBasicsStarter class derives from the ListActivity class—still nothing special. The field tests is a string array that holds the names of all of the test activities that our starter application should display. Note that the names in that array are the exact Java class names of the activity classes we are going to implement later on.

The next piece of code should be familiar; it’s the onCreate() method that we have to implement for each of our activities, and that will be called when the activity is created. Remember that we must call the onCreate() method of the base class of our activity. It’s the first thing we must do in the onCreate() method of our own Activity implementation. If we don’t, an exception will be thrown and the activity will not be displayed.

With that out of the way, the next thing we do is call a method called setListAdapter(). This method is provided to us by the ListActivity class we derived from. It lets us specify the list items we want the ListActivity class to display for us. These need to be passed to the method in the form of a class instance that implements the ListAdapter interface. We use the convenient ArrayAdapter class to do this. The constructor of this class takes three arguments: the first is our activity, the second we’ll explain in the next paragraph, and the third is the array of items that the ListActivity should display. We happily specify the tests array we defined earlier for the third argument, and that’s all we need to do.

So what’s this second argument to the ArrayAdapter constructor? To explain this, we’d have to go through all the Android UI API stuff, which we are not going to use in this book. So, instead of wasting pages on something we are not going to need, we’ll give you the quick-and-dirty explanation: each item in the list is displayed via a View. The argument defines the layout of each View, along with the type of each View. The value android.R.layout.simple_list_item_1 is a predefined constant provided by the UI API for getting up and running quickly. It stands for a standard list item View that will display text. Just as a quick refresher, a View is a UI widget on Android, such as a button, a text field, or a slider. We introduced views in the form of a Button instance while dissecting the HelloWorldActivity in Chapter 2.

If we start our activity with just this onCreate() method, we’ll see something that looks like the screen shown in Figure 4-2.

Figure 4-2. Our test starter activity, which looks fancy but doesn’t do a lot yet

Now let’s make something happen when a list item is touched. We want to start the respective activity that is represented by the list item we touched.

Starting Activities Programmatically

The ListActivity class has a protected method called onListItemClick() that will be called when an item is tapped. All we need to do is override that method in our AndroidBasicsStarter class. And that’s exactly what we did in Listing 4-1.

The arguments to this method are the ListView that the ListActivity uses to display the items, the View that got touched and that’s contained in that ListView, the position of the touched item in the list, and an ID, which doesn’t interest us all that much. All we really care about is the position argument.

The onListItemClick() method starts off by being a good citizen and calls the base class method first. This is always a good thing to do if we override methods of an activity. Next, we fetch the class name from the tests array, based on the position argument. That’s the first piece of the puzzle.

Earlier, we discussed that we can start activities that we defined in the manifest file programmatically via an intent. The Intent class has a nice and simple constructor to do this, which takes two arguments: a Context instance and a Class instance. The latter represents the Java class of the activity we want to start.

Context is an abstract class that provides us with global information about our application. The Activity class derives from it (indirectly), so we simply pass the this reference to the Intent constructor.

To get the Class instance representing the activity we want to start, we use a little reflection, which will probably be familiar to you if you’ve worked with Java. Reflection allows us to programmatically inspect, instantiate, and call classes at runtime. The static method Class.forName() takes a string containing the fully qualified name of a class for which we want to create a Class instance. All of the test activities we’ll implement later will be contained in the com.badlogic.androidgames package. Concatenating the package name with the class name we fetched from the tests array will give us the fully qualified name of the activity class we want to start. We pass that name to Class.forName() and get a nice Class instance that we can pass to the Intent constructor.
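The lookup itself is plain Java and can be tried outside of Android. The following sketch resolves a class from a package name and a simple class name, just as our starter activity does. The ClassLookup helper is a hypothetical name of our own, and JDK classes stand in for the test activities so that the code runs without the Android SDK.

```java
/** Hypothetical helper that mirrors the name lookup done in the starter activity. */
class ClassLookup {
    /**
     * Concatenates package and simple name into a fully qualified name and
     * asks the class loader for it. Returns null if no such class exists.
     */
    static Class<?> find(String packageName, String simpleName) {
        try {
            return Class.forName(packageName + "." + simpleName);
        } catch (ClassNotFoundException e) {
            // A typo in the name only shows up at runtime, not at compile time.
            return null;
        }
    }

    public static void main(String[] args) {
        System.out.println(find("java.util", "ArrayList"));
        System.out.println(find("java.util", "NoSuchTest"));
    }
}
```

Because the name is checked only at runtime, a misspelled entry in the tests array compiles fine and fails only when the item is touched. This is why the listing catches ClassNotFoundException.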

Once the Intent instance is constructed, we can start the activity with a call to the startActivity() method. This method is also defined in the Context class. Because our activity derives from that class, we just call its implementation of that method. And that’s it!

So how will our application behave? First, the starter activity will be displayed. Each time we touch an item on the list, the corresponding activity will be started. The starter activity will be paused and will go into the background. The new activity will be created by the intent we send out and will replace the starter activity on the screen. When we press the back button on the Android device, the activity is destroyed and the starter activity is resumed, taking back the screen.

Creating the Test Activities

When we create a new test activity, we have to perform the following steps:

- Create the corresponding Java class in the com.badlogic.androidgames package and implement its logic.

- Add an entry for the activity in the manifest file, using whatever attributes it needs (for example, android:configChanges or android:screenOrientation). Note that we won’t specify an <intent-filter> element, as we’ll start the activity programmatically.

- Add the activity’s class name to the tests array of the AndroidBasicsStarter class.

As long as we stick to this procedure, everything else will be taken care of by the logic we implemented in the AndroidBasicsStarter class. The new activity will automatically show up in the list, and it can be started by a simple touch.

One thing you might wonder is whether the test activity that gets started on a touch is running in its own process and VM. It is not. An application composed of activities has something called an activity stack. Every time we start a new activity, it gets pushed onto that stack. When we close the new activity, it gets popped off the stack, and the activity below it is resumed, becoming the active activity on the screen again.
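To make the stack behavior concrete, here is a toy model in plain Java. It is only a sketch: ActivityStackModel and its methods are illustrative names of our own, not Android APIs, and activities are represented by their names.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Toy model of an activity stack; the names are illustrative, not Android API. */
class ActivityStackModel {
    private final Deque<String> stack = new ArrayDeque<String>();

    /** Starting an activity pushes it on top, making it the active one. */
    void start(String activityName) {
        stack.push(activityName);
    }

    /**
     * Pressing back pops the active activity. Returns the activity that is
     * resumed as a result, or null if the stack is now empty.
     */
    String back() {
        if (!stack.isEmpty()) {
            stack.pop();
        }
        return stack.peek();
    }

    /** The activity currently on top of the stack, or null if there is none. */
    String active() {
        return stack.peek();
    }

    int size() {
        return stack.size();
    }
}
```

Starting AndroidBasicsStarter and then LifeCycleTest leaves LifeCycleTest on top; a call to back() removes it and hands the screen back to AndroidBasicsStarter.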

This also has some other implications. First, all of the activities of the application (those on the stack that are paused and the one that is active) share the same VM. They also share the same memory heap. That can be a blessing and a curse. If you have static fields in your activities, they will get memory on the heap as soon as they are started. Being static fields, they will survive the destruction of the activity and the subsequent garbage collection of the activity instance. This can lead to some bad memory leaks if you carelessly use static fields. Think twice before using a static field.
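The following sketch illustrates the problem in plain Java, so it runs without Android. LeakyScreen is a hypothetical stand-in for an activity: each instance becomes garbage as soon as we drop it, but whatever it put into the static list stays reachable for as long as the class is loaded.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for an activity; plain Java, no Android classes. */
class LeakyScreen {
    // Static field: shared by all instances and reachable while the class is loaded.
    static final List<byte[]> CACHE = new ArrayList<byte[]>();

    // Instance field: becomes garbage together with the instance.
    private final byte[] perInstanceBuffer = new byte[1024];

    LeakyScreen() {
        // Every "activity" we create adds a buffer that is never released.
        CACHE.add(new byte[1024]);
    }

    int bufferSize() {
        return perInstanceBuffer.length;
    }
}

class StaticFieldDemo {
    /** Creates and drops the given number of screens; returns how many buffers remain reachable. */
    static int createAndDrop(int count) {
        for (int i = 0; i < count; i++) {
            new LeakyScreen(); // the instance is unreachable right after construction
        }
        return LeakyScreen.CACHE.size();
    }
}
```

Opening and closing such a screen a few dozen times grows the static list each time, even though no instance survives. Clearing the list explicitly, or using an instance field instead, avoids the leak.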

As stated a couple of times already, we’ll only ever have a single activity in our actual games. The preceding activity starter is an exception to this rule to make our lives a little easier. But don’t worry; we’ll have plenty of opportunities to get into trouble even with a single activity.

Note This is as deep as we’ll get into Android UI programming. From here on, we’ll always use a single View in an activity to output things and to receive input. If you want to learn about things like layouts, view groups, and all the bells and whistles that the Android UI library offers, we suggest you check out Grant Allen’s book, Beginning Android 4 (Apress, 2011), or the excellent developer guide on the Android Developers site.

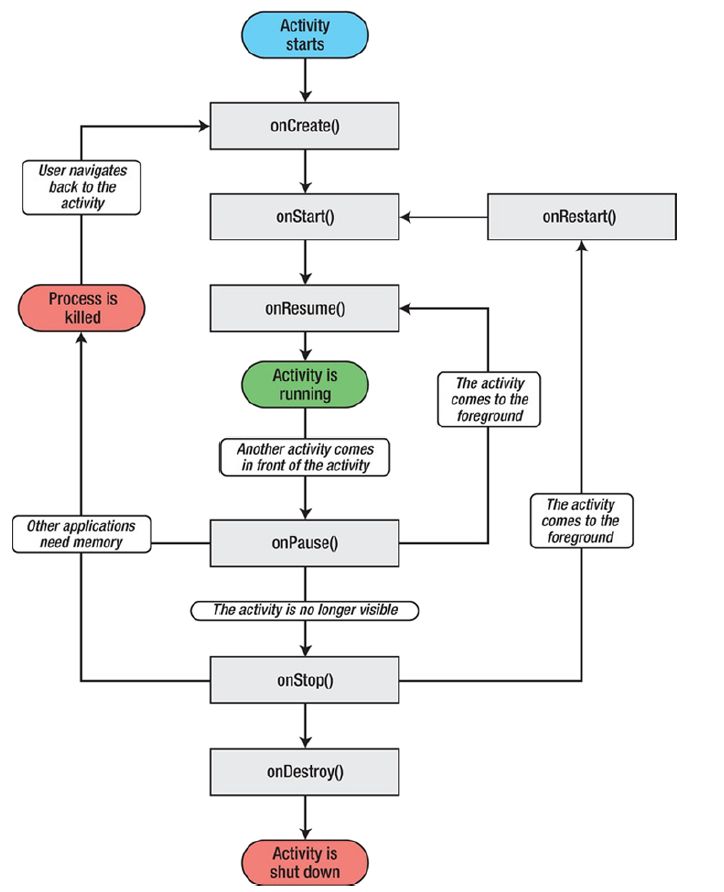

The first thing we have to figure out when programming for Android is how an activity behaves. On Android, this is called the activity life cycle. It describes the states and transitions between those states through which an activity can live. Let’s start by discussing the theory behind this.

In Theory

An activity can be in one of three states:

- Running: In this state, it is the top-level activity that takes up the screen and directly interacts with the user.

- Paused: This happens when the activity is still visible on the screen but partially obscured by either a transparent activity or a dialog, or if the device screen is locked. A paused activity can be killed by the Android system at any point in time (for example, due to low memory). Note that the activity instance itself is still alive and kicking in the VM heap and waiting to be brought back to a running state.

- Stopped: This happens when the activity is completely obscured by another activity and thus is no longer visible on the screen. Our AndroidBasicsStarter activity will be in this state if we start one of the test activities, for example. It also happens when a user presses the home button to go to the home screen temporarily. The system can again decide to kill the activity completely and remove it from memory if memory gets low.

In both the paused and stopped states, the Android system can decide to kill the activity at any point in time. It can do so either politely, by first informing the activity by calling its finish() method, or impolitely, by silently killing the activity’s process.

The activity can be brought back to a running state from a paused or stopped state. Note again that when an activity is resumed from a paused or stopped state, it is still the same Java instance in memory, so all the state and member variables are the same as before the activity was paused or stopped.

An activity has some protected methods that we can override to get information about state changes:

- Activity.onCreate(): This is called when our activity is started up for the first time. Here, we set up all the UI components and hook into the input system. This method will get called only once in the life cycle of our activity.

- Activity.onRestart(): This is called when the activity is resumed from a stopped state. It is preceded by a call to onStop().

- Activity.onStart(): This is called after onCreate() or when the activity is resumed from a stopped state. In the latter case, it is preceded by a call to onRestart().

- Activity.onResume(): This is called after onStart() or when the activity is resumed from a paused state (for example, when the screen is unlocked).

- Activity.onPause(): This is called when the activity enters the paused state. It might be the last notification we receive, as the Android system might decide to kill our application silently. We should save all states we want to persist in this method!

- Activity.onStop(): This is called when the activity enters the stopped state. It is preceded by a call to onPause(). This means that an activity is always paused before it is stopped. As with onPause(), it might be the last notification we get before the Android system silently kills the activity. We could also save persistent state here. However, the system might decide not to call this method and just kill the activity. As onPause() will always be called before onStop() and before the activity is silently killed, we’d rather save all our stuff in the onPause() method.

- Activity.onDestroy(): This is called at the end of the activity life cycle when the activity is irrevocably destroyed. It’s the last time we can persist any information we’d like to recover the next time our activity is created anew. Note that this method actually might never be called if the activity was destroyed silently after a call to onPause() or onStop() by the system.

Figure 4-3 illustrates the activity life cycle and the method call order.

Figure 4-3. The mighty, confusing activity life cycle
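The call order described above can also be written down as a small state machine. The following is a simplified model in plain Java, not Android code: LifeCycleModel and its method names are our own, and silent kills by the system are deliberately left out.

```java
import java.util.ArrayList;
import java.util.List;

/** Simplified model of the activity life cycle; names are illustrative only. */
class LifeCycleModel {
    enum State { NOT_CREATED, RUNNING, PAUSED, STOPPED, DESTROYED }

    private State state = State.NOT_CREATED;
    private final List<String> calls = new ArrayList<String>();

    State state() { return state; }
    List<String> calls() { return calls; }

    /** First start: onCreate(), onStart(), onResume(). */
    void launch() {
        require(State.NOT_CREATED);
        record("onCreate", "onStart", "onResume");
        state = State.RUNNING;
    }

    /** Partially obscured or screen locked: onPause(). */
    void pause() {
        require(State.RUNNING);
        record("onPause");
        state = State.PAUSED;
    }

    /** Fully obscured: onPause() if still running, then onStop(). */
    void stop() {
        if (state == State.RUNNING) pause();
        require(State.PAUSED);
        record("onStop");
        state = State.STOPPED;
    }

    /** Back to the foreground from the paused or the stopped state. */
    void resume() {
        if (state == State.STOPPED) {
            record("onRestart", "onStart");
        } else {
            require(State.PAUSED);
        }
        record("onResume");
        state = State.RUNNING;
    }

    /** Polite shutdown: any missing onPause()/onStop(), then onDestroy(). */
    void destroy() {
        if (state != State.STOPPED) stop();
        record("onDestroy");
        state = State.DESTROYED;
    }

    private void record(String... names) {
        for (String name : names) calls.add(name);
    }

    private void require(State expected) {
        if (state != expected) {
            throw new IllegalStateException("expected " + expected + " but was " + state);
        }
    }
}
```

Whatever path the model takes back to the running state, the last call it records is onResume(), and every path away from the running state begins with onPause().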

Here are the three big lessons we should take away from this:

- Before our activity enters the running state, the onResume() method is always called, whether we resume from a stopped state or from a paused state. We can thus safely ignore the onRestart() and onStart() methods. We don’t care whether we resumed from a stopped state or a paused state. For our games, we only need to know that we are now actually running, and the onResume() method signals that to us.

- The activity can be destroyed silently after onPause(). We should never assume that either onStop() or onDestroy() gets called. We also know that onPause() will always be called before onStop(). We can therefore safely ignore the onStop() and onDestroy() methods and just override onPause(). In this method, we have to make sure that all the states we want to persist, like high scores and level progress, get written to external storage, such as an SD card. After onPause(), all bets are off, and we won’t know whether our activity will ever get the chance to run again.

- We know that onDestroy() might never be called if the system decides to kill the activity after onPause() or onStop(). However, sometimes we’d like to know whether the activity is actually going to be killed. So how do we do that if onDestroy() is not going to get called? The Activity class has a method called Activity.isFinishing() that we can call at any time to check whether our activity is going to get killed. We are at least guaranteed that the onPause() method is called before the activity is killed. All we need to do is call this isFinishing() method inside the onPause() method to decide whether the activity is going to die after the onPause() call.

This makes life a lot easier. We only override the onCreate(), onResume(), and onPause() methods.

- In onCreate(), we set up our window and UI component to which we render and from which we receive input.

- In onResume(), we (re)start our main loop thread (discussed in Chapter 3).

- In onPause(), we simply pause our main loop thread, and if Activity.isFinishing() returns true, we also save to disk any state we want to persist.

Many people struggle with the activity life cycle, but if we follow these simple rules, our game will be capable of handling pausing, resuming, and cleaning up.
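These rules can be sketched in plain Java without any Android classes. In the following example, MainLoop and GameSessionSketch are hypothetical names of our own; in a real game, the onResume() and onPause() methods shown here would be the overridden activity methods, and the finishing flag would come from Activity.isFinishing().

```java
/** Plain-Java stand-in for the main loop thread; no Android classes are used. */
class MainLoop implements Runnable {
    private volatile boolean running = false;
    private Thread thread;

    /** Call from onResume(): (re)starts the loop on a fresh thread. */
    void resume() {
        if (thread != null) return; // already running
        running = true;
        thread = new Thread(this, "main-loop");
        thread.start();
    }

    /** Call from onPause(): asks the loop to stop and waits until it has. */
    void pause() {
        running = false;
        while (thread != null) {
            try {
                thread.join();
                thread = null;
            } catch (InterruptedException e) {
                // retry until the loop thread has really finished
            }
        }
    }

    public void run() {
        while (running) {
            // update the game state and render one frame here
            Thread.yield();
        }
    }

    boolean isAlive() {
        return thread != null && thread.isAlive();
    }
}

/** Hypothetical owner of the loop that mirrors the three rules above. */
class GameSessionSketch {
    final MainLoop loop = new MainLoop();
    boolean stateSaved = false;

    void onResume() {
        loop.resume();
    }

    void onPause(boolean finishing) {
        loop.pause();
        if (finishing) {
            stateSaved = true; // placeholder for writing high scores and progress to disk
        }
    }
}
```

Waiting for the thread with join() in pause() ensures that no frame is being updated or rendered once onPause() returns, which matters because the system may kill the process at any time after that.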

In Practice

Let’s write our first test example that demonstrates the activity life cycle. We’ll want to have some sort of output that displays which state changes have happened so far. We’ll do this in two ways:

- The sole UI component that the activity will display is a TextView. As its name suggests, it displays text, and we’ve already used it implicitly for displaying each entry in our starter activity. Each time we enter a new state, we will append a string to the TextView, which will display all the state changes that have happened so far.

- We won’t be able to display the destruction event of our activity in the TextView because it will vanish from the screen too fast, so we will also output all state changes to LogCat. We do this with the Log class, which provides a couple of static methods with which to append messages to LogCat.

Remember what we need to do to add a test activity to our test application. First, we define it in the manifest file in the form of an <activity> element, which is a child of the <application> element:

<activity android:label="Life Cycle Test"
          android:name=".LifeCycleTest"
          android:configChanges="keyboard|keyboardHidden|orientation" />

Next we add a new Java class called LifeCycleTest to our com.badlogic.androidgames package. Finally, we add the class name to the tests member of the AndroidBasicsStarter class we defined earlier. (Of course, we already have that in there from when we wrote the class for demonstration purposes.)

We’ll have to repeat all of these steps for any test activity that we create in the following sections. For brevity, we won’t mention these steps again. Also note that we didn’t specify an orientation for the LifeCycleTest activity. In this example, we can be in either landscape mode or portrait mode, depending on the device orientation. We did this so that you can see the effect of an orientation change on the life cycle (none, due to how we set the configChanges attribute). Listing 4-2 shows the code of the entire activity.

Listing 4-2. LifeCycleTest.java, Demonstrating the Activity Life Cycle

package com.badlogic.androidgames;

import android.app.Activity;
import android.os.Bundle;
import android.util.Log;
import android.widget.TextView;

public class LifeCycleTest extends Activity {
    StringBuilder builder = new StringBuilder();
    TextView textView;

    // Logs the message to LogCat and appends it to the TextView on its own line.
    private void log(String text) {
        Log.d("LifeCycleTest", text);
        builder.append(text);
        builder.append('\n');
        textView.setText(builder.toString());
    }

    @Override
    public void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        textView = new TextView(this);
        textView.setText(builder.toString());
        setContentView(textView);
        log("created");
    }

    @Override
    protected void onResume() {
        super.onResume();
        log("resumed");
    }

    @Override
    protected void onPause() {
        super.onPause();
        log("paused");
        // True only if the activity will be destroyed after this call.
        if (isFinishing()) {
            log("finishing");
        }
    }
}

Let’s go through this code really quickly. The class derives from Activity—not a big surprise. We define two members: a StringBuilder, which will hold all the messages we have produced so far, and the TextView, which we use to display those messages directly in the Activity.

Next, we define a little private helper method that will log text to LogCat, append it to our StringBuilder, and update the TextView text. For the LogCat output, we use the static Log.d() method, which takes a tag as the first argument and the actual message as the second argument.

In the onCreate() method, we call the superclass method first, as always. We create the TextView and set it as the content view of our activity. It will fill the complete space of the activity. Finally, we log the message created to LogCat and update the TextView text with our previously defined helper method log().

Next, we override the onResume() method of the activity. As with any activity methods that we override, we first call the superclass method. All we do is call log() again with resumed as the argument.

The overridden onPause() method looks much like the onResume() method. We log the message as “paused” first. We also want to know whether the activity is going to be destroyed after the onPause() method call, so we check the Activity.isFinishing() method. If it returns true, we log the finishing event as well. Of course, we won’t be able to see the updated TextView text because the activity will be destroyed before the change is displayed on the screen. Thus, we also output everything to LogCat, as discussed earlier.

Run the application, and play around with this test activity a little. Here’s a sequence of actions you could execute:

- Start up the test activity from the starter activity.

- Lock the screen.

- Unlock the screen.

- Press the home button (which will take you back to the home screen).

- On the home screen, on older Android versions (prior to version 3), hold the home button until you are presented with the currently running applications. On Android versions 3+, touch the Running Apps button. Select the Android Basics Starter app to resume (which will bring the test activity back onscreen).

- Press the back button (which will take you back to the starter activity).

If your system didn’t decide to kill the activity silently at any point when it was paused, you will see the output in Figure 4-4 (of course, only if you haven’t pressed the back button yet).

Figure 4-4. Running the LifeCycleTest activity

On startup, onCreate() is called, followed by onResume(). When we lock the screen, onPause() is called. When we unlock the screen, onResume() is called. When we press the home button, onPause() is called. Going back to the activity will call onResume() again. The same messages are, of course, shown in LogCat, which you can observe in Eclipse in the LogCat view. Figure 4-5 shows what we wrote to LogCat while executing the preceding sequence of actions (plus pressing the back button).

Figure 4-5. The LogCat output of LifeCycleTest

Pressing the back button again invokes the onPause() method. As it also destroys the activity, the if-statement in onPause() also gets triggered, informing us that this is the last we’ll see of that activity.

That is the activity life cycle, demystified and simplified for our game programming needs. We now can easily handle any pause and resume events, and we are guaranteed to be notified when the activity is destroyed.
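To make the pattern concrete, here is a minimal plain-Java sketch of how a game might react to these callbacks. The class GameLifeCycle and its methods are hypothetical stand-ins, not Android APIs. In a real activity, onResume() and onPause() would forward to them, with the result of isFinishing() passed along. Because the system may silently kill a paused activity, pause() is also the natural place to persist any state we care about.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical helper (not part of Android); an Activity would forward its callbacks here. */
final class GameLifeCycle {
    private boolean running = false;
    private boolean disposed = false;
    private final List<String> events = new ArrayList<>();

    /** Call from Activity.onResume(): (re)start the main loop. */
    void resume() {
        running = true;
        events.add("resumed");
    }

    /** Call from Activity.onPause(), passing the value of Activity.isFinishing(). */
    void pause(boolean isFinishing) {
        running = false;          // stop the main loop; persist state here
        events.add("paused");
        if (isFinishing) {
            disposed = true;      // last chance to release resources
            events.add("finishing");
        }
    }

    boolean isRunning() { return running; }

    boolean isDisposed() { return disposed; }

    List<String> getEvents() { return events; }
}
```

Feeding this helper the sequence resume, pause(false), resume, pause(true) records resumed, paused, resumed, paused, finishing, which mirrors what LifeCycleTest logs when we leave the activity and finally press the back button.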

As discussed in previous chapters, we can get information from many different input devices on Android. In this section, we’ll discuss four of the most relevant input devices on Android and how to work with them: the touchscreen, the keyboard, the accelerometer, and the compass.

Getting (Multi-)Touch Events

The touchscreen is probably the most important way to get input from the user. Until Android version 2.0, the API only supported processing single-finger touch events. Multitouch was introduced in Android 2.0 (SDK version 5). The multitouch event reporting was tagged onto the single-touch API, with some mixed results in usability. We’ll first investigate handling single-touch events, which are available on all Android versions.

Processing Single-Touch Events

When we processed taps on a button in Chapter 2, we saw that listener interfaces are the way Android reports events to us. Touch events are no different. Touch events are passed to an OnTouchListener interface implementation that we register with a View. The OnTouchListener interface has only a single method:

public abstract boolean onTouch (View v, MotionEvent event)

The first argument is the View to which the touch events get dispatched. The second argument is what we’ll dissect to get the touch event.

An OnTouchListener can be registered with any View implementation via the View.setOnTouchListener() method. The OnTouchListener will be called before the MotionEvent is dispatched to the View itself. We can signal to the View in our implementation of the onTouch() method that we have already processed the event by returning true from the method. If we return false, the View itself will process the event.
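The dispatch order can be pictured with a small plain-Java model. This is a simplified sketch, not the framework's actual code: DispatchSketch, its Listener interface, and the string return values are all hypothetical and exist only to show that the listener is asked first and the View handles the event only if the listener declines.

```java
/** Simplified, hypothetical model of touch dispatch; not Android framework code. */
final class DispatchSketch {
    /** Stand-in for OnTouchListener.onTouch(); returns true if the event was consumed. */
    interface Listener {
        boolean onTouch(String event);
    }

    /** Returns who ended up handling the event: "listener" or "view". */
    static String dispatch(Listener listener, String event) {
        // The listener gets the event before the View itself.
        if (listener != null && listener.onTouch(event)) {
            return "listener";
        }
        // No listener, or the listener returned false: the View processes the event.
        return "view";
    }
}
```

Under these assumptions, dispatch(e -> true, "down") reports that the listener handled the event, while dispatch(e -> false, "down") falls through to the view.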

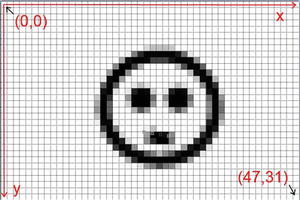

The MotionEvent instance has three methods that are relevant to us:

- MotionEvent.getX() and MotionEvent.getY(): These methods report the x and y coordinates of the touch event relative to the View. The coordinate system is defined with the origin in the top left of the view, with the x axis pointing to the right and the y axis pointing downward. The coordinates are given in pixels. Note that the methods return floats, and thus the coordinates have subpixel accuracy.

- MotionEvent.getAction(): This method returns the type of the touch event. It is an integer that takes on one of the values MotionEvent.ACTION_DOWN, MotionEvent.ACTION_MOVE, MotionEvent.ACTION_CANCEL, or MotionEvent.ACTION_UP.

Sounds simple, and it really is. The MotionEvent.ACTION_DOWN event happens when the finger touches the screen. When the finger moves, events with type MotionEvent.ACTION_MOVE are fired. Note that you will always get MotionEvent.ACTION_MOVE events, as you can’t hold your finger still enough to avoid them. The touch sensor will recognize the slightest change. When the finger is lifted up again, the MotionEvent.ACTION_UP event is reported. MotionEvent.ACTION_CANCEL events are a bit of a mystery. The documentation says they will be fired when the current gesture is canceled. We have never seen that event in real life yet. However, we’ll still process it and pretend it is a MotionEvent.ACTION_UP event when we start implementing our first game.
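As a rough illustration of treating a canceled gesture like a lifted finger, the following plain-Java sketch maps action codes to names. The class TouchActions and its describe() method are hypothetical. The integer constants are copied from the MotionEvent.ACTION_* values so that the sketch runs without the Android SDK; in an activity, we would use the MotionEvent constants directly.

```java
/** Hypothetical helper; constants mirror MotionEvent.ACTION_* so this runs off-device. */
final class TouchActions {
    static final int ACTION_DOWN = 0;
    static final int ACTION_UP = 1;
    static final int ACTION_MOVE = 2;
    static final int ACTION_CANCEL = 3;

    /** Maps an action code to a name, folding ACTION_CANCEL into "up". */
    static String describe(int action) {
        switch (action) {
            case ACTION_DOWN:
                return "down";
            case ACTION_MOVE:
                return "move";
            case ACTION_CANCEL: // pretend the finger was lifted
            case ACTION_UP:
                return "up";
            default:
                return "unknown";
        }
    }
}
```

Folding the two cases into one means the rest of the game only ever has to deal with three touch states.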

Let’s write a simple test activity to see how this works in code. The activity should display the current position of the finger on the screen as well as the event type. Listing 4-3 shows what we came up with.

Listing 4-3. SingleTouchTest.java, Testing Single-Touch Handling

package com.badlogic.androidgames;

import android.app.Activity;

import android.os.Bundle;

import android.util.Log;

import android.view.MotionEvent;

import android.view.View;

import android.view.View.OnTouchListener;

import android.widget.TextView;

public class SingleTouchTest extends Activity implements OnTouchListener {

StringBuilder builder = new StringBuilder();

TextView textView;

public void onCreate(Bundle savedInstanceState) {

super.onCreate(savedInstanceState);

textView = new TextView(this);

textView.setText("Touch and drag (one finger only)!");

textView.setOnTouchListener(this);

setContentView(textView);

}

public boolean onTouch(View v, MotionEvent event) {

builder.setLength(0);

switch (event.getAction()) {

case MotionEvent.ACTION_DOWN:

builder.append("down, ");

break;

case MotionEvent.ACTION_MOVE:

builder.append("move, ");

break;

case MotionEvent.ACTION_CANCEL:

builder.append("cancel, ");

break;

case MotionEvent.ACTION_UP:

builder.append("up, ");

break;

}

builder.append(event.getX());

builder.append(", ");

builder.append(event.getY());

String text = builder.toString();

Log.d("TouchTest", text);

textView.setText(text);

return true;

}

}

We let our activity implement the OnTouchListener interface. We also have two members: one for the TextView, and a StringBuilder we’ll use to construct our event strings.

The onCreate() method is pretty self-explanatory. The only novelty is the call to TextView.setOnTouchListener(), where we register our activity with the TextView so that it receives MotionEvents.

What’s left is the onTouch() method implementation itself. We ignore the view argument, as we know that it must be the TextView. All we are interested in is getting the touch event type, appending a string identifying it to our StringBuilder, appending the touch coordinates, and updating the TextView text. That’s it. We also log the event to LogCat so that we can see the order in which the events happen, as the TextView will only show the last event that we processed (we clear the StringBuilder every time onTouch() is called).

One subtle detail in the onTouch() method is the return statement, where we return true. Usually, we’d stick to the listener concept and return false in order not to interfere with the event-dispatching process. If we do this in our example, we won’t get any events other than the MotionEvent.ACTION_DOWN event. So, we tell the TextView that we just consumed the event. That behavior might differ between different View implementations. Luckily, we’ll only need three other views in the rest of this book, and those will happily let us consume any event we want.

If we fire up that application on the emulator or a connected device, we can see how the TextView will always display the last event type and position reported to the onTouch() method. Additionally, you can see the same messages in LogCat.

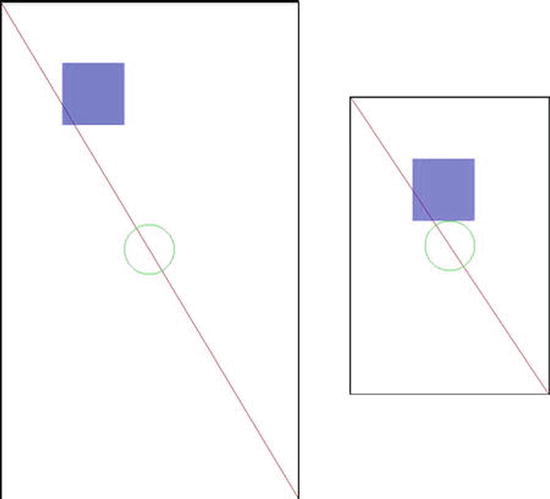

We did not fix the orientation of the activity in the manifest file. If you rotate your device so that the activity is in landscape mode, the coordinate system changes, of course. Figure 4-6 shows the activity in portrait mode (left) and landscape mode (right). In both cases, we tried to touch the middle of the View. Note how the x and y coordinates seem to get swapped. The figure also shows the x and y axes in both cases (the yellow lines), along with the point on the screen that we roughly touched (the green circle). In both cases, the origin is in the upper-left corner of the TextView, with the x axis pointing to the right and the y axis pointing downward.

Figure 4-6. Touching the screen in portrait and landscape modes

Depending on the orientation, our maximum x and y values change, of course. The preceding images were taken on a Nexus One running Android 2.2 (Froyo), which has a screen resolution of 480×800 pixels in portrait mode (800×480 in landscape mode). Since the touch coordinates are given relative to the View, and since the view doesn’t fill the complete screen, our maximum y value will be smaller than the resolution height. We’ll see later how we can enable full-screen mode so that the title bar and notification bar don’t get in our way.
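One way to become independent of the changing maximum values is to express a touch position as a fraction of the View's size. The sketch below is only an illustration: TouchCoordinates and normalize() are hypothetical names, and in an activity the size arguments would come from View.getWidth() and View.getHeight() rather than from hard-coded numbers.

```java
/** Hypothetical helper that maps a pixel coordinate to the range 0..1. */
final class TouchCoordinates {
    /**
     * @param coordinate touch position in pixels, relative to the View
     * @param viewSize   width (for x) or height (for y) of the View in pixels
     */
    static float normalize(float coordinate, int viewSize) {
        return coordinate / viewSize;
    }
}
```

Assuming a View that is 480 pixels wide in portrait mode and 800 pixels wide in landscape mode, a touch at x = 240 in the first case and x = 400 in the second both normalize to 0.5, the horizontal middle of the View.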

Sadly, there are a few issues with touch events on older Android versions and first-generation devices:

- Touch event flood: The driver will report as many touch events as possible when a finger is down on the touchscreen; on some devices, that means hundreds per second. We can fix this issue by putting a Thread.sleep(16) call into our onTouch() method, which puts the UI thread on which those events are dispatched to sleep for 16 ms. With this, we’ll get roughly 60 events per second at most, which is more than enough to have a responsive game. This is only a problem on devices with Android version 1.5. If you don’t target that Android version, ignore this advice.

- Touching the screen eats the CPU: Even if we sleep in our onTouch() method, the system has to process the events in the kernel as reported by the driver. On old devices, such as the Hero or G1, this can use up to 50 percent of the CPU, which leaves a lot less processing power for our main loop thread. As a consequence, our perfectly fine frame rate will drop considerably, sometimes to the point where the game becomes unplayable. On second-generation devices, the problem is a lot less pronounced and can usually be ignored. Sadly, there’s no solution for this on older devices.
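The sleep-based workaround for the touch event flood could be sketched as follows. TouchThrottle and its methods are hypothetical names. In an activity, throttle(16) would be the last call in onTouch() before the return statement, and it deliberately blocks the thread that calls it.

```java
/** Hypothetical helper illustrating the Thread.sleep() workaround. */
final class TouchThrottle {
    /** Upper bound on events per second if every callback sleeps for sleepMillis. */
    static int maxEventsPerSecond(long sleepMillis) {
        return (int) (1000 / sleepMillis);
    }

    /** Blocks the calling thread (the UI thread when called from onTouch()). */
    static void throttle(long sleepMillis) {
        try {
            Thread.sleep(sleepMillis);
        } catch (InterruptedException e) {
            // Restore the interrupt flag so callers can still react to it.
            Thread.currentThread().interrupt();
        }
    }
}
```

With a 16 ms sleep, the integer division yields an upper bound of 62 events per second, close to the 60 frames per second a game typically targets.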

Processing Multitouch Events

Warning: Major pain ahead! The multitouch API has been tagged onto the MotionEvent class, which originally handled only single touches. This makes for some major confusion when trying to decode multitouch events. Let’s try to make some sense of it.

Note: The multitouch API apparently is also confusing for the Android engineers who created it. It received a major overhaul in SDK version 8 (Android 2.2) with new methods, new constants, and even renamed constants. These changes should make working with multitouch a little bit easier. However, they are only available from SDK version 8 onward. To support all multitouch-capable Android versions (2.0+), we have to use the API of SDK version 5.

Handling multitouch events is very similar to handling single-touch events. We still implement the same OnTouchListener interface we implemented for single-touch events. We also get a MotionEvent instance from which to read the data. We also process the event types we processed before, like MotionEvent.ACTION_UP, plus a couple of new ones that aren’t too big of a deal.

Pointer IDs and Indices

The differences between handling multitouch events and handling single-touch events start when we want to access the coordinates of a touch event. MotionEvent.getX() and MotionEvent.getY() return the coordinates of a single finger on the screen. When we process multitouch events, we use overloaded variants of these methods that take a pointer index. This might look as follows:

event.getX(pointerIndex);

event.getY(pointerIndex);